Dynamic Expectation–Satisfaction Relationship in Sustainable Experiences with Product: A Comparative Study of Durable Goods, FMCG, and Digital Products

Abstract

1. Introduction

2. Related Work

2.1. Dynamic Expectancy–Disconfirmation Framework

2.2. Factors of the User Experience

2.3. Expectation and Experience

2.4. Experience Baseline

3. Materials and Methods

3.1. Participants

3.2. Experimental Materials and Grouping

3.3. Experimental Procedure

3.4. Measures

4. Results

4.1. Descriptive Statistics

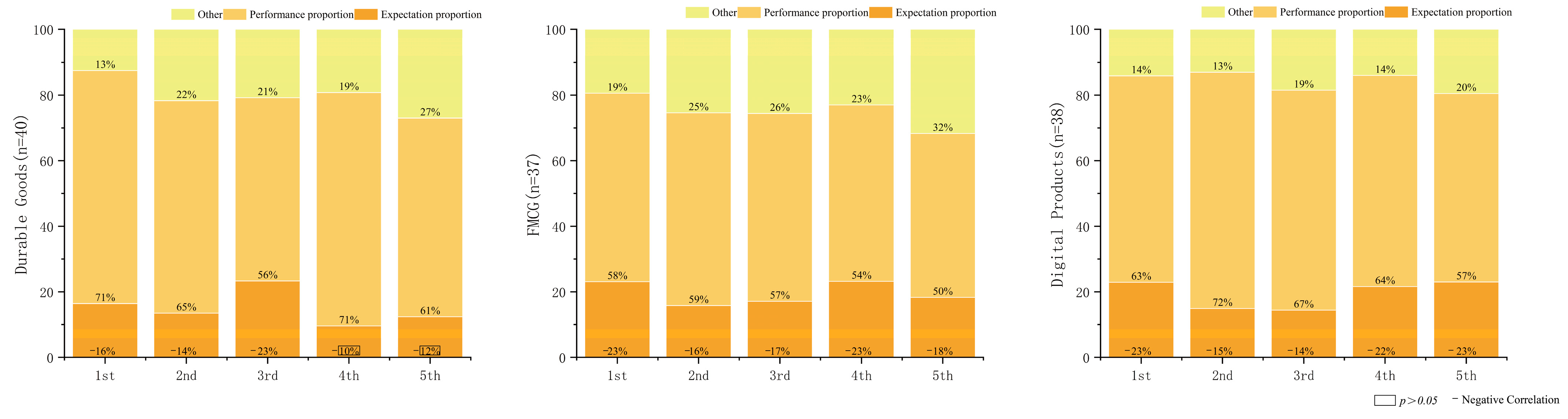

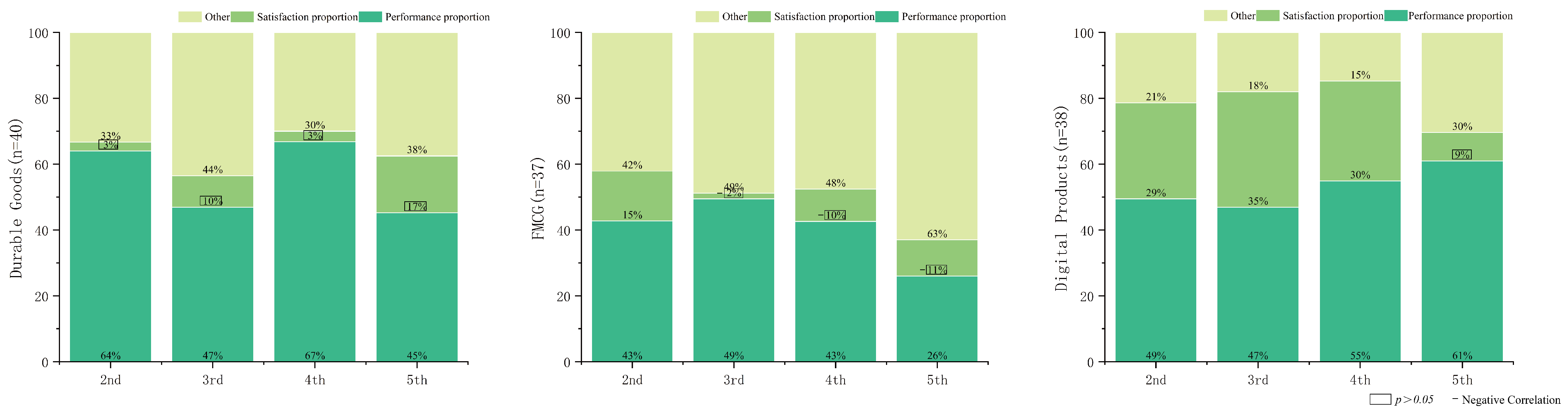

4.2. Explanatory Power and Correlation

5. Discussion

5.1. Changes in the Impact of Elements Within the Group

5.2. Characterization of Trends Between Groups

5.3. The Disconfirmation Degree and Satisfaction

5.4. Implications for Design and Sustainability

6. Conclusions

- The Expectancy–Disconfirmation Theory remains a robust framework for modeling satisfaction across diverse product types. However, the influence of its individual components (expectation, perceived performance, and disconfirmation) varies notably across categories. For digital products, satisfaction is more heavily shaped by users’ pre-existing mental models and expectations. This suggests that digital experiences are more cognitively driven and perception-sensitive, reinforcing the need for anticipatory design strategies that align digital performance with evolving user expectations to sustain satisfaction over time.

- While final satisfaction levels may converge across product types, the underlying temporal mechanisms differ significantly. For physical products, satisfaction tends to become increasingly influenced by external contextual factors, such as environmental conditions and social feedback. This suggests that adaptability to changing contexts and sustained functional or social relevance are critical for long-term satisfaction. In contrast, satisfaction with digital products is more dependent on internal dynamics, including interaction quality, personalization, and system adaptability. This highlights the importance of continuous optimization and implementing user-centered feedback loops in digital product design to support long-term user engagement and well-being.

- The early stages of use play a disproportionately critical role in shaping satisfaction with physical products by establishing a relatively fixed baseline for future evaluations. In contrast, digital product experiences tend to be more fluid and adaptive, allowing satisfaction to be reshaped over time. This finding highlights the potential of dynamic experience management, such as real-time updates, adaptive interfaces, and context-aware feedback, for maintaining user satisfaction in digital environments.

7. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | Mean (I) | Mean (II) | Mean (III) | Variance (I) | Variance (II) | Variance (III) | Coefficient of Variation (I) | Coefficient of Variation (II) | Coefficient of Variation (III) |
|---|---|---|---|---|---|---|---|---|---|
| EXP1 | 4.950 | 4.378 | 5.079 | 1.587 | 1.742 | 1.967 | 25.451% | 30.142% | 27.611% |
| EXP2 | 4.300 | 3.973 | 4.474 | 1.856 | 1.360 | 2.905 | 31.686% | 29.357% | 38.096% |
| EXP3 | 4.125 | 3.757 | 4.421 | 1.497 | 1.356 | 2.250 | 29.659% | 30.995% | 33.931% |
| EXP4 | 3.875 | 3.486 | 4.263 | 1.240 | 0.979 | 1.875 | 28.741% | 28.379% | 32.118% |
| EXP5 | 3.625 | 3.297 | 4.553 | 1.061 | 1.215 | 1.443 | 28.414% | 33.426% | 26.387% |
| PER1 | 4.600 | 4.351 | 4.605 | 1.067 | 0.845 | 1.813 | 22.452% | 21.130% | 29.237% |
| PER2 | 4.550 | 4.351 | 4.421 | 1.536 | 1.012 | 1.926 | 27.238% | 23.119% | 31.391% |
| PER3 | 4.450 | 4.270 | 4.342 | 1.485 | 0.980 | 1.691 | 27.381% | 23.188% | 29.945% |
| PER4 | 4.375 | 4.162 | 4.395 | 1.420 | 1.195 | 1.326 | 27.236% | 26.266% | 26.207% |
| PER5 | 4.200 | 4.054 | 4.632 | 1.344 | 1.053 | 0.455 | 27.598% | 25.307% | 14.567% |
| SATI1 | 4.375 | 4.189 | 4.368 | 2.753 | 1.769 | 3.915 | 37.926% | 31.747% | 45.292% |
| SATI2 | 4.750 | 4.297 | 4.500 | 1.987 | 1.270 | 2.743 | 29.677% | 26.227% | 36.806% |
| SATI3 | 4.625 | 4.324 | 4.342 | 1.471 | 1.114 | 2.501 | 26.225% | 24.409% | 36.424% |
| SATI4 | 4.550 | 4.270 | 4.474 | 1.792 | 1.425 | 2.580 | 29.424% | 27.954% | 35.907% |
| SATI5 | 4.525 | 4.324 | 4.737 | 1.435 | 1.003 | 1.605 | 26.476% | 23.160% | 26.742% |
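The coefficient of variation in the descriptive-statistics table is consistent with the usual definition, CV = √Variance / Mean × 100%. The minimal sketch below assumes that definition (the function name `coefficient_of_variation` is illustrative, not from the paper) and recomputes two cells from the reported mean and variance; differences in the last digit are expected because the reported variances are themselves rounded.

```python
import math


def coefficient_of_variation(mean: float, variance: float) -> float:
    """Return the coefficient of variation in percent: standard deviation / mean * 100."""
    if mean == 0:
        raise ValueError("CV is undefined for a zero mean")
    return math.sqrt(variance) / mean * 100.0


# (item, group, mean, variance, reported CV in %) copied from the table above
cells = [
    ("EXP1", "I", 4.950, 1.587, 25.451),
    ("PER5", "III", 4.632, 0.455, 14.567),
]

for item, group, mean, variance, reported in cells:
    cv = coefficient_of_variation(mean, variance)
    print(f"{item} ({group}): recomputed {cv:.3f}% vs reported {reported:.3f}%")
```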

| Dependent Variable | Independent Variable | B (I) | B (II) | B (III) | D-W (I) | D-W (II) | D-W (III) | Adjusted R² (I) | Adjusted R² (II) | Adjusted R² (III) |
|---|---|---|---|---|---|---|---|---|---|---|
| SATI1 | EXP1 | −0.353 * | −0.492 * | −0.496 * | 2.890 | 2.419 | 2.179 | 0.868 | 0.792 | 0.851 |
| | PER1 | 1.529 * | 1.281 * | 1.367 * | | | | | | |
| EXP2 | PER1 | 1.016 * | 0.484 * | 0.621 * | 2.467 | 2.064 | 1.732 | 0.645 | 0.554 | 0.774 |
| | SATI1 | 0.042 | 0.376 * | 0.368 * | | | | | | |
| SATI2 | EXP2 | −0.223 * | −0.301 * | −0.256 * | 2.309 | 1.807 | 1.999 | 0.771 | 0.732 | 0.863 |
| | PER2 | 1.117 * | 1.118 * | 1.243 * | | | | | | |
| EXP3 | PER2 | 0.615 * | 0.800 * | 0.530 * | 1.702 | 2.106 | 1.520 | 0.541 | 0.505 | 0.809 |
| | SATI2 | 0.125 | −0.030 | 0.396 * | | | | | | |
| SATI3 | EXP3 | −0.506 * | −0.342 * | −0.249 * | 2.553 | 2.623 | 2.293 | 0.781 | 0.729 | 0.804 |
| | PER3 | 1.216 * | 1.147 * | 1.157 * | | | | | | |
| EXP4 | PER3 | 0.735 * | 0.891 * | 0.601 * | 1.927 | 1.770 | 1.609 | 0.683 | 0.496 | 0.844 |
| | SATI3 | 0.035 | −0.206 | 0.331 * | | | | | | |
| SATI4 | EXP4 | −0.124 | −0.521 * | −0.407 * | 1.791 | 2.306 | 1.560 | 0.797 | 0.757 | 0.825 |
| | PER4 | 0.920 * | 1.208 * | 1.217 * | | | | | | |
| EXP5 | PER4 | 0.464 * | 0.906 * | 0.685 * | 1.961 | 2.058 | 1.323 | 0.605 | 0.332 | 0.549 |
| | SATI4 | 0.177 | −0.385 | 0.097 | | | | | | |
| SATI5 | EXP5 | −0.207 | −0.368 * | −0.418 * | 2.393 | 2.171 | 1.231 | 0.714 | 0.664 | 0.756 |
| | PER5 | 1.012 * | 1.005 * | 1.045 * | | | | | | |
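Each pair of rows in the regression table describes one linear model with two predictors, for example SATI1 on EXP1 and PER1, or EXP2 on PER1 and SATI1, reported with its coefficients B, the Durbin–Watson (D-W) statistic, and the adjusted R². The sketch below shows how these three quantities are conventionally computed for a two-predictor ordinary-least-squares model in pure Python. It is a generic illustration, not the authors' procedure: whether the reported B values are standardized or come from a model with an intercept is not stated here, so the intercept term and the function names (`fit_ols`, `adjusted_r2`, `durbin_watson`) are assumptions, and the data are synthetic.

```python
import random


def solve_linear_system(a, b):
    """Solve a small dense system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_ols(y, x1, x2):
    """Fit y = b0 + b1*x1 + b2*x2 by least squares via the normal equations X'X b = X'y."""
    rows = [(1.0, a, b) for a, b in zip(x1, x2)]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    coef = solve_linear_system(xtx, xty)
    fitted = [coef[0] + coef[1] * a + coef[2] * b for a, b in zip(x1, x2)]
    residuals = [yi - fi for yi, fi in zip(y, fitted)]
    return coef, residuals


def r_squared(y, residuals):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_y = sum(y) / len(y)
    ss_res = sum(e * e for e in residuals)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors (intercept not counted)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


def durbin_watson(residuals):
    """Sum of squared successive residual differences divided by the residual sum of squares."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den


# Synthetic illustration only: 40 hypothetical respondents with made-up scores.
rng = random.Random(0)
exp1 = [rng.uniform(1, 7) for _ in range(40)]
per1 = [rng.uniform(1, 7) for _ in range(40)]
sati1 = [0.5 - 0.4 * e + 1.3 * p + rng.gauss(0, 0.5) for e, p in zip(exp1, per1)]

coef, res = fit_ols(sati1, exp1, per1)
r2 = r_squared(sati1, res)
print("B (intercept, EXP1, PER1):", [round(c, 3) for c in coef])
print("Adjusted R2:", round(adjusted_r2(r2, n=len(sati1), k=2), 3))
print("D-W:", round(durbin_watson(res), 3))
```

The D-W statistic always lies between 0 and 4; a value near 2 indicates little first-order autocorrelation in the residuals, while values toward 0 or 4 indicate positive or negative autocorrelation, respectively.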

| Product Type | S0 (Exp) | S0 (Per) | S0 (Sati) | Alpha (Exp) | Alpha (Per) | Alpha (Sati) |
|---|---|---|---|---|---|---|
| Durable Goods | 4.458 | 4.533 | 4.583 | 0.30 | 0.60 | 0.05 |
| FMCG | 4.017 | 4.313 | 4.280 | 0.40 | 0.70 | 0.05 |
| Digital Products | 4.653 | 4.453 | 4.427 | 0.05 | 0.05 | 0.30 |
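The S0 and Alpha columns read like the initial level and smoothing constant of simple exponential smoothing, S_t = α·x_t + (1 − α)·S_{t−1}. That reading is an assumption, and so is the initialization used below: taking S0 as the mean of the first three observations reproduces the Durable Goods row exactly from the Group I means in the descriptive-statistics table (4.458, 4.533, 4.583), but it does not reproduce every other row exactly. The sketch applies the recursion to the Group I means with the Durable Goods α values; the function names `initial_level` and `simple_exponential_smoothing` are illustrative.

```python
def initial_level(series, k=3):
    """Assumed initialization: S0 is the mean of the first k observations."""
    return sum(series[:k]) / k


def simple_exponential_smoothing(series, alpha, s0):
    """Return smoothed levels S_1..S_n using S_t = alpha * x_t + (1 - alpha) * S_{t-1}."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    levels = []
    level = s0
    for x in series:
        level = alpha * x + (1.0 - alpha) * level
        levels.append(level)
    return levels


# Group I means for time points 1-5, copied from the descriptive-statistics table
group_i = {
    "Exp": [4.950, 4.300, 4.125, 3.875, 3.625],
    "Per": [4.600, 4.550, 4.450, 4.375, 4.200],
    "Sati": [4.375, 4.750, 4.625, 4.550, 4.525],
}
# Durable Goods smoothing constants from the S0/Alpha table
alpha_durable = {"Exp": 0.30, "Per": 0.60, "Sati": 0.05}

for name, series in group_i.items():
    s0 = initial_level(series)
    levels = simple_exponential_smoothing(series, alpha_durable[name], s0)
    print(name, "S0 =", round(s0, 3), "levels =", [round(v, 3) for v in levels])
```

With α = 0.05 the smoothed satisfaction level moves only 5% of the way toward each new observation and therefore stays close to S0, whereas a larger constant such as the 0.30 reported for digital-product satisfaction follows new observations more closely.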

| Item | Variance (I) | Variance (II) | Variance (III) | Mean (I) | Mean (II) | Mean (III) |
|---|---|---|---|---|---|---|
| DIS1 | 2.131 | 2.330 | 3.067 | −0.350 | −0.054 | −0.474 |
| DIS2 | 0.859 | 0.686 | 2.376 | 0.250 | 0.378 | −0.053 |
| DIS3 | 0.687 | 0.811 | 2.669 | 0.325 | 0.541 | −0.079 |
| DIS4 | 0.667 | 0.725 | 3.090 | 0.500 | 0.676 | 0.132 |
| DIS5 | 0.610 | 0.800 | 1.696 | 0.575 | 0.757 | 0.079 |
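The mean disconfirmation values are consistent with defining disconfirmation at each time point as perceived performance minus expectation, DIS_t = PER_t − EXP_t, so that a negative value means performance fell short of expectation. Subtracting the EXP means from the PER means in the descriptive-statistics table reproduces the reported DIS means for Groups I and III. Because the mean of per-respondent differences equals the difference of the means, this check holds whether DIS was computed per respondent or from group means. The sketch assumes that definition; the function name `mean_disconfirmation` is illustrative.

```python
def mean_disconfirmation(per_means, exp_means):
    """Assumed definition: DIS_t = PER_t - EXP_t, computed time point by time point."""
    if len(per_means) != len(exp_means):
        raise ValueError("series must have the same length")
    return [p - e for p, e in zip(per_means, exp_means)]


def label(d):
    """Name the direction of disconfirmation in expectancy-disconfirmation terms."""
    if d < 0:
        return "negative"
    if d > 0:
        return "positive"
    return "confirmation"


# Group I means for time points 1-5 from the descriptive-statistics table
exp_group_i = [4.950, 4.300, 4.125, 3.875, 3.625]
per_group_i = [4.600, 4.550, 4.450, 4.375, 4.200]
# Mean DIS1-DIS5 for Group I as reported in the disconfirmation table
reported_dis_group_i = [-0.350, 0.250, 0.325, 0.500, 0.575]

dis = mean_disconfirmation(per_group_i, exp_group_i)
for t, (calc, rep) in enumerate(zip(dis, reported_dis_group_i), start=1):
    print(f"DIS{t}: {calc:+.3f} ({label(calc)}), reported {rep:+.3f}")
```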

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Z.; Ono, K.; Wu, Y. Dynamic Expectation–Satisfaction Relationship in Sustainable Experiences with Product: A Comparative Study of Durable Goods, FMCG, and Digital Products. Sustainability 2025, 17, 7045. https://doi.org/10.3390/su17157045
