Forecasting Return Quantity, Timing and Condition in Remanufacturing with Machine Learning: A Mixed-Methods Approach

Abstract

1. Introduction

- RQ1: Which data characteristics are commonly used to train machine learning models for forecasting the availability of returned cores in remanufacturing? This question identifies the types of input variables (features) commonly selected to develop predictive models. Understanding the nature and relevance of these features is essential for designing accurate and practically implementable models in real-world remanufacturing environments.

- RQ2: Which data sources are commonly used for training machine learning models for forecasting the availability of returned cores in remanufacturing? This includes exploring the origins of the datasets used to train and evaluate models. Clarifying the advantages and limitations of each data source helps assess their transferability to remanufacturing applications, where data availability is often limited or inconsistent.

- RQ3: Which supervised machine learning models are commonly applied to forecast return quantity, timing, and condition of cores? The aim is to analyze the types of supervised learning problems addressed in the literature and assess the prevalence and suitability of different ML algorithms.

2. Materials and Methods

2.1. Expert Interviews

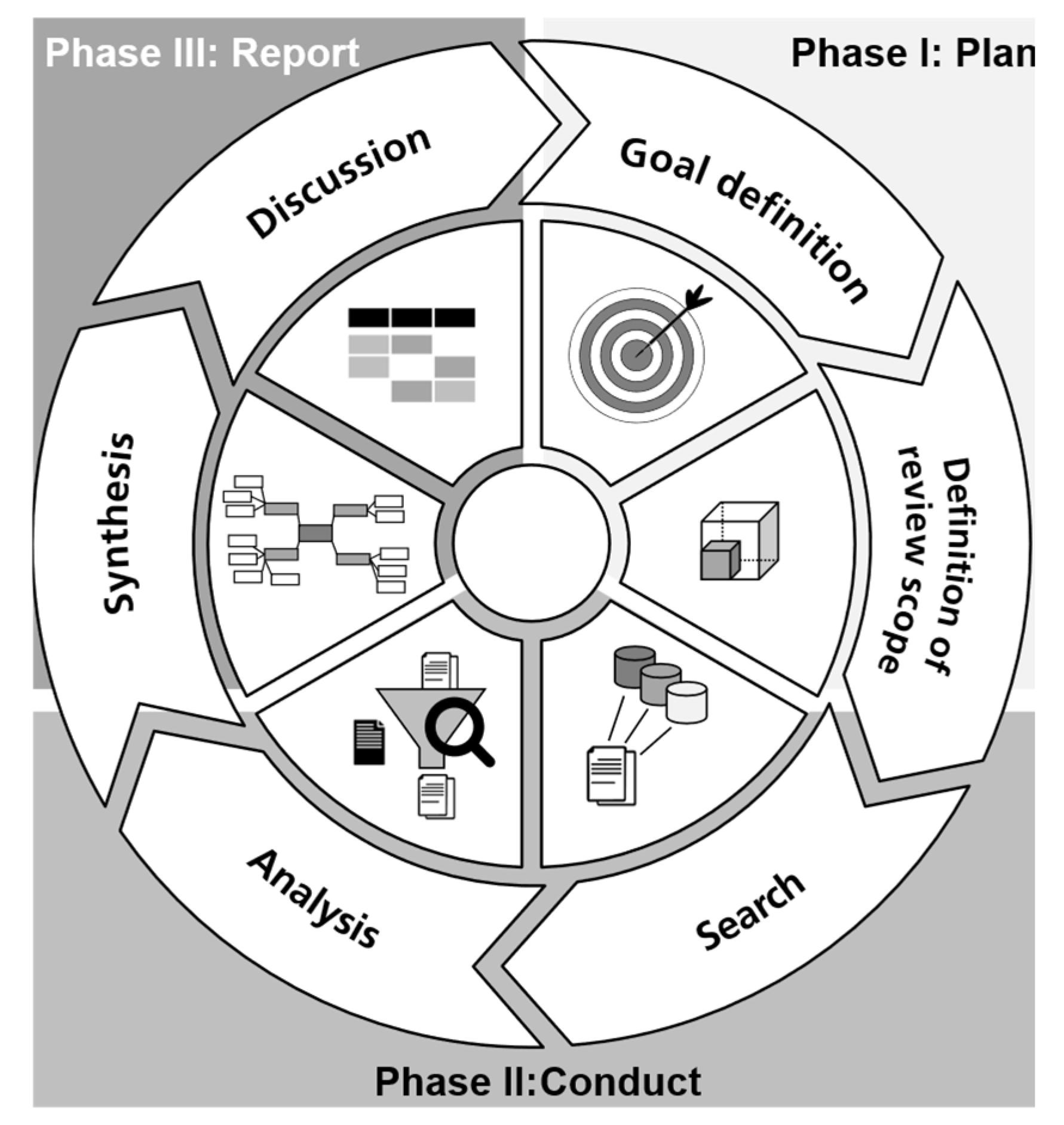

2.2. Literature Review

- For return quantity and timing, the scope was extended to include publications on spare parts demand forecasting due to structural similarities such as uncertain return flows, failure rates, and non-regular demand patterns.

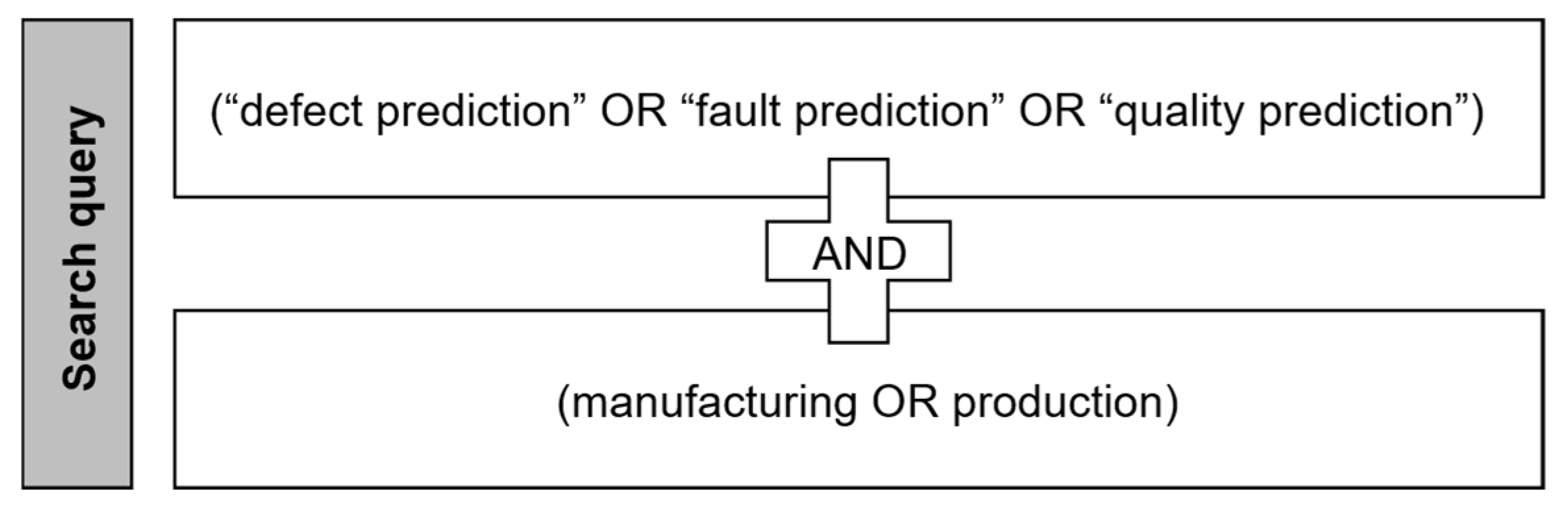

- For condition prediction, the review focused on studies in manufacturing that apply ML to quality, defect, and fault prediction, based on the conceptual overlap with assessing the condition of returned parts.

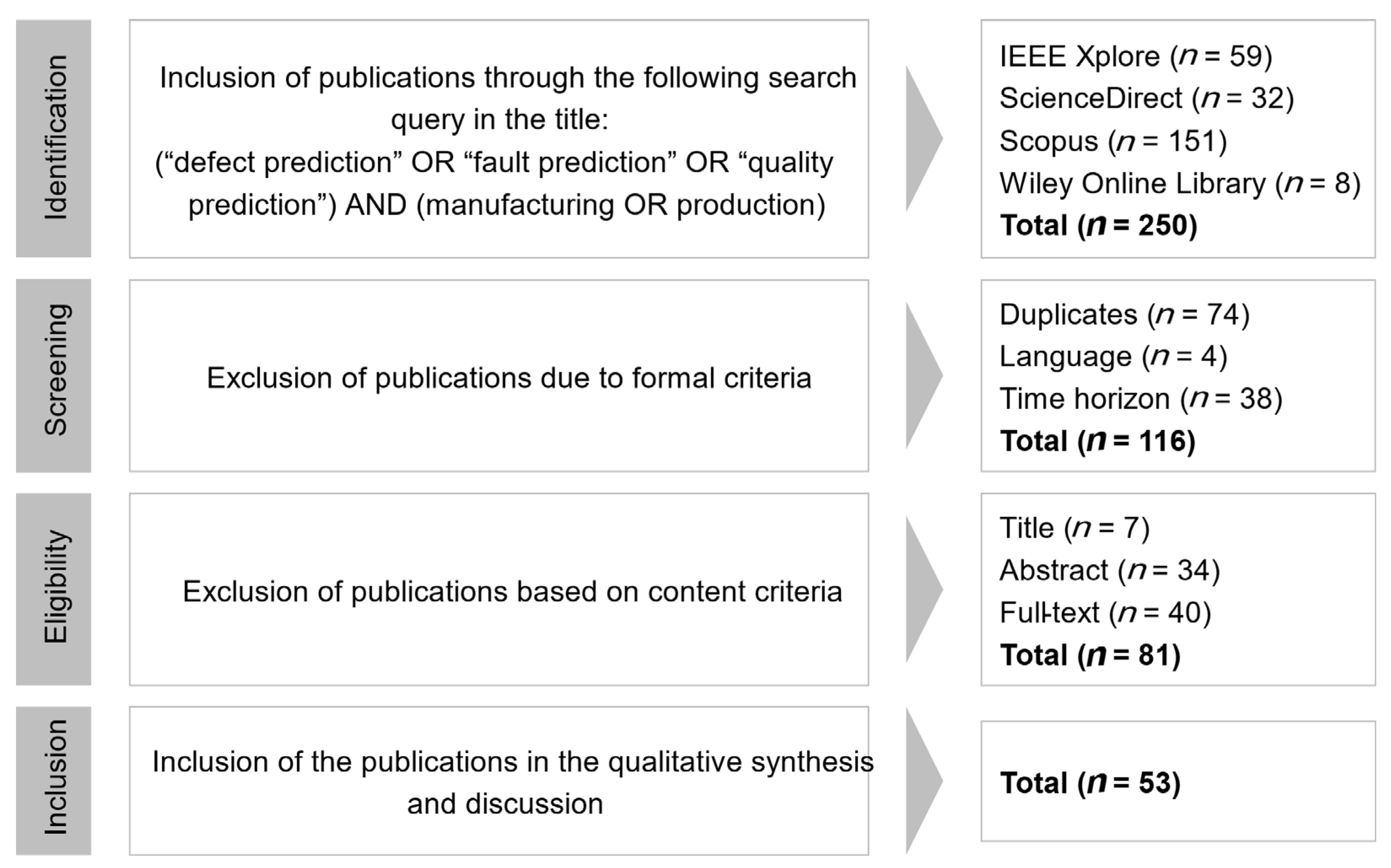

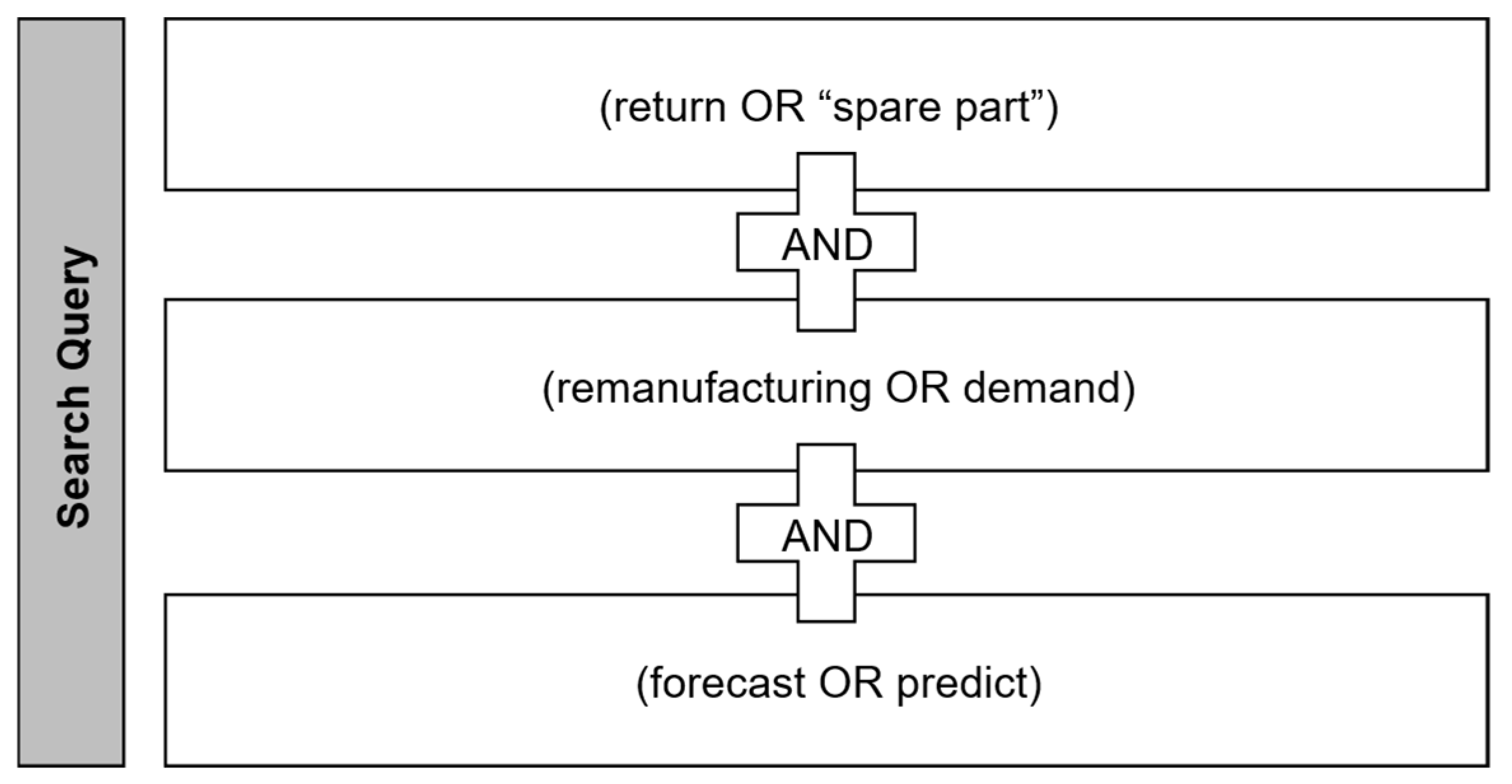

2.2.1. Forecasting Return Quantity and Timing

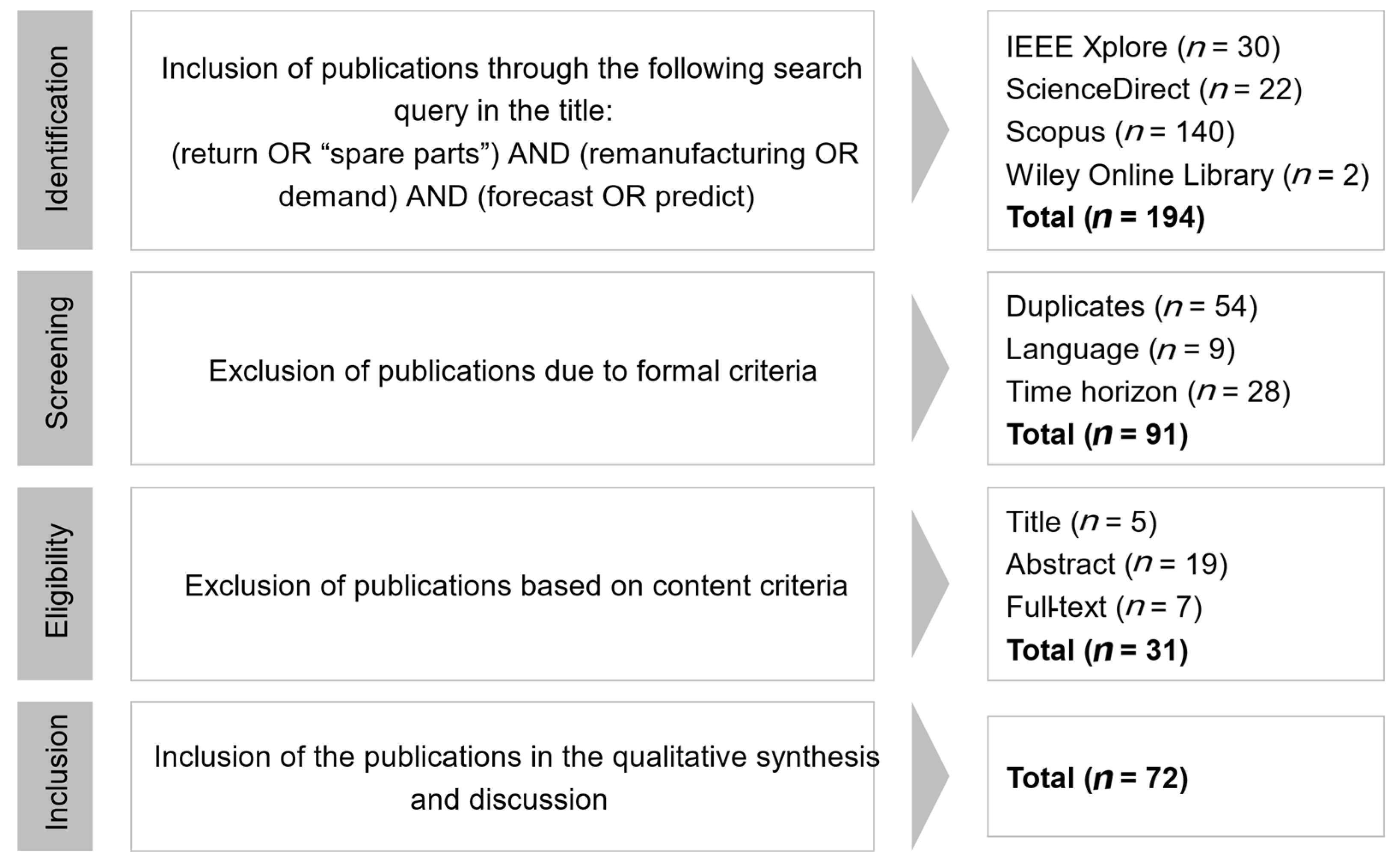

2.2.2. Forecasting the Condition of Cores

3. Results

3.1. Identification of Influencing Factors of Return Quantity, Timing, and Condition of Cores

- Ownership-based take-back;

- Contractual take-back agreements (e.g., service contracts, contract repairs);

- 1:1 returns;

- Returns tied to discounts on remanufactured goods;

- Purchase-based returns;

- Voluntary returns.

3.2. Machine Learning Methods for Forecasting Return Quantity and Timing of Cores

3.2.1. Data Set Sources

- Simulated data generated through computational models;

- Freely available benchmark datasets, often created in the context of competitions or for comparative research purposes;

- Real-world data.

3.2.2. Machine Learning Methods

3.3. Machine Learning Methods for Forecasting the Condition of Cores

3.3.1. Data Set Sources

3.3.2. Machine Learning Methods

4. Discussion

4.1. Transferability of Influencing Factors and Data Sources

4.2. Applicability of Machine Learning Models in Remanufacturing

4.3. Challenges and Research Gaps

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| ANN | Artificial Neural Network |

| CR | Croston |

| DT | Decision Tree |

| ES | Exponential Smoothing |

| LSTM | Long-Short-Term-Memory |

| MA | Moving Average |

| ML | Machine Learning |

| R | Regression |

| RF | Random Forest |

| RQ | Research question |

| SBA | Syntetos-Boylan-Approximation |

| SVM | Support Vector Machine |

| XGB | Extreme Gradient Boost |

References

- European Commission. The Clean Industrial Deal: A Joint Roadmap for Competitiveness and Decarbonisation; European Commission: Brussels, Belgium, 2025. [Google Scholar]

- Kammerer, F.; Kappe, T. Nationale Kreislaufwirtschaftsstrategie; Bundesministerium für Umwelt, Naturschutz, nukleare Sicherheit und Verbraucherschutz: Berlin, Germany, 2024. [Google Scholar]

- BS 8887-2:2009; Design for Manufacture, Assembly, Disassembly and End-of-Life Processing (MADE). British Standards Institution: London, UK, 2009.

- Grosse Erdmann, J.; Mahr, A.; Derr, P.; Walczak, P.; Koller, J. Comparative Life Cycle Assessment of Conventionally Manufactured and Additive Remanufactured Electric Bicycle Motors. In Proceedings of the Conference on Production Systems and Logistics: CPSL 2023-2; Herberger, D., Hübner, M., Eds.; Publish-Ing: Hannover, Germany, 2023. [Google Scholar]

- Köhler, D.C.F. Regenerative Supply Chains: Regenerative Wertschöpfungsketten. Ph.D. Thesis, Universität Bayreuth, Bayreuth, Germany, 2011. [Google Scholar]

- Steinhilper, R. Remanufacturing: The Ultimate form of Recycling; Fraunhofer-IRB-Verl: Stuttgart, Germany, 1998; ISBN 978-3816752165. [Google Scholar]

- Bobba, S.; Tecchio, P.; Ardente, F.; Mathieux, F.; dos Santos, F.M.; Pekar, F. Analysing the contribution of automotive remanufacturing to the circularity of materials. Procedia CIRP 2020, 90, 67–72. [Google Scholar] [CrossRef]

- Nasr, N.Z.; Russell, J.D. Re-Defining Value–The Manufacturing Revolution: Remanufacturing, Refurbishment, Repair and Direct Reuse in the Circular Economy; International Resource Panel: Nairobi, Kenya, 2018. [Google Scholar]

- Lange, U. Ressourceneffizienz durch Remanufacturing—Industrielle Aufarbeitung von Altteilen; VDI Zentrum Ressourceneffizienz GmbH: Berlin, Germany, 2017. [Google Scholar]

- Kurilova-Palisaitiene, J.; Sundin, E.; Poksinska, B. Remanufacturing challenges and possible lean improvements. J. Clean. Prod. 2018, 172, 3225–3236. [Google Scholar] [CrossRef]

- Guide, V.R. Production planning and control for remanufacturing: Industry practice and research needs. J. Oper. Manag. 2000, 18, 467–483. [Google Scholar] [CrossRef]

- de Brito, M.P.; van der Laan, E.A. Inventory control with product returns: The impact of imperfect information. Eur. J. Oper. Res. 2009, 194, 85–101. [Google Scholar] [CrossRef]

- Wei, S.; Tang, O.; Sundin, E. Core (product) Acquisition Management for remanufacturing: A review. J. Remanuf. 2015, 5, 4. [Google Scholar] [CrossRef]

- Maurer, I.; Dertouzos, J.; Goel, U.; Scarinci, E.; Tolstinev, D. Powering the Remanufacturing Renaissance with AI; McKinsey & Company: New York, NY, USA, 2025. [Google Scholar]

- Saraswati, D.; Sari, D.K.; Puspitasari, F.; Amalia, F. Forecasting product returns using artificial neural network for remanufacturing processes. AIP Conf. Proc. 2023, 2485, 110005. [Google Scholar] [CrossRef]

- Goh, T.N.; Varaprasad, N. A Statistical Methodology for the Analysis of the Life-Cycle of Reusable Containers. IIE Trans. 1986, 18, 42–47. [Google Scholar] [CrossRef]

- Kelle, P.; Silver, E.A. Forecasting the returns of reusable containers. J. Oper. Manag. 1989, 8, 17–35. [Google Scholar] [CrossRef]

- Marx-Gómez, J.; Rautenstrauch, C.; Nürnberger, A.; Kruse, R. Neuro-fuzzy approach to forecast returns of scrapped products to recycling and remanufacturing. Knowl.-Based Syst. 2002, 15, 119–128. [Google Scholar] [CrossRef]

- Clottey, T.; Benton, W.C.; Srivastava, R. Forecasting Product Returns for Remanufacturing Operations. Decis. Sci. 2012, 43, 589–614. [Google Scholar] [CrossRef]

- Geda, M.; Kwong, C.K. An MCMC based Bayesian inference approach to parameter estimation of distributed lag models for forecasting used product returns for remanufacturing. J. Remanuf. 2021, 11, 175–194. [Google Scholar] [CrossRef]

- Matsumoto, M.; Komatsu, S. Demand forecasting for production planning in remanufacturing. Int. J. Adv. Manuf. Technol. 2015, 79, 161–175. [Google Scholar] [CrossRef]

- Ponte, B.; Cannella, S.; Dominguez, R.; Naim, M.M.; Syntetos, A.A. Quality grading of returns and the dynamics of remanufacturing. Int. J. Prod. Econ. 2021, 236, 108129. [Google Scholar] [CrossRef]

- Stamer, F.; Sauer, J. Optimizing quality and cost in remanufacturing under uncertainty. Prod. Eng. Res. Devel. 2024, 19, 369–390. [Google Scholar] [CrossRef]

- Liang, X.; Jin, X.; Ni, J. Forecasting product returns for remanufacturing systems. J. Remanuf. 2014, 4, 8. [Google Scholar] [CrossRef][Green Version]

- Huster, S.; Rosenberg, S.; Glöser-Chahoud, S.; Schultmann, F. Remanufacturing capacity planning in new markets—Effects of different forecasting assumptions on remanufacturing capacity planning for electric vehicle batteries. J. Remanuf. 2023, 13, 283–304. [Google Scholar] [CrossRef]

- Brink, A. Anfertigung Wissenschaftlicher Arbeiten: Ein Prozessorientierter Leitfaden zur Erstellung von Bachelor-, Master- und Diplomarbeiten; Aktualisierte und erweiterte Auflage; Springer Gabler: Wiesbaden, Germany, 2013; ISBN 978-3-658-02510-6. [Google Scholar]

- Gläser, J.; Laudel, G. Experteninterviews und Qualitative Inhaltsanalyse als Instrumente Rekonstruierender Untersuchungen; VS Verlag für Sozialwissenschaften: Wiesbaden, Germany, 2009; ISBN 978-3-531-15684-2. [Google Scholar]

- Brinkmann, S.; Kvale, S. InterViews: Learning the Craft of Qualitative Research Interviewing, 3rd ed.; SAGE: Los Angeles, CA, USA; London, UK; New Delhi, India; Singapore; Washington, DC, USA, 2015; ISBN 978-1-4522-7572-7. [Google Scholar]

- Bogner, A.; Menz, W. The Theory-Generating Expert Interview: Epistemological Interest, Forms of Knowledge, Interaction. In Interviewing Experts; Bogner, A., Littig, B., Menz, W., Kittel, B., Eds.; Palgrave Macmillan: Houndmills, UK, 2009; pp. 43–80. ISBN 978-0-230-22019-5. [Google Scholar]

- Bandara, W.; Furtmueller, E.; Gorbacheva, E.; Miskon, S.; Beekhuyzen, J. Achieving Rigor in Literature Reviews: Insights from Qualitative Data Analysis and Tool-Support. CAIS 2015, 37, 8. [Google Scholar] [CrossRef]

- Levy, Y.; Ellis, T.J. A Systems Approach to Conduct an Effective Literature Review in Support of Information Systems Research. Informing Sci. Int. J. Emerg. Transdiscipl. 2006, 9, 181–212. [Google Scholar] [CrossRef]

- Li, J.; Wu, Z. Remanufacturing Processes, Planning and Control. In New Frontiers of Multidisciplinary Research in STEAM-H (Science, Technology, Engineering, Agriculture, Mathematics, and Health); Toni, B., Ed.; Springer International Publishing: Cham, Switzerland, 2014; pp. 329–356. ISBN 978-3-319-07754-3. [Google Scholar]

- vom Brocke, J.; Simons, A.; Niehaves, B.; Riemer, K.; Plattfaut, R.; Cleven, A. Reconstructing the Giant: On the Importance of Rigour in Documenting the Literature Search Process. In Proceedings of the 17th European Conference on Information Systems (ECIS 2009), Verona, Italy, 8–10 June 2009. [Google Scholar]

- Kraus, S.; Breier, M.; Dasí-Rodríguez, S. The art of crafting a systematic literature review in entrepreneurship research. Int. Entrep. Manag. J. 2020, 16, 1023–1042. [Google Scholar] [CrossRef]

- Brendel, A.B.; Marrone, M.; Trang, S.T.N.; Lichtenberg, S.; Kolbe, L.M. What to do for a Literature Review?—A Synthesis of Literature Review Practices. In Proceedings of the 26th Americas Conference on Information Systems (AMCIS 2020), Salt Lake City, UT, USA, 10–14 August 2020; pp. 1–11, ISBN 978-1-7336325-4-6. [Google Scholar]

- Koller, J.; Häfner, R.; Döpper, F. Decentralized Spare Parts Production for the Aftermarket using Additive Manufacturing—A Literature Review. Procedia CIRP 2022, 107, 894–901. [Google Scholar] [CrossRef]

- Banker, R.D.; Kauffman, R.J. 50th Anniversary Article: The Evolution of Research on Information Systems: A Fiftieth-Year Survey of the Literature in Management Science. Manag. Sci. 2004, 50, 281–298. [Google Scholar] [CrossRef]

- Booth, A.; Sutton, A.; Papaioannou, D. Systematic Approaches to a Successful Literature Review, 2nd ed.; SAGE: Los Angeles, CA, USA; London, UK; New Delhi, India; Singapore; Washington, DC, USA; Melbourne, Australia, 2016; ISBN 978-1-4739-1245-8. [Google Scholar]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097. [Google Scholar] [CrossRef] [PubMed]

- Kamiske, G.F.; Brauer, J.-P. Qualitätsmanagement von A bis Z: Wichtige Begriffe des Qualitätsmanagements und ihre Bedeutung; Hanser: München, Germany; Wien, Austria, 2011; ISBN 978-3-446-42581-1. [Google Scholar]

- Schmitt, R.; Pfeifer, T. Qualitätsmanagement: Strategien-Methoden-Techniken; Hanser: München, Germany, 2015; ISBN 978-3-446-43432-5. [Google Scholar]

- Ma, J.; Kim, H.M. Predictive Model Selection for Forecasting Product Returns. J. Mech. Des. Trans. ASME 2016, 138, 054501. [Google Scholar] [CrossRef]

- Cui, H.; Rajagopalan, S.; Ward, A.R. Predicting product return volume using machine learning methods. Eur. J. Oper. Res. 2020, 281, 612–627. [Google Scholar] [CrossRef]

- Östlin, J.; Sundin, E.; Björkman, M. Importance of closed-loop supply chain relationships for product remanufacturing. Int. J. Prod. Econ. 2008, 115, 336–348. [Google Scholar] [CrossRef]

- Sundin, E.; Sakao, T.; Lind, S.; Kao, C.-C.; Joungerious, B. Map of Remanufacturing Business Model Landscape; European Remanufacturing Network: Aylesbury, UK, 2016. [Google Scholar]

- Agrawal, S.; Singh, R.K. Forecasting product returns and reverse logistics performance: Structural equation modelling. MEQ 2020, 31, 1223–1237. [Google Scholar] [CrossRef]

- Jung, G.; Park, J.; Kim, Y.; Kim, Y.B. A modified bootstrap method for intermittent demand forecasting for rare spare parts. Int. J. Ind. Eng. Theory Appl. Pract. 2017, 24, 245–254. [Google Scholar]

- Tsiliyannis, C.A. Markov chain modeling and forecasting of product returns in remanufacturing based on stock mean-age. Eur. J. Oper. Res. 2018, 271, 474–489. [Google Scholar] [CrossRef]

- Geda, M.W.; Kwong, C.K. Forecasting of Used Product Returns for Remanufacturing. In Proceedings of the 2018 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Bangkok, Thailand, 16–19 December 2018; pp. 889–893. [Google Scholar]

- Zhou, L.; Xie, J.; Gu, X.; Lin, Y.; Ieromonachou, P.; Zhang, X. Forecasting return of used products for remanufacturing using Graphical Evaluation and Review Technique (GERT). Int. J. Prod. Econ. 2016, 181, 315–324. [Google Scholar] [CrossRef]

- Zhu, S.; Dekker, R.; van Jaarsveld, W.; Renjie, R.W.; Koning, A.J. An improved method for forecasting spare parts demand using extreme value theory. Eur. J. Oper. Res. 2017, 261, 169–181. [Google Scholar] [CrossRef]

- van der Auweraer, S.; Boute, R. Forecasting spare part demand using service maintenance information. Int. J. Prod. Econ. 2019, 213, 138–149. [Google Scholar] [CrossRef]

- Kim, J.-D.; Kim, T.-H.; Han, S.W. Demand Forecasting of Spare Parts Using Artificial Intelligence: A Case Study of K-X Tanks. Mathematics 2023, 11, 501. [Google Scholar] [CrossRef]

- Choi, B.; Suh, J.H. Forecasting spare parts demand of military aircraft: Comparisons of data mining techniques and managerial features from the case of South Korea. Sustainability 2020, 12, 6045. [Google Scholar] [CrossRef]

- Kim, J. Text Mining-based Approach for Forecasting Spare Parts Demand of K-X Tanks. In Proceedings of the 2018 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Bangkok, Thailand, 16–19 December 2018; pp. 1652–1656. [Google Scholar]

- Chandriah, K.K.; Naraganahalli, R.V. RNN/LSTM with modified Adam optimizer in deep learning approach for automobile spare parts demand forecasting. Multimed. Tools Appl. 2021, 80, 26145–26159. [Google Scholar] [CrossRef]

- Pawar, N.; Tiple, B. Analysis on Machine Learning Algorithms and Neural Networks for Demand Forecasting of Anti-Aircraft Missile Spare Parts. In Proceedings of the 2019 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 17–19 July 2019; pp. 854–859. [Google Scholar]

- Chien, C.-F.; Ku, C.-C.; Lu, Y.-Y. Ensemble learning for demand forecast of After-Market spare parts to empower data-driven value chain and an empirical study. Comput. Ind. Eng. 2023, 185, 109670. [Google Scholar] [CrossRef]

- Guo, F.; Diao, J.; Zhao, Q.; Wang, D.; Sun, Q. A double-level combination approach for demand forecasting of repairable airplane spare parts based on turnover data. Comput. Ind. Eng. 2017, 110, 92–108. [Google Scholar] [CrossRef]

- do Rego, J.R.; De Mesquita, M.A. Demand forecasting and inventory control: A simulation study on automotive spare parts. Int. J. Prod. Econ. 2015, 161, 1–16. [Google Scholar] [CrossRef]

- Dombi, J.; Jónás, T.; Tóth, Z.E. Modeling and long-term forecasting demand in spare parts logistics businesses. Int. J. Prod. Econ. 2018, 201, 1–17. [Google Scholar] [CrossRef]

- Amirkolaii, K.N.; Baboli, A.; Shahzad, M.K.; Tonadre, R. Demand Forecasting for Irregular Demands in Business Aircraft Spare Parts Supply Chains by using Artificial Intelligence (AI). IFAC-PapersOnLine 2017, 50, 15221–15226. [Google Scholar] [CrossRef]

- Baisariyev, M.; Bakytzhanuly, A.; Serik, Y.; Mukhanova, B.; Babai, M.Z.; Tsakalerou, M.; Papadopoulos, C.T. Demand forecasting methods for spare parts logistics for aviation: A real-world implementation of the Bootstrap method. Procedia Manuf. 2021, 55, 500–506. [Google Scholar] [CrossRef]

- İfraz, M.; Aktepe, A.; Ersöz, S.; Çetinyokuş, T. Demand forecasting of spare parts with regression and machine learning methods: Application in a bus fleet. J. Eng. Res. 2023, 11, 100057. [Google Scholar] [CrossRef]

- Mobarakeh, N.A.; Shahzad, M.K.; Baboli, A.; Tonadre, R. Improved Forecasts for uncertain and unpredictable Spare Parts Demand in Business Aircraft’s with Bootstrap Method. IFAC-PapersOnLine 2017, 50, 15241–15246. [Google Scholar] [CrossRef]

- AlAlaween, W.H.; Abueed, O.A.; AlAlawin, A.H.; Abdallah, O.H.; Albashabsheh, N.T.; AbdelAll, E.S.; Al-Abdallat, Y.A. Artificial neural networks for predicting the demand and price of the hybrid electric vehicle spare parts. Cogent Eng. 2022, 9, 2075075. [Google Scholar] [CrossRef]

- Armenzoni, M.; Montanari, R.; Vignali, G.; Bottani, E.; Ferretti, G.; Solari, F.; Rinaldi, M. An integrated approach for demand forecasting and inventory management optimisation of spare parts. Int. J. Simul. Process Model. 2015, 10, 223–240. [Google Scholar] [CrossRef]

- Babaveisi, V.; Teimoury, E.; Gholamian, M.R.; Rostami-Tabar, B. Integrated demand forecasting and planning model for repairable spare part: An empirical investigation. Int. J. Prod. Res. 2023, 61, 6791–6807. [Google Scholar] [CrossRef]

- Boukhtouta, A.; Jentsch, P. Support Vector Machine for Demand Forecasting of Canadian Armed Forces Spare Parts. In Proceedings of the 2018 6th International Symposium on Computational and Business Intelligence (ISCBI), Basel, Switzerland, 27–29 August 2018; pp. 59–64. [Google Scholar]

- Dali, H.; Chengcheng, L. Demand forecast of equipment spare parts based on EEMD-LSTM. In Proceedings of the 2021 6th International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Oita, Japan, 25–27 November 2021; pp. 230–234. [Google Scholar]

- Han, Y.; Wang, L.; Gao, J.; Xing, Z.; Tao, T. Combination forecasting based on SVM and neural network for urban rail vehicle spare parts demand. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 4660–4665. [Google Scholar]

- Jónás, T.; Tóth, Z.E.; Dombi, J. A knowledge discovery based approach to long-term forecasting of demand for electronic spare parts. In Proceedings of the 2015 16th IEEE International Symposium on Computational Intelligence and Informatics (CINTI), Budapest, Hungary, 19–21 November 2015; pp. 291–296. [Google Scholar]

- Lee, H.; Kim, J. A Predictive Model for Forecasting Spare Parts Demand in Military Logistics. In Proceedings of the 2018 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Bangkok, Thailand, 16–19 December 2018; pp. 1106–1110. [Google Scholar]

- Liu, Y.; Zhang, Q.; Fan, Z.-P.; You, T.-H.; Wang, L.-X. Maintenance Spare Parts Demand Forecasting for Automobile 4S Shop Considering Weather Data. IEEE Trans. Fuzzy Syst. 2019, 27, 943–955. [Google Scholar] [CrossRef]

- Ma, Z.; Wang, C.; Zhang, Z. Deep Learning Algorithms for Automotive Spare Parts Demand Forecasting. In Proceedings of the 2021 International Conference on Computer Information Science and Artificial Intelligence (CISAI), Kunming, China, 17–19 September 2021; pp. 358–361. [Google Scholar]

- de Melo Menezes, B.A.; de Siqueira Braga, D.; Hellingrath, B.; de Lima Neto, F.B. An evaluation of forecasting methods for anticipating spare parts demand. In Proceedings of the 2015 Latin America Congress on Computational Intelligence (LA-CCI), Curitiba, Brazil, 13–16 October 2015; pp. 1–6. [Google Scholar]

- Niu, P.; Wang, Z.; Lei, Y.; Wan, J. Demand Forecast of Spare Parts of Air Materials Based on Wavelet Analysis and GM(1,1)-AR(p) Model. In Proceedings of the 2020 International Conference on Artificial Intelligence and Electromechanical Automation (AIEA), Tianjin, China, 26–28 June 2020; pp. 665–669. [Google Scholar]

- Pawar, N.; Tiple, B. Demand Forecasting of Anti-Aircraft Missile Spare Parts Using Neural Network. In Proceedings of the 2019 3rd International conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 12–14 June 2019; pp. 572–578. [Google Scholar]

- Qiu, Q.; Qin, C.; Shi, J.; Zhou, H. Research on Demand Forecast of Aircraft Spare Parts Based on Fractional Order Discrete Grey Model. In Proceedings of the 2019 IEEE 5th International Conference on Computer and Communications (ICCC), Chengdu, China, 6–9 December 2019; pp. 2212–2216. [Google Scholar]

- Wang, H.; Liu, H.; Shao, S.; Zhang, Z. Demand Forecasting and Impact Analysis of Spare Parts Based on Large Components of the Warship. In Proceedings of the 2023 5th International Conference on System Reliability and Safety Engineering (SRSE), Beijing, China, 20–23 October 2023; pp. 38–43. [Google Scholar]

- Wang, Z.; Wen, J.; Hua, D. Research on distribution network spare parts demand forecasting and inventory quota. In Proceedings of the 2014 IEEE PES Asia-Pacific Power and Energy Engineering Conference (APPEEC), Hong Kong, China, 7–10 December 2014; pp. 1–6. [Google Scholar]

- Wu, X.; Bian, W. Demand analysis and forecast for spare parts of perishable hi-tech products. In Proceedings of the 2015 International Conference on Logistics, Informatics and Service Sciences (LISS), Barcelona, Spain, 27–29 July 2015; pp. 1–6. [Google Scholar]

- Xing, R.; Shi, X. A BP-SVM combined model for intermittent spare parts demand prediction. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; pp. 1085–1090. [Google Scholar]

- Yang, C.; Xu, Q.; Qin, H.; Xuan, K. Grey Forecasting Method of Equipment Spare Parts Demand Based on Swarm Intelligence Optimization. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; pp. 1–4. [Google Scholar]

- Cao, Y.; Li, Y. A two-stage approach of forecasting spare parts demand using particle swarm optimization and fuzzy neural network. J. Comput. Inf. Syst. 2014, 10, 6785–6793. [Google Scholar]

- Carmo, T.; Cruz, M.; Santos, J.; Ramos, S.; Barroso, S.; Araújo, P. Statistical and Machine Learning Methods for Automotive Spare Parts Demand Prediction. Math. Ind. 2022, 39, 471–476. [Google Scholar] [CrossRef]

- Caserta, M.; D’Angelo, L. Intermittent demand forecasting for spare parts with little historical information. J. Oper. Res. Soc. 2024, 76, 294–309. [Google Scholar] [CrossRef]

- Ding, J.; Liu, Y.; Cao, Y.; Zhang, L.; Wang, J. Spare part demand prediction based on context-aware matrix factorization. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2015; Volume 9313, pp. 304–315. [Google Scholar] [CrossRef]

- Fan, L.; Liu, X.; Mao, W.; Yang, K.; Song, Z. Spare Parts Demand Forecasting Method Based on Intermittent Feature Adaptation. Entropy 2023, 25, 764. [Google Scholar] [CrossRef]

- Guimaraes, C.B.; Marques, J.M.; Tortato, U. Demand forecasting for high-turnover spare parts in agricultural and construction machines: A case study. S. Afr. J. Ind. Eng. 2020, 31, 116–128. [Google Scholar] [CrossRef]

- Hong, K.; Ren, Y.; Li, F.; Mao, W.; Gao, X. Robust Interval Prediction of Intermittent Demand for Spare Parts Based on Tensor Optimization. Sensors 2023, 23, 7182. [Google Scholar] [CrossRef] [PubMed]

- Hu, Q.; Bai, Y.; Zhao, J.; Cao, W. Modeling spare parts demands forecast under two-dimensional preventive maintenance policy. Math. Probl. Eng. 2015, 2015, 728241. [Google Scholar] [CrossRef][Green Version]

- Hu, Y.G.; Sun, S.; Wen, J.Q. Agricultural machinery spare parts demand forecast based on BP neural network. Appl. Mech. Mater. 2014, 635–637, 1822–1825. [Google Scholar] [CrossRef]

- Huang, G.; Yang, Y.; Li, W.; Cao, X.; Yang, Z. A convolutional neural network- back propagation based three-layer combined forecasting method for spare part demand. RAIRO-Oper. Res. 2024, 58, 4181–4195. [Google Scholar] [CrossRef]

- Innuphat, S.; Toahchoodee, M. The Implementation of Discrete-Event Simulation and Demand Forecasting Using Temporal Fusion Transformers to Validate Spare Parts Inventory Policy for The Petrochemicals Industry. ECTI Trans. Comput. Inf. Technol. 2022, 16, 247–258. [Google Scholar] [CrossRef]

- Jiang, P.; Huang, Y.; Liu, X. Intermittent demand forecasting for spare parts in the heavy-duty vehicle industry: A support vector machine model. Int. J. Prod. Res. 2021, 59, 7423–7440. [Google Scholar] [CrossRef]

- Kačmáry, P.; Malindžák, D.; Spišák, J. The Design of Forecasting System Used for Prediction of Electro-Motion Spare Parts Demands as an Improving Tool for an Enterprise Management. Manag. Syst. Prod. Eng. 2019, 27, 242–249. [Google Scholar] [CrossRef]

- Kim, J.-D.; Hwang, J.-H.; Doh, H.-H. A Predictive Model with Data Scaling Methodologies for Forecasting Spare Parts Demand in Military Logistics. Def. Sci. J. 2023, 73, 666–674. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, Y.; Yan, X.; Peng, Z. A novel prediction model for aircraft spare part intermittent demand in aviation transportation logistics using multi-components accumulation and high resolution analysis. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2015, 229, 384–395. [Google Scholar] [CrossRef]

- Liu, M. Equipment Spare Parts Demand Forecasting and Ordering Decision Based on Holt and MPG. Front. Artif. Intell. Appl. 2023, 373, 306–312. [Google Scholar] [CrossRef]

- Lucht, T.; Alieksieiev, V.; Kämpfer, T.; Nyhuis, P. Spare Parts Demand Forecasting in Maintenance, Repair & Overhaul. In Proceedings of the Conference on Production Systems and Logistics; Publish-Ing: Hannover, Germany, 2022. [Google Scholar] [CrossRef]

- Ma, J.; Kim, H.M. Predictive modeling of product returns for remanufacturing. In Proceedings of the ASME Design Engineering Technical Conference; American Society of Mechanical Engineers:: New York, NY, USA, 2015; Volume 2A-2015. [Google Scholar] [CrossRef]

- Mao, H.L.; Gao, J.W.; Chen, X.J.; Gao, J.D. Demand prediction of the rarely used spare parts based on the BP neural network. Appl. Mech. Mater. 2014, 519–520, 1511–1517. [Google Scholar] [CrossRef]

- Özbay, E.; Hacialioğlu, B.; Dokuyucu, B.İ.; Şahin, H.; Saçlı, M.M.; Genç, M.N.; Staiou, E.; Paldrak, M. Developing a Spare Parts Demand Forecasting System. In Proceedings of the International Symposium for Production Research 2019; Lecture Notes in Mechanical Engineering. Springer: Cham, Switzerland, 2020; pp. 676–691. [Google Scholar] [CrossRef]

- Qiu, C.; Zhao, B.; Liu, S.; Zhang, W.; Zhou, L.; Li, Y.; Guo, R. Data Classification and Demand Prediction Methods Based on Semi-Supervised Agricultural Machinery Spare Parts Data. Agriculture 2023, 13, 49. [Google Scholar] [CrossRef]

- Ren, X.; Zhang, X.-F. Spare Parts Demand Forecasting based on ARMA Model. In Proceedings of the SPIE-The International Society for Optical Engineering, Xi’an, China, 16–18 September 2022; Volume 12462. [Google Scholar] [CrossRef]

- Rosienkiewicz, M. Accuracy Assessment of Artificial Intelligence-Based Hybrid Models for Spare Parts Demand Forecasting in Mining Industry. Adv. Intell. Syst. Comput. 2020, 1052, 176–187. [Google Scholar] [CrossRef]

- Sun, Y.; Yan, X.; Wang, Z. Demand Forecast of Aviation Spare Parts Based on Grey Model. In Proceedings of the SPIE-The International Society for Optical Engineering, Kaifeng, China, 26–28 May 2023; Volume 12784. [Google Scholar] [CrossRef]

- Tsao, Y.-C.; Kurniati, N.; Pujawan, I.N.; Yaqin, A.M.A. Spare parts demand forecasting in energy industry: A stacked generalization-based approach. In Proceedings of the 2019 International Conference on Management Science and Industrial Engineering, Phuket, Thailand, 24–26 May 2019. [Google Scholar] [CrossRef]

- Tsao, Y.-C.; Yaqin, A.; Lu, J.-C.; Kurniati, N.; Pujawan, N. Intelligent Demand Forecasting Approaches for Spare Parts in the Energy Industry. Int. J. Ind. Eng. Theory Appl. Pract. 2024, 31, 560–576. [Google Scholar] [CrossRef]

- Vaitkus, V.; Zylius, G.; Maskeliunas, R. Electrical spare parts demand forecasting. Elektron. Elektrotechnika 2014, 20, 7–10. [Google Scholar] [CrossRef]

- Vasumathi, B.; Saradha, A. Enhancement of intermittent demands in forecasting for spare parts industry. Indian J. Sci. Technol. 2015, 8, 1–8. [Google Scholar] [CrossRef]

- Xu, H.; Zhao, W.; Lin, S.; Niu, J.; Li, P. A Demand Forecast Method for Expressway Spare Parts Based on Analysis of Influencing Factors. In Proceedings of the CICTP 2020: Transportation Evolution Impacting Future Mobility-Selected Papers from the 20th COTA International Conference of Transportation Professionals, Xi’an, China, 14–16 August 2020. [Google Scholar]

- Yang, Y.; Liu, W.; Zeng, T.; Guo, L.; Qin, Y.; Wang, X. An Improved Stacking Model for Equipment Spare Parts Demand Forecasting Based on Scenario Analysis. Sci. Program. 2022, 2022, 5415702. [Google Scholar] [CrossRef]

- Zhu, Q.; Yang, L.; Liu, Y. Research on vehicle spare parts demand forecast based on XGBoost-LightGBM. In Proceedings of the 2023 5th International Conference on Pattern Recognition and Intelligent Systems, Shenyang, China, 28–30 July 2023; pp. 109–114. [Google Scholar] [CrossRef]

- Alenezi, D.F.; Biehler, M.; Shi, J.; Li, J. Physics-Informed Weakly-Supervised Learning for Quality Prediction of Manufacturing Processes. IEEE Trans. Autom. Sci. Eng. 2024, 22, 2006–2019. [Google Scholar] [CrossRef]

- Wang, Y.; Hu, W.; Zhang, H.; He, Y. Causal Graph Attention Networks for Quality Prediction of Mass Customization Production Process. In Proceedings of the 2024 IEEE 13th Data Driven Control and Learning Systems Conference (DDCLS), Kaifeng, China, 17–19 May 2024; pp. 559–564. [Google Scholar]

- Zhou, H.; Yu, K.-M.; Chen, Y.-C.; Hsu, H.-P. A Hybrid Feature Selection Method RFSTL for Manufacturing Quality Prediction Based on a High Dimensional Imbalanced Dataset. IEEE Access 2021, 9, 29719–29735. [Google Scholar] [CrossRef]

- Kao, H.-A.; Hsieh, Y.-S.; Chen, C.-H.; Lee, J. Quality prediction modeling for multistage manufacturing based on classification and association rule mining. MATEC Web Conf. 2017, 123, 00029. [Google Scholar] [CrossRef]

- Psarommatis, F.; Zhou, B.; Kharlamov, E. Implementation of Zero Defect Manufacturing using quality prediction: A spot welding case study from Bosch. Procedia Comput. Sci. 2024, 232, 1299–1308. [Google Scholar] [CrossRef]

- Bai, Y.; Xie, J.; Wang, D.; Zhang, W.; Li, C. A manufacturing quality prediction model based on AdaBoost-LSTM with rough knowledge. Comput. Ind. Eng. 2021, 155, 107227. [Google Scholar] [CrossRef]

- Caihong, Z.; Zengyuan, W.; Chang, L. A Study on Quality Prediction for Smart Manufacturing Based on the Optimized BP-AdaBoost Model. In Proceedings of the 2019 IEEE International Conference on Smart Manufacturing, Industrial & Logistics Engineering (SMILE), Hangzhou, China, 20–21 April 2019; pp. 1–3. [Google Scholar]

- Liu, D.; Hu, S.; Zhao, X.; Qiu, Q.; Jiang, Y.; Fan, P. Multi-condition Quality Prediction of Production Process Based on Hybrid Transfer Learning. In Proceedings of the 2024 6th International Conference on System Reliability and Safety Engineering (SRSE), Hangzhou, China, 11–14 October 2024; pp. 353–358. [Google Scholar]

- Peng, C.; Cheng, Z.; Ren, H.; Lu, R. A Quality Prediction Hybrid Model of Manufacturing Process Based on Genetic Programming. In Proceedings of the 2022 IEEE 11th Data Driven Control and Learning Systems Conference (DDCLS), Chengdu, China, 3–5 August 2022; pp. 77–81. [Google Scholar]

- Zhang, D.; Liu, Z.; Jia, W.; Liu, H.; Tan, J. Path Enhanced Bidirectional Graph Attention Network for Quality Prediction in Multistage Manufacturing Process. IEEE Trans. Ind. Inform. 2022, 18, 1018–1027. [Google Scholar] [CrossRef]

- Bai, Y.; Sun, Z.; Deng, J.; Li, L.; Long, J.; Li, C. Manufacturing quality prediction using intelligent learning approaches: A comparative study. Sustainability 2018, 10, 85. [Google Scholar] [CrossRef]

- Bai, Y.; Sun, Z.; Zeng, B.; Long, J.; Li, L.; de Oliveira, J.V.; Li, C. A comparison of dimension reduction techniques for support vector machine modeling of multi-parameter manufacturing quality prediction. J. Intell. Manuf. 2019, 30, 2245–2256. [Google Scholar] [CrossRef]

- Demirel, K.C.; Şahin, A.; Albey, E. A web-based decision support system for quality prediction in manufacturing using ensemble of regressor chains. Commun. Comput. Inf. Sci. 2020, 1255 CCIS, 96–114. [Google Scholar] [CrossRef]

- Deng, J.; Bai, Y.; Li, C. A deep regression model with low-dimensional feature extraction for multi-parameter manufacturing quality prediction. Appl. Sci. 2020, 10, 2522. [Google Scholar] [CrossRef]

- Chien, C.-H.; Trappey, A.J.; Wang, C.-C. ARIMA-AdaBoost hybrid approach for product quality prediction in advanced transformer manufacturing. Adv. Eng. Inform. 2023, 57, 102055. [Google Scholar] [CrossRef]

- Wang, H.; Li, B.; Xuan, F.-Z. A dimensionally augmented and physics-informed machine learning for quality prediction of additively manufactured high-entropy alloy. J. Mater. Process. Technol. 2022, 307, 117637. [Google Scholar] [CrossRef]

- Shim, J.; Kang, S.; Cho, S. Active inspection for cost-effective fault prediction in manufacturing process. J. Process Control. 2021, 105, 250–258. [Google Scholar] [CrossRef]

- Zhang, J.; Wang, P.; Gao, R.X. Modeling of Layer-wise Additive Manufacturing for Part Quality Prediction. Procedia Manuf. 2018, 16, 155–162. [Google Scholar] [CrossRef]

- Wang, M.; Wang, J.; Gao, W.; Guo, M. E-YQP: A self-adaptive end-to-end framework for quality prediction in yarn spinning manufacturing. Adv. Eng. Inform. 2024, 62, 102623. [Google Scholar] [CrossRef]

- Huynh, N.-T. Multi-stage defect prediction and classification model to reduce the inspection time in semiconductor back end manufacturing process and an empirical application. Comput. Ind. Eng. 2024, 187, 109778. [Google Scholar] [CrossRef]

- Wang, P.; Qu, H.; Zhang, Q.; Xu, X.; Yang, S. Production quality prediction of multistage manufacturing systems using multi-task joint deep learning. J. Manuf. Syst. 2023, 70, 48–68. [Google Scholar] [CrossRef]

- Nikita, S.; Thakur, G.; Jesubalan, N.G.; Kulkarni, A.; Yezhuvath, V.B.; Rathore, A.S. AI-ML applications in bioprocessing: ML as an enabler of real time quality prediction in continuous manufacturing of mAbs. Comput. Chem. Eng. 2022, 164, 107896. [Google Scholar] [CrossRef]

- Schorr, S.; Möller, M.; Heib, J.; Fang, S.; Bähre, D. Quality Prediction of Reamed Bores Based on Process Data and Machine Learning Algorithm: A Contribution to a More Sustainable Manufacturing. Procedia Manuf. 2020, 43, 519–526. [Google Scholar] [CrossRef]

- Kobayashi, S.; Miyakawa, M.; Takemasa, S.; Takahashi, N.; Watanabe, Y.; Satoh, T.; Kano, M. Transfer Learning for Quality Prediction in a Chemical Toner Manufacturing Process. In 14th International Symposium on Process Systems Engineering; Yamashita, Y., Kano, M., Eds.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 1663–1668. [Google Scholar]

- Sun, X.; Beghi, A.; Susto, G.A.; Lv, Z. Deep learning-based quality prediction for multi-stage sequential hot rolling processes in heavy rail manufacturing. Comput. Ind. Eng. 2024, 196, 110466. [Google Scholar] [CrossRef]

- Yang, X.; Li, Z.; Cao, L.; Chen, L.; Huang, Q.; Bi, G. Process optimization and quality prediction of laser aided additive manufacturing SS 420 based on RSM and WOA-Bi-LSTM. Mater. Today Commun. 2024, 38, 107882. [Google Scholar] [CrossRef]

- Al-Kharaz, M.; Ananou, B.; Ouladsine, M.; Combal, M.; Pinaton, J. Quality Prediction in Semiconductor Manufacturing processes Using Multilayer Perceptron Feedforward Artificial Neural Network. In Proceedings of the 2019 8th International Conference on Systems and Control (ICSC), Marrakesh, Morocco, 23–25 October 2019; pp. 423–428. [Google Scholar]

- Bai, Y.; Li, C.; Sun, Z.; Chen, H. Deep neural network for manufacturing quality prediction. In Proceedings of the 2017 Prognostics and System Health Management Conference (PHM-Harbin), Harbin, China, 9–12 July 2017; pp. 1–5. [Google Scholar]

- Forsberg, B.; Williams, H.; Macdonald, B.; Chen, T.; Hamzeh, R.; Hulse, K. Utilising Explainable Techniques for Quality Prediction in a Complex Textiles Manufacturing Use Case. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), Bari, Italy, 28 August–1 September 2024; pp. 245–251. [Google Scholar]

- Jiang, J.-R.; Yen, C.-T. Markov Transition Field and Convolutional Long Short-Term Memory Neural Network for Manufacturing Quality Prediction. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-Taiwan), Taoyuan, Taiwan, 28–30 September 2020; pp. 1–2. [Google Scholar]

- Ju, L.; Zhou, J.; Zhang, X. Corundum production quality prediction based on support vector regression. In Proceedings of the 2017 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; pp. 2028–2032. [Google Scholar]

- Lee, K.-T.; Lee, Y.-S.; Yoon, H. Development of Edge-based Deep Learning Prediction Model for Defect Prediction in Manufacturing Process. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 16–18 October 2019; pp. 248–250. [Google Scholar]

- Matzka, S. Using Process Quality Prediction to Increase Resource Efficiency in Manufacturing Processes. In Proceedings of the 2018 First International Conference on Artificial Intelligence for Industries (AI4I), Laguna Hills, CA, USA, 26–28 September 2018; pp. 110–111. [Google Scholar]

- Mohammadi, P.; Wang, Z.J. Machine learning for quality prediction in abrasion-resistant material manufacturing process. In Proceedings of the 2016 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Vancouver, BC, Canada, 15–18 May 2016; pp. 1–4. [Google Scholar]

- Trappey, A.J.C.; Chien, C.-H. Intelligent Product Quality Prediction for Highly Customized Complex Production Adopting Ensemble Learning Model. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, Oahu, HI, USA, 1–4 October 2023; pp. 4575–4580. [Google Scholar]

- Yuan, B.-W.; Zhang, Z.-L.; Luo, X.-G.; Yu, Y.; Sun, J.-Y.; Zou, X.-H.; Zou, X.-D. Defect prediction of low pressure die casting in crankcase production based on data mining methods. In Proceedings of the 2020 Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 2560–2564. [Google Scholar]

- Beckschulte, S.; Mohren, J.; Huebser, L.; Buschmann, D.; Schmitt, R.H. Benchmarking Control Charts and Machine Learning Methods for Fault Prediction in Manufacturing. In Lecture Notes in Production Engineering; Springer: Berlin/Heidelberg, Germany, 2023; Part F1163; pp. 545–554. [Google Scholar] [CrossRef]

- Chen, M.; Wei, Z.; Li, L.; Zhang, K. Edge computing-based proactive control method for industrial product manufacturing quality prediction. Sci. Rep. 2024, 14, 1288. [Google Scholar] [CrossRef]

- Chien, C.-H.; Trappey, A.J. AdaBoost-Based Transfer Learning Approach for Highly-Customized Product Quality Prediction in Smart Manufacturing. Adv. Transdiscipl. Eng. 2024, 60, 236–244. [Google Scholar] [CrossRef]

- Deuse, J.; Schmitt, J.; Bönig, J.; Beitinger, G. Dynamic X-ray testing in electronics production-Application of data mining techniques for quality prediction; [Dynamische Röntgenprüfung in der Elektronikproduktion: Einsatz von Data-Mining-Verfahren zur Qualitätsprognose]. ZWF Z. Fuer Wirtsch. Fabr. 2019, 114, 264–267. [Google Scholar] [CrossRef]

- Huang, Y.; Yue, C.; Tan, X.; Zhou, Z.; Li, X.; Zhang, X.; Zhou, C.; Peng, Y.; Wang, K. Quality Prediction for Wire Arc Additive Manufacturing Based on Multi-source Signals, Whale Optimization Algorithm–Variational Modal Decomposition, and One-Dimensional Convolutional Neural Network. J. Mater. Eng. Perform. 2024, 33, 11351–11364. [Google Scholar] [CrossRef]

- Jun, J.; Chang, T.-W.; Jun, S. Quality prediction and yield improvement in process manufacturing based on data analytics. Process. 2020, 8, 1068. [Google Scholar] [CrossRef]

- Jung, H.; Jeon, J.; Choi, D.; Park, A.J.-Y. Application of machine learning techniques in injection molding quality prediction: Implications on sustainable manufacturing industry. Sustainability 2021, 13, 4120. [Google Scholar] [CrossRef]

- Kim, A.; Oh, K.; Park, H.; Jung, J.-Y. Comparison of quality prediction algorithms in manufacturing process. ICIC Express Lett. 2017, 11, 1127–1132. [Google Scholar]

- Lee, J.H.; Do Noh, S.; Kim, H.-J.; Kang, Y.-S. Implementation of cyber-physical production systems for quality prediction and operation control in metal casting. Sensors 2018, 18, 1428. [Google Scholar] [CrossRef]

- Li, R.; Wang, X.; Wang, Z.; Zhu, Z.; Liu, Z. Multistage Quality Prediction Using Neural Networks in Discrete Manufacturing Systems. Appl. Sci. 2023, 13, 8776. [Google Scholar] [CrossRef]

- Olowe, M.; Ogunsanya, M.; Best, B.; Hanif, Y.; Bajaj, S.; Vakkalagadda, V.; Fatoki, O.; Desai, S. Spectral Features Analysis for Print Quality Prediction in Additive Manufacturing: An Acoustics-Based Approach. Sensors 2024, 24, 4864. [Google Scholar] [CrossRef]

- Sankhye, S.; Hu, G. Machine Learning Methods for Quality Prediction in Production. Logistics 2020, 4, 35. [Google Scholar] [CrossRef]

- Tercan, H.; Meisen, T. Online Quality Prediction in Windshield Manufacturing using Data-Efficient Machine Learning. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023. [Google Scholar] [CrossRef]

- Tian, S.; Zhang, Z.; Xie, X.; Yu, C. A new approach for quality prediction and control of multistage production and manufacturing process based on Big Data analysis and Neural Networks. Adv. Prod. Eng. Manag. 2022, 17, 326–338. [Google Scholar] [CrossRef]

- Tsou, C.-S.; Liou, C.; Cheng, L.; Zhou, H. Quality prediction through machine learning for the inspection and manufacturing process of blood glucose test strips. Cogent Eng. 2022, 9, 2083475. [Google Scholar] [CrossRef]

- Xiao, X.; Waddell, C.; Hamilton, C.; Xiao, H. Quality Prediction and Control in Wire Arc Additive Manufacturing via Novel Machine Learning Framework. Micromachines 2022, 13, 137. [Google Scholar] [CrossRef] [PubMed]

- Zhang, A.; Zhao, Y.; Li, X.; Fan, X.; Ren, X.; Li, Q.; Yue, L. Development of a Hybrid AI Model for Fault Prediction in Rod Pumping System for Petroleum Well Production. Energies 2024, 17, 5422. [Google Scholar] [CrossRef]

| Expert | Function | Sector |

|---|---|---|

| E1 | Product management | Commercial vehicle |

| E2 | Quality management | Rail transport |

| E3 | Business development | Passenger vehicle |

| E4 | Product management | Commercial vehicle |

| E5 | Technical project manager | Bike systems |

| Data Source | Publications | Percentage [%] |

|---|---|---|

| Simulation | [20,24,47,48,49,50,51,52] | 11 |

| Benchmark | [53,54,55,56,57] | 7 |

| Real-world data | [15,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115] | 82 |

| Traditional Statistical Models | Traditional ML Models | Deep Learning Models | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Publications * | MA | ARIMA | ES | SBA | CR | R | SVM | RF | ANN | LSTM |

| Saraswati et al. [15] | x | |||||||||

| Tsiliyannis [48] | x | |||||||||

| Zhu et al. [51] | x | x | ||||||||

| Van der Auweraer & Boute [52] | x | x | ||||||||

| Kim et al. [53] | x | x | x | x | x | x | ||||

| Choi & Suh [54] | x | x | x | x | ||||||

| Kim [55] | x | x | x | x | x | |||||

| Chandriah & Naraganahalli [56] | x | x | x | x | ||||||

| Chien et al. [58] | x | x | ||||||||

| Guo et al. [59] | x | x | ||||||||

| do Rego & de Mesquita [60] | x | x | ||||||||

| Dombi et al. [61] | x | x | x | |||||||

| Amirkolaii et al. [62] | x | x | x | x | x | |||||

| Ifraz et al. [64] | x | x | x | x | ||||||

| Mobarakeh et al. [65] | x | x | x | x | ||||||

| AlAlaween et al. [66] | x | x | ||||||||

| Babaveisi et al. [68] | x | x | x | |||||||

| Boukhtouta & Jentsch [69] | x | x | x | |||||||

| Dali & Chengcheng [70] | x | x | x | |||||||

| Han et al. [71] | x | x | ||||||||

| Lee & Kim [73] | x | x | x | |||||||

| Liu et al. [74] | x | x | ||||||||

| Ma et al. [75] | x | x | ||||||||

| Melo et al. [76] | x | x | x | |||||||

| Pawar & Tiple [78] | x | x | x | x | ||||||

| Wang et al. [81] | x | x | ||||||||

| Wu & Bian [82] | x | |||||||||

| Xing & Shi [83] | x | x | x | |||||||

| Cao & Li [85] | x | x | x | |||||||

| Carmo et al. [86] | x | |||||||||

| Caserta & D’Angelo [87] | x | x | x | |||||||

| Ding et al. [88] | x | x | x | |||||||

| Fan et al. [89] | x | x | x | x | ||||||

| Guimaraes et al. [90] | x | |||||||||

| Hong et al. [91] | x | x | x | x | ||||||

| Hu et al. [93] | x | |||||||||

| Huang et al. [94] | x | x | x | x | ||||||

| Jiang et al. [96] | x | x | x | x | x | x | x | |||

| Kacmary et al. [97] | x | x | ||||||||

| Kim et al. [98] | x | x | x | x | x | x | ||||

| Li et al. [99] | x | x | x | x | x | |||||

| Liu [100] | x | |||||||||

| Lucht et al. [101] | x | |||||||||

| Ma & Kim [102] | x | |||||||||

| Mao et al. [103] | x | |||||||||

| Özbay et al. [104] | x | x | ||||||||

| Qiu et al. [105] | x | |||||||||

| Ren & Zhang [106] | x | |||||||||

| Rosienkiewicz [107] | x | x | x | x | x | x | ||||

| Tsao et al. [109] | x | x | x | x | x | x | x | x | ||

| Tsao et al. [110] | x | x | x | x | x | x | x | x | ||

| Vaitkus et al. [111] | x | x | x | x | ||||||

| Vasumathi & Saradha [112] | x | |||||||||

| Xu et al. [113] | x | x | x | |||||||

| Yang et al. [114] | x | x | ||||||||

| Zhu et al. [115] | x | |||||||||

| Total | 17 | 15 | 21 | 13 | 15 | 12 | 21 | 12 | 31 | 9 |

| Data Source | Publications | Percentage [%] |

|---|---|---|

| Simulation | [116,117] | 3 |

| Benchmark | [118,119,120,121,122,123,124,125,126,127,128,129] | 23 |

| Real-world data | [130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166,167,168] | 74 |

| Regression | Classification | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Publications * | R | SVM | RF | ANN | LSTM | DT | RF | XGB | SVM | ANN |

| Alenezi et al. [116] | x | x | x | |||||||

| Wang et al. [117] | x | |||||||||

| Psarommatis et al. [120] | x | x | ||||||||

| Bai et al. [121] | x | x | ||||||||

| Caihong et al. [122] | x | |||||||||

| Liu et al. [123] | x | |||||||||

| Zhang et al. [125] | x | x | x | x | ||||||

| Bai et al. [126] | x | x | ||||||||

| Bai et al. [127] | x | |||||||||

| Demirel et al. [128] | x | |||||||||

| Deng et al. [129] | x | x | ||||||||

| Chien et al. [130] | ||||||||||

| Wang et al. [131] | x | x | x | |||||||

| Zhang et al. [133] | x | x | x | |||||||

| Wang et al. [134] | x | x | x | |||||||

| Wang et al. [136] | x | x | ||||||||

| Nikita et al. [137] | x | x | x | |||||||

| Schorr et al. [138] | x | |||||||||

| Kobayashi et al. [139] | x | x | ||||||||

| Sun et al. [140] | x | x | x | |||||||

| Yang et al. [141] | x | x | x | |||||||

| Bai et al. [143] | x | x | ||||||||

| Jiang & Yen [145] | x | x | ||||||||

| Ju et al. [146] | x | x | ||||||||

| Trappey & Chien [150] | x | |||||||||

| Beckschulte et al. [152] | x | |||||||||

| Kim et al. [159] | x | x | x | x | ||||||

| Li et al. [161] | x | x | ||||||||

| Tercan & Meisen [164] | x | x | x | x | ||||||

| Xiao et al. [167] | x | x | ||||||||

| Zhou et al. [118] | x | |||||||||

| Kao et al. [119] | x | x | x | |||||||

| Shim et al. [132] | x | x | ||||||||

| Huynh [135] | x | |||||||||

| Forsberg at al. [144] | x | x | x | |||||||

| Lee et al. [147] | x | x | ||||||||

| Matzka [148] | x | x | x | |||||||

| Mohammadi & Wang [149] | x | |||||||||

| Yuan et al. [151] | x | x | ||||||||

| Chen et al. [153] | x | x | x | x | ||||||

| Deuse et al. [155] | x | x | ||||||||

| Huang et al. [156] | x | x | ||||||||

| Jun et al. [157] | x | x | x | x | ||||||

| Jung et al. [158] | x | x | x | |||||||

| Lee et al. [160] | x | x | x | x | ||||||

| Olowe et al. [162] | x | x | x | x | ||||||

| Sankhye & Hu [163] | x | x | ||||||||

| Tian et al. [165] | x | |||||||||

| Zhang et al. [168] | x | x | ||||||||

| Total | 11 | 16 | 14 | 16 | 6 | 10 | 10 | 6 | 12 | 8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Grosse Erdmann, J.; Ahmeti, E.; Wolf, R.; Koller, J.; Döpper, F. Forecasting Return Quantity, Timing and Condition in Remanufacturing with Machine Learning: A Mixed-Methods Approach. Sustainability 2025, 17, 6367. https://doi.org/10.3390/su17146367

Grosse Erdmann J, Ahmeti E, Wolf R, Koller J, Döpper F. Forecasting Return Quantity, Timing and Condition in Remanufacturing with Machine Learning: A Mixed-Methods Approach. Sustainability. 2025; 17(14):6367. https://doi.org/10.3390/su17146367

Chicago/Turabian StyleGrosse Erdmann, Julian, Engjëll Ahmeti, Raphael Wolf, Jan Koller, and Frank Döpper. 2025. "Forecasting Return Quantity, Timing and Condition in Remanufacturing with Machine Learning: A Mixed-Methods Approach" Sustainability 17, no. 14: 6367. https://doi.org/10.3390/su17146367

APA StyleGrosse Erdmann, J., Ahmeti, E., Wolf, R., Koller, J., & Döpper, F. (2025). Forecasting Return Quantity, Timing and Condition in Remanufacturing with Machine Learning: A Mixed-Methods Approach. Sustainability, 17(14), 6367. https://doi.org/10.3390/su17146367