Abstract

Throughout history, natural disasters have caused severe damage to people and property worldwide, and flooding is one of the most destructive types. A key part of flood assessment has been the use of information derived from optical satellite imagery through spectral indices. This study evaluated a recent spectral index, the Normalised Difference Inundation Index; a new ensemble spectral index, the Concatenated Normalised Difference Water Index; and two mature spectral indices, the Normalised Difference Water Index and the differential Normalised Difference Water Index. Each index was combined with four machine learning algorithms (Decision Tree, Random Forest, Naive Bayes, and K-Nearest Neighbours) and applied to PlanetScope satellite imagery of the February 2022 Brisbane flood, which occurred in an urban environment. Statistical analysis was used to evaluate the results. Overall, there was no significant difference among the four algorithms in terms of accuracy and F1 score. However, significant differences emerged for some combinations of indices and algorithms. This research provides a validation of existing measures for identifying floods in an urban environment, which can help to improve sustainable development.

1. Introduction

Natural disasters have caused severe damage to the environment and society globally, in places such as Australia [1], Western Europe [2], Asia [3], and the United States (US) [4]. Reducing the impact of natural disasters is connected with United Nations Sustainable Development Goals 4, 9, and 11 [5,6]. More than 450 million people were affected by 700 natural disasters between 2010 and 2012 [7]. Due to the effects of climate change, natural disasters are becoming more frequent globally [8,9]. Natural disasters can also cause psychological distress to those who experience them [10]. Flooding is a particularly serious type of natural disaster that affects both people and the environment. Compared with floods in non-urban environments, urban floods affect people differently because of the greater density of housing and businesses and the presence of essential services such as hospitals and schools. Urban floods happen more frequently and with larger impacts, which are detrimental to both the economy and people [11]. Here, the city of Brisbane, in Queensland, Australia, which suffered a flood in February 2022, is used as a case study. The cost of buying and retrofitting flood-affected properties was AUD 741 million [12].

Remote sensing, in particular the capture and analysis of satellite images containing more than one spectral band [13,14], has aided in addressing natural disasters [15] such as floods [16]. Remote sensing has been applied to detect forests [17], buildings [18], droughts [19], vegetation [20], land cover [21,22], volcanic disasters [23,24], forest fires [25], earthquakes [26,27], geothermal anomalies from basins [28], avalanches [29], water areas [30], and floods [31]. Satellite images have been used to map the spatial extent of floods; however, there are notable inaccuracies when they are applied to urban environments. For example, built-up areas can be classified as flooded when the popular Normalised Difference Water Index is applied [32,33]. There is little research into why this occurs for urban flooding specifically; however, it could be due to (1) the diversity of urban environments, which contain a mixture of houses, apartments, roads, parks, gardens, and pools, compared with vegetated areas such as farms or forests; and (2) the coarse resolution of many satellite images, such as 10 m or 30 m per pixel, which means that these diverse features are merged together within a single pixel.

The development of Earth observation technology has led to over 900 satellites orbiting the Earth, extending the remote-sensing data available to various domains [34]. Satellites provide (1) a comprehensive view for Earth observation; (2) repeated monitoring for change detection using calibrated sensors; and (3) images at different resolutions. Together, these allow better management of resources across the unpredictable and diverse spectrum of hazards [35,36].

Satellites are able to capture sunlight reflected from the Earth. Because different objects reflect and absorb light with different intensities at different wavelengths [37], this information can be used to identify them. Rather than capturing the entire spectrum, a satellite sensor partitions the intensity values into groups referred to as bands, and for each band the mean intensity is stored as the band value. Spectral bands are spread across the visible and non-visible parts of the spectrum [38]. Table 1 shows the details of common spectral bands.

Table 1.

Details of spectral bands (blue, green, red, NIR, and SWIR) with band type and wavelength ranges.

The PlanetScope dataset is operated by Planet, which assembles images from approximately 130 satellites. The large number of satellites means that approximately the entire land surface of the Earth can be captured every day, although coverage is biased towards areas close to the North Pole. A review of Planet's temporal coverage [39] reported an overall mean revisit interval of 38.2 h and a median of 30.2 h. The case study presented here (Brisbane) has a revisit interval of 24–36 h. The satellites capture images with a resolution of approximately three meters per pixel [40]. PlanetScope imagery is a relatively new source that has seen only limited application in flood detection so far, and its distinctive characteristics (daily updates and higher-resolution images) make it a compelling source of data for this research. It has not been used as widely as other popular satellite data sources such as Landsat 8 and Sentinel-2, but it has already been shown to be useful for capturing images of disasters and of reconstruction after disasters [29], detecting floods [41], verifying the accuracy of flood classification [42], and enhancing the monitoring of coastal aquatic systems [43].

To enhance the information gained from satellite data, simple mathematical formulas, referred to as indices, can be applied to band values. These indices make it easier to delineate Earth's features, such as land and water. Table 2 shows the formulas of the spectral indices used in this study.

Table 2.

Spectral index formulas.

The Normalised Difference Water Index (NDWI) was designed to enhance the delineation of open water from other land features and is strongly related to plant water content [44]. It is widely used to extract open water features from satellite images [45,46,47,48].

The differential Normalised Difference Water Index (dNDWI) is a single-band spectral index computed over two time periods: pre-flood and peak-flood [49]. After applying the NDWI formula to both images, the dNDWI is calculated by subtracting the pre-NDWI image from the peak-NDWI image.
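As an illustration, McFeeters' NDWI, (Green − NIR)/(Green + NIR), and the dNDWI derived from it can be computed per pixel with NumPy. This is a minimal sketch assuming the green and NIR bands are already co-registered arrays of equal shape; the function names and the choice to return 0 where both bands are 0 are our own, and the study itself generated these images in ArcGIS.

```python
import numpy as np

def ndwi(green: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """McFeeters' NDWI = (Green - NIR) / (Green + NIR), with values in [-1, 1]."""
    green = np.asarray(green, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    denom = green + nir
    out = np.zeros_like(denom)
    # Leave 0 where both bands are 0 (e.g. no-data pixels) instead of dividing by zero
    np.divide(green - nir, denom, out=out, where=denom != 0)
    return out

def dndwi(green_pre, nir_pre, green_peak, nir_peak) -> np.ndarray:
    """dNDWI = peak-NDWI - pre-NDWI; positive where a pixel became wetter."""
    return ndwi(green_peak, nir_peak) - ndwi(green_pre, nir_pre)

# Hypothetical 2x2 reflectance values (not from the study)
green_pre = np.array([[0.10, 0.08], [0.12, 0.06]])
nir_pre = np.array([[0.30, 0.25], [0.35, 0.04]])
green_peak = np.array([[0.10, 0.09], [0.12, 0.06]])
nir_peak = np.array([[0.05, 0.26], [0.34, 0.04]])

print(np.round(dndwi(green_pre, nir_pre, green_peak, nir_peak), 3))
```

In this toy example the top-left pixel's NIR reflectance collapses between the two dates, so its dNDWI is strongly positive, while the bottom-right pixel is unchanged and its dNDWI is 0.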

Recently, a new spectral index was developed for mapping inundated areas: the Normalised Difference Inundation Index (NDII) [32]. The NDII captures the spectral differences between the period before the flood and the period during the flood. The index needs only one band, NIR, taken from two time-series images (pre-flood and peak-flood), because NIR reflectance changes dramatically in a flood event. This index can address the issue with McFeeters' NDWI whereby built-up areas were misclassified [33].
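Since the NDII is described as a normalised difference of the pre-flood and peak-flood NIR bands, a sketch can follow the same pattern as NDWI. The form used below, (NIR_pre − NIR_peak)/(NIR_pre + NIR_peak), is an assumption chosen so that a drop in NIR during the flood gives a positive value; the exact formula and sign convention should be checked against Levin and Phinn [32].

```python
import numpy as np

def ndii(nir_pre: np.ndarray, nir_peak: np.ndarray) -> np.ndarray:
    """Assumed NDII form: (NIR_pre - NIR_peak) / (NIR_pre + NIR_peak).

    Water absorbs strongly in the NIR, so a newly inundated pixel shows a
    large NIR drop between the two dates and therefore a positive value.
    """
    pre = np.asarray(nir_pre, dtype=np.float64)
    peak = np.asarray(nir_peak, dtype=np.float64)
    denom = pre + peak
    out = np.zeros_like(denom)
    # Leave 0 where both dates have zero NIR (e.g. no-data pixels)
    np.divide(pre - peak, denom, out=out, where=denom != 0)
    return out

# Hypothetical NIR reflectances: a newly flooded pixel and an unchanged roof
nir_pre = np.array([0.30, 0.25])
nir_peak = np.array([0.05, 0.25])
print(np.round(ndii(nir_pre, nir_peak), 3))
```

Because only one band is involved, a bright roof that keeps the same NIR reflectance on both dates scores 0, which illustrates why a change-based index can avoid some of the built-up misclassification attributed to single-date NDWI.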

The development of computer science has made large volumes of data and greater processing power available for algorithms, especially machine learning (ML) algorithms [50,51]. These ML algorithms can sometimes recognise and predict natural disasters more effectively than conventional approaches [52]. Decision Tree (DT), Random Forest (RF), Naive Bayes (NB), and K-Nearest Neighbour (KNN) are popular in existing research [53,54,55,56,57,58]. However, the majority of research into remote sensing and natural disasters has been performed by environmental scientists; applying a computer science approach, for example evaluating methods against a shared ground-truth dataset, can lead to different insights.

Information about the algorithms is summarised in Table 3, where n represents the number of samples, m the number of features, c the number of classes, v the number of vectors, and t the number of trees [59]. The selection of these algorithms allowed us to compare proximity-based algorithms (KNN), tree-based algorithms (DT, RF), and conditional-probability algorithms (NB); single (KNN, DT, NB) and ensemble (RF) methods; non-linear (DT, RF, KNN) and linear (NB) classifiers; and their time complexity.

Table 3.

Big O time complexity of the machine learning algorithms during training and testing.

This study aims to evaluate the performance of combinations of machine learning algorithms and spectral indices for urban floods, which can provide a new view of assessing flood events and advance the development of flood classification approaches. The case study is the February 2022 Brisbane flood. A flood map created by the Brisbane City Council [60] in response to this event provided a labelled dataset for this case study. The major objectives of this study were:

- To apply existing ML algorithms and indices to the Brisbane floods to evaluate their effectiveness using a labelled dataset.

- To propose a new index, the Concatenated Normalised Difference Water Index (CNDWI) and compare it with existing indices, particularly if the time-series NDWI can perform better than a single NDWI image.

- To investigate the four ML algorithms' performance in an urban flood case study, particularly to validate existing criticism of the NDWI.

- To investigate the use of PlanetScope imagery and its bands.

2. Materials and Methods

2.1. Study Area

Brisbane, the capital of Queensland, is Australia's third-largest metropolitan area, with about 2.6 million people living within the greater Brisbane area. Brisbane has experienced five large recorded floods, in 1841, 1893, 1974, 2011, and 2022. Because the February 2022 Brisbane flood [61] is a recent event, it has received little research attention. This research is intended to help fill that gap by examining the 2022 flood.

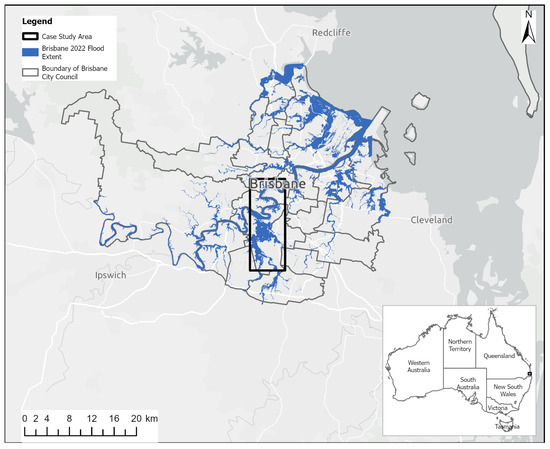

The specific study area is near the Brisbane River and its surrounding suburbs and mostly consists of houses [32], parks, some large buildings, and two universities. Given this composition, PlanetScope imagery, with a resolution of approximately three meters per pixel, is sufficient for flood detection in this case study and has been used in previous research [32]. However, this composition is not typical of other urban areas, which limits the generalisability of the results. The study area extends from (152.68, −26.80) to (153.16, −28.16) (longitude, latitude). For the temporal aspect, one pre-flood image from 9 February 2022 and one peak-flood image from 28 February 2022 were collected; these were the same dates used in a previous study [32]. Figure 1 shows the study area map for the February 2022 Brisbane flood.

Figure 1.

The study area scope map about the February 2022 Brisbane flood using a dataset created by the Brisbane City Council. The entire flood extent was larger, containing other areas in Queensland and New South Wales. The case study is small compared to all of Australia.

2.2. Dataset

PlanetScope imagery was used as the data source for this research and was downloaded through Planet's application programming interface (API) [62]. Images with more than 30% cloud cover were excluded. No Landsat 8–9 images satisfied these date and cloud-cover criteria.
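The scene-selection rule above (keep only the two target dates and discard images with more than 30% cloud cover) can be sketched as a filter over scene metadata. The record layout below (`id`, `acquired`, and `cloud_cover` as a fraction) is a hypothetical, simplified stand-in for catalogue metadata; this is not a call to the Planet API.

```python
from datetime import date

# Hypothetical scene metadata records (illustrative only)
scenes = [
    {"id": "scene_a", "acquired": date(2022, 2, 9), "cloud_cover": 0.05},
    {"id": "scene_b", "acquired": date(2022, 2, 9), "cloud_cover": 0.45},
    {"id": "scene_c", "acquired": date(2022, 2, 28), "cloud_cover": 0.20},
    {"id": "scene_d", "acquired": date(2022, 3, 2), "cloud_cover": 0.00},
]

MAX_CLOUD = 0.30  # images with more than 30% cloud cover are excluded
TARGET_DATES = {date(2022, 2, 9), date(2022, 2, 28)}  # pre-flood and peak-flood dates

def select_scenes(records, max_cloud=MAX_CLOUD, dates=TARGET_DATES):
    """Keep scenes acquired on a target date whose cloud cover is at most max_cloud."""
    return [r for r in records
            if r["acquired"] in dates and r["cloud_cover"] <= max_cloud]

selected = select_scenes(scenes)
print([r["id"] for r in selected])
```

Here `scene_b` is dropped for cloud cover and `scene_d` for its date, leaving one pre-flood and one peak-flood scene.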

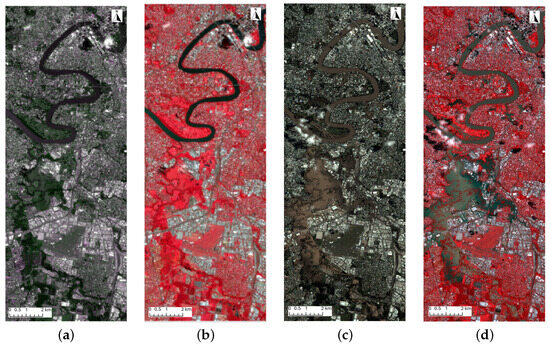

The two images, representing the pre-flooding and peak-flooding dates, each included blue, green, red, and near-infrared (NIR) bands. Figure 2 shows the pre-flooding and peak-flooding PlanetScope images in natural colour and false colour. Figure 2a shows the pre-flooding image in natural colour. To highlight water, Figure 2b shows the pre-flooding image in false colour, with the NIR, red, and green bands displayed as red, green, and blue (NRG). Figure 2c shows the peak-flooding image in natural colour, and Figure 2d shows the peak-flooding image in the same false colour as Figure 2b.

Figure 2.

(a) Pre-flooding PlanetScope image with natural colour, 9 February 2022. The case study contained an area from Brisbane’s CBD, through to the St. Lucia campus of the University of Queensland, to the flood plain of Oxley Creek in the south and Archerfield Airport; (b) pre-flooding PlanetScope image with false colour (NRG), 9 February 2022; (c) peak-flooding PlanetScope image with natural colour, 28 February 2022; (d) peak-flooding PlanetScope image with false colour (NRG), 28 February 2022.

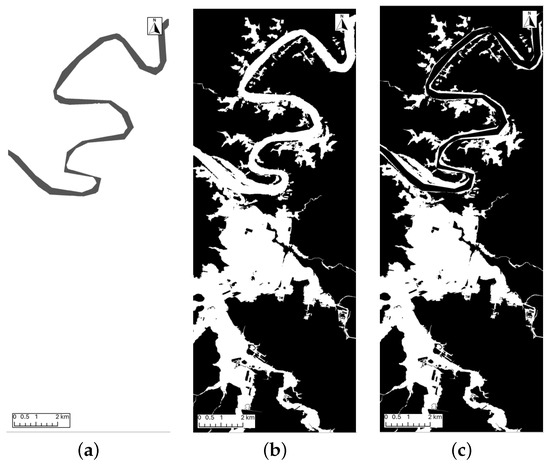

The labelled flood dataset was created by combining the flood map from the Brisbane City Council (BCC) [60] with the World Water Bodies shape file [63]. Little information is available about the BCC flood map other than that it was created from information about river and creek flooding [64]. It delineates water (white) from non-water (black). The extent of water is restricted to the area governed by the BCC, although the flood itself also covered other areas in Queensland and New South Wales. The World Water Bodies shape file represents the open-water rivers, lakes, dry salt flats, seas, and oceans of the world. Figure 3 shows the steps involved in generating the labelled dataset: the Brisbane River map is displayed in Figure 3a, the BCC flood map in Figure 3b, and the labelled dataset in Figure 3c. Information about the labelled dataset is displayed in Table 4.

Figure 3.

(a) River-only image acquired from World Water Bodies, where the black area represents the Brisbane River and the white area represents everything else; (b) flood-only image acquired from BCC, where the white area represents flooded areas and the black area represents non-flooded areas; (c) labelled dataset image formed by the conjunction of images (a) and (b), with white areas set as flooded and black areas set as non-flooded. Image (c) effectively removes the river from calculations.
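The conjunction in Figure 3c amounts to a pixel-wise logical AND of the two binary images as they are encoded in Figure 3 (in (a), white marks non-river pixels; in (b), white marks BCC flooded pixels). A minimal NumPy sketch, assuming both maps have already been rasterised onto the same grid as the PlanetScope images; the array names and toy values are hypothetical.

```python
import numpy as np

# Hypothetical 3x3 rasters on a common grid (True = white, False = black)
not_river = np.array([[1, 0, 1],   # Figure 3a: black column marks the river
                      [1, 0, 1],
                      [1, 0, 1]], dtype=bool)
bcc_flood = np.array([[1, 1, 0],   # Figure 3b: white marks BCC flooded areas
                      [1, 1, 0],
                      [0, 1, 0]], dtype=bool)

# Figure 3c: flooded AND not river, so river pixels fall out of the flooded class
label = np.logical_and(not_river, bcc_flood)
print(label.astype(int))
```

Every pixel in the river column ends up black in the label, even where the BCC map marks it as flooded.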

Table 4.

Information about the labelled dataset, which BCC generated as a shape file from both river and creek flooding.

2.3. Spectral Indices Process

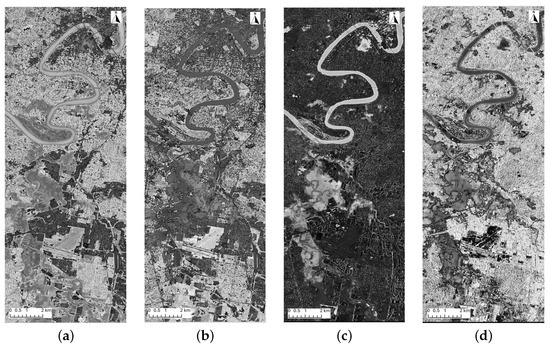

Four spectral indices were computed in ArcGIS [65] to generate the corresponding images: NDII, peak-NDWI, Concatenated NDWI (CNDWI), and dNDWI. Figure 4 shows the resulting spectral index images.

Figure 4.

Four images created using different spectral indices. (a) pre-NDWI image generated from the green and NIR bands of the pre-flooding PlanetScope image; (b) peak-NDWI image generated from the green and NIR bands of the peak-flooding PlanetScope image; (c) dNDWI image generated by subtracting the pre-NDWI image from the peak-NDWI image; (d) NDII image generated from the NIR bands of the pre-flooding and peak-flooding PlanetScope images.

McFeeters' NDWI is referred to as peak-NDWI in this study for consistency.

The peak-NDWI formula uses only the green and NIR bands of the peak-flood image, and its values range between −1 and 1. Figure 4b shows the peak-NDWI image. Another version of NDWI, proposed by Gao [32], involves a band from the middle infrared spectrum, which is not captured in the PlanetScope dataset.

Generating the dNDWI image required two images (pre-NDWI and peak-NDWI). Figure 4c displays the dNDWI image.

The NDII was implemented using the formula proposed by Levin and Phinn [32], applied to the NIR bands of the pre-flood and peak-flood images. Figure 4d shows the NDII image.

Since machine learning algorithms tend to perform better with more information [66], a new index was proposed: the Concatenated NDWI (CNDWI). The CNDWI concatenates peak-NDWI and pre-NDWI as two features, using the formula:

$$\mathrm{CNDWI} = \left[\,\mathrm{NDWI}_{\text{peak}},\ \mathrm{NDWI}_{\text{pre}}\,\right]$$
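In machine learning terms, the CNDWI gives each pixel a two-element feature vector, whereas dNDWI, NDII, and peak-NDWI each give a single value. A minimal sketch, assuming the two NDWI rasters are NumPy arrays on the same grid, of flattening them into the (pixels × features) matrix that a classifier expects; the function name is hypothetical.

```python
import numpy as np

def cndwi_features(ndwi_peak: np.ndarray, ndwi_pre: np.ndarray) -> np.ndarray:
    """Stack peak-NDWI and pre-NDWI into an (n_pixels, 2) feature matrix."""
    if ndwi_peak.shape != ndwi_pre.shape:
        raise ValueError("NDWI rasters must share the same grid")
    # Column order follows the text: peak-NDWI first, then pre-NDWI
    return np.column_stack([ndwi_peak.ravel(), ndwi_pre.ravel()])

# Hypothetical 2x2 NDWI rasters
ndwi_peak = np.array([[0.33, -0.49], [-0.48, 0.20]])
ndwi_pre = np.array([[-0.50, -0.52], [-0.49, 0.20]])
X = cndwi_features(ndwi_peak, ndwi_pre)
print(X.shape)
```

The single-value indices would instead produce an (n_pixels, 1) matrix, for example `dndwi_raster.ravel()[:, None]`.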

2.4. Machine Learning Algorithms

Four machine learning (ML) algorithms were applied in this research: Decision Tree, Random Forest, Naive Bayes, and K-Nearest Neighbours.

2.4.1. Decision Tree

The Decision Tree (DT) algorithm is based on the work of Belson [67]. A DT classifies inputs into a discrete set of values using a tree model. In the tree, leaves are class labels and branches represent the conditions that lead to those class labels. A DT assumes that all input features have finite discrete domains and that each input can be classified into a single target, the class label.

During classification, each element is compared against the nodes of the tree, starting from a single root node whose branches lead to possible outcomes. The branch to follow is chosen by testing the element's features against the node's condition; the selected node has its own branches, and the process repeats until the element reaches a leaf.
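The traversal described above can be illustrated with a small hand-built tree. The node structure, features, and thresholds below are hypothetical, chosen for illustration rather than learned from the Brisbane data; a trained tree would choose its splits from the training pixels.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass
class Node:
    """Internal node if `label` is None; otherwise a leaf holding a class label."""
    feature: int = 0              # index of the feature tested at this node
    threshold: float = 0.0        # go left if x[feature] <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None

def classify(node: Node, x: Sequence[float]) -> str:
    """Follow one branch per node until a leaf is reached."""
    while node.label is None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.label

# Hypothetical tree over CNDWI features x = (peak-NDWI, pre-NDWI)
tree = Node(feature=0, threshold=0.0,
            left=Node(label="non-flooded"),
            right=Node(feature=1, threshold=0.0,
                       left=Node(label="flooded"),        # wet now, dry before
                       right=Node(label="non-flooded")))  # wet on both dates

print(classify(tree, (0.33, -0.50)))
```

The second split mirrors the labelling choice in Figure 3c, where permanent water such as the river is not counted as flooded.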

2.4.2. Random Forest

Random Forest (RF) was introduced in 2001 [68]. An RF is a combination of tree predictors. Individual trees are useful, but each is fitted to a single training dataset, so it can incorporate spurious patterns and overfit, which lowers accuracy on unseen data. RF addresses these issues by creating multiple DTs and combining them using the bagging method and the random subspace method.

To improve accuracy, RF uses bagging, which produces multiple versions of a predictor. First, multiple bootstrap subsamples are drawn from the dataset (sampling with replacement). Next, a Decision Tree classifier is fitted to each subsample. Finally, these versions are combined into an aggregated predictor, which averages their outputs for numerical prediction or takes a plurality vote when predicting a class [69].

To reduce overfitting, RF also selects features at random. Without this randomness, all trees would follow the same distribution and tend to make the same errors. Introducing randomness makes the individual classifiers in the RF less correlated with one another, thereby decreasing overfitting and increasing robustness. Random forests are commonly built from classification and regression tree (CART) classifiers [55].
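A compact sketch of the two ideas above, bagging (bootstrap resamples plus a plurality vote) and random feature selection. To stay short, it swaps full decision trees for one-level trees (decision stumps), so it is a simplified illustration of the random-forest recipe rather than a faithful Random Forest implementation; the helper names, `n_trees=25`, and `n_features=1` (one of the two features per tree) are our own choices.

```python
import numpy as np

def fit_stump(X, y):
    """Best one-split tree on a single feature (fewest training errors)."""
    best = None
    for f in range(X.shape[1]):
        for t in np.unique(X[:, f]):
            for polarity in (0, 1):   # label given to samples with x > t
                pred = np.where(X[:, f] > t, polarity, 1 - polarity)
                err = int(np.sum(pred != y))
                if best is None or err < best[0]:
                    best = (err, f, t, polarity)
    return best[1:]   # (feature, threshold, polarity)

def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    """Bagging + random subspace: each stump sees a bootstrap sample and random features."""
    rng = np.random.default_rng(seed)
    forest = []
    for _ in range(n_trees):
        rows = rng.integers(0, len(X), size=len(X))                    # sample with replacement
        cols = rng.choice(X.shape[1], size=n_features, replace=False)  # random feature subset
        f, t, polarity = fit_stump(X[rows][:, cols], y[rows])
        forest.append((int(cols[f]), t, polarity))                     # store original column index
    return forest

def predict_forest(forest, X):
    """Plurality vote over all stumps (binary labels 0/1)."""
    votes = np.array([np.where(X[:, f] > t, p, 1 - p) for f, t, p in forest])
    return (votes.mean(axis=0) > 0.5).astype(int)

# Synthetic data: two correlated, informative features; 1 = flooded
rng = np.random.default_rng(42)
x0 = rng.uniform(-1, 1, 200)
X = np.column_stack([x0, x0 + rng.normal(0, 0.1, 200)])
y = (x0 > 0).astype(int)

forest = fit_forest(X, y)
print("training accuracy:", np.mean(predict_forest(forest, X) == y))
```

An odd number of trees avoids tied votes for a binary problem; a production model would normally use a library implementation with full trees.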

RF also provides a useful comparison with DT: it tests whether RF's greater ability to capture complex relationships within the data justifies its longer execution time compared with its foundational algorithm, DT.

2.4.3. Naive Bayes

Naive Bayes (NB) is a machine learning model based on Bayes' theorem [70]. One version of NB is Gaussian Naive Bayes (GNB), which applies a linear kernel. To handle continuous data, GNB makes the classical assumption that the continuous values associated with each class follow a Gaussian distribution.
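The Gaussian assumption can be made concrete with a from-scratch sketch: for each class, estimate a prior and a per-feature mean and variance, then assign a pixel to the class with the highest log-posterior. This is a minimal NumPy illustration, not the implementation used in the study; the small variance floor `var_floor` is our own addition for numerical safety.

```python
import numpy as np

class GaussianNaiveBayes:
    """Minimal Gaussian NB: features assumed independent and normal within each class."""

    def __init__(self, var_floor: float = 1e-9):
        self.var_floor = var_floor

    def fit(self, X: np.ndarray, y: np.ndarray) -> "GaussianNaiveBayes":
        self.classes_ = np.unique(y)
        self.prior_ = np.array([np.mean(y == c) for c in self.classes_])
        self.mean_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + self.var_floor
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        # For every class c: log p(c) + sum_j log N(x_j | mean_cj, var_cj)
        log_lik = -0.5 * (np.log(2 * np.pi * self.var_)[None, :, :]
                          + (X[:, None, :] - self.mean_[None, :, :]) ** 2
                          / self.var_[None, :, :]).sum(axis=2)
        return self.classes_[np.argmax(np.log(self.prior_) + log_lik, axis=1)]

# Hypothetical one-feature data (e.g. dNDWI values); 1 = flooded
X = np.array([[0.05], [0.10], [0.00], [0.70], [0.80], [0.75]])
y = np.array([0, 0, 0, 1, 1, 1])
model = GaussianNaiveBayes().fit(X, y)
print(model.predict(np.array([[0.02], [0.65]])))
```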

In comparison with the other ML algorithms, NB is a linear classifier, whereas the others are non-linear. Including NB in the set of algorithms therefore allowed linear and non-linear models to be compared.

2.4.4. K-Nearest Neighbours

K-Nearest Neighbours (KNN) was introduced by Fix and Hodges [71] and further developed by Cover and Hart [72]. It is a non-parametric supervised machine learning model used for regression and classification [73]. In KNN, an object is classified by a plurality vote of its neighbours: it is assigned to the most common class among its k nearest neighbours. For example, if k = 1, the object is assigned to the class of its single nearest neighbour, whereas if k > 1, the vote is taken over a wider neighbourhood.

Its main difference from most other machine learning classifiers is that, rather than training a model, it compares every unknown sample against the original training data [74,75,76]. The most common form of the KNN algorithm assigns unknown samples to the class of the nearest known samples in feature space. This allowed for a thorough exploration of the data, enriching the research findings.
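The plurality-vote rule can be sketched in a few lines: compute the distance from each unknown pixel to every stored training sample, take the k closest, and return the most common label. This NumPy version assumes Euclidean distance, non-negative integer labels, and ties broken in favour of the smaller label (via `np.bincount` followed by `argmax`); these are illustrative choices rather than the study's configuration.

```python
import numpy as np

def knn_predict(X_train: np.ndarray, y_train: np.ndarray,
                X_query: np.ndarray, k: int = 3) -> np.ndarray:
    """Classify each query row by a plurality vote of its k nearest training rows."""
    # No model is fitted: every query is compared against all stored training samples
    dists = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]   # indices of the k closest samples
    votes = y_train[nearest]                     # their labels, shape (n_query, k)
    # Most common label per query; argmax picks the smallest label on ties
    return np.array([np.bincount(row).argmax() for row in votes])

# Hypothetical CNDWI features (peak-NDWI, pre-NDWI); 1 = flooded
X_train = np.array([[0.40, -0.50], [0.35, -0.45], [0.30, -0.55],
                    [-0.50, -0.50], [-0.45, -0.40], [0.30, 0.30]])
y_train = np.array([1, 1, 1, 0, 0, 0])
X_query = np.array([[0.32, -0.48], [-0.40, -0.45]])
print(knn_predict(X_train, y_train, X_query, k=3))
```

Because every prediction scans the whole training set, testing cost grows with the number of stored pixels, which is consistent with KNN's comparatively long testing times reported in the results.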

2.4.5. Cross Validation

The cross-validation method was applied to split the dataset into 10 folds. Each fold involved two procedures: first, a model was trained on the training data consisting of the other nine folds; second, the trained model was tested on the remaining fold. The data were stratified, meaning that each fold contained approximately the same proportion of flooded and non-flooded pixels as the entire population. The accuracy, F1 score, training time, and testing time were recorded for each of the 10 folds, together with their means.
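The stratified 10-fold procedure, with accuracy and wall-clock training and testing times recorded per fold, can be sketched as below. The fold-assignment helper and the `fit`/`predict` callables are hypothetical stand-ins into which any classifier could be plugged; a majority-class baseline is used only so that the example runs on its own, and the F1 score could be recorded in the same loop.

```python
import time
import numpy as np

def stratified_folds(y, n_folds=10, seed=0):
    """Assign a fold id to every sample so each fold keeps roughly the class ratio of y."""
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(y), dtype=int)
    for c in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == c))
        # Split this class's shuffled indices into n_folds near-equal chunks
        for k, chunk in enumerate(np.array_split(idx, n_folds)):
            fold_of[chunk] = k
    return fold_of

def cross_validate(X, y, fit, predict, n_folds=10):
    """Train on nine folds, test on the held-out fold; record accuracy and timings."""
    folds = stratified_folds(y, n_folds)
    records = []
    for k in range(n_folds):
        train, test = folds != k, folds == k
        t0 = time.perf_counter()
        model = fit(X[train], y[train])
        t1 = time.perf_counter()
        y_hat = predict(model, X[test])
        t2 = time.perf_counter()
        records.append({"accuracy": float(np.mean(y_hat == y[test])),
                        "train_s": t1 - t0, "test_s": t2 - t1})
    return records

# Placeholder classifier: always predicts the majority class of the training folds
def fit_majority(X, y):
    return int(np.bincount(y).argmax())

def predict_majority(model, X):
    return np.full(len(X), model)

y = np.array([0] * 300 + [1] * 100)   # hypothetical labels, 3:1 non-flooded:flooded
X = np.zeros((len(y), 1))             # features are ignored by the placeholder
records = cross_validate(X, y, fit_majority, predict_majority)
print("mean accuracy:", np.mean([r["accuracy"] for r in records]))
```

Each fold here holds 30 non-flooded and 10 flooded pixels, so the baseline scores exactly 75% on every fold.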

2.5. Evaluation Measures

Three measures were used in the research: accuracy, F1 score, and time.

2.5.1. Accuracy

The confusion matrix is commonly used to calculate accuracy [77] using the following formula:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

TP (True Positives) is the number of positive-class samples that the model predicts correctly. TN (True Negatives) is the number of negative-class samples that the model predicts correctly. FP (False Positives) is the number of negative-class samples that the model incorrectly predicts as positive. FN (False Negatives) is the number of positive-class samples that the model incorrectly predicts as negative.
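As a small worked example of the accuracy formula, the four confusion-matrix counts can be tallied directly from binary label arrays, taking flooded (1) as the positive class. The function names and toy arrays are illustrative.

```python
import numpy as np

def confusion_counts(y_true: np.ndarray, y_pred: np.ndarray) -> dict:
    """TP, TN, FP, FN for binary labels where 1 = flooded (positive class)."""
    return {
        "TP": int(np.sum((y_pred == 1) & (y_true == 1))),
        "TN": int(np.sum((y_pred == 0) & (y_true == 0))),
        "FP": int(np.sum((y_pred == 1) & (y_true == 0))),
        "FN": int(np.sum((y_pred == 0) & (y_true == 1))),
    }

def accuracy(c: dict) -> float:
    """(TP + TN) / (TP + TN + FP + FN)."""
    return (c["TP"] + c["TN"]) / (c["TP"] + c["TN"] + c["FP"] + c["FN"])

y_true = np.array([1, 1, 0, 0, 0, 0, 1, 0])
y_pred = np.array([1, 0, 0, 0, 1, 0, 1, 0])
counts = confusion_counts(y_true, y_pred)
print(counts, accuracy(counts))
```

Here two flooded pixels are found (TP = 2), one is missed (FN = 1), one dry pixel is wrongly flagged (FP = 1), and four dry pixels are correct (TN = 4), giving an accuracy of 6/8 = 0.75.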

2.5.2. F1 Score

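The second measure was the F1 score, the harmonic mean of a classifier's precision and recall [78], where Precision = TP/(TP + FP) and Recall = TP/(TP + FN). The formula for the F1 score is as follows:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}$$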

The parameters TP, FP, and FN have the same meanings as in the accuracy formula. The main difference between the F1 score and accuracy is that the F1 score does not take TN values into account, which helps to address the problem of imbalanced classes. In this case study, the ratio of non-flooded pixels to flooded pixels is 3:1. Therefore, a classifier could achieve an accuracy of ∼75% simply by labelling every pixel as non-flooded.
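The imbalance argument can be checked numerically on a hypothetical scene in which non-flooded pixels outnumber flooded ones 3:1. A classifier that labels every pixel non-flooded reaches 75% accuracy but an F1 score of 0, because it finds no true positives. Returning 0 when the F1 denominator is 0 (a scene with no positives at all) is our assumed convention; libraries differ in how they handle that case.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0  # assumed convention when nothing is positive

def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    return (tp + tn) / (tp + tn + fp + fn)

# Hypothetical scene: 300 non-flooded and 100 flooded pixels (3:1)
# "Always non-flooded" classifier: every flooded pixel becomes a false negative
tp, tn, fp, fn = 0, 300, 0, 100
print(accuracy(tp, tn, fp, fn), f1_score(tp, fp, fn))
```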

2.5.3. Time

Two time measures were used: training time and testing time. These were calculated for each index and algorithm pair.

2.6. Evaluation Metrics

In this research, three evaluation metrics were applied: standard deviation, Analysis of Variance (ANOVA), and Tukey–Kramer post hoc test.

2.6.1. Standard Deviation

The term standard deviation (SD) was introduced by Pearson [79]. It measures the amount of variation in a set of data values relative to their mean, and how far a value lies from the mean can indicate whether it is likely to be due to chance. Since it is often difficult to capture an entire population, a sample of the population can be used instead. The formula for the sample standard deviation is:

$$s = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(x_i - \bar{x}\right)^2}$$

where s represents the SD of the sample, N the size of the sample, $x_i$ each value in the sample, and $\bar{x}$ the mean of the sample.

The SD itself is also called the first standard deviation, whereas the SD multiplied by two is called the second standard deviation. The first upper threshold is the mean plus the first standard deviation, and the second upper threshold is the mean plus the second standard deviation. Similarly, the first lower threshold is the mean minus the first standard deviation, and the second lower threshold is the mean minus the second standard deviation. Values above the second upper threshold or below the second lower threshold are often considered statistically significant.
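The thresholds above, together with the standard error reported in the figure captions, follow from a few lines of standard-library Python. The scores in the usage line are hypothetical, not the study's results; `statistics.stdev` computes the sample (N − 1) standard deviation given by the formula above.

```python
import math
import statistics

def sd_thresholds(values):
    """Mean, sample SD, standard error, and the first/second upper and lower thresholds."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)            # sample SD (divides by N - 1)
    return {
        "mean": mean,
        "sd": sd,
        "se": sd / math.sqrt(len(values)),   # standard error of the mean
        "upper_1": mean + sd, "upper_2": mean + 2 * sd,
        "lower_1": mean - sd, "lower_2": mean - 2 * sd,
    }

def outside(values, summary, level=1):
    """Values above the chosen upper threshold or below the chosen lower threshold."""
    hi, lo = summary[f"upper_{level}"], summary[f"lower_{level}"]
    return [v for v in values if v > hi or v < lo]

scores = [0.70, 0.74, 0.76, 0.77, 0.78, 0.80, 0.85]   # hypothetical accuracies
summary = sd_thresholds(scores)
print(round(summary["mean"], 3), outside(scores, summary, level=1))
```

As a consistency check on the reported figures, with 16 experiments a sample SD of 0.053 gives a standard error of 0.053/√16 ≈ 0.013, which matches the value given for Figure 9.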

2.6.2. Analysis of Variance and Tukey–Kramer Post Hoc Test

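A one-way Analysis of Variance (ANOVA) is another statistical analysis method, used to check whether there are any significant differences between the means of two or more groups. One-way ANOVA is implemented with the F-test, which was developed by Fisher [80]. The F statistic is the ratio of between-group to within-group variability:

$$F = \frac{\sum_{j=1}^{k} n_j\left(\bar{x}_j - \bar{x}\right)^2 / (k-1)}{\sum_{j=1}^{k}\sum_{i=1}^{n_j}\left(x_{ij} - \bar{x}_j\right)^2 / (N-k)}$$

where k is the number of groups, $n_j$ and $\bar{x}_j$ are the size and mean of group j, $x_{ij}$ is the i-th value in group j, $\bar{x}$ is the grand mean, and N is the total number of values.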

An ANOVA table containing the F statistic and its p-value is generated from this formula. The p-value is compared with an alpha level, which depends on the research field, to determine whether there is a significant difference between the groups. Since ANOVA does not specify which comparison is significant, a Tukey–Kramer post hoc test was performed to identify the significant pairs [81].
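A sketch of the one-way ANOVA followed by a pairwise post hoc comparison, using SciPy's `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd` (the latter requires SciPy 1.8 or newer and accepts groups of unequal size). The F statistic is also computed by hand from the between-group and within-group mean squares to show what `f_oneway` returns. The group scores are synthetic, generated only for illustration.

```python
import numpy as np
from scipy import stats

def f_statistic(*groups):
    """One-way ANOVA F = MS_between / MS_within."""
    all_vals = np.concatenate(groups)
    grand_mean = all_vals.mean()
    k, n = len(groups), len(all_vals)
    ss_between = sum(len(g) * (np.mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum(np.sum((np.asarray(g) - np.mean(g)) ** 2) for g in groups)
    return (ss_between / (k - 1)) / (ss_within / (n - k))

# Synthetic scores: four hypothetical groups (e.g. one per index), four values each
rng = np.random.default_rng(1)
groups = [rng.normal(loc, 0.02, size=4) for loc in (0.78, 0.79, 0.78, 0.70)]

ALPHA = 0.05
res = stats.f_oneway(*groups)
print(f"F = {res.statistic:.2f}, p = {res.pvalue:.2g}")

if res.pvalue < ALPHA:
    # Post hoc: pvalue[i, j] tests whether the means of groups i and j differ
    tukey = stats.tukey_hsd(*groups)
    print(np.round(tukey.pvalue, 3))
```

The post hoc step runs only when the ANOVA is significant, mirroring the order of the analysis described in the text.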

2.7. Analysis Groups

Multiple comparisons were conducted, with the results grouped by algorithm and by index. Each algorithm had four index values, and each index had four algorithm values. Within each grouping, an ANOVA test (α = 0.05) and a Tukey–Kramer post hoc test were performed on accuracy, F1 score, and time to determine whether there was a significant difference between the groups.

3. Results

3.1. Flood Maps

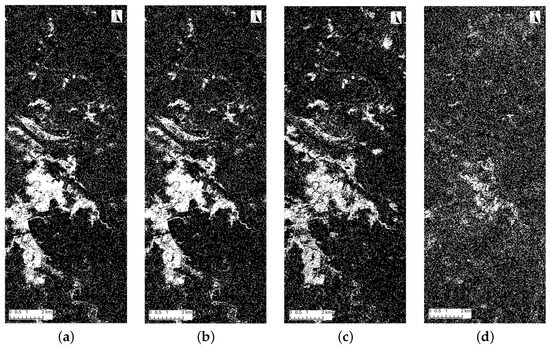

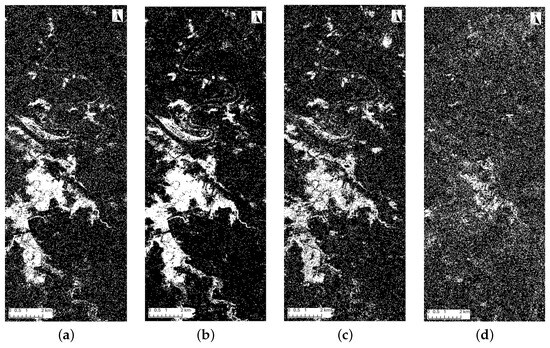

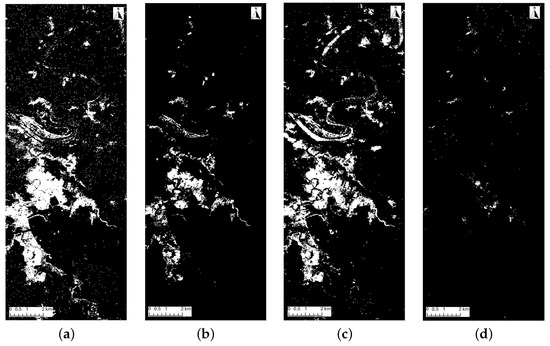

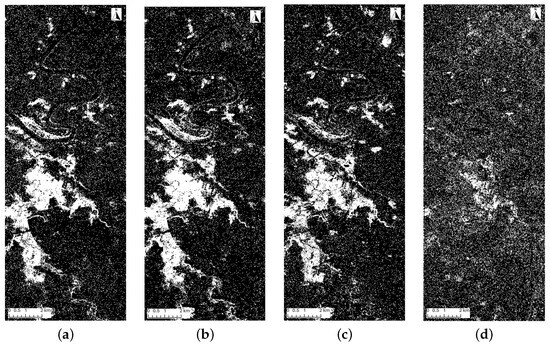

Throughout this study, four machine learning algorithms were combined with four indices, giving 16 experiments in total. Figure 5, Figure 6, Figure 7 and Figure 8 present the 16 predicted flood maps, in which white represents flooded pixels and black represents non-flooded pixels.

Figure 5.

Four DT-predicted flood maps using the four spectral indices. In each image, the white area represents flooding areas, whereas the black area represents non-flooding areas. (a) dNDWI-predicted flood map; (b) CNDWI-predicted flood map; (c) NDII-predicted flood map; (d) peak-NDWI-predicted flood map.

Figure 6.

Four RF-predicted flood maps using the four spectral indices. In each image, the white area represents flooding areas, whereas the black area represents non-flooding areas. (a) dNDWI-predicted flood map; (b) CNDWI-predicted flood map; (c) NDII-predicted flood map; (d) peak-NDWI-predicted flood map.

Figure 7.

Four NB-predicted flood maps using the four spectral indices. In each image, the white area represents flooding areas, whereas the black area represents non-flooding areas. (a) dNDWI-predicted flood map; (b) CNDWI-predicted flood map; (c) NDII-predicted flood map; (d) peak-NDWI-predicted flood map.

Figure 8.

Four KNN-predicted flood maps using the four spectral indices. In each image, the white area represents flooding areas, whereas the black area represents non-flooding areas. (a) dNDWI-predicted flood map; (b) CNDWI-predicted flood map; (c) NDII-predicted flood map; (d) peak-NDWI-predicted flood map.

3.2. Measures and Standard Deviation

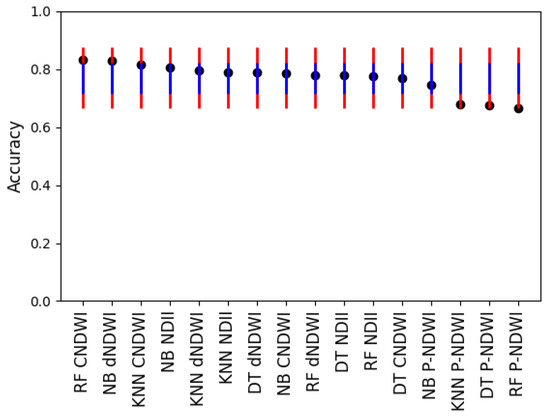

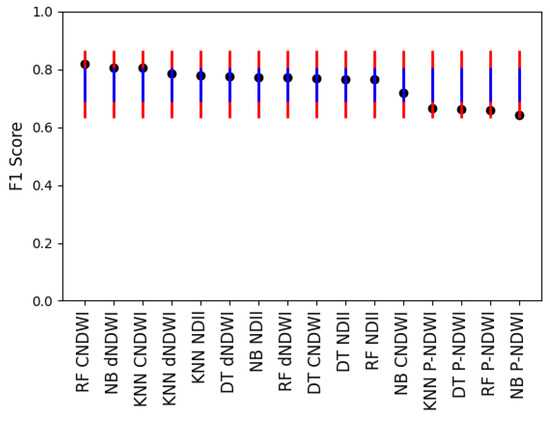

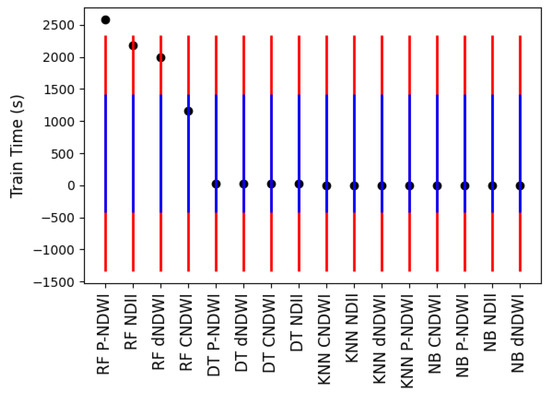

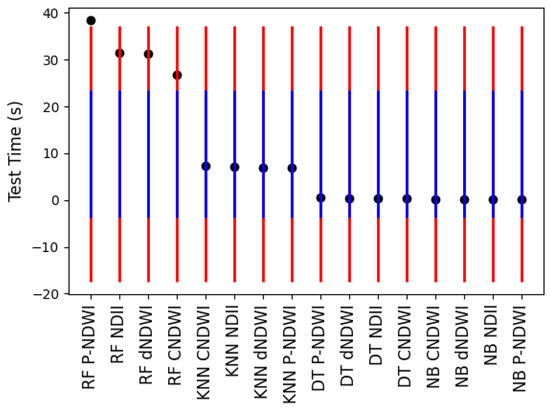

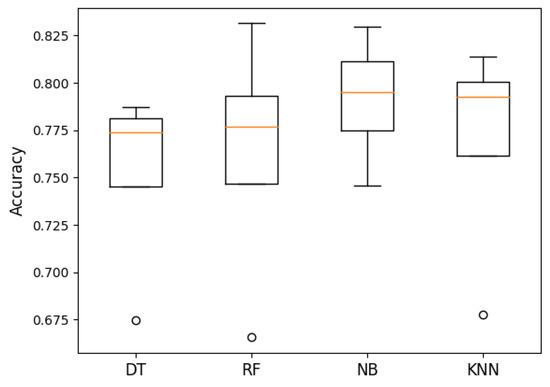

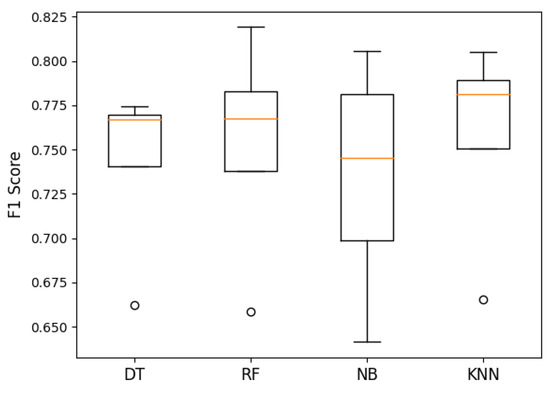

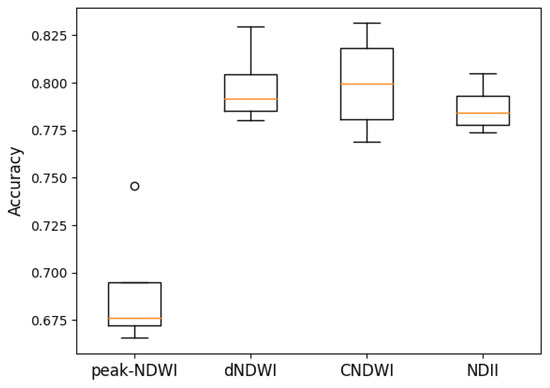

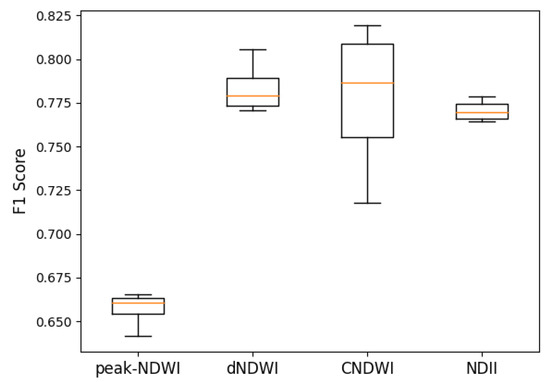

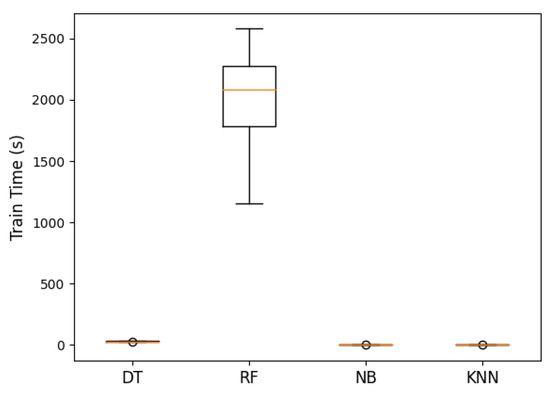

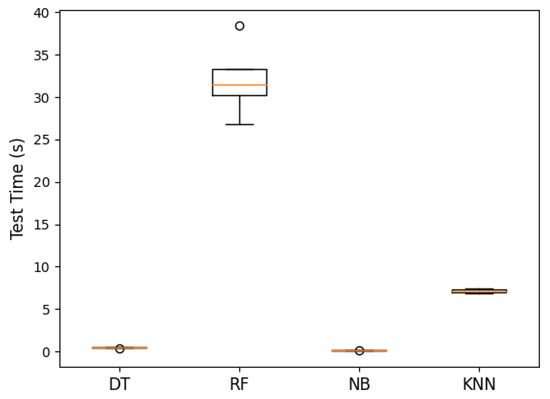

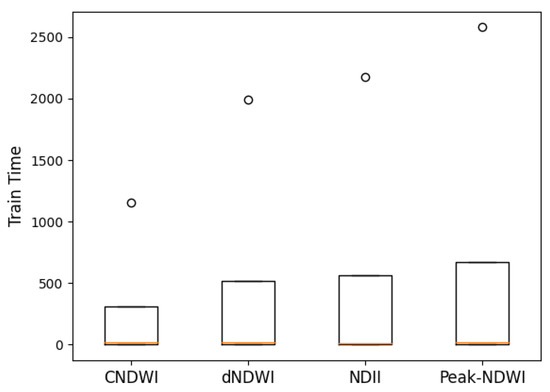

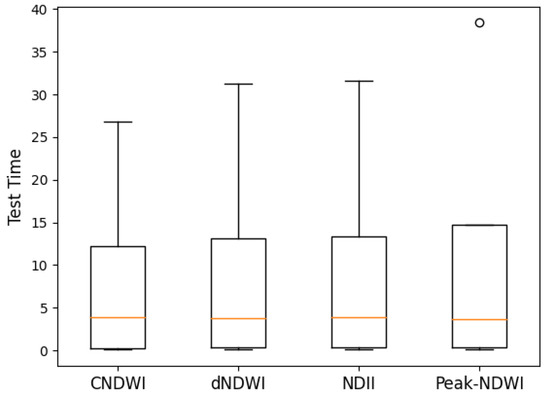

Each of the results from these experiments was described in terms of effectiveness (accuracy and F1 score) and efficiency (time). The results are presented in Table 5, Table 6, Table 7 and Table 8 and Figure 9, Figure 10, Figure 11 and Figure 12.

Table 5.

Accuracy table for combining the four ML algorithms and the four types of indices.

Table 6.

F1 score table for combining the four ML algorithms and the four types of indices.

Table 7.

Training time (s) for combining the four ML algorithms and the four types of indices.

Table 8.

Testing time (s) for combining the four ML algorithms and the four types of indices.

Figure 9.

The accuracy of the 16 experiments. The mean value was 0.769. The first standard deviation was 0.053 and the second standard deviation was 0.105. The standard error was 0.013. The two upper thresholds were 0.821 and 0.874. The two lower thresholds were 0.716 and 0.664. The lines for the two lower thresholds are blue whereas the lines for the two higher thresholds are red. RF CNDWI and NB dNDWI were above the first upper threshold. KNN, DT, and RF peak-NDWI were below the first lower threshold.

Figure 10.

The F1 score of the 16 experiments. The mean value was 0.747. The first standard deviation was 0.058 and the second standard deviation was 0.117. The standard error was 0.015. The two upper thresholds were 0.806 and 0.864. The two lower thresholds were 0.689 and 0.630. The lines for the two lower thresholds are blue, whereas the lines for the two higher thresholds are red. RF CNDWI and NB dNDWI were above the first upper threshold. All four peak-NDWI experiments were below the first lower threshold.

Figure 11.

The training time (s) of the 16 experiments. The mean value was 502.099. The first standard deviation was 919.044 and the second standard deviation was 1838.089. The standard error was 229.761. The two upper thresholds were 1421.144 and 2340.188. Both lower thresholds were below 0. The lines for the two lower thresholds are blue whereas the lines for the two higher thresholds are red. RF peak-NDWI was above the second upper threshold. RF NDII and RF dNDWI were above the first upper threshold.

Figure 12.

The testing time (s) of the 16 experiments. The mean value was 9.917. The first standard deviation was 13.664 and the second standard deviation was 27.329. The standard error was 3.416. The two upper thresholds were 23.581 and 37.246. Both lower thresholds were below 0. The lines for the two lower thresholds are blue whereas the lines for the two higher thresholds are red. RF peak-NDWI was above the second upper threshold. The remaining RF experiments were above the first upper threshold.

3.3. Analysis of Variance

The Analysis of Variance (ANOVA) covered three sets of comparisons: machine learning algorithms, indices, and time. For the machine learning algorithms and indices, ANOVA was applied to the accuracy and F1 score. The threshold (alpha) for all the ANOVA tests was 0.05. After each ANOVA test, Tukey's honestly significant difference (HSD) test was performed to compare the group means pairwise, again with a threshold of 0.05. Table 9 displays the overall results of this analysis, whereas Table 10, Table 11, Table 12, Table 13, Table 14, Table 15, Table 16 and Table 17 and Figure 13, Figure 14, Figure 15, Figure 16, Figure 17, Figure 18, Figure 19 and Figure 20 show the individual results.

Table 9.

The ANOVA p-value across ML algorithms, indices, and time with different measures: accuracy, F1 score, training time, and test time. Underlined values mean that the p-values were less than 0.05.

Table 10.

The HSD results of the machine learning algorithms’ accuracy. The p-value was 0.796, meaning that there was no significant difference between the groups. For clarity, the comparisons between the same group are greyed.

Table 11.

The HSD results of the machine learning algorithms’ F1 score. The p-value was 0.953, meaning that there was no significant difference between the groups. For clarity, the comparisons between the same group are greyed.

Table 12.

The HSD results of the indices’ accuracy. The p-value was 2.023 , meaning that there was a significant difference between the groups. In this case, it was the peak-NDWI compared to the other indices. For clarity, the comparisons between the same group are greyed.

Table 13.

The HSD results of the indices’ F1 score. The p-value was 2.482 , meaning that there was a significant difference between the groups. In this case, it was the peak-NDWI compared to the other indices. For clarity, the comparisons between the same group are greyed.

Table 14.

The HSD results of the machine learning algorithms’ training time. The p-value was 1.055 , meaning that there was a significant difference between the groups. In this case, it was the Random Forest algorithm compared to the other machine learning algorithms. For clarity, the comparisons between the same group are greyed.

Table 15.

The HSD results of the machine learning algorithms’ testing time. The p-value was 6.273 , meaning that there was a significant difference between the groups. In this case, it was the Random Forest and K-Nearest Neighbours algorithms compared to the other machine learning algorithms. For clarity, the comparisons between the same group are greyed.

Table 16.

The HSD results of the indices’ training time. The p-value was 0.995, meaning that there was not a significant difference between the groups. For clarity, the comparisons between the same group are greyed.

Table 17.

Tukey HSD results for the testing time of the indices. The ANOVA p-value was 0.966, meaning that there was no significant difference between the groups. For clarity, comparisons of a group with itself are greyed out.
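Tukey's HSD test follows an ANOVA by comparing every pair of group means. The sketch below computes the pairwise studentised range statistic q in its Tukey–Kramer form, using the pooled within-group mean square from the ANOVA; each q would then be compared with the critical value of the studentised range distribution for k groups and N − k degrees of freedom. The function and group names are hypothetical. In practice, `scipy.stats.tukey_hsd` or the `pairwise_tukeyhsd` function in statsmodels return pairwise p-values directly; which tool the authors used is not stated here.

```python
from itertools import combinations
from math import sqrt
from statistics import fmean


def tukey_q_statistics(groups):
    """Pairwise studentised range statistics q (Tukey-Kramer form) for a dict of groups."""
    k = len(groups)
    n_total = sum(len(v) for v in groups.values())
    means = {name: fmean(v) for name, v in groups.items()}
    # Pooled within-group mean square, the same error term as in the one-way ANOVA.
    ss_within = sum((x - means[name]) ** 2 for name, v in groups.items() for x in v)
    ms_within = ss_within / (n_total - k)
    q = {}
    for a, b in combinations(groups, 2):
        # Standard error of the mean difference; equals sqrt(MSW / n) for equal group sizes.
        se = sqrt(ms_within / 2 * (1 / len(groups[a]) + 1 / len(groups[b])))
        q[(a, b)] = abs(means[a] - means[b]) / se
    return q


# Hypothetical scores for three groups; "A" and "C" have identical means.
q = tukey_q_statistics({"A": [1, 2, 3], "B": [4, 5, 6], "C": [1, 2, 3]})
```

A pair whose q exceeds the critical value is significantly different, which is how a single group (such as the peak-NDWI) can stand out from all the others.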

Figure 13.

Accuracy of the machine learning algorithms (ANOVA). The p-value was 0.796, meaning that there was no significant difference between the groups. The orange line represents the median accuracy of each algorithm, and circles represent outliers.

Figure 14.

F1 score of the machine learning algorithms (ANOVA). The p-value was 0.953, meaning that there was no significant difference between the groups. The orange line represents the median F1 score of each algorithm, and circles represent outliers.

Figure 15.

Accuracy of the indices (ANOVA). The p-value was 2.023 , meaning that there was a significant difference between the groups; in this case, between the peak-NDWI and the other indices. The orange line represents the median accuracy of each index, and circles represent outliers.

Figure 16.

F1 score of the indices (ANOVA). The p-value was 2.482 , meaning that there was a significant difference between the groups; in this case, between the peak-NDWI and the other indices. The orange line represents the median F1 score of each index, and circles represent outliers.

Figure 17.

Training time (s) of the machine learning algorithms (ANOVA). The p-value was 1.055 , meaning that there was a significant difference between the groups; in this case, between the Random Forest algorithm and the other machine learning algorithms. The orange line represents the median training time of each algorithm, and circles represent outliers.

Figure 18.

Testing time (s) of the machine learning algorithms (ANOVA). The p-value was 6.273 , meaning that there was a significant difference between the groups; in this case, between the Random Forest and K-Nearest Neighbours algorithms and the other machine learning algorithms. The orange line represents the median testing time of each algorithm, and circles represent outliers.

Figure 19.

Training time (s) of the indices (ANOVA). The p-value was 0.995, meaning that there was no significant difference between the groups. The orange line represents the median training time of each index, and circles represent outliers.

Figure 20.

Testing time (s) of the indices (ANOVA). The p-value was 0.966, meaning that there was no significant difference between the groups. The orange line represents the median testing time of each index, and circles represent outliers.
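The orange median lines and circular outlier markers described in these captions match the default appearance of Matplotlib box plots, although the section does not say which plotting tool was used. In those defaults, the box spans the first to third quartile, and points lying more than 1.5 times the interquartile range (IQR) beyond the box are drawn individually as outliers. A minimal sketch of that rule, assuming linearly interpolated quartiles and using hypothetical accuracy values:

```python
from statistics import median, quantiles


def box_summary(values, whis=1.5):
    """Median, quartiles and 1.5 x IQR outliers, as drawn in a default box plot."""
    # "inclusive" interpolates linearly between order statistics (like numpy.percentile's default).
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - whis * iqr, q3 + whis * iqr
    outliers = [v for v in values if v < low or v > high]
    return {"median": median(values), "q1": q1, "q3": q3, "outliers": outliers}


# Hypothetical accuracies for one algorithm; 0.70 lies far below the others.
summary = box_summary([0.90, 0.91, 0.92, 0.93, 0.94, 0.70])
print(summary["median"], summary["outliers"])
```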

4. Discussion

4.1. Comparison of the Effectiveness of Four Machine Learning Algorithms

Overall, the four algorithms showed no significant difference in accuracy or F1 score, although there were some non-significant differences between them. KNN achieved higher accuracy and F1 score than the other three algorithms in most cases. KNN is highly sensitive to the local distribution of data points: because it relies on its nearest neighbours, incorrectly labelled neighbours or noisy data can distort its predictions. In this case study, however, KNN handled noisy data well.

The number of neighbours may also explain this result. In this research, the number of neighbours was set to 3, which may suit the data distributions of the NDII, dNDWI, and CNDWI.
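A minimal k-nearest-neighbours classifier with k = 3 is sketched below to show the majority vote discussed above. With three neighbours and two classes, a single mislabelled or noisy neighbour is outvoted by the other two, which is one plausible reason why k = 3 tolerated noise here. The feature values and labels are hypothetical single-feature index values; a two-feature input such as the CNDWI would simply use 2-tuples. In scikit-learn the equivalent classifier would be `KNeighborsClassifier(n_neighbors=3)`; this sketch is not the authors' implementation.

```python
from collections import Counter
from math import dist


def knn_predict(train_x, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training samples (Euclidean)."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: dist(pair[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical one-feature index values (1-tuples) with flood labels (1 = flooding).
# The sample at 0.30 is deliberately mislabelled as non-flooding to mimic noise.
train_x = [(0.35,), (0.40,), (0.30,), (0.05,), (0.02,), (0.08,)]
train_y = [1, 1, 0, 0, 0, 0]

print(knn_predict(train_x, train_y, (0.36,)))  # 1: the noisy neighbour is outvoted 2 to 1
print(knn_predict(train_x, train_y, (0.04,)))  # 0
```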

4.2. Comparison of Four Types of Indices Effectiveness

Overall, there were some significant differences when certain combinations of indices and algorithms were compared. In terms of accuracy and F1 score across the different algorithms, the main differences were:

- Peak-NDWI performed worst;

- NDII and dNDWI performed similarly;

- CNDWI outperformed other indices.

Overall Differences in Indices

The peak-NDWI performed worst in terms of accuracy and F1 score, which aligns with Levin and Phinn’s hypothesis that the peak-NDWI confuses building areas with inundated areas [32]. The CNDWI outperformed the other indices because its input had two features rather than one, which provided more information to the machine learning algorithms. This is consistent with the general expectation in machine learning that additional informative features can improve classification.

The peak-NDWI and NDII differ in two ways. First, the peak-NDWI uses the green and near-infrared bands, whereas the NDII uses only the near-infrared band. Second, the peak-NDWI is computed from a single image, whereas the NDII is computed from two images. The NDII therefore focuses on the change in the near-infrared band, which changes dramatically during flood events in an urban environment.
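To make the band differences concrete, the sketch below computes the inputs of the four indices for one pixel. The NDWI follows McFeeters' definition, (Green − NIR)/(Green + NIR) [44]. The other formulas are assumptions for illustration only: the NDII is taken as the normalised difference of pre-flood and peak-flood NIR reflectance, the dNDWI as the peak-flood NDWI minus the pre-flood NDWI, and the CNDWI as the pair of pre-flood and peak-flood NDWI values. The signs are chosen so that flooding yields positive NDII and dNDWI values, consistent with the thresholds in the next paragraph. All function names and reflectance values are hypothetical.

```python
def normalised_difference(a, b):
    """(a - b) / (a + b), returning 0.0 where the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def pixel_features(green_pre, nir_pre, green_peak, nir_peak):
    """Hypothetical per-pixel inputs for the four indices (formulas partly assumed)."""
    ndwi_pre = normalised_difference(green_pre, nir_pre)     # McFeeters NDWI, pre-flood image
    peak_ndwi = normalised_difference(green_peak, nir_peak)  # single image, green + NIR
    return {
        "peak-NDWI": peak_ndwi,
        "dNDWI": peak_ndwi - ndwi_pre,                        # assumed: change in NDWI
        "NDII": normalised_difference(nir_pre, nir_peak),     # assumed: NIR only, two images
        "CNDWI": (ndwi_pre, peak_ndwi),                       # assumed: two features, not one
    }


# Hypothetical surface reflectances: NIR drops sharply once the pixel is inundated.
features = pixel_features(green_pre=0.08, nir_pre=0.30, green_peak=0.10, nir_peak=0.06)
print(features)
```

Only the CNDWI yields two values per pixel, which is the extra information the discussion credits for its higher scores.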

The dNDWI and NDII obtained similar accuracy because both are differential indices, and both consistently outperformed the peak-NDWI. As well as the different bands used, thresholding could be a factor. The NDII highlights areas of substantial inundation by classifying values greater than 0.1 as flooding and all other values as non-flooding. The dNDWI behaves similarly, with values equal to or greater than 0.3 classified as flooding and all other values as non-flooding. This targeted feature engineering amplifies the relevant signal, allowing the algorithms to concentrate on the most relevant data points and resulting in more precise and reliable flood predictions in an urban environment.
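The two threshold rules stated above translate directly into labelling functions. This sketch assumes the index values have already been computed per pixel; the constant and function names are hypothetical, and how the resulting labels feed into the machine learning pipeline is not described in this section. Note the strict inequality for the NDII (> 0.1) and the inclusive one for the dNDWI (≥ 0.3), as stated in the text.

```python
NDII_THRESHOLD = 0.1   # from the text: NDII > 0.1 is flooding
DNDWI_THRESHOLD = 0.3  # from the text: dNDWI >= 0.3 is flooding


def label_ndii(value):
    """1 (flooding) when NDII is strictly greater than 0.1, else 0 (non-flooding)."""
    return 1 if value > NDII_THRESHOLD else 0


def label_dndwi(value):
    """1 (flooding) when dNDWI is at least 0.3, else 0 (non-flooding)."""
    return 1 if value >= DNDWI_THRESHOLD else 0


# Hypothetical index values for a few pixels, including values exactly on each threshold.
ndii_labels = [label_ndii(v) for v in (0.05, 0.1, 0.15)]    # [0, 0, 1]
dndwi_labels = [label_dndwi(v) for v in (0.29, 0.3, 0.45)]  # [0, 1, 1]
```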

4.3. Comparison of Time

All experiments in this section were conducted on an Intel Xeon Platinum 8160 CPU @ 2.10 GHz, running on a single thread. The time measurements show some significant differences between the four algorithms. RF spent significantly more time training the model than the other methods, which is consistent with previous research [82,83,84]. KNN spent significantly more time testing the model than NB and DT, which is also consistent with existing research [85,86]. These differences align with previous research on machine learning algorithm complexity [59].

RF is an ensemble method: multiple decision trees are built and their outputs are then combined, whereas each of the other algorithms builds a single model. This aligns with the existing observation that RF can be slow and requires more training time because of its model complexity [87,88]. However, once built, these trees handled the consistent features efficiently at test time. The DT experiments followed the same principle as RF, because the two methods are closely related.
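The training-time cost of an ensemble can be illustrated with a stripped-down version of the idea. The sketch below is plain bagging [69] of one-level decision trees (stumps) combined by majority vote, not a full Random Forest: it omits the random feature subsetting at each split and uses depth-1 trees. Its only purpose is to show that training repeats tree fitting once per bootstrap sample, so training cost grows with the number of trees, while prediction is a vote over already-built trees. All names and data are hypothetical.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a one-level decision tree on a single feature by minimising training errors."""
    best = None
    for t in sorted(set(xs)):
        for above in (0, 1):
            # Candidate rule: predict `above` when x >= t, otherwise the other class.
            errors = sum((above if x >= t else 1 - above) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, above)
    _, t, above = best
    return lambda x: above if x >= t else 1 - above


def fit_bagged_stumps(xs, ys, n_trees=25, seed=0):
    """Train one stump per bootstrap sample; training cost grows linearly with n_trees."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample n rows with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict_bagged(models, x):
    """Combine the ensemble by majority vote."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


# Hypothetical single-feature index values with binary flood labels.
xs = [0.0, 0.1, 0.2, 0.3, 0.6, 0.7, 0.8, 0.9]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_bagged_stumps(xs, ys)
```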

In the KNN experiments, training time was short but test time was long. This is consistent with KNN being a lazy learner: it does not produce a trained model, but instead compares each unknown sample against the stored training samples during testing [76].
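This lazy-learning behaviour can be observed by timing a minimal k-NN with `time.perf_counter`: `fit` only stores the training samples, while `predict` computes a distance to every stored sample for each query. This is a hypothetical sketch on synthetic one-dimensional data, not the benchmark used in this study; absolute timings depend on the machine.

```python
import time
from math import dist


class LazyKNN:
    """Minimal k-NN: fit() only stores data; every distance is computed in predict()."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, xs, ys):
        self.xs, self.ys = list(xs), list(ys)  # no model is built at training time
        return self

    def predict(self, queries):
        out = []
        for q in queries:
            # Compare the query against every stored training sample.
            order = sorted(range(len(self.xs)), key=lambda i: dist(self.xs[i], q))
            labels = [self.ys[i] for i in order[: self.k]]
            out.append(max(set(labels), key=labels.count))  # majority vote
        return out


def timed(fn, *args):
    """Return (result, elapsed seconds) for a single call."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Hypothetical synthetic data: 2000 one-feature samples, labelled 1 at or above 1.0.
train_x = [(i / 1000,) for i in range(2000)]
train_y = [1 if x[0] >= 1.0 else 0 for x in train_x]
model = LazyKNN(k=3)
_, fit_seconds = timed(model.fit, train_x, train_y)
preds, predict_seconds = timed(model.predict, [(0.2,), (1.5,)] * 100)
print(f"fit: {fit_seconds:.6f} s, predict: {predict_seconds:.6f} s")
```

Test cost scales with the number of training samples times the number of queries, which is why KNN's testing time, rather than its training time, stands out.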

4.4. Application to Sustainable Development

One of the United Nations Sustainable Development Goals is to reduce disaster risk [6]. Satellite-based flood mapping is currently a post-disaster analysis, but it supports this goal by identifying the spatial impact of floods in urban areas. This work can be combined with other research to better identify the risks of floodplains and to better prepare communities [89]. Although satellite systems are unlikely to be usable during a flood event because of cloud cover, similar approaches can be applied to other remote-sensing systems, such as drones or planes, which would provide ‘live action’ sensing of a flood event and could help emergency services locate people trapped by the floods.

Given the small size of this case study, it is difficult to generalise the outcomes to other areas, particularly to urban areas with different features, environments, and socio-economic contexts. However, more accurate flood mapping can provide governments at the national, state, or local level with more information to reduce the impact of floods. In Brisbane, previous floods have seen more dams built with national or state government funding [90] and proposals to better manage water flow by engineering fluvial transects [91]. At the council level, frequently flooded houses can be bought and repurposed (for example, as a park) or retrofitted (for example, by raising the house onto stilts) [92,93], and there are recommendations to build more evacuation centres. In addition, councils may ban further housing in those areas [94].

In terms of urban sustainable development, decisions such as these affect environmental, economic, and social dimensions [95]. For example, purchasing a house is significantly less expensive than building a dam, but can come with significant social costs to an individual homeowner. Addressing these issues will require collaboration between researchers, governments, and the community. Over time, it can contribute to a sustainable path for people living in urban locations to better adapt to global climate change.

4.5. Future Research

Ultimately, more research is needed on flooding in urban environments. Future work could focus on three aspects. First, other machine learning models, such as convolutional neural networks (CNNs), could be explored, as they can provide a more nuanced analysis. Second, the set of spectral indices could be expanded: each index captures unique aspects of the landscape, so incorporating more of them might provide a more comprehensive understanding of flooded areas. Third, different thresholds for the indices could be evaluated to identify the optimal threshold for each index.
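The third direction, choosing a threshold for an index, could be approached as a simple search. The sketch below scores a set of candidate thresholds by F1 on labelled pixels and keeps the best one; the candidate values, data, and function names are hypothetical, and F1 is only one possible selection criterion. In practice the threshold should be chosen on data held out from the final evaluation; otherwise the reported score will be optimistic.

```python
def f1_at_threshold(values, labels, threshold):
    """F1 for the flooding class when pixels with value >= threshold are labelled flooding."""
    preds = [1 if v >= threshold else 0 for v in values]
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(values, labels, candidates):
    """Return the candidate threshold with the highest F1 (the first one on ties)."""
    return max(candidates, key=lambda t: f1_at_threshold(values, labels, t))


# Hypothetical index values for six labelled pixels and four candidate thresholds.
values = [0.05, 0.12, 0.2, 0.35, 0.4, 0.6]
labels = [0, 0, 0, 1, 1, 1]
print(best_threshold(values, labels, [0.1, 0.2, 0.3, 0.4]))  # 0.3
```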

Finer-resolution imagery, for example from drones or planes, could also be used to distinguish between the land types found in urban environments, such as houses, apartments, roads, parks, gardens, and pools.

5. Conclusions

Floods have had one of the most substantial impacts on populations of any natural disaster [96]. A key feature of flood assessment has been the use of remote-sensing imagery from satellites. This research investigated four machine learning algorithms (Decision Tree, Random Forest, Naïve Bayes, and K-Nearest Neighbours) and four spectral indices (peak-NDWI, dNDWI, CNDWI, and NDII) using satellite images of the Brisbane February 2022 floods.

In terms of accuracy and F1 score, the four algorithms performed similarly, but the long training and test times of RF should be considered a disadvantage. There were some differences between the indices. In particular, the peak-NDWI performed worse than the other indices, which supports previous research [32]. The other indices included a temporal aspect, either as a difference (dNDWI and NDII) or as a concatenation of two instances (CNDWI), which appeared to make a difference.

In conclusion, this research contributes to the growing body of work fusing machine learning and remote-sensing indices. It analysed the performance of four popular machine learning algorithms with a popular spectral index and three novel indices in assessing floods. These methods also open several promising prospects for future research in mapping floods, as well as in classifying other natural disasters.

Author Contributions

Conceptualisation, L.L.; data curation, L.L.; formal analysis, L.L.; investigation, L.L.; methodology, L.L.; project administration, A.W.; resources, L.L. and A.W.; software, L.L., A.W. and T.C.; supervision, A.W. and T.C.; validation, L.L.; visualisation, L.L.; writing—original draft, L.L.; writing—review and editing, L.L., A.W. and T.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to thank N. Levin and S. Phinn for sharing valuable information about their PlanetScope materials.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jayaweera, L.; Wasko, C.; Nathan, R.; Johnson, F. Non-stationarity in extreme rainfalls across Australia. J. Hydrol. 2023, 624, 129872.

- Blöschl, G.; Hall, J.; Viglione, A.; Perdigão, R.A.P.; Parajka, J.; Merz, B.; Lun, D.; Arheimer, B.; Aronica, G.T.; Bilibashi, A.; et al. Changing climate both increases and decreases European river floods. Nature 2019, 573, 108–111.

- Rajib, A.; Zheng, Q.; Lane, C.R.; Golden, H.E.; Christensen, J.R.; Isibor, I.I.; Johnson, K. Human alterations of the global floodplains 1992–2019. Sci. Data 2023, 10, 499.

- Quinn, N.; Bates, P.D.; Neal, J.; Smith, A.; Wing, O.; Sampson, C.; Smith, J.; Heffernan, J. The Spatial Dependence of Flood Hazard and Risk in the United States. Water Resour. Res. 2019, 55, 1890–1911.

- United Nations General Assembly. Transforming Our World: The 2030 Agenda for Sustainable Development, A/RES/70/1. Available online: https://www.unfpa.org/resources/transforming-our-world-2030-agenda-sustainable-development (accessed on 25 January 2024).

- Disaster Risk Reduction|Department of Economic and Social Affairs. Available online: https://sdgs.un.org/topics/disaster-risk-reduction (accessed on 25 January 2024).

- Laframboise, M.N.; Loko, M.B. Natural Disasters: Mitigating Impact, Managing Risks; International Monetary Fund: Washington, DC, USA, 2012.

- Rieger, K. Multi-hazards, displaced people’s vulnerability and resettlement: Post-earthquake experiences from Rasuwa district in Nepal and their connections to policy loopholes and reconstruction practices. Prog. Disaster Sci. 2021, 11, 100187.

- Rusk, J.; Maharjan, A.; Tiwari, P.; Chen, T.H.K.; Shneiderman, S.; Turin, M.; Seto, K.C. Multi-hazard susceptibility and exposure assessment of the Hindu Kush Himalaya. Sci. Total Environ. 2022, 804, 150039.

- Beaglehole, B.; Mulder, R.T.; Frampton, C.M.; Boden, J.M.; Newton-Howes, G.; Bell, C.J. Psychological distress and psychiatric disorder after natural disasters: Systematic review and meta-analysis. Br. J. Psychiatry 2018, 213, 716–722.

- Zúñiga, E.; Magaña, V.; Piña, V. Effect of Urban Development in Risk of Floods in Veracruz, Mexico. Geosciences 2020, 10, 402.

- Miles, S. Flood-Impacted Homeowners Accept Buy Back Offers. Available online: https://statements.qld.gov.au/statements/96352 (accessed on 6 March 2024).

- Gonenc, A.; Ozerdem, M.S.; Acar, E. Comparison of NDVI and RVI Vegetation Indices Using Satellite Images. In Proceedings of the 2019 8th International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Istanbul, Turkey, 16–19 July 2019; pp. 1–4.

- Singh, V.; Singh, K. Development of Statistical Based Decision Tree Algorithm for Mixed Class Classification with Sentinel-2 Data. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2304–2307.

- Said, N.; Ahmad, K.; Riegler, M.; Pogorelov, K.; Hassan, L.; Ahmad, N.; Conci, N. Natural disasters detection in social media and satellite imagery: A survey. Multimed. Tools Appl. 2019, 78, 31267–31302.

- Sarker, C.; Mejias, L.; Maire, F.; Woodley, A. Flood Mapping with Convolutional Neural Networks Using Spatio-Contextual Pixel Information. Remote Sens. 2019, 11, 2331.

- Dalponte, M.; Bruzzone, L.; Gianelle, D. Fusion of Hyperspectral and LIDAR Remote Sensing Data for Classification of Complex Forest Areas. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1416–1427.

- Li, Z.; Itti, L. Saliency and Gist Features for Target Detection in Satellite Images. IEEE Trans. Image Process. 2011, 20, 2017–2029.

- Rhee, J.; Im, J.; Carbone, G.J. Monitoring agricultural drought for arid and humid regions using multi-sensor remote sensing data. Remote Sens. Environ. 2010, 114, 2875–2887.

- Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Hyperspectral Vegetation Indices and Their Relationships with Agricultural Crop Characteristics. Remote Sens. Environ. 2000, 71, 158–182.

- Sobrino, J.A.; Raissouni, N. Toward remote sensing methods for land cover dynamic monitoring: Application to Morocco. Int. J. Remote Sens. 2000, 21, 353–366.

- Dobrinić, D.; Gašparović, M.; Župan, R. Horizontal Accuracy Assessment of Planetscope, Rapideye and Worldview-2 Satellite Imagery. In Proceedings of the International Multidisciplinary Scientific GeoConference: SGEM, Albena, Bulgaria, 2–8 July 2018; Volume 18, pp. 129–136.

- Ganci, G.; Bilotta, G.; Calvari, S.; Cappello, A.; Del Negro, C.; Herault, A. Volcanic Hazard Monitoring Using Multi-Source Satellite Imagery. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 1903–1906.

- Carn, S.A.; Krotkov, N.A.; Theys, N.; Li, C. Advances in UV Satellite Monitoring of Volcanic Emissions. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 973–976.

- Tian, X.r.; Douglas, M.J.; Shu, L.f.; Wang, M.y.; Li, H. Satellite remote-sensing technologies used in forest fire management. J. For. Res. 2005, 16, 73–78.

- Gup, G.; Xie, G. Earthquake cloud over Japan detected by satellite. Int. J. Remote Sens. 2007, 28, 5375–5376.

- De Santis, A.; Marchetti, D.; Spogli, L.; Cianchini, G.; Pavón-Carrasco, F.J.; Franceschi, G.D.; Di Giovambattista, R.; Perrone, L.; Qamili, E.; Cesaroni, C.; et al. Magnetic Field and Electron Density Data Analysis from Swarm Satellites Searching for Ionospheric Effects by Great Earthquakes: 12 Case Studies from 2014 to 2016. Atmosphere 2019, 10, 371.

- Chao, J.; Zhao, Z.; Lai, Z.; Xu, S.; Liu, J.; Li, Z.; Zhang, X.; Chen, Q.; Yang, H.; Zhao, X. Detecting geothermal anomalies using Landsat 8 thermal infrared remote sensing data in the Ruili Basin, Southwest China. Environ. Sci. Pollut. Res. 2022, 30, 32065–32082.

- Huang, D.; Tang, Y.; Qin, R. An evaluation of PlanetScope images for 3D reconstruction and change detection—Experimental validations with case studies. GIScience Remote Sens. 2022, 59, 744–761.

- Deoli, V.; Kumar, D.; Kumar, M.; Kuriqi, A.; Elbeltagi, A. Water spread mapping of multiple lakes using remote sensing and satellite data. Arab. J. Geosci. 2021, 14, 2213.

- Malakeel, G.S.; Abdu Rahiman, K.U.; Vishnudas, S. Flood Risk Assessment Methods—A Review. In Current Trends in Civil Engineering; Lecture Notes in Civil Engineering; Thomas, J., Jayalekshmi, B., Nagarajan, P., Eds.; Springer: Singapore, 2021; pp. 197–208.

- Levin, N.; Phinn, S. Assessing the 2022 Flood Impacts in Queensland Combining Daytime and Nighttime Optical and Imaging Radar Data. Remote Sens. 2022, 14, 5009.

- Xu, H. Modification of Normalised Difference Water Index (NDWI) to Enhance Open Water Features in Remotely Sensed Imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

- Bai, Y.; Zhao, Y.; Shao, Y.; Zhang, X.; Yuan, X. Deep learning in different remote sensing image categories and applications: Status and prospects. Int. J. Remote Sens. 2022, 43, 1800–1847.

- Navalgund, R.R.; Jayaraman, V.; Roy, P.S. Remote sensing applications: An overview. Curr. Sci. 2007, 93, 1747–1766.

- Joyce, K.E.; Wright, K.C.; Samsonov, S.V.; Ambrosia, V.G. Remote sensing and the disaster management cycle. In Advances in Geoscience and Remote Sensing; IntechOpen: London, UK, 2009.

- Ahuja, S.; Biday, S. A Survey of Satellite Image Enhancement Techniques. Int. J. Adv. Innov. Res. 2018, 2, 131–136.

- Coops, N.C.; Tooke, T.R. Introduction to Remote Sensing. In Learning Landscape Ecology: A Practical Guide to Concepts and Techniques; Gergel, S.E., Turner, M.G., Eds.; Springer: New York, NY, USA, 2017; pp. 3–19.

- Roy, D.P.; Huang, H.; Houborg, R.; Martins, V.S. A global analysis of the temporal availability of PlanetScope high spatial resolution multi-spectral imagery. Remote Sens. Environ. 2021, 264, 112586.

- PlanetScope. Available online: https://developers.planet.com/docs/data/planetscope/ (accessed on 28 August 2023).

- Qayyum, N.; Ghuffar, S.; Ahmad, H.M.; Yousaf, A.; Shahid, I. Glacial Lakes Mapping Using Multi Satellite PlanetScope Imagery and Deep Learning. ISPRS Int. J. Geo-Inf. 2020, 9, 560.

- Wakabayashi, H.; Hongo, C.; Igarashi, T.; Asaoka, Y.; Tjahjono, B.; Permata, I.R.R. Flooded Rice Paddy Detection Using Sentinel-1 and PlanetScope Data: A Case Study of the 2018 Spring Flood in West Java, Indonesia. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6291–6301.

- Niroumand-Jadidi, M.; Bovolo, F.; Bruzzone, L.; Gege, P. Physics-based Bathymetry and Water Quality Retrieval Using PlanetScope Imagery: Impacts of 2020 COVID-19 Lockdown and 2019 Extreme Flood in the Venice Lagoon. Remote Sens. 2020, 12, 2381.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Tkachenko, N.; Zubiaga, A.; Procter, R. WISC at MediaEval 2017: Multimedia satellite task. In Proceedings of the Working Notes Proceedings of the MediaEval 2017 Workshop, Dublin, Ireland, 13–15 September 2017; Volume 1984.

- Ogashawara, I.; Curtarelli, M.; Ferreira, C. The Use of Optical Remote Sensing for Mapping Flooded Areas. J. Eng. Res. Appl. 2013, 3, 1956–1960.

- Mueller, N.; Lewis, A.; Roberts, D.; Ring, S.; Melrose, R.; Sixsmith, J.; Lymburner, L.; McIntyre, A.; Tan, P.; Curnow, S.; et al. Water observations from space: Mapping surface water from 25 years of Landsat imagery across Australia. Remote Sens. Environ. 2016, 174, 341–352.

- Taloor, A.K.; Drinder Singh, M.; Chandra Kothyari, G. Retrieval of land surface temperature, normalized difference moisture index, normalized difference water index of the Ravi basin using Landsat data. Appl. Comput. Geosci. 2021, 9, 100051.

- Mallinis, G.; Gitas, I.Z.; Giannakopoulos, V.; Maris, F.; Tsakiri-Strati, M. An object-based approach for flood area delineation in a transboundary area using ENVISAT ASAR and LANDSAT TM data. Int. J. Digit. Earth 2013, 6, 124–136.

- Jony, R.I.; Woodley, A.; Raj, A.; Perrin, D. Ensemble Classification Technique for Water Detection in Satellite Images. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, ACT, Australia, 10–13 December 2018; pp. 1–8.

- Sarker, C.; Mejias, L.; Maire, F.; Woodley, A. Evaluation of the Impact of Image Spatial Resolution in Designing a Context-Based Fully Convolution Neural Networks for Flood Mapping. In Proceedings of the 2019 Digital Image Computing: Techniques and Applications (DICTA), Perth, WA, Australia, 2–4 December 2019; pp. 1–8.

- Chamola, V.; Hassija, V.; Gupta, S.; Goyal, A.; Guizani, M.; Sikdar, B. Disaster and Pandemic Management Using Machine Learning: A Survey. IEEE Internet Things J. 2021, 8, 16047–16071.

- Mazhar, S.; Sun, G.; Wang, Z.; Liang, H.; Zhang, H.; Li, Y. Flood Mapping and Classification Jointly Using MuWI and Machine Learning Techniques. In Proceedings of the 2021 International Conference on Control, Automation and Information Sciences (ICCAIS), Xi’an, China, 14–17 October 2021; pp. 662–667.

- Chen, J.; Huang, G.; Chen, W. Towards better flood risk management: Assessing flood risk and investigating the potential mechanism based on machine learning models. J. Environ. Manag. 2021, 293, 112810.

- Feng, Q.; Liu, J.; Gong, J. Urban Flood Mapping Based on Unmanned Aerial Vehicle Remote Sensing and Random Forest Classifier—A Case of Yuyao, China. Water 2015, 7, 1437–1455.

- Nery, T.; Sadler, R.; Solis-Aulestia, M.; White, B.; Polyakov, M.; Chalak, M. Comparing supervised algorithms in Land Use and Land Cover classification of a Landsat time-series. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 5165–5168.

- Sarker, C.; Alvarez, L.M.; Woodley, A. Integrating Recursive Bayesian Estimation with Support Vector Machine to Map Probability of Flooding from Multispectral Landsat Data. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 30 November–2 December 2016; pp. 1–8.

- Chen, W.; Li, Y.; Xue, W.; Shahabi, H.; Li, S.; Hong, H.; Wang, X.; Bian, H.; Zhang, S.; Pradhan, B.; et al. Modeling flood susceptibility using data-driven approaches of naïve Bayes tree, alternating decision tree, and random forest methods. Sci. Total Environ. 2020, 701, 134979.

- Müller, A.C.; Guido, S. Introduction to Machine Learning with Python; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2016.

- Flood—Awareness—Historic—Brisbane River and Creek Floods—Feb-2022. Available online: https://www.spatial-data.brisbane.qld.gov.au/datasets/26f2d6ad138043c69326ce0e2259dfd8_0/about (accessed on 24 February 2024).

- Grantham, S. #bnefloods: An analysis of the Queensland Government media conferences during the 2022 Brisbane floods. Aust. J. Emerg. Manag. 2023, 38, 42–48.

- Planet APIs. Available online: https://developers.planet.com/docs/apis (accessed on 16 September 2023).

- World Water Bodies. Available online: https://hub.arcgis.com/content/esri::world-water-bodies (accessed on 5 November 2023).

- Neighbourhood, Y. Flood Mapping Update 2022; Brisbane City Council: Brisbane, QLD, Australia, 2022.

- ArcGIS Online. Available online: https://www.arcgis.com (accessed on 17 September 2023).

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

- Belson, W.A. Matching and Prediction on the Principle of Biological Classification. J. R. Stat. Soc. Ser. (Appl. Stat.) 1959, 8, 65–75.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Vikramkumar; B, V.; Trilochan. Bayes and Naive Bayes Classifier. arXiv 2014.

- Fix, E.; Hodges, J. Discriminatory Analysis—Nonparametric Discrimination: Consistency Properties; Technical Report; USAF School of Aviation Medicine: Dayton, OH, USA, 1951.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based Detailed Vegetation Classification with Airborne High Spatial Resolution Remote Sensing Imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

- Maselli, F.; Chirici, G.; Bottai, L.; Corona, P.; Marchetti, M. Estimation of Mediterranean forest attributes by the application of k-NN procedures to multitemporal Landsat ETM+ images. Int. J. Remote Sens. 2005, 26, 3781–3796.

- Altman, N.S. An Introduction to Kernel and Nearest-Neighbor Nonparametric Regression. Am. Stat. 1992, 46, 175–185.

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Fan, X.; Lu, Y.; Liu, Y.; Li, T.; Xun, S.; Zhao, X. Validation of Multiple Soil Moisture Products over an Intensive Agricultural Region: Overall Accuracy and Diverse Responses to Precipitation and Irrigation Events. Remote Sens. 2022, 14, 3339.

- Abraham, S.; V R, J.; Thomas, S.; Jose, B. Comparative Analysis of Various Machine Learning Techniques for Flood Prediction. In Proceedings of the 2022 International Conference on Innovative Trends in Information Technology (ICITIIT), Kottayam, India, 12–13 February 2022; pp. 1–5.

- Pearson, K.; Henrici, O.M.F.E. III. Contributions to the mathematical theory of evolution. Philos. Trans. R. Soc. A 1894, 185, 71–110.

- Lomax, R.G. An Introduction to Statistical Concepts; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 2007.

- Tukey, J.W. Comparing Individual Means in the Analysis of Variance. Biometrics 1949, 5, 99–114.

- Ahmad, M.W.; Reynolds, J.; Rezgui, Y. Predictive modelling for solar thermal energy systems: A comparison of support vector regression, random forest, extra trees and regression trees. J. Clean. Prod. 2018, 203, 810–821.

- Tan, Y.; Shenoy, P.P. A bias-variance based heuristic for constructing a hybrid logistic regression-naïve Bayes model for classification. Int. J. Approx. Reason. 2020, 117, 15–28.

- Ramezan, C.A.; Warner, T.A.; Maxwell, A.E.; Price, B.S. Effects of Training Set Size on Supervised Machine-Learning Land-Cover Classification of Large-Area High-Resolution Remotely Sensed Data. Remote Sens. 2021, 13, 368.

- Han, T.; Almeida, J.S.; da Silva, S.P.P.; Filho, P.H.; de Oliveira Rodrigues, A.W.; de Albuquerque, V.H.C.; Rebouças Filho, P.P. An effective approach to unmanned aerial vehicle navigation using visual topological map in outdoor and indoor environments. Comput. Commun. 2020, 150, 696–702.

- Shkurti, L.; Kabashi, F.; Sofiu, V.; Susuri, A. Performance Comparison of Machine Learning Algorithms for Albanian News articles. IFAC-PapersOnLine 2022, 55, 292–295.

- Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

- Gebrehiwot, A.; Hashemi-Beni, L. Automated Indunation Mapping: Comparison of Methods. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 3265–3268.

- Smith, I.; McAlpine, C. Estimating future changes in flood risk: Case study of the Brisbane River, Australia. Clim. Risk Manag. 2014, 6, 6–17.

- Cook, M. “It Will Never Happen Again”: The Myth of Flood Immunity in Brisbane. J. Aust. Stud. 2018, 42, 328–342.

- Davidson, J.; Architect, J.D.; Bowstead, S. Water Futures: An Integrated Water and Flood Management Plan for Enhancing Liveability in South East Queensland; James Davidson Architect: Woolloongabba, QLD, Australia, 2017.

- Brisbane’s FloodSmart Future Strategy. Available online: https://www.brisbane.qld.gov.au/community-and-safety/community-safety/disasters-and-emergencies/be-prepared/flooding-in-brisbane/flood-strategy/brisbanes-floodsmart-future-strategy (accessed on 5 March 2024).

- Jersey, P.d. Brisbane City Council 2022 Flood Review; Brisbane City Council: Brisbane, QLD, Australia, 2022.

- Sriranganathan, J. Preventing New Development on Flood-Prone Sites. Available online: https://www.jonathansri.com/flooding (accessed on 5 March 2024).

- The Sustainable Development Goals Report 2023: Special Edition|DESA Publications. Available online: https://desapublications.un.org/publications/sustainable-development-goals-report-2023-special-edition (accessed on 5 March 2024).

- Jonkman, S.N. Global Perspectives on Loss of Human Life Caused by Floods. Nat. Hazards 2005, 34, 151–175.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).