MMKG-PAR: Multi-Modal Knowledge Graphs-Based Personalized Attraction Recommendation

Abstract

1. Introduction

- The model employs a time-weighted user embedding strategy, aggregating users’ historical interaction information into the collaborative multi-modal knowledge graph (CMKG, detailed in Section 3.3). This approach captures users’ changing interests over time more precisely, significantly enhancing the accuracy and personalization of the recommendation system.

- The model utilizes advanced feature extractors for multi-modal data, effectively combining features from different modalities to enhance entity representation in the knowledge graph.

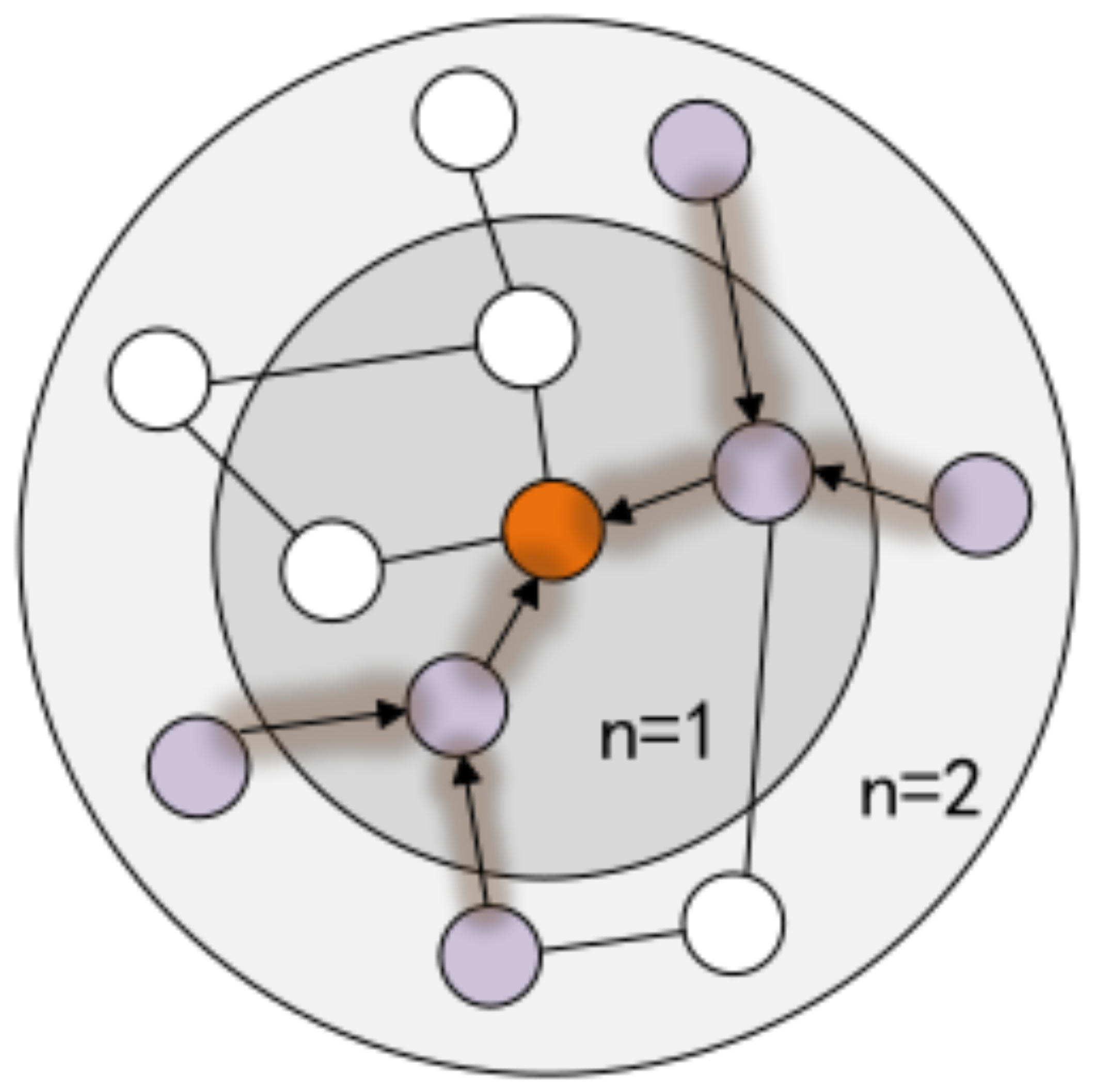

- The model utilizes the message propagation mechanism of graph neural networks (GNNs) [17,18], recursively aggregating information from adjacent nodes to update the feature representation of each node. In this process, by incorporating an attention mechanism [19], it learns the weights of each neighboring node during propagation, thereby enabling the extraction and induction of higher-order information.

- To the best of our knowledge, this is the first application of a multi-modal knowledge graph to tourist attraction recommendation. The multi-modal knowledge graph we developed integrates image and textual information about attractions, offering a more comprehensive view of each site. Furthermore, the feature fusion method presented in this paper effectively consolidates multi-modal information and serves as an example for integrating additional modal features of attractions.

- The MMKG-PAR model proposed in this paper effectively integrates various modal attribute data to enhance the representation of entity nodes. It employs an aggregation strategy based on graph attention mechanisms for efficiently merging higher-order information, resulting in more precise learning and representation.

- Using real data from the “Travel Yunnan” app, this study constructed two tourist attraction CMKG datasets. MMKG-PAR was compared with several state-of-the-art methods, and extensive experiments validated the model’s rationality and effectiveness.

2. Related Work

2.1. Multi-Modal Knowledge Graph

2.2. MMKG-Based Recommendation

3. Problem Formulation

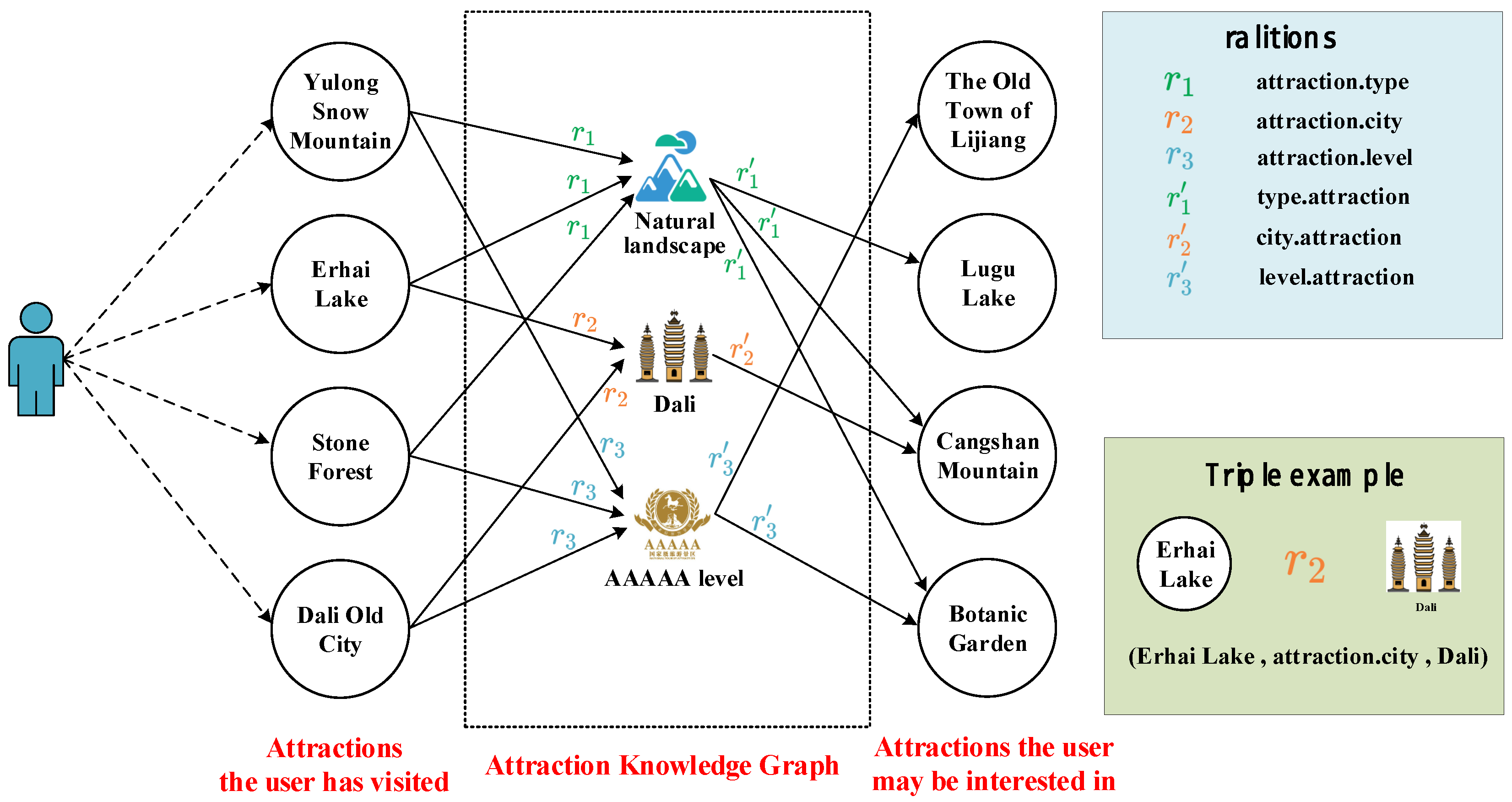

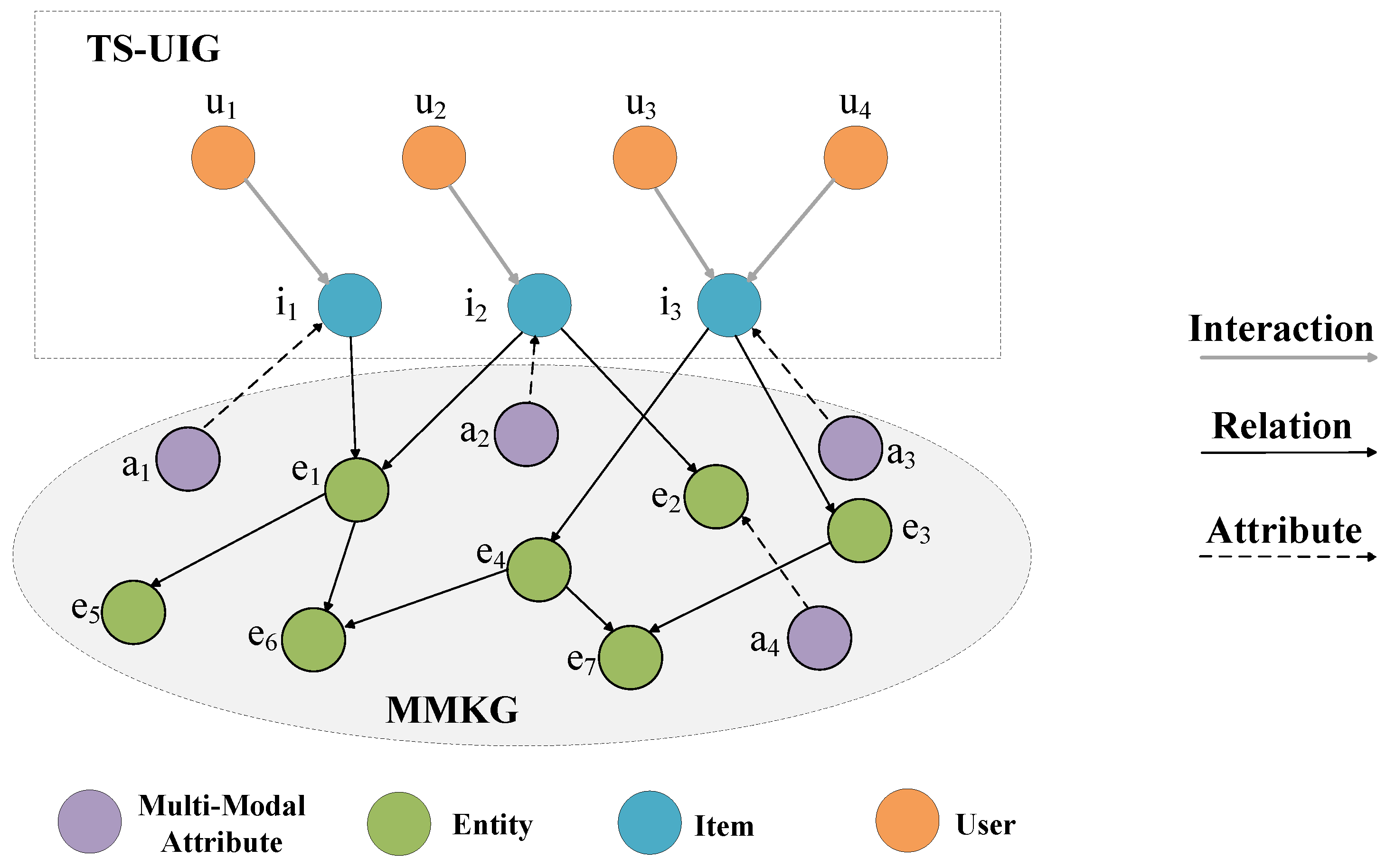

3.1. User-Attraction Interaction Graph

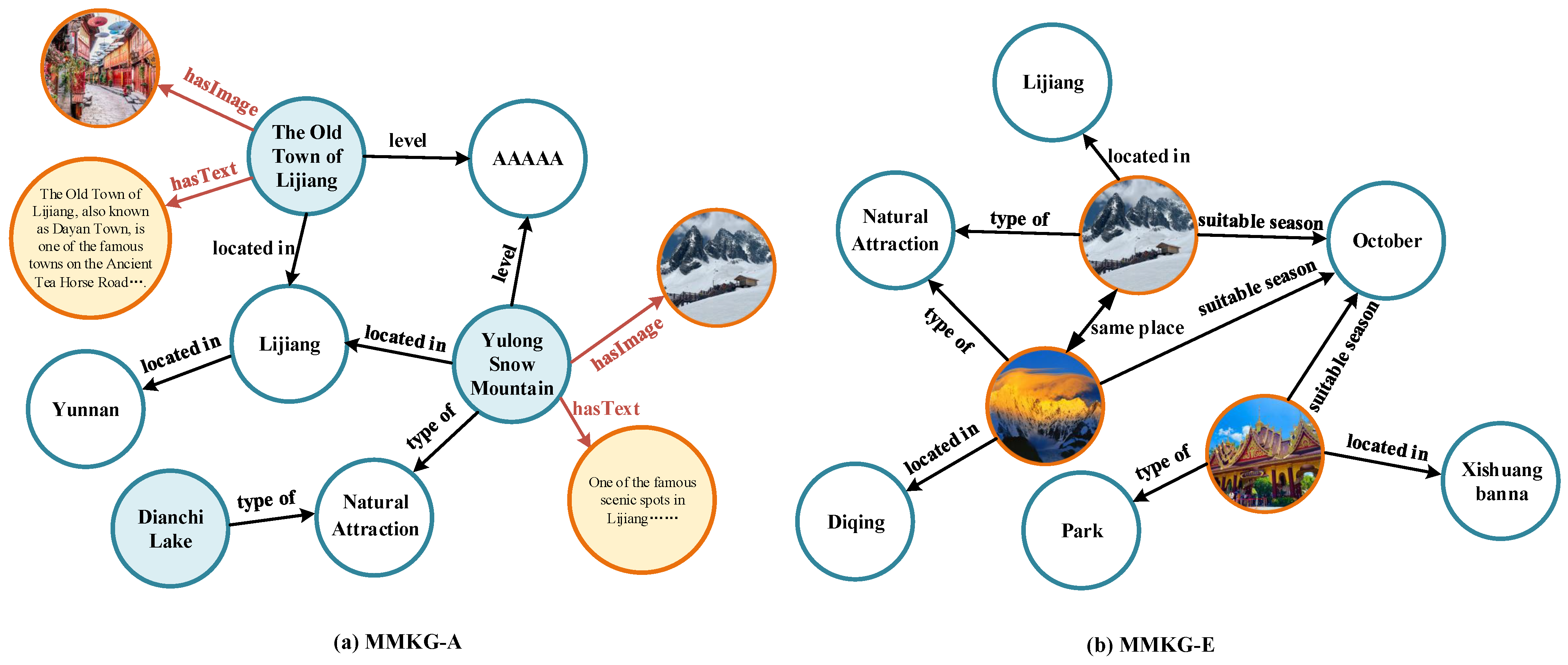

3.2. Multi-Modal Attraction Knowledge Graph

3.3. Collaborative Multi-Modal Knowledge Graph (CMKG)

3.4. Task Description

- Input: the collaborative multi-modal knowledge graph (CMKG), comprising the user-attraction interaction graph and the multi-modal attraction knowledge graph.

- Output: a predictive function that estimates the probability of interaction between a user u and a previously uninteracted attraction i, denoted as $\hat{y}_{ui} = \mathcal{F}(u, i \mid \Theta)$, where $\hat{y}_{ui}$ represents the probability of recommending attraction i to user u, and $\Theta$ denotes the model parameters.

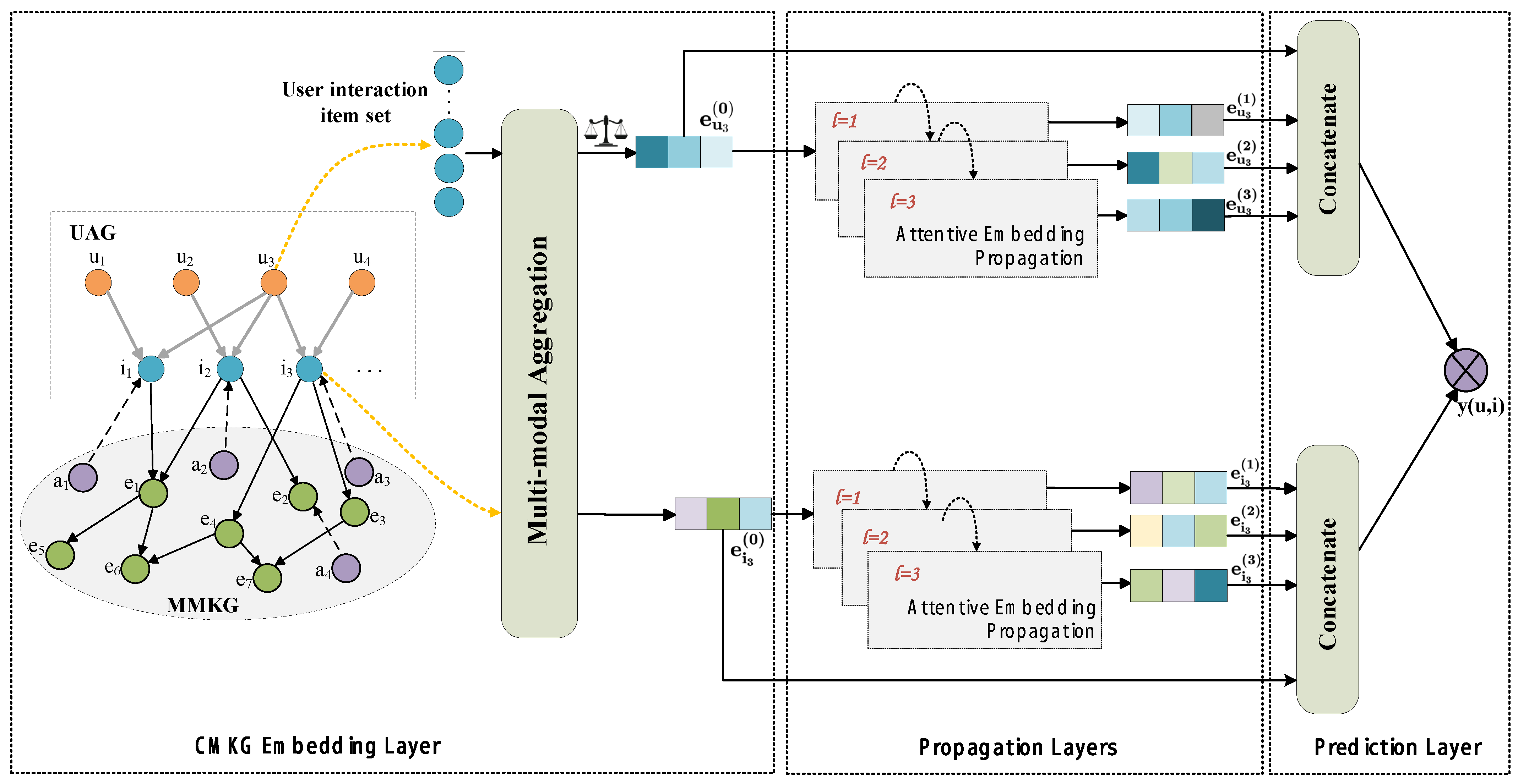

4. Method

4.1. CMKG Embedding Layer

4.1.1. User Embedding

- Direct user embedding method: This is a conventional user embedding strategy in which each user is directly encoded as a unique embedding vector $e_u$. The approach is intuitive and straightforward: the user embeddings are learned by the model and reflect individual user characteristics. To keep the notation consistent, the symbol $e_u$ also denotes the user embedding in the other user representation methods discussed below.

- Interaction-averaged embedding method: In this strategy, the embedding of a user is the arithmetic mean of the embedding vectors of all items they have interacted with. Suppose the set of attractions a user u has interacted with is denoted as $\mathcal{N}_u$, where each attraction $i \in \mathcal{N}_u$ has a corresponding embedding $e_i$. The embedding of user u is then

  $$e_u = \frac{1}{|\mathcal{N}_u|} \sum_{i \in \mathcal{N}_u} e_i$$

- Interaction-time-weighted embedding method: Like the averaged embedding method, this approach combines the embeddings of the attractions a user has interacted with, but it weights each interaction by its recency. Interactions closer to the current time are assigned greater weight, so the embedding vector reflects the user’s recent interests and preferences more strongly. Assuming that the weight of each interaction is given by a function $w(\Delta t_i)$ whose value decreases as the time interval $\Delta t_i$ between interaction i and the current time increases, the embedding vector $e_u$ for user u can be written as the weighted average

  $$e_u = \frac{\sum_{i \in \mathcal{N}_u} w(\Delta t_i)\, e_i}{\sum_{i \in \mathcal{N}_u} w(\Delta t_i)}$$

  where $\mathcal{N}_u$ is the set of attractions user u has interacted with, $e_i$ is the embedding of attraction i, and $w(\Delta t_i)$ is the weight assigned based on the temporal distance between the interaction and the current time. In this study, we define the weight using an exponential decay function, $w(\Delta t) = \exp(-\lambda \Delta t)$, where $\lambda$ is the decay rate and $\Delta t$ is the time elapsed from the occurrence of the interaction to the current time. The exponential form encodes the assumption that a user’s more recent interactions influence their current preferences more than earlier ones. This weighting lets the model track how user preferences change over time and yields user embeddings that are more closely aligned with the user’s current interests.
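The averaged and time-weighted strategies differ only in how the attraction embeddings are combined. The following is a minimal Python sketch of both, assuming a weighted average normalized by the sum of the weights and the exponential decay weight described above. The function names, the toy embeddings, and the decay rate `lam` are illustrative assumptions, not values from the paper.

```python
import math
from typing import List, Sequence


def mean_embedding(item_embs: Sequence[Sequence[float]]) -> List[float]:
    """Interaction-averaged user embedding: arithmetic mean of item embeddings."""
    n = len(item_embs)
    dim = len(item_embs[0])
    return [sum(e[k] for e in item_embs) / n for k in range(dim)]


def time_weighted_embedding(
    item_embs: Sequence[Sequence[float]],
    elapsed: Sequence[float],
    lam: float,
) -> List[float]:
    """Time-weighted user embedding with exponential decay weights.

    elapsed[i] is the time since interaction i (any consistent unit) and
    lam is the decay rate. Dividing by the weight sum is an assumption.
    """
    weights = [math.exp(-lam * dt) for dt in elapsed]
    total = sum(weights)
    dim = len(item_embs[0])
    return [
        sum(w * e[k] for w, e in zip(weights, item_embs)) / total
        for k in range(dim)
    ]


# Toy data (hypothetical): two attractions with 2-dimensional embeddings.
embs = [[1.0, 0.0], [0.0, 1.0]]
avg = mean_embedding(embs)
# The second attraction was visited 1 time unit ago, the first 30 units ago.
recent = time_weighted_embedding(embs, elapsed=[30.0, 1.0], lam=0.1)
```

With `lam = 0` every weight equals 1 and the time-weighted embedding reduces to the plain average, which is a convenient sanity check when tuning the decay rate.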

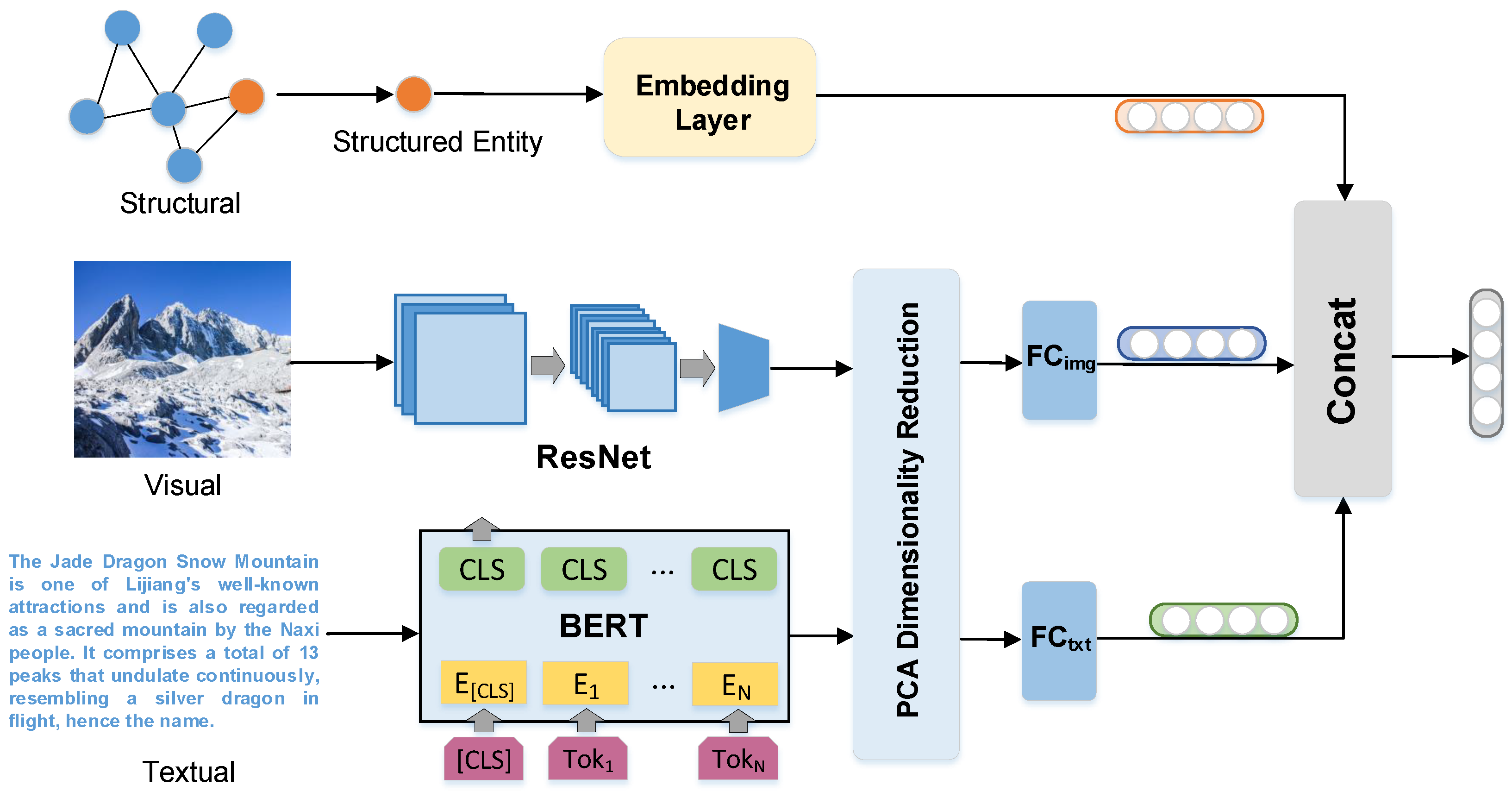

4.1.2. Multi-Modal Data Fusion

- Structured entity: To handle structured entities in the knowledge graph, we assigned a unique identifier (ID) to each entity. An embedding layer transforms these IDs into dense vector representations, namely entity embeddings. The embedding layer learns a mapping from entity IDs to a d-dimensional embedding space, where d is the embedding dimension.

- Image data: We used the deep residual network ResNet-50 [28], pre-trained on the ImageNet dataset, to extract image features; this network is adept at capturing high-level semantic information from images. The visual features extracted by ResNet-50 were reduced in dimensionality through principal component analysis (PCA) [29] and then passed through a fully connected layer followed by a nonlinear activation function, which projects the visual data into the embedding space of structured entities. This ensures that visual features and entity embeddings lie in the same dimensional space. Incorporating the visual features into the entity embeddings in this way enhances the semantic representation of entities in the knowledge graph and provides visual support for multi-modal recommendations.

- Textual data: To encode textual information, we selected BERT [30] (Bidirectional Encoder Representations from Transformers) as our text encoder to extract the rich knowledge associated with entities. Proposed by Devlin et al., BERT set new benchmarks on various NLP tasks owing to its language representation capabilities. We used the pooled output of the pre-trained BERT-Base [30] model to generate a descriptive embedding for each entity, resulting in a 768-dimensional textual feature vector. We also applied PCA to reduce the dimensionality of the BERT output, improving computational efficiency and the scalability of the model. Finally, the textual features were mapped through a fully connected layer so that they align with the feature space of entity embeddings.

- Vector concatenation: Feature vectors from the different modalities are first concatenated and then transformed through a fully connected layer that maps them into a common feature space.

- Vector addition: The feature vectors of structured entities, text, and images are summed to balance the contributions of each modality, and the combined vector then undergoes a nonlinear transformation.

- Maximal vector selection: A single modality is selected to represent the overall entity, specifically the feature vector that contains the maximum amount of information among all modalities.
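The three fusion strategies can be illustrated with a minimal Python sketch. It assumes each modality vector has already been projected to the same dimension. The weight matrix `W`, the bias `b`, the choice of `tanh` as the nonlinearity, and the use of the L2 norm as a stand-in for "amount of information" in maximal vector selection are all assumptions, since the text does not specify them.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dense(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Fully connected layer followed by tanh (the activation is an assumption)."""
    return [
        math.tanh(sum(w * v for w, v in zip(row, x)) + bias)
        for row, bias in zip(W, b)
    ]


def fuse_concat(e_s: Vector, e_t: Vector, e_v: Vector, W: Matrix, b: Vector) -> Vector:
    """Concatenate the three modality vectors, then map 3d -> d."""
    return dense(W, b, e_s + e_t + e_v)  # '+' on lists concatenates


def fuse_add(e_s: Vector, e_t: Vector, e_v: Vector, W: Matrix, b: Vector) -> Vector:
    """Sum the modality vectors element-wise, then apply a nonlinear map d -> d."""
    summed = [s + t + v for s, t, v in zip(e_s, e_t, e_v)]
    return dense(W, b, summed)


def fuse_max(e_s: Vector, e_t: Vector, e_v: Vector) -> Vector:
    """Keep the single modality vector with the largest L2 norm (assumed criterion)."""
    return max((e_s, e_t, e_v), key=lambda x: math.sqrt(sum(c * c for c in x)))


# Toy inputs (hypothetical): structured, textual, and visual vectors with d = 2.
e_s, e_t, e_v = [0.1, 0.2], [0.0, 0.1], [0.2, 0.0]
W_add = [[1.0, 0.0], [0.0, 1.0]]                      # d x d
W_cat = [[1.0, 0.0, 1.0, 0.0, 1.0, 0.0],
         [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]]              # d x 3d
zero = [0.0, 0.0]

added = fuse_add(e_s, e_t, e_v, W_add, zero)
concatenated = fuse_concat(e_s, e_t, e_v, W_cat, zero)
selected = fuse_max(e_s, e_t, e_v)
```

Concatenation keeps every modality's coordinates separate until the linear map, at the cost of a weight matrix three times wider; addition and selection keep the dimension fixed but discard that separation.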

4.1.3. Multi-Modal Knowledge Graph Embedding (MKGE)

4.2. Propagation Layer

4.2.1. Single-Layer Propagation

4.2.2. Information Aggregation

- Sum aggregator: This aggregator sums the two representations and then refines the result through a nonlinear transformation. It is represented by the formula

  $$f_{\mathrm{sum}} = \mathrm{LeakyReLU}\big(W (e_h + e_{\mathcal{N}_h}) + b\big)$$

  where $e_h$ is the embedding of the head entity h, $e_{\mathcal{N}_h}$ is the aggregated representation of its adjacent entities, W is a trainable weight matrix that maps features from various dimensions to a common space, and b is a bias term that gives the linear transformation additional flexibility. The nonlinear activation function enables the model to capture more complex data patterns. The two aggregators below apply a weight matrix W, a bias term b, and the LeakyReLU activation in the same way, so these components are not described again.

- Concat aggregator: The concat aggregator concatenates the two representations and then applies a nonlinear transformation. This approach preserves the information of the original features while providing a rich input for the subsequent linear and nonlinear transformations. The concat aggregator can be represented as

  $$f_{\mathrm{concat}} = \mathrm{LeakyReLU}\big(W (e_h \,\|\, e_{\mathcal{N}_h}) + b\big)$$

  where $e_h \,\|\, e_{\mathcal{N}_h}$ denotes the concatenation of the embedding vector of the head entity h with the aggregated vector of adjacent entity information.

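- Hybrid feature interaction aggregator: Inspired by the bi-interaction aggregator in the KGAT [32] model, the hybrid feature interaction aggregator enhances the capability to capture information by combining two types of feature interaction. Following the bi-interaction form, it can be written as

  $$f_{\mathrm{hybrid}} = \mathrm{LeakyReLU}\big(W_1 (e_h + e_{\mathcal{N}_h}) + b_1\big) + \mathrm{LeakyReLU}\big(W_2 (e_h \odot e_{\mathcal{N}_h}) + b_2\big)$$

  where $e_h$ is the embedding of the head entity h, $e_{\mathcal{N}_h}$ is the aggregated vector of adjacent entity information, and ⊙ denotes the element-wise product. In the experimental section, we comprehensively examine the performance of these three aggregators.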
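The three aggregators can be compared side by side in a small Python sketch. It follows the KGAT-style forms, taking a head-entity embedding `e_h` and an aggregated neighbor vector `e_nh` as inputs. The helper names, the LeakyReLU slope of 0.2, and the toy weights are illustrative assumptions rather than the paper's settings.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def leaky_relu(x: Vector, slope: float = 0.2) -> Vector:
    """Element-wise LeakyReLU; the slope value is an assumption."""
    return [v if v > 0 else slope * v for v in x]


def linear(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Affine map W x + b."""
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]


def sum_aggregator(e_h: Vector, e_nh: Vector, W: Matrix, b: Vector) -> Vector:
    """LeakyReLU(W (e_h + e_nh) + b)."""
    return leaky_relu(linear(W, b, [h + n for h, n in zip(e_h, e_nh)]))


def concat_aggregator(e_h: Vector, e_nh: Vector, W: Matrix, b: Vector) -> Vector:
    """LeakyReLU(W (e_h || e_nh) + b), with W of shape d x 2d."""
    return leaky_relu(linear(W, b, e_h + e_nh))  # '+' on lists concatenates


def hybrid_aggregator(e_h: Vector, e_nh: Vector,
                      W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    """Additive branch plus element-wise-product branch, as in bi-interaction."""
    added = leaky_relu(linear(W1, b1, [h + n for h, n in zip(e_h, e_nh)]))
    prod = leaky_relu(linear(W2, b2, [h * n for h, n in zip(e_h, e_nh)]))
    return [a + p for a, p in zip(added, prod)]


# Toy inputs (hypothetical) with d = 2.
e_h, e_nh = [1.0, -2.0], [0.5, 1.0]
eye = [[1.0, 0.0], [0.0, 1.0]]
W_cat = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
zero = [0.0, 0.0]

out_sum = sum_aggregator(e_h, e_nh, eye, zero)
out_cat = concat_aggregator(e_h, e_nh, W_cat, zero)
out_hyb = hybrid_aggregator(e_h, e_nh, eye, zero, eye, zero)
```

The product branch in the hybrid form passes more signal when the head entity and its neighborhood agree in sign on a feature, which the purely additive aggregators cannot express.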

4.2.3. High-Order Propagation

4.3. Prediction Layer

5. Experiments

5.1. Experimental Settings

5.1.1. Datasets Description

5.1.2. Baselines

- BPRMF [34] is a widely used collaborative filtering technique in recommender systems, specifically designed for implicit feedback data. Its core idea revolves around providing a personalized ranking for each user by leveraging a pairwise ranking loss function.

- CKE [35] integrates collaborative filtering and knowledge graph embedding, optimizing the system by combining user interaction data and knowledge graphs to enhance recommendation accuracy through the merging of user behavior and structured knowledge.

- RippleNet [10] enhances user representations by integrating regularization and path-based methods. The model places particular emphasis on including relevant item information within user paths to comprehensively capture user interests and preferences.

- KGAT [32] combines knowledge graphs with recommendation systems, employing attention mechanisms to process entities and relationships within knowledge graphs. This approach enables more precise learning of the complex interactions between users and items, improving recommendation quality.

- MMGCN [21] captures interaction relationships by constructing a user-item bipartite graph for each modality and then applies GCNs to these graphs to learn modality-specific representations.

- MMKDGAT [27] integrates visual information as entities within a knowledge graph and employs GCNs to aggregate neighbors, thereby enhancing the representation of these entities.

5.1.3. Evaluation Protocols and Parameter Settings
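Recall@20 and NDCG@20 are the two metrics reported in the experiments. As a hedged sketch, the Python below implements the common binary-relevance definitions of Recall@K and NDCG@K with a log2 position discount. The paper's exact evaluation protocol is not reproduced here, so the function names and the toy ranking are assumptions for illustration only.

```python
import math
from typing import Sequence, Set


def recall_at_k(ranked: Sequence[int], relevant: Set[int], k: int = 20) -> float:
    """Fraction of a user's held-out items that appear in the top-k ranking."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked: Sequence[int], relevant: Set[int], k: int = 20) -> float:
    """Binary-relevance NDCG: discounted gain divided by the ideal gain."""
    dcg = sum(
        1.0 / math.log2(pos + 2)
        for pos, item in enumerate(ranked[:k])
        if item in relevant
    )
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


# Toy example (hypothetical item IDs): one of three held-out items is in the top 3.
ranking = [3, 1, 7, 5]
held_out = {1, 5, 9}
r = recall_at_k(ranking, held_out, k=3)
n = ndcg_at_k(ranking, held_out, k=3)
```

Recall@K only counts how many held-out items are retrieved, whereas NDCG@K also rewards placing them near the top of the list, which is why the two metrics can rank models differently.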

5.2. Overall Performance Comparison

- On both the MT-6M and MT-13M datasets, MMKG-PAR outperforms all other models on the two key evaluation metrics, Recall@20 and NDCG@20. Compared with the strongest baseline, MMKG-PAR improves Recall@20 by 6.67% and NDCG@20 by 5.30% on MT-6M, and by 3.95% and 3.61%, respectively, on MT-13M.

- BPRMF, a pure collaborative filtering model, performs worse than the models that incorporate knowledge graph information because it does not use any knowledge graph data. KGAT, which combines GCNs and attention mechanisms, shows improved performance over models that solely utilize knowledge graphs, such as CKE and RippleNet.

- Among multi-modal recommendation methods, the performance of MMGCN and MMKDGAT indicates that integrating multi-modal data on top of a knowledge graph is an effective means of enhancing recommendation accuracy. Both models generally surpassed those not utilizing multi-modal data in terms of Recall@20 and NDCG@20; the one exception is MMGCN's NDCG@20 on MT-6M (0.1042, versus 0.1051 for KGAT).

- The performance of MMKG-PAR surpasses that of MMGCN and MMKDGAT, indicating that the multi-modal data fusion strategy employed by MMKG-PAR effectively enhances the representation capability of entities. Consequently, MMKG-PAR demonstrates a more significant improvement in recommendation results.

- The models perform better overall on MT-6M than on MT-13M. This could be because MT-6M filters out users with fewer than seven interactions, together with their data, which ensures higher data quality. Such filtering is particularly beneficial for user embedding methods that rely on interaction time weighting, since a longer interaction history allows user preferences to be captured more accurately.
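The improvement figures can be checked against the reported scores. The sketch below computes the relative gain of MMKG-PAR over the strongest baseline on each metric. The helper name `relative_gain` is hypothetical, and because the calculation starts from the rounded four-decimal scores in the results table, the last digit may differ slightly from the published percentages.

```python
def relative_gain(model: float, baseline: float) -> float:
    """Relative improvement of `model` over `baseline`, in percent."""
    return (model - baseline) / baseline * 100.0


# (MMKG-PAR score, strongest baseline score), copied from the results table.
scores = {
    "MT-6M Recall@20": (0.1505, 0.1411),
    "MT-6M NDCG@20": (0.1132, 0.1075),
    "MT-13M Recall@20": (0.1368, 0.1316),
    "MT-13M NDCG@20": (0.1092, 0.1054),
}

gains = {name: relative_gain(ours, best) for name, (ours, best) in scores.items()}
for name, gain in gains.items():
    print(f"{name}: {gain:.2f}%")
```

The same function applies to the ablation results: passing an ablated score as `model` and the full MMKG-PAR score as `baseline` gives the negative percentages shown next to each variant.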

5.3. Ablation and Effectiveness Analysis

5.3.1. Effect of Parameters

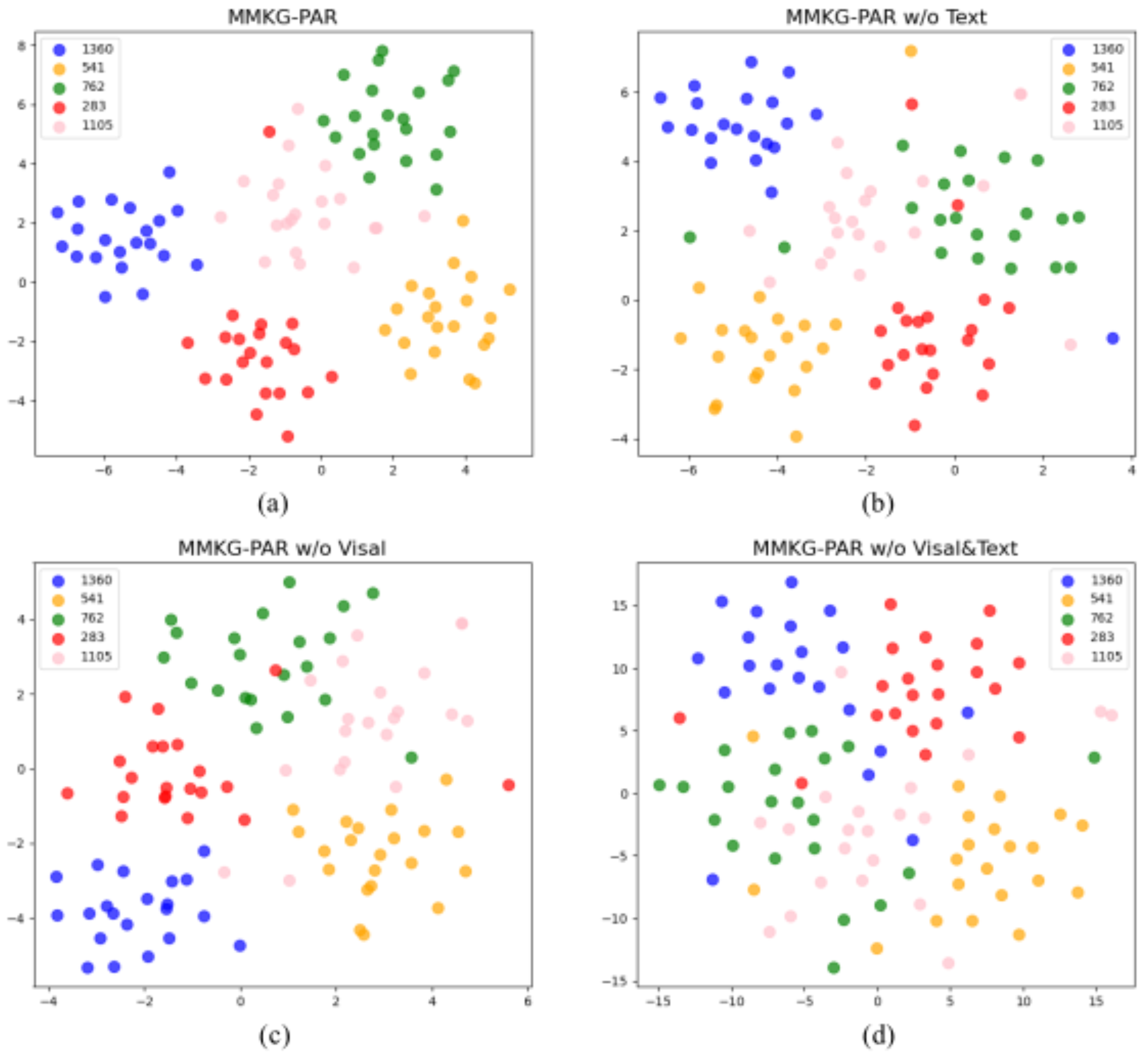

5.3.2. Effect of Multi-Modal Information

- The full MMKG-PAR model, without any ablation, achieved the best performance on both datasets, demonstrating the importance of image and text data for the quality of multi-modal entity representations in knowledge graph recommendation tasks. This effect appeared consistently across datasets of different scales.

- When removing any one modality, the recommendation performance decreased, with the most significant loss occurring when both modalities were removed. However, when considering the results in Table 3 and Table 4, even in the absence of multi-modal attributes, MMKG-PAR’s performance still surpasses knowledge graph baseline models such as CKE, RippleNet, and KGAT. One possible reason for this is that MMKG-PAR, based on time-weighted user embeddings, better reflects users’ latest interests. Additionally, its graph attention mechanism effectively captures neighborhood information, enabling higher-order connectivity and enhancing recommendation accuracy.

- Compared to removing text, the removal of image data had a more significant impact on performance. This could be attributed to the fact that visual information is more likely to capture the user’s attention when browsing information about tourist attractions on online platforms. Therefore, visual information is potentially more crucial for recommendation effectiveness than textual information. Additionally, textual descriptions often contain unrelated information, which may confuse users.

5.3.3. Effect of Model Depth

- In the MT-6M dataset, the model’s performance in terms of Recall@20 and NDCG@20 reached its peak when the number of propagation layers in the graph neural network was increased to three. For the MT-13M dataset, the optimal performance for Recall@20 was observed at four layers of propagation, while the best performance for NDCG@20 occurred at three layers. Overall, the trend indicates that the recommendation effectiveness is optimal when the number of propagation layers is between three and four.

- The increase in the number of layers had a dual effect. On the one hand, augmenting the number of layers can emulate more complex interactions by capturing higher-order relationships. On the other hand, an excessive number of layers might introduce noise, leading to stagnation or even a decline in model performance. As demonstrated in Table 5, when the model depth exceeds three layers, there is no significant enhancement in performance and, in some instances, a decrease in performance is observed.

5.3.4. Effect of Fusion and Aggregation Methods

5.4. Case Study

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Rane, N.; Choudhary, S.; Rane, J. Sustainable Tourism Development Using Leading-Edge Artificial Intelligence (AI), Blockchain, Internet of Things (IoT), Augmented Reality (AR) and Virtual Reality (VR) Technologies (31 October 2023). 2023. Available online: https://ssrn.com/abstract=4642605 (accessed on 2 November 2023).

2. Hamid, R.A.; Albahri, A.S.; Alwan, J.K.; Al-Qaysi, Z.; Albahri, O.S.; Zaidan, A.; Alnoor, A.; Alamoodi, A.H.; Zaidan, B. How smart is e-tourism? A systematic review of smart tourism recommendation system applying data management. Comput. Sci. Rev. 2021, 39, 100337.

3. Bhagavatula, C.; Feldman, S.; Power, R.; Ammar, W. Content-based citation recommendation. arXiv 2018, arXiv:1802.08301.

4. Wang, X.; He, X.; Wang, M.; Feng, F.; Chua, T.S. Neural graph collaborative filtering. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 165–174.

5. Koren, Y.; Bell, R.; Volinsky, C. Matrix factorization techniques for recommender systems. Computer 2009, 42, 30–37.

6. Mazlan, I.; Abdullah, N.; Ahmad, N. Exploring the impact of hybrid recommender systems on personalized mental health recommendations. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 935–944.

7. Thorat, P.B.; Goudar, R.M.; Barve, S. Survey on collaborative filtering, content-based filtering and hybrid recommendation system. Int. J. Comput. Appl. 2015, 110, 31–36.

8. Ji, S.; Pan, S.; Cambria, E.; Marttinen, P.; Yu, P.S. A survey on knowledge graphs: Representation, acquisition, and applications. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 494–514.

9. Guo, Q.; Zhuang, F.; Qin, C.; Zhu, H.; Xie, X.; Xiong, H.; He, Q. A survey on knowledge graph-based recommender systems. IEEE Trans. Knowl. Data Eng. 2020, 34, 3549–3568.

10. Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X.; Guo, M. RippleNet: Propagating user preferences on the knowledge graph for recommender systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 417–426.

11. Jiang, X.; Xu, C.; Shen, Y.; Sun, X.; Tang, L.; Wang, S.; Chen, Z.; Wang, Y.; Guo, J. On the Evolution of Knowledge Graphs: A Survey and Perspective. arXiv 2023, arXiv:2310.04835.

12. Liu, F.; Cheng, Z.; Sun, C.; Wang, Y.; Nie, L.; Kankanhalli, M. User diverse preference modeling by multimodal attentive metric learning. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1526–1534.

13. Rafailidis, D.; Kefalas, P.; Manolopoulos, Y. Preference dynamics with multimodal user-item interactions in social media recommendation. Expert Syst. Appl. 2017, 74, 11–18.

14. Matlovičová, K.; Tirpáková, E.; Mocák, P. City brand image: Semiotic perspective a case study of Prague. Folia Geogr. 2019, 61, 120.

15. Zhu, X.; Li, Z.; Wang, X.; Jiang, X.; Sun, P.; Wang, X.; Xiao, Y.; Yuan, N.J. Multi-modal knowledge graph construction and application: A survey. IEEE Trans. Knowl. Data Eng. 2022, 36, 715–735.

16. Zhao, Y.; Cai, X.; Wu, Y.; Zhang, H.; Zhang, Y.; Zhao, G.; Jiang, N. MoSE: Modality split and ensemble for multimodal knowledge graph completion. arXiv 2022, arXiv:2210.08821.

17. Gao, C.; Zheng, Y.; Li, N.; Li, Y.; Qin, Y.; Piao, J.; Quan, Y.; Chang, J.; Jin, D.; He, X.; et al. A survey of graph neural networks for recommender systems: Challenges, methods, and directions. ACM Trans. Recomm. Syst. 2023, 1, 1–51.

18. Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81.

19. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

20. Liang, K.; Meng, L.; Liu, M.; Liu, Y.; Tu, W.; Wang, S.; Zhou, S.; Liu, X.; Sun, F. A Survey of Knowledge Graph Reasoning on Graph Types: Static, Dynamic, and Multimodal. arXiv 2022, arXiv:2212.05767.

21. Wei, Y.; Wang, X.; Nie, L.; He, X.; Hong, R.; Chua, T.S. MMGCN: Multi-modal graph convolution network for personalized recommendation of micro-video. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1437–1445.

22. Mei, P.; Zhao, Y.h. Dynamic network link prediction with node representation learning from graph convolutional networks. Sci. Rep. 2024, 14, 538.

23. Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 2019, 6, 11.

24. Yu, D.; Zhang, R.; Jiang, Z.; Wu, Y.; Yang, Y. Graph-revised convolutional network. In Proceedings of the Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2020, Ghent, Belgium, 14–18 September 2020; pp. 378–393.

25. Zheng, S.; Wang, W.; Qu, J.; Yin, H.; Chen, W.; Zhao, L. MMKGR: Multi-hop multi-modal knowledge graph reasoning. In Proceedings of the 2023 IEEE 39th International Conference on Data Engineering (ICDE), Anaheim, CA, USA, 3–7 April 2023; pp. 96–109.

26. Sun, R.; Cao, X.; Zhao, Y.; Wan, J.; Zhou, K.; Zhang, F.; Wang, Z.; Zheng, K. Multi-modal knowledge graphs for recommender systems. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual Event, 19–23 October 2020; pp. 1405–1414.

27. Wang, F.; Zhu, X.; Cheng, X.; Zhang, Y.; Li, Y. MMKDGAT: Multi-modal Knowledge graph-aware Deep Graph Attention Network for remote sensing image recommendation. Expert Syst. Appl. 2024, 235, 121278.

28. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

29. Zou, H.; Xue, L. A selective overview of sparse principal component analysis. Proc. IEEE 2018, 106, 1311–1320.

30. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

31. Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013.

32. Wang, X.; He, X.; Cao, Y.; Liu, M.; Chua, T.S. KGAT: Knowledge graph attention network for recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 950–958.

33. Shi, K.; Zhang, Y.; Jing, B.; Wang, W. Soft BPR Loss for Dynamic Hard Negative Sampling in Recommender Systems. arXiv 2022, arXiv:2211.13912.

34. Rendle, S.; Freudenthaler, C.; Gantner, Z.; Schmidt-Thieme, L. BPR: Bayesian personalized ranking from implicit feedback. arXiv 2012, arXiv:1205.2618.

35. Zhang, F.; Yuan, N.J.; Lian, D.; Xie, X.; Ma, W.Y. Collaborative knowledge base embedding for recommender systems. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 353–362.

36. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, Sardinia, Italy, 13–15 May 2010; pp. 249–256.

37. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

38. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017.

39. Cai, T.T.; Ma, R. Theoretical foundations of t-SNE for visualizing high-dimensional clustered data. J. Mach. Learn. Res. 2022, 23, 13581–13634.

| Abbreviation | Expansion |
|---|---|
| KG | Knowledge graph |
| MMKG | Multi-modal knowledge graph |
| CF | Collaborative filtering |
| CMKG | Collaborative multi-modal knowledge graph |
| GNN | Graph neural network |
| PCA | Principal component analysis |
| MKGE | Multi-modal knowledge graph embedding |
| GCN | Graph convolutional network |

| Statistic | MT-6M | MT-13M |
|---|---|---|
| #users | 49,935 | 240,115 |
| #items | 1,218 | 1,218 |
| #interactions | 660,912 | 1,321,054 |
| #entities | 32,184 | 32,184 |
| #relations | 9 | 9 |
| #triples | 362,527 | 362,527 |

| Model | MT-6M Recall@20 | MT-6M NDCG@20 | MT-13M Recall@20 | MT-13M NDCG@20 |
|---|---|---|---|---|
| BPRMF | 0.1157 | 0.0842 | 0.0821 | 0.0730 |
| CKE | 0.1244 | 0.0918 | 0.0960 | 0.0876 |
| RippleNet | 0.1237 | 0.0954 | 0.1019 | 0.0927 |
| KGAT | 0.1331 | 0.1051 | 0.1236 | 0.1028 |
| MMGCN | 0.1353 | 0.1042 | 0.1316 * | 0.1042 |
| MMKDGAT | 0.1411 * | 0.1075 * | 0.1309 | 0.1054 * |
| MMKG-PAR | 0.1505 | 0.1132 | 0.1368 | 0.1092 |
| Improv.% | 6.67% | 5.30% | 3.95% | 3.61% |

| Variant | MT-6M Recall@20 | MT-6M NDCG@20 | MT-13M Recall@20 | MT-13M NDCG@20 |
|---|---|---|---|---|
| MMKG-PAR | 0.1505 | 0.1132 | 0.1368 | 0.1092 |
| MMKG-PAR w/o Visual | 0.1435 (−4.7%) | 0.1076 (−4.9%) | 0.1324 (−3.2%) | 0.1063 (−2.7%) |
| MMKG-PAR w/o Text | 0.1466 (−2.6%) | 0.1109 (−2.0%) | 0.1347 (−1.5%) | 0.1065 (−2.4%) |
| MMKG-PAR w/o Visual & Text | 0.1416 (−5.9%) | 0.1067 (−5.7%) | 0.1318 (−3.7%) | 0.1047 (−4.1%) |

| Propagation depth | MT-6M Recall@20 | MT-6M NDCG@20 | MT-13M Recall@20 | MT-13M NDCG@20 |
|---|---|---|---|---|
| One layer | 0.1476 | 0.1103 | 0.1346 | 0.1062 |
| Two layers | 0.1497 | 0.1127 | 0.1361 | 0.1087 |
| Three layers | 0.1505 | 0.1132 | 0.1366 | 0.1092 |
| Four layers | 0.1501 | 0.1130 | 0.1368 | 0.1089 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, G.; Li, H.; Li, S.; Wang, B.; Ding, Z. MMKG-PAR: Multi-Modal Knowledge Graphs-Based Personalized Attraction Recommendation. Sustainability 2024, 16, 2211. https://doi.org/10.3390/su16052211
