The Role of Artificial Intelligence Autonomy in Higher Education: A Uses and Gratification Perspective

Abstract

1. Introduction

2. Literature Review

2.1. Artificial Intelligence (AI) in Online Education

2.2. Artificial Autonomy

2.3. Uses and Gratification (U&G) Theory

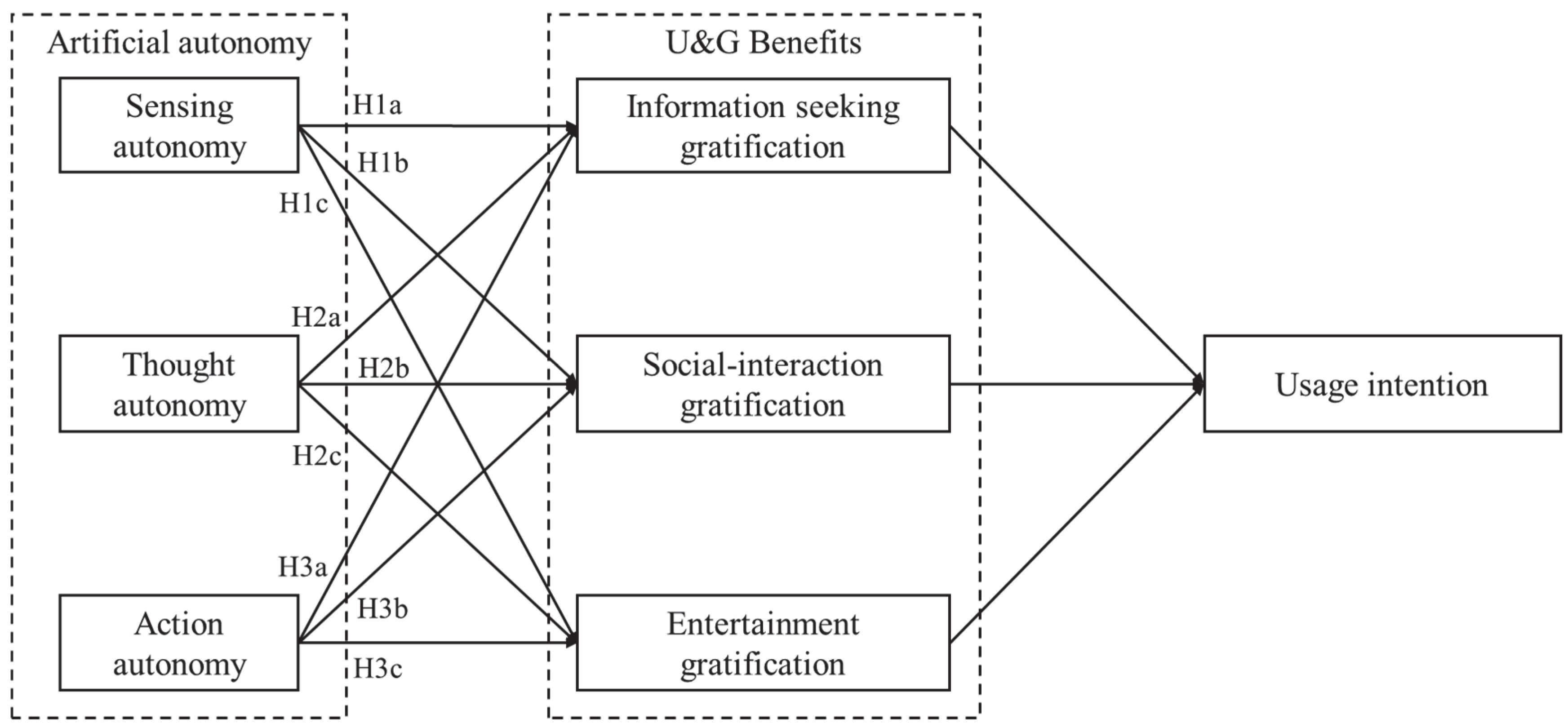

3. Research Model and Hypotheses Development

3.1. Categorizing the Artificial Autonomy of AI Educators

3.2. Identifying the U&G Benefits of AI Educators

3.3. Hypotheses Development

3.3.1. The Sensing Autonomy and Usage Intention of AI Educators

3.3.2. The Thought Autonomy and Usage Intention of AI Educators

3.3.3. The Action Autonomy and Usage Intention of AI Educators

4. Methods

4.1. Sampling and Data Collection

4.2. Measurement Scales

4.3. The Profiles of Respondents

5. Results

5.1. An Assessment of the Measurement Model
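A measurement-model assessment is usually summarized with composite reliability (CR) and average variance extracted (AVE). As an illustration only, the Python sketch below applies the standard formulas associated with Fornell and Larcker to the standardized factor loadings reported for the three autonomy constructs in the measurement-item table that follows the reference list. It assumes standardized loadings and uncorrelated measurement errors. The function names and the `LOADINGS` dictionary are hypothetical, and the values it computes are derived from the table, not quoted from the study's own reported results.

```python
"""Sketch: composite reliability (CR) and average variance extracted (AVE)
computed from standardized factor loadings (illustrative, not the study's code)."""

from typing import Sequence


def average_variance_extracted(loadings: Sequence[float]) -> float:
    # AVE = mean of the squared standardized loadings.
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    # CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    # where each error variance is 1 - loading^2 (assumes uncorrelated errors).
    total = sum(loadings)
    error_variance = sum(1.0 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Hypothetical container; loadings copied from the measurement-item table
# (items adapted from Hu, Lu, Pan, Gong and Yang [33]).
LOADINGS = {
    "Sensing Autonomy": [0.864, 0.832, 0.873, 0.860],
    "Thought Autonomy": [0.792, 0.833, 0.828, 0.821],
    "Action Autonomy": [0.862, 0.885, 0.881, 0.891],
}

if __name__ == "__main__":
    for construct, loadings in LOADINGS.items():
        ave = average_variance_extracted(loadings)
        cr = composite_reliability(loadings)
        # Conventional cut-offs: AVE >= 0.5 and CR >= 0.7.
        meets = ave >= 0.5 and cr >= 0.7
        print(f"{construct}: AVE={ave:.3f}, CR={cr:.3f}, meets cut-offs={meets}")
```

Running the script prints one line per construct. The cut-offs of 0.5 for AVE and 0.7 for CR are common conventions in the PLS-SEM literature (e.g., Hair et al.), not thresholds stated in this section.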

5.2. Structural Model and Hypothesis Testing

5.2.1. The Results of Sensing Autonomy on the Usage Intentions of AI Educators

5.2.2. The Results of Thought Autonomy on the Usage Intentions of AI Educators

5.2.3. The Results of Action Autonomy on the Usage Intentions of AI Educators

6. Discussion

6.1. Theoretical Contributions

6.2. Practical Implications

6.3. Limitations and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Berente, N.; Gu, B.; Recker, J.; Santhanam, R. Managing artificial intelligence. MIS Q. 2021, 45, 1433–1450.

- Grand View Research. AI in Education Market Size, Share & Trends Analysis Report by Component (Solutions, Services), by Deployment, by Technology, by Application, by End-Use, by Region, and Segment Forecasts, 2022–2030. Available online: https://www.grandviewresearch.com/industry-analysis/artificial-intelligence-ai-education-market-report (accessed on 24 December 2023).

- Sparks, S.D. An AI Teaching Assistant Boosted College Students’ Success. Could It Work for High School? Available online: https://www.edweek.org/technology/an-ai-teaching-assistant-boosted-college-students-success-could-it-work-for-high-school/2023/10 (accessed on 24 December 2023).

- Halpern, D.F. Teaching critical thinking for transfer across domains: Disposition, skills, structure training, and metacognitive monitoring. Am. Psychol. 1998, 53, 449.

- Sherman, T.M.; Armistead, L.P.; Fowler, F.; Barksdale, M.A.; Reif, G. The quest for excellence in university teaching. J. High. Educ. 1987, 58, 66–84.

- Anderson, J.R.; Corbett, A.T.; Koedinger, K.R.; Pelletier, R. Cognitive tutors: Lessons learned. J. Learn. Sci. 1995, 4, 167–207.

- Deci, E.L.; Vallerand, R.J.; Pelletier, L.G.; Ryan, R.M. Motivation and education: The self-determination perspective. Educ. Psychol. 1991, 26, 325–346.

- Dörnyei, Z. Motivation in action: Towards a process-oriented conceptualisation of student motivation. Br. J. Educ. Psychol. 2000, 70, 519–538.

- Liu, W.C.; Wang, C.K.J.; Kee, Y.H.; Koh, C.; Lim, B.S.C.; Chua, L. College students’ motivation and learning strategies profiles and academic achievement: A self-determination theory approach. Educ. Psychol. 2014, 34, 338–353.

- Abeysekera, L.; Dawson, P. Motivation and cognitive load in the flipped classroom: Definition, rationale and a call for research. High. Educ. Res. Dev. 2015, 34, 1–14.

- Guilherme, A. AI and education: The importance of teacher and student relations. AI Soc. 2019, 34, 47–54.

- Cope, B.; Kalantzis, M.; Searsmith, D. Artificial intelligence for education: Knowledge and its assessment in AI-enabled learning ecologies. Educ. Philos. Theory 2021, 53, 1229–1245.

- Wang, X.; Li, L.; Tan, S.C.; Yang, L.; Lei, J. Preparing for AI-enhanced education: Conceptualizing and empirically examining teachers’ AI readiness. Comput. Hum. Behav. 2023, 146, 107798.

- Kim, J.H.; Kim, M.; Kwak, D.W.; Lee, S. Home-tutoring services assisted with technology: Investigating the role of artificial intelligence using a randomized field experiment. J. Mark. Res. 2022, 59, 79–96.

- Ouyang, F.; Jiao, P. Artificial intelligence in education: The three paradigms. Comput. Educ. Artif. Intell. 2021, 2, 100020.

- Kim, J.; Merrill, K., Jr.; Xu, K.; Kelly, S. Perceived credibility of an AI instructor in online education: The role of social presence and voice features. Comput. Hum. Behav. 2022, 136, 107383.

- Xia, Q.; Chiu, T.K.; Lee, M.; Sanusi, I.T.; Dai, Y.; Chai, C.S. A self-determination theory (SDT) design approach for inclusive and diverse artificial intelligence (AI) education. Comput. Educ. 2022, 189, 104582.

- Ali, S.; Payne, B.H.; Williams, R.; Park, H.W.; Breazeal, C. Constructionism, ethics, and creativity: Developing primary and middle school artificial intelligence education. In Proceedings of the International Workshop on Education in Artificial Intelligence K-12 (Eduai’19), Macao, China, 10–16 August 2019; pp. 1–4.

- Su, J.; Zhong, Y.; Ng, D.T.K. A meta-review of literature on educational approaches for teaching AI at the K-12 levels in the Asia-Pacific region. Comput. Educ. Artif. Intell. 2022, 3, 100065.

- Touretzky, D.; Gardner-McCune, C.; Martin, F.; Seehorn, D. Envisioning AI for K-12: What should every child know about AI? In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9795–9799.

- Ottenbreit-Leftwich, A.; Glazewski, K.; Jeon, M.; Jantaraweragul, K.; Hmelo-Silver, C.E.; Scribner, A.; Lee, S.; Mott, B.; Lester, J. Lessons learned for AI education with elementary students and teachers. Int. J. Artif. Intell. Educ. 2023, 33, 267–289.

- Kim, K.; Park, Y. A development and application of the teaching and learning model of artificial intelligence education for elementary students. J. Korean Assoc. Inf. Educ. 2017, 21, 139–149.

- Han, H.-J.; Kim, K.-J.; Kwon, H.-S. The analysis of elementary school teachers’ perception of using artificial intelligence in education. J. Digit. Converg. 2020, 18, 47–56.

- Park, W.; Kwon, H. Implementing artificial intelligence education for middle school technology education in Republic of Korea. Int. J. Technol. Des. Educ. 2023, 1–27.

- Zhang, H.; Lee, I.; Ali, S.; DiPaola, D.; Cheng, Y.; Breazeal, C. Integrating ethics and career futures with technical learning to promote AI literacy for middle school students: An exploratory study. Int. J. Artif. Intell. Educ. 2023, 33, 290–324.

- Williams, R.; Kaputsos, S.P.; Breazeal, C. Teacher perspectives on how to train your robot: A middle school AI and ethics curriculum. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; pp. 15678–15686.

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education—Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39.

- Dodds, Z.; Greenwald, L.; Howard, A.; Tejada, S.; Weinberg, J. Components, curriculum, and community: Robots and robotics in undergraduate AI education. AI Mag. 2006, 27, 11.

- Corbelli, G.; Cicirelli, P.G.; D’Errico, F.; Paciello, M. Preventing prejudice emerging from misleading news among adolescents: The role of implicit activation and regulatory self-efficacy in dealing with online misinformation. Soc. Sci. 2023, 12, 470.

- Liu, K.; Tao, D. The roles of trust, personalization, loss of privacy, and anthropomorphism in public acceptance of smart healthcare services. Comput. Hum. Behav. 2022, 127, 107026.

- Shin, D.; Chotiyaputta, V.; Zaid, B. The effects of cultural dimensions on algorithmic news: How do cultural value orientations affect how people perceive algorithms? Comput. Hum. Behav. 2022, 126, 107007.

- Kim, T.W.; Duhachek, A. Artificial intelligence and persuasion: A construal-level account. Psychol. Sci. 2020, 31, 363–380.

- Hu, Q.; Lu, Y.; Pan, Z.; Gong, Y.; Yang, Z. Can AI artifacts influence human cognition? The effects of artificial autonomy in intelligent personal assistants. Int. J. Inf. Manag. 2021, 56, 102250.

- Etzioni, A.; Etzioni, O. AI assisted ethics. Ethics Inf. Technol. 2016, 18, 149–156.

- Mezrich, J.L. Is artificial intelligence (AI) a pipe dream? Why legal issues present significant hurdles to AI autonomy. Am. J. Roentgenol. 2022, 219, 152–156.

- Rijsdijk, S.A.; Hultink, E.J.; Diamantopoulos, A. Product intelligence: Its conceptualization, measurement and impact on consumer satisfaction. J. Acad. Mark. Sci. 2007, 35, 340–356.

- Wang, P. On defining artificial intelligence. J. Artif. Gen. Intell. 2019, 10, 1–37.

- Formosa, P. Robot autonomy vs. human autonomy: Social robots, artificial intelligence (AI), and the nature of autonomy. Minds Mach. 2021, 31, 595–616.

- Beer, J.M.; Fisk, A.D.; Rogers, W.A. Toward a framework for levels of robot autonomy in human-robot interaction. J. Hum.-Robot. Interact. 2014, 3, 74.

- Pelau, C.; Dabija, D.-C.; Ene, I. What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry. Comput. Hum. Behav. 2021, 122, 106855.

- Mishra, A.; Shukla, A.; Sharma, S.K. Psychological determinants of users’ adoption and word-of-mouth recommendations of smart voice assistants. Int. J. Inf. Manag. 2021, 67, 102413.

- Ameen, N.; Tarhini, A.; Reppel, A.; Anand, A. Customer experiences in the age of artificial intelligence. Comput. Hum. Behav. 2021, 114, 106548.

- Ameen, N.; Hosany, S.; Paul, J. The personalisation-privacy paradox: Consumer interaction with smart technologies and shopping mall loyalty. Comput. Hum. Behav. 2022, 126, 106976.

- Jiang, H.; Cheng, Y.; Yang, J.; Gao, S. AI-powered chatbot communication with customers: Dialogic interactions, satisfaction, engagement, and customer behavior. Comput. Hum. Behav. 2022, 134, 107329.

- Lin, J.-S.; Wu, L. Examining the psychological process of developing consumer-brand relationships through strategic use of social media brand chatbots. Comput. Hum. Behav. 2023, 140, 107488.

- Alimamy, S.; Kuhail, M.A. I will be with you Alexa! The impact of intelligent virtual assistant’s authenticity and personalization on user reusage intentions. Comput. Hum. Behav. 2023, 143, 107711.

- Garvey, A.M.; Kim, T.; Duhachek, A. Bad news? Send an AI. Good news? Send a human. J. Mark. 2022, 87, 10–25.

- Hong, J.-W.; Fischer, K.; Ha, Y.; Zeng, Y. Human, I wrote a song for you: An experiment testing the influence of machines’ attributes on the AI-composed music evaluation. Comput. Hum. Behav. 2022, 131, 107239.

- Plaks, J.E.; Bustos Rodriguez, L.; Ayad, R. Identifying psychological features of robots that encourage and discourage trust. Comput. Hum. Behav. 2022, 134, 107301.

- Ulfert, A.-S.; Antoni, C.H.; Ellwart, T. The role of agent autonomy in using decision support systems at work. Comput. Hum. Behav. 2022, 126, 106987.

- Baxter, L.; Egbert, N.; Ho, E. Everyday health communication experiences of college students. J. Am. Coll. Health 2008, 56, 427–436.

- Severin, W.J.; Tankard, J.W. Communication Theories: Origins, Methods, and Uses in the Mass Media; Longman: New York, NY, USA, 1997.

- Cantril, H. Professor Quiz: A Gratifications Study. In Radio Research; Duell, Sloan & Pearce: New York, NY, USA, 1940; pp. 64–93.

- Blumler, J.G.; Katz, E. The Uses of Mass Communications: Current Perspectives on Gratifications Research; Sage Publications: Beverly Hills, CA, USA, 1974; Volume III.

- Rubin, A.M. Media uses and effects: A uses-and-gratifications perspective. In Media Effects: Advances in Theory and Research; Bryant, J., Zillmann, D., Eds.; Lawrence Erlbaum Associates, Inc.: Mahwah, NJ, USA, 1994; pp. 417–436.

- Rubin, A.M. Uses-and-gratifications perspective on media effects. In Media Effects; Routledge: Oxfordshire, UK, 2009; pp. 181–200.

- Cheng, Y.; Jiang, H. How do AI-driven chatbots impact user experience? Examining gratifications, perceived privacy risk, satisfaction, loyalty, and continued use. J. Broadcast. Electron. Media 2020, 64, 592–614.

- Xie, Y.; Zhao, S.; Zhou, P.; Liang, C. Understanding continued use intention of AI assistants. J. Comput. Inf. Syst. 2023, 63, 1424–1437.

- Xie, C.; Wang, Y.; Cheng, Y. Does artificial intelligence satisfy you? A meta-analysis of user gratification and user satisfaction with AI-powered chatbots. Int. J. Hum.-Comput. Interact. 2022, 40, 613–623.

- McLean, G.; Osei-Frimpong, K. Hey Alexa… examine the variables influencing the use of artificial intelligent in-home voice assistants. Comput. Hum. Behav. 2019, 99, 28–37.

- Valentine, A. Uses and gratifications of Facebook members 35 years and older. In The Social Media Industries; Routledge: Oxfordshire, UK, 2013; pp. 166–190.

- Wald, R.; Piotrowski, J.T.; Araujo, T.; van Oosten, J.M.F. Virtual assistants in the family home. Understanding parents’ motivations to use virtual assistants with their Child(dren). Comput. Hum. Behav. 2023, 139, 107526.

- Baek, T.H.; Kim, M. Is ChatGPT scary good? How user motivations affect creepiness and trust in generative artificial intelligence. Telemat. Inform. 2023, 83, 102030.

- Siegel, M. The sense-think-act paradigm revisited. In Proceedings of the 1st International Workshop on Robotic Sensing, Örebro, Sweden, 5–6 June 2003.

- Hayles, N.K. Computing the human. Theory Cult. Soc. 2005, 22, 131–151.

- Luo, X. Uses and gratifications theory and e-consumer behaviors: A structural equation modeling study. J. Interact. Advert. 2002, 2, 34–41.

- Kaur, P.; Dhir, A.; Chen, S.; Malibari, A.; Almotairi, M. Why do people purchase virtual goods? A uses and gratification (U&G) theory perspective. Telemat. Inform. 2020, 53, 101376.

- Azam, A. The effect of website interface features on e-commerce: An empirical investigation using the use and gratification theory. Int. J. Bus. Inf. Syst. 2015, 19, 205–223.

- Boyle, E.A.; Connolly, T.M.; Hainey, T.; Boyle, J.M. Engagement in digital entertainment games: A systematic review. Comput. Hum. Behav. 2012, 28, 771–780.

- Huang, L.Y.; Hsieh, Y.J. Predicting online game loyalty based on need gratification and experiential motives. Internet Res. 2011, 21, 581–598.

- Hsu, L.-C.; Wang, K.-Y.; Chih, W.-H.; Lin, K.-Y. Investigating the ripple effect in virtual communities: An example of Facebook Fan Pages. Comput. Hum. Behav. 2015, 51, 483–494.

- Riskos, K.; Hatzithomas, L.; Dekoulou, P.; Tsourvakas, G. The influence of entertainment, utility and pass time on consumer brand engagement for news media brands: A mediation model. J. Media Bus. Stud. 2022, 19, 1–28.

- Luo, M.M.; Chea, S.; Chen, J.-S. Web-based information service adoption: A comparison of the motivational model and the uses and gratifications theory. Decis. Support Syst. 2011, 51, 21–30.

- Lee, C.S.; Ma, L. News sharing in social media: The effect of gratifications and prior experience. Comput. Hum. Behav. 2012, 28, 331–339.

- Choi, E.-k.; Fowler, D.; Goh, B.; Yuan, J. Social media marketing: Applying the uses and gratifications theory in the hotel industry. J. Hosp. Mark. Manag. 2016, 25, 771–796.

- Darwall, S. The value of autonomy and autonomy of the will. Ethics 2006, 116, 263–284.

- Moreno, A.; Etxeberria, A.; Umerez, J. The autonomy of biological individuals and artificial models. BioSystems 2008, 91, 309–319.

- Schneewind, J.B. The Invention of Autonomy: A History of Modern Moral Philosophy; Cambridge University Press: Cambridge, UK, 1998.

- Formosa, P. Kantian Ethics, Dignity and Perfection; Cambridge University Press: Cambridge, UK, 2017.

- Pal, D.; Babakerkhell, M.D.; Papasratorn, B.; Funilkul, S. Intelligent attributes of voice assistants and user’s love for AI: A SEM-based study. IEEE Access 2023, 11, 60889–60903.

- Schepers, J.; Belanche, D.; Casaló, L.V.; Flavián, C. How smart should a service robot be? J. Serv. Res. 2022, 25, 565–582.

- Falcone, R.; Sapienza, A. The role of decisional autonomy in User-IoT systems interaction. In Proceedings of the 23rd Workshop from Objects to Agents, Genova, Italy, 1–3 September 2022.

- Guo, W.; Luo, Q. Investigating the impact of intelligent personal assistants on the purchase intentions of Generation Z consumers: The moderating role of brand credibility. J. Retail. Consum. Serv. 2023, 73, 103353.

- Ko, H.; Cho, C.-H.; Roberts, M.S. Internet uses and gratifications: A structural equation model of interactive advertising. J. Advert. 2005, 34, 57–70.

- Ki, C.W.C.; Cho, E.; Lee, J.E. Can an intelligent personal assistant (IPA) be your friend? Para-friendship development mechanism between IPAs and their users. Comput. Hum. Behav. 2020, 111, 106412.

- Oeldorf-Hirsch, A.; Sundar, S.S. Social and technological motivations for online photo sharing. J. Broadcast. Electron. Media 2016, 60, 624–642.

- Park, N.; Kee, K.F.; Valenzuela, S. Being immersed in social networking environment: Facebook groups, uses and gratifications, and social outcomes. Cyberpsychol. Behav. 2009, 12, 729–733.

- Eighmey, J. Profiling user responses to commercial web sites. J. Advert. Res. 1997, 37, 59–67.

- Eighmey, J.; McCord, L. Adding value in the information age: Uses and gratifications of sites on the World Wide Web. J. Bus. Res. 1998, 41, 187–194.

- Canziani, B.; MacSween, S. Consumer acceptance of voice-activated smart home devices for product information seeking and online ordering. Comput. Hum. Behav. 2021, 119, 106714.

- Ahadzadeh, A.S.; Pahlevan Sharif, S.; Sim Ong, F. Online health information seeking among women: The moderating role of health consciousness. Online Inf. Rev. 2018, 42, 58–72.

- Gordon, I.D.; Chaves, D.; Dearborn, D.; Hendrikx, S.; Hutchinson, R.; Popovich, C.; White, M. Information seeking behaviors, attitudes, and choices of academic physicists. Sci. Technol. Libr. 2022, 41, 288–318.

- Hernandez, A.A.; Padilla, J.R.C.; Montefalcon, M.D.L. Information seeking behavior in ChatGPT: The case of programming students from a developing economy. In Proceedings of the 2023 IEEE 13th International Conference on System Engineering and Technology (ICSET), Shah Alam, Malaysia, 2 October 2023; pp. 72–77.

- Poitras, E.; Mayne, Z.; Huang, L.; Udy, L.; Lajoie, S. Scaffolding student teachers’ information-seeking behaviours with a network-based tutoring system. J. Comput. Assist. Learn. 2019, 35, 731–746.

- Dinh, C.-M.; Park, S. How to increase consumer intention to use Chatbots? An empirical analysis of hedonic and utilitarian motivations on social presence and the moderating effects of fear across generations. Electron. Commer. Res. 2023, 1–41.

- Aitken, G.; Smith, K.; Fawns, T.; Jones, D. Participatory alignment: A positive relationship between educators and students during online masters dissertation supervision. Teach. High. Educ. 2022, 27, 772–786.

- So, H.-J.; Brush, T.A. Student perceptions of collaborative learning, social presence and satisfaction in a blended learning environment: Relationships and critical factors. Comput. Educ. 2008, 51, 318–336.

- Tackie, H.N. (Dis)connected: Establishing social presence and intimacy in teacher–student relationships during emergency remote learning. AERA Open 2022, 8, 23328584211069525.

- Nguyen, N.; LeBlanc, G. Image and reputation of higher education institutions in students’ retention decisions. Int. J. Educ. Manag. 2001, 15, 303–311.

- Dang, J.; Liu, L. Implicit theories of the human mind predict competitive and cooperative responses to AI robots. Comput. Hum. Behav. 2022, 134, 107300.

- Pal, D.; Vanijja, V.; Thapliyal, H.; Zhang, X. What affects the usage of artificial conversational agents? An agent personality and love theory perspective. Comput. Hum. Behav. 2023, 145, 107788.

- Munnukka, J.; Talvitie-Lamberg, K.; Maity, D. Anthropomorphism and social presence in Human–Virtual service assistant interactions: The role of dialog length and attitudes. Comput. Hum. Behav. 2022, 135, 107343.

- Lv, X.; Yang, Y.; Qin, D.; Cao, X.; Xu, H. Artificial intelligence service recovery: The role of empathic response in hospitality customers’ continuous usage intention. Comput. Hum. Behav. 2022, 126, 106993.

- Jiang, Y.; Yang, X.; Zheng, T. Make chatbots more adaptive: Dual pathways linking human-like cues and tailored response to trust in interactions with chatbots. Comput. Hum. Behav. 2023, 138, 107485.

- Harris-Watson, A.M.; Larson, L.E.; Lauharatanahirun, N.; DeChurch, L.A.; Contractor, N.S. Social perception in Human-AI teams: Warmth and competence predict receptivity to AI teammates. Comput. Hum. Behav. 2023, 145, 107765.

- Rhim, J.; Kwak, M.; Gong, Y.; Gweon, G. Application of humanization to survey chatbots: Change in chatbot perception, interaction experience, and survey data quality. Comput. Hum. Behav. 2022, 126, 107034.

- Mamonov, S.; Koufaris, M. Fulfillment of higher-order psychological needs through technology: The case of smart thermostats. Int. J. Inf. Manag. 2020, 52, 102091.

- Doty, D.H.; Wooldridge, B.R.; Astakhova, M.; Fagan, M.H.; Marinina, M.G.; Caldas, M.P.; Tunçalp, D. Passion as an excuse to procrastinate: A cross-cultural examination of the relationships between Obsessive Internet passion and procrastination. Comput. Hum. Behav. 2020, 102, 103–111.

- Eigenraam, A.W.; Eelen, J.; Verlegh, P.W. Let me entertain you? The importance of authenticity in online customer engagement. J. Interact. Mark. 2021, 54, 53–68.

- Drouin, M.; Sprecher, S.; Nicola, R.; Perkins, T. Is chatting with a sophisticated chatbot as good as chatting online or FTF with a stranger? Comput. Hum. Behav. 2022, 128, 107100.

- Chubarkova, E.V.; Sadchikov, I.A.; Suslova, I.A.; Tsaregorodtsev, A.; Milova, L.N. Educational game systems in artificial intelligence course. Int. J. Environ. Sci. Educ. 2016, 11, 9255–9265.

- Kim, N.-Y.; Cha, Y.; Kim, H.-S. Future English learning: Chatbots and artificial intelligence. Multimed.-Assist. Lang. Learn. 2019, 22, 32–53.

- Huang, J.; Saleh, S.; Liu, Y. A review on artificial intelligence in education. Acad. J. Interdiscip. Stud. 2021, 10, 206–217.

- Pizzoli, S.F.M.; Mazzocco, K.; Triberti, S.; Monzani, D.; Alcañiz Raya, M.L.; Pravettoni, G. User-centered virtual reality for promoting relaxation: An innovative approach. Front. Psychol. 2019, 10, 479.

- Ceha, J.; Lee, K.J.; Nilsen, E.; Goh, J.; Law, E. Can a humorous conversational agent enhance learning experience and outcomes? In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–14.

- Sung, E.C.; Bae, S.; Han, D.-I.D.; Kwon, O. Consumer engagement via interactive artificial intelligence and mixed reality. Int. J. Inf. Manag. 2021, 60, 102382.

- Eppler, M.J.; Mengis, J. The concept of information overload—A review of literature from organization science, accounting, marketing, MIS, and related disciplines. Inf. Soc. Int. J. 2004, 20, 271–305.

- Jarrahi, M.H. Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making. Bus. Horiz. 2018, 61, 577–586.

- Nowak, A.; Lukowicz, P.; Horodecki, P. Assessing artificial intelligence for humanity: Will AI be the our biggest ever advance? or the biggest threat [opinion]. IEEE Technol. Soc. Mag. 2018, 37, 26–34.

- Chong, L.; Zhang, G.; Goucher-Lambert, K.; Kotovsky, K.; Cagan, J. Human confidence in artificial intelligence and in themselves: The evolution and impact of confidence on adoption of AI advice. Comput. Hum. Behav. 2022, 127, 107018.

- Endsley, M.R. Supporting Human-AI Teams: Transparency, explainability, and situation awareness. Comput. Hum. Behav. 2023, 140, 107574.

- Zhang, Z.; Yoo, Y.; Lyytinen, K.; Lindberg, A. The unknowability of autonomous tools and the liminal experience of their use. Inf. Syst. Res. 2021, 32, 1192–1213.

- Cui, Y.; van Esch, P. Autonomy and control: How political ideology shapes the use of artificial intelligence. Psychol. Mark. 2022, 39, 1218–1229.

- Osburg, V.-S.; Yoganathan, V.; Kunz, W.H.; Tarba, S. Can (A)I give you a ride? Development and validation of the cruise framework for autonomous vehicle services. J. Serv. Res. 2022, 25, 630–648.

- Mohammadi, E.; Thelwall, M.; Kousha, K. Can Mendeley bookmarks reflect readership? A survey of user motivations. J. Assoc. Inf. Sci. Technol. 2016, 67, 1198–1209.

- Li, J.; Che, W. Challenges and coping strategies of online learning for college students in the context of COVID-19: A survey of Chinese universities. Sustain. Cities Soc. 2022, 83, 103958.

- Eisenberg, D.; Gollust, S.E.; Golberstein, E.; Hefner, J.L. Prevalence and correlates of depression, anxiety, and suicidality among university students. Am. J. Orthopsychiatry 2007, 77, 534–542.

- Malhotra, Y.; Galletta, D.F. Extending the technology acceptance model to account for social influence: Theoretical bases and empirical validation. In Proceedings of the 32nd Annual Hawaii International Conference on Systems Sciences, Maui, HI, USA, 5–8 January 1999; p. 14.

- Hair, J.F., Jr.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage Publications: Thousand Oaks, CA, USA, 2021.

- Petter, S.; Straub, D.; Rai, A. Specifying formative constructs in information systems research. MIS Q. 2007, 31, 623–656.

- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.-Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879–903.

- Nunnally, J.C. Psychometric Theory; McGraw-Hill Book Company: New York, NY, USA, 1978; pp. 86–113, 190–255.

- Comrey, A.L. A First Course in Factor Analysis; Academic Press: New York, NY, USA, 1973.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Urbach, N.; Ahlemann, F. Structural equation modeling in information systems research using partial least squares. J. Inf. Technol. Theory Appl. 2010, 11, 2.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Kim, S.S.; Malhotra, N.K.; Narasimhan, S. Research note—Two competing perspectives on automatic use: A theoretical and empirical comparison. Inf. Syst. Res. 2005, 16, 418–432.

- Turel, O.; Yuan, Y.; Connelly, C.E. In justice we trust: Predicting user acceptance of e-customer services. J. Manag. Inf. Syst. 2008, 24, 123–151.

- Preacher, K.J.; Hayes, A.F. Asymptotic and resampling strategies for assessing and comparing indirect effects in multiple mediator models. Behav. Res. Methods 2008, 40, 879–891.

- MacMahon, S.J.; Carroll, A.; Osika, A.; Howell, A. Learning how to learn—Implementing self-regulated learning evidence into practice in higher education: Illustrations from diverse disciplines. Rev. Educ. 2022, 10, e3339.

- Broadbent, J.; Poon, W.L. Self-regulated learning strategies & academic achievement in online higher education learning environments: A systematic review. Internet High. Educ. 2015, 27, 1–13.

- Wingate, U. A framework for transition: Supporting ‘learning to learn’ in higher education. High. Educ. Q. 2007, 61, 391–405.

- Sagitova, R. Students’ self-education: Learning to learn across the lifespan. Procedia-Soc. Behav. Sci. 2014, 152, 272–277.

- Chen, C.Y.; Lee, L.; Yap, A.J. Control deprivation motivates acquisition of utilitarian products. J. Consum. Res. 2017, 43, 1031–1047.

- Chebat, J.-C.; Gélinas-Chebat, C.; Therrien, K. Lost in a mall, the effects of gender, familiarity with the shopping mall and the shopping values on shoppers’ wayfinding processes. J. Bus. Res. 2005, 58, 1590–1598.

- Murray, A.; Rhymer, J.; Sirmon, D.G. Humans and technology: Forms of conjoined agency in organizations. Acad. Manag. Rev. 2021, 46, 552–571.

- Möhlmann, M.; Zalmanson, L.; Henfridsson, O.; Gregory, R.W. Algorithmic management of work on online labor platforms: When matching meets control. MIS Q. 2021, 45, 1999–2022.

- Fryberg, S.A.; Markus, H.R. Cultural models of education in American Indian, Asian American and European American contexts. Soc. Psychol. Educ. 2007, 10, 213–246.

- Kim, J.; Merrill, K., Jr.; Xu, K.; Sellnow, D.D. I like my relational machine teacher: An AI instructor’s communication styles and social presence in online education. Int. J. Hum.-Comput. Interact. 2021, 37, 1760–1770.

- Kim, J.; Merrill, K.; Xu, K.; Sellnow, D.D. My teacher is a machine: Understanding students’ perceptions of AI teaching assistants in online education. Int. J. Hum.-Comput. Interact. 2020, 36, 1902–1911.

| Constructs | Items | Factor Loading | Reference |
|---|---|---|---|
| Sensing Autonomy | This AI educator can autonomously be aware of the state of its surroundings. | 0.864 | Hu, Lu, Pan, Gong and Yang [33] |
| | This AI educator can autonomously recognize information from the environment. | 0.832 | |
| | This AI educator can independently recognize objects in the environment. | 0.873 | |
| | This AI educator can independently monitor the status of objects in the environment. | 0.860 | |
| Thought Autonomy | This AI educator can autonomously provide me choices of what to do. | 0.792 | Hu, Lu, Pan, Gong and Yang [33] |
| | This AI educator can independently provide recommendations for action plans for assigned matters. | 0.833 | |
| | This AI educator can independently recommend an implementation plan of the assigned matters. | 0.828 | |
| | This AI educator can autonomously suggest what can be done. | 0.821 | |
| Action Autonomy | This AI educator can independently complete the operation of the skill. | 0.862 | Hu, Lu, Pan, Gong and Yang [33] |
| | This AI educator can independently implement the operation of the skill. | 0.885 | |
| | This AI educator can autonomously perform the operation of the skill. | 0.881 | |
| | This AI educator can carry out the operation of skills autonomously. | 0.891 | |
| Information-seeking gratification | I can use this AI educator to learn more about the lectures. | 0.863 | Lin and Wu [45] |
| | I can use this AI educator to obtain information more quickly. | 0.845 | |
| | I can use this AI educator to be the first to know information. | 0.846 | |
| Social interaction gratification | I can use this AI educator to communicate and interact with it. | 0.751 | Lin and Wu [45] |
| | I can use this AI educator to show concern and support to it. | 0.766 | |
| | I can use this AI educator to get opinions and advice from it. | 0.740 | |
| | I can use this AI educator to give my opinion about it. | 0.789 | |
| | I can use this AI educator to express myself. | 0.775 | |
| Entertainment gratification | I can use this AI educator to be entertained. | 0.865 | Lin and Wu [45] |
| | I can use this AI educator to relax. | 0.852 | |
| | I can use this AI educator to pass the time when bored. | 0.749 | |
| Usage intention | I plan to use the AI educator in the future. | 0.894 | McLean and Osei-Frimpong [60] |
| | I intend to use the AI educator in the future. | 0.894 | |
| | I predict I would use the AI educator in the future. | 0.832 | |
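
The AVE and CR values reported in the reliability table can be cross-checked against the loadings above. The sketch below assumes the textbook formulas for standardized indicators (AVE as the mean squared loading; CR as the squared sum of loadings over that quantity plus the summed error variances 1 − λ²); the section does not state which formulas or software the authors used, so treat this as an illustrative recomputation rather than their procedure.

```python
from math import fsum

# Standardized factor loadings transcribed from the measurement table above.
LOADINGS = {
    "Sensing Autonomy": [0.864, 0.832, 0.873, 0.860],
    "Thought Autonomy": [0.792, 0.833, 0.828, 0.821],
    "Action Autonomy": [0.862, 0.885, 0.881, 0.891],
    "Information-seeking Gratification": [0.863, 0.845, 0.846],
    "Social interaction Gratification": [0.751, 0.766, 0.740, 0.789, 0.775],
    "Entertainment Gratification": [0.865, 0.852, 0.749],
    "Usage Intention": [0.894, 0.894, 0.832],
}


def average_variance_extracted(loadings):
    """AVE: the mean of the squared standardized loadings."""
    return fsum(lam * lam for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    assuming each indicator's error variance is 1 - loading^2."""
    squared_sum = fsum(loadings) ** 2
    error_variance = fsum(1 - lam * lam for lam in loadings)
    return squared_sum / (squared_sum + error_variance)


if __name__ == "__main__":
    for construct, loadings in LOADINGS.items():
        ave = average_variance_extracted(loadings)
        cr = composite_reliability(loadings)
        print(f"{construct:<34} AVE = {ave:.3f}  CR = {cr:.3f}")
```

Computed this way, every construct's AVE and CR lands within 0.001 of the tabulated value; the small residual gaps (for example, 0.890 versus 0.891 for the CR of thought autonomy) are consistent with the loadings having been rounded to three decimals.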

| Profile | Category | Value |
|---|---|---|
| Undergraduate (67.75%) | Age | 17–22 |
| | Female | 51.18% |
| | Male | 48.82% |
| | First-year | 15.63% |
| | Second-year | 18.85% |
| | Third-year | 35.33% |
| | Fourth-year | 30.19% |
| Master’s (25.50%) | Age | 21–25 |
| | Female | 48.21% |
| | Male | 51.79% |
| | First-year | 55.36% |
| | Second-year | 32.14% |
| | Third-year | 12.50% |
| PhD students (6.75%) | Age | 22–29 |
| | Female | 50.00% |
| | Male | 50.00% |
| | First-year | 71.05% |
| | Second-year | 18.42% |
| | Third-year or above | 10.53% |
| Gender | Female | 50.37% |
| | Male | 49.63% |
| Experience of using AI applications other than AI educators | Yes | 84.84% |
| | No | 15.16% |
| Experience in participating in online education | Frequently participate | 89.01% |
| | Participated, but not much | 6.98% |
| | Almost never participated | 4.01% |
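
Each group of mutually exclusive categories in the profile table should total 100%. A minimal consistency check, assuming the groupings below (the group names are labels introduced here, not terms from the paper), is:

```python
from math import fsum, isclose

# Mutually exclusive percentage groups transcribed from the profile table above.
PROFILE_BLOCKS = {
    "Degree level": [67.75, 25.50, 6.75],
    "Undergraduate gender": [51.18, 48.82],
    "Undergraduate year": [15.63, 18.85, 35.33, 30.19],
    "Master's gender": [48.21, 51.79],
    "Master's year": [55.36, 32.14, 12.50],
    "PhD gender": [50.00, 50.00],
    "PhD year": [71.05, 18.42, 10.53],
    "Overall gender": [50.37, 49.63],
    "Other AI application experience": [84.84, 15.16],
    "Online education experience": [89.01, 6.98, 4.01],
}


def blocks_not_summing_to_100(blocks, tolerance=0.01):
    """Return the names of groups whose shares do not total 100% within `tolerance`."""
    return [name for name, shares in blocks.items()
            if not isclose(fsum(shares), 100.0, abs_tol=tolerance)]


if __name__ == "__main__":
    print(blocks_not_summing_to_100(PROFILE_BLOCKS))
```

For the values as tabulated, every group sums to 100.00%, so the check returns an empty list.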

| Construct | Mean | SD | Cronbach’s Alpha | CR | AVE |
|---|---|---|---|---|---|
| Sensing Autonomy | 5.17 | 1.07 | 0.880 | 0.917 | 0.735 |
| Thought Autonomy | 5.55 | 0.95 | 0.836 | 0.891 | 0.670 |
| Action Autonomy | 5.43 | 1.11 | 0.903 | 0.932 | 0.774 |
| Information-seeking Gratification | 6.04 | 0.81 | 0.810 | 0.888 | 0.725 |
| Social interaction Gratification | 5.73 | 0.81 | 0.822 | 0.876 | 0.585 |
| Entertainment Gratification | 5.28 | 1.10 | 0.763 | 0.863 | 0.678 |
| Usage Intention | 5.79 | 0.95 | 0.845 | 0.906 | 0.764 |
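
Reliability and convergent validity are usually judged against rule-of-thumb cut-offs: Cronbach's alpha and CR of at least 0.70, and AVE of at least 0.50. The section does not state which cut-offs the authors applied, so the thresholds below are an assumption; the sketch simply screens the table against them.

```python
# Statistics from the table above: construct -> (Cronbach's alpha, CR, AVE).
STATS = {
    "Sensing Autonomy": (0.880, 0.917, 0.735),
    "Thought Autonomy": (0.836, 0.891, 0.670),
    "Action Autonomy": (0.903, 0.932, 0.774),
    "Information-seeking Gratification": (0.810, 0.888, 0.725),
    "Social interaction Gratification": (0.822, 0.876, 0.585),
    "Entertainment Gratification": (0.763, 0.863, 0.678),
    "Usage Intention": (0.845, 0.906, 0.764),
}

# Assumed rule-of-thumb cut-offs (not stated in this section).
MIN_ALPHA, MIN_CR, MIN_AVE = 0.70, 0.70, 0.50


def failing_constructs(stats):
    """Map each construct that misses a cut-off to the list of statistics it fails."""
    failures = {}
    for construct, (alpha, cr, ave) in stats.items():
        checks = (("alpha", alpha, MIN_ALPHA), ("CR", cr, MIN_CR), ("AVE", ave, MIN_AVE))
        problems = [label for label, value, cutoff in checks if value < cutoff]
        if problems:
            failures[construct] = problems
    return failures


if __name__ == "__main__":
    print(failing_constructs(STATS))
```

Every construct clears all three cut-offs; the tightest margins are the alpha of entertainment gratification (0.763) and the AVE of social interaction gratification (0.585).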

| Construct | SA | TA | AA | IG | SG | EG | UI |
|---|---|---|---|---|---|---|---|
| Sensing Autonomy (SA) | 0.857 | | | | | | |
| Thought Autonomy (TA) | 0.628 | 0.819 | | | | | |
| Action Autonomy (AA) | 0.549 | 0.547 | 0.880 | | | | |
| Information-seeking Gratification (IG) | 0.266 | 0.372 | 0.451 | 0.851 | | | |
| Social interaction Gratification (SG) | 0.520 | 0.491 | 0.652 | 0.652 | 0.765 | | |
| Entertainment Gratification (EG) | 0.512 | 0.345 | 0.376 | 0.376 | 0.534 | 0.823 | |
| Usage Intention (UI) | 0.450 | 0.446 | 0.689 | 0.689 | 0.699 | 0.567 | 0.874 |
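
The diagonal of this matrix matches the square root of each construct's AVE to three decimals, which suggests it is a Fornell–Larcker table: discriminant validity holds when each construct's √AVE exceeds its correlations with every other construct. The sketch below, assuming that reading, checks both the diagonal and the criterion.

```python
from math import sqrt

CONSTRUCTS = ["SA", "TA", "AA", "IG", "SG", "EG", "UI"]

# AVE values from the reliability table.
AVE = {"SA": 0.735, "TA": 0.670, "AA": 0.774, "IG": 0.725,
       "SG": 0.585, "EG": 0.678, "UI": 0.764}

# Lower triangle of the matrix above, diagonal included (row i has i + 1 entries).
FL_LOWER = [
    [0.857],
    [0.628, 0.819],
    [0.549, 0.547, 0.880],
    [0.266, 0.372, 0.451, 0.851],
    [0.520, 0.491, 0.652, 0.652, 0.765],
    [0.512, 0.345, 0.376, 0.376, 0.534, 0.823],
    [0.450, 0.446, 0.689, 0.689, 0.699, 0.567, 0.874],
]


def diagonal_matches_sqrt_ave(lower, names, ave, tol=0.001):
    """True if every diagonal entry equals sqrt(AVE) within `tol`."""
    return all(abs(lower[i][i] - sqrt(ave[name])) < tol for i, name in enumerate(names))


def fornell_larcker_violations(lower, names):
    """Pairs whose absolute correlation is not below both constructs' sqrt(AVE)."""
    violations = []
    for i in range(len(lower)):
        for j in range(i):
            r = abs(lower[i][j])
            if r >= min(lower[i][i], lower[j][j]):
                violations.append((names[i], names[j], r))
    return violations


if __name__ == "__main__":
    print(diagonal_matches_sqrt_ave(FL_LOWER, CONSTRUCTS, AVE))
    print(fornell_larcker_violations(FL_LOWER, CONSTRUCTS))
```

With the values as tabulated, the diagonal check passes and no pair violates the criterion; the closest case is SG with UI (0.699 against √AVE of SG, 0.765).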

| Construct | SA | TA | AA | IG | SG | EG | UI |
|---|---|---|---|---|---|---|---|
| Sensing Autonomy (SA) | | | | | | | |
| Thought Autonomy (TA) | 0.733 | | | | | | |
| Action Autonomy (AA) | 0.615 | 0.629 | | | | | |
| Information-seeking Gratification (IG) | 0.313 | 0.451 | 0.526 | | | | |
| Social interaction Gratification (SG) | 0.610 | 0.592 | 0.469 | 0.801 | | | |
| Entertainment Gratification (EG) | 0.617 | 0.415 | 0.573 | 0.464 | 0.661 | | |
| Usage Intention (UI) | 0.520 | 0.530 | 0.541 | 0.831 | 0.838 | 0.695 | |
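
A heterotrait-monotrait (HTMT) matrix like this one is commonly screened against the 0.85 cut-off proposed by Henseler, Ringle and Sarstedt (2015), with 0.90 as a more lenient alternative. The section does not say which cut-off was used, so the default of 0.85 below is an assumption.

```python
CONSTRUCTS = ["SA", "TA", "AA", "IG", "SG", "EG", "UI"]

# Lower triangle of the HTMT matrix above (row i holds ratios with constructs 0..i-1).
HTMT_LOWER = [
    [],
    [0.733],
    [0.615, 0.629],
    [0.313, 0.451, 0.526],
    [0.610, 0.592, 0.469, 0.801],
    [0.617, 0.415, 0.573, 0.464, 0.661],
    [0.520, 0.530, 0.541, 0.831, 0.838, 0.695],
]


def htmt_pairs(lower, names):
    """Yield (row construct, column construct, ratio) for every off-diagonal cell."""
    for i, row in enumerate(lower):
        for j, ratio in enumerate(row):
            yield names[i], names[j], ratio


def htmt_violations(lower, names, threshold=0.85):
    """Pairs whose HTMT ratio reaches the (assumed) discriminant-validity cut-off."""
    return [(a, b, r) for a, b, r in htmt_pairs(lower, names) if r >= threshold]


if __name__ == "__main__":
    largest = max(htmt_pairs(HTMT_LOWER, CONSTRUCTS), key=lambda pair: pair[2])
    print("largest HTMT:", largest)
    print("violations at 0.85:", htmt_violations(HTMT_LOWER, CONSTRUCTS))
```

The largest ratio is UI with SG (0.838), just under 0.85, so no pair is flagged at either cut-off; at a stricter 0.80 the SG–IG, UI–IG and UI–SG pairs would be flagged.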

| Relationships | Total Indirect Effect (CI) | Indirect Effect (CI) |
|---|---|---|
| Sensing autonomy → Information seeking → Usage | [0.055, 0.237] | [−0.068, 0.011] |
| Sensing autonomy → Social interaction → Usage | | [0.046, 0.134] |
| Sensing autonomy → Entertainment → Usage | | [0.045, 0.132] |
| Thought autonomy → Information seeking → Usage | [0.046, 0.221] | [0.036, 0.136] |
| Thought autonomy → Social interaction → Usage | | [0.028, 0.107] |
| Thought autonomy → Entertainment → Usage | | [−0.045, 0.014] |
| Action autonomy → Information seeking → Usage | [0.150, 0.331] | [0.092, 0.200] |
| Action autonomy → Social interaction → Usage | | [−0.002, 0.060] |
| Action autonomy → Entertainment → Usage | | [0.033, 0.111] |
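
Under the resampling approach of Preacher and Hayes cited in the references, an indirect effect is usually treated as significant when its bootstrap confidence interval excludes zero. The sketch below applies that rule, assuming the intervals are bootstrap CIs and that each total indirect effect is reported once per antecedent (on the first row of its group).

```python
# Specific indirect-effect CIs: (antecedent, mediator) -> (lower, upper).
SPECIFIC_CIS = {
    ("Sensing autonomy", "Information seeking"): (-0.068, 0.011),
    ("Sensing autonomy", "Social interaction"): (0.046, 0.134),
    ("Sensing autonomy", "Entertainment"): (0.045, 0.132),
    ("Thought autonomy", "Information seeking"): (0.036, 0.136),
    ("Thought autonomy", "Social interaction"): (0.028, 0.107),
    ("Thought autonomy", "Entertainment"): (-0.045, 0.014),
    ("Action autonomy", "Information seeking"): (0.092, 0.200),
    ("Action autonomy", "Social interaction"): (-0.002, 0.060),
    ("Action autonomy", "Entertainment"): (0.033, 0.111),
}

# Total indirect-effect CIs, one per antecedent.
TOTAL_CIS = {
    "Sensing autonomy": (0.055, 0.237),
    "Thought autonomy": (0.046, 0.221),
    "Action autonomy": (0.150, 0.331),
}


def excludes_zero(ci):
    """True when the interval lies entirely above or entirely below zero."""
    lower, upper = ci
    return lower > 0 or upper < 0


def non_significant(cis):
    """Sorted keys whose interval contains zero."""
    return sorted(key for key, ci in cis.items() if not excludes_zero(ci))


if __name__ == "__main__":
    print("non-significant specific paths:", non_significant(SPECIFIC_CIS))
    print("non-significant totals:", non_significant(TOTAL_CIS))
```

Read this way, all three total indirect effects are significant, while three specific paths are not: sensing autonomy through information seeking, thought autonomy through entertainment, and action autonomy through social interaction.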

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Niu, W.; Zhang, W.; Zhang, C.; Chen, X. The Role of Artificial Intelligence Autonomy in Higher Education: A Uses and Gratification Perspective. Sustainability 2024, 16, 1276. https://doi.org/10.3390/su16031276

Niu W, Zhang W, Zhang C, Chen X. The Role of Artificial Intelligence Autonomy in Higher Education: A Uses and Gratification Perspective. Sustainability. 2024; 16(3):1276. https://doi.org/10.3390/su16031276

Chicago/Turabian Style: Niu, Wanshu, Wuke Zhang, Chuanxia Zhang, and Xiaofeng Chen. 2024. "The Role of Artificial Intelligence Autonomy in Higher Education: A Uses and Gratification Perspective" Sustainability 16, no. 3: 1276. https://doi.org/10.3390/su16031276

APA Style: Niu, W., Zhang, W., Zhang, C., & Chen, X. (2024). The Role of Artificial Intelligence Autonomy in Higher Education: A Uses and Gratification Perspective. Sustainability, 16(3), 1276. https://doi.org/10.3390/su16031276