A Hybrid Model for Household Waste Sorting (HWS) Based on an Ensemble of Convolutional Neural Networks

Abstract

1. Introduction

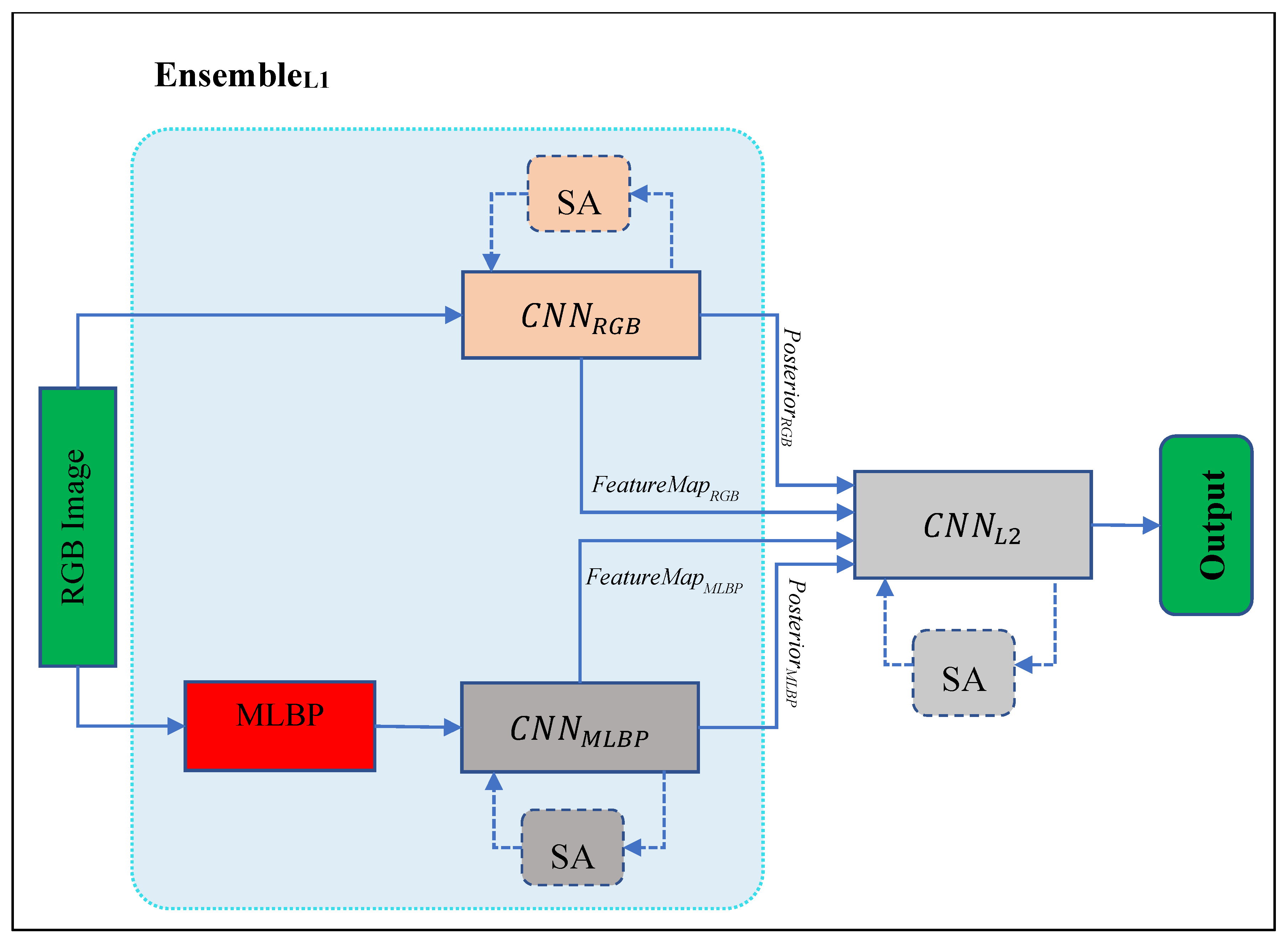

- The introduction of a hierarchical ensemble model for accurate detection of various types of Household Waste (HW).

- The utilization of local and global features of HW images to enhance detection accuracy.

- The application of the Simulated Annealing (SA) algorithm for optimizing the learning components of the hierarchical ensemble system.

- The provision of a generalizable method for detecting various types of HW.

- The use of a Convolutional Neural Network (CNN) model at the second level of the proposed ensemble system to fuse the first-level results, in place of conventional fusion methods such as voting and averaging.

- The utilization of an MLBP feature selection approach for a more precise description of the HW image features.

- The application of the SA algorithm for fine-tuning the hyperparameters of the CNN models and optimizing the system’s performance.

2. Research Background

3. Research Methodology

3.1. Dataset

- Plastic (482 samples)

- Glass (501 samples)

- Metal (410 samples)

- Paper (594 samples)

- Cardboard (403 samples)

- Organic materials (137 samples)
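The class sizes listed above are uneven, which can be quantified with a few lines of arithmetic. The following is a minimal sketch that only uses the sample counts from the list; the inverse-frequency weighting is an illustrative assumption, not a step described in the paper.

```python
# Sample counts per class, copied from the list above.
class_counts = {
    "Plastic": 482,
    "Glass": 501,
    "Metal": 410,
    "Paper": 594,
    "Cardboard": 403,
    "Organic materials": 137,
}

total = sum(class_counts.values())

# Share of the dataset that each class represents.
shares = {name: count / total for name, count in class_counts.items()}

# Ratio between the largest and the smallest class.
imbalance_ratio = max(class_counts.values()) / min(class_counts.values())

# Inverse-frequency class weights (hypothetical choice for illustration only):
# weight = total / (number_of_classes * class_count).
class_weights = {
    name: total / (len(class_counts) * count)
    for name, count in class_counts.items()
}

for name in class_counts:
    print(f"{name:18s} share={shares[name]:.3f} weight={class_weights[name]:.3f}")
print("total samples:", total)
print("imbalance ratio:", round(imbalance_ratio, 2))
```

The largest class (Paper) has more than four times as many samples as the smallest (Organic materials), which is why per-class metrics such as recall and precision are worth reporting alongside accuracy.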

3.2. Proposed Method

- Image representation;

- Local detection based on first-level CNN models;

- Determination of the final output by aggregating the first-level results with the second-level CNN.

3.2.1. Image Representation

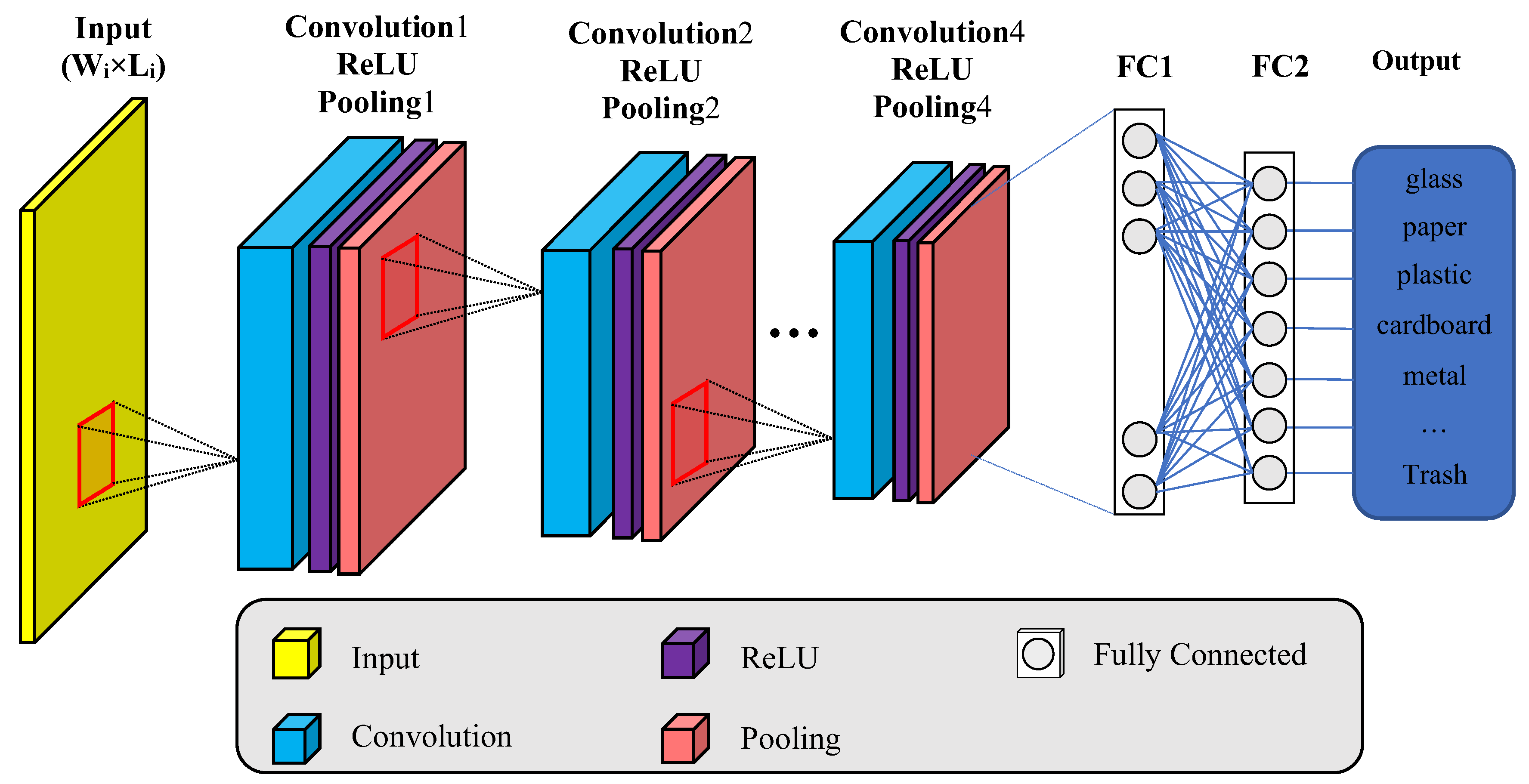

3.2.2. Local Detection Based on First-Level CNN Models

- Convolution layers C1 through C4: The parameters for each layer are the convolution filter’s width, length, and number of filters. To restrict the search space, the filter length and width are constrained to be equal; empirical findings suggest that square convolution filters can produce acceptable outcomes. The filter size can be assigned an integer in the range [3, 9] based on the dimensions of the input instances. The number of filters can be assigned an integer in the range [8, 128] in steps of 8.

- Pooling function type for layers P1–P4: Each of the pooling layers P1–P4 can use the max, average, or global pooling function.

- Number of neurons in layer FC1: This parameter is defined as an integer in the range of [30, 100].
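The search space described by the three parameter groups above can be written down explicitly. This is a minimal sketch under the stated ranges only; the dictionary layout and the names `random_configuration` and `search_space_size` are hypothetical and not taken from the paper.

```python
import random

# Ranges taken from the parameter description above.
FILTER_SIZES = range(3, 10)                    # square filters, side length in [3, 9]
FILTER_COUNTS = range(8, 129, 8)               # 8, 16, ..., 128
POOLING_TYPES = ("max", "average", "global")   # options for P1-P4
FC1_NEURONS = range(30, 101)                   # integer in [30, 100]
NUM_BLOCKS = 4                                 # C1-C4 and P1-P4


def random_configuration(rng):
    """Draw one candidate configuration uniformly at random (hypothetical layout)."""
    return {
        "conv": [
            {"size": rng.choice(FILTER_SIZES), "filters": rng.choice(FILTER_COUNTS)}
            for _ in range(NUM_BLOCKS)
        ],
        "pool": [rng.choice(POOLING_TYPES) for _ in range(NUM_BLOCKS)],
        "fc1": rng.choice(FC1_NEURONS),
    }


def search_space_size():
    """Count the distinct configurations in the space defined above."""
    per_conv = len(FILTER_SIZES) * len(FILTER_COUNTS)
    per_pool = len(POOLING_TYPES)
    return per_conv ** NUM_BLOCKS * per_pool ** NUM_BLOCKS * len(FC1_NEURONS)


config = random_configuration(random.Random(0))
print(config)
print("configurations:", search_space_size())
```

Under these ranges the space holds roughly 9 × 10^11 configurations, which is far too many to evaluate exhaustively and motivates a stochastic search.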

1. Initialization:
   - Define the initial solution (candidate configuration) for the optimization problem.
   - Set a high initial temperature that allows for exploration of the search space.
   - Define a cooling schedule that determines how the temperature will decrease over time.
2. Iteration Loop:
   - This loop continues until a stopping criterion (zero fitness or a certain number of iterations) is met.
3. Generate Neighboring Solution:
   - Create a slight modification (perturbation) of the current solution. This could involve swapping elements, adding/removing elements, or making small adjustments to existing values.
4. Calculate Energy Difference:
   - Evaluate the difference in objective function value (Equation (2)) between the current solution and the neighboring solution.
5. Acceptance Probability:
   - Use the Metropolis criterion to determine whether to accept the neighboring solution. This involves a probability function based on the temperature and the fitness difference.
     - If the neighboring solution improves the objective function (negative difference), it is always accepted.
     - If the neighboring solution worsens the objective function (positive difference), it is still accepted with a probability that decreases as the temperature cools.
6. Update Solution:
   - If the neighboring solution is accepted, it becomes the current solution.
7. Decrease Temperature:
   - Apply the cooling schedule to decrease the temperature.
8. Repeat:
   - Go back to step 2 and continue iterating until the stopping criterion is met.
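The steps above can be sketched as a generic simulated annealing loop. This is a minimal illustration, not the authors' implementation: the geometric cooling schedule, the parameter names (`t0`, `cooling`, `max_iters`), and the toy objective are assumptions, and the toy objective stands in for Equation (2), which is not reproduced here. The Metropolis criterion accepts a worse neighbor with probability exp(-delta / T), where delta is the increase in the objective and T is the current temperature.

```python
import math
import random


def simulated_annealing(initial, objective, neighbor,
                        t0=1.0, cooling=0.95, max_iters=1000, rng=None):
    """Minimize `objective` with simulated annealing (illustrative sketch)."""
    rng = rng or random.Random()
    # Step 1: initial solution, initial temperature, cooling schedule.
    current, current_cost = initial, objective(initial)
    best, best_cost = current, current_cost
    temperature = t0
    # Steps 2 and 8: iterate until zero fitness or the iteration budget is reached.
    for _ in range(max_iters):
        if best_cost == 0:
            break
        # Step 3: perturb the current solution.
        candidate = neighbor(current, rng)
        # Step 4: difference in objective value.
        candidate_cost = objective(candidate)
        delta = candidate_cost - current_cost
        # Step 5: Metropolis criterion.
        if delta < 0 or rng.random() < math.exp(-delta / temperature):
            # Step 6: the accepted neighbor becomes the current solution.
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
        # Step 7: geometric cooling (assumed schedule), floored to avoid division by zero.
        temperature = max(temperature * cooling, 1e-12)
    return best, best_cost


# Toy problem (hypothetical): find the integer x that minimizes (x - 3)^2.
def toy_objective(x):
    return (x - 3) ** 2


def toy_neighbor(x, rng):
    return x + rng.choice((-1, 1))


best, best_cost = simulated_annealing(20, toy_objective, toy_neighbor,
                                      rng=random.Random(42))
print(best, best_cost)
```

For hyperparameter tuning, `initial` would be a candidate CNN configuration, `neighbor` would change one hyperparameter within its allowed range, and `objective` would train and evaluate the resulting model, which makes each iteration far more expensive than in this toy problem.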

3.2.3. Determining the Final Output Based on Aggregating Results by the Second-Level CNN

- Posterior Probability Vector of the CNNRGB Model: This feature set is described as a vector whose length is equal to the number of target classes and is obtained from the output layer of the CNNRGB model. Each numerical value in this vector corresponds to one of the target classes in the problem and indicates the probability of the input sample belonging to that target class.

- Posterior Probability Vector of the CNNMLBP Model: Similarly, this vector is obtained through the output layer of the CNNMLBP model and reflects the knowledge gained by this learning model in distinguishing types of waste based on the MLBP matrices of images.

- Features Extracted from the MLBP Matrix: This is the set of features that the CNNMLBP model uses to describe each of its input samples. It is obtained from the activation weights of the FC1 layer in the CNNMLBP model.

- Features Extracted from the RGB Matrix: Similarly, this feature set is obtained from the activation weights of the FC1 layer in the CNNRGB model. These features describe the input samples based on the RGB matrix of the images.
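The four feature sets above together form the input to the second-level CNN. The sketch below assumes they are simply concatenated into one vector in the order listed; the text does not specify how they are arranged, so the function name `build_fusion_input`, the ordering, and the example sizes are hypothetical.

```python
def build_fusion_input(post_rgb, post_mlbp, fc1_mlbp, fc1_rgb):
    """Concatenate the four feature sets into one input vector (assumed layout)."""
    if len(post_rgb) != len(post_mlbp):
        # Both posterior vectors have one entry per target class.
        raise ValueError("posterior vectors must have the same length")
    return list(post_rgb) + list(post_mlbp) + list(fc1_mlbp) + list(fc1_rgb)


# Hypothetical example: 6 target classes, 40 and 50 FC1 activations.
num_classes = 6
post_rgb = [1.0 / num_classes] * num_classes    # posterior vector of CNNRGB
post_mlbp = [1.0 / num_classes] * num_classes   # posterior vector of CNNMLBP
fc1_mlbp = [0.0] * 40                           # FC1 features of CNNMLBP
fc1_rgb = [0.0] * 50                            # FC1 features of CNNRGB

fused = build_fusion_input(post_rgb, post_mlbp, fc1_mlbp, fc1_rgb)
print(len(fused))
```

The length of the fused vector is twice the number of classes plus the two FC1 layer widths, so it changes whenever the tuned FC1 sizes change.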

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer | TrashNet Parameter Setting | HGCD Parameter Setting |
|---|---|---|
| Convolution1 (W × H × N) | 9 × 9 × 8 | 9 × 9 × 8 |
| Pooling1 | Max | Average |
| Convolution2 (W × H × N) | 5 × 5 × 24 | 8 × 8 × 16 |
| Pooling2 | Average | Max |
| Convolution3 (W × H × N) | 4 × 4 × 48 | 6 × 6 × 28 |
| Pooling3 | Average | Max |
| Convolution4 (W × H × N) | 3 × 3 × 64 | 4 × 4 × 32 |
| Pooling4 | Global | Max |
| FC1 | 50 | 40 |

| Layer | TrashNet Parameter Setting | HGCD Parameter Setting |
|---|---|---|
| Convolution1 (W × H × N) | 7 × 7 × 8 | 8 × 8 × 8 |
| Pooling1 | Max | Average |
| Convolution2 (W × H × N) | 5 × 5 × 16 | 6 × 6 × 16 |
| Pooling2 | Average | Average |
| Convolution3 (W × H × N) | 5 × 5 × 32 | 4 × 4 × 16 |
| Pooling3 | Max | Average |
| Convolution4 (W × H × N) | 3 × 3 × 40 | 3 × 3 × 32 |
| Pooling4 | Max | Global |
| FC1 | 35 | 30 |

| Layer | TrashNet Parameter Setting | HGCD Parameter Setting |
|---|---|---|
| Convolution1 (W × H × N) | 6 × 6 × 16 | 7 × 7 × 8 |
| Pooling1 | Max | Average |
| Convolution2 (W × H × N) | 5 × 5 × 24 | 5 × 5 × 16 |
| Pooling2 | Max | Max |
| Convolution3 (W × H × N) | 3 × 3 × 24 | 5 × 5 × 24 |
| Pooling3 | Average | Max |
| Convolution4 (W × H × N) | 3 × 3 × 32 | 3 × 3 × 24 |
| Pooling4 | Max | Global |
| FC1 | 30 | 30 |

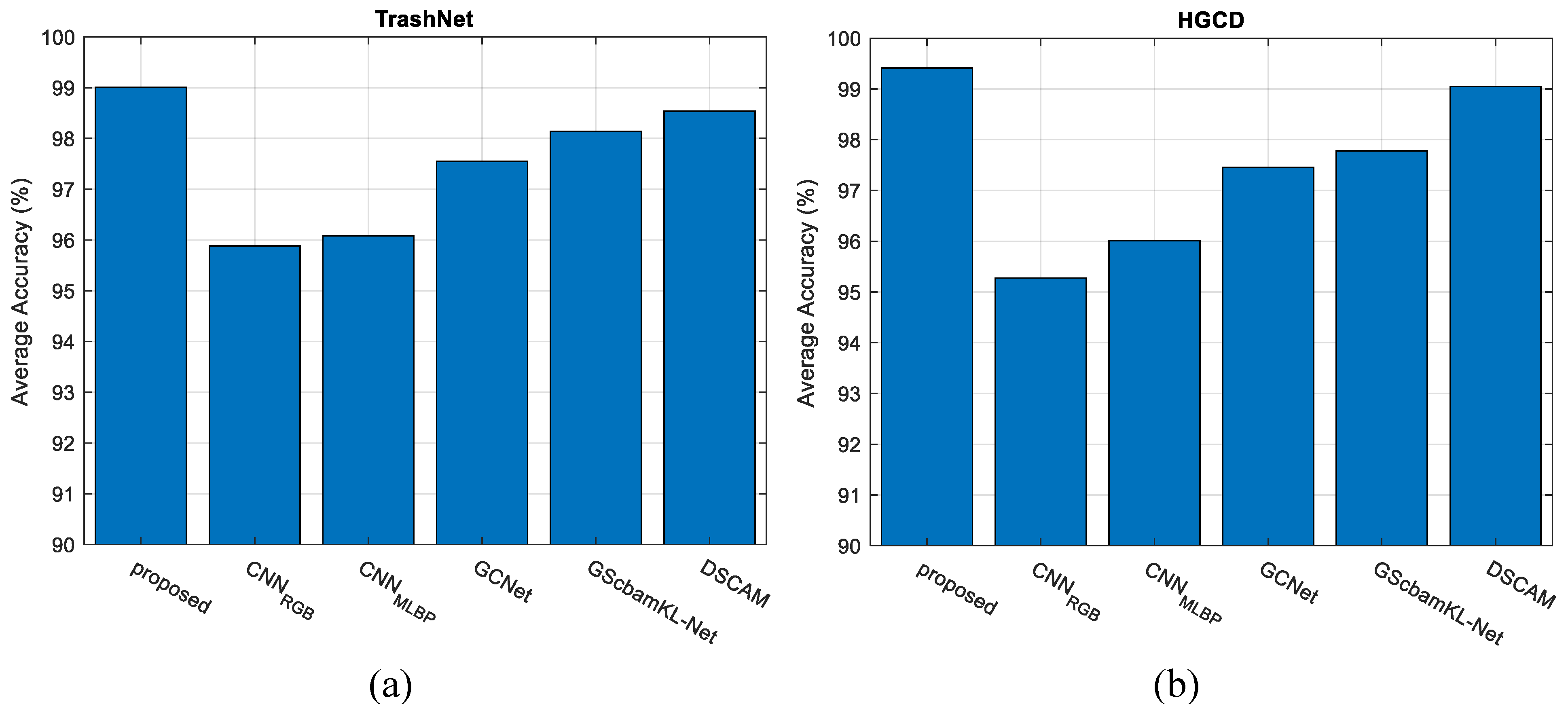

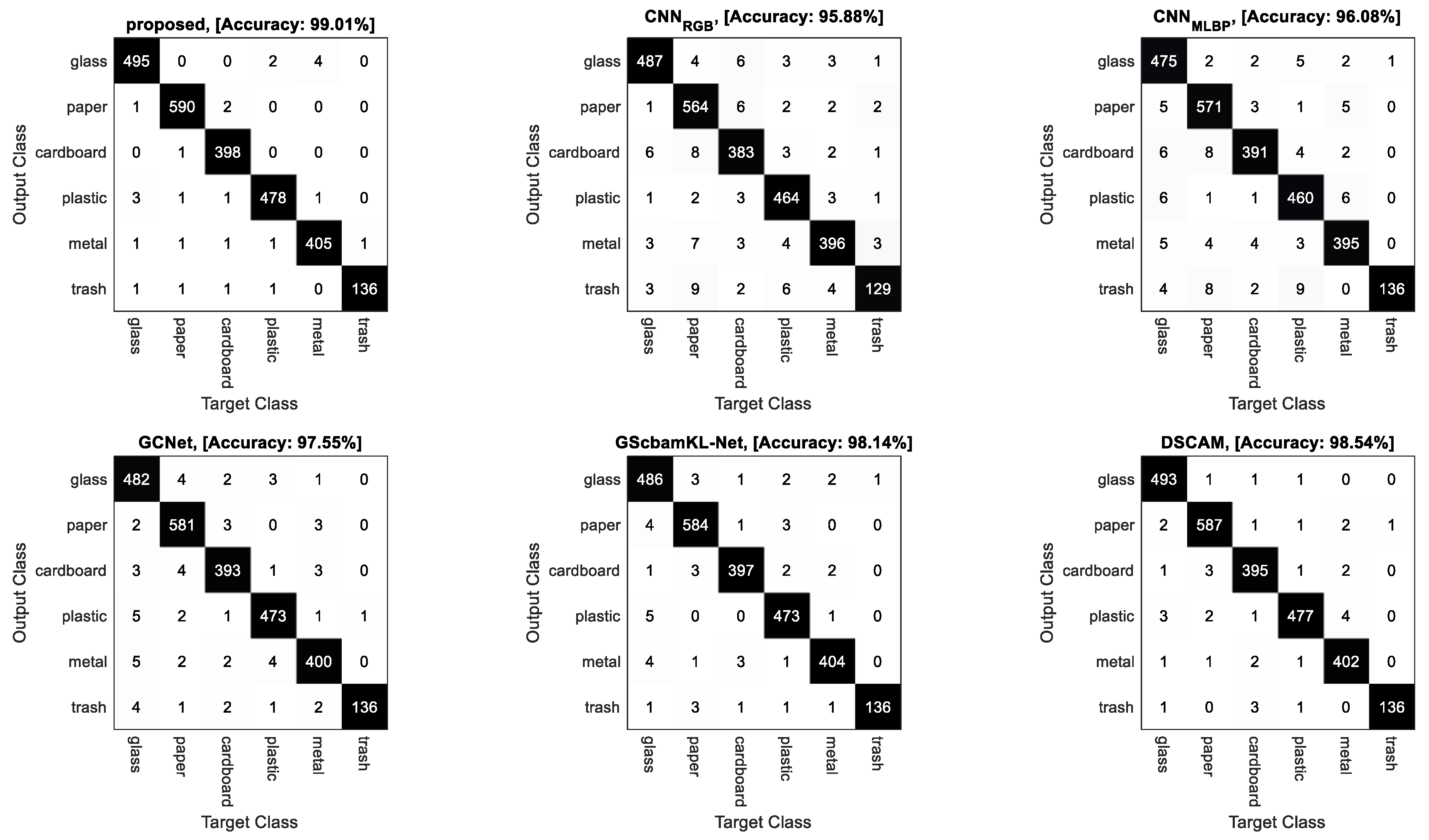

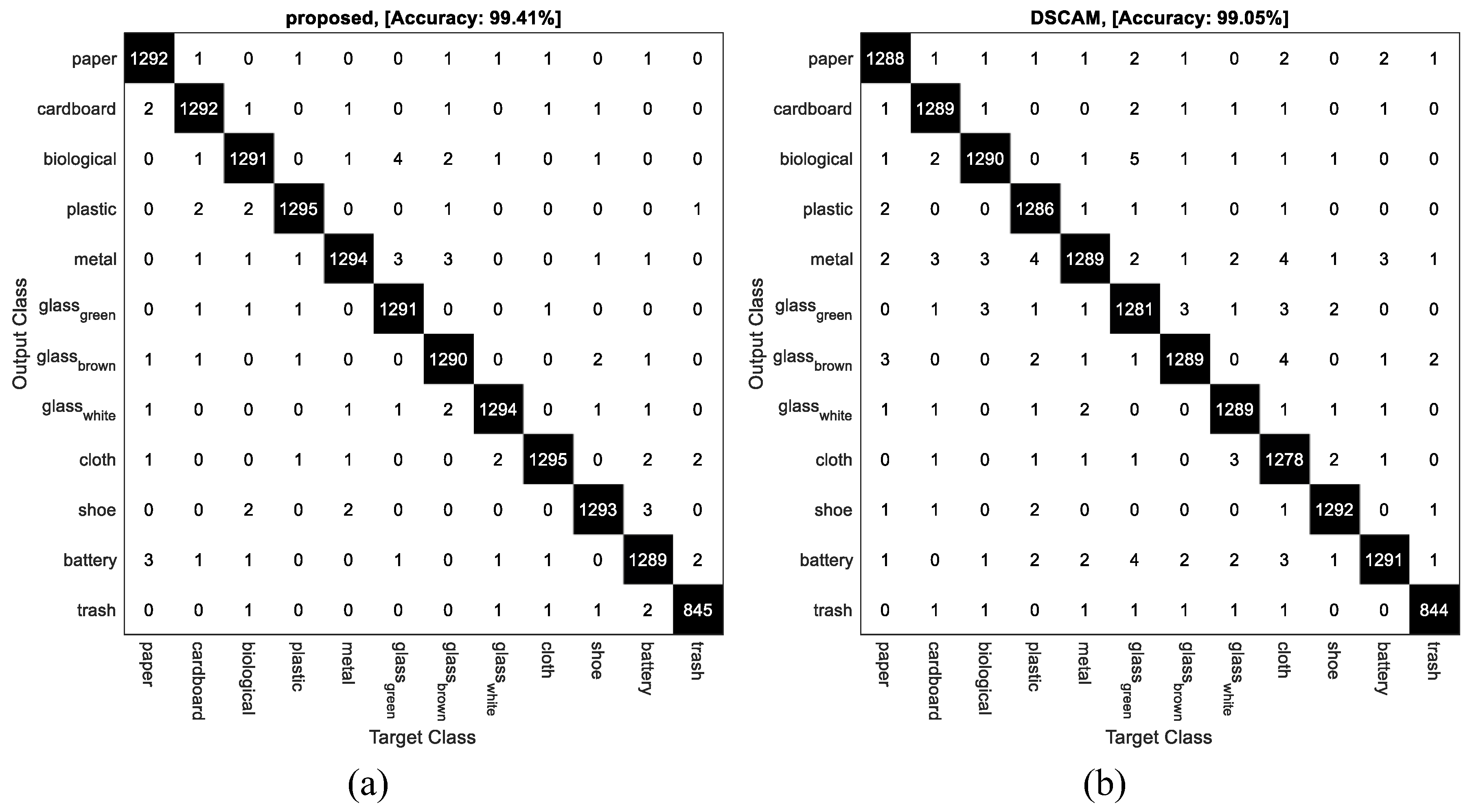

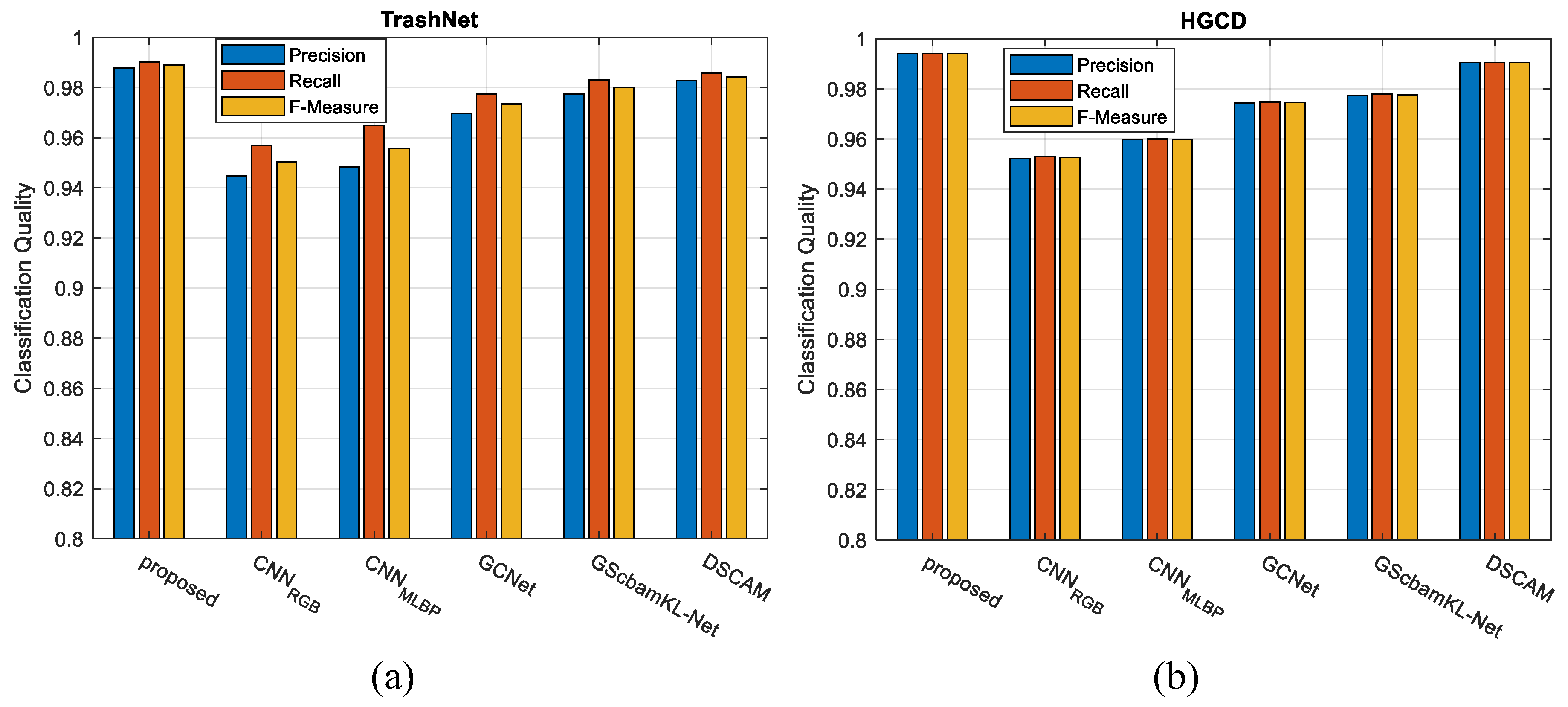

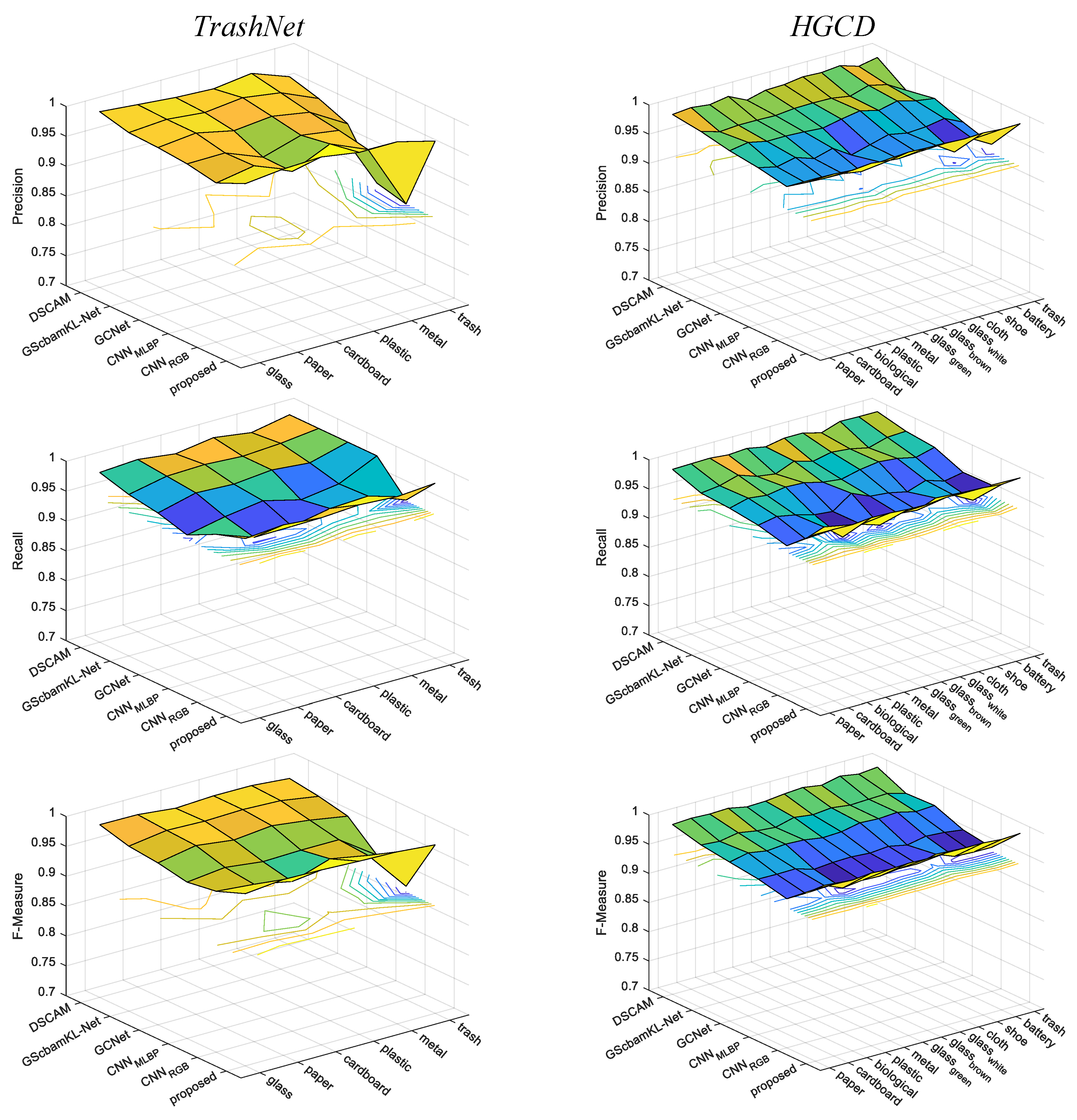

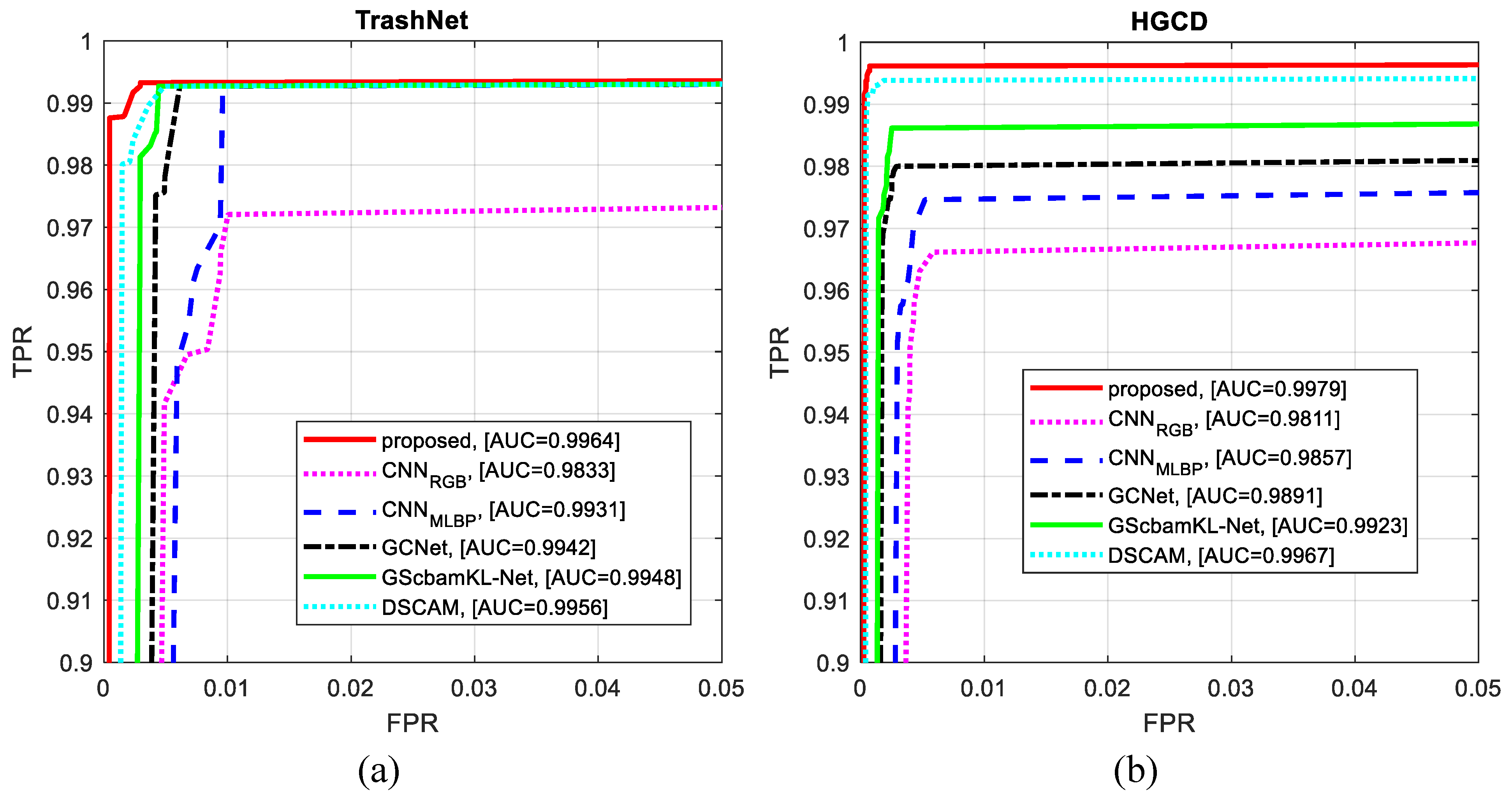

| Dataset | Method | Accuracy (%) | F-Measure | Recall | Precision |
|---|---|---|---|---|---|
| TrashNet | Proposed | 99.0107 | 0.989 | 0.9902 | 0.9879 |
| TrashNet | CNNRGB | 95.8844 | 0.9503 | 0.957 | 0.9447 |
| TrashNet | CNNMLBP | 96.0823 | 0.9557 | 0.965 | 0.9483 |
| TrashNet | GCNet | 97.5465 | 0.9734 | 0.9775 | 0.9697 |
| TrashNet | GScamKL-Net | 98.1401 | 0.9802 | 0.983 | 0.9775 |
| TrashNet | DSCAM | 98.5358 | 0.9843 | 0.9859 | 0.9827 |
| HGCD | Proposed | 99.4125 | 0.9941 | 0.9941 | 0.9941 |
| HGCD | CNNRGB | 95.2739 | 0.9526 | 0.9529 | 0.9523 |
| HGCD | CNNMLBP | 96.0066 | 0.9599 | 0.96 | 0.9598 |
| HGCD | GCNet | 97.4587 | 0.9745 | 0.9747 | 0.9744 |
| HGCD | GScamKL-Net | 97.7822 | 0.9776 | 0.978 | 0.9773 |
| HGCD | DSCAM | 99.0495 | 0.9906 | 0.9906 | 0.9906 |
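The F-Measure column in the table above can be related to the Precision and Recall columns through the harmonic mean, F = 2PR / (P + R). The sketch below applies that standard formula to two rows of the table. It is an assumption that the reported values follow this definition; if the metrics were averaged per class, the reported F-Measure can differ from this calculation in the fourth decimal place.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall of the proposed method, copied from the table above.
trashnet_f = f_measure(precision=0.9879, recall=0.9902)
hgcd_f = f_measure(precision=0.9941, recall=0.9941)

print(f"TrashNet, Proposed: {trashnet_f:.4f}")
print(f"HGCD, Proposed:     {hgcd_f:.4f}")
```

For both rows of the proposed method, the recomputed value agrees with the reported F-Measure to within rounding.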

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, N.; Wang, G.; Jia, D. A Hybrid Model for Household Waste Sorting (HWS) Based on an Ensemble of Convolutional Neural Networks. Sustainability 2024, 16, 6500. https://doi.org/10.3390/su16156500
