Effects of ICT Integration in Teaching Using Learning Activities

Abstract

1. Introduction

2. Materials and Methods

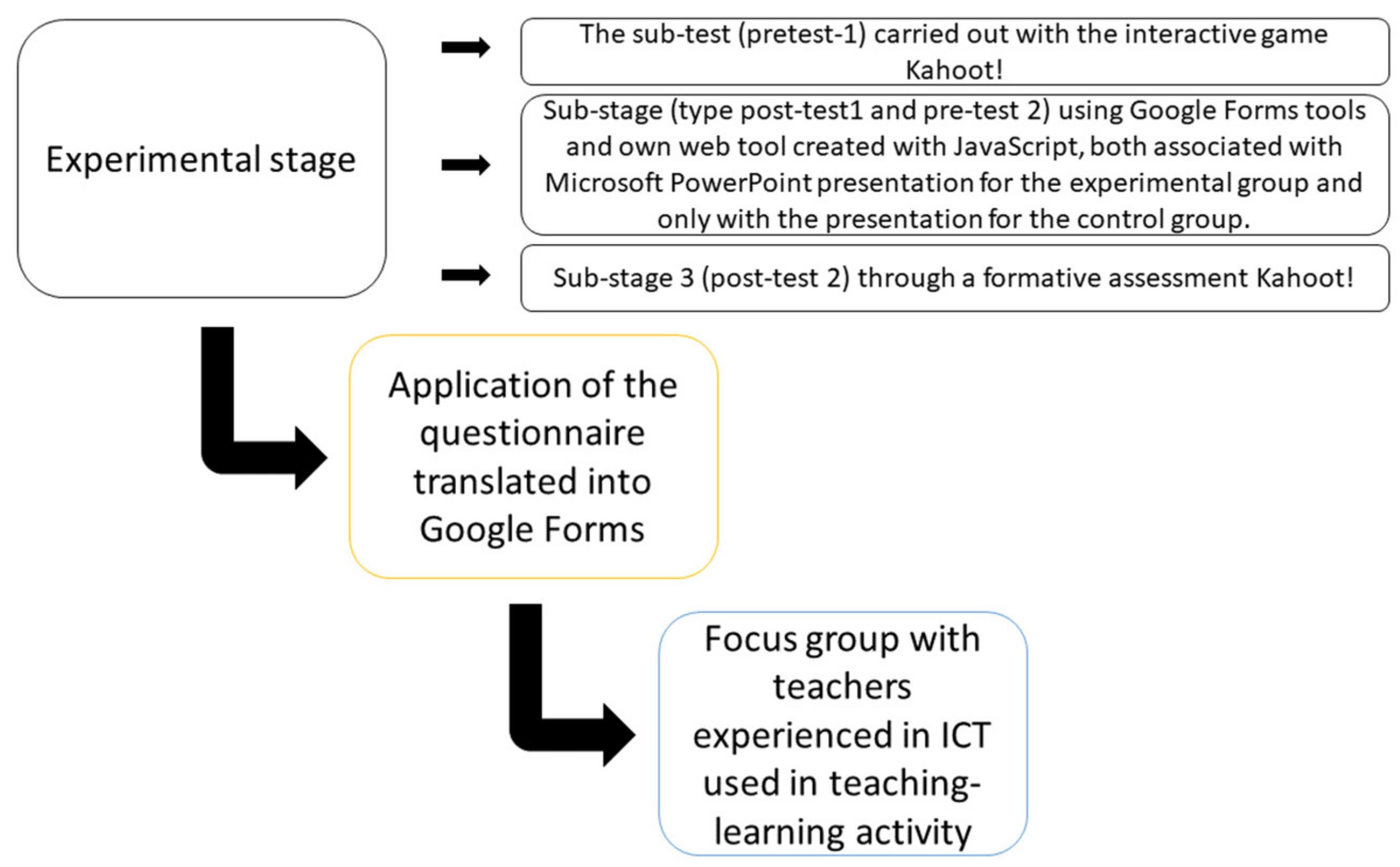

- The ascertainment substage (pre-test 1): measuring the knowledge students had previously acquired, through a standardized assessment with the interactive Kahoot! game.

- The experimental substage (post-test 1 and pre-test 2): implementing the interventions specified above in the teaching–learning sequence and measuring the results through a standardized assessment with the Kahoot! game, to determine whether the interventions have a statistically significant influence on the level of knowledge students assimilate.

- The post-test 2 substage: measuring the knowledge students acquired after the implemented methods were applied, through a standardized assessment with the Kahoot! game.

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

The data and applications are listed below, by category:

- Fifth grade:
  1. Lesson 1 with Google Forms tool:
  2. Lesson 1 with our JavaScript web tool:
  3. Kahoot! game lesson 1 feedback/formative assessment sequence:
  4. Lesson 2 with Google Forms tool:
  5. Lesson 2 with our JavaScript web tool:
  6. Kahoot! game lesson 2 feedback/formative assessment sequence:
  7. Lesson 3 with Google Forms tool:
  8. Lesson 3 with our JavaScript web tool:
  9. Kahoot! game lesson 3 feedback/formative assessment sequence:
  10. Lesson 4 with Google Forms tool:
  11. Lesson 4 with our JavaScript web tool:
  12. Kahoot! game lesson 4 feedback/formative assessment sequence:
  13. Lesson 5 with Google Forms tool:
  14. Lesson 5 with our JavaScript web tool:
  15. Kahoot! game lesson 5 feedback/formative assessment sequence:

- Sixth grade:
  1. Lesson 1 with Google Forms tool:
  2. Lesson 1 with our JavaScript web tool:
  3. Kahoot! game lesson 1 feedback/formative assessment sequence:

- Ninth grade:
  1. Lesson 1 with Google Forms tool:
  2. Lesson 1 with our JavaScript web tool:
  3. Kahoot! game lesson 1 feedback/formative assessment sequence:
  4. Lesson 2 with Google Forms tool:
  5. Lesson 2 with our JavaScript web tool:
  6. Kahoot! game lesson 2 feedback/formative assessment sequence:
  7. Lesson 3 with Google Forms tool:
  8. Lesson 3 with our JavaScript web tool:
  9. Kahoot! game lesson 3 feedback/formative assessment sequence:
  10. Lesson 4 with Google Forms tool:
  11. Lesson 4 with our JavaScript web tool:
  12. Kahoot! game lesson 4 feedback/formative assessment sequence:
  13. Lesson 5 with Google Forms tool:
  14. Lesson 5 with our JavaScript web tool:
  15. Kahoot! game lesson 5 feedback/formative assessment sequence:

- Tenth grade:
  1. Lesson 1 with Google Forms tool:
  2. Lesson 1 with our JavaScript web tool:
  3. Kahoot! game lesson 1 feedback/formative assessment sequence:

- Microsoft PowerPoint lessons (Hydrosphere):

- Results of the Kahoot! games from the feedback/formative assessment sequence:

- Focus group guide (sample of 29 teaching staff):

Conflicts of Interest


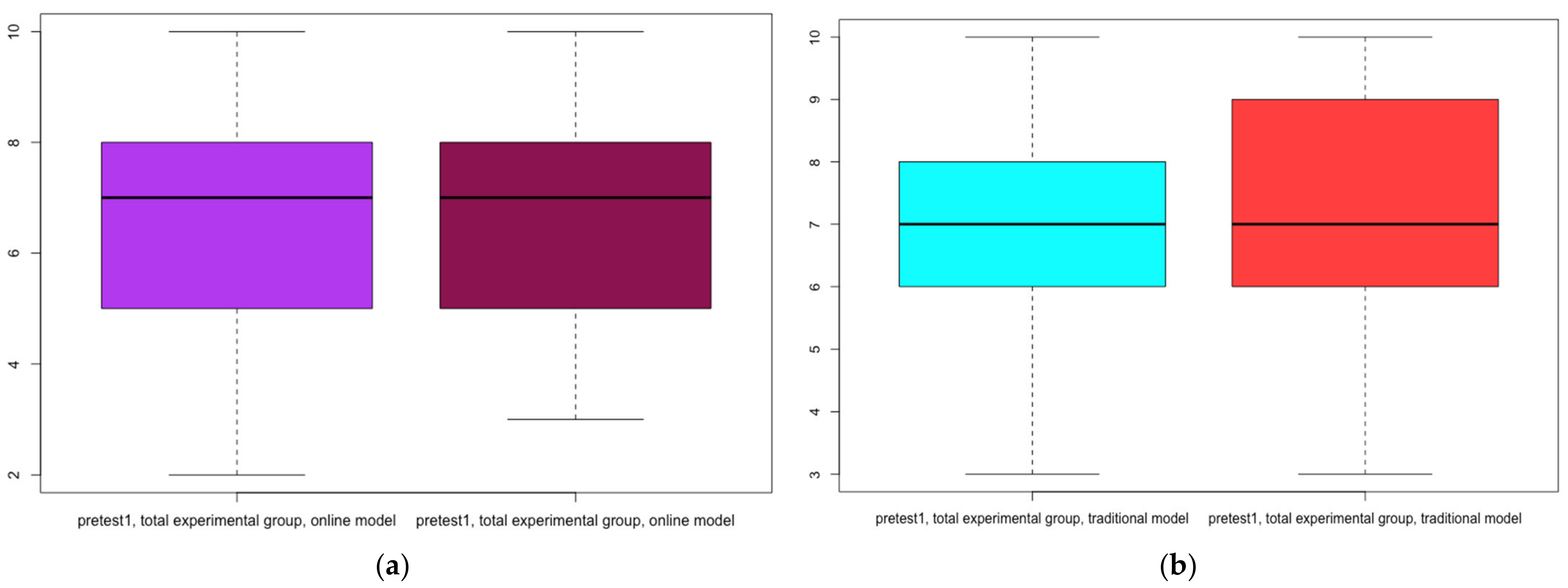

| Group / Type of Test | Intervention Method | Term / Number of Tests | Number of Students | Minimum | Q1 | Median | Q3 | Maximum | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|---|---|
| Experimental group, pre-test 1, online model | Microsoft PowerPoint presentation | 5 weeks / 552 tests | 168 | 2 | 5 | 7 | 8 | 10 | 6.645 | 1.77087 |
| Control group, pre-test 1, online model | Microsoft PowerPoint presentation | 5 weeks / 522 tests | 168 | 3 | 5 | 7 | 8 | 10 | 6.682 | 1.63409 |
| Experimental group, pre-test 1, traditional model | Microsoft PowerPoint presentation | 5 weeks / 699 tests | 168 | 3 | 6 | 7 | 8 | 10 | 7.33 | 1.51520 |
| Control group, pre-test 1, traditional model | Microsoft PowerPoint presentation | 5 weeks / 651 tests | 170 | 3 | 6 | 7 | 9 | 10 | 7.279 | 1.55354 |
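The descriptive columns used in these tables (minimum, Q1, median, Q3, maximum, mean, standard deviation) can be reproduced from raw grades with a short Python sketch. The article does not say how quartiles were computed. The `< 2.2 × 10⁻¹⁶` p-value floor in the statistical tests is how R reports values below machine precision, which suggests (but does not confirm) that R was used. The sketch therefore assumes the `"inclusive"` method of `statistics.quantiles`, which matches R's default `quantile()` (type 7). The function name `describe` and the grades in the usage lines are hypothetical and not data from the study.

```python
import statistics

def describe(scores):
    """Descriptive summary of a list of grades, in the column order of the tables.

    Assumption: quartiles use the 'inclusive' method (R type 7); other
    quartile definitions can shift Q1/Q3 slightly.
    """
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return {
        "min": min(scores),
        "Q1": q1,
        "median": median,
        "Q3": q3,
        "max": max(scores),
        "mean": statistics.mean(scores),
        "sd": statistics.stdev(scores),  # sample SD, n - 1 denominator
    }

# Hypothetical grades on the 1-10 scale, for illustration only.
grades = [2, 5, 7, 7, 8, 8, 9, 10]
print(describe(grades))
```

The sample standard deviation (`stdev`, not `pstdev`) is used because each group is treated as a sample of students rather than a whole population.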

| Group / Type of Test | Intervention Method | p-Value, Shapiro–Wilk Test | p-Value, F Test (Experimental Group/Control Group) | p-Value, Mann–Whitney U Test (Experimental Group/Control Group) | Effect Size r (Experimental Group/Control Group) |
|---|---|---|---|---|---|
| Experimental group, post-test 1/pre-test 2, online model | Google Forms tool and Microsoft PowerPoint presentation | < 2.2 × 10⁻¹⁶ (reported as < 0.0001) | = 1.791 × 10⁻⁵ (reported as < 0.0001) | < 2.2 × 10⁻¹⁶ (reported as < 0.0001) | 0.239, small effect, indicating an association |
| Control group, post-test 1/pre-test 2, online model | Microsoft PowerPoint presentation | = 2.224 × 10⁻¹³ (reported as < 0.0001) | | | |
| Experimental group, post-test 1/pre-test 2, traditional model | Own web game created in JavaScript and Microsoft PowerPoint presentation | < 2.2 × 10⁻¹⁶ (reported as < 0.0001) | = 3.426 × 10⁻¹⁶ (reported as < 0.0001) | < 2.2 × 10⁻¹⁶ (reported as < 0.0001) | 0.745, very large effect, indicating a very strong association |
| Control group, post-test 1/pre-test 2, traditional model | Microsoft PowerPoint presentation | = 3.641 × 10⁻¹⁴ (reported as < 0.0001) | | | |
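The comparison columns pair a Mann–Whitney U test with an effect size r. A common way to obtain r, sketched here as an assumption because the article's exact procedure is not shown in this section, is to take the normal-approximation z statistic of U and divide its absolute value by √N, where N is the total number of observations in both groups. Tied grades are frequent on a 1–10 scale. The sketch therefore gives them average ranks and uses the tie-corrected variance of U, with no continuity correction. The helper names (`average_ranks`, `mann_whitney_r`, `cohen_label`) and the grades in the usage line are hypothetical.

```python
import math

def average_ranks(values):
    """Rank values 1..N, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda idx: values[idx])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the last position of the current run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks

def mann_whitney_r(group_a, group_b):
    """Return (U, z, r) for two independent samples.

    Normal approximation with tie correction (no continuity correction);
    effect size r = |z| / sqrt(N), N = total number of observations.
    """
    pooled = list(group_a) + list(group_b)
    n1, n2 = len(group_a), len(group_b)
    n = n1 + n2
    ranks = average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # U statistic for group_a
    mean_u = n1 * n2 / 2
    # Tie correction: sum of (t^3 - t) over every run of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u - mean_u) / math.sqrt(var_u)
    return u, z, abs(z) / math.sqrt(n)

def cohen_label(r):
    """Cohen's (1988) conventional bands for the correlation-type effect size r."""
    r = abs(r)
    if r >= 0.5:
        return "large"
    if r >= 0.3:
        return "medium"
    if r >= 0.1:
        return "small"
    return "negligible"

# Hypothetical grades, not data from the study.
u, z, r = mann_whitney_r([8, 9, 10, 9, 7], [6, 7, 5, 8, 6])
print(u, round(z, 3), round(r, 3), cohen_label(r))
```

Cohen's bands (0.1, 0.3, 0.5) label 0.239 as small, which agrees with the table. They label 0.745 as large, so the table's stronger wording for that value presumably follows a finer-grained scale.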

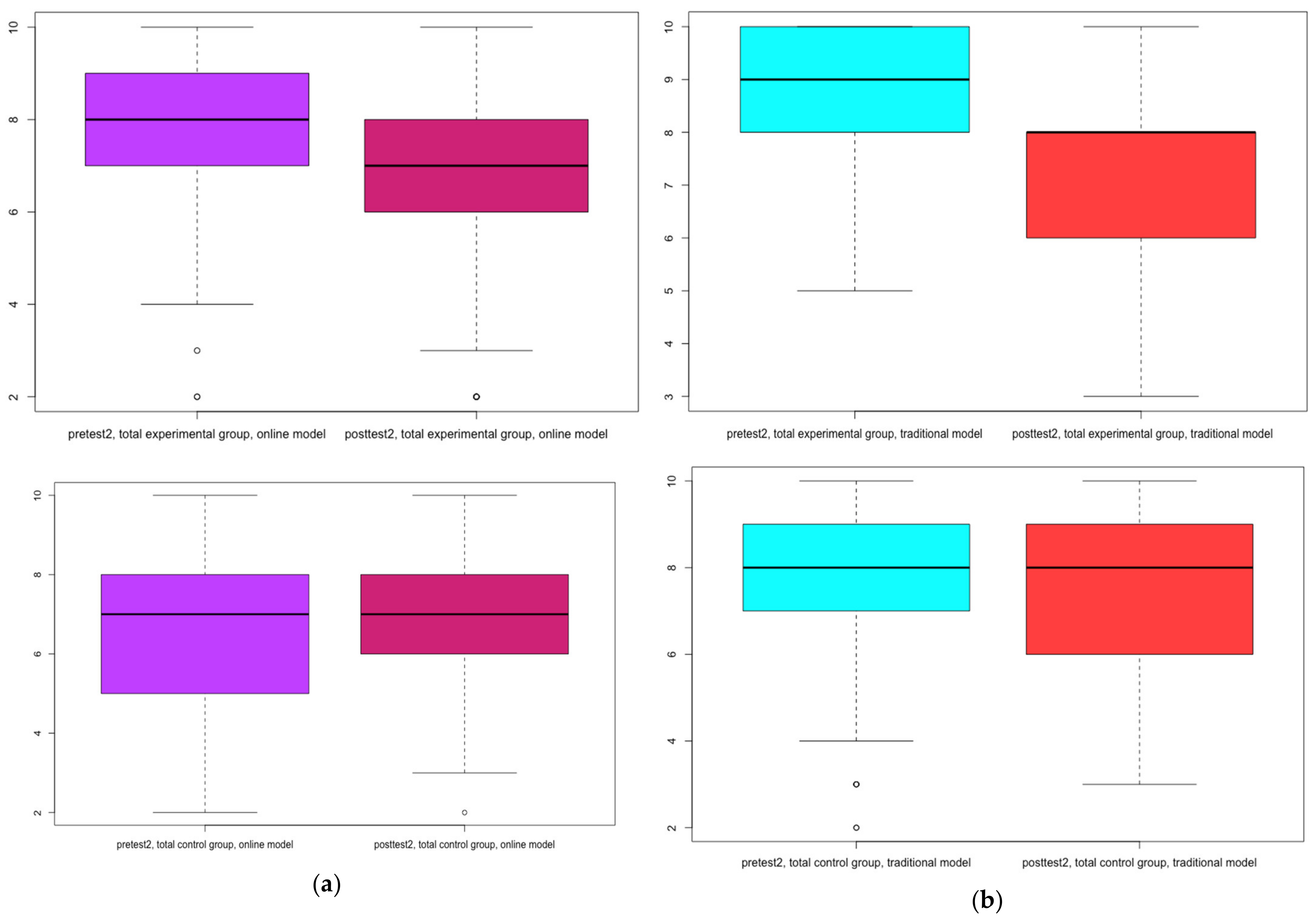

| Group / Type of Test | Intervention Method | Term / Number of Tests | Number of Students | Minimum | Q1 | Median | Q3 | Maximum | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|---|---|
| Experimental group, post-test 1/pre-test 2, online model | Google Forms tool and Microsoft PowerPoint presentation | 5 weeks / 488 tests | 168 | 2 | 7 | 8 | 9 | 10 | 8.068 | 1.5185 |
| Control group, post-test 1/pre-test 2, online model | Microsoft PowerPoint presentation | 5 weeks / 487 tests | 168 | 2 | 6 | 7 | 8 | 10 | 6.876 | 1.8247 |
| Experimental group, post-test 1/pre-test 2, traditional model | Own web game created in JavaScript and Microsoft PowerPoint presentation | 5 weeks / 394 tests | 168 | 5 | 8 | 9 | 10 | 10 | 8.82 | 1.0984 |
| Control group, post-test 1/pre-test 2, traditional model | Microsoft PowerPoint presentation | 5 weeks / 372 tests | 170 | 2 | 7 | 8 | 9 | 10 | 7.74 | 1.6750 |
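The F test for equality of variances reported for these groups uses the ratio of the two sample variances as its statistic, so the standard deviations in this table allow a rough check. The sketch assumes that the control variance sits in the numerator and that the tabulated SDs come from the same test-level data as the test. A p-value would also need the F distribution with (n₁ − 1, n₂ − 1) degrees of freedom, for example through `scipy.stats.f.sf`. That step is not reproduced here. `POST_TEST_1_SD` and `variance_ratio` are hypothetical names.

```python
# Standard deviations copied from the post-test 1/pre-test 2 table above.
POST_TEST_1_SD = {
    "online": {"experimental": 1.5185, "control": 1.8247},
    "traditional": {"experimental": 1.0984, "control": 1.6750},
}

def variance_ratio(sd_numerator, sd_denominator):
    """F statistic for equality of variances: ratio of the two sample variances."""
    return (sd_numerator / sd_denominator) ** 2

for model, sd in POST_TEST_1_SD.items():
    # Assumption: control variance in the numerator (the larger one in both pairs).
    f_stat = variance_ratio(sd["control"], sd["experimental"])
    print(f"{model}: F = {f_stat:.3f}")
```

The ratios come to about 1.44 for the online model and 2.33 for the traditional model. This matches the ordering of the reported F-test p-values: the traditional pair has the larger ratio and the smaller p-value.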

| Group / Type of Test | Intervention Method | Term / Number of Tests | Number of Students | Minimum | Q1 | Median | Q3 | Maximum | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|---|---|
| Experimental group, pre-test 2, online model | Google Forms tool and Microsoft PowerPoint presentation | 5 weeks / 488 tests | 168 | 2 | 7 | 8 | 9 | 10 | 8.068 | 1.5375 |
| Experimental group, post-test 2, online model | Microsoft PowerPoint presentation | 5 weeks / 596 tests | 168 | 2 | 6 | 7 | 8 | 10 | 6.788 | 1.6541 |
| Control group, pre-test 2, online model | Microsoft PowerPoint presentation | 5 weeks / 487 tests | 168 | 2 | 5 | 7 | 8 | 10 | 6.771 | 1.8192 |
| Control group, post-test 2, online model | Microsoft PowerPoint presentation | 5 weeks / 649 tests | 168 | 2 | 6 | 7 | 8 | 10 | 6.783 | 1.5685 |
| Experimental group, pre-test 2, traditional model | Own web game created in JavaScript and Microsoft PowerPoint presentation | 5 weeks / 394 tests | 168 | 5 | 8 | 9 | 10 | 10 | 8.82 | 1.0984 |
| Experimental group, post-test 2, traditional model | Microsoft PowerPoint presentation | 5 weeks / 372 tests | 168 | 3 | 6 | 8 | 8 | 10 | 7.203 | 1.5599 |
| Control group, pre-test 2, traditional model | Microsoft PowerPoint presentation | 5 weeks / 633 tests | 170 | 2 | 7 | 8 | 9 | 10 | 7.74 | 1.6750 |
| Control group, post-test 2, traditional model | Microsoft PowerPoint presentation | 5 weeks / 568 tests | 170 | 3 | 6 | 7 | 8 | 10 | 7.422 | 1.5051 |
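The pre-test 2 and post-test 2 rows can be compared by simple subtraction. The sketch computes the post-test 2 mean minus the pre-test 2 mean for each group, using the means in the table above. This is descriptive arithmetic only and says nothing about statistical significance. `MEANS` and `mean_change` are hypothetical names.

```python
# (pre-test 2 mean, post-test 2 mean) copied from the table above.
MEANS = {
    ("experimental", "online"): (8.068, 6.788),
    ("control", "online"): (6.771, 6.783),
    ("experimental", "traditional"): (8.82, 7.203),
    ("control", "traditional"): (7.74, 7.422),
}

def mean_change(pre, post):
    """Post-test minus pre-test mean, rounded to the table's three decimals."""
    return round(post - pre, 3)

for (group, model), (pre, post) in MEANS.items():
    print(f"{group:12} {model:11} change = {mean_change(pre, post):+.3f}")
```

The experimental means fall by 1.280 (online) and 1.617 (traditional) points. The control means change by +0.012 and -0.318.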

| Category: Perceptions of the Benefits of Integrating the JavaScript Web Game into Learning | Category: Perceptions of the Benefits of Google Forms in Learning |
|---|---|

| Category: Perceptions of the Disadvantages of Integrating the JavaScript Web Game into Learning | Category: Perceptions of the Disadvantages of Google Forms Integration |
|---|---|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Toma, F.; Ardelean, A.; Grădinaru, C.; Nedelea, A.; Diaconu, D.C. Effects of ICT Integration in Teaching Using Learning Activities. Sustainability 2023, 15, 6885. https://doi.org/10.3390/su15086885
