Machine Learning Applications for Reliability Engineering: A Review

Abstract

1. Introduction

- Is the application in RAMS or in PHM?

- What machine learning methods are used?

- What system is under study?

- Does the dataset come from real data, simulated data, or a public benchmark dataset?

- Applied Science & Technology Source

- Applied science and computing database

- Computers & Applied Sciences Complete

- Works not related to reliability engineering, i.e., not related to either RAMS or PHM

- Works dated before 2017

- Works that are not in English or French

- Full text available

- Peer-reviewed journal
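
The screening criteria listed above can be expressed as a simple filter over bibliographic records. The sketch below is illustrative only. The `Record` fields and the five sample records are hypothetical and do not come from the review's search results, and it assumes each criterion reduces to a boolean flag or a year/language check.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical bibliographic record returned by a database query."""
    title: str
    year: int
    language: str             # e.g. "English", "French", "German"
    reliability_topic: bool   # True if the work addresses RAMS or PHM
    full_text: bool           # True if the full text is available
    peer_reviewed: bool       # True if published in a peer-reviewed journal


def passes_screening(r: Record) -> bool:
    """Apply the exclusion criteria, then the inclusion criteria, to one record."""
    if not r.reliability_topic:                   # exclude works unrelated to RAMS/PHM
        return False
    if r.year < 2017:                             # exclude works dated before 2017
        return False
    if r.language not in {"English", "French"}:   # exclude other languages
        return False
    return r.full_text and r.peer_reviewed        # require full text and peer review


# Hypothetical sample records, one per criterion being tested
records = [
    Record("A", 2019, "English", True, True, True),
    Record("B", 2015, "English", True, True, True),   # too old
    Record("C", 2020, "German", True, True, True),    # wrong language
    Record("D", 2021, "French", True, False, True),   # no full text
    Record("E", 2022, "French", True, True, True),
]
kept = [r.title for r in records if passes_screening(r)]
print(kept)  # ['A', 'E']
```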

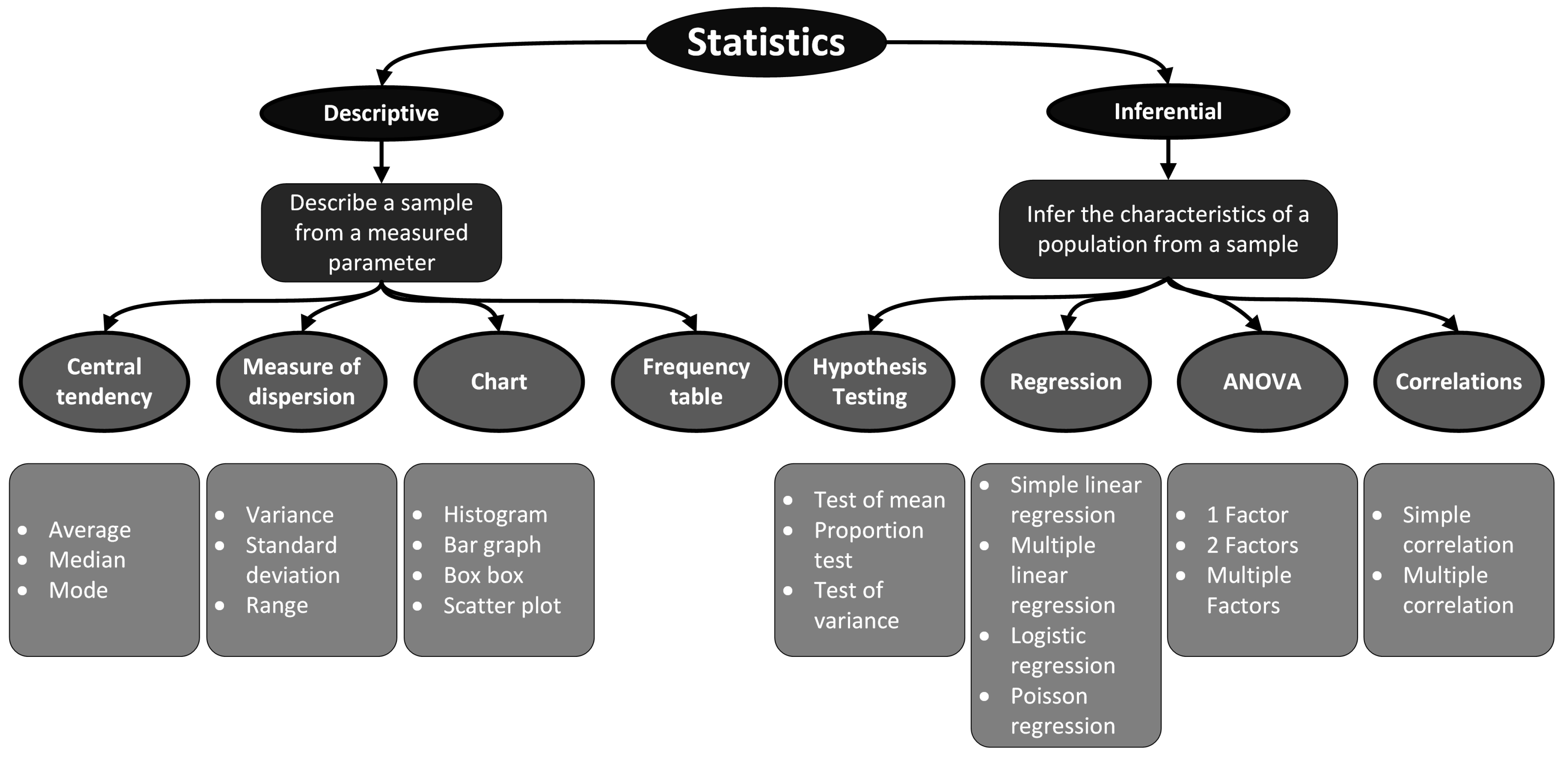

2. Modeling

2.1. Reliability Engineering

2.2. Mathematical Modeling

2.3. RAMS and PHM Approaches

2.4. Machine Learning, Artificial Intelligence and Data Science

2.4.1. History of Artificial Intelligence

2.4.2. Supervised Learning

2.4.3. Unsupervised Learning

2.4.4. Reinforcement Learning

2.4.5. Deep Learning

2.4.6. Data Science

3. Machine Learning

3.1. Data Science Modeling Process

3.1.1. Data Acquisition

3.1.2. Data Cleaning

3.1.3. Data Exploration

3.1.4. Feature Engineering

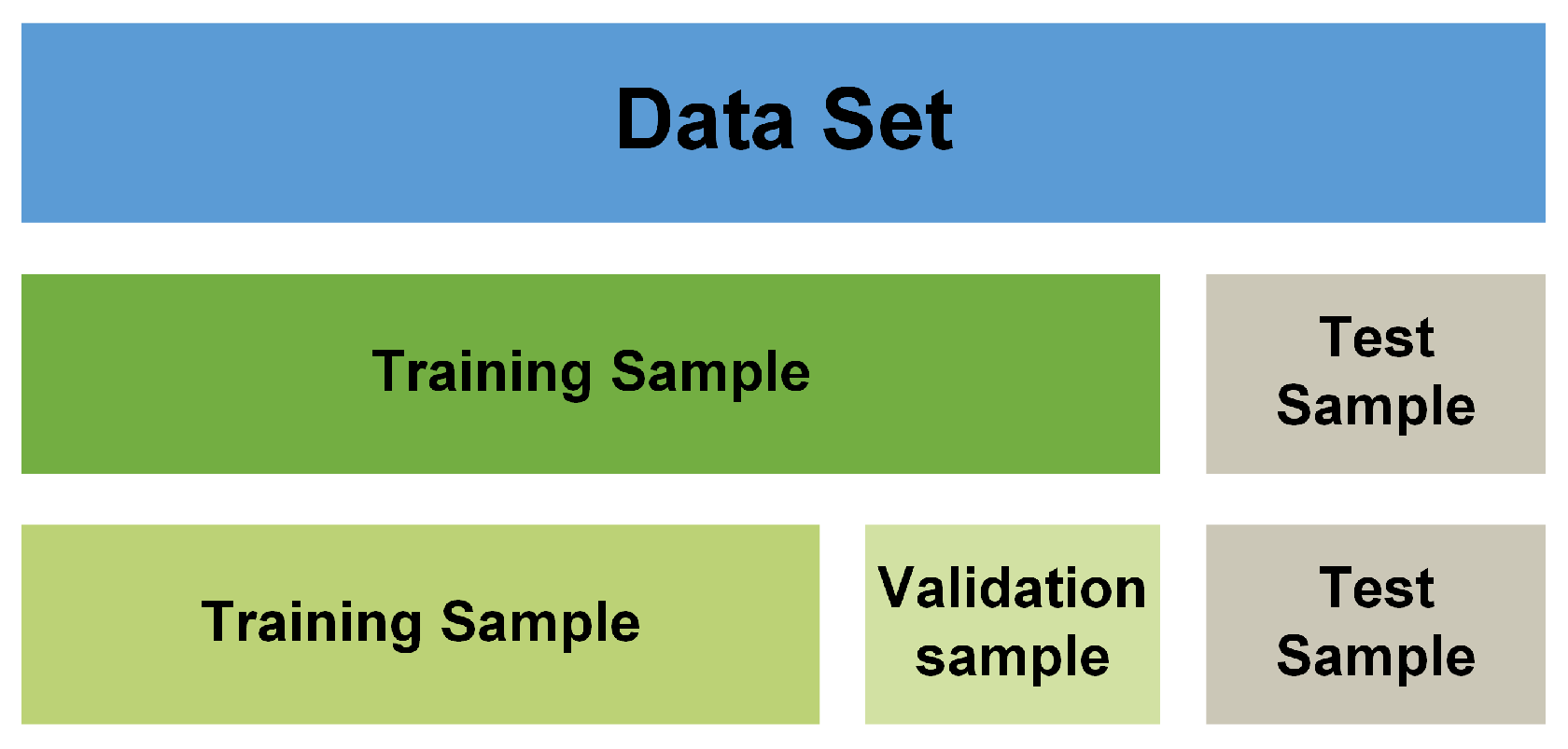

3.1.5. Model Conception

4. ML Applications Analysis

4.1. Results

4.1.1. Execution and Filtering of Results

4.1.2. Applications Analysis

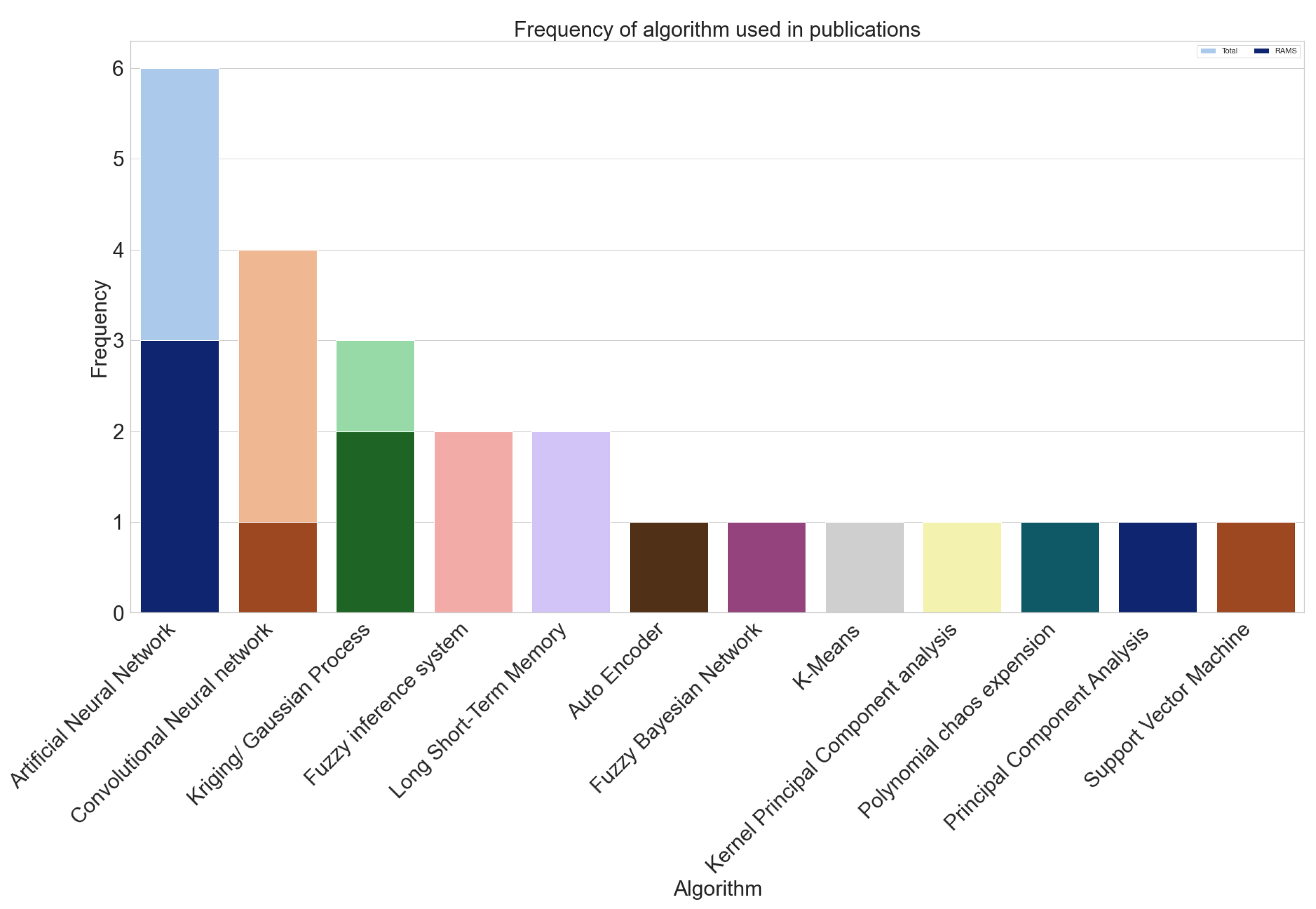

4.1.3. Machine Learning Methods Review

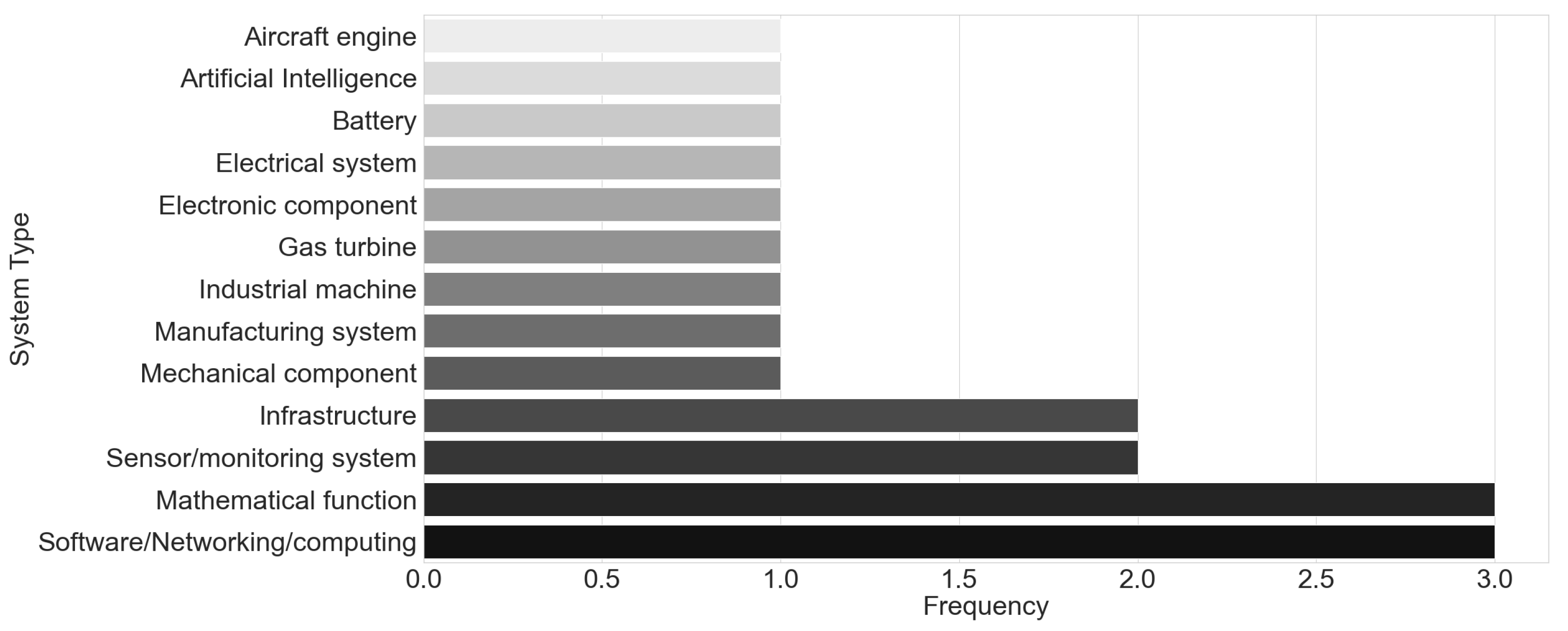

4.1.4. Datasets and Systems under Study

4.2. Related Works

4.3. Discussion

Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Iafrate, F. Artificial Intelligence and Big Data: The Birth of a New Intelligence; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Sage, M.; Zhao, Y.F. Is Machine Learning Suitable to Improve My Process? McGill University: Montreal, QC, Canada, 2020.

- Pecht, M.; Kumar, S. Data analysis approach for system reliability, diagnostics and prognostics. In Proceedings of the Pan Pacific Microelectronics Symposium, Kauai, HI, USA, 22–24 January 2008; Volume 795, pp. 1–9.

- Dersin, P. Prognostics & Health Management for Railways: Experience, Opportunities, Challenges. In Proceedings of the Applied Reliability and Durability Conference, Amsterdam, The Netherlands, 23–25 June 2020.

- Stillman, G.A.; Kaiser, G.; Blum, W.; Brown, J.P. Teaching Mathematical Modelling: Connecting to Research and Practice; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

- Stender, P. The use of heuristic strategies in modelling activities. ZDM 2018, 50, 315–326.

- Biau, G.; Herzlich, M.; Droniou, J. Mathématiques et Statistique pour les Sciences de la Nature: Modéliser, Comprendre et Appliquer; Collection Enseignement sup.; Mathématiques, EDP Sciences: Les Ulis, France, 2010.

- Hicks, C.R.; Turner, K.V. Fundamental Concepts in the Design of Experiments, 5th ed.; Oxford University Press: New York, NY, USA, 1999.

- Lewis, E.E. Introduction to Reliability Engineering, 2nd ed.; Reliability Engineering; J. Wiley and Sons: New York, NY, USA, 1996; 435p.

- Van Harmelen, F.; Lifschitz, V.; Porter, B. Handbook of Knowledge Representation; Elsevier: Amsterdam, The Netherlands, 2008.

- Hurwitz, J.; Kirsch, D. Machine Learning for Dummies; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2018.

- Haenlein, M.; Kaplan, A. A brief history of artificial intelligence: On the past, present, and future of artificial intelligence. Calif. Manag. Rev. 2019, 61, 5–14.

- Press, G. A Very Short History of Artificial Intelligence (AI). Forbes Magazine, 30 December 2016.

- IBM. Big Data for the Intelligence Community; Report; IBM: Armonk, NY, USA, 2013.

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson Education, Inc.: London, UK, 2002.

- Fahad, A.; Alshatri, N.; Tari, Z.; Alamri, A.; Khalil, I.; Zomaya, A.Y.; Foufou, S.; Bouras, A. A survey of clustering algorithms for big data: Taxonomy and empirical analysis. IEEE Trans. Emerg. Top. Comput. 2014, 2, 267–279.

- Akalin, N.; Loutfi, A. Reinforcement learning approaches in social robotics. Sensors 2021, 21, 1292.

- Zhang, H.; Yu, T. Taxonomy of reinforcement learning algorithms. In Deep Reinforcement Learning; Springer: Singapore, 2020; pp. 125–133.

- Zhu, N.; Liu, X.; Liu, Z.; Hu, K.; Wang, Y.; Tan, J.; Huang, M.; Zhu, Q.; Ji, X.; Jiang, Y. Deep learning for smart agriculture: Concepts, tools, applications, and opportunities. Int. J. Agric. Biol. Eng. 2018, 11, 32–44.

- Van der Aalst, W.M. Process Mining: Data Science in Action; Springer: Berlin/Heidelberg, Germany, 2016.

- Agarwal, S. Understanding the Data Science Lifecycle. 2018. Available online: https://www.sudeep.co/data-science/2018/02/09/Understanding-the-Data-Science-Lifecycle.html (accessed on 26 March 2023).

- Ho, M.; Hodkiewicz, M.R.; Pun, C.F.; Petchey, J.; Li, Z. Asset Data Quality—A Case Study on Mobile Mining Assets; Springer: Berlin/Heidelberg, Germany, 2015; pp. 335–349.

- Murphy, G.D. Improving the quality of manually acquired data: Applying the theory of planned behaviour to data quality. Reliab. Eng. Syst. Saf. 2009, 94, 1881–1886.

- Berti-Équille, L. Qualité des données. In Techniques de l’Ingénieur Bases de Données; Base Documentaire: TIB309DUO; Techniques de l’Ingénieur: Saint-Denis, France, 2018.

- Pecht, M.G.; Kang, M. Prognostics and Health Management of Electronics; Wiley: Hoboken, NJ, USA, 2018.

- Grus, J. Data Science from Scratch: First Principles with Python; O’Reilly Media: Sebastopol, CA, USA, 2019.

- Martinez, W.L.; Martinez, A.R.; Solka, J.L. Exploratory Data Analysis with MATLAB®; Chapman and Hall/CRC: Boca Raton, FL, USA, 2017.

- Ozdemir, S.; Susarla, D. Feature Engineering Made Easy: Identify Unique Features from Your Dataset in Order to Build Powerful Machine Learning Systems; Packt Publishing Ltd.: Birmingham, UK, 2018.

- Yi-Fan, C.; Yi-Kuei, L.; Cheng-Fu, H. Using Deep Neural Networks to Evaluate the System Reliability of Manufacturing Networks. Int. J. Perform. Eng. 2021, 17, 600–608.

- Nabian, M.A.; Meidani, H. Deep Learning for Accelerated Seismic Reliability Analysis of Transportation Networks. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 443–458.

- Wei-Ting, L.I.N.; Hsiang-Yun, C.; Chia-Lin, Y.; Meng-Yao, L.I.N.; Kai, L.; Han-Wen, H.U.; Hung-Sheng, C.; Hsiang-Pang, L.I.; Meng-Fan, C.; Yen-Ting, T.; et al. DL-RSIM: A Reliability and Deployment Strategy Simulation Framework for ReRAM-based CNN Accelerators. ACM Trans. Embed. Comput. Syst. 2022, 21, 1–29.

- Gritsyuk, K.M.; Gritsyuk, V.I. Convolutional And Long Short-Term Memory Neural Networks Based Models For Remaining Useful Life Prediction. Int. J. Inf. Technol. Secur. 2022, 14, 61–76.

- Saxena, A.; Goebel, K. Turbofan Engine Degradation Simulation Data Set; NASA: Washington, DC, USA, 2008.

- Kara, A. A data-driven approach based on deep neural networks for lithium-ion battery prognostics. Neural Comput. Appl. 2021, 33, 13525–13538.

- Saha, B.; Goebel, K. Battery Data Set. In NASA Ames Prognostics Data Repository; NASA: Washington, DC, USA, 2007.

- Kilkki, O.; Kangasrääsiö, A.; Nikkilä, R.; Alahäivälä, A.; Seilonen, I. Agent-based modeling and simulation of a smart grid: A case study of communication effects on frequency control. Eng. Appl. Artif. Intell. 2014, 33, 91–98.

- Gomez Fernandez, J.F.; Olivencia, F.; Ferrero, J.; Marquez, A.C.; Garcia, G.C. Analysis of dynamic reliability surveillance: A case study. IMA J. Manag. Math. 2018, 29, 53–67.

- Aremu, O.O.; Palau, A.S.; Parlikad, A.K.; Hyland-Wood, D.; McAree, P.R. Structuring Data for Intelligent Predictive Maintenance in Asset Management. IFAC-PapersOnLine 2018, 51, 514–519.

- Fernandes, M.; Canito, A.; Bolón-Canedo, V.; Conceição, L.; Praça, I.; Marreiros, G. Data analysis and feature selection for predictive maintenance: A case-study in the metallurgic industry. Int. J. Inf. Manag. 2019, 46, 252–262.

- Wan, J.; Tang, S.; Li, D.; Wang, S.; Liu, C.; Abbas, H.; Vasilakos, A.V. A manufacturing big data solution for active preventive maintenance. IEEE Trans. Ind. Inform. 2017, 13, 2039–2047.

- Abu-Samah, A.; Shahzad, M.K.; Zamai, E.; Said, A.B. Failure Prediction Methodology for Improved Proactive Maintenance using Bayesian Approach. IFAC-PapersOnLine 2015, 48, 844–851.

- Brundage, M.P.; Sexton, T.; Hodkiewicz, M.; Dima, A.; Lukens, S. Technical language processing: Unlocking maintenance knowledge. Manuf. Lett. 2021, 27, 42–46.

- Naqvi, S.M.R.; Varnier, C.; Nicod, J.M.; Zerhouni, N.; Ghufran, M. Leveraging free-form text in maintenance logs through BERT transfer learning. In Progresses in Artificial Intelligence & Robotics: Algorithms & Applications: Proceedings of the 3rd International Conference on Deep Learning, Artificial Intelligence and Robotics (ICDLAIR) 2021; Springer: Berlin/Heidelberg, Germany, 2022; pp. 63–75.

- Payette, M.; Abdul-Nour, G.; Meango, T.J.M.; Côté, A. Improving Maintenance Data Quality: Application of Natural Language Processing to Asset Management. In 16th WCEAM Proceedings; Springer: Berlin/Heidelberg, Germany, 2023; pp. 582–589.

- Krishnan, R. Reliability Analysis of k-out-of-n: G System: A Short Review. Int. J. Eng. Appl. Sci. (IJEAS) 2020, 7, 21–24.

- Odeyar, P.; Apel, D.B.; Hall, R.; Zon, B.; Skrzypkowski, K. A Review of Reliability and Fault Analysis Methods for Heavy Equipment and Their Components Used in Mining. Energies 2022, 15, 6263.

- Reid, M. Reliability—A Python Library for Reliability Engineering, Version 0.5. 2020. Available online: https://zenodo.org/record/3938000#.ZC4xK3ZBxPY (accessed on 26 March 2023).

- Davidson-Pilon, C. Lifelines: Survival analysis in Python. J. Open Source Softw. 2019, 4, 1317.

- Pölsterl, S. Scikit-survival: A Library for Time-to-Event Analysis Built on Top of scikit-learn. J. Mach. Learn. Res. 2020, 21, 1–6.

- Teubert, C.; Jarvis, K.; Corbetta, M.; Kulkarni, C.; Daigle, M. ProgPy Python Prognostics Packages. 2022. Available online: https://nasa.github.io/progpy (accessed on 26 March 2023).

- Seabold, S.; Perktold, J. statsmodels: Econometric and statistical modeling with python. In Proceedings of the 9th Python in Science Conference, Austin, TX, USA, 28 June–3 July 2010.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: www.tensorflow.org (accessed on 10 March 2023).

- Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 10 March 2023).

- Bird, S.; Klein, E.; Loper, E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2009.

| Type | Structure | Applications |
|---|---|---|
| Artificial Neural Network | Input layer, hidden layers, output layer | Classification, regression, pattern recognition |
| Convolutional Neural Network | Input layer, convolution layers, pooling layer, fully connected layer, output layer | Natural language processing, image processing |
| Recurrent Neural Network | Input layer, hidden layers, output layer | Time series analysis, sentiment analysis, natural language processing |
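
To make the layer structures in the table concrete, the following NumPy sketch runs a forward pass through each network type with random, untrained weights. It is a minimal illustration under stated assumptions, not how TensorFlow or Keras implement these layers: the function names are hypothetical, the CNN "convolution" is computed as a cross-correlation (the convention most deep learning libraries follow), and no training is performed.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the example is reproducible


def relu(x):
    """Rectified linear activation, applied element-wise."""
    return np.maximum(x, 0.0)


def ann_forward(x, W1, b1, W2, b2):
    """ANN: input layer -> one fully connected hidden layer -> linear output layer."""
    hidden = relu(x @ W1 + b1)
    return hidden @ W2 + b2


def conv1d_valid(signal, kernel):
    """CNN convolution layer on a 1-D signal ('valid' padding, cross-correlation)."""
    k = len(kernel)
    return np.array([signal[i:i + k] @ kernel for i in range(len(signal) - k + 1)])


def max_pool1d(x, size):
    """CNN pooling layer: maximum over non-overlapping windows; a short tail is dropped."""
    n = len(x) // size
    return x[: n * size].reshape(n, size).max(axis=1)


def rnn_forward(sequence, Wx, Wh, b):
    """RNN: the hidden state h is updated at every time step and carries past context."""
    h = np.zeros(Wh.shape[0])
    for x_t in sequence:
        h = np.tanh(x_t @ Wx + h @ Wh + b)
    return h


# ANN on a batch of 4 samples with 3 input features, 5 hidden units, 1 output
x = rng.normal(size=(4, 3))
y = ann_forward(x, rng.normal(size=(3, 5)), np.zeros(5), rng.normal(size=(5, 1)), np.zeros(1))

# CNN: an edge-detecting kernel, ReLU, then max pooling on a step signal
signal = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1, 1], dtype=float)
features = max_pool1d(relu(conv1d_valid(signal, np.array([-1.0, 0.0, 1.0]))), 2)

# RNN over 6 time steps with 3 features each and 4 hidden units
h_last = rnn_forward(rng.normal(size=(6, 3)), rng.normal(size=(3, 4)),
                     rng.normal(size=(4, 4)), np.zeros(4))

print(y.shape, features, h_last.shape)
```

The pooled CNN features are non-zero only in the window containing the step, which is the kind of local pattern the convolution and pooling layers are meant to extract; the RNN returns a single hidden state summarizing the whole sequence.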

| Rule | Number of Publications |
|---|---|
| Keywords | 547 |
| From 2017 to 2023 | 362 |
| Excluding works not related to reliability engineering | 253 |
| English or French only | 251 |
| Full text available | 38 |
| Peer-reviewed | 34 |
| Hand-selected | 19 |
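
Because each count in the table is the number of publications remaining after the rule on that row, the number removed by each rule follows by subtraction. The short Python sketch below only re-derives figures from the table; it assumes the rules were applied cumulatively in the order listed.

```python
# Publications remaining after each screening rule, copied from the table
stages = [
    ("Keywords", 547),
    ("From 2017 to 2023", 362),
    ("Excluding works not related to reliability engineering", 253),
    ("English or French only", 251),
    ("Full text available", 38),
    ("Peer-reviewed", 34),
    ("Hand-selected", 19),
]

# Publications eliminated by each rule = previous count - current count
removed = {}
for (_, prev_n), (name, n) in zip(stages, stages[1:]):
    removed[name] = prev_n - n

for name, n_removed in removed.items():
    print(f"{name}: -{n_removed}")

# Fraction of the initial keyword hits that survive every rule
retention = stages[-1][1] / stages[0][1]
print(f"Overall retention: {retention:.1%}")
```

Under that assumption, the full-text availability rule removes the most works (213 of 251), and 19 of the initial 547 publications (about 3.5%) remain.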

| Title | Authors | Data Type | Algorithm |
|---|---|---|---|
| Effective software fault localization using predicted execution results | Gao, Wong et al., 2017 | Operational | K-Means |
| An Intelligent Reliability Assessment technique for Bipolar Junction Transistor using Artificial Intelligence Techniques | Bhargava and Handa, 2018 | Experimental testing | Artificial Neural Network, Fuzzy Inference System |
| Active fault tolerant control based on a neuro fuzzy inference system applied to a two shafts gas turbine | Hadroug, Hafaifa et al., 2018 | Operational | Artificial Neural Network, Fuzzy Inference System |
| Deep Learning for Accelerated Seismic Reliability Analysis of Transportation Networks | Nabian and Meidani, 2018 | Simulated/generated randomly | Artificial Neural Network |
| Gaussian Process-Based Response Surface Method for Slope Reliability Analysis | Hu, Su et al., 2019 | Simulated/generated randomly | Kriging/Gaussian Process |
| Fault diagnosis of multi-state gas monitoring network based on fuzzy Bayesian net | Xue, Li et al., 2019 | Operational | Fuzzy Bayesian Network |
| Active learning polynomial chaos expansion for reliability analysis by maximizing expected indicator function prediction error | Cheng and Lu, 2020 | Simulated/generated randomly | Polynomial Chaos Expansion, Kriging/Gaussian Process |
| A Reliability Management System for Network Systems using Deep Learning and Model Driven Approaches | Min, Jiasheng et al., 2020 | Simulated/generated randomly | Artificial Neural Network |
| Integration of Dimension Reduction and Uncertainty Quantification in Designing Stretchable Strain Gauge Sensor | Sungkun, Gorguluarslan et al., 2020 | Simulated/generated randomly | Auto Encoder, Artificial Neural Network, Principal Component Analysis |
| Data-driven prognostic method based on self-supervised learning approaches for fault detection | Wang, Qiao et al., 2020 | Public dataset | Kernel Principal Component Analysis |
| Transfer Learning Strategies for Deep Learning-based PHM Algorithms | Yang, Zhang et al., 2020 | Public dataset | Convolutional Neural Network |
| Bounds approximation of limit-state surface based on active learning Kriging model with truncated candidate region for random-interval hybrid reliability analysis | Yang, Wang et al., 2020 | Simulated/generated randomly | Kriging/Gaussian Process |
| A data-driven approach based on deep neural networks for lithium-ion battery prognostics | Kara, 2021 | Public dataset | Convolutional Neural Network, Long Short-Term Memory |
| Using Deep Neural Networks to Evaluate the System Reliability of Manufacturing Networks | Yi-Fan, Yi-Kuei et al., 2021 | Simulated/generated randomly | Artificial Neural Network |
| Convolutional And Long Short-Term Memory Neural Networks Based Models For Remaining Useful Life Prediction | Gritsyuk and Gritsyuk, 2022 | Public dataset | Convolutional Neural Network, Long Short-Term Memory |
| DL-RSIM: A Reliability and Deployment Strategy Simulation Framework for ReRAM-based CNN Accelerators | Wei-Ting, Hsiang-Yun et al., 2022 | Public dataset | Convolutional Neural Network |
| A Novel Support-Vector-Machine-Based Grasshopper Optimization Algorithm for Structural Reliability Analysis | Yang, Sun et al., 2022 | Simulated/generated randomly | Support Vector Machine |
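
The distribution of data types and algorithms across the works in the table can be tallied directly. In the sketch below the rows are transcribed from the table, keeping only year, data type, and algorithms; the abbreviations (ANN, CNN, LSTM, PCA) and the shortened label "Simulated" for "Simulated/generated randomly" are shorthand introduced here, not labels used by the authors.

```python
from collections import Counter

# (year, data type, algorithms) for each work, transcribed from the table
works = [
    (2017, "Operational", ["K-Means"]),
    (2018, "Experimental testing", ["ANN", "Fuzzy Inference System"]),
    (2018, "Operational", ["ANN", "Fuzzy Inference System"]),
    (2018, "Simulated", ["ANN"]),
    (2019, "Simulated", ["Kriging/Gaussian Process"]),
    (2019, "Operational", ["Fuzzy Bayesian Network"]),
    (2020, "Simulated", ["Polynomial Chaos Expansion", "Kriging/Gaussian Process"]),
    (2020, "Simulated", ["ANN"]),
    (2020, "Simulated", ["Auto Encoder", "ANN", "PCA"]),
    (2020, "Public dataset", ["Kernel PCA"]),
    (2020, "Public dataset", ["CNN"]),
    (2020, "Simulated", ["Kriging/Gaussian Process"]),
    (2021, "Public dataset", ["CNN", "LSTM"]),
    (2021, "Simulated", ["ANN"]),
    (2022, "Public dataset", ["CNN", "LSTM"]),
    (2022, "Public dataset", ["CNN"]),
    (2022, "Simulated", ["Support Vector Machine"]),
]

# Count works per data type, and how many works use each algorithm
data_types = Counter(dtype for _, dtype, _ in works)
algorithms = Counter(alg for _, _, algs in works for alg in algs)

print(data_types.most_common())
print(algorithms.most_common(3))
```

Counted this way, simulated or randomly generated data appear in 8 of the 17 listed works, and artificial neural networks appear in 6, followed by convolutional neural networks (4) and Kriging/Gaussian process models (3).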

| Library Name | Application | Library Function |
|---|---|---|
| Reliability | Reliability and Survival Analysis | Parametric Reliability Models (Weibull, Exponential, Lognormal, etc.), Non-Parametric Models (Kaplan–Meier, Nelson–Aalen, etc.), Accelerated Life Testing |
| Lifelines | Survival Analysis | Cox Proportional Hazard Model, Kaplan–Meier Estimator, Parametric Survival Models |
| Scikit-survival | Survival Analysis | Survival Tree, Ensemble Model for Survival Analysis, Cox Proportional Hazard Model, Kaplan–Meier Estimator |
| ProgPy Python Prognostics Packages | Prognostics | |
| Statsmodels | Statistics | Linear Regression, Generalized Linear Model, ANOVA |
| Scikit-learn | Machine Learning | Classification, Regression, Clustering, Cross-Validation/Model Selection Methods |
| TensorFlow | Deep Learning | Artificial Neural Network, Recurrent Neural Network, Convolutional Neural Network |
| Keras | Deep Learning | High-level API built on top of TensorFlow for ease of use |
| NLTK | Natural Language Processing | Tokenization, Sentiment Analysis, Stemming, Part-of-Speech Tagging |
| spaCy | Natural Language Processing | Text Classifier, Transformer Models, Custom Trainable Pipeline, Named Entity Recognition |
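
The Reliability, Lifelines, and Scikit-survival libraries provide the parametric and non-parametric models named above through their own APIs. As a dependency-free illustration of what two of those models compute, the sketch below evaluates the two-parameter Weibull reliability and hazard functions and a Kaplan–Meier estimate with right-censoring using only the Python standard library. It does not reproduce any of those libraries' interfaces; the function names and the sample failure times are hypothetical.

```python
import math


def weibull_reliability(t, beta, eta):
    """Two-parameter Weibull reliability R(t) = exp(-(t/eta)**beta)."""
    return math.exp(-((t / eta) ** beta))


def weibull_hazard(t, beta, eta):
    """Weibull hazard rate h(t) = (beta/eta) * (t/eta)**(beta - 1)."""
    return (beta / eta) * (t / eta) ** (beta - 1)


def kaplan_meier(times, observed):
    """Kaplan-Meier estimate S(t) = prod over failure times t_i <= t of (1 - d_i / n_i).

    times: time to failure or censoring for each unit
    observed: True if the unit failed, False if it was right-censored
    Returns a list of (failure_time, survival_probability) pairs.
    """
    data = sorted(zip(times, observed))
    n_at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        failures = censored = 0
        # Group all units that share the same time
        while i < len(data) and data[i][0] == t:
            if data[i][1]:
                failures += 1
            else:
                censored += 1
            i += 1
        if failures:
            survival *= 1.0 - failures / n_at_risk
            curve.append((t, survival))
        # Failed and censored units both leave the risk set after time t
        n_at_risk -= failures + censored
    return curve


# Hypothetical failure/censoring times for six units
curve = kaplan_meier([5, 8, 8, 12, 15, 20], [True, True, False, True, False, True])
print(curve)
print(weibull_reliability(1000, beta=1.5, eta=1000))  # R(eta) = exp(-1) for any beta
```

For the sample data, the estimated survival drops to 5/6, 2/3, 4/9, and 0 at the failure times 5, 8, 12, and 20; the censored units at times 8 and 15 shrink the risk set without causing a drop. The libraries in the table also provide parameter fitting, which this sketch omits.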

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Payette, M.; Abdul-Nour, G. Machine Learning Applications for Reliability Engineering: A Review. Sustainability 2023, 15, 6270. https://doi.org/10.3390/su15076270