1. Introduction

A competency exam, abbreviated “CE,” was established for use in Jordanian higher education institutions with the intention of ensuring that students are capable of reaching predetermined levels of expertise [

1]. In alignment with the achievement of the fourth Sustainable Development Goal (SDG 4), which aims to ensure inclusive and equitable quality education and promote lifelong learning opportunities for all, Jordanian higher education institutions have established a competency exam to ensure that students attain predetermined levels of expertise [

2]. This exam serves as a key performance indicator for program quality. SDG 4 strives to address global issues related to education and facilitate affordable and high-quality education, funding, and equality by 2030 [

3]. The goal is to equip learners with the necessary skills to achieve sustainable development throughout their lives.

The results of the competency exams are one of the metrics used as key performance indicators (KPIs) for program quality. Consequently, initiatives to assess students’ academic proficiency have become increasingly significant. Such assessment allows management to devise a plan for programs whose performance has not met certain criteria, for example by modifying the learning objectives or improving the learning process. A competency exam is a series of questions that measures the general and accurate (major-specific) abilities that graduates of Jordanian universities are required to possess at the bachelor’s level, and it is used to evaluate the efficacy of educational outcomes in universities. These graduates must hold at least a bachelor’s degree.

Competency-based education (CBE) is an approach to education that focuses on the acquisition of specific skills and knowledge by students [

2]. The goal of CBE is to equip students with the skills and knowledge necessary for success in the workforce. However, there are several driving behaviors that can affect student performance in CBE [

4]. These behaviors include motivation, self-regulation, goal-setting, and self-efficacy. Motivation is a critical factor in CBE as it drives students to engage in learning activities and complete their work. Self-regulation refers to the ability of students to manage their own learning, set goals, and monitor their progress. Goal-setting is important in CBE as it helps students to stay focused and motivated. Finally, self-efficacy, or the belief that one can successfully complete a task, can greatly impact student performance in CBE. All of these behaviors are interrelated and play a crucial role in student success in CBE [

5]. Understanding these behaviors can help educators to create effective learning environments and support student success. To assess the quality of graduates from Jordan’s higher education institutions and aid in the formulation of regulations, it is important to evaluate the university’s progress and identify areas for improvement.

The CE exam is divided into two parts: the macro level and the micro level. The macro level comprises thirty competencies that evaluate students’ general skill sets. The micro level comprises 45 competencies that vary according to the student’s major. Student performance, measured by the exam result (pass or fail), can be evaluated in a variety of ways, including machine learning (ML) as well as more traditional statistical methods. To provide an accurate prediction model for student performance, the competency exam data are first classified into subsets according to their features and then used to train ML models. When evaluating student performance, a variety of factors are taken into consideration, including the data source (questionnaire or dataset) and its size, the type of education (e-learning or traditional learning), and the categories of student features [

6,

7].

Although the competency exam in Jordan was first implemented in 2008, there is a paucity of research on how the exam affects the development and improvement of competencies, as well as program quality, through improved student performance; in addition, no studies have applied ML to evaluate the data provided by the exam. Many machine learning (ML) models have been developed to predict student achievement, because multiple markers can be used to quantify student performance. Student performance models can be categorized by factors such as academic level (from kindergarten to graduate level). However, broad models built on these factors alone are unlikely to produce accurate classification or regression results. Additionally, models can be constructed from information related to the degree of performance, such as whether a student is at risk of dropping out or has a specific grade [

8]. Past attempts to develop accurate and exhaustive classification models have included every data case. Machine learning offers a number of classification techniques, such as Decision Trees, K-Nearest Neighbors (KNN), Naive Bayes, Support Vector Machines (SVM), and neural networks. Some of these techniques are used later in this paper. By analyzing the data from the competency exams and determining the academic programs and learning outcomes that need to be established, this study aims to provide guidance to decision makers on developing academic programs with the help of ML. We investigate existing differences in student characteristics by examining the demographic and academic elements that affect a student’s performance in the CE. Demographic factors such as race, ethnicity, socioeconomic status, and gender can affect a student’s performance on competency-based exams, as some groups may have less access to resources and support systems [

9]. Academic characteristics, such as prior knowledge, study habits, and learning styles, can also affect exam performance [

10]. However, students, educators and policymakers can all be involved to create an equitable and supportive learning environment.

To achieve this goal, the study develops prediction models on a number of sub-datasets defined by the students’ gender, grade level, participation method, and other relevant features. These characteristics were chosen because of their capacity to identify successful student performance.

Using the data from the competency exam, we first divide the exam data into subgroups according to the demographic and academic characteristics of the students. Next, we evaluate several machine learning models and present a comparative analysis of them. This paper investigates the extent to which student data and machine learning can be used to determine the nature of the academic programs that institutions should develop. We address three research questions:

I. Can ML models be used to detect student performance, and if so, to what extent is this possible, and is it even a feasible strategy?

II. Which machine learning model provides the most accurate results, and how should ML models be utilized in practice?

III. Does the variety of student data affect the accuracy with which machine learning algorithms forecast the results?

Our contributions are as follows.

1. Determining whether it is “appropriate” or valid to use a machine learning model to predict student achievement.

2. Formulating recommendations for how the performance of ML models might be improved when working with this kind of data.

3. Creating student subgroups based on significant demographic and academic characteristics, and then examining the efficacy of these subsets in determining the accuracy of predictions made by machine learning models.

The rest of this paper is structured as follows. In

Section 2, the related works are presented. Academic-Data Heterogeneity is discussed in

Section 3. The paper’s methodology is covered in

Section 4. In

Section 5, the experiments and results of the different cases are explained.

Section 6 concludes the paper and makes recommendations for future research.

2. Related Works

Multiple studies have demonstrated the effectiveness of using machine learning techniques to predict student behavior and performance in educational settings [

10,

11,

12,

13,

14]. These studies have applied various algorithms including J48, Naive Bayes, Neural Network, Bagging, Boosting, Logistic Regression, and Decision Trees. The results of these studies have shown that machine learning models can achieve high prediction accuracy and have been used to predict student enrollment, admission to colleges, dropout, and the risk of failure and withdrawal in online courses. These findings highlight the potential for machine learning to be used in education to support student success and improve decision-making.

The primary stakeholders in educational institutions are the students. The effectiveness of educational institutions is crucial in generating graduates and post-graduates of the highest caliber. The modern educational institutions work to maintain quality and reputation in the educational community. In actuality, the institutions are more concerned with their reputation than with the caliber of instruction [

15]. However, a number of government and accreditation organizations make sure that educational institutions maintain a high standard of learning, and the explicit accreditation procedures have forced the institutions to develop and adopt unique procedures to uphold their standards [

16].

The goal of artificial intelligence (AI) is to give computers enough intelligence to enable them to think and respond in ways that are similar to those of a human [

17]. Humans, as opposed to computers, are able to gain knowledge from experience, allowing them to rationally choose the best course of action given their circumstances. A computer, however, must follow human-made algorithms to complete a task. Artificial intelligence seeks novel ways to give computers intelligence and make them behave similarly to humans in order to narrow this gap. The term is frequently applied to projects that build systems endowed with intellectual processes characteristic of humans, such as the capacity to reason, find meaning, or learn from experience. According to [

18], AI applications are steadily expanding across many commercial, service, manufacturing, and agricultural domains. Future AI artifacts will be able to communicate with people in their own languages and adjust to their moods and movements.

Many models have been proposed in various educational contexts to address the prediction of student performance. Ensemble approaches were used by [

19] to investigate the connection between students’ semester courses and results. According to the results of the experimental evaluation, Random Forest and Stacking Classifiers had the highest accuracy. In order to identify weak students and help the institution create intervention measures to reduce student attrition, Ref. [

20] updated the Genetic Algorithm (GA) to remove extraneous features. The authors of [

21] extracted a collection of attributes from the institution’s auto-grading system and used them to create decision tree and linear regression models. The study helps the university identify struggling students and assign teaching hours automatically.

A decision tree approach was proposed by [

22] to identify the key elements that affect students’ academic achievement. A survey was used to gather information about the demographic, academic, and social characteristics of the students. Ref. [

23] proposed a machine learning technique in which supervised machine learning algorithms are utilized to train prediction models for forecasting student performance after the K-Means algorithm generates a set of coherent clusters. In order to predict whether a student would graduate on time or after the expected graduation date, Ref. [

24] built a model. Ref. [

25] investigated the relationship between students’ social interactions and academic outcomes. Although a decision tree proved to be a useful tool, there was only a weak link between the two. The reviewed literature demonstrates that machine learning algorithms are useful tools for creating models that forecast students’ final results.

Similarly, Ref. [

26] developed models based on decision tree algorithms to predict students’ academic performance. The researchers collected data on students’ demographics, academic, and family background information through questionnaires. The models were built using these data and the decision tree algorithm. The results showed that the models produced by [

27] were accurate in predicting students’ academic performance based on the various factors studied. These studies highlight the potential of machine learning in education and demonstrate how decision tree algorithms can be used to predict student behavior and performance in educational settings. Their results can inform the development of similar systems and support decision-making in educational settings. Related works on student performance differ in data source, data type, ML models, and evaluation metrics.

These works have used various regression models, decision trees, clustering techniques, and other machine learning algorithms to predict student performance. The data sources have ranged from questionnaires and exams to learning management systems and socio-demographic information. The data types have included demographic information, academic data, and log files from e-learning systems. Evaluation metrics have been used to compare different prediction models, with accuracy as the usual measure of performance. Several works have shown that incorporating demographic and academic information, as well as study habits and social behavior, can result in better predictions of student performance [

28]. Neural networks and SVM have performed well in the studies, and the Naive Bayesian model has also shown good results in some cases.

In the field of educational research, various factors can influence student performance [

29]. The “Learning type” category refers to the method of learning used by the students in the sample. This can be E-learning, Traditional Learning, or Both (E-learning and Traditional Learning). The “Dataset source” category refers to the source of the sample and the method of collecting it. This can be either pre-existing data collected through university systems, e-learning systems, or other sources, or a questionnaire answered by the sample students [

30]. The “Sample size” category refers to the number of rows in the sample. The “Type of data” category classifies the data based on a set of foundations specified in this type of research [

31,

32]. This can include demographic data (a student’s personal data such as gender and age), academic data such as the student’s average and specialization [

33], and other data such as internet usage and student interaction on social networking sites [

33]. Finally, the “ML Models” category refers to the ML algorithms used to predict student performance. The descriptive features used to contrast the different studies are provided in

Table 1.

Learning Type: The learning type category refers to the method of learning used by students in the sample. There are three options for learning type: e-learning, traditional learning, and both (e-learning and traditional learning). E-learning refers to online learning, where students access course materials and participate in online discussions through the internet. Traditional learning refers to classroom-based learning, where students attend lectures and complete coursework in a physical setting. The category “both (e-learning and traditional learning)” refers to a combination of both e-learning and traditional learning, where students participate in both online and classroom-based learning.

Dataset source: The dataset source category refers to the source of the sample used for the study and the method used to collect the sample. The two options for dataset source are pre-existing data and questionnaire. Pre-existing data refer to data that has already been collected from university systems, e-learning systems, or other sources. Questionnaire refers to a set of questions that are answered by the sample students, which is used to collect data for the study.

Sample size: The sample size category refers to the number of rows in the sample. This category provides information about the size of the sample used in the study, which is an important factor in determining the reliability and validity of the results.

Type of data: The type of data category refers to the classification of data based on a set of foundations specified in this type of research. There are three options for type of data: demographic data, academic data, and other data. Demographic data refer to student personal data such as gender and age. Academic data refer to the student’s academic information, such as their average university degree and specialization. Other data refer to other student data such as internet usage and student interaction on social networking sites.

ML Models: The ML models category refers to the machine learning algorithms used to predict student performance. This category provides information about the type of machine learning models used in the study and the effectiveness of these models in predicting student performance.

We summarize and contrast prior studies based on the previously explained features in

Table 2.

3. Academic-Data Heterogeneity

The academic performance of engineering students was studied using a regression model by [

44]. A questionnaire was created based on the intended study in order to collect data from the students. The input information on students’ academic achievement was gathered from 150 undergraduate engineering specialties. The predictive models and the proportion of accurate predictions were calculated and verified using a variety of metrics. The outcomes demonstrated that the regression model provided the greater prediction accuracy. Based on this information, teachers can evaluate the group’s performance and adjust their teaching methods according to the outcomes of the engineering students in each area. The study, however, was unable to demonstrate whether a regression model can be utilized to enhance learning results. Furthermore, a methodological gap arises when a multiple regression model is used with a limited sample size. In [

45] the authors conducted a study on the academic performance of students from nine countries in the PISA 2015 test. A questionnaire was prepared to collect information from the students in addition to their exam results. They applied a two-stage approach: multilevel regression trees to estimate school value-added in the first stage, followed by regression trees and boosting in the second stage to identify the school-level characteristics related to that value-added.

In their research [

46], the data were obtained from the BS students’ academic performance dataset on Kaggle.com, which was extracted from a learning management system. The K-means clustering technique is then used to obtain clusters, which are further mapped to find the key features of a learning context. To evaluate student performance, relationships between these features are identified. In [

36] the authors applied a decision tree algorithm, with inputs including student academic information and student activity, to investigate students’ academic performance. They constructed a dataset of records for 22 undergraduate students from the Spring 2017 semester at a private higher education institution in Oman [

47].

Other work used demographic data (age, nationality, marital status) and academic data (major and grade) to compare various resampling techniques, such as Borderline SMOTE, Random Over Sampler, SMOTE, SMOTE-ENN, SVM-SMOTE, and SMOTE-Tomek, for handling the imbalanced data problem while predicting students’ performance on two different datasets. In order to predict students’ grades (pass/fail), the study by [

48] gathered information from e-learning, pre-course data, and socioeconomics. The data include each student’s enrollment information as well as activity information produced by the university’s learning management system (LMS). The enrollment data contain information about the students, including sociodemographic characteristics. In this study, student subpopulations are created based on important demographic and academic characteristics for building student sub-models, and their value in identifying vulnerable students is assessed.

Ref. [

38] used machine learning algorithms to predict and categorize student performance. The study considers two datasets: the Student Performance Dataset (SPD) and the Student Academic Performance Dataset (SAPD). Analysis of educational data, in particular the impact of social environment and family on students’ performance, is crucial to identifying the influential factors and boosting the quality of education for future generations. Analysis of various datasets is crucial in order to anticipate and categorize how students will behave in related courses and to offer early intervention to improve performance [

49]. Other work discovered a relationship between students’ academic performance and their socio-demographic (e.g., gender and economic status) and academic (e.g., kind of university and their performance in that school) characteristics.

Ref. [

50] showed how taking into account academic records and socio-demographic data during the enrollment of a given candidate can result in higher-performing models with better prediction accuracy. Students’ study habits and social behavior (partying) data were collected via mobile phones as alternative data sources, and both were found to be strongly connected with GPA [

51]. Ref. [

52] investigated an ML-based model for forecasting student performance. Students’ transcript data, including GPA, were gathered for the study. They employed support vector machines, neural networks, Naive Bayes, and SMO approaches after pre-processing the data. The IT department’s Naive Bayesian model performed the best (95.7%). A study was conducted by [

53] to use neural networks (NN) to predict student performance. An LMS log file containing data on 4601 students across 17 undergraduate courses served as the dataset for this investigation. The study compared the prediction performance of neural networks against six other classifiers on this dataset to determine the applicability of NN. These classifiers, which were trained using the data gathered throughout each course, included NB, kNN, DT, RF, SVM, and LR. The training features came from LMS data collected during each course, and they ranged from the time spent on each course page to grades received for course tasks. With an accuracy score of 66.1%, the NN surpassed all other classifiers. Ref. [

54] used data mining classifiers to predict undergraduate students’ performance. Four different classifiers (DT, RF, NB, and rule induction) were used to assess student performance. Depending on the method used, the classifiers display varying degrees of accuracy. The findings are used to forecast students’ upcoming grades and the pertinent factors (such as Internet connection, study time, etc.) that influence students’ academic success. The findings showed prediction accuracies of 90.00% for decision trees, 85.00% for random forest, 84.00% for NB, and 82.00% for rule induction.

Many previous studies have explored the ability of machine learning algorithms to predict students’ performance by using different types of data, such as demographic information and data from e-learning systems. However, these studies have largely relied on using students’ grades as a measure of performance, without clearly distinguishing between grades as a measure of achievement versus grades as a measure of competence. Furthermore, these studies have not extensively investigated the impact of data heterogeneity and data segmentation on the accuracy of the machine learning algorithms. This paper, in contrast, places a special focus on examining the effect of data heterogeneity and data segmentation on the accuracy of these algorithms and measures students’ performance based on their competence rather than solely relying on their grades.

In summary, these works have shown the effectiveness of using machine learning techniques to predict student behavior and performance in educational settings. These studies have used various algorithms, including J48, Naive Bayes, Neural Network, Bagging, Boosting, Logistic Regression, and Decision Trees. However, the current models are effective for a single course and are helpful locally. Therefore, this study aimed to develop a prediction model for student performance that could be applied to any course offered by the host university, using decision tree algorithms. This study also contributes to the identification of the key factors that influence student performance, including demographic, academic, and social characteristics. The primary stakeholders in educational institutions are the students, and the effectiveness of educational institutions is crucial in generating graduates and post-graduates of the highest caliber.

4. Material and Method

4.1. Dataset Description

The study data were collected over two academic semesters and were focused on seven academic families, out of the more than one hundred majors available in the competency exam. The selected families were chosen to represent half of the total number of families present in the exam, and were also categorized based on their type, either scientific or humanitarian. In addition, the two semesters were differentiated based on the method of participation, whether it was on campus or online. This was carried out to ensure that the collected data were diverse and representative of the overall competency exam. The study’s findings and analyses were based on

Table 3, which outlines the number of students present in each family. Overall, the study collected data from a total of 45,392 students, indicating that the dataset is sufficiently large to provide robust results and statistical analyses. By focusing on a diverse range of families and majors, the study provides a comprehensive understanding of the competency exam’s effectiveness in evaluating students’ knowledge and skills in Jordan.

Demographic data refer to information about individuals that includes characteristics such as age, gender, ethnicity, and socioeconomic status, whereas academic data refer to the information related to the student educational background, including their university, major, and grade. The assessment data refer to data collected through tests or evaluations of an individual’s knowledge or skills, such as exam scores or performance in specific tasks. In the context of machine learning, assessment data are often used as training data for algorithms that predict future outcomes based on past performance.

4.2. CE-Exploratory Data Analysis

Demographic data refer to the basic information of students, typically collected from universities and used in the exam system. The data include the student’s name, ID, and gender. The name consists of three syllables in Arabic text, the ID is a unique number, and gender is represented as symbols (1 for male and 2 for female). The academic data for students contain information about their university, major, and grades. The data were received from universities and processed to be uploaded to the exam system. The data include the following attributes:

University code: A unique number that represents each educational institution;

Grade: The student’s most recent rating at the university, standardized into one of four options (Fair, Good, Very Good, Excellent);

Major: The student’s exact major, standardized into one of 189 majors defined by the AQACHEI (Accreditation and Quality Assurance Commission for Higher Education Institutions);

College: The college in which the student studies.

The AQACHEI also adds the following data:

Standard Major: The exam system contains a group of standard majors, including 189 majors that cover all the specializations taught in Jordanian universities;

Family: The commission collects all the majors into groups that have common characteristics, each with a two-digit code;

University code: A categorical number that represents each university in which the student studies, converted into a set of symbols in the form of two numbers;

University type: A categorical number that represents each university’s type, based on financing method (government or private).

The data have been modified to standardize some of the information, such as the grade, major, and gender, which were standardized into categorical data.

The assessment data represent the results of students in an exam administered by the AQACHEI. The exam has two levels of competencies: the macro level and the micro level. The macro level consists of 30 items measuring general skills and accounts for 40% of the total exam items. The micro level accounts for 60% of the total exam and includes competencies that differ for each major. The results for each student were extracted according to their answers and are reported at the level of competence. The study added several variables to improve the analysis and understanding of the data. The first variable, “Family type”, categorizes families into two groups: scientific (1) and humanitarian (2), based on the nature of the study. This information is presented in

Table 3, which shows the classification of families used in the study.
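As a quick arithmetic check of the 40%/60% split stated above, and assuming the 45-item micro level described in the Introduction, the shares work out as follows:

```latex
\[
\frac{30}{30 + 45} = \frac{30}{75} = 0.40,
\qquad
\frac{45}{30 + 45} = \frac{45}{75} = 0.60 .
\]
```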

Another variable, “Participation Method”, classifies students based on their examination method (0: in-campus, 1: online), and the number of students based on each method is presented in

Table 4. The study also created a new variable called “Exam type” which indicates the type of exam the students took (0: general, 1: accurate). Additionally, a questionnaire was added to evaluate the students’ satisfaction with the university, and it was rated from 0 to 5.
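The coded variables described above are what allow the exam data to be partitioned into sub-datasets, as outlined in the Introduction. The following is a minimal sketch of such a partition, not the authors’ implementation; the field names (`family_type`, `participation`, `test_result`) and the helper `split_into_subsets` are hypothetical, while the numeric codes follow the text.

```python
from collections import defaultdict


def split_into_subsets(records, feature):
    """Group student records by the value of one categorical feature."""
    subsets = defaultdict(list)
    for record in records:
        subsets[record[feature]].append(record)
    return dict(subsets)


# Toy records. Field names are illustrative assumptions; codes follow the text:
# family_type 1 = scientific, 2 = humanitarian; participation 0 = in-campus, 1 = online.
students = [
    {"family_type": 1, "participation": 0, "test_result": 1},
    {"family_type": 1, "participation": 1, "test_result": 0},
    {"family_type": 2, "participation": 1, "test_result": 1},
]

by_participation = split_into_subsets(students, "participation")
print({code: len(group) for code, group in by_participation.items()})
```

Each resulting subset could then be used to train and evaluate a separate model, so that accuracy can be compared across subgroups.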

Finally, the students’ scores were collected based on each level of competency, and were divided by the number of questions to obtain a percentage score. Based on the exam hypotheses, a proficient student in the general exam was defined as one who scored more than 55% (cut-off score). The exam results were then converted from a numerical score (0–100) to a classification variable (0: not perfect, 1: perfect). A similar process was followed for the accurate exam, with the cut-off score varying according to each family. The final results were then placed in a new variable called “Test result”.

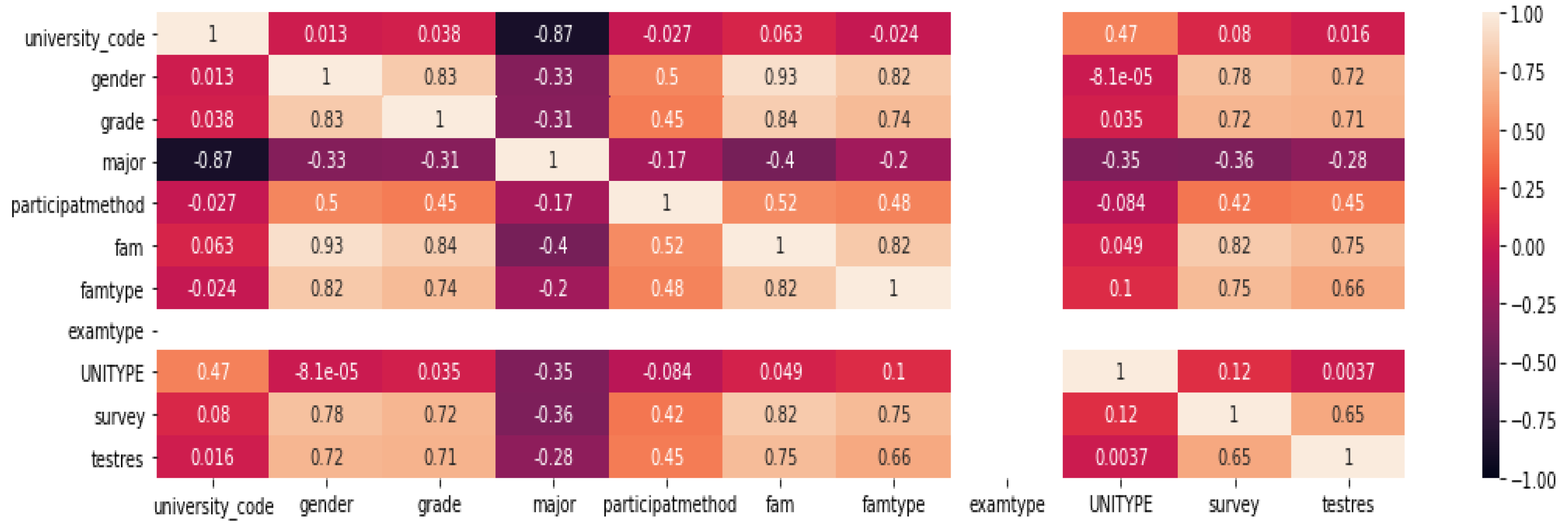

The process of studying and analyzing the data involves converting categorical variables, which are represented as ordinal integers, into numerical ones. This is necessary in order to use various machine learning algorithms and models. The correlation matrix was used to determine the relationship between different variables in the dataset, as shown in Figure 1.

There are three types of correlations: positive correlation, negative correlation, and no correlation. Variables that have a correlation coefficient above 0.5 are considered strongly correlated. To handle non-normality in the data, normalization techniques such as Min-Max scaling are used to shift and rescale the values so that they fall between 0 and 1. The heat map after normalization shows a generally positive correlation between parameters such as gender, grade, family, family type, survey, and participation method, as shown in Figure 2.
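As an illustration of the two operations mentioned above, the following sketch implements Min-Max scaling and the Pearson correlation coefficient in plain Python. It assumes that the correlation matrix uses the Pearson coefficient, which the text does not state explicitly; the function names are illustrative.

```python
from math import sqrt


def min_max_scale(values):
    """Rescale a list of numbers linearly so that they fall between 0 and 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # no variation: treated here as no correlation
    return cov / sqrt(var_x * var_y)
```

Because Min-Max scaling is a positive linear transformation of each variable, the Pearson coefficient between two variables is the same before and after scaling; the scaling mainly matters for distance-based and gradient-based learners.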

Further verification was performed by testing different scalers and correlation scores, as shown in Figure 3.

4.3. Machine Learning Algorithms Used

The Decision Trees (DT) algorithm was used to build a model that can predict student performance based on the extracted features from the competency exam dataset. The algorithm builds a tree-like model of decisions and their possible consequences, where each internal node represents a test on an attribute and each branch represents the outcome of the test, leading to a new internal node or a leaf node. The Support Vector Machines (SVM) algorithm was used to classify students’ performance as good or poor based on their exam results. SVM is a supervised machine learning algorithm that uses a hyperplane to separate data points into different classes. The Multi-layer Perceptron (MLP) algorithm was used to build a neural network that can predict student performance based on the extracted features. The MLP consists of multiple layers of nodes, where each node in one layer is connected to all nodes in the previous and next layers. The K-Nearest Neighbors (KNN) algorithm was used to classify students’ performance based on the results of their closest neighbors. KNN is a non-parametric algorithm that looks for the k closest training examples in the feature space and uses the majority class among these neighbors as the prediction. Finally, the Logistic Regression (LR) algorithm was used to build a model that can predict the probability of a student’s performance being good or poor based on the extracted features. LR is a parametric algorithm that models the relationship between the dependent variable and one or more independent variables by estimating probabilities using a logistic function. Hyperparameter values for all the machine learning models used in our study are shown in Table 5.
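To make two of these descriptions concrete, the sketch below shows the KNN majority vote and the logistic function used by LR in plain Python. It is an illustration under assumptions, not the study's implementation: the Euclidean distance, the default `k=3`, and the function names are chosen here for the example and are not taken from Table 5.

```python
from collections import Counter
from math import dist, exp


def knn_predict(train_X, train_y, query, k=3):
    """Predict the majority class among the k training points closest to `query`."""
    # Sort training examples by Euclidean distance to the query point.
    neighbors = sorted(zip(train_X, train_y), key=lambda pair: dist(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


def logistic(z):
    """Logistic (sigmoid) function mapping a real-valued score to a probability."""
    if z >= 0:
        return 1.0 / (1.0 + exp(-z))
    # Equivalent form that avoids overflow for large negative z.
    e = exp(z)
    return e / (1.0 + e)
```

In logistic regression, `z` would be a weighted sum of the student's features plus an intercept, and the output is read as the probability of the positive class.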

4.4. CE-Model Construction

The methodology used in this research, shown in Figure 4, is a three-phase process that aims to predict students’ performance based on their competence. The first phase is data preparation, which involves cleaning and pre-processing the dataset. This step involves removing irrelevant features, handling missing values, and improving the quality of the dataset.

The second phase is data segmentation, which, in the context of modeling student performance using a competency exam dataset, involves dividing the dataset into smaller, more manageable subsets for analysis and modeling. The purpose of segmenting the data is to reduce its complexity and make it easier to analyze and interpret. Data segmentation also helps to identify patterns and relationships that are not easily noticeable in the larger dataset. When segmenting the competency exam dataset, relevant factors such as student demographic information, prior academic performance, and specific exam scores are used to create subgroups. These subgroups can then be analyzed to identify any correlations between the factors and student performance. The end goal is to use this information to develop accurate machine learning models for predicting student performance based on competence, rather than just grades.

The final phase is the experimental evaluation, where a set of machine learning algorithms is run on the prepared dataset. Each algorithm produces a prediction model, which is then evaluated and compared using various metrics to choose the most robust model.

The CE dataset was subjected to a series of experiments based on data segmentation. The data were divided into segments based on the type and value of the features. In the first level, all the data were selected. In the second level, the data were segmented based on the method of participation or exam type. In the third level, the student results were analyzed and predicted using data segmented based on exam type and participation method. In the fourth level, data were segmented based on main family, participation method, and exam type.

Figure 5 illustrates the process of data segmentation.
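The four segmentation levels described above can be sketched as a group-by over the chosen features. The field names (`participation_method`, `exam_type`, `family`) and the dictionary-based record layout are assumptions made for this example; the actual column names of the CE dataset are not given in the text.

```python
from collections import defaultdict

# Keys used at each segmentation level (field names are hypothetical).
# Level 2 uses either the participation method or the exam type on its own.
SEGMENTATION_LEVELS = {
    1: [],                                             # all data, no split
    2: ["participation_method"],                       # alternatively ["exam_type"]
    3: ["exam_type", "participation_method"],
    4: ["family", "participation_method", "exam_type"],
}


def segment(records, keys):
    """Group records by the values of `keys`; with no keys, return one segment."""
    groups = defaultdict(list)
    for record in records:
        groups[tuple(record[k] for k in keys)].append(record)
    return dict(groups)


# Tiny illustrative sample (values are made up for the example).
sample = [
    {"participation_method": 0, "exam_type": 0, "family": 4},
    {"participation_method": 0, "exam_type": 1, "family": 4},
    {"participation_method": 1, "exam_type": 0, "family": 2},
    {"participation_method": 0, "exam_type": 0, "family": 4},
]
segments_by_level = {
    level: segment(sample, keys) for level, keys in SEGMENTATION_LEVELS.items()
}
```

Each resulting segment would then be used as its own sub-dataset for training and evaluating a sub-model.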

After dividing the dataset into a training set and a test set, the next step is to apply various machine learning algorithms and compare their performance in terms of accuracy. The algorithms used in this study included Decision Trees (DT), Support Vector Machines (SVM), Multi-layer Perceptron (MLP), K-Nearest Neighbors (KNN), and Logistic Regression (LR).

The final step involved evaluating the performance of each algorithm and selecting the best performing one. The accuracy of the selected algorithm was then tested on the test set, as the goal of this study was to determine the most effective machine learning algorithm for predicting student performance based on the CE dataset. Through the process of feature selection, data segmentation, and applying various machine learning algorithms, the best performing algorithm was selected and used to make predictions about student performance.

By segmenting the dataset based on different feature types and values, we aimed to perform a deeper analysis of the data. Models built on sub-datasets can capture specific trends or patterns that the base model built on the full dataset captures less well. The sub-models built cover the exam type, participation method, and family of program, and their performance is compared to the base model and evaluated using metrics such as accuracy, precision, recall, and F1-score. Ultimately, the goal was to determine which model performs best in predicting student performance.
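The evaluation metrics named above have standard definitions, which the following sketch computes from binary labels. It assumes that class 1 is the positive class and that undefined ratios (for example, precision when nothing is predicted positive) are reported as 0.0; both are choices made for this illustration.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Computing the same dictionary for the base model and for each sub-model gives a like-for-like basis for the comparison described above.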

5. Experiments and Results

The experiments were based on the CE dataset, which was segmented into different sub-datasets based on feature type and value. Four different classification techniques were used to build prediction models for student performance. The assessment of the prediction models involved segmenting the data based on specific features, applying different classification techniques, and evaluating their performance using metrics such as accuracy and cross-validation. The models were then compared to a base model created from the full dataset, and the best-performing sub-model is discussed for each sub-dataset.
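Cross-validated accuracy, as used in the evaluation above, can be sketched with a simple k-fold procedure. The number of folds is not stated in the text, so `k=5` is an assumption, as are the function names and the `fit_predict` callback interface. The folds here are contiguous and unshuffled for clarity; in practice the data would normally be shuffled or stratified first.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, test_indices) for k contiguous, near-equal folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def cross_val_accuracy(fit_predict, X, y, k=5):
    """Average test accuracy over k folds.

    `fit_predict(train_X, train_y, test_X)` must return predicted labels for test_X.
    """
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k):
        preds = fit_predict(
            [X[i] for i in train_idx],
            [y[i] for i in train_idx],
            [X[i] for i in test_idx],
        )
        correct = sum(1 for p, i in zip(preds, test_idx) if p == y[i])
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)
```

Any of the classifiers discussed in this paper could be wrapped in a `fit_predict` function and passed to `cross_val_accuracy` to obtain a comparable score for each sub-dataset.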

Experiment-1 All-Dataset Case Study

In the all-dataset case study, the data were analyzed using four machine learning techniques: Logistic Regression, KNN Classifier, Multi-Layer Perceptron (MLP), and Support Vector Machines (SVM). Feature selection was performed using Decision Trees (DT), Random Forest (RF), and Extra Trees (ET). The most important features were found to be the student’s grade and the university, indicating that the student’s grade and the university’s quality of instruction play a role in student performance. The cross-validation accuracy results are presented in Table 6, which shows that MLP achieved the highest accuracy (0.651784) among the four techniques. The other techniques also showed decent results, but MLP outperformed them in terms of accuracy, F1 score, AUC, recall, and precision.

Experiment-2 Macro Exam Case Study

This experiment analyzed the performance of ML methods applied to a sub-dataset based on student results in the macro exam. The dataset contains 36,977 records, and the most important features were found to be grade, survey, and university, in that order. The logistic regression, KNN classifier, and support vector machines performed well, but with lower accuracy than the Multi-Layer Perceptron (MLP). The importance of the survey can be explained by the fact that students who are satisfied with their universities are more serious about taking the exams, while non-professional students generally tend to be dissatisfied with their universities. The classifier accuracy of the training set for all models is shown in Table 7, in which MLP achieved the best performance with an accuracy of 0.624482.

Experiment-3 Micro Exam Case Study

This case study presents the results of a machine learning analysis of student results in the micro exam. The most important features found to influence the students’ results are participation method, grade, and university. The KNN classifier performed best, with an accuracy of 0.753663, which can be attributed to the strong connection between the features used and the student’s specialization. The prominence of the participation method suggests that the way students take the exam (online or in person) has an impact on their results in their area of specialization. This could be due to the relationship between the student’s specialization and the participation method, as well as the possibility of variations in the exam questions.

This result suggests that the KNN classifier is the best model for predicting the results of micro exams, with an accuracy of 0.7536. The performance of all machine learning methods used is relatively high, and this can be attributed to the connection of the features used in the dataset, which are more specialized in nature. The fact that the participation method, grade, and university are the most important features can be explained by the impact of the student’s specialization on their performance and the possibility of changing questions at this level.

Table 8 shows the accuracy metrics of the different machine learning models applied to the micro-level dataset of 8415 records. Accuracy, F1, AUC, recall, and precision were used to evaluate the performance of each model. The KNN classifier achieved the best accuracy of 0.7536, followed by the Multi-layer Perceptron with an accuracy of 0.7314. Logistic Regression and Support Vector Machines had lower accuracy scores of 0.62297 and 0.621782, respectively. The F1 score is the harmonic mean of precision and recall, and the KNN classifier also had the highest F1 score of 0.672287. The AUC metric represents the area under the ROC (Receiver Operating Characteristic) curve, and again the KNN classifier had the highest AUC score of 0.736898. Recall measures the share of actual positive cases that a model identifies, and precision measures the share of predicted positive cases that are correct; the KNN classifier performed best on both.

In the micro exam case study, the student’s participation method, grade, and university are the most important features in predicting the student’s exam result. The KNN classifier has the best performance among the different machine learning methods applied with an accuracy of 0.753663. The high accuracy of the different models used could be attributed to the connection of the features used at a more specialized level.

Experiment-4 Participation Method, Exam Type and Family Case Study

This case study analyzes the relationship between student performance and the combination of participation method, exam type, and family. The study first categorized students by three factors: participation method, exam type, and family (see Table 9). This table presents the number of students in each experiment for each combination of participation method, exam type, and family, with a total of 45,392 students included in the analysis. The participation method was divided into two categories, online and in-campus, while the exam type was divided into two categories, macro and micro levels. The family dataset was divided into seven categories, representing half of the families present in the exam, with each family categorized as either scientific or humanitarian. The table gives an overview of the sample size for each experiment, which is useful for understanding the scope and generalizability of the findings of the study.

The Decision Tree Classifier algorithm was applied to evaluate the accuracy of the prediction of student performance based on these three factors. Experiment 9, which involves a traditional type of exam with a traditional participation method and family number 4, had the highest prediction accuracy of 0.95. Further analysis of experiment 9 using various machine learning algorithms confirmed this result, with accuracy ranging from 0.942 to 0.951, as shown in Table 10. These results suggest that the traditional type of exam with a traditional participation method and family number 4 could be considered important factors in predicting student performance.

The results showed that using a student subset-generated model in the fifth level of segmentation led to improved performance compared to other levels. Models built on the combination of Participation Method, Exam Type, and Family Dataset were found to have superior performance compared to other models. The campus Participation Method experiments showed similar results, indicating that this approach is effective in predicting academic performance. With respect to the research questions on the use of machine learning in evaluating student performance in Jordanian higher education institutions, the results indicate that ML models can be effectively used to detect student performance and have the potential to be an accurate and feasible strategy for evaluating it. Additionally, the experiments suggested that the MLP model provides accurate results. However, it is important to note that no single ML model consistently outperforms the others, and selecting the appropriate model depends on the specific context and objectives of the evaluation. Finally, the study found that the variety of student data does impact the accuracy of machine learning algorithms in forecasting results. Thus, researchers and decision-makers should carefully consider the selection and preparation of datasets to achieve the most accurate results.

Discussion and Remarks

We assessed the accuracy of the created models and sub-models in identifying students’ performance. Across the various datasets, no single ML model stood out as having higher performance. For the majority of experiments, MLP performed best, correctly classifying over 95% of students for the models created from the (Participation method, Exam type, and Family) dataset. The experiments carried out on the combined datasets showed a prediction accuracy of 70%. The results of all-dataset experiments revealed that the second level model in the campus participation method dataset had an accuracy of 83%, while the accuracy rose to 94% for the experiment using the (Participation method, Exam type, and Family) dataset. Furthermore, the experiments showed that the grade of the students in the university, the university of graduation, and the questionnaire measuring students’ satisfaction with the university all have a significant impact on the performance of the models. These findings indicate the importance of considering multiple factors when predicting students’ performance. It was also observed that students from the educational sciences family yielded the best prediction results due to their familiarity with the nature of questions asked in competency exams, which focus more on measuring skills rather than direct achievement.

The sub-models combining exam type (macro and micro) with a specific participation method demonstrated better prediction results than the model that only considers exam type, although not all sub-models performed better. Further investigation using datasets that focus on specific features, such as exam type and participation method, is therefore useful. Subset models generated from the combination of (Participation method, Exam type, and Family dataset) produced high accuracy, with MLP having the best performance. The results also indicated that factors such as students’ grades, university of graduation, and family background play a significant role in the accuracy of the models. The educational sciences family showed the highest prediction results due to their familiarity with the type of questions in the competency exams. Overall, the findings suggest that it is useful to consider different datasets for certain features when developing prediction models.

Segmenting the competency dataset into sub-datasets based on majors, type of test (macro or micro), and study type (online or face-to-face) can improve the performance of ML models. By dividing the data into subsets, the ML models can be trained more efficiently, focusing on the specific features of each subset. This approach can also help in identifying any potential bias or variations in the data that affect the model’s performance. Additionally, using domain-specific feature engineering techniques can help in improving the accuracy of the ML models. The feature engineering process involves identifying the most relevant features that impact the target variable and transforming the data to highlight these features. Overall, the approach of data segmentation and domain-specific feature engineering can enhance the accuracy of the ML models, leading to better insights and decision-making.

In comparison to previous studies in the context of competency exam prediction using machine learning, this study has several pros and cons. One of the strengths of this study is the large dataset that was used, consisting of over 45,000 students from seven different academic families. This dataset was also collected from Jordanian universities over two academic semesters, which increases the generalizability of the study findings. Additionally, the study used a comprehensive set of demographic and academic variables as predictors, which provided a more holistic view of the factors influencing students’ performance on the CE.

Several research studies have utilized machine learning techniques to assess competencies and skills across various domains. The studies include a range of applications, such as assessing physician competencies [49], analyzing graduate student employment outcomes [50], assisting volunteers with cause-effect reasoning [51], promoting software engineering competencies [52], evaluating reading level for foreign students, assessing science competency in student-composed text [53], and grading computer programs automatically based on programming skills and program complexity [54]. Table 11 summarizes some key differences between our work and others. These studies highlight the potential of machine learning techniques in assessing competencies and skills, providing insights into developing more effective educational and training programs. However, while the literature review covers a wide range of applications, the focus of the current work is on competency exam data and the effect of data segmentation on accuracy. The current work also develops a framework for forecasting and assessing student performance and answers specific questions related to the suitability of ML algorithms and the appropriate model for accuracy. Overall, both the current work and the literature review demonstrate the potential of machine learning for analyzing and predicting student performance, but the current work provides more specific insights into the use of machine learning in analyzing competency exam data.

On the other hand, one limitation of the study is that it evaluated only a small set of classical algorithms (DT, SVM, MLP, KNN, and LR) to predict CE outcomes. Other studies [55,56] have utilized different machine learning algorithms, which may have yielded different results. Additionally, the study did not consider non-cognitive variables such as motivation or study habits, which have been shown to be important predictors of academic performance in other studies. Therefore, it is possible that the addition of these variables could have improved the predictive accuracy of the models.

In summary, the findings of this study contribute to the growing body of research on using machine learning techniques to predict academic performance. The study identified important predictors of CE performance and demonstrated the effectiveness of machine learning in predicting student outcomes. However, further research is needed to explore the generalizability of the findings to other contexts and to compare the effectiveness of different machine learning algorithms in predicting academic performance.

Practical implications of this study include the development of a framework that decision-makers and universities can use to evaluate academic programs using ML. This framework can help identify programs and learning outcomes that need to be established by analyzing competency exam data. It can also reduce exam costs by substituting machine learning algorithms for the actual execution of the exam.