Development of an Intelligent Personal Assistant System Based on IoT for People with Disabilities

Abstract

1. Introduction

- An IRON system is proposed that helps elderly people and people with impairments control their home devices by voice.

- The dataset consists of 3000 normal, negative, and unstructured commands to control 100 devices.

- Natural language processing is applied to the text returned by the Google speech API, splitting it into tokens for subsequent classification.

- A machine learning algorithm is included in IRON for classifying the commands as positive or negative.

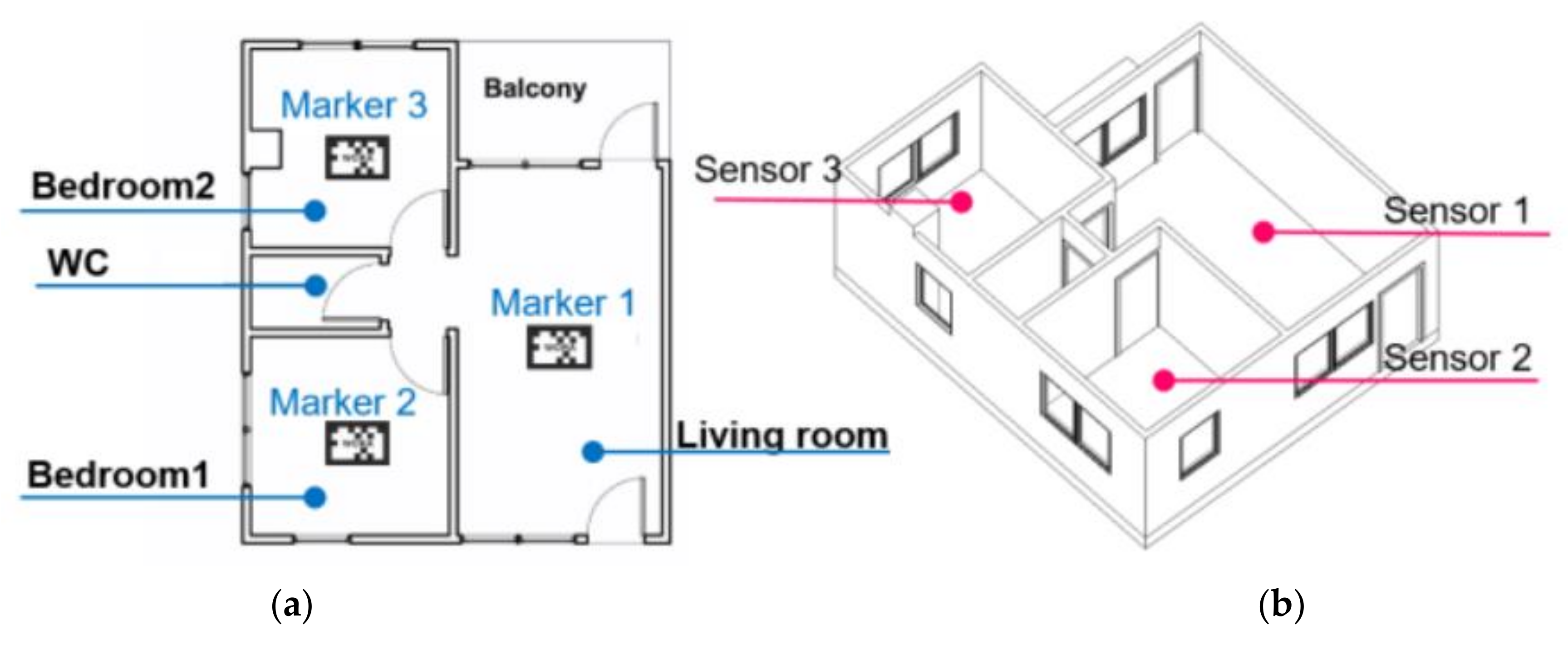

- Multiple microphones are distributed around the home so that elderly and disabled users can reach IRON from wherever they are.

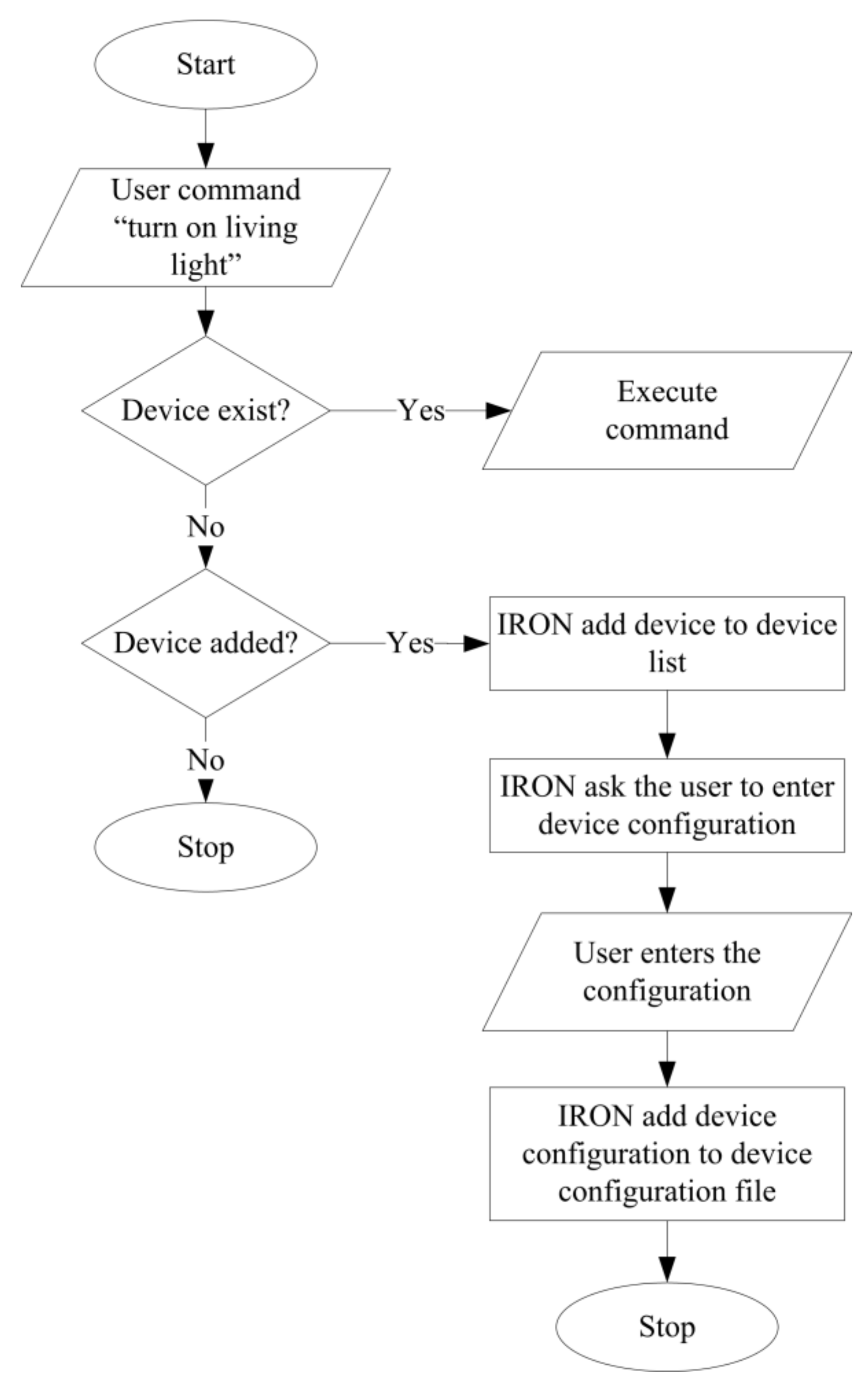

- New devices can be added and reconfigured by the impaired person’s voice without re-coding IRON.

- The IRON system is designed to work online or offline and to turn a device on or off or adjust a device range, such as fan speed.

- The IRON system is secured by requesting a password before the control process starts.
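The highlights above mention splitting the recognized text into tokens and classifying each command as positive or negative. A minimal sketch of that idea follows, using a bag-of-words logistic regression trained with plain gradient descent. The toy sentences, the function names (`tokenize`, `train`, `predict`), and the hyperparameters are all assumptions made for illustration; they are not the authors' dataset or implementation.

```python
import math
import re

# Toy training data (hypothetical, NOT taken from the paper's dataset).
# Label 1 = positive command (execute), label 0 = negative command (do not execute).
TRAIN = [
    ("turn on the light", 1),
    ("switch on the fan", 1),
    ("open the door", 1),
    ("turn off the tv", 1),
    ("do not turn on the light", 0),
    ("don't switch on the fan", 0),
    ("never open the door", 0),
    ("do not turn off the tv", 0),
]


def tokenize(text):
    """Lowercase the text and split it into word tokens (apostrophes are kept)."""
    return re.findall(r"[a-z']+", text.lower())


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(samples, lr=0.5, epochs=5000):
    """Fit bag-of-words logistic regression with full-batch gradient descent."""
    weights = {}  # token -> weight; tokens not seen yet count as 0.0
    bias = 0.0
    data = [(tokenize(text), label) for text, label in samples]
    n = len(data)
    for _ in range(epochs):
        grad_w = {}
        grad_b = 0.0
        for tokens, label in data:
            score = bias + sum(weights.get(t, 0.0) for t in tokens)
            error = sigmoid(score) - label  # derivative of the log-loss w.r.t. the score
            grad_b += error
            for t in tokens:
                grad_w[t] = grad_w.get(t, 0.0) + error
        # Step along the mean gradient over the whole batch.
        bias -= lr * grad_b / n
        for t, g in grad_w.items():
            weights[t] = weights.get(t, 0.0) - lr * g / n
    return weights, bias


def predict(text, weights, bias):
    """Return 1 for a positive command and 0 for a negative one."""
    score = bias + sum(weights.get(t, 0.0) for t in tokenize(text))
    return 1 if sigmoid(score) >= 0.5 else 0


WEIGHTS, BIAS = train(TRAIN)
```

On this toy data the negation tokens appear only in negative commands, so the model learns negative weights for them and separates the two classes. A real system would need far more varied training commands than these eight.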

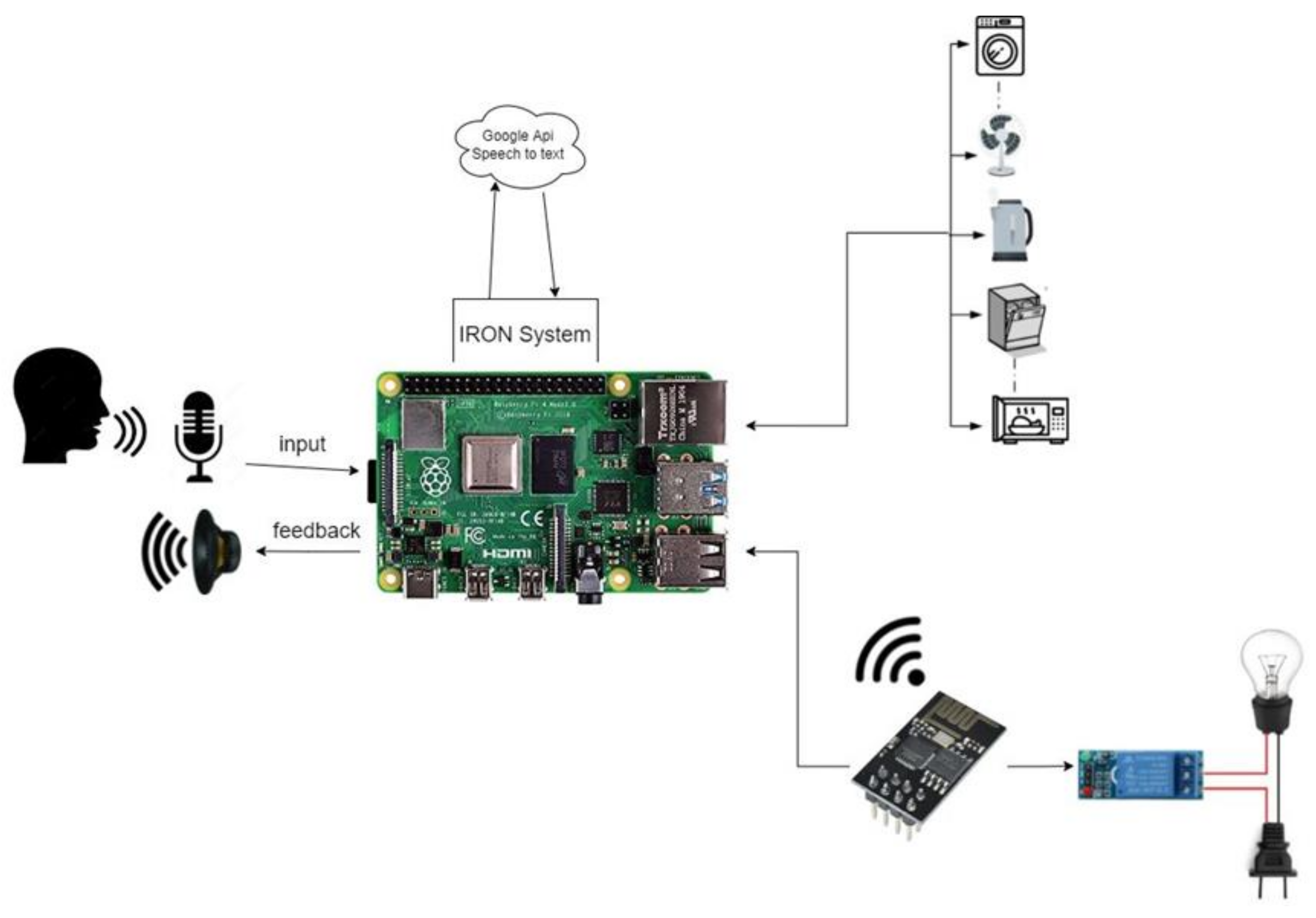

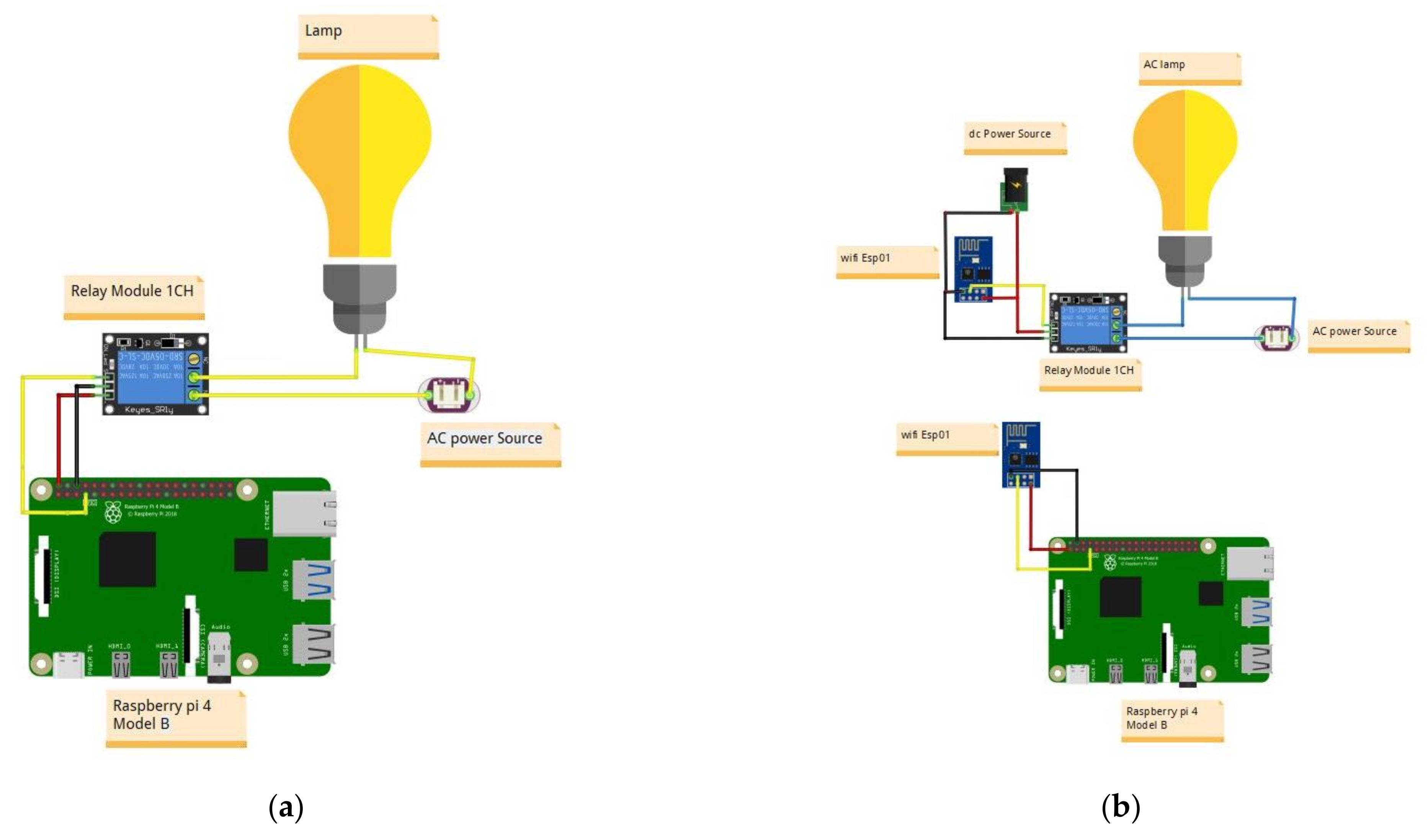

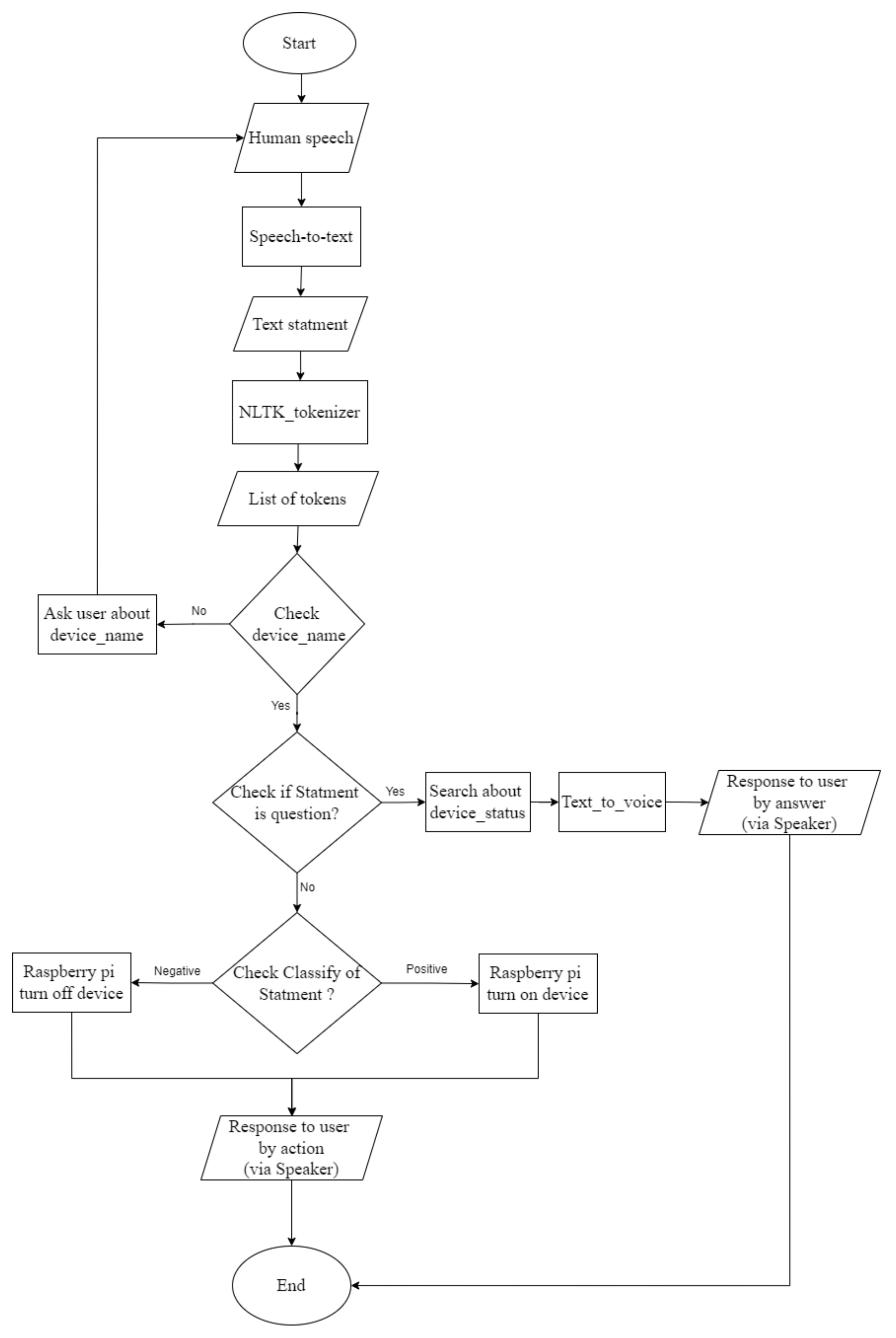

2. System Architecture and Design

Algorithm 1: The IRON system procedure

- Input: user voice command
- Output: device control
- Initialization: IRON introduces itself and tells the user how to interact with it.
- While true:
    - Wait in sleep mode (microphone ready to receive voice).
    - If the user says “IRON” (the user knows this from the instructions):
        - IRON says a hello message and asks the user what they need.
        - Receive Order ()
        - Speech to text ()
        - Analysis Text ()
        - Execute the command ()
        - return feedback ()
    - Else:
        - Check whether the user needs to end IRON.
    - End
- End
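The control flow of Algorithm 1 can be sketched as a small loop. To keep the sketch runnable without a microphone or a speech service, it consumes phrases that are already transcribed. The function name `run_iron`, the `handle_command` callback, the spoken messages, and the stop word "goodbye" are assumptions made for illustration and do not come from the paper.

```python
def run_iron(heard_phrases, handle_command, wake_word="iron", stop_word="goodbye"):
    """Simulate Algorithm 1 over a sequence of already-transcribed phrases.

    heard_phrases: iterable of strings standing in for microphone input.
    handle_command: callable that analyses and executes one command string
                    and returns a feedback string.
    Returns the list of messages IRON would speak.
    """
    # Initialization: IRON introduces itself and gives instructions.
    spoken = ["Hello, I am IRON. Say 'IRON' to wake me up."]
    phrases = iter(heard_phrases)
    for phrase in phrases:  # "while true": wait in sleep mode for the next phrase
        text = phrase.strip().lower()
        if text == wake_word:
            spoken.append("Hello, what do you need?")
            order = next(phrases, None)  # receive the order (already text here)
            if order is None:
                break  # input ended before an order arrived
            spoken.append(handle_command(order))  # analyse, execute, return feedback
        elif text == stop_word:  # the user asked to end IRON
            spoken.append("Goodbye.")
            break
        # Anything else is ignored and IRON stays in sleep mode.
    return spoken


log = run_iron(
    ["iron", "turn on the light", "hello", "goodbye"],
    lambda command: f"Done: {command}",
)
```

Passing the command handler in as a callback keeps the loop independent of how text analysis and device control are implemented.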

- Module 1: Speech to text

- Module 2: Text analysis and classification

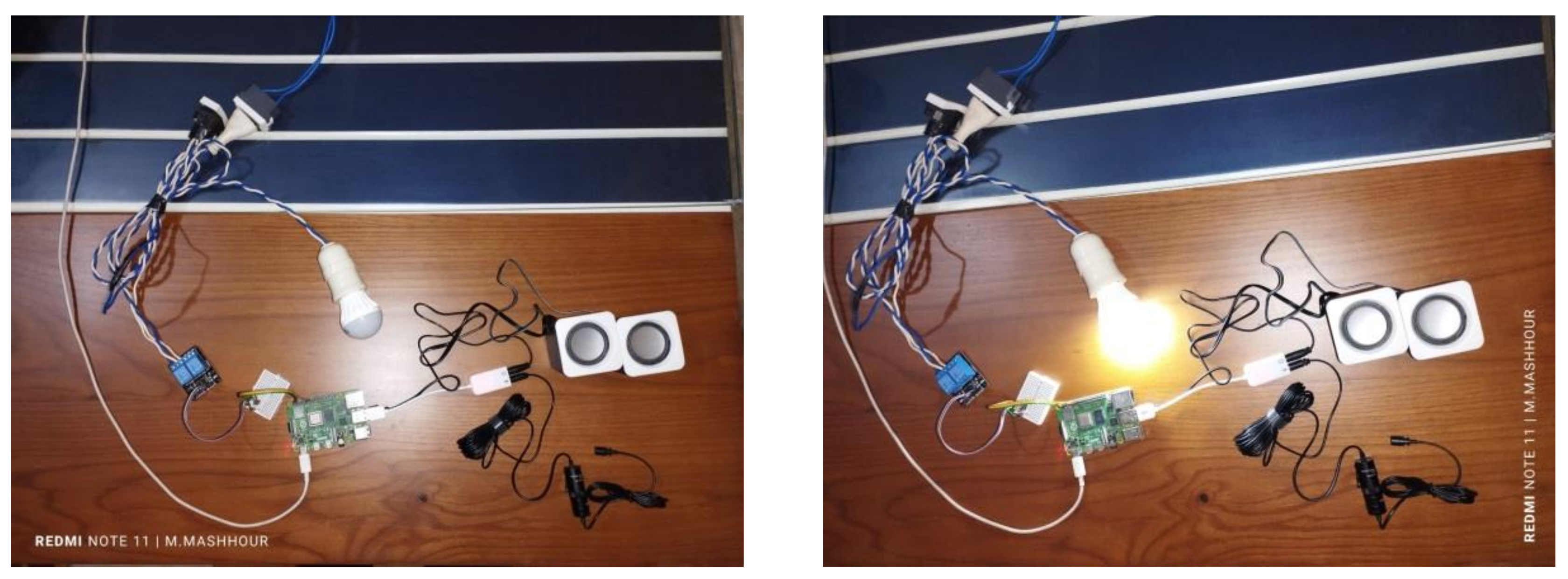

- Module 3: Command execution
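The hand-off between the modules listed above can be sketched as follows, assuming Module 1 has already produced a text string. The stop-word list, the in-memory `DEVICES` table, and the functions `analyse` and `execute` are hypothetical. The negation check is a simple rule-based stand-in for a trained classifier, and the regular-expression tokenizer is a stand-in for an NLP toolkit.

```python
import re

STOP_WORDS = {"the", "a", "an", "please", "to", "my", "in", "of"}  # illustrative subset
DEVICES = {"light": {"on": False}, "fan": {"on": False, "speed": 0}}  # hypothetical registry
NEGATIONS = {"not", "don't", "never"}  # rule-based stand-in for a trained classifier


def analyse(text):
    """Module 2 stand-in: tokenize, drop stop words, find device, action, and value."""
    tokens = [t for t in re.findall(r"[a-z0-9']+", text.lower()) if t not in STOP_WORDS]
    device = next((t for t in tokens if t in DEVICES), None)
    negative = any(t in NEGATIONS for t in tokens)
    value = next((int(t) for t in tokens if t.isdigit()), None)
    if value is not None:
        action = "set"
    elif "on" in tokens:
        action = "on"
    elif "off" in tokens:
        action = "off"
    else:
        action = None
    return {"device": device, "action": action, "value": value, "negative": negative}


def execute(parsed, devices=DEVICES):
    """Module 3 stand-in: apply the parsed command to the in-memory device table."""
    device, action = parsed["device"], parsed["action"]
    if device is None or action is None:
        return "Sorry, I did not understand."
    if parsed["negative"]:
        return f"OK, leaving the {device} unchanged."
    state = devices[device]
    if action == "set":
        if "speed" not in state:
            return f"The {device} has no adjustable range."
        state["speed"] = parsed["value"]
        state["on"] = parsed["value"] > 0
        return f"{device} speed set to {parsed['value']}."
    state["on"] = action == "on"
    return f"{device} turned {action}."
```

For example, `execute(analyse("set the fan speed to 3"))` updates the fan entry in `DEVICES`, while a negated command such as "don't turn off the light" leaves the device state untouched.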

3. Evaluation Results

3.1. Evaluation Metrics

- Accuracy

- Precision

- Recall
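For a binary classifier, the three metrics listed above are conventionally defined from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN): accuracy = (TP + TN) / (TP + TN + FP + FN), precision = TP / (TP + FP), and recall = TP / (TP + FN). The sketch below computes them from label lists. The function name `classification_metrics` and the example labels are illustrative and are not the paper's data.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for binary labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    # Report 0.0 when a denominator is empty instead of dividing by zero.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Illustrative labels: 1 = positive command, 0 = negative command.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
accuracy, precision, recall = classification_metrics(y_true, y_pred)
```

In this example there are three true positives, three true negatives, one false positive, and one false negative, so all three metrics equal 0.75.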

3.2. Dataset

3.3. Simulation Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IPA | intelligent personal assistant |
| GUI | graphical user interface |
| SHAS | smart home automation system |
| CFL | compact fluorescent lamp |
| GPIO | general purpose input output |
| VAD | voice activity detection |
| CANVAS | context awareness for voice assistants |
| HAS | home automation system |
| MFCC | mel-frequency cepstral coefficients |
| VQ | vector quantization |
| PCA | principal component analysis |
| GMM | Gaussian mixture model |
| NLTK | Natural Language Toolkit |
| SVM | support vector machine |


| NLP Toolkit | Programming Language | Tokenization | Stemmer |
|---|---|---|---|
| NLTK | Python | Yes | Yes |
| Apache OpenNLP | Java | Yes | Yes |
| Stanford CoreNLP | Java | Yes | Yes |
| Pattern | Python | Yes | No |
| GATE | Java | Yes | No |
| spaCy | Python/Cython | Yes | No |

| Categories | Detected Devices | Correctly Classified Commands |
|---|---|---|
| Normal commands | 90% | 93% |
| Unstructured commands | 90% | 80% |
| Negative commands | 90% | 83% |

| Algorithm | Dataset | Accuracy | Precision | Recall | Time |
|---|---|---|---|---|---|
| Naive Bayes | Smart Home Commands Dataset | 0.89 | 0.89 | 1.0 | - |
| Naive Bayes | The Proposed Dataset | 0.63 | 0.59 | 0.64 | 0.0119 |
| Logistic Regression | Smart Home Commands Dataset | 0.969 | 0.967 | 1.0 | - |
| Logistic Regression | The Proposed Dataset | 1.0 | 1.0 | 1.0 | 0.0112 |
| SVM | Smart Home Commands Dataset | 0.949 | 0.977 | 0.966 | - |
| SVM | The Proposed Dataset | 1.0 | 1.0 | 1.0 | 0.020 |

| IPA | Speech Recognition | Text Analysis | Device Detection | Negative and Unstructured Command Understanding |
|---|---|---|---|---|
| Apple Siri | Yes | Yes | No | Semi |
| Google Assistant | Yes | Yes | No | Semi |
| Amazon Alexa | Yes | Yes | Semi | Semi |
| Microsoft Cortana | Yes | Yes | No | Semi |
| IBM Watson | Yes | Yes | Yes | Semi |
| IRON system | Yes | Yes | Yes | Yes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ali, A.-e.A.; Mashhour, M.; Salama, A.S.; Shoitan, R.; Shaban, H. Development of an Intelligent Personal Assistant System Based on IoT for People with Disabilities. Sustainability 2023, 15, 5166. https://doi.org/10.3390/su15065166
