Citizen Science: Is It Good Science?

Abstract

1. Introduction

- RQ1: What are the characteristics (e.g., launch years, regions, disciplines, and data accessibility) of existing citizen science projects?

- RQ2: How many peer-reviewed articles are produced by citizen science projects, and does this differ depending upon duration of the project, discipline, study region, and data accessibility of citizen science projects?

- RQ3: Once launched, how many years does a project take to publish its first academic article, and is this affected by the launch year, discipline, study region, and data accessibility?

2. Methods

2.1. Citizen Science Projects

2.2. Topic Searches

3. Results

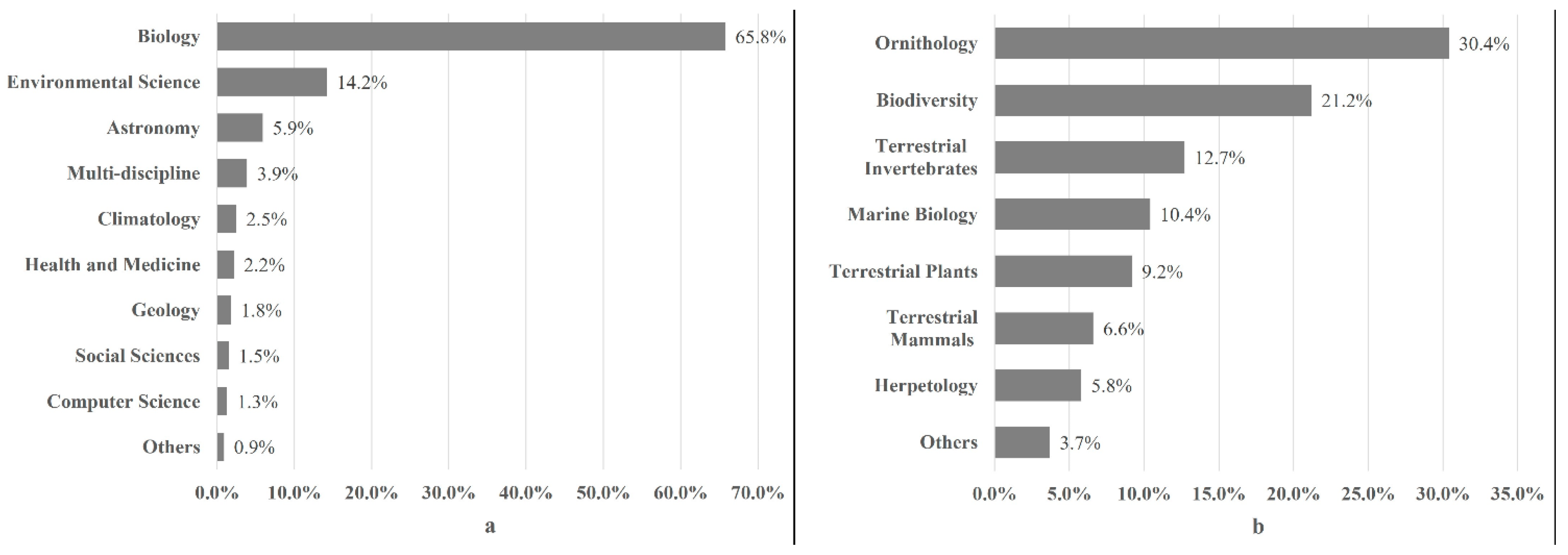

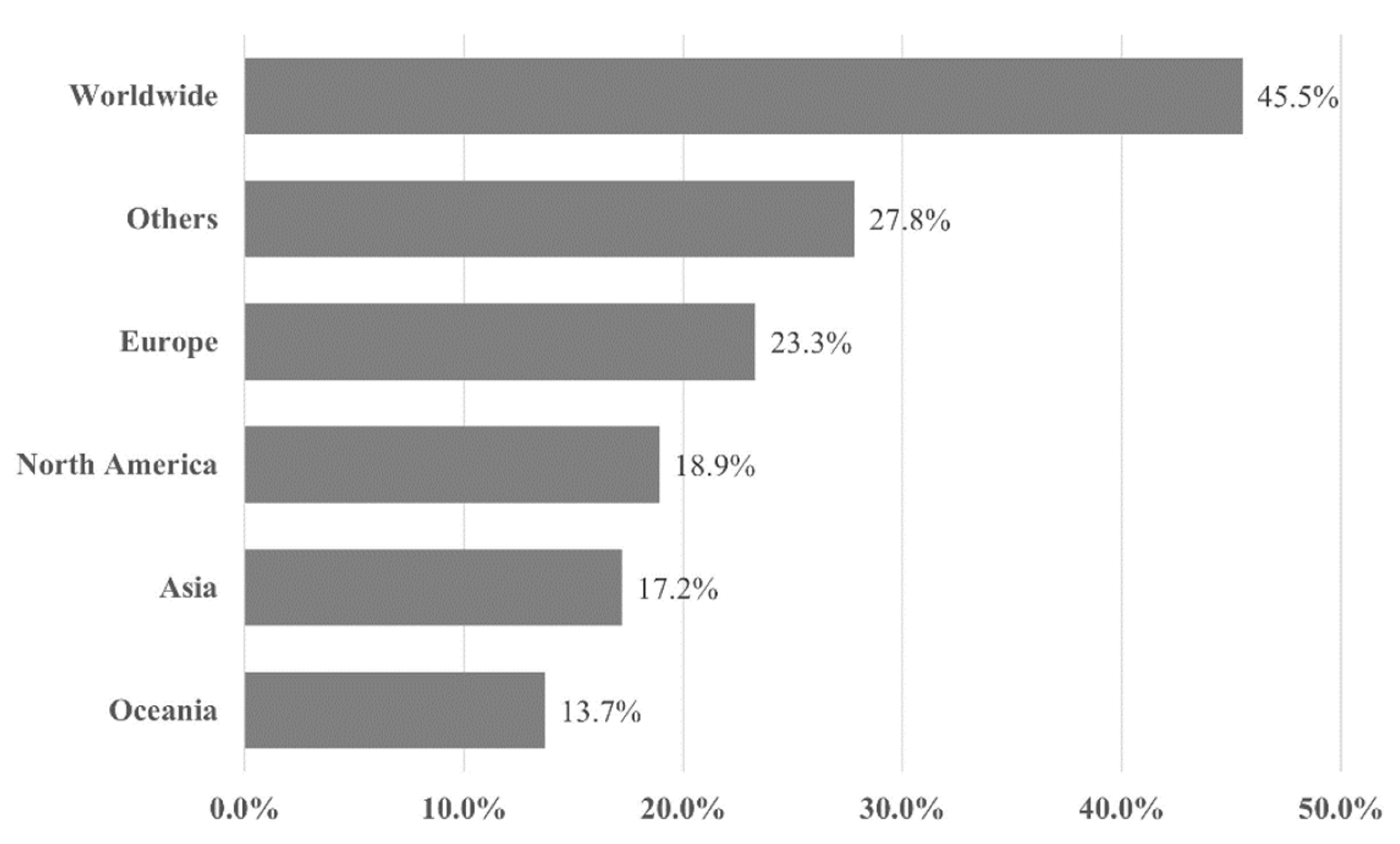

3.1. Characteristics of Sampled Projects

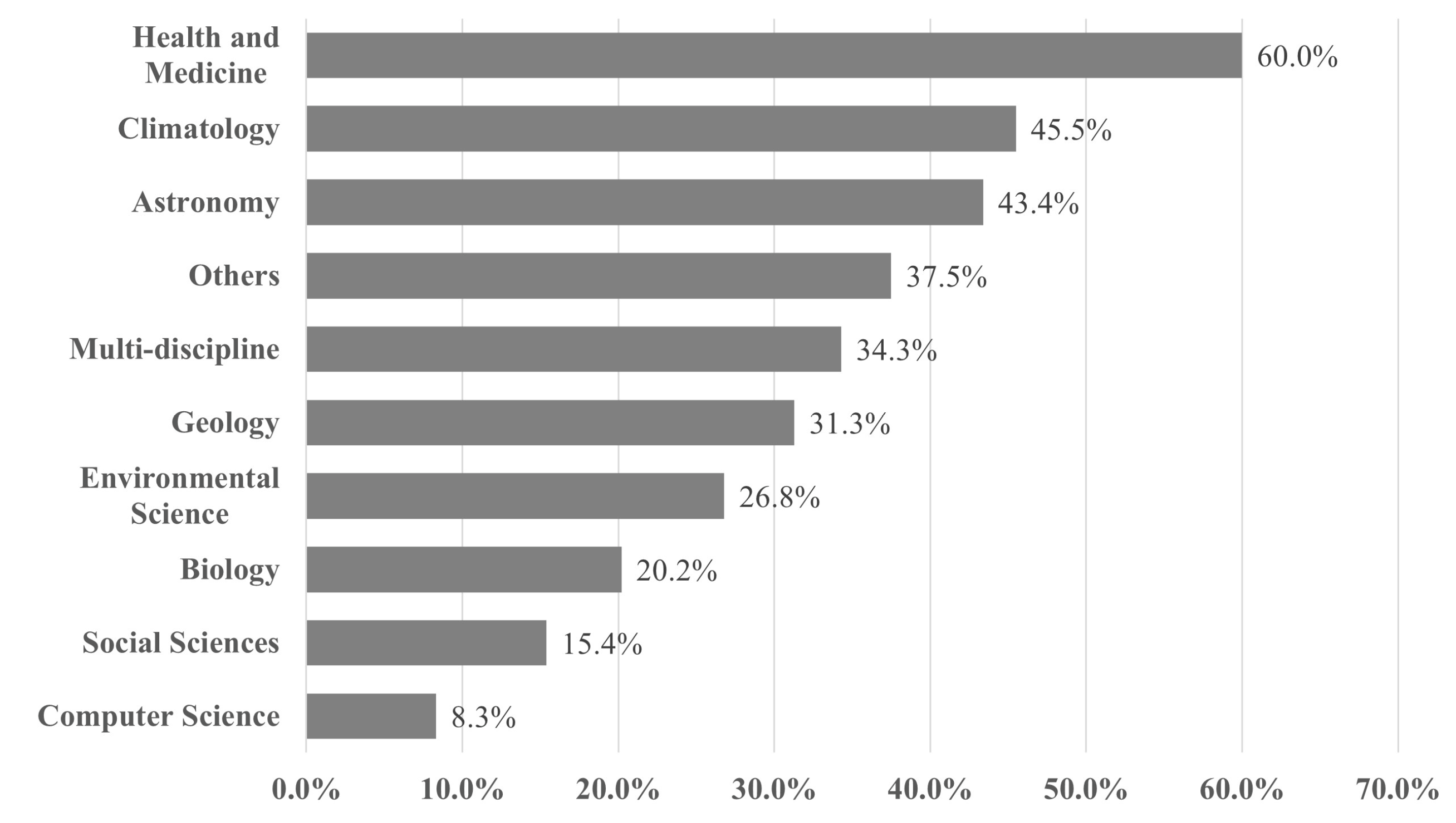

3.2. The Publication of Peer-Reviewed Articles

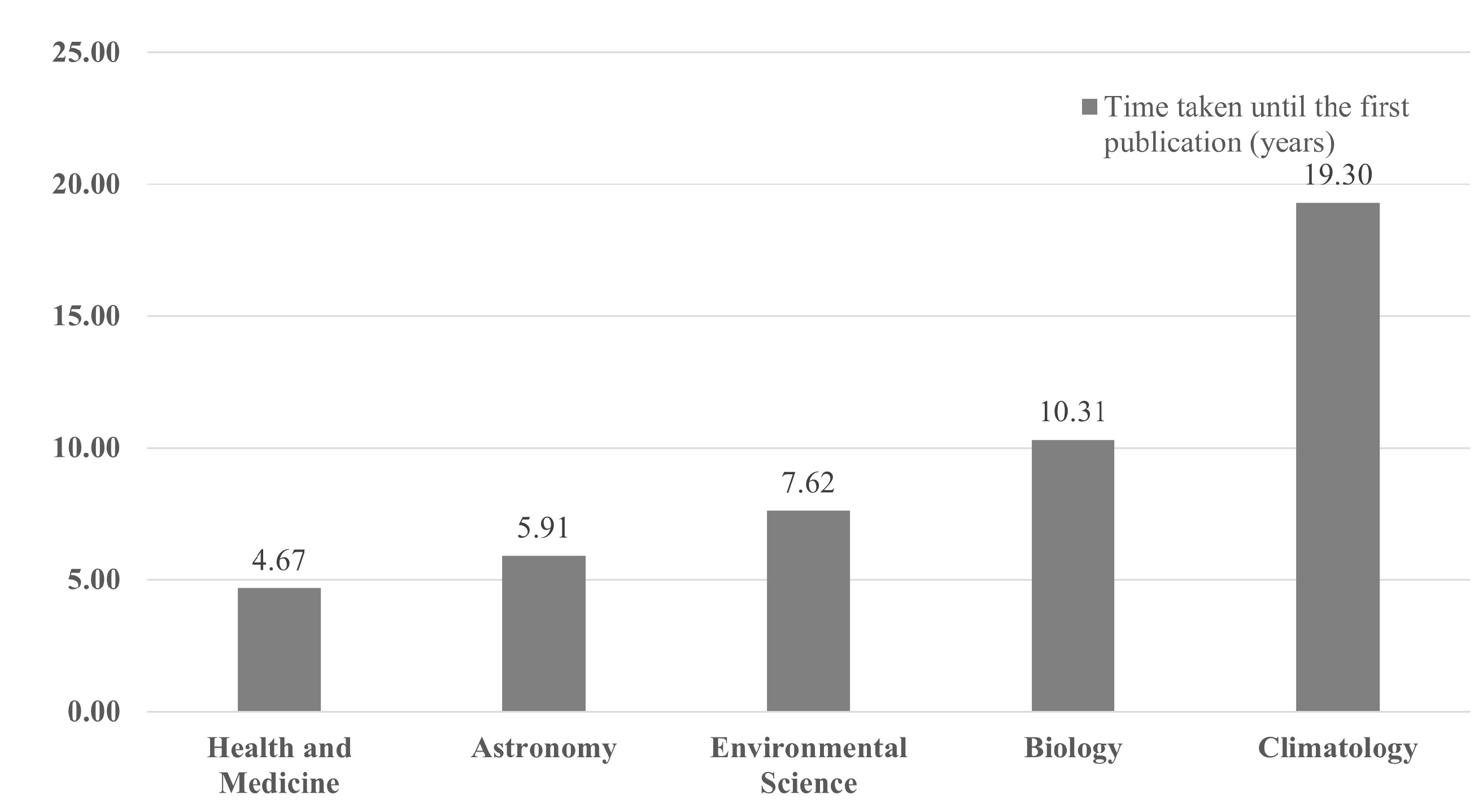

3.3. How Long Did It Take to Publish?

4. Discussion

5. Conclusions

- Citizen Science Projects: a minority of current citizen science projects that conduct actual science and produce peer-reviewed scientific outputs, in which the participants are often little more than anonymous sampling bots.

- Citizen Engagement Projects: the large majority of current citizen science projects that are really aimed at citizen engagement with science, in which conducting science is a secondary function and scientific outputs are unlikely.

5.1. The Outlook for Citizen Science Projects

5.2. The Outlook for Citizen Engagement Projects

5.3. Evaluation and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Names | URLs | Regions | Fields |
|---|---|---|---|
| Australian Citizen Science Association | citizenscience.org.au | Australia | All |
| British Trust for Ornithology | www.bto.org | UK | Biology |
| Citizen Science Portal | www.ic.gc.ca | Canada | All |
| CitizenScience.gov | www.citizenscience.gov | United States | All |
| iNaturalist Citizen Science | www.inaturalist.org/projects | Worldwide | Biology |
| Science Learning Hub | www.sciencelearn.org.nz | New Zealand | All |
| SciStarter | scistarter.org | Worldwide | All |
| Zooniverse | www.zooniverse.org | Worldwide | All |

| Projects | Region | Launch Year | Scientific Disciplines | Papers |
|---|---|---|---|---|
| North American Breeding Bird Survey | North America | 1966 | Biology (Ornithology) | 265 |
| eBird | Worldwide | 2002 | Biology (Ornithology) | 222 |
| Galaxy Zoo | Worldwide | 2007 | Astronomy | 190 |
| DISCOVER-AQ | North America | 2011 | Environmental Science | 138 |
| iNaturalist | Worldwide | 2008 | Biology (Biodiversity) | 109 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Davis, L.S.; Zhu, L.; Finkler, W. Citizen Science: Is It Good Science? Sustainability 2023, 15, 4577. https://doi.org/10.3390/su15054577