Performance Improvement of Machine Learning Model Using Autoencoder to Predict Demolition Waste Generation Rate

Abstract

1. Introduction

- (1)

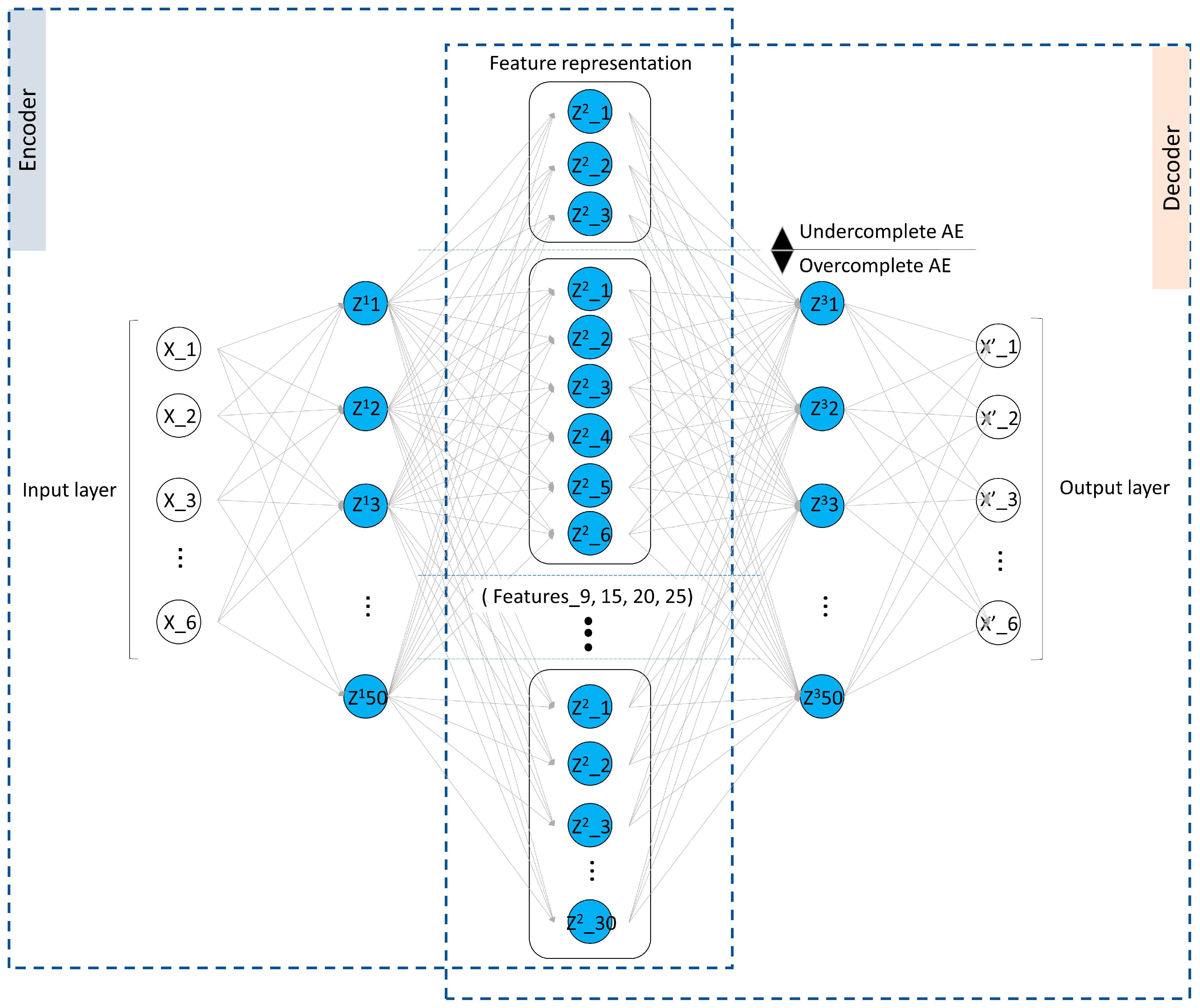

- Two distinct data-preprocessing methods were applied to construct the dataset. The first data-preprocessing method constructed a dataset by eliminating outliers, standardizing, and label-encoding categorical variables. The second method developed a dataset by reconstructing the numerical data using AE technology on the dataset obtained from the first method. After the application of the AE, various feature groups (i.e., number of features: 3, 6, 9, 15, 25, and 30) were created according to the representation size;

- (2)

- The development of DW predictive models with standalone algorithms (i.e., ANN, SVR, and RF) and hybrid DW predictive models (i.e., AE–ANN, AE–SVR, and AE–RF) with respective algorithms and AE technology;

- (3)

- The leave-one-out cross-validation (LOOCV) technique was utilized for model validation. The performances of various developed models were evaluated based on the statistical metrics of R, RMSE, R2, and MAE;

- (4)

- The performance results of DW predictive models and hybrid DW predictive models via standalone algorithms were compared and discussed. Finally, the optimal hybrid predictive model yielding the greatest performance improvement was proposed for DW generation, and the corresponding application method was discussed.
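Item (1) can be illustrated with a small sketch showing how an AE turns the preprocessed inputs into a feature group whose size equals the representation (code) size. The following is a minimal NumPy illustration and not the authors' implementation. It assumes a single-hidden-layer autoencoder with a tanh encoder and a linear decoder, trained by plain full-batch gradient descent on the mean squared reconstruction error. The study itself used a stacked AE, whose layer sizes and training settings are not reproduced here. The function `train_autoencoder`, its hyperparameters (`lr`, `epochs`, `seed`), and the random input matrix are all hypothetical.

```python
import numpy as np


def train_autoencoder(X, code_size, lr=0.05, epochs=1000, seed=0):
    """Train a one-hidden-layer autoencoder X -> code -> X_hat by gradient descent.

    Hypothetical simplification of a stacked AE: tanh encoder, linear decoder,
    mean-squared reconstruction loss. Returns an encoder function and the loss history.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    # code_size may exceed d (overcomplete), as with the 25- and 30-feature groups.
    W1 = rng.normal(0.0, 0.1, size=(d, code_size))
    b1 = np.zeros(code_size)
    W2 = rng.normal(0.0, 0.1, size=(code_size, d))
    b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)          # encoder output: the learned representation
        X_hat = H @ W2 + b2               # decoder output: the reconstruction
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Backpropagate the mean-squared reconstruction error (gradients use pre-update W2).
        g_out = 2.0 * err / err.size
        g_hidden = (g_out @ W2.T) * (1.0 - H ** 2)
        W2 -= lr * (H.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (X.T @ g_hidden)
        b1 -= lr * g_hidden.sum(axis=0)

    def encode(Z):
        return np.tanh(Z @ W1 + b1)

    return encode, losses


# Stand-in for the six preprocessed inputs (GFA plus five label-encoded variables);
# these are random numbers, not the study's data.
rng = np.random.default_rng(42)
X = rng.normal(size=(60, 6))
X = (X - X.mean(axis=0)) / X.std(axis=0)   # column-wise standardization

feature_groups, histories = {}, {}
for k in (3, 6, 9, 15, 25, 30):             # representation sizes listed in item (1)
    encode, losses = train_autoencoder(X, code_size=k)
    feature_groups[k] = encode(X)            # AE features that would feed ANN/SVR/RF
    histories[k] = losses
```

Codes larger than the six inputs (25 and 30) are overcomplete. Without some constraint, an overcomplete AE can learn a near-identity mapping, which is one reason regularized variants such as sparse AEs exist.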

2. Methods and Materials

2.1. Data Source

2.2. Data Preprocessing and Dataset Size

2.3. Application of ML Algorithms

2.3.1. Autoencoder (Unsupervised Learning)

2.3.2. Supervised Learning Techniques Used for DWGR Estimation

Random Forest

Support Vector Regression

Artificial Neural Networks

2.4. Application of Algorithms and Hyperparameter-Tuning

2.5. Model Evaluation

2.5.1. Model Validation

2.5.2. Performance Measures

3. Results

3.1. Learning Validity Assessment of the Stacked AE Utilized in this Study

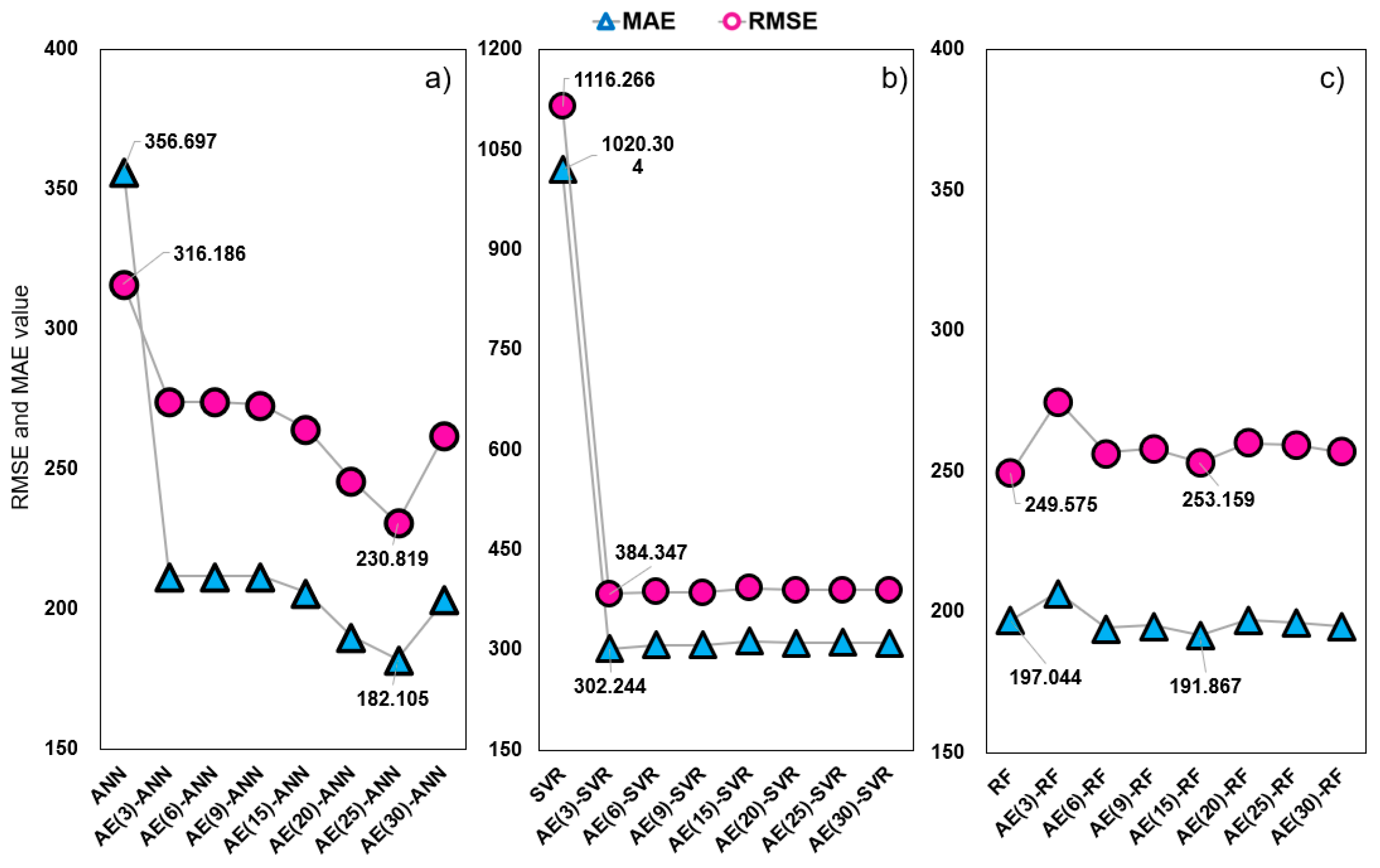

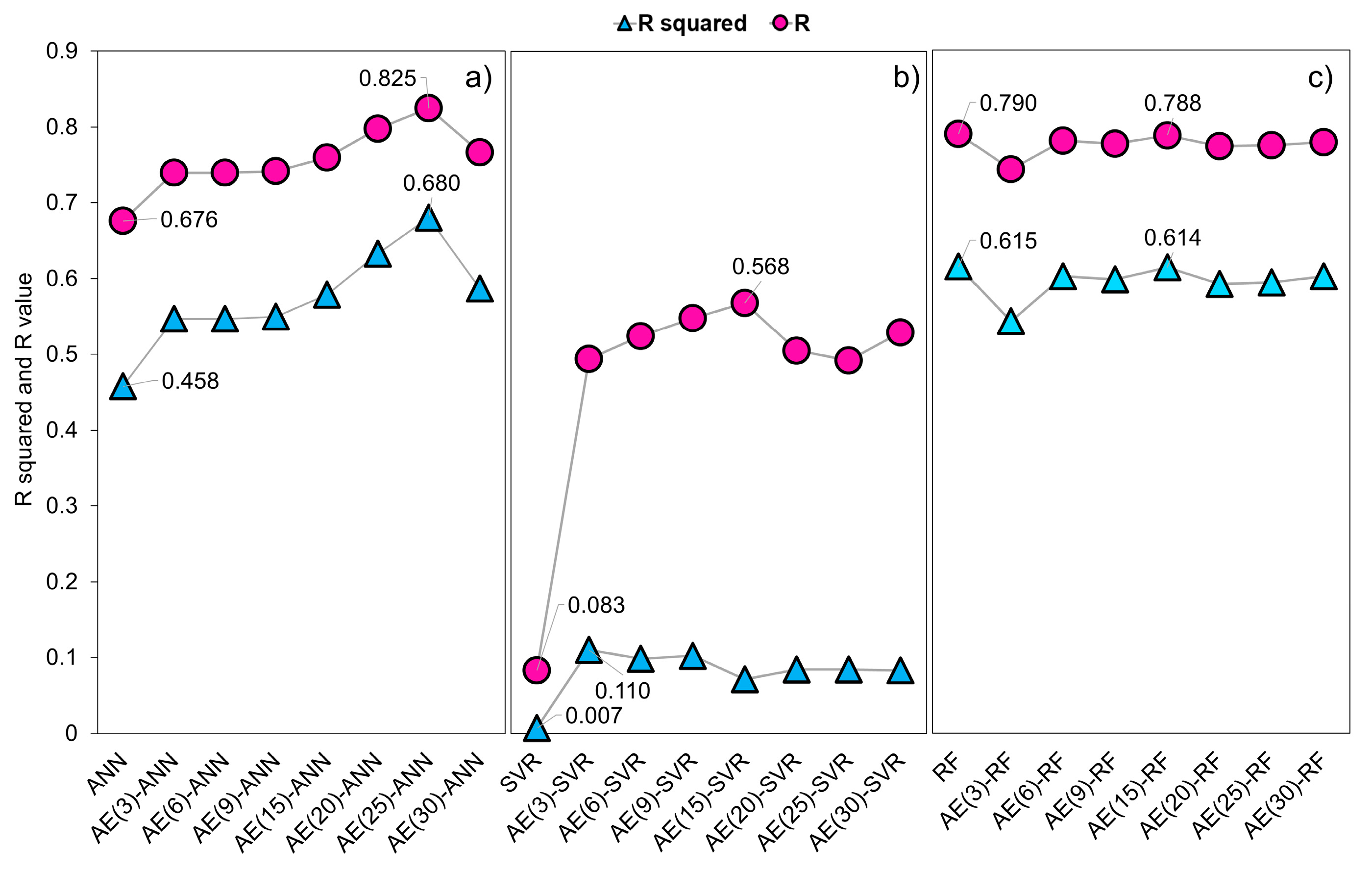

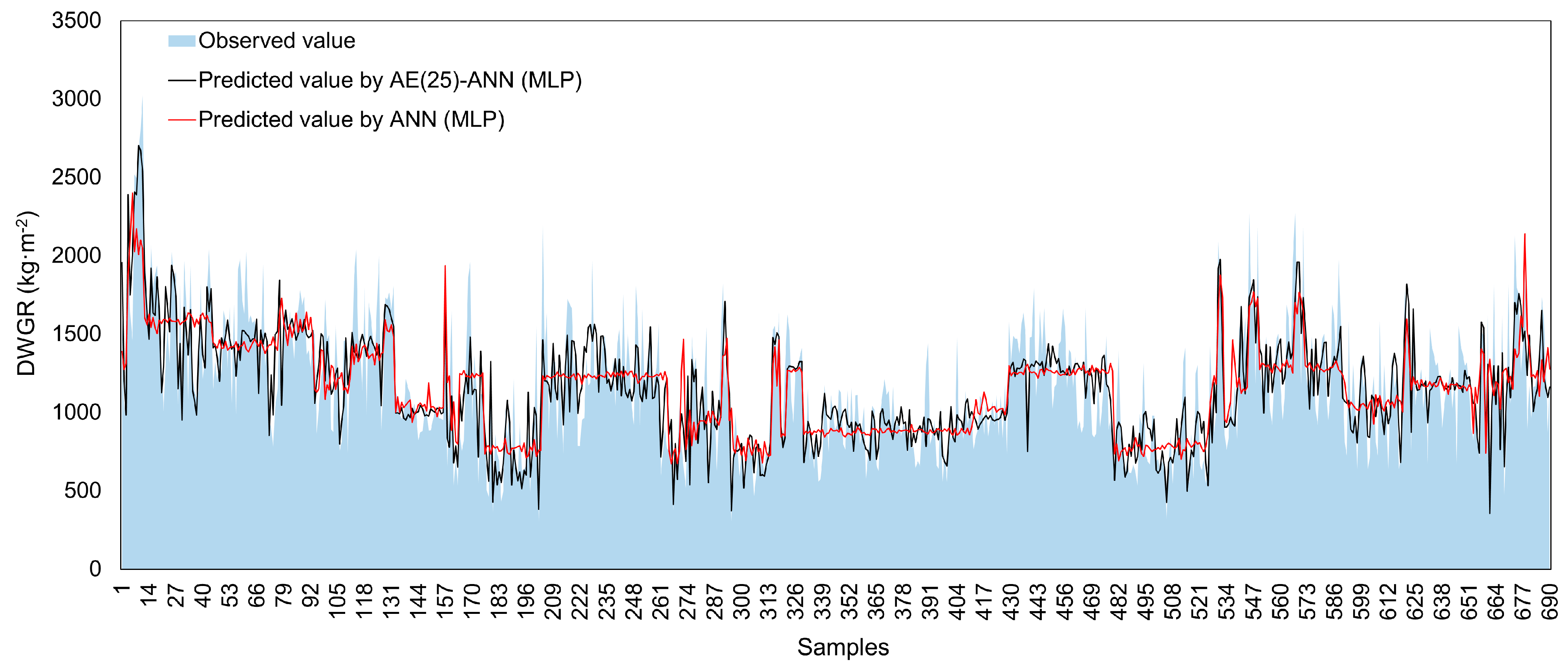

3.2. Comparison of Performance Results and Improvement of Models

4. Discussion and Recommendations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AE | Autoencoder |
| ANN | Artificial Neural Network |
| C&DW | Construction and Demolition Waste |
| CV | Cross-Validation |
| DW | Demolition Waste |
| DWGR | Demolition Waste Generation Rate |
| GFA | Gross Floor Area |
| LOOCV | Leave-One-Out Cross-Validation |
| ML | Machine Learning |
| MSW | Municipal Solid Waste |
| MAE | Mean Absolute Error |
| MLP | Multilayer Perceptron |
| MSE | Mean Squared Error |
| R | Pearson’s Correlation Coefficient |
| R² | Coefficient of Determination |
| RMSE | Root-Mean-Square Error |
| RF | Random Forest |
| SVR | Support Vector Regression |
| WG | Waste Generation |

References

1. Kaza, S.; Yao, L.; Bhada-Tata, P.; Van Woerden, F. What a Waste 2.0: A Global Snapshot of Solid Waste Management to 2050; World Bank Publications: Washington, DC, USA, 2018.

2. Triassi, M.; Alfano, R.; Illario, M.; Nardone, A.; Caporale, O.; Montuori, P. Environmental pollution from illegal waste disposal and health effects: A review on the “Triangle of Death”. Int. J. Environ. Res. Public Health 2015, 12, 1216–1236.

3. Huang, X.; Xu, X. Legal regulation perspective of eco-efficiency construction waste reduction and utilization. Urban Dev. Stud. 2011, 9, 90–94.

4. Rani, M.; Gupta, A. Construction waste management in India. Int. J. Sci. Technol. Manag. 2016, 2016.

5. Wu, H.; Zuo, J.; Zillante, G.; Wang, J.; Yuan, H. Status quo and future directions of construction and demolition waste research: A critical review. J. Clean. Prod. 2019, 240, 118163.

6. Li, J.; Ding, Z.; Mi, X.; Wang, J. A model for estimating construction waste generation index for building project in China. Resour. Conserv. Recycl. 2013, 74, 20–26.

7. Llatas, C. A model for quantifying construction waste in projects according to the European waste list. Waste Manag. 2011, 31, 1261–1276.

8. Wang, J.; Li, Z.; Tam, V.W. Identifying best design strategies for construction waste minimization. J. Clean. Prod. 2015, 92, 237–247.

9. Butera, S.; Christensen, T.H.; Astrup, T.F. Composition and leaching of construction and demolition waste: Inorganic elements and organic compounds. J. Hazard. Mater. 2014, 276, 302–311.

10. Lu, W.; Yuan, H.; Li, J.; Hao, J.J.; Mi, X.; Ding, Z. An empirical investigation of construction and demolition waste generation rates in Shenzhen city, South China. Waste Manag. 2011, 31, 680–687.

11. Ali Abdoli, M.; Falah Nezhad, M.; Salehi Sede, R.; Behboudian, S. Long-term forecasting of solid waste generation by the artificial neural networks. Environ. Prog. Sustain. Energy 2012, 31, 628–636.

12. Cha, G.W.; Moon, H.J.; Kim, Y.C. A hybrid machine-learning model for predicting the waste generation rate of building demolition projects. J. Clean. Prod. 2022, 375, 134096.

13. Golbaz, S.; Nabizadeh, R.; Sajadi, H.S. Comparative study of predicting hospital solid waste generation using multiple linear regression and artificial intelligence. J. Environ. Health Sci. Eng. 2019, 17, 41–51.

14. Liang, G.; Panahi, F.; Ahmed, A.N.; Ehteram, M.; Band, S.S.; Elshafie, A. Predicting municipal solid waste using a coupled artificial neural network with Archimedes optimisation algorithm and socioeconomic components. J. Clean. Prod. 2021, 315, 128039.

15. Milojkovic, J.; Litovski, V. Comparison of some ANN based forecasting methods implemented on short time series. In Proceedings of the 2008 9th Symposium on Neural Network Applications in Electrical Engineering, Belgrade, Serbia, 25–27 September 2008; pp. 175–178.

16. Noori, R.; Abdoli, M.A.; Ghazizade, M.J.; Samieifard, R. Comparison of neural network and principal component-regression analysis to predict the solid waste generation in Tehran. Iran. J. Public Health 2009, 38, 74–84.

17. Shamshiry, E.; Mokhtar, M.; Abdulai, A.M.; Komoo, I.; Yahaya, N. Combining artificial neural network–genetic algorithm and response surface method to predict waste generation and optimize cost of solid waste collection and transportation process in Langkawi island, Malaysia. Malays. J. Sci. 2014, 33, 118–140.

18. Song, Y.; Wang, Y.; Liu, F.; Zhang, Y. Development of a hybrid model to predict construction and demolition waste: China as a case study. Waste Manag. 2017, 59, 350–361.

19. Soni, U.; Roy, A.; Verma, A.; Jain, V. Forecasting municipal solid waste generation using artificial intelligence models—A case study in India. SN Appl. Sci. 2019, 1, 1–10.

20. Abbasi, M.; Abduli, M.A.; Omidvar, B.; Baghvand, A. Forecasting municipal solid waste generation by hybrid support vector machine and partial least square model. Int. J. Environ. Res. 2013, 7, 27–38.

21. Abbasi, M.; Abduli, M.A.; Omidvar, B.; Baghvand, A. Results uncertainty of support vector machine and hybrid of wavelet transform-support vector machine models for solid waste generation forecasting. Environ. Prog. Sustain. Energy 2014, 33, 220–228.

22. Abbasi, M.; El Hanandeh, A. Forecasting municipal solid waste generation using artificial intelligence modelling approaches. Waste Manag. 2016, 56, 13–22.

23. Abunama, T.; Othman, F.; Ansari, M.; El-Shafie, A. Leachate generation rate modeling using artificial intelligence algorithms aided by input optimization method for an MSW landfill. Environ. Sci. Pollut. Res. Int. 2019, 26, 3368–3381.

24. Cai, T.; Wang, G.; Guo, Z. Construction and demolition waste generation forecasting using a hybrid intelligent method. In Proceedings of the 2020 9th International Conference on Industrial Technology and Management (ICITM), Oxford, UK, 1–13 February 2020; pp. 312–316.

25. Dai, C.; Li, Y.P.; Huang, G.H. A two-stage support-vector-regression optimization model for municipal solid waste management—A case study of Beijing, China. J. Environ. Manag. 2011, 92, 3023–3037.

26. Graus, M.; Niemietz, P.; Rahman, M.T.; Hiller, M.; Pahlenkemper, M. Machine learning approach to integrate waste management companies in micro grids. In Proceedings of the 2018 19th International Scientific Conference on Electric Power Engineering (EPE), Brno, Czech Republic, 16–18 May 2018; pp. 1–6.

27. Kumar, A.; Samadder, S.R.; Kumar, N.; Singh, C. Estimation of the generation rate of different types of plastic wastes and possible revenue recovery from informal recycling. Waste Manag. 2018, 79, 781–790.

28. Noori, R.; Abdoli, M.A.; Ghasrodashti, A.A.; Jalili Ghazizade, M. Prediction of municipal solid waste generation with combination of support vector machine and principal component analysis: A case study of Mashhad. Environ. Prog. Sustain. Energy 2009, 28, 249–258.

29. Azadi, S.; Karimi-Jashni, A. Verifying the performance of artificial neural network and multiple linear regression in predicting the mean seasonal municipal solid waste generation rate: A case study of Fars province, Iran. Waste Manag. 2016, 48, 14–23.

30. Chhay, L.; Reyad, M.A.H.; Suy, R.; Islam, M.R.; Mian, M.M. Municipal solid waste generation in China: Influencing factor analysis and multi-model forecasting. J. Mater. Cycles Waste Manag. 2018, 20, 1761–1770.

31. Fu, H.Z.; Li, Z.S.; Wang, R.H. Estimating municipal solid waste generation by different activities and various resident groups in five provinces of China. Waste Manag. 2015, 41, 3–11.

32. Jahandideh, S.; Jahandideh, S.; Asadabadi, E.B.; Askarian, M.; Movahedi, M.M.; Hosseini, S.; Jahandideh, M. The use of artificial neural networks and multiple linear regression to predict rate of medical waste generation. Waste Manag. 2009, 29, 2874–2879.

33. Kumar, A.; Samadder, S.R. An empirical model for prediction of household solid waste generation rate—A case study of Dhanbad, India. Waste Manag. 2017, 68, 3–15.

34. Montecinos, J.; Ouhimmou, M.; Chauhan, S.; Paquet, M. Forecasting multiple waste collecting sites for the agro-food industry. J. Clean. Prod. 2018, 187, 932–939.

35. Wei, Y.; Xue, Y.; Yin, J.; Ni, W. Prediction of municipal solid waste generation in China by multiple linear regression method. Int. J. Comp. Appl. 2013, 35, 136–140.

36. Wu, Z.; Fan, H.; Liu, G. Forecasting construction and demolition waste using gene expression programming. J. Comp. Civ. Eng. 2015, 29, 04014059.

37. Cha, G.W.; Kim, Y.C.; Moon, H.J.; Hong, W.H. New approach for forecasting demolition waste generation using chi-squared automatic interaction detection (CHAID) method. J. Clean. Prod. 2017, 168, 375–385.

38. Kannangara, M.; Dua, R.; Ahmadi, L.; Bensebaa, F. Modeling and prediction of regional municipal solid waste generation and diversion in Canada using machine learning approaches. Waste Manag. 2018, 74, 3–15.

39. Rosecký, M.; Šomplák, R.; Slavík, J.; Kalina, J.; Bulková, G.; Bednář, J. Predictive modelling as a tool for effective municipal waste management policy at different territorial levels. J. Environ. Manag. 2021, 291, 112584.

40. Márquez, M.Y.; Ojeda, S.; Hidalgo, H. Identification of behavior patterns in household solid waste generation in Mexicali’s city: Study case. Resour. Conserv. Recycl. 2008, 52, 1299–1306.

41. Cha, G.W.; Moon, H.J.; Kim, Y.M.; Hong, W.H.; Hwang, J.H.; Park, W.J.; Kim, Y.C. Development of a prediction model for demolition waste generation using a random forest algorithm based on small datasets. Int. J. Environ. Res. Public Health 2020, 17, 6997.

42. Cha, G.W.; Moon, H.J.; Kim, Y.C. Comparison of random forest and gradient boosting machine models for predicting demolition waste based on small datasets and categorical variables. Int. J. Environ. Res. Public Health 2021, 18, 8530.

43. Dissanayaka, D.M.S.H.; Vasanthapriyan, S. Forecast municipal solid waste generation in Sri Lanka. In Proceedings of the 2019 International Conference on Advancements in Computing (ICAC), Malabe, Sri Lanka, 5–7 December 2019; pp. 210–215.

44. Nguyen, X.C.; Nguyen, T.T.H.; La, D.D.; Kumar, G.; Rene, E.R.; Nguyen, D.D.; Chang, S.W.; Chung, W.J.; Nguyen, X.H.; Nguyen, V.K. Development of machine learning-based models to forecast solid waste generation in residential areas: A case study from Vietnam. Resour. Conserv. Recycl. 2021, 167, 105381.

45. Abdallah, M.; Talib, M.A.; Feroz, S.; Nasir, Q.; Abdalla, H.; Mahfood, B. Artificial intelligence applications in solid waste management: A systematic research review. Waste Manag. 2020, 109, 231–246.

46. Osisanwo, F.Y.; Akinsola, J.E.T.; Awodele, O.; Hinmikaiye, J.O.; Olakanmi, O.; Akinjobi, J. Supervised machine learning algorithms: Classification and comparison. Int. J. Comp. Trends Technol. 2017, 48, 128–138.

47. Dai, F.; Nie, G.H.; Chen, Y. The municipal solid waste generation distribution prediction system based on FIG–GA-SVR model. J. Mater. Cycles Waste Manag. 2020, 22, 1352–1369.

48. Chen, X.; Lu, W. Identifying factors influencing demolition waste generation in Hong Kong. J. Clean. Prod. 2017, 141, 799–811.

49. Banias, G.; Achillas, C.; Vlachokostas, C.; Moussiopoulos, N.; Papaioannou, I. A web-based Decision Support System for the optimal management of construction and demolition waste. Waste Manag. 2011, 31, 2497–2502.

50. Wang, Z.S.; Xie, W.C.; Liu, J.K. Regional differences and driving factors of construction and demolition waste generation in China. Eng. Constr. Arch. Manag. 2021, 29, 2300–2327.

51. Wu, H.; Zuo, J.; Zillante, G.; Wang, J.; Duan, H. Environmental impacts of cross-regional mobility of construction and demolition waste: An Australia Study. Resour. Conserv. Recycl. 2021, 174, 105805.

52. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

53. Hancock, J.T.; Khoshgoftaar, T.M. Survey on categorical data for neural networks. J. Big Data 2020, 7, 1–41.

54. Baldi, P. Autoencoders, unsupervised learning, and deep architectures. In Proceedings of the ICML Workshop on Unsupervised and Transfer Learning, Bellevue, WA, USA, 2 July 2011; pp. 37–49.

55. Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Seliya, N.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. J. Big Data 2015, 2, 1–21.

56. Delgado, J.M.D.; Oyedele, L. Deep learning with small datasets: Using autoencoders to address limited datasets in construction management. Appl. Soft Comput. 2021, 112, 107836.

57. Kingma, D.P.; Welling, M. An Introduction to Variational Autoencoders; Foundations and Trends® in Machine Learning; Now Publishers Inc.: Hanover, MA, USA, 2019; Volume 12, pp. 307–392.

58. Ranzato, M.A.; Poultney, C.; Chopra, S.; LeCun, Y. Efficient learning of sparse representations with an energy-based model. In Advances in Neural Information Processing Systems 19 (NIPS 2006); MIT Press: Cambridge, MA, USA, 2006; Volume 19.

59. Lewicki, M.S.; Sejnowski, T.J. Learning overcomplete representations. Neural Comput. 2000, 12, 337–365.

60. Fan, C.; Sun, Y.; Zhao, Y.; Song, M.; Wang, J. Deep learning-based feature engineering methods for improved building energy prediction. Appl. Energy 2019, 240, 35–45.

61. Meyer, D. Introduction to Autoencoders. 2015. Available online: https://davidmeyer.github.io/ml/ae.pdf (accessed on 30 January 2023).

62. Bengio, Y.; Lamblin, P.; Popovici, D.; Larochelle, H. Greedy layer-wise training of deep networks. In Advances in Neural Information Processing Systems 19 (NIPS 2006); MIT Press: Cambridge, MA, USA, 2006; Volume 19.

63. Saha, M.; Santara, A.; Mitra, P.; Chakraborty, A.; Nanjundiah, R.S. Prediction of the Indian summer monsoon using a stacked autoencoder and ensemble regression model. Int. J. Forecast. 2021, 37, 58–71.

64. Charte, D.; Charte, F.; del Jesus, M.J.; Herrera, F. An analysis on the use of autoencoders for representation learning: Fundamentals, learning task case studies, explainability and challenges. Neurocomputing 2020, 404, 93–107.

65. Guo, H.N.; Wu, S.B.; Tian, Y.J.; Zhang, J.; Liu, H.T. Application of machine learning methods for the prediction of organic solid waste treatment and recycling processes: A review. Bioresour. Technol. 2021, 319, 124114.

66. Xia, W.; Jiang, Y.; Chen, X.; Zhao, R. Application of machine learning algorithms in municipal solid waste management: A mini review. Waste Manag. Res. 2022, 40, 609–624.

67. Fernández-Delgado, M.; Cernadas, E.; Barro, S.; Amorim, D. Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 2014, 15, 3133–3181.

68. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

69. Namoun, A.; Hussein, B.R.; Tufail, A.; Alrehaili, A.; Syed, T.A.; BenRhouma, O. An ensemble learning based classification approach for the prediction of household solid waste generation. Sensors 2022, 22, 3506.

70. Chen, K.Y.; Wang, C.H. Support vector regression with genetic algorithms in forecasting tourism demand. Tour. Manag. 2007, 28, 215–226.

71. Wang, J.; Liu, Z.; Lu, P. Electricity load forecasting using rough set attribute reduction algorithm based on immune genetic algorithm and support vector machines. In Proceedings of the 2008 International Conference on Risk Management & Engineering Management, Beijing, China, 4–6 November 2008; pp. 239–244.

72. Xu, A.; Chang, H.; Xu, Y.; Li, R.; Li, X.; Zhao, Y. Applying artificial neural networks (ANNs) to solve solid waste-related issues: A critical review. Waste Manag. 2021, 124, 385–402.

73. You, H.; Ma, Z.; Tang, Y.; Wang, Y.; Yan, J.; Ni, M.; Cen, K.; Huang, Q. Comparison of ANN (MLP), ANFIS, SVM, and RF models for the online classification of heating value of burning municipal solid waste in circulating fluidized bed incinerators. Waste Manag. 2017, 68, 186–197.

74. Wang, Y.; Li, J.; Gu, J.; Zhou, Z.; Wang, Z. Artificial neural networks for infectious diarrhea prediction using meteorological factors in Shanghai (China). Appl. Soft Comput. 2015, 35, 280–290.

75. Dong, B.; Cao, C.; Lee, S.E. Applying support vector machines to predict building energy consumption in tropical region. Energy Build. 2005, 37, 545–553.

76. Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

77. Cheng, J.; Dekkers, J.C.; Fernando, R.L. Cross-validation of best linear unbiased predictions of breeding values using an efficient leave-one-out strategy. J. Anim. Breed. Genet. 2021, 138, 519–527.

78. Wong, T.T. Performance evaluation of classification algorithms by k-fold and leave-one-out cross validation. Pattern Recognit. 2015, 48, 2839–2846.

79. Witten, I.H.; Hall, M.A. Data Mining: Practical Machine Learning Tools and Techniques, 3rd ed.; Morgan Kaufmann: Massachusetts, MA, USA, 2011.

80. Cheng, H.; Garrick, D.J.; Fernando, R.L. Efficient strategies for leave-one-out cross validation for genomic best linear unbiased prediction. J. Anim. Sci. Biotechnol. 2017, 8, 1–5.

81. Shao, Z.; Er, M.J. Efficient leave-one-out cross-validation-based regularized extreme learning machine. Neurocomputing 2016, 194, 260–270.

82. Bai, J.; Li, Y.; Li, J.; Yang, X.; Jiang, Y.; Xia, S.T. Multinomial random forest. Pattern Recognit. 2022, 122, 108331.

| Bldg ID | Location | Structure | Usage | Wall Type | Roof Type | GFA (m²) | Output: Demolition Waste Generation Rate (kg·m⁻²) |
|---|---|---|---|---|---|---|---|
| Bldg 1 | Project B | RC | Residential | Concrete | Slab | 289.50 | 1279.71 |
| Bldg 2 | Project A | RC | Residential | Brick | Slab | 114.20 | 2060.07 |
| Bldg 3 | Project A | RC | Residential | Brick | Slab | 100.45 | 3875.76 |
| Bldg 4 | Project A | RC | Residential | Brick | Slab | 100.45 | 1644.75 |
| Bldg 5 | Project A | RC | Residential | Brick | Slab | 197.68 | 1458.22 |
| Bldg 6 | Project A | RC | Residential | Brick | Slab | 190.36 | 2519.33 |
| Bldg 7 | Project A | RC | Residential | Brick | Slab | 114.80 | 2494.95 |
| Bldg 8 | Project A | RC | Residential | Brick | Slab | 118.41 | 3398.11 |
| Bldg 9 | Project A | RC | Residential | Brick | Slab | 47.11 | 1849.38 |
| Bldg 10 | Project A | RC | Residential | Brick | Slab | 106.45 | 2665.72 |
| Bldg 11 | Project A | RC | Residential | Brick | Slab | 87.53 | 2805.48 |
| Bldg 12 | Project A | RC | Residential | Brick | Slab | 82.40 | 3024.04 |
| Bldg 13 | Project A | RC | Residential | Brick | Slab | 95.15 | 6033.67 |
| Bldg 14 | Project A | RC | Residential | Brick | Slab | 51.11 | 2426.00 |
| Bldg 15 | Project A | RC | Residential | Brick | Slab | 149.51 | 1990.99 |
| … | … | … | … | … | … | … | … |
| Bldg 781 | Project C | Masonry | Residential | Block | Slate | 85.66 | 823.51 |
| Bldg 782 | Project B | Masonry | Commercial | Block | Slab and roofing tile | 94.44 | 1166.09 |

| Category | Subcategory | Number of Buildings | DWGR Total (kg·m⁻²) | DWGR Min (kg·m⁻²) | DWGR Mean (kg·m⁻²) | DWGR Max (kg·m⁻²) |
|---|---|---|---|---|---|---|
| Location | Project A | 343 | 450,310 | 298 | 1313 | 6034 |
| | Project B | 356 | 485,037 | 83 | 1362 | 8574 |
| | Project C | 83 | 101,531 | 736 | 1223 | 1808 |
| Usage | Residential | 595 | 767,578 | 83 | 1290 | 8574 |
| | Residential and commercial | 172 | 251,381 | 418 | 1462 | 5718 |
| | Commercial | 15 | 19,510 | 607 | 1301 | 2474 |
| Structure | RC | 87 | 169,538 | 418 | 1949 | 6034 |
| | Masonry | 604 | 788,042 | 83 | 1305 | 8574 |
| | Wood | 91 | 80,889 | 298 | 889 | 2237 |
| Wall type | Concrete | 9 | 10,357 | 871 | 1151 | 4696 |
| | Brick | 236 | 391,259 | 252 | 1658 | 6034 |
| | Block | 500 | 596,799 | 83 | 1194 | 8574 |
| | Mud plastered and mortar | 37 | 40,056 | 517 | 1083 | 2591 |
| Roof type | Slab | 289 | 479,356 | 252 | 1659 | 6034 |
| | Slab and roofing tile | 33 | 38,877 | 252 | 1178 | 1808 |
| | Slate | 178 | 227,923 | 306 | 1280 | 8574 |
| | Roofing tile | 282 | 292,314 | 83 | 1037 | 2527 |

| Categorical Variable | Category | Numerical Value Assigned by Label Encoding |
|---|---|---|
| Location | Location_project A | 0 |
| | Location_project B | 1 |
| | Location_project C | 2 |
| Structure | Structure_RC | 0 |
| | Structure_masonry | 1 |
| | Structure_wood | 2 |
| Usage | Usage_residential | 0 |
| | Usage_residential & commercial | 1 |
| | Usage_commercial | 2 |
| Wall type | Wall type_concrete | 0 |
| | Wall type_brick | 1 |
| | Wall type_block | 2 |
| | Wall type_mud plastered and mortar | 3 |
| Roof type | Roof type_slab | 0 |
| | Roof type_slab and roofing tile | 1 |
| | Roof type_slate | 2 |
| | Roof type_roofing tile | 3 |
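The label-encoding mapping can be written as plain lookup dictionaries. The following Python sketch is illustrative only. The helper `label_encode`, the lowercase dictionary keys, and the field names are assumptions. The integer codes come from the label-encoding table, and the two sample buildings (Bldg 1 and Bldg 782) come from the dataset table.

```python
# Integer codes copied from the label-encoding table (keys lowercased).
LABEL_CODES = {
    "location": {"project a": 0, "project b": 1, "project c": 2},
    "structure": {"rc": 0, "masonry": 1, "wood": 2},
    "usage": {"residential": 0, "residential & commercial": 1, "commercial": 2},
    "wall type": {"concrete": 0, "brick": 1, "block": 2,
                  "mud plastered and mortar": 3},
    "roof type": {"slab": 0, "slab and roofing tile": 1, "slate": 2,
                  "roofing tile": 3},
}


def label_encode(record):
    """Return a copy of `record` with every categorical field replaced by its code.

    Hypothetical helper: numerical fields such as GFA pass through unchanged,
    and a category missing from the table raises KeyError.
    """
    encoded = dict(record)
    for column, codes in LABEL_CODES.items():
        encoded[column] = codes[record[column].strip().lower()]
    return encoded


# Bldg 1 and Bldg 782 from the dataset table.
bldg_1 = {"location": "Project B", "structure": "RC", "usage": "Residential",
          "wall type": "Concrete", "roof type": "Slab", "gfa": 289.50}
bldg_782 = {"location": "Project B", "structure": "Masonry", "usage": "Commercial",
            "wall type": "Block", "roof type": "Slab and roofing tile", "gfa": 94.44}

print(label_encode(bldg_1))
# {'location': 1, 'structure': 0, 'usage': 0, 'wall type': 0, 'roof type': 0, 'gfa': 289.5}
print(label_encode(bldg_782))
# {'location': 1, 'structure': 1, 'usage': 2, 'wall type': 2, 'roof type': 1, 'gfa': 94.44}
```

Label encoding imposes an arbitrary order on nominal categories. For example, it places "slate" numerically between "slab and roofing tile" and "roofing tile". This ordering tends to matter less for tree-based models such as RF than for weight- or distance-based models such as ANN and SVR. One-hot encoding is the common alternative when the ordering could mislead a model.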

| Study | Estimation Level | Waste Type | Input Variable Data Composition (Number of Input Variables or Characteristics of Data) | Model Type | Performance of Predictive Model | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| RMSE | MSE | MAE | MAPE | R2 | R | Error Rate (%) | |||||

| This study | Building | DW | Numerical (1); categorical (5) | ANN (MLP) | 316.186 | 356.697 | 0.458 | 0.676 | |||

| AE (25 features)–ANN (MLP) | 230.819 | 128.105 | 0.680 | 0.825 | |||||||

| SVR | 1020.304 | 1116.266 | 0.007 | 0.083 | |||||||

| AE (3 features)–SVR | 302.224 | 384.347 | 0.110 | 0.494 | |||||||

| Abbasi et al., 2013 [20] | City | MSW | Numerical (time series data) | SVM | 2070 | 0.761 | |||||

| PL–SVM | 1541 | 0.869 | |||||||||

| Abbasi et al., 2014 [21] | City | MSW | Numerical (time series data) | SVM | 814–3268 | 0.702–0.756 | |||||

| WT-SVM | 639–2283 | 0.813–0.887 | |||||||||

| Song et al., 2017 [18] | Regional | C&D waste | Numerical (time series data) | GM | 21 | ||||||

| GM -SVR | 4.6 | ||||||||||

| Golbaz et al., 2019 [13] | Building | MSW (hospital solid waste) | Numerical (7); categorical (1) | SVM | 0.001–0.003 | 0.79–0.98 | |||||

| F–SVM | 0.001–0.002 | 0.79–0.92 | |||||||||

| Soni et al., 2019 [19] | City | MSW | Numerical (4) | ANN | 165.5 | 0.72 | |||||

| GA–ANN | 95.7 | 0.87 | |||||||||

| Cai et al., 2020 [24] | Regional | C&D waste | Numerical (time series data) | SVR | 50.19 | 17.29 | |||||

| LSTM–SVR | 29.04 | 10.02 | |||||||||

| Dai et al., 2020 [47] | District | MSW | Numerical (3; time series data) | SVR | 34.725 | 14.434 | 0.8376 | ||||

| FIG–GA–SVM | 5.703 | 2.012 | 0.9845 | ||||||||

| Liang et al., 2021 [14] | City | MSW | Numerical (8) | ANN | 11.23 | 10.29 | 0.76 | ||||

| AOA–ANN | 5.89 | 6.21 | 0.88 | ||||||||
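The error metrics in the comparison table, together with the LOOCV procedure named in item (3) of the Introduction, can be sketched in NumPy. This is a hedged illustration and not the study's evaluation code. An ordinary least-squares fit stands in for ANN, SVR, and RF, the data are synthetic, and `loocv_predictions`, `fit_ols`, `predict_ols`, and `regression_metrics` are hypothetical names. R is Pearson's correlation between observed and predicted values. R² is computed here as 1 − SSE/SST, one common definition under which R² need not equal the square of R for out-of-sample predictions.

```python
import numpy as np


def loocv_predictions(X, y, fit, predict):
    """Leave-one-out CV: train on n - 1 samples and predict the held-out one, n times."""
    n = len(y)
    preds = np.empty(n)
    for i in range(n):
        mask = np.arange(n) != i
        model = fit(X[mask], y[mask])
        preds[i] = predict(model, X[i:i + 1])[0]
    return preds


def fit_ols(X, y):
    # Stand-in model: least squares with an intercept column.
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_ols(coef, X):
    return np.column_stack([np.ones(len(X)), X]) @ coef


def regression_metrics(y_true, y_pred):
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    sse = float(np.sum(err ** 2))
    sst = float(np.sum((y_true - y_true.mean()) ** 2))
    return {
        "R": float(np.corrcoef(y_true, y_pred)[0, 1]),  # Pearson's correlation
        "R2": 1.0 - sse / sst,                           # coefficient of determination
        "RMSE": mse ** 0.5,
        "MSE": mse,
        "MAE": float(np.mean(np.abs(err))),
    }


# Synthetic example (not the study's data).
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 3))
y = 2.0 * X[:, 0] - X[:, 1] + rng.normal(scale=0.5, size=40)

preds = loocv_predictions(X, y, fit_ols, predict_ols)
metrics = regression_metrics(y, preds)
print({name: round(value, 3) for name, value in metrics.items()})
```

With n samples, LOOCV trains n models, each on n − 1 samples, so every observation is predicted exactly once by a model that never saw it. This is affordable for a dataset of a few hundred buildings, but the training cost grows linearly with n.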

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cha, G.-W.; Hong, W.-H.; Kim, Y.-C. Performance Improvement of Machine Learning Model Using Autoencoder to Predict Demolition Waste Generation Rate. Sustainability 2023, 15, 3691. https://doi.org/10.3390/su15043691
