1. Introduction

Mucilage is a phenomenon caused by several kinds of macro-aggregates; the terms marine snow and sea snow are also used for marine mucilage. Although mucilage can sometimes be observed only in deep water, it also appears very often on the sea’s surface as a creamy or gelatinous substance [1]. An example of creamy marine mucilage is given in Figure 1, and an example of gelatinous marine mucilage is given in Figure 2. Individual mucilage patches on the sea’s surface vary widely in area. Small patches are observed frequently, whereas large ones appear during a mucilage bloom [1]. Mucilage is a natural phenomenon. Nevertheless, several factors increase its volume, such as high levels of industrialization, increased use of agricultural chemicals, intensive fishing, and heavy marine traffic. When these conditions are met, factors such as rising temperatures or a change in wind speed can trigger a mucilage bloom [1]. Komuscu et al. [2] also showed in detail that mucilage cannot be explained by a change in meteorological conditions alone; rather, a combination of meteorological conditions and other environmental factors can cause a mucilage bloom [2].

Several studies have monitored sea surfaces, and some of them used satellite-based data [3,4,5,6,7,8,9,10]. Rasuly et al. [3] examined satellite images of the Caspian Sea’s coasts and proposed a method for determining changes in the coastal water level from these images [3]. Bondur et al. [4] examined anthropogenic influences in the Mamala Bay region of Hawaii, USA, using sensor data from different satellites [4]. Messager et al. [5] performed ocean monitoring using synthetic aperture radar (SAR) data. One aim of the study was oil spill detection, for which a support vector machine (SVM)-based classification was used [5]. Ferreira et al. [6] used satellite data to monitor chlorophyll-a concentrations in the Western Antarctic Peninsula, proposing a custom algorithm and using machine learning methods for this purpose [6]. Khan et al. [7] investigated satellite data to monitor glaciers. They used SVM, artificial neural network (ANN), and random forest (RF) methods and compared the performance of these methods in their application [7]. Shen et al. [8] used satellite data to classify sea ice types with methods including a convolutional neural network (CNN), k-nearest neighbor (kNN), and SVM, and reported the accuracy rates of the methods comparatively [8]. Gokaraju et al. [9] proposed an SVM-based method that monitors harmful algal blooms in the US Gulf of Mexico region using satellite-based sensor data [9]. Hereher investigated a system that monitors sea surface temperatures using satellite image data [10].

There are also studies that use satellite data to monitor marine mucilage, one of the irregularities on the sea’s surface. Kavzoglu et al. [11] investigated a method for delineating mucilage areas in satellite imagery, using an RF-based method to detect them. The authors focused on distinguishing the sea-surface patterns caused by ship movements from those caused by marine mucilage [11]. Yagci et al. [1] also used satellite images to determine whether mucilage was present. Their method relied on moderate-resolution imaging spectroradiometer (MODIS) products. A mucilage index was created from the green and blue pixel values of the sea images together with the near-infrared (NIR) and short-wave infrared (SWIR) values, and the algorithm decides whether mucilage is present by evaluating this index on the satellite image data [1]. Cavalli [12] also used MODIS data, in that case to calculate sea surface temperatures [12]. Acar et al. [13] used satellite images retrieved from the Google Earth Engine to monitor marine mucilage. The authors first used several indices, such as the normalized difference vegetation index (NDVI) and the normalized difference water index (NDWI), to mask clouds, and then applied a median filter to the images. Using a supervised RF classifier, the mucilage and non-mucilage areas on the sea’s surface were detected [13]. Tassan [14] presented another study on marine mucilage detection using satellite data, in which satellite-based advanced very high-resolution radiometer (AVHRR) data were used to monitor floating mucilage patches on sea surfaces [14].
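As a brief illustration of the preprocessing steps named above for Acar et al. [13], the following sketch computes the two spectral indices from their standard definitions, NDVI = (NIR − Red)/(NIR + Red) and NDWI = (Green − NIR)/(Green + NIR) (the McFeeters formulation), and applies a 3×3 median filter to a small grid. The function names, the plain-Python grid representation, and the border handling are assumptions made for illustration only; this is not the authors' Google Earth Engine code, and the cited study does not state which index thresholds were used for cloud masking.

```python
from statistics import median


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b); 0.0 is returned when the denominator is zero."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index for one pixel."""
    return normalized_difference(nir, red)


def ndwi(green: float, nir: float) -> float:
    """Normalized difference water index (McFeeters form) for one pixel."""
    return normalized_difference(green, nir)


def median_filter_3x3(grid):
    """Apply a 3x3 median filter to a 2D list of numbers.

    Border pixels use only the neighbours that exist (an illustrative choice).
    """
    rows, cols = len(grid), len(grid[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                grid[i][j]
                for i in range(max(0, r - 1), min(rows, r + 2))
                for j in range(max(0, c - 1), min(cols, c + 2))
            ]
            out[r][c] = median(window)
    return out
```

In this sketch, an isolated outlier pixel surrounded by uniform values is replaced by the surrounding value, which is the noise-suppression behaviour a median filter is typically chosen for.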

Unmanned aerial vehicles (UAVs) are also commonly used to detect irregularities on the sea’s surface and on beaches. Pinto et al. [15] attempted to detect pollution on Portuguese beaches. In that study, beach areas contaminated with large-scale objects were identified using different machine learning methods, and the objects were classified according to their types. The authors added that one aim of the study was to direct cleaning personnel on beaches accurately [15]. Goncalves et al. [16] collected images from Portuguese beaches and investigated the pollution elements observed in them. The study compared contamination detection using machine learning methods, namely CNN and RF, with manual detection by operators. The authors suggested that machine learning methods would yield better results as the database grew and the number of such studies increased [16]. Goncalves et al. [17] examined UAV images taken over Portuguese beaches. They attempted to detect polluted areas using three object-oriented machine learning methods, namely kNN, SVM, and RF. In their comparison, RF produced the most successful results of the three, while kNN was highlighted as an effective method for non-experts owing to its simplicity [17]. Fallati et al. [18] applied CNN-based deep learning methods to images obtained by UAVs over the beaches of the Republic of Maldives and also classified the identified pollutants according to their types [18]. Jellyfish are a problem that has grown in recent years and threatens both nature and the economy [19]. Kim et al. [20] monitored jellyfish in UAV images using CNN-based classifiers and reported accuracy values over 80 percent [20]. Narmillan et al. [21] investigated UAV multispectral images to monitor fields and compared different machine learning methods to find the best accuracy [21]. Goncalves et al. [22] aimed to obtain three-dimensional image data of coastal areas, motivated mainly by the need to determine the maintenance requirements of rocky coastal groins. Both UAV and terrestrial laser scanning (TLS) systems were used for this purpose, and their success rates were compared [22]. UAVs have also been used to image blooms of the alga Phaeocystis globosa, which can at times take on a gelatinous appearance similar to that of mucilage [23]. Using hyperspectral imaging, regions of high algal bloom were identified by UAVs [23]. Manned aerial vehicles have also been used to monitor marine mucilage. Zambianchi et al. [24] monitored marine mucilage on sea surfaces from manned aircraft, classifying regions as with or without mucilage using red, green, and blue (RGB)-based thresholding algorithms [24].
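To make the idea of RGB-based thresholding concrete, the following minimal sketch flags bright, weakly saturated pixels (a rough stand-in for a creamy or whitish surface patch) and reports the fraction of flagged pixels in a region. The rule itself, the function names, and the threshold values `min_channel=150` and `max_spread=60` are hypothetical choices for illustration; the cited study's actual thresholds are not given in the text, and real thresholds would need to be tuned on labelled imagery.

```python
def is_mucilage_candidate(rgb, min_channel=150, max_spread=60):
    """Flag a pixel as a mucilage candidate using hypothetical RGB thresholds.

    A pixel is flagged when every channel is bright (>= min_channel) and the
    channels are close to each other (max - min <= max_spread).
    """
    r, g, b = rgb
    darkest, brightest = min(r, g, b), max(r, g, b)
    return darkest >= min_channel and (brightest - darkest) <= max_spread


def candidate_fraction(pixels, **thresholds):
    """Return the fraction of pixels flagged as mucilage candidates."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    flagged = sum(is_mucilage_candidate(p, **thresholds) for p in pixels)
    return flagged / len(pixels)
```

For example, `is_mucilage_candidate((220, 210, 190))` returns `True` under these assumed thresholds, while a dark blue water pixel such as `(20, 60, 120)` returns `False`.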

Unmanned and manned surface vehicles are commonly used for marine fauna monitoring [25,26]. Tian et al. [27] observed sea ice using data collected from a boat. Because detection with the naked eye is slow and expensive, the authors developed an algorithm to automate the task. Methods such as support vector machine (SVM) and random forest (RF) were applied to the images taken from the surface vehicle, achieving a success rate of over four out of five. The authors stated that this rate increased with the addition of different machine learning methods [27]. Cao et al. [28] investigated an unmanned surface vehicle (USV) for monitoring seawater quality, in which total dissolved solids, pH, and turbidity data were used to assess water quality [28]. Dabrowski et al. [29] jointly processed data from a satellite, an unmanned aerial vehicle (UAV), and a USV to observe the tombolo phenomenon in Sopot, Poland [29]. Specht et al. [30] investigated a bathymetric monitoring system for shallow waterbodies that uses both a UAV and a USV; several methods, including artificial neural networks (ANN), were used for data processing [30]. Papachristopoulou et al. [31] observed a beach in Greece from a surface vehicle, collecting images with cameras mounted on it, and evaluated the images to assess pollution on the beach [31]. Wang et al. [32] developed an unmanned surface vehicle to detect oil spill incidents quickly and to collect the spilled oil. The vehicle is equipped with eight cameras, and the camera data are transferred over a wireless local area network (LAN) to a central station, where they are processed and the determination is made [32]. Dowden et al. [33] examined sea ice images collected on board an icebreaker ship. The authors used two neural network methods to monitor sea ice, namely the Segmentation Network (SegNet) and the Pyramid Scene Parsing Network (PSPNet101). PSPNet101 combines several methods, one of which is based on a convolutional neural network (CNN) [33].

Mucilage-affected areas have been monitored using different sensors, such as temperature sensors, a pH meter, and dissolved oxygen meters, and this involves monitoring changes in the quality of seawater induced by marine mucilage [

34]. Seiber et al. [

35] investigated a Zigbee-based buoy network to monitor the seas for marine mucilage. Buoys are used to measure temperature, which is one of the symptoms indicating marine mucilage [

35]. Martin et al. [

36] conducted a study on the data of several sensors on buoys in the Ligurian Sea, and these sensors included temperature or salinity sensors. The data were collected during the period when the mucilage’s density increased in the Ligurian Sea and included data collected by other researchers during a period when similar events occurred in the Adriatic Sea; these data were compared. Similarities between the data were also described in the study [

36]. In addition to these measurements, samples taken from the sea could also be investigated by using microscopes and other sensors. Ohman et al. [

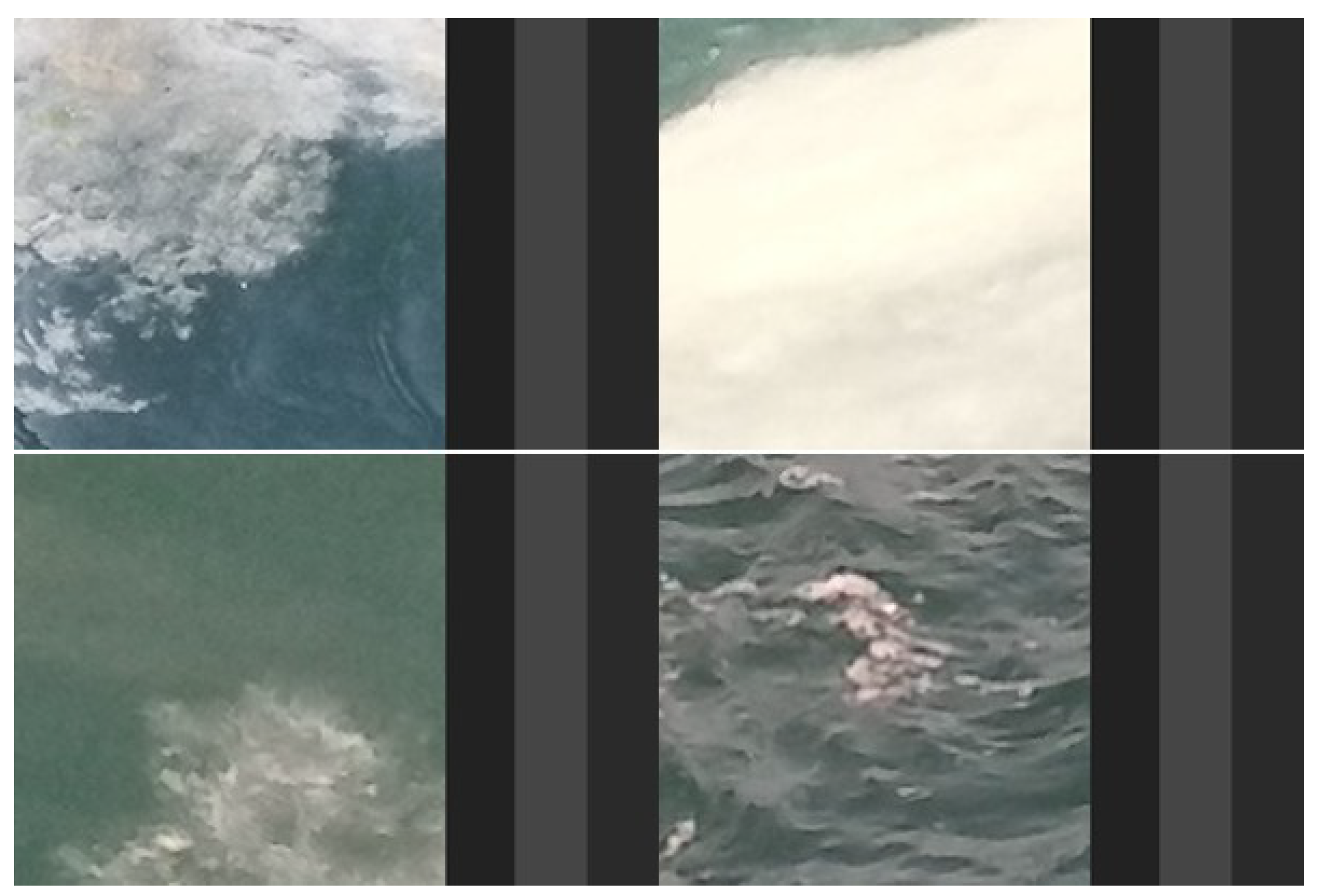

37] investigated a study to analyze samples from the sea using optical zoom capability devices. Different types of zooplanktons and mucilage, also known as sea snow, were detected [

37]. Totti et al. [

38] investigated samples from the sea that were collected from the same locations but at different times: at the time of mucilage bloom and when there was no mucilage. The samples were investigated, and the number and types of plankton were calculated and noted, respectively. In addition, other sensors were used to detect the values of chemicals that were observed in marine mucilage samples by measuring phosphate (

) and silicate (

) values [

38]. Giani et al. [

39] collected marine mucilage samples from the Northern Adriatic Sea. In the location where the data were collected, some parameters such as temperature, pH, and dissolved oxygen were measured. In addition, the collected data were investigated for their chemical content in the laboratory [

39].

Mucilage not only harms the economy by decreasing income from recreation, fishing, and tourism, but also harms the ecosystem because of the low dissolved oxygen levels observed during a mucilage bloom. It also affects the food chain of marine fauna [40]. These negative effects can be extreme and increase the mortality rate of different species [40,41,42]. In addition, a record number of opportunistic species, such as ostracods, is observed during a mucilage bloom [43]. Moreover, mucilage harms the filters through which seawater flows into a ship’s cooling system [44].

To decrease the volume of mucilage, it is important to address the aforementioned conditions that give rise to it. It is also important to combat marine mucilage on the sea’s surface during a mucilage bloom [40]. Collecting mucilage patches from the sea surface helps increase the dissolved oxygen level in the sea [40]. In addition, stopping fishing operations during a mucilage bloom can be useful, since fish can eat mucilage [40,45]. Using beneficial bacteria is another way to combat marine mucilage [46]. For these reasons, it is important to monitor marine mucilage and detect its locations.

The goal of our study is to develop a system for autonomously monitoring marine mucilage on sea surfaces. Marine mucilage can be monitored in different ways, such as with satellite-based or unmanned aerial vehicle (UAV)-based methods, or with unmanned or manned surface vehicles. Surface vehicles, however, can also combat marine mucilage using the different methods explained in the previous paragraph. It is possible to use UAVs to detect mucilage regions and then direct unmanned surface vehicles (USVs) to them, but performing both monitoring and combating mucilage with a single vehicle is the more economical solution. For this reason, the investigated system assumes a single USV. Three measuring methods are developed in this study for USVs to monitor marine mucilage. First, the USV checks the areas along its route by taking images of the sea’s surface, and the areas in the images are classified as mucilage-candidate or non-mucilage areas. If mucilage-candidate areas are identified, the USV is directed to that region. Second, after reaching the mucilage-candidate region, the USV collects data using its sensors, such as a pH meter, a dissolved oxygen sensor, and an electrical conductivity sensor. Third, the USV collects samples from mucilage-candidate areas for analysis, either on the USV itself or at the base station, using other sensors or microscopes. This analysis is a useful tool for detecting marine mucilage in doubtful cases. Using different types of sensing methods increases the accuracy of mucilage detection. In addition, identifying mucilage-candidate regions first by image analysis and directing the USV only to those regions saves time and energy.
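The three-stage flow described in this paragraph can be sketched as a gated pipeline in which the cheaper image check decides whether the later stages run at all. This is a minimal sketch under stated assumptions: the names `SensorReading` and `monitor_area`, the callable-based interface, and in particular the rule used to combine the sensor and sample results into a final label are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SensorReading:
    """Onboard measurements taken in a mucilage-candidate area (units assumed)."""
    ph: float
    dissolved_oxygen_mg_l: float
    conductivity_ms_cm: float


def monitor_area(
    image_flags_candidate: Callable[[], bool],
    read_sensors: Callable[[], SensorReading],
    sensors_suggest_mucilage: Callable[[SensorReading], bool],
    sample_confirms_mucilage: Callable[[], bool],
) -> str:
    """Run the three stages in order and return a label for the area.

    Stage 1 (image classification) gates stages 2 and 3, so the USV only
    travels to and measures areas that the image check flagged.
    """
    # Stage 1: image-based screening along the route.
    if not image_flags_candidate():
        return "no_mucilage"

    # Stage 2: onboard sensor measurements in the candidate area.
    sensor_vote = sensors_suggest_mucilage(read_sensors())

    # Stage 3: sample collection and analysis (on the USV or at the base station).
    sample_vote = sample_confirms_mucilage()

    # Hypothetical fusion rule: agreement confirms, disagreement stays open.
    if sensor_vote and sample_vote:
        return "mucilage"
    if sensor_vote or sample_vote:
        return "suspicious"
    return "no_mucilage"
```

Passing the stages as callables keeps the sketch independent of any particular classifier or sensor driver, and it makes the time and energy saving explicit: when the image stage returns `False`, neither the sensors nor the sampler is invoked.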

4. Discussion

Unmanned surface vehicles are becoming increasingly common. In this study, it was proposed that unmanned surface vehicles could be used to monitor marine mucilage, which has become a common problem in many countries of the Mediterranean Basin, especially in Turkey. This proposal is further supported by the fact that an institution of the Turkish State supports a project on the joint use of UAVs and USVs in combating marine mucilage [68]. In addition to monitoring mucilage, unmanned surface vehicles can collect it from the sea surface during a bloom. A three-stage mucilage determination system was envisaged to fulfill this task.

In the first step, the areas suspected of containing mucilage were determined by image processing. The image-based mucilage detection application was implemented using five different methods. All of them were sufficiently successful to be usable, and the highest success rate was obtained with the FFNN method. Using the FFNN method in the first stage (image processing) is therefore strongly recommended if a computer with sufficient processing capability is available on the USV. In the second step, parameters such as pH, electrical conductivity, and dissolved oxygen level were measured in areas suspected of containing mucilage. The literature (for example, [34,40,42]) states that mucilage decreases the oxygen level in seawater; the measurements provided in Table 6 are therefore in line with the literature. The literature (for example, [34]) also states that mucilage decreases the pH level of seawater; the measurements provided in Table 7 are therefore likewise in line with the literature. Similar salinity levels have been reported during the mucilage bloom period in two different seas of the Mediterranean, the Adriatic Sea and the Ligurian Sea [36]. It can therefore be expected that the salinity level, and accordingly the electrical conductivity, will change during the mucilage period. In this respect, the information provided in Table 8 is in accordance with the literature. The use of three different sensors makes it possible to distinguish seawater with mucilage not only from standard (non-mucilage) seawater but also from seawater contaminated with heavily acidic or heavily basic matter. This is shown in Table 6, Table 7, and Table 8, in which Sample 3 represents an intensely acidic pollutant and Sample 4 an intensely basic pollutant, as described in Section 2.2. In the third step, samples were taken from the area suspected of containing mucilage and examined under a microscope in the laboratory. Two different methods were used for this examination. The success rates of both methods are at an acceptable level; however, the RGB-based method is recommended because the microscope examination is performed under controlled conditions.
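The separation of mucilage from heavily acidic or heavily basic contamination described above can be illustrated with a simple rule-based sketch. It encodes only the qualitative statements in the text: mucilage lowers both pH and dissolved oxygen relative to standard seawater, whereas the acidic and basic pollutants shift pH strongly. All numeric values below (the baseline pH of 8.1, the baseline dissolved oxygen of 7.0 mg/L, and the three shift thresholds) are hypothetical placeholders and are not taken from Table 6, Table 7, or Table 8. Electrical conductivity is left out because the text states only that it changes, not in which direction.

```python
def classify_water(
    ph: float,
    dissolved_oxygen: float,
    baseline_ph: float = 8.1,       # assumed reference pH of standard seawater
    baseline_do: float = 7.0,       # assumed reference dissolved oxygen (mg/L)
    strong_ph_shift: float = 2.0,   # hypothetical: shift marking heavy pollution
    mild_ph_shift: float = 0.2,     # hypothetical: shift consistent with mucilage
    do_drop: float = 1.0,           # hypothetical: oxygen drop consistent with mucilage
) -> str:
    """Label a water sample from its pH and dissolved oxygen readings."""
    ph_delta = ph - baseline_ph

    # Strong pH shifts point to acidic or basic contamination, not mucilage.
    if ph_delta <= -strong_ph_shift:
        return "acidic_pollutant"
    if ph_delta >= strong_ph_shift:
        return "basic_pollutant"

    # A mild pH decrease together with an oxygen decrease matches the
    # behaviour that the literature reports for mucilage.
    if ph_delta <= -mild_ph_shift and (baseline_do - dissolved_oxygen) >= do_drop:
        return "mucilage_candidate"

    return "standard_seawater"
```

With these assumed values, `classify_water(7.7, 5.0)` returns `"mucilage_candidate"` and `classify_water(3.0, 7.0)` returns `"acidic_pollutant"`. In practice the baselines and thresholds would have to be calibrated against measurements from the monitored sea.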

In addition, two studies on the detection of sea ice on the sea’s surface were discussed for comparison purposes. The comparison indicates that the methods applied here can also be used in other sea-surface monitoring applications. Previous studies have detected sea ice or observed pollution such as oil spills from manned or unmanned surface vehicles, and marine mucilage has been detected in images taken from satellites or manned/unmanned aerial vehicles. In this study, unlike previous ones, we aimed to monitor marine mucilage areas from unmanned surface vehicles. Our results were compared in Section 3.1.3 with those of other studies that focused on classification of the sea’s surface, and they are within the acceptable range.

In this study, it was assumed that image acquisition, onboard sensor usage, and sampling were carried out in appropriate meteorological conditions. Under adverse meteorological conditions, supporting mechanisms may be needed so that the specified measurements can be made with acceptable accuracy; the authors will address this issue in future studies. A further scenario was also considered, in which the USV takes pictures and collects onboard sensor data along a predetermined patrol route. In this scenario, the camera images are combined with the data from three different onboard sensors and fed into a ResNet-50 network. The results produced by the network have a very high success rate. This method can therefore be considered useful when the USV is required to follow a fixed route continuously in its search for mucilage.

The authors intend to develop an unmanned surface vehicle for mucilage monitoring and collection, or to participate in studies conducted for this purpose, in the near future. We also aim to design underwater scanning features with mucilage in mind, in addition to the surface mission requirements.