Abstract

Cemented paste backfill (CPB) technology has gained considerable popularity worldwide. Yield stress (YS), the minimal shear stress necessary to start the flow, is a significant factor in the assessment of CPB’s flowability or transportability, and it serves as an excellent measure of the strength of the particle-particle interaction. The traditional experimental measurement of YS is time-consuming and costly, which induces delays in construction projects. Moreover, the YS of CPB depends on numerous factors such as the cement/tailing ratio, solid content and oxide content of the tailing. Therefore, in order to simplify YS estimation and evaluation, Artificial Intelligence (AI) approaches comprising eight Machine Learning (ML) techniques (the Extreme Gradient Boosting algorithm, Gradient Boosting algorithm, Random Forest algorithm, Decision Trees, K-Nearest Neighbor, Support Vector Machine, Multivariate Adaptive Regression Splines and Gaussian Process) are used to build soft-computing models for predicting the YS of CPB. The performance of these models is evaluated by three metrics: coefficient of determination (R2), Root Mean Square Error (RMSE) and Mean Absolute Error (MAE). The three best models for predicting the YS of CPB were, in descending order, Gradient Boosting (GB), Extreme Gradient Boosting (XGB) and Random Forest (RF), with the following metrics for the testing dataset: GB {R2 = 0.9811, RMSE = 0.1327 MPa, MAE = 0.0896 MPa}, XGB {R2 = 0.9034, RMSE = 0.3004 MPa, MAE = 0.1696 MPa} and RF {R2 = 0.8534, RMSE = 0.3700 MPa, MAE = 0.1786 MPa}. Based on these three best-performing models (GB, XGB and RF), other AI techniques such as SHapley Additive exPlanations (SHAP), Permutation Importance, and Individual Conditional Expectation (ICE) are also used to evaluate the effect of each factor on the YS of CPB.
The results of this investigation can help engineers accelerate the mix design of CPB.

1. Introduction

The mining sector produces large amounts of waste, the major types being waste rock and concentrator waste (mining tailings). Environmental issues are frequently caused by the surface storage of these discharges. In particular, sulfur-bearing discharges that are exposed to air and water result in acid mine drainage [1]. One method of managing these mining wastes is to make paste backfill, a mixture of tailing, water, and a binding agent such as cement. This technology is called Cemented Paste Backfill (CPB). CPB’s main purpose is to aid in ground control by serving as a supplemental support for mine workers. By lowering the volume of concentrator rejects that must be kept on the surface, underground paste backfill also lessens the environmental effects of mining operations. Today, CPB remains the most popular technology for reusing tailing.

Yield Stress (YS) is a significant factor considered in the assessment of CPB’s flowability or transportability. The minimal shear stress necessary to start the flow is known as the yield stress, and it serves as an excellent measure of the strength of the particle-particle interaction [2]. It can be used as a quantitative standard for evaluating and controlling quality [3]. Changes in friction loss and pipe diameter in pipeline design are frequently linked to variations in the yield stress of fluid CPB. Considerable research has been conducted to evaluate the yield stress of CPB and the factors that influence it. The studies [4,5] have demonstrated that the yield stress of CPB is affected by external factors (such as warm temperatures and loading pressure) as well as internal factors (such as pH, density and concentration of the CPB mixtures, characteristics of the CPB mixture constituents, and curing time). Recently, Haiqiang et al. [6] experimentally determined the yield stress of cemented paste backfill in sub-zero environments. Overall, the YS of CPB depends on numerous input variables; moreover, the influence of these input factors on the CPB yield stress needs to be studied more carefully by increasing the number of samples considered.

Therefore, the prediction of CPB Yield Stress (YS) and the evaluation of the factors affecting it call for a suitable approach. Artificial intelligence (AI) using Machine Learning (ML) algorithms is a novel and relevant way of predicting and studying the YS of CPB, which depends on many factors. Machine learning tools enable fast, convenient and accurate prediction of the YS of CPB, together with a more accurate assessment of the influence of the input factors on it. An artificial intelligence approach therefore makes the design of CPB’s delivery component quicker and easier for engineers. Indeed, artificial intelligence or machine learning models have been applied to determine the compressive strength of concrete [7,8], to design reinforced lightweight soil [9], to investigate and design stabilized soil mixes [10,11], to assess the permeability coefficient of soil [12], and to study the durability of concrete in chloride environments [13,14] and carbonate environments [15]. Moreover, in structural and seismic engineering, supervised machine learning algorithms have attracted a lot of interest, because ML-based models can capture the link between the predictors and the response variable(s) without requiring an understanding of the underlying mathematical and physical theories [16,17]. In addition, artificial intelligence models have been applied directly in CPB-related studies. For instance, Qi et al. [18] developed an artificial intelligence-based algorithm for predicting the unconfined compressive strength of cemented paste backfill made from recycled waste tailings. The boosted regression trees (BRT) approach was used to model the non-linear relationship between inputs and outputs, and particle swarm optimization (PSO) was used to tune the BRT hyper-parameters.
To predict the unconfined compressive strength of cemented paste backfill, Qi et al. [19] employed a neural network and particle swarm optimization. Using a hybrid machine learning approach that combines artificial neural networks and differential evolution, Qi et al. [20] improved the prediction of pressure drops of new cemented paste backfill slurry. Recently, Liu et al. [21] performed a comparative study of prediction models based on a support vector machine (SVM), decision tree (DT), random forest (RF) and back-propagation neural network (BPNN) for evaluating the unconfined compressive strength of CPB. Regarding yield stress prediction, Qi et al. [22] used machine learning algorithms (including decision tree (DT), Gradient Boosting (GB), and random forest (RF)) and genetic algorithms (GA) for predicting the Yield Stress (YS) of CPB. The best ML model in the investigation of Qi et al. [22] for predicting the YS of CPB is the GB model, with R2 = 0.8082; therefore, there is room to improve the performance of ML models in predicting the YS of CPB. With a higher-performing ML model, the effect of the input variables on the YS of CPB can also be evaluated in greater depth.

In order to build an ML model with high accuracy and reliability for predicting the YS of CPB, eight popular ML algorithms, namely Extreme Gradient Boosting (XGB), Gradient Boosting (GB), Random Forest (RF), Decision Trees (DT), K-Nearest Neighbor (KNN), Support Vector Regression (SVR), Multivariate Adaptive Regression Splines (MARS) and Gaussian Process regression (Gau), are trained on a database derived from the investigation of Qi et al. [22]. These algorithms are available in open-source Python libraries. The performance of each ML model is evaluated by three metrics, the coefficient of determination R2, Root Mean Square Error (RMSE), and Mean Absolute Error (MAE), with the aid of 10-Fold Cross Validation to verify its reliability. The ML models with the best performance are then used for the feature importance analysis based on SHapley Additive exPlanations (SHAP), Permutation Importance and Individual Conditional Expectation (ICE).

2. Description of Database

The dataset used in this study was collected from the previous study by Qi et al. [22]. It includes 299 samples with 13 input variables: Gs, D10, D50, Cu, Cc, SiO2, CaO, Al2O3, MgO, Fe2O3, C/T, solid content and To. Here, Gs is the specific gravity of the cemented paste backfill; D10 and D50 are the grain diameters (mm) below which 10% and 50% of the tailing grains pass, respectively; Cu is the coefficient of uniformity of the tailing; Cc is the coefficient of curvature of the tailing; C/T is the ratio of cement to tailing used in the CPB; and solid content is the percentage of the “cement and tailing” mix in the CPB. SiO2, CaO, Al2O3, MgO and Fe2O3 are the contents (%) of each oxide in the tailing. Lastly, To is the curing age of the CPB. The output of the database is the Yield Stress of CPB, denoted YS.

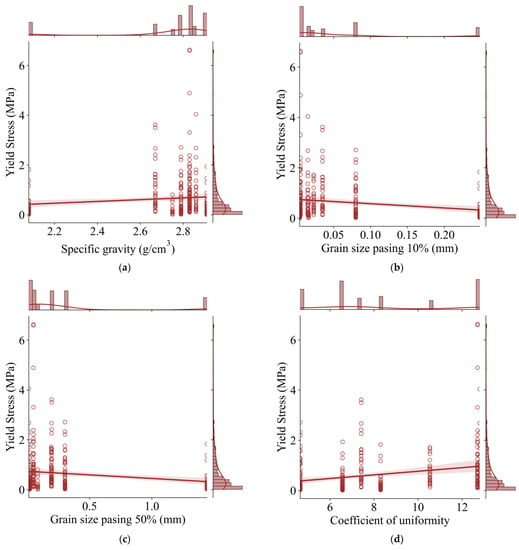

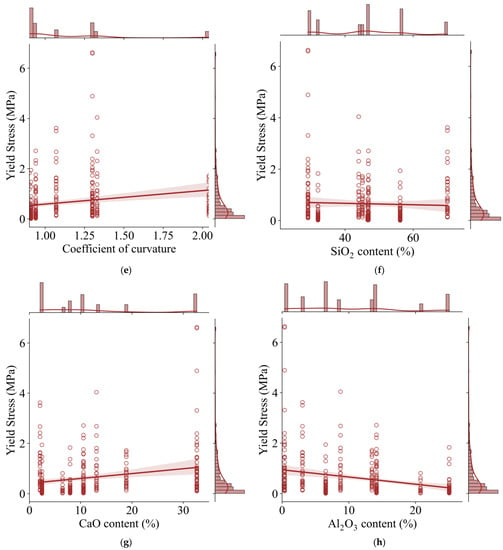

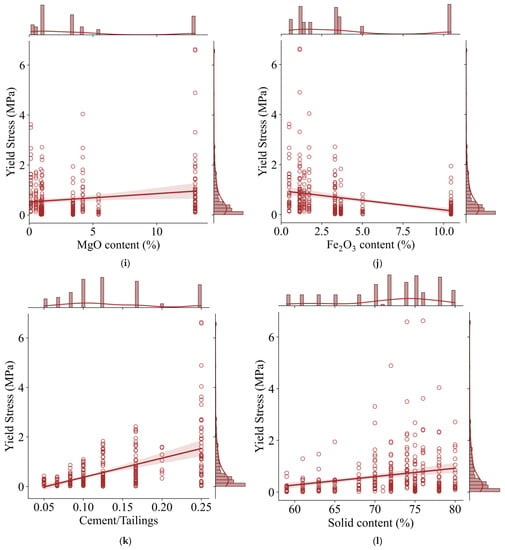

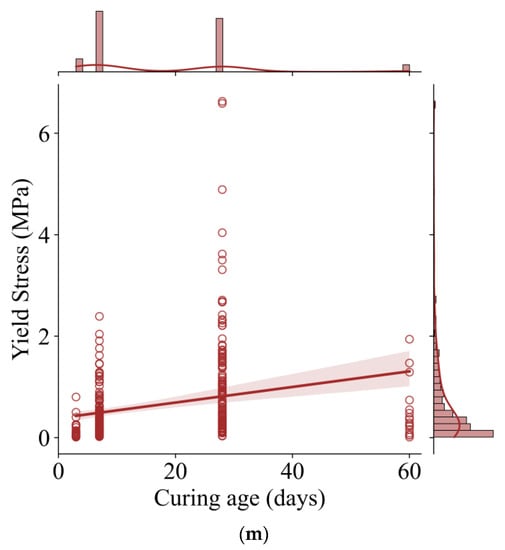

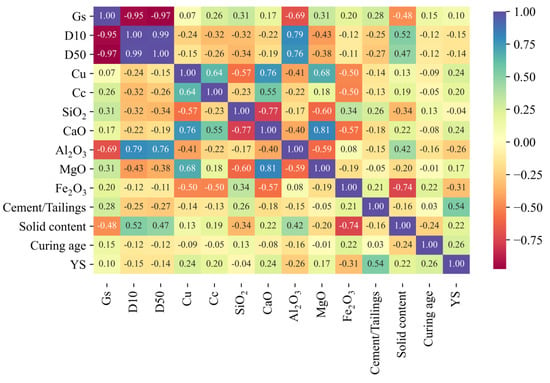

Figure 1 describes the distribution of each input variable against the output variable YS. Using a linear regression fit, the simplest relation between two variables, the YS depends positively on the C/T variable, and this dependence is clearly stronger than the dependence on the other input variables. The Pearson correlation coefficient of the YS-C/T pair is +0.54, the highest correlation with YS, as can be seen in detail in Figure 2. The lowest absolute Pearson correlation coefficient with YS, equal to 0.04, belongs to the SiO2 content. In the matrix of Pearson correlation coefficients shown in Figure 2, some absolute values between two features are greater than 0.7, for instance, Gs-D10 (0.95), Gs-D50 (0.97), D10-D50 (0.99), D10-Al2O3 (0.79), D50-Al2O3 (0.76), CaO-Cu (0.76), Fe2O3-Solid content (0.74), MgO-CaO (0.81), and SiO2-CaO (0.77). The high Pearson correlations among the oxide contents seem to be due to the mineral composition of the tailing, which also influences its grain size distribution (D50, D10, Cu). Observing Figure 1a–c, it is worth noting that each specific gravity Gs value has corresponding D10 and D50 values; therefore, the Pearson coefficients of D10-Gs and D50-Gs are high. The same holds for the D10-D50 Pearson correlation of 0.99, in which each D10 value has a corresponding D50 value. Nevertheless, in order to understand the effect of each feature on the YS through the feature importance analysis by Permutation Importance and SHapley Additive exPlanations (SHAP) in the last section of this investigation, all 13 initial input variables are retained for building the Machine Learning models.
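The screening of highly correlated feature pairs described above can be sketched as follows. This is a minimal illustration only: the three columns are synthetic stand-ins (not the study's database), the variable names are hypothetical, and the 0.7 threshold is the one quoted in the text.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 299
# Hypothetical columns: d50 is built to track d10 closely, ct is independent.
d10 = rng.uniform(0.001, 0.02, n)
d50 = 5.0 * d10 + rng.normal(0.0, 0.002, n)
ct = rng.uniform(0.05, 0.25, n)

names = ["D10", "D50", "C/T"]
corr = np.corrcoef(np.vstack([d10, d50, ct]))  # each row is one variable

# List the feature pairs whose absolute Pearson coefficient exceeds 0.7.
high_pairs = [
    (names[i], names[j], round(float(corr[i, j]), 2))
    for i in range(len(names))
    for j in range(i + 1, len(names))
    if abs(corr[i, j]) > 0.7
]
print(high_pairs)
```

Only the pair constructed to be nearly collinear is flagged; in the study, such pairs are nevertheless kept so that each feature can be examined individually later.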

Figure 1.

Distribution between each input variable and output variable Yield Stress: (a) Specific gravity, (b) Grain size passing 10%, (c) Grain size passing 50%, (d) Coefficient of uniformity, (e) Coefficient of curvature, (f) SiO2 content, (g) CaO content, (h) Al2O3 content, (i) MgO content, (j) Fe2O3 content, (k) Cement/Tailings, (l) Solid content and (m) Curing age.

Figure 2.

Coefficient of Pearson correlation of each input variable and Yield Stress.

Table 1 shows the statistical description of each variable in the database, including the minimum (min), maximum (max), mean, standard deviation (StD, a measure of how dispersed the data are in relation to the mean), Q25, Q75 and median. The values in Table 1 define the range of validity of the ML models once built. The YS varies widely, from 0.01 to 6.63 MPa; C/T varies from 0.05 to 0.25; the solid content, including cement (C) and tailing (T), varies from 59% to 80%; and the specific gravity of the tailing ranges from 2.08 g/cm3 to 2.91 g/cm3. The curing age To takes the values 3, 7, 28 and 60 days. The details of the oxide contents and grain size distribution of the tailing can be found in Table 1. In order to standardize the features by removing the mean and scaling to unit variance, all datasets are normalized with the StandardScaler() function of Sklearn preprocessing [23].
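The standardization step can be sketched as follows. This is a minimal illustration, assuming scikit-learn is installed; the array `X` is synthetic placeholder data with the same shape as the database, and fitting the scaler on the training split only is an assumption of this sketch rather than a detail stated in the text.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Synthetic stand-in for the 299 x 13 input matrix (hypothetical values).
X = rng.uniform(low=0.0, high=10.0, size=(299, 13))

X_train, X_test = train_test_split(X, test_size=0.3, random_state=0)

scaler = StandardScaler()
X_train_std = scaler.fit_transform(X_train)  # learn mean and variance on the training data
X_test_std = scaler.transform(X_test)        # reuse the training statistics

print(X_train_std.mean(axis=0).round(6))  # close to 0 for every feature
print(X_train_std.std(axis=0).round(6))   # close to 1 for every feature
```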

Table 1.

Statistical description including min, max, mean, StD, Q25, Q75 and median of each variable in the database.

3. Machine Learning Algorithm

3.1. Single Decision Tree

The single decision tree algorithm is a method for approximating the value of a discrete function. It is a typical classification method in which the data are processed first, inductive methods are used to build comprehensible rules and decision trees, and the results are then used to evaluate new data. A decision tree essentially classifies data using a set of rules, and the decision tree method builds a tree to uncover the categorization rules implied by the data. The fundamental question of the algorithm is how to design a decision tree with high precision and compact scale. A decision tree may be built in two phases. The first phase is decision tree generation, which is the process of creating a decision tree from the training sample set. In general, a training sample set is a historical data collection with a certain level of comprehensiveness, assembled according to actual needs for data analysis and processing. The second phase is decision tree pruning, which is the process of testing, correcting, and repairing the decision tree constructed in the previous phase, mostly using a fresh sample data set (called the test data set). These data are used to validate the preliminary rules established during tree construction, and any branches that impair predictive accuracy are removed.
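The two phases, generation and pruning, can be illustrated for a regression tree as below. This is a sketch under stated assumptions: the data are synthetic, and scikit-learn's minimal cost-complexity pruning (the `ccp_alpha` parameter) is used as a stand-in for the pruning procedure described above, which it does not necessarily match.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(2)
X = rng.uniform(0.0, 1.0, size=(200, 2))
y = np.sin(4.0 * X[:, 0]) + 0.1 * rng.normal(size=200)  # noisy synthetic target

# Phase 1: grow a full tree on the training samples.
full_tree = DecisionTreeRegressor(random_state=0).fit(X, y)

# Phase 2: prune; a larger ccp_alpha removes more weak branches.
pruned_tree = DecisionTreeRegressor(random_state=0, ccp_alpha=0.01).fit(X, y)

print(full_tree.get_n_leaves(), pruned_tree.get_n_leaves())
```

The pruned tree keeps far fewer leaves than the fully grown one, which is the "compact scale" the text refers to.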

3.2. Ensemble Models including Extreme Gradient Boosting, Gradient Boosting and Random Forest

The ensemble technique is effective because it combines predictions from numerous machine learning models to provide more accurate predictions than a single model. Random Forest (RF) is one of the most well-known ensemble methods, and it is simple to use. RF is based on bagging (bootstrap aggregation), which averages the results of several decision trees fitted on bootstrap sub-samples. It also restricts the search at each split to a random subset of the features (typically one-third of them in regression), which reduces the correlation between decision trees. Compared with linear models, it readily achieves a lower testing error. Boosting proceeds more slowly, building predictors sequentially rather than independently. It repeatedly exploits patterns in the residuals, concentrating on the observations that the current model predicts poorly, and thereby improves the model.

The gradient boosting (GB) algorithm is a popular approach that has proven successful in a variety of applications [24]. It learns sequentially, adding new models step by step so that the prediction of the response variable becomes progressively more precise. The main idea is to construct each new base learner so that it is maximally correlated with the negative gradient of the loss function of the whole ensemble. Extreme Gradient Boosting, or xgboost (XGB), has been reported to give a prediction error several times lower than plain boosting or random forest by combining the benefits of both Random Forest and Gradient Boosting (GB).
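The idea of fitting each new base learner to the negative gradient of the loss can be sketched by hand. The example below is an illustration under assumptions, not the implementation used in the study: it uses the squared-error loss (whose negative gradient is simply the residual), shallow scikit-learn regression trees as base learners, synthetic data, and hypothetical values for the learning rate and the number of rounds.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(3)
X = rng.uniform(0.0, 1.0, size=(300, 1))
y = np.sin(6.0 * X[:, 0]) + 0.1 * rng.normal(size=300)

learning_rate = 0.1  # hypothetical shrinkage factor
n_rounds = 100       # hypothetical number of boosting rounds

prediction = np.full_like(y, y.mean())  # initial constant model
mse_history = []
trees = []
for _ in range(n_rounds):
    residual = y - prediction  # negative gradient of 0.5 * (y - F)^2 with respect to F
    tree = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, residual)
    prediction += learning_rate * tree.predict(X)  # add the new learner to the ensemble
    trees.append(tree)
    mse_history.append(float(np.mean((y - prediction) ** 2)))

print(mse_history[0], mse_history[-1])
```

The training error falls as learners are added one after another, which is the sequential behavior that distinguishes boosting from the independent trees of a random forest.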

Chen and Guestrin [25] introduced the advanced supervised learning method known as Extreme Gradient Boosting or “xgboost” (XGB). This method is particularly popular in Kaggle competitions. The idea behind this method is “boosting,” which means that it uses additive training to combine the predictions of a group of “weak” learners into a “strong” learner. The objective function of XGB adds a regularization term to the loss function in order to prevent over-fitting and smooth the final learned weights [25].

To control over-fitting specifically, xgboost uses a more regularized model formalization, which improves performance. However, the term “xgboost” actually refers to the engineering objective of pushing the computational resource limit for boosted tree algorithms. It might be more appropriate to refer to the model as regularized gradient boosting. The xgboost prediction function can be expressed as the following:

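$$\hat{y}_j = \sum_{i=1}^{K} f_i(x_j)$$

where K is the total number of trees, $f_i$ represents the i-th tree, and $\hat{y}_j$ represents the prediction result of the sample $x_j$.

The loss function is

$$L = \sum_{j=1}^{m} l(y_j, \hat{y}_j)$$

where m is the number of samples and $y_j$ is the observed value of sample j. From a mathematical point of view, this is a functional optimization problem with the following objective function:

$$\mathrm{Obj} = \sum_{j=1}^{m} l(y_j, \hat{y}_j) + \sum_{i=1}^{K} \Omega(f_i)$$

where $l(y_j, \hat{y}_j)$ is the loss function and $\Omega(f_i)$ is the regularization function.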
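For reference, in the formulation of Chen and Guestrin [25] the regularization function of a single tree is commonly written as below. The symbols $T_{\mathrm{leaf}}$ (number of leaves of the tree f), $w_t$ (weight of leaf t), and the penalty coefficients $\gamma$ and $\lambda$ do not appear in the text above and are introduced here as assumptions of this sketch:

$$\Omega(f) = \gamma \, T_{\mathrm{leaf}} + \frac{1}{2} \lambda \sum_{t=1}^{T_{\mathrm{leaf}}} w_t^{2}$$

Larger values of $\gamma$ and $\lambda$ penalize trees with many leaves or large leaf weights, which is how the regularization term limits over-fitting.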

Wakjira et al. [26] summarize the RF algorithm as follows. Random forest is an ensemble model that builds numerous decision trees in parallel as a committee, each providing a separate output, and then combines these outputs to produce the final outcome, which improves on the predictive accuracy of the underlying trees. Random forest thus operates in two stages: (a) building T decision trees (estimators) and (b) combining their predictions, by the arithmetic mean across the estimators in the case of regression and by a majority vote in the case of classification. RF regression grows the trees from samples drawn with replacement (bootstrap samples) from the training dataset, together with randomly chosen input features. Furthermore, at each node the best split is selected among a random subset of the input attributes. The hyperparameters of random forest include the number of estimators (the number of trees used to make the prediction), the maximum number of randomly chosen features considered at each node split, the minimum number of samples at a leaf node, and the maximum depth to which a tree can grow.

Building on Bagging ensembles with decision trees as the base learners, RF further adds random attribute selection to the training process of the decision trees. Specifically, a traditional decision tree selects the optimal attribute among all candidate attributes of the current node (assuming there are d) when choosing the partition attribute. In RF, by contrast, for each node of a base decision tree, a subset of k attributes is first selected at random from the candidate attribute set of the node, and the optimal attribute for the split is then chosen from this subset. The choice of the number of attributes k is important, and a default value is generally recommended. Therefore, the “diversity” of the base learners of the random forest comes not only from the perturbation of the samples but also from the perturbation of the attributes, which further enhances the generalization ability of the final ensemble.
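The hyperparameters listed above, and the averaging of tree outputs in regression, can be illustrated with scikit-learn's `RandomForestRegressor`. This is a sketch on synthetic data; all hyperparameter values are hypothetical and are not the tuned values of the study.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(4)
X = rng.uniform(0.0, 1.0, size=(200, 6))
y = 3.0 * X[:, 0] + np.sin(5.0 * X[:, 1]) + 0.1 * rng.normal(size=200)

forest = RandomForestRegressor(
    n_estimators=50,     # number of trees
    max_features=2,      # k attributes drawn at random at each split
    min_samples_leaf=2,  # minimum number of samples in a leaf
    max_depth=8,         # maximum depth of each tree
    bootstrap=True,      # each tree is fitted on a bootstrap sample
    random_state=0,
).fit(X, y)

# The forest prediction is the arithmetic mean of the individual tree predictions.
tree_mean = np.mean([tree.predict(X) for tree in forest.estimators_], axis=0)
print(np.allclose(tree_mean, forest.predict(X)))
```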

3.3. K-Nearest Neighbor and Support Vector Machine

The K-Nearest Neighbor (KNN) technique is a popular supervised learning algorithm that can perform classification and regression tasks. Its operating principle is extremely simple: given a test sample, find the k training samples nearest to it according to a distance measure, and use the information from these “neighbors” to make the prediction. In a classification task, voting is usually used; that is, the category label that appears most often among the k neighbors is selected as the prediction. In a regression task, averaging can be used; that is, the mean of the real-valued outputs of the k neighbors is taken as the prediction. Weighted averaging or weighted voting based on distance can also be used (the closer the neighbor, the greater its weight). The choice of k, the distance measure and the decision rule are the three basic elements of the k-nearest neighbor method.
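A minimal sketch of KNN regression, with plain and distance-weighted averaging, is given below. The function name `knn_predict`, the Euclidean distance and the inverse-distance weights are choices made for this illustration, and the small data set is hypothetical.

```python
import numpy as np

def knn_predict(X_train, y_train, x_query, k=3, weighted=False):
    """Predict a real-valued output from the k nearest training samples."""
    distances = np.linalg.norm(X_train - x_query, axis=1)  # Euclidean distance
    nearest = np.argsort(distances)[:k]                    # indices of the k neighbors
    if not weighted:
        return float(y_train[nearest].mean())
    weights = 1.0 / (distances[nearest] + 1e-12)  # closer neighbors weigh more
    return float(np.sum(weights * y_train[nearest]) / np.sum(weights))

X_train = np.array([[0.0], [1.0], [2.0], [3.0], [10.0]])
y_train = np.array([0.0, 1.0, 2.0, 3.0, 10.0])

plain = knn_predict(X_train, y_train, np.array([1.2]), k=3)
weighted = knn_predict(X_train, y_train, np.array([1.2]), k=3, weighted=True)
print(plain, weighted)
```

With k = 3, the distant sample at x = 10 never enters the neighborhood of the query, which shows how the choice of k and the distance measure shape the prediction.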

In machine learning, support vector machines (SVM) are supervised learning models with associated learning algorithms. SVM is appropriate for small and medium-sized data samples, as well as nonlinear, high-dimensional classification tasks. The feature vector of each instance (for example, two-dimensional) is mapped to a point in space, and the points belong to two separate categories. The goal of SVM is to build the line (or, in higher dimensions, the hyperplane) that “best” separates these two kinds of points, such that new points appearing in the future are also classified well. Support vector machines enlarge the vector space so that a pattern in a lower-dimensional space may be embedded in a higher-dimensional space in which it can be learned by a linear model such as a perceptron. This is accomplished by linking new vectors with certain sub-patterns, which are known as support vectors. The purpose of a support vector machine is to learn which support vectors are required to represent a pattern.

KNN and SVM represent different approaches to learning. Each method assumes a distinct model for the underlying data. SVM makes the rather restrictive assumption that a hyperplane separates the data points, whereas KNN seeks to approximate the underlying distribution of the data in a non-parametric way (a crude approximation of the Parzen-window estimator).
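The description above is phrased for classification, whereas this study uses the regression counterpart (SVR). A minimal sketch with scikit-learn's `SVR` is shown below; the data are synthetic and the values of `C` and `epsilon` are hypothetical, not those tuned in the study.

```python
import numpy as np
from sklearn.svm import SVR

rng = np.random.default_rng(5)
X = np.sort(rng.uniform(0.0, 5.0, size=(120, 1)), axis=0)
y = np.sin(X[:, 0]) + 0.05 * rng.normal(size=120)

# The RBF kernel implicitly maps the inputs to a higher-dimensional space.
model = SVR(kernel="rbf", C=10.0, epsilon=0.05).fit(X, y)

n_support = len(model.support_)  # training samples retained as support vectors
print(n_support, "of", len(X), "training samples are support vectors")
```

Only the samples lying on or outside the epsilon-tube are kept as support vectors, so the fitted function depends on a subset of the training data.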

3.4. Multivariate Adaptive Regression Splines and Gaussian Process

Multivariate adaptive regression splines (MARS) are most easily understood through piecewise linear functions. Linear regression assumes that the relationship between a feature variable and the response variable is monotonic and linear. When multiple regression is performed on complex data sets with several feature variables (assuming for the moment that the variables are uncorrelated), some feature variables have an obvious linear relationship with the response variable, but others have a more complex one. When the link between a feature variable x and the response variable y is complicated and nonlinear, it is broken into (n + 1) roughly linear pieces by determining an appropriate number (n) of cut points, or knots, at which hinge functions are placed. In a segmented regression between x and y, the sum of squared errors (SSE) of the two linear models produced by a split is smaller than the SSE before segmentation, and the point x giving the largest reduction is picked as the split point.

In general linear regression, one predictor corresponds to one term (coefficient), whereas in this piecewise regression one predictor with n knots corresponds to n + 1 terms.
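The knot selection described above can be sketched for a single predictor and a single knot. This is an illustration under assumptions, not a full MARS implementation: the data are synthetic with a slope change placed at x = 4, the candidate knots are a hypothetical grid, and the helper `hinge_sse` is introduced only for this example.

```python
import numpy as np

rng = np.random.default_rng(6)
x = np.sort(rng.uniform(0.0, 10.0, 200))
# Synthetic piecewise-linear response with a slope change at x = 4.
y = np.where(x < 4.0, 1.0 * x, 4.0 + 3.0 * (x - 4.0)) + 0.2 * rng.normal(size=200)

def hinge_sse(knot):
    """Fit y ~ b0 + b1*max(0, x - knot) + b2*max(0, knot - x) and return the SSE."""
    basis = np.column_stack([
        np.ones_like(x),
        np.maximum(0.0, x - knot),  # right hinge function
        np.maximum(0.0, knot - x),  # left hinge function
    ])
    coef, *_ = np.linalg.lstsq(basis, y, rcond=None)
    return float(np.sum((y - basis @ coef) ** 2))

candidates = np.linspace(1.0, 9.0, 81)  # candidate knots on a 0.1 grid
best_knot = float(min(candidates, key=hinge_sse))

# SSE of a single straight line, for comparison with the segmented fit.
linear_sse = float(np.sum((y - np.polyval(np.polyfit(x, y, 1), x)) ** 2))
print(best_knot, hinge_sse(best_knot), linear_sse)
```

The knot with the lowest SSE lands near the true slope change, and the segmented fit has a much smaller SSE than the single straight line.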

A Gaussian process (GP) is a random process in which observations occur in a continuous domain (such as time and space). Each point in the continuous input space of a Gaussian process is associated with a normally distributed random variable. Moreover, every finite collection of these random variables has a multivariate normal distribution, so any finite linear combination of them is normally distributed. As the joint distribution of all (infinitely many) random variables, the distribution of a Gaussian process is a distribution over functions on a continuous domain. A GP can thus be regarded as an infinite-dimensional multivariate normal distribution (MVN): it is an infinite set of variables, every finite subset of which is jointly MVN-distributed. Simply said, because every finite collection of data (y) from the GP has a multivariate Gaussian distribution, it inherits all the convenient properties of the MVN (conditional distributions are Gaussian, marginal distributions are Gaussian, etc.). GPs can also be seen as a probability distribution over functions rather than over variables. This is very helpful because machine learning frequently involves some kind of function approximation. By observing only a finite set of variables, a GP enables us to infer posterior distributions over functions.
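Inferring a posterior over functions from a finite set of observations can be sketched with scikit-learn's `GaussianProcessRegressor`. The data are synthetic, and the kernel (an RBF term plus a white-noise term) is an assumption of this example rather than the kernel used in the study.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, WhiteKernel

rng = np.random.default_rng(7)
X_obs = rng.uniform(0.0, 5.0, size=(25, 1))
y_obs = np.sin(X_obs[:, 0]) + 0.05 * rng.normal(size=25)

kernel = RBF(length_scale=1.0) + WhiteKernel(noise_level=0.01)
gp = GaussianProcessRegressor(kernel=kernel, random_state=0).fit(X_obs, y_obs)

# Posterior mean and standard deviation inside and far outside the observed range.
X_new = np.array([[2.5], [15.0]])
mean, std = gp.predict(X_new, return_std=True)
print(mean, std)
```

The posterior standard deviation is small where observations are dense and grows far from them, which is the practical benefit of modelling a distribution over functions.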

3.5. Tuning Hyperparameter and K-Fold Cross Validation

According to the conception proposed in [27,28,29,30], all hyperparameters of ML models should be tuned before the models are applied to specific problems. The prediction effectiveness and generalizability of a given model are determined by its hyperparameter values [27]. Hyperparameter tuning, or optimization, is used to determine the best values of the hyperparameters. Particle Swarm Optimization is one of the effective algorithms for tuning the hyperparameters of ML algorithms [10]. During the hyperparameter tuning process, the K-Fold Cross-Validation technique is used to avoid over-fitting issues. First, the dataset is divided into training and testing datasets, which contain 70% and 30% of the whole dataset, respectively [31]. The following three steps are used to perform the K-Fold Cross Validation:

- (i) Divide the training dataset into K independent, equal-sized groups, or folds, without replacement, so that each observation serves in the validation fold exactly once.

- (ii) Fit the model using K-1 folds and validate it on the remaining fold.

- (iii) Repeat step (ii) K times, each time with a different validation fold, to generate K performance indices.

The mean of K performance indicators is used to calculate the model’s ultimate performance. In this study, the hyperparameters are optimized using Particle Swarm Optimization (PSO) in combination with a 10-Fold Cross Validation (K = 10). In 10-Fold Cross-Validations, 90% of the training dataset is used to train the model, with the remaining 10% of the training dataset serving as a validation dataset in each iteration. The tuning process code can be found in the Hyperactive library [32].
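The 70/30 split followed by 10-Fold Cross Validation on the training part can be sketched as follows. The data are synthetic, and the hyperparameter values stand for one hypothetical candidate that a search algorithm might propose; the PSO search itself and the Hyperactive API are deliberately not reproduced here.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import KFold, cross_val_score, train_test_split

rng = np.random.default_rng(8)
X = rng.uniform(0.0, 1.0, size=(299, 4))
y = 2.0 * X[:, 0] + np.sin(5.0 * X[:, 1]) + 0.1 * rng.normal(size=299)

# 70% training / 30% testing; the testing part is not touched during tuning.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# One hypothetical candidate set of hyperparameters.
model = GradientBoostingRegressor(n_estimators=100, max_depth=3, random_state=0)

# 10 folds: each iteration trains on 9 folds and validates on the remaining one.
folds = KFold(n_splits=10, shuffle=True, random_state=0)
scores = cross_val_score(model, X_train, y_train, cv=folds, scoring="r2")

print(scores.round(3), scores.mean().round(3))
```

A tuning algorithm would repeat this evaluation for each candidate and keep the hyperparameters with the best mean score.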

3.6. Evaluation Metrics of Machine Learning Model

In this study, three evaluation metrics of ML performance are used: the coefficient of determination R2, the Root Mean Squared Error (RMSE) and the Mean Absolute Error (MAE). The coefficient of determination R2 is one of the most commonly used measures of fit in linear models and analysis of variance, representing the proportion of the variance of the dependent variable that is explained by the independent variables. In other words, R2 shows how well the data fit the regression model (goodness of fit). The higher the value of R2, the better the performance of the ML model. RMSE has the same dimension as MAE, and for the same predictions the RMSE value is never smaller than the MAE value. The RMSE first sums the squares of the errors and then takes the square root of their mean, which magnifies the contribution of the larger errors, whereas the MAE reflects the average magnitude of the actual errors. Therefore, a small RMSE is particularly meaningful, because it indicates that the largest errors are also relatively small. The lower the values of RMSE and MAE, the better the performance of the ML model.

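The three metrics are defined as

$$R^2 = 1 - \frac{\sum_{j=1}^{m} (y_j - \hat{y}_j)^2}{\sum_{j=1}^{m} (y_j - \bar{y})^2}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{m} \sum_{j=1}^{m} (y_j - \hat{y}_j)^2}$$

$$\mathrm{MAE} = \frac{1}{m} \sum_{j=1}^{m} \left| y_j - \hat{y}_j \right|$$

Notably, m is the number of samples in the database, $y_j$ and $\bar{y}$ are the experimental value and mean experimental value, respectively, and $\hat{y}_j$ is the value predicted by the ML model.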
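The three metrics can be computed directly from their standard definitions, as in the short sketch below. The function name `regression_metrics` and the four sample values are hypothetical and serve only to illustrate the calculation.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (R2, RMSE, MAE) for two equal-length sequences."""
    m = len(y_true)
    mean_true = sum(y_true) / m
    ss_res = sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((yt - mean_true) ** 2 for yt in y_true)            # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / m)
    mae = sum(abs(yt - yp) for yt, yp in zip(y_true, y_pred)) / m
    return r2, rmse, mae

# Hypothetical yield-stress values in MPa, for illustration only.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 6.0]
r2, rmse, mae = regression_metrics(y_true, y_pred)
print(r2, rmse, mae)
```

Here a single large error on the last sample makes the RMSE twice the MAE, which illustrates how the RMSE magnifies large errors.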

4. Results and Discussion

In this section, eight ML models are trained, and their performance is evaluated on the training dataset and testing dataset. The best ML models are then used for the following: (i) predicting the YS of CPB; (ii) investigating feature importance for the predicted Yield Stress with other AI techniques such as SHapley Additive exPlanations (SHAP), Permutation Importance and Individual Conditional Expectation (ICE).

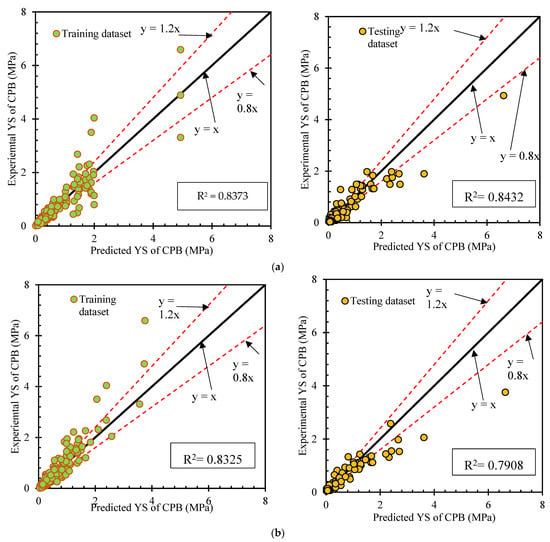

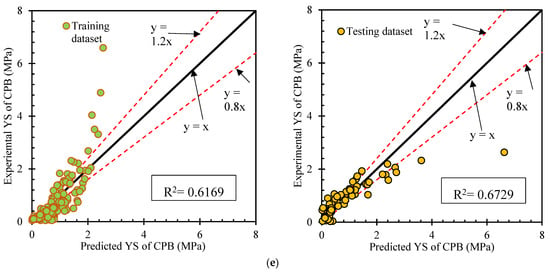

4.1. Predicting Yield Stress of CPB by Eight Machine Learning Models

Figure 3 and Figure 4 show the comparison between the experimental YS of CPB and the YS predicted by the ML models. Figure 3a–e show the comparison for the DT, KNN, SVR, MARS and Gaussian models, respectively, which are single ML models. Two error lines, y = 1.2x and y = 0.8x, are displayed for both the training and testing datasets. These lines quantify the accuracy of the ML models in predicting the YS of CPB. Numerous data points for the DT, KNN, SVR, MARS and Gaussian models are far from the perfect fit y = x and outside the error zone (−20% to +20%) of the YS value. For the testing datasets, the MARS model and Gaussian model have the most data points outside the error zone (−20% to +20%). Regarding the prediction of the YS by single ML models, the accuracy of the five single ML models appears to rank in descending order as DT > KNN > SVR > MARS > Gaussian.
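The share of points inside the ±20% error zone can be quantified with a few lines of code. The sketch below assumes that x is the experimental value and y the predicted value, which is how the error lines are read here; the function name and the sample values are hypothetical.

```python
def fraction_within_band(y_exp, y_pred, tolerance=0.2):
    """Share of points between y = (1 - tol) x and y = (1 + tol) x, x being the experimental value."""
    inside = sum(
        (1.0 - tolerance) * x <= y <= (1.0 + tolerance) * x
        for x, y in zip(y_exp, y_pred)
    )
    return inside / len(y_exp)

# Hypothetical experimental and predicted YS values, for illustration only.
y_exp = [0.5, 1.0, 2.0, 4.0, 6.0]
y_pred = [0.55, 1.3, 1.9, 3.0, 6.5]
share = fraction_within_band(y_exp, y_pred)
print(share)
```

Such a ratio gives a single number per model and per dataset that complements the visual comparison in the scatter plots.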

Figure 3.

Predicting the YS of CPB based on single models in comparison with the experimental YS: (a) Decision tree (DT) model, (b) KNN model, (c) SVR model, (d) MARS model and (e) Gaussian model.

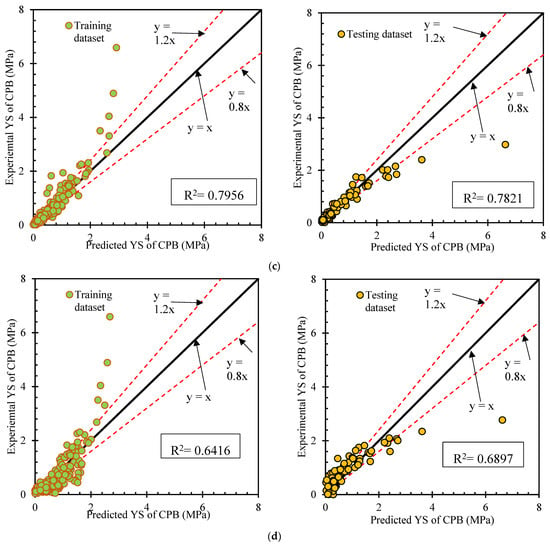

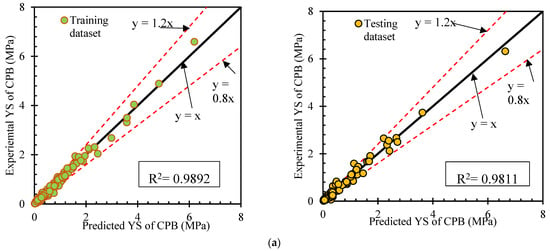

Figure 4.

Predicting the YS of CPB based on ensemble models in comparison with the experimental YS: (a) GB model, (b) XGB model and (c) RF model.

Figure 4a–c show the comparison between the experimental YS and the YS predicted by the ensemble models, namely the GB model, XGB model and RF model. The GB model has only a few data points outside the two error lines y = 1.2x and y = 0.8x on both the training and testing datasets, and the values of its evaluation criteria change relatively little between the two datasets (Figure 4a). The XGB model has extremely good results on the training dataset, with the data points almost centered on the perfect-fit line y = x (Figure 4b). However, on the testing dataset this model is less accurate, with data points lying outside the error lines. Figure 4c shows that the RF model has the most data points outside the error zone. On the testing dataset, the accuracy of the ensemble models appears to rank in descending order as GB > XGB > RF.

In order to compare the performance of the 8 ML models, including the single models and ensemble models, Table 2 shows the performance values, namely the coefficient of determination R2, Root Mean Square Error (RMSE) and Mean Absolute Error (MAE), for the training dataset and the testing dataset. According to the values summarized in Table 2, the performance of the ML models can be arranged in descending order as GB > XGB > RF > DT > KNN > SVR > MARS > Gaussian. The GB model achieves the best predictive ability, with the highest R2 value and the lowest RMSE and MAE values for both the training and testing datasets: its R2, RMSE and MAE values are 0.9892, 0.0864 and 0.0542 for the training dataset and 0.9811, 0.1327 and 0.0896 for the testing dataset, respectively.

Table 2.

Performance values of 8 ML models.

Following the GB model, the XGB and RF models also have high predictive ability: the R2, RMSE and MAE values for the testing dataset are 0.9034, 0.3004 and 0.1696 for the XGB model and 0.8534, 0.3700 and 0.1786 for the RF model.

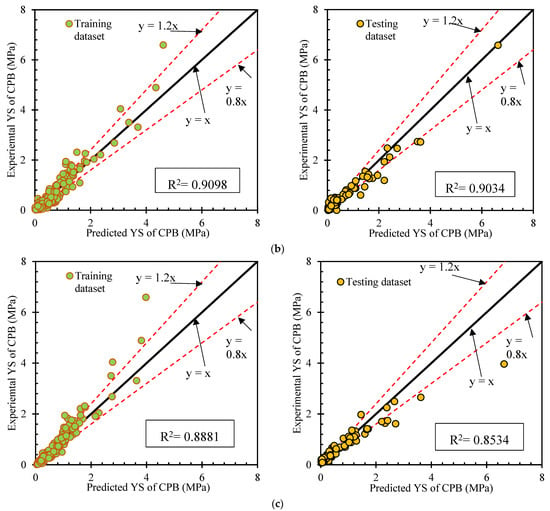

The Taylor diagram in Figure 5 compares the performance of the eight ML models for (a) the training dataset and (b) the testing dataset. The statistics of the YS values predicted by the GB model, including the standard deviation and the RMSE value, are the nearest to those of the experimental YS (observed values) for both the training dataset and the testing dataset. After the GB model, the YS values predicted by the XGB model and RF model are also very near the observed values. The values predicted by the MARS and Gaussian models are the furthest from the observed values.

Figure 5.

Taylor diagram for comparing the performance of 8 ML models in predicting YS of CPB (a) Training dataset and (b) Testing dataset.
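The statistics that place a model on a Taylor diagram can be sketched as follows. This assumes the usual construction, in which the standard deviations, the correlation coefficient R and the centered RMSE are linked by E'^2 = σ_o^2 + σ_p^2 − 2 σ_o σ_p R; whether Figure 5 uses the centered or the plain RMSE is not stated in the text. The sample values are hypothetical.

```python
import math


def taylor_statistics(observed, predicted):
    """Return (std_obs, std_pred, correlation, centered_rmse).

    Population statistics (division by n) are used throughout.
    """
    n = len(observed)
    mean_o = sum(observed) / n
    mean_p = sum(predicted) / n
    std_o = math.sqrt(sum((o - mean_o) ** 2 for o in observed) / n)
    std_p = math.sqrt(sum((p - mean_p) ** 2 for p in predicted) / n)
    cov = sum((o - mean_o) * (p - mean_p)
              for o, p in zip(observed, predicted)) / n
    corr = cov / (std_o * std_p)
    centered_rmse = math.sqrt(
        sum(((p - mean_p) - (o - mean_o)) ** 2
            for o, p in zip(observed, predicted)) / n
    )
    return std_o, std_p, corr, centered_rmse


# Hypothetical observed and predicted values (illustration only).
obs = [1.0, 2.0, 3.0, 4.0]
pred = [1.2, 1.9, 3.1, 3.6]
std_o, std_p, corr, crmse = taylor_statistics(obs, pred)
print(std_o, std_p, corr, crmse)
```

A model whose point lies close to the observed reference point has a similar standard deviation, a correlation near 1 and a small centered RMSE at the same time.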

Based on these results, the three best ML models (GB, XGB and RF, in descending order of performance) are used for the feature importance analysis in the next section.

4.2. Evaluation of Input Variable Effect on YS Prediction of ML Models

Feature importance intuitively reflects which features have the greatest impact on the final model, but it cannot indicate whether the relationship between a feature and the prediction is positive, negative, or more complex. The SHAP value addresses this limitation and also has local explanatory power: it reflects the influence of each feature on each individual sample and shows whether that influence is positive or negative. Permutation Importance and SHapley Additive exPlanations (SHAP) are relatively versatile methods for model interpretability. Together, these methods cover both global interpretation and local interpretation, that is, interpretation from the perspective of a single sample, relating the value predicted by the model to particular features.

The Shapley value, on which SHapley Additive exPlanations (SHAP) are based, was introduced by Shapley in cooperative game theory in 1951 [33], and it is comparable to many other sensitivity analysis methodologies [34]. Later, Štrumbelj and Kononenko were the first to apply the methodology to machine learning problems [35,36]. SHAP has been widely used since the publication of Lundberg and Lee’s work [37] and the related Python package. The fundamental goal of SHAP is to determine the significance of a particular feature, such as X. First, all feature subsets in the input space that do not contain X are gathered. Next, the impact of adding X to each of these subsets on the prediction is assessed. Finally, all of these contributions are combined to calculate the contribution of feature X.
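The three stages described above can be sketched as an exact Shapley computation on a toy model. This is an illustration only, not the estimator used by the SHAP package: features that are absent from a subset are replaced by a fixed baseline value, which is an assumption made here for simplicity, and the model `toy_model`, the instance and the baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values by enumerating all subsets without feature i."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Weight of a subset of this size in the Shapley average.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal effect of adding feature i to the subset.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


def toy_model(x):
    """Hypothetical model with one additive term and one interaction."""
    return 2.0 * x[0] + x[1] * x[2]


instance = [1.0, 2.0, 3.0]   # sample to explain (hypothetical)
baseline = [0.0, 0.0, 0.0]   # stand-in for "feature absent" (assumption)


def value_fn(subset):
    """Model output when only the features in `subset` take their true value."""
    x = [instance[j] if j in subset else baseline[j]
         for j in range(len(instance))]
    return toy_model(x)


phi = shapley_values(value_fn, n_features=3)
print(phi)
```

The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is shared equally between the two features involved. The cost grows exponentially with the number of features, which is why practical tools rely on approximations or model-specific algorithms.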

The SHAP value plot is read as follows: each row represents a feature, and the abscissa is the SHAP value. Each point represents a sample; the redder the point, the higher the value of the feature, and the bluer the point, the lower the value of the feature. The relevance of a feature can be calculated as the mean of the absolute values of its effect on the target variable.
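The mean absolute value mentioned above can be computed from a table of per-sample SHAP values as in this minimal sketch. The feature names and the numbers are placeholders chosen for illustration and do not reproduce any result of the study.

```python
def mean_abs_shap(shap_rows, feature_names):
    """Rank features by the mean absolute SHAP value over all samples.

    shap_rows: one list of SHAP values per sample, ordered like feature_names.
    """
    n = len(shap_rows)
    importance = {
        name: sum(abs(row[j]) for row in shap_rows) / n
        for j, name in enumerate(feature_names)
    }
    return sorted(importance.items(), key=lambda item: item[1], reverse=True)


# Placeholder SHAP values for three samples and three features.
names = ["feature_a", "feature_b", "feature_c"]
rows = [
    [0.4, -0.1, 0.02],
    [-0.6, 0.2, -0.01],
    [0.5, -0.3, 0.03],
]
ranking = mean_abs_shap(rows, names)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Taking the absolute value before averaging prevents positive and negative contributions from cancelling, so a feature that pushes predictions strongly in both directions still ranks as important.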

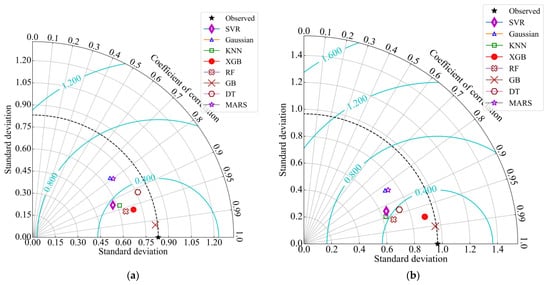

Figure 6 shows the Permutation Importance values and SHAP values for the three models GB, XGB and RF. The eight features used to develop the models are interpreted to identify those that are significant for yield stress. The SHAP values of the Cement/Tailings ratio show that yield stress increases as this ratio increases; conversely, when the ratio decreases, the yield stress is also low. This feature therefore has an important influence on yield stress development. Curing age and solid content also have a large impact on the output. Among the features related to inorganic compounds, Fe2O3 has the greatest influence on yield stress. The SHAP values for this feature indicate that the Fe2O3 content should be kept at a moderate level to avoid a negative effect on the output. According to this analysis, four features (Cement/Tailings, Curing age, Solid content and Fe2O3 content) rank as the significant features with the greatest impact on the output.

Figure 6.

Feature importance analysis by Permutation Importance and SHapley Additive exPlanations (SHAP value) based on (a) GB, (b) XGB and (c) RF model.

SiO2, D10, D50 and Gs appear to be the least important input variables in predicting the YS of CPB according to the Permutation Importance and SHAP values.
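The idea behind Permutation Importance can be sketched as follows: one input column is shuffled at a time, and the resulting increase in prediction error is recorded. This is a minimal illustration that uses the mean squared error as the score and a hypothetical toy model; the scoring function and settings used in the study are not stated in this section.

```python
import random


def mse(model, X, y):
    """Mean squared error of model predictions over the rows of X."""
    return sum((model(row) - t) ** 2 for row, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Mean increase in MSE after shuffling each feature column in turn."""
    rng = random.Random(seed)
    base_error = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the data with only column j permuted.
            X_perm = [row[:j] + [v] + row[j + 1:]
                      for row, v in zip(X, column)]
            increases.append(mse(model, X_perm, y) - base_error)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model that depends on the first feature only."""
    return 3.0 * row[0]


# Hypothetical data: the target is driven entirely by the first column.
X = [[float(i), float((i * 7) % 5)] for i in range(20)]
y = [toy_model(row) for row in X]
importances = permutation_importance(toy_model, X, y)
print(importances)
```

Shuffling a feature that the model ignores leaves the error unchanged, so its importance is zero, whereas shuffling an influential feature degrades the predictions.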

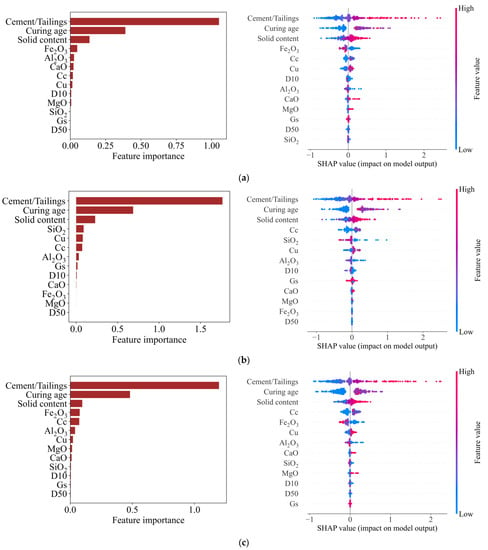

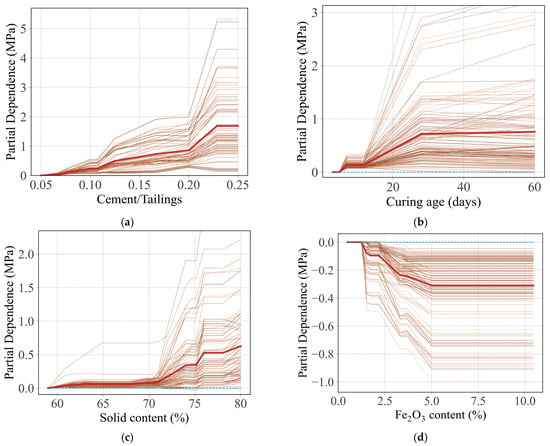

After selecting the four features with the greatest influence on yield stress, partial dependence plots are used to highlight how those features affect the model’s predictions. Figure 7 shows the Individual Conditional Expectation (ICE) of these features for the output. The darker brown lines show the average model prediction as each feature varies, while the remaining lines show the output variation for each sample, which makes it possible to observe whether the samples interact differently with the feature. Figure 7a shows the partial dependence on the Cement/Tailings ratio: the output clearly grows as the ratio increases. Curing age strongly increases yield stress before 40 days, but after that the increase is very small and hardly visible (Figure 7b). Figure 7c shows that the effect of solid content on the output is negligible at low content, whereas above 70% the increase in output is very obvious. In contrast to these three features, the Fe2O3 content has a very clear negative effect on yield stress (Figure 7d), although once the Fe2O3 content reaches a certain threshold the output no longer decreases so rapidly. These observations can guide the choice of CPB component contents to produce a mix that meets the requirements.

Figure 7.

Individual Conditional Expectation (ICE) analysis for the four most important input variables: (a) Cement/Tailings, (b) Curing age, (c) Solid content and (d) Fe2O3 content.
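The relationship between ICE curves and the partial dependence curve can be sketched as follows: each ICE curve varies one feature for a single sample while the other features stay fixed, and the partial dependence is the average of the ICE curves. The toy model and data are hypothetical and include an interaction so that the individual curves differ.

```python
def ice_curves(model, X, feature, grid):
    """One curve per sample: predictions as `feature` sweeps over `grid`."""
    return [
        [model(row[:feature] + [g] + row[feature + 1:]) for g in grid]
        for row in X
    ]


def partial_dependence(curves):
    """Average the ICE curves point by point."""
    n = len(curves)
    return [sum(curve[k] for curve in curves) / n
            for k in range(len(curves[0]))]


def toy_model(row):
    """Hypothetical model with an interaction between the two features."""
    return row[0] * (1.0 + row[1])


# Hypothetical samples that differ only in the second feature.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
grid = [0.0, 1.0, 2.0]
curves = ice_curves(toy_model, X, feature=0, grid=grid)
pdp = partial_dependence(curves)
print(curves)
print(pdp)
```

When the ICE curves are not parallel, as in this example, the effect of the varied feature depends on the values of the other features, which the averaged curve alone would hide.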

5. Conclusions

In this study, based on 299 data samples collected from the literature and including 13 input variables, eight popular machine learning algorithms were used to build eight models to predict the yield stress of CPB: Extreme Gradient Boosting (XGB), Gradient Boosting (GB), Random Forest (RF), Decision Trees (DT), K-Nearest Neighbor (KNN), Support Vector Regression (SVR), Multivariate Adaptive Regression Spline (MARS) and Gaussian Regression (Gau). The three best models for predicting the yield stress of CPB were GB, XGB and RF, in that order, with the following performance metrics on the testing dataset: GB {R2 = 0.9811, RMSE = 0.1327 MPa, MAE = 0.0896 MPa}, XGB {R2 = 0.9034, RMSE = 0.3004 MPa, MAE = 0.1696 MPa} and RF {R2 = 0.8534, RMSE = 0.3700 MPa, MAE = 0.1786 MPa}. Four input variables (cement/tailings, curing age, solid content and Fe2O3 content) have the highest Permutation Importance and SHAP values. SiO2, D10, D50 and Gs appear to be the least important input variables in predicting the YS of CPB according to the Permutation Importance and SHAP values. Individual Conditional Expectation (ICE) makes it possible to characterize the effect of these four input variables both quantitatively and qualitatively. Cement/Tailings, Curing age and Solid content have a positive effect on the yield stress of CPB, whereas a higher Fe2O3 content decreases it. The best ML models can serve as useful soft-computing tools for engineers to predict the YS of CPB and support the design of CPB mixes.

New metaheuristic algorithms such as the Circle Search Algorithm, Whale Optimization Algorithm and Flower Pollination Algorithm could be used to tune hyperparameters and further improve the performance of the ML models.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare that there is no conflict of interest.

References

- Hane, I. Caractérisation En Laboratoire Des Remblais En Pâte Cimentés Avec Ajout De Granulats (Stériles Concassés). Master’s Thesis, Université De Montréal, Montreal, QC, Canada, 2015.

- Simon, D.; Grabinsky, M. Apparent yield stress measurement in cemented paste backfill. Int. J. Min. Reclam. Environ. 2013, 27, 231–256.

- Liddel, P.V.; Boger, D.V. Yield stress measurements with the vane. J. Non-Newton. Fluid Mech. 1996, 63, 235–261.

- Yin, S.; Wu, A.; Hu, K.; Wang, Y.; Zhang, Y. The effect of solid components on the rheological and mechanical properties of cemented paste backfill. Miner. Eng. 2012, 35, 61–66.

- Wu, D.; Fall, M.; Cai, S. Coupling temperature, cement hydration and rheological behaviour of fresh cemented paste backfill. Miner. Eng. 2013, 42, 76–87.

- Jiang, H.; Fall, M.; Cui, L. Yield stress of cemented paste backfill in sub-zero environments: Experimental results. Miner. Eng. 2016, 92, 141–150.

- Tran, V.Q.; Dang, V.Q.; Ho, L.S. Evaluating compressive strength of concrete made with recycled concrete aggregates using machine learning approach. Constr. Build. Mater. 2022, 323, 126578.

- Tran, V.Q.; Nguyen, L.Q. Using hybrid machine learning model including gradient boosting and Bayesian optimization for predicting compressive strength of concrete containing ground glass particles. J. Intell. Fuzzy Syst. 2022, 43, 5913–5927.

- Tran, V.Q.; Nguyen, L.Q. Using machine learning technique for designing reinforced lightweight soil. J. Intell. Fuzzy Syst. 2022, 43, 1633–1650.

- Tran, V.Q. Hybrid gradient boosting with meta-heuristic algorithms prediction of unconfined compressive strength of stabilized soil based on initial soil properties, mix design and effective compaction. J. Clean. Prod. 2022, 355, 131683.

- Ngo, T.Q.; Nguyen, L.Q.; Tran, V.Q. Novel hybrid machine learning models including support vector machine with meta-heuristic algorithms in predicting unconfined compressive strength of organic soils stabilised with cement and lime. Int. J. Pavement Eng. 2022, 23, 1–18.

- Tran, V.Q. Predicting and Investigating the Permeability Coefficient of Soil with Aided Single Machine Learning Algorithm. Complexity 2022, 2022, e8089428.

- Tran, V.Q. Machine learning approach for investigating chloride diffusion coefficient of concrete containing supplementary cementitious materials. Constr. Build. Mater. 2022, 328, 127103.

- Tran, V.Q.; Giap, V.L.; Vu, D.P.; George, R.C.; Ho, L.S. Application of machine learning technique for predicting and evaluating chloride ingress in concrete. Front. Struct. Civ. Eng. 2022, 16, 1153–1169.

- Tran, V.Q.; Mai, H.T.; To, Q.T.; Nguyen, M.H. Machine learning approach in investigating carbonation depth of concrete containing Fly ash. Struct. Concr. 2022.

- Flood, I. Towards the next generation of artificial neural networks for civil engineering. Adv. Eng. Inform. 2008, 22, 4–14.

- Wakjira, T.G.; Rahmzadeh, A.; Alam, M.S.; Tremblay, R. Explainable machine learning based efficient prediction tool for lateral cyclic response of post-tensioned base rocking steel bridge piers. Structures 2022, 44, 947–964.

- Qi, C.; Fourie, A.; Chen, Q.; Zhang, Q. A strength prediction model using artificial intelligence for recycling waste tailings as cemented paste backfill. J. Clean. Prod. 2018, 183, 566–578.

- Qi, C.; Fourie, A.; Chen, Q. Neural network and particle swarm optimization for predicting the unconfined compressive strength of cemented paste backfill. Constr. Build. Mater. 2018, 159, 473–478.

- Qi, C.; Guo, L.; Ly, H.-B.; Van Le, H.; Pham, B.T. Improving pressure drops estimation of fresh cemented paste backfill slurry using a hybrid machine learning method. Miner. Eng. 2021, 163, 106790.

- Liu, J.; Li, G.; Yang, S.; Huang, J. Prediction Models for Evaluating the Strength of Cemented Paste Backfill: A Comparative Study. Minerals 2020, 10, 1041.

- Qi, C.; Chen, Q.; Fourie, A.; Zhang, Q. An intelligent modelling framework for mechanical properties of cemented paste backfill. Miner. Eng. 2018, 123, 16–27.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Natekin, A.; Knoll, A. Gradient boosting machines, a tutorial. Front. Neurorobot. 2013, 7, 21.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the KDD ’16: 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 785–794.

- Wakjira, T.G.; Ebead, U.; Alam, M.S. Machine learning-based shear capacity prediction and reliability analysis of shear-critical RC beams strengthened with inorganic composites. Case Stud. Constr. Mater. 2022, 16, e01008.

- Wakjira, T.G.; Ibrahim, M.; Ebead, U.; Alam, M.S. Explainable machine learning model and reliability analysis for flexural capacity prediction of RC beams strengthened in flexure with FRCM. Eng. Struct. 2022, 255, 113903.

- Wakjira, T.G.; Al-Hamrani, A.; Ebead, U.; Alnahhal, W. Shear capacity prediction of FRP-RC beams using single and ensenble ExPlainable Machine learning models. Compos. Struct. 2022, 287, 115381.

- Wakjira, T.G.; Abushanab, A.; Ebead, U.; Alnahhal, W. FAI: Fast, accurate, and intelligent approach and prediction tool for flexural capacity of FRP-RC beams based on super-learner machine learning model. Mater. Today Commun. 2022, 33, 104461.

- AlKhereibi, A.H.; Wakjira, T.G.; Kucukvar, M.; Onat, N.C. Predictive Machine Learning Algorithms for Metro Ridership Based on Urban Land Use Policies in Support of Transit-Oriented Development. Sustainability 2023, 15, 1718.

- Nguyen, Q.H.; Ly, H.-B.; Ho, L.S.; Al-Ansari, N.; Van Le, H.; Tran, V.Q.; Prakash, I.; Pham, B.T. Influence of Data Splitting on Performance of Machine Learning Models in Prediction of Shear Strength of Soil. Math. Probl. Eng. 2021, 2021, e4832864.

- Blanke, S. Hyperactive: An Optimization and Data Collection Toolbox for Convenient and Fast Prototyping of Computationally Expensive Models. 2019. Available online: https://github.com/SimonBlanke (accessed on 1 June 2019).

- Roth, A. (Ed.) The Shapley Value: Essays in Honor of Lloyd S. Shapley; Cambridge University Press: Cambridge, UK, 1988.

- Liu, B.; Vu-Bac, N.; Zhuang, X.; Rabczuk, T. Stochastic multiscale modeling of heat conductivity of Polymeric clay nanocomposites. Mech. Mater. 2020, 142, 103280.

- Štrumbelj, E.; Kononenko, I. An efficient explanation of individual classifications using game theory. J. Mach. Learn. Res. 2010, 11, 1–18.

- Štrumbelj, E.; Kononenko, I. Explaining prediction models and individual predictions with feature contributions. Knowl. Inf. Syst. 2014, 41, 647–665.

- Lundberg, S.; Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4768–4777.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).