Conceptualizing Corporate Digital Responsibility: A Digital Technology Development Perspective

Abstract

1. Introduction

2. Literature Review

2.1. Corporate Responsibility in the Digital Realm

2.1.1. Ethical Issues Related to the Descriptive Function of Digital Technology

2.1.2. Ethical Issues Related to the Normalized Function of Digital Technology

2.1.3. Ethical Issues Related to the Shaped Function of Digital Technology

2.2. Corporate Digital Responsibility

3. Conceptualization of CDR from a Digital Technology Development Perspective

3.1. Corporate Digitized Responsibility

3.1.1. Unbiased Data Acquisition

3.1.2. Data Protection

3.1.3. Data Maintenance

3.2. Corporate Digitalized Responsibility

3.2.1. Appropriate Data Interpretation

3.2.2. Objective Predicted Results

3.2.3. Tackling Value Conflicts in Data-Driven Decision-Making

4. Methods

4.1. Sample and Data

4.2. Questionnaire

4.3. Measures

4.3.1. Measurement of CDR

4.3.2. Measurement of Firms’ Digital Performance

4.3.3. Data Analysis Technique

5. Results

5.1. Exploratory Factor Analysis

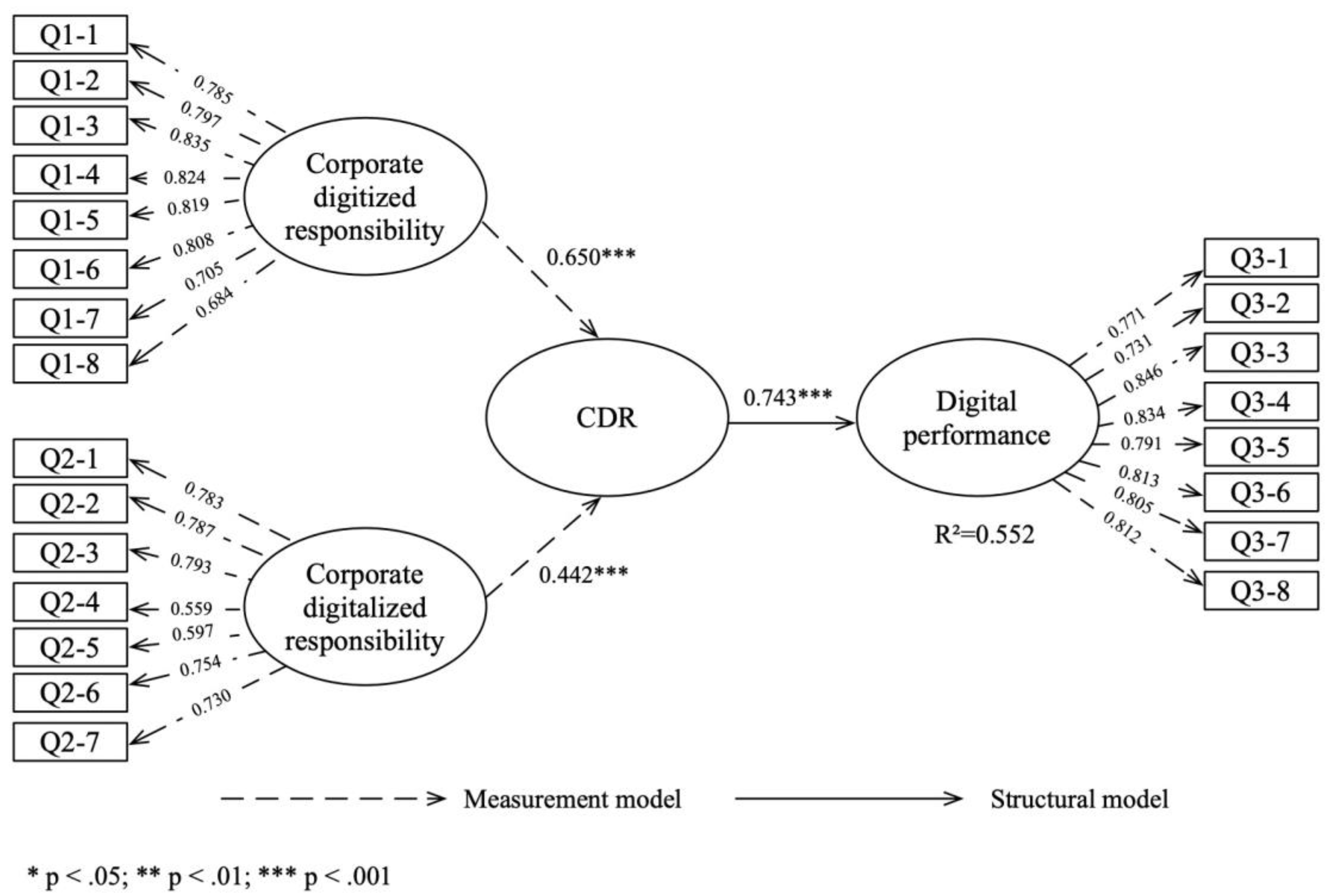

5.2. Measurement Model

5.3. Structural Equation Model

6. Discussion

6.1. Findings

6.2. Theoretical Contributions

6.3. Managerial Implications

6.4. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Item | Digital Performance | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|---|
| Q3-1 | The quality of our digital solutions is superior compared to our competitors. | 1 | 2 | 3 | 4 | 5 |
| Q3-2 | The features of our digital solutions are superior compared to our competitors. | 1 | 2 | 3 | 4 | 5 |
| Q3-3 | The applications of our digital solutions are totally different from our competitors. | 1 | 2 | 3 | 4 | 5 |
| Q3-4 | Our digital solutions are different from those of our competitors in terms of product platform. | 1 | 2 | 3 | 4 | 5 |
| Q3-5 | Our new digital solutions are major improvements of existing products. | 1 | 2 | 3 | 4 | 5 |
| Q3-6 | Some of our digital solutions are new to the market at the time of launching. | 1 | 2 | 3 | 4 | 5 |
| Q3-7 | Our solutions have superior digital technology. | 1 | 2 | 3 | 4 | 5 |
| Q3-8 | New digital technology is readily accepted in our organization. | 1 | 2 | 3 | 4 | 5 |

References

1. Moorthy, J.; Lahiri, R.; Biswas, N.; Sanyal, D.; Ranjan, J.; Nanath, K.; Ghosh, P. Big data: Prospects and challenges. Vikalpa J. Decis. Mak. 2015, 40, 74–96.
2. Kshetri, N. Big data’s impact on privacy, security and consumer welfare. Telecommun. Policy 2014, 38, 1134–1145.
3. Bharadwaj, A.; El Sawy, O.A.; Pavlou, P.A.; Venkatraman, N. Digital business strategy: Toward a next generation of insights. MIS Q. 2013, 37, 471–482.
4. Wiener, N. Cybernetics: Or Control and Communication in the Animal and the Machine; Technology Press: Cambridge, MA, USA, 1948.
5. Bynum, T.W. Computer ethics: Its birth and its future. Ethics Inf. Technol. 2001, 3, 109–112.
6. Lee, I.; Shin, Y.J. Machine learning for enterprises: Applications, algorithm selection, and challenges. Bus. Horiz. 2020, 63, 157–170.
7. Van Doorn, J.; Mende, M.; Noble, S.M.; Hulland, J.; Ostrom, A.L.; Grewal, D.; Petersen, J.A. Domo arigato Mr. Roboto: Emergence of automated social presence in organizational frontlines and customers’ service experiences. J. Serv. Res. 2017, 20, 43–58.
8. Cooper, T.; Siu, J.; Wei, K. Corporate Digital Responsibility—Doing Well by Doing Good. 2015. Available online: https://www.criticaleye.com/inspiring/insights-servfile.cfm?id=4431 (accessed on 28 December 2022).
9. Herden, C.J.; Alliu, E.; Cakici, A.; Cormier, T.; Deguelle, C.; Gambhir, S.; Griffiths, C.; Gupta, S.; Kamani, S.R.; Kiratli, Y.S.; et al. “Corporate Digital Responsibility”: New corporate responsibilities in the digital age. Nachhalt. Manag. Forum 2021, 29, 13–29.
10. Thelisson, E.; Morin, J.H.; Rochel, J. AI governance: Digital responsibility as a building block: Towards an index of digital responsibility. Delphi 2019, 2, 167.
11. Lobschat, L.; Mueller, B.; Eggers, F.; Brandimarte, L.; Diefenbach, S.; Kroschke, M.; Wirtz, J. Corporate digital responsibility. J. Bus. Res. 2019, 122, 875–888.
12. Mihale-Wilson, C.A.; Zibuschka, J.; Carl, K.V.; Hinz, O. Corporate digital responsibility—Extended Conceptualization and Empirical Assessment. In Proceedings of the 29th European Conference on Information Systems, Marrakech, Morocco, 14–16 June 2021.
13. Mueller, B. Corporate digital responsibility. Bus. Inf. Syst. Eng. 2022, 64, 689–700.
14. Elliott, K.; Price, R.; Shaw, P.; Spiliotopoulos, T.; Ng, M.; Coopamootoo, K.; van Moorsel, A. Towards an equitable digital society: Artificial Intelligence (AI) and corporate digital responsibility (CDR). Society 2021, 58, 1–10.
15. Lin, P.; Abney, K.; Bekey, G. Robot ethics: Mapping the issues for a mechanized world. Artif. Intell. 2011, 175, 942–949.
16. Brennen, S.J.; Kreiss, D. Digitalization. The International Encyclopedia of Communication Theory and Philosophy; Wiley: Hoboken, NJ, USA, 2016; pp. 1–11.
17. Dourish, P. Where the Action Is: The Foundation of Embodied Interaction; MIT Press: Cambridge, MA, USA, 2001.
18. Ryals, L.; Payne, A. Customer Relationship Management in Financial Services: Towards Information-Enabled Relationship Marketing. J. Strateg. Mark. 2001, 9, 3–27.
19. Nevo, S.; Wade, M.R. The formation and value of IT-enabled resources: Antecedents and consequences of synergistic relationships. MIS Q. 2010, 34, 163–183.
20. Yoo, Y.; Boland, R.J., Jr.; Lyytinen, K.; Majchrzak, A. Organizing for innovation in the digitized world. Organ. Sci. 2012, 23, 1398–1408.
21. Baskerville, R.L.; Myers, M.D.; Yoo, Y. Digital first: The ontological reversal and new challenges for IS research. MIS Q. 2019, 44, 509–523.
22. Sivarajah, U.; Kamal, M.M.; Irani, Z.; Weerakkody, V. Critical analysis of Big Data challenges and analytical methods. J. Bus. Res. 2017, 70, 263–286.
23. Johnson, D.G. Computer Ethics; Prentice-Hall: Englewood Cliffs, NJ, USA, 1985.
24. Parker, D.B. Rules of ethics in information processing. Commun. ACM 1968, 11, 198–201.
25. Capurro, R. Why information ethics? Int. J. Appl. Res. Inf. Technol. Comput. 2018, 9, 50–52.
26. Stahl, B.C.; Timmermans, J.; Flick, C. Ethics of emerging information and communication technologies: On the implementation of responsible research and innovation. Sci. Public Policy 2017, 44, 369–381.
27. Zwitter, A. Big Data ethics. Big Data Soc. 2014, 1, 1–6.
28. Taherdoost, H.; Sahibuddin, S.; Namayandeh, M.; Jalaliyoon, N. Propose an educational plan for computer ethics and information security. Procedia Soc. Behav. Sci. 2011, 28, 815–819.
29. Asaro, P.M. What should we want from a robot ethic? Int. Rev. Inf. Ethics 2006, 6, 9–16.
30. Malle, B.F. Integrating robot ethics and machine morality: The study and design of moral competence in robots. Ethics Inf. Technol. 2016, 18, 243–256.
31. Hagendorff, T. The ethics of AI ethics: An evaluation of guidelines. Minds Mach. 2020, 30, 1–22.
32. Suchacka, M. Corporate digital responsibility: New challenges to the social sciences. Int. J. Res. E-Learn. 2019, 5, 5–20.
33. Wirtz, J.; Hartley, N.; Kunz, W.H.; Tarbit, J.; Ford, J. Corporate Digital Responsibility at the Dawn of the Digital Service Revolution. 2021. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3806235 (accessed on 10 March 2022).
34. Wade, M. Corporate responsibility in the digital era. MIT Sloan Management Review, 28 April 2020; p. 28.
35. Nambisan, S.; Lyytinen, K.; Majchrzak, A.; Song, M. Digital innovation management: Reinventing innovation management research in a digital world. MIS Q. 2017, 41, 223–238.
36. Moustaka, V.; Theodosiou, Z.; Vakali, A.; Kounoudes, A.; Anthopoulos, L.G. Enhancing social networking in smart cities: Privacy and security borderlines. Technol. Forecast. Soc. Chang. 2019, 142, 285–300.
37. Mihale-Wilson, C.; Hinz, O.; van der Aalst, W.; Weinhardt, C. Corporate Digital Responsibility. Bus. Inf. Syst. Eng. 2022, 64, 127–132.
38. Ritter, T.; Pedersen, C.L. Digitization capability and the digitalization of business models in business-to-business firms: Past, present, and future. Ind. Mark. Manag. 2020, 86, 180–190.
39. Yoo, Y.; Henfridsson, O.; Lyytinen, K. The new organizing logic of digital innovation: An agenda for information systems research. Inf. Syst. Res. 2010, 21, 724–735.
40. Ranschaert, E.R.; Morozov, S.; Algra, P.R. Artificial Intelligence in Medical Imaging: Opportunities, Applications and Risks; Springer: Berlin, Germany, 2019.
41. Shakina, E.; Parshakov, P.; Alsufiev, A. Rethinking the corporate digital divide: The complementarity of technologies and the demand for digital skills. Technol. Forecast. Soc. Chang. 2021, 162, 120405.
42. Sestino, A.; Prete, M.I.; Piper, L.; Guido, G. Internet of Things and Big Data as enablers for business digitalization strategies. Technovation 2020, 98, 102173.
43. Liu, R.; Gupta, S.; Patel, P. The application of the principles of responsible AI on social media marketing for digital health. Inf. Syst. Front. 2021, 13, 1–25.
44. Kelley, D. Addressing the Unstructured Data Protection Challenge. 2008. Available online: https://www.xlsoft.com/en/services/materials/files/AddressingTheUnstructuredDataProtectionChallenge.pdf (accessed on 10 March 2022).
45. Talha, M.; Abou El Kalam, A.; Elmarzouqi, N. Big Data: Trade-off between data quality and data security. Procedia Comput. Sci. 2019, 151, 916–922.
46. Lepenioti, K.; Bousdekis, A.; Apostolou, D.; Mentzas, G. Prescriptive analytics: Literature review and research challenges. Int. J. Inf. Manag. 2020, 50, 57–70.
47. Rehman, M.H.; Chang, V.; Batool, A.; Teh, Y.W. Big data reduction framework for value creation in sustainable enterprises. Int. J. Inf. Manag. 2016, 36, 917–928.
48. Banerjee, A.; Bandyopadhyay, T.; Acharya, P. Data analytics: Hyped up aspirations or true potential? Vikalpa 2013, 38, 1–12.
49. Attaran, M.; Gunasekaran, A. Applications of Blockchain Technology in Business: Challenges and Opportunities; Springer Nature: Berlin, Germany, 2019.
50. Watson, H.J. Tutorial: Big data analytics: Concepts, technologies, and applications. Commun. Assoc. Inf. Syst. 2014, 34, 1247–1268.
51. Joseph, R.C.; Johnson, N.A. Big data and transformational government. IT Prof. 2013, 15, 43–48.
52. Waller, M.A.; Fawcett, S.E. Data science, predictive analytics, and big data: A revolution that will transform supply chain design and management. J. Bus. Logist. 2013, 34, 77–84.
53. Stedham, Y.; Yamamura, J.H.; Beekun, R.I. Gender differences in business ethics: Justice and relativist perspectives. Bus. Ethics A Eur. Rev. 2007, 16, 163–174.
54. Orlikowski, W.J.; Scott, S.V. The algorithm and the crowd: Considering the materiality of service innovation. MIS Q. 2015, 31, 201–216.
55. Setzke, D.S.; Riasanow, T.; Böhm, M.; Krcmar, H. Pathways to digital service innovation: The role of digital transformation strategies in established organizations. Inf. Syst. Front. 2021, 1–21.
56. Rachinger, M.; Rauter, R.; Müller, C.; Vorraber, W.; Schirgi, E. Digitalization and its influence on business model innovation. J. Manuf. Technol. Manag. 2019, 30, 1143–1160.
57. Echeverría, J.; Tabarés, R. Artificial intelligence, cybercities and technosocieties. Minds Mach. 2017, 27, 473–493.
58. Newell, S.; Marabelli, M. Strategic opportunities (and challenges) of algorithmic decision-making: A call for action on the long-term societal effects of ‘datification’. J. Strateg. Inf. Syst. 2015, 24, 3–14.
59. Bonnefon, J.F.; Shariff, A.; Rahwan, I. The social dilemma of autonomous vehicles. Science 2016, 352, 1573–1576.
60. Balsmeier, B.; Woerter, M. Is this time different? How digitalization influences job creation and destruction. Res. Policy 2019, 48, 103765.
61. Lobera, J.; Fernández Rodríguez, C.J.; Torres-Albero, C. Privacy, values and machines: Predicting opposition to artificial intelligence. Commun. Stud. 2020, 71, 448–465.
62. Howcroft, D.; Bergvall-Kåreborn, B. A typology of crowdwork platforms. Work. Employ. Soc. 2019, 33, 21–38.
63. Brock, D.M.; Shenkar, O.; Shoham, A.; Siscovick, I.C. National culture and expatriate deployment. J. Int. Bus. Stud. 2008, 39, 1293–1309.
64. Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879–903.
65. Chetty, S.; Johanson, M.; Martín, O.M. Speed of internationalization: Conceptualization, measurement and validation. J. World Bus. 2014, 49, 633–650.
66. Ritter, T. Alignment Squared: Driving Competitiveness and Growth through Business Model Excellence; CBS Competitiveness Platform: Frederiksberg, Denmark, 2014.
67. Jarvis, C.B.; Mackenzie, S.B.; Podsakoff, P.M. A critical review of construct indicators and measurement model misspecification in marketing and consumer research. J. Consum. Res. 2003, 30, 199–219.
68. Nambisan, S.; Wright, M.; Feldman, M. The digital transformation of innovation and entrepreneurship: Progress, challenges and key themes. Res. Policy 2019, 48, 103773.
69. Eller, R.; Alford, P.; Kallmünzer, A.; Peters, M. Antecedents, consequences, and challenges of small and medium-sized enterprise digitalization. J. Bus. Res. 2020, 112, 119–127.
70. Zhang, X.; Xu, Y.; Ma, L. Research on successful factors and influencing mechanism of the digital transformation in SMEs. Sustainability 2022, 14, 2549.
71. Hair, J.F.; Sarstedt, M.; Pieper, T.M.; Ringle, C.M. The use of partial least squares structural equation modeling in strategic management research: A review of past practices and recommendations for future applications. Long Range Plan. 2012, 45, 320–340.
72. Chin, W.W.; Newsted, P.R. Structural equation modeling analysis with small samples using partial least squares. Stat. Strateg. Small Sample Res. 1999, 1, 307–341.
73. Ringle, C.M.; Wende, S.; Becker, J.M. SmartPLS 3. SmartPLS GmbH, Boenningstedt. J. Serv. Sci. Manag. 2015, 10, 32–49.
74. Chin, W.W. The partial least squares approach to structural equation modeling. Mod. Methods Bus. Res. 1998, 295, 295–336.
75. Hulland, J. Use of partial least squares (PLS) in strategic management research: A review of four recent studies. Strateg. Manag. J. 1999, 20, 195–204.
76. Fornell, C.; Larcker, D. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.
77. Nunnally, J.C.; Bernstein, I.H. Psychometric Theory, 3rd ed.; McGraw-Hill: New York, NY, USA, 1994.
78. Hair, J.F.; Hult, G.T.M.; Ringle, C.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage Publications: Thousand Oaks, CA, USA, 2016.
79. Saeidi, S.P.; Sofian, S.; Saeidi, P.; Saeidi, S.P.; Saaeidi, S.A. How does corporate social responsibility contribute to firm financial performance? The mediating role of competitive advantage, reputation, and customer satisfaction. J. Bus. Res. 2015, 68, 341–350.
80. Mackenzie, A. Cutting Code: Software and Sociality; Peter Lang Publishing: New York, NY, USA, 2006.
81. Nasiri, M.; Saunila, M.; Ukko, J.; Rantala, T.; Rantanen, H. Shaping digital innovation via digital-related capabilities. Inf. Syst. Front. 2020, 1–18.

| Topics | Concepts | Definition | Conceptualization | Contributions | Authors |
|---|---|---|---|---|---|
| Corporate responsibility in the digital realm | Information ethics | Information ethics is defined as dealing with the impact of digital ICT on society and the environment at large as well as with ethical questions dealing with the internet, digital information, and communications media in particular. | Some authors reckon that issues of information ethics include privacy, information overload, internet addiction, the digital divide, surveillance, and robotics, particularly from an intercultural perspective. In addition, the conceptualization can be described in five aspects: 1. Impact on individuals: privacy, autonomy, treatment of humans, identity, security; 2. Consequences for society: digital divides, collective human identity and the good life; 3. Uncertainty of outcomes; 4. Perceptions of technology; and 5. Role of humans. | The academic contribution of these articles goes beyond the ethical analysis of individual technologies and offers an array of ethical issues that are likely to be relevant across different ICTs. These papers deliver the message that information ethics can and should contribute to addressing the challenges of the digital age. | [25,26] |
| | Big data ethics | No explicit definition. | Big data ethics include privacy, security, consumer welfare, propensity, etc. | Authors investigate the relation between characteristics of big data and privacy, security and consumer welfare issues from the standpoints of data collection, storage, sharing, and accessibility. | [2,27] |
| | Computer ethics | Computer ethics is defined as the analysis of the nature and social impact of computer technology and the corresponding formulation and justification of policies for the ethical use of such a technology. | Computer ethics include intellectual property, privacy and data protection, equal access, responsibility and information, replacement of people in the workplace, computer crime, accuracy, accessibility, morality, and awareness. | The authors emphasize the importance of computer ethics and develop a typology that highlights different combinations of automated and human social presence, and indicate literature gaps, thereby emphasizing avenues for future research. | [28] |
| | Machine learning challenges | No explicit definition. | Machine learning challenges relate to data privacy, protection rules, biases, equitable accessibility, and nondiscrimination. | This paper points out the challenges of machine learning, which include ethical challenges, the shortage of machine-learning engineers, the data quality challenge, and the cost–benefit challenge. | [6] |
| | Robot ethics | Robot ethics encompasses ethical questions about how humans should design, deploy, and treat robots. | Robot ethics can be divided into three broad categories: safety and errors, law and ethics, and social impact. Additionally, it can be illustrated by three aspects: the ethical systems built into robots; the ethics of people who design and use robots; and the ethics of how people treat robots. | Authors conclude that robot ethics are more related to the ethical level of the people who design and use robots. Moreover, we should consider both ethical questions about how humans should design, deploy, and treat robots and questions about what moral capabilities a robot could and should have. | [15,29,30] |
| | AI ethics | AI ethics deals less with AI as such, than with ways of deviating or distancing oneself from problematic routines of action, with uncovering blind spots in knowledge, and with gaining individual self-responsibility. | The author summarizes eight AI ethical principles: privacy protection; accountability; fairness; transparency; safety; common good; explainability; and human oversight. | The author gives a detailed overview of the field of AI ethics and also examines to what extent the respective ethical principles and values are implemented in the practice of research, development, and application of AI systems and how the effectiveness in the demands of AI ethics can be improved. | [31] |

| Topics | Concepts | Definition | Conceptualization | Contributions | Authors |
|---|---|---|---|---|---|
| CDR | CDR as an extension of CSR | CDR is an extension of a firm’s responsibilities which takes into account the ethical opportunities and challenges of digitalization. | This paper classifies CDR from three aspects: environmental CDR, social CDR, and governance CDR. | This paper expands on existing knowledge of CDR by clearly articulating how CDR relates to the general responsibilities of companies and by discussing which new topics might arise in these dimensions due to emerging technologies. | [9] |
| | Digital corporate social responsibility | Corporate digital responsibility means a kind of digital corporate social responsibility. | The connotation of CDR includes securing autonomy and privacy, respecting equality, dealing with data, dealing with algorithms, taking impact on the environment into account, and ensuring a fair transition. | This paper provides an outline of what digital responsibility is and proposes a Digital Responsibility Index to assess corporate behavior. | [10] |
| | CDR as an awareness of duties | CDR means the awareness of duties binding the organizations active in the field of technological development and using technologies to provide services. | CDR issues relate to the threat of AI and automation to the workforce and business operations. | The author identifies certain theoretical aspects and potential consequences related to threats posed by the development of new technologies, artificial intelligence, automation, and digitalization of social environments on a large scale. | [32] |
| | CDR as a set of shared values | CDR is a set of shared values and norms guiding an organization’s operations with respect to the creation and operation of digital technology and data. | The set of shared values and norms of an organization during the process of its creation of technology; data capture, operations, and decision-making; inspection and impact assessment; and refinement of technology and data. | This article illustrates how an organization’s shared values and norms regarding CDR can be translated into actionable guidelines for users. This provides grounds for future discussions related to CDR readiness, implementation, and success. | [11] |
| | CDR in service context | No explicit definition. | In the service context, CDR encompasses the ethical responsibility inherent in the creation and operation of service technologies and customer data across all functions of a service organization to ensure customers are treated in an ethical and fair manner while their privacy rights are protected. | This article uses a life-cycle stage perspective of data and technologies to understand digital risks, examines an organization’s business model and business partner ecosystem to identify where risks originate, and finally proposes a set of strategies and tools for managers to choose from. | [33] |
| | CDR as a voluntary commitment | CDR is a voluntary commitment by organizations fulfilling the corporate rationalizers’ role in representing community interests to inform “good” digital corporate actions and digital sustainability via collaborative guidance on addressing social, economic, and ecological impacts on digital society. | The contents of CDR include promoting economic transparency, promoting societal wellbeing, reducing tech impact on environment, fair and equitable access for all society, investing in the new economy, promoting a sustainable planet to live on, and purpose and trust. | This paper uses harmonized and aligned approaches, illustrating the opportunities and threats of AI, while raising awareness of Corporate Digital Responsibility (CDR) as a potential collaborative mechanism to demystify governance complexity and to establish an equitable digital society. | [14] |
| | CDR should be considered separately from CSR | No explicit definition. | CDR includes technology access and technological literacy, information transparency, customers’ economic interests, product safety and liability, privacy and data security, dispute resolution and redress, and governance and participation mechanisms. | This paper conceptualizes CDR and gives an empirical analysis on consumers’ valuation of CDR norms and implementations. | [12] |
| | CDR | No explicit definition. | CDR is a set of practices and behaviors that help an organization use data and digital technologies in a way that is socially, economically, technologically, and environmentally responsible. | CDR includes four categories: social, economic, technological, and environmental. | [34] |

| Characteristics | Description | Frequency (N = 202) | Percentage (%) |
|---|---|---|---|
| Founding | More than 10 years | 167 | 82.7 |
| | 5 to 10 years | 17 | 8.4 |
| | Less than 5 years | 18 | 8.9 |
| Ownership | Privately-owned | 129 | 63.9 |
| | State-owned | 51 | 25.2 |
| | Others | 22 | 10.9 |
| Size | Small (fewer than 300 employees) | 103 | 51.0 |
| | Medium (300–1000 employees) | 58 | 28.7 |
| | Large (1000 or more employees) | 41 | 20.3 |
| Industry | Software | 71 | 35.1 |
| | Electrical equipment | 63 | 31.2 |
| | Computers | 43 | 21.3 |
| | Pharmaceuticals | 21 | 10.4 |
| | Others | 4 | 2.0 |

| Construct | Indicators | Measurement |
|---|---|---|
| Corporate digital responsibility | Corporate digitized responsibility | Latent variable scores |
| | Corporate digitalized responsibility | Latent variable scores |
| Corporate digitized responsibility | Unbiased data acquisition | Latent variable scores |
| | Data protection | Latent variable scores |
| | Data maintenance | Latent variable scores |
| Unbiased data acquisition | We emphasize the encoding of analog information into a digital format. | Scale 1 (low)–5 (high) |
| | We emphasize the collection of data that are addressable. | Scale 1 (low)–5 (high) |
| | We emphasize the collection of data that are programmable. | Scale 1 (low)–5 (high) |
| Data protection | We collect the data primarily from public sources. | Scale 1 (low)–5 (high) |
| | We use anonymized data in our business practices. | Scale 1 (low)–5 (high) |
| | We have established a data protection system. | Scale 1 (low)–5 (high) |
| Data maintenance | We update the database regularly. | Scale 1 (low)–5 (high) |
| | We have strict rules for data storage and utilization. | Scale 1 (low)–5 (high) |
| Corporate digitalized responsibility | Appropriate data interpretation | Latent variable scores |
| | Objective predicted results | Latent variable scores |
| | Tackling value conflicts in data-driven decision-making | Latent variable scores |
| Appropriate data interpretation | We use digital technology to make data communicable. | Scale 1 (low)–5 (high) |
| | We use digital technology to make data traceable. | Scale 1 (low)–5 (high) |
| | We use digital technology to make data associable. | Scale 1 (low)–5 (high) |
| Objective predicted results | We use the processed data to promote business analysis. | Scale 1 (low)–5 (high) |
| | We use the processed data to guide operational decision-making. | Scale 1 (low)–5 (high) |
| Tackling value conflicts in data-driven decision-making | We focus on human concern in data-driven business operations. | Scale 1 (low)–5 (high) |
| | We usually integrate data-driven analytics and social value in business decisions. | Scale 1 (low)–5 (high) |

| Corporate Digitized Responsibility | Unbiased Data Acquisition | Data Protection | Data Maintenance |
|---|---|---|---|
| We emphasize the encoding of analog information into a digital format. (Q1-1) | 0.884 | 0.236 | 0.183 |
| We emphasize the collection of data that are addressable. (Q1-2) | 0.902 | 0.236 | 0.184 |
| We emphasize the collection of data that are programmable. (Q1-3) | 0.847 | 0.340 | 0.198 |
| We collect the data primarily from public sources. (Q1-4) | 0.319 | 0.850 | 0.191 |
| We use anonymized data in our business practices. (Q1-5) | 0.255 | 0.886 | 0.218 |
| We have established a data protection system. (Q1-6) | 0.240 | 0.877 | 0.226 |
| We update the database regularly. (Q1-7) | 0.205 | 0.255 | 0.909 |
| We have strict rules for data storage and utilization. (Q1-8) | 0.209 | 0.209 | 0.922 |

| Corporate Digitalized Responsibility | Appropriate Data Interpretation | Objective Predicted Results | Tackling Value Conflicts of Data-Driven Decision-Making |
|---|---|---|---|
| We use digital technology to make data communicable. (Q2-1) | 0.929 | 0.090 | 0.167 |
| We use digital technology to make data traceable. (Q2-2) | 0.909 | 0.111 | 0.182 |
| We use digital technology to make data associable. (Q2-3) | 0.882 | 0.126 | 0.221 |
| We use the processed data to promote business analysis. (Q2-4) | 0.103 | 0.942 | 0.141 |
| We use the processed data to guide operational decision-making. (Q2-5) | 0.133 | 0.931 | 0.179 |
| We focus on human concern in data-driven business operations. (Q2-6) | 0.227 | 0.196 | 0.891 |
| We usually integrate data-driven analytics and social value in business decisions. (Q2-7) | 0.214 | 0.141 | 0.909 |

| Construct/Indicators | Mean | SD | Loading (Item Reliability) | Cronbach’s Alpha | Composite Reliability | AVE (Convergent Validity) |
|---|---|---|---|---|---|---|
| Corporate digitized responsibility | | | | 0.910 | 0.927 | 0.615 |
| Unbiased data acquisition | | | | | | |
| Q1-1 | 3.63 | 1.051 | 0.785 | | | |
| Q1-2 | 3.72 | 1.082 | 0.797 | | | |
| Q1-3 | 3.70 | 1.099 | 0.835 | | | |
| Data protection | | | | | | |
| Q1-4 | 3.93 | 0.853 | 0.824 | | | |
| Q1-5 | 4.03 | 0.795 | 0.819 | | | |
| Q1-6 | 4.03 | 0.799 | 0.808 | | | |
| Data maintenance | | | | | | |
| Q1-7 | 3.86 | 0.930 | 0.705 | | | |
| Q1-8 | 3.78 | 0.966 | 0.684 | | | |
| Corporate digitalized responsibility | | | | 0.843 | 0.881 | 0.519 |
| Appropriate data interpretation | | | | | | |
| Q2-1 | 3.70 | 1.073 | 0.783 | | | |
| Q2-2 | 3.65 | 1.029 | 0.787 | | | |
| Q2-3 | 3.61 | 1.057 | 0.793 | | | |
| Objective predicted results | | | | | | |
| Q2-4 | 3.56 | 0.974 | 0.559 | | | |
| Q2-5 | 3.63 | 1.051 | 0.597 | | | |
| Tackling value conflicts in data-driven decision-making | | | | | | |
| Q2-6 | 3.92 | 0.999 | 0.754 | | | |
| Q2-7 | 4.01 | 0.923 | 0.730 | | | |
| Digital performance | | | | 0.920 | 0.935 | 0.642 |
| Q3-1 | 3.95 | 1.135 | 0.771 | | | |
| Q3-2 | 3.63 | 1.056 | 0.731 | | | |
| Q3-3 | 3.93 | 0.998 | 0.846 | | | |
| Q3-4 | 4.00 | 1.002 | 0.834 | | | |
| Q3-5 | 4.27 | 0.856 | 0.791 | | | |
| Q3-6 | 4.38 | 0.819 | 0.813 | | | |
| Q3-7 | 4.16 | 0.881 | 0.805 | | | |
| Q3-8 | 4.23 | 0.827 | 0.812 | | | |
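
The composite reliability and AVE figures in the table above can be cross-checked from the item loadings alone. The following is a minimal sketch, assuming the loadings are standardized and that the usual Fornell–Larcker-style formulas apply (AVE as the mean squared loading; composite reliability as the squared sum of loadings over that quantity plus the summed error variances 1 − λ²). The function names and the `LOADINGS` dictionary are illustrative and not taken from the paper or from SmartPLS.

```python
# Illustrative check of composite reliability (CR) and AVE from reported loadings.
# Assumption: standardized loadings, so each item's error variance is 1 - loading**2.

def average_variance_extracted(loadings):
    """Mean of the squared loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_variance)


# Loadings copied from the measurement-model table (Q1-1..Q1-8, Q2-1..Q2-7, Q3-1..Q3-8).
LOADINGS = {
    "Corporate digitized responsibility": [0.785, 0.797, 0.835, 0.824, 0.819, 0.808, 0.705, 0.684],
    "Corporate digitalized responsibility": [0.783, 0.787, 0.793, 0.559, 0.597, 0.754, 0.730],
    "Digital performance": [0.771, 0.731, 0.846, 0.834, 0.791, 0.813, 0.805, 0.812],
}

if __name__ == "__main__":
    for construct, lam in LOADINGS.items():
        cr = composite_reliability(lam)
        ave = average_variance_extracted(lam)
        print(f"{construct}: CR = {cr:.3f}, AVE = {ave:.3f}")
```

Under these assumptions, the computed values round to the reported composite reliability and AVE for all three constructs (0.927 and 0.615, 0.881 and 0.519, 0.935 and 0.642). Cronbach’s alpha cannot be recovered this way, since it depends on the item covariances rather than on the loadings.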

| Construct | Weight | t-Value | VIF |
|---|---|---|---|
| Corporate digitized responsibility | 0.650 *** | 22.862 | 1.786 |
| Corporate digitalized responsibility | 0.442 *** | 15.462 | 1.786 |
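
Both constructs show the same VIF of 1.786, which is what one would expect when exactly two indicators form a construct: each VIF equals 1 / (1 − r²), where r is the correlation between the two indicators. The sketch below, which assumes the VIFs were obtained in this standard way, backs out the implied absolute correlation. The function name is hypothetical, and the sign of r cannot be recovered from a VIF.

```python
import math

# Illustrative only: with two predictors, VIF = 1 / (1 - r**2),
# so the absolute correlation between them is sqrt(1 - 1 / VIF).

def implied_abs_correlation(vif):
    """Absolute correlation between two predictors implied by their shared VIF."""
    if vif < 1:
        raise ValueError("A VIF cannot be smaller than 1.")
    return math.sqrt(1 - 1 / vif)


if __name__ == "__main__":
    r = implied_abs_correlation(1.786)
    print(f"Implied |r| between the two dimensions: {r:.3f}")
```

For the reported VIF this gives an absolute correlation of roughly 0.66 between the digitized and digitalized dimensions.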

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, C.; Zhang, M. Conceptualizing Corporate Digital Responsibility: A Digital Technology Development Perspective. Sustainability 2023, 15, 2319. https://doi.org/10.3390/su15032319
