1. Introduction

Campus planning plays a crucial role within the broader field of urban planning. With the rise of sustainable development and urbanization, creating walkable campuses has gained significant attention. In 1990, numerous European and American universities endorsed the Talloires Declaration, which made sustainable campus transportation a top priority and advocated the development of green campuses. By 2003, an additional 293 universities, including many in China, had embraced this initiative. The adoption of sustainable transportation on campus is considered a crucial indicator of campus sustainability, and establishing a walkable campus is recognized as an important means of fostering harmonious campus development.

Currently, the design of motorized roadways within campuses is relatively comprehensive. However, pedestrian pathways, although they are the most frequently used, have not received a proportionate level of attention [1]. This oversight reflects an underestimation of the importance of walkability in campus planning. Walking serves not only as the fundamental and primary mode of transportation within campuses but also as a way of combining leisure, physical fitness, and social interaction. It is low-carbon and environmentally friendly, promotes physical and mental well-being, and delivers a pleasurable experience. These attributes benefit both urban society as a whole and the ecological environment. Consequently, in-depth research aimed at enhancing the quality of walking spaces has become an imperative task [2,3].

The link between walking and health has received widespread attention [4]. The COVID-19 pandemic not only increased disease-related mortality but also triggered a surge in mental health problems [5], which are often difficult to alleviate in the short term [6]. According to the Chinese Association of Mental Health, the incidence of depression and anxiety disorders has been steadily increasing among college students, with depression rates exceeding 10% as of 2021. Numerous studies have shown that brief exposure to natural environments can improve mood [7,8] and reduce stress [9]. Previous research [10] has established that a walkable campus not only reduces carbon emissions but also enhances students' health and overall quality of life. Other studies have explored the role of landscape elements (e.g., green vegetation, architecture, and human activities) in boosting pedestrians' emotional well-being [11]. Walking, as a form of moderate-intensity physical activity, has a positive impact on physical health [12]. Carefully designed pedestrian spaces not only improve walking comfort, health, and well-being but also contribute to environmental sustainability [13].

Micro-design factors play a crucial role in shaping the quality of walking environments and are directly linked to individuals' walking experiences [14]. More attention must therefore be paid to the micro-scale aspects of walking environments, in particular to the attributes of walking spaces that influence students' physiological and psychological perceptions of the environment. Research on human perception during walking at the micro-scale remains limited. According to the Stimulus–Organism–Response (SOR) theory, stimuli consciously or unconsciously affect human emotions or behavior, eliciting a response [15]. However, human perception of environmental stimuli is not a singular, isolated process. Instead, it is a multisensory experience that encompasses visual, auditory, olfactory, and other sensory inputs, which converge into a unified conscious experience. People integrate this sensory information [16] through multimodal perception, a bottom-up cognitive process. This process is also intertwined with human behavior and emotions (top-down signals) [17], yielding a distinct and subjective perceptual experience [18]. Compared with the other senses, vision and hearing perceive space more accurately, including the direction of stimuli. Among studies on audio-visual integration, some suggest that combined audio-visual stimuli can evoke stronger emotional responses [19]. Because research on the multisensory perception of walking space remains limited, this study explores the combined effects of audio-visual environmental factors on walking emotions.

Currently, researchers in China and abroad predominantly adopt a macroscopic, objective approach when assessing the walking environment, leaving a research gap regarding the subjective perspectives and psychological perceptions of individuals within walking spaces. From a physiological perspective, individuals perceive audio-visual cues from the external environment, and these cues trigger responses from the autonomic nervous system (ANS). The ANS comprises the sympathetic nervous system (SNS), which activates during stressful situations, and the parasympathetic nervous system (PNS), which restores balance and homeostasis after stress. Heart rate variability (HRV), evaluated in both the time and frequency domains, arises from variations in the R-R interval. This interval is determined by impulses originating from the sinus node and is influenced by the activity of both the sympathetic and parasympathetic nerves. Consequently, changes in the R-R interval mirror the activation levels of both the SNS and the PNS. Research by Jackson M and Erin Chave further demonstrated that heart rate and HRV correlate directly with an individual's emotional state [20]. Heart rate and HRV are used extensively in medical examinations and respond to various factors such as exercise and emotional arousal [21], so they can represent changes in physical state during walking. HRV therefore serves as a valuable objective tool for assessing emotional changes. Physiological indicators can objectively reflect the influence of audio-visual factors on emotions, whereas subjective assessments are better suited to exploring the meaning that people attach to the environment. Combining physiological data with subjective questionnaires is therefore pivotal for obtaining a comprehensive and accurate assessment.
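The two time-domain HRV indicators used later in this study, RR.mean and RMSSD, can be computed directly from a series of R-R intervals. The sketch below is a minimal illustration that assumes an artifact-free series in milliseconds; the function names and sample values are hypothetical and do not represent the processing pipeline of the ECG devices used in this study.

```python
import math

def rr_mean(rr_ms):
    """Mean R-R interval (ms); longer intervals correspond to a slower heart rate."""
    return sum(rr_ms) / len(rr_ms)

def rmssd(rr_ms):
    """Root mean square of successive differences between adjacent R-R intervals (ms).

    RMSSD is commonly read as an index of parasympathetic (vagal) activity.
    """
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

# Hypothetical, already artifact-corrected R-R series (ms); not data from this study.
rr = [800, 810, 790, 805, 795]
print(rr_mean(rr))  # 800.0
print(round(rmssd(rr), 2))  # 14.36
```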

To date, most studies examining the impact of the environment on emotions have been carried out in controlled laboratory settings. However, because environmental perception engages multiple senses, such experiments are inherently limited in replicating real-world conditions [22,23]. Laboratory settings allow experimental variables to be controlled, but their findings have limited applicability to real-life scenarios, which reduces ecological validity. Conversely, field experiments represent the actual environment more authentically, ensuring greater ecological validity of the outcomes [24]. Accordingly, this study adopts a field measurement method.

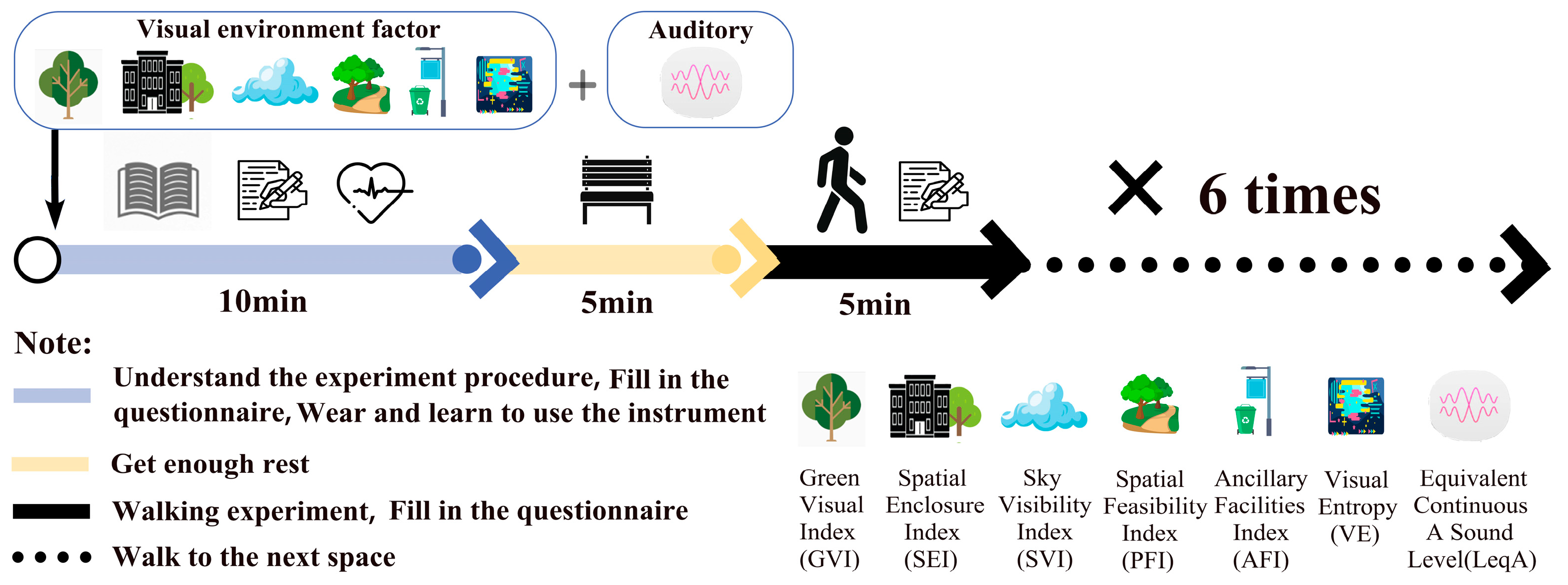

This study conducted comprehensive field measurement experiments to investigate the physiological indicators and subjective assessments of emotional responses in common, representative audio-visual environments within campus walking spaces. The study addressed the following primary objectives:

To explore the correlation between individual audio-visual environmental factors and the physiological and subjective evaluation indicators of emotion.

To examine how varying proportions of audio-visual environmental factors affect physiological and subjective evaluation responses to walking emotions in campus walking spaces.

To investigate whether the physiological and psychological effects of various combinations of audio-visual environmental factors on walking mood are consistent.

To construct multiple linear regression models linking audio-visual environmental factors to emotion perception in campus walking spaces. These models can guide planning and design strategies for campus walking spaces that enhance pedestrians' emotional perception.

3. Results

3.1. Descriptive Statistics and Correlation Analyses

The descriptive statistics of the experimental data are presented in Table 3. Pearson's correlation tests were conducted to analyze the relationships between the audio-visual environmental factors and the physiological indicators and subjective evaluations; the results are displayed in Table 4. Physiological indicators exhibited highly significant correlations (p < 0.01) with all audio-visual environmental factors except AFI, and subjective evaluation results displayed highly significant correlations (p < 0.01) with all audio-visual environmental factors except VE.

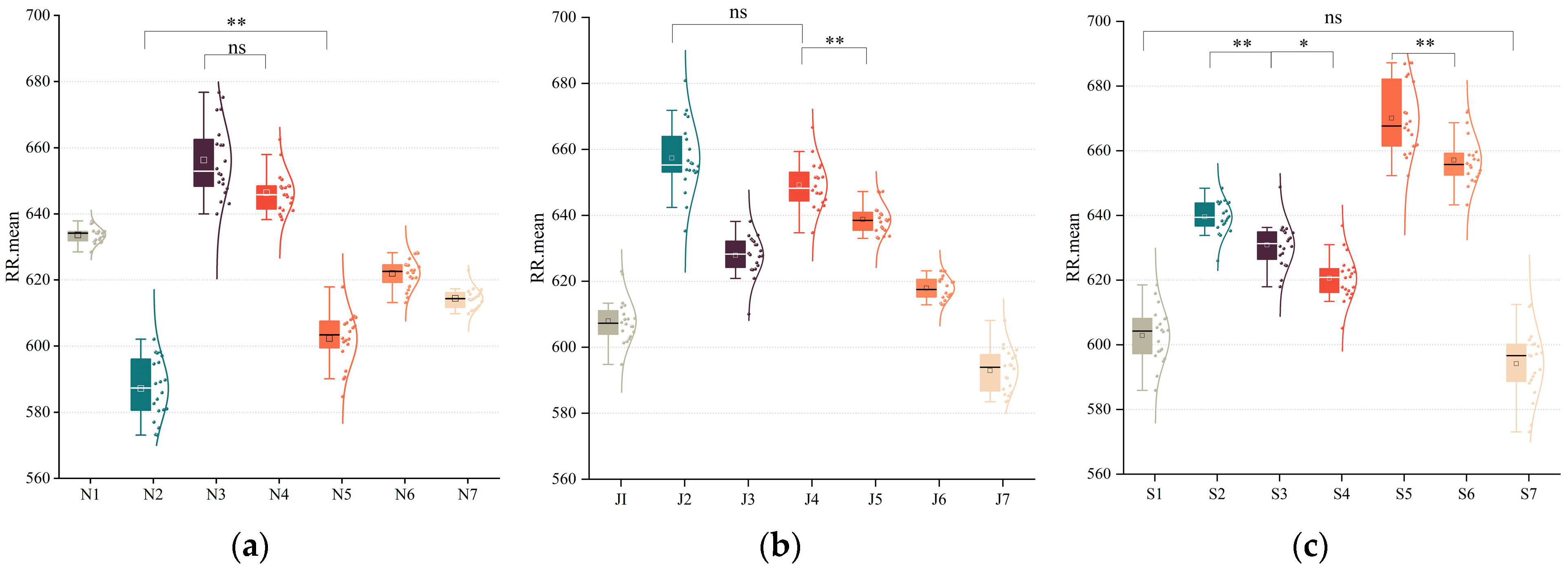
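Pearson's r for each factor-indicator pair, together with the t statistic used to test it against zero, can be sketched without external libraries as follows. The per-site values are hypothetical; the p-value would then come from a t distribution with n − 2 degrees of freedom (for example, scipy.stats.pearsonr returns r and a two-sided p-value together).

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def r_to_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom (requires |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r * r))

def correlation_table(factors, indicators):
    """Correlate every environmental factor with every emotion indicator.

    factors, indicators: dicts mapping a variable name to a list of per-site values.
    Returns {(factor, indicator): (r, t)}.
    """
    table = {}
    for f_name, f_vals in factors.items():
        for i_name, i_vals in indicators.items():
            r = pearson_r(f_vals, i_vals)
            table[(f_name, i_name)] = (r, r_to_t(r, len(f_vals)))
    return table

# Hypothetical values for five sites (illustration only).
factors = {"GVI": [1, 2, 3, 4, 5]}
indicators = {"RMSSD": [2, 4, 5, 4, 5]}
r, t = correlation_table(factors, indicators)[("GVI", "RMSSD")]
print(round(r, 3), round(t, 3))  # 0.775 2.121
```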

3.2. Physiological Effects of Emotions

Repeated measures ANOVAs on the physiological indicator RR.mean for the walking spaces at NEFU, JLU, and SYAU, respectively (Table 5), showed that the main effect of audio-visual environmental factors was significant: F(3.57, 67.87) = 225.82, p < 0.001; F(3.65, 69.31) = 215.61, p < 0.001; and F(3.18, 60.42) = 210.85, p < 0.001. The Greenhouse–Geisser estimates of deviation from sphericity were ε = 0.53, 0.61, and 0.60, respectively, and the effect size (partial η²) was greater than 0.9, indicating that different audio-visual environmental factors have a significant effect on RR.mean.

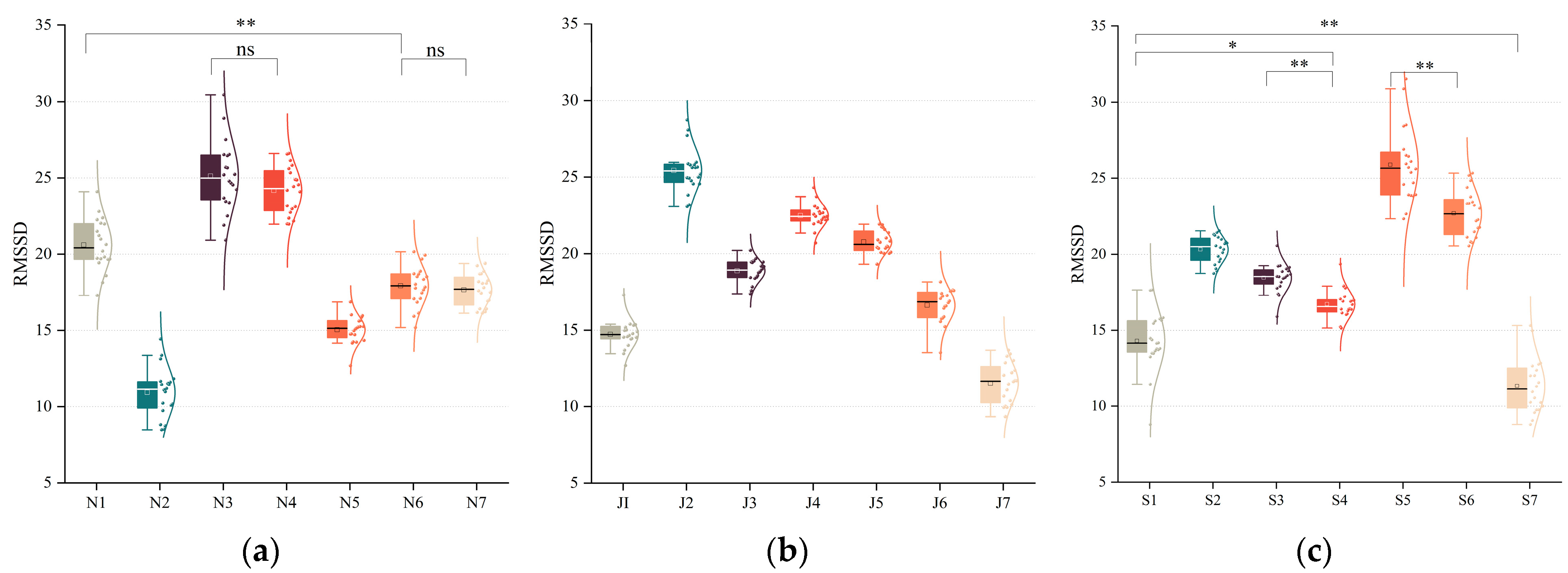
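For a participants × conditions design, the one-way repeated measures F statistic removes between-participant variance from the error term, and partial η² follows from F and its degrees of freedom as F·df1 / (F·df1 + df2). Because the Greenhouse–Geisser correction multiplies both degrees of freedom by the same ε, this ratio is unaffected by the correction; for the first RR.mean test reported above, F(3.57, 67.87) = 225.82, it gives about 0.92. The sketch assumes a complete matrix with no missing values; the function names and toy data are hypothetical.

```python
def rm_anova_oneway(data):
    """One-way repeated measures ANOVA on data[participant][condition].

    Returns (F, df_conditions, df_error, partial_eta_squared), uncorrected for sphericity.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition x participant residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error, ss_cond / (ss_cond + ss_error)

def partial_eta_squared_from_f(f, df1, df2):
    """Recover partial eta squared from a reported F and its (possibly corrected) dfs."""
    return f * df1 / (f * df1 + df2)

# Toy data: 3 participants x 3 walking spaces (hypothetical values).
f, df1, df2, eta = rm_anova_oneway([[1, 2, 3], [2, 3, 5], [3, 4, 4]])
print(round(f, 3), df1, df2, round(eta, 3))  # 9.0 2 4 0.818
print(round(partial_eta_squared_from_f(225.82, 3.57, 67.87), 3))  # 0.922
```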

Repeated measures ANOVAs on RMSSD showed significant main effects of audio-visual environmental factors: F(4.43, 84.10) = 211.55, p < 0.001; F(3.80, 72.21) = 414.16, p < 0.001; and F(3.20, 60.79) = 180.14, p < 0.001. The Greenhouse–Geisser estimates of deviation from sphericity were ε = 0.51, 0.39, and 0.29, and the results indicate significant differences in RMSSD across the experimental sites on campus.

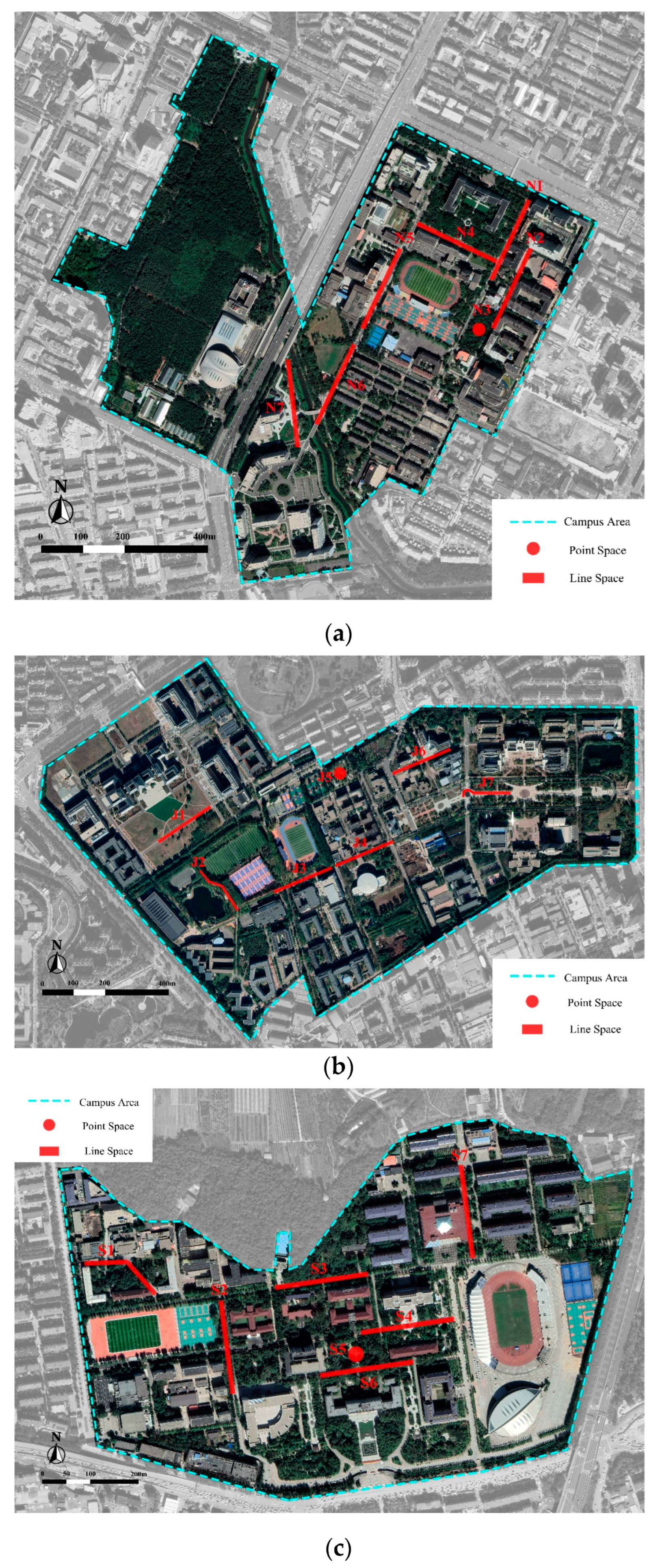

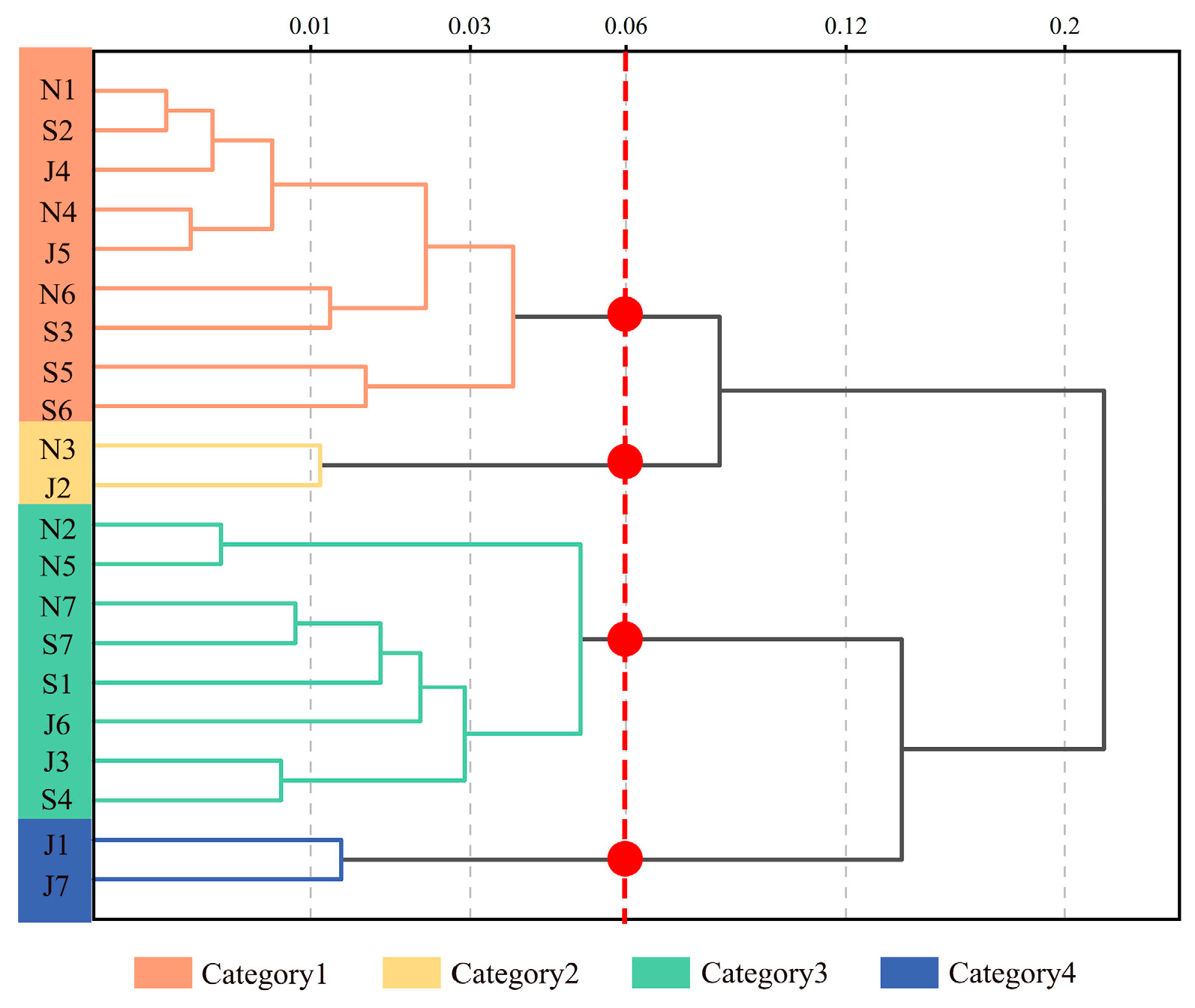
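The Greenhouse–Geisser ε quantifies how far the covariance structure of the repeated conditions departs from sphericity: ε = 1 when sphericity holds, and its lower bound is 1/(k − 1) for k conditions. It can be computed from the double-centred sample covariance matrix S* as ε = (tr S*)² / ((k − 1)·tr(S*²)), and the corrected degrees of freedom are ε(k − 1) and ε(k − 1)(n − 1). The dependency-free sketch below uses hypothetical function names and toy data; statistics packages compute the same quantity.

```python
def greenhouse_geisser_epsilon(data):
    """Greenhouse-Geisser epsilon for data[participant][condition]."""
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    # Sample covariance matrix of the k repeated conditions.
    s = [[sum((row[a] - means[a]) * (row[b] - means[b]) for row in data) / (n - 1)
          for b in range(k)] for a in range(k)]
    # Double-centre S: subtract row and column means, add back the grand mean.
    # S is symmetric, so its column means equal its row means.
    row_m = [sum(s[a]) / k for a in range(k)]
    grand = sum(row_m) / k
    d = [[s[a][b] - row_m[a] - row_m[b] + grand for b in range(k)] for a in range(k)]
    trace = sum(d[a][a] for a in range(k))
    # For a symmetric matrix, tr(D^2) equals the sum of its squared entries.
    trace_sq = sum(d[a][b] ** 2 for a in range(k) for b in range(k))
    return trace ** 2 / ((k - 1) * trace_sq)

def corrected_dfs(epsilon, n, k):
    """Greenhouse-Geisser-corrected (df_conditions, df_error)."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)

# Spherical toy data (uncorrelated, equal-variance condition deviations): epsilon = 1.
spherical = [[11, 10, 10], [9, 10, 10], [10, 11, 10],
             [10, 9, 10], [10, 10, 11], [10, 10, 9]]
print(round(greenhouse_geisser_epsilon(spherical), 6))  # 1.0
```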

Hierarchical cluster analysis (HCA) groups samples into clusters based on their similarity and has been applied in audio-visual studies [31]. Using the measured audio-visual environmental factor data for the 21 walking spaces, HCA classified the 21 experimental locations into four classes of walking spaces (Figure 4); their features are shown in Table 6.

The first category of walking spaces exhibited relatively high GVI, SED, and SFI, along with relatively low SVI and sound pressure level. The second category had the highest GVI and SED and the lowest sound pressure level and SVI. The third category displayed moderate levels of all audio-visual environmental factors. The fourth category had the highest SVI, sound pressure level, SFI, and VE, but the lowest GVI, SED, and AFI. Categorizing the walking spaces by these audio-visual environmental factors makes it possible to investigate how the factors affect emotion in each category of walking space.
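The classification step can be sketched as agglomerative clustering on standardized factor values. The source does not state the linkage method or distance metric, so this sketch assumes z-score standardization, Euclidean distance, and average linkage; other linkages such as Ward's method may yield different clusters. The function names are hypothetical.

```python
import math
from statistics import mean, pstdev

def zscore_columns(rows):
    """Standardize each column (factor) to mean 0 and unit population SD."""
    stats = [(mean(col), pstdev(col)) for col in zip(*rows)]
    return [[(v - m) / s if s > 0 else 0.0 for v, (m, s) in zip(row, stats)]
            for row in rows]

def average_linkage_clusters(points, n_clusters):
    """Agglomerative clustering with average linkage and Euclidean distance.

    Returns a list of clusters, each a list of row indices into `points`.
    """
    clusters = [[i] for i in range(len(points))]

    def linkage(c1, c2):
        # Mean pairwise distance between members of the two clusters.
        total = sum(math.dist(points[a], points[b]) for a in c1 for b in c2)
        return total / (len(c1) * len(c2))

    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = linkage(clusters[i], clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]  # merge the closest pair
        del clusters[j]  # j > i, so index i stays valid
    return clusters

# Usage (hypothetical): rows holds one vector of measured factors per walking space,
# e.g. [GVI, SED, SVI, SFI, AFI, VE, sound level] for each of the 21 sites.
# classes = average_linkage_clusters(zscore_columns(rows), n_clusters=4)
```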

Furthermore, pairwise comparisons were conducted as a simple effects analysis on the walking spaces of the three universities, comparing the mean values of physiological indicators under different audio-visual environmental conditions. The differences in the physiological indicators (RR.mean and RMSSD) among the walking spaces of the three universities are shown in Figure 5 and Figure 6, respectively.

Simple effects analyses revealed significant differences in physiological indicators (RR.mean and RMSSD) among the four types of walking spaces. Specifically, the fourth type of walking space scored significantly higher than the third type (p < 0.01), the third type scored significantly higher than the first type (p < 0.01), and the first type scored significantly higher than the second type (p < 0.01). The analysis found that the first and second categories of walking space had a positive effect on emotion (Table 7).

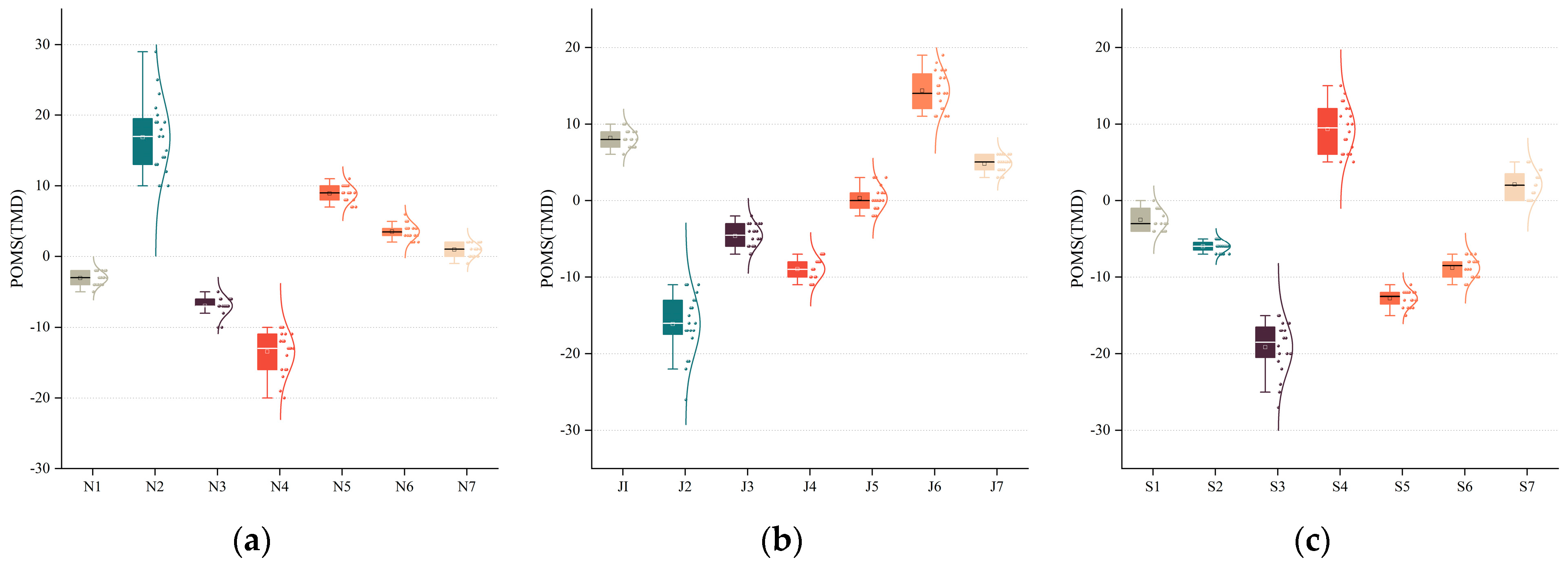
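The pairwise comparisons can be sketched as paired t statistics between two types of walking space, followed by a multiple-comparison adjustment. The source does not state which correction was applied, so this sketch assumes a Bonferroni adjustment; the p-values themselves would come from a t distribution with n − 1 degrees of freedom (for example via scipy.stats.ttest_rel). Function names and input values are hypothetical.

```python
import math
from statistics import mean, stdev

def paired_t(x, y):
    """Paired t statistic and df for two conditions measured on the same participants.

    Requires at least two participants and non-constant differences.
    """
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs) / se, n - 1

def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical RMSSD values (ms) for four participants in two walking-space types.
t, df = paired_t([42.0, 38.5, 45.1, 40.2], [35.3, 33.0, 39.8, 36.1])
print(round(t, 2), df)
print(bonferroni([0.004, 0.02, 0.3]))
```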

3.3. Psychological Effects of Emotions

The results showed that the main effect of audio-visual environmental factors on POMS (TMD) was statistically significant: F(1.75, 33.16) = 328.10, p < 0.001; F(2.30, 43.72) = 489.41, p < 0.001; and F(3.05, 57.93) = 471.14, p < 0.001 (Table 8). Owing to differences in the levels of audio-visual environmental factors among the 21 walking spaces (Figure 7), walking spaces N2, N5, N6, J1, J6, J7, and S4 increased negative emotions, whereas the remaining walking spaces promoted positive emotions. POMS (TMD) was significantly higher in the fourth category of walking spaces than in the first and second categories (p < 0.001), and significantly higher in the third category than in the second category (p < 0.001).
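POMS (TMD) is conventionally computed as the sum of the five negative-mood subscales (Tension, Depression, Anger, Fatigue, Confusion) minus Vigor, so higher values indicate greater mood disturbance. Some POMS versions add a constant to keep totals non-negative, and the source does not state which version or scoring was used. The subscale keys, the scores, and the pre/post comparison below are hypothetical illustrations.

```python
NEGATIVE_SUBSCALES = ("tension", "depression", "anger", "fatigue", "confusion")

def total_mood_disturbance(scores, constant=0):
    """POMS Total Mood Disturbance: negative subscales minus vigor (plus optional constant)."""
    return sum(scores[name] for name in NEGATIVE_SUBSCALES) - scores["vigor"] + constant

def tmd_change(before, after):
    """Hypothetical pre/post comparison: positive values mean mood disturbance increased."""
    return total_mood_disturbance(after) - total_mood_disturbance(before)

before = {"tension": 5, "depression": 3, "anger": 2, "fatigue": 4, "confusion": 1, "vigor": 10}
after = {"tension": 3, "depression": 2, "anger": 1, "fatigue": 3, "confusion": 1, "vigor": 12}
print(total_mood_disturbance(before))  # 5
print(tmd_change(before, after))  # -7
```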

Examining mood fluctuations allows us to pinpoint specific areas that, owing to inadequate design, feel confined and restricted and convey a sense of emptiness and inappropriateness. These spaces contribute to a less favorable pedestrian experience. For instance, Plaza J7 exemplifies these characteristics, as indicated by its low SED score. Similarly, walking space N6 is surrounded by an extensive area of ground cover plants but lacks nearby buildings, which makes it feel unwelcoming. Other spaces create a tense atmosphere because of heavy traffic and the sheer volume of passing vehicles, which can make pedestrians uncomfortable. Notable examples include N2, the primary campus road with dense traffic, and S1, a walking space near the campus gate. Conversely, some spaces come to life and become more engaging because of people passing through, interacting, and communicating with one another. An example is J5, a compact walking space with higher foot traffic, lower noise levels, and a cleaner, more self-contained environment.

3.4. Prediction of Audio-Visual Environmental Factors and Emotions

This research employed multiple linear regression models to investigate the impact of various audio-visual environmental factors on the perception of walking emotions. The aim was to analyze comprehensively the characteristics of a campus environment that encourages enjoyable walking and to offer practical suggestions for campus walking space design. AFI and VE were excluded from the model analysis because they were not associated with the physiological indicators or the subjective evaluation indicators (Table 9, Table 10, Table 11 and Table 12).

The tables provide strong evidence that audio-visual environmental factors significantly influence both the physiological indicators and the subjective evaluation indicators of emotional change ( > 60). All three models passed the F-test, with F statistics of 391.073, 459.667, and 125.423, respectively. Notably, GVI emerged as the dominant visual environmental factor, with substantial effects on both physiological and subjective evaluation indicators in all three models; its β coefficients were −0.792, −0.834, and −0.897.
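Standardized β coefficients, like those reported for GVI, are the ordinary least squares coefficients obtained after z-scoring the outcome and every predictor, which makes effects comparable across factors measured in different units. The dependency-free sketch below solves the normal equations directly; it omits the F-test, R², and collinearity diagnostics that a statistics package (for example, statsmodels' OLS) would report, and the function names and data are hypothetical.

```python
from statistics import mean, pstdev

def standardize(values):
    """z-score a list using the population SD (zero-variance inputs are not supported)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

def solve_linear(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]

def standardized_betas(predictors, outcome):
    """Standardized OLS coefficients.

    predictors: dict mapping factor name -> per-site values; outcome: per-site values.
    With all variables z-scored, the intercept is zero and is therefore omitted.
    """
    names = list(predictors)
    z = [standardize(predictors[name]) for name in names]
    zy = standardize(outcome)
    xtx = [[sum(p * q for p, q in zip(zi, zj)) for zj in z] for zi in z]
    xty = [sum(p * q for p, q in zip(zi, zy)) for zi in z]
    return dict(zip(names, solve_linear(xtx, xty)))

# Hypothetical per-site data for five walking spaces (illustration only).
betas = standardized_betas(
    {"GVI": [0.20, 0.35, 0.50, 0.30, 0.60], "SVI": [0.40, 0.30, 0.10, 0.35, 0.05]},
    [760, 790, 840, 780, 860],
)
print({name: round(beta, 3) for name, beta in betas.items()})
```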

4. Discussion

This study investigated the impact of various audio-visual environmental factors on the emotional experience of walking on campuses. Building on previous studies [32,33], it combined these factors with physiological indicators of emotion and subjective evaluations to explore the underlying mechanisms, with the aim of optimizing campus walking spaces. Field measurements ensured ecological validity, and the real-time objective physiological indicators RR.mean and RMSSD were measured with portable electrocardiograph devices. Combined with heart rate variability analysis, these devices provided objective insights into individuals' cognitive and emotional responses in built environments.

Unlike previous studies that often focused on urban streets and public spaces [34,35], this research concentrated on campuses, examining specific locations and populations. Prior research has shown that walking in green environments yields more favorable psychological and physiological responses than walking in suburban settings [36,37,38]. Creating successful walkable environments requires attention to various physical factors, including pedestrian facilities, safety, and comfortable walking spaces [39,40,41]. More pedestrian space, pedestrian guidance facilities, and green areas are associated with higher pedestrian satisfaction [42].

The field experiments in 21 pedestrian spaces revealed that all visual environmental factors significantly influenced emotion. Among these factors, spatial form features (SEI) and spatial environmental features (GVI and SVI) played crucial roles in affecting emotion, especially its physiological effects. In particular, GVI emerged as the dominant factor influencing emotional effects, in line with prior research emphasizing the importance of greenery in enhancing pedestrians' overall experience [43].

Previous research has indicated a significant correlation between noise satisfaction and overall environmental satisfaction [44]. This study corroborated these findings by demonstrating that quieter walking areas led to more positive emotional experiences for walkers; noise is therefore a crucial consideration in designing walking spaces. The audio-visual environmental factors also acted jointly on emotion, with the combined effect of GVI, SEI, SVI, SFI, AFI, VE, and sound pressure level emerging as a key influence. Whereas prior studies examined the impact of individual audio-visual environmental factors on emotion perception, this research went a step further by exploring their combined effects. Additionally, Li and Kang [45] found that when environments were presented as audio-visual interactions, participants' responses closely matched those evoked by the simulated situation, and their physiological sensations were more consistent with their subjective perceptions. Environmental quality planning and renovation strategies should therefore integrate visual and auditory environmental factors: enriching the layering of the campus plant landscape, improving pavement construction, siting public facilities strategically, introducing enclosed spaces, simplifying interface complexity, mitigating noise pollution, and creating pedestrian-friendly campus pathways. Together, these measures promote a heightened sense of comfort for pedestrians, both subjectively and physiologically.

This study revealed that combining physiological and subjective emotional data reflects emotional responses more comprehensively. Emotion and mood development begins with an affective appraisal of the situation, which is subsequently accompanied by physiological changes within the body [46]. The comparison in this study showed some differences between the emotional data captured by physiological sensors and the environmental experiences that participants reported in questionnaires; together, the two sources depict changes in emotion effectively. This paper therefore recommends including physiological indicators as complementary measures to subjective assessments when evaluating the overall quality of campus pedestrian spaces.

Advances in neurocognitive science and in the measurement and analysis of electrophysiological signals have created new opportunities to observe human emotional experience directly. Although emotions may appear complex, psychologists have reduced most emotions to a two-dimensional model of emotional valence and arousal [47]. Based on this model, an emotion research center of the U.S. National Institute of Mental Health found, through extensive experiments with emotional picture stimuli, that both dimensions can be effectively reflected in electrocardiographic and other physiological signals. Some studies have further demonstrated that both dimensions of emotion can be recorded by measuring physiological responses. In this research domain, collecting both subjective and objective data from walkers is pivotal to understanding their relationship with the audio-visual environment of the walking space.

However, the study has some limitations. Participants' familiarity with the campus environment can influence their physiological and psychological responses to the same walking space, because participants familiar with an environment tend to be more sensitive to its nuances. In real-world environments, individuals often perform tasks other than fully experiencing their surroundings. In such cases, audio-visual perception may be affected by salience, an attentional mechanism through which elements that stand out from the environment are passively or actively selected. Future studies on auditory environmental factors should consider overall sound complexity and the impact of specific sound sources on emotion.

As technology continues to develop, audio-visual experiments increasingly use virtual reality (VR) technology to present environments [48,49]. Future research could explore how to integrate physiological measurements with VR instruments and incorporate additional physiological indices.

Future research could further investigate the effects of audio-visual environments on physiological emotion indicators and subjective evaluations from the perspectives of audio-visual coordination, audio-visual salience, and audio-visual preferences.