Abstract

Digital platforms were among the educational resources in use before the COVID-19 pandemic. The pandemic, however, led to a complete shift to learning via digital platforms, making them a strategic alternative for sustainable education. Because previous studies of the impact of digital platforms on learning outcomes have yielded conflicting results, the present study aims to determine whether digital platforms improve learning outcomes. To this end, a meta-analysis was conducted to examine the overall effect size of these platforms on learning outcomes, as well as the effect sizes associated with a set of moderator variables: study duration, subject area, student classification, and publication type. Thirty studies published between 2015 and 2021 that compared learning via digital platforms with learning in traditional classrooms were included. This period was chosen because it coincides with the fourth industrial revolution, during which the use of digital platforms in teaching flourished. The overall effect size under the random-effects model (g = 0.278; p < 0.001; α = 0.05) was small and positive, with a 95% confidence interval of 0.123–0.433, in favor of learning via digital platforms, and no evidence of publication bias was found in these data. The results may help universities and e-learning centers decide how to use digital platforms to improve learning outcomes.

1. Introduction

Higher education institutions have integrated information and communication technologies (ICT) into the educational process and used digital platforms to support student learning, teaching methods, and faculty members. As a result, the use of digital platforms has grown significantly in recent years, especially in higher education [1]. Attention has focused on digital platforms and their associated collaborative tools as a means of providing opportunities for knowledge sharing and supporting higher-order thinking skills through conversation and active learning [2]. In addition, the COVID-19 pandemic forced educational institutions in many countries to shift to e-learning and to use digital platforms in the educational process [3,4]. Digital platforms are educational tools that enhance learning processes and provide a set of tools for synchronous and asynchronous interaction and communication among students on the one hand, and between teachers and students on the other. They also facilitate participation in different tasks [5].

Numerous digital platforms can be used in the educational process to provide a flexible and reliable learning environment [6], including Blackboard, Moodle, Canvas, Edmodo, and Google Classroom [7,8]. To be used successfully, these platforms should be adapted to the requirements and needs of teaching and learning [9]. Blackboard is one of the most popular and widely used digital platforms in education because of its ease of use, access to educational resources, interaction and collaboration tools, ability to generate reports and statistics on student achievement, and many other advantages [10,11]. Furthermore, digital platforms share a set of common characteristics, such as active interaction based on learner participation and positive interaction with the knowledge and tasks available to students. They are also flexible, scalable, and versatile [12]. In addition to assessment, digital platforms make it possible to carry out tests and assignments, provide feedback to learners, provide content for each course, support interactive educational resources, provide discussion forums, allow live chat between learners and teachers and among learners themselves, and conduct video conferencing [13].

Digital platforms are a vital learning environment for supporting sustainable education, as they include several tools that motivate students to continue learning [14]. They also provide tools that enhance the participatory construction of knowledge, which helps maintain the impact of learning and supports the sustainability of learning outcomes [15]. Digital platforms facilitate independent learning skills, which are considered among the most important inputs for sustainable education [16]. Sustainable education entails learning environments that support cognitive thinking and achievement motivation, which can be attained through the digital tools and instructional design strategies that these platforms make available [17]. Digital platforms also have the potential to improve wellbeing [18], which, given the correlation between wellbeing and sustainability [19], highlights their ability to promote educational sustainability. Recently, many studies have examined digital platforms and their impact on different learning outcomes, but their results reveal no consensus on the effectiveness of these platforms. Some studies confirmed a positive impact of digital platforms on various learning outcomes [20,21,22,23,24,25,26,27,28]. During the COVID-19 pandemic, digital platforms continued to show a positive effect on learning outcomes [17,29]. Other studies [30,31,32,33,34,35,36] showed no effect of digital platforms on different learning outcomes.

Accordingly, the present study aims to bridge this gap regarding the impact of digital platforms on learning outcomes, based on evidence extracted from previous studies. Because of the pandemic, educational institutions all over the world moved to online learning, and digital platforms became the only way to continue the educational process. Studies of this kind are therefore of great importance, and meta-analysis can provide quantitative evidence about the impact of digital platforms on learning outcomes. Meta-analysis can also indicate whether an intervention has a positive impact on learning outcomes [37]. In addition, it can determine the strength and direction of the effect and help reconcile varied results [38,39].

In short, the present study aims to determine the effect of digital platforms on learning outcomes through a meta-analysis of previous studies, taking several moderator variables into account. It mainly aims to answer the following questions:

- What is the effect of digital platforms on higher education students’ learning outcomes?
- What is the effect of digital platforms on higher education students’ learning outcomes with respect to study duration?
- What is the effect of digital platforms on higher education students’ learning outcomes with respect to subject area?
- What is the effect of digital platforms on higher education students’ learning outcomes with respect to student classification?
- What is the effect of digital platforms on higher education students’ learning outcomes with respect to publication type?

2. Literature Review

Digital platforms are networks that provide an effective learning environment and interactive support for students [40]. A digital platform contains a set of tools that support students’ learning, such as videos, discussion forums, chat forums, assignments, and quizzes [41]. Interactions through digital platforms are not very different from traditional interactions in the face-to-face classroom. Through these platforms, students and teachers can exchange concepts and information and assess and solve problems that students may face during the learning process [42,43,44,45].

In education, digital platforms have many advantages. For example, learning through these platforms promotes learner-centered approaches and ensures the learner’s active participation [46]. Moreover, digital platforms facilitate the delivery of content in various ways to account for individual differences between learners [47]. They also allow the learner to communicate within a multimedia-rich environment that enhances the frequent retrieval of information [48] and provide an educational environment conducive to group thinking and collaborative learning [49], which is beneficial in developing the learner’s individual and collaborative skills [50]. Furthermore, they help teachers organize learning activities and plan their lessons [51], reduce administrative tasks such as attendance registration, and make the teacher easier to reach through the means of communication available within the platform [52]. Digital platforms have also been found effective for learners’ well-being [18,53], self-regulated learning [54], and motivation [29].

In recent years, many studies have examined the effect of digital platforms on different learning outcomes, but their results conflict. Akbari, Heidari Tabrizi, and Chalak [21] investigated the effect of teaching via digital platforms on English reading comprehension compared with face-to-face methods; students taught via digital platforms outperformed those taught by traditional methods. In contrast, Fayaz and Ameri–Golestan [34] found that traditional teaching methods were more effective than teaching via digital platforms in enhancing English vocabulary acquisition. Elfaki, Abdulraheem, and Abdulrahim [22] showed that digital platforms were effective in improving nursing students’ academic performance and satisfaction with learning, whereas Aalaa, Sanjari, Amini, Ramezani, Mehrdad, Tehrani, Bigdeli, Adibi, Larijani, and Sohrabi [30] concluded that nursing students taught by traditional methods outperformed their peers who studied via digital platforms. Sáiz-Manzanares, Marticorena-Sánchez, Díez-Pastor, and García-Osorio [25] compared digital platforms with traditional teaching for hypermedia design skills and found digital platforms effective in improving students’ learning outcomes in media skills. On the other hand, Hu–Hui [36] found digital platforms ineffective in improving students’ learning outcomes in design skills and reported that learning via digital platforms reduced students’ satisfaction with the learning process.

Handayanto, Supandi, and Ariyanto [28] examined the impact of learning via the Moodle platform in mathematics education and found a significant increase in students’ mathematics achievement. Fatmi, Muhammad, Muliana, and Nasrah [33], however, showed that Moodle was not effective in promoting the learning outcomes of physics students, who did not fully meet the expected goals. Jusue, Alonso, Gonzalez, and Palomares [23] evaluated learning through Moodle for medical students’ clinical skills; students in the experimental group outperformed their peers in the control group, who studied by the traditional method. By contrast, Balakrishnan, Nair, Kunhikatta, Rashid, Unnikrishnan, Jagannatha, Chandran, Khera, and Thunga [31] found that students taught by traditional methods performed better; that is, digital platforms were not effective in promoting students’ achievement in clinical research units. Wu, Yu, Yang, Zhu, and Tsai [55] explored the impact of digital platforms on students’ skills, particularly computing skills, and found that students who studied via digital platforms performed better. Callister and Love [32], in contrast, showed that the skills of students taught in the traditional classroom were better than those of their peers who learned via digital platforms. In brief, the inconsistency in these findings shows the need for further studies, including the present one, to verify the effect of digital platforms on learning outcomes through a meta-analysis of previous studies.

3. Theoretical Framework

The use of technology in general, and of digital platforms in particular, is related to several educational theories. For instance, digital platforms are connected to cognitive theory, which holds that the learner’s mind processes information in a way similar to a computer: it perceives and recognizes information coming from the outside, then stores it for retrieval when needed [56]. In this view, the teacher manages the learning process by directing, explaining, and organizing information or knowledge, which is treated as an external reality that the learner should integrate into his or her mental schemas and then reuse, rather than as a set of observable behaviors to acquire [57,58]. The preferred teaching method, in turn, should allow multiple learning paths that account for individual differences in how learners process information. Furthermore, the theory is concerned with internal mental processes and how they can be used to motivate learners, since motivation is an important and critical factor for learning [59,60,61].

When considering digital platforms and their link to educational theories, it is also important to consider constructivism, because digital learning platforms are based on constructivist principles. They enable users to build content and to modify and adapt it to support participation and discussion; for example, users can create and open forums, start group discussions, and close them at a specific time for the purpose of specific educational activities [62,63,64,65,66]. In addition, digital platforms support learners’ active and collaborative participation, in which knowledge is built by strengthening participatory learning through discussion and social dialogue, with a focus on building knowledge rather than transferring it [67,68,69].

Moreover, digital platforms are linked to social learning theory, which postulates that learners learn better when they receive appropriate support from peers and teachers [70]. Social interactions are of great importance because knowledge is constructed jointly by two or more people [60,71]. Learning is a social by-product of conversation and negotiation among learners, who gain knowledge through their participation in related social activities [72]. Accordingly, digital platforms provide tools for social interaction, including chat, discussion groups, blogs, and content sharing [73].

Connectivism, in turn, treats learning as a network of personal knowledge created by engaging learners in socialization and online interaction [63]. It holds that the learning process consists of nodes joined by links, where nodes represent online information, whether text, audio, or visual. Links, for their part, stand for the learning process itself, that is, the effort made to join these nodes together into a network of personal knowledge [74,75]. According to connectivism, a distance learning environment depends largely on learning design: such an environment is not just a set of courses or programs but is built on specific characteristics that encourage continuous learning, communication, engagement, and active participation [76,77].

4. Materials and Methods

4.1. Methodology

The present study followed the meta-analysis procedures suggested by Glass, McGaw, and Smith [78]: collecting relevant studies, coding study features, calculating the effect sizes of the outcome measures for each study, and investigating the moderating effects of study characteristics on the outcome measures.

4.2. Literature Search

Several terms that describe digital platforms were used to search for literature relevant to the study’s topic. Terms such as LMS, digital platforms, distance learning, e-learning, learning management system, online, learning, education, teaching, and “Not Covid-19” were searched for in the titles, abstracts, and keywords of previous studies. The term “Not Covid-19” was used because studies conducted during the pandemic differ in nature from those conducted before it. Only studies carried out from 2015 to 2021 were considered, because this period falls within the fourth industrial revolution, which witnessed a boom in the use of digital platforms in education. The search was conducted in the Web of Science and Scopus databases because of their quality and their coverage of many other databases; Appendix A lists all analyzed references from the selected databases. The studies that met the inclusion criteria of the present study were then included in the meta-analysis.

4.3. Inclusion and Exclusion Criteria

A set of inclusion and exclusion criteria was developed, as shown in Table 1. To be included, a study had to be published between 2015 and December 2021 and not be set in the context of the COVID-19 pandemic, because it is difficult to standardize the comparison between digital platforms and traditional educational environments during the pandemic. The study also had to be experimental in nature, using an experimental or quasi-experimental design that compares the effect of the digital classroom on student outcomes with that of the traditional classroom. It had to be conducted in undergraduate or postgraduate education. Furthermore, it had to report sufficient data to calculate effect sizes, such as means, standard deviations, and sample sizes. Student learning outcomes had to be clearly defined before the experiment and measured with quantitative data, and the learning content had to be the same for the digital and traditional classrooms. Finally, the study had to be published in English.

Table 1. Inclusion and exclusion criteria.

4.4. Identification and Selection of Publications

4.4.1. The First Phase

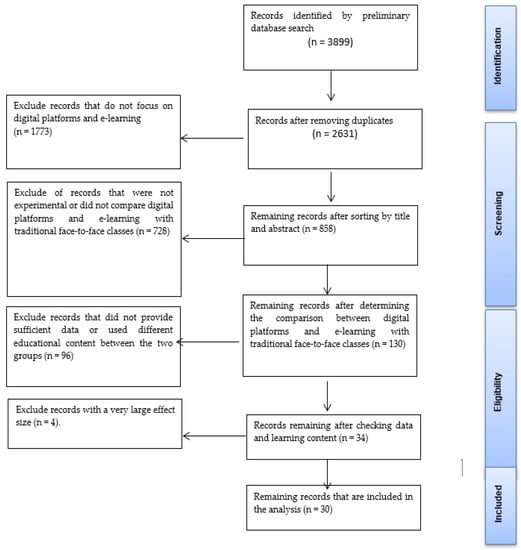

During the first phase of publication selection, the title and abstract of each study were read to ensure relevance to digital platforms. Only refereed journal articles and conference papers were included, and articles unrelated to digital platforms were excluded, leaving 3899 publications. All duplicates across the databases were removed. After identification and screening, 858 publications were retained, as shown in Figure 1.

Figure 1. PRISMA flow diagram.

4.4.2. The Second Phase

During the second phase, studies that compared education via digital platforms with traditional face-to-face classrooms were identified. Studies that implemented learning via digital platforms were carefully examined to determine whether they made this comparison. At the end of the second phase, 130 publications remained.

4.4.3. The Third Phase

During the third phase, the methodological designs and the data reported by each study were examined. Studies that did not provide sufficient data for calculating effect sizes (means, standard deviations, and sample sizes) were excluded, as were studies that used different educational content for the digital and face-to-face classes. Only experimental or quasi-experimental designs that compared the intervention (digital platforms) with a control (traditional face-to-face instruction) were included. Studies that reported multiple student learning outcome measures, such as a final test score or a score on a final project, were included as well. In each case, the data were used to calculate effect sizes using the appropriate equations. Section 4.6 gives more details about the statistical models used. A PRISMA flowchart, adapted from [79], showing the literature search process and the number of studies identified, screened, and included in the meta-analysis is presented in Figure 1.

4.5. Coding Features and Procedures

All studies were coded using a set of relevant variables including study time duration, subject area, student classification, and publication type. Data were extracted to calculate effect sizes using the mean scores, standard deviations, and sample size of each study. The final list of coding included, for example, time duration less than a semester, a whole semester, or more; subject areas such as social science, computer science, arts and humanities, health, science, or business; students’ classifications, whether undergraduates or postgraduates; and publication type, such as a journal article or conference paper. Therefore, the final list included these criteria:

- Author Details: Author’s last name.
- Year: The year in which the publication was published.
- Database Source: Database used to locate the publication.
- Publication Title: Title of the publication.
- Publication Place: Name of the journal or conference where the publication was published.
- Publication Type: Type of publication (journal article or conference paper).
- Subject Area: Discipline in which the study was conducted (arts and humanities, health, computer science, social sciences, science, or business).
- Student Classification: Population from which the sample was chosen (undergraduates or postgraduates).
- Study Duration: Length of the study timeframe.
- Quantitative Data: Quantitative data reported by the study to calculate the effect size.
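The coding list above can be pictured as one record per effect size. The following is a minimal sketch, not the authors' actual coding sheet: the class name, field names, and the exact category labels are hypothetical, chosen only to mirror the listed features and the subgroup labels used in the Results.

```python
from dataclasses import dataclass

# Category labels mirror the coding list; the constant names are hypothetical.
PUBLICATION_TYPES = {"journal article", "conference paper"}
SUBJECT_AREAS = {
    "arts and humanities", "health", "computer science",
    "social sciences", "science", "business",
}
STUDENT_CLASSIFICATIONS = {"undergraduate", "postgraduate"}
DURATIONS = {"less than a semester", "one or more semesters", "not reported"}


@dataclass(frozen=True)
class CodedStudy:
    """One coded comparison (hypothetical structure, for illustration only)."""
    author: str
    year: int
    database: str
    title: str
    venue: str
    publication_type: str
    subject_area: str
    student_classification: str
    duration: str
    # Quantitative data needed to compute an effect size.
    n_digital: int
    mean_digital: float
    sd_digital: float
    n_traditional: int
    mean_traditional: float
    sd_traditional: float

    def __post_init__(self) -> None:
        # Reject values outside the coding scheme so coders stay consistent.
        checks = (
            ("publication_type", self.publication_type, PUBLICATION_TYPES),
            ("subject_area", self.subject_area, SUBJECT_AREAS),
            ("student_classification", self.student_classification,
             STUDENT_CLASSIFICATIONS),
            ("duration", self.duration, DURATIONS),
        )
        for name, value, allowed in checks:
            if value not in allowed:
                raise ValueError(f"{name}={value!r} is not in the coding scheme")
        if not 2015 <= self.year <= 2021:
            raise ValueError("year must fall within the 2015-2021 inclusion window")
```

Validating at construction time is one simple way to catch coding slips early; a spreadsheet with restricted drop-down values would serve the same purpose.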

Before coding the final list, the members of the research team individually coded a small sample of articles and then discussed the coding scheme and results, including differences of opinion about the best way to accurately code the variables included in the meta-analysis. The journal articles were then divided equally among the researchers and coded independently. After reviewing a random sample of publications, the research team developed the coding scheme. Coding was carried out with frequent contact among the participating researchers: whenever questions about coding arose, the team met to discuss them until a consensus was reached. This level of communication ensured that the coding was performed consistently. After the coding process was finished, the quantitative coding was reviewed, and some errors were identified and corrected.

4.6. Effect Size Extraction and Calculations

Comprehensive Meta-Analysis (CMA) version 3.0 was used to estimate the effect sizes of the thirty-four publications identified through the systematic procedures. Four publications were later excluded because their effect sizes were too high, leaving thirty eligible publications for analysis. Effect sizes were extracted in three domains: cognitive (e.g., achievement), affective (e.g., satisfaction), and behavioral (e.g., retention rates). However, the present study reports only the cognitive domain because the reviewed studies reported little about students’ behavioral and affective outcomes. The thirty included articles provide thirty-four effect size comparisons.

Cohen’s d is the difference between the outcome means of the two groups divided by the pooled standard deviation:

$$d = \frac{\bar{X}_1 - \bar{X}_2}{s_{\text{pooled}}}$$

The pooled standard deviation is calculated from the standard deviations of the two groups, $s_1$ and $s_2$, and the group sample sizes, $n_1$ and $n_2$:

$$s_{\text{pooled}} = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}}$$

Hedges’ g and Cohen’s d behave similarly with large samples, but Hedges’ g has better properties for small samples. It is equal to Cohen’s d multiplied by a correction factor J that adjusts for small-sample bias:

$$g = J \times d, \qquad J = 1 - \frac{3}{4N - 9}$$

where $N = n_1 + n_2$ is the total sample size.
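A minimal Python sketch of these effect-size calculations follows. It assumes the standard textbook definitions (a pooled standard deviation weighted by n − 1, and the common approximation J = 1 − 3/(4N − 9)); the function names are illustrative, and the variance formula is the usual large-sample approximation rather than anything stated in this study.

```python
import math


def pooled_sd(s1: float, n1: int, s2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))


def cohens_d(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> float:
    """Standardized mean difference (group 1 minus group 2)."""
    return (m1 - m2) / pooled_sd(s1, n1, s2, n2)


def hedges_g(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> tuple[float, float]:
    """Return (g, variance of g) using the small-sample correction J."""
    d = cohens_d(m1, s1, n1, m2, s2, n2)
    n_total = n1 + n2
    j = 1 - 3 / (4 * n_total - 9)          # assumed approximation of J
    var_d = n_total / (n1 * n2) + d**2 / (2 * n_total)
    return j * d, j**2 * var_d


# Hypothetical group statistics: digital platform vs. traditional classroom.
g, var_g = hedges_g(10.0, 4.0, 20, 8.0, 4.0, 20)
```

With these hypothetical inputs, d is 0.5 and J shrinks it slightly, which illustrates why the correction matters mainly for small samples.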

All data were analyzed under the random-effects model at α = 0.05 [80]. Random-effects models are more appropriate when the effect sizes of the studies included in the meta-analysis differ from one another. The random-effects model was chosen for the present study because the measures of students’ cognitive outcomes differ from one study to another. Subsequent subgroup analyses were carried out using mixed-effects analysis (MEA) as implemented in the Comprehensive Meta-Analysis program. An effect size of 0.2 is considered small, 0.5 medium, and 0.8 large [81].
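To illustrate what pooling under a random-effects model involves, here is a minimal sketch that assumes the DerSimonian-Laird method-of-moments estimator of the between-study variance. The text does not state which estimator the software applied, so this choice, the function name, and the returned keys are all assumptions made for illustration.

```python
import math


def random_effects_pool(effects: list[float], variances: list[float]) -> dict:
    """Pool study effect sizes with DerSimonian-Laird random-effects weights."""
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")

    # Fixed-effect (inverse-variance) weights and mean, needed for Q.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q and the method-of-moments estimate of tau^2.
    q = sum(wi * (yi - fixed_mean) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # Random-effects weights add tau^2 to every study's variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    sum_w_star = sum(w_star)
    mean = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum_w_star
    se = math.sqrt(1.0 / sum_w_star)

    return {
        "mean": mean,
        "se": se,
        "z": mean / se,
        "ci95": (mean - 1.96 * se, mean + 1.96 * se),
        "q": q,
        "df": df,
        "tau2": tau2,
        "i2": max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0,
    }
```

When the studies agree closely, tau² collapses to zero and the result matches a fixed-effect analysis; the larger the heterogeneity, the more evenly the weights are spread across studies.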

To assess the likelihood that the present meta-analysis omitted non-significant results, the classic fail-safe N test [82] was used; it gives the number of unpublished null-result studies that would be needed to render the overall effect statistically non-significant. In addition, Orwin’s fail-safe N test was used to determine the number of missing null studies required to reduce the current effect size to a trivial level [83]. Publication bias was thus assessed using the classic fail-safe N, Orwin’s fail-safe N, and visual inspection of the funnel plot.
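The two fail-safe N statistics can be sketched as follows, assuming the commonly cited forms of Rosenthal's and Orwin's formulas. The function names and the example inputs are hypothetical, and the one-tailed default for the critical value is an assumption, since the criterion used in the analysis is not reported here.

```python
def rosenthal_fail_safe_n(z_values: list[float], z_alpha: float = 1.645) -> float:
    """Classic fail-safe N: null studies needed to make the combined Z non-significant.

    z_alpha = 1.645 corresponds to a one-tailed alpha of 0.05 (assumed default);
    a two-tailed criterion would use 1.96.
    """
    k = len(z_values)
    return sum(z_values) ** 2 / z_alpha**2 - k


def orwin_fail_safe_n(k: int, mean_effect: float, trivial_effect: float,
                      missing_mean: float = 0.0) -> float:
    """Orwin's fail-safe N: missing studies (averaging `missing_mean`) needed to
    pull the mean effect down to `trivial_effect`."""
    if trivial_effect <= missing_mean:
        raise ValueError("trivial_effect must exceed the mean of the missing studies")
    return k * (mean_effect - trivial_effect) / (trivial_effect - missing_mean)


# Hypothetical inputs: 10 effect sizes averaging 0.30, trivial level 0.10.
n_orwin = orwin_fail_safe_n(k=10, mean_effect=0.30, trivial_effect=0.10)
n_rosenthal = rosenthal_fail_safe_n([2.0, 2.0, 2.0, 2.0])
```

Orwin's version is often preferred for interpretation because it targets a substantively trivial effect rather than statistical significance.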

5. Results

In any meta-analysis, effect sizes must be standardized before the analysis begins. Hedges’ g was therefore chosen as the standardized effect size measure for continuous variables because it controls small-sample bias better than Cohen’s d.

The final data set for the meta-analysis consisted of thirty publications and thirty-four effect sizes identified through searching, filtering, coding, and extraction. The total sample comprised N = 8293 students, who studied either via digital platforms (N = 3725) or in traditional face-to-face classes (N = 4568). The included studies were published between 2015 and 2021 in a wide range of journals and conference proceedings.

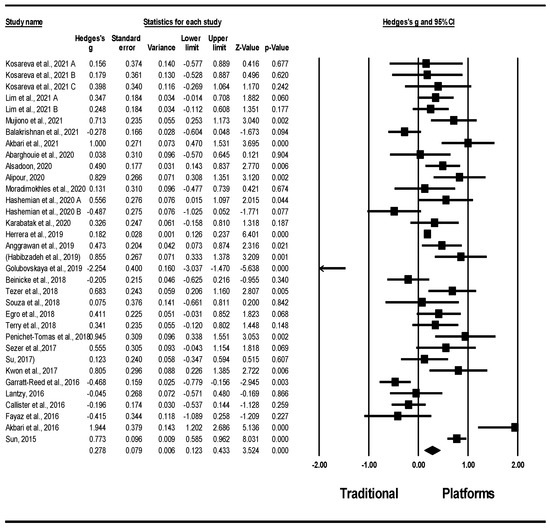

5.1. Overall Effect of Digital Platforms on Students’ Learning Outcomes

A total of thirty-four effect sizes were estimated from the thirty published studies. Visual representations were used to present the effect size data effectively [84], including the forest plot shown in Figure 2. To summarize the effect sizes collected in this study, the data in Figure 2 are arranged in the following order: study author (first author’s family name), year of publication, Hedges’ g, standard error, variance, confidence interval, Z-value, and p-value. Small squares indicate the point estimate of the effect size in each study, and the horizontal line through each square represents the confidence interval for that estimate. The diamond at the bottom of the forest plot represents the mean effect size after pooling all studies, together with its confidence interval.

Figure 2. Forest plot of student learning outcomes [20,21,24,31,32,34,35,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107].

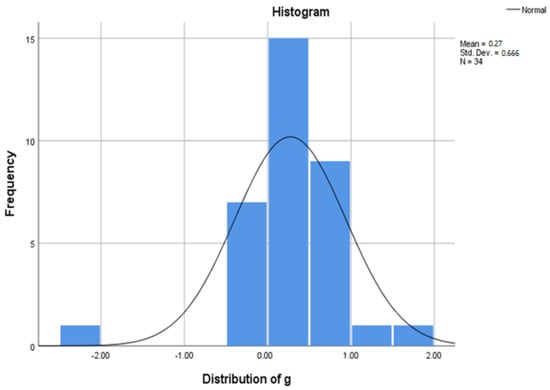

Hedges’ g was normally distributed, as shown in Figure 3. The overall effect size under the random-effects model was g = 0.278, a small effect [81]. It was statistically significant (Z = 3.524, p < 0.001), with a 95% confidence interval from 0.123 to 0.433. Nevertheless, the observed effect size varied from one study to another, and a certain amount of this variance was expected due to sampling error. The Q statistic tests the null hypothesis that all studies in the analysis share the same effect size [80]. The Q value was 188.16 with 33 degrees of freedom (p < 0.001), so the null hypothesis that the true effect size is identical in all studies can be rejected. The value I² = 82.462% indicates that about 82% of the observed variance reflects differences in true effect sizes rather than sampling error [80]. Higgins and Thompson [108] suggest that I² values of around 25%, 50%, and 75% can be interpreted as low, medium, and high heterogeneity, respectively. The I² of the overall model therefore shows a high level of heterogeneity and indicates that one or more moderator variables, other than sampling error, may explain this variance. Exploring potential moderator variables, in the form of contextual features of the studies, is therefore essential.
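As a quick arithmetic check, the reported I² and confidence interval can be recovered from the other reported statistics. The sketch below assumes the usual definitions I² = (Q − df)/Q and SE = g/Z with a normal critical value of 1.96; the function names are illustrative.

```python
def i_squared(q: float, df: int) -> float:
    """Percentage of observed variance attributed to true heterogeneity."""
    return max(0.0, (q - df) / q) * 100.0


def ci_from_z(estimate: float, z_value: float, z_crit: float = 1.96) -> tuple[float, float]:
    """Recover a confidence interval from an estimate and its Z statistic."""
    se = estimate / z_value
    return estimate - z_crit * se, estimate + z_crit * se


# Values reported in the text: Q = 188.16, df = 33, g = 0.278, Z = 3.524.
i2 = i_squared(188.16, 33)          # about 82.46
low, high = ci_from_z(0.278, 3.524)  # about 0.123 and 0.433
```

Both results agree with the reported figures to three decimal places, which suggests the summary statistics are internally consistent.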

Figure 3. Histogram of effect sizes.

5.2. Study Duration

Table 2 shows the distribution of effect sizes by study duration. The largest number of effect sizes (k = 18) was in the “less than a semester” group, with N = 1672 participating students and a small-to-medium effect size (g = 0.424, p < 0.001). Fourteen effect sizes were in the “one or more semesters” group, with N = 6545 students and an effect size of g = 0.111 (p = 0.380). Duration was not reported in only two studies, with N = 76 students and an effect size of g = 0.083 (p = 0.865). The effect size favored students who studied via digital platforms over peers in traditional classrooms in all three duration groups. The effect size of studies lasting less than one semester was greater than that of studies lasting one or more semesters and that of the studies that did not report their duration. However, the difference between the duration groups was not statistically significant (Q = 3.442, p = 0.179).
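The p-value attached to a between-group Q statistic comes from a chi-square distribution with (number of groups − 1) degrees of freedom. The sketch below is a standard-library-only illustration, assuming that convention; the function name is hypothetical, and the closed-form series it uses is valid only for integer degrees of freedom.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc term plus a finite series in x^i / (1*3*...*(2i+1)).
    tail = math.erfc(math.sqrt(half))
    m = (df - 1) // 2
    if m == 0:
        return tail
    term, total = 1.0, 1.0
    for i in range(1, m):
        term *= x / (2 * i + 1)
        total += term
    return tail + math.sqrt(2.0 * x / math.pi) * math.exp(-half) * total


# Study duration: three groups, so df = 2; reported Q = 3.442.
p_duration = chi2_sf(3.442, 2)  # about 0.179
```

The same function reproduces the other reported subgroup tests under the same assumption, for example Q = 0.770 with df = 1 for student classification.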

Table 2. Study duration effect sizes.

5.3. Subject Area

Table 3 shows the effect sizes by subject area for digital platforms versus traditional classrooms. The subject areas were arts and humanities, business, computer science, health care, science, and social sciences, with 14, 1, 4, 11, 1, and 3 effect sizes, respectively. The largest number of effect sizes (k = 14) was in arts and humanities, with a small-to-medium effect size at g = 0.313, p = 0. The largest effect size was in computer science, which included four studies with N = 308 participating students, at g = 0.507, p < 0. However, the other four domains, namely arts and humanities, business, health care, and social sciences, were not statistically significant, with 95% confidence intervals overlapping zero. These works had a negative effect size (g = −0.196, p = 0.259) in favor of the traditional classroom condition. Nevertheless, the confidence interval overlapped zero, indicating that this result was not statistically significant. Moreover, the differences between subject areas were statistically significant (Q = 13.279, p = 0.02).

Table 3. Subject area effect sizes.

5.4. Student Rating

Table 4 shows the effect sizes by the level of the participating students. There were eight effect sizes for postgraduate students (k = 8, N = 588) and twenty-six effect sizes for undergraduate students (k = 26, N = 7705). Undergraduates who used digital platforms showed a small-to-medium advantage, at g = 0.321, p < 0.001. However, the effect size for postgraduate students was small, at g = 0.093, p = 0. This effect was not statistically significant. In addition, the difference between the two student levels was not statistically significant (Q = 0.770, p = 0.380).

Table 4.

Effect sizes of student rating.

5.5. Publication Type

Table 5 shows the effect sizes by publication type. Scientific articles accounted for the vast majority of effect sizes, with N = 3141 participating students and a small-to-medium effect size at g = 0.317, p = 0. That is, students in these articles performed better with digital platforms than in traditional classrooms. In contrast, conference papers contributed k = 4 effect sizes with a negative effect size (g = −0.088, p = 0.820), indicating that students in traditional classrooms performed better than their peers on digital platforms. However, the confidence interval overlapped zero, and the difference by publication type was not statistically significant (Q = 1.034, p = 0.309).

Table 5.

Publication type effect sizes.

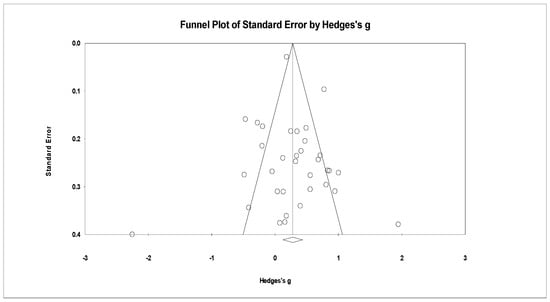

5.6. Publication Bias

Visual inspection of the funnel plot shows a generally symmetrical distribution around the mean effect size, with a small number of outliers (Figure 4). A funnel plot is a scatter plot of the effect sizes estimated from the studies in a meta-analysis against their standard errors [109]. In the diagram, Hedges’ g is plotted on the horizontal axis and the standard error on the vertical axis. A symmetrical funnel plot generally indicates no publication bias in the meta-analysis [110]. Because visual inspection alone is insufficient, the Classic fail-safe N test and Orwin’s fail-safe N test were also used. The Classic fail-safe N test showed that 513 additional studies on the effect of digital platform use on students’ learning outcomes would be required to negate the overall effect in the present meta-analysis. This number exceeds the 5k + 10 threshold [111]. Orwin’s fail-safe N test was used to estimate the number of missing null studies required to bring the average overall effect size in the present study down to a trivial level (g = 0.01). The agreement among the Classic fail-safe N test, Orwin’s fail-safe N test, and the funnel plot indicates that publication bias is not a serious threat to the validity of this meta-analysis.

Figure 4.

Funnel plot of effect size data.
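The two fail-safe N checks described above can be sketched as follows. This is an illustration under stated assumptions, not the procedure of the software used in the study: the function names are hypothetical, k is assumed to count the 34 effect sizes reported in the moderator analyses (18 + 14 + 2), and the inputs passed to the Orwin formula are invented purely to show how the formula behaves.

```python
def rosenthal_tolerance(k: int) -> int:
    """The 5k + 10 rule of thumb: a fail-safe N above this level is taken as robust."""
    return 5 * k + 10


def orwin_fail_safe_n(k: int, mean_effect: float, criterion: float,
                      missing_effect: float = 0.0) -> float:
    """Orwin's fail-safe N: number of missing studies averaging `missing_effect`
    needed to pull the mean effect down to `criterion`."""
    if not missing_effect < criterion < mean_effect:
        raise ValueError("expected missing_effect < criterion < mean_effect")
    return k * (mean_effect - criterion) / (criterion - missing_effect)


k = 34                     # assumption: number of effect sizes, not number of studies
classic_fail_safe_n = 513  # value reported in the text

print(rosenthal_tolerance(k))                        # 180
print(classic_fail_safe_n > rosenthal_tolerance(k))  # True

# Hypothetical inputs, chosen only to illustrate the formula
print(orwin_fail_safe_n(k=10, mean_effect=0.5, criterion=0.25))  # 10.0
```

Under the assumption k = 34, the reported fail-safe N of 513 is well above the tolerance level of 180. Counting the 30 included studies instead would lower the threshold to 160 and lead to the same conclusion.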

6. Discussion

Learning via digital platforms has become a strategic and essential alternative in today’s educational process since the closure of higher education institutions caused by the Covid-19 pandemic, and not only in times of emergency. It is therefore essential to have evidence about the effectiveness of such platforms in improving and maintaining learning outcomes. Thus, the present study aims to shed light on the effectiveness of digital platforms in improving undergraduate and postgraduate students’ learning outcomes through a comprehensive review of previous studies that used digital platforms as an educational environment. Its results may also help determine whether or not digital platforms are effective and shed light on some design elements of digital platforms that can improve students’ learning outcomes.

The main conclusion of this meta-analysis is that digital platforms have a positive impact on students’ learning outcomes. The analysis covered 8293 students and revealed an effect size of g = 0.278 under the random-effects model, which is considered a small positive effect according to Cohen’s criteria [81]. No publication bias was detected using three bias assessment methods: visual inspection of the funnel plot, the Classic fail-safe N test, and Orwin’s fail-safe N test.
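The overall estimate can be cross-checked against the confidence interval reported in the abstract (0.123–0.433). The following sketch assumes a symmetric 95% interval based on the normal approximation (critical value 1.96) and uses the conventional benchmarks of 0.2, 0.5, and 0.8 for small, medium, and large effects. The function names and the label "negligible" for values below 0.2 are illustrative choices, not taken from the study.

```python
import math


def summary_from_ci(g: float, lower: float, upper: float, z_crit: float = 1.96):
    """Recover the standard error, z statistic, and two-sided p-value from a
    point estimate and its symmetric confidence interval (normal approximation)."""
    se = (upper - lower) / (2 * z_crit)
    z = g / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return se, z, p


def cohen_label(g: float) -> str:
    """Conventional benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    size = abs(g)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


se, z, p = summary_from_ci(g=0.278, lower=0.123, upper=0.433)
print(f"SE = {se:.3f}, z = {z:.2f}, p < 0.001: {p < 0.001}")
# SE = 0.079, z = 3.52, p < 0.001: True
print(cohen_label(0.278))  # small
```

Under these assumptions, the interval implies a standard error of about 0.079 and a z statistic of about 3.5, which is consistent with the reported p < 0.001 and with the description of the effect as small.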

This conclusion corroborates previous studies that reported a positive impact of digital platforms on learning outcomes [20,21,25,26,27]. Nonetheless, it disagrees with other studies that did not find any positive effect of digital platforms on learning outcomes [30,31,32,33,34,35,36].

In addition, this meta-analysis showed that the largest number of effect sizes came from studies whose duration was less than one semester. In all cases, whether the study period was less than one semester or one semester or more, students who studied via digital platforms outperformed their peers who studied in traditional classrooms. Studies with a shorter intervention also had a greater effect size than studies with a longer one, although this difference was not statistically significant (Q = 3.442, p = 0.179).

With regard to subject area, the analysis showed statistically significant differences between fields. Significant effects were found in computer science and science in particular. The largest effect size was in computer science, with a medium size. Business was the only field with a negative effect size, which suggests that learning via digital platforms was less effective in business than in other fields; however, this estimate was based on a single study. No other domain showed a negative effect size in favor of traditional classrooms, although the effect sizes of three domains (arts and humanities, health care, and social sciences) were not statistically significant, with 95% confidence intervals overlapping zero. The field of science, on the other hand, showed a small and statistically significant effect size. These results raise questions such as the following: Why has the impact of digital platforms been studied in some areas but not in others? Is it because of the nature of the material and learning? Do these disciplines need more effort and the use of strategies appropriate to them? Are there reasons related to the faculty members and students of these disciplines? These questions can serve as a pathway for future research.

It is worth mentioning that the study sample was limited to the undergraduate and postgraduate levels. The largest number of effect sizes came from undergraduate students, for whom studying through digital platforms outperformed studying in traditional classes with a small-to-medium, statistically significant effect size. The effect size for postgraduate students, on the other hand, was small and not significant, with a 95% confidence interval overlapping zero, and was based on relatively few studies.

With regard to publication type, inclusion was limited to articles and conference papers. Articles made up the vast majority of the studies that met the inclusion criteria, and their effect size was small-to-medium and statistically significant. Conference papers, on the other hand, had a negative effect size, in favor of traditional classrooms rather than digital platforms. Nevertheless, this effect was not statistically significant, and its confidence interval overlapped zero. Overall, there were no statistically significant differences between publication types.

During the search and inclusion process, it was noticed that the majority of studies focused on students’ learning outcomes by comparing learning via digital platforms with learning in traditional classrooms, without attention to the design elements and educational strategies used in either setting. In addition, the majority of studies focused on students’ test scores without making clear what skill levels were tested. Therefore, it is hoped that future studies will focus more on the effect of learning via digital platforms on students’ higher-order thinking skills, mainly application, analysis, and evaluation.

Another important aspect is that some studies used design elements within digital platforms that attracted the researchers’ attention during the search and inclusion process. These studies were not included in the present analysis because they compared an experimental group that used the design element on the digital platform with a control group that used the digital platform in the traditional way, with no group taught in traditional classrooms. These design elements can be divided into technical design elements and educational design elements. Among the most important technical design elements are the following:

- Adaptive learning: studies [112,113,114,115,116] compared a digital platform that uses a proposed adaptive electronic environment with a digital platform that uses the traditional electronic environment.

- Gamification: studies [117,118] compared gamification with traditional e-learning across digital platforms, either by adding gamification elements to an existing digital platform such as Blackboard or by developing a dedicated digital platform that includes them.

Among the most important educational design elements used in previous studies via digital platforms are the following:

- Problem-based learning, a design element that some studies, such as [119,120], used and compared with a traditional electronic environment via digital platforms.

- Feedback, a design element used by [121,122,123,124].

- Cooperative learning [125], project-based learning [126], and self-organized learning [54,127].

It is therefore hoped that future studies will focus more on the different design elements of digital platforms and examine them further. Finally, it was noticed that the Moodle, Blackboard, and iSpring digital platforms, as well as Skype, Zoom, and Google Plus, were used in some studies.

There are some limitations that should be taken into account when interpreting the results of the present study. The analysis focused on studies conducted with undergraduate and postgraduate students, without reference to general education. It also focused on cognitive learning outcomes rather than behavioral and emotional ones because of the shortage of studies examining them. Educational practitioners must therefore consider these points when deciding whether or not to implement learning via digital platforms. In addition, studies conducted in the context of the COVID-19 pandemic were not included because that period was a special, exceptional case in which learning was entirely remote. Finally, some studies were excluded because they did not provide sufficient data to calculate effect sizes.

7. Ethical Considerations

The research team declares that they have no material interests or personal relationships that could influence the work presented in the present study. The data can also be accessed by contacting the authors.

8. Conclusions

This meta-analysis drew on studies of digital platforms to evaluate their overall effect on learners’ cognitive learning outcomes in comparison with traditional classrooms. A small positive impact of studying via digital platforms was found. Some intermediate variables, namely study duration, subject area, students’ classification, and publication type, were also analyzed. The review also showed that some design elements of digital platforms affect their performance. Among these were technical design elements such as adaptive learning and gamification and educational design elements such as problem-based learning, feedback, collaborative learning, project-based learning, and self-organized learning. The findings of the present study can be used to show how digital platforms can enhance cognitive learning outcomes. In the post-Covid-19 era, educational institutions can rely on the results of this study when redeveloping their digital platform systems, as the general use of digital platforms tends to be of little value without considering the adaptive characteristics that these platforms can offer learners and the gamification elements that can enhance students’ motivation and improve the sustainability of digital platforms. It is also important to link the platform tools to the learning strategies. Institutions can also propose future research on the effects of the design elements of digital platforms on learning outcomes and on the structure of variables that make a digital platform affect learning outcomes more effectively. Future studies should also develop instructional design models for adaptive platforms and examine motivational platforms and their impact on learning outcomes. Finally, a meta-analysis of studies that focused on digital platforms in the Covid-19 era may lead to new approaches to the use of digital platforms in education.

Author Contributions

Conceptualization, W.S.A. and F.M.A.; methodology, W.S.A. and F.M.A.; software, F.M.A.; validation, W.S.A. and F.M.A.; formal analysis, W.S.A. and F.M.A.; investigation, W.S.A. and F.M.A.; resources, W.S.A. and F.M.A.; data curation, W.S.A. and F.M.A.; writing—original draft preparation, W.S.A. and F.M.A.; writing—review and editing, W.S.A. and F.M.A.; visualization, W.S.A. and F.M.A.; supervision, W.S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The appendix includes the analyzed studies, including their classification within the Scopus and ISI databases, with full reference citations for each study.

Table A1.

List of studies analyzed in Web of Science and Scopus.

| No. | Author | Year of Publication | Source: Web of Science / Scopus | Publication Type |
|---|---|---|---|---|
| 1 | (Kosareva, Demidov, Ikonnikova, & Shalamova, 2021) [85] | 2021 | ✓ ✓ | Article |
| 2 | (Lim & Yi, 2021) [24] | 2021 | ✓ ✓ | Article |
| 3 | (Mujiono & Herawati, 2021) [86] | 2021 | ✓ ✓ | Article |
| 4 | (Balakrishnan et al., 2021) [31] | 2021 | ✓ ✓ | Article |
| 5 | (Akbari, Heidari Tabrizi, & Chalak, 2021) [21] | 2021 | ✓ ✓ | Article |
| 6 | (Abarghouie, Omid, & Ghadami, 2020) [87] | 2020 | ✓ ✓ | Article |
| 7 | (Alsadoon, 2020) [88] | 2020 | ✓ ✓ | Article |
| 8 | (Alipour, 2020) [89] | 2020 | ✓ ✓ | Article |
| 9 | (Moradimokhles & Hwang, 2020) [90] | 2020 | ✓ ✓ | Article |
| 10 | (Hashemian & Farhang-Ju, 2020) [91] | 2020 | ✓ ✓ | Article |
| 11 | (Karabatak & Polat, 2020) [92] | 2020 | ✓ ✓ | Article |
| 12 | (Herrera & Valenzuela, 2019) [93] | 2019 | ✓ | Conference Paper |
| 13 | (Anggrawan, Yassi, Satria, Arafah, & Makka, 2019) [94] | 2019 | ✓ ✓ | Conference Paper |
| 14 | (Habibzadeh et al., 2019) [95] | 2019 | ✓ ✓ | Article |
| 15 | (Golubovskaya, Tikhonova, & Mekeko, 2019) [96] | 2019 | ✓ | Conference Paper |
| 16 | (Beinicke & Bipp, 2018) [97] | 2018 | ✓ ✓ | Article |
| 17 | (Tezer & Çimşir, 2018) [98] | 2018 | ✓ ✓ | Article |
| 18 | (Souza, Mattos, Stein, Rosário, & Magalhães, 2018) [99] | 2018 | ✓ ✓ | Article |
| 19 | (Egro, Tayler-Grint, Vangala, & Nwaiwu, 2018) [100] | 2018 | ✓ ✓ | Article |
| 20 | (Terry, Terry, Moloney, & Bowtell, 2018) [101] | 2018 | ✓ ✓ | Article |
| 21 | (Penichet-Tomas, Jimenez-Olmedo, Pueo, & Carbonell-Martinez, 2018) [102] | 2018 | ✓ | Conference Paper |
| 22 | (Sezer, Karaoglan Yilmaz, & Yilmaz, 2017) [103] | 2017 | ✓ | Article |
| 23 | (Su, 2017) [104] | 2017 | ✓ ✓ | Article |
| 24 | (Kwon & Block, 2017) [105] | 2017 | ✓ ✓ | Article |
| 25 | (Garratt-Reed, Roberts, & Heritage, 2016) [35] | 2016 | ✓ ✓ | Article |
| 26 | (Lantzy, 2016) [106] | 2016 | ✓ ✓ | Article |
| 27 | (Callister & Love, 2016) [32] | 2016 | ✓ | Article |
| 28 | (Fayaz & Ameri-Golestan, 2016) [34] | 2016 | ✓ | Article |
| 29 | (Akbari, Naderi, Simons, & Pilot, 2016) [20] | 2016 | ✓ ✓ | Article |
| 30 | (Sun, 2015) [107] | 2015 | ✓ | Article |

References

- Cerezo, R.; Sánchez-Santillán, M.; Paule-Ruiz, M.P.; Núñez, J.C. Students’ LMS interaction patterns and their relationship with achievement: A case study in higher education. Comput. Educ. 2016, 96, 42–54.

- Zanjani, N. The important elements of LMS design that affect user engagement with e-learning tools within LMSs in the higher education sector. Australas. J. Educ. Technol. 2017, 33, 19–31.

- Karakose, T. Emergency remote teaching due to COVID-19 pandemic and potential risks for socioeconomically disadvantaged students in higher education. Educ. Process Int. J. 2021, 10, 53–62.

- Secundo, G.; Mele, G.; Vecchio, P.D.; Elia, G.; Margherita, A.; Ndou, V. Threat or opportunity? A case study of digital-enabled redesign of entrepreneurship education in the COVID-19 emergency. Technol. Forecast. Soc. Change 2021, 166, 120565.

- Valencia, H.G.; Villota Enriquez, J.A.; Agredo, P.M. Strategies Used by Professors through Virtual Educational Platforms in Face-to-Face Classes: A View from the Chamilo Platform. Engl. Lang. Teach. 2017, 10, 1–10.

- Turnbull, D.; Chugh, R.; Luck, J. Learning management systems: An overview. In Encyclopedia of Education and Information Technologies; Springer: Cham, Switzerland, 2019; pp. 1–7.

- Ayouni, S.; Menzli, L.J.; Hajjej, F.; Madeh, M.; Al-Otaibi, S. Fuzzy Vikor Application for Learning Management Systems Evaluation in Higher Education. Int. J. Inf. Commun. Technol. Educ. 2021, 17, 17–35.

- Waheed, M.; Kaur, K.; Ain, N.; Hussain, N. Perceived learning outcomes from Moodle: An empirical study of intrinsic and extrinsic motivating factors. Inf. Dev. 2016, 32, 1001–1013.

- Juricic, V.; Radosevic, M.; Pavlina, A.P. Characteristics of Modern Learning Management Systems. In Proceedings of the 14th International Technology, Education and Development Conference, Valencia, Spain, 2–4 March 2020; pp. 7685–7693.

- Aldiab, A.; Chowdhury, H.; Kootsookos, A.; Alam, F.; Allhibi, H. Utilization of Learning Management Systems (LMSs) in higher education system: A case review for Saudi Arabia. Energy Procedia 2019, 160, 731–737.

- Ouyang, J.R.; Stanley, N. Theories and research in educational technology and distance learning instruction through Blackboard. Univers. J. Educ. Res. 2014, 2, 161–172.

- Fernández, R.; Gil, I.; Palacios, D.; Devece, C. Technology platforms in distance learning: Functions, characteristics and selection criteria for use in higher education. In Proceedings of the WMSCI 2011, the 15th World Multi-Conference on Systemics, Cybernetics and Informatics 2011, Orlando, FL, USA, 19–22 July 2011; pp. 309–314.

- Shkoukani, M. Explore the Major Characteristics of Learning Management Systems and their Impact on e-Learning Success. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 296–301.

- Alturki, U.; Aldraiweesh, A. Application of Learning Management System (LMS) during the COVID-19 Pandemic: A Sustainable Acceptance Model of the Expansion Technology Approach. Sustainability 2021, 13, 10991.

- Alzahrani, F.K.J.; Alshammary, F.M.; Alhalafawy, W.S. Gamified Platforms: The Impact of Digital Incentives on Engagement in Learning During Covide-19 Pandemic. Cult. Manag. Sci. Educ. 2022, 7, 75–87.

- Li, X.; Xia, Q.; Chu, S.K.; Yang, Y. Using Gamification to Facilitate Students’ Self-Regulation in E-Learning: A Case Study on Students’ L2 English Learning. Sustainability 2022, 14, 7008.

- Alshammary, F.M.; Alhalafawy, W.S. Sustaining Enhancement of Learning Outcomes across Digital Platforms during the COVID-19 Pandemic: A Systematic Review. J. Posit. Sch. Psychol. 2022, 6, 2279–2301.

- Alhalafawy, W.S.; Zaki, M.Z. The Effect of Mobile Digital Content Applications Based on Gamification in the Development of Psychological Well-Being. Int. J. Interact. Mob. Technol. 2019, 13, 107–123.

- Weziak-Bialowolska, D.; Koosed, T.; Leon, C.; McNeely, E.; OECD, H. A new approach to the well-being of factory workers in global supply chains: Evidence from apparel factories in Mexico, Sri Lanka, China and Cambodia. In Measuring the Impacts of Business on Well-Being and Sustainability; OECD, HEC Paris, SnO Centre: Paris, France, 2017; pp. 130–154.

- Akbari, E.; Naderi, A.; Simons, R.J.; Pilot, A. Student engagement and foreign language learning through online social networks. Asian-Pac. J. Second Foreign Lang. Educ. 2016, 1, 4.

- Akbari, J.; Heidari Tabrizi, H.; Chalak, A. Effectiveness of virtual vs. non-virtual teaching in improving reading comprehension of Iranian undergraduate EFL students. Turk. Online J. Distance Educ. 2021, 22, 272–283.

- Elfaki, N.K.; Abdulraheem, I.; Abdulrahim, R. Impact of E-Learning vs Traditional Learning on Student’s Performance and Attitude. Int. J. Med. Res. Health Sci. 2019, 8, 76–82.

- Jusue, A.V.; Alonso, A.T.; Gonzalez, A.B.; Palomares, T. Learning How to Order Imaging Tests and Make Subsequent Clinical Decisions: A Randomized Study of the Effectiveness of a Virtual Learning Environment for Medical Students. Med. Sci. Educ. 2021, 31, 469–477.

- Lim, H.; Yi, Y. Effects of a web-based education program for nurses using medical malpractice cases: A randomized controlled trial. Nurse Educ. Today 2021, 104, 104997.

- Sáiz-Manzanares, M.C.; Marticorena-Sánchez, R.; Díez-Pastor, J.F.; García-Osorio, C.I. Does the use of learning management systems with hypermedia mean improved student learning outcomes? Front. Psychol. 2019, 10, 88.

- Wu, H.C.; Shen, P.D.; Chen, Y.F.; Tsai, C.W. Effects of Web-based Cognitive Apprenticeship and Time Management on the Development of Computing Skills in Cloud Classroom: A Quasi-Experimental Approach. Int. J. Inf. Commun. Technol. Educ. 2016, 12, 1–12.

- Zare, M.; Sarikhani, R.; Salari, M.; Mansouri, V. The Impact of E-Learning on University Students’ Academic Achievement and Creativity. J. Tech. Educ. Train. 2016, 8, 25–33.

- Handayanto, A.; Supandi, S.; Ariyanto, L. Teaching using moodle in mathematics education. J. Phys. Conf. Ser. 2018, 1013, 012128.

- Alanzi, N.S.; Alhalafawy, W.S. A Proposed Model for Employing Digital Platforms in Developing the Motivation for Achievement Among Students of Higher Education During Emergencies. J. Posit. Sch. Psychol. 2022, 6, 4921–4933.

- Aalaa, M.; Sanjari, M.; Amini, M.R.; Ramezani, G.; Mehrdad, N.; Tehrani, M.R.M.; Bigdeli, S.; Adibi, H.; Larijani, B.; Sohrabi, Z. Diabetic foot care course: A quasi-experimental study on E-learning versus interactive workshop. J. Diabetes Metab. Disord. 2021, 20, 15–20.

- Balakrishnan, A.; Nair, S.; Kunhikatta, V.; Rashid, M.; Unnikrishnan, M.K.; Jagannatha, P.S.; Chandran, V.P.; Khera, K.; Thunga, G. Effectiveness of blended learning in pharmacy education: An experimental study using clinical research modules. PLoS ONE 2021, 16, e0256814.

- Callister, R.R.; Love, M.S. A Comparison of Learning Outcomes in Skills-Based Courses: Online Versus Face-To-Face Formats. Decis. Sci. J. Innov. Educ. 2016, 14, 243–256.

- Fatmi, N.; Muhammad, I.; Muliana, M.; Nasrah, S. The Utilization of Moodle-Based Learning Management System (LMS) in Learning Mathematics and Physics to Students’ Cognitive Learning Outcomes. Int. J. Educ. Vocat. Stud. 2021, 3, 155–162.

- Fayaz, S.; Ameri-Golestan, A. Impact of Blended Learning, Web-Based Learning, and Traditional Classroom Learning on Vocabulary Acquisition on Iranian EFL Learners. Mod. J. Lang. Teach. Methods 2016, 6, 244–255.

- Garratt-Reed, D.; Roberts, L.D.; Heritage, B. Grades, Student Satisfaction and Retention in Online and Face-to-Face Introductory Psychology Units: A Test of Equivalency Theory. Front. Psychol. 2016, 7, 673.

- Hu, P.J.-H.; Hui, W. Examining the role of learning engagement in technology-mediated learning and its effects on learning effectiveness and satisfaction. Decis. Support Syst. 2012, 53, 782–792.

- Lazowski, R.A.; Hulleman, C.S. Motivation interventions in education: A meta-analytic review. Rev. Educ. Res. 2016, 86, 602–640.

- Hunter, J.E.; Schmidt, F.L. Methods of Meta-Analysis: Correcting Error and Bias in Research Findings; Sage: New York, NY, USA, 2004.

- Paré, G.; Trudel, M.-C.; Jaana, M.; Kitsiou, S. Synthesizing information systems knowledge: A typology of literature reviews. Inf. Manag. 2015, 52, 183–199.

- Jena, P.K. Online learning during lockdown period for COVID-19 in India. Int. J. Multidiscip. Educ. Res. 2020, 9, 82–92.

- Simanullang, N.; Rajagukguk, J. Learning Management System (LMS) Based On Moodle To Improve Students Learning Activity. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2020; p. 012067.

- Alhat, S. Virtual Classroom: A Future of Education Post-COVID-19. Shanlax Int. J. Educ. 2020, 8, 101–104.

- Al-Kindi, I.; Al-Khanjari, Z. The Smart Learning Management System. In 4th Free & Open Source Software Conference (FOSSC’2019-Oman); Sultan Qaboos University: Muscat, Oman, 2019.

- Lavoie, F.B.; Proulx, P. A Learning Management System for Flipped Courses. In Proceedings of the 2019 the 3rd International Conference on Digital Technology in Education, Yamanashi, Japan, 25–27 October 2019; pp. 73–76.

- Kraleva, R.; Sabani, M.; Kralev, V. An analysis of some learning management systems. Int. J. Adv. Sci. Eng. Inf. Technol. 2019, 9, 1190–1198.

- Reilly, C. Defining Active Learning in Online Courses Through Principles for Design. Ph.D. Thesis, University of Minnesota, Minneapolis, MN, USA, 2020.

- Chen, S.Y.; Wang, J.-H. Individual differences and personalized learning: A review and appraisal. Univers. Access Inf. Soc. 2020, 20, 833–849.

- Godwin-Jones, R. Riding the digital wilds: Learner autonomy and informal language learning. Lang. Learn. Technol. 2019, 23, 8–25.

- Liu, R.; Shi, C. Investigating Collaborative Learning Effect in Blended Learning Environment by Utilizing Moodle and WeChat. In International Conference on Blended Learning; Springer International Publishing: Cham, Switzerland, 2016; pp. 3–13.

- Tseng, H. An exploratory study of students’ perceptions of learning management system utilisation and learning community. Res. Learn. Technol. 2020, 28, 2423.

- Kumar, V.; Sharma, D. Creating collaborative and convenient learning environment using cloud-based moodle LMS: An instructor and administrator perspective. Int. J. Web-Based Learn. Teach. Technol. 2016, 11, 35–50.

- Abed, E.K. Electronic learning and its benefits in education. EURASIA J. Math. Sci. Technol. Educ. 2019, 15, em1672.

- Alhalafawy, W.S.; Najmi, A.H.; Zaki, M.Z.T.; Alharthi, M.A. Design an Adaptive Mobile Scaffolding System According to Students’ Cognitive Style Simplicity vs Complexity for Enhancing Digital Well-Being. Int. J. Interact. Mob. Technol. 2021, 15, 108–127.

- Alhalafawy, W.S.; Zaki, M.Z. How has gamification within digital platforms affected self-regulated learning skills during the COVID-19 pandemic? Mixed-methods research. Int. J. Emerg. Technol. Learn. 2022, 17, 123–151.

- Wu, D.; Yu, L.; Yang, H.H.; Zhu, S.; Tsai, C.-C. Parents’ profiles concerning ICT proficiency and their relation to adolescents’ information literacy: A latent profile analysis approach. Br. J. Educ. Technol. 2020, 51, 2268–2285.

- Ouadoud, M.; Nejjari, A.; Chkouri, M.Y.; El-Kadiri, K.E. Learning management system and the underlying learning theories. In Proceedings of the 2nd Mediterranean Symposium on Smart City Applications, Tangier, Morocco, 15–27 October 2017; Springer: Cham, Switzerland, 2017; pp. 732–744.

- Lavidas, K.; Apostolou, Z.; Papadakis, S. Challenges and opportunities of mathematics in digital times: Preschool teachers’ views. Educ. Sci. 2022, 12, 459.

- Zeng, L.-H.; Hao, Y.; Tai, K.-H. Online Learning Self-Efficacy as a Mediator between the Instructional Interactions and Achievement Emotions of Rural Students in Elite Universities. Sustainability 2022, 14, 7231.

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

- Schunk, D.H. Learning Theories an Educational Perspective, 6th ed.; Pearson: London, UK, 2012.

- Alzahrani, F.K.J.; Alhalafawy, W.S. Benefits And Challenges Of Using Gamification Across Distance Learning Platforms At Higher Education: A Systematic Review Of Research Studies Published During The COVID-19 Pandemic. J. Posit. Sch. Psychol. 2022, 6, 1948–1977. [Google Scholar]

- Harasim, L. Learning Theory and Online Technologies; Routledge: London, UK, 2017. [Google Scholar]

- Goldie, J.G.S. Connectivism: A knowledge learning theory for the digital age? Med. Teach. 2016, 38, 1064–1069. [Google Scholar] [CrossRef]

- Kumar, V.; Sharma, D. E-learning theories, components, and cloud computing-based learning platforms. Int. J. Web-Based Learn. Teach. Technol. (IJWLTT) 2021, 16, 1–16. [Google Scholar] [CrossRef]

- Alismaiel, O.A.; Cifuentes-Faura, J.; Al-Rahmi, W.M. Online Learning, Mobile Learning, and Social Media Technologies: An Empirical Study on Constructivism Theory during the COVID-19 Pandemic. Sustainability 2022, 14, 11134.

- Singh, A.; Singh, H.P.; Alam, F.; Agrawal, V. Role of Education, Training, and E-Learning in Sustainable Employment Generation and Social Empowerment in Saudi Arabia. Sustainability 2022, 14, 8822.

- Amineh, R.J.; Asl, H.D. Review of constructivism and social constructivism. J. Soc. Sci. Lit. Lang. 2015, 1, 9–16.

- Hyslop-Margison, E.J.; Strobel, J. Constructivism and education: Misunderstandings and pedagogical implications. Teach. Educ. 2007, 43, 72–86.

- Chai, J.; Fan, K.-K. Mobile inverted constructivism: Education of interaction technology in social media. Eurasia J. Math. Sci. Technol. Educ. 2016, 12, 1425–1442.

- Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1980.

- Gopinathan, S.; Kaur, A.H.; Veeraya, S.; Raman, M. The Role of Digital Collaboration in Student Engagement towards Enhancing Student Participation during COVID-19. Sustainability 2022, 14, 6844.

- Liu, C.H.; Matthews, R. Vygotsky’s Philosophy: Constructivism and Its Criticisms Examined. Int. Educ. J. 2005, 6, 386–399.

- Hussin, W.N.T.W.; Harun, J.; Shukor, N.A. Online Tools for Collaborative Learning to Enhance Students Interaction. In Proceedings of the 2019 7th International Conference on Information and Communication Technology (ICoICT 2019), Kuala Lumpur, Malaysia, 24–26 July 2019; pp. 1–5.

- Chetty, D. Connectivism: Probing Prospects for a Technology-Centered Pedagogical Transition in Religious Studies. Alternation 2013, 10, 172–199.

- Siemens, G. Connectivism: A learning theory for the digital age. Ekim 2004, 6, 2011.

- Alanzi, N.S.; Alhalafawy, W.S. Investigation the Requirements for Implementing Digital Platforms during Emergencies from the Point of View of Faculty Members: Qualitative Research. J. Posit. Sch. Psychol. 2022, 6, 4910–4920.

- Duke, B.; Harper, G.; Johnston, M. Connectivism as a digital age learning theory. Int. HETL Rev. 2013, 2013, 4–13.

- Glass, G.V.; McGaw, B.; Smith, M.L. Meta-Analysis in Social Research; Sage Publications, Incorporated: Washington, DC, USA, 1981.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

- Borenstein, M.; Hedges, L.V.; Higgins, J.P.; Rothstein, H.R. A basic introduction to fixed-effect and random-effects models for meta-analysis. Res. Synth. Methods 2010, 1, 97–111.

- Cohen, J. A power primer. Psychol. Bull. 1992, 112, 155.

- Rosenthal, R. The file drawer problem and tolerance for null results. Psychol. Bull. 1979, 86, 638.

- Orwin, R.G. A fail-safe N for effect size in meta-analysis. J. Educ. Stat. 1983, 8, 157–159.

- Lipsey, M.W.; Wilson, D.B. Practical Meta-Analysis; SAGE Publications, Inc.: Washington, DC, USA, 2001.

- Kosareva, L.; Demidov, L.; Ikonnikova, I.; Shalamova, O. Ispring platform for learning Russian as a foreign language. Interact. Learn. Environ. 2021, 1–12.

- Mujiono, M.; Herawati, S. The Effectiveness of E-Learning-Based Sociolinguistic Instruction on EFL University Students’ Sociolinguistic Competence. Int. J. Instr. 2021, 14, 627–642.

- Ghadami, A.; Abarghouie, M.H.G.; Omid, A. Effects of virtual and lecture-based instruction on learning, content retention, and satisfaction from these instruction methods among surgical technology students: A comparative study. J. Educ. Health Promot. 2020, 9, 296.

- Alsadoon, E. The impact of an adaptive e-course on students’ achievements based on the students’ prior knowledge. Educ. Inf. Technol. 2020, 25, 3541–3551.

- Alipour, P. A Comparative Study of Online Vs. Blended Learning on Vocabulary Development Among Intermediate EFL Learners. Cogent Educ. 2020, 7.

- Moradimokhles, H.; Hwang, G.-J. The effect of online vs. blended learning in developing English language skills by nursing student: An experimental study. Interact. Learn. Environ. 2020, 30, 1653–1662.

- Hashemian, M. E-Learning as a Means to Enhance L2 Learners’ Lexical Knowledge and Translation Performance. Appl. Linguist. Res. J. 2019.

- Karabatak, S.; Polat, H. The effects of the flipped classroom model designed according to the ARCS motivation strategies on the students’ motivation and academic achievement levels. Educ. Inf. Technol. 2019, 25, 1475–1495.

- Herrera, L.M.M.; Valenzuela, J.C.M. What Kind of Teacher Achieves Student Engagement in a Synchronous Online Model? In Proceedings of the 2019 IEEE Global Engineering Education Conference (EDUCON), Dubai, United Arab Emirates, 8–11 April 2019; pp. 227–231.

- Anggrawan, A.; Yassi, A.H.; Satria, C.; Arafah, B.; Makka, H.M. Comparison of Online Learning Versus Face to Face Learning in English Grammar Learning. In Proceedings of the 2019 5th International Conference on Computing Engineering and Design (ICCED), 11–13 April 2019; pp. 1–4.

- Habibzadeh, H.; Rahmani, A.; Rahimi, B.; Rezai, S.A.; Aghakhani, N.; Hosseinzadegan, F. Comparative study of virtual and traditional teaching methods on the interpretation of cardiac dysrhythmia in nursing students. J. Educ. Health Promot. 2019, 8, 202.

- Golubovskaya, E.A.; Tikhonova, E.V.; Mekeko, N.M. Measuring Learning Outcome and Students’ Satisfaction in ELT: E-Learning against Conventional Learning. In Proceedings of the 2019 5th International Conference on Education and Training Technologies, Seoul, Republic of Korea, 27–29 May 2019; pp. 34–38.

- Beinicke, A.; Bipp, T. Evaluating Training Outcomes in Corporate E-Learning and Classroom Training. Vocat. Learn. 2018, 11, 501–528.

- Tezer, M.; Çimşir, B.T. The impact of using mobile-supported learning management systems in teaching web design on the academic success of students and their opinions on the course. Interact. Learn. Environ. 2017, 26, 402–410.

- Souza, C.L.E.; Mattos, L.B.; Stein, A.T.; Rosário, P.; Magalhães, C.R. Face-to-Face and Distance Education Modalities in the Training of Healthcare Professionals: A Quasi-Experimental Study. Front. Psychol. 2018, 9.

- Egro, F.M.; Tayler-Grint, L.C.; Vangala, S.K.; Nwaiwu, C.A. Multicenter Randomized Controlled Trial to Assess an e-Learning on Acute Burns Management. J. Burn. Care Res. 2017, 1.

- Terry, V.R.; Terry, P.C.; Moloney, C.; Bowtell, L. Face-to-face instruction combined with online resources improves retention of clinical skills among undergraduate nursing students. Nurse Educ. Today 2018, 61, 15–19.

- Penichet-Tomas, A.; Jimenez-Olmedo, J.M.; Pueo, B.; Carbonell-Martinez, J.A. Learning management system in sport sciences degree. In Proceedings of the 10th International Conference on Education and New Learning Technologies (EDULEARN), Palma, Spain, 2–4 July 2018; pp. 6330–6334.

- Sezer, B.; Yilmaz, F.G.K.; Yılmaz, R. Comparison of Online and Traditional Face-to-Face In-Service Training Practices: An Experimental Study. Cukurova Univ. Fac. Educ. J. 2017, 46, 264–288.

- Su, C. The Effects of Students’ Learning Anxiety and Motivation on the Learning Achievement in the Activity Theory Based Gamified Learning Environment. Eurasia J. Math. Sci. Technol. Educ. 2016, 13.

- Kwon, E.H.; Block, M.E. Implementing the adapted physical education E-learning program into physical education teacher education program. Res. Dev. Disabil. 2017, 69, 18–29.

- Lantzy, T. Health literacy education: The impact of synchronous instruction. Ref. Serv. Rev. 2016, 44, 100–121.

- Sun, M. Application of Multimodal Learning in Online English Teaching. Int. J. Emerg. Technol. Learn. (iJET) 2015, 10, 54–58.

- Higgins, J.P.; Thompson, S.G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 2002, 21, 1539–1558.

- Sterne, J.A.; Egger, M. Funnel plots for detecting bias in meta-analysis: Guidelines on choice of axis. J. Clin. Epidemiol. 2001, 54, 1046–1055.

- Duval, S.; Tweedie, R. Trim and fill: A simple funnel-plot–based method of testing and adjusting for publication bias in meta-analysis. Biometrics 2000, 56, 455–463.

- Rosenthal, R. Writing meta-analytic reviews. Psychol. Bull. 1995, 118, 183.

- El-Sabagh, H.A. Adaptive e-learning environment based on learning styles and its impact on development students’ engagement. Int. J. Educ. Technol. High. Educ. 2021, 18, 53.

- Fatahi, S. An experimental study on an adaptive e-learning environment based on learner’s personality and emotion. Educ. Inf. Technol. 2019, 24, 2225–2241.

- Karagiannis, I.; Satratzemi, M. An adaptive mechanism for Moodle based on automatic detection of learning styles. Educ. Inf. Technol. 2018, 23, 1331–1357.

- Perišić, J.; Milovanović, M.; Kazi, Z. A semantic approach to enhance moodle with personalization. Comput. Appl. Eng. Educ. 2018, 26, 884–901.

- Wu, C.H.; Chen, Y.S.; Chen, T.C. An Adaptive e-Learning System for Enhancing Learning Performance: Based on Dynamic Scaffolding Theory. Eurasia J. Math. Sci. Technol. Educ. 2018, 14, 903–913.

- Chen, C.C.; Huang, C.C.; Gribbins, M.; Swan, K. Gamify Online Courses With Tools Built Into Your Learning Management System (LMS) to Enhance Self-Determined and Active Learning. Online Learn. 2018, 22, 41–54.

- Garcia-Cabot, A.; Garcia-Lopez, E.; Caro-Alvaro, S.; Gutierrez-Martinez, J.-M.; de-Marcos, L. Measuring the effects on learning performance and engagement with a gamified social platform in an MSc program. Comput. Appl. Eng. Educ. 2020, 28, 207–223.

- Amin, S.; Sumarmi; Bachri, S.; Susilo, S.; Bashith, A. The Effect of Problem-Based Hybrid Learning (PBHL) Models on Spatial Thinking Ability and Geography Learning Outcomes. Int. J. Emerg. Technol. Learn. 2020, 15, 83–94.

- Dong, L.N.; Yang, L.; Li, Z.J.; Wang, X. Application of PBL Mode in a Resident-Focused Perioperative Transesophageal Echocardiography Training Program: A Perspective of MOOC Environment. Adv. Med. Educ. Pract. 2020, 11, 1023–1028.

- Chen, C.-M.; Li, M.-C.; Huang, Y.-L. Developing an instant semantic analysis and feedback system to facilitate learning performance of online discussion. Interact. Learn. Environ. 2020, 1–19.

- Grigoryan, A. Feedback 2.0 in online writing instruction: Combining audio-visual and text-based commentary to enhance student revision and writing competency. J. Comput. High. Educ. 2017, 29, 451–476.

- Zheng, L.; Niu, J.; Zhong, L. Effects of a learning analytics-based real-time feedback approach on knowledge elaboration, knowledge convergence, interactive relationships and group performance in CSCL. Br. J. Educ. Technol. 2022, 53, 130–149.

- Zheng, L.; Zhong, L.; Niu, J. Effects of personalised feedback approach on knowledge building, emotions, co-regulated behavioural patterns and cognitive load in online collaborative learning. Assess. Eval. High. Educ. 2022, 47, 109–125.

- Alharbi, S.M.; Elfeky, A.I.; Ahmed, E.S. The Effect Of E-Collaborative Learning Environment On Development Of Critical Thinking And Higher Order Thinking Skills. J. Posit. Sch. Psychol. 2022, 6, 6848–6854.

- Elfeky, A.I.M.; Alharbi, S.M.; Ahmed, E.S.A.H. The Effect Of Project-Based Learning In Enhancing Creativity And Skills Of Arts Among Kindergarten Student Teachers. J. Posit. Sch. Psychol. 2022, 6, 2182–2191.

- Yu, H.-H.; Hu, R.-P.; Chen, M.-L. Global Pandemic Prevention Continual Learning—Taking Online Learning as an Example: The Relevance of Self-Regulation, Mind-Unwandered, and Online Learning Ineffectiveness. Sustainability 2022, 14, 6571.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).