1. Introduction

Food plays a vital role in people’s livelihoods and is a fundamental component of sustainable economic and social development [1]. As global climate change intensifies, extreme weather events are becoming more frequent and more severe [2,3]. These include droughts, floods, typhoons, and other natural disasters, which pose significant challenges to agricultural production. Such events not only directly affect crop growth and yields but also harm the global economy and food security, driving up global agricultural prices [4]. A grain reserve system is an effective mechanism for dealing with food crises and an important means of adjusting grain prices [5]. Food security is a crucial aspect of a nation’s economy and people’s livelihood [6]. To ensure food security, China has established a robust grain reserve system [7]. To accurately assess the actual state of grain stocks and provide a reliable basis for national grain control, warehouse clearance and inventory activities are conducted annually. In addition, a national grain inventory is carried out approximately every 10 years, with a particular focus on inspecting the quantity of grain [8]. Weighing and measurement-based calculation are the primary methods used to determine the quantity of grain in stock. However, weighing is employed only on specific occasions during warehouse clearance because of its heavy workload, long duration, and high cost [9]. Measurement-based calculation, on the other hand, derives the quantity of grain from the volume and average density of the pile, and is commonly used to determine the quantity of grain during warehouse clearance. Fast and accurate measurement of the grain pile volume is therefore crucial for accurately determining the mass of grain in the pile [10,11].

Currently, the two main methods for measuring grain inventory are the weighing method and the measurement calculation method. The weighing method is complex and time-consuming, making it suitable only for periodic inspections of stored grain and not for daily monitoring of grain quantity. The measurement calculation method, on the other hand, derives the quantity of grain from the volume of the grain pile and its average density. It is suitable for inspecting bulk grain piles with regular shapes or grain stored in non-quantitative packaging, and it is the most commonly used method for estimating the quantity of grain during warehouse clearance checks. The steps to determine the quantity of grain stock through measurement calculation are as follows: (1) Measure the volume of the grain pile. (2) Take samples to measure the grain density (grain weight per unit volume). (3) Calculate the average density of the grain pile by multiplying the grain density by the correction coefficient. (4) Determine the quantity of grain from the volume and the average density of the grain pile (the measurement calculation number). (5) Estimate the amount of grain lost during storage. (6) Calculate the original weight of the grain in storage from the measurement calculation number and the grain loss (the check calculation number). (7) Compare the check calculation number with the inventory record in the warehouse custody account to determine the error rate and identify whether the grain inventory is abnormal. An error rate exceeding ±3% is considered abnormal.
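The seven steps above can be sketched as a short calculation. This is a minimal illustration, not the procedure prescribed by any standard: the function name, the parameter names, and the choice to express the error rate relative to the recorded mass are assumptions made here for the example.

```python
def check_grain_inventory(volume_m3, sample_density_kg_m3, correction_coeff,
                          storage_loss_kg, recorded_mass_kg, tolerance=0.03):
    """Return (check mass in kg, error rate, abnormal flag).

    All names are hypothetical; the error rate is assumed to be taken
    relative to the recorded mass in the custody account.
    """
    # Step (3): average pile density = correction coefficient x sample density
    avg_density = correction_coeff * sample_density_kg_m3
    # Step (4): measurement calculation number = volume x average density
    measured_mass = volume_m3 * avg_density
    # Steps (5)-(6): add the estimated storage loss to recover the original mass
    check_mass = measured_mass + storage_loss_kg
    # Step (7): compare with the custody record; flag if outside +/-3 %
    error_rate = (check_mass - recorded_mass_kg) / recorded_mass_kg
    return check_mass, error_rate, abs(error_rate) > tolerance
```

For example, a 1000 m³ pile with a sample density of 750 kg/m³, a correction coefficient of 1.02, and 5000 kg of estimated loss gives a check mass of about 770,000 kg, which would be compared against the custody record.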

Grain pile volume measurement is a crucial aspect of quantifying grain in a grain depot. Currently, the main methods for measuring the volume of grain piles are laser scanning [12,13] and image recognition [14,15]. Laser scanning measures the volume by laser ranging from a high-precision turntable. However, this method requires expensive laser scanners with strict mechanical accuracy requirements, which limits its feasibility in the grain industry. In addition, existing laser scanners are primarily designed for measuring object topography at short distances and small inclinations, making them ineffective for surveys at long distances and large inclinations. Among vision-based approaches, monocular and binocular vision measurements are passive methods [16,17,18], while structured light vision measurements are active methods. The image recognition method uses monocular or multi-camera systems to capture images of the grain pile. By processing these images, the 3D data of the grain pile can be obtained, enabling its volume to be measured.

Although this method still needs improvement in areas such as image matching and 3D reconstruction, advances in image acquisition hardware and ongoing research in image processing algorithms are expected to make it the mainstream approach for volume measurement. Structured light measurements [19,20,21] scan the object with structured light and use image reconstruction for 3D volume measurement. Depending on the type of structured light used, they can be categorized into line structured light measurements [22,23] and planar structured light measurements [24,25]. Line structured light measurement is sensitive to lighting conditions and requires the measured object to be moved during measurement. As a result, it has a long measurement time and is suitable only for small objects, not large ones [26]. Planar structured light measurement, on the other hand, uses a projector to project patterns, which captures more depth information about the object surface in a single projection without the need for multiple scans [27]. This enables planar structured light to quickly obtain the full 3D structure of the measured object.

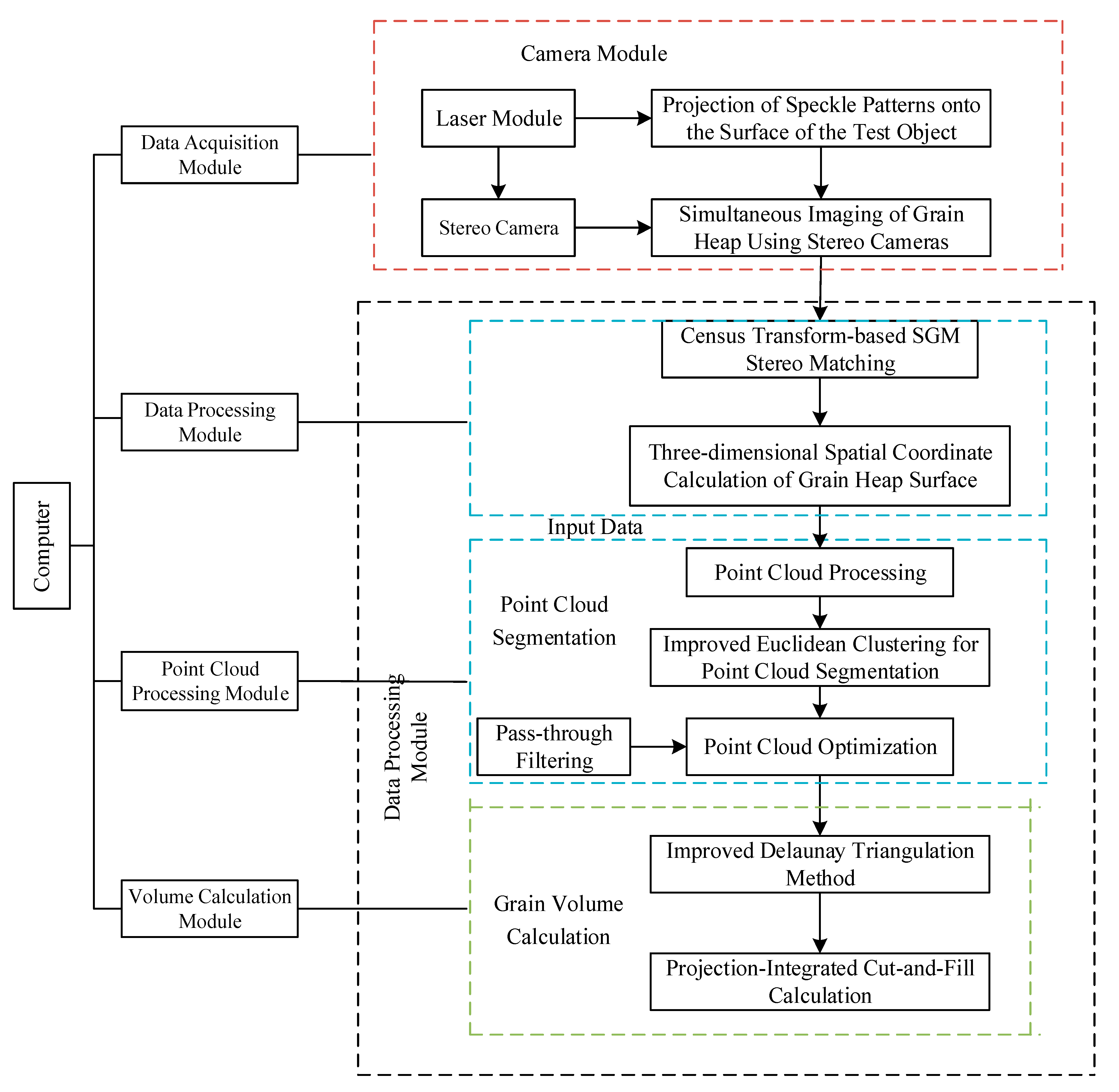

The main contributions of this paper are as follows: (1) A speckle-based binocular structured light reconstruction method is adopted to address the adverse effects of grain surface texture similarity and uneven illumination on image matching. (2) To achieve effective segmentation of grain pile point clouds, an improved Euclidean clustering segmentation method is fused with the pass-through filter. (3) A three-dimensional reconstruction system for the grain pile surface is established, and the accuracy of the reconstruction is verified through experiments. (4) An improved Delaunay method is used to triangulate the grain pile point cloud, and a volume calculation method based on mesh cutting and patching is proposed to achieve accurate measurement of grain pile volume.

The method used in this study to measure the volume of grain piles is non-contact, so the quantity of grain can be tracked by continuously monitoring the volume of the pile. The same vision system can also provide real-time video monitoring of the grain depot without additional hardware. Implementing this technology can greatly simplify the daily inspection and management of grain depots while reducing the cost of staff inspections.

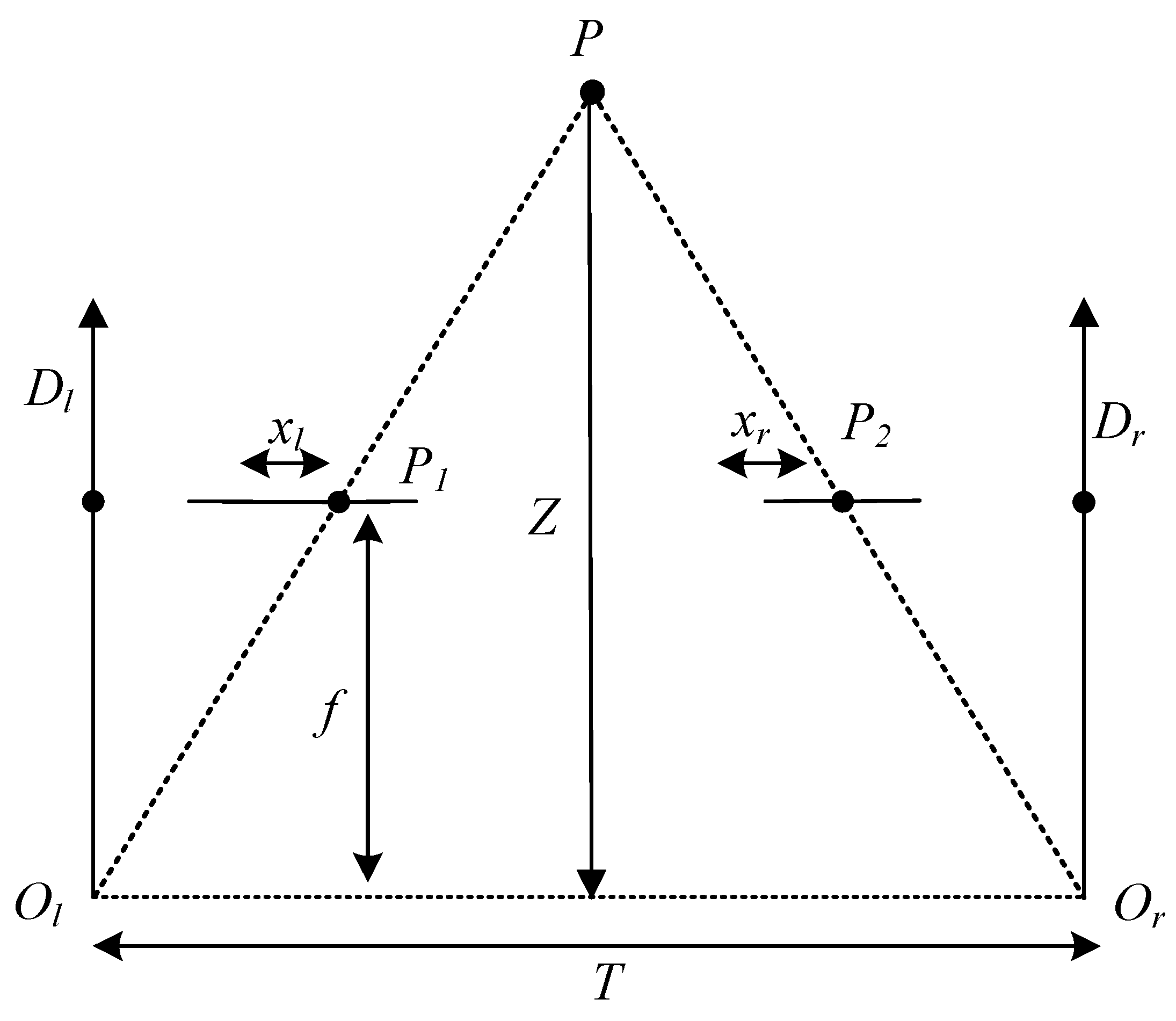

4. Stereo-Matching Algorithm

In this paper, we use speckle structured light in combination with the semi-global stereo matching (SGM) algorithm to enhance texture information and compute disparity values. SGM is a widely used technique that combines the advantages of global matching and local block matching, improving matching accuracy while maintaining global consistency. Traditional matching cost computation relies on mutual information [33], which requires initial disparity values and hierarchical iterations to obtain more precise matching costs. This approach involves complex probability distribution calculations, which makes cost computation inefficient. In addition, mutual information is sensitive to weak textures and significant lighting variations.

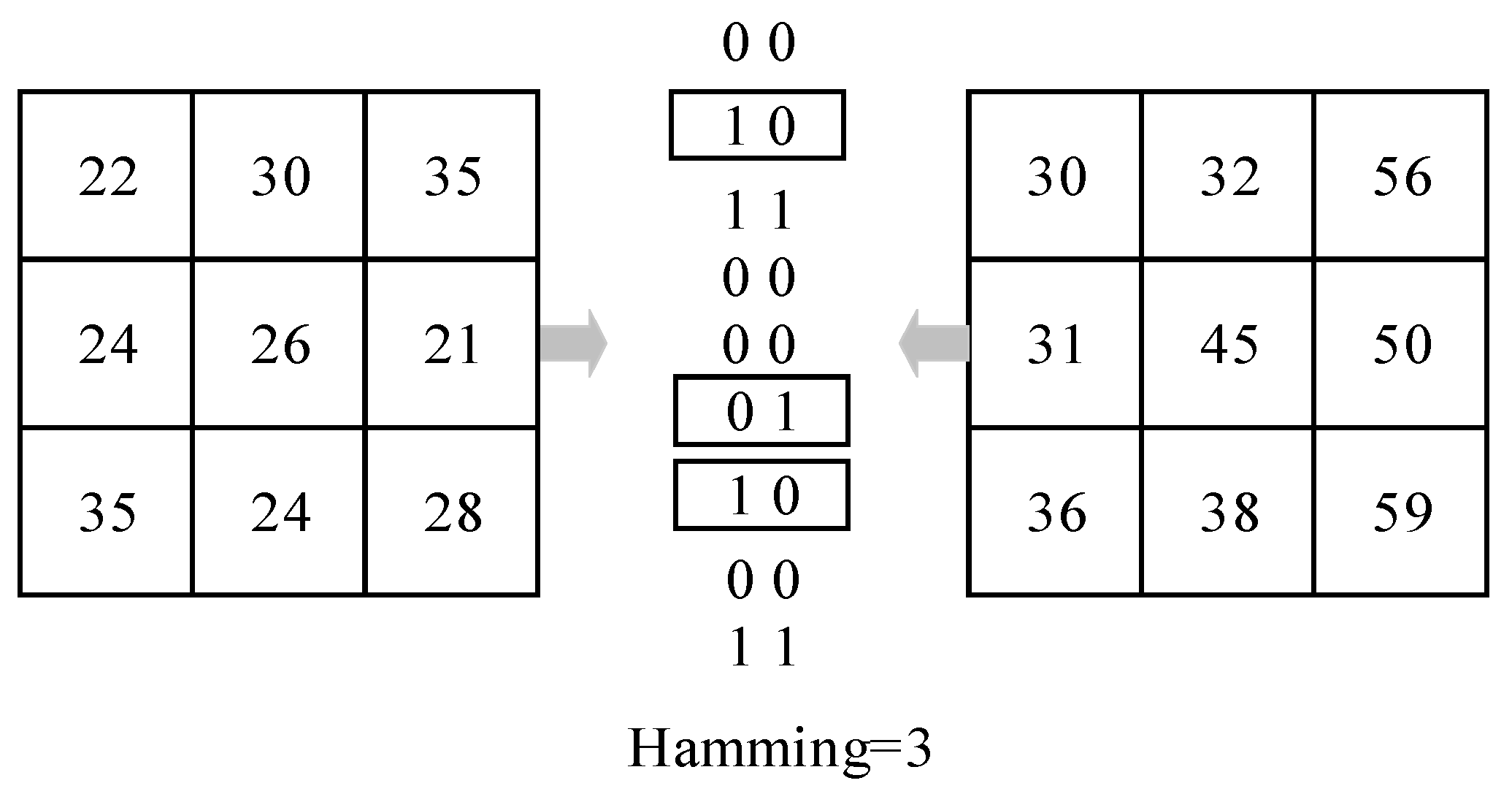

The census transform [34] generates a code by comparing the relative relationships between a pixel and its surrounding neighboring pixels. This property allows the census transform to handle lighting variations and noise in images more effectively. Moreover, the census transform operates within a local window, which enhances its parallelism.

To compute the matching cost, we use the census transform to calculate the initial cost. The census transformation process is depicted in Figure 7. Within a window of size $n \times m$, the intensity of the central pixel serves as the reference against which every other pixel in the window is compared. The result of the census transform is a bit string, and the census transform value is given by:

$$C_s(u,v) = \bigotimes_{i=-n'}^{n'} \bigotimes_{j=-m'}^{m'} \xi\big(I(u,v),\, I(u+i,v+j)\big)$$

where $\bigotimes$ represents bitwise concatenation and $n'$ and $m'$ are the largest integers not exceeding half of $n$ and $m$, respectively. The $\xi$ operation is defined as follows:

$$\xi(x,y) = \begin{cases} 0, & x \le y \\ 1, & x > y \end{cases}$$

The matching cost for the census transform is computed as the Hamming distance between the output bit strings:

$$C(u,v,d) = \mathrm{Hamming}\big(C_{sl}(u,v),\, C_{sr}(u-d,v)\big)$$

where $C_{sl}$ and $C_{sr}$ represent the bit strings in the left and right images.
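The census cost can be illustrated with a small pure-Python sketch. It assumes a grayscale image stored as a nested list, sets a bit to 1 when the central pixel is brighter than its neighbor (one common convention), and leaves border pixels at code 0; the function names are hypothetical and the code is not taken from the authors’ implementation.

```python
def census_transform(img, n=3, m=3):
    """Census codes for a 2D list of intensities, using an n x m window."""
    h, w = len(img), len(img[0])
    hn, hm = n // 2, m // 2            # half-window sizes (rows, columns)
    codes = [[0] * w for _ in range(h)]
    for v in range(hn, h - hn):
        for u in range(hm, w - hm):
            code = 0
            for i in range(-hn, hn + 1):
                for j in range(-hm, hm + 1):
                    if i == 0 and j == 0:
                        continue       # skip the central pixel itself
                    bit = 1 if img[v][u] > img[v + i][u + j] else 0
                    code = (code << 1) | bit   # append the bit to the string
            codes[v][u] = code
    return codes


def hamming(a, b):
    """Number of differing bits between two census codes."""
    return bin(a ^ b).count("1")
```

Because only the ordering of intensities matters, adding a constant brightness offset to the whole window leaves the code unchanged, which is the robustness to illumination described above.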

The initial cost computation does not take the disparity similarity constraint into account, which can produce a significant number of noise points. To overcome this issue, the initial cost is refined through cost aggregation, and the matching cost is constrained by cost propagation. To implement the aggregation and the constraint, we designed a global energy function, $E(D)$, in this study. Cost aggregation improves the initial cost by taking into account the disparity relationships in the neighborhood of each matching point, which provides an optimization strategy for the global energy function. When the disparity of each pixel reaches its optimal value, the energy function attains its minimum.

The energy function, $E(D)$, is defined as follows:

$$E(D) = \sum_{p} \Big( C(p, D_p) + \sum_{q \in N_p} P_1\, T\big[|D_p - D_q| = 1\big] + \sum_{q \in N_p} P_2\, T\big[|D_p - D_q| > 1\big] \Big)$$

where $D_p$ represents the disparity of pixel point $p$, $P_1$ and $P_2$ are penalty parameters, $C(p, D_p)$ is the initial cost at pixel point $p$ for disparity $D_p$, $N_p$ represents the neighborhood of pixel point $p$, and $T[\cdot]$ equals 1 when its argument is true and 0 otherwise. The first term, $\sum_p C(p, D_p)$, is the summation of matching costs for all pixels in the disparity map, $D$. The second term represents the constant penalty value, $P_1$, added when the disparity difference between pixel $p$ and its neighboring pixel, $q$, is 1. Similarly, the third term adds a larger penalty value, $P_2$, for larger disparity differences. The global energy function thus accumulates the matching costs for all pixels in the disparity map and introduces penalty values to constrain the matching results. Small penalty values can adapt to slanted and curved surfaces, while larger penalty values are suitable for depth discontinuity regions. Depth discontinuities typically occur at locations with significant grayscale changes, so $P_2$ is determined adaptively based on the grayscale gradient:

$$P_2 = \frac{P_2'}{|I_{bp} - I_{bq}|}$$

where $P_2'$ is a fixed value and $I_{bp}$ and $I_{bq}$ represent the grayscale values of the current pixel point and the surrounding pixel point along the same path as the current pixel point.

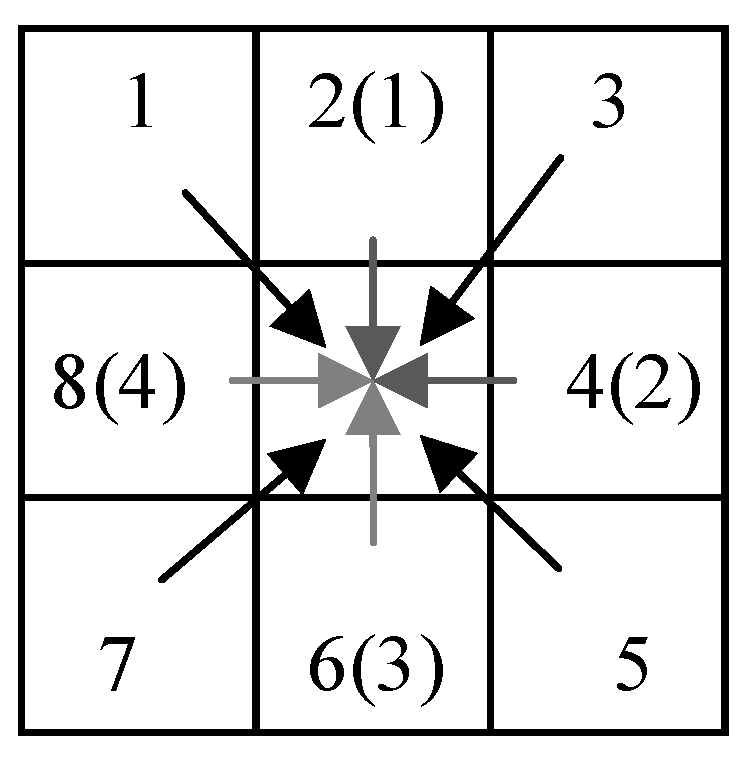

SGM aggregates matching costs from various directions to reduce the impact of errors and approximate the optimum of the energy function. The cost for pixel $p$ at disparity $d$ is recursively defined as follows:

$$L_r(p,d) = C(p,d) + \min\Big( L_r(p-r,d),\; L_r(p-r,d-1)+P_1,\; L_r(p-r,d+1)+P_1,\; \min_i L_r(p-r,i)+P_2 \Big) - \min_i L_r(p-r,i)$$

where $r$ represents a path, $p-r$ denotes the pixel along that path preceding pixel $p$, and $L_r(p,d)$ refers to the aggregated matching cost when the pixel $p$ has a disparity value of $d$ along the path $r$. The semi-global stereo matching algorithm seeks the minimum aggregated cost from various directions, and the final aggregated cost is the sum of the aggregated costs along all paths:

$$S(p,d) = \sum_r L_r(p,d)$$

The path aggregation is illustrated in Figure 8, where eight directions are aggregated for each pixel. This process yields the corresponding energy functions. Figure 8 shows two path aggregation schemes: 4-path aggregation (light-colored arrows) and 8-path aggregation (black and light-colored arrows).
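To make the recursion concrete, the following sketch aggregates costs along a single left-to-right path on one scanline, using the standard SGM update with a small penalty `p1` for a disparity change of 1 and a larger penalty `p2` for bigger jumps. The function name and the data layout (`cost_row[x][d]`) are assumptions for illustration; a full implementation would repeat this for each of the 4 or 8 directions and sum the results.

```python
def aggregate_left_to_right(cost_row, p1, p2):
    """Aggregate matching costs along one scanline, left to right.

    cost_row[x][d] is the initial cost of pixel x at disparity d.
    Returns a list of the same shape holding the path costs.
    """
    n_disp = len(cost_row[0])
    agg = [list(cost_row[0])]              # first pixel has no predecessor
    for x in range(1, len(cost_row)):
        prev = agg[-1]
        prev_min = min(prev)
        cur = []
        for d in range(n_disp):
            # same disparity, or any disparity with the large penalty
            candidates = [prev[d], prev_min + p2]
            if d > 0:
                candidates.append(prev[d - 1] + p1)   # disparity changes by 1
            if d < n_disp - 1:
                candidates.append(prev[d + 1] + p1)
            # subtracting prev_min keeps the path cost from growing unboundedly
            cur.append(cost_row[x][d] + min(candidates) - prev_min)
        agg.append(cur)
    return agg
```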

After cost aggregation, the winner-takes-all (WTA) method is employed to calculate the disparity. For each pixel, the disparity with the minimum aggregated cost is selected as the optimal disparity. The initial disparity calculation is given by:

$$D(p) = \arg\min_{d} S(p,d)$$

where $D(p)$ represents the disparity value of the pixel at position $p$ and $S(p,d)$ denotes the aggregated cost value.

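Disparity optimization aims to enhance the accuracy of the disparity map by eliminating incorrect disparities. In this study, the left–right consistency check is used, which is based on the uniqueness constraint of disparity. By comparing the disparity maps of the left and right images, disparities that do not meet the uniqueness constraint are eliminated, resulting in improved depth accuracy. The formula for the consistency check is:

$$D_p = \begin{cases} D_{bp}, & |D_{bp} - D_{mq}| \le \tau \\ D_{\mathrm{inv}}, & \text{otherwise} \end{cases}$$

where $D_{bp}$ is the disparity of pixel $p$ in the left disparity map, $D_{mq}$ is the disparity of its corresponding pixel $q$ in the right disparity map, $\tau$ is the consistency tolerance (typically one pixel), and $D_{\mathrm{inv}}$ marks an invalid disparity.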

After the disparity optimization, a more reliable disparity map is obtained, providing a solid foundation for obtaining the point cloud of the grain pile and conducting volume measurements.
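The winner-takes-all selection and the left–right consistency check can be sketched for a single scanline as follows. The function names, the one-pixel default tolerance, and the use of -1 as the invalid marker are assumptions made for this illustration.

```python
def winner_takes_all(agg_row):
    """For each pixel, pick the disparity index with the lowest aggregated cost."""
    return [min(range(len(costs)), key=costs.__getitem__) for costs in agg_row]


def left_right_check(disp_left, disp_right, tol=1, invalid=-1):
    """Invalidate left disparities that disagree with the right disparity map.

    Pixel x in the left image with disparity d corresponds to pixel x - d
    in the right image (rectified images assumed).
    """
    width = len(disp_left)
    checked = []
    for x, d in enumerate(disp_left):
        xr = x - d
        if d >= 0 and 0 <= xr < width and abs(d - disp_right[xr]) <= tol:
            checked.append(d)
        else:
            checked.append(invalid)    # occluded or mismatched pixel
    return checked
```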

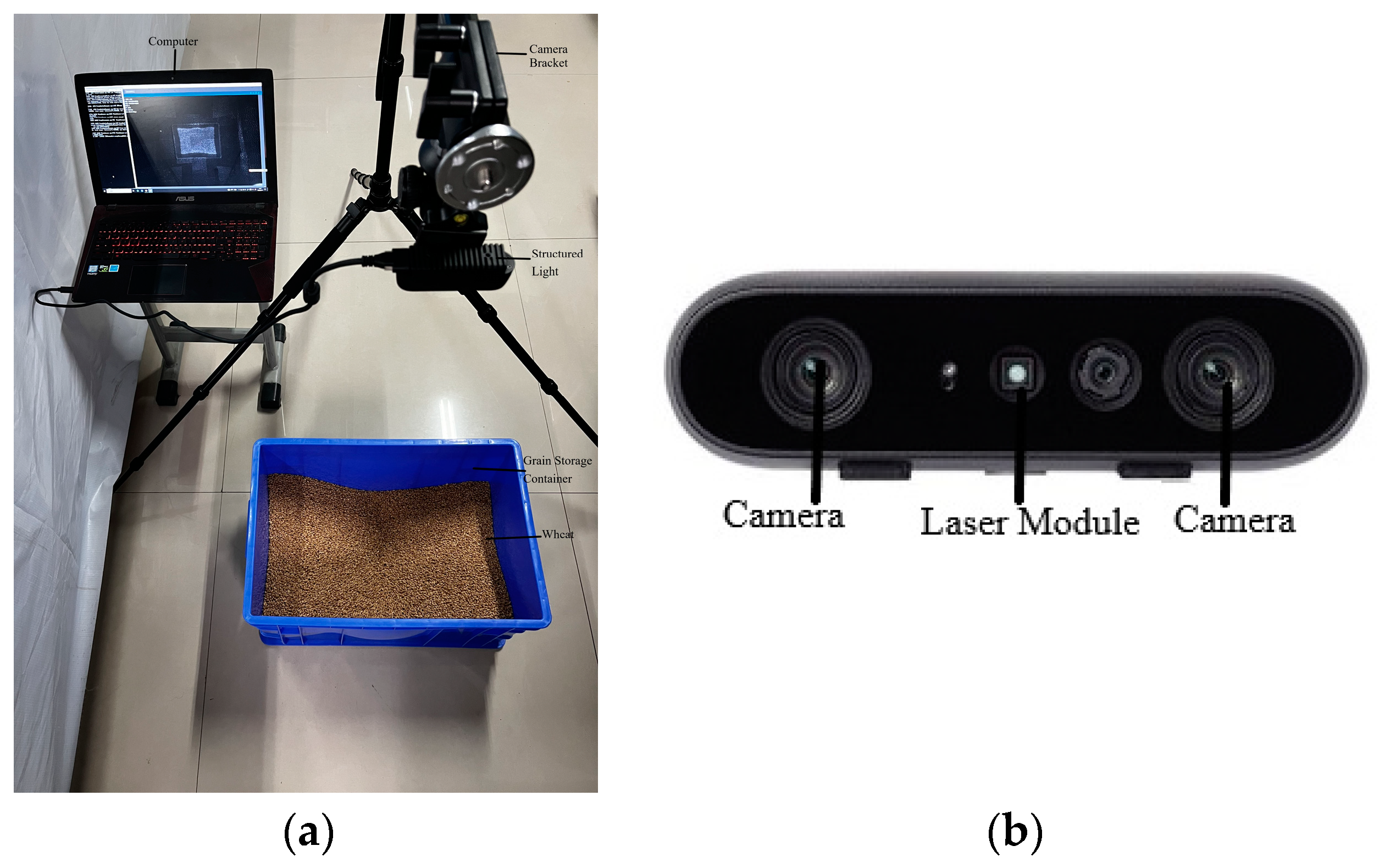

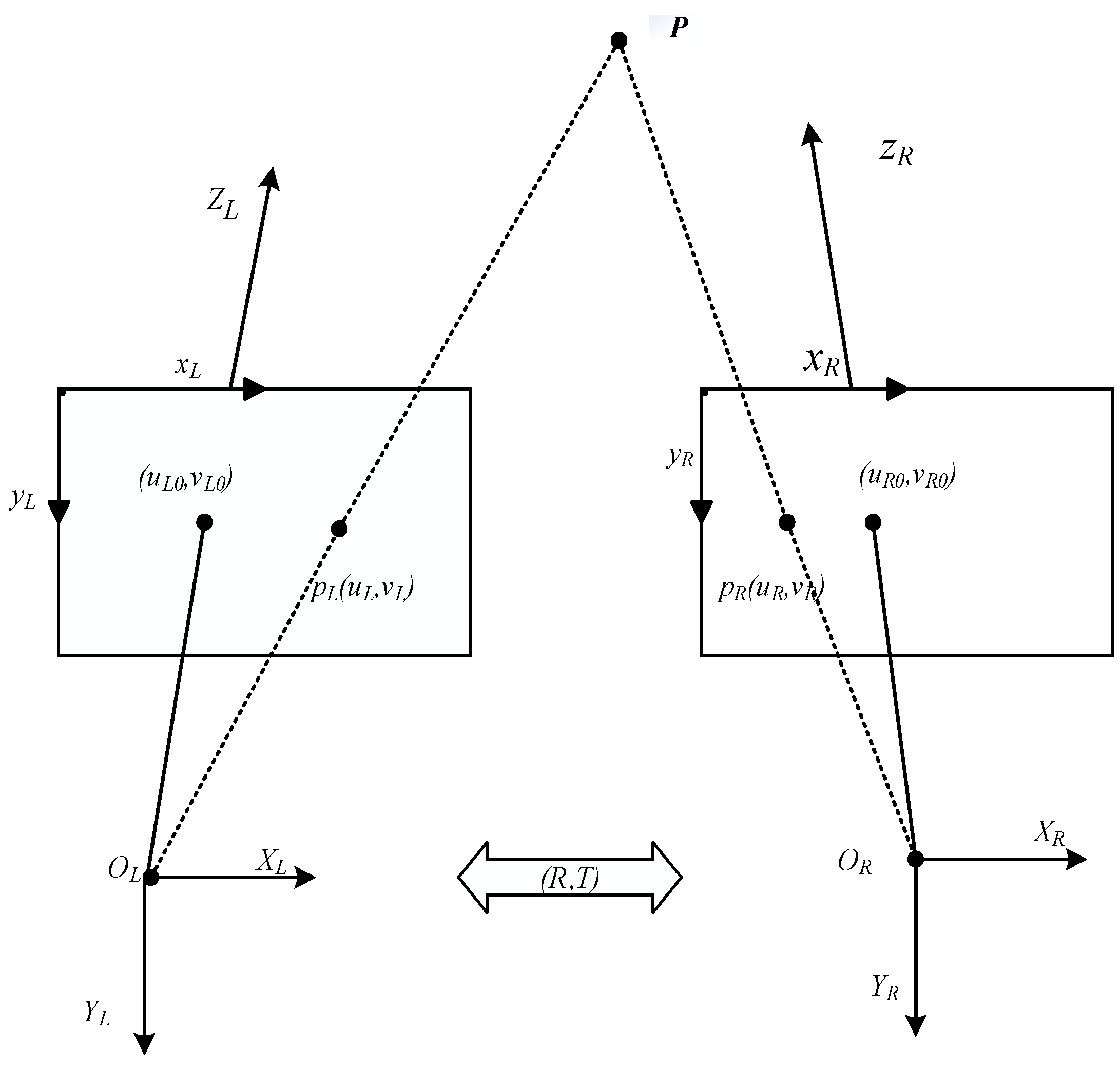

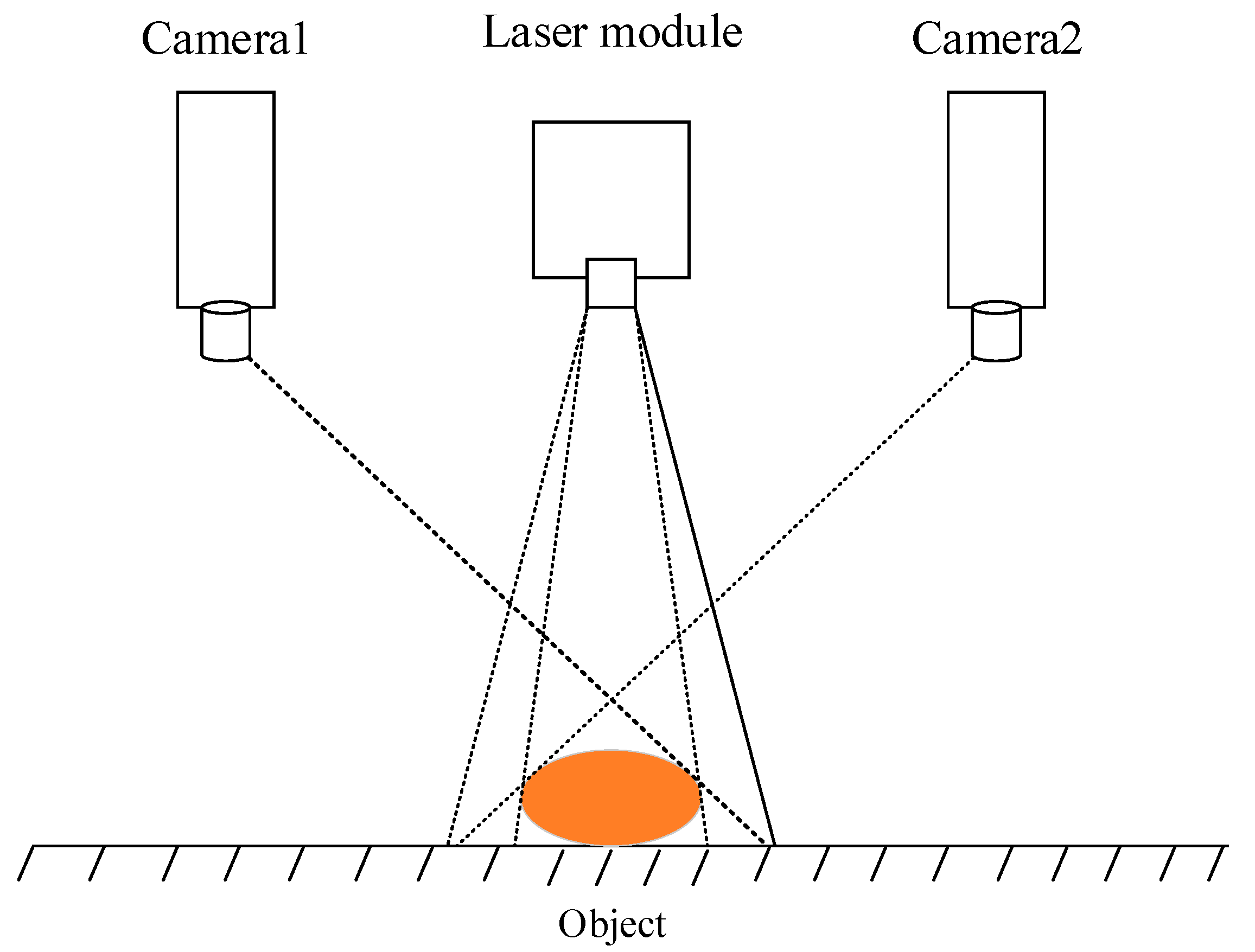

5. Data Acquisition

The hardware platform for surface 3D reconstruction of the grain pile primarily includes a display, a workstation (CPU: i5-12400F, GPU: 2060s), a binocular structured light system, and a camera mount. The binocular structured light system consists of two cameras and a laser emitter. The height of the binocular structured light can be adjusted by modifying the height of the camera mount.

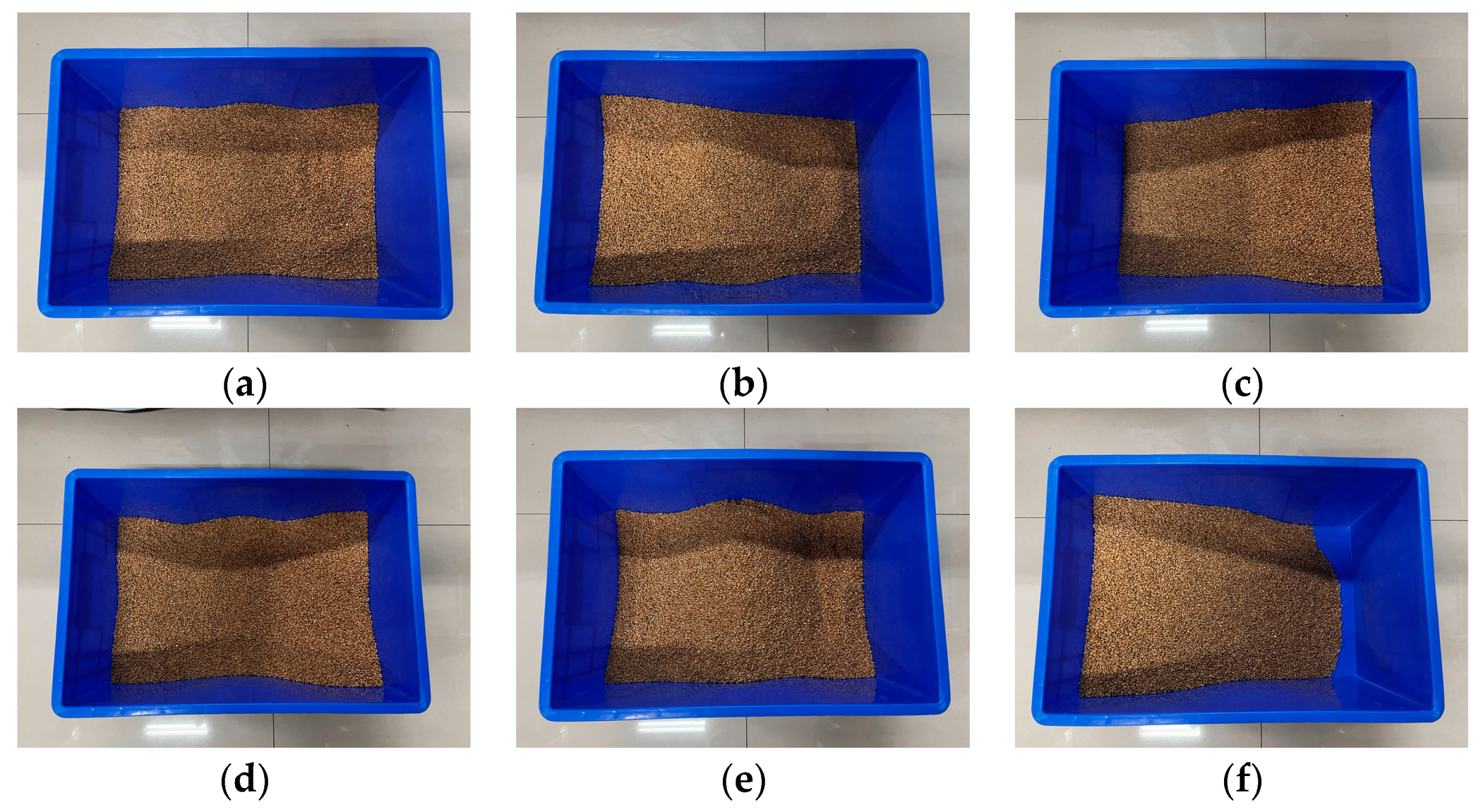

This study focuses on the Zhengmai 22 wheat variety from Kaifeng, Henan Province, China. Based on the storage characteristics, a grain storage model is constructed as shown in Figure 9. The grain storage container is a lidless rectangular box with dimensions of 520 mm × 355 mm × 285 mm. The true volume is measured to be 0.019 m³ using the drainage method. Wheat grain piles of different shapes are constructed in the storage container, as shown in Table 1. To simulate real-world grain warehouse storage conditions and to verify the stability and applicability of the measurement system, experiments were conducted on these different pile shapes to investigate the impact of pile shape on volume measurement.

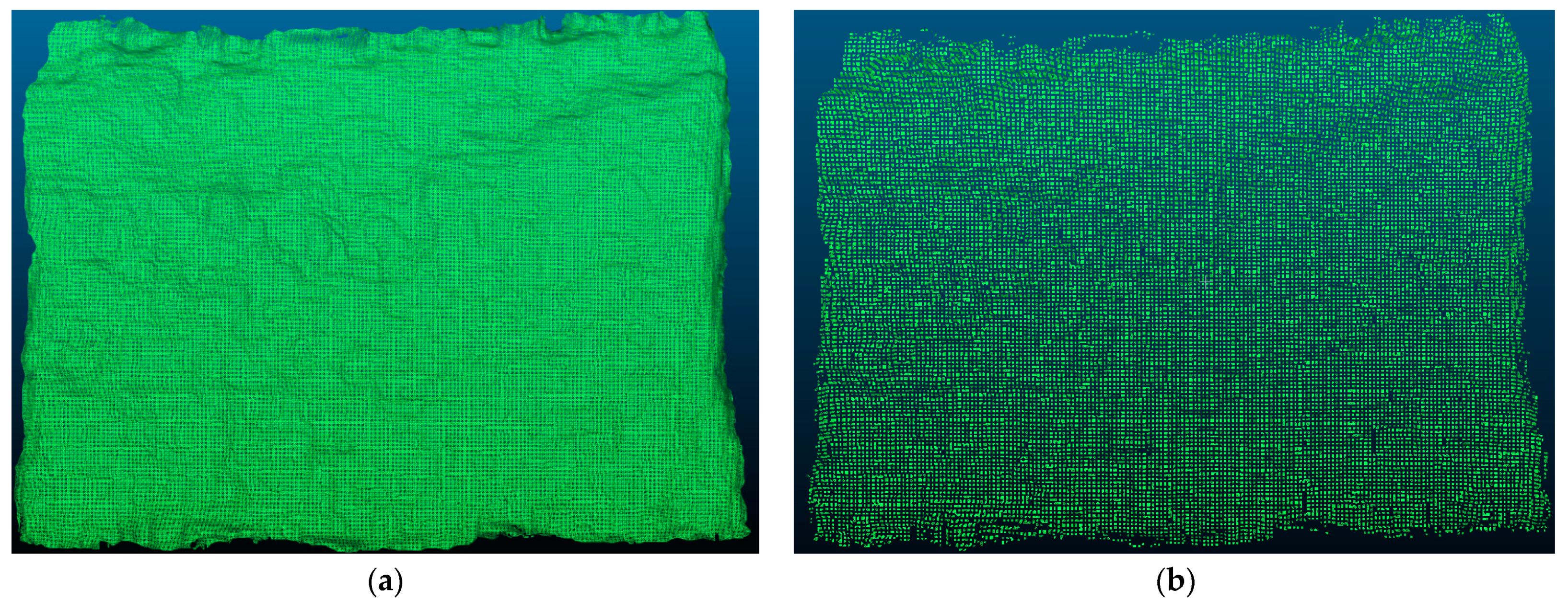

In Figure 10a, the grain pile has a flattened surface, giving a smooth and even shape. Figure 10b depicts a grain pile whose surface is tilted to the left, forming an inclined shape. Similarly, Figure 10c shows a grain pile with a right tilt, creating another inclined shape. Figure 10d displays a grain pile whose surface is raised in the middle, producing a convex shape. In Figure 10e, the middle of the grain pile is depressed, producing a concave shape. Lastly, Figure 10f presents a grain pile that does not completely cover the container.

With these different grain pile shapes, volume measurement experiments are conducted to understand the influence of shape on the measurement results, which gives the study practical value for grain storage and related fields. The grain pile model is designed around the practical application background so that it is consistent with actual grain warehouse storage conditions and is applicable across pile shapes.

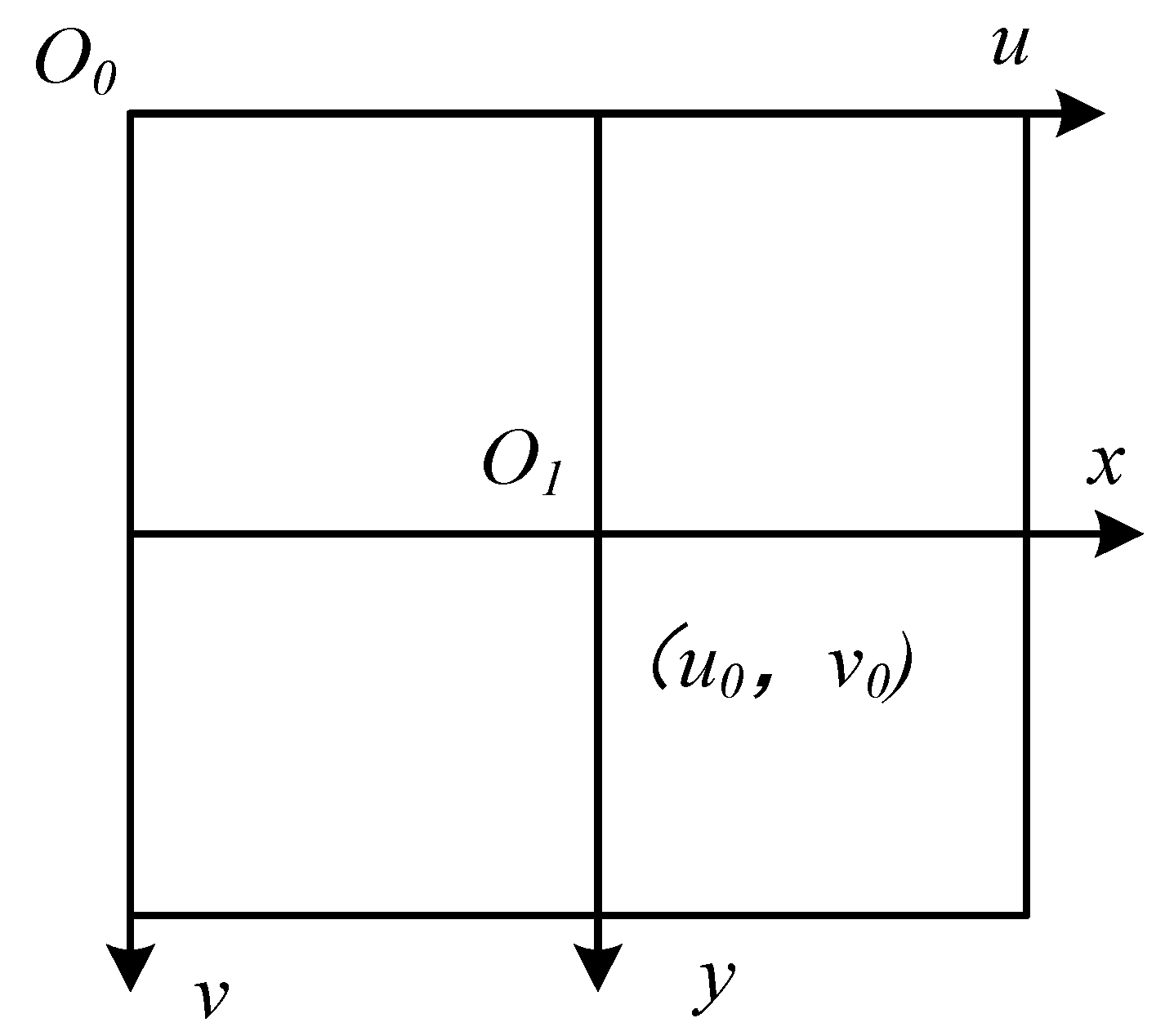

Using the binocular structured light system constructed in this study, we conducted measurements on simulated wheat grain storage and established a point cloud acquisition method for the grain pile based on stereo matching. The binocular structured light system captures images, and the disparity values of the grain pile images are extracted using a stereo matching algorithm based on minimizing an energy cost function. Using the binocular structured light mathematical model, the disparity map is converted into a depth map. With the binocular vision model and calibration parameters, the depth map is then transformed into a point cloud, achieving 3D reconstruction of the grain pile.
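The disparity-to-point-cloud conversion can be sketched with the standard rectified pinhole stereo relation, in which depth equals focal length times baseline divided by disparity. The sketch assumes rectified images, a focal length in pixels, and a principal point `(cx, cy)`; these parameter names are hypothetical, and the authors’ calibrated model may include additional terms such as distortion correction.

```python
def disparity_to_points(disparity, focal_px, baseline_m, cx, cy):
    """Convert a disparity map (2D list, pixels) into a list of (x, y, z) points.

    Assumes a rectified stereo pair with a pinhole camera model.
    Pixels with non-positive disparity are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue
            z = focal_px * baseline_m / d      # depth from disparity
            x = (u - cx) * z / focal_px        # back-project pixel column
            y = (v - cy) * z / focal_px        # back-project pixel row
            points.append((x, y, z))
    return points
```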

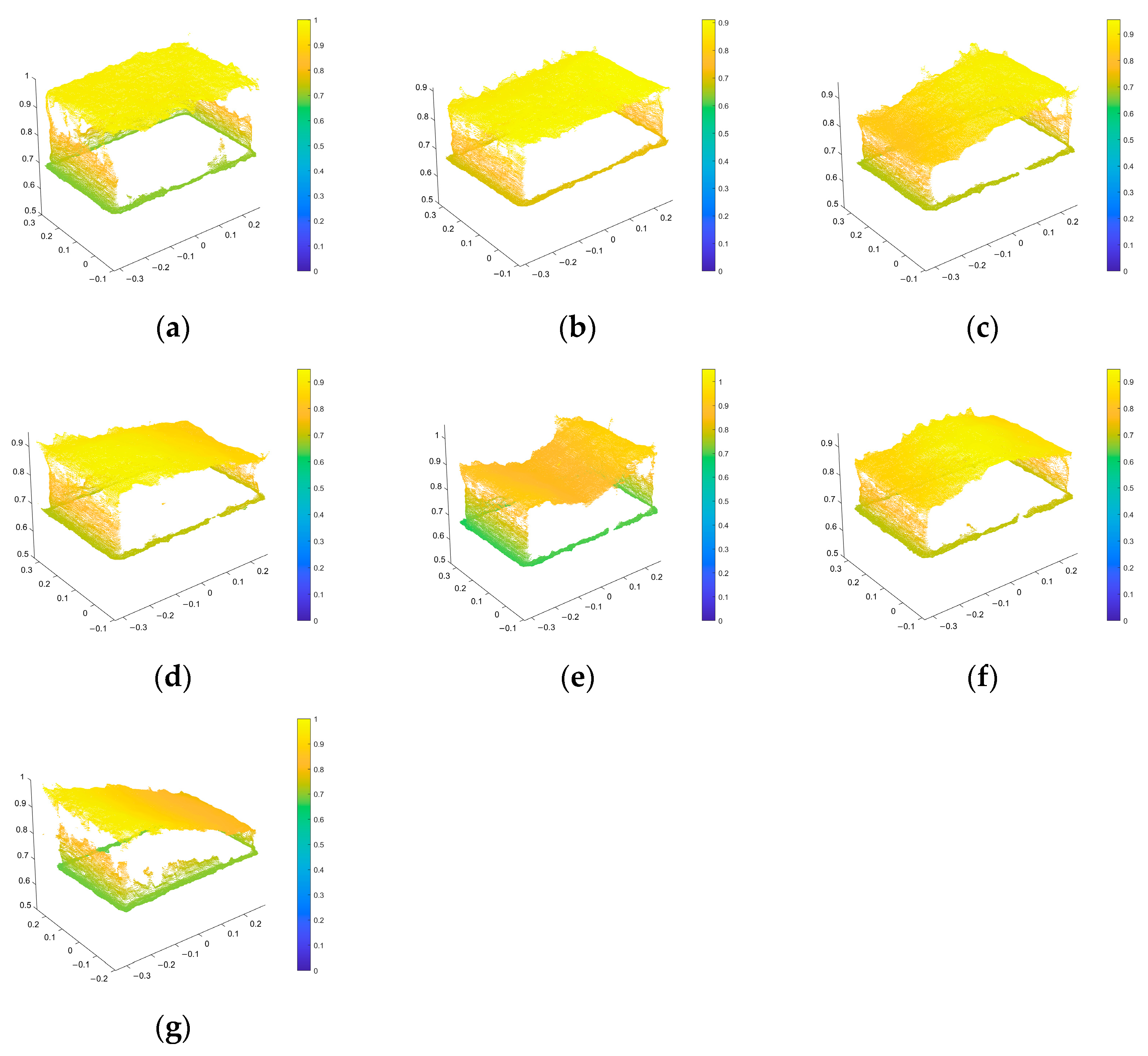

The acquired images are converted into a depth map using stereo matching and the binocular structured light model. Using the camera calibration parameters and the binocular vision model, the depth map is further transformed into point cloud data. The resulting point cloud data are shown in Figure 11. Figure 11a represents the point cloud data without the grain pile, while Figure 11b–g depict the point cloud data with the grain pile in various configurations: flat, left-leaning, right-leaning, convex, concave, and not completely covering the bottom of the container.

5.1. Improving Point Cloud Segmentation Using Enhanced Euclidean Clustering

According to Figure 11, the point cloud data obtained include not only the point cloud of the grain pile but also the point cloud of the storage container. To accurately measure the volume of the grain pile, the grain pile point cloud must be separated from the overall point cloud through segmentation before further analysis.

Currently, point cloud segmentation methods include fitting-based, clustering-based, and deep learning-based approaches. Li et al. [35] proposed an improved RANSAC (random sample consensus) method based on normal distribution transformation units to avoid false plane segmentation in 3D point clouds. The method’s reliability and applicability were demonstrated through experiments, with correctness exceeding 88.5% and completeness exceeding 85.0%. Isa et al. [36] used RANSAC and localization methods to segment 3D point cloud data acquired from ground-based LIDAR scans; the method was tested on a clock tower and was shown to segment the data properly. Li et al. [37] introduced a multi-primitive reconstruction (MPR) method, which first segments primitives through a two-step RANSAC strategy, followed by global primitive fitting and 3D Boolean operations. Experimental results verified the effectiveness of the proposed method. However, these methods have limitations when segmenting irregular objects, because RANSAC is typically used to segment regular primitives such as lines, planes, and spheres. Although RANSAC can be applied to irregular objects in certain cases, it usually has to be combined with other algorithms or techniques to handle complex geometries and features and to achieve accurate and robust segmentation.

Cluster-based point cloud segmentation divides the point cloud into different clusters to achieve object segmentation. Miao et al. [38] proposed a single-plant segmentation method based on Euclidean clustering and K-means clustering. The results showed that this method can address the failure of Euclidean clustering to segment plants with crossing leaves. Chen et al. [39] modified the classical DBSCAN clustering algorithm for 3D point cloud boundary detection and plane segmentation, performing plane fitting and validity detection based on candidate samples in 3D space. Xu et al. [40] used statistical filtering based on a Gaussian distribution to remove outlier points, followed by an improved density clustering algorithm for coarse point cloud segmentation. They then addressed over-segmentation and under-segmentation using normal vectors. The experimental results showed the effectiveness of their method. However, cluster-based methods usually involve manual parameter selection, including the number of clusters and the distance metric. These choices can have a significant impact on the segmentation results and often require experience or multiple attempts. Furthermore, traditional cluster-based methods often prioritize geometric or attribute features of the data while neglecting semantic information. In certain applications, point cloud segmentation needs to consider object semantic relationships and contextual information, which are crucial for achieving more accurate results. Deep learning-based point cloud segmentation is currently widely used. However, it requires large amounts of data, extensive annotation for training, and powerful computing resources. Moreover, deep learning-based methods for irregular point cloud segmentation require further research and improvement.

Figure 11 depicts a target scene that includes not only the grain pile surface but also the container, so the valid point cloud of the grain pile must be extracted for further calculations. Considering the irregularity and continuity of grain surface point clouds, this study proposes an improved Euclidean clustering point cloud segmentation method. The method sets seed points in the point cloud and divides the point cloud into categories based on Euclidean distance to segment the grain pile point cloud. Traditional Euclidean clustering, as shown in Figure 12, has difficulty accurately segmenting the required grain pile point cloud. The proposed method considers not only the spatial features of the point cloud but also the continuity between different categories, resulting in more accurate and robust segmentation.

To optimize the improved Euclidean clustering segmentation method and improve computational efficiency, this paper adopts a parallel computing strategy and combines it with the pass-through filter to eliminate outliers. The segmentation is performed on two sets of point clouds, where set $P$ represents the point cloud without grain heap data and set $Q$ contains the point cloud with the grain heap. The specific steps are as follows:

Step 1: Select $p_i$ as the seed point in $P$ and calculate the Euclidean distances to find the closest points in set $Q$. Then determine whether the distance between each such point and $p_i$ is smaller than the set threshold. If it is, add the point to class $C$.

Step 2: Continue selecting new seed points and repeat step 1 until no new points are added to class $C$.

Step 3: Point set $Q$ contains the effective point cloud of the grain heap, and set $C$ contains the points that do not belong to the grain heap. Therefore, point set $C$ can be removed from point set $Q$ to obtain the final point cloud of the grain heap.
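The three steps above can be sketched as a brute-force comparison between the two clouds. This is an illustrative simplification under stated assumptions: the function and argument names are hypothetical, every point of the container-only cloud is used as a seed, and the nearest-neighbor search is a plain double loop rather than the parallel implementation described in the paper (a k-d tree would be the usual choice for large clouds).

```python
import math


def segment_grain_points(empty_cloud, full_cloud, threshold):
    """Remove from full_cloud every point lying within `threshold`
    of some point in empty_cloud (the container-only scan).

    Points are (x, y, z) tuples; the remaining points are taken to be grain.
    """
    container_like = set()                 # indices of points in the removed class
    for seed in empty_cloud:               # each container point acts as a seed
        for idx, pt in enumerate(full_cloud):
            if idx not in container_like and math.dist(seed, pt) < threshold:
                container_like.add(idx)
    # Remove the container-like class from the cloud that contains the grain
    return [pt for idx, pt in enumerate(full_cloud) if idx not in container_like]
```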

This paper takes into consideration the irregularity and disorder of the grain heap point cloud, as well as subsequent operations and economic benefits. While traditional point cloud segmentation methods usually aim to separate a point cloud into its different parts, the objective here is to obtain the grain heap point cloud for volume calculation. To achieve this, two sets of point cloud data are used and classified based on Euclidean distance. In Figure 11, Figure 11a represents the point cloud without the grain heap, Figure 11b the point cloud with a flat grain heap, Figure 11c with a left-leaning grain heap, Figure 11d with a right-leaning grain heap, Figure 11e with a convex grain heap, and Figure 11f with a concave grain heap. Figure 11g shows the point cloud in which the grain pile does not fully cover the container. These point clouds thus include one containing only the container and others containing both the container and the grain heap. Each of the point clouds in Figure 11b–g is combined with the point cloud in Figure 11a, the distance from each point to its nearest neighbor in the container-only cloud is calculated, and the point cloud data are filtered against a set distance threshold.

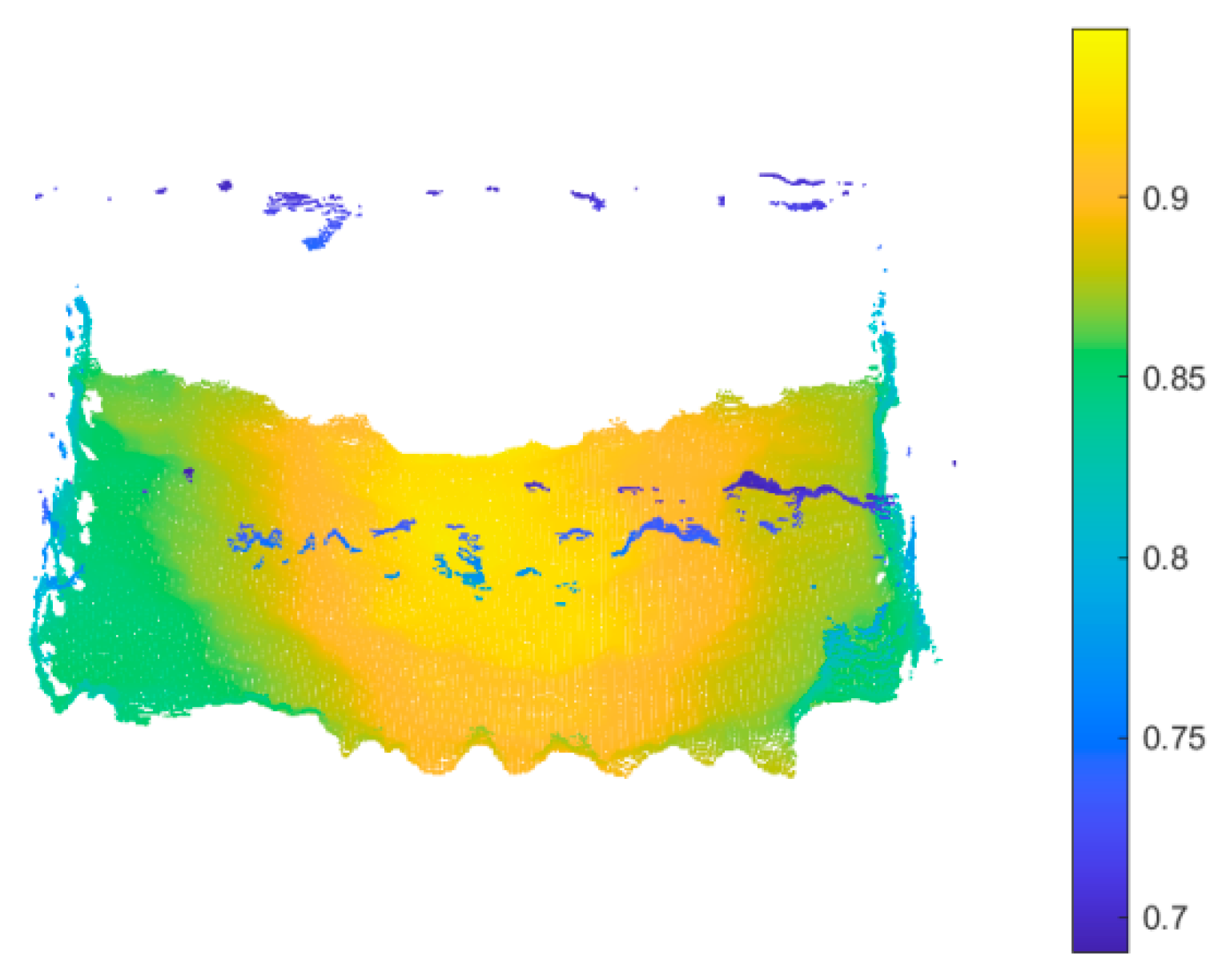

Figure 13 illustrates a set of point clouds that were generated using the point cloud segmentation method proposed in this paper. Upon examining the results depicted in the figure, it becomes apparent that the proposed segmentation method successfully and accurately separates the grain heap information from the entire point cloud. The grain heap point cloud demonstrates specific height and shape characteristics.

Because the computation is intensive, this paper adopts parallel computing, decomposing the task into multiple sub-tasks that are processed simultaneously, which greatly accelerates overall processing. Unlike traditional point cloud segmentation methods, this paper does not downsample the point cloud. Parallel computing instead maintains the point cloud density and texture details while keeping subsequent calculations efficient, which makes the approach better suited to practical engineering needs such as grain heap volume calculation. By considering the characteristics of grain heap point clouds of different shapes and using parallel computing, this paper provides an efficient and accurate point cloud processing method for grain storage and related fields.

5.2. Grain Pile Point Cloud Optimization

The pass-through filter is a widely used method in point cloud filtering. It effectively removes points from the point cloud that fall outside a specified range along a given coordinate axis. This filter is particularly useful in eliminating outliers and filtering out points that are beyond the specified range. By doing so, it improves the accuracy and reliability of the point cloud data by reducing interference. In the context of grain heap point cloud segmentation, the pass-through filter is employed to eliminate outliers and poorly segmented boundary points from the point cloud.

As depicted in Figure 13, the point cloud of the segmented grain heap surface contains outliers and poorly segmented boundary points, which may be noise or the result of imperfect segmentation. To enhance the accuracy and reliability of the point cloud data, this study employs the pass-through filter, which efficiently removes outliers and poorly segmented points along the boundary. The steps involved in the pass-through filtering process are as follows:

(1) Select the filtering axis: Choose the coordinate axis (e.g., x, y, or z) along which to filter, based on the characteristics of the point cloud data.

(2) Specify the range along the selected axis: Specify the minimum and maximum values to be retained along the selected axis. Points outside this range will be filtered out, effectively removing outliers.

(3) Execute the filtering: Based on the specified range, execute the pass-through filtering, retaining the points within the range and removing the points outside it. This process eliminates outliers and poorly segmented points, resulting in a cleaner and more accurate grain heap surface point cloud.
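The three filtering steps amount to a range test on one coordinate. A minimal sketch follows, with a hypothetical function name and points stored as `(x, y, z)` tuples; the axis and limits would be chosen from the known container geometry.

```python
def pass_through(points, axis, lower, upper):
    """Keep only the points whose coordinate on `axis` lies in [lower, upper].

    axis is one of "x", "y", "z"; points are (x, y, z) tuples.
    """
    idx = {"x": 0, "y": 1, "z": 2}[axis]   # step (1): select the axis
    # steps (2)-(3): apply the range and keep the points inside it
    return [p for p in points if lower <= p[idx] <= upper]
```

For instance, filtering on `z` between 0.0 and 0.3 would discard points below the container floor or above its rim, assuming coordinates in meters measured from the floor.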

Comparing Figure 13 and Figure 14, the pass-through filter effectively removes unnecessary point cloud data and improves the accuracy and reliability of point cloud segmentation. This processing step provides a more reliable foundation for subsequent grain heap volume calculations. The use of the pass-through filter in this study enhances the quality of the grain heap surface point cloud data, providing more accurate data support for research and applications in grain storage.

By comparing the results in Figure 14 with those in Figure 11 and Figure 13, it is evident that the improved Euclidean clustering point cloud segmentation combined with the pass-through filter effectively and accurately separates the grain heap point cloud from the entire point cloud. This process eliminates interference points and outliers, enhancing the quality and accuracy of the point cloud data. In Figure 15, the successful segmentation of the grain heap point cloud can be observed, while irrelevant points, such as the container, are filtered out. The improved Euclidean clustering segmentation method combined with the pass-through filter improves the efficiency, accuracy, and reliability of the segmentation process for the grain heap point cloud. The method considers the spatial characteristics of the point cloud and the continuity between different categories, resulting in more accurate and robust segmentation, and the pass-through filter removes unnecessary points, thereby improving the quality of the point cloud data.

6. Volume Calculation

Currently, various methods exist for calculating volume based on point clouds, such as the convex hull method, the slicing method, the model reconstruction method, and the projection method. In this paper, we introduce a volume calculation method that combines mesh patching with the projection method. The underlying principle is to divide the 3D point cloud into a triangular mesh, project the mesh downwards, and generate multiple irregular triangular prisms. The volumes of these irregular triangular prisms are then calculated and summed to obtain the total volume. Rather than computing the volume of each irregular triangular prism directly, the method decomposes it through mesh patching into a regular triangular prism and a triangular or quadrangular pyramid. Compared with the methods proposed by Wang et al. [41] and Zhang et al. [10], which use the average elevation or average depth of all points in the mesh as the height, the proposed method achieves smaller errors in volume calculation. The primary remaining source of error lies in the mesh partitioning.
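One way to realize the prism-plus-pyramid decomposition is sketched below. It is not necessarily the authors’ exact procedure: the function names are hypothetical, each mesh triangle is assumed to have a planar top, and all vertex heights are assumed to lie on or above the reference plane `z_ref`. Each projected prism is split into a regular prism up to its lowest vertex and a pyramid-shaped cap above it.

```python
def triangle_area_xy(a, b, c):
    """Area of the triangle's projection onto the xy-plane."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0


def prism_volume(a, b, c, z_ref=0.0):
    """Volume between a mesh triangle and the plane z = z_ref."""
    heights = [p[2] - z_ref for p in (a, b, c)]
    area = triangle_area_xy(a, b, c)
    h_min = min(heights)
    regular = area * h_min                         # regular triangular prism
    # Remaining cap: a triangular or quadrangular pyramid whose apex is
    # the lowest top vertex; its volume is area * (sum of excess heights) / 3
    cap = area * (sum(heights) - 3.0 * h_min) / 3.0
    return regular + cap


def mesh_volume(vertices, triangles, z_ref=0.0):
    """Sum the prism volumes of all triangles (index triples into vertices)."""
    return sum(prism_volume(vertices[i], vertices[j], vertices[k], z_ref)
               for i, j, k in triangles)
```

For a planar top, the two parts together equal the projected area multiplied by the mean of the three vertex heights, which gives a quick sanity check on any implementation.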

Delaunay triangulation is a reliable and stable meshing method that preserves the topological properties of point cloud data. It retains the geometric features of the point cloud and generates a continuous, non-overlapping triangular mesh. It has two defining properties: the empty circumcircle property and the max-min angle property. The empty circumcircle property states that the circumcircle of any triangle in the mesh contains no other point of the set in its interior; when no four points are cocircular, the triangulation is unique, which ensures the stability and consistency of the triangles. The max-min angle property states that, among all triangulations of the point set, the Delaunay triangulation maximizes the minimum interior angle, thereby improving the quality of the mesh. Larger minimum angles reduce sharp angles and distortions, resulting in more uniform and regular triangles. Furthermore, regardless of the region from which construction starts, the final result remains consistent.
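The empty circumcircle property can be checked with the standard in-circle determinant. The sketch below works on 2D points (for example, the xy-projection of the point cloud); the function name is hypothetical, and the orientation factor is included so that the vertices may be given in either winding order.

```python
def in_circumcircle(a, b, c, p):
    """Return True if point p lies strictly inside the circumcircle of triangle abc.

    Points are (x, y) pairs; a, b, c may be in clockwise or counter-clockwise order.
    """
    adx, ady = a[0] - p[0], a[1] - p[1]
    bdx, bdy = b[0] - p[0], b[1] - p[1]
    cdx, cdy = c[0] - p[0], c[1] - p[1]
    ad2 = adx * adx + ady * ady
    bd2 = bdx * bdx + bdy * bdy
    cd2 = cdx * cdx + cdy * cdy
    # 3x3 in-circle determinant, expanded along the first row
    det = (adx * (bdy * cd2 - bd2 * cdy)
           - ady * (bdx * cd2 - bd2 * cdx)
           + ad2 * (bdx * cdy - bdy * cdx))
    # Sign of the triangle's orientation (positive if counter-clockwise)
    orient = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return det * orient > 0
```

A triangle satisfies the Delaunay condition when this test is false for every other point in the set.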

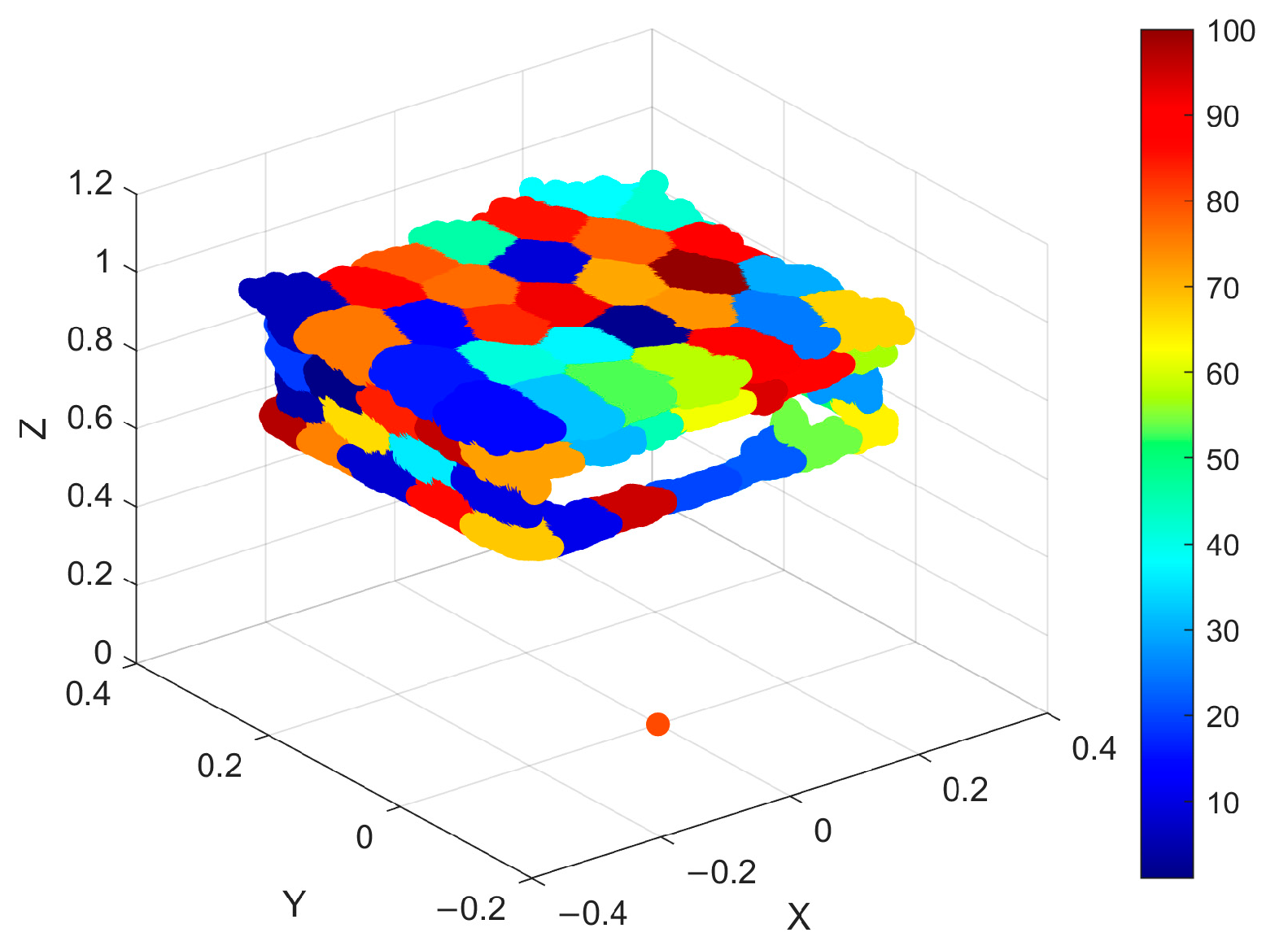

This paper presents an improved Delaunay triangulation method that addresses the issues of inconsistent or incomplete triangle shapes and elongated triangles in traditional Delaunay triangulation. These issues often arise due to interruptions or incompleteness in the point set. To overcome these challenges, we propose the introduction of a distance threshold to control the maximum edge length of the generated triangles in the traditional Delaunay triangulation method. By incorporating this distance threshold, our method ensures that the triangles on the boundary of the point cloud remain consistent and well-formed while minimizing the occurrence of elongated triangles. This refinement significantly improves the accuracy of subsequent volume calculations, making our method more suitable for handling point clouds with boundary interruptions or incompleteness. The proposed method consists of the following steps:

(1) A point is first selected from the point set and used as the center of a circle, and the points lying within the threshold distance of this center are searched for. One of these points is chosen and connected to the center point to form an initial edge. If no points are found within the threshold range, another point is selected and the search is repeated until all points in the point set have been tried.

(2) Once the initial edge is determined, the scattered points around it are searched. A point forms an initial triangle with the edge if its distance to one endpoint of the edge is smaller than the threshold.

(3) The initial triangle’s edges are then used as the ‘to-be-expanded’ edges. The triangle is expanded based on the threshold, with the requirement that the scattered points are located on the two sides of the ‘to-be-expanded’ edge. If no points are found within the threshold range, step (1) is repeated.

(4) Calculate the angle between the normal vectors of the ‘to-be-expanded’ triangle and the expanded triangle. Select the triangle with the largest angle as the expanded triangle.

(5) If there are multiple triangles that meet the conditions in step (4), compare the minimum angles of the triangles and choose the triangle with the largest minimum angle as the expanded triangle. Repeat this process until all points have been selected. End the process.
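The effect of the distance threshold can be illustrated with a simpler, swapped-in technique: rather than growing the mesh edge by edge as in steps (1) to (5), the sketch below takes an existing triangulation and discards every triangle whose longest edge exceeds the threshold. This is not the authors' procedure, only an approximation of its outcome; the function name `filter_by_edge_length` and the input layout are assumptions.

```python
import math


def filter_by_edge_length(points, triangles, threshold):
    """Keep only triangles whose longest edge does not exceed `threshold`.

    points    -- list of (x, y, z) tuples
    triangles -- list of (i, j, k) index triples into `points`
    threshold -- maximum allowed edge length, in the same unit as the points
    """
    kept = []
    for i, j, k in triangles:
        a, b, c = points[i], points[j], points[k]
        # Longest of the three edge lengths of this triangle.
        longest = max(math.dist(a, b), math.dist(b, c), math.dist(c, a))
        if longest <= threshold:
            kept.append((i, j, k))
    return kept
```

A threshold that is too small removes valid triangles and leaves holes, while one that is too large keeps elongated triangles that bridge gaps at the boundary, which is the trade-off the threshold is meant to balance.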

Figure 15 shows the segmentation results obtained using different thresholds (2 mm and 5 mm). From the figures, it is evident that when the threshold is set too small (2 mm), numerous holes appear, indicating the presence of small triangles that form disjointed regions. However, as the threshold is increased to 5 mm, the number of holes decreases and the point cloud data becomes more continuous, thereby better preserving the shape of the grain heap. Therefore, in practical applications, selecting an appropriate threshold is crucial to ensure accurate and continuous segmentation of the point cloud. This improved Delaunay triangulation method provides more reliable and accurate foundational data support for subsequent calculations of grain heap volume.

In this study, the true volume of the grain heap was measured to be 0.019 m³ using the drainage method. To validate the stability of the structured light system, measurements were conducted for six different grain heap shapes: flat, left-leaning, right-leaning, concave, convex, and a heap that does not fully cover the container. After the point cloud data were obtained, segmentation was performed, and the improved Delaunay triangulation was then applied to triangulate the point cloud. The volume of the grain heap was obtained using a combination of the projection method and the cut-and-fill method.

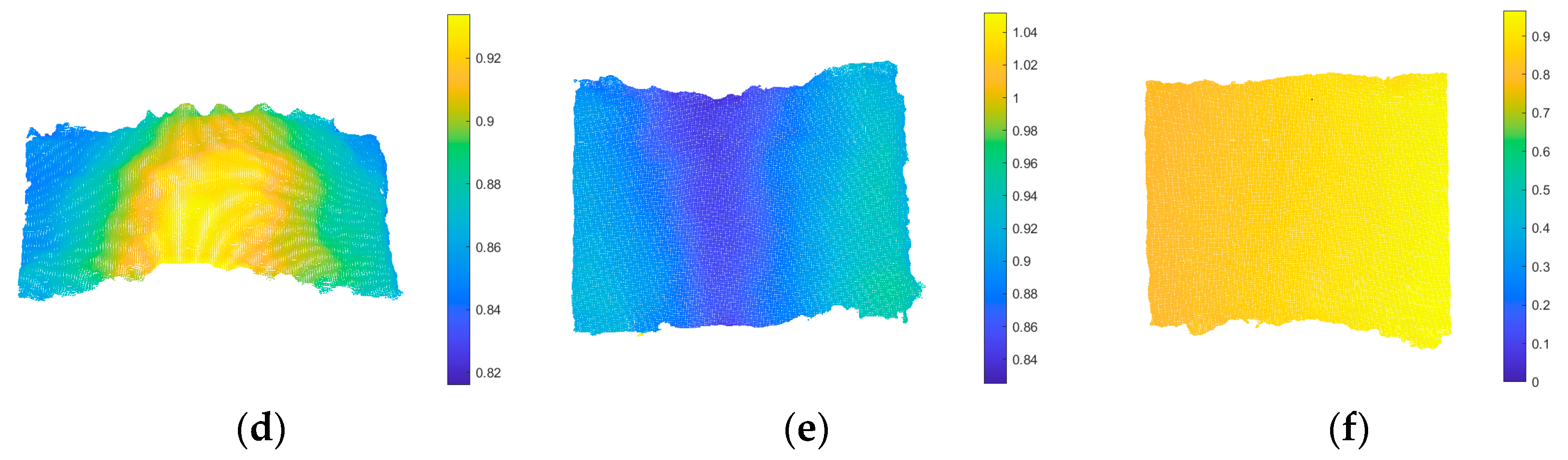

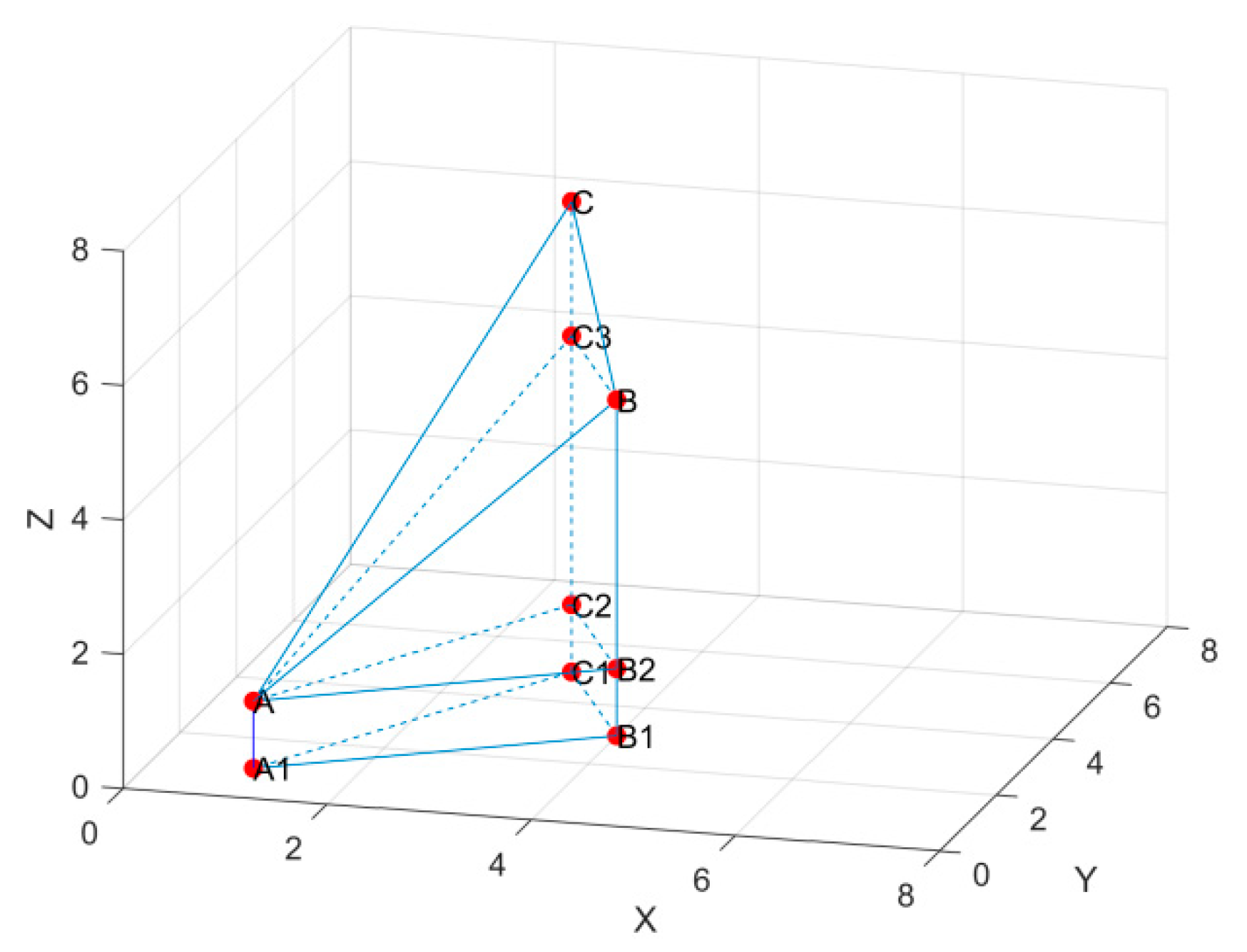

As shown in Figure 16, A, B, and C are the vertices of a triangular face after triangulation. When triangle ABC is projected onto the xoy-plane, it forms an irregular triangular prism. This irregular triangular prism can be further divided into a regular triangular prism, a four-sided pyramid, and a triangular pyramid, and its volume is the sum of the volumes of these three parts.

Based on Figure 16, each subdivided spatial triangle is projected to obtain an irregular triangular prism. The three-dimensional coordinates of the triangle’s vertices are taken from the point cloud data, and from them the heights needed to calculate the volume of each prism are obtained. Formula 2 involves three height values. The first is the height of the regular triangular prism and corresponds to the z-axis coordinate of point A. The other two are distances measured from point A and give the heights of the two pyramids.
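The decomposition can be written compactly in notation that is assumed here and not taken from the paper: let $A$ be the lowest vertex, let $z_A \le z_B, z_C$ be the vertex heights above the xoy-plane, and let $S$ be the area of the projected triangle. The first term below is the regular triangular prism, and the second is the combined volume of the two pyramids above it:

$$V_i = S\,z_A + \frac{S}{3}\left[(z_B - z_A) + (z_C - z_A)\right] = \frac{S}{3}\,(z_A + z_B + z_C),$$

$$S = \frac{1}{2}\left|(x_B - x_A)(y_C - y_A) - (x_C - x_A)(y_B - y_A)\right|.$$

The closed form on the right follows because the height of a planar triangle varies linearly, so the volume beneath it equals the projected area multiplied by the mean of the three vertex heights.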

The volume of the grain pile is:

$$V = \sum_{i=1}^{n} V_i$$

where $n$ represents the number of irregular prisms and $V_i$ denotes the volume of the irregular prism with index $i$.
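The summation over prisms can be sketched in a few lines of Python. The sketch assumes that each prism volume is computed as the projected triangle area multiplied by the mean height of its three vertices, which is equivalent to splitting the prism into a regular prism and pyramids. The names `projected_area`, `prism_volume`, `pile_volume`, and the `z_ref` reference plane are hypothetical.

```python
def projected_area(a, b, c):
    """Area of triangle abc after projection onto the xoy-plane."""
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])
    return abs(cross) / 2.0


def prism_volume(a, b, c, z_ref=0.0):
    """Volume between triangle abc and the horizontal plane z = z_ref."""
    # Mean vertex height above the reference plane.
    mean_height = (a[2] + b[2] + c[2]) / 3.0 - z_ref
    return projected_area(a, b, c) * mean_height


def pile_volume(points, triangles, z_ref=0.0):
    """Sum the prism volumes over all triangles (index triples into points)."""
    return sum(
        prism_volume(points[i], points[j], points[k], z_ref)
        for i, j, k in triangles
    )
```

Here `points` holds the segmented grain-surface point cloud as `(x, y, z)` tuples and `triangles` holds the triangulated mesh; `z_ref` would be the height of the container floor in the same coordinate frame.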

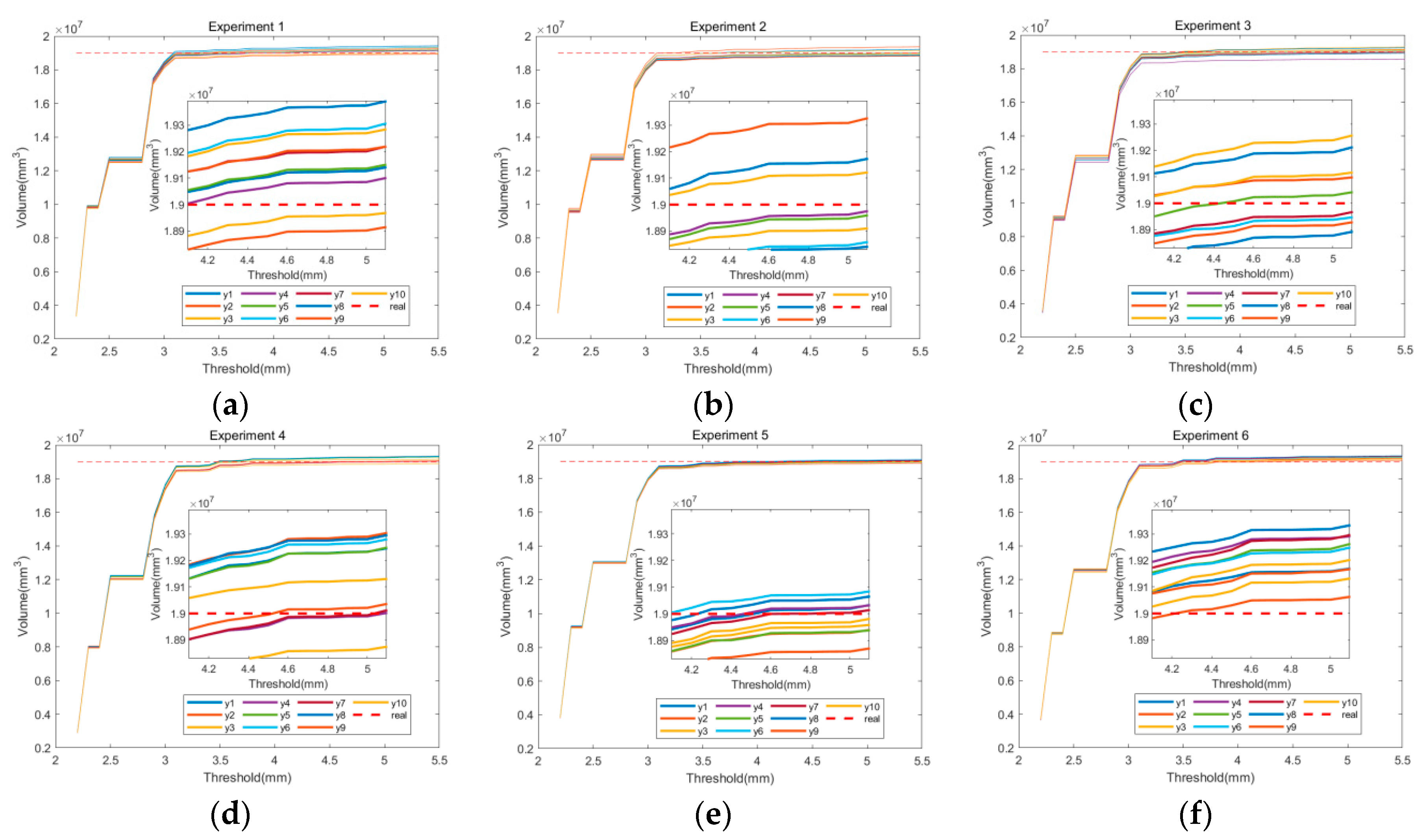

Six experiments were conducted using 31 threshold values, ranging from 2.5 mm to 5.5 mm in steps of 0.1 mm. The experiments were performed on six grain pile models, each representing a specific shape: flat, left-leaning, right-leaning, convex in the middle, concave in the middle, and a pile that did not completely cover the container. Each experiment group consisted of ten measurements. The grain pile volume obtained through the binocular structured light system is illustrated in Figure 17. The figure displays the ten measurement results as dashes, while the solid line indicates the actual volume of 0.019 m³.

Figure 17 shows that the measured volume of grain heaps with different shapes (flattened, left-tilted, right-tilted, convex, and concave) remained relatively stable once the threshold exceeded 3.5 mm, with no significant variations beyond this value. This experimental design enabled the study to draw conclusions about the impact of the threshold on grain heap volume measurement.

The volume measurements for different grain heap shapes were found to be relatively stable and tended to approach the true values, especially for threshold values exceeding 3.5 mm. This suggests that using these threshold values can lead to more reliable measurement results. These findings have important practical implications for selecting and optimizing grain heap volume measurement methods in grain storage and related fields. Additionally, this study offers a rational approach for selecting the threshold range and determining the number of experiments, which enhances the reliability of the research findings.

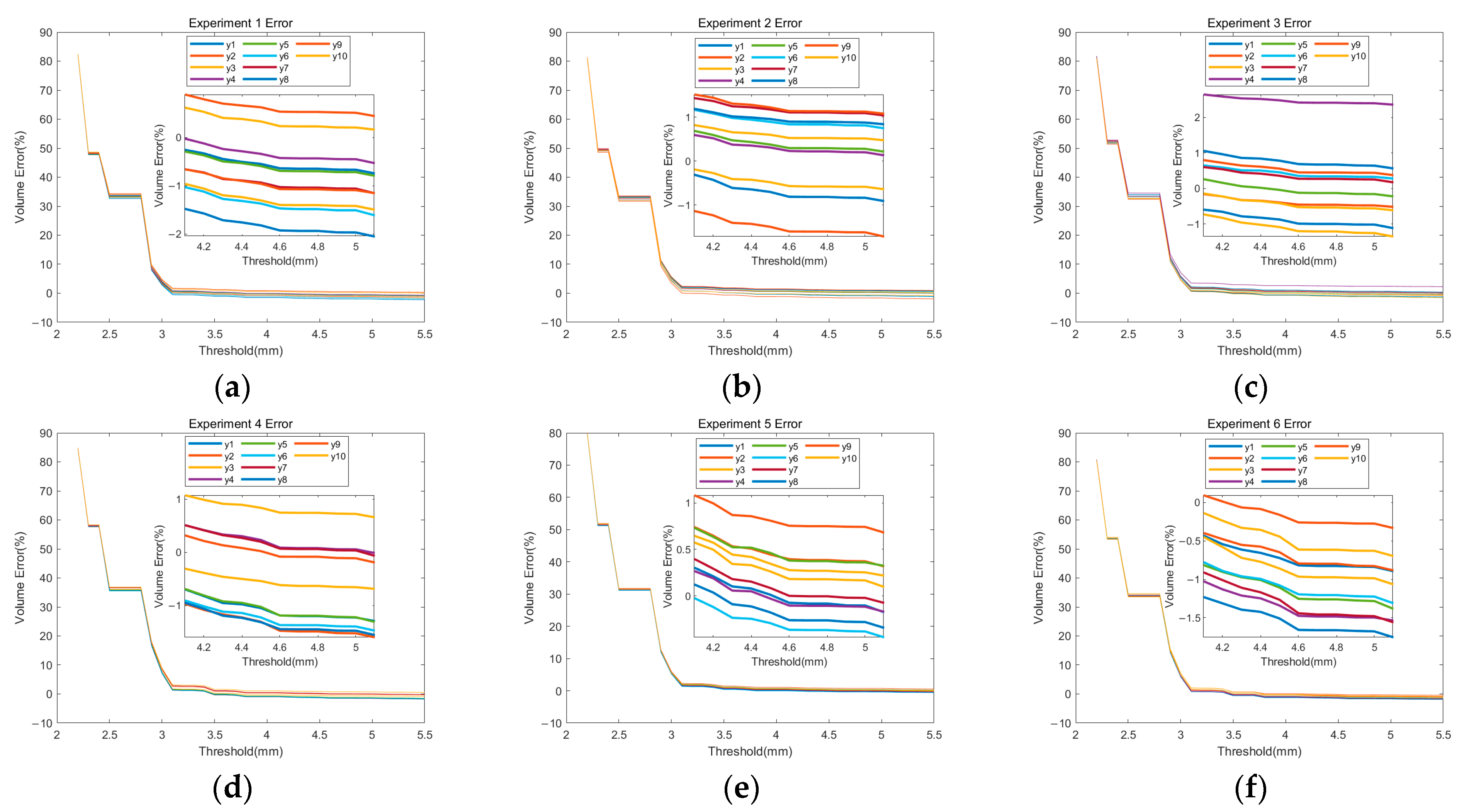

Experiments 1 to 6 in Figure 18 represent ten sets of measurement results for six different scenarios: a flat grain pile, a left-inclined grain pile, a right-inclined grain pile, a convex grain pile, a concave grain pile, and a grain pile that does not fully cover the container. The figures show the relative errors between the measured volume and the true volume. The following observations can be made: when the threshold is greater than 4 mm, the error is less than 1.5%; between threshold values of 2.2 mm and 3 mm, the error gradually decreases; and when the threshold is greater than 4.6 mm, the error starts to increase again. This indicates the impact of the threshold on the volume measurement results. When the threshold is set too low, many holes are present in the triangular mesh, which results in considerable errors in volume measurement. As the threshold increases, the number of triangles also increases and the holes gradually disappear, which reduces the volume measurement error. However, if the threshold is set too high, inconsistent or incomplete triangles may arise along the boundaries, which inflates the volume and consequently increases the measurement error.

In practical applications, it is important to strike a balance and make adjustments when selecting a threshold. By choosing an appropriate threshold range, accurate volume measurements can be achieved while keeping errors within an acceptable range. The results shown in Figure 18 offer valuable insights for measuring grain pile volume and provide guidance for selecting the optimal threshold and optimizing volume measurements in practical scenarios. Taking into account both accuracy and computational efficiency, a threshold range greater than 4 mm can be chosen to obtain consistent and realistic volume measurement results.

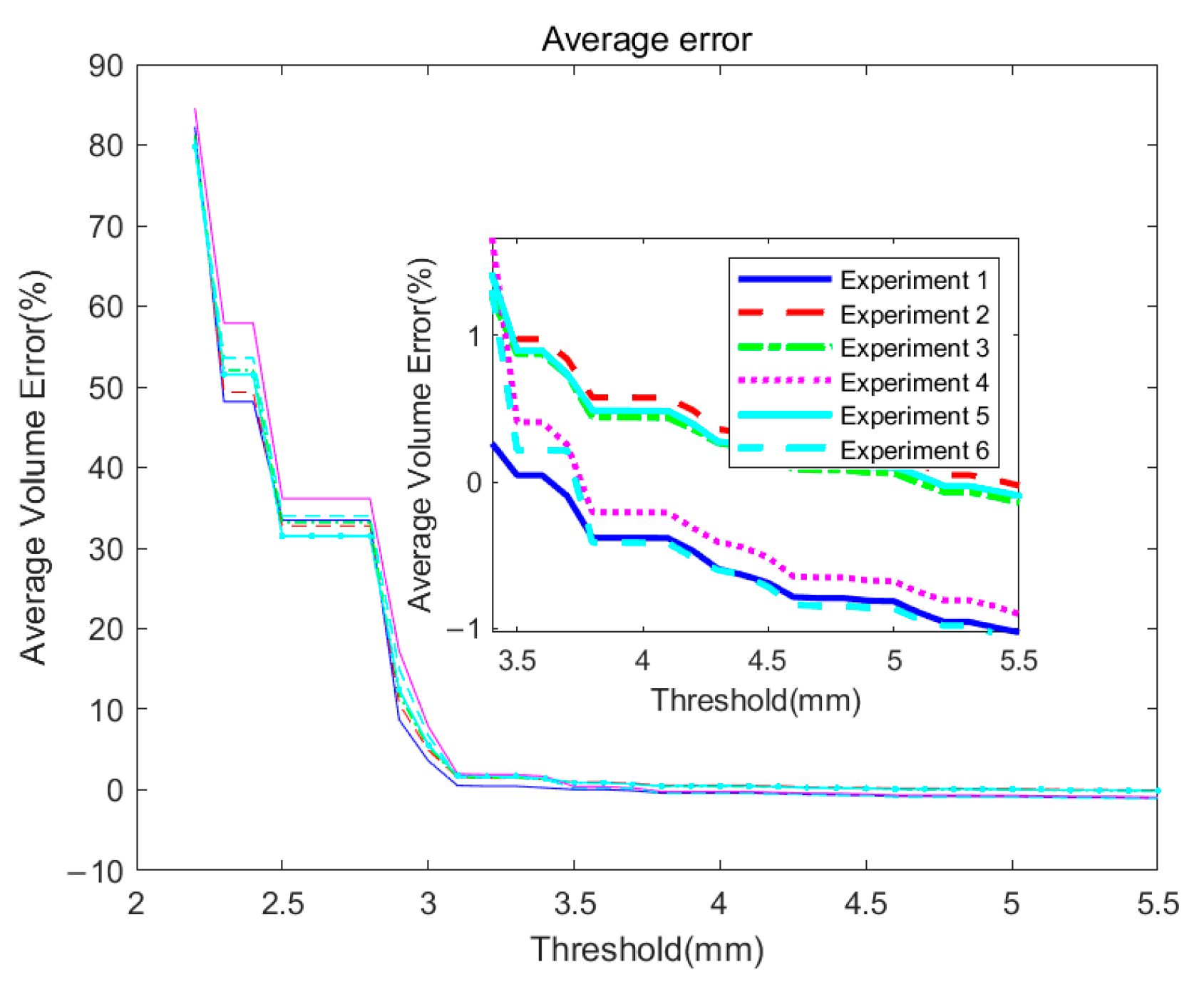

Figure 19 illustrates the relationship between the threshold and the mean error of the measurement volume. The experiments are labeled as Experiment 1 to Experiment 6, representing six distinct experiments with a flat grain pile, a grain pile with left inclination, a grain pile with right inclination, a convex grain pile, a concave grain pile, and a grain pile that does not fully cover the container. By analyzing the data in the graph, we can draw the following conclusions: when the precision (mean error) requirement is within 1%, choosing a threshold range between 3.5 mm and 5 mm can meet the requirement, and even within the range of 4 mm to 4.2 mm, the mean error can be within 0.5%. Since the shapes of grain piles in practical applications can be diverse, ensuring the applicability of the measurement system to different shaped grain piles is crucial.

We can observe that the method proposed in this study can achieve high-precision volume measurement results for the six different scenarios. This demonstrates that the method designed in this study has good applicability and stability for differently shaped grain piles.
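The mean error plotted against the threshold can be computed as in the sketch below. It assumes that the mean error is the average of the absolute relative errors of the repeated measurements against the true volume; the paper does not spell out the exact definition, and the function names are illustrative.

```python
def relative_error(measured, true_value):
    """Absolute relative error of a single measurement."""
    return abs(measured - true_value) / true_value


def mean_relative_error(measurements, true_value):
    """Average absolute relative error over repeated measurements."""
    errors = [relative_error(m, true_value) for m in measurements]
    return sum(errors) / len(errors)
```

With the true volume of 0.019 m³, a hypothetical pair of readings of 0.0188 m³ and 0.0192 m³ would each deviate by about 1.05%, so their mean error would also be about 1.05%.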

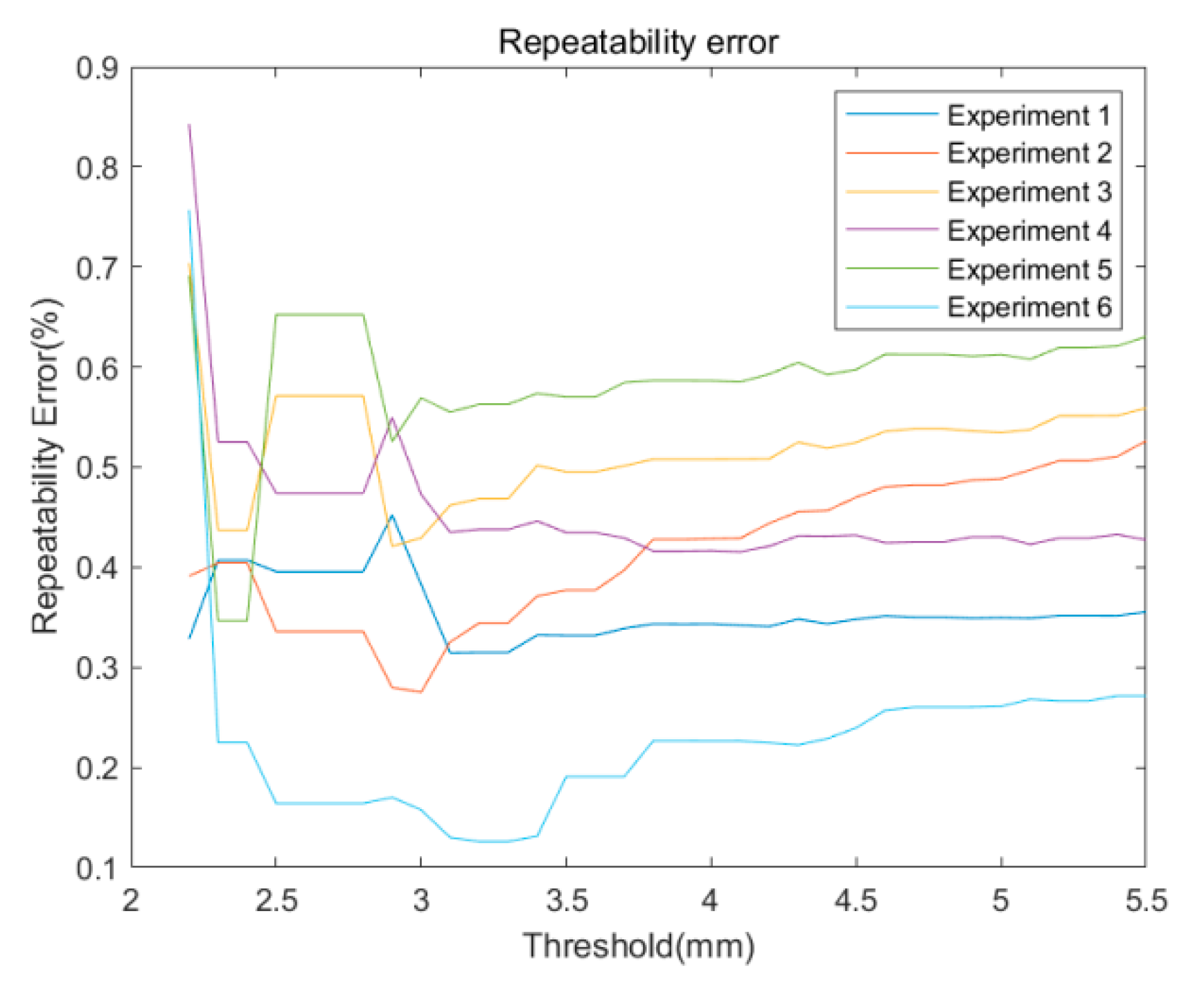

Figure 20 illustrates the relationship between the threshold and the repeatability error of the measured volume, expressed as the relative standard deviation. The experiments are labeled Experiment 1 to Experiment 6 and correspond to a flat grain pile, a grain pile with left inclination, a grain pile with right inclination, a convex grain pile, a concave grain pile, and a grain pile that does not fully cover the container. The repeatability error is an important indicator of the reliability of the measurement system. It is calculated as the experimental standard deviation of the repeated measurements divided by their mean value. In the experiments, multiple volume measurements were conducted to verify the stability and precision of the system. Figure 20 shows that the repeatability error is consistently below 0.6% within the threshold range of 3 mm to 5 mm. This indicates that, within this range, the system produces consistent and stable volume results across repeated measurements, which is crucial when multiple measurements are needed in practical applications. These results indicate that the designed binocular structured light measurement system has good accuracy and reliability and is effective for grain pile volume measurement. Taking the repeatability error into account allows the system’s performance to be evaluated thoroughly and provides useful input for future practical applications and system optimization in grain storage management.
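The repeatability error described above, the experimental standard deviation divided by the mean of the repeated measurements, can be sketched with the Python standard library. The function name `repeatability_error` is illustrative, and the sample (n − 1) standard deviation is assumed.

```python
import statistics


def repeatability_error(measurements):
    """Relative standard deviation of repeated measurements.

    Uses the sample (n - 1) standard deviation, so at least two
    measurements are required.
    """
    return statistics.stdev(measurements) / statistics.mean(measurements)
```

For each threshold, the function would be applied to the ten volume measurements of one experiment, and the result multiplied by 100 to express it as a percentage.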

To determine the optimal threshold range for our system, we conducted extensive experiments using 31 threshold values, ranging from 2.5 mm to 5.5 mm in steps of 0.1 mm. We determined the best threshold range by comparing the measurement results obtained under each threshold with the ground truth values. Ultimately, we selected a threshold range of 4 mm to 4.2 mm, which provided good stability and repeatability while maintaining measurement accuracy.
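The threshold sweep can be outlined as follows. This is a minimal sketch, assuming the mean error for each threshold has already been computed and stored in a dictionary; the function names and the example error values in the usage note are hypothetical and do not reproduce the paper's data.

```python
def threshold_grid(start_mm=2.5, stop_mm=5.5, step_mm=0.1):
    """Evenly spaced threshold values from start_mm to stop_mm inclusive."""
    n = round((stop_mm - start_mm) / step_mm) + 1
    # Round each value to suppress floating-point drift such as 2.8000000000000003.
    return [round(start_mm + i * step_mm, 10) for i in range(n)]


def acceptable_thresholds(mean_error_by_threshold, tolerance):
    """Return the sorted thresholds whose mean error is within the tolerance."""
    return sorted(
        t for t, err in mean_error_by_threshold.items() if err <= tolerance
    )
```

With the default arguments, `threshold_grid()` yields the 31 values used in the experiments. Given hypothetical mean errors such as `{3.9: 0.012, 4.0: 0.004, 4.1: 0.003, 4.3: 0.008}` and a tolerance of 0.005, `acceptable_thresholds` would keep only 4.0 and 4.1.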

The experimental results from Figure 19 and Figure 20 show that the grain pile volume measurement error can be kept within 1.5%, the average error can be kept within 0.5%, and the repeatability error can be kept within 0.6%. The following is an analysis of several factors that may lead to errors.

(1) The limited precision of the camera introduces systematic errors in the measurements.

(2) The true volume of the grain pile obtained through the drainage method may be influenced by human factors, such as uneven drainage or inaccurate measurements.

(3) When the point cloud of the grain pile is segmented, the complexity of the point cloud data and noise may result in incomplete segmentation, leading to errors.

(4) Factors such as the temperature and camera positioning in the experimental environment may affect the measurement accuracy of the binocular camera.

(5) The deformation of grain storage containers due to grain compression may introduce measurement errors.