Perceived Usability Evaluation of Educational Technology Using the Post-Study System Usability Questionnaire (PSSUQ): A Systematic Review

Abstract

1. Introduction

2. Literature Review

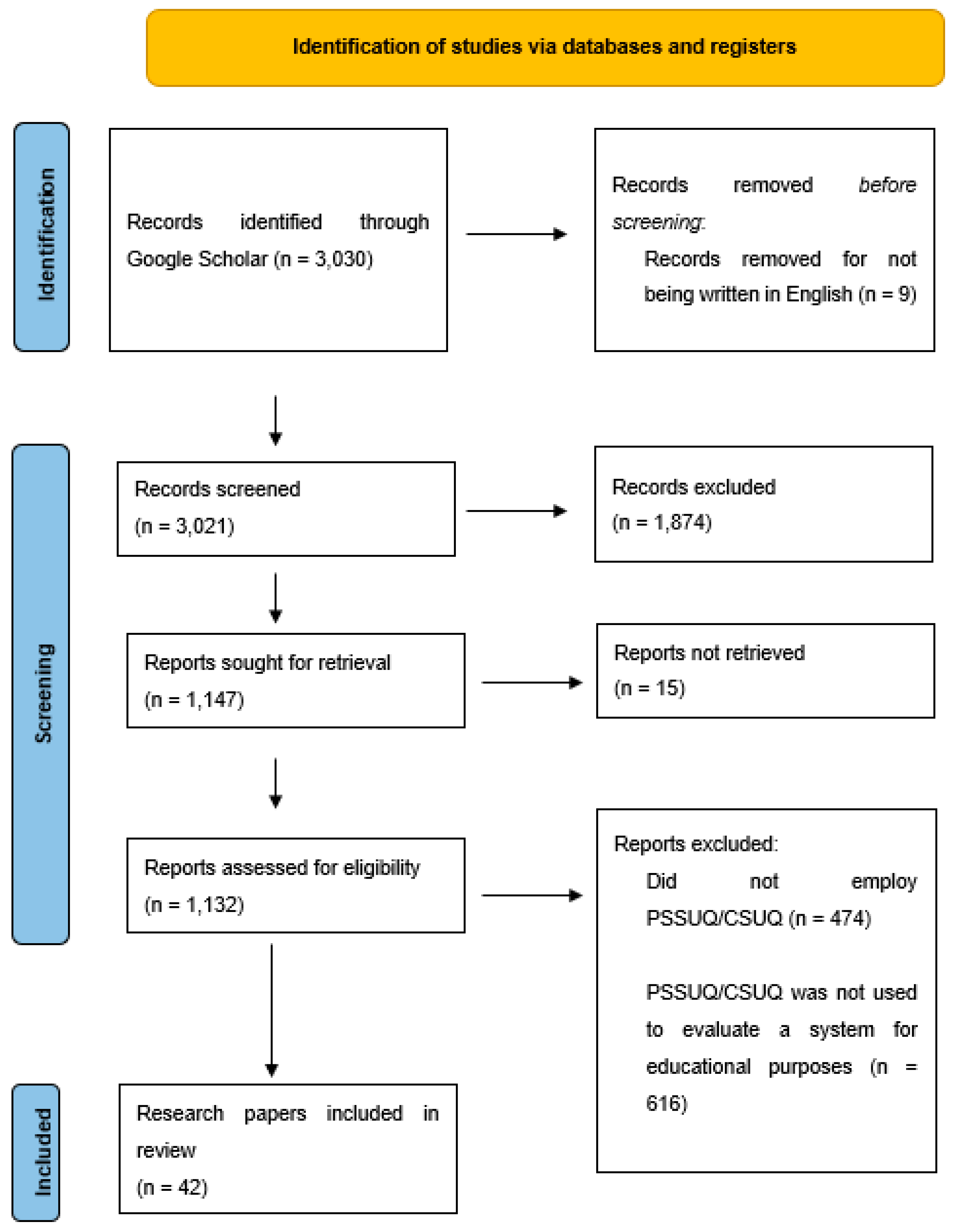

3. Materials and Methods

Inclusion criteria:

- Research papers that assess the usability of educational technology systems using the PSSUQ/CSUQ.

- Each educational technology system should be specifically designed for educational use.

Exclusion criteria:

- Research papers written in languages other than English.

- Studies that did not employ the PSSUQ/CSUQ scale.

- Evaluations of educational software that did not use the PSSUQ/CSUQ for usability assessment.

Research questions:

- What are the usability levels, as measured by the Post-Study System Usability Questionnaire (PSSUQ) and the Computer System Usability Questionnaire (CSUQ), of the different types of technology employed in education?

- Is there a significant variation in usability among these different types of technology?

- Is there a relationship between the PSSUQ/CSUQ score and participants’ age, the subject being studied, the educational level targeted by the technology, the stakeholders involved, the type of educational technology used, or the number of participants in each sample?

- Which educational level predominantly uses each evaluated category of educational technology?

- Does the perceived usability of educational technology systems differ significantly over time?
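Several of these questions compare PSSUQ/CSUQ scores across studies. The category means reported in the Results (for example, 73.23 for Internet platforms) lie on a 0-100 scale rather than on the questionnaire's native 7-point scale. The following is a minimal sketch of how a single 16-item response could be scored. It rests on several assumptions. It uses the version 3 layout: System Usefulness is items 1-6, Information Quality is items 7-12, Interface Quality is items 13-15, and Overall is items 1-16. It uses the usual orientation, in which 1 = strongly agree (best) and 7 = strongly disagree. Skipped ("N/A") items are dropped from the mean. Scores are rescaled linearly as 100 × (7 − mean) / 6, so that higher is better. The rescaling formula and the helper names `subscale_means` and `to_0_100` are illustrative assumptions. They are not necessarily the transformation used in this review.

```python
from statistics import mean
from typing import Optional, Sequence

# Assumed PSSUQ/CSUQ version 3 subscales, as 1-based item numbers.
SUBSCALES = {
    "SysUse": range(1, 7),     # items 1-6
    "InfoQual": range(7, 13),  # items 7-12
    "IntQual": range(13, 16),  # items 13-15
    "Overall": range(1, 17),   # items 1-16
}


def subscale_means(responses: Sequence[Optional[int]]) -> dict[str, float]:
    """Mean of the answered items in each subscale (hypothetical helper).

    `responses` holds 16 ratings on a 1-7 scale (assumed: 1 = strongly
    agree, i.e. best); None marks an item the participant skipped (N/A).
    """
    if len(responses) != 16:
        raise ValueError("expected 16 item responses")
    result = {}
    for name, items in SUBSCALES.items():
        # Skipped items are excluded rather than imputed.
        answered = [responses[i - 1] for i in items if responses[i - 1] is not None]
        if any(not 1 <= r <= 7 for r in answered):
            raise ValueError("ratings must lie between 1 and 7")
        if not answered:
            raise ValueError(f"no answered items in subscale {name}")
        result[name] = mean(answered)
    return result


def to_0_100(item_mean: float) -> float:
    """Assumed linear rescaling: 1 (best) maps to 100, 7 (worst) maps to 0."""
    return 100 * (7 - item_mean) / 6


# Usage: one hypothetical participant who skipped item 9.
answers = [2, 1, 2, 3, 2, 2, 3, 3, None, 2, 2, 3, 1, 2, 2, 2]
scores = subscale_means(answers)
print({k: round(v, 2) for k, v in scores.items()})
print(round(to_0_100(scores["Overall"]), 1))
```

Because the rescaling is linear, it keeps the ordering of systems unchanged. It only flips the direction (higher = better) and changes the range, which makes PSSUQ/CSUQ means easier to read alongside 0-100 SUS results.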

4. Results

4.1. Levels of Perceived Usability in Educational Technology Systems

4.2. Benchmark Data for Various Categories of Educational Technology Based on PSSUQ/CSUQ

4.3. Age of Participants and PSSUQ/CSUQ Score

4.4. Subject Being Studied and PSSUQ/CSUQ Score

4.5. Educational Stage and PSSUQ/CSUQ Score

4.6. Participant Type and PSSUQ/CSUQ Score

4.7. Number of Participants and PSSUQ/CSUQ Score

4.8. Educational Stage and the Category of Educational Technology

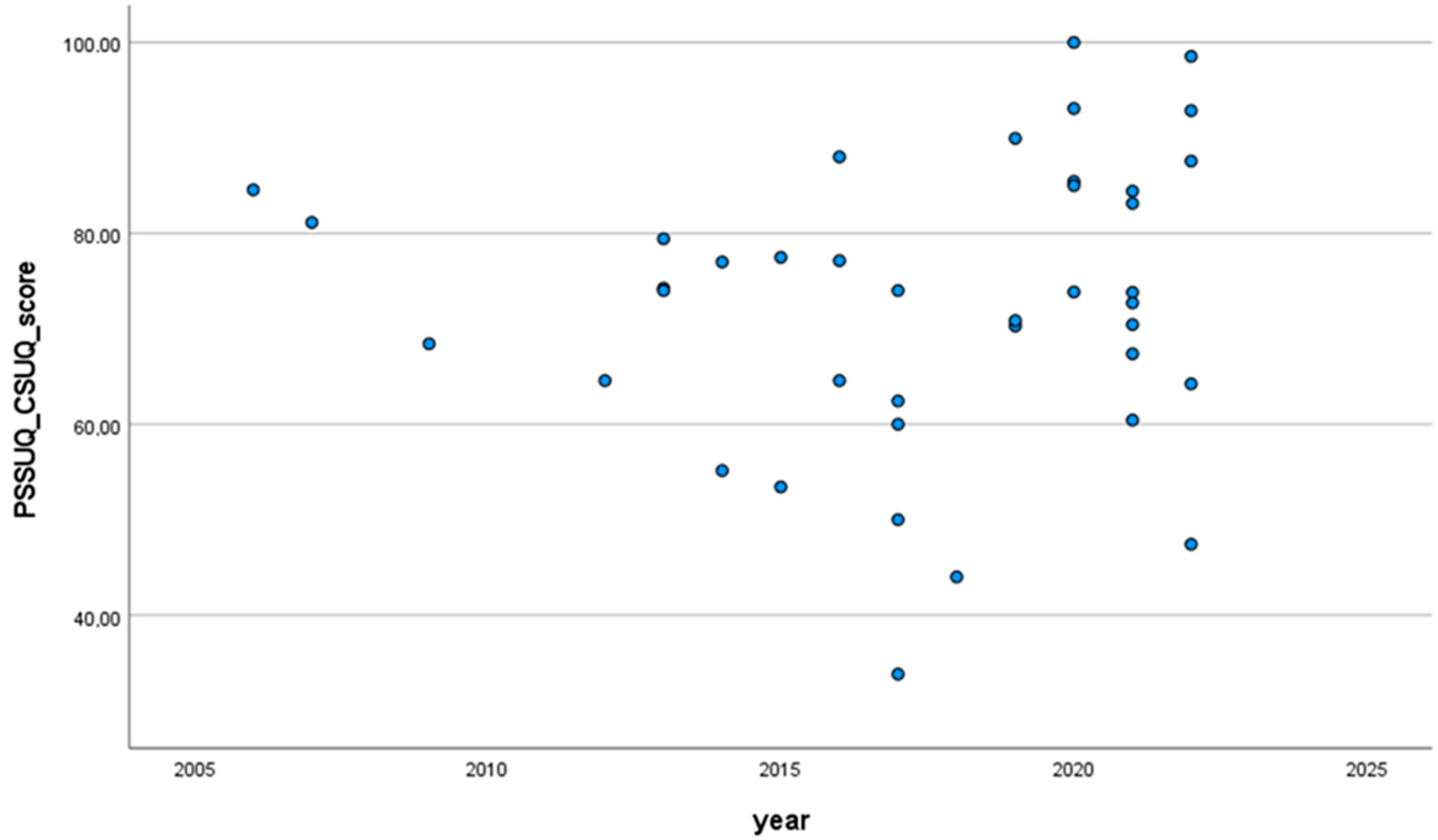

4.9. PSSUQ/CSUQ Scores over Time

5. Discussion

6. Conclusions

Funding

Conflicts of Interest

References

1. Januszewski, A.; Molenda, M. (Eds.) Educational Technology: A Definition with Commentary; Routledge: New York, NY, USA, 2008; Available online: http://www.aect.org/publications/EducationalTechnology/ (accessed on 2 August 2023).

2. Huang, R. Educational Technology: A Primer for the 21st Century; Springer Nature Singapore Pte Ltd.: Singapore, 2019.

3. Chugh, R.; Turnbull, D.; Cowling, M.A.; Vanderburg, R.; Vanderburg, M.A. Implementing educational technology in Higher Education Institutions: A review of technologies, stakeholder perceptions, frameworks and metrics. Educ. Inf. Technol. 2023, 1–27.

4. Carver, L.B. Teacher Perception of Barriers and Benefits in K-12 Technology Usage. Turk. Online J. Educ. Technol.-TOJET 2016, 15, 110–116.

5. Criollo-C, S.; Guerrero-Arias, A.; Jaramillo-Alcázar, Á.; Luján-Mora, S. Mobile Learning Technologies for Education: Benefits and Pending Issues. Appl. Sci. 2021, 11, 4111.

6. Mathew, I.R.; Ebelelloanya, J. Open and distance learning: Benefits and challenges of technology usage for online teaching and learning in Africa. In Proceedings of the Pan-Commonwealth Forum. Botswana. Commonwealth of Learning and Open University of Malaysia, Kuala Lumpur, Malaysia, 15–30 November 2016.

7. Nikolopoulou, K. Secondary education teachers’ perceptions of mobile phone and tablet use in classrooms: Benefits, constraints and concerns. J. Comput. Educ. 2020, 7, 257–275.

8. Luschei, T.F. Assessing the Costs and Benefits of Educational Technology. In Handbook of Research on Educational Communications and Technology; Spector, J.M., Merrill, M.D., Elen, J., Bishop, M.J., Eds.; Springer Science+Business Media: New York, NY, USA, 2014; pp. 239–248.

9. Bajaj, R.; Sharma, V. Smart Education with artificial intelligence based determination of learning styles. Procedia Comput. Sci. 2018, 132, 834–842.

10. Ha, N.T.T. Effects of learning style on students achievement. Linguist. Cult. Rev. 2021, 5, 329–339.

11. Alhadreti, O. Assessing Academics’ Perceptions of Blackboard Usability Using SUS and CSUQ: A Case Study during the COVID-19 Pandemic. Int. J. Hum.-Comput. Interact. 2021, 37, 1003–1015.

12. Nicolaou, C.; Matsiola, M.; Kalliris, G. Technology-enhanced learning and teaching methodologies through audiovisual media. Educ. Sci. 2019, 9, 196.

13. Wetzel, K.; Buss, R.; Foulger, T.S.; Lindsey, L. Infusing Educational Technology in Teaching Methods Courses: Successes and Dilemmas. J. Digit. Learn. Teach. Educ. 2014, 30, 89–103.

14. ISO 9241-11; Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs): Part 11: Guidance on Usability. International Organization for Standardization: Geneva, Switzerland, 1998.

15. Bevan, N.; Carter, J.; Harker, S. ISO 9241-11 revised: What have we learnt about usability since 1998? In Proceedings of the International Conference on Human-Computer Interaction, Bamberg, Germany, 14–18 September 2015; pp. 143–151.

16. Lewis, J.R. The System Usability Scale: Past, Present, and Future. Int. J. Hum.-Comput. Interact. 2018, 34, 577–590.

17. Alghabban, W.G.; Hendley, R. Perceived Level of Usability as an Evaluation Metric in Adaptive E-learning. SN Comput. Sci. 2022, 3, 238.

18. Law, E.L.-C.; Heintz, M. Augmented reality applications for K-12 education: A systematic review from the usability and user experience perspective. Int. J. Child-Comput. Interact. 2021, 30, 100321.

19. Meiselwitz, G.; Sadera, W.A. Investigating the connection between usability and learning outcomes in online learning environments. J. Online Learn. Teach. 2008, 4, 234–242.

20. Orfanou, K.; Tselios, N.; Katsanos, C. Perceived usability evaluation of learning management systems: Empirical evaluation of the System Usability Scale. Int. Rev. Res. Open Distrib. Learn. 2015, 16, 227–246.

21. Vlachogianni, P.; Tselios, N. The relationship between perceived usability, personality traits and learning gain in an e-learning context. Int. J. Inf. Learn. Technol. 2022, 39, 70–81.

22. Vlachogianni, P.; Tselios, N. Perceived usability evaluation of educational technology using the System Usability Scale (SUS): A systematic review. J. Res. Technol. Educ. 2021, 54, 392–409.

23. Berkman, M.I.; Karahoca, D. Re-Assessing the Usability Metric for User Experience (UMUX) Scale. J. Usability Stud. 2016, 11, 89–109.

24. Lewis, J. Psychometric Evaluation of the PSSUQ Using Data from Five Years of Usability Studies. Int. J. Hum.-Comput. Interact. 2002, 14, 463–488.

25. Hodrien, A.; Fernando, T. A Review of Post-Study and Post-Task Subjective Questionnaires to Guide Assessment of System Usability. J. Usability Stud. 2021, 16, 203–232.

26. Schnall, R.; Cho, H.; Liu, J. Health Information Technology Usability Evaluation Scale (Health-ITUES) for Usability Assessment of Mobile Health Technology: Validation Study. JMIR mHealth uHealth 2018, 6, e4.

27. Sauro, J.; Lewis, J.R. Quantifying the User Experience: Practical Statistics for User Research. Morgan Kaufmann; Elsevier: Amsterdam, The Netherlands, 2016.

28. Sauro, J. 10 Things to Know About the Post Study System Usability Questionnaire. 2019. Available online: https://measuringu.com/pssuq/ (accessed on 2 August 2023).

29. Tullis, T.S.; Stetson, J.N. A comparison of questionnaires for assessing website usability. In Usability Professional Association Conference; 2004; Volume 1. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.396.3677&rep=rep1&type=pdf (accessed on 2 August 2023).

30. García-Peñalvo, F.J.; Vázquez-Ingelmo, A.; García-Holgado, A. Study of the usability of the WYRED Ecosystem using heuristic evaluation. In Learning and Collaboration Technologies. Designing Learning Experiences: 6th International Conference, LCT 2019, Held as Part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, 26–31 July 2019, Proceedings, Part I 21; Springer International Publishing: Cham, Switzerland, 2019; pp. 50–63.

31. Al-Tahat, K.S. Arabic Translation, Cultural Adaptation and Psychometric Validation of the Post-Study System Usability Questionnaire (PSSUQ). Int. J. Hum.-Comput. Interact. 2021, 37, 1815–1822.

32. Katsanos, C.; Tselios, N.; Liapis, A. PSSUQ-GR: A First Step Towards Standardization of the Post-Study System Usability Questionnaire in Greek. In Proceedings of the CHI Greece 2021: 1st International Conference of the ACM Greek SIGCHI Chapter, Athens, Greece, 25–27 November 2021.

33. Rosa, A.F.; Martins, A.I.; Costa, V.; Queiros, A.; Silva, A.; Rocha, N.P. European Portuguese validation of the Post-Study System Usability Questionnaire (PSSUQ). In Proceedings of the 2015 10th Iberian Conference on Information Systems and Technologies (CISTI), Aveiro, Portugal, 17–20 June 2015; pp. 1–5.

34. Erdinç, O.; Lewis, J.R. Psychometric Evaluation of the T-CSUQ: The Turkish Version of the Computer System Usability Questionnaire. Int. J. Hum.-Comput. Interact. 2013, 29, 319–326.

35. Gronier, G.; Johannsen, L. Proposition d’une adaptation française et premières validations de l’échelle d’utilisabilité Computer System Usability Questionnaire (F-CSUQ) [Proposal for a French adaptation and first validations of the Computer System Usability Questionnaire (F-CSUQ)]. In Proceedings of the 33rd Conference on l’Interaction Humain-Machine, Namur, Belgium, 5–8 April 2022; pp. 1–11.

36. Aguilar, M.I.H.; González, A.D.l.G.; Miranda, M.P.S.; Villegas, A.A.G. Adaptación al español del Cuestionario de Usabilidad de Sistemas Informáticos CSUQ [Spanish language adaptation of the Computer Systems Usability Questionnaire CSUQ]. RECI Rev. Iberoam. De Las Cienc. Comput. E Informática 2015, 4, 84–99.

37. Sonderegger, A.; Schmutz, S.; Sauer, J. The influence of age in usability testing. Appl. Ergon. 2016, 52, 291–300.

38. Lewis, J.R. Measuring perceived usability: The CSUQ, SUS, and UMUX. Int. J. Hum.-Comput. Interact. 2018, 34, 1148–1156.

39. Abd Aziz, A.; Yusoff, N.; Siraj, F. C-Man: Course management assistant. In Master Projects Seminar; Fakulti Teknologi Maklumat, Universiti Utara Malaysia: Kedah, Malaysia, 2006; Unpublished.

40. Ahmad, N.A.N.; Lokman, A.; Ab Hamid, N.I.M. Performing Usability Evaluation on Multi-Platform Based Application for Efficiency, Effectiveness and Satisfaction Enhancement. Int. J. Interact. Mob. Technol. 2021, 15, 103–117.

41. Alkinani, E.A.; Alzahrani AI, A. Evaluating the Usability and Effectiveness of Madrasati Platforms as a Learning Management System in Saudi Arabia for Public Education. Int. J. Comput. Sci. Netw. Secur. 2021, 21, 275–285.

42. Hidalgo-Cespedes, J.; Marin-Raventos, G.; Calderon-Campos, M.E. Usability of an Online Judge for Concurrent Programming Education. In Proceedings of the 2021 XVI Latin American Conference on Learning Technologies (LACLO), Arequipa, Peru, 19–21 October 2021; pp. 318–325.

43. Lai, T.-L.; Chen, P.-Y.; Chou, C.-Y. A user experience study of a web-based formative assessment system. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 899–902.

44. Ma, W.W.A. Usability Test of eHealth Promotion @HKIEd—A Community of Practice Platform to Promote Healthy Lifestyles. Health 2016, 8, 615–622.

45. McArdle, G. Exploring the Use of 3D Collaborative Interfaces for E-Learning. In Intelligent Systems and Technologies: Methods and Applications; Teodorescu, H.-N., Watada, J., Jain, L.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 249–270.

46. Pipan, M.; Arh, T.; Blazic, B.J. Evaluation cycle management-model for selection of the most applicable learning management system. WSEAS Trans. Adv. Eng. Educ. 2008, 3, 129–136.

47. Punjabi, D.M.; Tung, L.P.; Lin, B.S. CrowdSMILE: A Crowdsourcing-Based Social and Mobile Integrated System for Learning by Exploration. In Proceedings of the IEEE 10th International Conference on Ubiquitous Intelligence and Computing, UIC 2013 and IEEE 10th International Conference on Autonomic and Trusted Computing, ATC 2013, Vietri sul Mare, Italy, 18–21 December 2013; pp. 521–526.

48. Setiyawan, A. Assignment and Monitoring Information System of Prakerin Students Based On SMS Gateway with Raspberry Pi. VANOS J. Mech. Eng. Educ. 2020, 5, 19–30.

49. Zapata, A.; Menéndez, V.; Prieto, M.; Romero, C. A framework for recommendation in learning object repositories: An example of application in civil engineering. Adv. Eng. Softw. 2012, 56, 1–14.

50. Zhang, Y.; Zhao, C.; Liu, G.; Han, T. Cross-Platform Product Usability and Large Screen User Experience: A Teleconference System U&E Research. In Design, User Experience, and Usability. User Experience Design Practice; Marcus, A., Ed.; Springer International Publishing: Cham, Switzerland, 2014; Volume 8520, pp. 469–479.

51. Utami, I.Q.; Fakhruzzaman, M.N.; Fahmiyah, I.; Masduki, A.N.; Kamil, I.A. Customized moodle-based learning management system for socially disadvantaged schools. Bull. Electr. Eng. Inform. 2021, 10, 3325–3332.

52. Oliha, F.O. Web portal usability among Nigerian university students: A case study of University of Benin, Nigeria. Niger. J. Technol. 2014, 33, 199–206.

53. Ikhsanuddin, N.; Santi, R.; Putri, U.M. Usability Analysis of Higher Education Information Systems (SIDIKTI) at Sjakhyakirti University Using Post-Study System Usability Questionnaire (PSSUQ). J. Comput. Sci. Inf. Technol. 2022, 14, 22–26.

54. Phongphaew, N.; Jiamsanguanwong, A. The usability evaluation concerning emotional responses of users on learning management system. In Proceedings of the 2016 6th International Workshop on Computer Science and Engineering, Tokyo, Japan, 17–19 June 2016; pp. 43–48.

55. Bhakti, D.D.; Putri, S.M.; Nasrulloh, I.; Tetep; Nurkamilah, S. The development of ppdb (admission of new students) application to develop the quality of new students’ recapitulation administration in vocational high school bumi cikajang. J. Phys. Conf. Ser. 2019, 1280, 032041.

56. Gannon, B.; Davis, R.; Kuhns, L.M.; Rodriguez, R.G.; Garofalo, R.; Schnall, R. A Mobile Sexual Health App on Empowerment, Education, and Prevention for Young Adult Men (MyPEEPS Mobile): Acceptability and Usability Evaluation. JMIR Form. Res. 2020, 4, e17901.

57. Kopetz, J.P.; Wessel, D.; Jochems, N. User-Centered Development of Smart Glasses Support for Skills Training in Nursing Education. I-Com 2019, 18, 287–299.

58. Tolle, H.; Hafis, M.; Afif, A.; Arai, K. Perceived Usability of Educational Chemistry Game Gathered via CSUQ Usability Testing in Indonesian High School Students. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 715–724.

59. Zhang, M.; Hou, G.; Chen, Y.-C. Effects of interface layout design on mobile learning efficiency: A comparison of interface layouts for mobile learning platform. Libr. Hi Tech 2022, ahead-of-print.

60. Carrión-Toro, M.; Santorum, M.; Acosta-Vargas, P.; Aguilar, J.; Pérez, M. iPlus a user-centered methodology for serious games design. Appl. Sci. 2020, 10, 9007.

61. Koowuttayakorn, S.; Taylor, P. Usability and Motivation Study of Mobile Application for English Language Proficiency Test Preparation in Thailand: A Case Study of TU-GET CBT. LEARN J. Lang. Educ. Acquis. Res. Netw. 2022, 15, 625–648.

62. Biabdillah, F.; Tolle, H.; Bachtiar, F.A. Go Story: Design and Evaluation Educational Mobile Learning Podcast using Human Centered Design Method and Gamification for History. J. Inf. Technol. Comput. Sci. 2021, 6, 308–318.

63. Yi, M.; Bao, D.; Mo, Y. Exploring the role of visual design in digital public health safety education. Int. J. Environ. Res. Public Health 2021, 18, 7965.

64. Chiang, V.C.L.; Choi, T.K.S.; Ching, S.S.Y.; Leung, K.L.K. Evaluation of a virtual reality based interactive simulator with haptic feedback for learning NGT placement. J. Probl. Learn. 2017, 4, 25–34.

65. Liu, Z.; Jin, Y.; Ma, M.; Li, J. A Comparison of Immersive and Non-Immersive VR for the Education of Filmmaking. Int. J. Hum.-Comput. Interact. 2022, 39, 2478–2491.

66. Mallam, S.C.; Lundh, M.; MacKinnon, S.N. Evaluating a digital ship design tool prototype: Designers’ perceptions of novel ergonomics software. Appl. Ergon. 2017, 59, 19–26.

67. Santana, R.; Rossi, G.; Rybarczyk, Y.; Méndez, G.G.; Vera, F.; Rodríguez, A.; Mendoza, P. Studying the User Experience of an Educational AR-Based App for Smart Glasses. In Information Systems and Technologies; Rocha, A., Adeli, H., Dzemyda, G., Moreira, F., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 266–275.

68. Zulkifli, A.N.; Mohamed NF, F.; Qasim, M.M.; Bakar, N.A.A. Prototyping and Usability Evaluation of Road Safety Education Courseware for Primary Schools in Malaysia. Int. J. Interact. Mob. Technol. 2021, 15, 32–47.

69. Hamdan, M.N.; Ali, A.Z.M. User Satisfaction of Non-Realistic Three-Dimensional Talking-Head Animation Courseware (3D-NR). Int. J. E-Educ. E-Bus. E-Manag. E-Learn. 2015, 5, 23–30.

70. Saidon, Z.L.; Safian, A.R.; Nasrifan, M.N. Usability Evaluation of a Virtual Reality Interactive Music Appreciation Module (E-Marz) for Secondary School. Int. J. Acad. Res. Progress. Educ. Dev. 2021, 10, 923–937.

71. Benaida, M.; Namoun, A. An Exploratory Study of the Factors Affecting the Perceived Usability of Algerian Educational Websites. Turk. Online J. Educ. Technol. 2018, 17, 1–12.

72. Biery, N.; Bond, W.; Smith, A.B.; LeClair, M.; Foster, E. Using Telemedicine Technology to Assess Physician Outpatient Teaching. Fam. Med. 2015, 47, 807–810.

73. Maragos, K. Web based Adaptive Educational Games-Exploitation in Computer Science Education. Ph.D. Thesis, National and Kapodistrian University of Athens, Athens, Greece, 2013. Available online: https://www.di.uoa.gr/sites/default/files/documents/grad/phdbook2012_compressed.pdf#page=81 (accessed on 2 August 2023).

74. Wu, P.-F.; Fan, K.-Y.; Liao, Y.-T. Developing and assessing the usability of digital manipulative storytelling system for school-age children. In Proceedings of the 2016 3rd International Conference on Systems and Informatics (ICSAI), Shanghai, China, 19–21 November 2016; pp. 465–470.

75. Zapata, A.; Menéndez, V.H.; Prieto, M.E.; Romero, C. Evaluation and selection of group recommendation strategies for collaborative searching of learning objects. Int. J. Hum.-Comput. Stud. 2015, 76, 22–39.

76. Calleros, C.B.G.; García, J.G.; Rangel, Y.N. UvaMate, a serious game for learning mathematics for children with ADHD: Usability evaluation. Rev. Colomb. Comput. 2020, 21, 20–34.

77. Vázquez, S.R.; O’Brien, S.; Fitzpatrick, D. Usability of web-based MT post-editing environments for screen reader users. In Proceedings of the Machine Translation Summit XVI: Commercial MT Users and Translators Track, Nagoya, Japan, 18–22 September 2017; pp. 13–25.

78. Bangor, A.; Kortum, P.; Miller, J. Determining what individual SUS scores mean: Adding an adjective rating scale. J. Usability Stud. 2009, 4, 114–123.

79. Brooke, J. SUS: A retrospective. J. Usability Stud. 2013, 8, 29–40.

80. Sauro, J. Measuring Usability with System Usability Scale (SUS). 2011. Available online: https://measuringu.com/sus (accessed on 2 August 2023).

81. Gehanno, J.F.; Rollin, L.; Darmoni, S. Is the coverage of Google Scholar enough to be used alone for systematic reviews. BMC Med. Inform. Decis. Mak. 2013, 13, 1–5.

82. Vlachogianni, P.; Tselios, N. Investigating the impact of personality traits on perceived usability evaluation of e-learning platforms. Interact. Technol. Smart Educ. 2022, 19, 202–221.

| Internet Platforms (LMS, MOOC, wiki, etc.) | Mobile Applications | Multimedia | Other (Studies Outside the Preceding Categories) |
|---|---|---|---|
| [11,30,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54] | [5,55,56,57,58,59,60,61,62,63] | [64,65,66,67,68,69,70] | [71,72,73,74,75,76,77] |

| Category | N | Mean PSSUQ/CSUQ Score | SD |
|---|---|---|---|
| Internet Platforms (LMS, MOOC, wiki, etc.) | 21 | 73.23 | 7.74 |
| Mobile applications | 12 | 81.53 | 12.61 |
| Multimedia | 8 | 73.89 | 19.69 |
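The second research question asks whether usability differs significantly between technology categories. The sketch below is purely illustrative, since this excerpt does not state which test the authors applied. It computes a classical one-way ANOVA F statistic directly from the N, mean and SD columns of the table above, using only the Python standard library. It assumes that each N is the number of independent evaluations in its category, that each SD is a sample SD, and that population variances are equal. `GroupSummary` and `anova_from_summary` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class GroupSummary:
    name: str
    n: int       # number of evaluations in the category (the table's N column)
    mean: float  # mean PSSUQ/CSUQ score
    sd: float    # sample standard deviation (assumed n - 1 denominator)


def anova_from_summary(groups: list[GroupSummary]) -> tuple[float, int, int]:
    """Classical one-way ANOVA F statistic from per-group summary statistics.

    Returns (F, df_between, df_within). Assumes equal population variances.
    """
    k = len(groups)
    total_n = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares, recovered from each group's sample SD.
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    df_between, df_within = k - 1, total_n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Summary rows copied from the benchmark table.
table = [
    GroupSummary("Internet Platforms", 21, 73.23, 7.74),
    GroupSummary("Mobile applications", 12, 81.53, 12.61),
    GroupSummary("Multimedia", 8, 73.89, 19.69),
]
f_stat, df_b, df_w = anova_from_summary(table)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

The SDs differ considerably (7.74 versus 19.69), so Welch's ANOVA, or a rank-based test such as Kruskal-Wallis on the underlying study scores, may be the safer choice. Converting F to a p-value requires the F distribution, for example `scipy.stats.f.sf(f_stat, df_b, df_w)`. That step is omitted here to keep the sketch dependency-free.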

| | Age of Participants | Learning Object | Educational Stage | Participant Type | Participant Count |
|---|---|---|---|---|---|
| PSSUQ/CSUQ score | p = 0.266 | p = 0.174 | p = 0.01 ** | p = 0.005 ** | p = 0.122 |

| Category | N | Primary Education | Secondary Education | Higher Education |
|---|---|---|---|---|
| Internet Platforms (LMS, MOOC, wiki, etc.) | 13 | 15.4% | 84.6% | |
| Mobile applications | 9 | 44.4% | 55.6% | |
| Multimedia | 7 | 14.3% | 14.3% | 71.4% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vlachogianni, P.; Tselios, N. Perceived Usability Evaluation of Educational Technology Using the Post-Study System Usability Questionnaire (PSSUQ): A Systematic Review. Sustainability 2023, 15, 12954. https://doi.org/10.3390/su151712954
