Digital Competence Development in Public Administration Higher Education

Abstract

1. Introduction

Theoretical Background and Literature Review

The 2006 Recommendation on Key Competences for Lifelong Learning sets out eight key competences [10]:

- (1) Communication in the native language;

- (2) Communication in foreign languages;

- (3) Mathematical competence and basic competences in science and technology;

- (4) Digital competence;

- (5) Learning to learn;

- (6) Social and civic competences;

- (7) Sense of initiative and entrepreneurship;

- (8) Cultural awareness and expression.

- (1) Communication in the native language is the ability to express and interpret concepts, thoughts, feelings, facts, and opinions in both oral and written form (listening, speaking, reading, and writing), and to interact linguistically in an appropriate and creative way in a full range of social and cultural contexts: in education and training, work, home, and leisure [10].

- (2) Communication in foreign languages broadly shares the main skill dimensions of communication in the native language: it is based on the ability to understand, express, and interpret concepts, thoughts, feelings, facts, and opinions in both oral and written form (listening, speaking, reading, and writing) in an appropriate range of social and cultural contexts (in education and training, work, home, and leisure). Communication in foreign languages also calls for skills such as mediation and intercultural understanding. An individual’s level of proficiency will vary among the four dimensions (listening, speaking, reading, and writing) and among the different languages, and according to that individual’s social and cultural background, environment, needs, and/or interests [10].

- (3) Mathematical competence is the ability to develop and apply mathematical thinking to solve a range of problems in everyday situations. Building on a sound mastery of numeracy, the emphasis is on process, activity, and knowledge. Mathematical competence involves, to different degrees, the ability and willingness to use mathematical modes of thought (logical and spatial thinking) and presentation (formulas, models, figures, graphs, and charts). Competence in science refers to the ability and willingness to use the body of knowledge and methodology employed to explain the natural world in order to identify questions and draw evidence-based conclusions. Competence in technology is viewed as the application of knowledge and methodology in response to perceived human intentions or needs. Competence in science and technology involves an understanding of the changes caused by human activity and responsibility as an individual citizen [10].

- (4) Digital competence involves the confident and critical use of Information Society Technology (IST) for work, leisure, and communication. It is underpinned by basic skills in ICT: the use of computers to retrieve, assess, store, produce, present, and exchange information, and to communicate and participate in collaborative networks via the Internet [10].

- (5) ‘Learning to learn’ is the ability to pursue and persist in learning, and to organize one’s own learning, including through effective management of time and information, both individually and in groups. This competence includes awareness of one’s learning process and needs, identifying available opportunities, and the ability to overcome obstacles to learn successfully. It means gaining, processing, and assimilating new knowledge and skills, as well as seeking and making use of guidance. Learning to learn engages learners to build on prior learning and life experiences to use and apply knowledge and skills in a variety of contexts: at home, at work, in education, and in training. Motivation and confidence are crucial to an individual’s competence [10].

- (7) Sense of initiative and entrepreneurship refers to an individual’s ability to turn ideas into action. It includes creativity, innovation, and risk-taking, as well as the ability to plan and manage projects to achieve objectives. This helps individuals, in both their personal and professional lives, to be more aware of the context of their work and better able to seize opportunities. In addition, it is a foundation for the more specific skills and knowledge needed by those establishing or contributing to social or commercial activity. It should include awareness of ethical values and promote good governance [10].

- (8) Cultural knowledge includes an awareness of local, national, and European cultural heritage and their place in the world. It covers basic knowledge of major cultural works, including popular contemporary culture. It is essential to understand the cultural and linguistic diversity in Europe and other regions of the world, the need to preserve it, and the importance of aesthetic factors in daily life [10].

2. Materials and Methods

The DigComp framework organizes digital competence into five competence areas:

- (1) Information and data literacy;

- (2) Communication and collaboration;

- (3) Digital content creation;

- (4) Safety;

- (5) Problem-solving.
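As an illustration only, a self-assessment questionnaire organized by the five competence areas listed above could be summarized per area as follows. This is a hypothetical sketch, not the DigCompSat scoring procedure or the method used in this study: the function name `area_means`, the mapping of item scores to areas, and the numeric scale are all assumptions.

```python
from statistics import fmean

# The five DigComp competence areas, in the order listed above.
DIGCOMP_AREAS = (
    "Information and data literacy",
    "Communication and collaboration",
    "Digital content creation",
    "Safety",
    "Problem-solving",
)


def area_means(responses: dict[str, list[float]]) -> dict[str, float]:
    """Average one respondent's item scores within each competence area.

    `responses` maps an area name to the scores given to that area's items.
    The numeric scale is an assumption of this sketch; any scale works as
    long as it is used consistently.
    """
    unknown = set(responses) - set(DIGCOMP_AREAS)
    if unknown:
        raise ValueError(f"unknown competence area(s): {sorted(unknown)}")
    # Iterate in framework order; areas with no answered items are omitted
    # rather than scored as zero.
    return {
        area: fmean(responses[area])
        for area in DIGCOMP_AREAS
        if responses.get(area)
    }


if __name__ == "__main__":
    # Invented toy input for illustration only.
    print(area_means({"Safety": [3, 4, 2], "Digital content creation": [1, 2]}))
    # {'Digital content creation': 1.5, 'Safety': 3.0}
```

Omitting unanswered areas, instead of scoring them as zero, keeps a skipped section from being read as a low self-assessment.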

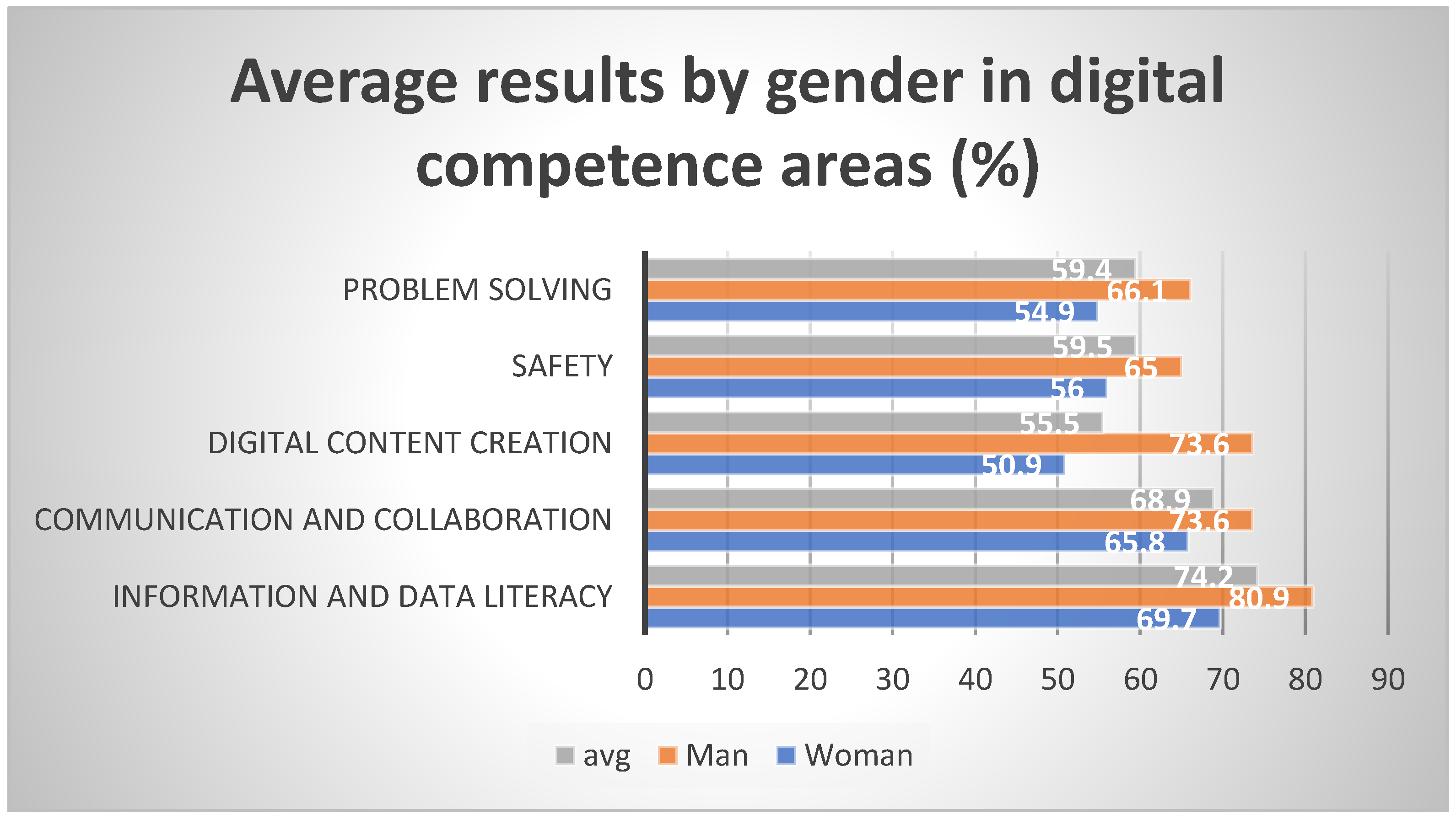

3. Results

4. Discussion

- There is very little specialized literature on DigCompSat testing in higher education. Our study aims to help fill this gap, specifically in the context of public administration education.

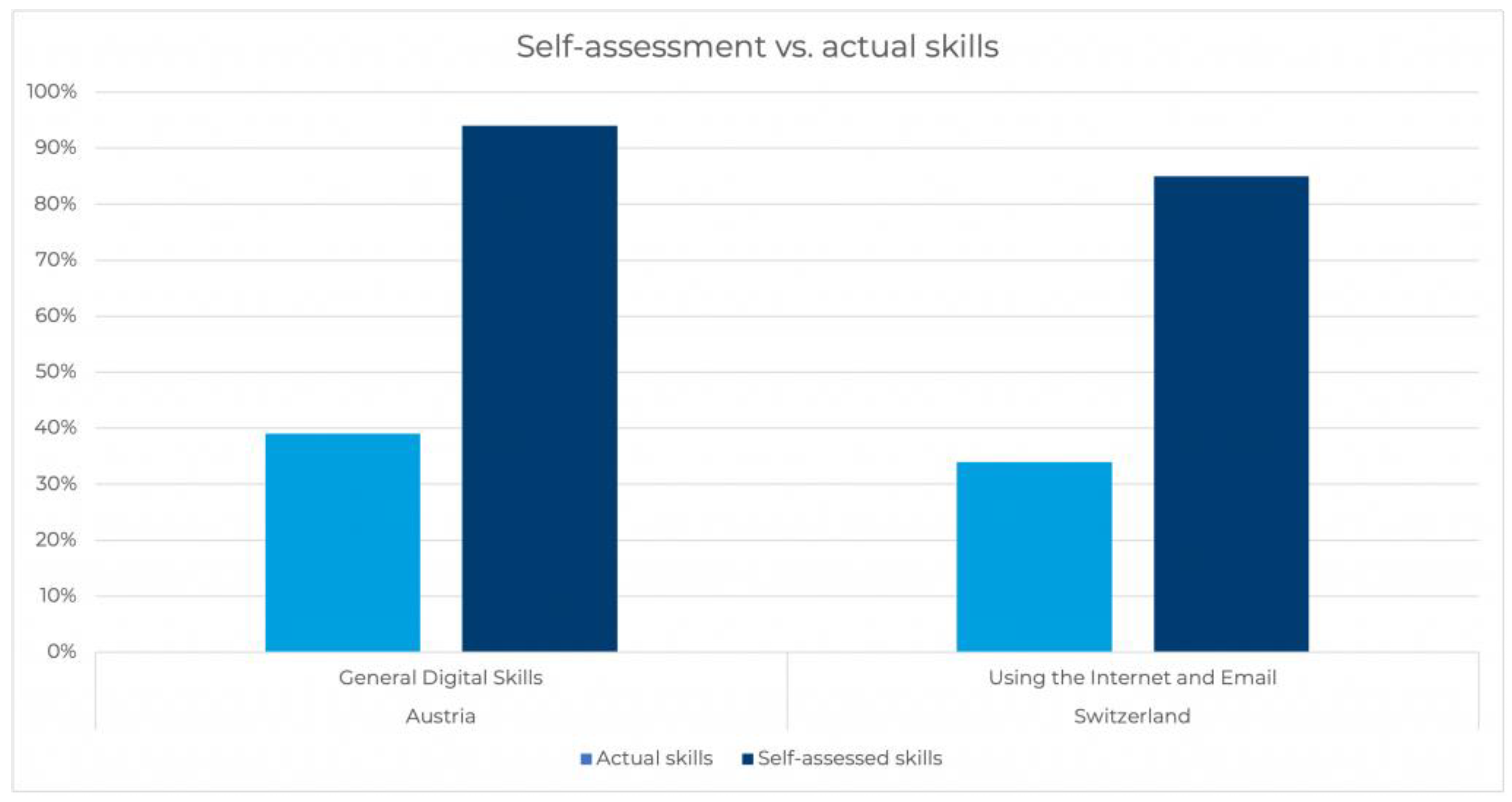

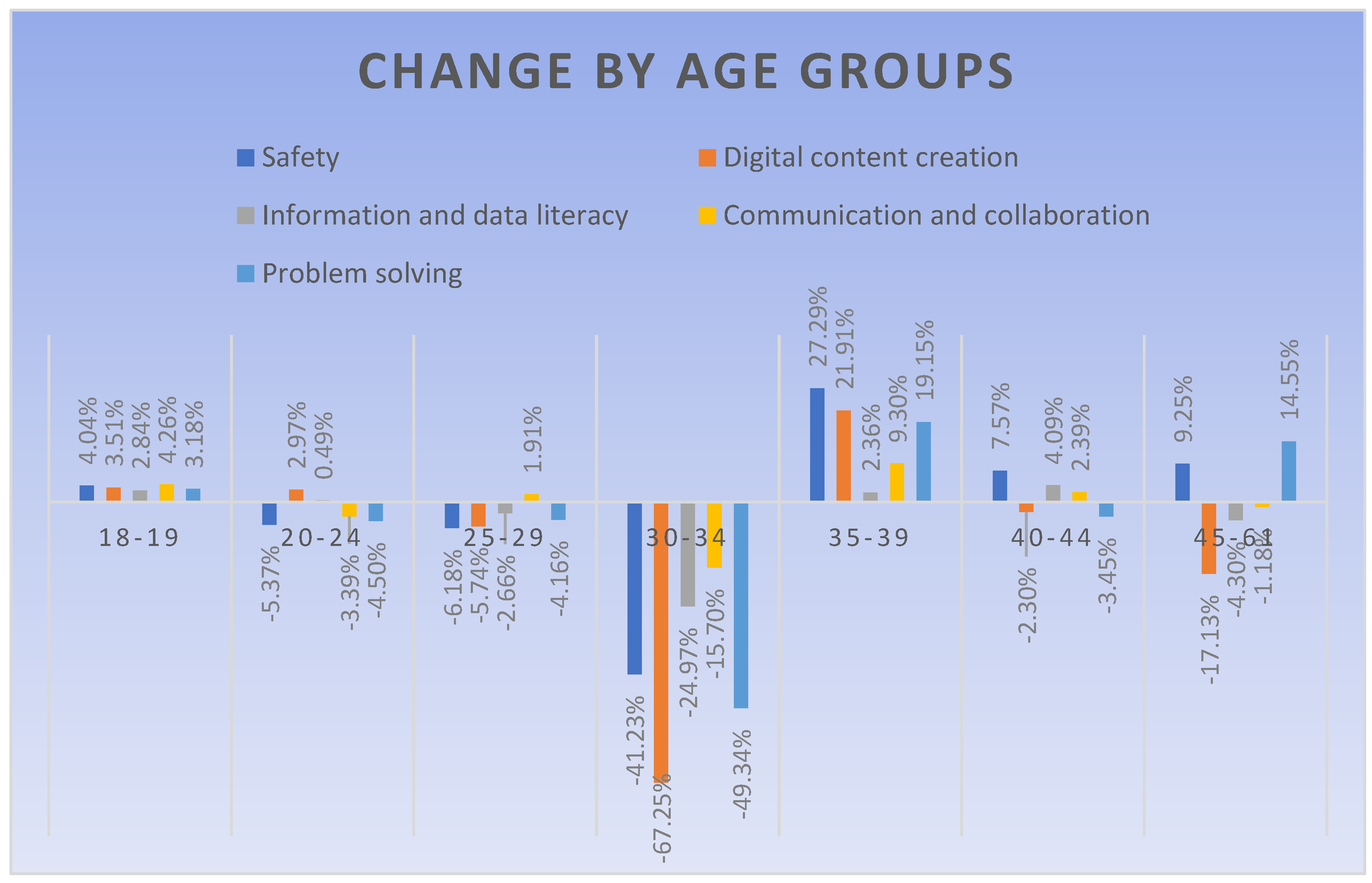

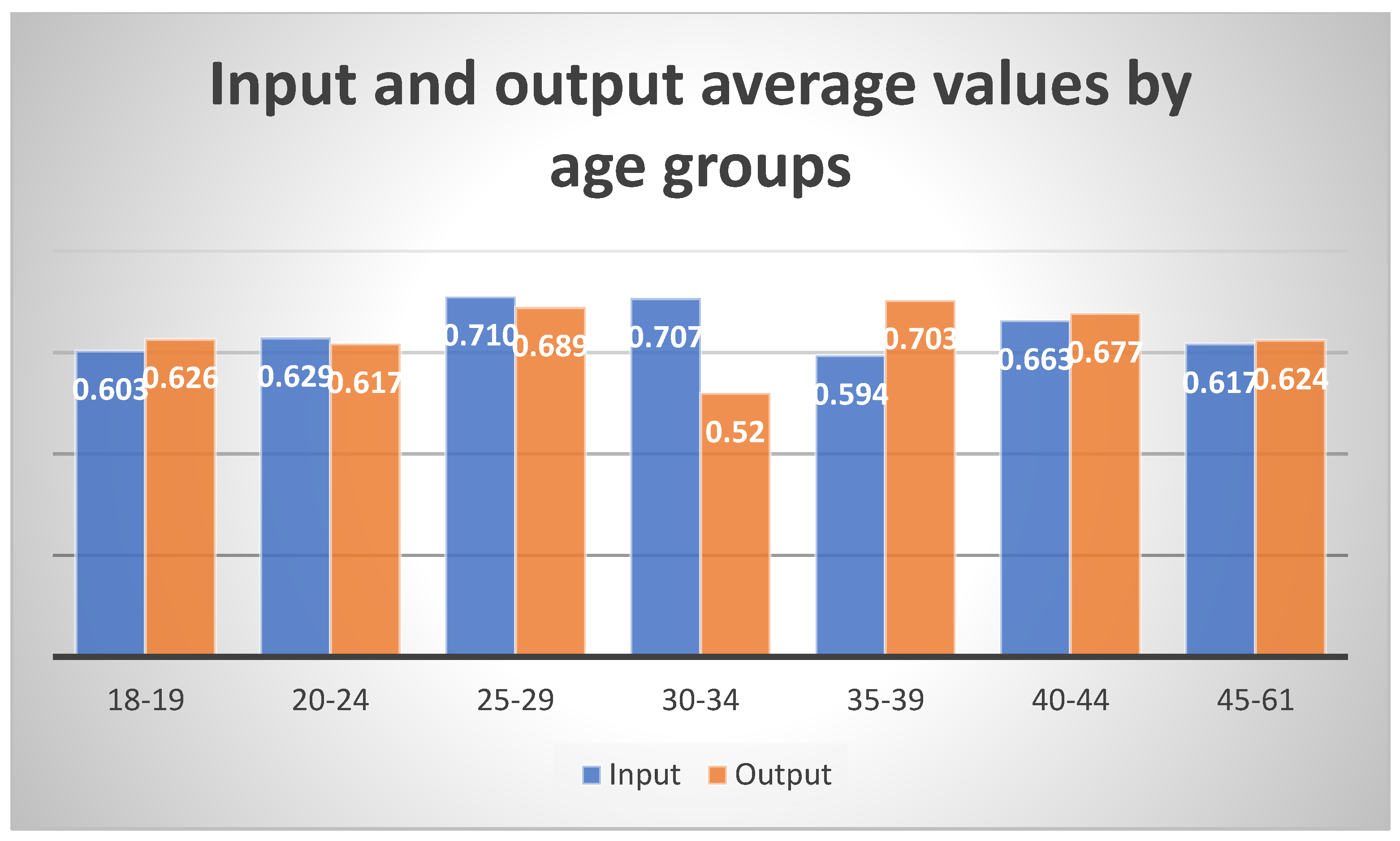

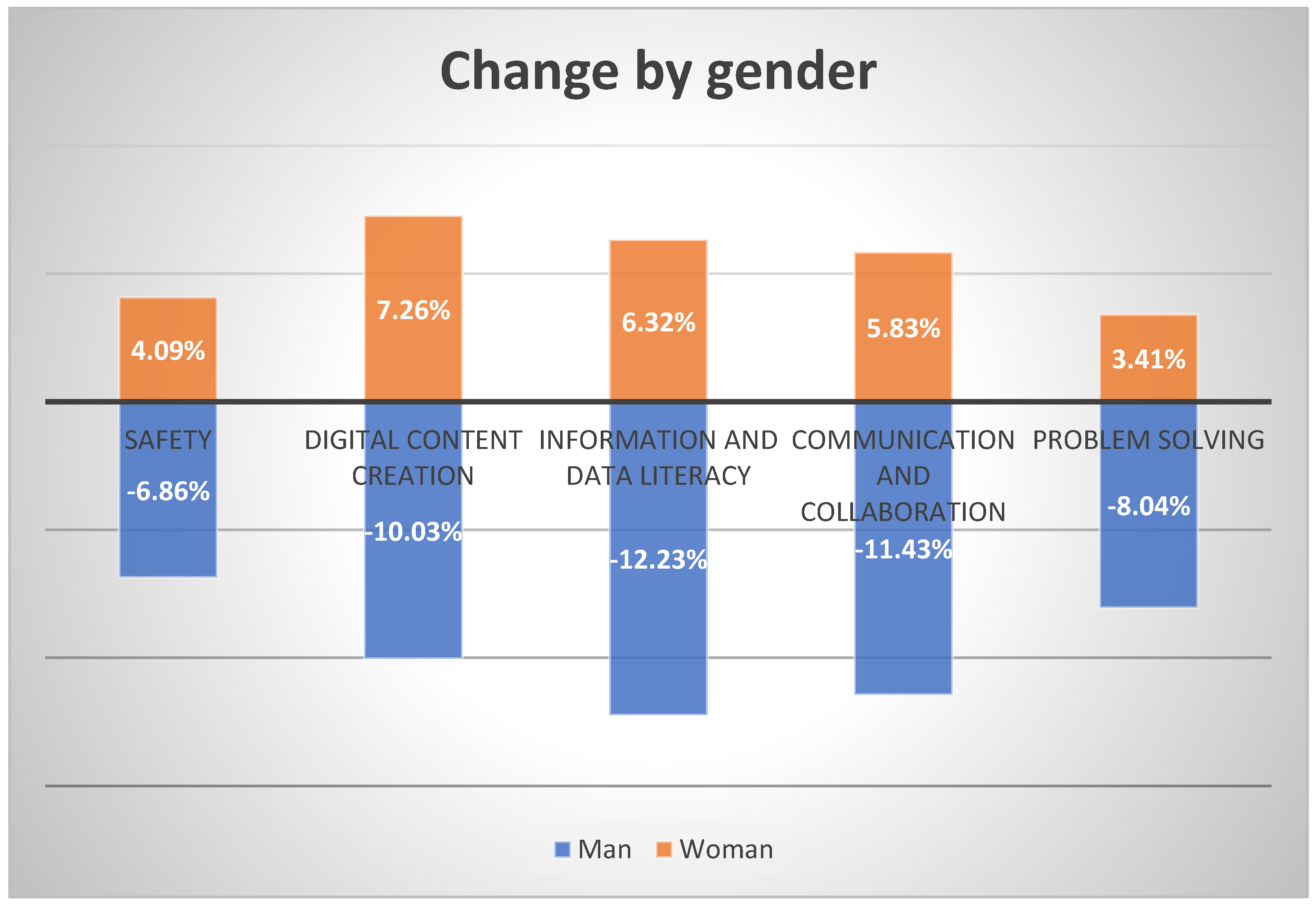

- During the research, we observed that self-assessed values can shift, and that these shifts vary by age group, area, and range of competence.

- We also found that these digital competencies can be developed, and found some evidence that limited improvements can be achieved even within such a short period.

- Our findings also showed that independent survey instruments, used alongside self-assessment, can reveal actual competency levels and shifts.

- We also concluded that it would be better to track a target group’s progress over a longer period, with more frequent measurements.

- Finally, the pilot version of this method will form the basis of the local competitiveness test to be implemented as part of a large-scale national survey.
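The two comparisons behind these observations, shifts in self-assessment by age group and competence area, and self-assessment set against an independent test, could be computed along the following lines. This is a hypothetical sketch and not the procedure used in this study: the record layout, the age-group labels, and the names `mean_shifts` and `perception_gap` are illustrative assumptions.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical record layout: one row per respondent and competence area,
# holding the self-assessed score before and after the course.
Record = tuple[str, str, float, float]  # (age_group, area, before, after)


def mean_shifts(records: list[Record]) -> dict[tuple[str, str], float]:
    """Mean within-person shift (after - before) per (age group, area)."""
    diffs: defaultdict[tuple[str, str], list[float]] = defaultdict(list)
    for age_group, area, before, after in records:
        diffs[(age_group, area)].append(after - before)
    return {key: fmean(values) for key, values in diffs.items()}


def perception_gap(self_scores: list[float], test_scores: list[float]) -> float:
    """Mean (self-assessed - independently tested) score over paired respondents.

    A positive value means respondents rate themselves above their tested
    level. Both lists must use the same scale and the same respondent order.
    """
    if not self_scores or len(self_scores) != len(test_scores):
        raise ValueError("need two non-empty score lists of equal length")
    return fmean(s - t for s, t in zip(self_scores, test_scores))


if __name__ == "__main__":
    # Invented toy values for illustration only; not data from this study.
    toy = [
        ("18-25", "Safety", 2.0, 3.0),
        ("18-25", "Safety", 2.5, 3.0),
        ("26-40", "Safety", 3.0, 2.5),
    ]
    print(mean_shifts(toy))  # {('18-25', 'Safety'): 0.75, ('26-40', 'Safety'): -0.5}
    print(perception_gap([3.0, 4.0], [2.0, 3.0]))  # 1.0
```

Differencing within each respondent before averaging assumes that the before and after questionnaires can be linked per person. If they cannot, only group means can be compared, which says less about individual development.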

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Parijkova, L. Digital Technologies in the Daily Life of the Alfa Generation. ResearchGate, August 2020. Available online: https://www.researchgate.net/publication/348245098_DIGITAL_TECHNOLOGIES_IN_THE_DAILY_LIFE_OF_THE_ALFA_GENERATION (accessed on 10 July 2023).

- Budai, B.B. Települési Önkormányzatok az Információs Tengerben (Local Governments in the Sea of Information)—Pro Publico Bono—Magyar Közigazgatás, 2017/3, 196–215. Based on the Project: Önkormányzati Fejlesztések Figyelemmel Kísérése II. (Monitoring Local Government Developments), KÖFOP-2.3.4-VEKOP-15. 2017. Available online: https://folyoirat.ludovika.hu/index.php/ppbmk/article/view/1823 (accessed on 10 July 2023).

- Hrebenyk, I. Formation of Digital Competence of Leaders of Educational Institutions. ResearchGate, January 2019. Open Educational E-Environment of Modern Universities. Available online: https://www.researchgate.net/publication/332983239_FORMATION_OF_DIGITAL_COMPETENCE_OF_LEADERS_OF_EDUCATIONAL_INSTITUTIONS (accessed on 10 July 2023).

- Budai, B.B. A Blockchain Hype és a Közigazgatási Realitás (Blockchain Hype and Public Administration Reality). E-GOV Hírlevél, Közigazgatás és Informatika. Recent Challenges in Governing Public Goods & Services. 2018. Available online: https://hirlevel.egov.hu/2018/04/08/blokklanc-es-kozigazgatas-tulzasok-es-realitas/ (accessed on 10 July 2023).

- Hawkins, R.; Paris, A.E. Computer Literacy and Computer Use Among College Students: Differences in Black and White. J. Negro Educ. 1997, 66, 147–158.

- Schneckenberg, D.; Wildt, J. Understanding the Concept of eCompetence for Academic Staff. In The Challenge of eCompetence in Academic Staff Development; MacLabhrainn, I., McDonald Legg, C., Schneckenberg, D., Wildt, J., Eds.; CELT/eCompInt Publications: Galway, Ireland, 2006.

- EFOP 3.2.15_VEKOP-17-2017-00001 Project on “A Köznevelés Keretrendszeréhez Kapcsolódó Mérési-Értékelési és Digitális Fejlesztések, Innovatív Oktatásszervezési Eljárások Kialakítása, Megújítása” (Development and Renewal of Measurement-Evaluation and Digital Developments, Innovative Educational Organization Procedures Related to the Framework of Public Education). Oktatás 2030 Tanulástudományi Kutatócsoport. Available online: https://www.oktatas2030.hu/a-boldogulas-kulcsa-digitalis-kompetenciak/ (accessed on 10 July 2023).

- Novo Melo, P.; Machado, C. (Eds.) Management and Technological Challenges in the Digital Age; CRC Press: Boca Raton, FL, USA, 2018; ISBN 9781498787604. Available online: https://www.routledge.com/Management-and-Technological-Challenges-in-the-Digital-Age/Melo-Machado/p/book/9781498787604 (accessed on 10 July 2023).

- Gallardo-Echenique, E.E.; De Oliveira, J.M.; Marqués-Molias, L.; Esteve-Mon, F.; Wang, Y.; Baker, R. Digital Competence in the Knowledge Society. MERLOT J. Online Learn. Teach. 2015, 11, 1–17.

- European Parliament and Council. Recommendation of the European Parliament and of the Council of 18 December 2006 on Key Competences for Lifelong Learning. Off. J. Eur. Union 2006, 394, 10–18. Available online: https://eur-lex.europa.eu/eli/reco/2006/962/oj (accessed on 10 July 2023).

- Nuolijärvi, P.; Stickel, G. (Eds.) Language Use in Public Administration. Theory and Practice in the European States. European Federation of National Institutions for Language. Contributions to the EFNIL Conference in Helsinki 2015; Research Institute for Linguistics, Hungarian Academy of Sciences: Budapest, Hungary, 2015; ISBN 978-963-9074-65-1. Available online: http://www.efnil.org/conferences/13th-annual-conference-helsinki/proceedings/EFNIL-Helsinki-Book-Final.pdf (accessed on 10 July 2023).

- Zouhar, J. On a Small Mother Tongue as a Barrier to Intercultural Policies: The Czech Language. Exedra Rev. Científica 2011, 25–34.

- Guardian. The Student Guide. Studying Public Administration without Mathematics. 2021. Available online: https://www.zambianguardian.com/studying-public-administration-without-mathematics/#google_vignette (accessed on 10 July 2023).

- Kausch-Zongo, J.; Schenk, B. General technological competency and usage in public administration education: An evaluation study considering on-the-job trainings and home studies. Smart Cities Reg. Dev. J. 2022, 6, 55–65.

- Wodecka-Hyjek, A.; Kafel, T.; Kusa, R. Managing digital competences in public administration. In People in Organization. Selected Challenges for Management; Skalna, I., Kusa, R., Eds.; AGH University of Science and Technology Press: Krakow, Poland, 2021.

- Mele, V. SDA Bocconi School of Management—Theory to Practice. Methods for Studying Public Administration. 2020. Available online: https://www.sdabocconi.it/en/sda-bocconi-insight/theory-to-practice/government/methods-for-studying-public-administration?gclid=EAIaIQobChMIzcOTzt3W_QIVpo1oCR1bugkXEAAYAiAAEgJR5vD_BwE&gclsrc=aw.ds (accessed on 10 July 2023).

- Grimm, H.M.; Bock, C.L. Entrepreneurship in public administration and public policy programs in Germany and the United States. Teach. Public Adm. 2022, 40, 322–353.

- Stare, J.; Klun, M. Required competencies in public administration study programs. Transylv. Rev. Adm. Sci. 2018, 55, 80–97.

- Falloon, G. From digital literacy to digital competence: The teacher digital competency (TDC) framework. Educ. Technol. Res. Dev. 2020, 68, 2449–2472.

- Ilomäki, L.; Paavola, S.; Lakkala, M.; Kantosalo, A. Digital competence – An emergent boundary concept for policy and educational research. Educ. Inf. Technol. 2016, 21, 655–679.

- Pérez-Escoda, A.; Fernández-Villavicencio, N.G. Digital competence in use: From DigComp 1 to DigComp 2. In Proceedings of the Fourth International Conference on Technological Ecosystems for Enhancing Multiculturality, Salamanca, Spain, 2–4 November 2016; pp. 619–624.

- Fraile, M.N.; Peñalva-Vélez, O.A.; Lacambra, A.M.M. Development of Digital Competence in Secondary Education Teachers’ Training. Educ. Sci. 2018, 8, 104.

- EU Science Hub. DigComp Framework. 2018. Available online: https://joint-research-centre.ec.europa.eu/digcomp/digcomp-framework_en (accessed on 10 July 2023).

- Carretero, S.; Vuorikari, R.; Punie, Y. DigComp 2.1: The Digital Competence Framework for Citizens with Eight Proficiency Levels and Examples of Use; EUR 28558 EN; Publications Office of the European Union: Luxembourg, 2017.

- ICDL. Perception & Reality: Measuring Digital Skills Gaps in Europe, India, and Singapore; ICDL, 2018. Available online: https://www.icdleurope.org/policy-and-publications/perception-reality-measuring-digital-skills-gaps-in-europe-india-and-singapore/#f4 (accessed on 10 July 2023).

- Clifford, I.; Kluzer, S.; Troia, S.; Jakobsone, M.; Zandbergs, U.; Vuorikari, R.; Punie, Y.; Castaño Muñoz, J.; Centeno Mediavilla, I.C.; O’Keeffe, W.; et al. (Eds.) DigCompSat: A Self-Reflection Tool for the European Digital Competence Framework for Citizens; Publications Office of the European Union: Luxembourg, 2020; ISBN 978-92-76-27592-3.

- Vuorikari, R.; Kluzer, S.; Punie, Y. DigComp 2.2: The Digital Competence Framework for Citizens – With New Examples of Knowledge, Skills and Attitudes; EUR 31006 EN; Publications Office of the European Union: Luxembourg, 2022; ISBN 978-92-76-48882-8.

- Helsper, E.J. A Corresponding Fields Model for the Links Between Social and Digital Exclusion. Commun. Theory 2012, 22, 403–426.

- van Deursen, A.J.; Helsper, E.J.; Eynon, R. Development and validation of the Internet Skills Scale (ISS). Inf. Commun. Soc. 2016, 19, 804–823.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Budai, B.B.; Csuhai, S.; Tózsa, I. Digital Competence Development in Public Administration Higher Education. Sustainability 2023, 15, 12462. https://doi.org/10.3390/su151612462


Budai, Balázs Benjámin, Sándor Csuhai, and István Tózsa. 2023. "Digital Competence Development in Public Administration Higher Education" Sustainability 15, no. 16: 12462. https://doi.org/10.3390/su151612462
