Temperature Prediction Based on STOA-SVR Rolling Adaptive Optimization Model

Abstract

1. Introduction

- (1)

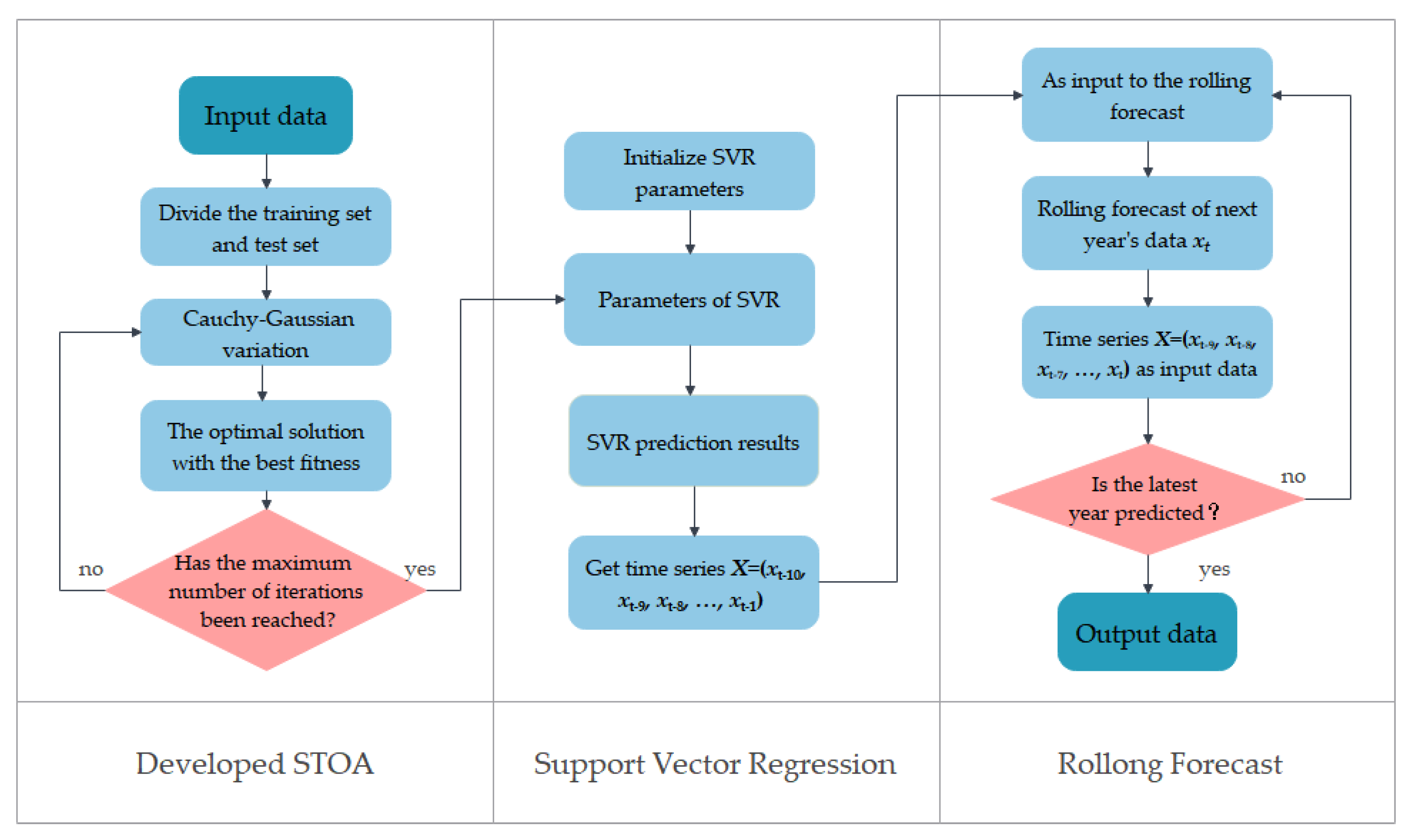

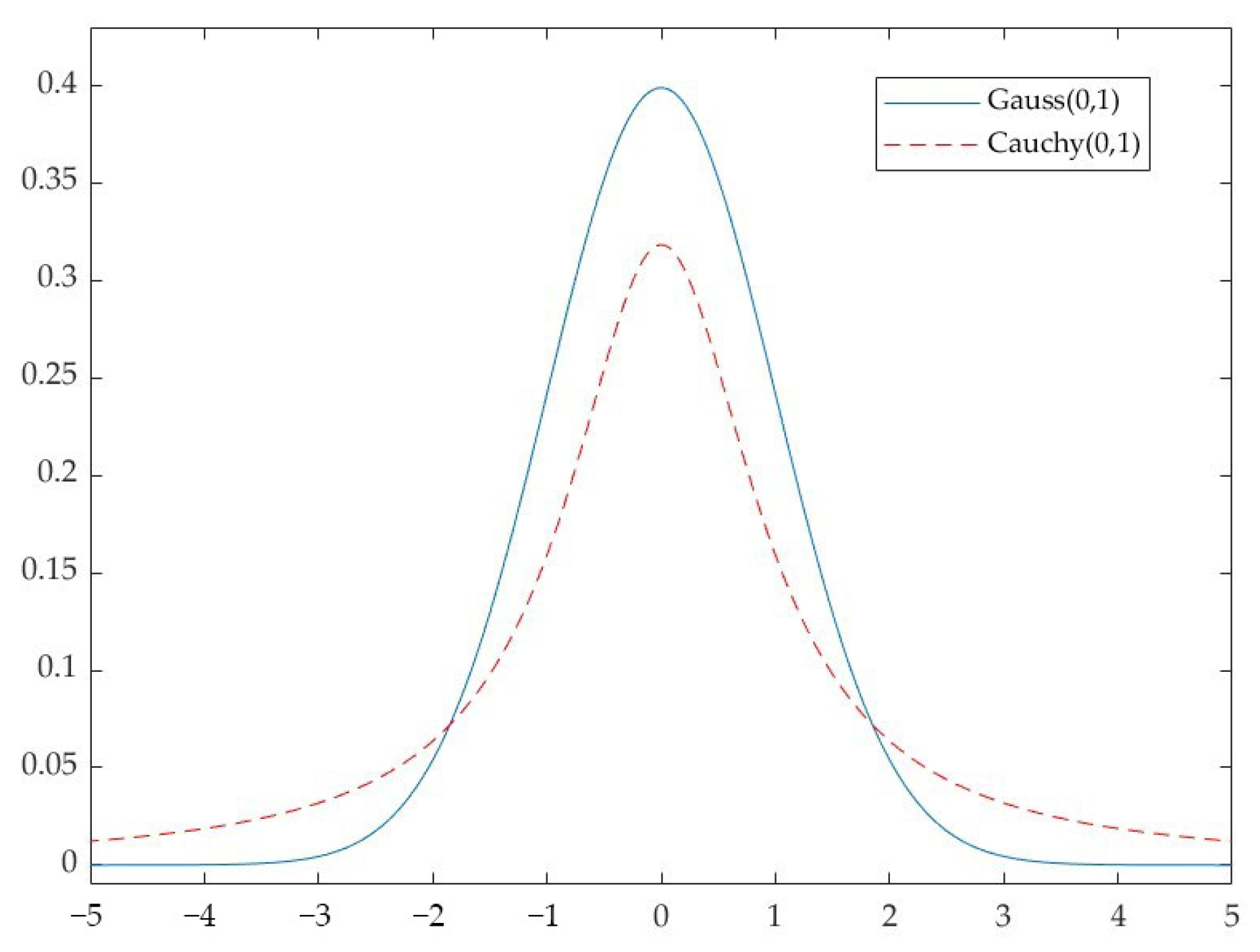

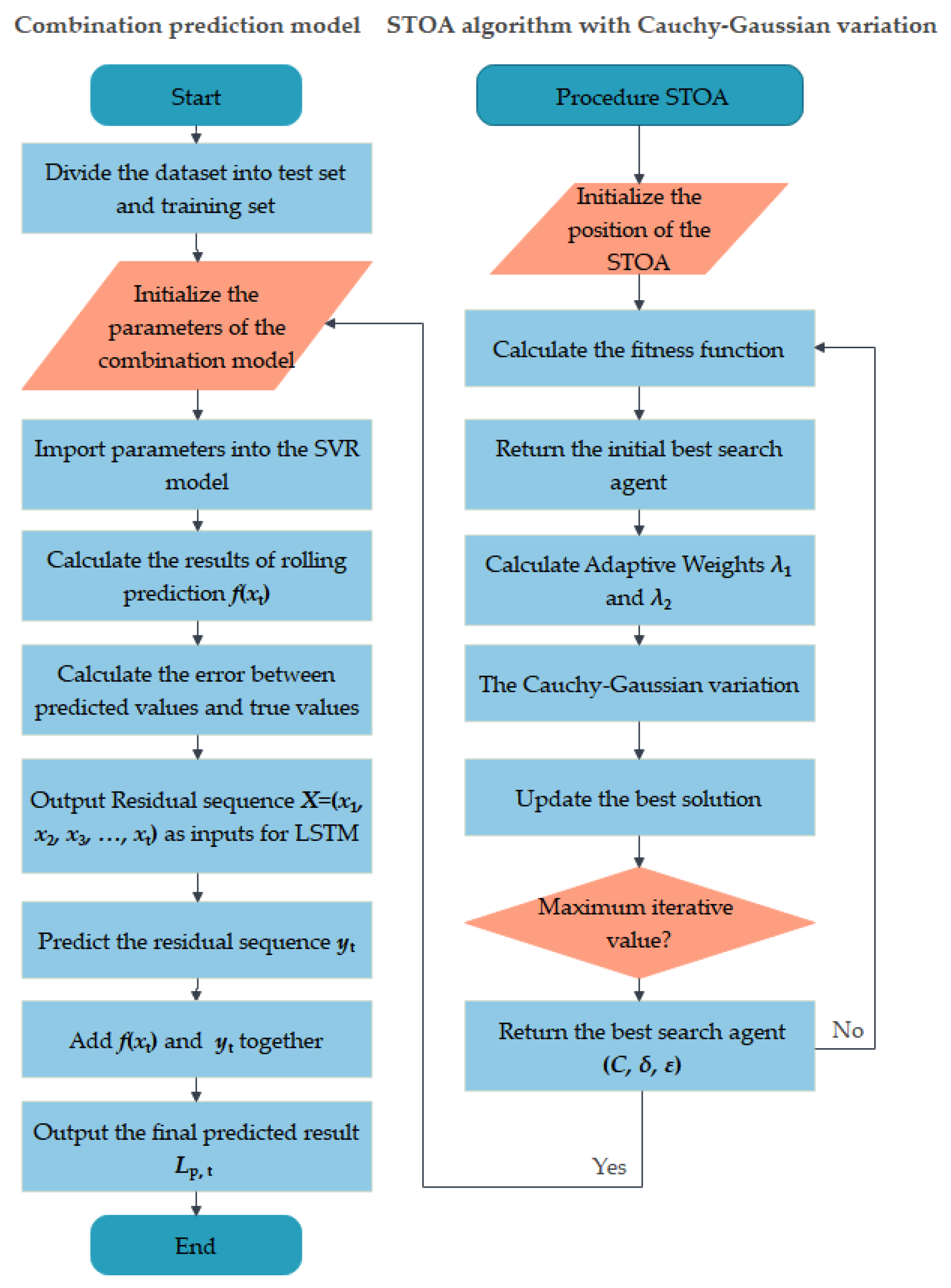

- The key parameters of the SVR model are optimized by using the sooty tern algorithm. Considering the problem that the sooty tern algorithm tends to fall into the local optimum, the model introduces the adaptive Gauss–Cauchy mutation operator to effectively increase the population diversity and search space.

- (2)

- This paper integrates the real-time updating and self-regulation principle based on rolling prediction into the SVR model, and it uses the updated data by eliminating the earliest data series so as to effectively improve the problem of accuracy decline caused by the long prediction cycle.

- (3)

- The SVR and long short-term memory (LSTM) models are applied to the research of temperature prediction. LSTM models can solve the problem that the traditional neural network model is unable to process the long time series to predict the residual error of SVR, so as to further improve the prediction accuracy.
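The rolling idea in contribution (2) can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `fit_linear_trend` is a hypothetical stand-in for the SVR fit (a least-squares line, chosen only to keep the sketch dependency-free), and the function names and the choice to feed predictions back into the window are assumptions. When real observations become available, they would be appended instead of the predictions.

```python
from typing import Callable, List, Sequence


def fit_linear_trend(window: Sequence[float]) -> Callable[[int], float]:
    """Stand-in regressor (NOT an SVR): least-squares line through the window.

    Returns a function that maps a step offset past the end of the window
    to a predicted value.
    """
    n = len(window)
    mean_x = (n - 1) / 2
    mean_y = sum(window) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(window))
    slope = sxy / sxx if sxx else 0.0
    intercept = mean_y - slope * mean_x
    return lambda step: intercept + slope * (n - 1 + step)


def rolling_forecast(
    history: Sequence[float],
    window_size: int,
    horizon: int,
    fit: Callable[[Sequence[float]], Callable[[int], float]] = fit_linear_trend,
) -> List[float]:
    """Predict `horizon` steps, refitting on a sliding window at every step."""
    window = list(history[-window_size:])
    predictions = []
    for _ in range(horizon):
        model = fit(window)        # refit on the current window
        next_value = model(1)      # one-step-ahead prediction
        predictions.append(next_value)
        window.append(next_value)  # add the newest value ...
        window.pop(0)              # ... and eliminate the earliest one
    return predictions


if __name__ == "__main__":
    print(rolling_forecast([0.0, 0.1, 0.2, 0.3, 0.4], window_size=4, horizon=3))
```

Any regressor with the same `fit` signature, such as an SVR with tuned (C, δ, ε), could be passed in place of the stand-in.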

2. Related Works

2.1. Predictive Model Based on a Single Method

2.2. Predictive Model Based on a Combination of Different Methods

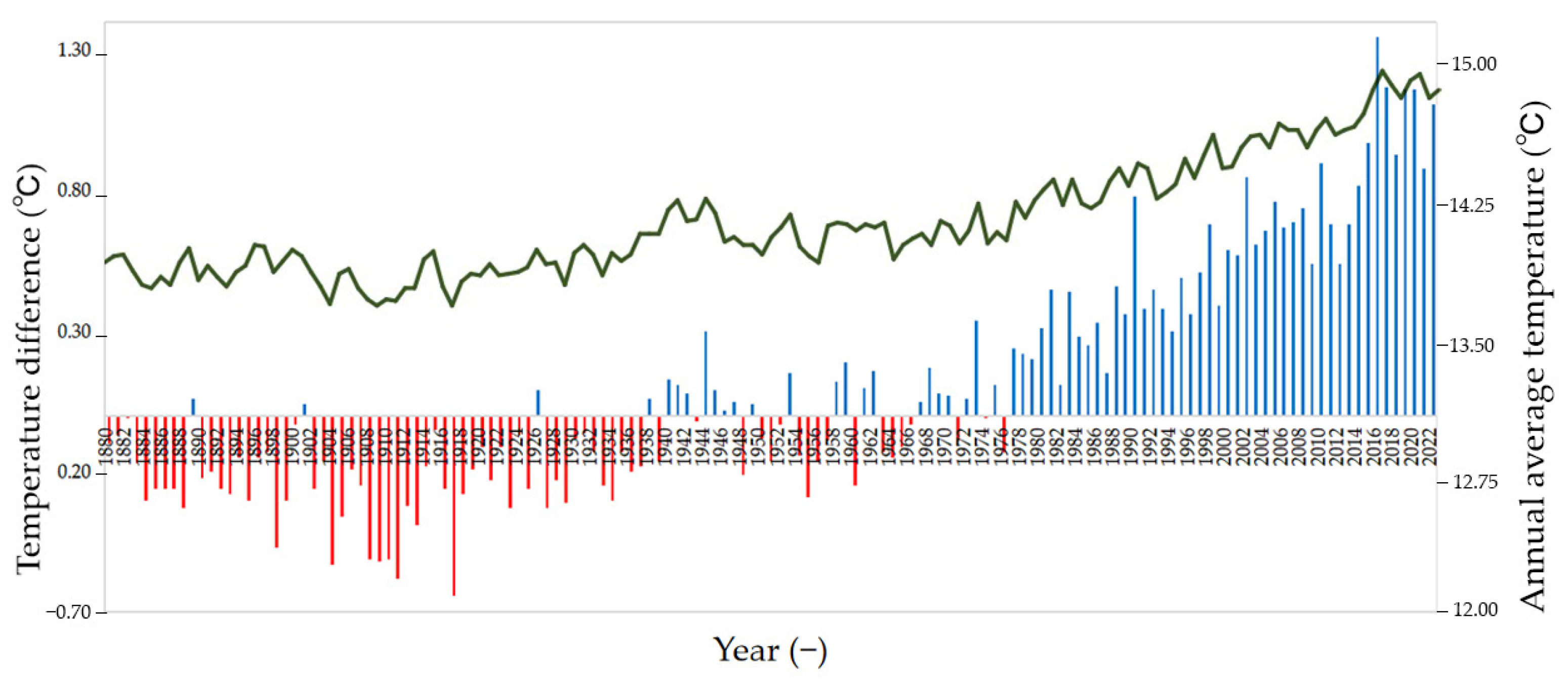

3. Data Preprocessing

4. Methodology

4.1. SVR with Fusion Rolling Prediction

4.1.1. Introduction of the SVR

4.1.2. SVM Regression with Rolling Prediction

4.2. Improved STOA Optimization Algorithm

4.2.1. Traditional STOA Optimization Algorithm

4.2.2. Improving the STOA Algorithm

4.3. STOA-SVR Rolling Temperature Prediction Based on Adaptive Optimization

5. Results

5.1. SVR Parameter Solution Based on Enhanced STOA

| Algorithm 1: SVR parameter solution based on improved STOA |
|---|
| Input: Data set D, population size N, maximum number of iterations Maxiterations, maximum C_max and minimum C_min of the penalty factor, maximum δ_max and minimum δ_min of the width parameter, and maximum ε_max and minimum ε_min of the insensitive loss function. |
| Output: The best parameter (C, δ, ε)_best |
| 1: procedure STOA |
| 2: Initialize the parameters γ and β |
| 3: Calculate the fitness of each search agent |
| 4: (C, δ, ε)_best ← the initial best search agent |
| 5: Calculate adaptive weights λ1 and λ2 |
| 6: Update the positions by using Equation (14) |
| 7: (C, δ, ε)_best ← the best search agent after perturbation |
| 8: while (k < Maxiterations) do |
| 9: for each search agent do |
| 10: Update the positions of each agent by using Equation (12) |
| 11: end for |
| 12: Update the parameters γ and β |
| 13: Calculate the fitness value of each search agent |
| 14: Update (C, δ, ε)_best if there is a better solution than previous optimal solution |
| 15: k ← k + 1 |
| 16: end while |
| 17: return (C, δ, ε)_best |
| 18: end procedure |
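Steps 5 to 7 of Algorithm 1 perturb the current best agent with adaptive weights λ1 and λ2. The sketch below shows one common form of a Cauchy–Gaussian mutation and is offered only as an illustration. The weight schedule (λ1 decaying and λ2 growing with the iteration count), the multiplicative update, the clipping to the parameter bounds, and the greedy acceptance are all assumptions, not a reproduction of the paper's Equation (14). The function name and the toy fitness are hypothetical; fitness is assumed to be an error to minimize.

```python
import math
import random


def cauchy_gauss_perturb(best, bounds, k, max_iterations, fitness, rng=random):
    """Mutate the best agent and keep the mutant only if it is fitter.

    best           -- current best parameters, e.g. (C, delta, epsilon)
    bounds         -- [(min, max), ...], one pair per parameter
    k              -- current iteration, 1 <= k <= max_iterations
    fitness        -- callable returning an error to minimize (assumption)
    """
    # Assumed schedule: heavy-tailed Cauchy jumps dominate early (exploration),
    # Gaussian steps dominate late (local refinement).
    lam1 = 1.0 - (k / max_iterations) ** 2
    lam2 = (k / max_iterations) ** 2

    candidate = []
    for value, (low, high) in zip(best, bounds):
        cauchy = math.tan(math.pi * (rng.random() - 0.5))  # standard Cauchy sample
        gauss = rng.gauss(0.0, 1.0)                        # standard normal sample
        mutated = value * (1.0 + lam1 * cauchy + lam2 * gauss)
        candidate.append(min(max(mutated, low), high))     # clip to the search box
    candidate = tuple(candidate)

    # Greedy acceptance: never replace the best agent with a worse one.
    return candidate if fitness(candidate) < fitness(best) else tuple(best)


if __name__ == "__main__":
    # Toy fitness: squared distance to an arbitrary target (illustration only).
    target = (10.0, 1.0, 0.1)
    toy_fitness = lambda p: sum((v - t) ** 2 for v, t in zip(p, target))
    bounds = [(0.1, 100.0), (0.01, 10.0), (0.001, 1.0)]
    best = (50.0, 5.0, 0.5)
    rng = random.Random(0)
    for k in range(1, 51):
        best = cauchy_gauss_perturb(best, bounds, k, 50, toy_fitness, rng)
    print(best, toy_fitness(best))
```

In a full search loop, `fitness` would typically train an SVR with the candidate (C, δ, ε) and return a validation error.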

5.2. Results of the SVR Rolling Forecast

6. Discussion

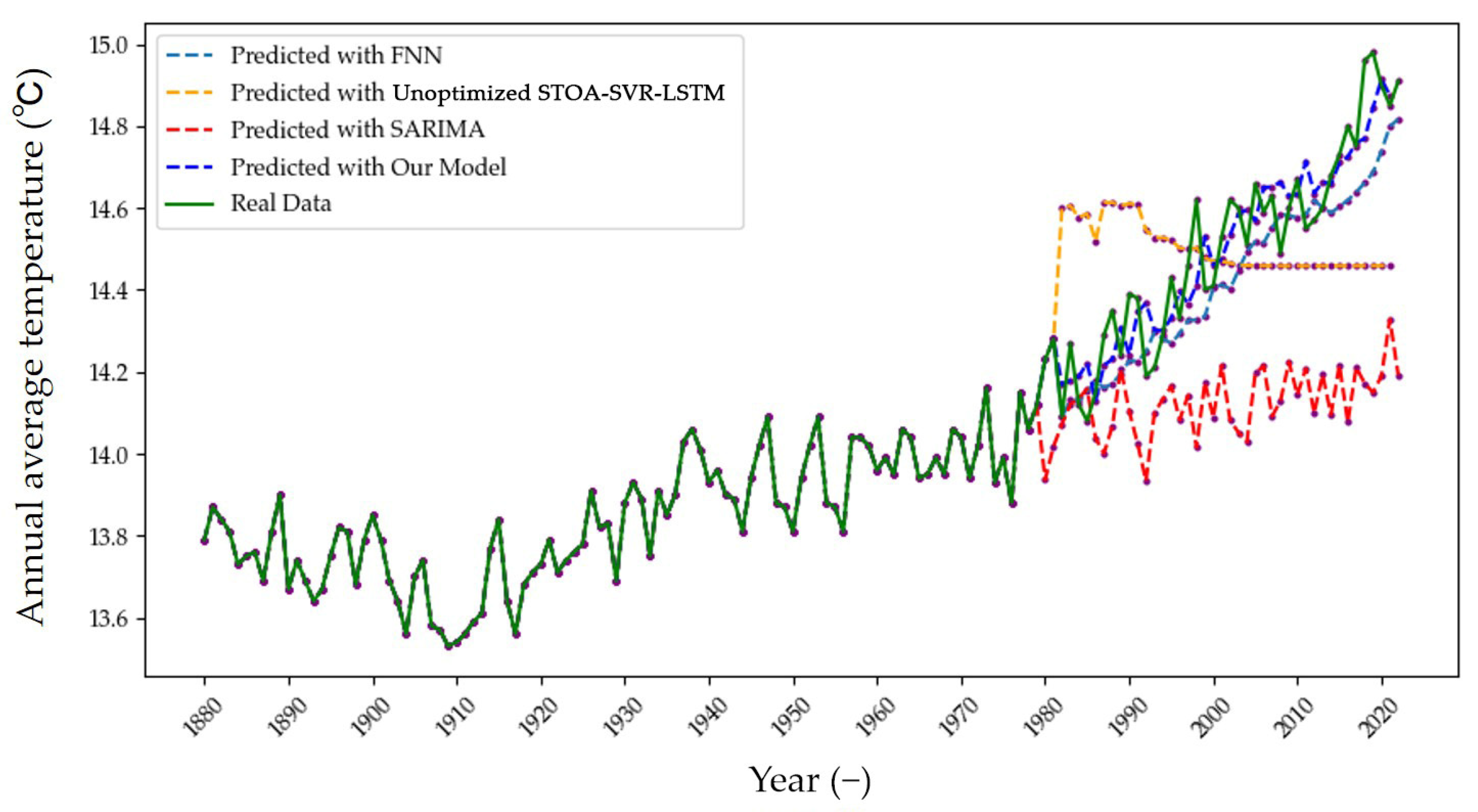

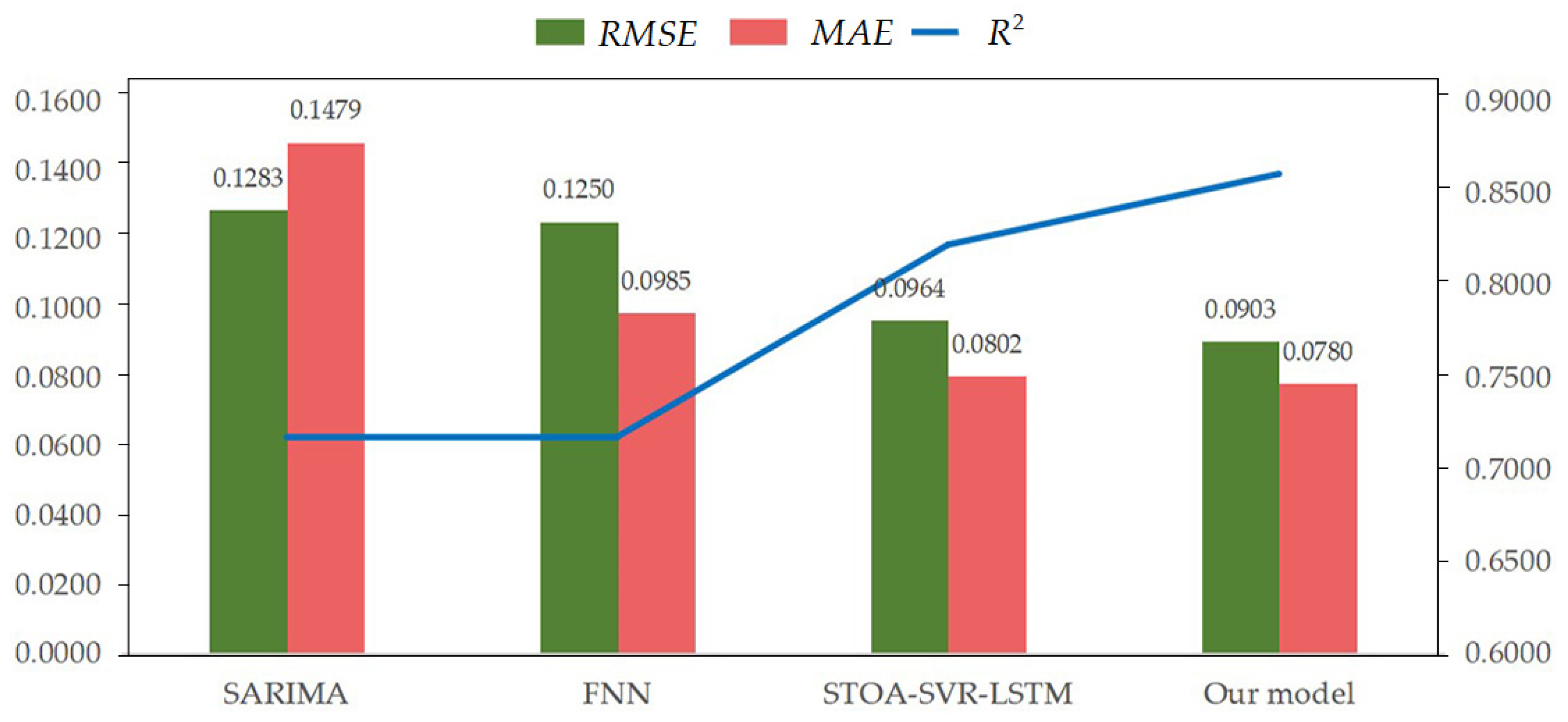

6.1. Comparative Experimental Results and Error Analysis

6.1.1. Comparative Experimental Results

6.1.2. Error Analysis

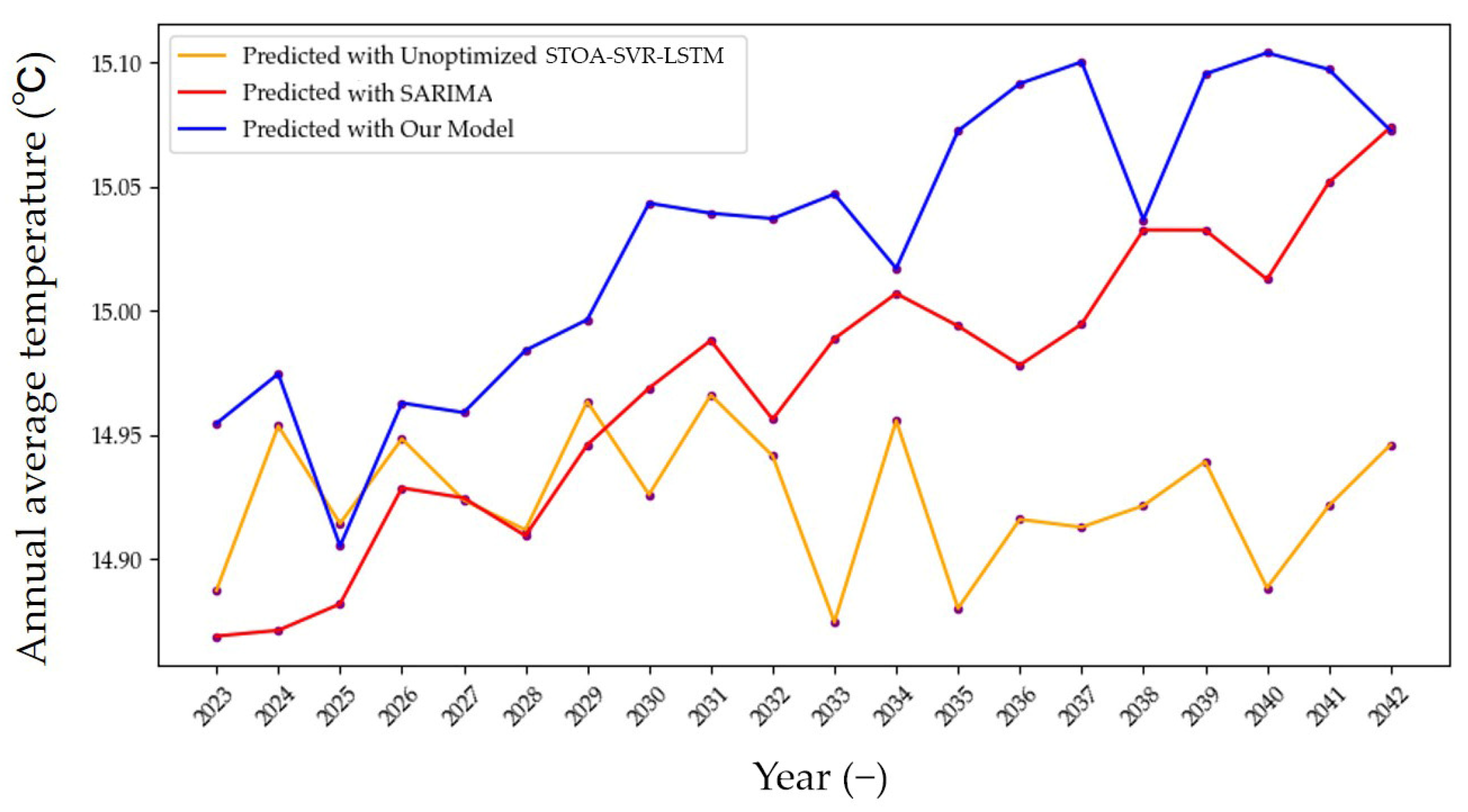

6.2. Prediction for the Next 20 Years

6.3. Modified Prediction Model Based on STOA-SVR-LSTM

7. Conclusions

- (1) A Cauchy–Gaussian mutation was added to the sooty tern algorithm to maintain population diversity and improve the algorithm's ability to escape local optima. The improved STOA was then used to obtain the optimal parameters of the SVR model, which was applied in rolling prediction to produce the final prediction results.

- (2) Considering the influence of the SVR prediction residual on the results, the LSTM model was used to predict the residual sequence of the STOA-SVR model. Adding the predicted residual to the STOA-SVR prediction results increased the prediction accuracy by about 1%.

- (3) Three performance indices (RMSE, MAE and R²) were used to evaluate the SARIMA, FNN, unimproved STOA-SVR-LSTM and improved STOA-SVR-LSTM models. The comparison shows that the STOA-SVR-LSTM rolling prediction model proposed in this paper achieves the lowest RMSE (0.0903) and MAE (0.0800) and the highest R² (0.8722) of the four models.

- (4) The model predicts that the global mean temperature will increase by about 0.4976 °C over the next 20 years, an increase rate of 3.43%.
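The residual correction described in conclusion (2) amounts to adding a predicted residual to each base forecast. The sketch below illustrates only that additive step. `mean_of_recent` is a hypothetical placeholder for the LSTM residual model (it simply averages the latest residuals), and all function names are assumptions made for illustration.

```python
from typing import Callable, List, Sequence


def residuals(actual: Sequence[float], fitted: Sequence[float]) -> List[float]:
    """Residual sequence of the base model: observed minus fitted values."""
    return [a - f for a, f in zip(actual, fitted)]


def mean_of_recent(residual_series: Sequence[float], lookback: int = 3) -> float:
    """Placeholder residual predictor (NOT an LSTM): mean of the latest residuals."""
    recent = residual_series[-lookback:]
    return sum(recent) / len(recent)


def corrected_forecast(
    base_forecast: Sequence[float],
    residual_series: Sequence[float],
    residual_model: Callable[[Sequence[float]], float] = mean_of_recent,
) -> List[float]:
    """Add a predicted residual to each base (e.g. STOA-SVR) forecast value."""
    history = list(residual_series)
    corrected = []
    for base_value in base_forecast:
        predicted_residual = residual_model(history)
        corrected.append(base_value + predicted_residual)
        history.append(predicted_residual)  # roll the residual history forward
    return corrected


if __name__ == "__main__":
    observed = [1.0, 1.2, 1.4]
    fitted = [0.9, 1.1, 1.3]  # base model consistently under-predicts by 0.1
    print(corrected_forecast([1.5, 1.6], residuals(observed, fitted)))
```

A trained sequence model would replace `mean_of_recent`; the additive combination stays the same.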

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Methods | Advantages | Disadvantages |
|---|---|---|
| Mathematical statistical-based forecasting methods | ① The main models include time series models, gray prediction, differential equation models, etc. These models make full use of the data, compute quickly, require little data and can determine model parameters dynamically. ② Some models can show a significant relationship between dependent and independent variables and have good statistical properties. | ① Over-reliance on existing data can lead to large errors in long-term forecasting, so these methods are suitable only for short-term forecasting. ② The models are relatively simple, consider too few factors and give one-sided forecasting results. ③ Most mathematical statistical models are linear and cannot capture non-linear segments of complex time series. |
| Machine learning-based methods | ① The main models include ANN, CNN, RNN, LSTM, GA models, etc. Computation is simple, and the models offer strong robustness, memory and self-learning ability. ② Some machine learning methods, such as SVM, find the global optimal solution easily and are suitable for small samples. | ① Sensitive to the selection of some hyper-parameters, which requires tedious parameter tuning. ② For some algorithms, such as neural networks and GA, the learning process inside the model cannot be observed and the output results are difficult to predict. ③ Learning time can be too long, and the learning objective may not be reached. |
| Combination of different methods | ① Combines the advantages of mathematical statistics and machine learning, so it can capture the overall change rules of data sets with complex motion laws and reduce information loss. ② Offers higher prediction accuracy and stability than a single prediction model. | Large, multi-dimensional training data must be selected for model construction to improve the reliability of the model, which greatly increases the computational complexity. |

| Methods | SARIMA | FNN | Unimproved STOA-SVR-LSTM Model | Our Model |
|---|---|---|---|---|
| RMSE | 0.1283 | 0.1250 | 0.0964 | 0.0903 |
| MAE | 0.1479 | 0.0985 | 0.0802 | 0.0800 |
| R² | 0.7172 | 0.7175 | 0.8218 | 0.8722 |
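For reference, the three indices in the table can be computed as follows. This is a minimal sketch using the standard textbook definitions of RMSE, MAE and the coefficient of determination; the sample values are invented for illustration and are not the paper's data.

```python
import math
from typing import Sequence


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(
        sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    )


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def r2(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Invented sample values, for illustration only.
    y_true = [1.0, 2.0, 3.0, 4.0]
    y_pred = [1.1, 1.9, 3.2, 3.8]
    print(rmse(y_true, y_pred), mae(y_true, y_pred), r2(y_true, y_pred))
```

When both are computed on the same set of errors, RMSE is never smaller than MAE, so a row that reports RMSE below MAE is worth re-checking against the evaluation setup.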

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shen, S.; Du, Y.; Xu, Z.; Qin, X.; Chen, J. Temperature Prediction Based on STOA-SVR Rolling Adaptive Optimization Model. Sustainability 2023, 15, 11068. https://doi.org/10.3390/su151411068