Abstract

Owing to the uncertainty and randomness of clean energy generation, microgrid operation is prone to instability, which calls for a robust and adaptive optimal scheduling method. In this paper, a model-based reinforcement learning algorithm is applied to the optimal scheduling problem of microgrids. During training, the currently learned networks assist Monte Carlo Tree Search (MCTS) in accumulating game histories. These histories are then used to update the network parameters, which yields both an optimal microgrid scheduling strategy and a learned model of the environment dynamics. We build a microgrid environment simulator that includes Heating, Ventilation and Air Conditioning (HVAC), Photovoltaic (PV), and Energy Storage (ES) systems. The simulation results show that the algorithm trains effectively whether the microgrids operate in islanded or grid-connected mode. After 200 training steps, the algorithm learns to avoid the penalty for violating the bus-voltage limits. After 800 training steps, training converges, and the losses of the value and reward networks approach 0. These results indicate that the proposed algorithm can be applied to the optimal scheduling problem of microgrids.

1. Introduction

With the growth of the global economy, rising energy demand and environmental protection have become major concerns for researchers. Distributed energy systems (DES) [1,2,3,4] and multi-energy systems (MES) integrate advanced communication and control technologies with the power system, and they have become the mainstream solution [5,6]. Microgrids improve the flexibility, reliability, and power quality of the grid, so they have become an important component of these systems. They offer an alternative to centralized power generation and long-distance transmission that addresses the inherent limitations of these approaches. Despite these benefits, the variability and intermittency of clean energy production pose challenges to the safe and stable operation of microgrids. As a result, optimal scheduling of microgrids has attracted considerable attention as a way to ensure secure and stable grid integration of clean energy production capacity.

Technologies for the economic scheduling of distributed energy and multi-energy systems are now relatively mature [7,8,9,10]. However, the scheduling and management of microgrids as energy carriers still relies largely on traditional optimization models and algorithms. Heuristic algorithms are an important class of traditional optimization methods and the primary approaches used to solve microgrid optimization problems. Examples include the particle swarm optimization (PSO) methods for microgrid management proposed by Eseye et al. [11] and Zeng et al. [12]. In 2018, Elsayed et al. [13] proposed a microgrid energy management method based on the random drift particle swarm optimization algorithm. Zare et al. [14] applied mixed-integer linear programming (MILP) to microgrid optimization problems in 2016. Other researchers have combined energy security with economic optimization, proposing a two-level energy scheduling approach [15] and a method based on the alternating direction method of multipliers (ADMM) [16]. Nevertheless, traditional optimal scheduling algorithms require an accurate model of the environment dynamics, so they cannot adapt to different microgrid structures. Heuristic algorithms such as PSO tend to become trapped in local optima. Their parameters also cannot be optimized automatically and must be tuned by experience. In summary, microgrid scenarios change constantly and their energy coupling is complex and diverse. For these reasons, heuristic algorithms depend heavily on empirical parameter selection and generalize poorly and lack robustness.

Fuzzy optimization algorithms handle uncertainty and ambiguity efficiently, which makes them suitable for the complex coupling of multiple energy sources in microgrids. Fossatì et al. [17] and Banaì et al. [18] demonstrated the feasibility of applying fuzzy optimization to the optimal scheduling of microgrids. However, fuzzy optimization methods suffer from uncertain and ambiguous decision variables and constraints. They also involve high solution complexity and produce results that are difficult to interpret.

The advancement of big data technology and the emergence of neural networks have facilitated the application of reinforcement learning (RL) in various domains [19]. Reinforcement learning combines deep neural networks with Markov decision process (MDP) optimization procedures. It is a learning mechanism that maps environmental observations to action values, and it updates the neural network parameters to maximize the rewards returned by the environment as feedback. Reinforcement learning explains the decision-making process through a state-action-reward model and learns continuously through trial and error to obtain the optimal strategy. Compared with heuristic algorithms, this makes it more efficient, interpretable, generalizable, and flexible. As such, reinforcement learning has the potential to meet the requirements of microgrid scheduling optimization.

Currently, the prevailing approach in integrated energy systems is model-free reinforcement learning, which has performed remarkably well in microgrid optimization scheduling. This approach optimizes a loss or reward function and updates only the neural network parameters that map states to actions. It does not require an explicit model of the environment dynamics. Q-learning was the first such method proposed for microgrid optimization [20,21,22]. However, its effectiveness in microgrid scheduling was limited by the large Q-table and overestimated Q-values. With the emergence of deep neural networks and policy gradient optimization, the Deep Q-Network (DQN) [23] and Deep Deterministic Policy Gradient (DDPG) [24,25] achieved more promising results than Q-learning. To address the overestimation bias and large value-estimation error of DDPG, Garrido et al. [26] applied the TD3 algorithm to microgrids. Schulman et al. [27] proposed the PPO algorithm in 2017, and OpenAI has adopted it as a baseline reinforcement learning algorithm. PPO has since been extended to microgrid management [28].

Nonetheless, model-free reinforcement learning algorithms have notable limitations. They connect observation values to action values only through the optimization objective and ignore the underlying environment dynamics. Consequently, when the optimization task changes, the originally learned value mapping may no longer produce the expected outcome. The networks must then be rebuilt and their parameters re-optimized, which lengthens training and reduces model robustness.

Model-based reinforcement learning [29] addresses these issues by first learning a model of the environment dynamics and then planning with the learned model. Such models typically either reconstruct the exact state of the environment [30,31,32] or complete observation sequences [33]. In 2016, DeepMind's AlphaGo algorithm defeated top human players at Go. Energy scheduling techniques built around the ideas of AlphaGo have also been adopted for microgrids. For instance, Li et al. [34] built a distribution system outage repair system that uses tree search as its primary strategy. Nonetheless, AlphaGo is a model-based reinforcement learning algorithm that requires expert prior knowledge of Go before training, such as the rules of play and the conditions for winning or losing. It is therefore difficult to apply AlphaGo to general optimization and control problems. AlphaGo also has a high-dimensional observation space, which requires large memory and long training times, so training is costly. Table 1 compares the advantages and disadvantages of related research on microgrid optimal scheduling.

Table 1.

Frequently used notation.

Model-based reinforcement learning algorithms generalize well, are flexible, and depend little on domain expertise. These properties suit them to the constantly changing scenarios, complex energy structures, and diverse optimization tasks of microgrids. The learned environment model and policy model make the results interpretable, and the use of deep neural networks improves their accuracy and reliability. Therefore, this paper adopts the ideas of model-based reinforcement learning to optimize microgrid scheduling.

To address the problems identified in the above analysis, this paper introduces a microgrid energy scheduling method that combines environmental modeling with optimal strategy learning. The key contributions of this research are summarized as follows:

- This study converts the microgrid optimal scheduling problem into a reinforcement learning task by defining the observation space, action space, and reward functions of the microgrid model.

- This paper introduces model-based reinforcement learning to the optimal scheduling problem of microgrid systems. It adopts the MuZero algorithm, which performs well in discrete action spaces, and uses Monte Carlo Tree Search to determine the optimal strategy.

- This paper builds a joint training model for three networks so that the algorithm learns the environment dynamics and the optimal strategy simultaneously, without prior domain knowledge.

The remainder of this paper is organized as follows. Section 2 develops the microgrid scheduling model, Section 3 presents the principles and procedure of the algorithm, Section 4 presents the simulation results, and Section 5 concludes the study.

2. Microgrid Scheduling Model

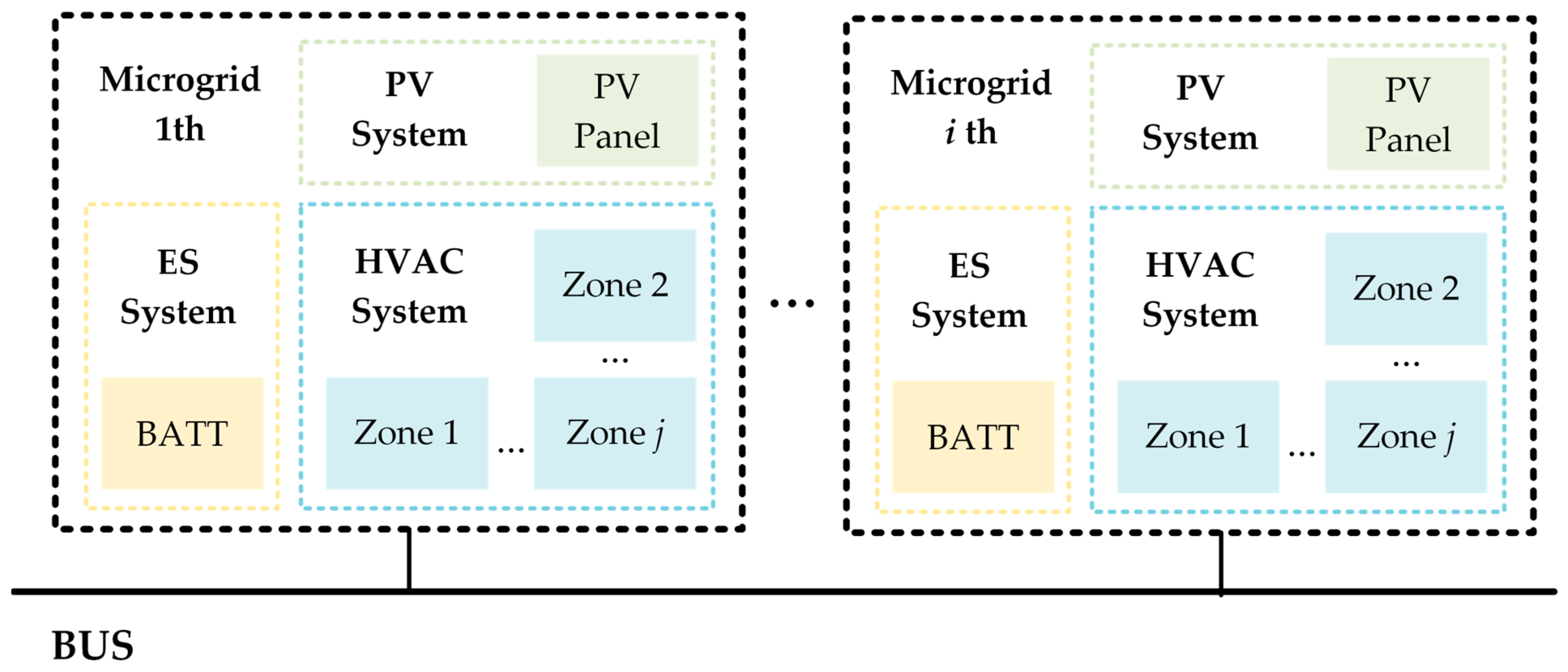

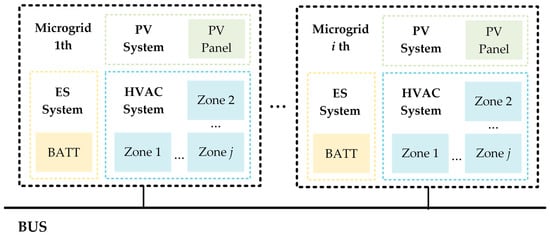

Inspired by PowerGridworld, the microgrid model proposed by Biagioni et al. [35], we establish a new microgrid model that incorporates HVAC, PV, and ES systems. The microgrid structure is presented in Figure 1.

Figure 1.

The Structure of Microgrid Scheduling Model.

The following section provides a detailed description of the model used by the ith microgrid.

2.1. HVAC System

The HVAC system represents the main load in the microgrid model. It regulates the HVAC power output and discharge temperature to keep the indoor space temperatures balanced. The observation space, action space, and reward function of the HVAC system are described below.

The observation space of the HVAC system consists of the following quantities:

- the outdoor temperature;
- the power consumption;
- the current voltage of the bus to which the ith microgrid is connected;
- the set of temperatures of all spaces in the ith microgrid, with one entry for each jth space of the HVAC system;
- the upper and lower limits of the comfort temperature at the current moment.

The action space of the HVAC system consists of the HVAC flow rate in each jth space of the system and the discharge temperature of the ith microgrid. Each of these actions is constrained to its own range.

To maintain temperature comfort while minimizing power consumption, this paper designs a separate reward function for each objective.

2.1.1. Discomfort Reward

A discomfort reward keeps the temperature of each space within a range that occupants find comfortable. It penalizes temperatures above the maximum or below the minimum comfortable temperature. First, the temperature of each space at time t is compared with the comfort temperature limits to obtain the differences:

From these temperature differences, the discomfort temperature value of the jth space is calculated as follows:

The discomfort reward is the sum of the squared discomfort temperature values over all spaces:
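The sketch below condenses the three steps into Python. It assumes that a space's discomfort is the distance by which its temperature leaves the comfort band, and that the reward is the negative sum of the squared discomforts. The function names and this sign convention are illustrative assumptions, not the paper's implementation.

```python
import numpy as np


def discomfort_penalty(space_temps, t_lower, t_upper):
    """Sum of squared comfort-band violations over all spaces (hypothetical helper).

    A space inside [t_lower, t_upper] contributes 0; otherwise it contributes
    the squared distance to the nearest comfort limit.
    """
    temps = np.asarray(space_temps, dtype=float)
    above = np.maximum(temps - t_upper, 0.0)   # degrees above the upper limit
    below = np.maximum(t_lower - temps, 0.0)   # degrees below the lower limit
    discomfort = above + below                 # at most one term is non-zero per space
    return float(np.sum(discomfort ** 2))


def discomfort_reward(space_temps, t_lower, t_upper):
    # Assumed sign convention: larger violations give a lower (more negative) reward.
    return -discomfort_penalty(space_temps, t_lower, t_upper)


print(discomfort_reward([21.0, 25.5, 18.0], t_lower=20.0, t_upper=24.0))   # -6.25
```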

2.1.2. Energy Consumption Reward

To save energy and reduce emissions, an energy consumption reward penalizes high power consumption. This reward depends only on the power consumption:

2.1.3. HVAC System Reward

Combining the discomfort reward and the energy consumption reward gives the HVAC system reward:

where the balance coefficients weight the discomfort and energy consumption rewards to meet different control requirements.
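Here is a minimal sketch of one way to combine the two HVAC terms. It assumes the energy consumption reward is simply the negative power consumption. The weights `w_discomfort` and `w_energy` are hypothetical stand-ins for the paper's balance coefficients.

```python
def hvac_reward(discomfort, power, w_discomfort=1.0, w_energy=0.5):
    """Weighted HVAC reward (hypothetical default weights).

    discomfort: sum of squared comfort-band violations (>= 0).
    power: HVAC power consumption in the current step (>= 0).
    """
    discomfort_reward = -discomfort   # penalize leaving the comfort band
    energy_reward = -power            # assumed: penalty linear in power consumption
    return w_discomfort * discomfort_reward + w_energy * energy_reward


print(hvac_reward(discomfort=6.25, power=8.0))   # -10.25
```

Raising `w_energy` relative to `w_discomfort` gives up some occupant comfort in exchange for lower consumption. This is the role the balance coefficients play in the text.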

2.2. PV System

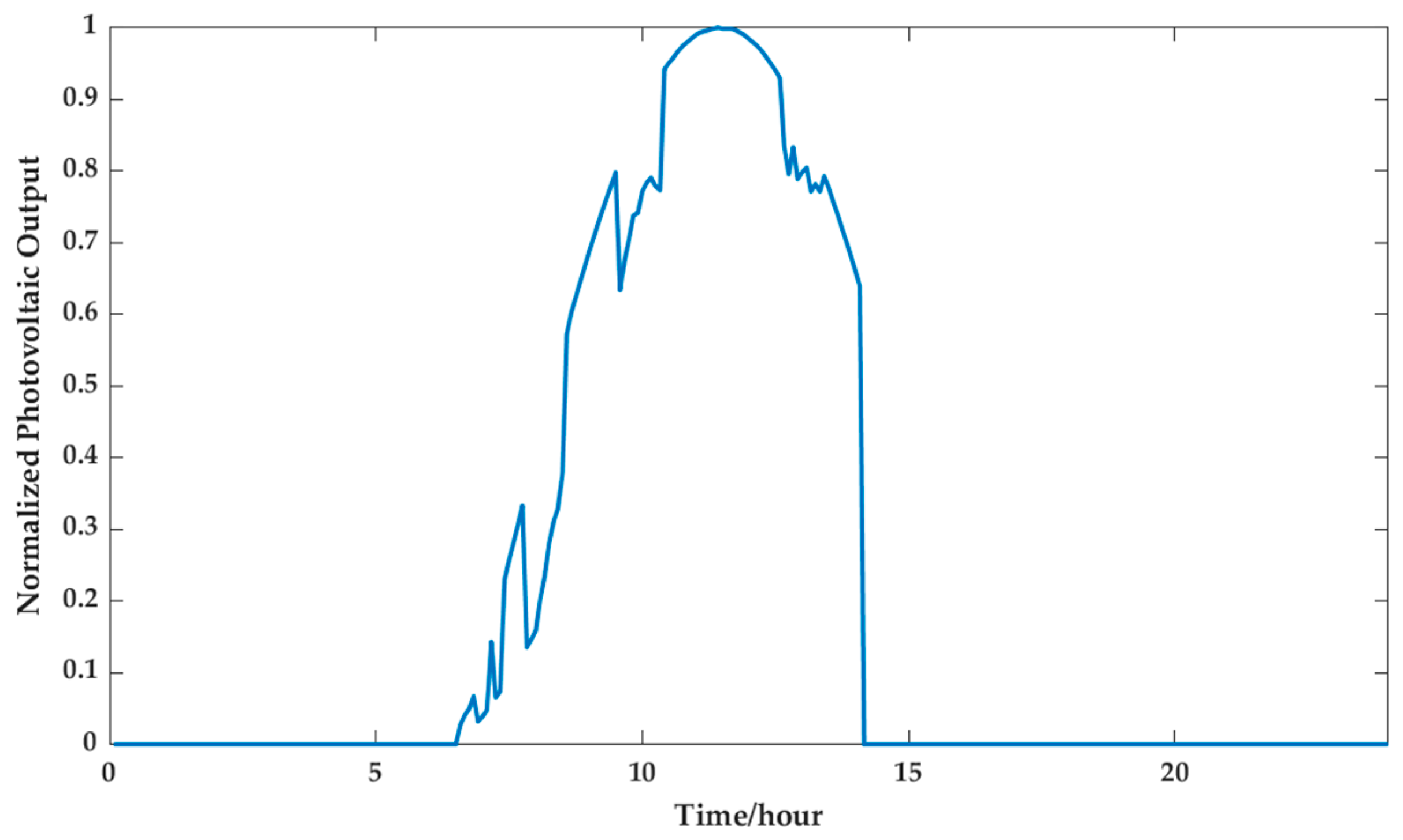

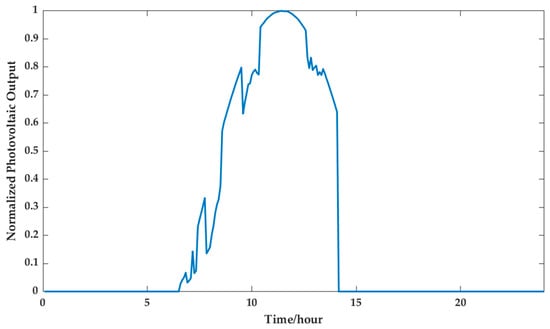

Photovoltaic power generation is a clean and sustainable energy source. However, its production capacity is inherently uncertain and random, so connecting it to the electrical grid poses potential safety hazards. According to the data of Biagioni et al. [35], daily photovoltaic power generation follows an approximately parabolic curve, similar to the curve of solar radiation. The normalized daily photovoltaic power generation data are shown in Figure 2.

Figure 2.

Variations in Normalized Photovoltaic Power Generation throughout a Day.

This paper integrates the photovoltaic (PV) system into the Microgrid Scheduling Model as generation equipment that supplies power to the other parts of the system. The following paragraphs describe the observation space, action space, operation model, and reward function of the PV system.

The observation space of the PV system consists of the actual production capacity at time t. These values are taken from one day of measured data.

The action space of the PV system consists of the ratio of local consumption to the production capacity at time t. This ratio is defined as the consumption rate of clean energy.

From these definitions of the observation and action spaces, the effective electrical energy input from the PV system to the microgrid is:

where the consumption term is the actual amount of electrical energy being consumed in the ith microgrid at time t.

To ensure that the microgrid absorbs clean energy consistently and sustainably, the reward function of the PV system is built around the light rejection (curtailment) rate, defined as follows:

where the bus capacity term is the maximum energy that the electrical bus can transport.
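The PV quantities can be sketched as follows. The sketch assumes that the delivered energy equals the consumption rate times the PV output, capped by the bus capacity. It also assumes that the reward is the negative light rejection rate, meaning the share of PV output that is not absorbed. The paper's exact functional form cannot be recovered here, so `pv_effective_input` and `pv_reward` are hypothetical.

```python
def pv_effective_input(pv_capacity, consumption_rate, bus_max):
    """Energy delivered by PV to the microgrid (hypothetical model).

    consumption_rate: the PV action, clipped to [0, 1].
    bus_max: maximum energy the bus can transport in one step.
    """
    rate = min(max(consumption_rate, 0.0), 1.0)
    return min(rate * pv_capacity, bus_max)


def pv_reward(pv_capacity, consumption_rate, bus_max):
    """Negative light rejection rate: share of the PV output that is not absorbed."""
    if pv_capacity <= 0.0:
        return 0.0  # no generation (e.g. at night), so nothing is rejected
    used = pv_effective_input(pv_capacity, consumption_rate, bus_max)
    return -(1.0 - used / pv_capacity)


# Hypothetical usage: 10 units generated, 80 % consumed, bus limit not binding.
print(pv_reward(10.0, 0.8, 100.0))
```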

2.3. ES System

The Microgrid Scheduling Model integrates an Energy Storage (ES) system that mainly uses a battery pack to store electrical energy. Its main function is to balance power demand and supply within the system. The following paragraphs describe the observation space, action space, transition model, and reward function of the ES system.

The observation space of the ES system consists of the amount of energy stored in the battery pack at time t.

The action space of the ES system consists of the ratio of the energy charged or discharged at time t to the maximum energy that can be charged or discharged in each cycle. The sign of this ratio sets the direction of energy flow: one sign denotes discharging the battery and the other denotes charging it.

From these definitions of the observation and action values, the theoretical state transition of the ES system is as follows:

where the time step is the control interval of the system, and the efficiency terms are the discharging and charging efficiencies, respectively.

The storage capacity of the ES system is limited, so the theoretical value at the current time may differ from the energy actually stored. The observed stored energy is therefore:

where the bounds are the upper and lower storage limits of the ES system.
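Below is a sketch of one ES transition followed by clipping to the storage limits. The sign convention (a negative action means discharge), the parameter names, and the placement of the efficiencies are assumptions chosen to illustrate the idea. In this sketch, charging stores less energy than is drawn, and discharging removes more energy than is delivered. None of these choices is taken from the paper.

```python
def es_step(energy, action, p_max, dt, eta_c, eta_d, e_min, e_max):
    """One ES transition (hypothetical conventions).

    action: in [-1, 1]; negative = discharge, positive = charge (assumed sign).
    p_max: maximum charge/discharge power per cycle; dt: control interval.
    Returns (theoretical_energy, actual_energy).
    """
    power = action * p_max
    if power >= 0.0:
        # Charging: only a fraction eta_c of the drawn energy is stored.
        theoretical = energy + eta_c * power * dt
    else:
        # Discharging: delivering |power| * dt removes more than that from storage.
        theoretical = energy + power * dt / eta_d
    # The stored energy cannot leave [e_min, e_max].
    actual = min(max(theoretical, e_min), e_max)
    return theoretical, actual
```

Keeping both values makes the limit-violation penalty easy to compute, because it can be measured from the gap between the theoretical and the clipped energy.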

Based on this analysis, the reward function penalizes charging beyond the upper limit and discharging below the lower limit as follows:

where the balance coefficient weights the ES system reward against the rewards of the other subsystems.
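The limit-violation penalty can be sketched by measuring how far the unclipped (theoretical) energy would overshoot either storage limit. The linear penalty is an assumption, and the `weight` argument is a hypothetical stand-in for the balance coefficient.

```python
def es_reward(theoretical, e_min, e_max, weight=1.0):
    """Penalty for trying to charge above e_max or discharge below e_min.

    theoretical: unclipped next-step stored energy.
    weight: hypothetical balance coefficient relative to the other rewards.
    """
    overflow = max(theoretical - e_max, 0.0)    # attempted overcharge
    underflow = max(e_min - theoretical, 0.0)   # attempted over-discharge
    return -weight * (overflow + underflow)


print(es_reward(115.0, 10.0, 100.0))   # -15.0
```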

2.4. System Reward

In grid-connected mode, a microgrid interacts with the other microgrids on the same bus. The optimal scheduling of multiple microgrids should therefore consider not only each individual microgrid but also the optimization of the overall system.

The following system reward is designed to promote safe and stable operation of the bus voltage. The overall system reward is shared equally among the microgrids on the bus:

where the voltage limits are the upper and lower bounds of the bus voltage under safe operation, and the penalty coefficient is set to a very large value. The divisor is the number of microgrids on the same bus.
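Below is a minimal sketch of the shared bus-voltage reward. It assumes a constant large penalty whenever the normalized voltage leaves its safe band, split equally among the microgrids on the bus. The value `penalty=1e4` and the voltage limits used in the example are hypothetical.

```python
def system_reward(bus_voltage, v_min, v_max, n_microgrids, penalty=1e4):
    """Each microgrid's equal share of the bus-voltage penalty.

    penalty: hypothetical stand-in for the paper's very large penalty coefficient.
    """
    violated = bus_voltage < v_min or bus_voltage > v_max
    total = -penalty if violated else 0.0
    return total / n_microgrids


# Hypothetical normalized limits [0.95, 1.05] with four microgrids on the bus.
print(system_reward(1.1, 0.95, 1.05, 4))   # -2500.0
```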

2.5. Model Reward

Following the above analysis, the reward of an individual microgrid is obtained as:

The four rewards are treated as equally important in optimizing the system. The reward of the whole model is the sum of the rewards of all microgrids:
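The four rewards carry equal weight, so both the per-microgrid reward and the whole-model reward reduce to plain sums. Here is a sketch that uses a hypothetical tuple layout `(r_hvac, r_pv, r_es, r_sys)` for each microgrid:

```python
def microgrid_reward(r_hvac, r_pv, r_es, r_sys):
    # The four rewards are equally important, so they are simply added.
    return r_hvac + r_pv + r_es + r_sys


def model_reward(per_microgrid):
    """Sum over microgrids; each entry is a (r_hvac, r_pv, r_es, r_sys) tuple."""
    return sum(microgrid_reward(*r) for r in per_microgrid)


print(model_reward([(-1.0, -0.5, 0.0, -2.0), (-2.0, 0.0, -1.0, -2.0)]))   # -8.5
```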

3. Algorithm Design

To tie the optimal scheduling of microgrid systems closely to the environment dynamics, this paper uses a model-based reinforcement learning method. The MuZero framework, proposed by Schrittwieser et al. [36] in 2020, has performed strongly in discrete action spaces. This paper therefore uses it to solve the optimal scheduling problem of microgrids.

MuZero uses Monte Carlo Tree Search (MCTS) [37] to select the optimal strategy, so it requires discrete action values. Sinclair et al. [38] investigated whether continuous-action problems can be solved by discretizing the action space. Accordingly, this paper discretizes the action space of the model:

The action space is discretized uniformly, so that the candidate actions are spread evenly across each action's full range.
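One way to sketch uniform discretization is to apply `numpy.linspace` to each action dimension and then use `itertools.product` to enumerate the joint actions. The number of levels and the example ranges are hypothetical. Taking the Cartesian product is only one possible way to form the discrete action set and is not necessarily the paper's method.

```python
import itertools

import numpy as np


def discretize(low, high, n_levels):
    """Evenly spaced candidate values covering [low, high], endpoints included."""
    return np.linspace(low, high, n_levels)


def joint_action_table(ranges, n_levels):
    """Enumerate every combination of per-dimension levels as one discrete action.

    ranges: list of (low, high) tuples, one per continuous action dimension.
    """
    grids = [discretize(lo, hi, n_levels) for lo, hi in ranges]
    return [tuple(float(v) for v in combo) for combo in itertools.product(*grids)]


# Hypothetical example: one HVAC flow rate in [0, 1] and one ES action in [-1, 1].
actions = joint_action_table([(0.0, 1.0), (-1.0, 1.0)], n_levels=3)
print(len(actions))   # 9
```

The joint action count grows as `n_levels ** len(ranges)`. This matches the observation in Section 4.2.1 that finer discretization enlarges the action space.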

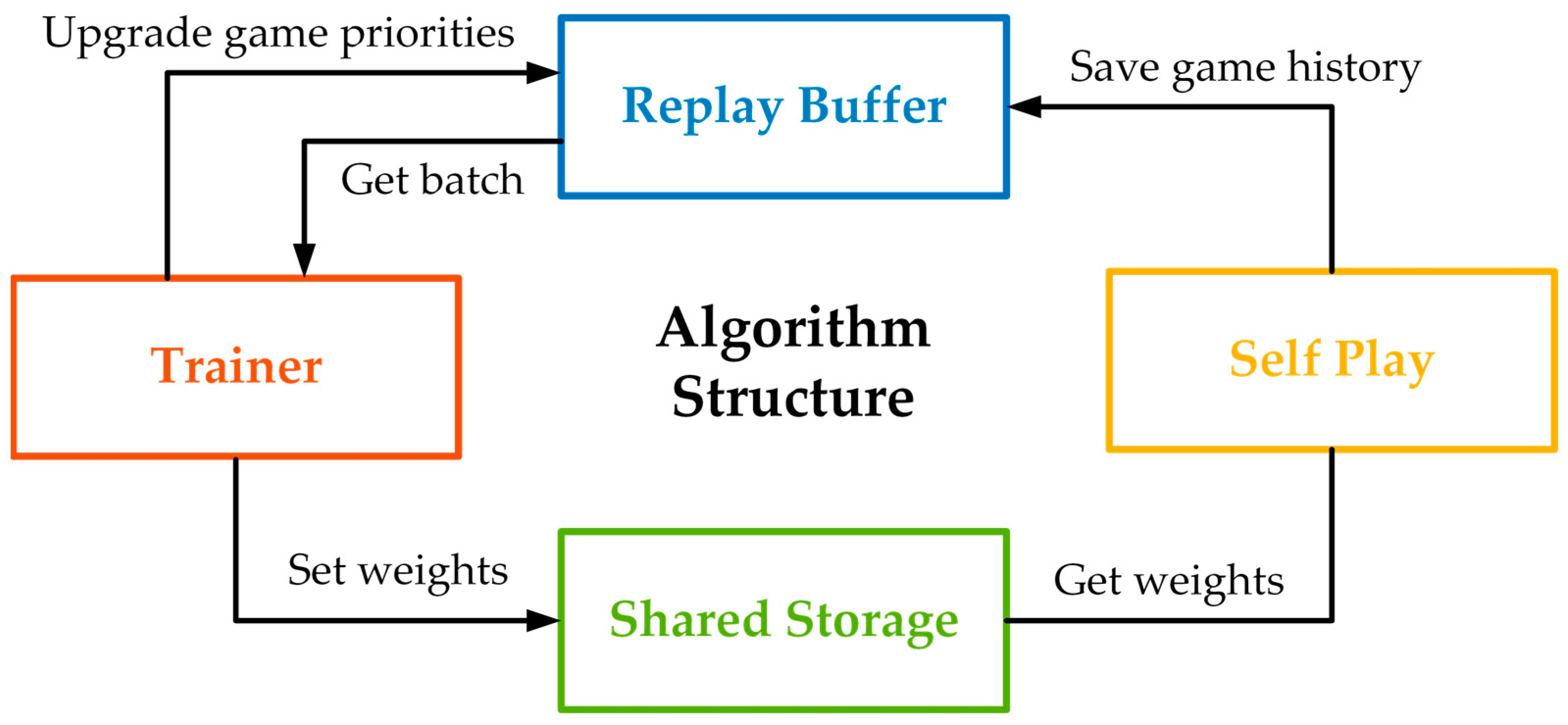

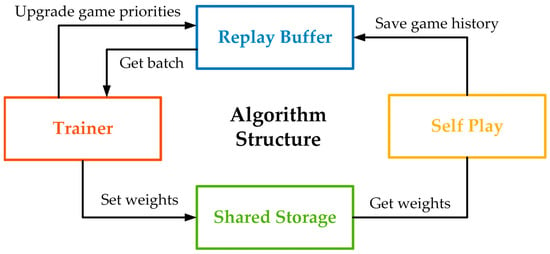

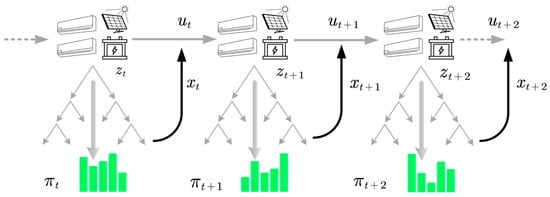

The learning and training process of optimal scheduling strategies is grounded in the MuZero training process, as demonstrated in Figure 3.

Figure 3.

Flowchart of the Algorithm Architecture.

As Figure 3 shows, the training process consists of four components: Self-play, Replay Buffer, Trainer, and Shared Storage. The Replay Buffer stores the game history data collected during Self-play, and the Shared Storage keeps the model parameters produced by the Trainer. The following subsections describe the Self-play and Trainer components in detail.

3.1. Self-Play

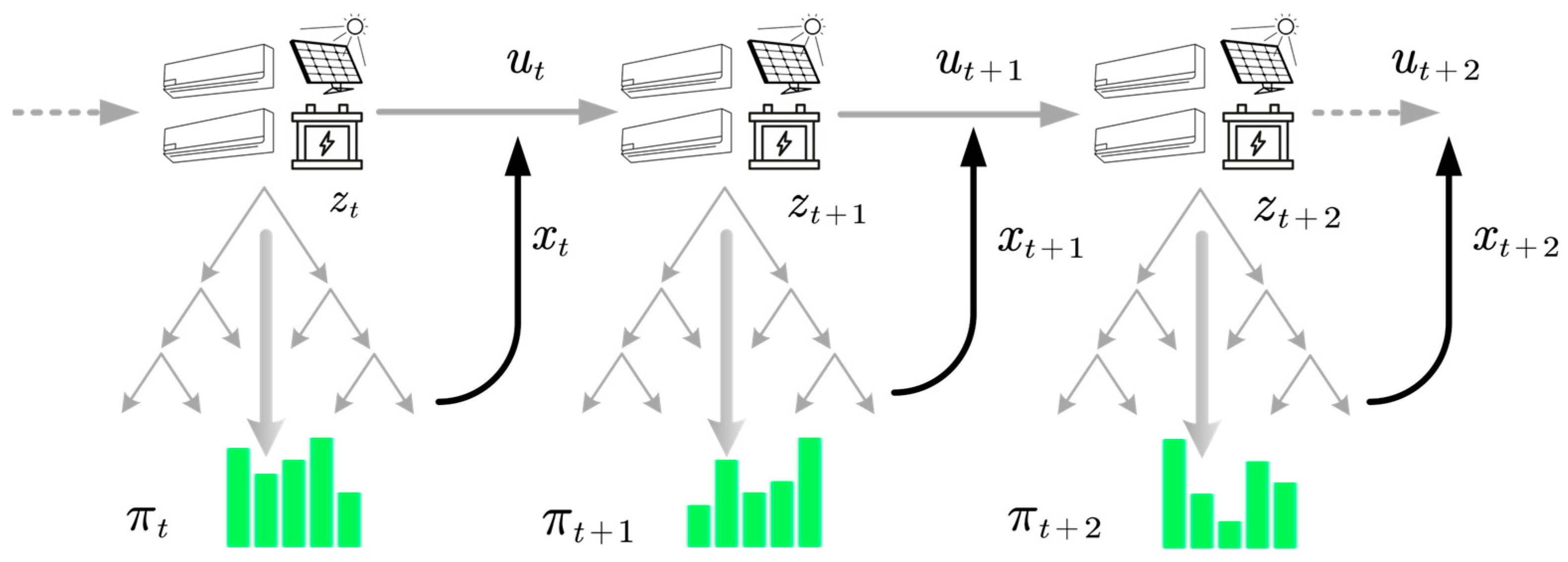

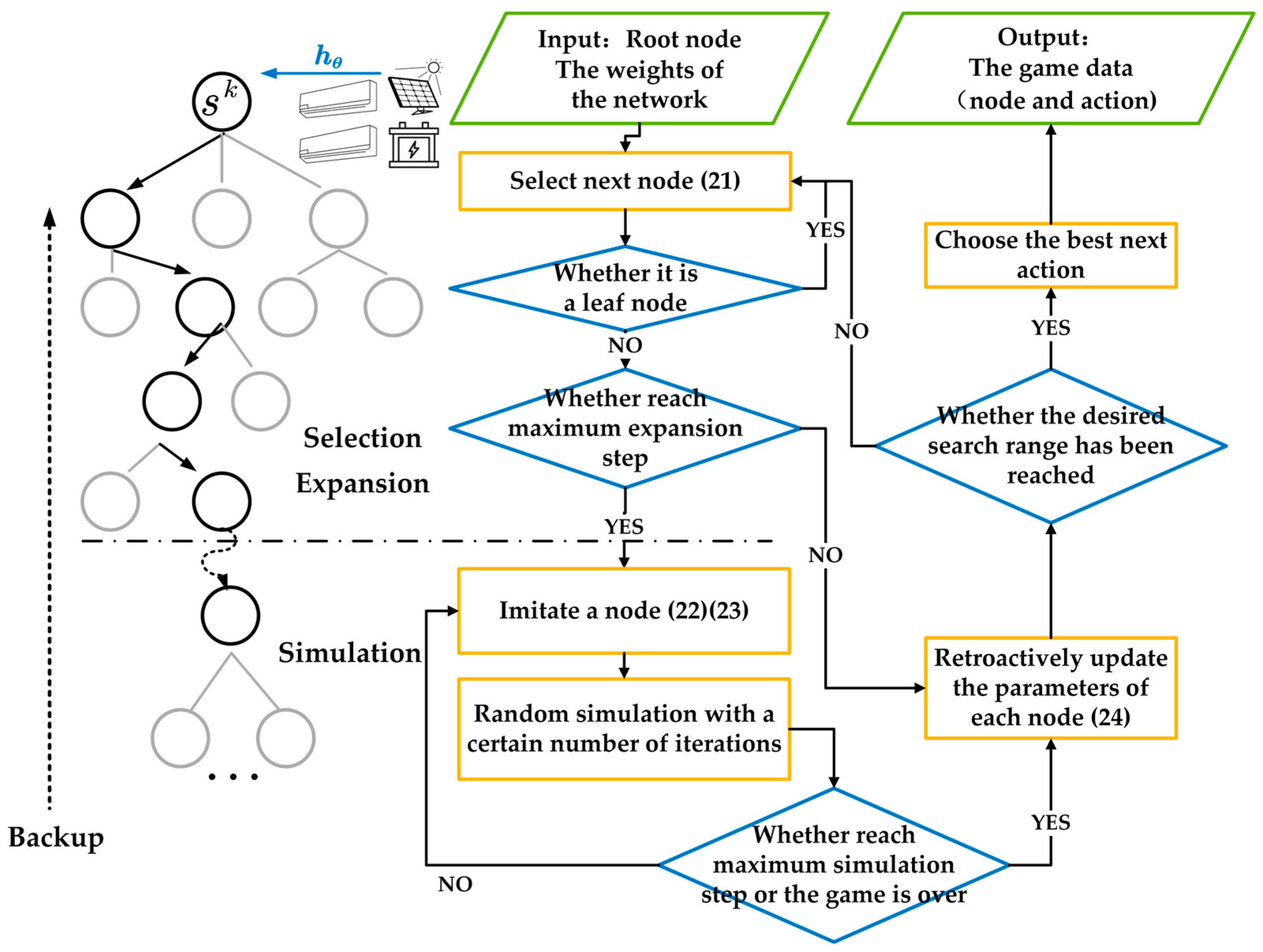

The Self-play module generates game data through the algorithm's interaction with the real environment, which supplies the data for subsequent model training. At its core, Self-play uses MCTS [37] as the strategy optimization technique, with simulations from the learned Representation, Prediction, and Dynamic functions assisting the search. Figure 4 illustrates the main flow of MCTS exploration in Self-play from time t.

Figure 4.

The Structure of MCTS.

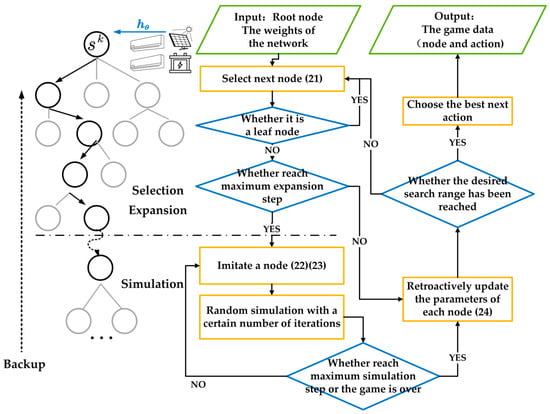

The primary role of MCTS is to determine the best action, obtain timely rewards, and estimate expected values. Figure 5 depicts the procedure for identifying the optimal strategy at a given time t.

Figure 5.

The Search Process of MCTS with the Learned Model.

MCTS uses three fundamental steps, Selection, Expansion, and Backup, to identify the best next node. Each step is explained below.

3.1.1. Selection

The action leading to the next node is selected mainly with the Upper Confidence Bound (UCB) rule. This rule relies on the estimated reward of selecting the action and on the number of times the action has previously been chosen:

where the first term is the mean predicted value of performing action x in the current state. It steers MCTS toward actions expected to yield greater future rewards. The term after the plus sign widens the set of options that MCTS considers. It contains the prior probability of selecting action x and a coefficient that balances the depth and the breadth of the search tree. Consequently, the search tends to seek out and explore high-value nodes that have not yet been visited often.
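For reference, the sketch below implements the selection rule from the original MuZero paper (pUCT). The constants `c1 = 1.25` and `c2 = 19652` come from that paper, not from this one. MuZero also min-max normalizes Q-values, which this sketch omits.

```python
import math


def puct_score(q, prior, n_action, n_parent, c1=1.25, c2=19652.0):
    """MuZero-style pUCT score for one child.

    q: mean value of the child; prior: policy prior P(s, a);
    n_action: visits of this child; n_parent: total visits of the parent.
    """
    # The exploration weight grows slowly with the parent's visit count.
    exploration = c1 + math.log((n_parent + c2 + 1.0) / c2)
    return q + prior * math.sqrt(n_parent) / (1 + n_action) * exploration


def select_action(children):
    """children: dict action -> (q, prior, visits). Returns the arg-max action."""
    n_parent = sum(visits for _, _, visits in children.values())
    return max(children, key=lambda a: puct_score(children[a][0], children[a][1],
                                                  children[a][2], n_parent))


# A well-visited good action versus a rarely visited action with a high prior.
children = {0: (0.5, 0.2, 10), 1: (0.4, 0.6, 1), 2: (0.0, 0.2, 0)}
print(select_action(children))   # 1
```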

3.1.2. Expansion

When the simulation reaches the maximum number of steps before the game ends, a new child node is expanded. The currently trained policy and dynamic networks compute the information of the new child node, including its reward and value:

where m is the step at which a leaf node is selected, and the remaining quantities are the reward, hidden state, policy, and value calculated or estimated by the three networks. After expansion, these data are stored and then subjected to a random simulation of l steps.
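The model-based part of expansion can be sketched as follows. The dynamics function produces the leaf's hidden state and reward. The prediction function then supplies the policy priors for the new children and a value estimate. The `Node` class and the toy functions in the usage lines are hypothetical. The l-step random simulation mentioned above is not included.

```python
import numpy as np


class Node:
    """Search-tree node (hypothetical minimal structure)."""

    def __init__(self, prior):
        self.prior = prior            # P(s, a) supplied by the parent's policy
        self.hidden_state = None
        self.reward = 0.0
        self.visit_count = 0
        self.value_sum = 0.0
        self.children = {}


def expand(node, parent_state, action, dynamics_fn, prediction_fn, n_actions):
    """Expand a leaf with the learned model and return its value estimate."""
    node.hidden_state, node.reward = dynamics_fn(parent_state, action)
    policy_logits, value = prediction_fn(node.hidden_state)
    probs = np.exp(policy_logits - np.max(policy_logits))
    probs /= probs.sum()              # softmax over the discrete actions
    for a in range(n_actions):
        node.children[a] = Node(prior=float(probs[a]))
    return value


# Hypothetical stand-ins for the learned networks.
def toy_dynamics(state, action):
    return state + action, 1.0


def toy_prediction(state):
    return np.zeros(3), float(np.sum(state))


leaf = Node(prior=0.5)
v = expand(leaf, np.zeros(2), 1, toy_dynamics, toy_prediction, n_actions=3)
```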

3.1.3. Backup

The primary function of this process entails backtracking from the leaf node to the root node of the whole process, updating the values that correspond to each node along the way.

where the first quantity is the cumulative discounted reward in MCTS and the constant is the discount factor. The visit count records how many times the node has been selected. It increases by 1 each time the backup passes through the node.
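The sketch below follows the style of the MuZero pseudocode for the backup step. Each node on the search path accumulates the bootstrapped discounted return and increments its visit count. Nodes are plain dictionaries here purely for brevity.

```python
def backup(search_path, leaf_value, discount):
    """Propagate a leaf value estimate from the leaf back to the root.

    search_path: list of nodes from root to leaf; each node is a dict with
    'reward' (reward received on entering the node), 'value_sum', and 'visits'.
    """
    g = leaf_value
    for node in reversed(search_path):
        node["value_sum"] += g              # add the return estimated from this node onward
        node["visits"] += 1                 # visit count increases by 1 per pass
        g = node["reward"] + discount * g   # bootstrap one step further up the tree
    return search_path


def mean_value(node):
    """Q estimate used by the selection rule: average of the backed-up returns."""
    return node["value_sum"] / node["visits"] if node["visits"] else 0.0


path = [{"reward": 0.0, "value_sum": 0.0, "visits": 0},
        {"reward": 1.0, "value_sum": 0.0, "visits": 0},
        {"reward": 2.0, "value_sum": 0.0, "visits": 0}]
backup(path, leaf_value=4.0, discount=0.5)
print([mean_value(n) for n in path])   # [3.0, 4.0, 4.0]
```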

MCTS is combined with the learned models in the real environment simulator to obtain the optimal selection. The state, action strategy, reward, and value of this selection are recorded in the Replay Buffer for later training of the neural network models.

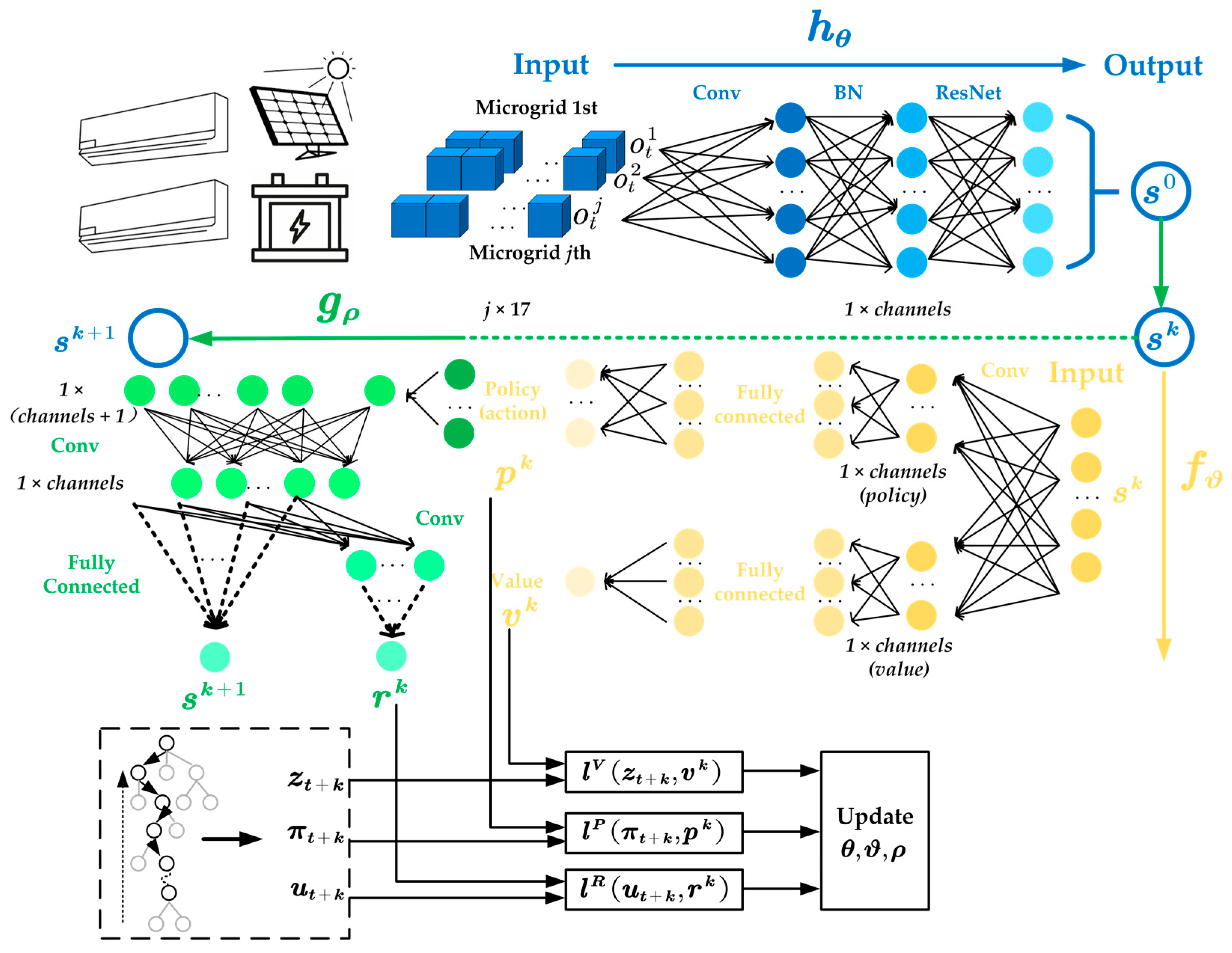

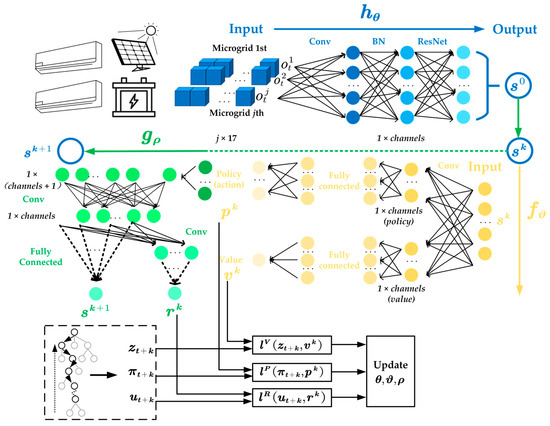

3.2. Trainer

MCTS is time-consuming, so on its own it is not suited to rapidly changing microgrid scenarios. For this reason, a Trainer component is added that uses deep neural network training to learn the environment dynamics and the reward and penalty mechanisms of the current environment. Three functions are defined, each implemented mainly by neural networks, as described below.

3.2.1. Representation Function

This function transforms the observation into a hidden state within MuZero. This step handles complex observation spaces, such as that of Go, which would otherwise generate cumbersome computing tasks:

where the output is the hidden state of the root node and the parameters are the weights of the representation model. The network is an encoder that maps the observations of the current state to a hidden state. This reduces the computational burden caused by an excessively large observation space.
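Here is a minimal NumPy sketch of a fully connected representation function. The layer sizes and initialization are hypothetical. The min-max scaling of the hidden state to [0, 1] follows the convention described in the MuZero paper and may differ from this paper's network.

```python
import numpy as np


def init_mlp(sizes, rng):
    """Random weights for a stack of fully connected layers (hypothetical init)."""
    return [(rng.normal(0.0, 0.1, (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def mlp(x, layers):
    """Fully connected layers with ReLU between them and a linear output layer."""
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x


def representation(observation, theta):
    """Encode a raw observation vector into the root hidden state."""
    s = mlp(np.asarray(observation, dtype=float), theta)
    # Min-max scale to [0, 1], following the convention described in the MuZero paper.
    return (s - s.min()) / (s.max() - s.min() + 1e-8)


rng = np.random.default_rng(0)
theta = init_mlp([10, 32, 8], rng)   # hypothetical sizes: 10 observations -> 8 hidden units
s0 = representation(rng.random(10), theta)
```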

3.2.2. Prediction Function

This model comprises two networks: the Policy network and the Value network. Its input is the hidden state described in Section 3.2.1. Its objective is to produce the optimal strategy and the expected average reward at the current moment for that hidden state:

where the parameters are the weights of the prediction model. The policy output is the optimal strategy from the model's policy network, and the value output is the expected value from its value network.
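Below is a sketch of a prediction function with a shared fully connected trunk and separate policy and value heads. The trunk-plus-heads layout, the softmax policy, and all sizes are assumptions for illustration.

```python
import numpy as np


def init_prediction(hidden_dim, n_actions, width, rng):
    """Hypothetical parameters: shared trunk, policy head, value head."""
    def layer(m, n):
        return rng.normal(0.0, 0.1, (m, n)), np.zeros(n)
    return {"trunk": layer(hidden_dim, width),
            "policy": layer(width, n_actions),
            "value": layer(width, 1)}


def prediction(hidden_state, phi):
    """Map a hidden state to (policy over discrete actions, scalar value)."""
    w, b = phi["trunk"]
    z = np.maximum(hidden_state @ w + b, 0.0)      # ReLU trunk
    w, b = phi["policy"]
    logits = z @ w + b
    policy = np.exp(logits - logits.max())
    policy /= policy.sum()                         # softmax over actions
    w, b = phi["value"]
    value = float((z @ w + b)[0])                  # linear value head
    return policy, value


rng = np.random.default_rng(0)
phi = init_prediction(hidden_dim=8, n_actions=9, width=16, rng=rng)
policy, value = prediction(rng.random(8), phi)
```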

3.2.3. Dynamic Function

This model provides the environment dynamics simulation for the reinforcement learning method. It calculates the hidden state at the current moment from the hidden state at the previous moment and the current action:

This model serves as a transition model between hidden states, so training it allows the method to learn the dynamics of the real environment.
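Here is a sketch of the dynamics function. It takes the previous hidden state and a one-hot encoded action and returns the next hidden state and a reward, as Algorithm 1 describes. The sizes, names, and one-hot encoding are assumptions. Because the function needs no real simulator, it can be rolled forward several steps, which is what MCTS does during planning.

```python
import numpy as np


def init_dynamics(hidden_dim, n_actions, width, rng):
    """Hypothetical parameters: shared trunk, next-state head, reward head."""
    def layer(m, n):
        return rng.normal(0.0, 0.1, (m, n)), np.zeros(n)
    return {"trunk": layer(hidden_dim + n_actions, width),
            "state": layer(width, hidden_dim),
            "reward": layer(width, 1)}


def dynamics(hidden_state, action, n_actions, g):
    """(previous hidden state, action) -> (next hidden state, reward)."""
    x = np.concatenate([hidden_state, np.eye(n_actions)[action]])  # one-hot action
    w, b = g["trunk"]
    z = np.maximum(x @ w + b, 0.0)
    w, b = g["state"]
    s = z @ w + b
    s = (s - s.min()) / (s.max() - s.min() + 1e-8)   # keep hidden states in [0, 1]
    w, b = g["reward"]
    reward = float((z @ w + b)[0])
    return s, reward


rng = np.random.default_rng(1)
g = init_dynamics(hidden_dim=8, n_actions=9, width=16, rng=rng)
s = rng.random(8)
for a in [3, 0, 7]:   # roll the learned model forward without the real simulator
    s, r = dynamics(s, a, n_actions=9, g=g)
```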

3.2.4. Training Process

The three models are trained jointly so that they reproduce the optimal strategy selection of MCTS and predict timely and expected rewards. The parameters are therefore updated with the following loss function:

where the three terms are the loss functions of the policy, value, and reward networks of the microgrid model presented in this paper. Specifically, the cross-entropy loss is used for the policy network, and the squared error loss is used for the value and reward networks.
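For one training sample, the loss described above can be sketched as cross-entropy for the policy plus squared errors for the value and the reward, summed over the unrolled steps. MuZero also adds an L2 weight-regularization term, which this sketch omits. The argument names are hypothetical.

```python
import numpy as np


def muzero_style_loss(policy_preds, policy_targets, value_preds, value_targets,
                      reward_preds, reward_targets, eps=1e-12):
    """Policy cross-entropy plus value and reward squared errors, summed over steps.

    policy_*: shape (K + 1, n_actions); value_*: shape (K + 1,);
    reward_*: shape (K,), where K is the number of unrolled steps.
    """
    p_pred = np.asarray(policy_preds, dtype=float)
    p_tgt = np.asarray(policy_targets, dtype=float)
    policy_loss = -np.sum(p_tgt * np.log(p_pred + eps))   # cross-entropy
    value_loss = np.sum((np.asarray(value_preds) - np.asarray(value_targets)) ** 2)
    reward_loss = np.sum((np.asarray(reward_preds) - np.asarray(reward_targets)) ** 2)
    return policy_loss + value_loss + reward_loss, (policy_loss, value_loss, reward_loss)


# One unrolled step (K = 1).
total, parts = muzero_style_loss(
    policy_preds=[[0.5, 0.5], [0.25, 0.75]], policy_targets=[[1.0, 0.0], [0.0, 1.0]],
    value_preds=[1.0, 0.0], value_targets=[0.0, 0.0],
    reward_preds=[0.5], reward_targets=[0.0])
```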

The pseudocode for parallel training of three major networks is shown in Algorithm 1.

| Algorithm 1: The Pseudocode of Trainer |
|---|
| Input: Game data from the self-play process; the current parameters of the Representation, Prediction and Dynamic models. |
| Output: The updated weight parameters of the models. |
| 1 start |
| 2 (25) representation function: transform the observation into a hidden state |
| 3 repeat |
| 4 (26) prediction function: choose the action and estimate the value |
| 5 (27) dynamic function: use the environment dynamics and reward function to calculate the next state and the corresponding reward |
| 6 (28) compute the loss and update the parameters |
| 7 until a game training batch is complete |
| 8 end |
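To make Algorithm 1 concrete, the sketch below runs a forward pass for one training sample, unrolled through the three functions with tiny hypothetical linear models. It computes the loss used in step 6. The gradient update itself is left to an automatic-differentiation framework and is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
OBS, HID, ACT = 6, 4, 3   # hypothetical observation, hidden-state, and action sizes

W_repr = rng.normal(0, 0.1, (OBS, HID))
W_pol = rng.normal(0, 0.1, (HID, ACT))
w_val = rng.normal(0, 0.1, HID)
W_dyn = rng.normal(0, 0.1, (HID + ACT, HID))
w_rew = rng.normal(0, 0.1, HID + ACT)


def representation(obs):            # step 2: observation -> hidden state
    return np.tanh(obs @ W_repr)


def prediction(s):                  # step 4: hidden state -> (policy, value)
    logits = s @ W_pol
    p = np.exp(logits - logits.max())
    return p / p.sum(), float(s @ w_val)


def dynamics(s, a):                 # step 5: (hidden state, action) -> (next state, reward)
    x = np.concatenate([s, np.eye(ACT)[a]])
    return np.tanh(x @ W_dyn), float(x @ w_rew)


def unrolled_loss(obs, actions, target_policies, target_values, target_rewards):
    """Loss of one sample unrolled for len(actions) steps (step 6, before the update)."""
    s = representation(obs)
    loss = 0.0
    for k in range(len(actions) + 1):
        policy, value = prediction(s)
        loss += -np.sum(target_policies[k] * np.log(policy + 1e-12))  # policy: cross-entropy
        loss += (value - target_values[k]) ** 2                        # value: squared error
        if k < len(actions):
            s, reward = dynamics(s, actions[k])
            loss += (reward - target_rewards[k]) ** 2                  # reward: squared error
    return loss


K = 2
loss = unrolled_loss(rng.normal(size=OBS), actions=[0, 2],
                     target_policies=[np.full(ACT, 1.0 / ACT)] * (K + 1),
                     target_values=[0.0] * (K + 1),
                     target_rewards=[0.0] * K)
```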

The corresponding network structure and training process for joint parallel training of three networks are shown in Figure 6.

Figure 6.

Schematic Diagram of Joint Training of Three Functions.

4. Simulation Results

The simulation verification consists of two stages: parameter setting and presentation of the results. Section 4.1 specifies the model parameters, and Section 4.2 presents the simulation results.

4.1. Model and Neural Network Parameter Design

Based on the preceding analysis, parameters are specified for the Microgrid Scheduling Model, the reinforcement learning algorithm, and the neural network structure.

4.1.1. Microgrid Scheduling Model Parameters

First, an environment simulator is constructed to reproduce the microgrid operation model described in Section 2. The relevant model parameters are listed in Table 2.

Table 2.

Parameters of the Environmental Simulator.

In Table 2, the comfort temperature settings are default values that may vary over time, and the upper and lower limits of the bus voltage are given after normalization.

4.1.2. Reinforcement Learning Algorithm Parameters

Table 3 lists the training parameters of the MuZero-based reinforcement learning algorithm described in Section 3.

Table 3.

Parameters of the Reinforcement Learning Algorithm.

4.1.3. Neural Network Parameters

Following the learning model structure described in Section 3, the Representation, Prediction, and Dynamic networks are all built from fully connected layers. Table 4 gives the detailed structure of these layers.

Table 4.

Structure of the Fully-connected Layer in Learning Neural Network.

4.2. Result

Microgrids can operate in two modes: islanded operation and grid-connected operation. The fundamental difference between them is whether a microgrid is connected to other microgrids. This paper simulates and analyzes both modes with the proposed reinforcement learning method. To facilitate training, each reward is replaced by the absolute value of its reciprocal.
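The reward transformation amounts to a one-line function. The original rewards are non-positive penalties, so the transformation maps larger penalties to smaller positive values. The `eps` guard for a zero reward is an added assumption, because the text does not say how that case is handled.

```python
def transform_reward(r, eps=1e-6):
    """Return |1 / r|; eps is a hypothetical guard against division by zero."""
    if abs(r) < eps:
        return 1.0 / eps
    return abs(1.0 / r)


print(transform_reward(-4.0))   # 0.25
print(transform_reward(-0.5))   # 2.0
```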

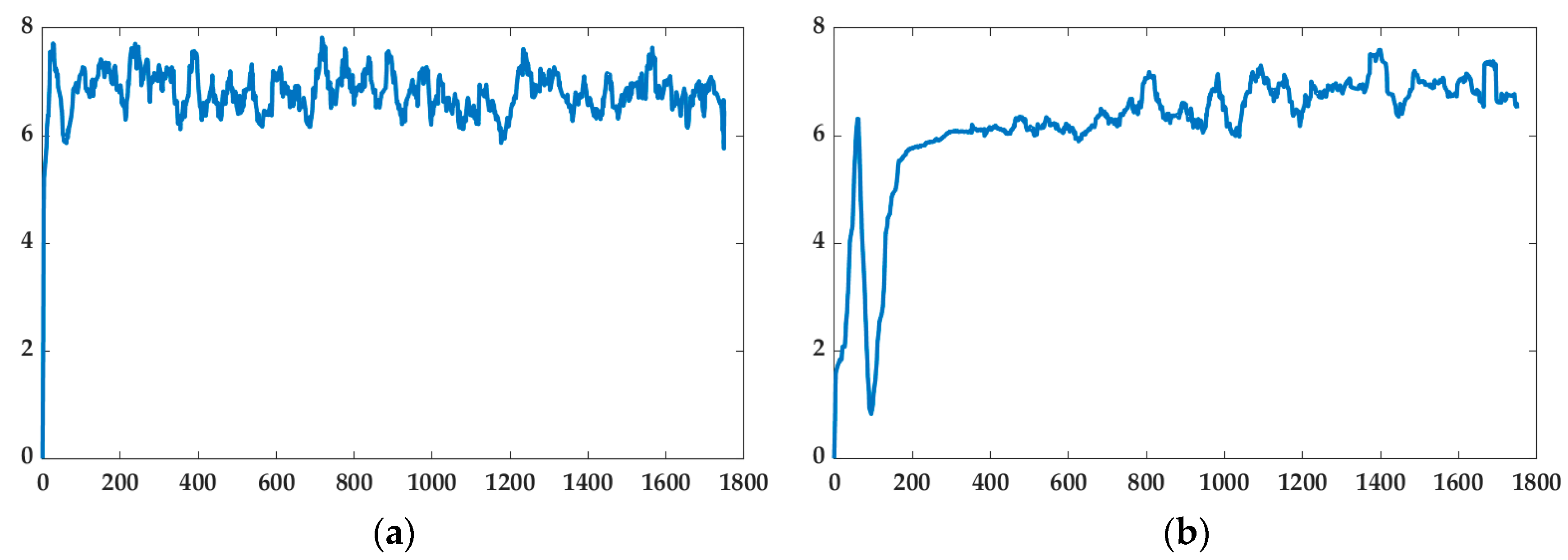

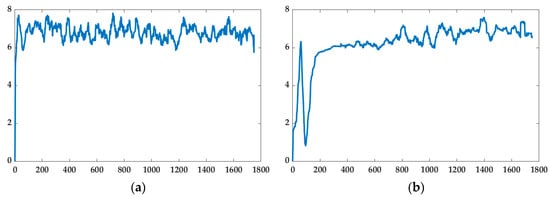

4.2.1. Islanded Operation

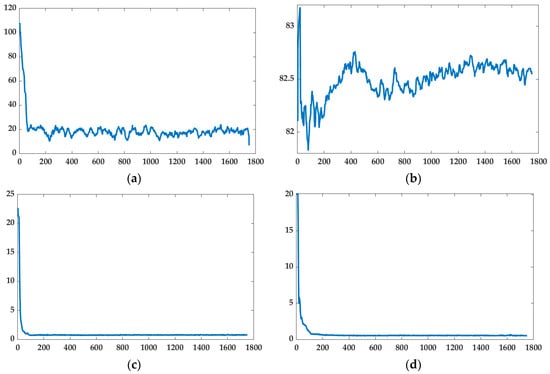

“Islanded operation” refers to a single microgrid on a bus that operates independently and is sustained by its own electrical energy. In the islanded case, the number of microgrids on the bus is therefore set to one, and that microgrid receives the entire bus reward. We first examine the performance of the method in this environment, as shown in Figure 7.

Figure 7.

Performance of the Algorithm in Microgrid Island Operation Mode. (a) Optimization curve for timely rewards during training; (b) Optimization curve of expected average value during training.

Figure 7a shows how the algorithm optimizes the timely reward. Conditions differ in each period of the energy environment, so the algorithm primarily targets long-term stable operation of the microgrid rather than maximizing timely rewards. Figure 7b shows the optimization of the expected average reward. This curve has converged, indicating that the algorithm has completed value-path optimization for the microgrid scheduling problem.

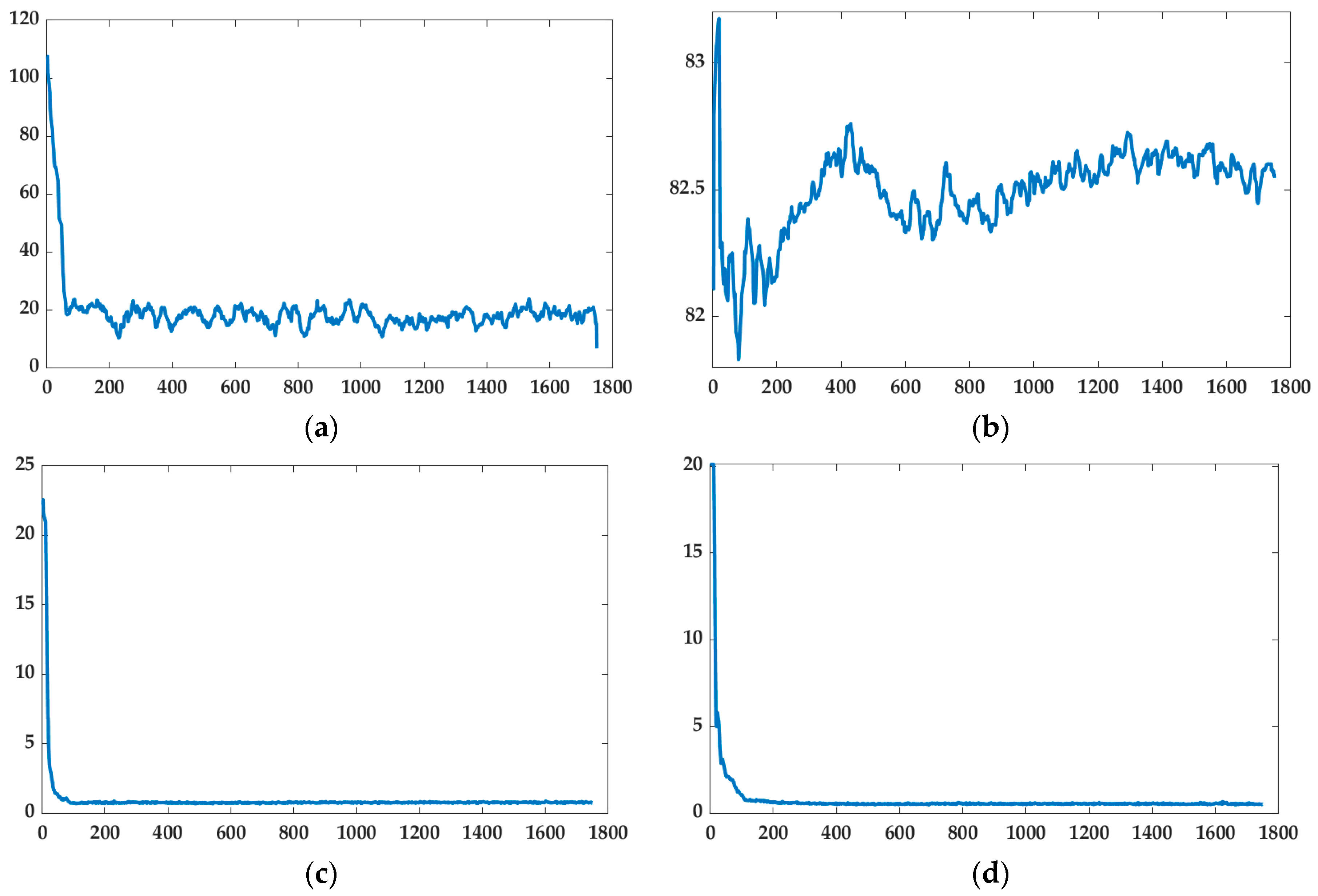

Next, learning about the environment mainly involves training three networks: policy, reward, and value. Figure 8 shows the optimization of their loss values.

Figure 8.

Performance of the MESA Recognizing Environment (a) Total Weighted Loss; (b) Policy Weighted Loss; (c) Value Weighted Loss; (d) Reward Weighted Loss.

As shown in Figure 8c,d, the losses of both the Value and Reward networks have decreased below 1. This suggests that MESA has learned the environment's timely rewards and expected average values accurately. The policy network loss, however, is converging only gradually and remains comparatively high. This may be a consequence of the current discretization level. A finer discretization enlarges the action space and raises the probability that two or more actions have equal expected values. When the search splits its visits among such equally valued actions, the policy target is itself spread over several actions. The cross-entropy loss therefore cannot fall below the entropy of that target distribution. Since the policy loss has nonetheless levelled off, the results are considered acceptable.

4.2.2. Grid-Connected Operation

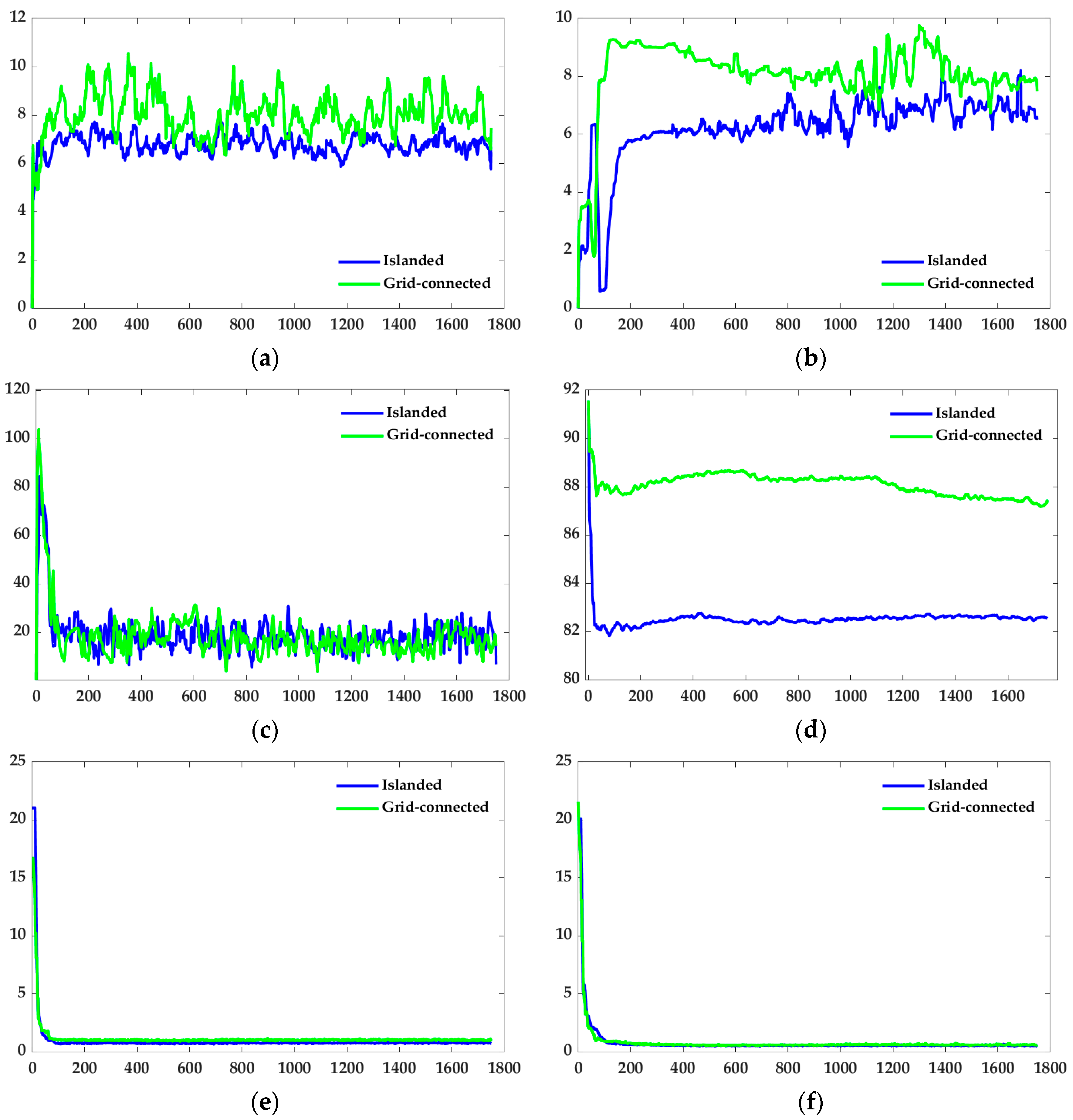

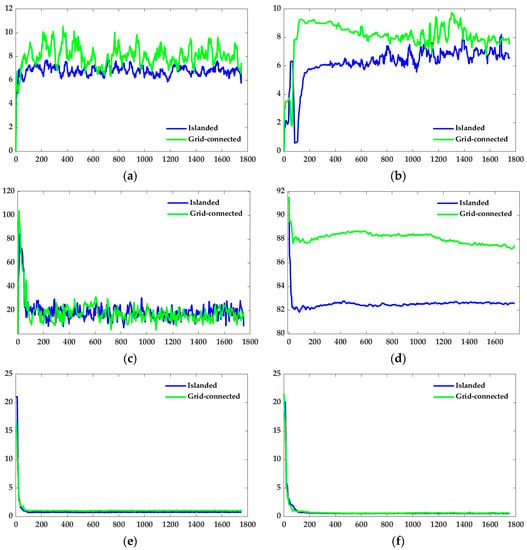

Unlike islanded microgrids, grid-connected microgrids must consider not only their own self-sufficiency but also the optimal joint operation of multiple microgrids. In this study, several microgrids share the bus, and the cumulative reward is determined by averaging the system reward across the microgrids. Figure 9 compares the results with those of islanded operation.

Figure 9.

Comparison of Training Results of MESA in Islanded and Grid-connected Modes (a) Total Reward; (b) Mean Value; (c) Total Weighted Loss; (d) Policy Weighted Loss; (e) Value Weighted Loss; (f) Reward Weighted Loss.

Figure 9a,b show that, evaluated against the IEEE standards, both the timely reward and the expected mean value achieved in grid-connected operation exceed those achieved in islanded operation. Interaction among the microgrids after grid connection improves their stability, which supports the effectiveness of the algorithm for scheduling grid-connected operation. However, as Figure 9c–f show, the greater complexity of the grid-connected model causes the loss values of the policy, value, and reward networks to decrease more slowly.

5. Conclusions

In this paper, we examined the optimal scheduling problem of microgrids that include clean energy sources and applied a model-based reinforcement learning algorithm to solve it. Through self-play, in which MCTS is guided by three learned functions (representation, prediction, and dynamics), the proposed method effectively learns an operational model of the microgrid. It further optimizes the operating strategy by updating the network parameters with the game data accumulated during self-play and by optimizing the reward function, thereby reducing the computational burden and improving the robustness and adaptability of the strategy. Using a microgrid environment simulator that includes HVAC, PV, and ES systems, we showed that the proposed model-based reinforcement learning method can be applied to both islanded and grid-connected operating modes. The simulation results demonstrate that the proposed algorithm is effective for the optimal scheduling of microgrid systems. The convergence of the value and reward network losses shows that the algorithm learns high-value actions in the environment efficiently. The policy network loss converges to a comparatively higher value because the action space is large and several approximately equally high-value actions are available to choose from.
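The roles of the three learned functions named above can be sketched in MuZero-style terms: the representation function encodes a real observation into a latent state, the dynamics function maps a latent state and an action to a predicted reward and the next latent state, and the prediction function maps a latent state to a policy and a value. MCTS queries these functions rather than the real simulator. The sketch below only shows how the functions compose during an unroll along a given action sequence, not the tree search itself; the toy functions in the usage note are hypothetical stand-ins for trained networks.

```python
from typing import Callable, List, Optional, Sequence, Tuple

State = Tuple[float, ...]


def unroll(
    observation: Sequence[float],
    actions: Sequence[int],
    represent: Callable[[Sequence[float]], State],
    dynamics: Callable[[State, int], Tuple[float, State]],
    predict: Callable[[State], Tuple[List[float], float]],
) -> List[Tuple[List[float], float, Optional[float]]]:
    """Compose the three learned functions along an action sequence.

    Returns one (policy, value, reward) tuple per step. The first entry has
    reward None because no action has been taken from the initial state.
    """
    state = represent(observation)           # representation: observation -> latent state
    policy, value = predict(state)           # prediction: latent state -> (policy, value)
    outputs: List[Tuple[List[float], float, Optional[float]]] = [(policy, value, None)]
    for a in actions:
        reward, state = dynamics(state, a)   # dynamics: (latent state, action) -> (reward, next state)
        policy, value = predict(state)
        outputs.append((policy, value, reward))
    return outputs
```

For example, with the toy functions `represent = lambda obs: (sum(obs),)`, `dynamics = lambda s, a: (float(a), (s[0] + a,))`, and `predict = lambda s: ([0.5, 0.5], s[0])`, calling `unroll([1.0, 2.0], [1, 2], represent, dynamics, predict)` returns three entries, one for the initial state and one for each action.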

Author Contributions

Conceptualization, J.Y.; Methodology, J.Y.; Software, J.X.; Validation, Y.G.; Formal analysis, J.X.; Resources, N.Z.; Writing—original draft, J.Y.; Writing—review & editing, N.Z. and Y.G.; Visualization, N.Z. and Y.G. All authors have read and agreed to the published version of the manuscript.

Funding

National Natural Science Foundation of China (62203004).

Data Availability Statement

Data is unavailable due to privacy.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Alanne, K.; Saari, A. Distributed energy generation and sustainable development. Renew. Sustain. Energy Rev. 2006, 10, 539–558.

- Song, Z.; Wang, X.; Wei, B.; Shan, Z.; Guan, P. Distributed Finite-Time Cooperative Economic Dispatch Strategy for Smart Grid under DOS Attack. Mathematics 2023, 11, 2103.

- Li, Y.; Gao, D.W.; Gao, W.; Zhang, H.; Zhou, J. A Distributed Double-Newton Descent Algorithm for Cooperative Energy Management of Multiple Energy Bodies in Energy Internet. IEEE Trans. Ind. Inform. 2021, 17, 5993–6003.

- Zhang, H.; Li, Y.; Gao, D.W.; Zhou, J. Distributed Optimal Energy Management for Energy Internet. IEEE Trans. Ind. Inform. 2017, 13, 3081–3097.

- Parhizi, S.; Lotfi, H.; Khodaei, A.; Bahramirad, S. State of the art in research on microgrids: A review. IEEE Access 2015, 3, 890–925.

- Li, T.; Huang, R.; Chen, L.; Jensen, C.S.; Pedersen, T.B. Compression of Uncertain Trajectories in Road Networks. In Proceedings of the 46th International Conference on Very Large Data Bases, Online, 31 August–4 September 2020; Volume 13, pp. 1050–1063.

- Rezvani, A.; Gandomkar, M.; Izadbakhsh, M.; Ahmadi, A. Environmental/economic scheduling of a micro-grid with renewable energy resources. J. Clean. Prod. 2015, 87, 216–226.

- Li, Y.; Zhang, H.; Liang, X.; Huang, B. Event-triggered based distributed cooperative energy management for multienergy systems. IEEE Trans. Ind. Inform. 2019, 15, 2008–2022.

- Liu, C.; Wang, D.; Yin, Y. Two-Stage Optimal Economic Scheduling for Commercial Building Multi-Energy System through Internet of Things. IEEE Access 2019, 7, 174562–174572.

- Li, Y.; Gao, D.W.; Gao, W.; Zhang, H.; Zhou, J. Double-Mode Energy Management for Multi-Energy System via Distributed Dynamic Event-Triggered Newton-Raphson Algorithm. IEEE Trans. Smart Grid 2020, 11, 5339–5356.

- Eseye, A.T.; Zheng, D.; Zhang, J.; Wei, D. Optimal energy management strategy for an isolated industrial microgrid using a modified particle swarm optimization. In Proceedings of the 2016 IEEE International Conference on Power and Renewable Energy (ICPRE), Shanghai, China, 21–23 October 2016; pp. 494–498.

- Zeng, Y.; Zhao, H.; Liu, C.; Chen, S.; Hao, X.; Sun, X.; Zhang, J. Multi objective optimization of microgrid based on Improved Multi-objective Particle Swarm Optimization. In Proceedings of the 2022 International Seminar on Computer Science and Engineering Technology (SCSET), Indianapolis, IN, USA, 8–9 January 2022; pp. 80–83.

- Elsayed, W.T.; Hegazy, Y.G.; Bendary, F.M.; El-Bages, M.S. Energy management of residential microgrids using random drift particle swarm optimization. In Proceedings of the 2018 19th IEEE Mediterranean Electrotechnical Conference (MELECON), Marrakech, Morocco, 2–7 May 2018; pp. 166–171.

- Zaree, N.; Vahidinasab, V. An MILP formulation for centralized energy management strategy of microgrids. In Proceedings of the 2016 Smart Grids Conference (SGC), Kerman, Iran, 20–21 December 2016; pp. 1–8.

- Picioroaga, I.I.; Tudose, A.; Sidea, D.O.; Bulac, C.; Eremia, M. Two-level scheduling optimization of multi-microgrids operation in smart distribution networks. In Proceedings of the 2020 International Conference and Exposition on Electrical and Power Engineering (EPE), Iasi, Romania, 22–23 October 2020; pp. 407–412.

- Ma, W.J.; Wang, J.; Gupta, V.; Chen, C. Distributed energy management for networked microgrids using online ADMM with regret. IEEE Trans. Smart Grid 2016, 9, 847–856.

- Fossati, J.P.; Galarza, A.; Martín-Villate, A.; Echeverría, J.M.; Fontán, L. Optimal scheduling of a microgrid with a fuzzy logic controlled storage system. Int. J. Electr. Power Energy Syst. 2015, 68, 61–70.

- Banaei, M.; Rezaee, B. Fuzzy scheduling of a non-isolated micro-grid with renewable resources. Renew. Energy 2018, 123, 67–78.

- Lyu, L.; Shen, Y.; Zhang, S. The Advance of Reinforcement Learning and Deep Reinforcement Learning. In Proceedings of the 2022 IEEE International Conference on Electrical Engineering, Big Data and Algorithms (EEBDA), Changchun, China, 25–27 February 2022; pp. 644–648.

- Leo, R.; Milton, R.S.; Kaviya, A. Multi agent reinforcement learning based distributed optimization of solar microgrid. In Proceedings of the 2014 IEEE International Conference on Computational Intelligence and Computing Research, Coimbatore, India, 18–20 December 2014; pp. 1–7.

- Li, T.; Chen, L.; Jensen, C.S.; Pedersen, T.B. TRACE: Real-time Compression of Streaming Trajectories in Road Networks. In Proceedings of the 47th International Conference on Very Large Data Bases, Copenhagen, Denmark, 16–20 August 2021; Volume 13, pp. 1175–1187.

- Singh, N.; Elamvazuthi, I.; Nallagownden, P.; Badruddin, N.; Ousta, F.; Jangra, A. Smart Microgrid QoS and Network Reliability Performance Improvement using Reinforcement Learning. In Proceedings of the 2020 8th International Conference on Intelligent and Advanced Systems (ICIAS), Kuching, Malaysia, 13–15 July 2021; pp. 1–6.

- Shu, Y.; Bi, W.; Dong, W.; Yang, Q. Dueling double q-learning based real-time energy dispatch in grid-connected microgrids. In Proceedings of the 2020 19th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES), Xuzhou, China, 16–19 October 2020; pp. 42–45.

- Xie, L.; Li, Y.; Xiao, J.; Yang, J.; Xu, B.; Ye, Y. Research on Autonomous Operation Control of Microgrid Based on Deep Reinforcement Learning. In Proceedings of the 2021 IEEE 5th Conference on Energy Internet and Energy System Integration (EI2), Taiyuan, China, 22–24 October 2021; pp. 2503–2507.

- Skiparev, V.; Belikov, J.; Petlenkov, E. Reinforcement learning based approach for virtual inertia control in microgrids with renewable energy sources. In Proceedings of the 2020 IEEE PES Innovative Smart Grid Technologies Europe (ISGT-Europe), The Hague, The Netherlands, 26–28 October 2020; pp. 1020–1024.

- Garrido, C.; Marín, L.G.; Jiménez-Estévez, G.; Lozano, F.; Higuera, C. Energy Management System for Microgrids based on Deep Reinforcement Learning. In Proceedings of the 2021 IEEE CHILEAN Conference on Electrical, Electronics Engineering, Information and Communication Technologies (CHILECON), Valparaíso, Chile, 6–9 December 2021; pp. 1–7.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Weiss, X.; Xu, Q.; Nordström, L. Energy Management of Smart Homes with Electric Vehicles Using Deep Reinforcement Learning. In Proceedings of the 2022 24th European Conference on Power Electronics and Applications (EPE’22 ECCE Europe), Hanover, Germany, 5–9 September 2022; pp. 1–9.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; Adaptive Computation and Machine Learning; MIT Press: Cambridge, MA, USA, 2018.

- Deisenroth, M.; Rasmussen, C.E. PILCO: A model-based and data-efficient approach to policy search. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Bellevue, WA, USA, 28 June–2 July 2011; pp. 465–472.

- Li, T.; Chen, L.; Jensen, C.S.; Pedersen, T.B.; Gao, Y.; Hu, J. Evolutionary Clustering of Moving Objects. In Proceedings of the IEEE 38th International Conference on Data Engineering, Virtual, 9–12 May 2022; pp. 2399–2411.

- Heess, N.; Wayne, G.; Silver, D.; Lillicrap, T.; Erez, T.; Tassa, Y. Learning continuous control policies by stochastic value gradients. Adv. Neural Inf. Process. Syst. 2015, 28.

- Graepel, T. AlphaGo-Mastering the game of go with deep neural networks and tree search. Lect. Notes Comput. Sci. 2016, 9852.

- Li, J.; Ma, X.-Y.; Liu, C.-C.; Schneider, K.P. Distribution System Restoration with Microgrids Using Spanning Tree Search. IEEE Trans. Power Syst. 2014, 29, 3021–3029.

- Biagioni, D.; Zhang, X.; Wald, D.; Vaidhynathan, D.; Chintala, R.; King, J.; Zamzam, A.S. Powergridworld: A framework for multi-agent reinforcement learning in power systems. In Proceedings of the Thirteenth ACM International Conference on Future Energy Systems, Online, 28 June–2 July 2022; pp. 565–570.

- Schrittwieser, J.; Antonoglou, I.; Hubert, T.; Simonyan, K.; Sifre, L.; Schmitt, S.; Guez, A.; Lockhart, E.; Hassabis, D.; Graepel, T.; et al. Mastering atari, go, chess and shogi by planning with a learned model. Nature 2020, 588, 604–609.

- Coulom, R. Efficient selectivity and backup operators in Monte-Carlo tree search. In Proceedings of the Computers and Games: 5th International Conference, CG 2006, Turin, Italy, 29–31 May 2006; Revised Papers 5. Springer: Berlin/Heidelberg, Germany, 2007; pp. 72–83.

- Sinclair, S.; Wang, T.; Jain, G.; Banerjee, S.; Yu, C. Adaptive discretization for model-based reinforcement learning. Adv. Neural Inf. Process. Syst. 2020, 33, 3858–3871.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).