Abstract

An easy method for evaluating snowpack depth at remote places was investigated to support the daily lives of later-stage elderly persons. The method uses a smartphone camera and augmented reality markers (AR markers). A general-purpose smartphone with a high-resolution camera was used both to observe the snowpack depth at the remote place and to control the robot remotely via a Bluetooth device. Image processing using artificial intelligence technology (AI technology) was adopted for detecting the AR markers and for evaluating the snowpack depth.

1. Introduction

Population decline and aging have become major social issues in Japan. In particular, the number of later-stage elderly persons living in marginal areas (regions where most residents are older than 65 years and where the regional infrastructure and the support for public social activities have deteriorated significantly) has increased [1,2,3,4]. The previous report [4] noted, citing several sources, that the number of later-stage elderly persons is expected to exceed 15 million by 2030, which is more than 10% of the Japanese population, and that more than 20% of them will develop severe dementia that makes it difficult to live alone. Toyama Prefecture, where the authors conduct their fieldwork, is in the same situation. About 70% of Toyama Prefecture is mountainous, and there are almost 1300 communities that are likely to be marginal [5]. A questionnaire survey indicated that the growing burden of snow removal is a major concern in these areas [5], because early-stage elderly persons have to remove snow from the houses of later-stage elderly persons in their place. During recent heavy snowfalls, social workers and city officers have had to confirm the safety of these persons and then help with their snow removal [3,4]. However, this has sometimes become difficult, either because of unexpectedly large amounts of snowfall or because the number of later-stage elderly persons has rapidly grown beyond the number of public support members. Therefore, a simple robot for monitoring remote places has been investigated as part of a cooperative snow removal plan [4]. Many pieces of equipment have already been proposed for observing snowfall, floods, avalanches, animal damage, etc. In the previous report [4], an AI (artificial intelligence) sound-recognition microcomputer attached to the smartphone was used as the communication device between a laboratory PC and a remote robot for observing snowpack depth. However, that method required complex adjustment of the sound recording.
Therefore, in this report, an easier communication procedure was investigated using commercial communication software and a general-purpose “Bluetooth switch”. In the experiment, the “AirDroid V3.5.1” software (hereafter called AirDroid) and a four-channel Bluetooth switch were used to build the proposed remote monitoring robot. The results showed that the proposed procedure provided smooth, stress-free remote control while maintaining a snowpack depth evaluation accuracy of ±1 cm. The details are described below.

2. Proposed Remote Place Snowpack Depth Evaluation Procedure

Snowpack depth is closely related to our lives and has a wide range of influences, for example on the weather itself, water utilization plans, and future agricultural production plans. Therefore, methods for measuring and quantifying snowpack depth have been studied in various countries, and many papers have been published in recent years [4,6,7,8,9,10,11,12,13,14,15]. In particular, the current trend is research that uses unmanned aerial vehicles, global navigation satellite systems, artificial intelligence, and various numerical simulations to predict the total amount of snow or to evaluate snowpack depth over a wide area. Moreover, many studies have addressed snowpack depth in familiar living areas [4,16,17,18,19,20,21]. Some photogrammetry research on snowpack depth evaluation addresses snow disasters related to daily life, such as avalanches, floods, the collapse of agricultural plastic greenhouses and vacant houses, and the sinking of small ships.

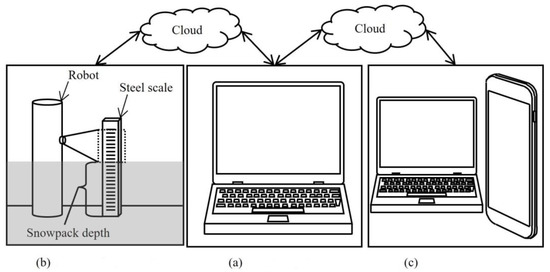

Many countries have developed photogrammetry procedures for the purposes described above. Among them, a remote monitoring system for snow damage was proposed and developed in [4], and it is improved in the present report. The proposed remote-control snowpack depth observation and evaluation system is shown in Figure 1. The laboratory PC running AirDroid is connected via the cloud to the smartphone mounted on the remote robot, as shown in Figure 1a,b. It was preferable to use a smartphone with a Subscriber Identity Module (SIM) that has a phone number, because the connection was sometimes blocked by security measures when Wireless Fidelity (Wi-Fi) or a data-only SIM was used for the connection route. In addition, the PC and the smartphone had to be reconnected every 72 h to avoid the connection being halted by the security function of AirDroid. During communication, the robot was controlled from the PC via the Bluetooth device connected to the smartphone. A general-purpose “4 Ch BLE” switch was used as the control device of the robot: two channels were used for the upward and return movements, and the remaining two channels were used for clockwise and counterclockwise rotation, respectively. A “Motorola G7 Power” smartphone was used for the communication control experiment, and several laboratory PCs were used for the communication experiments. As long as AirDroid could be used, the experiment did not depend on the PC model. The control commands were sent using the general-purpose serial communication software “Serial Bluetooth Terminal for Android”. The robot could be controlled both by manual input and by automatic input from a Python program. The robot observed the snowpack depth using “Microsoft Skype” or the smartphone’s camera function while it moved. When Skype was used, the video data were captured directly by the laboratory PC.
AirDroid is a powerful tool that provides a variety of functions for controlling the remote robot via the smartphone. As a result, using AirDroid eliminated the need for the complicated sound adjustment of the sound-recognition AI microcomputer reported previously [4]. The obtained data were analyzed using Python and OpenCV to evaluate the snowpack depth, and the resulting snowpack depth data were published on the cloud, as shown in Figure 1c. The proposed monitoring system can be operated remotely, and the information can be collected over a worldwide network.
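The automatic control input described above can be illustrated with a minimal Python sketch. The text does not give the channel numbering or the command strings accepted by the Bluetooth switch, so the `CHANNELS` mapping and the `CH<n>:ON` / `CH<n>:OFF` format below are purely hypothetical; only the four movements and the 72 h reconnection interval come from the text.

```python
from datetime import datetime, timedelta

# Hypothetical channel assignment for the 4-channel switch. The text only states
# that two channels drive the upward/return movements and two drive the rotation;
# the numbering and the command strings are illustrative assumptions.
CHANNELS = {"up": 1, "return": 2, "clockwise": 3, "counterclockwise": 4}

# Reconnection interval between the PC and the smartphone stated in the text.
RECONNECT_INTERVAL = timedelta(hours=72)


def build_command(movement: str, on: bool) -> str:
    """Return a hypothetical serial command such as 'CH1:ON' for a movement."""
    channel = CHANNELS[movement]
    state = "ON" if on else "OFF"
    return f"CH{channel}:{state}"


def scan_sequence() -> list:
    """Commands for one upward scan followed by the return movement."""
    return [
        build_command("up", True),
        build_command("up", False),
        build_command("return", True),
        build_command("return", False),
    ]


def reconnect_due(last_connected: datetime, now: datetime) -> bool:
    """True when the PC-smartphone link should be re-established."""
    return now - last_connected >= RECONNECT_INTERVAL
```

In practice, each string would be typed or pasted into the serial terminal application; the sketch only shows how a program might generate the sequence and track when the link needs to be renewed.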

Figure 1.

Remote-control snowpack depth observation and evaluation system: (a) Laboratory PC, (b) Remote place robot, and (c) Citizen’s devices.

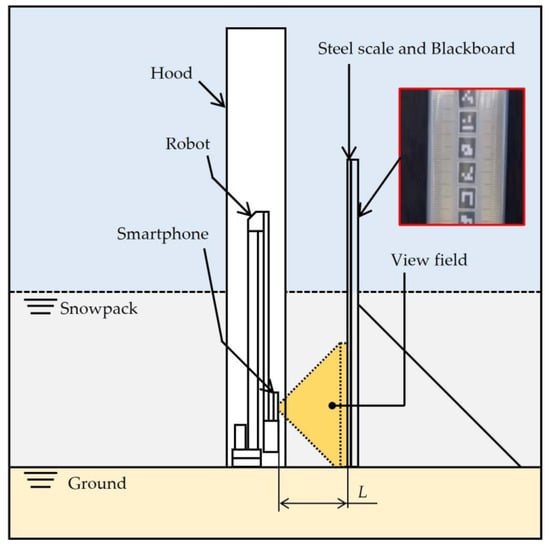

Schematic illustrations of the remote observation and evaluation apparatus are shown in Figure 2. The apparatus consists of the remote-control robot and a steel scale placed in front of the robot. The basic measurement and evaluation principle is the same as in the previous report [4]. When snow falls, the remote robot and the steel scale are buried in the snowpack. The robot observes the buried steel scale during its upward and return movements and captures video or image data that contain the snowpack depth information. The steel scale carries ArUco markers that indicate the height from the ground, and the snowpack depth is evaluated using these markers. The smartphone’s field of view shifts upward together with the upward movement of the robot. While the smartphone is below the snowpack surface, it captures an image of the snow wall. The measurement distance L is the distance between the smartphone and the steel scale. The robot is usually 70 cm high, with a maximum height of 140 cm, and it occupies an area 20 cm in diameter. In this report, a rotation function was added to the robot compared with the previous report [4]. For practical use, it is assumed that the robot will be covered with an acrylic hood of some shape to protect it from rain and snow. Therefore, the effect of the hood shape on the AR marker recognition rate was investigated.

Figure 2.

Schematic illustrations of remote observation and evaluation apparatus.

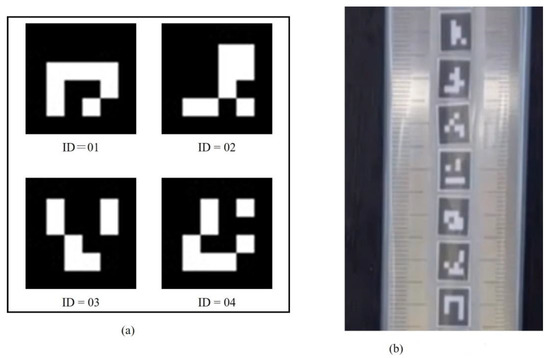

An example of the AR marker is shown in Figure 3. ArUco markers were used in this study. ArUco markers are a well-known, robust sensing technique for detecting object positions in an image using an AI program and augmented reality (AR) technology [22,23,24,25,26,27,28]. Each AR marker has a unique ID number, as shown in Figure 3a. The markers were generated using the contribution (contrib) package of OpenCV (Open Source Computer Vision Library) for Python. The software is open source and has powerful image-processing functionality. The markers were printed with a general-purpose laser printer, a Canon LASER SHOT LBP-1310 (Canon Japan Inc., Tokyo, Japan), on general copy paper with a basis weight of 64 g∙m⁻². In this study, 100 different printed ArUco markers, each 8.0 mm × 8.0 mm in size, were attached to a steel scale (Shinwa Rules Co., Ltd., Tokyo, Japan, 1 m, JIS B-7516, 1st grade [29]) at 1.0 cm intervals, as shown in Figure 3b. The ID = 01 marker was attached at the 1 cm position of the steel scale, with the center of the marker aligned with the 1 cm height. In the same way, the 100 markers were attached along the steel scale up to 100 cm. The markers and the steel scale were then fully covered with a 0.04 mm thick polyurethane sheet for waterproofing.
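The marker layout on the steel scale can be summarized in a short Python sketch. It assumes, as the text describes, that the marker with ID k is centered at a height of k cm, and it additionally assumes that each square marker is mounted with its edges parallel to the scale. The function names are illustrative and are not taken from the authors’ program.

```python
MARKER_SIZE_MM = 8.0    # printed marker edge length (from the text)
MARKER_PITCH_MM = 10.0  # one marker per 1.0 cm of scale (from the text)
MARKER_COUNT = 100      # marker IDs 1 to 100 (from the text)


def marker_center_mm(marker_id: int) -> float:
    """Height of the marker center above the ground, in mm."""
    if not 1 <= marker_id <= MARKER_COUNT:
        raise ValueError(f"marker ID out of range: {marker_id}")
    return marker_id * MARKER_PITCH_MM


def marker_extent_mm(marker_id: int) -> tuple:
    """(bottom, top) edge heights of the marker, in mm (axis-aligned assumption)."""
    center = marker_center_mm(marker_id)
    half = MARKER_SIZE_MM / 2
    return (center - half, center + half)


def gap_between_markers_mm() -> float:
    """Vertical gap between two neighboring markers, in mm."""
    return MARKER_PITCH_MM - MARKER_SIZE_MM
```

Under these assumptions, neighboring markers are separated by a 2 mm gap, and the marker with ID = 10 spans heights from 96 mm to 104 mm.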

Figure 3.

Example of AR marker: (a) AR marker, and (b) Printed AR marker.

The image-capturing assumptions are shown in Table 1. The camera was that of the Motorola G7 Power. The camera was oriented so that the lens surface remained perpendicular to the ground, and the steel scale was oriented so that its reading surface remained perpendicular to the ground. Thus, the camera lens surface and the reading surface of the steel scale were kept parallel during image capture. When a cover hood was used, the 10 mm thick transparent acrylic acted as a filter. Both sunlight and fluorescent light were used as light sources during image capture.

Table 1.

Image-capturing assumptions.

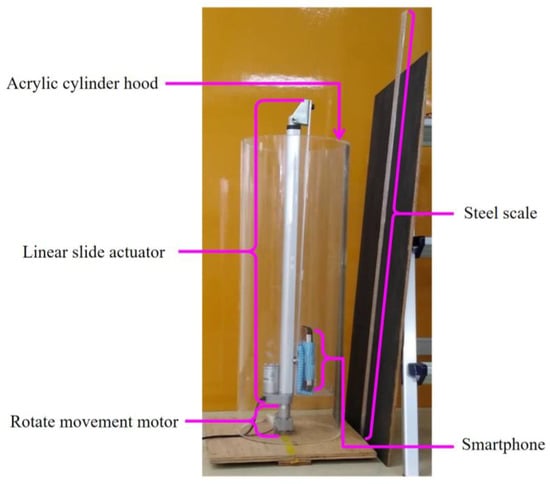

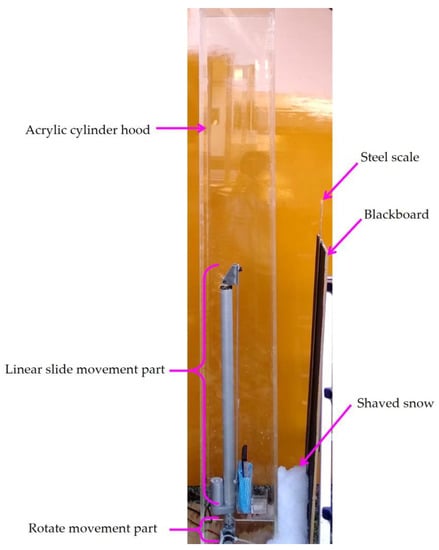

The effect of the hood shape on the ArUco marker recognition rate was investigated. A photograph of the hood setup used in the pre-experiment is shown in Figure 4. Box-type and cylinder-type hoods were prepared for the observation pre-experiments. The conditions were classified as cylinder filter, plate filter (using the box hood), and Non-filter (no hood), according to the hood shape or the absence of a hood. Because the acrylic hood was the most expensive part of the experimental equipment, the smartphone was covered with a short hood in the pre-experiment to reduce cost, as shown in Figure 4. After the effect of the hood shape on the recognition rate had been clarified, a full-cover hood was newly produced and used for the field test. It was confirmed that the hood was large enough to cover the smartphone’s field of view completely. In the pre-experiment, the difference in recognition rate between hood shapes was investigated in the laboratory and in the field, with no obstacles between the smartphone and the steel scale, as shown in Figure 4. The acrylic hoods complied with the Japanese Industrial Standards (JIS K6718-2 [30]), and hoods about 10 mm thick were prepared for the experiments. The shortest distance between the smartphone and the acrylic hood wall was 10 cm.

Figure 4.

Photograph of hood setup for pre-experiment for obtaining hood shape effect on recognition rate.

An example photograph of the laboratory PC display during remote communication is shown in Figure 5. The left side shows AirDroid, within which the Serial Bluetooth Terminal software was launched. The remote-control commands were transmitted both automatically, using the developed Python program, and by manual input, and the communication performance of the proposed procedure was confirmed. The right side shows the Microsoft Skype V 8.98.0.206 software (hereafter called Skype), which provides high-resolution video. The communication software is based in Singapore and provides worldwide telecommunication, and this report confirmed that the proposed procedure achieves good remote communication over distances of several thousand km.

Figure 5.

Example photograph of laboratory PC display during remote communication.

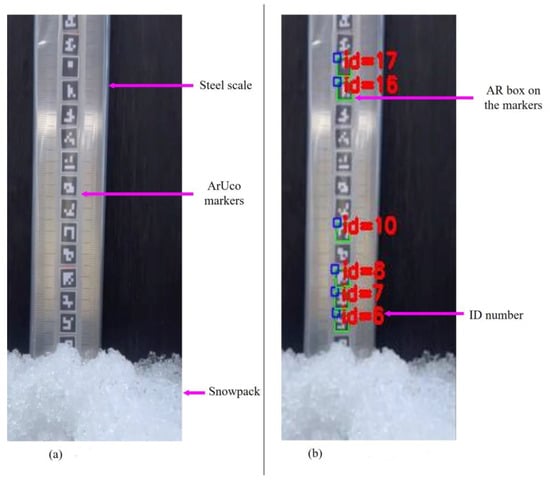

An example of a captured original image and the corresponding processed image is shown in Figure 6. Figure 6a shows the original image. Figure 6b shows the processed image, in which a green AR (augmented reality) box and a red ID number indicating the marker height are overlaid on each detected marker. The AR information “Id = 10” means that the marker is located 10 cm above the ground. Accordingly, Figure 6b shows that the snowpack depth was almost 6 cm, and the proposed process has an accuracy of ±1 cm. The AI program did not recognize all the markers, as shown in Figure 6. The recognition rate varied markedly with the degree of light reflection. The recognition rate Rcgn is defined as follows:

$$R_{\mathrm{cgn}} = \frac{N_{\mathrm{AI}}}{N_{\mathrm{hmn}}} \times 100\ [\%]$$

Figure 6.

Example of a captured original image and the processed image: (a) Original image, and (b) Processed image.

Here, NAI is the number of markers recognized by the AI program, and Nhmn is the number of markers recognized by a human. NAI was counted automatically by the developed AI program, and Nhmn was counted manually with the naked eye. In this report, a recognition rate beyond Rcgn = 85% is defined as “Good recognition”; this threshold is the report’s own criterion.
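The definition of the recognition rate can be written as a small Python helper. This is a minimal sketch: the function names are illustrative, and treating “beyond 85%” as strictly greater than 85% is an assumption, since the text does not say whether exactly 85% counts as good recognition.

```python
GOOD_RECOGNITION_THRESHOLD = 85.0  # percent; the report's own criterion


def recognition_rate(n_ai: int, n_hmn: int) -> float:
    """Recognition rate Rcgn in percent: AI-recognized / human-recognized markers."""
    if n_hmn <= 0:
        raise ValueError("the human-recognized marker count must be positive")
    return 100.0 * n_ai / n_hmn


def is_good_recognition(rate_percent: float) -> bool:
    """True when the rate exceeds the threshold (strict comparison is an assumption)."""
    return rate_percent > GOOD_RECOGNITION_THRESHOLD
```

For example, with illustrative counts of 18 markers found by the program out of 20 counted by eye, `recognition_rate(18, 20)` gives 90%, which this helper classifies as good recognition.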

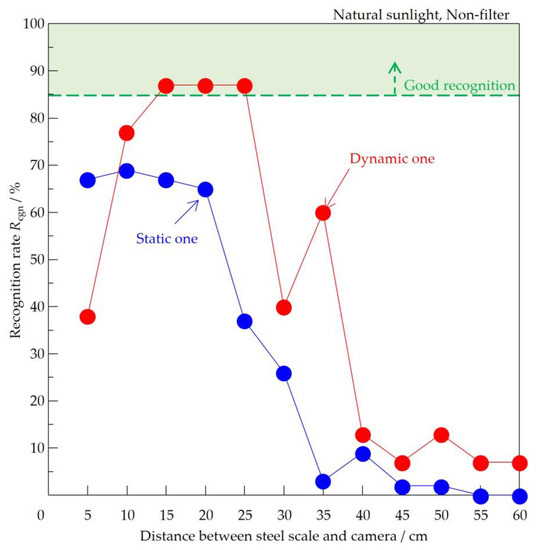

Next, the recognition rate Rcgn was compared between static and dynamic measurements. In the dynamic experiment, the smartphone moved upward and then returned downward. During this motion, the snow-covered steel scale was recorded with the smartphone’s high-resolution digital camera, and videos were captured using Skype. The best recognition rate among the multiple still images extracted from the video data was adopted as the dynamic recognition rate. In contrast, in the static experiment, the steel scale was photographed once per test with the smartphone fixed at the lowest position, and the lowest recognition rate among the test results was adopted as the static recognition rate.
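The two selection rules are asymmetric: the dynamic rate is the best value over the frames of one video, whereas the static rate is the worst value over repeated single shots. A minimal Python sketch of this rule, with illustrative function names and hypothetical input values, is:

```python
def dynamic_recognition_rate(frame_rates) -> float:
    """Best recognition rate among the still images extracted from one video."""
    rates = list(frame_rates)
    if not rates:
        raise ValueError("no frames were evaluated")
    return max(rates)


def static_recognition_rate(test_rates) -> float:
    """Lowest recognition rate among repeated single-shot tests."""
    rates = list(test_rates)
    if not rates:
        raise ValueError("no static tests were evaluated")
    return min(rates)
```

Because the dynamic value is a maximum over many frames and the static value is a minimum over a few shots, the dynamic rate is expected to be at least as high as the static rate whenever the frames are of comparable quality.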

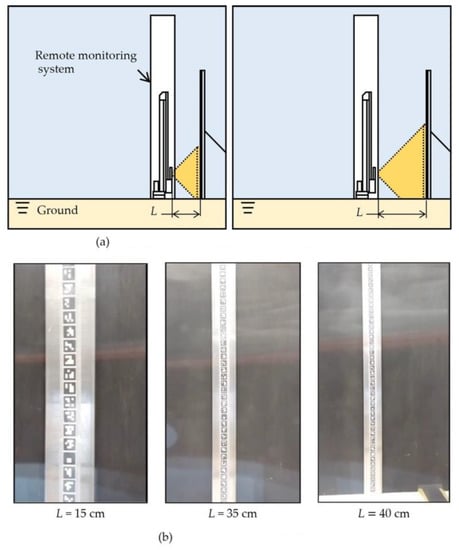

3. Acrylic Hood Shape Effect on AR Marker’s Recognition Rate Score under Fluorescent Light Conditions

At first, the AI recognition performance of the proposed remote monitoring system was unknown. Therefore, the recognition of the markers by humans and by the AI program was investigated in a laboratory room under fluorescent light. A schematic illustration of the marker observation test and examples of the original images obtained are shown in Figure 7. In the marker observation test, the smartphone’s field of view was expected to widen as L increased, as shown in Figure 7a. Therefore, the relation between the human-recognized number of markers and the measurement distance under fluorescent light was investigated. Clear images were obtained, as shown in Figure 7b. The figure shows the images for L = 15, 35, and 40 cm; the image for L = 40 cm includes part of the floor. The markers were counted manually on the PC by the author. Counting became somewhat difficult beyond L = 40 cm because the markers were too small to distinguish with the naked eye. In principle, the marker observation test projects three-dimensional information onto a two-dimensional image. Therefore, it was expected that noticeable observation distortion due to this projection would appear somewhere in the range of measurement distances.

Figure 7.

Schematic illustration of marker observation test and examples of obtained original images. (a) Schematic illustration of marker observation test, and (b) Obtained original images.

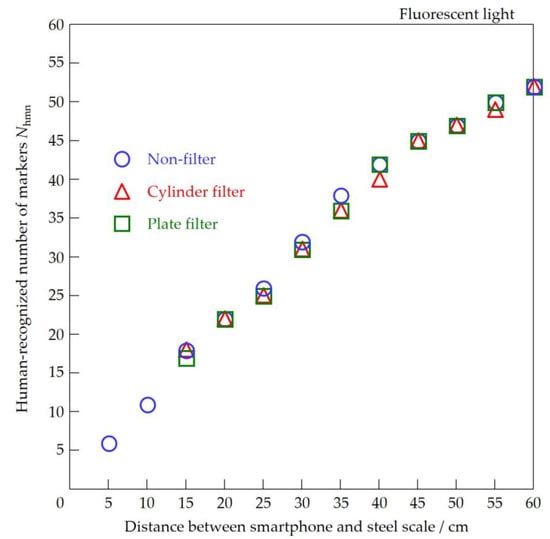

The relations between the human-recognized number of markers and the measurement distance under fluorescent light conditions are shown in Figure 8. When the distance was less than 10 cm, markers were detected and evaluated only in the Non-filter case, because the distance between the smartphone and the acrylic hood wall was 10 cm. When the distance exceeded 35 cm, the rate of increase in the number of detected markers slowed down. This is attributed to the reason described above, namely the distortion of information caused by projecting three-dimensional information onto a two-dimensional image. At these distances, the image also contains information from the corner at the boundary between the floor and the steel scale. At distances of 35 cm or less, the images contained mainly the steel scale only. The number of markers recognized by humans was almost the same with and without the filter. Therefore, a suitable measurement distance was considered to be in the range L = 10–35 cm.

Figure 8.

Relations between human-recognized number of markers and measurement distance under fluorescent light conditions.

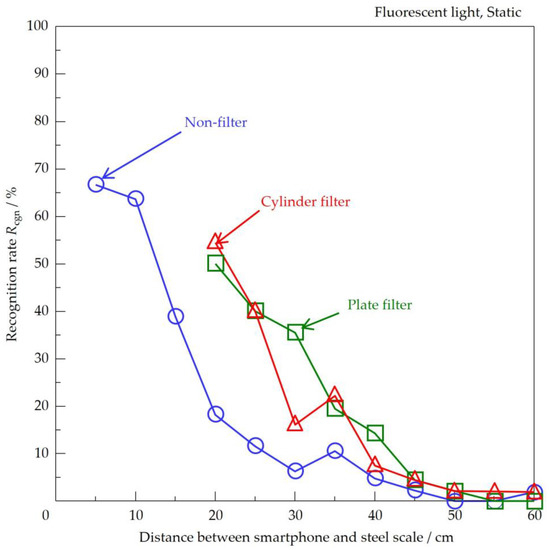

The relations between the recognition rate Rcgn and the measurement distance under fluorescent light conditions are shown in Figure 9. The recognition rate was calculated from the numbers counted by the AI program and by humans, as described above. The recognition rate decreased as the distance increased, and it decreased faster in the Non-filter case than with the two filters. In the Non-filter case, the images were blurry at L = 5 cm because the measurement distance was too short for image capture. Clear images were obtained at L = 10 cm in all cases, but the evaluation area was small, about 10 cm × 10 cm. Moreover, static observation at L = 10 cm would require an array of smartphones placed every 10 cm, meaning that 10 smartphones would be needed for a typical snowpack depth evaluation over a 100 cm range. Therefore, the proposed method, in which a single smartphone moves upward to extend the measurement field of view and captures video data, was expected to be useful.
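The count of 10 smartphones follows from dividing the depth range to be covered by the height of the evaluation area of one fixed camera. A minimal Python sketch of this arithmetic, assuming that the fields of view are stacked without overlap (the function name is illustrative), is:

```python
import math


def cameras_needed(depth_range_cm: float, view_height_cm: float) -> int:
    """Number of fixed cameras needed to cover a depth range without overlap."""
    if depth_range_cm <= 0 or view_height_cm <= 0:
        raise ValueError("both lengths must be positive")
    return math.ceil(depth_range_cm / view_height_cm)
```

With the values from the text, `cameras_needed(100, 10)` returns 10, whereas a single camera that moves upward covers the same range with one device.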

Figure 9.

Relations between recognition rate Rcgn and measurement distance under fluorescent light conditions.

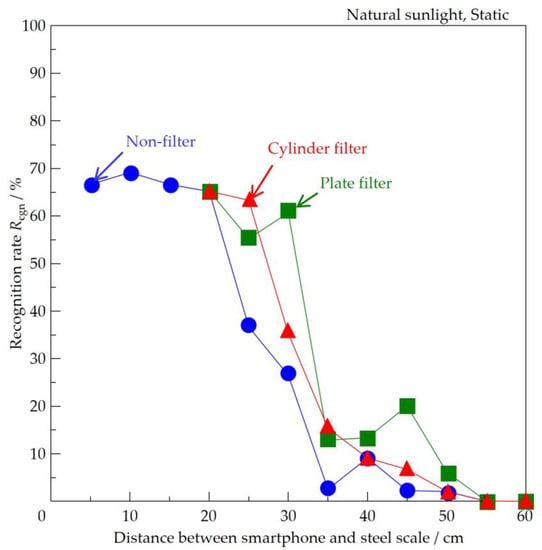

The relations between the recognition rate Rcgn and the measurement distance under natural sunlight conditions are shown in Figure 10. The AR marker recognition rate was higher in all cases than under fluorescent light. AR marker recognition was thus strongly affected by the light conditions. In addition to the type of light, light reflected from the surroundings was also thought to affect the recognition rate. The difference in recognition rate between the cylinder-type acrylic hood and the plate-box type was small, but the cylinder-type hood cost twice as much as the plate-box type. Therefore, a plate-box type hood was adopted in the following experiments; it was 1400 mm in height and 220 mm in width.

Figure 10.

Relations between recognition rate Rcgn and measurement distance under natural sunlight conditions.

4. Dynamic and Static Snowpack Depth Measurements and Evaluations

Dynamic and static snowpack depth measurement and evaluation were investigated. The recognition rate was evaluated under sunlight conditions using the plate filter. A photograph of the snowpack depth measurement apparatus outdoors is shown in Figure 11. For the photograph, an orange veneer board was placed behind the apparatus to make it easier to see; the board was removed in the experiments and would not be present in actual use. Both shaved ice and natural snow were used in the snowpack depth measurement and evaluation experiments.

Figure 11.

Snowpack depth measurement apparatus outdoors.

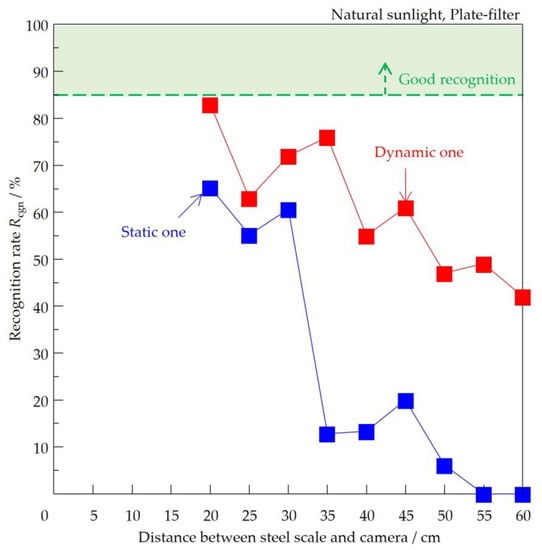

The relations between the recognition rate Rcgn and the measurement distance for dynamic and static measurement in the Non-filter condition are shown in Figure 12. Rcgn improved remarkably with the dynamic measurement procedure. This result is natural, because the dynamic measurement procedure yields 30 still images per second, several hundred still images were evaluated to determine the recognition rate, and the best recognition rate among them was chosen as the dynamic recognition rate. The recognition rate exceeded Rcgn = 85% at L = 15–25 cm.

Figure 12.

Relations between recognition rate Rcgn and measurement distance for dynamic and static measurement under the Non-filter condition.

The relations between the recognition rate Rcgn and the measurement distance for dynamic and static measurement with the plate filter are shown in Figure 13. Unfortunately, the maximum Rcgn did not reach 85%. Rcgn was thought to be strongly affected by the plate filter and by the light reflection conditions. However, the results in Figures 12 and 13 serve only as reference data, because in practice the snowpack depth is determined by automatically evaluating the lowest-positioned ArUco marker in the multiple still images obtained.

Figure 13.

Relations between recognition rate Rcgn and measurement distance for dynamic and static measurement with the plate filter.

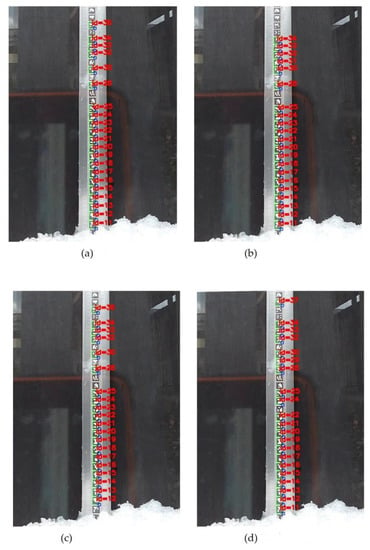

An example of the temporal change of the detected ArUco markers at the smartphone setup position is shown in Figure 14. The still images were taken while the robot was not moving. One frame corresponds to an elapsed time of 1/30 s. The detected markers and their recognition rate changed from frame to frame; the detected ArUco markers were not the same even though the robot remained at the same position. The lowest-positioned marker was determined from several hundred still images using the developed sorting program. In this report, the recognition rate of the lowest-positioned marker was 100% at distances of L = 25 to 30 cm, even though the overall recognition rate was not 100%, as mentioned above. Dynamic measurement, in which video data are captured to detect the lowest-positioned marker, was therefore useful. The measurement accuracy is ±1 cm.
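The idea of selecting the lowest-positioned marker across many frames can be sketched in Python as follows. This is not the authors’ sorting program. It assumes that each frame has already been reduced to a list of detected marker IDs and that the marker with ID k sits at a height of k cm. The depth bounds returned by `depth_interval_cm` are a further assumption, namely that the snow surface lies between the highest buried marker and the lowest visible one, which is consistent with the stated ±1 cm accuracy but is not stated in this form in the text.

```python
def lowest_marker_id(frames):
    """Lowest marker ID detected in any frame, or None if nothing was detected."""
    detected = [marker_id for ids in frames for marker_id in ids]
    return min(detected) if detected else None


def depth_interval_cm(lowest_id: int, pitch_cm: float = 1.0) -> tuple:
    """Assumed (lower, upper) bounds of the snow surface height in cm.

    Assumption: marker (lowest_id - 1) is buried and marker lowest_id is visible,
    so the surface lies between their center heights.
    """
    if lowest_id < 1:
        raise ValueError("marker IDs start at 1")
    return ((lowest_id - 1) * pitch_cm, lowest_id * pitch_cm)
```

For instance, if three consecutive frames yield the hypothetical ID lists `[9, 11, 12]`, `[8, 9, 12]`, and `[10, 11]`, the lowest marker over all frames is 8, even though no single frame contains every visible marker.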

Figure 14.

Temporal change of augmented reality markers at the smartphone setup position: (a) δt (an arbitrary time), (b) δt + 1 frame, (c) δt + 2 frames, and (d) δt + 3 frames.

5. Cooperative Snowpack Depth Measurements and Evaluations Using Both Manual Handling and AI Automatic Programs

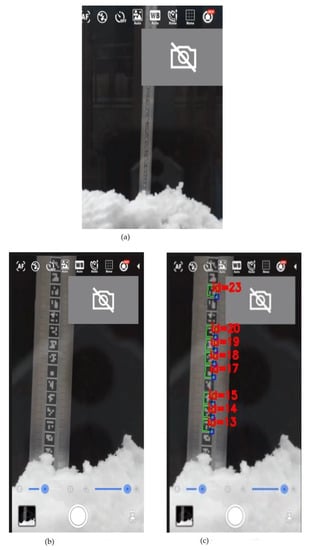

Next, a naked-eye snowpack depth evaluation was performed for L = 30 cm. The images were first magnified using the zoom function of the digital camera and then magnified further using the zoom function of the PC. The snowpack images magnified using the digital camera zoom function are shown in Figure 15. The steel scale was magnified using the smartphone’s zoom function, as shown in the figure, and a clear image was obtained.

Figure 15.

Magnified snowpack images using digital camera zoom function: (a) Original image, (b) Zoomed image, and (c) Processed image.

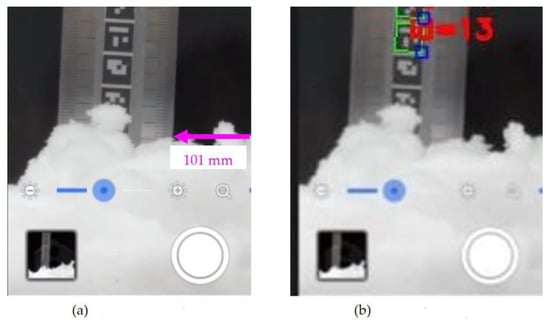

After that, the naked-eye snowpack depth evaluation was performed. The snowpack images magnified further using the PC zoom function are shown in Figure 16. The image resolution decreased when the ArUco marker detection was performed with the developed program, because the process required converting the PNG image file into a JPG image file. Nevertheless, the snowpack depth could be evaluated accurately by first locating the approximate depth with the AI-detected AR marker and then reading the scale graduations from there with the naked eye. In this example, the snowpack depth was 101 mm, and the measurement accuracy is ±1 mm.

Figure 16.

More magnified snowpack images using PC zoom function. (a) Zoomed image, and (b) Processed image.

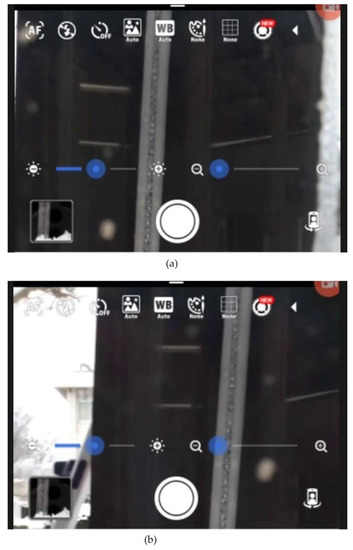

In this report, a horizontal rotation function was added to the observation equipment compared with the previous report [4]. The horizontal movement of the field of view produced by rotating the column to which the smartphone is fixed is shown in Figure 17. When the smartphone rotated to the left, the field of view also rotated to the left. The proposed equipment allows flexible remote measurement through upward and downward movement, horizontal rotation, and camera zoom functions.

Figure 17.

Horizontal movement of the field of view by rotation of the column to which the smartphone is fixed: (a) δt (an arbitrary time), and (b) δt + 1 frame.

6. Conclusions

The remote-controlled snowpack depth evaluation system was constructed from a general-purpose remote-control communication application, a smartphone, and a Bluetooth device. The ArUco marker recognition rate was then investigated, and the following findings were obtained.

- The remote-control process became easier with the Bluetooth device than with the method of the previous report, which required complex adjustment of the AI sound-recognition device.

- The network communication applications used can control the remote robot from the laboratory PC via the smartphone over distances of thousands of km.

- The recognition rate of the lowest-positioned marker was 100% at distances of 25 to 30 cm, and dynamic measurement using video data was useful for detecting the lowest-positioned marker. The accuracy of the snowpack depth evaluation is ±1 cm for automatic robot measurement and ±1 mm for manual measurement.

Author Contributions

Conceptualization, M.I. and Y.Y.; Methodology, M.I. and Y.Y.; Software, M.I.; Validation, M.I.; Investigation, M.I., T.C. and K.K.; Writing—original draft, M.I. and Y.Y.; Writing—review and editing, M.I. and Y.Y.; Project administration, M.I. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting this article will be available upon request.

Acknowledgments

We sincerely thank all the contributors and graduate students related to this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tateno, M. Fundamental Research on Automatic Hot Water Spreading Type Snow Melting Equipment for Roofs and Gardens. Bachelor’s Thesis, Toyama College, Toyama, Japan, 2021. (In Japanese).

- Futayama, T. A Fundamental Research of Remote Place Snowpack Depth Evaluation Using Sound Cognization and Image Processing. Bachelor’s Thesis, Toyama College, Toyama, Japan, 2022. (In Japanese).

- Ishiguro, M.; Yoshii, Y.; Chaki, T. Impulsive repeated snow compaction for snow removal assist. ISATE 2022, S2R5-P2, 1–6.

- Ishiguro, M.; Futayama, T.; Yoshii, Y.; Chaki, T. Simple Remote Place Snowpack Depth Evaluation Procedure using Open-Source Software. J. Inst. Ind. Appl. Eng. 2022, 10, 77–83.

- Toyama Prefecture Regional Promotion Division. Fact-Finding Survey on the Living Conditions of Villages in Hilly and Mountainous Area in Toyama Prefecture; Toyama Prefecture Regional Promotion Division: Toyama, Japan, 2020; pp. 1–2. (In Japanese).

- Shugar, D.H.; Jacquemart, M.; Shean, D.; Bhushan, S.; Upadhyay, K.; Sattar, A.; Schwanghart, W.; McBride, S.; Van Wyk de Vries, M.; Mergili, M.; et al. A massive rock and ice avalanche caused the 2021 disaster at Chamoli, India Himalaya. Science 2021, 373, 300–306.

- Gascoin, S.; Dumont, Z.B.; Deschamps-Berger, C.; Marti, F.; Salgues, G.; López-Moreno, J.I.; Revuelto, J.; Michon, T.; Schattan, P.; Hagolle, O. Estimating fractional snow cover in open terrain from Sentinel-2 using the normalized difference snow index. Remote Sens. 2020, 12, 2904.

- Suriano, Z.J.; Robinson, D.A.; Leathers, D.J. Changing snow depth in the Great Lakes basin. Anthropocene 2019, 26, 100208.

- Wu, Q. Season-dependent effect of snow depth on soil microbial biomass and enzyme activity in a temperate forest in Northeast China. Catena 2020, 195, 104760.

- Guo, D.; Pepin, N.; Yang, K.; Sun, J.; Li, D. Local changes in snow depth dominate the evolving pattern of elevation-dependent warming on the Tibetan Plateau. Sci. Bull. 2021, 66, 1146–1150.

- Revuelto, J.; Gonzalez, E.A.; Gayan, I.V.; Lacroix, E.; Izagirre, E.; López, G.R.; Moreno, J.I.L. Intercomparison of UAV platforms for mapping snow depth distribution in complex alpine terrain. Cold Reg. Sci. Technol. 2021, 190, 10334.

- Donager, J.; Sankey, T.T.; Meador, A.J.S.; Sankey, J.B.; Springer, A. Integrating airborne and mobile lidar data with UAV photogrammetry for rapid assessment of changing forest snow depth and cover. Sci. Remote Sens. 2021, 4, 100029.

- Yang, J.; Jiang, L.; Lemmetyinen, J.; Pan, J.; Luojus, K.; Takala, M. Improving snow depth estimation by coupling HUT-optimized effective snow grain size parameters with the random forest approach. Remote Sens. Environ. 2021, 264, 112630.

- Zaremehrjardy, M.; Razavi, S.; Faramarzi, M. Assessment of the cascade of uncertainty in future snow depth projections across watersheds of mountainous, foothill, and plain area in northern latitudes. J. Hydrol. 2021, 598, 125735.

- Li, Z.; Chen, P.; Zheng, N.; Liu, H. Accuracy analysis of GNSS-IR snow depth inversion algorithms. Adv. Space Res. 2021, 67, 1317–1332.

- Maier, K.; Nascetti, A.; van Pelt, W.; Rosqvist, G. Direct photogrammetry with multispectral imagery for UAV-based snow depth estimation. ISPRS J. Photogramm. Remote Sens. 2022, 186, 1–18.

- Yan, D.; Ma, N.; Zhang, Y. Development of a fine-resolution snow depth product based on the snow cover probability for the Tibetan Plateau. J. Hydrol. 2022, 604, 127027.

- Sano, H. Development of snow cover detection sensor using image processing method. Annu. Rep. Ti-Ikigijutsu 2003, 16, 1–10. (In Japanese).

- Kopp, M.; Tuo, Y.; Disse, M. Fully automated snow depth measurements from time-lapse images applying a convolutional neural network. Sci. Total Environ. 2009, 697, 134213.

- Liu, J.; Chen, R.; Ding, Y.; Han, C.; Ma, S. Snow process monitoring using time-lapse structure-from-motion photogrammetry with a single camera. Cold Reg. Sci. Technol. 2021, 190, 103355.

- Ge, X.; Zhu, J.; Lu, D.; Wu, D.; Yu, F.; Wei, X. Effect of canopy composition on snow depth and below-the-snow-temperature regimes in the temperate secondary forest ecosystem, Northeast China. Agric. For. Meteorol. 2022, 313, 108744.

- Kang, Y.; Han, S. An alternative method for smartphone input using AR markers. J. Comput. Des. Eng. 2014, 1, 153–160.

- Mihalyi, R.-G.; Pathak, K.; Vaskevicius, N.; Fromm, T.; Birk, A. Robust 3D object modeling with a low-cost RGBD-sensor and AR-markers for applications with untrained end-users. Robot. Auton. Syst. 2015, 66, 1–17.

- Ito, M.; Miura, M. Evaluation of Stationary Colour AR Markers for Camera-based Student Response Analyser. Procedia Comput. Sci. 2016, 96, 904–9111.

- Neges, M.; Koch, C.; König, M.; Abramovici, M. Combining visual natural markers and IMU for improved AR based indoor navigation. Adv. Eng. Inform. 2017, 31, 18–31.

- Takenaka, H.; Soga, M. Development of a support system for reviewing and learning historical events by active simulation using AR markers. Procedia Comput. Sci. 2019, 159, 2355–2363.

- Boonbrahm, P.; Kaewrat, C.; Boonbrahm, S. Effective Collaborative Design of Large Virtual 3D Model using Multiple AR Markers. Procedia Manuf. 2020, 42, 387–392.

- Eswaran, M.; Bahubalendruni, R. Challenges and opportunities on AR/VR technologies for manufacturing systems in the context of industry 4.0. J. Manuf. Syst. 2022, 65, 260–278.

- JIS B-7516; Metal Rules. Japanese Industrial Standards: Tokyo, Japan, 2005. Available online: https://www.jisc.go.jp/ (accessed on 25 May 2023).

- JIS K6718-2; Plastics—Poly(methyl methacrylate) Sheets. Japanese Industrial Standards: Tokyo, Japan, 2015. Available online: https://www.jisc.go.jp/ (accessed on 25 May 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).