Education for Sustainable Development: Mapping the SDGs to University Curricula

Abstract

1. Introduction

2. Materials and Methods

2.1. Relevancy of the Sustainability Tracking Assessment and Rating System (STARS)

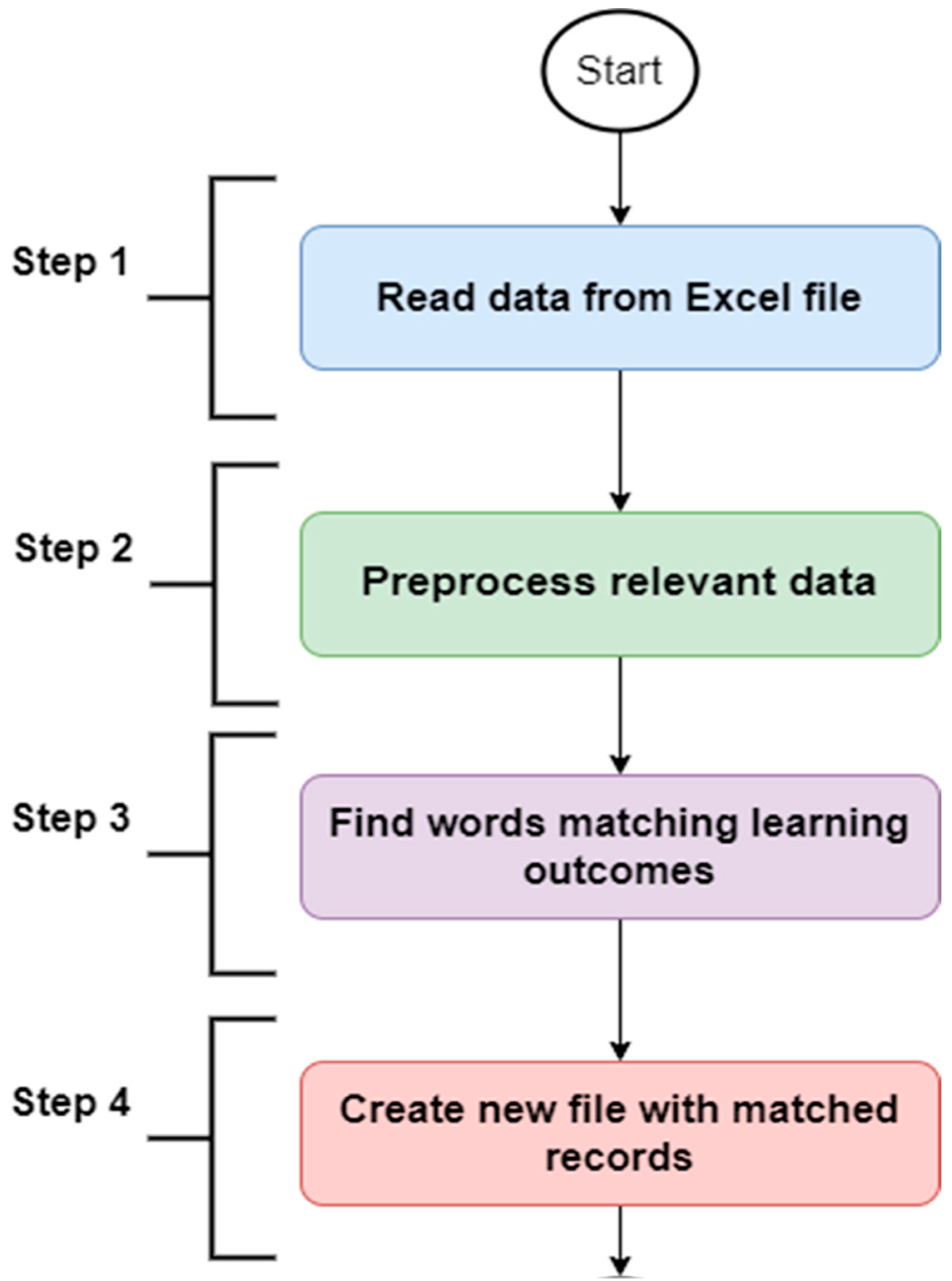

2.2. Keyword SDG Mapping Methodology

2.3. Survey SDG Mapping Methodology

- Gauge respondents' level of interest in sustainability.

- Collect suggestions for furthering sustainable development on campus.

- Identify the barriers to ESD implementation.

- Collect suggestions for furthering ESD implementation in teaching and in research.

2.4. Keyword Sources

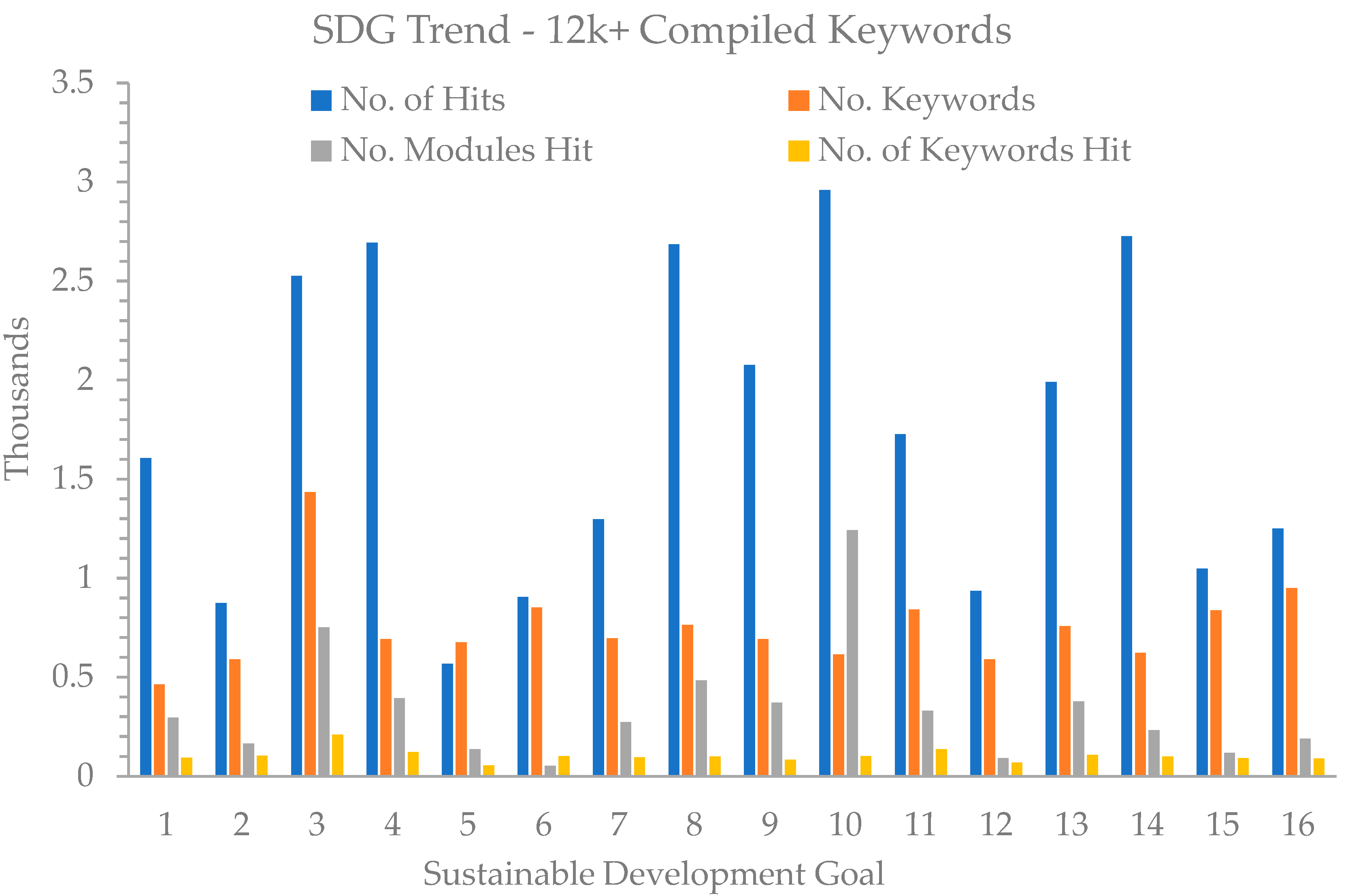

2.5. Keyword Meta-Analysis

- To identify the accuracy of each keyword list.

- To identify how frequently each keyword was hit.

- To review the level of SDG content in the curriculum across the 1617 modules.

- To investigate the correlation in SDG trends among various keyword lists.

2.6. Keyword Critical Analysis

2.7. Keyword List Enrichment Utilising Artificial Intelligence

3. Results

3.1. Meta-Analysis


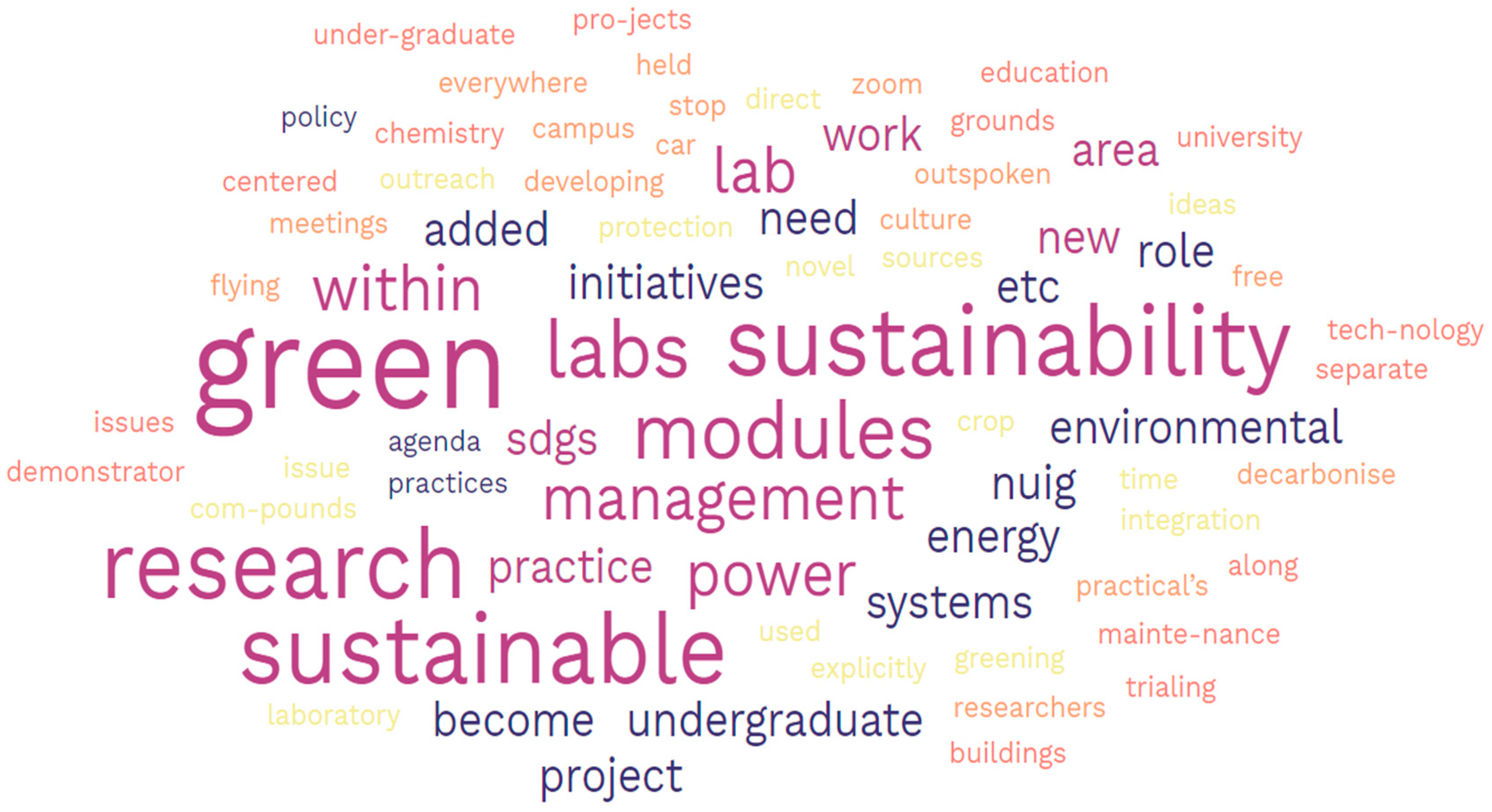

3.2. Critical Analysis and ChatGPT Enrichment

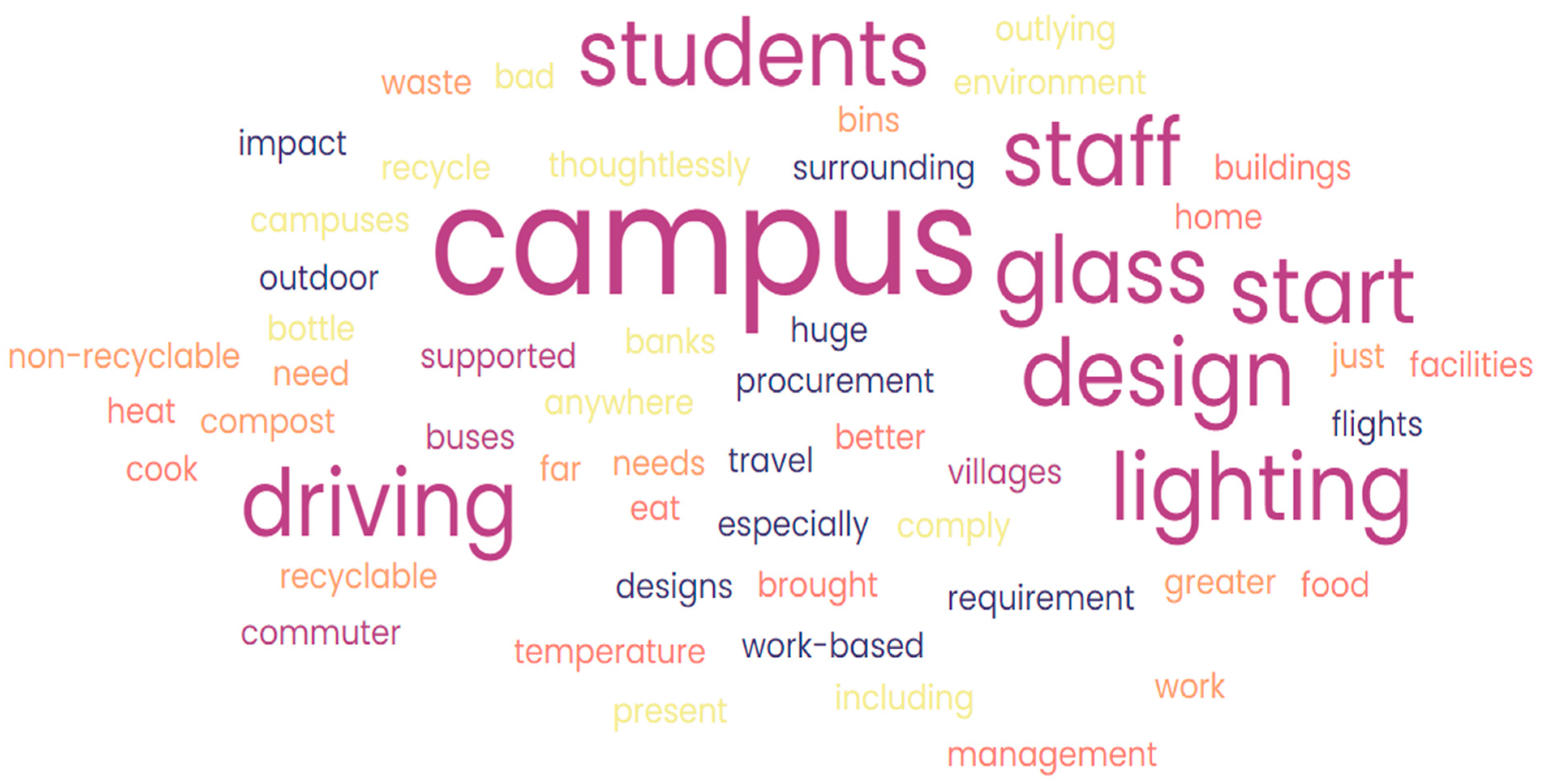

3.3. Survey Questionnaire


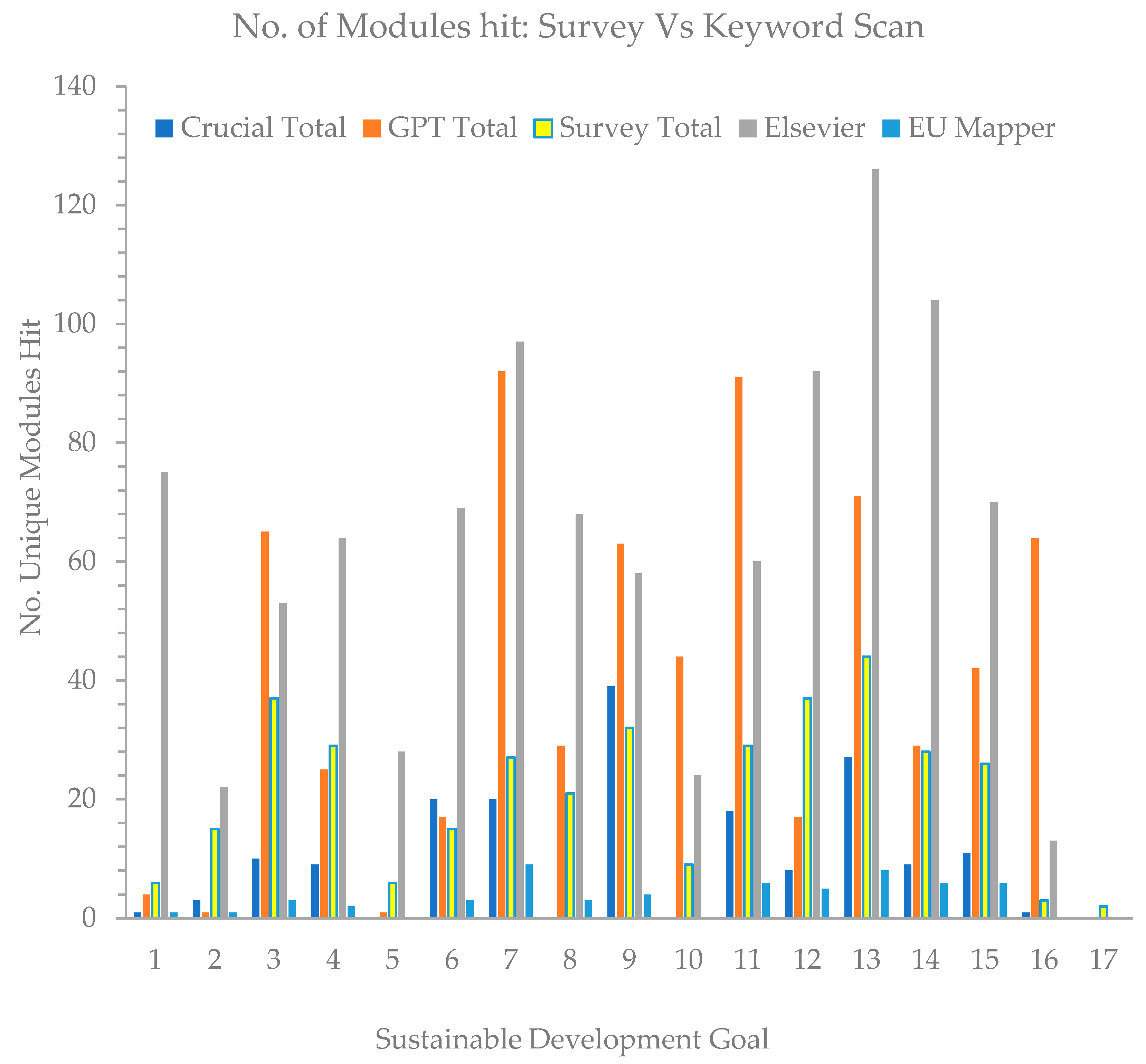

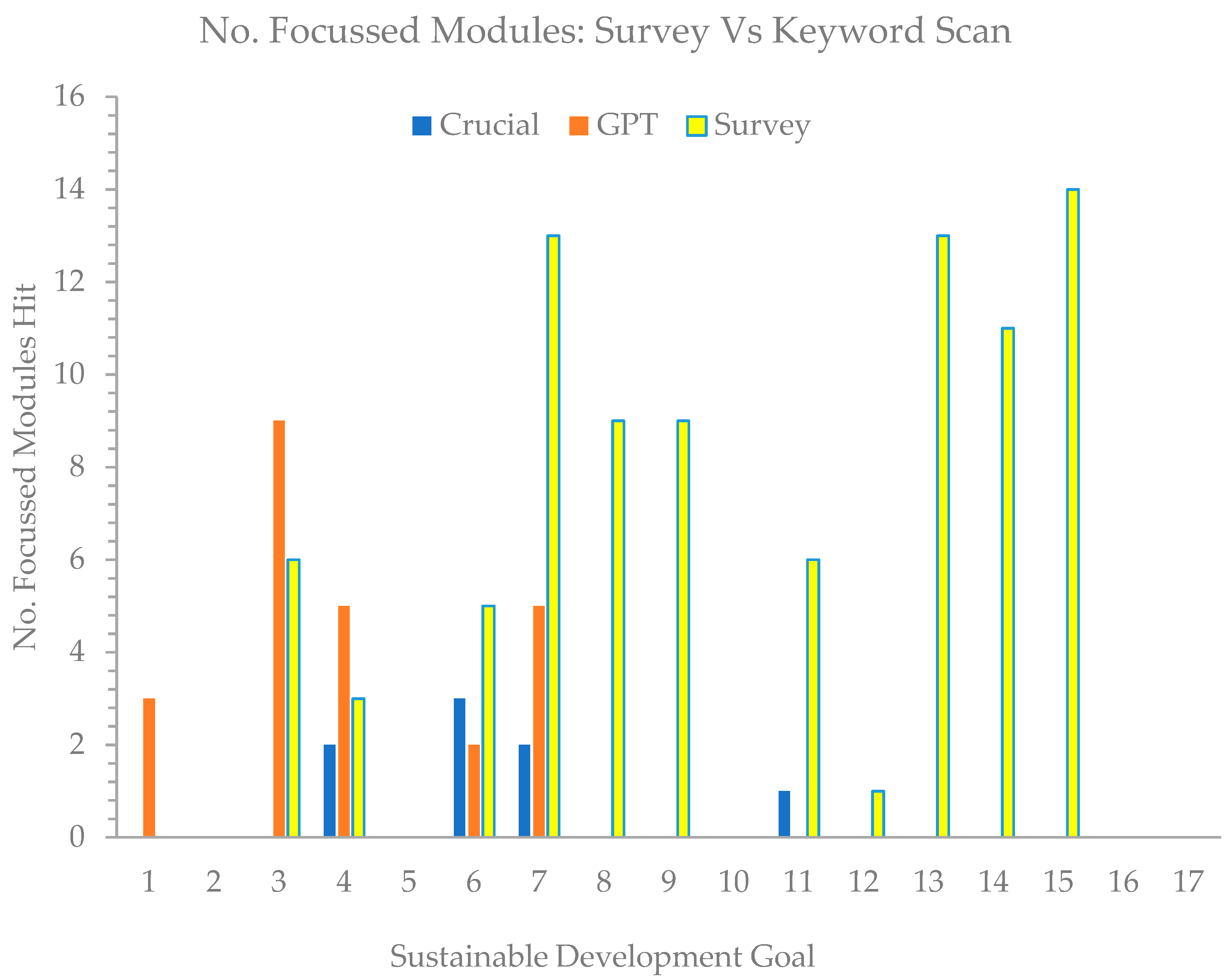

3.4. Survey vs. Keyword Scan

4. Discussion

4.1. Meta-Analysis

4.2. Critical Analysis and ChatGPT Enrichment

4.3. Survey Questionnaire

4.4. Survey vs. Keyword Scan

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- Students will be able to define sustainability and identify major sustainability challenges.

- Students will understand the carrying capacity of ecosystems related to providing for human needs.

- Students will be able to apply concepts of sustainable development to address sustainability challenges in a global context.

- Students will identify, act on, and evaluate their professional and personal actions with a knowledge and appreciation of interconnections among economic, environmental, and social perspectives.

- Students will be able to demonstrate an understanding of the nature of systems.

- Students will understand their social responsibility as future professionals and citizens.

- Students will be able to accommodate individual differences in their decisions and actions and negotiate across them.

- Students will analyse power, inequality structures, and social systems that govern individual and communal life.

- Students will be able to recognise the global implications of their actions.

Appendix B

- Step 2 (preprocessing): this step applies a series of text-preprocessing operations to the raw data, each of which can be expressed as a function. For example:

- To convert text to lowercase: f(text) = lowercase(text)

- To remove punctuation: g(text) = remove_punctuation(text)

- To tokenise the text into individual words: h(text) = tokenise(text)

- To remove stop words (common words that do not carry much meaning): i(words) = remove_stopwords(words)

- These functions can be combined in various ways to create a preprocessing pipeline, such as:

- preprocess(text) = i(h(g(f(text))))

- The resulting preprocessed data can be represented as a set of records, where each record contains the preprocessed text for SDGall, sdg, and relevancy.

- Step 3 (matching): this step compares the preprocessed text from Step 2 against a set of learning outcomes, expressed as the following functions:

- To match words from a list against a piece of text: j(text, words) = count_matching_words(text, words) > 0

- To find all records that match at least one learning outcome: k(records, outcomes) = [r for r in records if j(r['SDGall'], outcomes)]

- The result of this step is a list of records that match at least one learning outcome.

- Step 4 (output): this step writes the matched records from Step 3 to a new CSV file, expressed as a single function:

- To write a list of records to a CSV file: write_csv(records, filename)

- The resulting file will contain the data from the matched records, along with any associated metadata (such as relevancy labels).
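The preprocessing, matching and CSV-writing steps above can be turned into a small runnable sketch. The function names (f, g, h, i, preprocess, count_matching_words, j, k, write_csv) and the SDGall, sdg and relevancy fields follow this appendix; everything else is an assumption made for illustration: a whitespace tokeniser, a short hypothetical stop-word list, j preprocessing the raw SDGall text itself, and a rule that a multi-word keyword matches only when its tokens appear consecutively. It is not the authors' implementation.

```python
import csv
import string

# Hypothetical stop-word list; the list used in the study is not specified.
STOP_WORDS = {"a", "an", "and", "are", "as", "for", "in", "is", "of", "on", "the", "to", "with"}
# Record fields named in Appendix B; their exact contents are assumed here.
FIELDS = ["SDGall", "sdg", "relevancy"]


def f(text: str) -> str:
    """Convert text to lowercase."""
    return text.lower()


def g(text: str) -> str:
    """Remove ASCII punctuation (note: 'sea-level' becomes 'sealevel')."""
    return text.translate(str.maketrans("", "", string.punctuation))


def h(text: str) -> list[str]:
    """Tokenise on whitespace, a simple stand-in for a proper tokeniser."""
    return text.split()


def i(words: list[str]) -> list[str]:
    """Drop stop words."""
    return [w for w in words if w not in STOP_WORDS]


def preprocess(text: str) -> list[str]:
    """preprocess(text) = i(h(g(f(text))))"""
    return i(h(g(f(text))))


def count_matching_words(text: str, words: list[str]) -> int:
    """Count keywords (single words or phrases) whose tokens occur consecutively in text."""
    tokens = preprocess(text)
    count = 0
    for keyword in words:
        phrase = preprocess(keyword)
        n = len(phrase)
        if n and any(tokens[s:s + n] == phrase for s in range(len(tokens) - n + 1)):
            count += 1
    return count


def j(text: str, words: list[str]) -> bool:
    """True if at least one keyword matches the text."""
    return count_matching_words(text, words) > 0


def k(records: list[dict], outcomes: list[str]) -> list[dict]:
    """Keep records whose SDGall text matches at least one learning-outcome keyword."""
    return [r for r in records if j(r["SDGall"], outcomes)]


def write_csv(records: list[dict], filename: str) -> None:
    """Write matched records, including their relevancy labels, to a CSV file."""
    with open(filename, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(records)
```

For instance, a record whose SDGall text reads "Marine and coastal ecology: fisheries, sea level rise" is kept by k(records, ["sea level"]), whereas the hyphenated "sea-level" would not match because g strips the hyphen. Choices like this in preprocessing can noticeably change keyword hit counts.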

References

1. UNESCO. Education for Sustainable Development. Available online: https://www.unesco.org/en/education-sustainable-development (accessed on 19 March 2023).

2. Grosseck, G.; Țîru, L.G.; Bran, R.A. Education for Sustainable Development: Evolution and Perspectives: A Bibliometric Review of Research, 1992–2018. Sustainability 2019, 11, 6136.

3. Hallinger, P.; Chatpinyakoop, C. A Bibliometric Review of Research on Higher Education for Sustainable Development, 1998–2018. Sustainability 2019, 11, 2401.

4. Côrtes, P.L.; Rodrigues, R. A bibliometric study on “education for sustainability”. Braz. J. Sci. Technol. 2016, 3, 8.

5. Zutshi, A.; Creed, A.; Connelly, B.L. Education for Sustainable Development: Emerging Themes from Adopters of a Declaration. Sustainability 2019, 11, 156.

6. Tang, M.; Liao, H.; Wan, Z.; Herrera-Viedma, E.; Rosen, M.A. Ten Years of Sustainability (2009 to 2018): A Bibliometric Overview. Sustainability 2018, 10, 1655.

7. Wright, T.; Pullen, S. Examining the Literature: A Bibliometric Study of ESD Journal Articles in the Education Resources Information Center Database. J. Educ. Sustain. Dev. 2007, 1, 77–90.

8. Menon, S.; Suresh, M. Synergizing education, research, campus operations, and community engagements towards sustainability in higher education: A literature review. Int. J. Sustain. High. Educ. 2020, 21, 1015–1051.

9. Lozano, R.; Lukman, R.; Lozano, F.J.; Huisingh, D.; Lambrechts, W. Declarations for sustainability in higher education: Becoming better leaders, through addressing the university system. J. Clean. Prod. 2013, 48, 10–19.

10. UNESCO. Sustainable Development and Global Citizenship—Target 4.7. 8 November 2018. Available online: https://gem-report-2019.unesco.org/chapter/monitoring-progress-in-sdg-4/sustainable-development-and-global-citizenship-target-4-7/ (accessed on 21 March 2023).

11. Zahid, M.; Rehman, H.U.; Ali, W.; Habib, M.N.; Shad, F. Integration, implementation and reporting outlooks of sustainability in higher education institutions (HEIs): Index and case base validation. Int. J. Sustain. High. Educ. 2021, 22, 120–137.

12. Guiry, N.; Barimo, J.; Byrne, E.; O’Mahony, C.; Reidy, D.; Dever, D.; Mullally, G.; Kirrane, M.; O’Mahony, M.J. Education for Sustainable Development Synthesis Report: Co-Creating Common Areas of Need and Concern. Green Campus Ireland 2022. Available online: https://www.ucc.ie/en/media/support/greencampus/pdfs/ESDSynthesisReport-Feb2022.pdf (accessed on 19 March 2023).

13. Weiss, M.; Barth, M.; von Wehrden, H. The patterns of curriculum change processes that embed sustainability in higher education institutions. Sustain. Sci. 2021, 16, 1579–1593.

14. Weiss, M.; Barth, M. Global research landscape of sustainability curricula implementation in higher education. Int. J. Sustain. High. Educ. 2019, 20, 570–589.

15. Lemarchand, P.; McKeever, M.; MacMahon, C.; Owende, P. A computational approach to evaluating curricular alignment to the united nations sustainable development goals. Front. Sustain. 2022, 3, 909676.

16. Advance HE. Education for Sustainable Development Guidance. Available online: https://www.advance-he.ac.uk/knowledge-hub/education-sustainable-development-guidance (accessed on 21 March 2023).

17. Wiek, A.; Withycombe, L.; Redman, C.L. Key competencies in sustainability: A reference framework for academic program development. Sustain. Sci. 2011, 6, 203–218.

18. Rieckmann, M. Education for Sustainable Development Goals—Learning Objectives; UNESCO Publishing: Paris, France, 2017; Volume 2.

19. Amina, O.; Ladhani, S.; Findlater, E.; McKay, V. Curriculum Framework for Enabling the Sustainable Development Goals. Commonwealth Secretariat. 2017. Available online: https://www.thecommonwealth-ilibrary.org/index.php/comsec/catalog/book/1064 (accessed on 19 March 2023).

20. SDSN. Accelerating Education for the SDGs in Universities: A Guide for Universities, Colleges, and Tertiary and Higher Education Institutions; Sustainable Development Solutions Network (SDSN): New York, NY, USA, 2020.

21. Department of Education (DoE) and Department of Further and Higher Education, Research, Innovation and Science (DFHERIS). In Proceedings of the 2nd National Strategy on Education for Sustainable Development—ESD to 2030, Dublin, Ireland, 2 June 2022; Available online: https://www.gov.ie/en/publication/8c8bb-esd-to-2030-second-national-strategy-on-education-for-sustainable-development/ (accessed on 28 October 2022).

22. Department of Education (DoE) and Department of Further and Higher Education, Research, Innovation and Science (DFHERIS). In Proceedings of the ESD to 2030: Implementation Plan 2022–2026, Dublin, Ireland, June 2022; Available online: https://www.gov.ie/en/publication/8c8bb-esd-to-2030-second-national-strategy-on-education-for-sustainable-development/ (accessed on 28 October 2022).

23. NUI Galway. NUI Galway Sustainability Strategy. 2021. Available online: https://www.nuigalway.ie/sustainability/strategy/ (accessed on 23 February 2022).

24. D’Adamo, I.; Gastaldi, M. Perspectives and Challenges on Sustainability: Drivers, Opportunities and Policy Implications in Universities. Sustainability 2023, 15, 3564.

25. Lambrechts, W.; Van Liedekerke, L.; Van Petegem, P. Higher education for sustainable development in Flanders: Balancing between normative and transformative approaches. Environ. Educ. Res. 2018, 24, 1284–1300.

26. Yarime, M.; Tanaka, Y. The Issues and Methodologies in Sustainability Assessment Tools for Higher Education Institutions: A Review of Recent Trends and Future Challenges. J. Educ. Sustain. Dev. 2012, 6, 63–77.

27. Findler, F.; Schönherr, N.; Lozano, R.; Stacherl, B. Assessing the Impacts of Higher Education Institutions on Sustainable Development—An Analysis of Tools and Indicators. Sustainability 2019, 11, 59.

28. Stough, T.; Ceulemans, K.; Lambrechts, W.; Cappuyns, V. Assessing sustainability in higher education curricula: A critical reflection on validity issues. J. Clean. Prod. 2018, 172, 4456–4466.

29. Vanderfeesten, M.; Otten, R.; Spielberg, E. Search Queries for “Mapping Research Output to the Sustainable Development Goals (SDGs)” v5.0.2. Zenodo 2020.

30. Schmidt, F.; Vanderfeesten, M. Evaluation on Accuracy of Mapping Science to the United Nations’ Sustainable Development Goals (SDGs) of the Aurora SDG Queries. Zenodo 2021.

31. Rivest, M.; Kashnitsky, Y.; Bédard-Vallée, A.; Campbell, D.; Khayat, P.; Labrosse, I.; Pinheiro, H.; Provençal, S.; Roberge, G.; James, C. Improving the Scopus and Aurora queries to identify research that supports the United Nations Sustainable Development Goals (SDGs) 2021. Mendeley Data 2021, 2.

32. Elsevier. Analyze Societal Impact Research with SciVal. Available online: https://www.elsevier.com/research-intelligence/societal-impact-research-with-scival (accessed on 20 March 2023).

33. Adams, T.; Kishore, S.K.; Goggins, J.M. Embedment of the UN Sustainable Development Goals (SDG) within Engineering Degree Programmes. Civ. Eng. Res. Irel. 2020, 2027, 400–405.

34. Monash University. “Compiled-Keywords-for-SDG-Mapping_Final_17-05-10,” Australia/Pacific Sustainable Development Solutions Network (SDSN). 2017. Available online: http://ap-unsdsn.org/wp-content/uploads/2017/04/Compiled-Keywords-for-SDG-Mapping_Final_17-05-10.xlsx (accessed on 3 February 2020).

35. Association for the Advancement of Sustainability in Higher Education (AASHE). Available online: https://www.aashe.org/ (accessed on 21 March 2023).

36. Participants & Reports | Institutions | STARS Reports. Available online: https://reports.aashe.org/institutions/participants-and-reports/?sort=-date_expiration (accessed on 21 March 2023).

37. Association for the Advancement of Sustainability in Higher Education (AASHE). Technical Manual Version 2.2; Sustainability Tracking Assessment and Rating System (STARS), Philadelphia. 2019, pp. 1–322. Available online: https://stars.aashe.org/wp-content/uploads/2019/07/STARS-2.2-Technical-Manual.pdf (accessed on 19 March 2023).

38. Lemarchand, P.; MacMahon, C.; McKeever, M.; Owende, P. An evaluation of a computational technique for measuring the embeddedness of sustainability in the curriculum aligned to AASHE-STARS and the United Nations Sustainable Development Goals. Front. Sustain. 2023, 4, 997509.

39. Mishra, R.K.; Urolagin, S.; Jothi, J.A.A.; Neogi, A.S.; Nawaz, N. Deep Learning-based Sentiment Analysis and Topic Modeling on Tourism During Covid-19 Pandemic. Front. Comput. Sci. 2021, 3, 775368. Available online: https://www.frontiersin.org/articles/10.3389/fcomp.2021.775368 (accessed on 21 March 2023).

40. Meschede, C. The Sustainable Development Goals in Scientific Literature: A Bibliometric Overview at the Meta-Level. Sustainability 2020, 12, 4461.

41. Kuros, A.; Rockcress, A.D.; Berman-Jolton, J.L. Critiquing and Developing Benchmarking Tools for Sustainability. December 2017. Available online: https://digitalcommons.wpi.edu/iqp-all/2169/ (accessed on 21 March 2023).

42. Duran-Silva, N.; Fuster, E.; Massucci, F.A.; Quinquillà, A. A controlled vocabulary defining the semantic perimeter of Sustainable Development Goals. Dataset Zenodo DOI 2019, 10.

43. Mu, J.; Kang, K. The University of Auckland SDG Keywords Mapping. 2021. Available online: https://www.sdgmapping.auckland.ac.nz/ (accessed on 3 November 2021).

44. Barimo, J.; O’Mahony, C.; Mullally, G.; O’Halloran, J.; Byrne, E.; Reidy, D.; Kirrane, M. SDG Curriculum Toolkit. 2021. Available online: https://www.ucc.ie/en/sdg-toolkit/teaching/tool/ (accessed on 24 March 2022).

45. Joint Research Centre. “SDG Mapper | KnowSDGs,” European Commission. Available online: https://knowsdgs.jrc.ec.europa.eu/sdgmapper#api (accessed on 27 March 2023).

46. Joint Research Centre. “Methodology | KnowSDGs,” European Commission. Available online: https://knowsdgs.jrc.ec.europa.eu/methodology-policy-mapping (accessed on 27 March 2023).

47. OpenAI. ChatGPT: Optimizing Language Models for Dialogue. 30 November 2022. Available online: https://openai.com/blog/chatgpt/ (accessed on 15 February 2023).

48. Vincent, J. “AI-generated answers temporarily banned on coding Q & A site Stack Overflow,” The Verge. 5 December 2022. Available online: https://www.theverge.com/2022/12/5/23493932/chatgpt-ai-generated-answers-temporarily-banned-stack-overflow-llms-dangers (accessed on 15 February 2023).

49. WordNet. Available online: https://wordnet.princeton.edu/ (accessed on 3 April 2023).

50. Content | Institutions | STARS Reports. Available online: https://reports.aashe.org/institutions/data-displays/2.0/content/?institution__institution_type=DO_NOT_FILTER (accessed on 27 March 2023).

51. University of Galway. Available online: https://www.universityofgalway.ie/course-information/module/ (accessed on 13 April 2023).

| SDG | Auckland | EU Mapper | Monash | Elsevier | SIRIS |

|---|---|---|---|---|---|

| 1 | 83 | 146 | 27 | 100 | 107 |

| 2 | 168 | 145 | 46 | 100 | 131 |

| 3 | 349 | 273 | 67 | 100 | 644 |

| 4 | 176 | 245 | 45 | 100 | 125 |

| 5 | 155 | 243 | 39 | 100 | 139 |

| 6 | 253 | 135 | 57 | 100 | 305 |

| 7 | 224 | 113 | 45 | 100 | 214 |

| 8 | 205 | 230 | 61 | 100 | 168 |

| 9 | 85 | 179 | 46 | 100 | 281 |

| 10 | 125 | 184 | 51 | 100 | 153 |

| 11 | 197 | 187 | 66 | 100 | 290 |

| 12 | 145 | 133 | 57 | 100 | 155 |

| 13 | 201 | 122 | 46 | 100 | 288 |

| 14 | 174 | 132 | 46 | 100 | 169 |

| 15 | 213 | 278 | 51 | 100 | 194 |

| 16 | 144 | 310 | 66 | 100 | 330 |

| 17 | | 326 | 31 | | |

| Misc | | | 68 | | |

| Total | 2897 | 3381 | 915 | 1600 | 3693 |

| Keyword List Source | Number of Keywords | SDGs Covered |

|---|---|---|

| Monash University [34] | 915 | SDG 1–17 ¹ |

| Elsevier [31] | 1600 | SDG 1–16 |

| EU Mapper [45] | 3381 | SDG 1–17 (& targets) |

| SIRIS Academic [42] | 3693 | SDG 1–16 |

| University of Auckland [43] | 2897 | SDG 1–16 |

| College of | Number of Modules |

|---|---|

| Arts, Social Sciences & Celtic Studies | 576 |

| Science and Engineering | 568 |

| Medicine, Nursing, & Health Sciences | 228 |

| Business, Public Policy, & Law | 133 |

| Adult Learning and Professional Development | 112 |

| Total | 1617 |

| Category | Frequency | No. Words |

|---|---|---|

| Low-hitting words | 1–2 times | 546 |

| Medium-hitting words | 3–10 times | 417 |

| High-hitting words | 11–49 times | 275 |

| Outliers | 50+ times | 159 |

| Total | | 1397 |
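The frequency bands in the table above amount to a simple classification rule, and the band sizes (546 + 417 + 275 + 159) sum to the stated total of 1397. The sketch below assumes the bands are inclusive at both ends, as the ranges suggest, and that only keywords hit at least once are categorised; the function names are hypothetical. The example tallies the six most frequently hit keywords listed in the next table, all of which fall in the outlier band.

```python
from collections import Counter


def hit_category(hits: int) -> str:
    """Assign a keyword's hit count to one of the four frequency bands."""
    if hits < 1:
        raise ValueError("only keywords hit at least once are categorised")
    if hits <= 2:
        return "Low-hitting"
    if hits <= 10:
        return "Medium-hitting"
    if hits <= 49:
        return "High-hitting"
    return "Outlier"


def tally(hit_counts: dict[str, int]) -> Counter:
    """Count how many keywords fall into each band."""
    return Counter(hit_category(n) for n in hit_counts.values())


top_keywords = {"sea": 2150, "work": 1245, "age": 1092,
                "health": 1015, "management": 955, "environment": 895}
print(tally(top_keywords))  # Counter({'Outlier': 6})
```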

| Keyword | List | No. Times Hit | SDG |

|---|---|---|---|

| sea | Elsevier, SIRIS | 2150 | 13 & 14 |

| work | Monash, SIRIS | 1245 | 8 |

| age | Monash, SIRIS | 1092 | 10 |

| health | Auck, Monash, Elsevier, SIRIS | 1015 | 1, 3, & 10 |

| management | Auck, Elsevier, SIRIS | 955 | 6, 9, 14, & 16 |

| environment | Monash, Elsevier, SIRIS | 895 | 1, 2, 12, 13, & Misc. |

| Score | Relevancy Description |

|---|---|

| 0 | Not relevant to assigned SDG |

| 1 | Supportive of an SDG other than the SDG assigned |

| 2 | Focused on an SDG other than the SDG assigned |

| 3 | Supportive of assigned SDG |

| 4 | Focused on assigned SDG |
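The five relevancy scores combine two judgements: whether a keyword relates to its assigned SDG or to a different one, and whether it is focused on that SDG or merely supportive of it. The sketch below encodes that reading of the table as a Python IntEnum so that hits can be filtered by either judgement; the member and property names are hypothetical labels, not terms used in the study.

```python
from enum import IntEnum


class Relevancy(IntEnum):
    """Keyword relevancy scores from the scoring table (names are illustrative)."""
    NOT_RELEVANT = 0
    SUPPORTS_OTHER_SDG = 1
    FOCUSED_ON_OTHER_SDG = 2
    SUPPORTS_ASSIGNED_SDG = 3
    FOCUSED_ON_ASSIGNED_SDG = 4

    @property
    def fits_assigned_sdg(self) -> bool:
        """Scores 3 and 4 relate to the SDG the keyword was listed under."""
        return self >= Relevancy.SUPPORTS_ASSIGNED_SDG

    @property
    def is_focused(self) -> bool:
        """Scores 2 and 4 are 'focused'; 1 and 3 are 'supportive'."""
        return self in (Relevancy.FOCUSED_ON_OTHER_SDG, Relevancy.FOCUSED_ON_ASSIGNED_SDG)


score = Relevancy(3)
print(score.name, score.fits_assigned_sdg, score.is_focused)
# SUPPORTS_ASSIGNED_SDG True False
```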

| SDG | Keyword | Synonyms |

|---|---|---|

| 7 | Solar | Photovoltaic |

| | | Sun-powered |

| | | Insolation |
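Keyword list enrichment of this kind expands each keyword with its synonyms. The sketch below uses a hand-written synonym map containing only the SDG 7 example from the table; how the study generated its synonyms is not reproduced here. The function name and the extra keyword "Wind" are hypothetical, and duplicates are removed case-insensitively so that a synonym already present in a list is not added twice.

```python
def enrich(keywords: dict[int, list[str]],
           synonyms: dict[str, list[str]]) -> dict[int, list[str]]:
    """Insert each keyword's synonyms after it, dropping case-insensitive duplicates."""
    enriched = {}
    for sdg, words in keywords.items():
        seen: set[str] = set()
        out: list[str] = []
        for word in words:
            for term in [word, *synonyms.get(word.lower(), [])]:
                key = term.lower()
                if key not in seen:
                    seen.add(key)
                    out.append(term)
        enriched[sdg] = out
    return enriched


# Synonyms for "Solar" (SDG 7) as listed in the table above.
SYNONYMS = {"solar": ["Photovoltaic", "Sun-powered", "Insolation"]}
print(enrich({7: ["Solar", "Wind"]}, SYNONYMS))
# {7: ['Solar', 'Photovoltaic', 'Sun-powered', 'Insolation', 'Wind']}
```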

| List | Auckland | EU Mapper | Monash | Elsevier | SIRIS |

|---|---|---|---|---|---|

| Total keywords | 2897 | 3381 | 915 | 1600 | 3693 |

| Unique keywords hit | 322 | 121 | 228 | 577 | 422 |

| R-value | 0.81 | 0.74 | 0.15 | −0.11 | 0.60 |
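The Total row of the per-SDG accuracy table that follows can be reproduced from this table if accuracy is read as the share of a list's keywords hit at least once (an inference from the figures rather than a stated definition): for Auckland, 322 / 2897 ≈ 11%. The pooled average divides the summed hits by the summed list sizes (1670 / 12486 ≈ 13%); a simple mean of the five percentages would instead give about 17%. The dictionary and function names below are illustrative.

```python
# Total keywords and unique keywords hit per list, copied from the table above.
TOTAL_KEYWORDS = {"Auckland": 2897, "EU Mapper": 3381, "Monash": 915,
                  "Elsevier": 1600, "SIRIS": 3693}
UNIQUE_HIT = {"Auckland": 322, "EU Mapper": 121, "Monash": 228,
              "Elsevier": 577, "SIRIS": 422}


def accuracy(hit: int, total: int) -> float:
    """Percentage of a list's keywords that were hit at least once."""
    return 100 * hit / total


# Prints Auckland: 11%, EU Mapper: 4%, Monash: 25%, Elsevier: 36%, SIRIS: 11%
for name, total in TOTAL_KEYWORDS.items():
    print(f"{name}: {accuracy(UNIQUE_HIT[name], total):.0f}%")

# Pooled across lists: summed hits over summed list sizes.
pooled = accuracy(sum(UNIQUE_HIT.values()), sum(TOTAL_KEYWORDS.values()))
print(f"Pooled average: {pooled:.0f}%")  # Pooled average: 13%
```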

| SDG | Auckland | EU Mapper | Monash | Elsevier | SIRIS | Average |

|---|---|---|---|---|---|---|

| 1 | 2% | 3% | 48% | 52% | 20% | 20% |

| 2 | 14% | 6% | 30% | 34% | 17% | 17% |

| 3 | 23% | 8% | 22% | 42% | 7% | 15% |

| 4 | 13% | 6% | 27% | 53% | 15% | 18% |

| 5 | 1% | 0% | 28% | 37% | 2% | 8% |

| 6 | 8% | 4% | 23% | 38% | 8% | 12% |

| 7 | 9% | 6% | 36% | 34% | 8% | 14% |

| 8 | 8% | 3% | 30% | 29% | 17% | 13% |

| 9 | 1% | 2% | 13% | 37% | 13% | 12% |

| 10 | 11% | 2% | 35% | 31% | 24% | 17% |

| 11 | 15% | 4% | 30% | 33% | 16% | 16% |

| 12 | 8% | 4% | 21% | 28% | 7% | 12% |

| 13 | 12% | 5% | 24% | 42% | 9% | 14% |

| 14 | 11% | 4% | 28% | 38% | 15% | 16% |

| 15 | 12% | 3% | 22% | 25% | 11% | 11% |

| 16 | 5% | 2% | 20% | 24% | 12% | 9% |

| 17 | | 1% | 10% | | | 2% |

| Misc | | | 13% | | | 13% |

| Total | 11% | 4% | 25% | 36% | 11% | 13% |

| SDG | Auckland | EU Mapper | Monash | Elsevier | SIRIS | Average |

|---|---|---|---|---|---|---|

| 1 | 0.1% | 0.1% | 7% | 11% | 0.2% | 4% |

| 2 | 1% | 0.1% | 3% | 5% | 1% | 2% |

| 3 | 29% | 0.1% | 5% | 6% | 7% | 9% |

| 4 | 8% | 0.3% | 2% | 12% | 3% | 5% |

| 5 | 1% | 0% | 1% | 7% | 0% | 2% |

| 6 | 2% | 0% | 0.4% | 1% | 0.2% | 1% |

| 7 | 2% | 0% | 2% | 10% | 2% | 3% |

| 8 | 12% | 0% | 5% | 10% | 2% | 6% |

| 9 | 3% | 7.2% | 8% | 3% | 1% | 5% |

| 10 | 1% | 0.1% | 36% | 1% | 39% | 15% |

| 11 | 5% | 0% | 4% | 3% | 8% | 4% |

| 12 | 0.2% | 0% | 1% | 4% | 1% | 1% |

| 13 | 3% | 0.1% | 0.4% | 14% | 6% | 5% |

| 14 | 2% | 0% | 1% | 2% | 9% | 3% |

| 15 | 2% | 0% | 1% | 2% | 2% | 1% |

| 16 | 0.2% | 0.1% | 1% | 3% | 7% | 2% |

| Misc | | | 3% | | | 3% |

| Total | 71% | 8% | 81% | 94% | 88% | 68% |

| SDG | Auckland | EU Mapper | Monash | Elsevier | SIRIS | Average |

|---|---|---|---|---|---|---|

| 1 | 0.1 | 0.04 | 14.6 | 11.2 | 0.7 | 3.5 |

| 2 | 0.4 | 0.14 | 8.8 | 2.5 | 1.1 | 1.5 |

| 3 | 2.6 | 0.32 | 4.6 | 4.7 | 1.1 | 1.8 |

| 4 | 2.6 | 0.18 | 3.0 | 18.5 | 1.7 | 3.9 |

| 5 | 0.1 | 0.004 | 2.1 | 4.5 | 0.2 | 0.8 |

| 6 | 0.4 | 0.10 | 1.7 | 3.7 | 1.1 | 1.1 |

| 7 | 0.4 | 0.11 | 2.8 | 9.2 | 0.7 | 1.9 |

| 8 | 2.0 | 0.06 | 10.5 | 8.7 | 4.4 | 3.5 |

| 9 | 2.2 | 2.99 | 12.5 | 6.0 | 0.6 | 3.0 |

| 10 | 0.4 | 0.02 | 22.0 | 5.7 | 7.9 | 4.8 |

| 11 | 0.7 | 0.04 | 5.7 | 6.5 | 1.9 | 2.1 |

| 12 | 0.2 | 0.05 | 4.4 | 5.9 | 0.4 | 1.6 |

| 13 | 0.5 | 0.30 | 2.7 | 10.8 | 2.2 | 2.6 |

| 14 | 0.6 | 0.06 | 2.5 | 8.9 | 9.5 | 4.4 |

| 15 | 0.4 | 0.05 | 2.5 | 4.8 | 1.8 | 1.3 |

| 16 | 0.1 | 0.05 | 2.2 | 2.9 | 2.4 | 1.3 |

| 17 | | 0.08 | 0.1 | | | 0.1 |

| Misc | | | 7.2 | | | 7.2 |

| Total | 0.9 | 0.3 | 6.0 | 7.2 | 2.1 | 2.3 |
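The Average column of this frequency table appears to be weighted by the number of keywords in each list rather than being a simple mean of the five columns. For SDG 1, weighting each list's hits per keyword by its SDG 1 keyword count (83, 146, 27, 100 and 107, from the keyword-count table) gives 3.5, matching the table, whereas an unweighted mean gives 5.3; the Total row (2.3) reproduces the same way. This is an inference from the published figures, and the function name below is hypothetical.

```python
def weighted_average(rates: list[float], weights: list[int]) -> float:
    """Keyword-weighted mean: sum(rate * keywords) / sum(keywords)."""
    if not weights or len(rates) != len(weights):
        raise ValueError("rates and weights must be non-empty and equally long")
    return sum(r * w for r, w in zip(rates, weights)) / sum(weights)


# SDG 1 row, in the order Auckland, EU Mapper, Monash, Elsevier, SIRIS.
sdg1_hits_per_keyword = [0.1, 0.04, 14.6, 11.2, 0.7]
sdg1_keywords = [83, 146, 27, 100, 107]

print(round(weighted_average(sdg1_hits_per_keyword, sdg1_keywords), 1))  # 3.5
print(round(sum(sdg1_hits_per_keyword) / len(sdg1_hits_per_keyword), 1))  # 5.3
```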

| Auckland | EU Mapper | Monash | Elsevier | SIRIS | Crucial | GPT |

|---|---|---|---|---|---|---|

| CE3105 | MD201 | PAB5104 | PAB5104 | PAB5104 | PAB5105 | AY590 |

| CE6118 | ZO417 | PAB5105 | ZO417 | ZO417 | PAB5104 | CE514 |

| MD302 | MD304 | CE6103 | PAB5105 | OY2110 | ZO417 | PAB5105 |

| MD201 | PAB5104 | CE6118 | EG5101 | EC388 | CE343 | CE343 |

| ZO417 | CE6118 | CE3105 | EG400 | CE3105 | CE514 | MD201 |

| Module Code | Module Title | No. Lists Agree |

|---|---|---|

| PAB5104 | Gender, Agriculture & Climate Change | 5 |

| ZO417 | Marine & Coastal Ecology | 5 |

| PAB5105 | Low-Emissions & Climate-Smart Agriculture & Agri-Food Systems | 4 |

| CE3105 | Environmental Engineering | 3 |

| MD201 | Health & Disease | 3 |

| CE514 | Transportation Systems and Infrastructure II | 2 |

| CE343 | Sustainable Energy | 2 |

| Module | PAB5104 | ZO417 | PAB5105 | CE3105 | MD201 | CE514 | CE343 |

|---|---|---|---|---|---|---|---|

| No. Hits | 161 | 156 | 155 | 140 | 111 | 137 | 112 |

| No. Keywords | 61 | 53 | 63 | 59 | 55 | 47 | 44 |

| No. SDGs hit | 17 | 15 | 14 | 16 | 15 | 15 | 14 |

| Unhit SDGs | NA | 5/17 | 5/14/17 | 5 | 7/17 | 5/17 | 5/16/17 |

| SDG | Accuracy (% of keywords hit): Crucial | Accuracy: GPT | Accuracy: Average | Frequency (hits/word): Crucial | Frequency: GPT | Frequency: Average |

|---|---|---|---|---|---|---|

| 1 | 43% | 16% | 20% | 1.3 | 0.9 | 3.5 |

| 2 | 100% | 27% | 17% | 10.3 | 0.7 | 1.5 |

| 3 | 70% | 33% | 15% | 4.9 | 3.1 | 1.8 |

| 4 | 82% | 43% | 18% | 18.8 | 8.3 | 3.9 |

| 5 | 33% | 50% | 8% | 5.3 | 7.9 | 0.8 |

| 6 | 100% | 43% | 12% | 9.3 | 2.0 | 1.1 |

| 7 | 80% | 50% | 14% | 5.9 | 9.6 | 1.9 |

| 8 | 71% | 59% | 13% | 5.4 | 5.6 | 3.5 |

| 9 | 89% | 58% | 12% | 27.4 | 13.9 | 3.0 |

| 10 | 73% | 43% | 17% | 3.6 | 7.7 | 4.8 |

| 11 | 60% | 27% | 16% | 4.0 | 8.0 | 2.1 |

| 12 | 70% | 35% | 12% | 4.0 | 2.0 | 1.6 |

| 13 | 72% | 28% | 14% | 4.2 | 4.2 | 2.6 |

| 14 | 67% | 24% | 16% | 7.2 | 9.2 | 4.4 |

| 15 | 56% | 36% | 11% | 5.3 | 5.6 | 1.3 |

| 16 | 57% | 30% | 9% | 2.9 | 4.4 | 1.3 |

| Total | 69% | 36% | 13% | 6.3 | 5.4 | 2.3 |

| SDG | Focused hits: Crucial | Focused hits: GPT | Supportive hits: Crucial | Supportive hits: GPT | Average (5 lists) |

|---|---|---|---|---|---|

| 1 | 0.1% | 1.6% | 0.1% | 0% | 4% |

| 2 | 0% | 0% | 1.6% | 0.8% | 2% |

| 3 | 1.4% | 4.9% | 7% | 20.8% | 9% |

| 4 | 2% | 7% | 6% | 11.9% | 5% |

| 5 | 0.0% | 0% | 0.4% | 1.8% | 2% |

| 6 | 0.5% | 0.7% | 0.2% | 0.4% | 1% |

| 7 | 0.2% | 2.4% | 1.0% | 5.1% | 3% |

| 8 | 0% | 0% | 2.4% | 5.9% | 6% |

| 9 | 0% | 0% | 9% | 6.7% | 5% |

| 10 | 0.1% | 0.6% | 1.4% | 2.5% | 15% |

| 11 | 0.1% | 0.5% | 1.5% | 5.4% | 4% |

| 12 | 0.5% | 0.0% | 1.2% | 0.0% | 1% |

| 13 | 1% | 1% | 1.1% | 0.0% | 5% |

| 14 | 0.4% | 0% | 0.9% | 0.1% | 3% |

| 15 | 0.0% | 0% | 1.1% | 1.1% | 1% |

| 16 | 0.4% | 0.1% | 1.4% | 1.5% | 2% |

| Total | 7% | 19% | 36% | 65% | 68% |

| Metric | Relevance | Crucial | GPT | EU Mapper | Elsevier |

|---|---|---|---|---|---|

| No. Keywords | Focused | 0.24 | 0.4 | | |

| | Supportive | 0.55 | 0.13 | | |

| | Both | 0.74 | 0.5 | 0.48 | 0.57 |

| No. Modules | Focused | −0.09 | 0.28 | | |

| | Supportive | 0.39 | 0.69 | | |

| | Both | 0.36 | 0.64 | 0.12 | −0.45 |

| No. Hits | Focused | 0.26 | 0.57 | | |

| | Supportive | 0.29 | −0.06 | | |

| | Both | 0.54 | 0.44 | 0.59 | 0.69 |
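The R-values reported for the keyword lists, and the coefficients in the table above, appear to be correlation coefficients; assuming they are Pearson's r, a minimal implementation is shown below. Which series were correlated in the study is not recoverable from this section, so the example simply compares the per-SDG distributions of two lists (Monash and SIRIS, SDGs 1 to 16) from the percentage table whose Total row reads 71%, 8%, 81%, 94% and 88%. It is an illustration of the technique, not a reproduction of any reported value.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


# Per-SDG percentages (SDGs 1 to 16) for two lists, from the distribution table.
monash = [7, 3, 5, 2, 1, 0.4, 2, 5, 8, 36, 4, 1, 0.4, 1, 1, 1]
siris = [0.2, 1, 7, 3, 0, 0.2, 2, 2, 1, 39, 8, 1, 6, 9, 2, 7]
print(round(pearson_r(monash, siris), 2))
```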

| Crucial | No. Hits | GPT | No. Hits | Survey | No. Hits | Elsevier | No. Hits |

|---|---|---|---|---|---|---|---|

| ZO417 | 19 | ZO417 | 41 | CE464 | 11 | ZO417 | 89 |

| EG400/EG5101 | 13 | BI5108 | 33 | CE6102 | 11 | BI5108 | 51 |

| BI5108 | 13 | EG400/EG5101 | 26 | CE6103 | 11 | EG400/EG5101 | 42 |

| CE343 | 11 | CE514 | 25 | CE344 | 10 | CE514 | 39 |

| CE514 | 11 | CE343 | 24 | CE514 | 10 | CE6103 | 32 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Adams, T.; Jameel, S.M.; Goggins, J. Education for Sustainable Development: Mapping the SDGs to University Curricula. Sustainability 2023, 15, 8340. https://doi.org/10.3390/su15108340

Adams T, Jameel SM, Goggins J. Education for Sustainable Development: Mapping the SDGs to University Curricula. Sustainability. 2023; 15(10):8340. https://doi.org/10.3390/su15108340

Chicago/Turabian Style: Adams, Thomas, Syed Muslim Jameel, and Jamie Goggins. 2023. "Education for Sustainable Development: Mapping the SDGs to University Curricula" Sustainability 15, no. 10: 8340. https://doi.org/10.3390/su15108340

APA Style: Adams, T., Jameel, S. M., & Goggins, J. (2023). Education for Sustainable Development: Mapping the SDGs to University Curricula. Sustainability, 15(10), 8340. https://doi.org/10.3390/su15108340