A Migration Learning Method Based on Adaptive Batch Normalization Improved Rotating Machinery Fault Diagnosis

Abstract

1. Introduction

- Introduction of the AdaBN layer: The AdaBN [32] method introduces the AdaBN layer into the deep neural network. After the network has been trained on the source domain, each AdaBN layer keeps its learned scale and shift parameters but recomputes its normalization statistics (mean and variance) from the input data of the target domain. The normalized features therefore follow a similar distribution in both domains, which lets the network cope with distribution shifts between domains and improves its performance on the target domain.

- Consideration of different data distributions: Because the BN statistics are re-estimated separately for each domain, the AdaBN method treats the source and target data as having different distributions instead of forcing one set of statistics onto both. This domain-specific adjustment can improve the performance of the model on datasets collected under different conditions.

- Addressing the limitations of the BN layer on small batches: Standard BN estimates its statistics from each mini-batch, so the estimates become noisy when batches are small. In AdaBN, the target-domain statistics are computed over all target-domain samples rather than a single batch, which makes them less sensitive to the batch size. The adaptation also adds no trainable parameters and requires no retraining, which keeps the cost of adapting the model low.
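The third point relies on computing the target statistics over the whole target set instead of a single mini-batch. The plain-Python sketch below shows one way to do this when target data can only be processed in small batches. It assumes one neuron's activations are available as floats; `RunningMoments` is a hypothetical helper (not part of the paper) that applies Welford's online algorithm, so the accumulated mean and variance match the full-set values up to floating-point rounding, while the first batch alone gives a different estimate. The activation values are made up for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class RunningMoments:
    """Hypothetical helper: running mean/variance of one neuron's activations,
    accumulated batch by batch with Welford's online algorithm."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the current mean

    def update(self, batch: Iterable[float]) -> None:
        # Fold every activation of one (possibly tiny) batch into the totals.
        for x in batch:
            self.count += 1
            delta = x - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (x - self.mean)

    @property
    def var(self) -> float:
        # Population variance over all samples seen so far.
        return self.m2 / self.count


# Illustrative (made-up) target-domain activations of a single neuron.
target = [0.6, 0.7, 0.8, 0.9, 1.0, 1.0, 1.1, 1.1, 1.2, 1.3, 1.4, 1.5]

stats = RunningMoments()
for start in range(0, len(target), 4):  # batches of only 4 samples
    stats.update(target[start:start + 4])

full_mean = sum(target) / len(target)
full_var = sum((x - full_mean) ** 2 for x in target) / len(target)
first_batch_mean = sum(target[:4]) / 4  # estimate from one small batch only

print(f"accumulated: mean={stats.mean:.4f}, var={stats.var:.4f}")
print(f"full set:    mean={full_mean:.4f}, var={full_var:.4f}")
print(f"first batch only: mean={first_batch_mean:.4f}")
```

The batch size only changes how the data are fed in; the accumulated statistics are those of the entire target set.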

2. Unsupervised Deep Transfer Learning

3. The Proposed Method

3.1. Batch Normalization

3.2. Domain-Adaptive AdaBN Algorithm

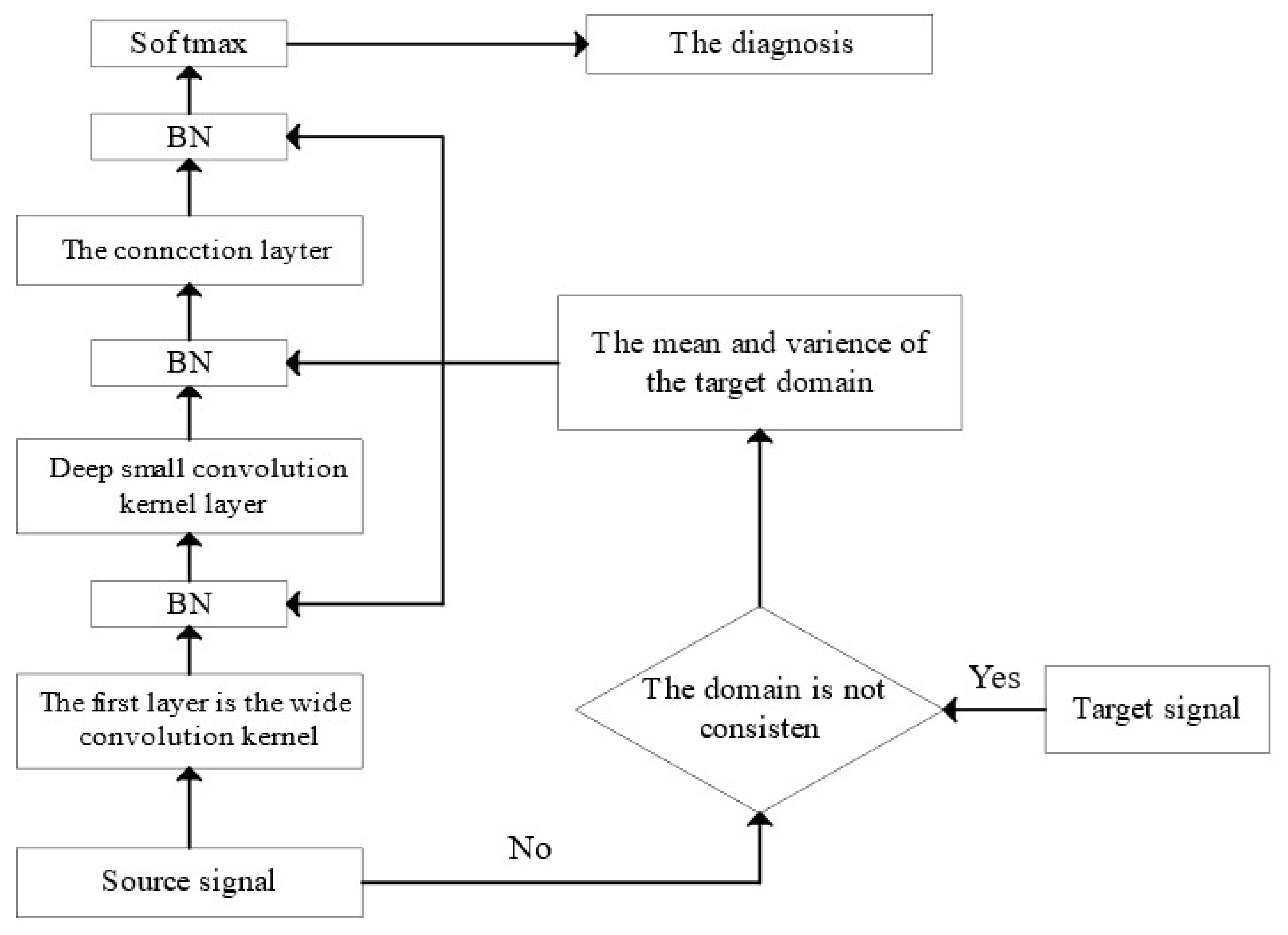

3.3. DCNN Model Based on AdaBN Algorithm

3.4. Discussion on AdaBN

4. Model Validation

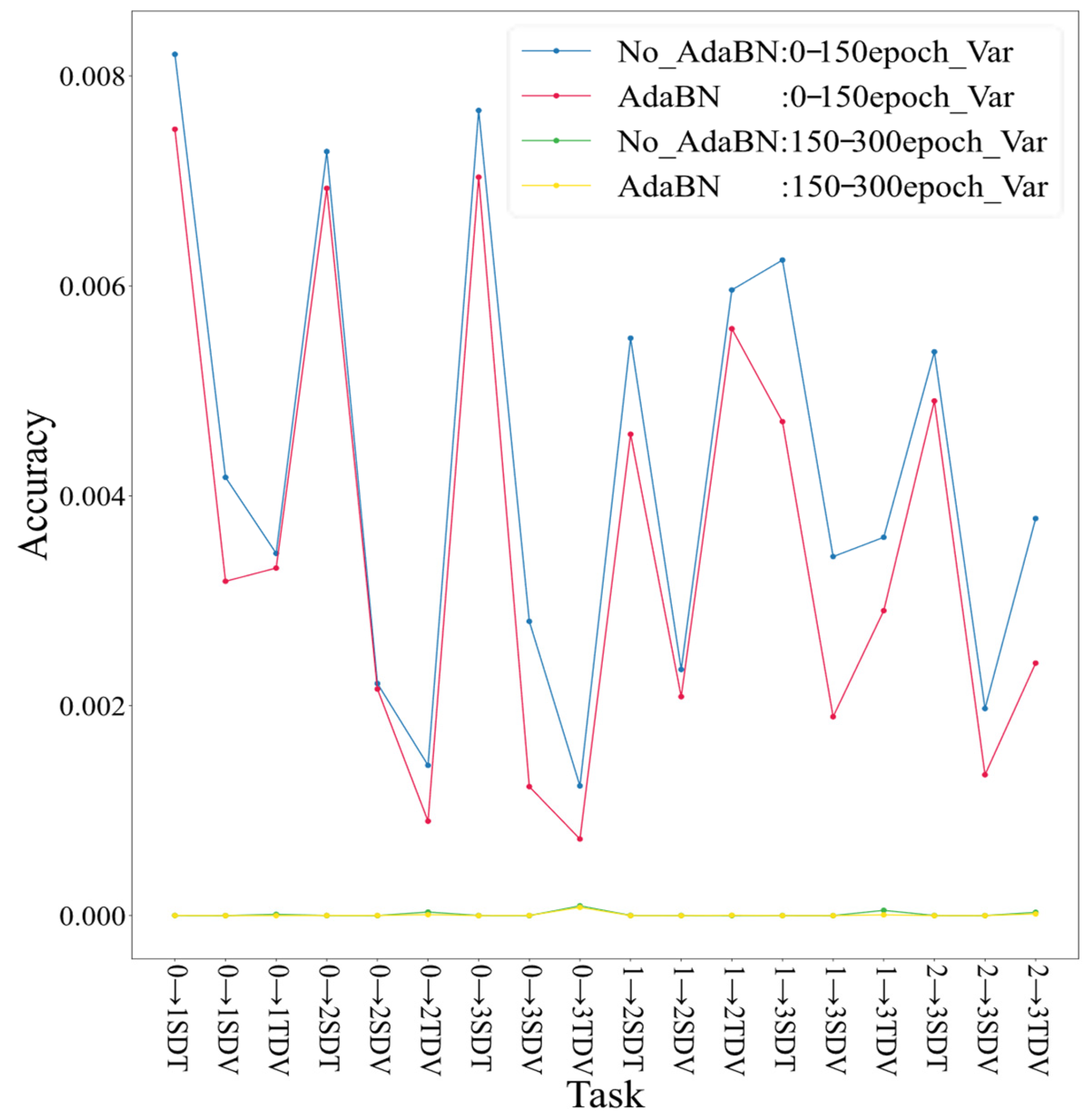

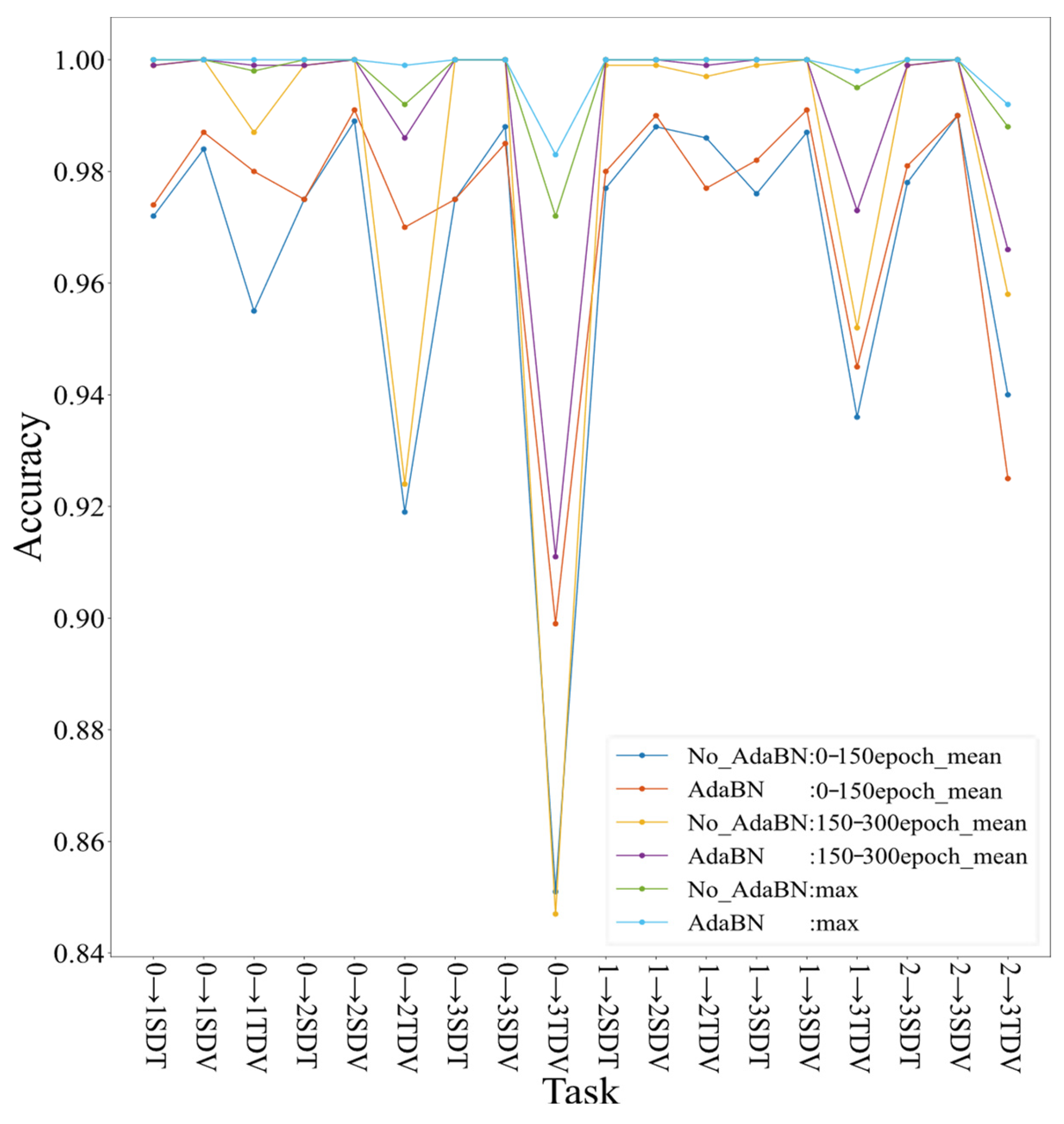

1. Validation on the CWRU dataset

- 2.

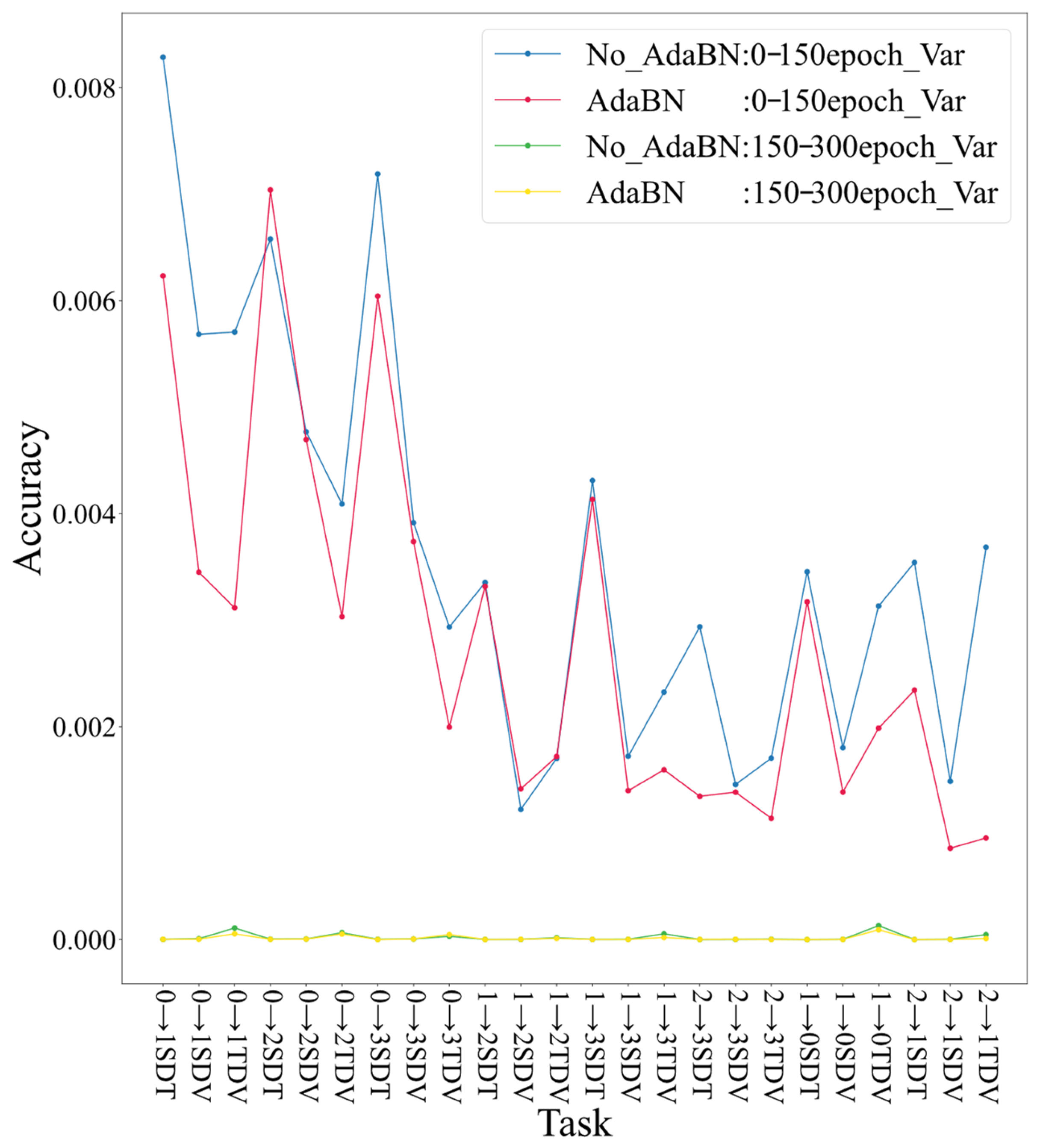

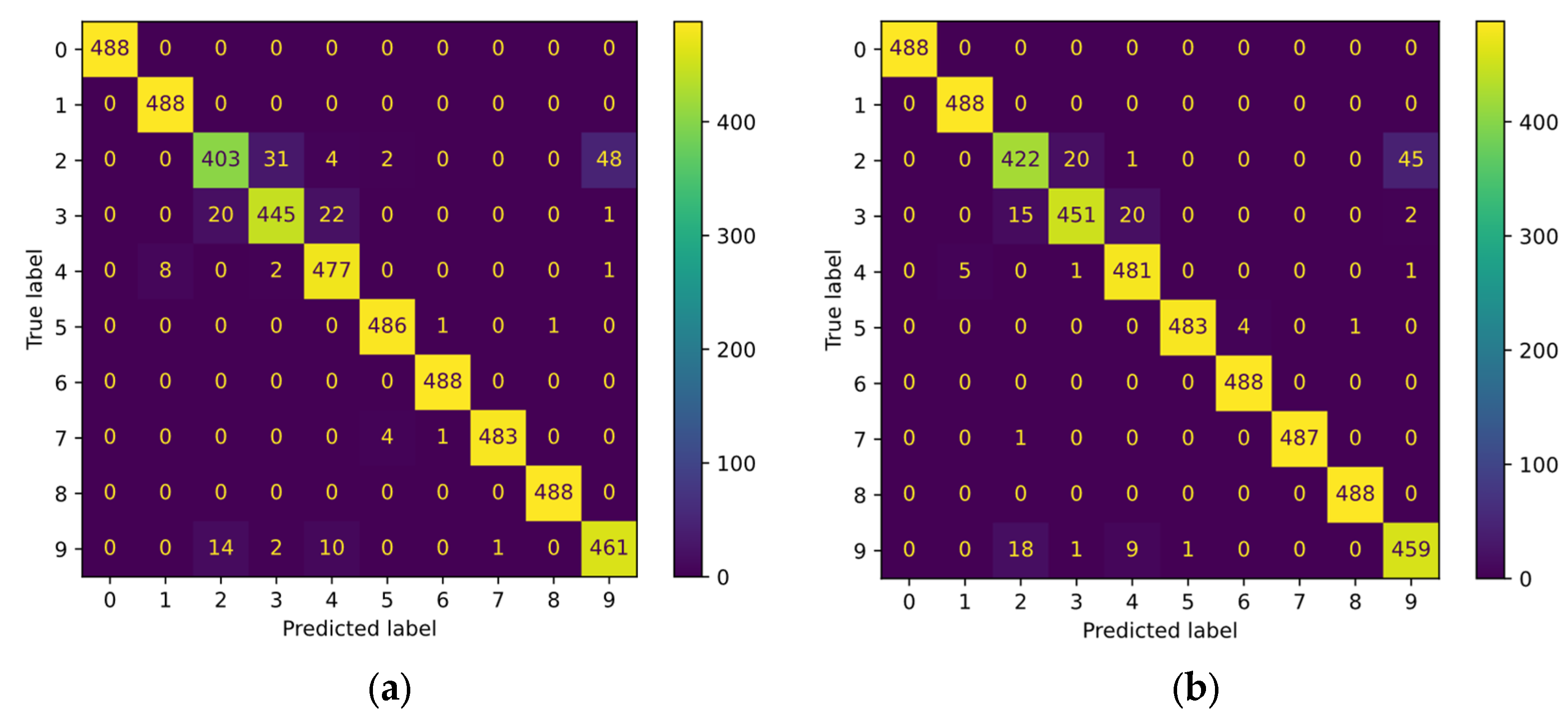

- Validation on a laboratory testbed dataset

(1) Introduction to the dataset

(2) Analysis of results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Term | Abbreviation |
|---|---|
| Adaptive Batch Normalization | AdaBN |
| Wavelet Transform | WT |
| Empirical Mode Decomposition | EMD |
| Singular Value Decomposition | SVD |
| Short-Time Fourier Transform | STFT |
| Support Vector Machines | SVM |
| Artificial Neural Network | ANN |
| Restricted Boltzmann Machines | RBM |
| Convolutional Neural Networks | CNN |
| Deep Belief Network | DBN |
| Stacked Autoencoders | SAE |
| Balanced Distribution Adaptation | BDA |
| Kullback–Leibler | KL |
| Maximum Mean Discrepancy | MMD |
| Joint Distribution Adaptation | JDA |
| Electric Discharge Machining | EDM |
| Batch Normalization | BN |
| Deep Convolutional Neural Networks | DCNN |
| Source Training Set | SDT |
| Source Test Set | SDV |
| Target Test Set | TDV |
| Case Western Reserve University | CWRU |

References

- Singh, N.; Hamid, Y.; Juneja, S.; Srivastava, G.; Dhiman, G.; Gadekallu, T.R.; Shah, M.A. Load balancing and service discovery using Docker Swarm for microservice based big data applications. J. Cloud Comput. 2023, 12, 1–9.

- Slathia, S.; Kumar, R.; Lone, M.; Viriyasitavat, W.; Kaur, A.; Dhiman, G. A Performance Evaluation of Situational-Based Fuzzy Linear Programming Problem for Job Assessment. In Proceedings of Third International Conference on Advances in Computer Engineering and Communication Systems; ICACECS 2022; Springer: Berlin/Heidelberg, Germany, 2023; pp. 655–667.

- Tan, D.; Meng, Y.; Tian, J.; Zhang, C.; Zhang, Z.; Yang, G.; Cui, S.; Hu, J.; Zhao, Z. Utilization of renewable and sustainable diesel/methanol/n-butanol (DMB) blends for reducing the engine emissions in a diesel engine with different pre-injection strategies. Energy 2023, 269, 126785.

- Tan, D.; Wu, Y.; Lv, J.; Li, J.; Ou, X.; Meng, Y.; Lan, G.; Chen, Y.; Zhang, Z. Performance optimization of a diesel engine fueled with hydrogen/biodiesel with water addition based on the response surface methodology. Energy 2023, 263, 125869.

- Hu, J.; Zhang, L. Risk based opportunistic maintenance model for complex mechanical systems. Expert Syst. Appl. 2014, 41, 3105–3115.

- Bao, J.; Qu, P.; Wang, H.; Zhou, C.; Zhang, L.; Shi, C. Implementation of various bowl designs in an HPDI natural gas engine focused on performance and pollutant emissions. Chemosphere 2022, 303, 135275.

- Shi, C.; Chai, S.; Di, L.; Ji, C.; Ge, Y.; Wang, H. Combined experimental-numerical analysis of hydrogen as a combustion enhancer applied to Wankel engine. Energy 2023, 263, 125896.

- Lei, Y.; Yang, B.; Jiang, X.; Jia, F.; Li, N.; Nandi, A.K. Applications of machine learning to machine fault diagnosis: A review and roadmap. Mech. Syst. Signal Process. 2020, 138, 106587.

- Peng, T.; Gui, W.; Wu, M.; Xie, Y. A fusion diagnosis approach to bearing faults. In Proceedings of the International Conference on Modeling and Simulation in Distributed Applications, Changsha, China, 25–27 September 2001; pp. 759–766.

- Lei, Y.; Zuo, M.J.; He, Z.; Zi, Y. A multidimensional hybrid intelligent method for gear fault diagnosis. Expert Syst. Appl. 2010, 37, 1419–1430.

- Stewart, G.W. On the early history of the singular value decomposition. SIAM Rev. 1993, 35, 551–566.

- Griffin, D.; Lim, J. Signal estimation from modified short-time Fourier transform. IEEE Trans. Acoust. Speech Signal Process. 1984, 32, 236–243.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Song, Y.-Y.; Ying, L. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; JMLR Workshop and Conference Proceedings. pp. 249–256.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Hua, Y.; Guo, J.; Zhao, H. Deep belief networks and deep learning. In Proceedings of the 2015 International Conference on Intelligent Computing and Internet of Things, Harbin, China, 17–18 January 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–4.

- Wu, Z.; Shen, C.; Van Den Hengel, A. Wider or deeper: Revisiting the resnet model for visual recognition. Pattern Recognit. 2019, 90, 119–133.

- Zhong, S.; Fu, S.; Lin, L. A novel gas turbine fault diagnosis method based on transfer learning with CNN. Measurement 2019, 137, 435–453.

- Zhao, B.; Zhang, X.; Zhan, Z.; Pang, S. Deep multi-scale convolutional transfer learning network: A novel method for intelligent fault diagnosis of rolling bearings under variable working conditions and domains. Neurocomputing 2020, 407, 24–38.

- Wang, K.; Wu, B. Power equipment fault diagnosis model based on deep transfer learning with balanced distribution adaptation. In Proceedings of the International Conference on Advanced Data Mining and Applications, Nanjing, China, 16–18 November 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 178–188.

- Wang, Y.; Wang, C.; Kang, S.; Xie, J.; Wang, Q.; Mikulovich, V. Network-combined broad learning and transfer learning: A new intelligent fault diagnosis method for rolling bearings. Meas. Sci. Technol. 2020, 31, 115013.

- Yang, B.; Lei, Y.; Jia, F.; Li, N.; Du, Z. A polynomial kernel induced distance metric to improve deep transfer learning for fault diagnosis of machines. IEEE Trans. Ind. Electron. 2019, 67, 9747–9757.

- Han, T.; Liu, C.; Yang, W.; Jiang, D. Deep transfer network with joint distribution adaptation: A new intelligent fault diagnosis framework for industry application. ISA Trans. 2020, 97, 269–281.

- Qian, W.; Li, S.; Yi, P.; Zhang, K. A novel transfer learning method for robust fault diagnosis of rotating machines under variable working conditions. Measurement 2019, 138, 514–525.

- Li, Y.; Song, Y.; Jia, L.; Gao, S.; Li, Q.; Qiu, M. Intelligent fault diagnosis by fusing domain adversarial training and maximum mean discrepancy via ensemble learning. IEEE Trans. Ind. Inform. 2020, 17, 2833–2841.

- Zhang, Y.; Ren, Z.; Zhou, S. A new deep convolutional domain adaptation network for bearing fault diagnosis under different working conditions. Shock. Vib. 2020, 2020, 8850976.

- Shao, H.; Zhang, X.; Cheng, J.; Yang, Y. Intelligent fault diagnosis of bearing using enhanced deep transfer auto-encoder. J. Mech. Eng. 2020, 56, 84–91.

- Li, C.-L.; Chang, W.-C.; Cheng, Y.; Yang, Y.; Póczos, B. MMD GAN: Towards deeper understanding of moment matching network. Adv. Neural Inf. Process. Syst. 2017, 30.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; PMLR: New York, NY, USA, 2015; pp. 448–456.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Li, X.; Su, K.; He, Q.; Wang, X. Research on fault diagnosis of highway bi-lstm based on attention mechanism. Eksploat. I Niezawodn.-Maint. Reliab. 2023, 25.

- Li, J.; He, D. A Bayesian optimization AdaBN-DCNN method with self-optimized structure and hyperparameters for domain adaptation remaining useful life prediction. IEEE Access 2020, 8, 41482–41501.

- Li, Y.; Wang, N.; Shi, J.; Liu, J.; Hou, X. Revisiting batch normalization for practical domain adaptation. arXiv 2016, arXiv:1603.04779.

- Huang, L. Normalization in Task-Specific Applications. In Normalization Techniques in Deep Learning; Springer: Berlin/Heidelberg, Germany, 2022; pp. 87–100.

- Jin, T.; Yan, C.; Chen, C.; Yang, Z.; Tian, H.; Guo, J. New domain adaptation method in shallow and deep layers of the CNN for bearing fault diagnosis under different working conditions. Int. J. Adv. Manuf. Technol. 2023, 124, 3701–3712.

| Algorithm | DCNN Algorithm Based on AdaBN Domain Adaptation |
|---|---|
| Input | Target-domain signals; the responses $x_j(m)$ of neuron $j$ of a BN layer in the trained DCNN, whose trained scale and shift parameters are $\gamma_j$ and $\beta_j$ |
| Output | The adjusted DCNN |
| For | each neuron $j$ and each target-domain signal $m$: compute the mean and variance of neuron $j$ over all target-domain samples, $\mu_j^t \leftarrow \mathbb{E}[x_j^t]$ and $\sigma_j^t \leftarrow \sqrt{\operatorname{Var}[x_j^t]}$; then calculate the output of the BN layer, $y_j(m) = \gamma_j \dfrac{x_j(m) - \mu_j^t}{\sigma_j^t} + \beta_j$ |
| End for | |
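The AdaBN step in the algorithm table (per neuron: mean and variance over all target samples, then $y_j(m) = \gamma_j (x_j(m) - \mu_j^t)/\sigma_j^t + \beta_j$ with the source-trained $\gamma_j$, $\beta_j$) can be sketched in plain Python for a single BN layer. This is an illustrative sketch, not the authors' code: the function name `adabn_layer`, the list-of-lists layout, and the small constant `eps` (added for numerical stability, as common BN implementations do) are assumptions.

```python
import math
from typing import List, Sequence


def adabn_layer(
    target_acts: Sequence[Sequence[float]],
    gamma: Sequence[float],
    beta: Sequence[float],
    eps: float = 1e-5,
) -> List[List[float]]:
    """Hypothetical sketch of the AdaBN step for one BN layer.

    target_acts[m][j] is the response x_j(m) of neuron j to target sample m.
    gamma/beta are the scale/shift learned on the source domain; they are
    reused unchanged, only the normalization statistics are re-estimated.
    eps is an assumed stability constant, not part of the algorithm table.
    """
    n = len(target_acts)
    out = [[0.0] * len(gamma) for _ in range(n)]
    for j in range(len(gamma)):
        column = [row[j] for row in target_acts]
        mu_t = sum(column) / n                            # mean over all target samples
        var_t = sum((x - mu_t) ** 2 for x in column) / n  # variance over all target samples
        sigma_t = math.sqrt(var_t + eps)
        for m in range(n):
            out[m][j] = gamma[j] * (column[m] - mu_t) / sigma_t + beta[j]
    return out


# Toy target-domain responses: 4 samples, 2 neurons (illustrative values only).
acts = [[5.0, -2.0], [7.0, -1.0], [6.0, -3.0], [8.0, -2.0]]
y = adabn_layer(acts, gamma=[2.0, 0.5], beta=[0.1, -0.3])
for row in y:
    print([round(v, 3) for v in row])
```

On the target data, each neuron's output now has mean $\beta_j$ and a standard deviation close to $\gamma_j$, regardless of how far the target statistics have drifted from the source ones. In a framework such as PyTorch, a comparable effect is often approximated by calling `reset_running_stats()` on each BN layer, setting its `momentum` to `None` (cumulative averaging), and forwarding the target data in training mode without updating any weights.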

| Number | Network Layer | Kernel Size/Stride | Number of Kernels | Output Size (Width × Depth) |
|---|---|---|---|---|
| 1 | Conv1 | 1 × 15/1 | 16 | 1 × 1010 |
| 2 | Conv2 | 1 × 3/1 | 32 | 1 × 1008 |
| 3 | Pool1 | 1 × 2/2 | 32 | 1 × 504 |
| 4 | Conv3 | 1 × 3/1 | 64 | 1 × 504 |
| 5 | Conv4 | 1 × 3/1 | 128 | 1 × 502 |
| 6 | AdaptiveMaxpool | 4 | 128 | 1 × 4 |
| 7 | Fc 1 | – | – | 512 |
| 8 | Fc 2 | – | – | 256 |
| 9 | Fc 3 | – | – | 256 |
| 10 | Fc 4 | – | – | 10 |
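The output lengths in the architecture table follow the usual formula for a 1-D convolution or pooling window, (L + 2p − k) // s + 1. The check below relies on two assumptions not stated in this section: the input signal has 1024 points (the length that makes Conv1 produce 1010), and only Conv3 is zero-padded (padding 1), which is what keeps its output at 504 while Conv4, with the same kernel, drops to 502.

```python
def out_len(length: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a 1-D convolution or pooling window (no dilation)."""
    return (length + 2 * padding - kernel) // stride + 1


INPUT_LEN = 1024  # assumption: inferred from Conv1 (1024 - 15 + 1 = 1010)

sizes = {}
sizes["Conv1"] = out_len(INPUT_LEN, 15)                # kernel 15, stride 1
sizes["Conv2"] = out_len(sizes["Conv1"], 3)            # kernel 3, stride 1
sizes["Pool1"] = out_len(sizes["Conv2"], 2, stride=2)  # kernel 2, stride 2
sizes["Conv3"] = out_len(sizes["Pool1"], 3, padding=1) # assumed padding 1
sizes["Conv4"] = out_len(sizes["Conv3"], 3)            # kernel 3, stride 1
flattened = 128 * 4  # 128 channels x adaptive-max-pooled length 4, fed to Fc 1

for name, length in sizes.items():
    print(f"{name}: 1 x {length}")
print("flattened features:", flattened)
```

Under these assumptions every length matches the table, and the 128 × 4 flattened feature vector has 512 elements.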

| Task | 0→1 | 0→2 | 0→3 | 1→2 | 1→3 | 2→3 |
|---|---|---|---|---|---|---|
| SVM | 70.34% | 74.23% | 71.23% | 68.45% | 73.12% | 68.49% |
| MLP | 85.24% | 82.93% | 80.98% | 78.21% | 84.82% | 88.49% |
| DCNN | 99.12% | 98.89% | 97.53% | 99.59% | 99.53% | 98.53% |
| DCNN (AdaBN) | 99.89% | 99.85% | 98.83% | 99.59% | 99.82% | 99.12% |
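To summarize the six CWRU transfer tasks, the per-method mean accuracy can be computed directly from the table. The values below are copied from it; the snippet is only an arithmetic aid and adds no new results.

```python
# Accuracies (%) per method for tasks 0->1, 0->2, 0->3, 1->2, 1->3, 2->3.
ACCURACY = {
    "SVM": [70.34, 74.23, 71.23, 68.45, 73.12, 68.49],
    "MLP": [85.24, 82.93, 80.98, 78.21, 84.82, 88.49],
    "DCNN": [99.12, 98.89, 97.53, 99.59, 99.53, 98.53],
    "DCNN (AdaBN)": [99.89, 99.85, 98.83, 99.59, 99.82, 99.12],
}

mean_acc = {name: sum(v) / len(v) for name, v in ACCURACY.items()}
gain = mean_acc["DCNN (AdaBN)"] - mean_acc["DCNN"]

for name, value in mean_acc.items():
    print(f"{name}: {value:.2f}%")
print(f"AdaBN gain over DCNN: {gain:.2f} percentage points")
```

Averaged over the six tasks, DCNN (AdaBN) reaches about 99.5% against about 98.9% for the plain DCNN, a gain of roughly 0.65 percentage points; the largest single-task gain is on 0→3 (98.83% versus 97.53%), while 1→2 is unchanged.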

| Task | 0–150 Epoch Mean (No_AdaBN) | 0–150 Epoch Mean (AdaBN) | 150–300 Epoch Mean (No_AdaBN) | 150–300 Epoch Mean (AdaBN) | 0–300 Epoch Max (No_AdaBN) | 0–300 Epoch Max (AdaBN) |
|---|---|---|---|---|---|---|
| 0→1 | 0.833 | 0.869 | 0.862 | 0.883 | 0.951 | 0.952 |
| 0→2 | 0.942 | 0.948 | 0.996 | 0.998 | 0.999 | 0.999 |
| 0→3 | 0.904 | 0.905 | 0.942 | 0.948 | 0.946 | 0.956 |
| 1→2 | 0.744 | 0.811 | 0.765 | 0.824 | 0.853 | 0.900 |
| 1→3 | 0.936 | 0.944 | 0.997 | 0.999 | 0.999 | 1.000 |
| 2→3 | 0.902 | 0.904 | 0.951 | 0.950 | 0.956 | 0.955 |
| 1→0 | 0.723 | 0.781 | 0.730 | 0.780 | 0.838 | 0.890 |
| 2→1 | 0.966 | 0.960 | 0.999 | 0.999 | 1.000 | 1.000 |
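The effect of AdaBN in the later phase of training can be read off the table as the per-task difference between the two 150–300 epoch means. The pairs below are copied from the table (task labels written as `0->1` for 0→1); the snippet only performs the subtraction and ranks the tasks.

```python
# (No_AdaBN, AdaBN) pairs for the 150-300 epoch mean, copied from the table.
LATE_MEAN = {
    "0->1": (0.862, 0.883),
    "0->2": (0.996, 0.998),
    "0->3": (0.942, 0.948),
    "1->2": (0.765, 0.824),
    "1->3": (0.997, 0.999),
    "2->3": (0.951, 0.950),
    "1->0": (0.730, 0.780),
    "2->1": (0.999, 0.999),
}

# Positive values mean AdaBN is higher than No_AdaBN for that task.
gains = {task: ada - base for task, (base, ada) in LATE_MEAN.items()}

for task, g in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{task}: {g:+.3f}")
```

The largest late-training gains are on 1→2 (about +0.059) and 1→0 (about +0.050). On 2→3 the difference is −0.001 and on 2→1 it is zero, so the benefit of AdaBN depends on the transfer task.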

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, X.; Yu, T.; Li, D.; Wang, X.; Shi, C.; Xie, Z.; Kong, X. A Migration Learning Method Based on Adaptive Batch Normalization Improved Rotating Machinery Fault Diagnosis. Sustainability 2023, 15, 8034. https://doi.org/10.3390/su15108034

Chicago/Turabian Style: Li, Xueyi, Tianyu Yu, Daiyou Li, Xiangkai Wang, Cheng Shi, Zhijie Xie, and Xiangwei Kong. 2023. "A Migration Learning Method Based on Adaptive Batch Normalization Improved Rotating Machinery Fault Diagnosis" Sustainability 15, no. 10: 8034. https://doi.org/10.3390/su15108034