Abstract

During the COVID-19 pandemic, social media has become an emerging platform for the public to find information, share opinions, and seek coping strategies. Vaccination, one of the most effective public health interventions for controlling the COVID-19 pandemic, has become a focus of public online discussion. Several studies have demonstrated that social bots actively participate in topic discussions on social media and express sentiments and emotions that affect human users. However, it is unclear whether social bots’ sentiments affect human users’ sentiments toward COVID-19 vaccines, and this study seeks to answer that question. We identified social bots and built an innovative computational framework, the BERT-CNN sentiment analysis framework, to classify tweet sentiments during the three most discussed stages of COVID-19 vaccines on Twitter between December 2020 and August 2021, thereby exploring the impact of social bots on humans’ online vaccine sentiments. The Granger causality test was then used to analyze whether there was a time-series causality between the sentiments of social bots and humans. The findings revealed that social bots can influence human sentiments about COVID-19 vaccines: their transmission of sentiments on social media, whether through positive or negative tweets, has a corresponding impact on human sentiments.

1. Introduction

Social media has become a major mode of global communication, characterized by its potential to reach large audiences and spread information rapidly [1,2]. In contrast to traditional media, posted content need not undergo editorial management or scientific vetting, and users frequently remain anonymous, which allows individuals to express their views directly [3,4]. As a result, social media carries a multitude of emotional expressions, false information, and rumors, which could steer public opinion away from reasoned discussion [5]. Researchers thus argue that it is important to analyze sentiments on social media [6,7,8].

In the face of health emergencies, social media has become an emerging platform for the public to share opinions, express attitudes, and seek coping strategies [9,10,11,12]. During the outbreak of coronavirus disease 2019 (COVID-19), intensive global efforts toward physical distancing and isolation to curb the spread of COVID-19 may have intensified the use of social media as a way for individuals to remain connected [13]. Researchers found that the public expressed on social media their fear of infection and shock at the contagiousness of COVID-19 [14], along with their feelings about infection control strategies [15,16,17]. However, there is established evidence that social bots (automated accounts controlled and manipulated by computer algorithms [18]) often express sentiments in online discussions on social media [7,19], which might manipulate or even distort online public opinion [20,21].

The arrival of COVID-19 vaccines has also sparked heated discussions and debates on social media [22]. Social media has long been a prominent sphere of vaccine debates [23]. With 217 million daily active users [24], Twitter is a convenient venue for discussing and debating public health topics, including vaccines and vaccination [2]. Both pro-vaccine and anti-vaccine information is prevalent on Twitter [2], and social bots disseminate anti-vaccine messages by masquerading as legitimate users, eroding the public consensus on vaccination [25].

A previous study found that social bots intervened with high intensity in COVID-19 vaccine-related topics during three stages [22]. Yet how social bots shape public sentiment and public opinion remains to be studied.

Against this backdrop, this study seeks to scrutinize whether the sentiments of social bots affect human users’ sentiments toward COVID-19 vaccines. This overall objective is divided into the following two sub-objectives.

The first sub-objective is to analyze the sentiments of both social bots and human users in discussions of COVID-19 vaccines across three stages. A semi-supervised deep learning model, the BERT-CNN sentiment analysis framework, was used to analyze the sentiments of tweets posted by social bots and humans. The second sub-objective is to test statistically whether there was a time-series causal relationship between the sentiments of social bots and humans.

To fulfil these objectives, the study is divided into seven sections. Following the introduction, the second section reviews studies that have described the sentiment engagement of social bots in online vaccine discussions and the methods of sentiment analysis on social media. The third section presents the data and methodology, including the collection and cleaning of tweets, the method of bot detection, the specific steps in building the sentiment analysis model, and an introduction to Granger causality. The findings of the empirical analysis are presented in the fourth section and discussed in the fifth. The sixth and seventh sections set out the study’s main conclusions, identify its limitations, and outline future directions.

2. Literature Review

2.1. Vaccine Discussions and Sentiments on Social Media

Vaccine discussions are widespread on social media, and their trends are linked to real-world events [26]. Gunaratne et al. found that anti-vaccination discourse on Twitter soared in 2015, coinciding with the 2014–2015 measles outbreak. After the COVID-19 outbreak, social media became one of the main spheres for debates and discussions related to COVID-19 and its vaccines [13]. In particular, discussions of COVID-19 vaccines are closely related to real-world events [22]. For example, as the Delta variant spread worldwide in June–July 2021, public discussion about vaccines on social media platforms mainly revolved around whether COVID-19 vaccines could protect against the Delta variant [22].

People tend to express their sentiments in online vaccine discussions [13]. Broadly, these sentiments either support or oppose vaccines and vaccination. Pro-vaccine and anti-vaccine topics have developed on social media and have attracted researchers’ attention. Kang et al. examined vaccine sentiments on social media by constructing and analyzing semantic networks of vaccine information from websites highly shared by Twitter users in the United States. The semantic network of positive vaccine sentiments showed greater cohesiveness in discourse than the larger, less-connected network of negative vaccine sentiments [6]. Melton et al. analyzed COVID-19 vaccine-related content and found that the sentiments expressed in these social media communities were more positive than negative but had shown no meaningful change since December 2020 [27].

Misinformation [8], individual opinions [23], and health literacy [28] affect public sentiments toward vaccination. Negative vaccine sentiments are generally accompanied by skepticism and distrust of government organizations [6]. Public sentiments related to vaccination may also be affected by technological factors. For example, information delivered by a chatbot that answers people’s questions about COVID-19 vaccines positively affects attitudes and intentions toward COVID-19 vaccines [29].

2.2. Sentiment Engagement of Social Bots in Online Vaccine Discussions

Because social bots under algorithmic control can send messages continuously and frequently [18], they can guide or even influence public sentiments on social media [30]. When interacting with humans, bots in a social system affect the way humans perceive social norms and increase human exposure to polarized views. For example, by disseminating large amounts of content, bots increase humans’ exposure to harmful content and inflammatory narratives, which polarizes their sentiments and emotions [31]. With the intervention of social bots, the discussion around a specific topic can easily become detached from the event and turn into a battle between two extreme emotions, which affects the public’s emotions and attitudes [20].

Since 2010, social bots have been active in political elections, business events, health communication, and other fields, exerting a strong influence on public sentiments [32,33]. In health-related topics, social bots have been accused of spreading misinformation, promoting polarized opinions, and manipulating public sentiments [7]. For example, social bots can influence public attitudes about the efficacy of cannabis in treating mental and physical problems [21]. During the COVID-19 pandemic, social bots spread and amplified false medical information and conspiracy theories, which impaired the public’s judgment and stimulated negative emotions [34].

Social bots have long been active in various vaccine topics, guiding public sentiments. The messages spread by some social bots were more divisive and political, and their dissemination of anti-vaccine content weakened the public consensus on vaccination and shook public confidence in it [25]. Egli’s research on anti-vaccine social bots suggests that, by spreading carefully fabricated vaccine rumors, bots can arouse people’s resistance to COVID-19 vaccines and promote the spread of conspiracy theories [35].

2.3. Social Media and Sentiment Analysis

Sentiment analysis is a computational process for categorizing users’ opinions or sentiments expressed in source texts [36]. It involves categorizing subjective opinions from text, audio, and video sources to determine polarities (e.g., positivity, negativity, and neutrality), emotions (e.g., anger, sadness, and happiness), or states of mind toward target topics, themes, or aspects of interest [37]. Automated sentiment analysis currently receives increasing attention from academia [38] and has become one of the key techniques for processing large amounts of social media data [39]. Methodologically, artificial intelligence (AI) techniques such as machine learning (ML), deep learning (DL), and natural language processing (NLP) can extract topics and sentiments from largely unstructured social media data [37].

Researchers have used automated sentiment analysis to analyze the sentiments and emotions of social bots [7,40]. Yuan et al. combined sentiment analysis with supervised machine learning (SML) and community detection on social networks to unveil the communication patterns of pro-vaccine and anti-vaccine social bots on Twitter [40]. Linguistic Inquiry and Word Count (LIWC) was used to analyze samples of social bot and individual accounts to investigate differences in sentiment and emotion components in COVID-19 pandemic discussions on Twitter [7].

The above studies show that social bots actively involved in topic discussions on social media can express sentiments [7,20] that affect human users [35]. Social bots also participate in COVID-19 vaccine discussions on Twitter [22]. However, it is unclear how social bots express sentiments in online discussions about COVID-19 vaccines and whether their sentiments affect human users’ sentiments toward COVID-19 vaccines. Building on previous work, this study used semi-supervised deep learning to analyze the sentiments of tweets posted by social bots and human users. The Granger causality test was then used to analyze whether there was a time-series causality between the sentiments of social bots and humans. The research questions (RQs) are as follows.

RQ1: What are the sentiments of social bots and human users in discussions of COVID-19 vaccines across the three stages?

RQ2: Is there a time-series causality between the sentiments of social bots and humans? How do the sentiments of social bots affect human users’ sentiments about COVID-19 vaccines?

3. Materials and Methodology

This study used the following materials and methods to achieve the research objectives. First, tweets related to COVID-19 vaccines were the empirical material; we obtained them through the official API provided by Twitter. Second, Botometer was used to score the users who posted the tweets and identify bot accounts. Third, an innovative computational framework based on semi-supervised deep learning, the BERT-CNN sentiment analysis framework, was built to identify the sentiments of tweets posted by bots and humans, respectively. Finally, the Granger causality test was used to test the relationship between social bots’ sentiments and human sentiments. The following subsections describe how each method was applied.

3.1. Data Collection and Cleaning

Discussions related to COVID-19 vaccines on Twitter were the subject of analysis in this work. Tweets were collected through the official Twitter API. The privacy of Twitter users was respected, and no personal information was collected or displayed.

According to Zhang et al. [22], the three peaks of COVID-19 vaccine discussion on Twitter started on 11 December 2020 (the FDA issued an emergency use authorization for the Pfizer vaccine to prevent COVID-19), 4 March 2021 (the Austrian authorities issued a statement and suspended vaccination with the AstraZeneca vaccine due to blood clots), and 10 June 2021 (CNN reported that the Delta variant had gone global). This study used the three dates as key time points for data collection and applied the Granger causality test to analyze the effects of bots’ sentiments on human vaccine sentiments. To obtain time series long enough for a valid Granger causality test and to explore the impact of social bots on human vaccine sentiments from a longer-term perspective, we collected data for 60 days starting from each date. The three periods are 11 December 2020 to 8 February 2021 (stage 1), 4 March 2021 to 2 May 2021 (stage 2), and 10 June 2021 to 8 August 2021 (stage 3).
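The 60-day windows can be checked with simple date arithmetic: each window includes its start date, so it ends 59 days later. The sketch below reproduces the three periods stated above and adds a hypothetical helper, `stage_of`, for assigning a tweet's posting date to a stage; the helper is illustrative and not part of the study's published pipeline.

```python
from __future__ import annotations

from datetime import date, timedelta

# Start dates of the three discussion peaks, as reported in the text.
STAGE_STARTS = {
    1: date(2020, 12, 11),
    2: date(2021, 3, 4),
    3: date(2021, 6, 10),
}
WINDOW_DAYS = 60  # window length in days, counting the start date itself


def stage_window(start: date, days: int = WINDOW_DAYS) -> tuple[date, date]:
    """Return the inclusive (first_day, last_day) of a window of `days` days."""
    return start, start + timedelta(days=days - 1)


def stage_of(day: date) -> int | None:
    """Hypothetical helper: map a posting date to its stage, or None if outside all windows."""
    for stage, start in STAGE_STARTS.items():
        first, last = stage_window(start)
        if first <= day <= last:
            return stage
    return None


for stage, start in STAGE_STARTS.items():
    first, last = stage_window(start)
    print(f"stage {stage}: {first} to {last}")
```

Running it prints `2020-12-11 to 2021-02-08`, `2021-03-04 to 2021-05-02`, and `2021-06-10 to 2021-08-08`, which matches the three periods listed in the text.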

In addition, the hashtags used for data collection were #Pfizer vaccine, #Moderna vaccine, #Johnson & Johnson vaccine, and #AstraZeneca vaccine (# denotes a hashtag on Twitter), because users often use the names of pharmaceutical companies to refer to their vaccines when talking about vaccines and vaccination on Twitter. Moreover, the Pfizer, Moderna, Johnson & Johnson, and AstraZeneca vaccines were among the 22 vaccines already approved worldwide [41], so discussions about them can represent vaccine discussions on Twitter.

3.2. Detection of Bots

We used Botometer to identify social bots. Botometer, a bot identification algorithm that integrates user behavior, relationship, and spatiotemporal data, was developed by the Institute of Network Science and is currently regarded as the most authoritative tool for identifying bot accounts [18,42,43]. As a machine learning framework, Botometer extracts more than 1000 features of user behavior; analyzes users’ tweeting frequency, behavioral characteristics, personal profiles, and social networks; and generates a score between 0 and 1 [44,45]. Scores closer to 1 indicate a higher likelihood of being a bot, while scores closer to 0 indicate a likely human [42,46,47]. Following previous studies [18,42,43], the threshold was set to 0.5: accounts scoring above 0.5 were defined as social bots, and the remaining accounts as humans.
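Once each account has a score, the 0.5 rule is a simple partition. The sketch below assumes the scores have already been retrieved into a dictionary keyed by account ID; it does not call Botometer, and which of Botometer's returned scores the study thresholded is not stated, so the `scores` input is an assumption. The account IDs are hypothetical.

```python
from __future__ import annotations

BOT_THRESHOLD = 0.5  # cut-off used in the study


def label_account(score: float, threshold: float = BOT_THRESHOLD) -> str:
    """Label one account from a bot score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must lie in [0, 1], got {score}")
    # Scores strictly above the threshold count as bots; everything else as human.
    return "bot" if score > threshold else "human"


def split_accounts(scores: dict[str, float]) -> tuple[set[str], set[str]]:
    """Partition account IDs into (bots, humans)."""
    bots = {uid for uid, s in scores.items() if label_account(s) == "bot"}
    humans = set(scores) - bots
    return bots, humans


# Hypothetical account IDs and scores, for illustration only.
example_scores = {"acct_a": 0.91, "acct_b": 0.12, "acct_c": 0.50}
bots, humans = split_accounts(example_scores)
print(sorted(bots), sorted(humans))  # ['acct_a'] ['acct_b', 'acct_c']
```

Because the text defines a bot as a score "over 0.5", an account scoring exactly 0.5 is treated as human here.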

3.3. Sentiment Analysis

Sentiment analysis was performed using a semi-supervised deep learning model. Following the default convention in natural language processing, sentiments were defined as positive, negative, or neutral expressions [48]. To verify the relationship between the sentiments of bots and humans, we examined the tweets that expressed sentiment more strongly. Therefore, positive and negative tweets were analyzed, and tweets classified as neither were excluded. The sentiment analysis was carried out in three phases.

3.3.1. Manual-Classified Training Dataset

The deep learning approach uses a fraction of the full data as a manually classified training dataset and trains classifiers to learn from examples, thus “supervising” the classification [39]. Accordingly, a small portion of the dataset (2.35% of the total number of tweets collected) was randomly selected and hand-labeled into four classes: positive, negative, neutral, and other. However, deciding a tweet’s sentiment class is subjective, as coders may interpret subtleties differently [39].
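Drawing the hand-labeling subset is a simple random sample without replacement. A minimal sketch, assuming tweets are identified by IDs and that the 2.35% share is rounded to the nearest whole tweet; the function name and seed are illustrative choices, not details from the study.

```python
import random


def sample_for_labeling(tweet_ids, fraction=0.0235, seed=2021):
    """Draw a simple random sample (without replacement) of tweet IDs for hand-labeling.

    fraction=0.0235 mirrors the 2.35% share reported in the text; the seed is arbitrary.
    """
    ids = list(tweet_ids)
    k = round(len(ids) * fraction)
    rng = random.Random(seed)  # local generator so the sample is reproducible
    return rng.sample(ids, k)
```

For example, `sample_for_labeling(range(10000))` returns 235 distinct IDs. Fixing the seed lets the same subset be regenerated for the coders.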

Before hand-labeling, the three coders received uniform training to ensure labeling accuracy. During coding, the three coders labeled tweets independently without interfering with each other. After coding, we randomly selected 500 of the labeled tweets and performed inter-coder reliability testing to evaluate the consistency of the three coders on the two sentiment categories for tweets from humans and social bots. We used Gwet’s AC1 agreement coefficient for the inter-rater reliability (IRR) analysis [49]. The inter-rater reliability scores were statistically significant (p < 0.05). Examples of the manually labeled results are presented in Table 1. Since tweets not judged as positive or negative were not included in our subjects, they were not counted in the subsequent analyses.
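Gwet’s AC1 compares observed agreement with a chance-agreement term that depends on how often each category is used overall. The sketch below follows Gwet’s published multi-rater formula: observed agreement $p_a$ averages $\sum_k r_{ik}(r_{ik}-1)/(r_i(r_i-1))$ over items, and chance agreement is $p_e = \frac{1}{q-1}\sum_k \pi_k(1-\pi_k)$. The input layout (one list of coder labels per tweet) and the example labels are assumptions for illustration; the significance test mentioned above is not included.

```python
from collections import Counter


def gwet_ac1(ratings, categories):
    """Gwet's AC1 agreement coefficient for two or more raters.

    ratings: one list per item, holding every rater's label for that item.
    categories: the full set of possible labels (at least two).
    """
    categories = list(categories)
    q = len(categories)
    if q < 2:
        raise ValueError("AC1 needs at least two categories")
    pi = dict.fromkeys(categories, 0.0)  # running sum of per-item category shares
    agreement_terms = []                 # per-item observed agreement
    for item in ratings:
        r = len(item)
        counts = Counter(item)
        unknown = set(counts) - set(categories)
        if unknown:
            raise ValueError(f"unknown labels: {unknown}")
        for c in categories:
            pi[c] += counts[c] / r
        if r >= 2:  # agreement is only defined when at least two raters labeled the item
            agreement_terms.append(
                sum(n * (n - 1) for n in counts.values()) / (r * (r - 1))
            )
    p_a = sum(agreement_terms) / len(agreement_terms)
    n_items = len(ratings)
    # Chance agreement: p_e = 1/(q-1) * sum_k pi_k * (1 - pi_k)
    p_e = sum((s / n_items) * (1 - s / n_items) for s in pi.values()) / (q - 1)
    return (p_a - p_e) / (1 - p_e)


# Hypothetical labels from three coders on two tweets.
example = [["pos", "pos", "neg"], ["neg", "neg", "neg"]]
print(gwet_ac1(example, ["pos", "neg"]))
```

For this toy input, $p_a = 2/3$ and $p_e = 4/9$, so AC1 is 0.4 (up to floating-point rounding).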

Table 1.

Examples of the hand-labeled results.

3.3.2. Building a BERT-CNN Sentiment Analysis Framework

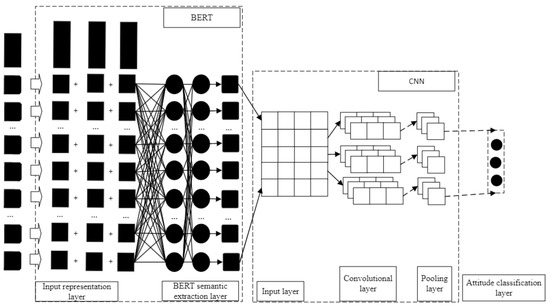

As shown in Figure 1, this work used Bidirectional Encoder Representations from Transformers (BERT), developed by the Google AI team, which has strong semantic comprehension. BERT is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers [50]. Because BERT is pre-trained on large natural language corpora, it can capture the contextual meaning of the words in a tweet and serves as a strong tweet encoder. Convolutional neural networks (CNNs) were used for text classification. As a popular deep learning model, the CNN has achieved great success in computer vision and speech recognition and has also performed well in text classification tasks [51,52,53]. Features were extracted from the tweet text with BERT initialized from the pre-trained parameters publicly released by Google. The contextual word representations output by the transformer were then fed into the CNN model for parameter learning, and the CNN’s parameters were updated during training.
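The data flow from BERT into the CNN can be illustrated with a forward pass in NumPy. This is a sketch of a standard CNN text-classification head (parallel convolutions over the token axis, ReLU, max-over-time pooling, then a softmax layer), not the authors’ implementation. The hidden size 768 (BERT-base), kernel sizes, filter count, and four output classes are assumptions, and the weights are random and untrained. In practice `token_states` would be BERT’s final-layer output for one tweet (for example, `last_hidden_state` from Hugging Face `BertModel`), and all weights would be learned by backpropagation.

```python
import numpy as np


def conv1d_valid(x, w, b):
    """'Valid' 1-D convolution over the token axis.

    x: (seq_len, hidden) token representations
    w: (n_filters, k, hidden) filters, each spanning k consecutive tokens
    b: (n_filters,) biases
    returns: (seq_len - k + 1, n_filters) feature map
    """
    k = w.shape[1]
    windows = np.stack([x[t:t + k] for t in range(x.shape[0] - k + 1)])
    return np.einsum("tkh,fkh->tf", windows, w) + b


class TextCNNHead:
    """CNN classification head applied to contextual token vectors (forward pass only)."""

    def __init__(self, hidden=768, kernel_sizes=(2, 3, 4), n_filters=100,
                 n_classes=4, seed=0):
        rng = np.random.default_rng(seed)
        # Untrained, randomly initialized weights; a real model would learn these.
        self.filters = [
            (rng.normal(0.0, 0.02, (n_filters, k, hidden)), np.zeros(n_filters))
            for k in kernel_sizes
        ]
        self.w_out = rng.normal(0.0, 0.02, (n_filters * len(kernel_sizes), n_classes))
        self.b_out = np.zeros(n_classes)
        self.max_k = max(kernel_sizes)

    def __call__(self, token_states):
        if token_states.shape[0] < self.max_k:
            raise ValueError("sequence shorter than the widest filter")
        pooled = []
        for w, b in self.filters:
            fmap = np.maximum(conv1d_valid(token_states, w, b), 0.0)  # ReLU
            pooled.append(fmap.max(axis=0))  # max-over-time pooling
        features = np.concatenate(pooled)
        logits = features @ self.w_out + self.b_out
        exp = np.exp(logits - logits.max())  # numerically stable softmax
        return exp / exp.sum()


# Stand-in for BERT's last-layer output for one 32-token tweet.
states = np.random.default_rng(1).normal(size=(32, 768))
probs = TextCNNHead()(states)
print(probs.shape)  # (4,)
```

Max-over-time pooling makes the feature vector length independent of tweet length, so tweets of different lengths can share one output layer.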

Figure 1.

A Sentiment analysis framework based on the BERT-CNN.

3.3.3. Model Validation and Machine Labels

The BERT-CNN must first be trained and its performance verified. Following standard deep learning practice, model development involves three steps: training, validation, and testing [54]. The training, validation, and test datasets were drawn from the hand-labeled corpus in proportions of 60%, 20%, and 20%, respectively [54]. The training dataset was used to train the BERT-CNN, the validation dataset was used to tune its parameters, and the test dataset was used to evaluate its performance, yielding recall, precision, and F score.
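A 60/20/20 split can be sketched as a shuffle followed by slicing. This assumes a plain random split, since the text does not say whether the split was stratified by class. Integer arithmetic fixes the split sizes, and the remainder goes to the test set, so every labeled tweet is used exactly once.

```python
import random


def split_60_20_20(examples, seed=0):
    """Shuffle labeled examples and split them into train/validation/test (60/20/20)."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_train = n * 60 // 100  # integer arithmetic avoids float rounding surprises
    n_val = n * 20 // 100
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder, so every example is used exactly once
    return train, val, test
```

With 1000 labeled tweets, this yields 600, 200, and 200 examples.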

The precision (p) of a machine classification method, also known as positive predictive value, is the fraction of tweets assigned to a class by the automated method that were assigned to the same class by manual classification [39]. A higher precision means the automated labels match the manual classification more closely [39]. The recall (r) of a machine classification method, also known as sensitivity, is the proportion of manually classified positive (negative) tweets that the automated method also classifies correctly [39]. The F score provides a more balanced assessment of the performance of sentiment classification methods [39] by combining precision and recall into a single quantity, their weighted harmonic mean [55]. In this work, the F score weighted precision and recall equally, as in the following formula.

F score = (2 × precision × recall) / (precision + recall)

The F score is bounded between 0 and 1 and provides a single measure of classification performance. A high F score means that the classification method has both high precision and high recall.
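The three measures can be computed per class from paired manual and machine labels. A minimal sketch, assuming labels are plain strings such as `"pos"` and `"neg"`; it returns 0.0 instead of dividing by zero when a class is never predicted or never present.

```python
def precision_recall_f(manual, predicted, label):
    """Precision, recall, and balanced F score for one class (e.g. 'pos')."""
    if len(manual) != len(predicted):
        raise ValueError("label lists must have the same length")
    tp = sum(1 for m, p in zip(manual, predicted) if m == label and p == label)
    n_predicted = sum(1 for p in predicted if p == label)  # machine said `label`
    n_manual = sum(1 for m in manual if m == label)        # coders said `label`
    precision = tp / n_predicted if n_predicted else 0.0
    recall = tp / n_manual if n_manual else 0.0
    f_score = (2 * precision * recall / (precision + recall)
               if precision + recall else 0.0)
    return precision, recall, f_score


# Hypothetical labels for five tweets.
manual = ["pos", "pos", "neg", "neg", "pos"]
predicted = ["pos", "neg", "neg", "pos", "pos"]
print(precision_recall_f(manual, predicted, "pos"))
```

Here two of the three tweets predicted positive are manually positive, and two of the three manually positive tweets are recovered, so precision, recall, and F score are each 2/3.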

As shown in Table 2, the BERT-CNN achieved an F score of 0.77. As shown in Table 3, its classification accuracy reached 0.92 for positive tweets and 0.72 for negative tweets. We also compared the BERT-CNN with the CNN model, the BERT model, and common machine learning methods, calculating their classification accuracy and the corresponding Kappa coefficients. The Kappa coefficient is at most 1: higher values indicate better agreement with the manual labels, and values near 0 indicate agreement no better than chance [50]. The BERT-CNN scored 0.61, higher than the 0.54 of CNN and the 0.57 of BERT. Consequently, the proposed BERT-CNN model achieves better classification accuracy.
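The text does not name the Kappa variant. Assuming it is Cohen’s kappa between machine and manual labels, the sketch below shows the computation: observed agreement corrected by the agreement expected from each labeler’s class frequencies. The example also shows that kappa can fall below 0 when agreement is worse than chance.

```python
from collections import Counter


def cohens_kappa(manual, predicted):
    """Cohen's kappa between manual and machine labels for the same tweets."""
    if len(manual) != len(predicted) or not manual:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(manual)
    observed = sum(m == p for m, p in zip(manual, predicted)) / n
    m_counts, p_counts = Counter(manual), Counter(predicted)
    # Agreement expected by chance given each labeler's class frequencies.
    expected = sum(m_counts[c] * p_counts[c] for c in m_counts) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when both label sets use a single class")
    return (observed - expected) / (1 - expected)


print(cohens_kappa(["pos", "pos", "neg", "neg"], ["pos", "neg", "neg", "neg"]))  # 0.5
print(cohens_kappa(["pos", "neg"], ["neg", "pos"]))  # -1.0
```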

Table 2.

Validation results of the BERT-CNN’s performance.

Table 3.

Accuracy of automatically classifying positive (negative) tweets by BERT-CNN.

3.4. Granger Causality

The Granger causality test, a hypothesis test for determining whether one time series is useful in forecasting another [56], is a more rigorous test of the causal relationship between two time series than simple correlation [57]. Granger causality rests on the principle that a cause must precede its effect and must contribute unique information about the future values of its effect. Although the Granger causality test originated in economics, it has been used to study the Internet and social media [58]. In this work, the Granger causality test was used to analyze whether there was a time-series causality between the sentiments of social bots and humans.
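The principle can be made concrete as a comparison of two regressions. The restricted model predicts $y_t$ from a constant and its own lags $y_{t-1},\dots,y_{t-p}$. The unrestricted model also includes $x_{t-1},\dots,x_{t-p}$. If the added lags of $x$ reduce the residual sum of squares enough, measured by an F statistic, then $x$ Granger-causes $y$. The NumPy sketch below is an illustration of that textbook test, not the EViews procedure used in the study. The p-value can be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df_num, df_den)`. The synthetic series stand in for the sentiment series, which might, as an assumption, be daily counts of positive or negative tweets.

```python
import numpy as np


def _lags(series, p):
    """Matrix whose columns are series[t-1], ..., series[t-p] for t = p, ..., n-1."""
    n = len(series)
    return np.column_stack([series[p - i:n - i] for i in range(1, p + 1)])


def _rss(X, target):
    """Residual sum of squares of an OLS fit of target on X."""
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    return float(resid @ resid)


def granger_f_test(y, x, p):
    """F test of H0: lags 1..p of x add no information about y beyond y's own lags.

    Returns (F statistic, numerator df, denominator df).
    """
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    target = y[p:]
    T = len(target)
    restricted = np.column_stack([np.ones(T), _lags(y, p)])
    unrestricted = np.column_stack([restricted, _lags(x, p)])
    rss_r = _rss(restricted, target)
    rss_u = _rss(unrestricted, target)
    df_num, df_den = p, T - 2 * p - 1
    f_stat = ((rss_r - rss_u) / df_num) / (rss_u / df_den)
    return f_stat, df_num, df_den


# Synthetic example: y follows x with a one-step delay.
rng = np.random.default_rng(0)
x = rng.normal(size=200)
y = np.zeros(200)
y[1:] = 0.8 * x[:-1] + 0.1 * rng.normal(size=199)
print(granger_f_test(y, x, 1))  # large F: x Granger-causes y
print(granger_f_test(x, y, 1))  # small F: y does not Granger-cause x
```

Running the test in both directions, as in the second call, is how a one-way relationship can be distinguished from a bidirectional one. `statsmodels.tsa.stattools.grangercausalitytests` provides a ready-made equivalent.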

4. Findings

As mentioned above, data were collected through the official Twitter API, totaling 238,137 vaccine-related tweets across the three stages. Of these, 96% were in English and 4% were in other languages. Because English is the main language on the Twitter platform, non-English tweets were excluded. In addition, duplicate tweets were removed, and hyperlinks and emoji were filtered out. After data cleaning, 142,883 valid tweets posted by 65,238 Twitter users remained. Table 4 presents the number of tweets in each stage and in total.
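The cleaning steps described above (removing hyperlinks and emoji, then dropping duplicates) can be sketched with regular expressions. This is an approximation under stated assumptions: the URL pattern matches `http(s)://` links, the emoji class covers only the main Unicode emoji and symbol blocks, and "duplicate" is taken to mean identical text after cleaning. The study’s exact rules are not given. Language filtering is omitted here.

```python
import re

URL_RE = re.compile(r"https?://\S+")
# Approximate emoji coverage: regional-indicator flags, the main pictograph
# blocks, miscellaneous symbols/dingbats, and the emoji variation selector.
EMOJI_RE = re.compile("[\U0001F1E6-\U0001F1FF\U0001F300-\U0001FAFF\u2600-\u27BF\uFE0F]")


def clean_text(text):
    """Strip hyperlinks and emoji, then normalize whitespace."""
    text = URL_RE.sub("", text)
    text = EMOJI_RE.sub("", text)
    return " ".join(text.split())


def clean_corpus(texts):
    """Clean every tweet and keep only the first occurrence of each cleaned text."""
    seen = set()
    cleaned = []
    for t in texts:
        c = clean_text(t)
        if c and c not in seen:
            seen.add(c)
            cleaned.append(c)
    return cleaned
```

Deduplicating after cleaning means that two tweets differing only in a shortened link or an emoji count as duplicates. This is a design choice, and a stricter pipeline might deduplicate on tweet IDs instead.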

Table 4.

Number of valid tweets for the three stages.

4.1. Bots Detection

Botometer Pro was used in this work, and accounts were scored in bulk through the API provided by Botometer. In total, 65,238 Twitter users participated in the discussion of COVID-19 vaccines in the three stages, posting 142,883 tweets. A Python program submitted all 65,238 accounts to Botometer to obtain their scores. Botometer identified 5784 social bots and 59,454 human users, accounting for 8.87% and 91.13% of all users, respectively. Social bots posted 15,716 tweets and humans posted 127,167 tweets, representing 11% and 89% of all tweets. We then compiled the number of users and tweets in each of the three stages. Table 5 presents these figures for social bots, and Table 6 presents them for human users.

Table 5.

Number of users and tweets for bots in the three stages.

Table 6.

Number of users and tweets for human users in the three stages.

4.2. Sentiments Analysis

The BERT-CNN classified the tweets published by social bots and humans in the three stages. Since tweets not judged as positive or negative were not included in our subjects, they were not counted. Table 7 presents the sentiment analysis results for social bots, and Table 8 presents those for human users. These data were used for the Granger causality test.

Table 7.

Social bots’ statistical results of the sentiment analysis.

Table 8.

Human users’ statistical results of the sentiment analysis.

4.3. Granger Causality

4.3.1. Augmented Dickey–Fuller Test

The Augmented Dickey–Fuller (ADF) test, an extension of the Dickey–Fuller (DF) test proposed by Dickey and Fuller, was used to test the stationarity of the time-series data and thus avoid spurious regressions. Unlike the DF test, the ADF test allows for a higher-order autoregressive process in the estimation.

In this work, EViews 10.0 was used to perform an ADF test on each variable to check its stationarity. The positive and negative sentiments of social bots are denoted SPos and SNeg, and those of humans HPos and HNeg, respectively. SPos, SNeg, HPos, and HNeg were all non-stationary, whereas all series became stationary at the 5% significance level after first-order differencing; that is, each series is integrated of order one, I(1). ΔSPos, ΔSNeg, ΔHPos, and ΔHNeg denote the first-order differences of the corresponding variables. Table 9 shows the results of the ADF test.
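The ADF statistic is the t ratio on $y_{t-1}$ in the regression $\Delta y_t = a + \gamma y_{t-1} + \sum_{j=1}^{k} \delta_j \Delta y_{t-j} + e_t$. A strongly negative value rejects the unit-root null. The NumPy sketch below uses a constant, no trend, and a fixed lag order `k`. These settings are assumptions, since the EViews options used in the study are not reported. The statistic must be compared with MacKinnon critical values (about −2.86 at 5% for a constant-only regression in large samples), not with the normal distribution. `statsmodels.tsa.stattools.adfuller` is a full implementation that also reports p-values and selects the lag order automatically.

```python
import numpy as np


def adf_tstat(series, k=1):
    """ADF t statistic (constant, no trend) with k lagged differences.

    Regression: dy_t = a + g * y_{t-1} + sum_j d_j * dy_{t-j} + e_t;
    returns the t ratio of g. Strongly negative values reject a unit root.
    """
    y = np.asarray(series, dtype=float)
    dy = np.diff(y)                  # dy[i] = y[i+1] - y[i]
    target = dy[k:]                  # dy_t
    level = y[k:-1]                  # y_{t-1}
    lagged = [dy[k - j:len(dy) - j] for j in range(1, k + 1)]  # dy_{t-j}
    X = np.column_stack([np.ones(len(target)), level, *lagged])
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    sigma2 = resid @ resid / (len(target) - X.shape[1])
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta[1] / np.sqrt(cov[1, 1])


# A random walk is I(1); its first difference is stationary.
rng = np.random.default_rng(0)
walk = np.cumsum(rng.normal(size=500))
print(adf_tstat(walk), adf_tstat(np.diff(walk)))
```

The differenced series typically yields a statistic far below the critical value, while the level series usually does not. This mirrors the pattern reported for SPos, SNeg, HPos, and HNeg and their first differences.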

Table 9.

Results of the ADF test.

4.3.2. Co-Integration Test

For the co-integration test, the Engle–Granger (EG) test was used to determine whether there was a co-integration relationship between two variables. The residual-based co-integration test proposed by Engle and Granger is widely used in the literature and is intuitive and easy to implement.

The co-integrating regression equation was estimated by ordinary least squares (OLS) in EViews 10.0, and the stationarity of the resulting residual series E was then tested with the ADF test. Of the 12 variable pairs tested for co-integration (the numeric suffix denotes the stage), SPos1-HPos1, SPos2-HPos2, and SNeg3-HNeg3 passed the test at the 0.1 significance level, indicating a long-term equilibrium relationship within each of these pairs.
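The two-step procedure can be sketched as follows. First, regress one series on a constant and the other series by OLS. Second, apply an ADF test to the residual series E. The ADF helper repeats the previous sketch so that this block runs on its own, with the same assumptions (constant, fixed lag order `k`). The residual statistic must be compared with Engle–Granger (MacKinnon) co-integration critical values. These are more negative than ordinary ADF values because the residuals come from an estimated regression. `statsmodels.tsa.stattools.coint` implements the full test with those critical values.

```python
import numpy as np


def adf_tstat(series, k=1):
    """ADF t statistic (constant, no trend) with k lagged differences."""
    y = np.asarray(series, dtype=float)
    dy = np.diff(y)
    target, level = dy[k:], y[k:-1]
    lagged = [dy[k - j:len(dy) - j] for j in range(1, k + 1)]
    X = np.column_stack([np.ones(len(target)), level, *lagged])
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    sigma2 = resid @ resid / (len(target) - X.shape[1])
    return beta[1] / np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[1, 1])


def engle_granger(y, x, k=1):
    """Two-step Engle-Granger test.

    Step 1: OLS of y on a constant and x (the co-integrating regression).
    Step 2: ADF t statistic on the residual series E.
    Returns (intercept, slope, residual ADF statistic).
    """
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(x, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    return beta[0], beta[1], adf_tstat(residuals, k)


# Synthetic co-integrated pair: both series share the random walk x.
rng = np.random.default_rng(0)
x = np.cumsum(rng.normal(size=500))
y = 2.0 + 0.5 * x + rng.normal(size=500)
print(engle_granger(y, x))
```

For this synthetic pair, the estimated slope is close to 0.5, and the residual statistic is strongly negative. Both variables follow a common stochastic trend, which is the long-term equilibrium relationship the test detects.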

As shown in Table 10, the EG statistics for SPos1-HPos1, SPos2-HPos2, and SNeg3-HNeg3 are −5.295, −3.269, and −3.876, respectively, all below the critical value at the 10% significance level. Therefore, the null hypothesis of no co-integration was rejected, and a co-integration relationship exists within each of the pairs SPos1-HPos1, SPos2-HPos2, and SNeg3-HNeg3.

Table 10.

Results of the co-integration test.

4.3.3. Granger Causality Test

The co-integration test showed that the variable pairs SPos1-HPos1, SPos2-HPos2, and SNeg3-HNeg3 are co-integrated. Although long-term equilibrium relationships exist, co-integration alone does not reveal whether there is Granger causality or in which direction it runs. Therefore, a Granger causality test was performed to determine the causal relationships between the variables. Table 11 shows the results of the Granger causality test.

Table 11.

Results of the Granger causality test.

The results of the Granger causality test showed that SPos1 and HPos1 Granger-caused each other, SPos2 Granger-caused HPos2, and SNeg3 Granger-caused HNeg3 (p < 0.05). That is, a bidirectional Granger-causal relationship existed between the positive sentiments of bots and human users from 11 December 2020 to 8 February 2021. From 4 March to 2 May 2021, the positive sentiments of social bots Granger-caused the positive sentiments of human users, but not vice versa. From 10 June 2021 to 8 August 2021, the negative sentiments of social bots Granger-caused the negative sentiments of human users, but not vice versa.

5. Discussion

The Granger causality test showed that social bots strongly influenced human sentiments about COVID-19 vaccines: their positive or negative tweets had a corresponding effect on the vaccine sentiments of human users on Twitter. However, the intervention of social bots was not a “magic bullet,” and their impact on human online vaccine sentiment may have been limited in some cases. We speculate that, beyond the intervention intensity of social bots discussed in previous research, the conditions under which social bots affect the public opinion environment may be closely related to real-world events and specific issues.

Stage 1 (from 11 December 2020 to 8 February 2021)

In stage 1, positive tweets from social bots increased the number of positive tweets from humans. Interestingly, the Granger causality test also showed that human tweets with positive vaccine sentiment increased the positive tweets of social bots. To explore this intriguing result, we examined the positive tweets of social bots and humans in this stage.

Because several of the earliest authorized COVID-19 vaccines appeared in quick succession during this stage, the public had high hopes for them but also worried about whether they were sufficiently effective and safe. Most human discussions concerned vaccine effectiveness, how to obtain the vaccine, whether supply was sufficient, and whether vaccination would harm the body in other ways [22]. Examining social bots’ public tweets, we found that social bots responded positively to these human concerns.

On the one hand, social bots stressed that the vaccines were safe enough and that the authorized vaccines had undergone rigorous safety testing. They cited the protection rates of various COVID-19 vaccines against the virus, claiming that vaccines would prevent COVID-19 and become a “game-changer” in the fight against the pandemic. On the other hand, by forwarding information from news media, social bots informed the public that COVID-19 vaccines had been approved for use in the United States, the United Kingdom, India, and other countries. The social bots also claimed that vaccine supply was sufficient and that anyone could be vaccinated conveniently after making an appointment. Through these messages, social bots effectively addressed the focus of public attention.

Positive-sentiment social bots spread their opinions mainly by retweeting human tweets, and they posted fewer tweets than in the other two stages. Human tweets thus provided social bots with content to spread. When the number of positive human tweets increased, the pool of text that social bots could forward expanded, which allowed them to spread more vaccination-promoting content. As relevant tweets accumulated, the frequency and number of social bots’ retweets increased [59]. Because information on Twitter is short and informal, it is harder for human users to recognize automatically generated content. Social bots were treated as ordinary human accounts, which in turn led humans and social bots to keep reposting each other’s tweets [60,61]. In this context, the growth in positive human tweets increased the positive tweets of social bots.

Stage 2 (from 4 March 2021 to 2 May 2021)

We initially conjectured that negative tweets from social bots in the second stage would have a noticeable effect. When the safety of a vaccine is questioned during a vaccination campaign that involves one’s health, vaccination can be significantly hindered, and the intervention of negative-sentiment social bots was likely to amplify this negative impact.

The discussion between humans and social bots in this stage focused mainly on whether vaccination could cause blood clots. Although the reported probability of vaccination-induced blood clots was extremely low [62], the reports caused public panic about vaccines. As the governments of Australia, the United Kingdom, the United States, and other countries suspended the AstraZeneca and Johnson & Johnson vaccines, vaccine safety once again became the focus of discussion [63,64]. The controversy over vaccine safety greatly affected the public’s willingness to be vaccinated [65], and negative sentiments about vaccines caused by blood clots spread on social media [66].

This stage had the most intensive activity of social bots with positive sentiments, which intervened strongly in the discussion of COVID-19 vaccines. Under the influence of social bots, human users tweeted more positive sentiments. In addition, the social bots frequently quoted authoritative sources such as the FDA. The content published and forwarded by social bots supported vaccination and was well grounded in science. Social bots also emphasized that the probability of vaccination-induced blood clots was low and that the potential benefits of vaccination outweighed its potential risks. Moreover, they cited governments' decisions to resume the use of the AstraZeneca and Johnson & Johnson vaccines as evidence of vaccine safety.

The content delivered by these social bots had a solid scientific basis. Relevant studies have shown that the probability of vaccine-triggered thrombosis was only about one in a million and that the incidence of thrombosis in the vaccinated population was lower than in the general population. Moreover, combined cases of thrombosis, thrombocytopenia and bleeding, and disseminated intravascular coagulation were even rarer [67]. Not getting vaccinated carried a risk of infection and increased the risk to families, communities, and even the entire country [68]. Under these circumstances, people may have adopted a more positive attitude toward vaccines to protect family members, friends, or other groups at risk [69].

In this context, social bots with positive sentiments acted as science popularizers, offering scientific explanations and even adding links to popular science videos, which allowed them to influence the public positively. Previous studies have shown that vaccine-related information was complex during the pandemic and that online science popularization could improve users' scientific literacy, reverse online rumors, and increase the public's willingness to be vaccinated [70]. This may explain why social bots could positively influence public sentiment at this stage.

Stage 3 (from 10 June 2021 to 8 August 2021)

At stage 3, the positive sentiments of social bots' tweets did not Granger-cause the positive sentiments of human users' tweets about vaccines. On the one hand, this was related to the declining share of positive tweets posted by social bots (positive tweets from social bots accounted for 9.75% of all positive tweets, the lowest among the three stages). On the other hand, it was related to the development of the COVID-19 pandemic.

At this stage, vaccine discussions on Twitter revolved around the spread of the Delta variant. Social bots with positive sentiments emphasized that the vaccines still worked but rarely specified how well they would prevent infection with the Delta variant. They posted how many vaccine doses were available at a given vaccination site and called on the public to get vaccinated during that day's opening hours. In addition, they stated that authorities were expanding tests of the vaccines' effectiveness against the variant. At stage 2, by contrast, the claim that vaccination rarely caused blood clots was supported by scientific research. Backed by such evidence, continuous science popularization reduced public panic [70], which gave social bots with positive sentiments a realistic basis for influencing human users.

However, in the absence of scientific support, the impact of social bots might be limited. At the time, there was insufficient scientific evidence that the existing vaccines were as effective against the Delta variant as against earlier variants [71]. Among people infected with the Delta variant, viral loads were similar in vaccinated and unvaccinated individuals [72,73]. These persistent viral loads after vaccination helped explain the Delta variant's rapid global spread despite increasing vaccination coverage [74]. The Delta variant was nearly twice as contagious as earlier variants and may have caused more severe illness. Fully vaccinated people could develop vaccine-breakthrough infections and spread the virus to others [75].

At this stage, social bots with negative sentiments mostly cited information from authoritative sources. Comparing the vaccines' earlier effectiveness against other variants with their effectiveness against the Delta variant, they argued that protection against Delta had declined and that vaccination was ineffective in controlling the COVID-19 pandemic. When the public believed that vaccines could not cope with the current situation, they may have been more susceptible to negative messages [76]. The perceived failure of the vaccines deepened public panic about the COVID-19 pandemic, and vaccine anxiety and anti-vaccine sentiment on social media continued to grow [77], creating more opportunities for social bots with negative sentiments to have a harmful impact.

6. Conclusions

As the public increasingly uses social media to obtain health information, there is growing interest in using interactive social media for public health promotion. Because the continued worldwide spread of the COVID-19 pandemic has made offline communication less frequent than in the past, the public increasingly discusses health information on social media.

Vaccination, one of the most successful public health interventions and an essential means of preventing infectious diseases, has become the focus of public online discussions during the outbreak. Social media has become an important channel for the public to obtain vaccine information and form attitudes toward vaccination. However, these discussions involve not only human users but also a large number of social bots, which can significantly affect the public's online vaccine sentiments and offline vaccination behaviors.

In this work, we analyzed 142,883 tweets from the three stages of COVID-19 vaccine discussion. We identified 8.87% of the accounts as social bots, which posted 15,716 tweets. We then constructed a BERT-CNN sentiment analysis framework to classify the sentiments of tweets from social bots and human users, and used Granger causality tests to examine whether the sentiments of social bots affected those of human users. We found that social bots with positive sentiments increased positive human tweets at stages 1 and 2. At stage 3, social bots with positive sentiments failed to influence human users, whereas social bots with negative sentiments increased the number of negative tweets by human users. Social bots significantly affected the spread of positive or negative sentiments and the corresponding sentiments of humans.
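The Granger causality step summarized above can be illustrated with a minimal sketch. It assumes that each stage has already been reduced to two aligned daily time series, for example the daily number of positive tweets from social bots and from human users. The function names, the lag order, and the synthetic data are hypothetical and are not the authors' configuration. The sketch fits two ordinary least-squares regressions of the "effect" series on its own lags (restricted model) and on its own lags plus lags of the "cause" series (unrestricted model), and then computes the standard statistic $F = \frac{(RSS_r - RSS_u)/p}{RSS_u/(n - 2p - 1)}$, where $p$ is the lag order and $n$ is the number of usable observations.

```python
import numpy as np


def lagged_design(series_list, max_lag):
    """Build an intercept column plus lags 1..max_lag of each series.

    Row i corresponds to time t = max_lag + i, so the first max_lag
    observations serve only as initial conditions.
    """
    n = len(series_list[0])
    cols = [np.ones(n - max_lag)]
    for s in series_list:
        s = np.asarray(s, dtype=float)
        for lag in range(1, max_lag + 1):
            cols.append(s[max_lag - lag : n - lag])  # value at t - lag
    return np.column_stack(cols)


def rss(X, y):
    """Residual sum of squares of an OLS fit of y on X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)


def granger_f(cause, effect, max_lag):
    """F statistic for H0: lags of `cause` do not help predict `effect`.

    Returns (F, df_num, df_den); compare F with the F(df_num, df_den)
    critical value to decide whether to reject H0.
    """
    cause = np.asarray(cause, dtype=float)
    effect = np.asarray(effect, dtype=float)
    y = effect[max_lag:]
    X_restricted = lagged_design([effect], max_lag)    # own lags only
    X_full = lagged_design([effect, cause], max_lag)   # own lags + cause lags
    rss_r = rss(X_restricted, y)
    rss_u = rss(X_full, y)
    df_num = max_lag
    df_den = len(y) - X_full.shape[1]                  # n - 2p - 1
    f_stat = ((rss_r - rss_u) / df_num) / (rss_u / df_den)
    return f_stat, df_num, df_den


# Hypothetical synthetic data: "human" sentiment follows "bot" sentiment with a one-day lag.
rng = np.random.default_rng(0)
bot_pos = rng.normal(size=200)
human_pos = np.empty(200)
human_pos[0] = rng.normal()
human_pos[1:] = 0.8 * bot_pos[:-1] + 0.5 * rng.normal(size=199)

f_xy, d1, d2 = granger_f(bot_pos, human_pos, max_lag=1)  # bots -> humans
f_yx, _, _ = granger_f(human_pos, bot_pos, max_lag=1)    # humans -> bots
print(f"bots->humans F={f_xy:.1f}, humans->bots F={f_yx:.2f}, df=({d1}, {d2})")
```

In practice the series should be checked for stationarity and the lag order chosen by an information criterion before testing; this sketch omits both steps. Library routines such as `statsmodels.tsa.stattools.grangercausalitytests` also report p-values directly.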

This work confirmed Broniatowski's view [25] that social bots do not have a stable identity in their online influence on the topic of vaccination. In other words, social bots are not necessarily "good" or "bad" in online discussions of COVID-19 vaccines. This implies that when traditional means of science communication fail in future public health emergencies, some social bots can serve as communicators of correct health information. Social bots can be used to refute rumors, popularize science-based behaviors, and help people form scientific concepts of health. However, the intervention of social bots in the public opinion environment is not a "magic bullet": whether "good" or "bad" bots produce their intended effect depends on how the COVID-19 pandemic develops.

7. Limitations and Future Directions

This work analyzed the impact of social bots on human sentiments about vaccines at the three most discussed stages of COVID-19 vaccines on Twitter from December 2020 to August 2021. However, we did not analyze data after August 2021. Over time, people's sentiments toward vaccines have gradually stabilized, and vaccination, as a simple and effective means of epidemic prevention and control, has been accepted by more and more people. Even in the Middle East, the region with the lowest willingness to be vaccinated [68], most people in many countries have already received at least one dose of the vaccine, for example in Iran (75.3%), Saudi Arabia (74.1%), and Turkey (67.9%) [78]. Although discussion of COVID-19 vaccines continues, its volume has plateaued. As the pandemic continues and new variants such as Omicron emerge, existing vaccines have, to some degree, failed to provide adequate protection. How the public will feel about COVID-19 vaccines in this situation, and whether social bots will influence human vaccine sentiments, will be the focus of our follow-up research.

Author Contributions

Conceptualization, M.Z. and J.L.; Methodology, J.L., Z.C., and X.Q.; Software, Z.C. and X.Q.; Validation, M.Z. and J.L.; Formal Analysis, M.Z. and J.L.; Data Curation, Z.C.; Writing—Original Draft Preparation, X.Q. and Z.C.; Writing—Review & Editing, M.Z. and J.L.; Visualization, X.Q.; Supervision, J.L. and M.Z.; Project Administration, M.Z.; Funding Acquisition, M.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Social Science Foundation Youth Project "Research on the Influence of Social Bots on the Climate of False Opinions in International Communication" and a major project of the Jiangsu Provincial Department of Education, "Research on the Influence of Social Bots on the Climate of False Opinions in International Communication and its Regulation" (Grant Nos. 21CXW028 and 2021SJZDA151). This work is a phased outcome of the Collaborative Innovation Center for New Urbanization and Social Governance of Soochow University.

Institutional Review Board Statement

The study was conducted in accordance with China's Personal Information Protection Law and approved by the National Social Science Fund of China (21CXW028). Ethical review and approval were waived for this retrospective study; the personal data protection statement is available on the project's website (https://rtgksm.wordpress.com/, in Chinese, accessed on 4 May 2022).

Informed Consent Statement

Informed consent was waived for this retrospective study, while the personal data protection statement is available on the project’s website (https://rtgksm.wordpress.com/, in Chinese, accessed on 4 May 2022).

Data Availability Statement

The data presented in this study are available on request from the first author. The data are not publicly available due to privacy protection.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Betsch, C.; Brewer, N.T.; Brocard, P.; Davies, P.; Gaissmaier, W.; Haase, N.; Leask, J.; Renkewitz, F.; Renner, B.; Reyna, V.F.; et al. Opportunities and challenges of Web 2.0 for vaccination decisions. Vaccine 2012, 30, 3727–3733.

- Blankenship, E.B.; Goff, M.E.; Yin, J.; Tse, Z.T.H.; Fu, K.-W.; Liang, H.; Saroha, N.; Fung, I.C.H. Sentiment, Contents, and Retweets: A Study of Two Vaccine-Related Twitter Datasets. Perm. J. 2018, 22, 17–138.

- Meleo-Erwin, Z.; Basch, C.; MacLean, S.A.; Scheibner, C.; Cadorett, V. “To each his own”: Discussions of vaccine decision-making in top parenting blogs. Hum. Vaccines Immunother. 2017, 13, 1895–1901.

- Charles-Smith, L.E.; Reynolds, T.L.; Cameron, M.A.; Conway, M.; Lau, E.H.Y.; Olsen, J.M.; Pavlin, J.A.; Shigematsu, M.; Streichert, L.; Suda, K.J.; et al. Using Social Media for Actionable Disease Surveillance and Outbreak Management: A Systematic Literature Review. PLoS ONE 2015, 10, e0139701.

- Resnick, P.; Carton, S.; Park, S.; Shen, Y.; Zeffer, N.R. A system for analyzing the impact of rumors and corrections in social media. In Proceedings of the Computational Journalism Conference, New York, NY, USA, 24–25 October 2014; Volume 5.

- Kang, G.; Ewing-Nelson, S.R.; Mackey, L.; Schlitt, J.T.; Marathe, A.; Abbas, K.; Swarup, S. Semantic network analysis of vaccine sentiment in online social media. Vaccine 2017, 35, 3621–3638.

- Shi, W.; Liu, D.; Yang, J.; Zhang, J.; Wen, S.; Su, J. Social Bots’ Sentiment Engagement in Health Emergencies: A Topic-Based Analysis of the COVID-19 Pandemic Discussions on Twitter. Int. J. Environ. Res. Public Health 2020, 17, 8701.

- Flores-Ruiz, D.; Elizondo-Salto, A.; de la O Barroso-González, M. Using Social Media in Tourist Sentiment Analysis: A Case Study of Andalusia during the COVID-19 Pandemic. Sustainability 2021, 13, 3836.

- Tang, L.; Bie, B.; Zhi, D. Tweeting about measles during stages of an outbreak: A semantic network approach to the framing of an emerging infectious disease. Am. J. Infect. Control 2018, 46, 1375–1380.

- Lazard, A.J.; Scheinfeld, E.; Bernhardt, J.M.; Wilcox, G.B.; Suran, M. Detecting themes of public concern: A text mining analysis of the Centers for Disease Control and Prevention’s Ebola live Twitter chat. Am. J. Infect. Control 2015, 43, 1109–1111.

- Mollema, L.; Harmsen, I.A.; Broekhuizen, E.; Clijnk, R.; De Melker, H.; Paulussen, T.; Kok, G.; Ruiter, R.; Das, E. Disease Detection or Public Opinion Reflection? Content Analysis of Tweets, Other Social Media, and Online Newspapers during the Measles Outbreak in the Netherlands in 2013. J. Med. Internet Res. 2015, 17, e3863.

- Han, X.; Wang, J.; Zhang, M.; Wang, X. Using Social Media to Mine and Analyze Public Opinion Related to COVID-19 in China. Int. J. Environ. Res. Public Health 2020, 17, 2788.

- Puri, N.; Coomes, E.A.; Haghbayan, H.; Gunaratne, K. Social media and vaccine hesitancy: New updates for the era of COVID-19 and globalized infectious diseases. Hum. Vaccines Immunother. 2020, 16, 2586–2593.

- Medford, R.J.; Saleh, S.N.; Sumarsono, A.; Perl, T.M.; Lehmann, C.U. An “Infodemic”: Leveraging High-Volume Twitter Data to Understand Early Public Sentiment for the Coronavirus Disease 2019 Outbreak. Open Forum Infect. Dis. 2020, 7, ofaa258.

- Barkur, G.; Vibha, G.B.K. Sentiment analysis of nationwide lockdown due to COVID 19 outbreak: Evidence from India. Asian J. Psychiatry 2020, 51, 102089.

- Pastor, C.K. Sentiment analysis of Filipinos and effects of extreme community quarantine due to coronavirus (COVID-19) Pandemic. SSRN Electron. J. 2020, 7, 91–95.

- Dubey, A.D.; Tripathi, S. Analysing the Sentiments towards Work-from-Home Experience during COVID-19 Pandemic. J. Innov. Manag. 2020, 8, 13–19.

- Ferrara, E.; Varol, O.; Davis, C.; Menczer, F.; Flammini, A. The rise of social bots. Commun. ACM 2016, 59, 96–104.

- Varol, O.; Ferrara, E.; Davis, C.; Menczer, F.; Flammini, A. Online human-bot interactions: Detection, estimation, and characterization. In Proceedings of the International AAAI Conference on Web and Social Media, Montreal, QC, Canada, 15–18 May 2017.

- Edwards, C.; Edwards, A.; Spence, P.R.; Shelton, A.K. Is that a bot running the social media feed? Testing the differences in perceptions of communication quality for a human agent and a bot agent on Twitter. Comput. Hum. Behav. 2014, 33, 372–376.

- Allem, J.-P.; Escobedo, P.; Dharmapuri, L. Cannabis Surveillance with Twitter Data: Emerging Topics and Social Bots. Am. J. Public Health 2020, 110, 357–362.

- Zhang, M.; Qi, X.; Chen, Z.; Liu, J. Social Bots’ Involvement in the COVID-19 Vaccine Discussions on Twitter. Int. J. Environ. Res. Public Health 2022, 19, 1651.

- Benis, A.; Seidmann, A.; Ashkenazi, S. Reasons for Taking the COVID-19 Vaccine by US Social Media Users. Vaccines 2021, 9, 315.

- Omnicore Agency. Twitter by the Numbers: Stats, Demographics & Fun Facts. Available online: https://www.omnicoreagency.com/twitter-statistics/ (accessed on 22 February 2022).

- Broniatowski, D.A.; Jamison, A.M.; Qi, S.; Alkulaib, L.; Chen, T.; Benton, A.; Quinn, S.C.; Dredze, M. Weaponized Health Communication: Twitter Bots and Russian Trolls Amplify the Vaccine Debate. Am. J. Public Health 2018, 108, 1378–1384.

- Gunaratne, K.; Coomes, E.A.; Haghbayan, H. Temporal trends in anti-vaccine discourse on Twitter. Vaccine 2019, 37, 4867–4871.

- Melton, C.A.; Olusanya, O.A.; Ammar, N.; Shaban-Nejad, A. Public sentiment analysis and topic modeling regarding COVID-19 vaccines on the Reddit social media platform: A call to action for strengthening vaccine confidence. J. Infect. Public Health 2021, 14, 1505–1512.

- Montagni, I.; Ouazzani-Touhami, K.; Mebarki, A.; Texier, N.; Schück, S.; Tzourio, C.; The CONFINS Group. Acceptance of a COVID-19 vaccine is associated with ability to detect fake news and health literacy. J. Public Health 2021, 43, 695–702.

- Altay, S.; Hacquin, A.-S.; Chevallier, C.; Mercier, H. Information delivered by a chatbot has a positive impact on COVID-19 vaccines attitudes and intentions. J. Exp. Psychol. Appl. 2021, 1–11.

- Stieglitz, S.; Brachten, F.; Ross, B.; Jung, A.K. Do social bots dream of electric sheep? A categorization of social media bot accounts. In Proceedings of the Australasian Conference on Information Systems, Hobart, TAS, Australia, 4–6 December 2017.

- Stella, M.; Ferrara, E.; De Domenico, M. Bots increase exposure to negative and inflammatory content in online social systems. Proc. Natl. Acad. Sci. USA 2018, 115, 12435–12440.

- Mustafaraj, E.; Metaxas, P. From Obscurity to Prominence in Minutes: Political Speech and Real-Time Search. In Proceedings of the WebSci10: Extending the Frontiers of Society On-Line, Southampton, UK, 26–27 April 2010.

- Ratkiewicz, J.; Conover, M.; Meiss, M.; Gonçalves, B.; Flammini, A.; Menczer, F. Detecting and tracking political abuse in social media. In Proceedings of the International AAAI Conference on Web and Social Media, Barcelona, Spain, 17–21 July 2011; Volume 5, pp. 297–304.

- Kim, A. Nearly Half of the Twitter Accounts Discussing ‘Reopening America’ May Be Bots, Researchers Say. CNN. 22 May 2020. Available online: https://edition.cnn.com/2020/05/22/tech/twitter-bots-trnd/index.html (accessed on 10 September 2020).

- Egli, A.; Rosati, P.; Lynn, T.; Sinclair, G. Bad Robot: A Preliminary Exploration of the Prevalence of Automated Software Programmes and Social Bots in the COVID-19 #Antivaxx Discourse on Twitter. In Proceedings of the International Conference on Digital Society, Nice, France, 18–22 July 2021; pp. 18–22.

- Tiwari, S.; Verma, A.; Garg, P.; Bansal, D. Social Media Sentiment Analysis on Twitter Datasets. In Proceedings of the 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Tamil Nadu, India, 6–7 March 2020; IEEE: New York, NY, USA, 2020; pp. 925–927.

- Hussain, A.; Tahir, A.; Hussain, Z.; Sheikh, Z.; Gogate, M.; Dashtipour, K.; Ali, A.; Sheikh, A. Artificial Intelligence-Enabled Analysis of Public Attitudes on Facebook and Twitter Toward COVID-19 Vaccines in the United Kingdom and the United States: Observational Study. J. Med. Internet Res. 2021, 23, e26627.

- Chen, H.; Zimbra, D. AI and Opinion Mining. IEEE Intell. Syst. 2010, 25, 74–80.

- Dhaoui, C.; Webster, C.M.; Tan, L.P. Social media sentiment analysis: Lexicon versus machine learning. J. Consum. Mark. 2017, 34, 480–488.

- Yuan, X.; Schuchard, R.J.; Crooks, A.T. Examining Emergent Communities and Social Bots within the Polarized Online Vaccination Debate in Twitter. Soc. Media Soc. 2019, 5, 1–12.

- The First Seven COVID-19 Vaccines to Have Been Approved Internationally Received Orders for over 15 Billion Doses Altogether. 15 September 2021. Available online: https://www-statista-com.ezproxy.gavilan.edu/study/86257/covid19-vaccination-status-quo/ (accessed on 13 October 2021).

- Badawy, A.; Lerman, K.; Ferrara, E. Who Falls for Online Political Manipulation? In Companion Proceedings of the 2019 World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; Association for Computing Machinery; pp. 162–168.

- Luceri, L.; Deb, A.; Giordano, S.; Ferrara, E. Evolution of bot and human behavior during elections. First Monday 2019, 24, 1–12.

- Davis, C.A.; Varol, O.; Ferrara, E.; Flammini, A.; Menczer, F. BotOrNot: A system to evaluate social bots. In Proceedings of the 25th International Conference Companion on World Wide Web, Montreal, QC, Canada, 11–15 April 2016; pp. 273–274.

- Yang, K.; Varol, O.; Davis, C.A.; Ferrara, E.; Flammini, A.; Menczer, F. Arming the public with artificial intelligence to counter social bots. Hum. Behav. Emerg. Technol. 2019, 1, 48–61.

- Ferrara, E. Measuring Social Spam and the Effect of Bots on Information Diffusion in Social Media. In Complex Spreading Phenomena in Social Systems; Springer: Berlin/Heidelberg, Germany, 2018; pp. 229–255.

- Shao, C.; Ciampaglia, G.L.; Varol, O.; Yang, K.-C.; Flammini, A.; Menczer, F. The spread of low-credibility content by social bots. Nat. Commun. 2018, 9, 4787.

- Li, Z.; Fan, Y.; Jiang, B.; Lei, T.; Liu, W. A survey on sentiment analysis and opinion mining for social multimedia. Multimedia Tools Appl. 2018, 78, 6939–6967.

- Wongpakaran, N.; Wongpakaran, T.; Wedding, D.; Gwet, K.L. A comparison of Cohen’s Kappa and Gwet’s AC1 when calculating inter-rater reliability coefficients: A study conducted with personality disorder samples. BMC Med. Res. Methodol. 2013, 13, 61.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. arXiv 2014, arXiv:1408.5882.

- Conneau, A.; Schwenk, H.; Barrault, L.; Lecun, Y. Very deep convolutional networks for natural language processing. arXiv 2016, arXiv:1606.01781.

- Wang, J.; Wang, Z.; Zhang, D.; Yan, J. Combining Knowledge with Deep Convolutional Neural Networks for Short Text Classification. In Proceedings of the International Joint Conference on Artificial Intelligence, Melbourne, VIC, Australia, 19–25 August 2017; pp. 2915–2921.

- Lee, S.B.; Gui, X.; Manquen, M.; Hamilton, E.R. Use of Training, Validation, and Test Sets for Developing Automated Classifiers in Quantitative Ethnography. In Proceedings of the Communications in Computer and Information Science, Dresden, Germany, 10–11 October 2019; Springer: Cham, Switzerland, 2019; pp. 117–127.

- Cohen, W.W.; Singer, Y. Context-sensitive learning methods for text categorization. ACM Trans. Inf. Syst. 1999, 17, 141–173.

- Granger, C.W.J. Investigating Causal Relations by Econometric Models and Cross-spectral Methods. Econometrica 1969, 37, 424–438.

- Towers, S.; Afzal, S.; Bernal, G.; Bliss, N.; Brown, S.; Espinoza, B.; Jackson, J.; Judson-Garcia, J.; Khan, M.; Lin, M.; et al. Mass Media and the Contagion of Fear: The Case of Ebola in America. PLoS ONE 2015, 10, e0129179.

- Neuman, W.R.; Guggenheim, L.; Jones-Jang, M.; Bae, S.Y. The Dynamics of Public Attention: Agenda-Setting Theory Meets Big Data. J. Commun. 2014, 64, 193–214.

- Stieglitz, S.; Dang-Xuan, L. Political communication and influence through microblogging—An empirical analysis of sentiment in Twitter messages and retweet behavior. In Proceedings of the 2012 45th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2012; IEEE: New York, NY, USA, 2012; pp. 3500–3509.

- Bessi, A.; Ferrara, E. Social bots distort the 2016 U.S. Presidential election online discussion. First Monday 2016, 21, 7–11.

- Freitas, C.; Benevenuto, F.; Ghosh, S.; Veloso, A. Reverse Engineering Socialbot Infiltration Strategies in Twitter. In Proceedings of the 2015 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Paris, France, 25–28 August 2015; pp. 25–32.

- Tregoning, J.S.; Flight, K.E.; Higham, S.L.; Wang, Z.; Pierce, B.F. Progress of the COVID-19 vaccine effort: Viruses, vaccines and variants versus efficacy, effectiveness and escape. Nat. Rev. Immunol. 2021, 21, 626–636.

- Weintraub, K. CDC Reports 13 Additional Cases of Blood Clots Linked to J&J COVID-19 Vaccine. All Happened Before 11-Day Pause in Its Use. Available online: https://www.usatoday.com/story/news/health/2021/05/12/j-j-covid-19-vaccine-cdc-additional-cases-blood-clots/5063485001/ (accessed on 5 December 2021).

- Centers for Disease Control and Prevention. CDC Recommends Use of Johnson & Johnson’s Janssen COVID-19 Vaccine Resume. Available online: https://www.cdc.gov/coronavirus/2019-ncov/vaccines/safety/JJUpdate.html (accessed on 13 July 2021).

- Raciborski, F.; Jankowski, M.; Gujski, M.; Pinkas, J.; Samel-Kowalik, P. Changes in Attitudes towards the COVID-19 Vaccine and the Willingness to Get Vaccinated among Adults in Poland: Analysis of Serial, Cross-Sectional, Representative Surveys, January–April 2021. Vaccines 2021, 9, 832.

- Jemielniak, D.; Krempovych, Y. #AstraZeneca vaccine disinformation on Twitter. medRxiv 2021, 1–14.

- Mahase, E. COVID-19: AstraZeneca vaccine is not linked to increased risk of blood clots, finds European Medicine Agency. BMJ 2021, 372, n774.

- Sallam, M. COVID-19 Vaccine Hesitancy Worldwide: A Concise Systematic Review of Vaccine Acceptance Rates. Vaccines 2021, 9, 160.

- Loomba, S.; de Figueiredo, A.; Piatek, S.J.; de Graaf, K.; Larson, H.J. Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat. Hum. Behav. 2021, 5, 337–348.

- Wang, X.; Li, Y.; Li, J.; Liu, Y.; Qiu, C. A rumor reversal model of online health information during the Covid-19 epidemic. Inf. Process. Manag. 2021, 58, 102731.

- UK Health Security Agency. SARS-CoV-2 Variants of Concern and Variants under Investigation in England. Available online: https://www.gov.uk/government/organisations/public-health-england (accessed on 28 January 2022).

- Brown, C.M.; Vostok, J.; Johnson, H.; Burns, M.; Gharpure, R.; Sami, S.; Sabo, R.T.; Hall, N.; Foreman, A.; Schubert, P.L.; et al. Outbreak of SARS-CoV-2 Infections, Including COVID-19 Vaccine Breakthrough Infections, Associated with Large Public Gatherings—Barnstable County, Massachusetts, July 2021. MMWR Morb. Mortal. Wkly. Rep. 2021, 70, 1059–1062.

- Pouwels, K.B.; Pritchard, E.; Matthews, P.C.; Stoesser, N.; Eyre, D.W.; Vihta, K.-D.; House, T.; Hay, J.; Bell, J.I.; Newton, J.N.; et al. Effect of Delta variant on viral burden and vaccine effectiveness against new SARS-CoV-2 infections in the UK. Nat. Med. 2021, 27, 2127–2135.

- Eyre, D.W.; Taylor, D.; Purver, M.; Chapman, D.; Fowler, T.; Pouwels, K.B.; Walker, A.S.; Peto, T.E. Effect of Covid-19 Vaccination on Transmission of Alpha and Delta Variants. N. Engl. J. Med. 2022, 386, 744–756.

- Mayo Clinic Staff. Do COVID-19 Vaccines Protect against the Variants? Available online: https://www.mayoclinic.org/coronavirus-covid-19/covid-variant-vaccine#:~:text=While%20research%20suggests%20that%20COVID,protection%20against%20severe%20COVID%2D19 (accessed on 4 March 2022).

- Jamison, A.M.; Broniatowski, D.A.; Dredze, M.; Sangraula, A.; Smith, M.C.; Quinn, S.C. Not just conspiracy theories: Vaccine opponents and proponents add to the COVID-19 ‘infodemic’ on Twitter. Harv. Kennedy Sch. Misinf. Rev. 2020, 1, 1–24.

- Mangla, S.; Makkia, F.T.Z.; Pathak, A.K.; Robinson, R.; Sultana, N.; Koonisetty, K.S.; Karamehic-Muratovic, A.; Nguyen, U.-S.D.; Rodriguez-Morales, A.J.; Sanchez-Duque, J.A.; et al. COVID-19 Vaccine Hesitancy and Emerging Variants: Evidence from Six Countries. Behav. Sci. 2021, 11, 148.

- Our World in Data. Coronavirus (COVID-19) Vaccinations. Available online: https://ourworldindata.org/covid-vaccinations (accessed on 10 March 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).