How to Assess Sustainable Planning Processes of Buildings? A Maturity Assessment Model Approach for Designers

Abstract

1. Introduction

2. Materials and Methods

2.1. Software Process Improvement and Capability dEtermination (SPiCE)

2.2. Building Certification System DGNB

3. Results

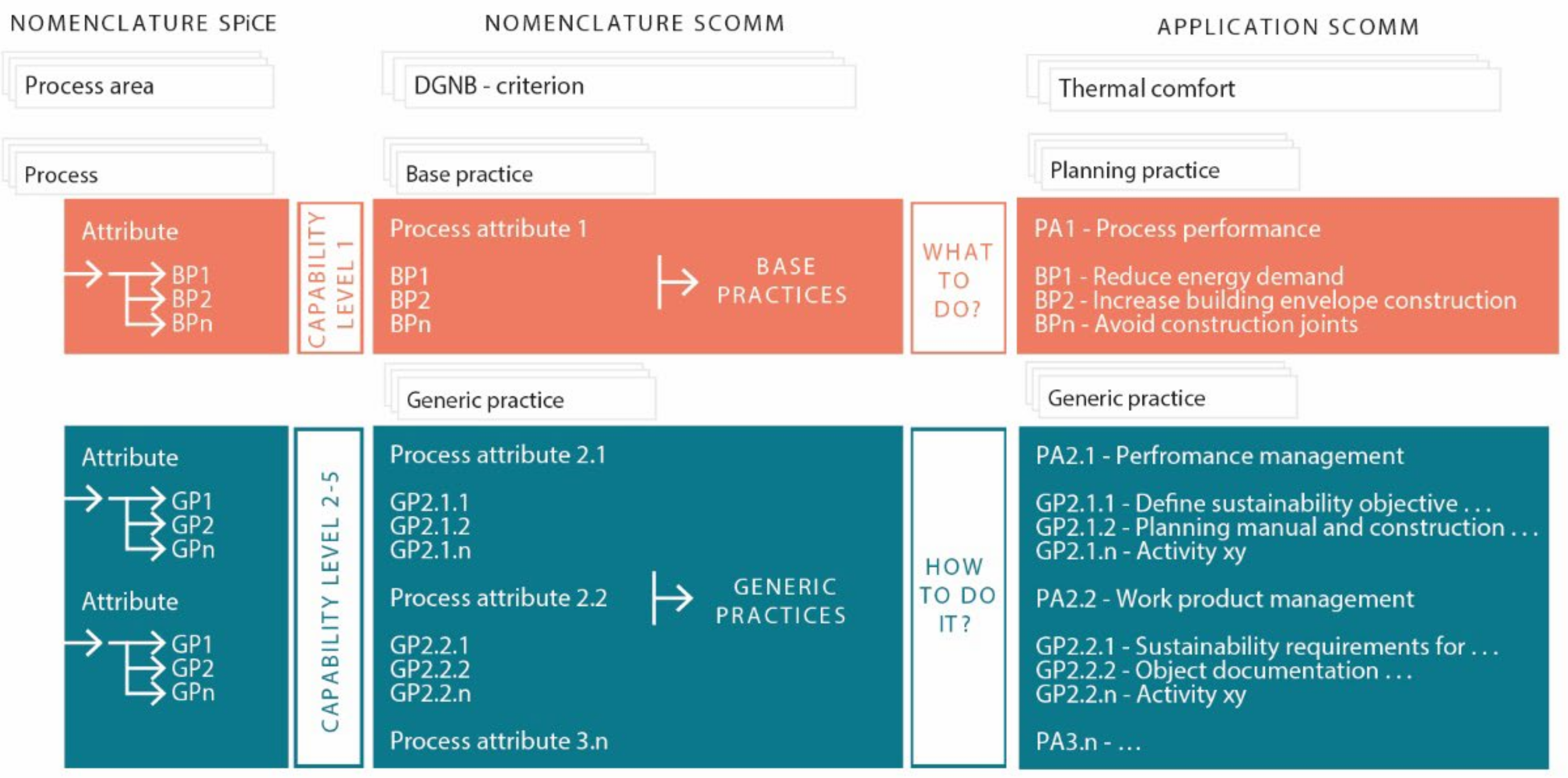

3.1. Development of a Sustainable Construction Maturity Model (SCOMM)

3.1.1. Name of the Maturity Model

3.1.2. Number of Maturity Levels

3.1.3. Classification and Number of Process Areas

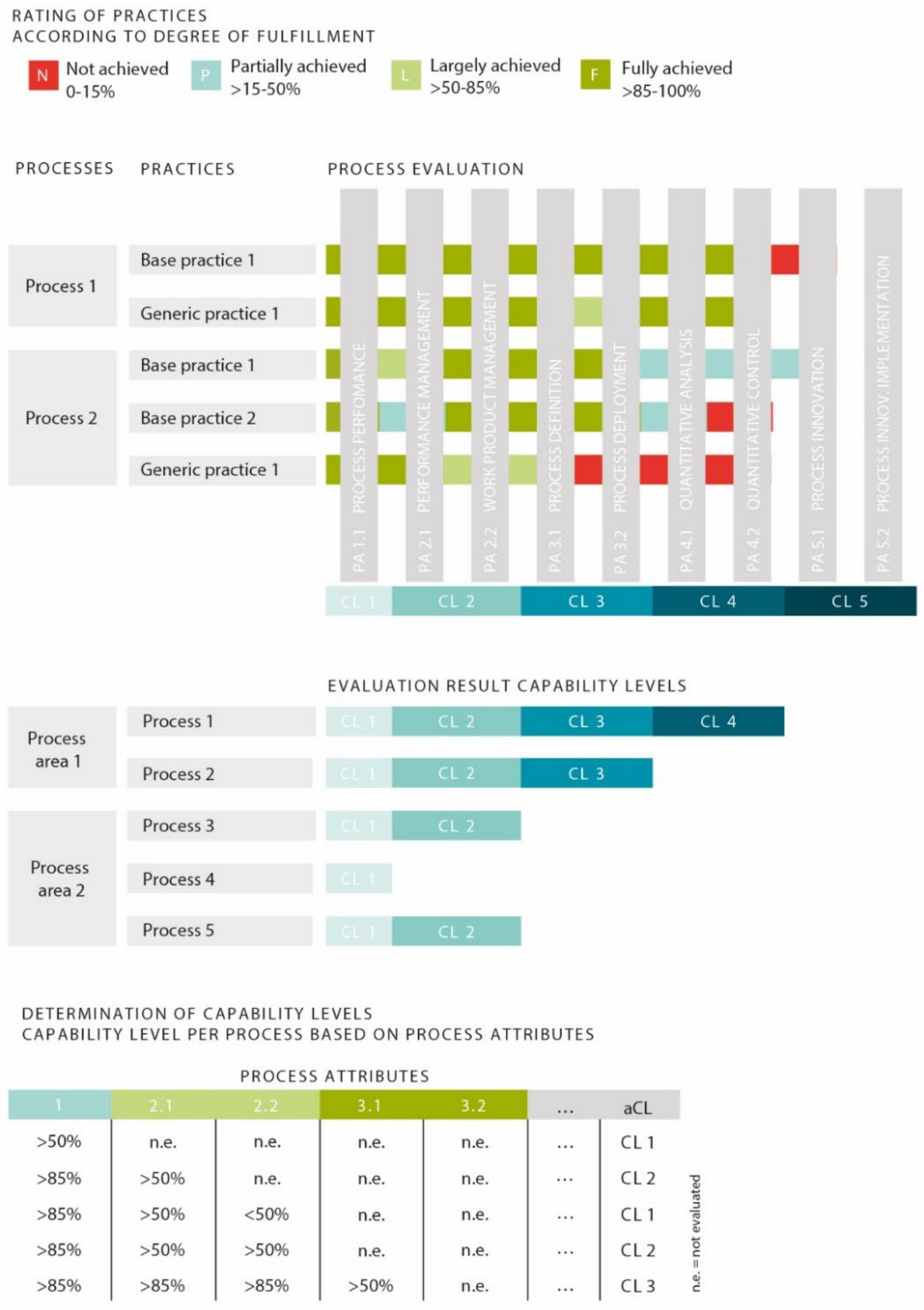

3.1.4. Maturity Level and Capability Level Definitions

3.1.5. Domain of the Maturity Model

3.1.6. Description of the Assessment Method

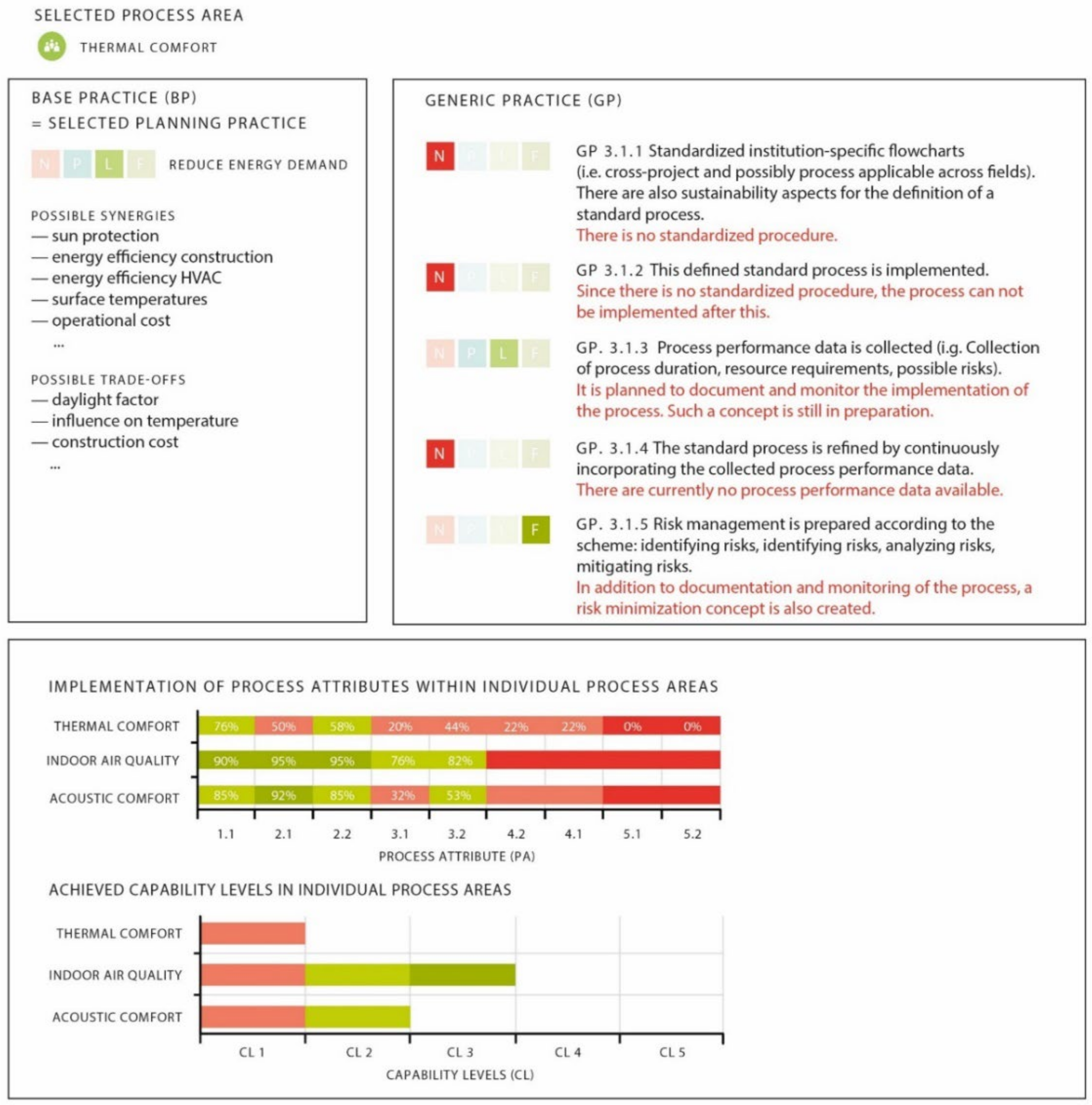

3.2. Application of SCOMM

3.3. Exemplary Output of the SCOMM

4. Discussion

5. Conclusions

- Combination of the Software Process Improvement and Capability dEtermination (SPiCE) model with the building certification system of the German Sustainable Building Council (DGNB).

- The proposed process-based approach ensures a holistic consideration of all required planning practices by a systematic assessment process.

- Assessment of planning practices (i.e., base practices (BP)) and identification of their synergies and trade-offs by applying the proposed maturity model.

- Development of generic practices (GP) to evaluate process attributes and, thus, foster sustainable construction.

- Determination of the maturity level of individual planning practices or of the fulfillment of DGNB criteria or their quality sections.

- (1) it increases the user’s knowledge of the essential planning practices (K.O. practices and systemically relevant practices);

- (2) it increases the user’s knowledge of, and ability to visualize, the interactions that occur among planning practices and processes;

- (3) it increases the user’s knowledge of the maturity level achieved as compared to the planned process maturity and, thus, supports the implementation of quality management from a holistic perspective;

- (4) it offers the user the possibility of preparing a sustainability report as a basis for a planning manual; and

- (5) by combining all of these advantages and by taking into account the maturity model’s workflow, the user has a better chance of achieving a higher certification result.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Capability Levels

| Capability Level | Definition | Process Attribute |

|---|---|---|

| Level 0: Incomplete process/planning practice | The process does not achieve its purpose or is not implemented. Little evidence is available. | Level 0 is the only level to which no process attributes are assigned. |

| Level 1: Performed process/planning practice | The process is implemented and fulfils its process purpose. Input and output work products are in place and ensure that the process purpose is achieved. | PA 1.1: Process performance. This attribute is a measure of the degree to which the process purpose is achieved. Indicators are work products and the activities that convert input work products into output work products. |

| Level 2: Managed process/planning practice | The process is planned, monitored, and adjusted if necessary. The main differences from level 1 are the management of the execution and of the work products. The result of the processes is measured by target-performance comparisons. | PA 2.1: Execution management. This attribute describes to what extent the execution of a process is planned and controlled. It concerns the application of basic management techniques. PA 2.2: Work product management. This attribute describes how well work products are planned and controlled in the process. It is used to check the effort put into identifying, documenting, and managing work products. |

| Level 3: Established process/planning practice | The applied and controlled process is now also defined to achieve the desired process result. The established process is a standard process that is defined and adapted. | PA 3.1: Process definition. This attribute indicates the extent to which a process is described. A process becomes a defined process once it has been tailored to a certain degree, taking external conditions into account, and a process description has been created with regard to the desired process result. PA 3.2: Process deployment. This attribute describes the degree to which a defined process is used as a standard process. It covers aspects that contribute to the effectiveness of the implementation. |

| Level 4: Predictable process/planning practice | The process runs as a standardized process to achieve the desired process results. The process is consistently implemented within defined limits. Deviations are recorded together with their causes. | PA 4.1: Process management. This attribute reflects how well measurement results are used to ensure process performance. The measurement results are used to determine the degree to which business objectives are achieved. PA 4.2: Process control. This attribute reflects how far a process is controlled. It can be used to predict whether a process is running within predefined limits. The purpose of control is to detect deviations and determine the causes of these deviations. |

| Level 5: Optimizing process/planning practice | The existing process is continuously optimized to achieve current and future business objectives. The execution of processes is continuously improved by introducing new ideas and techniques and changing inefficient practices. | PA 5.1: Process innovation. This attribute describes the degree to which process changes are implemented based on measurements, deviations, innovations, and new ideas. Recognizing and understanding the causes of change is an important factor. PA 5.2: Process optimization. This attribute reflects the extent to which changes are made to the definition, control, and execution of processes. The effects of changes are estimated in order to achieve the best possible improvement. Optimization must be planned carefully to minimize disruption. |

Appendix B. Maturity Levels

| Maturity Level | Definition |

|---|---|

| Level 1: Performed | The process purpose is met, based on training, experience, or work procedures following generally accepted guidelines. Company-wide process management does not exist; performance depends largely on personnel and differs from project to project. The results of the process execution are available, but the process execution relies only on the know-how of the project team members. Guidelines, if used, could be building certification systems, for example. However, their application is not consistent throughout the organization, and planning measures, management measures, and repeatability measures are generally not implemented. |

| Level 2: Managed | The process execution is planned and guided. Resources, responsibilities, and instruments are planned according to realistic experiences. The project goals are defined, and the work is monitored, managed, and checked. The guidelines to be applied at project level are known and are applied. Additionally, the work is planned and executed at the individual project levels. Management of requirements and quality assurance are in place. Moreover, sub-processes (e.g., monitoring of milestones, costs, and project progress) are specified and implemented. Project-specific guidelines are planned. |

| Level 3: Established | Where appropriate, the processes are standardized throughout the company. Additionally, their execution is standardized using application guidelines and/or by adapting processes. This provides the foundation for standardized project management, project control, and project planning. The process is person-independent and institutionalized. There is a model for the standard procedure (process model), which has been documented and standardized on an organizational level. Simulations (e.g., LCC or thermal simulations) are performed. |

| Level 4: Predictable | The performance indicators derived from the measurements and analyses, together with their ongoing monitoring, make it possible to identify weaknesses systematically. This results in improved forecasting accuracy. The qualities are quantitatively known, and risk management is well established. The process has been quantitatively understood and is being monitored at project level. Productivity and quality are measured, and the relevant parameters are identifiable. Effort estimations are performed methodically, and a PLAN-ACTUAL analysis is conducted. Possibilities for analysis and management can be specified. |

| Level 5: Optimizing | The information and knowledge gained at level 4 are then used to launch an optimization process. New methodologies and workflows are developed, simulated, and implemented as a prototype. If they are successful, they are institutionalized. A systematic collection and re-use of empirical values and an error analysis are carried out. Opportunities for optimization resulting from new concepts and new technologies are explored. Long-term optimization goals and visions can be specified. |

Appendix C. Base Practices

| Exemplary Base Practices | Influenced Process Area(s) | Influenced Quality Section(s) |

|---|---|---|

| Slender design of building envelope | Quality of building envelope, thermal comfort, sound insulation, visual comfort, building life cycle assessment, life cycle cost | Environmental quality, economic quality, sociocultural and functional quality, technical quality, process quality |

| Increase window surface area | Visual comfort, thermal comfort, sound insulation, acoustic comfort, ease of cleaning building components, building life cycle assessment, life cycle cost | Sociocultural and functional quality, technical quality |

| Increase sound insulation | Sound insulation, building life cycle assessment, life cycle cost | Sociocultural and functional quality, technical quality |

| Reduce S/V-ratio | Land use, building life cycle assessment, life cycle cost | Technical quality, environmental quality |

| Use triple glazing windows | Thermal comfort, sound insulation, acoustic comfort, building life cycle assessment, life cycle cost | Sociocultural and functional quality, technical quality |

| Use heat pumps | User control, building life cycle assessment, life cycle cost | Technical quality |
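
The overlaps in the table above can be made explicit with a small lookup. The following is a minimal sketch, not the authors' tooling: it copies four of the exemplary base practices into a dictionary and lists the process areas that each pair of practices influences in common. The names `INFLUENCES` and `shared_process_areas` are hypothetical. A shared process area only marks a candidate interaction; whether it is a synergy or a trade-off still has to be judged by the planner.

```python
from itertools import combinations

# Process areas influenced by each base practice (subset of the table above).
INFLUENCES = {
    "Increase window surface area": {
        "visual comfort", "thermal comfort", "sound insulation",
        "acoustic comfort", "ease of cleaning building components",
        "building life cycle assessment", "life cycle cost",
    },
    "Increase sound insulation": {
        "sound insulation", "building life cycle assessment", "life cycle cost",
    },
    "Use triple glazing windows": {
        "thermal comfort", "sound insulation", "acoustic comfort",
        "building life cycle assessment", "life cycle cost",
    },
    "Use heat pumps": {
        "user control", "building life cycle assessment", "life cycle cost",
    },
}


def shared_process_areas(influences):
    """Map each pair of base practices to the process areas both influence."""
    return {
        (a, b): influences[a] & influences[b]
        for a, b in combinations(sorted(influences), 2)
    }


pairs = shared_process_areas(INFLUENCES)
for (a, b), areas in pairs.items():
    print(f"{a} <-> {b}: {sorted(areas)}")
```

Every pair in this subset shares at least the building life cycle assessment and the life cycle cost, which is consistent with these two process areas appearing in every row of the table.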

Appendix D. Generic Practices

| Abbreviation | Generic Practice |

|---|---|

| GP 2.1.1 | Define sustainability objective and plan sustainability needs |

| GP 2.1.2 | Planning manual and construction schedule are available, taking into account sustainability aspects throughout the planning process and in preparation for execution (tendering and contracting) |

| GP 2.1.3 | Competencies and responsibilities related to the process are included in the performance specifications |

| GP 2.1.4 | Processes for supporting “sustainability” controls are defined |

| Abbreviation | Generic Practice |

|---|---|

| GP 2.2.1 | Sustainability requirements for results/products are clearly defined |

| GP 2.2.2 | Object documentation “sustainability” with regard to the use phase is prepared |

| GP 2.2.3 | Potential qualitative trade-offs/synergies resulting from sustainability optimization of a process area are identified |

| GP 2.2.4 | Results of ongoing monitoring of the process from a sustainability perspective are documented |

| Abbreviation | Generic Practice |

|---|---|

| GP 3.1.1 | Standardized institution-specific flowcharts (i.e., applicable across projects and possibly across process areas) are available to consider sustainability aspects to define a standard process |

| GP 3.1.2 | This defined standard process is implemented |

| GP 3.1.3 | Process performance data are collected |

| GP 3.1.4 | The standard process is refined by continuously entering the collected process performance data |

| GP 3.1.5 | Risk management is prepared according to the scheme: identify risks, analyze risks, mitigate risks |

| Abbreviation | Generic Practice |

|---|---|

| GP 3.2.1 | Roles, responsibilities, and required skills in relation to sustainability requirements are defined (e.g., performance charts with responsibilities and accountabilities, DEMIA-RACI diagrams) |

| GP 3.2.2 | Requirements for the (construction) project infrastructure (e.g., project references, personnel know-how, experience with the application of methods and tools for sustainable construction, site conditions with regard to the use of renewable resources) for the implementation of a sustainable planning and construction process are identified and documented |

| GP 3.2.3 | Resources for implementing the sustainability processes are provided, allocated, and used (e.g., use of sustainability-qualified personnel) |

| GP 3.2.4 | An appropriate (construction) project infrastructure (e.g., tools, documentation) exists on site (in the planning office, on the construction site) for the process |

| Abbreviation | Generic Practice |

|---|---|

| GP 4.1.1 | Objectives and related measurands are defined (Objectives: process area level and applicable planning practices; measurands: LCCA, LCA, critical and active planning practices) |

| GP 4.1.2 | Measurements are collected (evaluation of LCCA and LCA for a process or for individual planning practices) |

| GP 4.1.3 | Trends from key performance indicators (LCCA, LCA) are analyzed |

| Abbreviation | Generic Practice |

|---|---|

| GP 4.2.1 | Process control variables (LCCA, LCA) are analyzed |

| GP 4.2.2 | Sustainability process is managed using measurands |

| Abbreviation | Generic Practice |

|---|---|

| GP 5.1.1 | The definition of the standard sustainability process is changed as necessary |

| GP 5.1.2 | Impacts of proposed changes are evaluated |

| GP 5.1.3 | An implementation strategy for the changes and process flow diagrams for change management exist |

| GP 5.1.4 | The effectiveness of a process change is assessed |

| Abbreviation | Generic Practice |

|---|---|

| GP 5.2.1 | The objectives for improving the process towards sustainability are established. Change in method through innovation has occurred and the objectives for improvement are set |

| GP 5.2.2 | The potential for improvement is analyzed. Systemic SWOT analysis of the process improvement has been performed and sources of actual and potential problems have been identified |

| GP 5.2.3 | Changes are introduced according to the implementation strategy and process improvement flowcharts exist |

| GP 5.2.4 | The benefits obtained are quantified. Quantitative evaluation of the improvements of the changed process in relation to the pre-defined objectives |

References

1. Intergovernmental Panel on Climate Change (IPCC). AR6 Climate Change 2022: Mitigation of Climate Change; Intergovernmental Panel on Climate Change: Geneva, Switzerland, 2022.

2. Bragança, L.; Mateus, R.; Koukkari, H. Building sustainability assessment. Sustainability 2010, 2, 2010–2023.

3. Haapio, A.; Viitaniemi, P. A critical review of building environmental assessment tools. Environ. Impact Assess. Rev. 2008, 28, 469–482.

4. Wallhagen, M.; Glaumann, M.; Eriksson, O.; Westerberg, U. Framework for Detailed Comparison of Building Environmental Assessment Tools. Buildings 2013, 3, 39–60.

5. Ebert, T.; Essig, N.; Hauser, G. Green Building Certification Systems: Assessing Sustainability, International System Comparison, Economic Impact of Certifications; Institut für internationale Architektur-Dokumentation: Munich, Germany, 2011; Available online: http://lib.ugent.be/catalog/rug01:001691928 (accessed on 12 February 2022).

6. Davies, G.R. Appraising weak and strong sustainability: Searching for a middle ground. Consilience 2013, 10, 111–124.

7. Kreiner, H.; Passer, A.; Wallbaum, H. A new systemic approach to improve the sustainability performance of office buildings in the early design stage. Energy Build. 2015, 109, 385–396.

8. Scherz, M.; Zunk, B.M.; Passer, A.; Kreiner, H. Visualizing Interdependencies among Sustainability Criteria to Support Multicriteria Decision-making Processes in Building Design. Procedia CIRP 2018, 69, 200–205.

9. Neumann, K. Sustainable cities and communities—Best practices for structuring a SDG model. IOP Conf. Ser. Earth Environ. Sci. 2019, 323, 12094.

10. Kreiner, H.; Scherz, M.; Passer, A. How to make decision-makers aware of sustainable construction? In Proceedings of the Life-Cycle Analysis and Assessment in Civil Engineering; Caspeele, R., Taerwe, L., Frangopol, D., Eds.; CRC Press: Boca Raton, FL, USA, 2018. ISBN 9781138626331.

11. Kreiner, H.; Scherz, M.; Steinmann, C.; Passer, A. Process model for the maturity assessment of sustainable facades. In Sustainable Design Process & Integrated Façades; Kreiner, H., Passer, A., Eds.; Verlag der Technischen Universität Graz: Graz, Austria, 2019; pp. 34–55. ISBN 978-3-85125-612-3.

12. Kreiner, H.; Scherz, M.; Steinmann, C.; Passer, A. Applicability of Maturity Assessment for Sustainable Construction. In Proceedings of the World Sustainable Built Environment Conference 2017, Hong Kong Green Building Council Limited, Hong Kong, China, 5–7 June 2017; pp. 2155–2160.

13. Scherz, M.; Kreiner, H.; Wall, J.; Kummer, M.; Hofstadler, C.; Schober, H.; Mach, T.; Passer, A. Practical application of the process model. In Sustainable Design Process & Integrated Façades; Kreiner, H., Passer, A., Eds.; Verlag der Technischen Universität Graz: Graz, Austria, 2019; pp. 132–155. ISBN 978-3-85125-612-3.

14. Shewhart, W.A. Economic Control of Quality of Manufactured Product; MacMillan and Co. Ltd.: London, UK, 1931.

15. Crosby, P.B. Quality is Free: The Art of Making Quality Certain; McGraw-Hill: New York, NY, USA, 1979.

16. Nolan, R.L. Managing the crises in data processing. Harv. Bus. Rev. 1979. Available online: https://hbr.org/1979/03/managing-the-crises-in-data-processing (accessed on 12 February 2022).

17. Hsieh, P.J.; Lin, B.; Lin, C. The construction and application of knowledge navigator model (KNMTM): An evaluation of knowledge management maturity. Expert Syst. Appl. 2009, 36, 4087–4100.

18. Software Engineering Institute. CMMI® für Entwicklung, Version 1.3; Software Engineering Institute: Pittsburgh, PA, USA, 2011.

19. Andersen, E.S.; Jessen, S.A. Project maturity in organisations. Int. J. Proj. Manag. 2003, 21, 457–461.

20. Backlund, F.; Chronéer, D.; Sundqvist, E. Project Management Maturity Models—A Critical Review: A Case Study within Swedish Engineering and Construction Organizations. Procedia Soc. Behav. Sci. 2014, 119, 837–846.

21. Gonçalves Machado, C.; Pinheiro de Lima, E.; Gouvea da Costa, S.; Angelis, J.; Mattioda, R. Framing maturity based on sustainable operations management principles. Int. J. Prod. Econ. 2017, 190, 3–21.

22. Kwak, Y.H.; Ibbs, C.W. Assessing Project Management Maturity. Proj. Manag. J. 2000, 31, 32–43.

23. International Organization for Standardization (ISO). ISO/IEC TS 15504-10:2011 Information Technology—Process Assessment—Part 10: Safety Extension; International Organization for Standardization: Geneva, Switzerland, 2011.

24. International Organization for Standardization (ISO). ISO/IEC TS 15504-9:2011 Information Technology—Process Assessment—Part 9: Target Process Profiles; International Organization for Standardization: Geneva, Switzerland, 2011.

25. International Organization for Standardization (ISO). ISO/IEC TS 15504-8:2012 Information Technology—Process Assessment—Part 8: An Exemplar Process Assessment Model for IT Service Management; International Organization for Standardization: Geneva, Switzerland, 2012.

26. International Organization for Standardization (ISO). ISO/IEC TR 15504-7:2008 Information Technology—Process Assessment—Part 7: Assessment of Organizational Maturity; International Organization for Standardization: Geneva, Switzerland, 2008.

27. International Organization for Standardization (ISO). ISO/IEC 15504-6:2013 Information Technology—Process Assessment—Part 6: An Exemplar System Life Cycle Process Assessment Model; International Organization for Standardization: Geneva, Switzerland, 2013.

28. International Organization for Standardization (ISO). ISO/IEC 15504-5:2012 Information Technology—Process Assessment—Part 5: An Exemplar Software Life Cycle Process Assessment Model; International Organization for Standardization: Geneva, Switzerland, 2012.

29. International Organization for Standardization (ISO). ISO/IEC 15504-4:2004 Information Technology—Process Assessment—Part 4: Guidance on Use for Process Improvement and Process Capability Determination; International Organization for Standardization: Geneva, Switzerland, 2004.

30. International Organization for Standardization (ISO). ISO/IEC 15504-3:2004 Information Technology—Process Assessment—Part 3: Guidance on Performing an Assessment; International Organization for Standardization: Geneva, Switzerland, 2004.

31. International Organization for Standardization (ISO). ISO/IEC 15504-2:2003 Information Technology—Process Assessment—Part 2: Performing an Assessment; International Organization for Standardization: Geneva, Switzerland, 2003.

32. International Organization for Standardization (ISO). ISO/IEC 15504-1:2004 Information Technology—Process Assessment—Part 1: Concepts and Vocabulary; International Organization for Standardization: Geneva, Switzerland, 2004.

33. Wendler, R. The maturity of maturity model research: A systematic mapping study. Inf. Softw. Technol. 2012, 54, 1317–1339.

34. Sun, M.; Vidalakis, C.; Oza, T. A change management maturity model for construction projects. Assoc. Res. Constr. Manag. 2009, 2, 803–812.

35. Rayner, P.; Reiss, G. The Programme Management Maturity Model. 2001. Available online: https://www.12manage.com/methods_reiss_pmmm.html (accessed on 12 February 2022).

36. Project Management Institute (PMI). Organizational Project Management Maturity Model Knowledge Foundation; Project Management Institute: Newtown Square, PA, USA, 2003.

37. Project Management Institute (PMI). OPM3 in Action: Pinellas County IT Turns Around Performance and Customer Confidence; Project Management Institute: Newtown Square, PA, USA, 2006.

38. Bourne, L. A maturity model that right and ready, OPM3—Past, present and future. In Proceedings of the PMI New Zealand National Conference, Christchurch, New Zealand, 4–6 October 2006.

39. Creasey, T.; Taylor, T. Best Practices in Change Management, Prosci Benchmarking Report 426—Organisations Share Best Practices in Change Management; Prosci Incorporated: Poway, CA, USA, 2007.

40. Jia, G.; Chen, Y.; Xue, X.; Chen, J.; Cao, J.; Tang, K. Program management organization maturity integrated model for mega construction programs in China. Int. J. Proj. Manag. 2011, 29, 834–845.

41. Hermans, M.; Volker, L.; Eisma, P. A public Commissioning Maturity Model for Construction Clients. In Proceedings of the 30th Annual ARCOM Conference, Portsmouth, UK, 1–3 September 2014.

42. Haigh, R.P.; Amaratunga, R.D.G.; Sarshar, M. SPICE FM (Structured Process Improvement for Construction Environments—Facilities Management); Technical Report; University of Salford: Salford, UK, 2001; Available online: http://usir.salford.ac.uk/id/eprint/10083/1/construct_it_spice_fm_report.pdf (accessed on 12 February 2022).

43. Kwak, Y.H.; Ibbs, C.W. Project Management Process Maturity (PM)2 Model. J. Manag. Eng. 2002, 18, 150–155.

44. Office of Government Commerce (OGC). Portfolio, Programme and Project Management Maturity Model (P3M3) Introduction and Guide to P3M3; Office of Government Commerce: London, UK, 2003; Available online: https://miroslawdabrowski.com/downloads/P3M3/OGC%20branded/P3M3_v2.1_Introduction_and_Guide.pdf (accessed on 12 February 2022).

45. Office of Government Commerce (OGC). Prince2 Maturity Model; Office of Government Commerce: London, UK, 2004.

46. Willis, C.; Rankin, J. The construction industry macro maturity model (CIM3): Theoretical underpinnings. Int. J. Product. Perform. Manag. 2012, 61, 382–402.

47. Strategic Forum for Construction. Maturity Assessment Grid; Strategic Forum for Construction: London, UK, 2003.

48. Finnemore, M.; Sarshar, M.; Aouad, G.; Barrett, P.; Minnikin, J.; Shelley, C. Standardised Process Improvement for Construction Enterprises (SPICE); University of Salford: Salford, UK, 2000.

49. Vaidyanathan, K.; Howell, G. Construction Supply Chain Maturity Model—Conceptual Framework. In Proceedings of the 15th Annual Conference of the International Group for Lean Construction, East Lansing, MI, USA, 18–20 July 2007.

50. Meng, X.; Sun, M.; Jones, M. A Maturity Model for Construction Supply Chain Relationships. J. Manag. Eng. 2011, 27, 97–105.

51. Cooke-Davies, T.; Arzymanow, A. The maturity of project management in different industries: An investigation into variations between project management models. Int. J. Proj. Manag. 2003, 21, 471–478.

52. Hartono, B.; Sulistyo, S.R.; Chai, K.H.; Indarti, N. Knowledge Management Maturity and Performance in a Project Environment: Moderating Roles of Firm Size and Project Complexity. J. Manag. Eng. 2019, 35, 4019023.

53. Arriagada, R.E.; Alarcón, L.F. Knowledge Management and Maturation Model in Construction Companies. J. Constr. Eng. Manag. 2014, 140, B4013006.

54. Xianbo, Z.; Bon-Gang, H.; Pheng, L.S. Developing Fuzzy Enterprise Risk Management Maturity Model for Construction Firms. J. Constr. Eng. Manag. 2013, 139, 1179–1189.

55. Heravi, G.; Gholami, A. The Influence of Project Risk Management Maturity and Organizational Learning on the Success of Power Plant Construction Projects. Proj. Manag. J. 2018, 49, 875697281878666.

56. Jia, G.; Ni, X.; Chen, Z.; Hong, B.; Chen, Y.; Yang, F.; Lin, C. Measuring the maturity of risk management in large-scale construction projects. Autom. Constr. 2013, 34, 56–66.

57. Liu, K.; Su, Y.; Zhang, S. Evaluating Supplier Management Maturity in Prefabricated Construction Project-Survey Analysis in China. Sustainability 2018, 10, 3046.

58. Karakhan, A.; Rajendran, S.; Gambatese, J.; Nnaji, C. Measuring and Evaluating Safety Maturity of Construction Contractors: Multicriteria Decision-Making Approach. J. Constr. Eng. Manag. 2018, 144, 04018054.

59. Chen, J.; Yu, G.H.; Cui, S.Y.; Feng, Y.R. The Maturity Model Research of Construction Project Management Informationization. Appl. Mech. Mater. 2013, 357, 2222–2227.

60. Wang, G.; Liu, H.; Li, H.; Luo, X.; Liu, J. A Building Project-Based Industrialized Construction Maturity Model Involving Organizational Enablers: A Multi-Case Study in China. Sustainability 2020, 12, 4029.

61. Goh, C.S.; Rowlinson, S. Conceptual Maturity Model for Sustainable Construction. J. Leg. Aff. Disput. Resolut. Eng. Constr. 2013, 5, 191–195.

62. International Organization for Standardization (ISO). ISO/IEC 33001:2015 Information Technology—Process Assessment—Concepts and Terminology; International Organization for Standardization: Geneva, Switzerland, 2015.

63. International Organization for Standardization (ISO). ISO/IEC 33002:2015 Information Technology—Process Assessment—Requirements for Performing Process Assessment; International Organization for Standardization: Geneva, Switzerland, 2015.

64. German Sustainable Building Council. DGNB System. 2018. Available online: https://www.dgnb.de/en/ (accessed on 3 September 2020).

65. Proença, D.; Borbinha, J. Maturity Models for Information Systems—A State of the Art. Procedia Comput. Sci. 2016, 100, 1042–1049.

66. Caralli, R.; Knight, M.; Montgomery, A. Maturity Models 101: A Primer for Applying Maturity Models to Smart Grid Security, Resilience, and Interoperability; Software Engineering Institute: Pittsburgh, PA, USA, 2012.

67. Vester, F. Die Kunst Vernetzt zu Denken; Deutsche Verlags-Anstalt: Munich, Germany, 2008.

68. Serrano, V.; Tereso, A.; Ribeiro, P.; Brito, M. Standardization of Processes Applying CMMI Best Practices. In Advances in Information Systems and Technologies; Rocha, Á., Correia, A.M., Wilson, T., Stroetmann, S.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 455–467.

69. Görög, M. A broader approach to organisational project management maturity assessment. Int. J. Proj. Manag. 2016, 34, 1658–1669.

70. Kamprath, N. Einsatz von Reifegradmodellen im Prozessmanagement. HMD Prax. Wirtsch. 2011, 48, 93–102.

71. Succar, B. Building Information Modelling Maturity Matrix. In Handbook of Research on Building Information Modeling and Construction Informatics: Concepts and Technologies; IGI Global: Hershey, PA, USA, 2010.

72. Scherz, M.; Passer, A.; Kreiner, H. Challenges in the achievement of a Net Zero Carbon Built Environment—A systemic approach to support the decision-aiding process in the design stage of buildings. IOP Conf. Ser. Earth Environ. Sci. 2020, 588, 32034.

73. Zunk, B.M. Positioning “Techno-Economics” as an interdisciplinary reference frame for research and teaching at the interface of applied natural sciences and applied social sciences: An approach based on Austrian IEM study programmes. Int. J. Ind. Eng. Manag. 2018, 9, 17–23.

| Name | Abbreviation | Source |
|---|---|---|
| Project Management Maturity Model | PMMM | [35,36] |
| Organizational Project Management Maturity Model | OPM3 | [37,38] |
| Change Management Maturity Model | CM3 | [39] |
| Risk Management Maturity System | RMMS | [40] |
| Program Management Organization Maturity Integrated Model for Mega Construction Programs | PMOMM-MCP | [40] |
| Public Commissioning Maturity Model | PCMM | [41] |
| Structured Process Improvement Framework for Construction Environments—Facilities Management | SPICE FM | [42] |
| Project Management Process Maturity Model | PM2 | [43] |
| Portfolio, Programme, and Project Management Maturity Model | P3M3 | [44] |
| PRINCE 2 Maturity Model | P2MM | [45] |
| Construction Industry Macro Maturity Model | CIM3 | [46] |
| Maturity Assessment Grid from the Strategic Forum for Construction | MAG | [47] |
| Standardised Process Improvement for Construction Enterprises | SPICE | [48] |
| Construction Supply Chain Maturity Model | CSCMM | [49] |
| Maturity Model for Construction Supply Chain Relationships | SCM | [50] |

| Abbreviation | Process Attribute |
|---|---|
| PA 1.1 | Process performance |
| PA 2.1 | Execution management |
| PA 2.2 | Work product management |
| PA 3.1 | Process definition |
| PA 3.2 | Process deployment |
| PA 4.1 | Process management |
| PA 4.2 | Process control |
| PA 5.1 | Process innovation |
| PA 5.2 | Process optimization |

| Degree of Achievement | Bandwidth | Meaning |
|---|---|---|
| N (not achieved) | 0–15% | Evidence of fulfilment of the PA is not available or very scarce. |
| P (partially achieved) | >15–50% | An attempt was made to meet the PA, and this was partially achieved. However, some points of the process attribute are difficult to verify. |
| L (largely achieved) | >50–85% | A systematic approach has been used to integrate the points of the PA. Despite some remaining weaknesses, this has been largely achieved. |
| F (fully achieved) | >85–100% | The PA has been fully implemented within the process. A systematic approach is used to ensure that it is implemented correctly and evidence of this is available. There are no significant weaknesses. |
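
The rating scale above can be read as a simple threshold lookup. The following is a minimal sketch of that mapping, not the authors' tooling; the function name `rate_process_attribute` is hypothetical, and the boundary handling (15, 50, and 85 belong to the lower band) follows the ">" signs in the bandwidth column.

```python
def rate_process_attribute(achievement_percent: float) -> str:
    """Map a PA achievement percentage (0-100) to N, P, L, or F.

    Thresholds are taken from the bandwidth column of the rating table;
    the function name and input validation are illustrative assumptions.
    """
    if not 0 <= achievement_percent <= 100:
        raise ValueError("achievement must be between 0 and 100")
    if achievement_percent <= 15:
        return "N"  # not achieved: 0-15%
    if achievement_percent <= 50:
        return "P"  # partially achieved: >15-50%
    if achievement_percent <= 85:
        return "L"  # largely achieved: >50-85%
    return "F"      # fully achieved: >85-100%
```

For example, `rate_process_attribute(60)` falls in the >50–85% band and yields `"L"`.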

| Quality Section | Criterion | Code | Weight |
|---|---|---|---|
| Environmental quality | Building life cycle assessment | ENV1.1 | 9.5% |
| | Local environmental impact | ENV1.2 | 4.7% |
| | Sustainable resource extraction | ENV1.3 | 2.4% |
| | Potable water demand and wastewater volume | ENV2.2 | 2.4% |
| | Land use | ENV2.3 | 2.4% |
| | Biodiversity at the site | ENV2.4 | 1.2% |
| Economic quality | Life cycle cost | ECO1.1 | 10.0% |
| | Flexibility and adaptability | ECO2.1 | 7.5% |
| | Commercial viability | ECO2.2 | 5.0% |
| Social and functional quality | Thermal comfort | SOC1.1 | 4.1% |
| | Indoor air quality | SOC1.2 | 5.1% |
| | Acoustic comfort | SOC1.3 | 2.0% |
| | Visual comfort | SOC1.4 | 3.1% |
| | User control | SOC1.5 | 2.0% |
| | Quality of indoor and outdoor spaces | SOC1.6 | 2.0% |
| | Safety and security | SOC1.7 | 1.0% |
| | Design for all | SOC2.1 | 3.1% |
| Technical quality | Fire safety | TEC1.1 | 2.5% |
| | Sound insulation | TEC1.2 | 1.9% |
| | Quality of the building envelope | TEC1.3 | 2.5% |
| | Use and integration of building technology | TEC1.4 | 1.9% |
| | Ease of cleaning building components | TEC1.5 | 1.3% |
| | Ease of recovery and recycling | TEC1.6 | 2.5% |
| | Immissions control | TEC1.7 | 0.6% |
| | Mobility infrastructure | TEC3.1 | 1.9% |
| Process quality | Comprehensive project brief | PRO1.1 | 1.6% |
| | Sustainability aspects in tender phase | PRO1.4 | 1.6% |
| | Documentation for sustainable management | PRO1.5 | 1.1% |
| | Urban planning and design procedure | PRO1.6 | 1.6% |
| | Construction site/construction process | PRO2.1 | 1.6% |
| | Quality assurance of the construction | PRO2.2 | 1.6% |
| | Systematic commissioning | PRO2.3 | 1.6% |
| | User communication | PRO2.4 | 1.1% |
| | FM-compliant planning | PRO2.5 | 0.5% |
| Site quality | Local environment | SITE1.1 | 1.1% |
| | Influence on the district | SITE1.2 | 1.1% |
| | Transport access | SITE1.3 | 1.1% |
| | Access to amenities | SITE1.4 | 1.7% |
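
As a quick consistency check on the criterion weights listed above, the weights can be summed per quality section. The sketch below only adds up the tabulated values; the dictionary layout and the names `WEIGHTS`, `section_totals`, and `overall` are illustrative assumptions, not part of the DGNB system or of SCOMM.

```python
# Criterion weights in percent, copied from the table above.
WEIGHTS = {
    "Environmental quality": {
        "ENV1.1": 9.5, "ENV1.2": 4.7, "ENV1.3": 2.4,
        "ENV2.2": 2.4, "ENV2.3": 2.4, "ENV2.4": 1.2,
    },
    "Economic quality": {"ECO1.1": 10.0, "ECO2.1": 7.5, "ECO2.2": 5.0},
    "Social and functional quality": {
        "SOC1.1": 4.1, "SOC1.2": 5.1, "SOC1.3": 2.0, "SOC1.4": 3.1,
        "SOC1.5": 2.0, "SOC1.6": 2.0, "SOC1.7": 1.0, "SOC2.1": 3.1,
    },
    "Technical quality": {
        "TEC1.1": 2.5, "TEC1.2": 1.9, "TEC1.3": 2.5, "TEC1.4": 1.9,
        "TEC1.5": 1.3, "TEC1.6": 2.5, "TEC1.7": 0.6, "TEC3.1": 1.9,
    },
    "Process quality": {
        "PRO1.1": 1.6, "PRO1.4": 1.6, "PRO1.5": 1.1, "PRO1.6": 1.6,
        "PRO2.1": 1.6, "PRO2.2": 1.6, "PRO2.3": 1.6, "PRO2.4": 1.1,
        "PRO2.5": 0.5,
    },
    "Site quality": {
        "SITE1.1": 1.1, "SITE1.2": 1.1, "SITE1.3": 1.1, "SITE1.4": 1.7,
    },
}

# Sum the criterion weights within each quality section (one decimal place).
section_totals = {
    section: round(sum(criteria.values()), 1)
    for section, criteria in WEIGHTS.items()
}

# Sum across all sections.
overall = round(sum(section_totals.values()), 1)

for section, total in section_totals.items():
    print(f"{section}: {total}%")
print(f"Overall: {overall}%")
```

With the tabulated values, the section totals come to 22.6%, 22.5%, 22.4%, 15.1%, 12.3%, and 5.0%, and the overall sum is 99.9%, presumably because the individual weights are rounded to one decimal place.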

| Numbered Level | Capability Level |
|---|---|
| Level 0 | Incomplete process/planning practice |
| Level 1 | Performed process/planning practice |
| Level 2 | Managed process/planning practice |
| Level 3 | Established process/planning practice |
| Level 4 | Predictable process/planning practice |
| Level 5 | Optimizing process/planning practice |
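
The capability levels can be connected to the process attribute ratings (N, P, L, F) with a short sketch. It assumes the rule commonly used in ISO/IEC 15504 (SPiCE) assessments, which the tables here do not state explicitly: a level is reached when its own process attributes are rated at least L and all process attributes of the lower levels are rated F. The assignment of PA n.x to level n is likewise inferred from the numbering, and the names `PA_BY_LEVEL` and `capability_level` are hypothetical.

```python
from typing import Dict

# Assumed assignment of process attributes to capability levels,
# inferred from the PA numbering (PA n.x belongs to level n).
PA_BY_LEVEL = {
    1: ["PA 1.1"],
    2: ["PA 2.1", "PA 2.2"],
    3: ["PA 3.1", "PA 3.2"],
    4: ["PA 4.1", "PA 4.2"],
    5: ["PA 5.1", "PA 5.2"],
}


def capability_level(ratings: Dict[str, str]) -> int:
    """Return the highest capability level (0-5) supported by the ratings.

    Assumed rule: level n is reached if every PA of level n is rated
    L or F and every PA of all lower levels is rated F.
    PAs missing from `ratings` are treated as N.
    """
    level = 0
    for candidate in range(1, 6):
        own = [ratings.get(pa, "N") for pa in PA_BY_LEVEL[candidate]]
        lower = [
            ratings.get(pa, "N")
            for lvl in range(1, candidate)
            for pa in PA_BY_LEVEL[lvl]
        ]
        if all(r in ("L", "F") for r in own) and all(r == "F" for r in lower):
            level = candidate
        else:
            break  # levels build on each other, so stop at the first gap
    return level
```

Under these assumptions, a planning practice rated `{"PA 1.1": "F", "PA 2.1": "L", "PA 2.2": "F"}` reaches Level 2 (managed), whereas the same practice with PA 1.1 rated only L stays at Level 1.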

| Numbered Level | Maturity Level |
|---|---|
| Level 1 | Performed |
| Level 2 | Managed |
| Level 3 | Established |
| Level 4 | Predictable |
| Level 5 | Optimizing |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Scherz, M.; Zunk, B.M.; Steinmann, C.; Kreiner, H. How to Assess Sustainable Planning Processes of Buildings? A Maturity Assessment Model Approach for Designers. Sustainability 2022, 14, 2879. https://doi.org/10.3390/su14052879