1. Introduction

Engineering Education Accreditation (EEA), a quality assurance mechanism for engineering specialties in colleges and universities, is implemented by professional institutions and based on certain standards [1,2]. Its application has been reported to bring great benefits to colleges and universities in cultivating undergraduate students [3,4,5,6]. At present, four main types of EEA are used worldwide: the Washington Accord, the Sydney Accord, the Dublin Accord, and EUR-ACE [7,8]. China joined the Washington Accord in 2016 [9] and implemented the “New Engineering Research and Practice” to promote and deepen the application of EEA [10].

As an essential component of engineering education, internship teaching is widely regarded as the bridge between theory and practice [11,12,13]. It includes cognitive, production, and graduation internships, among which the cognitive internship, arranged before professional courses, plays a vital role in transforming students’ engineering knowledge from surface understanding to deeper awareness [14,15]. The cognitive internship is performed mainly offline, through activities such as fieldwork and enterprise visits. At the same time, online teaching that makes use of resources such as videos and pictures has been increasingly adopted in some universities and colleges because of COVID-19. To ensure the continuous improvement of teaching quality under the EEA’s requirements, it is imperative to analyze and compare the effects of online and offline cognitive internships.

A body of academic literature on online and offline practice has gradually emerged. For offline practice, Walo (2001) [16] designed a questionnaire, completed by students, to assess the contribution of internships to developing students’ management competencies based on the Competing Values Framework (CVF); Leydon (2013) [13] investigated the challenges of incorporating fieldwork into undergraduate courses using a questionnaire and an in-depth interview; and Barton (2017) [17] explored how fieldwork provides opportunities for students and reported its benefits in helping students internalize course content. For online practice, Romero Oliva (2019) [18] surveyed students and teachers using a questionnaire and an interview to explore the impact and value of Virtual Learning Environments on students’ oral skills; Oriji (2019) [19] reported the application and challenges of social media in teaching and learning using a questionnaire and an interview; and Darr (2019) [20] designed a virtual practice laboratory to evaluate the impact of an online internship on students’ perception and learning with the help of a questionnaire. Researchers have also designed experiments to compare the effects of online and offline practice. Seifan (2019) [21] investigated the importance and usefulness of virtual vs. real field trips in promoting students’ knowledge and perceptions, and Seifan (2020) [22] then used a questionnaire to investigate students’ perception of the effect of real and virtual practice on their essential skills and career aspirations; Sobia (2020) [23] invited students who had been trained in both virtual and traditional simulation environments to complete a questionnaire exploring their preference for training methods. Furthermore, some researchers have adopted the EEA concept to evaluate internship teaching. Jamil (2013) [24] adopted Outcome-Based Education (OBE) to survey an internship host organization with a questionnaire in order to investigate students’ performance in an industrial internship. Laingen (2015) [25] developed a questionnaire to explore the approach for continuous improvement practices (CIP), using data obtained from students’ competency assessments.

Given the above, the studies that refer to EEA concepts, such as OBE and CIP, place particular emphasis on offline practice. As for the online mode, the studies comparing the two methods focus on students’ perceptions, with the questionnaire and the interview as the common research methods. None of these studies addresses cognitive internships, nor do they examine the online mode from the perspective of the EEA concept. Additionally, none of them combines the EEA concept and students’ perceptions to compare the effects of the online and offline modes. It is therefore necessary to study online and offline cognitive internships with a method that involves both the EEA concept and students’ perceptions, and this study attempts to fill this gap. Specifically, this paper aims to compare the achievement effect of online and offline cognitive internships according to the EEA’s standards and to compare their effects on students’ perceptions.

The remainder of the paper is organized as follows. Section 2 provides the methods and materials used in the study. The results, involving GADs and the CIPP-based evaluation, are presented in Section 3. Section 4 outlines the findings and provides a discussion comparing the results of this study with those of previous studies. Lastly, Section 5 summarizes the conclusions and limitations of the study, as well as future lines of research.

4. Discussion

Although many studies have examined online [18,19,20] and offline [13,16,17] teaching methods and have compared the two [21,22,23], most rely solely on a questionnaire survey, which suggests that challenges remain in the area of research methods. The integrated approach adopted in this study, which combines a GAD Model with a CIPP-based Evaluation Model, provides a new perspective for evaluating the effectiveness of internship teaching.

Based on the GAD Model, this study shows that students trained in an offline cognitive internship have advantages in theoretical knowledge and environmental perception over those trained in an online cognitive internship. The GADs of sub-objective 1 and sub-objective 3 for the offline cognitive internships are good indicators of this result. This finding supports the work of Eiris Pereira et al. [31], who argued that the offline internship mode, which requires real field visits, could promote students’ in-depth insight into theoretical concepts. As indicated in previous research [22], real field visits provide opportunities to view specialized equipment comprehensively, communicate with professional engineers, and gain first-hand experience. This may be the reason why students with offline internships have a better perception of the environment. Consequently, more attention could be placed on strengthening the professional lectures in online cognitive internships, through which students could enhance their theoretical knowledge and environmental perception via the practical information and personal experience conveyed by specialists with real fieldwork experience.

The GAD results also indicate that online cognitive internships can help students enhance their analytical skills, as evidenced by their higher sub-objective 2 GAD scores compared with those of the offline internship students. The online cognitive internship has several advantages over the offline cognitive internship, such as abundant online resources, teaching materials, and skills training organized by teachers. Moreover, the online teaching method can break the spatial-temporal limits of the classroom, enabling students to access internship opportunities at convenient times and locations [18,22]. It also allows students to view the materials repeatedly and gives them plenty of time to reflect on the internship content, so that they can revisit forgotten content and enhance their analytical skills. In the future, more resources and training that effectively stimulate the development of students’ analytical skills could be collected and presented.

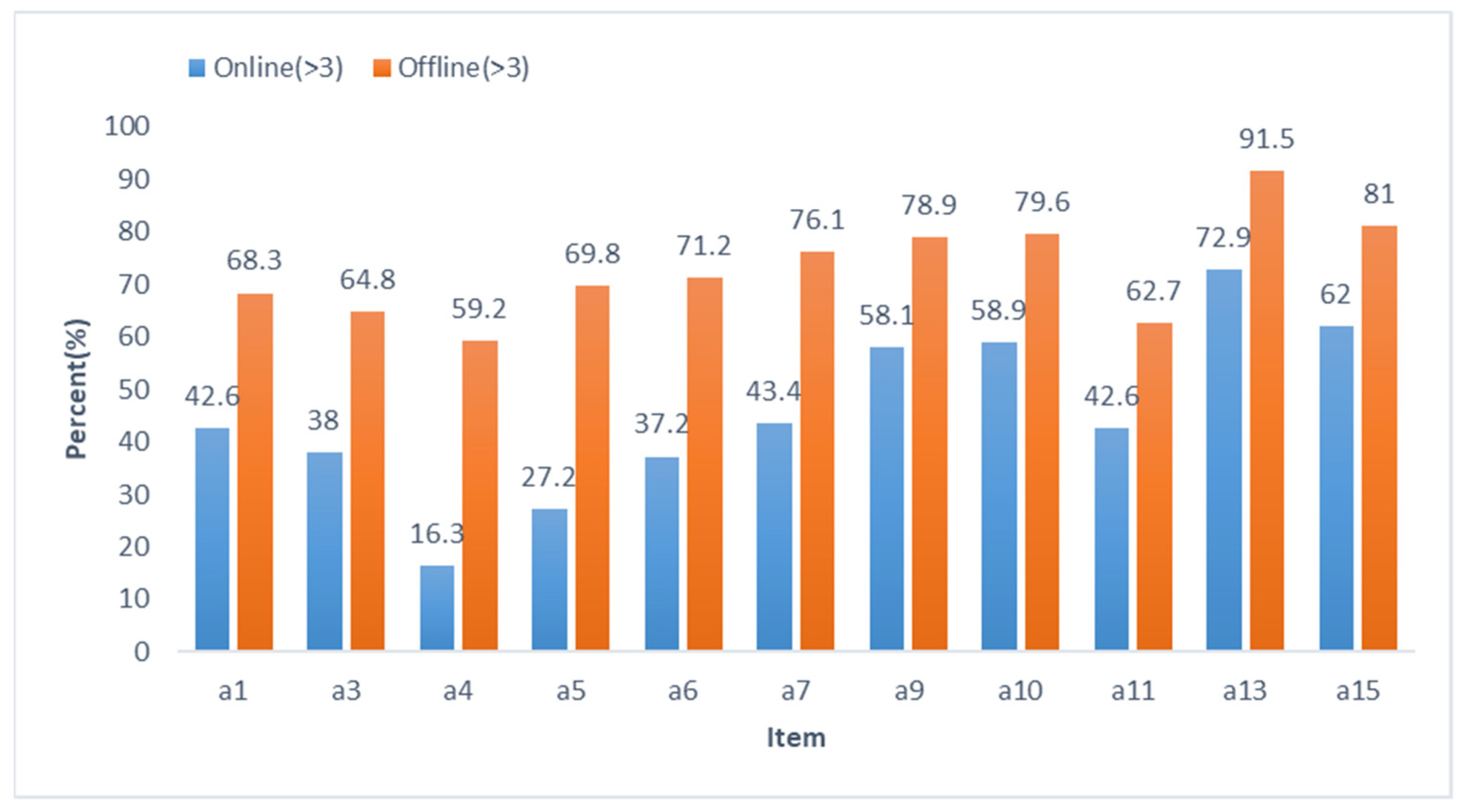

The results of the students’ perceptions based on the CIPP-based Evaluation Model in this study reflect that the students’ evaluation of the offline cognitive internship is higher. This finding aligns with previous studies [21,22]. The students agreed that the virtual field trip has absolute advantages in enhancing students’ perceptions and developing their essential skills. Further, results from this study show that the online cognitive internship can also help students improve their understanding of theoretical knowledge and clarify their career planning. Additionally, students perceive that the effects of online and offline cognitive internships on those two aspects exhibit the smallest difference when all factors are considered. Indeed, the online cognitive internship provides a new way for students to acquire preliminary knowledge of their major and to learn more about a future career. However, the differences reported in the results show that the information related to theoretical knowledge and career planning needs to be increased in future online cognitive internships to produce the same effects as the offline cognitive internships.

Apart from the usefulness of the two forms of teaching, this study also reports the limitations in implementing cognitive internships. Students participating in this study perceived insufficient teaching funds as the biggest issue for both online and offline cognitive internships, and they perceived this lack of funding to be more critical for the online than for the offline internships. This finding supports the work of Leydon et al. [13], where the introduction of a field trip was considered for an introductory geography class, and the challenges and rewards were explored. Thus, to address this issue, teachers who are responsible for cognitive internships could consider corporate sponsors, as the schools cannot support larger budgets for these programs.

5. Conclusions

This research proposed a comprehensive method for analyzing the difference in effectiveness between online and offline cognitive internships. Our method consists of two major processes, including a GAD-based evaluation and a CIPP-based evaluation. The data for the former are determined using students’ scores during their cognitive internships, including the performance, the report, and the defense of the internship. The results reveal that the offline cognitive internship does a favorable job of enhancing students’ theoretical knowledge and environmental perceptions, while the online cognitive internship has a better effect on strengthening students’ analytical skills. The data for the latter are collected using a questionnaire survey completed by the students. The results show that there are significant differences in the evaluation results between the online and offline cognitive internships in terms of eleven aspects, with the largest difference observed in the evaluation of the funding for the cognitive internships and the smallest difference shown in the effectiveness of the cognitive internships in promoting students’ understanding of classroom knowledge and the clarification of career planning. Furthermore, suggestions for the improvement of both internships are identified on the basis of those differences. This study offers broad implications for the implementation and improvement of cognitive internships, such as adequate preparation, sustainable internship methods, and better internship effects. By providing effective cognitive internships, new opportunities could be developed to cultivate high-quality talents for the benefit of society.

Although the effects of the two forms of cognitive internship are compared from multiple perspectives, there are still some limitations that could be addressed in future studies. Since the online cognitive internship has only been implemented for one year, only limited relevant data could be collected in this study. In addition, as described in the Materials and Methods section, all participants came from one school. Data from students at one school may not be representative of all cases, as there may be differences in the implementation of cognitive internships at different schools. Finally, the weights used to calculate the GADs were determined according to the subjective judgment of the teachers, which may also affect the evaluation results.
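The sensitivity of the GADs to teacher-assigned weights can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that a GAD is a weighted sum of the ratio of the class mean score to full marks over the three assessed components (performance, report, and defense). This formula, the function name `goal_achievement_degree`, and all numbers below are hypothetical and are not taken from the study.

```python
from typing import Dict


def goal_achievement_degree(
    mean_scores: Dict[str, float],
    full_marks: Dict[str, float],
    weights: Dict[str, float],
) -> float:
    """Weighted achievement ratio across assessment components.

    ASSUMPTION: this weighted-ratio formula is illustrative only; it is
    not the GAD definition used in the study.
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(
        weights[name] * mean_scores[name] / full_marks[name]
        for name in weights
    )


# Hypothetical class means for one sub-objective (not data from the study).
mean_scores = {"performance": 80.0, "report": 70.0, "defense": 90.0}
full_marks = {"performance": 100.0, "report": 100.0, "defense": 100.0}

# Two hypothetical weightings that different teachers might choose.
weights_a = {"performance": 0.3, "report": 0.4, "defense": 0.3}
weights_b = {"performance": 0.2, "report": 0.6, "defense": 0.2}

gad_a = goal_achievement_degree(mean_scores, full_marks, weights_a)
gad_b = goal_achievement_degree(mean_scores, full_marks, weights_b)
print(f"GAD with weights A: {gad_a:.2f}")
print(f"GAD with weights B: {gad_b:.2f}")
```

Under these assumptions, identical student scores produce different GADs depending only on the chosen weights, which is the kind of effect a subjective weighting could have on a comparison between the two internship modes.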

Whereas the current study found that offline cognitive internships are more popular, a crucial goal for future research is to investigate whether the results differ by major, school, evaluation indicators, and other factors. A second goal for future research is to seek a suitable method for identifying the weights of sub-objectives, such as voting, consulting administrators of companies, expert scoring, etc. Moreover, methods used for evaluation could be continuously innovated. For example, further work could introduce several open-ended questions in future questionnaires. Additionally, since the existing literature promotes the notion that online teaching can be a powerful supplement to offline teaching, the effect of combining both online and offline internships should be of interest to educators.