Abstract

Online higher education has become a steadily more popular way of learning for university students in the post-pandemic era. It has been emphasized that active learning and interactive communication are key factors in achieving effective performance in online learning. However, due to the lack of learning motivation of students and the lack of feedback data in online learning, there are numerous problems, such as the weak self-discipline of students, unsatisfactory learning experience, a high plagiarism rate of homework, and the low utilization of online teaching resources. In this study, an online homework intelligent platform implemented by information technology (IT) was proposed. It was based on the pedagogical self-regulated learning (SRL) strategy as a theoretical foundation, and information technology as a driver. Through setting online homework assignments, a sustainable means of promoting the four components of the SRL strategy, i.e., self-disciplinary control, independent thinking, reflective learning, and interest development, can be provided to university students. Therefore, this study explained the “4A” functions in the platform and analysed the details of their implementation and value, such as assistance in locating resources, assignment of differentiated homework, assessment of warning learning, and achievement of sharing. After three years of continuous improvements since COVID-19, this online platform has been successfully applied to students and teachers at our university and other pilot universities. A comparison of student teaching data, questionnaire responses and teacher interviews from the Computer Composition Principles course illustrated the sustainability as well as the effectiveness of the method.

1. Introduction

With the worldwide comeback of COVID-19 in 2022, traditional education is once again being disrupted [1]. Online education in China continues to grow rapidly as the Chinese government advocates “classes suspended but learning continues” in all schools, including universities [2]. Unlike the original wave of the pandemic in 2020, nowadays, most teachers in China have gradually adapted to teaching using a blended approach with their own teaching resources, and students can study freely without space and time constraints. However, this new educational style also requires a higher level of self-discipline and metacognition from students [3,4,5]. The top students in higher education are able to integrate new knowledge from online and textbook sources with little guidance from the instructor, and to reconstruct their knowledge system using small- to large-scale development of self-regulated learning (SRL) strategies, such as metacognitive self-regulation, resource management skills, effort management, help-seeking, and self-evaluation, which can greatly contribute to the academic performance of these top students [6,7].

However, other students at universities may not be natural learners. They concentrate better in offline classes, which offer more eye contact and methodical teaching from teachers, while online education lacks these interactions [8]. Problems of not learning and inefficiency are widespread. In online teaching, most learning is still dominated by students with good foundations and active thinking, especially in cooperative group learning, while students with weak foundations remain excluded from active learning and passively accept ideas from stronger students [9]. They are often forced to end their learning activities early because the outstanding students have already completed the tasks, which inevitably leaves them in a state of passive acceptance.

In terms of extracurricular practice, Chinese university educators face objective pressures such as heavy workloads and limited energy, and they are separated from learners in time and space. Therefore, they seek to promote students’ self-study of teaching materials or online resources through homework or report practice. In fact, most students’ online pre-study and post-class reviews are not strictly supervised, and the homework takes a single form with outdated content [10]. In addition, most students do not value the established teaching resources, because they simply seek to finish the task rather than to learn, and many watch the resources passively, like tourists. To complete the course tasks assigned by teachers quickly and to a high standard, some even commit disciplinary violations, such as “brushing up” and “substitution”. Furthermore, they are eager to complete quizzes at the lowest cost, for example by searching for questions on the Internet and copying others’ homework [11]. As a result, it is difficult for teachers to establish the actual learning situation and focus on the knowledge points that students are currently struggling with. Without reliable pre-class preparation, online teaching such as the flipped classroom becomes a mere formality and is less effective than traditional teaching [12].

At root, this reduced efficiency stems from students’ insufficient external and internal motivation and lack of learning initiative, as well as from insufficient teaching supervision by teachers [13]. To address the low utilization of online resources and teachers’ inaccurate understanding of the learning situation described above, this paper designed and implemented an online homework intelligent platform under the guidance of SRL strategy theory. The platform can automatically assign personalized homework questions to students, automatically evaluate students’ learning situations, and automatically provide learning resources. It improves students’ SRL level by enhancing their learning initiative and provides teachers with real learning data for later adjustment of the teaching design. The experimental results indicated that the platform helped safeguard the quality of online education and significantly improved students’ knowledge, along with their independent thinking and communication skills.

2. Related Work

2.1. Effects of SRL Strategies on Learning Performance of University Students

The earliest classification of self-regulated learning strategies was created by Zimmerman and Martinez Pons (1986) [14], who interviewed secondary school students and summarized 14 learning strategies including self-evaluation, goal setting and planning, seeking information, recording and monitoring, organizing the environment, seeking help from peers, and reviewing and summarizing. Later, Pintrich, Smith, Garcia, and McKeachie (1993), based on Zimmerman’s classification, turned to university students and classified their self-regulated learning strategies into three major categories: cognitive strategies, metacognitive self-regulation strategies, and resource management strategies. They developed the “Motivated Strategies for Learning Questionnaire” (MSLQ) for university students and obtained good results [15]. The MSLQ has since been widely used. For example, Kosnin (2007) [16] administered a questionnaire to 460 Malaysian university students and performed multiple regression analysis on the responses, and the results confirmed that self-regulated learning strategies had a significant effect on university students’ academic achievement. Similar results were obtained by Sardareh, Saad and Boroomand (2012) [17] in a survey of 82 Iranian pre-university students, where self-reflective learning and self-regulated learning strategies such as time/place management, effort management, peer learning, and need for help were all significantly and positively associated with academic achievement in English. Using latent profile analysis (LPA) and one-way ANOVA on questionnaire results from sophomore students at a university in China, Chen, Wang, and Kim (2019) [18] found that students with higher academic performance used more self-regulated learning strategies, suggesting that self-regulated learning strategies help improve learners’ academic performance. The systematic review by Jhoni Cerón et al. (2020) [19] described the current state of the art with respect to technology support for SRL in MOOCs. In particular, platforms such as Coursera, edX and Moodle stood out in research on SRL in MOOCs. In Bai and Wang’s (2021) [20] survey study, it was also shown through structural equation modeling that self-regulated learning strategies were used more often when mediated by motivational beliefs.

Even in online educational settings, several research studies have shown the significant positive impact of self-regulated learning strategies on learners’ academic achievement.

2.2. Educational Functions and Automated Correction of Homework in Higher Education

According to the American Bloom’s taxonomy of cognitive goals [21], coursework can be classified from simple to complex into six types, memorization, comprehension, application, analysis, evaluation, and creation, of which the first three reach primary cognitive goals and the last three reach advanced cognitive goals [22]. Primary cognitive goals are the foundation, and only when primary goals are reached are we able to achieve advanced cognitive goals. Tan [23,24] proposed that homework is an interactive tool that can connect the dialogue between teachers and students. In view of this, the researchers also tried to ensure the uniqueness of the assignments and aimed to establish the learning situation precisely so that appropriate remedial measures could be implemented in time to promote effective teaching and learning.

More and more scholars have made innovations and breakthroughs in the use of computer techniques for automated testing [25,26,27,28]. Additionally, these techniques are used in the generation and correction of homework problems. Yang Qinmin [29] proposed transforming subjective mathematics questions into tree-like multiple-choice questions by combining information extraction technology, data matching technology and one-time manual processing, which solved the bottleneck problem of automatically correcting subjective mathematics questions. Li Jianzhang [30] proposed splitting large computational questions into multiple blanks, and then using the pre-prepared conditions and answers in the database to customize the questions for students—although in a certain period of time this can effectively prevent students from committing plagiarism, the storage of static data is always limited, which is not conducive to the bulk modification of data and the opening of the self-test function to students. Huang Hengjun [31] used a coursework system based on resampling technology to achieve differentiated assignments at low cost and achieved good implementation results, but this was mainly used for data analysis courses as well as experimental data. It can be seen that the functional requirements of the current online homework system for university courses are mainly focused on the ability to randomly differentiate, to repeatedly self-test, and to automatically correct. The demands in these studies can meet the needs of practicing SRL strategies.

2.3. Major Objectives

Based on the above review, there are many studies on self-regulated learning, including its intrinsic mechanisms, models, structural features and assessments [32,33,34,35]. It can also be seen that SRL is beneficial for in-depth learning. However, the interactivity of online education is not high enough. Online education is not mandatory for the client, and students can open or close educational services at will. For students who lack self-regulated learning skills, a course that is not interesting and interactive cannot ensure effective long-term learning, which is not conducive to results-oriented education services [1]. Therefore, this study concerns online homework, and its main goal is to motivate students to use self-regulated learning strategies for in-depth learning and flexible application by improving homework contents as well as evaluation methods. To achieve this, the online homework intelligent platform can be designed to collect and analyze data on students’ homework trajectories in a sustainable way, so that teachers can teach more effectively and students can learn more deeply. This study can be regarded as sustainable, and it considers the research questions below:

1. What parts of SRL can be enhanced through the homework platform to help students?

2. What functions should be designed for this platform, and how will they be implemented?

3. Architecture of SRL-Based Homework Platform

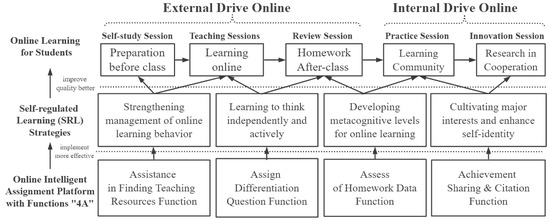

SRL is actually an active constructive process in which learners set up goals for learning, and then monitor, regulate and control cognition, motivation and behavior, guided and constrained by the goals and environmental features [36,37,38,39]. In order to achieve the goal of shifting from externally driven student learning to internally driven, the online homework intelligent platform needs to achieve at least the following four aims:

- Strengthen students’ management of their online learning behaviors. Make sure that this system provides students with self-testing and self-evaluation of assignments to guide their willingness to actively give their time to learning and to develop their cognitive focus for a long duration;

- Drive students to think independently from external forces. Make sure that this system prevents most students from copying answers to their homework. It manages to help them expand their knowledge by utilizing online learning resources. In addition, it allows them to experience the achievement of independent solving in learning;

- Develop students’ level of metacognition. First, the system helps students to record and monitor the completion trajectory of their homework activities, and the results of their completion. Second, it contributes to the analysis of student activity characteristics. Finally, it provides students with effective reflective measures;

- Cultivate students’ own professional interests and self-identity. Make sure that the system encourages students to take the initiative to join the online learning community and share the results of their own knowledge. More than that, it helps students to work collaboratively in teams to complete difficult and challenging learning tasks.

With the above requirements, besides the basic functions, the online homework intelligent platform also demands the following core functions, called “4A”:

- Assist students to self-solve their problems by providing more ways to learn online;

- Assign each student almost the same question online, but with different known conditions;

- Assess student learning based on procedural data of student answers for questions;

- Achievements of students and faculty can be shared and referenced by joining learning communities.

Based on the above considerations, the architecture of the SRL-based homework platform is shown in Figure 1.

Figure 1. Main architecture of the SRL-based homework platform for the sustainability of student development. Each of the four core functions in this platform requires a specialized system to support it.

4. Implementation Details

4.1. Pre-Processing for Automatic Correction of Homework Questions

In this paper, we took the Chinese course Computer Composition Principles as an example (it is known as Computer Organization and Design in the USA). This course is the core of the specialization in Computer Science. It introduces the underlying architecture of computers and includes both a theoretical and an experimental component. The content of this course is recognized in China for its high difficulty and abstraction. Therefore, it is very representative as a case study of online education. First of all, in order to test students’ knowledge through the online homework platform, the checkpoints for this course were decomposed from the cognitive perspective of the Bloom model. Pre-processing work was mainly aimed at the traditional questions of the first four levels of Bloom. The improved quiz evaluation method tries to reflect such features as computability, answer uniqueness, and process specificity to meet the demand for automatic correction and random differentiation of homework in digital systems [40].

4.1.1. Memorization and Comprehension Levels

(1) Topic-based choice questions

Numerous preparatory options are designed around the topical knowledge points of the course. The number of options should be large enough and confusing enough to test the learners’ understanding of the conceptual definition type of points. In this way, the teacher ensures that students are able to think independently, and the teacher guides them through the learning process to understand the details of the theory in depth.

(2) Step-by-step fill-in-the-blank questions

Step-by-step fill-in-the-blank questions are set instead of fill-in-the-blank questions and calculation questions that only fill in the results, so as to realize the evaluation of students’ processes rather than the results. The questions are guided by specific cases, and the whole calculation process is broken down into several steps, with each step having a blank space to test whether students understand each calculation process, similar to the white-box test in software testing that tries to cover branches, in contrast to the black box test.

4.1.2. Application and Analysis Levels

On the basis of students’ understanding of knowledge points, completing such exercises can improve students’ ability to solve general problems. In the process of application, students think and feel the difference between human and machine calculation, summarize the easy and difficult knowledge points, and adjust their knowledge construction system.

(1) Programming questions

Students are required to recreate the complete computational process of the knowledge point by writing programs that simulate the working process of the software and hardware [40]. Two variants are used: program fill-in-the-blank questions, which set blanks on the key details of an existing program, and full programming questions, which are more demanding and require students to master all the details and have good programming skills. As in the globally popular ACM-ICPC competition, the computer can automatically provide feedback on whether the student’s answer was accepted or not.

(2) Case-based mistake correction questions

Students are asked to clarify the experimental specifications and precautions, and to point out the classic mistakes in reverse. Through well-designed homework problems, students are taught to consciously reflect on high-frequency errors and understand the truth that “a thousand-mile dike is broken by ants”. It also develops students’ ability to describe and identify problems, as well as their ability to analyze and solve problems.

4.2. Randomization for the Variables within a Single Question

After the pre-processing stage, the tedious task of assigning and correcting homework can be performed automatically by the Homework Assistance System (HAS). Next, the platform generates randomly differentiated child questions based on the parent question entered by the proposer. Our platform supports a feature similar to the “Randomization” functionality on the Open edX platform. This Randomization is different from “question randomization” [41]. It ensures that variables within a single problem are randomized, while question randomization presents different questions or combinations of different questions to different learners. So that more learners can engage in online learning using their own SRL strategies, our platform makes this feature more extensive and versatile. It supports more question types, such as judgment, case-based mistake correction and program questions, as well as more types of scripted input than just Python. The following section presents the implementation steps and feasibility of the mechanism in the Question Generation System (QGS).

4.2.1. Question Paradigm Setting

After pre-processing, the question is represented by the symbol E, obtained by the combination of the terms I, as expressed by Equation (1).

where I consists of the set of operational primitive problems P, conditional vectors $C = \{c_1, \ldots, c_n\}^{T}$ and embedded relations R, as in Equation (2).

Here, we assume that the corresponding answer a can be derived from a set of conditions C by a fixed calculation. The set of condition vectors $C' = \{C_1, \ldots, C_m\}$ ($m \ge 1$) is then mapped by the computational relation R to the set of answers $A = \{a_1, \ldots, a_k\}$ ($1 \le k \le m$, with each answer different), denoted as C(R) → A(R), where C(R) is the domain of the computational relation R and A(R) is its range. Therefore, for the same parent template P, expanding C(R) has the potential to expand A(R), which can effectively reduce the possibility of student copying when A(R) is large enough. Next, we explore how to effectively construct the conditional vector.

4.2.2. Conditional Vector Construction

Constructing the conditional vector mainly involves performing random variation on the input data in the correct format of the original questions. The types of variables that can be input are determined according to the analysis of the characteristics of each question type, the allocation strategy and priority of the variables are determined, and finally some basic input data are constructed as the initial test cases based on this information.

(1) Judgment and choice questions

The judgment question $E_{jdg}$ is composed of a number of terms $I_n$ ($n \ge 1$) connected by a Cartesian product, denoted as:

$$E_{jdg} = I_1 \times I_2 \times \cdots \times I_n \tag{3}$$

In (3), $I_n$ denotes the nth item randomly extracted from the question bank candidates. This is also why preparing a number of alternative options for choice-type questions during pre-processing matters. By randomly generating any element c ∈ {Yes, No} in the condition variable, with A(R) = {True, False}, it follows that I consists of a pair of positive and negative propositions, i.e., I = {P, ¬P}.

If the number of elements in a set N is denoted by |N|, then $|E_{jdg}| = 2^n$, which means there are $2^n$ different combinations. For example, when n = 2, $|E_{jdg}| = |I_1 \times I_2| = |\{X, \neg X\} \times \{Y, \neg Y\}| = 4$, indicating that there are four different descriptions of the question for the student to judge.
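The size of the Cartesian product can be checked by enumerating it directly. The following is a minimal sketch rather than the platform's implementation; the item texts and the names `ITEMS`, `judgment_variants` and `assign_variant` are hypothetical.

```python
import itertools
import random

# Hypothetical item bank: each item I is a pair (P, not-P) with its truth value.
ITEMS = [
    [("Cache is faster than main memory.", True),
     ("Cache is slower than main memory.", False)],
    [("A two's-complement byte can store -128.", True),
     ("A two's-complement byte cannot store -128.", False)],
]

def judgment_variants(items):
    """Enumerate every element of I_1 x I_2 x ... x I_n."""
    return list(itertools.product(*items))

def assign_variant(student_id, items):
    """Pick one variant; seeding by student id keeps the choice reproducible."""
    rng = random.Random(student_id)
    return rng.choice(judgment_variants(items))

variants = judgment_variants(ITEMS)
print(len(variants))  # 4, i.e. 2 ** len(ITEMS)
```

Seeding by a student identifier is an assumption made here so that a student sees the same variant on every visit; the source does not say how variants are assigned.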

The multiple-choice questions are considered to be selected from the multiple judgment questions set out in Equation (3), which can be combined into single-choice or multiple-choice questions depending on the number of correct options and expressed in Equation (4).

Thus, either judgment or multiple-choice questions can express multiple combinations of similar but different answers by constructing conditional vectors that are randomly assigned to students for homework questions. In addition, there is a class of choice questions, which should be answered by selecting the calculated numbers, which will be discussed together with the conditional construction of the fill-in-the-blank questions.

(2) Fill-in-the-blank and computational questions

Assuming that there exists a computational relation R(C,A) for such questions, then, in order to find a ∈ A(R), it is necessary to first understand the definition description in T, and then construct the computational model R based on the data condition c ∈ C(R) given in the question, expressed in Equation (5).

In (5), the computational relation R is the focus of the test for this type of question, and the application of students’ knowledge is repeatedly reinforced by constructing different data conditions c, so that the teacher can analyze the level of detail of students’ knowledge. It is worth mentioning that a set progression can be defined between conditions $c_m$ and $c_n$ according to the needs of the quiz.
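To illustrate how one parent question can yield many child questions, the sketch below draws a random condition c and derives the answer through a fixed relation R. It is only an illustration under assumptions: the template text, the two's-complement task and the names `TEMPLATE`, `relation`, `generate_child` and `grade` are hypothetical and not taken from the platform.

```python
import random

# Hypothetical parent template P with one condition slot.
TEMPLATE = "Write the 8-bit two's-complement code of {x} in binary."

def relation(x):
    """Computational relation R: maps a condition x to its answer a."""
    return format(x & 0xFF, "08b")

def generate_child(student_id, low=-128, high=127):
    """Draw a condition c from C(R) and derive the matching answer."""
    rng = random.Random(student_id)  # same student -> same child question
    x = rng.randint(low, high)
    return TEMPLATE.format(x=x), relation(x)

def grade(student_answer, expected):
    """Automatic correction by exact comparison with the derived answer."""
    return student_answer.strip() == expected

question, answer = generate_child("s001")
print(question)
```

Because the answer is computed from the condition rather than stored, enlarging the range of conditions enlarges the set of answers without any extra manual work.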

(3) Case-based mistake correction and program questions

The parent template P for such questions is changed into an experimental diagram or procedure, with n blanks pre-designed in P. A number of elements are randomly selected from C to form the set of blanks B; the set of other blanks is C-B, which is directly replaced by the corresponding answer A(R), expressed in Equation (6).
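The selection of a random blank set B, with the remaining slots C − B pre-filled by their answers, can be sketched as follows. The program template, the slot names and the function `make_cloze` are hypothetical and serve only as an illustration.

```python
import random

def make_cloze(template, answers, n_blanks, seed):
    """Pick a random blank set B from the slots; pre-fill the rest (C - B)."""
    rng = random.Random(seed)
    blanks = set(rng.sample(sorted(answers), n_blanks))
    shown = {k: ("____" if k in blanks else v) for k, v in answers.items()}
    key = {k: answers[k] for k in blanks}  # answer key for the blanks only
    return template.format(**shown), key

# Hypothetical program template with three candidate slots.
TEMPLATE = "def add8(a, b):\n    return (a {op} b) {mask_op} {mask}\n"
ANSWERS = {"op": "+", "mask_op": "&", "mask": "0xFF"}

text, key = make_cloze(TEMPLATE, ANSWERS, n_blanks=2, seed="s001")
print(text)
```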

4.2.3. Condition Data Filtering

The input selection session will filter the constructed generated data and set the constraint strategy. We try to filter out invalid input data in advance, including data types, data ranges, duplicate or contradictory descriptions, compiler undefined behavior and other conflicts, and try to ensure the quality of input data as well as the accuracy of topic descriptions.

There are various kinds of setting constraint strategies—for example, the input data of some topics can be decided by comparing the run results of several general compilers, and all of them run consistently before passing; teachers can directly delineate the data types that can be input as well as the data ranges; and teachers collect feedback from students on the vulnerabilities of homework problems through the homework platform and fix them.
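A simple filter for the data type, data range and duplicate checks mentioned above might look like the following sketch. The function name `filter_conditions` and the integer-only rule are assumptions made for illustration; checks such as compiler cross-validation are not covered.

```python
def filter_conditions(candidates, low, high):
    """Keep integer conditions inside [low, high], dropping duplicates."""
    seen = set()
    valid = []
    for c in candidates:
        if isinstance(c, bool) or not isinstance(c, int):
            continue  # wrong data type
        if not low <= c <= high:
            continue  # outside the teacher-defined range
        if c in seen:
            continue  # duplicate condition
        seen.add(c)
        valid.append(c)
    return valid

print(filter_conditions([5, 5, 300, "7", -128, 2.5, True], -128, 127))
# [5, -128]
```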

4.3. Learning Warning System Based on Homework Data Trajectory

4.3.1. Collection of Learners’ Homework Completion Trajectory Data

The Learning Warning System (LWS) can automatically analyze students’ knowledge score rate, question correct answer rate, code repetition rate, highest score, lowest score, median, high average score, low average score, difficulty coefficient, differentiation and the number of students in each score range, in addition to providing traditional functions such as automatic correction, score statistics, and displaying grades and rankings. Grade reports for all students are available for teachers to download and access with one click.

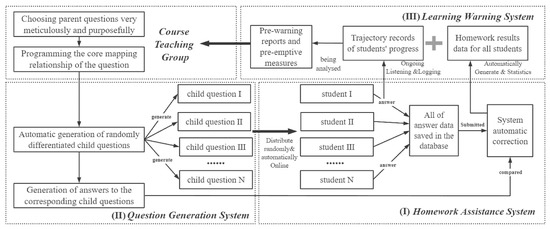

In order to allow teachers to customize personalized learning for each student and urge them to enhance metacognitive learning [42], LWS specially adds features to support the analysis of learners’ online learning track data. Learners will generate a large amount of learning log data, such as daily attendance and assignment completion level, during the course of learning on the learning platform. By collecting these log data and filtering and sifting the data, we finally obtain multiple dimensions of information and use the comprehensive score as the final indicator for evaluating learners and as a label for template judgment. The first three system partnerships of the homework platform are shown in Figure 2.

Figure 2. Flow chart of the first three systems of the online homework platform, reflecting their partnership with teachers and students.

The data items of learners’ self-study and homework completion trajectories to be collected by the system are shown in Table 1.

Table 1. Learner’s learning trajectory statistics.

4.3.2. Implementation of the Learning Warning System Based on Trajectory Data

(1) Effective duration of homework filtering

The Learning Warning System takes the real duration of learners’ assignments as an important indicator to evaluate the validity of learners’ assignments. If only the last submission time minus the initial submission time is used as the effective duration, it is difficult to distinguish the real duration of each learner’s assignment and is not conducive to the identification of the learning alert model.

Therefore, we proposed adding the assignment submission interval as an important basis for determining the assignment length. The research hypothesis is that there will not be a long interval between two assignment answer submission time points when learners perform assignments on the online platform. This type of approach enables a better judgment of learners’ real assignment duration. The equation for judging the duration of continuous homework is shown in Equation (7).

In (7), the two time variables are the submission time points of two assignment answers, and their difference is the interval between the two submission times. ∂ is the set threshold value. If the interval between two submission times is greater than ∂, it is not counted as continuous assignment time. Otherwise, it is counted as continuous assignment time, and the difference between the two submission times is taken as the assignment time.
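The threshold rule can be sketched in a few lines. This is an illustrative reading of the rule, not the platform's code; the function name `effective_duration`, the use of seconds and the 30-minute threshold in the example are assumptions.

```python
def effective_duration(submit_times, threshold):
    """Sum the gaps between consecutive submissions that do not exceed threshold.

    submit_times: submission timestamps in seconds.
    threshold: the largest gap still counted as continuous work.
    """
    times = sorted(submit_times)
    total = 0
    for earlier, later in zip(times, times[1:]):
        gap = later - earlier
        if gap <= threshold:
            total += gap  # continuous work: count the gap
    return total

# Submissions at 0, 5, 12 and 100 minutes with a 30-minute threshold.
print(effective_duration([0, 300, 720, 6000], threshold=1800))  # 720
```

In the example, the 88-minute gap before the last submission exceeds the threshold and is excluded, so only the first 12 minutes count as effective duration.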

(2) Duration of homework balance

Considering that the difficulty of different courses varies, adding a course difficulty factor can better balance the duration of assignments. The higher the difficulty of the course, the higher the value of the course factor will be. If there are multiple submissions for a single assignment, the records of multiple submissions will be judged, and time will be accumulated. The time spent on the homework is calculated as shown in Equation (8).

In (8), the first variable is the single assignment time and the second is the assignment difficulty factor, whose default is 1. When the level of plagiarism is greater than the previous 0.2 times, the factor will increase by 0.1, and when the level of plagiarism is less than the previous 0.1 times, the factor will be adjusted downward by 0.1. The single assignment time is divided by the assignment difficulty factor to give the real assignment time. We add up several assignment times to obtain the current assignment time.
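The division and accumulation step can be sketched as below. The function name `balanced_duration` is hypothetical, and the rule that adjusts the factor by 0.1 according to the plagiarism level is deliberately left out, since its exact form is not recoverable from the text.

```python
def balanced_duration(single_times, difficulty=1.0):
    """Add up single assignment times, each divided by the difficulty factor."""
    if difficulty <= 0:
        raise ValueError("difficulty factor must be positive")
    return sum(t / difficulty for t in single_times)

# Two submissions of 600 s and 300 s with an assumed factor of 1.5.
print(balanced_duration([600, 300], difficulty=1.5))  # 600.0
```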

(3) Plagiarism determination

It is assumed that in the real environment, there exists a certain number of learners who obtain higher scores in a shorter period of time through plagiarism in the form of searching or asking. Such behaviors lead to the collection of data samples that interfere with the early warning model’s judgment of the true learning of these learners. We designed the formula for determining student plagiarism in our system, as shown in (9).

In (9), the first variable is the length of this assignment for the learner, and n is the total number of learners. The average length of the assignment is taken over the real assignment lengths of the learners. A threshold is set, and if the length of this assignment falls below the bound given by this threshold, it is judged to be a case of plagiarism for this homework.
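One plausible reading of this rule is sketched below, assuming that the bound is the threshold multiplied by the class average duration. That product form, the default threshold of 0.3 and the name `flag_plagiarism` are assumptions for illustration only.

```python
def flag_plagiarism(durations, threshold=0.3):
    """Return the learners whose duration is below threshold * class average.

    durations: mapping from learner id to real assignment duration (seconds).
    """
    if not durations:
        return set()
    average = sum(durations.values()) / len(durations)
    return {who for who, t in durations.items() if t < threshold * average}

durations = {"s1": 1800, "s2": 2400, "s3": 120, "s4": 2080}
print(flag_plagiarism(durations))  # {'s3'}
```

Here the class average is 1600 s, so the bound is 480 s and only the learner who finished in 120 s is flagged.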

(4) Learning credibility

Whether a learner completes homework independently or plagiarizes is also an important determinant [43]. The more times a learner is judged to have plagiarized, the lower the credibility of the homework. Homework credibility is calculated as shown in Equation (10).

In (10), the first quantity lies in (0, 1), N is the total number of assignments, and the expression in parentheses calculates the total number of times a learner is judged to have plagiarized an assignment. The credibility of the homework is derived from the ratio of the number of plagiarized homework assignments to the total number of assignments.
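As a hedged illustration, the sketch below computes the plagiarism ratio and then takes credibility as one minus that ratio. The complement form is an assumption chosen so that credibility falls as plagiarism rises, and the function names are hypothetical.

```python
def plagiarism_ratio(flags):
    """flags: one boolean per assignment, True when judged plagiarized."""
    if not flags:
        raise ValueError("at least one assignment is required")
    return sum(flags) / len(flags)

def credibility(flags):
    """Assumed reading: credibility falls as the plagiarism ratio rises."""
    return 1.0 - plagiarism_ratio(flags)

flags = [False, True, False, False]
print(plagiarism_ratio(flags), credibility(flags))  # 0.25 0.75
```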

(5) Learning motivation

Learning motivation is used to reflect the enthusiasm of learners for online learning [44]. A learner counts as active when the assignment is started within a certain period after it is assigned; the more such instances accumulate, the higher the motivation, and vice versa. The formula for counting active instances is shown in Equation (11).

In (11), F is the first homework submission time, S is the homework start time, D is the homework end time, L is the homework duration, and the remaining parameter is the set learning motivation threshold. The learning motivation AR is then given by Equation (12).
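The sketch below shows one way these quantities could be combined. It assumes that an assignment counts as active when the first submission falls within the threshold share of the homework window (F − S ≤ θ · L, with L = D − S) and that AR is the share of active assignments; both the inequality and the default θ = 0.5 are assumptions, and the function names are hypothetical.

```python
def is_active(first_submit, start, end, theta=0.5):
    """Active when the first submission falls in the first theta share of the window."""
    length = end - start  # L, the homework duration
    return (first_submit - start) <= theta * length

def activity_rate(records, theta=0.5):
    """records: (F, S, D) tuples, one per assignment; returns the active share."""
    if not records:
        return 0.0
    active = sum(is_active(f, s, d, theta) for f, s, d in records)
    return active / len(records)

records = [(10, 0, 100), (90, 0, 100), (50, 0, 100), (20, 0, 40)]
print(activity_rate(records))  # 0.75
```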

(6) Learning delay

Learning delay is a measure of students’ learning procrastination, which refers to the delayed behavior presented [45] when learners are faced with the stimulus of an event related to an assignment (or academic task), and is a common negative learning habit. The equation for determining learning procrastination is shown in (13).

Equation (13) uses a minimum homework duration factor and the average duration of this homework to determine whether the homework is delayed. If a learner’s duration is longer than the reference duration derived from these two quantities, the system judges it to be delayed. Therefore, the equation of learning delay is shown in Equation (14).

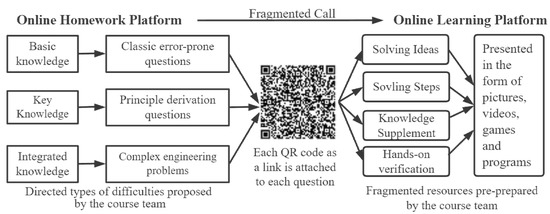
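The delay rule described for (13) and (14) can be sketched in Python as shown below. The sketch assumes that a homework is delayed when the learner's duration exceeds the duration factor times the average duration, and that learning delay is the share of delayed homework. These forms, the default factor of 1.5, the function names, and the sample values are assumptions for illustration only.

```python
def is_delayed(duration, average_duration, factor=1.5):
    """Assumed rule: delayed if duration > factor * average duration."""
    return duration > factor * average_duration


def learning_delay(durations, average_durations, factor=1.5):
    """Assumed form: number of delayed homework items divided by N."""
    if not durations or len(durations) != len(average_durations):
        raise ValueError("inputs must be non-empty and of equal length")
    delayed = sum(
        1
        for duration, average in zip(durations, average_durations)
        if is_delayed(duration, average, factor)
    )
    return delayed / len(durations)


# Hypothetical learner: time taken on four homework items (minutes),
# with the class average for each item.
durations = [30, 100, 45, 200]
class_averages = [40, 50, 40, 80]
delay = learning_delay(durations, class_averages, factor=1.5)
print(delay)
```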

4.4. Assistance with Homework Linked to Online Teaching Resources

Whenever a student thinks independently about a difficult problem, it is inevitable that many questions will arise [46]. Therefore, the assistance system should provide students with an effective question and answer service. Because a large number of online students raise a wide variety of questions, the service system prioritizes self-service. Question answering should lead back to the course teaching resources themselves, providing students with resources closely related to the solution ideas, such as slides (PPT) containing worked examples of similar problems, a lecture video in which the class teacher explains the relevant knowledge points, or even a solution video recorded and uploaded by the teacher that offers ideas on the topic.

The homework assistance system uses QR codes as the connecting medium between topics and resources, allowing students to select a certain knowledge point for repeated self-assessment. To enhance the homework experience, certain important and abstract knowledge points are accompanied by virtual simulation experiments for students to practice. The specific process is shown in Figure 3.

Figure 3. A QR code is attached to each question; scanning it through the online learning platform gives access to fragmented online problem-solving resources for self-help.
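To make the link between questions and resources concrete, the sketch below shows one way a question identifier could be turned into the URL that a QR code encodes, and then resolved to its related resources. The index contents, the identifier `ccp-ch3-q07`, the base URL `https://platform.example/resources`, and both function names are hypothetical; the actual platform may organize this differently.

```python
from urllib.parse import urlencode

# Hypothetical resource index: question ID -> related teaching resources.
RESOURCE_INDEX = {
    "ccp-ch3-q07": [
        {"kind": "slides", "title": "Worked example of a similar problem"},
        {"kind": "video", "title": "Teacher's explanation of the knowledge point"},
    ],
}


def qr_payload(question_id, base_url="https://platform.example/resources"):
    """Build the URL that a question's QR code would encode."""
    return f"{base_url}?{urlencode({'question': question_id})}"


def resources_for(question_id):
    """Return the resources linked to a question (empty list if none)."""
    return RESOURCE_INDEX.get(question_id, [])


payload = qr_payload("ccp-ch3-q07")
linked = resources_for("ccp-ch3-q07")
print(payload)
print([resource["kind"] for resource in linked])
```

Rendering the payload as an image would need a QR code library, which is left out here to keep the sketch dependency-free.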

4.5. Sustainable Learning Community Construction

- (1) Co-building an on-campus online learning community

A great learning community cannot exist without educators, proposers and learners, just like an open source software community [47]. In the learning community, faculty act as educators and organizers and attract a group of outstanding student teaching assistants who, as proposers, add new assignment questions to the system, while other students remain learners. The course instructors set the direction of the questions and review and guide the teaching assistants in proposing questions that incorporate current events, reflect positive values and innovation, and promote learning through the questions. As before, learners complete the tasks set by the instructor on time, but unlike before, some of the exercises are created by the teaching assistants. With the questions as the medium connecting learners and teaching assistants, the teacher collects the learners’ questions and passes feedback to the teaching assistants, acting as an intermediary between them.

- (2) Co-building a national online learning community

China is now starting to establish multiple virtual teaching and research rooms across the country [48,49], which break through time and space constraints so that the best teachers across the country can be invited to take part in topic discussions and learn together. Similarly, our smart homework platform allows college teachers to share the test questions they have created and to directly cite test questions shared by other college teachers and post them for students to practice. As with academic papers, this creates a citation mechanism for questions: the more frequently a question is cited, the more it is valued by fellow teachers. The external teaching community uses Internet technology to gather a group of excellent teachers from all over the country, who can share the fruits of their labor online, meet others who love teaching, enrich their personal online question banks, and broaden their students’ horizons at the same time.
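The citation mechanism for shared questions can be illustrated with a short sketch that counts how often each question is reused and ranks the questions accordingly. The log format (question ID, citing teacher), the identifiers, and the function name `rank_shared_questions` are hypothetical and serve only as an example.

```python
from collections import Counter


def rank_shared_questions(citation_log):
    """Rank shared questions by how often other teachers cite them.

    `citation_log` is a list of (question_id, citing_teacher) pairs.
    Ties are broken by question ID so the order is deterministic.
    """
    counts = Counter(question_id for question_id, _teacher in citation_log)
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical citation events across the pilot universities.
log = [
    ("q1", "teacher_a"),
    ("q2", "teacher_b"),
    ("q1", "teacher_c"),
    ("q3", "teacher_a"),
    ("q1", "teacher_b"),
    ("q2", "teacher_c"),
]
ranking = rank_shared_questions(log)
print(ranking)
```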

5. Sustainable Practice Achievements

5.1. Overview of Key Achievements

Since 2020, with the onset of the COVID-19 pandemic, our team members have embarked on the design and development of an online smart homework platform, with more than 20 faculty members and 90 teaching assistant students participating in the construction of an online question bank for computer science coursework, serving more than 1500 Chinese students in our university. The concept of assigning random differentiated homework and building learning communities through paperless IT has taken hold among teachers and students. According to the statistics of the teaching platform, in the most recent semester, only 344 on-campus students took the Computer Composition Principles course, yet the number of visits to online teaching resources in that semester reached 761,501, or about 2214 visits per person. Over the same period, the number of visits from the nationwide pilot online learning community, consisting of eight universities on the platform, reached 392,289, so the ratio of on-campus to external visits was close to 2:1. It can be expected that the on-campus share will continue to decline as the platform becomes more popular and more valuable in the future.
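The usage figures above can be checked with a few lines of arithmetic. The variable names are chosen for this example; the numbers are those reported in the text.

```python
on_campus_students = 344
on_campus_visits = 761_501
external_visits = 392_289

# Average number of resource visits per on-campus student.
visits_per_student = on_campus_visits / on_campus_students

# Ratio of on-campus visits to visits from the pilot community.
visit_ratio = on_campus_visits / external_visits

# Share of all visits that came from on-campus students.
on_campus_share = on_campus_visits / (on_campus_visits + external_visits)

print(round(visits_per_student), round(visit_ratio, 2), round(on_campus_share, 2))
```

The result is roughly 2214 visits per student, a ratio of about 1.94 (close to 2:1), and an on-campus share of about two thirds of all visits.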

5.2. Experimental Comparison

This semester, the teaching class of the pilot course, Computer Composition Principles, was divided into two groups: the control group (172 students) and the experimental group (172 students). The students in both groups were from the same discipline, and the average scores and failure rates in the previous semester’s courses were similar for the two groups. Both groups used the online homework platform to answer the same questions. In terms of difficulty, the hard questions were comparable to the test questions of the master’s degree entrance examination, while the easy and medium questions were comparable to textbook assignments. To keep the teaching experiment fair, both groups had the same teachers and the same syllabus and schedule. The term exams followed the principle of teaching–examination separation, the exam content was not drawn from the homework content, and the questions were assigned at a ratio of 3:5:2 across easy, medium, and hard difficulty. The only difference between the two groups was the homework format: the control group received traditional homework (one answer for one question), while the experimental group received similar problems presented in different versions with different answers.
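One way to produce differentiated homework at a fixed difficulty ratio is sketched below. It assumes that the 3:5:2 ratio is applied when drawing each student's questions from a bank grouped by difficulty, and that the draw is seeded by a student identifier so that it can be reproduced. The bank contents, the identifier `s001`, and the function name `draw_homework` are hypothetical.

```python
import random

LEVELS = ("easy", "medium", "hard")


def draw_homework(bank, student_id, total=10, ratio=(3, 5, 2)):
    """Draw a per-student question set at the given easy:medium:hard ratio.

    Seeding the generator with the student ID makes each student's draw
    reproducible while letting different students get different questions.
    """
    rng = random.Random(student_id)
    parts = sum(ratio)
    picked = []
    for level, share in zip(LEVELS, ratio):
        count = total * share // parts
        picked.extend(rng.sample(bank[level], count))
    return picked


# Hypothetical question bank with 20 questions per difficulty level.
bank = {level: [f"{level}-{i:02d}" for i in range(20)] for level in LEVELS}
homework = draw_homework(bank, student_id="s001")
print(homework)
```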

We treated each chapter of the course as a unit when counting students’ completion of homework. Each chapter in fact contained multiple assignments, but we counted them together. These data can be seen in Table 2.

Table 2. Completion records of homework for each chapter.

Based on Equations (10), (12) and (14), learning credibility, learning motivation, and learning delay can be calculated by substituting the data in Table 2. The results for five other indicators are also given in Table 3. These data were collected or computed from different data sources. For example, the indicator “Problem solving ability” is based on the students’ homework performance: the teacher assessed, student by student, the insightfulness of each student’s thinking in solving difficult problems. This assessment covers 100% of students every 1.5 months. The comparisons and weights of these indicators are also shown in Table 3.

Table 3. Comparison of learning-related indicators.

Table 4 details the weighting of the important signs of SRL, decided by the course team after several discussions and used to assess student SRL levels. These signs include time management, independent thinking, critical thinking, help-seeking, goal attainment, self-control, team organization, and task interest.

Table 4. Weighting of important signs of SRL.
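A weighted assessment of this kind can be sketched as a normalized weighted sum. The weights and indicator values below are made up for illustration and are not the values in Table 4; the function name `srl_score` and the three signs used are likewise only examples.

```python
def srl_score(indicators, weights):
    """Combine SRL sign scores into one level using a weighted average.

    `indicators` and `weights` are dicts keyed by sign name. The weights
    are normalized by their sum, so they do not need to add up to 1.
    """
    if set(indicators) != set(weights):
        raise ValueError("indicators and weights must cover the same signs")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    weighted = sum(indicators[name] * weights[name] for name in weights)
    return weighted / total_weight


# Hypothetical weights and scores for three of the SRL signs.
weights = {"time_management": 0.2, "independent_thinking": 0.3, "help_seeking": 0.5}
scores = {"time_management": 0.8, "independent_thinking": 0.6, "help_seeking": 0.9}
level = srl_score(scores, weights)
print(round(level, 2))
```

Normalizing by the sum of the weights means that a course team can adjust individual weights without having to rescale the others by hand.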

The final comparison of experimental results is shown in Table 5.

Table 5. Final comparison of experimental performance of SRL between CG and EG.

The data comparison and effectiveness analysis of the online homework and teaching platform show that the experimental group, after the anti-plagiarism measures and self-test reinforcement of randomized differentiated course homework, had a significantly higher SRL level than the control group, as reflected in greater learning initiative, more communication and discussion, deeper understanding of knowledge points, and higher achievement of course objectives. The largest difference between the two groups was seen for help-seeking, while the smallest difference was seen for critical thinking, because the experimental group’s homework is a challenge for each student, while the control group’s homework is a challenge for only some of the students.

5.3. Questionnaire Surveys for the SRL Effectiveness

In order to investigate the SRL effectiveness of the online homework platform from the perspective of the teaching class, questionnaires [50] were regularly administered to all students in both groups, and the results of the survey showed that most students had positive opinions about the random question sampling mode. In theory, the system provided almost one distinct question per person, which largely prevented students from plagiarizing assignments due to a lack of self-discipline. Teachers could also accurately determine from the assignments which students did not sufficiently understand the course knowledge points and take corresponding online Q&A measures to help them.

In the questionnaire, one multiple-choice question probed students’ approach to completing homework: “When you do not know how to do a problem, would you ask other students directly ‘What is the answer to this problem?’ or ‘How did you do this problem?’” Over 90% of the students in the experimental group chose the latter because they understood that the answer would change when they encountered the problem in the future, while over 54% of the students in the control group chose the former. Table 6 shows part of the results of the course questionnaire conducted in the control and experimental groups using the online teaching platform, mainly the items with large differences between the two groups.

Table 6. Comparison of questionnaire results between the two groups.

The questionnaire feedback indicates that incorporating the SRL strategy into the homework design was effective: the means and measures that students adopted in response to the homework were proactive and very effective. This shows that the online assignment design platform can promote students’ online learning very well, cultivate students’ independent learning and self-management, enhance students’ critical thinking, self-confidence, willpower, creativity, etc., and also improve self-identity. Overall, the online homework platform was well received by the students surveyed.

5.4. Faculty Workshops for the Platform Feedback and Future

The online intelligent homework platform was developed not only to reduce teachers’ workload but, more importantly, to make online teaching more efficient and assessment more accurate. Eight teachers participated in the system trial, accounting for 100% of the full-time teachers, including lab technicians, on the Computer Composition Principles course. Through the faculty workshops, the following opinions on feedback and the future of the platform were given.

- This platform provides a lot of help to our teachers by recording the data of students’ assignments. For each assignment, we can obtain a clear picture of students’ learning through the various evaluation indicators of students’ SRL calculated by the platform. In fact, most students also approve of their evaluations, which shows that the platform is effective. However, one thing to mention is that the indicator items and indicator weights we set were decided by everyone together. Is this really scientific? In future, we can study how to set up the evaluation model to adjust these weights;

- Although this platform makes up for the lack of functions of online teaching platforms in professional courses, we should design more in-depth homework to enrich the assessment of students’ ability to use SRL, for example their capacity for sustainable improvement and their performance under adverse conditions. Sustainable improvement means that students are constantly thinking about how a solution to a problem can be continuously improved until it is optimal. Adverse conditions reveal students’ emotional capacity, behaviors, and attitudes toward completing tasks in certain extreme situations, such as excessive difficulty, limited resources, and unfamiliarity with the team;

- The platform’s differentiated questioning function is excellent, and the plagiarism rate of students in the class was significantly reduced. They often ask their teacher a lot of online questions after class, and we clearly see a significant change in students’ learning attitude. However, it is suggested that the platform should allow students to practice over and over again while giving them assistance through artificial intelligence. This promotes their reflective learning and rapid improvement. We are about to start the Engineering Education Certification of Computer Science and Technology. Therefore, we can develop a standardized platform system so that students can gradually develop self-discipline. Additionally, we can also open a discussion and communication module to engage students and improve their ability to think independently and critically.

From the above, it is obvious that the teachers on the course team have positive opinions regarding the effectiveness of the in-use platform and the assessment of students’ SRL. They are looking forward to using it and have proposed many constructive and valuable suggestions on how to measure students’ SRL using other scales.

6. Conclusions

In order to promote sustainable self-regulated learning (SRL) for higher education students in an online education environment, this study strengthens the barrier function of course homework. We designed and developed an online intelligent homework platform to urge students to listen carefully to lectures online and use teaching resources to study on their own. This platform guarantees the effectiveness of online teaching based on external and internal drivers, and significantly improves the utilization of online teaching resources. It brings about a significant improvement in students’ initiative for online learning.

As the teachers noted at the discussion meeting, most of the homework currently available on the platform consists of multiple-choice, fill-in-the-blank and programming questions. For future work, we are planning to research the automatic correction of subjective questions, which can more comprehensively examine students’ understanding of certain complex engineering problems. In addition, we are also studying how to use the homework platform to stimulate students’ internal motivation for longer-term lifelong learning and better future development.

Author Contributions

Conceptualization, Y.L., Y.G., W.W. and Y.S.; methodology, Y.L., Y.S., W.W., Y.G. and Y.S.; software, Y.L., W.W., X.Z. and D.Y.; validation, Y.L., Y.S. and T.Q.; investigation, Y.G., X.P. and D.Y.; data curation, Y.L. and X.P.; writing—original draft preparation, Y.L.; writing—review and editing, Y.L. and Y.G.; project administration, Y.L. and Y.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Teaching Reform Research Project of Hunan Province (No. HNJG-2021-0919), the Hunan Degree and Postgraduate Teaching Reform Research Project (No. 2021JGYB212), the Huaihua University Teaching Reform Project (No. HHXY-2022-068 and No. HHXY-2022-2-06), the Project of Hunan Provincial Social Science Foundation (No. 21JD046), the Scientific Research Project of Hunan Provincial Department of Education (No. 19B447), General program of Humanities and social sciences of the Ministry of Education of China (No. 19YJC880064) and the Project of Hunan Provincial Social Science Review Committee (No. XSP21YBC429).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are included within the article.

Acknowledgments

We thank the anonymous reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Harry, B.E.; Dimitrios, V.; Akosua, T.; Esi, E.J.; Alice, K.E. Technology-Induced Stress, Sociodemographic Factors, and Association with Academic Achievement and Productivity in Ghanaian Higher Education during the COVID-19 Pandemic. Information 2021, 12, 497.

- Wang, Y.; Wang, J.; Liu, B.; Liu, Z.; Xu, F. Suspending Classes Without Stopping Learning: Research on Maker Education Practice Teaching Based on Rain Classroom During Epidemic Period--Taking Course Experiment of “Computer Communication and Network” as Example. Exp. Technol. Manag. 2021, 3, 182–187.

- Alsaati, T.; El-Nakla, S.; El-Nakla, D. Level of sustainability awareness among university students in the Eastern province of Saudi Arabia. Sustainability 2020, 12, 3159.

- García-González, E.; Jiménez-Fontana, R.; Azcárate, P. Education for Sustainability and the Sustainable Development Goals: Pre-Service Teachers’ Perceptions and Knowledge. Sustainability 2020, 12, 7741.

- UNESCO. A Practical Guide to Recognition: Implementing the Global Convention on the Recognition of Qualifications Concerning Higher Education. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000374905 (accessed on 19 October 2022).

- Wu, M. Sustainable Inquiry, Visualization of Thinking: Re-conceptualization of “Learning Practice” in the Overall Teaching of the Unit. Exam. Wkly. 2022, 32, 76–79.

- Asim, H.M.; Vaz, A.; Ahmed, A.; Sadiq, S. A review on outcome based education and factors that impact student learning outcomes in tertiary education system. Can. Cent. Sci. Educ. 2021, 14, 1–11.

- Dimitrios, V.; Vlad, G.; Marianna, K.; Agapi, S. Professional Development Opportunities for School Teachers within COVID-19 Pandemic: e-Learning & NLP and Mindfulness. In Proceedings of the INTED2022, Valencia, Spain, 8–10 March 2021; pp. 8791–8799.

- Wati, E.B.; Fauzan, A. The influence of cooperative learning model group investigation (gi) type and learning motivation approach with the understanding concepts and solving mathematical problem on grade 7 in upt smp negeri airpura. Int. J. Educ. Dyn. 2020, 2, 44–54.

- Bayrak, F.; Tibi, M.H.; Altun, A. Development of online course satisfaction scale. Turk. Online J. Distance Educ. 2020, 21, 110–123.

- Bowen, R.S.; Picard, D.R.; Verberne-Sutton, S.; Brame, C.J. Incorporating Student Design in an HPLC Lab Activity Promotes Student Metacognition and Argumentation. J. Chem. Educ. 2018, 95, 108–115.

- Foo, J.Y.; Abdullah, M.F.N.L.; Adenan, N.H.; Hoong, J.Y. Study the need for development of training module based on High Level Thinking Skills for the topic Algebra Expression Form One. UPSI J. Educ. 2021, 14, 33–40.

- Dimitrios, V. Organizational Change Management in Higher Education through the Lens of Executive Coaches. Educ. Sci. 2021, 11, 269.

- Zimmerman, B.J.; Martinez-Pons, M. Development of a structured interview for assessing students’ use of self-regulated learning strategies. Am. Educ. Res. J. 1986, 23, 614–628.

- Pintrich, P.R.; Smith, D.; Garcia, T.; McKeachie, W.J. Reliability and predictive validity of the Motivated Strategies for Learning Questionnaire (MSLQ). Educ. Psychol. Meas. 1993, 53, 801–813.

- Kosnin, A.M. Self-regulated learning and academic achievement in Malaysian undergraduates. Int. Educ. J. 2007, 8, 221–228.

- Sardareh, S.A.; Saad, M.; Boroomand, R. Self-Regulated Learning Strategies (SRLS) and academic achievement in preuniversity EFL learners. Calif. Linguist. Notes 2012, 37, 1–35.

- Chen, X.J.; Wang, C.; Kim, D.H. Self-Regulated Learning Strategy Profiles Among English as a Foreign Language Learners. TESOL Q. 2019, 54, 234–251.

- Ceron, J.; Baldiris, S.; Quintero, J.; Garcia, R.R.; Saldarriaga GL, V.; Graf, S.; Valentin, L.D.L.F. Self-Regulated Learning in Massive Online Open Courses: A State-of-the-Art Review. IEEE Access 2020, 9, 511–528.

- Bai, B.; Wang, J. Hong Kong secondary students’ self-regulated learning strategy use and English writing: Influences of motivational beliefs. System 2021, 96, 102404.

- Bloom, B.S.; Englehart, M.D.; Furst, E.J.; Hill, W.H.; Krathwohl, D.R. Taxonomy of educational objectives: The classification of educational goals. Handb. Cogn. Domain 1956, 1, 201–207.

- Saks, K.; Leijen, Ä. Distinguishing self-directed and self-regulated learning and measuring them in the e-learning context. Procedia Soc. Behav. Sci. 2014, 112, 190–198.

- Tan, X. Assignment Design in American Universities: Taking Assignment Library as An Example. Stud. Foreign Educ. 2021, 48, 70–83.

- Tan, X. The Value Implication and Main Characteristics of American University Assignments: Taking the Assignment Database in the National Institute for Learning Outcomes Assessment (NILOA) of United States as an Example. Meitan High. Education 2019, 1, 5–12.

- Avsec, S.; Ferk Savec, V. Pre-Service Teachers’ Perceptions of, and Experiences with, Technology-Enhanced Transformative Learning towards Education for Sustainable Development. Sustainability 2021, 13, 10443.

- Ren, Z.; Zheng, H.; Zhang, J.; Wang, W.; Feng, T.; Wang, H.; Zhang, Y. A Review of Fuzzing Techniques. J. Comput. Res. Dev. 2021, 58, 944–963.

- Liu, Y.; Yao, D.; Yang, Y.; Peng, X.; Zhang, X. Research and practice on strategies to enhance the effectiveness of blended teaching in basic computer theoretical courses. J. Huaihua Univ. 2021, 40, 111–118.

- Wei, C.F.; Zeng, Y.N. Design and implementation of a mathematical logic examination and automatic marking system. Mod. Inf. Technol. 2021, 10, 141–143.

- Yang, Q.M.; Jiang, Z.S. Design and implementation of automatic correction for college mathematics assignments. J. East China Norm. Univ. 2022, 2, 76–83.

- Li, J.; Wang, Q. Calculation assignments ‘personalised’: The example of the ‘Fundamentals of Surveying’ course. Eng. Surv. Mapping 2021, 30, 75–80.

- Huang, H. Strategies for real-time control of teaching quality in data analysis courses in the era of big data: A coursework programme based on resampling techniques. J. Lanzhou Inst. Technol. 2022, 29, 128–134.

- Nulty, D.D. The adequacy of response rates to online and paper surveys: What can be done? Assess. Eval. High. Educ. 2008, 33, 301–314.

- Tomczak, A.; Tomczak, E. The need to report effect size estimates revisited. An overview of some recommended measures of effect size. Trends Sport Sci. 2014, 1, 19–25.

- Ahmad Pour, Z.; Khaasteh, R. Writing Behaviors and Critical Thinking Styles: The Case of Blended Learning. Khazar J. Humanit. Soc. Sci. 2017, 20, 5–24.

- Imran, M. Analysis of learning and teaching strategies in Surgery Module: A mixed methods study. JPMA J. Pak. Med. Assoc. 2019, 69, 1287–1292.

- Liu, X.; Li, Y. Opportunities and challenges for the development of online education in colleges and universities. Coast. Enterp. Sci. Technol. 2021, 5, 75–80.

- Streukens, S.; Leroi-Werelds, S. Bootstrapping and PLS-SEM: A step-by-step guide to get more out of your bootstrap results. Eur. Manag. J. 2016, 34, 618–632.

- Ericson, J.D. Mapping the Relationship between Critical Thinking and Design Thinking. J. Knowl. Econ. 2022, 13, 406–429.

- Giacumo, L.A.; Savenye, W. Asynchronous discussion forum design to support cognition: Effects of rubrics and instructor prompts on learner’s critical thinking, achievement, and satisfaction. Educ. Technol. Res. Dev. 2020, 68, 37–66.

- Wang, G.; Li, J.; Mi, Y. The Establishment and Application of the Norm of Mathematics Metacognition Level of Junior Middle School Students: A Case Study of Tianjin. J. Tianjin Norm. Univ. (Elem. Educ. Ed.) 2020, 21, 12–17.

- Edx. Building and Running an edX Course. Available online: https://edx.readthedocs.io/projects/edx-partner-course-staff/en/latest/course_components/create_problem.html#problem-randomization (accessed on 1 December 2022).

- Howard, D.M.; Adams, M.J.; Clarke, T.K.; Hafferty, J.D.; Gibson, J.; Shirali, M.; Coleman, J.R.I.; Hagenaars, S.P.; Ward, J.; Wigmore, E.M.; et al. Genome-wide meta-analysis of depression identifies 102 independent variants and highlights the importance of the prefrontal brain regions. Nat. Neurosci. 2019, 22, 343–352.

- Chambers, R. Participatory Workshops: A Sourcebook of 21 Sets of Ideas and Activities; Earthscan: London, UK; Sterling, VA, USA, 2002.

- Desoete, A.; Baten, E.; Vercaemst, V.; De Busschere, A.; Baudonck, M.; Vanhaeke, J. Metacognition and motivation as predictors for mathematics performance of Belgian elementary school children. ZDM Math. Educ. 2019, 51, 667–677.

- Veenman, M.V. The role of intellectual and metacognitive skills in math problem solving. In Metacognition in Mathematics Education; Desoete, A., Veenman, M., Eds.; Haupauge Digital: Haupauge, NY, USA, 2006; pp. 35–50.

- Sammalisto, K.; Sundström, A.; von Haartman, R.; Holm, T.; Yao, Z. Learning about Sustainability: What Influences Students’ Self-Perceived Sustainability Actions after Undergraduate Education? Sustainability 2016, 8, 510.

- Yan, D.; Qi, B.; Zhang, Y.; Shao, Z. M-BiRank: Co-ranking developers and projects using multiple developer-project interactions in open source software community. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 215.

- Mckay, V. Literacy, lifelong learning and sustainable development. Aust. J. Adult Learn. 2018, 58, 390–425.

- Nichols, K.; Burgh, G.; Kennedy, C. Comparing two inquiry professional development interventions in science on primary students’ questioning and other inquiry behaviours. Res. Sci. Educ. 2017, 47, 1–24.

- Cui, B.; Li, J.; Wang, G. Design and development of a questionnaire on the metacognitive level of mathematics for junior high school students. J. Math. Educ. 2018, 27, 45–51.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).