Eye Movement Analysis and Usability Assessment on Affective Computing Combined with Intelligent Tutoring System

Abstract

1. Introduction

1.1. Research Background and Motivation

1.2. Research Purpose

- How satisfied are the users with the affective tutoring system?

- Does using the affective tutoring system effectively increase the user’s course learning duration?

2. Literature Review

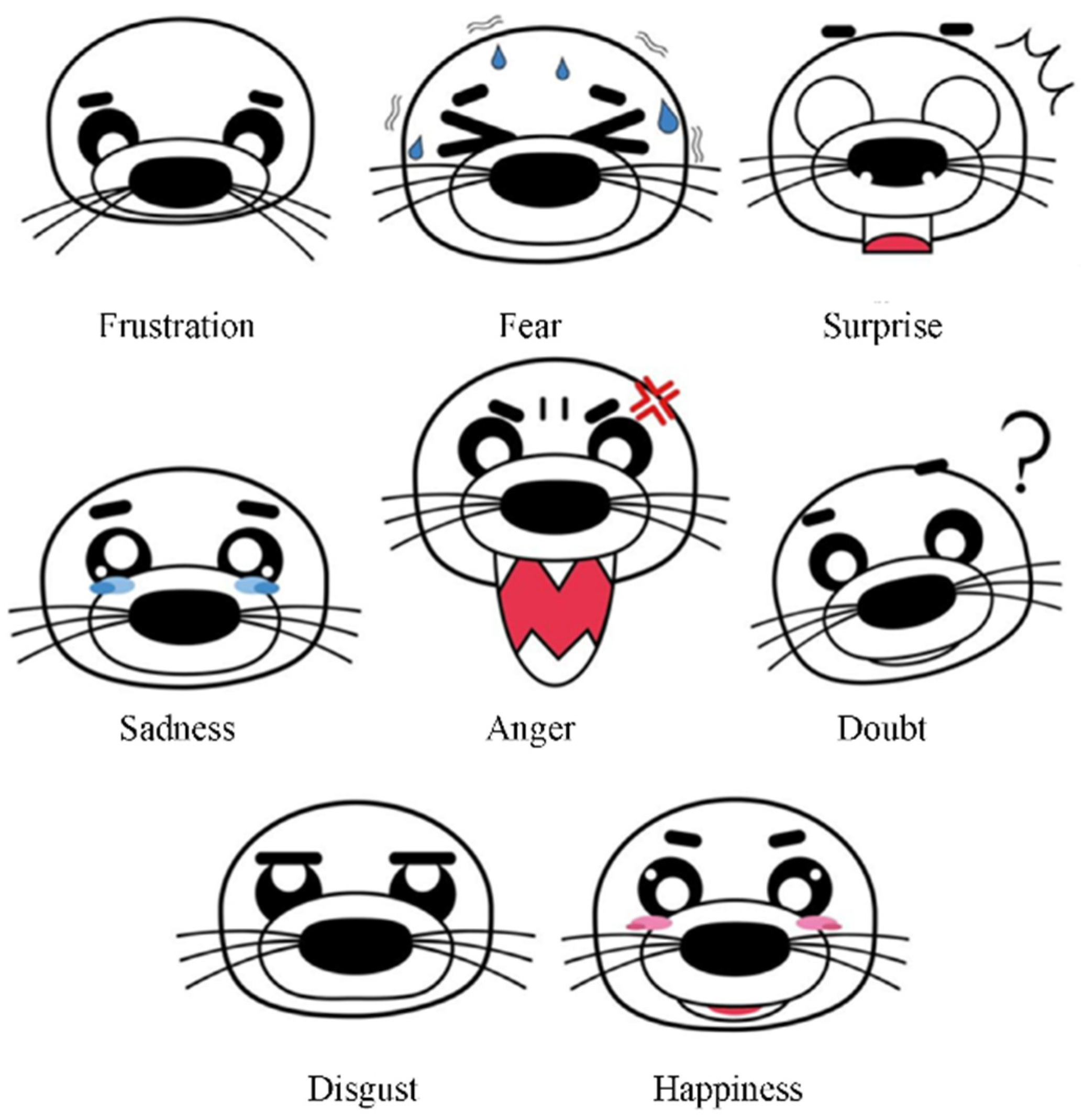

2.1. Affective Computing

2.2. Affective Tutoring System

2.3. Intelligent Virtual Agents

2.4. Emotion and Learning

2.5. Eye Movement Analysis

3. Methods

3.1. Interface Design

- A. Intelligent Virtual Agent: The intelligent virtual agent gives the user feedback based on Chinese semantic emotion recognition. When the user types sentences into the text input box, the system identifies the emotional keywords they contain and responds promptly to the detected emotion. The agent interacts with the user by expressing different emotions, and the system then poses new emotion-related questions to sustain real-time interaction and communication between the user and the system.

- B. Course Module: The experimental course material covers interactive technology, describing its main developments and those of related technologies in recent years (such as wearable devices, interactive technology, somatosensory technology, and five-sense experiences). Relevant online videos accompany the material to help the user understand the current course.

- C. Course Menu: The menu lists each chapter of the course, letting the user control the reading time for each chapter before selecting the next one.

3.2. Course Model

3.3. Agent Model

3.4. Analysis of Eye Movement Statistics

- Total Time in Zone (ms). Formula definition: sum of the fixation durations in fix.txt that fall within the ROI. Meaning: total fixation time in the zone.

- Total Fixation Duration (ms). Formula definition: sum of all fixation durations in fix.txt. Meaning: total fixation duration of the subject during the experiment.

- Average Fixation Duration (ms). Formula definition: mean of the fixation durations in fix.txt. Meaning: average fixation duration of the subject during the experiment.

- Fixation Counts. Formula definition: number of fixations in fix.txt. Meaning: total fixation count of the subject during the experiment.

- Percent Time Fixated Related to Total Fixation Duration (%). Formula definition: total fixation duration in the ROI divided by the total fixation duration in fix.txt. Meaning: share of the total fixation duration spent in the zone.
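
The five statistics above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis code: the layout of fix.txt is not given in the source, so the `Fixation` record, the rectangular `Roi`, and the function name `fixation_metrics` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fixation:
    """One fixation record (hypothetical layout; fix.txt's real format is unknown)."""
    x: float
    y: float
    duration_ms: float


@dataclass(frozen=True)
class Roi:
    """Axis-aligned rectangular region of interest (an assumption for this sketch)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, fix: Fixation) -> bool:
        return self.left <= fix.x <= self.right and self.top <= fix.y <= self.bottom


def fixation_metrics(fixations, roi):
    """Compute the five statistics listed above for one subject and one ROI."""
    total = sum(f.duration_ms for f in fixations)
    count = len(fixations)
    in_roi = sum(f.duration_ms for f in fixations if roi.contains(f))
    return {
        "total_time_in_zone_ms": in_roi,
        "total_fixation_duration_ms": total,
        "average_fixation_duration_ms": total / count if count else 0.0,
        "fixation_count": count,
        "percent_time_in_zone": 100.0 * in_roi / total if total else 0.0,
    }


# Made-up sample data: two fixations inside the ROI, one outside.
sample = [
    Fixation(100, 100, 200.0),
    Fixation(500, 300, 300.0),
    Fixation(120, 90, 500.0),
]
course_roi = Roi(left=0, top=0, right=200, bottom=200)
metrics = fixation_metrics(sample, course_roi)
```

For the three sample fixations, 700 ms of the 1000 ms total falls inside the zone, so the percentage is 70%.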

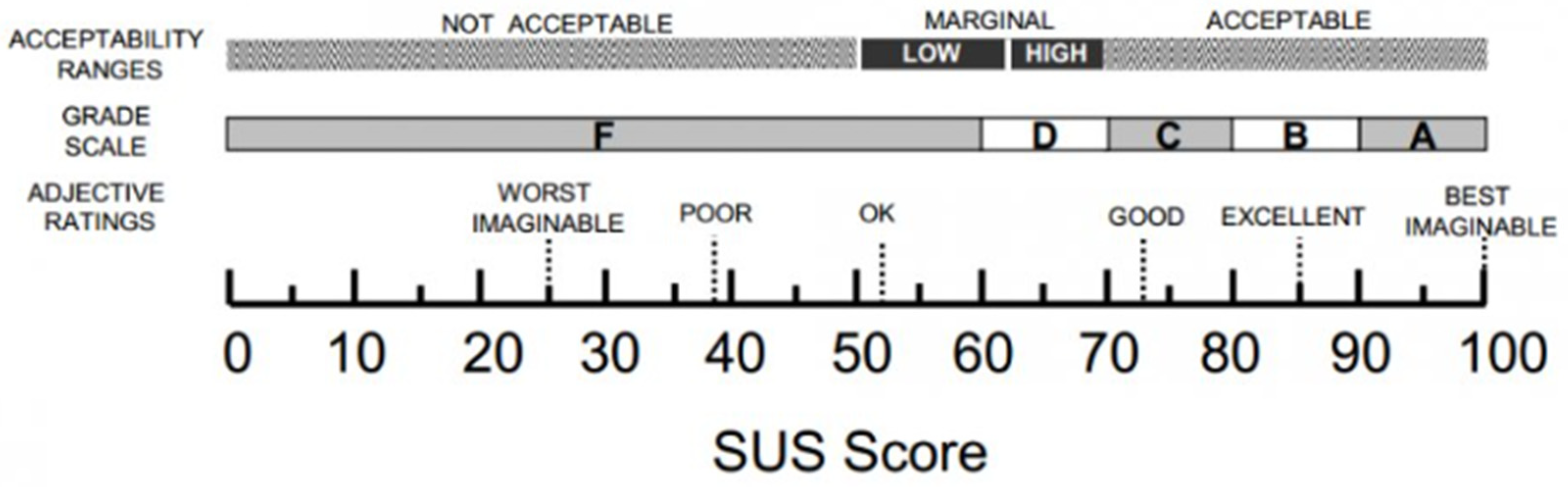

3.5. System Usability Scale

4. Data Analysis and Results

4.1. System Usability Analysis

4.1.1. System Usability Scale—Reliability Analysis

4.1.2. System Usability Scale—Descriptive Statistics

4.1.3. Users’ Satisfaction Analysis

4.2. Eye Movement Analysis

4.2.1. Eye Movement Fixation Analysis

4.2.2. Learning Duration Analysis of Eye Movement Course

4.2.3. Eye Movement ROI Block Analysis

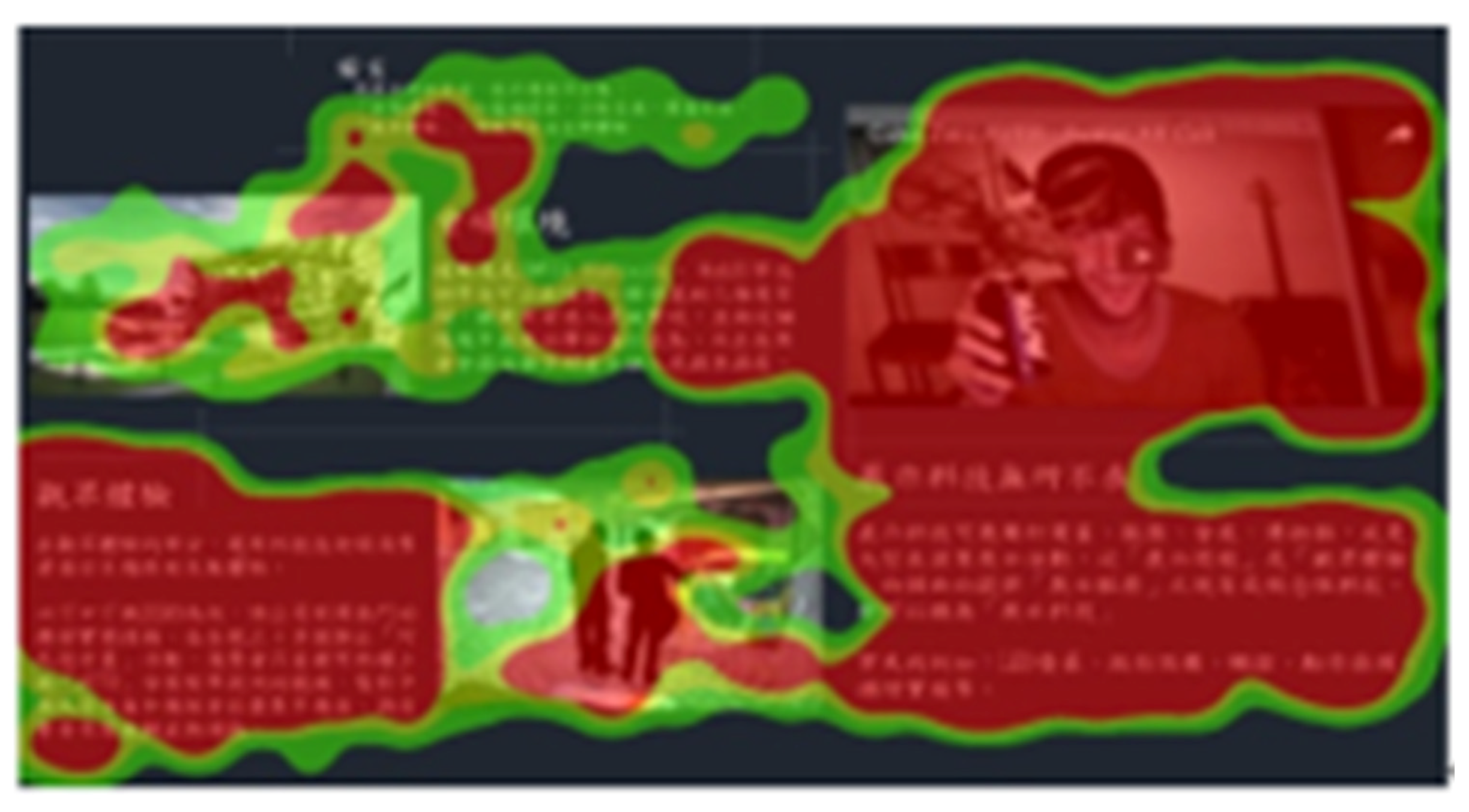

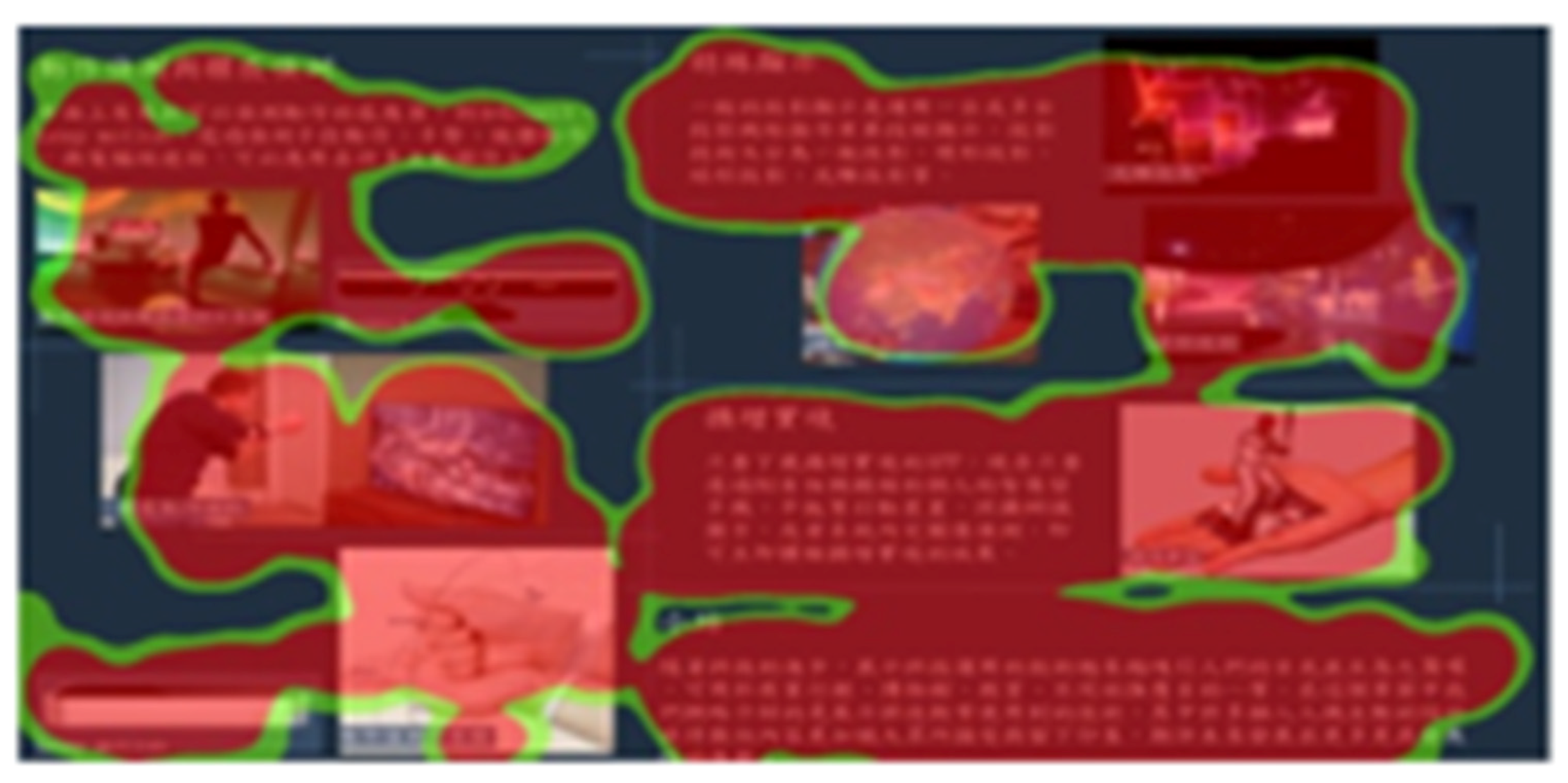

4.2.4. Analysis of Eye Movement Hot Zone

5. Discussion and Conclusions

6. Future Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Q1 | 0% | 0% | 6.7% | 53.3% | 40.0% |
| Q2 | 0% | 13.3% | 40% | 33.4% | 13.3% |
| Q3 | 0% | 0% | 26.7% | 40% | 33.3% |
| Q4 | 0% | 13.3% | 46.7% | 26.7% | 13.3% |
| Q5 | 0% | 0% | 40% | 40% | 20% |
| Q6 | 0% | 0% | 26.7% | 46.7% | 26.6% |
| Q7 | 0% | 0% | 6.7% | 46.7% | 46.6% |
| Q8 | 0% | 0% | 6.7% | 46.7% | 46.6% |
| Q9 | 0% | 0% | 20% | 26.7% | 53.3% |
| Q10 | 0% | 13.3% | 26.7% | 20% | 40% |

| Item | N | Minimum | Maximum | Sum | Mean | Standard Deviation | Variance |
|---|---|---|---|---|---|---|---|
| Q1 | 15 | 3 | 5 | 65 | 4.33 | 0.617 | 0.381 |
| Q2 | 15 | 2 | 5 | 52 | 3.47 | 0.915 | 0.838 |
| Q3 | 15 | 3 | 5 | 61 | 4.07 | 0.799 | 0.638 |
| Q4 | 15 | 2 | 5 | 51 | 3.40 | 0.910 | 0.829 |
| Q5 | 15 | 3 | 5 | 57 | 3.80 | 0.775 | 0.600 |
| Q6 | 15 | 3 | 5 | 60 | 4.00 | 0.756 | 0.571 |
| Q7 | 15 | 3 | 5 | 66 | 4.40 | 0.632 | 0.400 |
| Q8 | 15 | 3 | 5 | 66 | 4.40 | 0.632 | 0.400 |
| Q9 | 15 | 3 | 5 | 65 | 4.33 | 0.816 | 0.667 |
| Q10 | 15 | 2 | 5 | 58 | 3.87 | 1.125 | 1.267 |
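
As a check on how the columns of this table relate to the response distribution, the Q1 row can be recomputed from its answer counts. The sketch below assumes that the Q1 percentages (6.7%, 53.3%, and 40.0% of 15 respondents) correspond to 1, 8, and 6 people, and that the reported standard deviation and variance use the sample (n − 1) form; the helper name `expand` is illustrative.

```python
import statistics


def expand(counts):
    """Turn {score: number_of_respondents} into a flat list of responses."""
    return [score for score, n in counts.items() for _ in range(n)]


# Q1 answer counts on the 1-5 scale, inferred from the percentages (assumption).
q1_counts = {1: 0, 2: 0, 3: 1, 4: 8, 5: 6}
responses = expand(q1_counts)

n = len(responses)                          # sample size
total = sum(responses)                      # "Sum" column
mean = statistics.mean(responses)           # "Mean" column
variance = statistics.variance(responses)   # sample variance (n - 1 denominator)
sd = statistics.stdev(responses)            # sample standard deviation

print(n, total, round(mean, 2), round(sd, 3), round(variance, 3))
```

Under these assumptions the script reproduces the Q1 row: N = 15, sum 65, mean 4.33, standard deviation 0.617, and variance 0.381.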

| | Sample Size | Mean | Median | Maximum | Minimum | Standard Deviation |
|---|---|---|---|---|---|---|
| Overall | 15 | 81.5 | 82.5 | 95 | 67.5 | 7.245688 |
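
For reference, the standard SUS scoring procedure converts ten responses on a 1 to 5 scale into a score from 0 to 100. The source does not show how its questionnaire items were worded or coded, so the sketch below assumes the standard alternating scheme: odd-numbered items contribute (response − 1), even-numbered items contribute (5 − response), and the sum is multiplied by 2.5. The function name `sus_score` is illustrative.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent's ten 1-5 answers.

    Assumes the standard questionnaire layout, in which odd-numbered items
    are positively worded and even-numbered items are negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # positively worded item
        else:
            total += 5 - response   # negatively worded item
    return total * 2.5


best_case = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
neutral = sus_score([3] * 10)
typical = sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2])
```

Scores produced this way are always multiples of 2.5, as are the minimum, median, and maximum reported in the table above.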

| Measure | Control Group | Experimental Group |
|---|---|---|
| Total Average Fixation Duration (ms) | 80,889.27 | 120,477.2 |
| Course Contents Total Average Fixation Duration (ms) | 80,687.73 | 86,751.6 |
| Course Contents Total Average Fixation Counts | 796.133 | 803.533 |
| Course Contents Single Average Fixation Duration (ms) | 101.349 | 107.962 |

| Group | Mean | N | Standard Deviation | Standard Error of the Mean |
|---|---|---|---|---|
| Control Group | 851,582.27 | 15 | 109,821.034 | 28,355.669 |
| Experimental Group | 1,084,836.47 | 15 | 171,437.643 | 44,265.009 |

| Levene’s Test: F | Levene’s Test: Significance | t | df | Significance (Two-Tailed) |
|---|---|---|---|---|
| 0.881 | 0.356 | −4.437 | 23.834 | < 0.001 |
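
The t and df values in the test table above are consistent with Welch's unequal-variance t-test applied to the preceding group statistics. The following is a minimal sketch that works from summary statistics using only the standard library; the function name `welch_t` is illustrative, and the p-value is left out because it would need a t-distribution CDF.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1 ** 2 / n1   # squared standard error of group 1
    v2 = sd2 ** 2 / n2   # squared standard error of group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Group statistics taken from the table above (control first, then experimental).
t, df = welch_t(851582.27, 109821.034, 15, 1084836.47, 171437.643, 15)
print(round(t, 3), round(df, 3))
```

With these inputs the result is approximately t = −4.437 with about 23.83 degrees of freedom, in line with the reported values.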

| Group | Mean | N | Standard Deviation | Standard Error of the Mean |
|---|---|---|---|---|
| Control Group | 80,687.73 | 15 | 12,762.089 | 3295.157 |
| Experimental Group | 86,751.60 | 15 | 17,452.989 | 4506.342 |

| Measure | Levene’s Test: F | Levene’s Test: Significance | t | df | Significance (Two-Tailed) |
|---|---|---|---|---|---|
| Fixation at the course contents | 1.427 | 0.242 | −1.086 | 25.643 | 0.287 |

| Group | Mean | N | Standard Deviation | Standard Error of the Mean |
|---|---|---|---|---|
| Control Group | 80,889.27 | 15 | 12,842.757 | 3315.986 |
| Experimental Group | 120,477.20 | 15 | 24,068.766 | 6214.529 |

| Measure | Levene’s Test: F | Levene’s Test: Significance | t | df | Significance (Two-Tailed) |
|---|---|---|---|---|---|
| Fixation at the course contents | 10.209 | 0.003 | −5.620 | 28 | < 0.001 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, H.-C.K.; Liao, Y.-C.; Wang, H.-T. Eye Movement Analysis and Usability Assessment on Affective Computing Combined with Intelligent Tutoring System. Sustainability 2022, 14, 16680. https://doi.org/10.3390/su142416680