Abstract

Fire is one of the most serious disasters in wild environments such as mountains and jungles. It not only causes huge property damage but may also destroy natural ecosystems and trigger a series of other environmental problems. Considering the superiority and rapid development of computer vision, we present a novel intelligent wildfire detection method based on video cameras to prevent wildfire hazards from getting out of control. The model is an improved version of the YOLOV5S architecture. First, we make it lightweight by incorporating the MobileNetV3 structure. Then, we improve its detection accuracy by further modifying its backbone, neck, and head layers. Experiments on a dataset containing a large number of wild flame and wild smoke images demonstrate that the new model is suitable for wildfire detection, with excellent detection accuracy, while meeting the requirements of real-time detection. Its deployment in the wild will help detect fire at a very early stage, effectively prevent the spread of wildfires, and therefore significantly contribute to loss prevention.

1. Introduction

Fire is one of the most serious disasters in wild environments such as mountains and jungles, and it may lead to major economic losses, severe air pollution, human and animal deaths, and environmental pollution [1]. Table 1 shows the large wildfires that have occurred around the world since 2018. A report released by the United Nations predicts that global wildfires will become more frequent and larger in the future and that areas previously free of wildfires may also experience outbreaks. Hence, detecting wild flames efficiently in the early stages of a wildfire can stop the fire from spreading in a timely manner and minimize the damage it causes. Sensor-based wildfire detection systems have been widely researched for wild environments. However, these traditional physical sensors have a number of limitations. For example, they need to be as close to the source of the fire as possible; otherwise, it is difficult for them to sense the change in ambient temperature when a fire occurs [2,3]. In addition, they require many sensors, network communication modules, and other devices to form a complex distributed system, which makes them expensive [4,5].

Table 1.

The large wildfires that have occurred around the world since 2018.

Moreover, they spend a lot of time processing information and raising fire alarms after detecting a fire [6]. Such sensor-based detection systems work well in indoor environments, but these limitations make them poorly suited to the wild. Therefore, it is important to develop a new wildfire detection method that automatically detects fire hazards in the wild environment.

With the rapid development of computer vision and digital camera technology, intelligent video fire detection methods have been proposed and applied in some field scenes. Two kinds of methods are commonly used for video fire detection. The first kind performs fire detection with a model that extracts hand-designed features related to flames and smoke, such as color and shape [7,8,9,10]. These conventional video fire detection methods convert the flame features extracted from the images into feature vectors and classify the vectors into "fire" or "non-fire" classes. However, since the discriminative feature extractors are hand-designed, these methods require a great deal of expertise to design a model. In addition, in actual field scenes, the characteristics of flame and smoke are greatly affected by the environment, which significantly reduces the efficiency of the model in extracting features. To sum up, these methods suffer from insufficient generalization performance and struggle to meet the needs of different scenarios.

Since AlexNet [11] won the prestigious ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) in 2012, deep learning has once again shown its potential and exploded into a revolution in artificial intelligence. In particular, the convolutional neural network (CNN) has been extensively studied and applied in computer vision and pattern recognition. Compared to detection models that require manually designed feature extractors, a CNN is able to learn the features of objects more comprehensively. To take full advantage of this, several CNN-based video wildfire detection methods with superior detection performance have been proposed in the past few years. Zhang et al. [12] proposed a cascaded forest fire detection method based on a CNN. First, frames from the camera were tested by a global image-level classifier and, if a fire was detected, a fine-grained patch classifier was applied to find the exact location of the fire patches. Kim et al. [13] exploited the spatial features of fire with Faster R-CNN to detect suspected fire and non-fire regions; an LSTM then accumulated the features within the bounding boxes over consecutive frames and judged whether there was a fire. Ba et al. [14] proposed a new convolutional neural network model called SmokeNet for wildfire detection, which combines spatial and channel attention in a CNN to enhance feature representation and achieved high accuracy and Kappa coefficient. Xu et al. [15] proposed an end-to-end framework based on deep saliency for video smoke detection. The framework combines deep feature maps with saliency feature maps to predict whether an image contains smoke. Qualitative and quantitative analyses at the frame level and pixel level demonstrate the superior performance of the architecture. Wu et al. [16] improved the ability of YOLOV5S to extract small-scale flames by introducing a dilated convolution module into the SPP module. In addition, the GELU activation function and DIoU-NMS prediction box suppression were adopted to further improve the performance of the improved network.

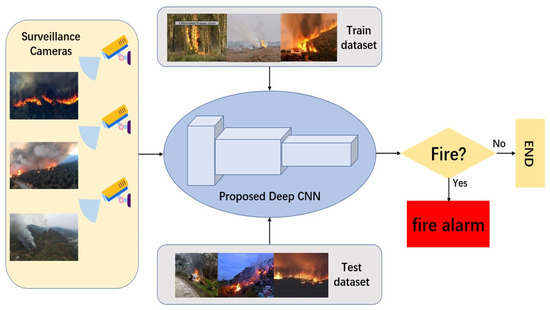

Although these methods have performed well in wildfire detection, they have some limitations in practical applications. First, their large network size makes them difficult to deploy in a camera and makes it hard to balance speed, accuracy, and model size. In addition, methods that use a CNN only as a classifier can merely tell whether fire exists in an image; it is almost impossible for them to determine the exact location of the fire. Furthermore, they are unable to detect flames and smoke that appear small in the camera image and may even falsely detect objects that resemble flames and smoke. To address these issues, motivated by the recent improvements in embedded processing power and the potential of deep features, we present in this paper a novel intelligent wildfire detection approach that aims to achieve a small model size, low computational complexity, high detection accuracy, and a real-time detection rate. An overview of our framework for wildfire detection in surveillance networks is provided in Figure 1. The main contributions and novelty of this paper can be summarized as follows:

Figure 1.

An overview of our framework for wildfire detection in surveillance networks.

- First, we replaced CSPDarkNet-53 with MobileNetV3 in the backbone layer of YOLOV5S to achieve the initial lightweight design of the network. Moreover, we adopted an efficient convolution module in the neck layer of YOLOV5S to further reduce the size and computational complexity of the network.

- To compensate for the model’s lack of detection of small flames and smoke, we designed a multi-scale feature extraction network to increase the receptive field of the model and fully learn the features of small wild flames and smoke in the image.

- To detect wild flames and smoke in the video camera more comprehensively and reduce the missed detection rate of the network, we designed a four-detection-head structure whose anchor boxes are generated by the K-means algorithm.

- To avoid the false alarm problem, a coordinate attention mechanism was inserted in the network, because it makes the model pay more attention to the regions where a target may exist.

- To verify the effectiveness of our designed network, we collected and annotated a large number of wildfire images, creating a high-quality dataset with a total of 14,400 images. The dataset contains the wild flame images, wild smoke images, fire-like images, smoke-like images, and the background images of the wild environment.

2. Methods

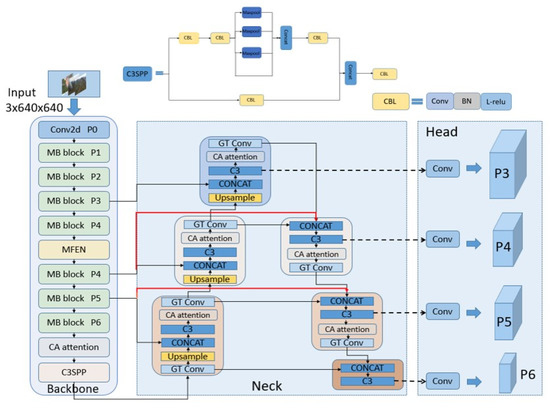

In this section, we present a new wildfire detection network for intelligent fire detection with video cameras. It is based on an improved YOLOV5S and is suitable for edge devices. The complete framework of the proposed model is illustrated in Figure 2. The proposed wildfire detection network has three components: backbone, neck, and head. The backbone network mainly performs feature extraction. The neck layer performs feature fusion on the features from the backbone network. The head layer contains four detection heads of different scales.

Figure 2.

Block diagram of the proposed network for wildfire detection. The new backbone network consists of MobileNetV3 blocks. The MFEN is used in the backbone to capture larger receptive field information and fuse more multi-scale contextual information. A bi-directional feature fusion module is proposed to improve the performance of feature aggregation. Four detection heads achieve multi-scale object detection.

2.1. YOLOV5S

Currently, object detection methods are generally divided into two categories: two-stage methods and one-stage methods. Two-stage methods, including R-CNN [17], Fast R-CNN [18], and Faster R-CNN [19], are based on region proposals and divide object detection into two steps. First, they generate many small boxes in the image as region proposals where objects may be present. Then, these boxes are fed into a CNN model to predict the class labels of the region proposals and their coordinates in the image. One-stage approaches, such as YOLO (you only look once) [20] and SSD [21], treat the detection task as a regression problem and directly output the class probabilities and coordinates of the objects. In general, two-stage methods have higher accuracy because they first generate many region proposals and feed them all into the CNN. However, this consumes a large amount of time and computational resources. Compared with two-stage methods, one-stage methods achieve faster detection speeds and a more concise structure at the cost of some accuracy. In the pursuit of faster detection speeds to meet real-time detection requirements, we selected a one-stage method for object detection.

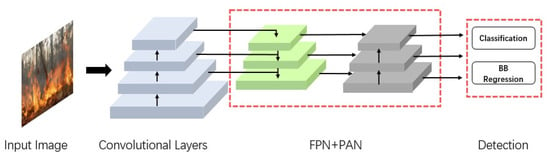

YOLOV5 [22] is one of the most advanced one-stage object detectors at present and evolved from the YOLOV3 and YOLOV4 networks. The internal structure of YOLOV5 is shown in Figure 3. The YOLOV5 network is divided into an input layer, a backbone layer, a neck layer, and a head layer. The input layer receives the training and detection images, whose size is 3 × 640 × 640. The backbone uses CSPDarkNet-53 as the feature extraction network to extract the features of the objects, and the neck layer uses an FPN + PAN structure for feature fusion. The FPN structure transmits semantic information from top to bottom and the PAN structure transmits location information from bottom to top. By combining the two, the network can improve classification accuracy and localization accuracy at the same time. The head layer uses three detection heads of different sizes to detect objects of different sizes in the image. The latest release of YOLOV5 comes in five versions, from small to large: YOLOV5n, YOLOV5S, YOLOV5m, YOLOV5l, and YOLOV5x. Compared to the other versions, YOLOV5S offers a good balance between detection speed and precision. YOLOV5S has 7.1 M parameters and a model size of 14.4 MB. Furthermore, the AP of YOLOV5S on the MS COCO dataset is 37.2%. YOLOV5S is therefore well suited to edge deployment for real-time detection, so we adopt it as the overall framework of the wildfire detector.

Figure 3.

Illustration of the internal architecture of YOLOV5.

2.2. Lightweight Networks

Methods for intelligent wildfire detection via video cameras often require the detection network to be deployed in resource-constrained environments. Despite its excellent accuracy and real-time inference capabilities, YOLOV5S still requires too many computational resources to be deployed easily on resource-constrained edge devices. Thus, in this section, we explore an approach to make YOLOV5S efficient and lightweight for easy deployment on edge devices. Our starting point is to choose an efficient lightweight network as the backbone.

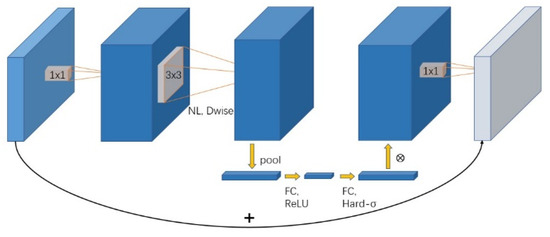

In recent years, various lightweight networks have been proposed to meet the needs of Internet of Things (IoT) deployments. These lightweight networks are very efficient and can be easily deployed in resource-constrained environments. In 2016, SqueezeNet [23] was proposed by Iandola et al., who built it around three main design choices: the use of smaller filters, downsampling layers, and fire modules. In 2017, an extremely computationally efficient neural network called ShuffleNet [24] was introduced by Zhang et al. It relies on group convolution to reduce computation, and a channel shuffle structure was designed to circumvent the resulting drawback of limited information flow between groups; however, this did not improve the inference speed of the network. In 2018, Ma et al. advocated using direct metrics such as speed or latency to measure computational complexity instead of indirect metrics such as FLOPs. Thus, ShuffleNetV2 was proposed, which outperformed other state-of-the-art models of similar complexity. MobileNetV3 [25] evolved from the MobileNetV2 [26] and MobileNetV1 [27] networks, using resource-limited platform-aware neural architecture search (platform-aware NAS) and the NetAdapt method. Howard et al. obtained the overall structure of the network with platform-aware NAS and then performed a local search with the NetAdapt algorithm to optimize the number of convolutional kernels per layer. In addition, MobileNetV3 uses a mixture of ReLU and h-swish as activation functions. For different resource use cases, they introduced two versions: MobileNetV3-large and MobileNetV3-small. MobileNetV3-small is composed of 11 bottleneck blocks, while MobileNetV3-large has 15. Each bottleneck block consists of an inverted residual module with a linear bottleneck combined with a squeeze-and-excitation module. The details of the block are shown in Figure 4. All in all, it has achieved very good performance on ImageNet: the top-1 accuracy is 75.2%, while the number of parameters is only 5.4 M. Therefore, in our approach, we designed a new backbone network using MobileNetV3 bottleneck blocks. The detailed structure of the new backbone network is provided in Table 2. Finally, ghost convolution generates part of the feature information with cheap linear transformations, which effectively reduces the computation and model size of the network. We therefore use it in the neck layer of the network instead of the normal convolution.
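To make the saving from ghost convolution concrete, the following sketch counts the weights of a standard convolution and of a ghost module with the same input and output channels. It is only an illustration under stated assumptions: the channel counts (128 in, 256 out), the ratio of 2 between output and intrinsic feature maps, and the 3 × 3 depthwise "cheap" kernel are hypothetical values, not settings taken from our network, and biases are ignored.

```python
def conv_params(c_in, c_out, k):
    """Number of weights in a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in, c_out, k, ratio=2, cheap_k=3):
    """Number of weights in a ghost module (bias ignored).

    A primary k x k convolution produces c_out / ratio intrinsic feature maps;
    the remaining maps are generated from them by cheap depthwise filters.
    """
    intrinsic = c_out // ratio
    primary = c_in * intrinsic * k * k
    # One cheap_k x cheap_k depthwise filter per generated ("ghost") feature map.
    cheap = intrinsic * (ratio - 1) * cheap_k * cheap_k
    return primary + cheap


# Hypothetical layer: 128 input channels, 256 output channels, 3 x 3 kernel.
standard = conv_params(128, 256, 3)
ghost = ghost_params(128, 256, 3)
print(standard, ghost, round(standard / ghost, 2))  # 294912 148608 1.98
```

With a ratio of 2, the ghost module needs roughly half the weights of the standard convolution, which is why it is attractive for the neck layer of a lightweight detector.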

Figure 4.

Illustration of the structure of MobileNetv3 block.

Table 2.

The detailed structure of the new backbone network. SE denotes whether there is a squeeze-and-excite in that block. NL denotes the type of nonlinearity used. Here, HS denotes h-swish and RE denotes ReLU. S denotes stride.

2.3. Multi-Scale Feature Extraction Network (MFEN)

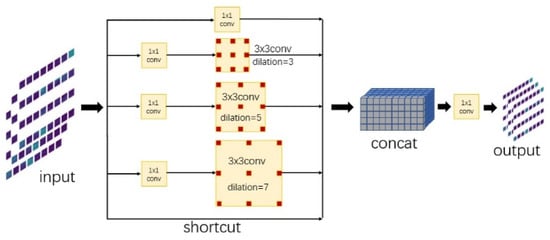

In the wild environment, surveillance cameras are often installed in locations with a wide field of view. Thus, the images from the camera cover large visual scenes that carry rich contextual information about multiple objects. The contextual information of the visual objects describes the correlation between the semantic properties of the visual objects and the visual scenes. Moreover, because the distance from the ignition point to the camera varies, the scale of the wild flame and smoke in the camera images varies greatly. Therefore, it is essential to design a module that enlarges the receptive field of the network, so that the network can handle objects of different sizes and capture the contextual information of all objects in the visual scene. Inspired by the superior performance of atrous/dilated convolution, we propose a multi-scale feature extraction network (MFEN), whose specific structure is shown in Figure 5.

Figure 5.

Illustration of the structure of multi-scale feature extraction network.

The multi-scale feature extraction network (MFEN) incorporates three parallel dilated/atrous convolutions with different dilation rates to capture larger receptive field information and fuse more multi-scale contextual information. Dilated/atrous convolution is an extremely powerful convolution operation that increases the receptive field of the network and captures the contextual information of multi-scale objects in complex visual scenes. However, because of its sparse sampling, local information in the feature map is lost and the information captured at a distance may be uncorrelated. To address this issue, we set the dilation rates of the three dilated convolutions to 3, 5, and 7, respectively. Additionally, 1 × 1 convolutions and shortcut operations are introduced into the network so that the information in the original feature map can be passed on to subsequent layers. Finally, to take full advantage of the multi-scale feature extraction network, we inserted it between the P4 layers of the backbone network.
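The effect of the three dilation rates on the receptive field can be checked with a few lines of arithmetic. The sketch below assumes 3 × 3 kernels, which is an assumption made for illustration since the kernel size of the MFEN branches is not specified here. It reports the effective kernel extent, the padding that preserves the spatial size at stride 1, and the fraction of positions inside that extent that the kernel actually samples, which quantifies the sparse sampling mentioned above.

```python
def effective_kernel(k, d):
    """Effective spatial extent of a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def same_padding(k, d):
    """Padding that keeps the feature map size unchanged at stride 1 (odd k)."""
    return (effective_kernel(k, d) - 1) // 2


def sampled_fraction(k, d):
    """Share of positions inside the effective extent that the kernel reads."""
    return (k * k) / effective_kernel(k, d) ** 2


# Assumed 3 x 3 kernels with the dilation rates used in the MFEN.
for d in (3, 5, 7):
    print(d, effective_kernel(3, d), same_padding(3, d),
          round(sampled_fraction(3, d), 3))
# 3 7 3 0.184
# 5 11 5 0.074
# 7 15 7 0.04
```

Under this assumption the three branches cover 7 × 7, 11 × 11, and 15 × 15 windows while each still uses only nine weights per channel pair, so the 1 × 1 convolutions and the shortcut are what carry the dense local information.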

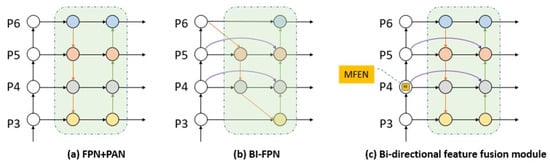

2.4. Bi-Directional Feature Fusion Module

In a convolutional neural network, the deeper the layer, the stronger the semantic information that can be extracted, but the lower the resolution of the feature map and the weaker its ability to represent position information. For shallower layers, the opposite is true. Strong semantic information helps to identify the object accurately, and strong location information helps to locate the object accurately. A feature fusion module can effectively aggregate the feature map information of all levels in the network, which helps the network to classify and locate the target more accurately. In recent years, feature fusion has been extensively investigated for the multi-scale object detection task [28,29]. The Feature Pyramid Network (FPN) [30] adopts a top-down path for multi-scale feature fusion. The Path Aggregation Network (PANet) [31] enhances the entire feature hierarchy by passing the precise localization information of the lower layers to the upper layers through a bottom-up path. Employing neural architecture search in a novel scalable search space covering all cross-scale connections, Ghiasi et al. [32] discovered a novel feature pyramid architecture, NAS-FPN, consisting of top-down and bottom-up path connections. The AugFPN proposed by Guo et al. [33] narrows the semantic gap between features at different scales before feature fusion through consistent supervision. During feature fusion, its residual feature enhancement module extracts scale-invariant global information to reduce information loss in the top-level pyramid feature maps. Recently, Tan et al. [34] introduced the Bidirectional Feature Pyramid Network (BiFPN) with several optimizations for cross-scale connectivity to improve the efficiency of the model and achieve state-of-the-art performance. Motivated by this, we introduced a bi-directional feature fusion module into the wildfire detection network to improve the performance of its feature aggregation. A comparison of the three feature aggregation networks is shown in Figure 6.

Figure 6.

Comparison of three feature aggregation networks.

In summary, the bi-directional feature fusion module has the following characteristics. First, to keep the network lightweight, the module is used only once in the neck layer rather than being repeated. Second, we argue that discarding input nodes reduces the efficiency of the network; therefore, in contrast to BiFPN, our module does not discard any input nodes. Third, two additional edges are added from the original input feature map to the output feature map at the same level, which combines more information without adding any learnable layers. Fourth, the module integrates top-down and bottom-up paths, which fully aggregates the multi-scale semantic and location information in the feature maps. Finally, although the module is used on top of the multi-scale feature extraction network (MFEN), the processing of the prediction boxes is not affected.

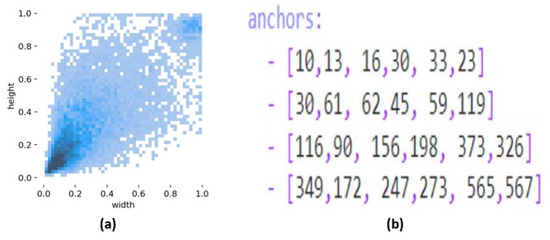

2.5. Four-Head Structure for Multi-Scale Detection

We investigated the wildfire image dataset that we produced and found that the flame and smoke instances in the images vary widely in size. This is because the video cameras were mounted on towers, so the distance between the fire point and the camera varies greatly. Therefore, we added one prediction head to the three original ones to form a four-head detection structure, which eases the negative influence of violent object scale variance. As can be seen from Figure 2, the detection head we added is generated from a deep-level, low-resolution feature map. It can effectively reduce the false detection rate of the model. The K-means clustering algorithm [35] is a common unsupervised clustering algorithm. It computes the distance between each sample and each cluster center and assigns each sample to the closest cluster center. We used it to regenerate the anchor boxes of the four detection heads so that they fit the sizes of the flames and smoke in the images, as shown in Figure 7.

Figure 7.

(a) Widths and heights of instances on the dataset. (b) The size of anchor boxes by using K-means clustering.
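The anchor regeneration described in this section can be sketched as plain K-means on the (width, height) pairs of the annotated boxes. The code below is a minimal illustration rather than our exact procedure: the Euclidean distance, the deterministic initialization, the toy box sizes, and the choice of one anchor per detection head (k = 4) are all assumptions made to keep the example short and reproducible.

```python
import math


def kmeans_anchors(boxes, k, iters=50):
    """Cluster (width, height) pairs with plain K-means (Euclidean distance)."""
    boxes = sorted(boxes, key=lambda b: b[0] * b[1])
    # Deterministic initialization: evenly spaced samples of the area-sorted boxes.
    step = len(boxes) / k
    centers = [boxes[int(i * step + step / 2)] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # Assign each box to the closest cluster center.
            nearest = min(range(k), key=lambda i: math.dist(box, centers[i]))
            clusters[nearest].append(box)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        new_centers = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c))
            if c else centers[i]
            for i, c in enumerate(clusters)
        ]
        if new_centers == centers:
            break
        centers = new_centers
    # Return anchors ordered from small to large area.
    return sorted(centers, key=lambda c: c[0] * c[1])


# Hypothetical box sizes in pixels, spanning four clearly separated scales.
toy_boxes = [(10, 12), (12, 10), (11, 11), (30, 28), (28, 32), (32, 30),
             (80, 90), (90, 80), (85, 85), (200, 180), (180, 200), (190, 190)]
anchors = kmeans_anchors(toy_boxes, k=4)
print(anchors)  # [(11.0, 11.0), (30.0, 30.0), (85.0, 85.0), (190.0, 190.0)]
```

In practice, k would be the total number of anchors over all four heads, and the sorted cluster centers would be divided among the heads from the highest-resolution feature map to the lowest.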

2.6. Coordinate Attention Module (CA)

The coordinate attention mechanism [36] is a relatively novel hybrid attention mechanism that simultaneously considers the relationship between the channels of the network and the location information on the feature map. It therefore captures not only cross-channel information but also location-sensitive information. Structurally, the features are average-pooled along the horizontal x direction and the vertical y direction and spatially encoded, and the spatial information is finally fused by channel-wise weighting. In addition, coordinate attention is lightweight and flexible, which makes it very suitable for use in lightweight neural networks to further improve performance.

The coordinate attention module encodes channel relationships and long-range dependencies with precise location information. Its structure is divided into two parts: coordinate information embedding and coordinate attention generation. The detailed procedure is as follows:

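Step 1: For coordinate information embedding, given the input X, two spatial extents of pooling kernels, (H, 1) and (1, W), are used to encode each channel along the horizontal coordinate and the vertical coordinate, respectively. Thus, the output of the c-th channel at height h can be formulated as:

$$z_c^h(h) = \frac{1}{W} \sum_{0 \le i < W} x_c(h, i) \tag{1}$$

Similarly, the output of the c-th channel at width w can be written as:

$$z_c^w(w) = \frac{1}{H} \sum_{0 \le j < H} x_c(j, w) \tag{2}$$

Step 2: For coordinate attention generation, the aggregated feature maps generated by Equations (1) and (2) are first concatenated and then sent to a shared 1 × 1 convolutional transformation function F1, yielding:

$$f = \delta\left(F_1\left(\left[z^h, z^w\right]\right)\right) \tag{3}$$

where $[\cdot, \cdot]$ denotes concatenation along the spatial dimension and $\delta$ is a non-linear activation function.

Step 3: Split f along the spatial dimension into two separate tensors, $f^h$ and $f^w$. Another two 1 × 1 convolutional transformations, $F_h$ and $F_w$, are utilized to separately transform $f^h$ and $f^w$ into tensors with the same channel number as the input X, yielding:

$$g^h = \sigma\left(F_h\left(f^h\right)\right) \tag{4}$$

$$g^w = \sigma\left(F_w\left(f^w\right)\right) \tag{5}$$

where $\sigma$ is the sigmoid function.

Step 4: Finally, the output of our coordinate attention block Y can be written as:

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j) \tag{6}$$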
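The four steps above can be traced on a tiny feature map with the following dependency-free sketch. It is an illustration only, with several assumptions: the 1 × 1 convolutions are written as plain matrix products without bias or normalization, ReLU stands in for the non-linear activation after the shared transformation, and the names `w1`, `wh`, and `ww` as well as the toy input are hypothetical.

```python
import math


def matvec(weight, vec):
    """Apply a 1 x 1 convolution at one position: weight is out_ch x in_ch."""
    return [sum(a * b for a, b in zip(row, vec)) for row in weight]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def coordinate_attention(x, w1, wh, ww):
    """x: C x H x W nested lists; w1: (C/r) x C; wh and ww: C x (C/r)."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # Step 1: average-pool every channel along the width and along the height.
    z_h = [[sum(x[c][i]) / W for i in range(H)] for c in range(C)]
    z_w = [[sum(x[c][i][j] for i in range(H)) / H for j in range(W)]
           for c in range(C)]
    # Step 2: concatenate along the spatial axis, apply the shared 1 x 1
    # transform and a non-linearity (ReLU here, as an assumption).
    cat = [z_h[c] + z_w[c] for c in range(C)]  # C x (H + W)
    f = [[max(0.0, v) for v in matvec(w1, [cat[c][p] for c in range(C)])]
         for p in range(H + W)]                # (H + W) x (C/r)
    # Step 3: split into the height and width parts and map back to C channels.
    g_h = [[sigmoid(v) for v in matvec(wh, f[i])] for i in range(H)]      # H x C
    g_w = [[sigmoid(v) for v in matvec(ww, f[H + j])] for j in range(W)]  # W x C
    # Step 4: re-weight every input value by its row gate and column gate.
    return [[[x[c][i][j] * g_h[i][c] * g_w[j][c] for j in range(W)]
             for i in range(H)] for c in range(C)]


# Toy input with C = 2, H = 2, W = 3 and a reduction to one hidden channel.
x = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]],
     [[0.0, 1.0, 0.0], [2.0, 0.0, 2.0]]]
zero_w1 = [[0.0, 0.0]]
zero_out = [[0.0], [0.0]]
y = coordinate_attention(x, zero_w1, zero_out, zero_out)
print(y[0][1][2])  # 1.5, since both gates equal sigmoid(0) = 0.5
```

With all weights set to zero, every gate equals 0.5 and the output is simply 0.25 times the input, which gives a quick sanity check; with trained weights, the row and column gates differ per position, which is how the block encodes location-sensitive information.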

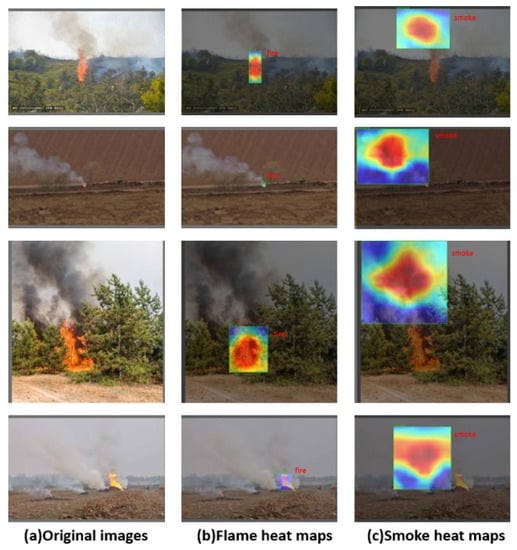

In our work, to make the behavior of the wildfire detection network more transparent after adding the coordinate attention module, we use a visualization technique called guided Gradient-weighted Class Activation Mapping (Grad-CAM) [37], which is high-resolution and class-discriminative. It visually interprets the model by generating a heat map of the class activation map for the input image. The class activation map is a two-dimensional grid of feature scores associated with a specific output class, with each position of the grid indicating its importance for that class. For an image that is input to the detection model and contains flames and smoke, this technique presents the similarity of each location in the image to the "flames or smoke" class in the form of a heat map. The darker the color of the heat map, the greater the degree of similarity. This helps to understand which local regions of the original image led the detection model to its final classification decision. As can be seen in Figure 8, the regions of interest of our network after embedding coordinate attention closely match the regions of wild flames and smoke in the images.

Figure 8.

Visual explanations with Grad-CAM.

3. Experimental Results

In this section, we will describe in detail the wildfire dataset, the experimental environment, and the evaluation metrics of the model. Through a series of ablation experiments and comparison experiments with different models, the new model proposed in this paper is analyzed. Finally, at the end of this section, the actual detection results of the model are shown.

3.1. Dataset Preparation

Training with sufficient data is a prerequisite for a model with good generalization. Hence, we collected images that contain wild flame and smoke regions from fire image datasets set up by previous researchers. These are the wildfire and smoke dataset from the CVPR laboratory at Keimyung University, the VisiFire dataset from Bilkent University, and the wildfire and smoke datasets from the Wildfire Research Center of the Faculty of Electrical Engineering at the University of Split. In addition, to further expand the wildfire image dataset, smoke images were collected from public websites. Moreover, to improve the generalization ability of the model and reduce its false detection rate, we also trained the model on negative samples. Therefore, we collected an equal number of negative sample images without flames and smoke, as shown in Figure 9. The negative sample images fall into three categories. The first category is simple negative images related to the wildfire background, such as wild forest and grassland images in different scenes, weather, and seasons. The second category is hard negative sample images with characteristics similar to flames, such as lights at night, sunsets, and other red and yellow objects. The third category is hard negative sample images with characteristics similar to smoke, such as clouds, water droplets, and fog. Hard negative samples account for as much as 75% of the entire negative sample image dataset. All in all, the whole wildfire image dataset contains a total of 14,400 images. The training set takes up 80%, that is, 11,520 images form the training set and 2880 images form the testing set. The completed dataset is shown in Table 3. Finally, for the images containing flames and smoke, each flame and smoke instance is annotated with a bounding box that describes its location, as shown in Figure 10.

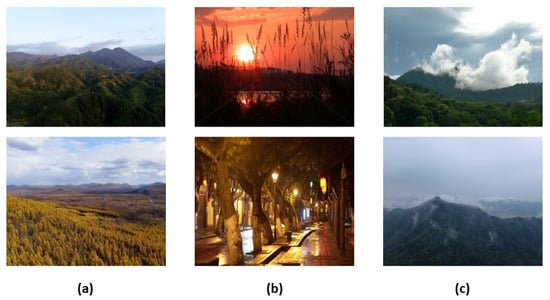

Figure 9.

The negative sample images of the dataset. (a) Simple negative images. (b) Hard negative images resembling flame. (c) Hard negative images resembling smoke.

Table 3.

Self-made wildfire dataset in detail.

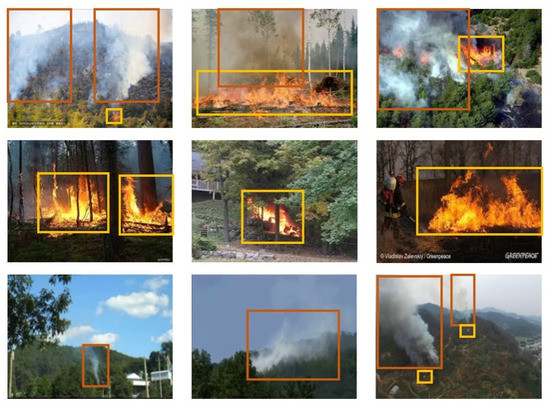

Figure 10.

The dataset for wildfire detection.

3.2. Training Details

This paper implements the lightweight wildfire detection network in PyTorch 1.10. All of the networks in this paper are trained and tested on a Tesla V100-SXM2 GPU with 16 GB of memory. During training, in order to expand the data samples, improve the generalization ability of the model, and prevent overfitting, we use pixel-level data augmentation such as cropping, rotation, translation, and scaling, together with image-level mosaic data augmentation. Mosaic data augmentation [38] randomly selects four pictures from the training set, crops them, and stitches them into one picture for training. Finally, we trained the model on the self-made wildfire dataset for 900 epochs using a Stochastic Gradient Descent (SGD) optimizer with an initial learning rate of 0.01 decayed by cosine annealing, a weight decay coefficient of 0.005, a momentum of 0.937, and a batch size of 32.
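For reference, a cosine annealing schedule over the 900 training epochs can be written in a few lines. The initial learning rate of 0.01 and the epoch count come from the settings above, whereas the final learning rate `lr_final` is a hypothetical value chosen for illustration, since it is not stated here.

```python
import math


def cosine_lr(epoch, total_epochs=900, lr0=0.01, lr_final=0.0001):
    """Cosine annealing from lr0 at epoch 0 down to lr_final at total_epochs.

    lr_final is an assumed value for illustration only.
    """
    cos_factor = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return lr_final + (lr0 - lr_final) * cos_factor


for epoch in (0, 225, 450, 675, 900):
    print(epoch, round(cosine_lr(epoch), 5))
```

The schedule starts at 0.01, passes through the midpoint between the two rates at epoch 450, and flattens out as it approaches the final rate, so most of the fine adjustment happens with small steps near the end of training.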

3.3. Evaluation Metrics

To compare the effectiveness of the model we presented with that of existing algorithms, some relevant indicators were selected. For the generalization performance of the model, the relevant metrics are Precision, Recall, Accuracy, F1-score, Average precision (AP), and AP0.5. The precision is the proportion of samples predicted to be positive that are truly positive. The recall is the proportion of all positive samples that are correctly predicted. The accuracy is the proportion of correctly predicted samples among the total number of samples. The F1-score is the harmonic mean of precision and recall. The AP is the average value of P(R) over the interval from R = 0 to R = 1. The AP0.5 is the AP at an intersection over union (IoU) threshold of 0.5. Additionally, since our model is designed for edge devices, we used model size, GFLOPs, inference time, and number of parameters as metrics to evaluate the hardware performance of the model. The definitions of precision, recall, accuracy, F1-score, and AP are as follows:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{7}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{8}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{9}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{10}$$

$$AP = \int_0^1 P(R)\, dR \tag{11}$$

where TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, respectively.
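As a small numeric illustration of these metrics, the sketch below computes them from confusion counts and approximates the AP as the area under a precision-recall curve with the trapezoidal rule. The counts and the three-point curve are hypothetical; only the precision and recall pairs in the last two lines are taken from Section 3.5, where they reproduce the reported F1-scores.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def detection_metrics(tp, fp, tn, fn):
    """Return (precision, recall, accuracy, F1) from confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return precision, recall, accuracy, f1_score(precision, recall)


def average_precision(recalls, precisions):
    """Area under P(R) by the trapezoidal rule; recalls must be ascending."""
    area = 0.0
    for k in range(1, len(recalls)):
        width = recalls[k] - recalls[k - 1]
        area += width * (precisions[k] + precisions[k - 1]) / 2
    return area


# Hypothetical confusion counts and precision-recall curve.
p, r, acc, f1 = detection_metrics(tp=90, fp=10, tn=85, fn=15)
print(round(p, 3), round(r, 3), round(acc, 3), round(f1, 3))
print(round(average_precision([0.0, 0.5, 1.0], [1.0, 0.8, 0.6]), 3))

# Precision and recall reported in Section 3.5.
print(round(f1_score(0.935, 0.966), 3))  # proposed method, about 0.950
print(round(f1_score(0.887, 0.892), 3))  # VGG16-based method, about 0.889
```

Detection toolkits usually interpolate the precision-recall curve before integrating, so the trapezoidal rule here should be read as a simplification.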

3.4. Ablation Experiment

In this section, we conducted ablation experiments on the wildfire image dataset to verify the validity of the modules we presented. The results of the ablation experiments are provided in Table 4. We can observe that when we replace the backbone with a network consisting only of MobileNetV3 blocks, the size of the network drops significantly compared to the original network: the model size is only 5.4 MB. However, there is a significant loss in the generalization performance of the network. We obtain a precision rate of 90.7%, an accuracy rate of 88.3%, and an AP of 77.5%, with the AP approximately 7.9% lower than that of YOLOV5S. This is because scaling down the backbone network weakens the feature extraction capability of the model. Next, the multi-scale feature extraction network (MFEN) is added to enlarge the receptive field of the model and improve its feature extraction performance, making it more sensitive to small targets. The precision rate is 91.6%, the accuracy rate is 89.5%, and the AP is 82.3%. All of these indicators improve to some extent. Then, the bi-directional feature fusion module is used in the model so that it can fully aggregate feature information from different levels of the backbone network. Moreover, based on the bi-directional feature fusion network, we design a four-detection-head structure to achieve multi-scale detection of the target. The model reaches a precision rate of 92.7%, an accuracy rate of 89.9%, and an AP of 84.0%, all higher than those of the previous configuration. However, the improvement in metrics comes at the cost of a somewhat larger model size. Finally, coordinate attention modules, which are well suited to lightweight networks, are embedded in multiple parts of the model. They improve the generalization performance of the model while the model size and computational complexity remain almost constant. After plugging them into the model, we obtain a precision rate of 93.5%, an accuracy rate of 90.5%, and an AP of 85.8%. In particular, compared to the previous model, the AP is improved by approximately 1.4%. In conclusion, Table 4 shows that the modules we have adopted and proposed are very effective in improving the performance of the network for wildfire detection.

Table 4. Ablation experiments on our dataset.

3.5. Performance Comparisons with Other Algorithms

To verify the performance of the model proposed in this paper, three well-known wildfire detection networks were selected for comparison with it. These models are based on the VGG-16 [39], DNCNN [40], and YOLOV5S [23] networks, respectively. All of them were trained uniformly on our dataset to ensure that they achieve stable results. Because the source code and datasets of these methods are not publicly available, we cannot be sure that our results are exactly the same as those of the original methods. The overall performance of these networks for wild flame and smoke detection is provided in Table 5. On our dataset, the DNCNN-based method performs worst: its accuracy rate is only 71.6%, while the other four indicators are just over 82.0%. The VGG-16-based approach performs better, obtaining a precision rate of 88.7%, accuracy rate of 80.1%, recall rate of 89.2%, F1-score of 88.9%, and AP0.5 of 94.4%. These metrics are higher than those of the DNCNN-based method, but its performance still falls short of that of our approach. Our proposed method, which is based on YOLOV5S with a series of modifications, performs best on our dataset. It obtains a precision rate of 93.5%, accuracy rate of 90.5%, recall rate of 96.6%, F1-score of 95.0%, AP of 85.8%, and AP0.5 of 98.7%. Compared to the VGG-16-based method, it improves markedly on every indicator. Furthermore, the AP and accuracy rate of our method are approximately 0.4% higher than those of YOLOV5S. Overall, the approach we presented achieves the best overall performance in wildfire detection among the compared methods.
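The figures quoted above can be cross-checked against the metric definitions with a few lines of arithmetic. The sketch below recomputes the F1-score from the reported precision and recall, and an accuracy value under the assumption that true negatives are not counted (TN = 0), which is a common convention in object detection but is an assumption of this sketch rather than something stated in the text. The function names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def accuracy_without_tn(precision: float, recall: float) -> float:
    """Accuracy TP / (TP + FP + FN), i.e. assuming TN = 0.

    Dividing numerator and denominator by TP gives 1 / (1/P + 1/R - 1).
    """
    return 1.0 / (1.0 / precision + 1.0 / recall - 1.0)


# Overall precision and recall as quoted in the text for two of the methods.
reported = {
    "VGG-16-based": (0.887, 0.892),
    "Ours": (0.935, 0.966),
}

for name, (p, r) in reported.items():
    print(
        f"{name}: F1 = {f1_score(p, r):.3f}, "
        f"accuracy (TN = 0) = {accuracy_without_tn(p, r):.3f}"
    )
```

Under that assumption, the recomputed values agree with the quoted F1-scores (88.9% and 95.0%) and accuracy rates (80.1% and 90.5%) to the precision shown.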

Table 5. The overall experimental results in our dataset.

3.6. Comparisons of Experimental Results of Each Category

For a more detailed comparison of the detection performance on wild flame and smoke, we show the experimental results of each network on flame and smoke separately in Table 6 and Table 7. For wild flame detection, the precision rates of all networks are over 88%. The DNCNN-based method is again the worst performer, with an AP of only 53.9%. The VGG-16-based approach obtains a precision rate of 88.6%, accuracy rate of 87.1%, recall rate of 98.1%, F1-score of 93.1%, and AP0.5 of 94.6%. These metrics are higher than those of the DNCNN-based method. Among the existing methods in Table 6, it obtains the highest recall rate on our dataset. A high recall means that the model misses few wild flames. However, its other metrics are still lower than those of our method. On these remaining indicators, our method achieves the best results, with an accuracy rate of 90.5%, precision rate of 93.4%, and F1-score of 95.1%. This means that our network performs well for wild flame detection.
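To make the roles of precision and recall concrete: at an IoU threshold of 0.5, each predicted box is either matched to a ground-truth box (a true positive) or counted as a false alarm, and each unmatched ground-truth box is a miss. The following is a minimal sketch of that bookkeeping. The box format, the function names, the example coordinates, and the greedy matching rule are assumptions made for illustration, not the evaluation code used in this work.

```python
from typing import List, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: List[Box], truths: List[Box],
                     threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; preds are assumed sorted by confidence.

    Returns (TP, FP, FN): matched predictions, false alarms, missed objects.
    """
    unmatched = list(range(len(truths)))
    tp = fp = 0
    for pred in preds:
        best_idx, best_iou = -1, 0.0
        for idx in unmatched:
            value = iou(pred, truths[idx])
            if value > best_iou:
                best_idx, best_iou = idx, value
        if best_iou >= threshold:
            tp += 1
            unmatched.remove(best_idx)
        else:
            fp += 1
    return tp, fp, len(unmatched)


# Two real flames; one is detected, one is missed, and one prediction is spurious.
truths = [(10, 10, 50, 50), (100, 100, 140, 140)]
preds = [(12, 12, 50, 50), (200, 200, 240, 240)]

tp, fp, fn = match_detections(preds, truths)
print("precision:", tp / (tp + fp))  # lowered by false alarms (FP)
print("recall:", tp / (tp + fn))     # lowered by missed flames (FN)
```

In this toy case the missed flame reduces recall and the spurious box reduces precision, which is why recall is the indicator tied to missed fires and precision the one tied to false alarms.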

Table 6. The experimental results of wild flame detection in our dataset.

Table 7. The experimental results of wild smoke detection in our dataset.

For wild smoke detection, as for flame detection, the accuracy rate and AP of the DNCNN-based method are the lowest in Table 7. The accuracy rate, recall rate, and F1-score of the VGG-16-based method rank third. Compared to the DNCNN-based method, the VGG-16-based method improves on all six indicators. It is worth mentioning that our method achieves the best result on five indicators, with an accuracy rate of 90.3%, recall rate of 96.4%, F1-score of 94.9%, AP of 87.7%, and AP0.5 of 98.8%. In particular, compared to YOLOV5S, the accuracy rate is improved by approximately 3.2%, the AP by approximately 1.1%, and the recall rate by approximately 1.2%. Careful observation reveals that the experimental results of these models for wild smoke detection are usually lower than those for flame detection. This is because flame features are simpler and easier for the model to learn than smoke features. In this case, the excellent performance of our model means that the method and the modules we proposed are efficient for wildfire detection.

3.7. Comparisons of the Model Hardware Performance

Methods for intelligent wildfire detection via video cameras often require detection networks to be deployed in resource-constrained environments. As a result, our proposed model is designed to meet the deployment requirements of resource-constrained edge devices as far as possible. The hardware performance of each method is provided in Table 8. Among the metrics, the inference time is obtained by running inference on five wildfire video sequences 10 times on a Tesla V100 and taking the average. The size, parameter count, and speed of the DNCNN are the best in Table 8: the model size is only 5.4 MB, the inference time is 5.99 ms, and the number of parameters is 2.1 M. However, its wildfire detection performance on our dataset is the worst, which we attribute to its small model size. Compared to YOLOV5S, the hardware performance of our model is much improved: the model size is only 10.2 MB, the number of parameters is 5.1 M, and the GFLOPs value is 6.6. GFLOPs is a very important metric for evaluating the computational complexity of a model. A low GFLOPs value indicates that less computation is required and that the hardware resource requirements are low. Moreover, our model has the highest number of layers in Table 8, namely 521. The deeper the model, the more carefully the feature information of the target is extracted, which helps to improve the generalization ability of the model. However, although our model still meets the requirement of real-time detection, it requires a longer inference time than the other networks. Reducing this time is the motivation for our future research. Overall, our model still performs very well in terms of hardware performance, making it a good choice for resource-constrained edge devices.
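The averaging procedure for inference time described above (several video sequences, repeated runs, mean over all measurements) can be sketched as follows. The `infer` callable, the frame representation, and the choice to average per frame are assumptions for illustration; the exact measurement code is not given in the text.

```python
import time
from statistics import mean
from typing import Callable, Sequence


def mean_inference_ms(infer: Callable[[object], object],
                      sequences: Sequence[Sequence[object]],
                      repeats: int = 10) -> float:
    """Average per-frame latency in milliseconds.

    Every frame of every sequence is timed in each of `repeats` passes.
    Note: on a GPU, `infer` must block until the result is ready
    (for example by synchronizing the device); otherwise only the
    kernel launch time would be measured.
    """
    timings_ms = []
    for _ in range(repeats):
        for frames in sequences:
            for frame in frames:
                start = time.perf_counter()
                infer(frame)
                timings_ms.append((time.perf_counter() - start) * 1000.0)
    return mean(timings_ms)


# Stand-in workload: five short "videos" of three dummy frames each.
dummy_sequences = [[list(range(1000)) for _ in range(3)] for _ in range(5)]
latency_ms = mean_inference_ms(lambda frame: sum(frame), dummy_sequences, repeats=10)
print(f"mean latency: {latency_ms:.4f} ms per frame")
```

In practice, a few untimed warm-up passes before measurement usually make the average more stable, since the first calls often include one-off initialization costs.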

Table 8. The hardware performance comparison of each method.

3.8. Detection Performance

To further verify the actual detection performance of our model for wild flame and smoke, we randomly selected a few wildfire videos for detection. Because flames and smoke are characterized mainly by their color and shape, actual wildfire detection scenarios contain many flame-like and smoke-like objects, such as lights and white clouds. We therefore consider it very important that the model can distinguish real flames and smoke from objects that resemble them, and that it can detect wildfires at any time of day. Motivated by this, the wildfire videos we selected were shot at different times of the day and in different wilderness scenarios. The detection results are shown in Figure 11. It can be seen that our model detects flame and smoke in the wild very well. In addition, it is insensitive to flame-like and smoke-like objects in the videos, giving it an extremely low false detection rate. The model is therefore robust when confronted with confusing fire-like objects. Finally, and equally important, the model detects wild flame and smoke in these videos with high accuracy and misses no wild flames or smoke.

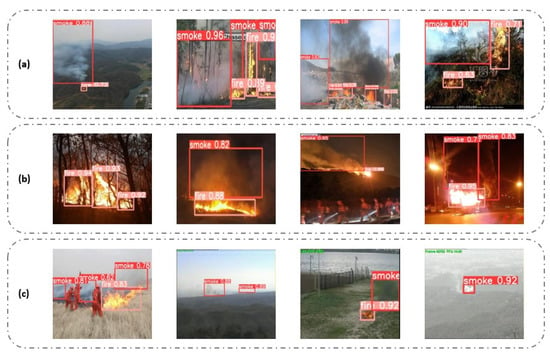

Figure 11. The detection results of our method. (a) Normal flames and smoke during daytime. (b) Flames at night and flame-like objects. (c) Daytime smoke and smoke-like objects.

4. Conclusions

In this paper, we proposed an intelligent wildfire detection approach through video cameras based on computer vision methods. Unlike some existing wildfire detection algorithms [15,16], we believe that the size of the model is as important as its accuracy, since the model needs to be deployed on resource-constrained hardware. Therefore, we have improved the structure of YOLOV5S. First, to meet the deployment requirements of resource-constrained edge devices as far as possible, a new backbone network composed of MobilenetV3 blocks was designed to replace CSPDarknet53, resulting in a lightweight design for YOLOV5S. Second, by investigating the wildfire images in the dataset, we found that the regions of flame and smoke in the images were highly variable. Therefore, we designed a multi-scale feature extraction network (MFEN) so that the network can capture the contextual information of multi-scale objects in complex visual scenes. Third, considering the importance of feature aggregation networks in target detection networks, we designed a bi-directional feature fusion network to enhance the feature aggregation capability of the network, which can fully fuse the semantic and location information of features at different levels. Then, a four-head detection structure was proposed to improve the detection performance of the network, and the anchor boxes were designed based on the K-means clustering algorithm. Finally, a coordinate attention mechanism was inserted into the network because it leads the model to pay more attention to the regions where a target may exist. Moreover, a visualization technique called guided Gradient-weighted Class Activation Mapping (Grad-CAM) was used to verify the effectiveness of the coordinate attention mechanism. The experimental results demonstrated that our method achieved a smaller model size and lower computational effort while maintaining high accuracy.

The proposed method is developed for practical application in wild environments such as forests and in other high-fire-risk industries. Most importantly, our method performed well in terms of detection accuracy while meeting the speed requirements for real-time wildfire detection. Furthermore, the hardware performance of our approach makes it suitable for deployment on resource-constrained edge devices. In short, its deployment in the wild will greatly help to detect wildfire at a very early stage and to implement relevant emergency measures in a timely manner, thus significantly contributing to loss prevention.

Author Contributions

J.X. put forward the main idea, designed the experiment and wrote the manuscript. C.W., Q.L. and S.J. participated in the experiment. C.W. participated in the revision of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study are available upon reasonable and legitimate request by contacting the author.

Acknowledgments

The authors are very grateful to the editors and reviewers for their efforts in processing and reviewing this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bowman, D.M.J.S.; Kolden, C.A.; Abatzoglou, J.T.; Johnston, F.H.; van der Werf, G.R.; Flannigan, M. Vegetation fires in the Anthropocene. Nat. Rev. Earth Environ. 2020, 1, 500–515.

- Antunes, M.; Ferreira, L.M.; Viegas, C.; Coimbra, A.P.; de Almeida, A.T. Low-cost system for early detection and deployment of countermeasures against wild fires. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019; pp. 418–423.

- Neumann, G.B.; De Almeida, V.P.; Endler, M. Smart Forests: Fire detection service. In Proceedings of the 2018 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 25–28 June 2018; pp. 01276–01279.

- Sasmita, E.S.; Rosmiati, M.; Rizal, M.F. Integrating forest fire detection with wireless sensor network based on long range radio. In Proceedings of the 2018 International Conference on Control, Electronics, Renewable Energy and Communications (ICCEREC), Bandung, Indonesia, 5–7 December 2018; pp. 222–225.

- Bhosle, A.S.; Gavhane, L.M. Forest disaster management with wireless sensor network. In Proceedings of the 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), Chennai, India, 3–5 March 2016; pp. 287–289.

- Fernández-Berni, J.; Carmona-Galán, R.; Martínez-Carmona, J.F.; Rodríguez-Vázquez, Á. Early forest fire detection by vision-enabled wireless sensor networks. Int. J. Wildland Fire 2012, 21, 938–949.

- Yuan, F. Video-based smoke detection with histogram sequence of lbp and lbpv pyramids. Fire Saf. J. 2011, 46, 132–139.

- Barmpoutis, P.; Dimitropoulos, K.; Grammalidis, N. Smoke detection using spatio-temporal analysis, motion modeling and dynamic texture recognition. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 1078–1082.

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Higher order linear dynamical systems for smoke detection in video surveillance applications. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 1143–1154.

- Kosmas, D.; Barmpoutis, P.; Grammalidis, N. Spatio-temporal flame modeling and dynamic texture analysis for automatic video-based fire detection. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 339–351.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Zhang, Q.; Xu, J.; Guo, H. Deep convolutional neural networks for forest fire detection. In Proceedings of the International Forum on Management, Education and Information Technology Application, Guangzhou, China, 30–31 January 2016; Atlantis Press: Paris, France, 2016; pp. 568–575.

- Kim, B.; Lee, J. A video-based fire detection using deep learning models. Appl. Sci. 2019, 9, 2862.

- Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite smoke scene detection using convolutional neural network with spatial and channel-wise attention. Remote Sens. 2019, 11, 1702.

- Xu, G.; Zhang, Y.; Zhang, Q.; Lin, G.; Wang, Z.; Jia, Y.; Wang, J. Video smoke detection based on deep saliency network. Fire Saf. J. 2019, 105, 277–285.

- Wu, Z.; Xue, R.; Li, H. Real-Time Video Fire Detection via Modified YOLOv5 Network Model. Fire Technol. 2022, 58, 2377–2403.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 1–26 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905.

- Jocher, G. Ultralytics/Yolov5: V3.1—Bug Fixes and Performance Improvements. Available online: https://github.com/ultralytics/yolov5/releases (accessed on 5 October 2022).

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1314–1324.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Zhang, G.; Lu, S.; Zhang, W. CAD-Net: A context-aware detection network for objects in remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 10015–10024.

- Chen, S.; Zhan, R.; Zhang, J. Geospatial object detection in remote sensing imagery based on multiscale single-shot detector with activated semantics. Remote Sens. 2018, 10, 820.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. Nas-fpn: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

- Guo, C.; Fan, B.; Zhang, Q.; Xiang, S.; Pan, C. Augfpn: Improving multi-scale feature learning for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12595–12604.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Tapas, K.; Mount, D.M.; Netanyahu, N.S.; Piatko, C.D.; Silverman, R.; Wu, A.Y. An efficient k-means clustering algorithm: Analysis and implementation. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 881–892.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Khan, S.; Muhammad, K.; Mumtaz, S.; Baik, S.W.; de Albuquerque, V.H.C. Energy-efficient deep CNN for smoke detection in foggy IoT environment. IEEE Internet Things J. 2019, 6, 9237–9245.

- Yin, Z.; Wan, B.; Yuan, F.; Xia, X.; Shi, J. A deep normalization and convolutional neural network for image smoke detection. IEEE Access 2017, 5, 18429–18438.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).