Exploring Useful Teacher Roles for Sustainable Online Teaching in Higher Education Based on Machine Learning

Abstract

1. Introduction

2. Rationale

2.1. Multidimensional Conceptualization of Perceived Usefulness in TAM

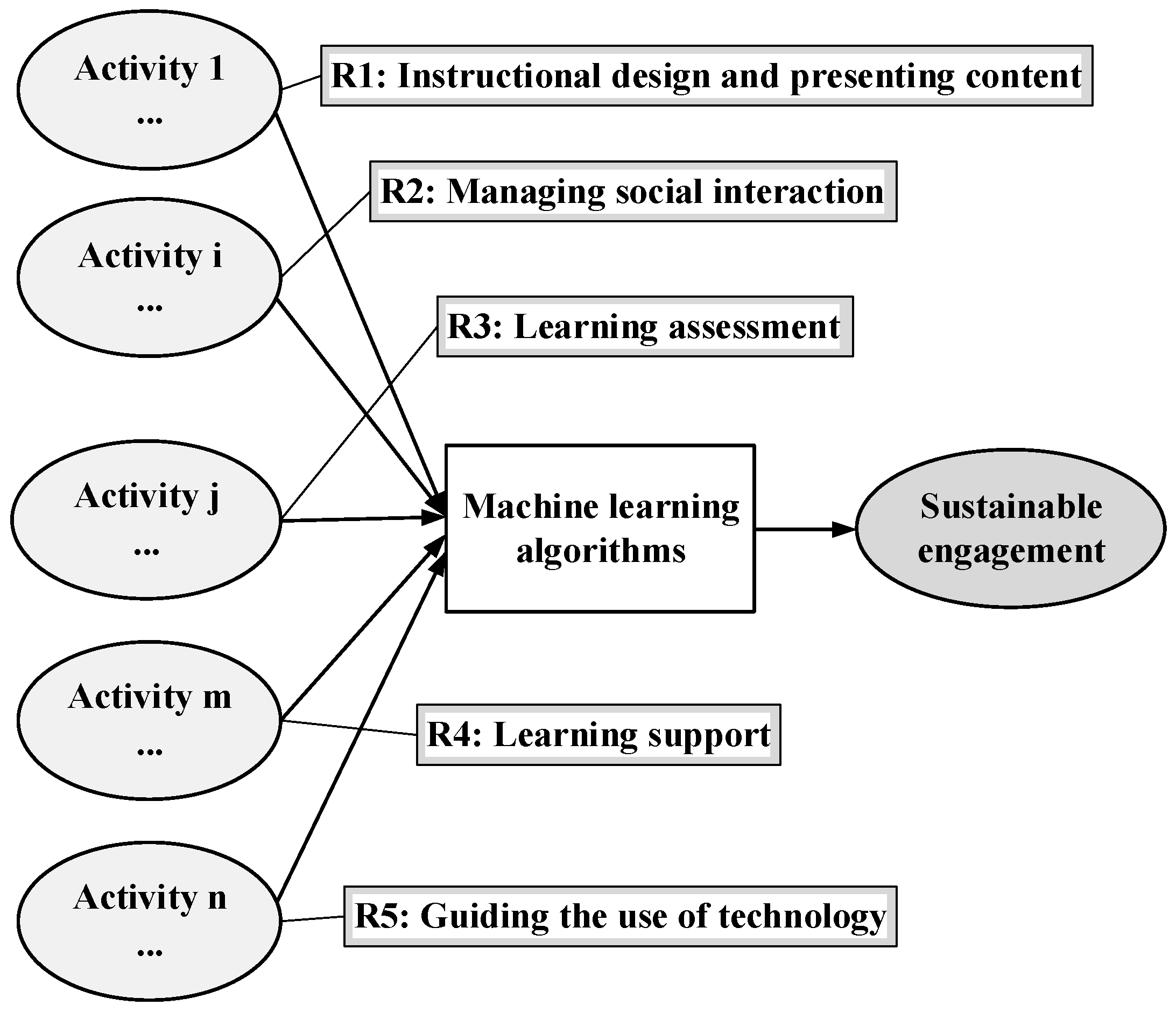

2.2. Teacher Roles in Online Teaching

2.3. Machine Learning Techniques for Prediction of E-learning

2.4. Building Predictive and Descriptive Models for Present Study

3. Materials and Methods

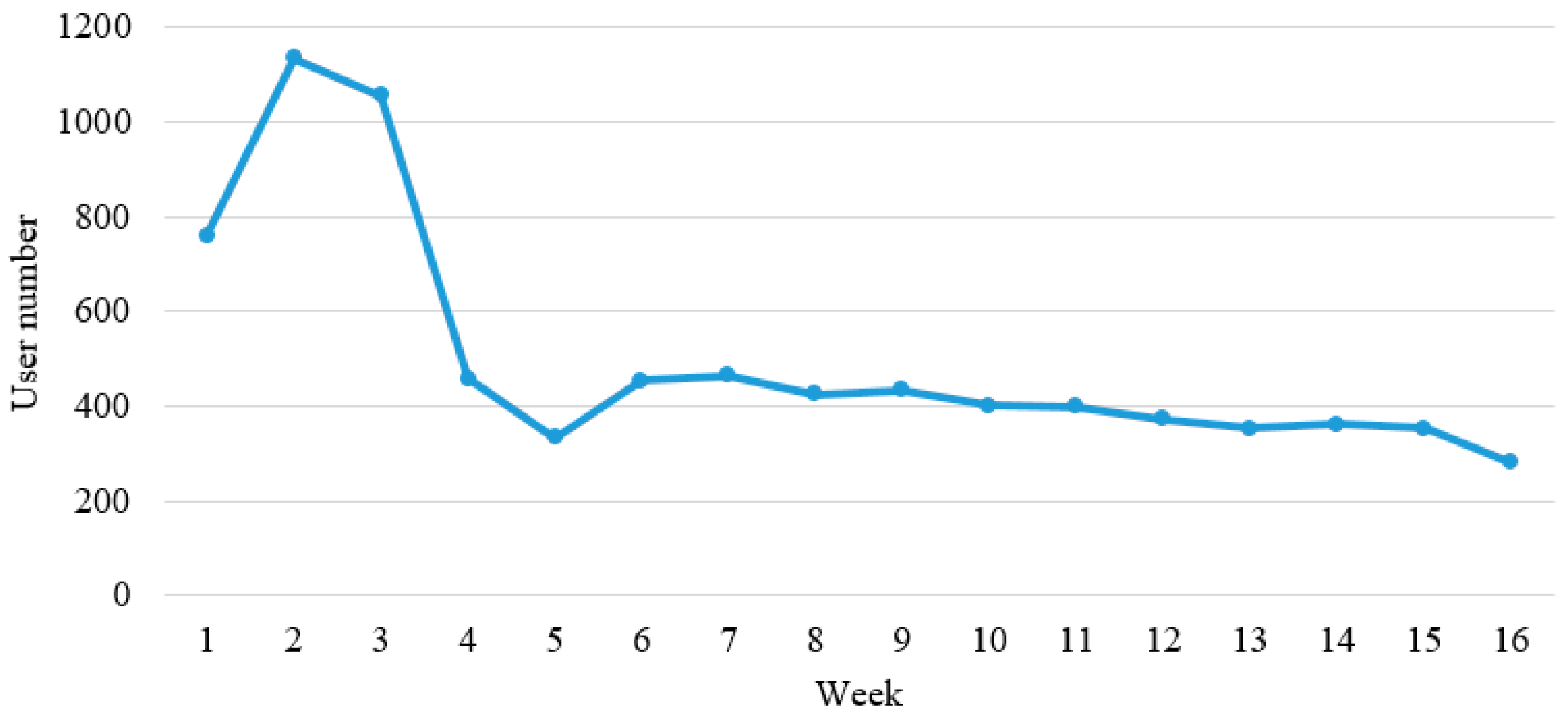

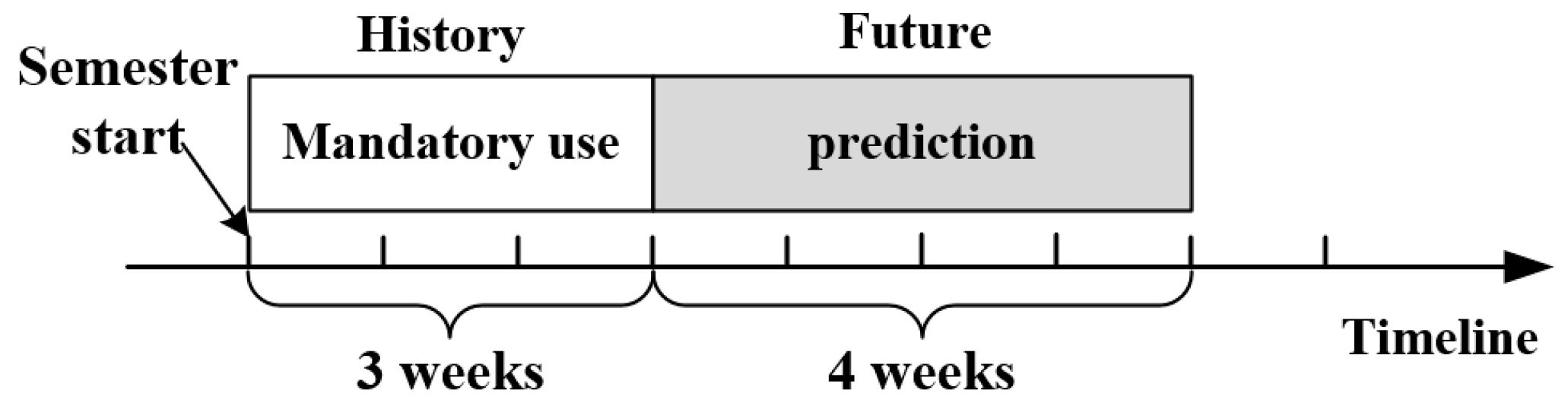

3.1. Context and Data Description

3.2. Problem Statement and Feature Extraction

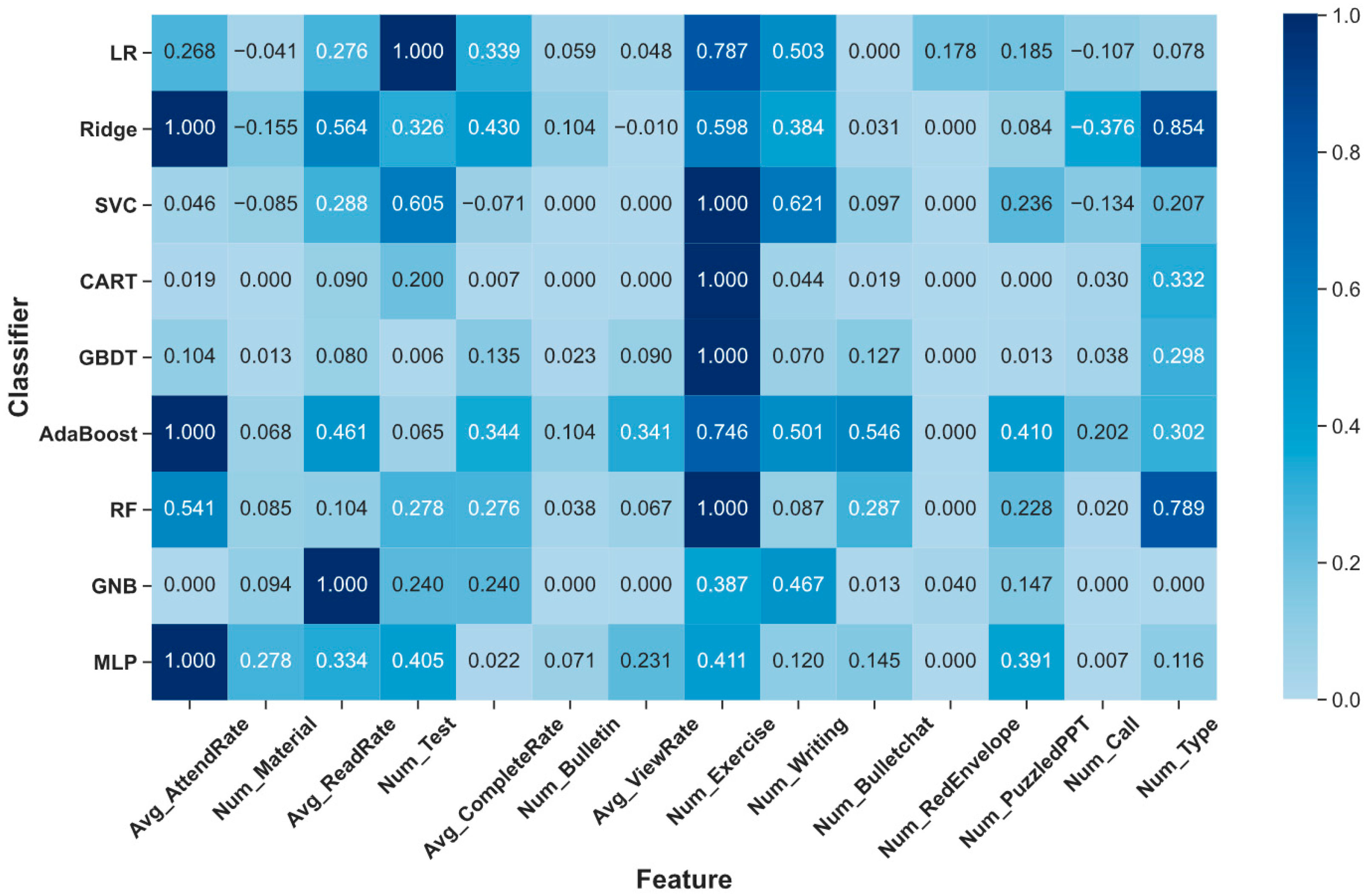

3.3. Classifiers and Feature Scoring

- Logistic Regression (LR) with L2 regularization [34];

- Ridge classifier (Ridge) [35];

- SVM, Support Vector Classification (SVC) with linear kernel [36];

- Decision tree, Classification and Regression Tree (CART) algorithm [37];

- Ensemble method, Gradient Boosted Decision Trees (GBDT) [38];

- Ensemble method, AdaBoost boosting algorithm (AdaBoost) [39];

- Ensemble method, Random Forest (RF) classifier [40];

- Gaussian Naive Bayes (GNB) algorithm [41];

- Neural network, Multi-Layer Perceptron (MLP) algorithm [42].
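
The nine classifiers listed above could be set up and compared roughly as follows. This is a minimal sketch that assumes scikit-learn, which the source does not name as its toolkit. The synthetic data from `make_classification`, the 14-column feature matrix, the helper names `build_classifiers` and `compare_classifiers`, the standardization step, and every hyperparameter are illustrative assumptions rather than the authors' settings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    AdaBoostClassifier,
    GradientBoostingClassifier,
    RandomForestClassifier,
)
from sklearn.linear_model import LogisticRegression, RidgeClassifier
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def build_classifiers(seed=0):
    """Return one estimator per classifier in the list (hypothetical settings)."""
    return {
        "LR": LogisticRegression(max_iter=1000),  # L2 penalty is the default
        "Ridge": RidgeClassifier(),
        "SVC": SVC(kernel="linear"),
        "CART": DecisionTreeClassifier(random_state=seed),
        "GBDT": GradientBoostingClassifier(random_state=seed),
        "AdaBoost": AdaBoostClassifier(random_state=seed),
        "RF": RandomForestClassifier(random_state=seed),
        "GNB": GaussianNB(),
        "MLP": MLPClassifier(max_iter=1000, random_state=seed),
    }


def compare_classifiers(X, y, cv=5):
    """Mean cross-validated accuracy for each classifier."""
    scores = {}
    for name, clf in build_classifiers().items():
        # Standardizing inside the pipeline keeps test folds out of the scaler fit.
        model = make_pipeline(StandardScaler(), clf)
        scores[name] = cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
    return scores


# Synthetic stand-in data; the real teaching-behaviour features are not reproduced here.
X, y = make_classification(
    n_samples=300, n_features=14, n_informative=6, random_state=0
)
scores = compare_classifiers(X, y)
for name, acc in scores.items():
    print(f"{name:9s} accuracy={acc:.2f}")
```

Wrapping each estimator in a pipeline is a design choice for the sketch: the linear models, the SVC, and the MLP are sensitive to feature scale, while the tree-based models are unaffected by it, so one shared preprocessing step keeps the comparison uniform.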

4. Results

4.1. Comparison of Prediction Performances

4.2. Detecting Collinearity

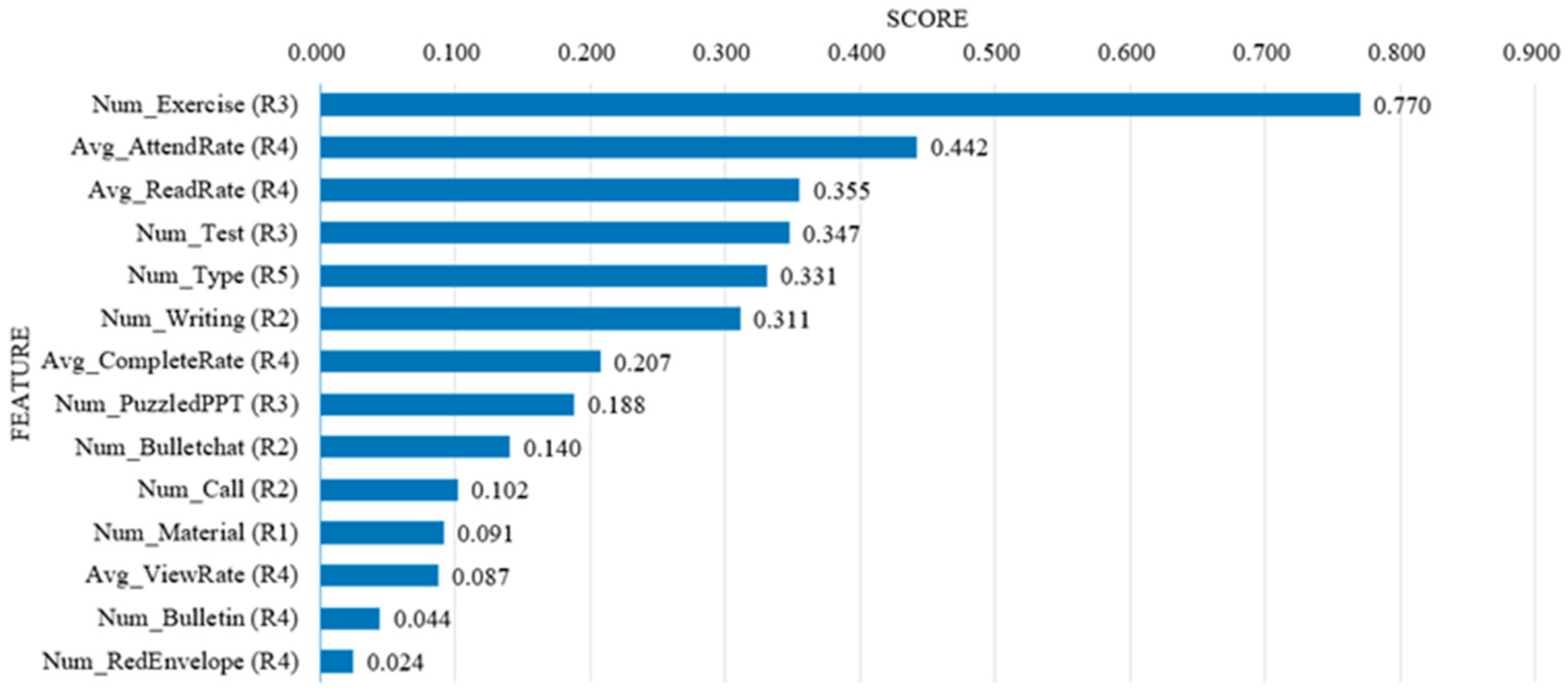

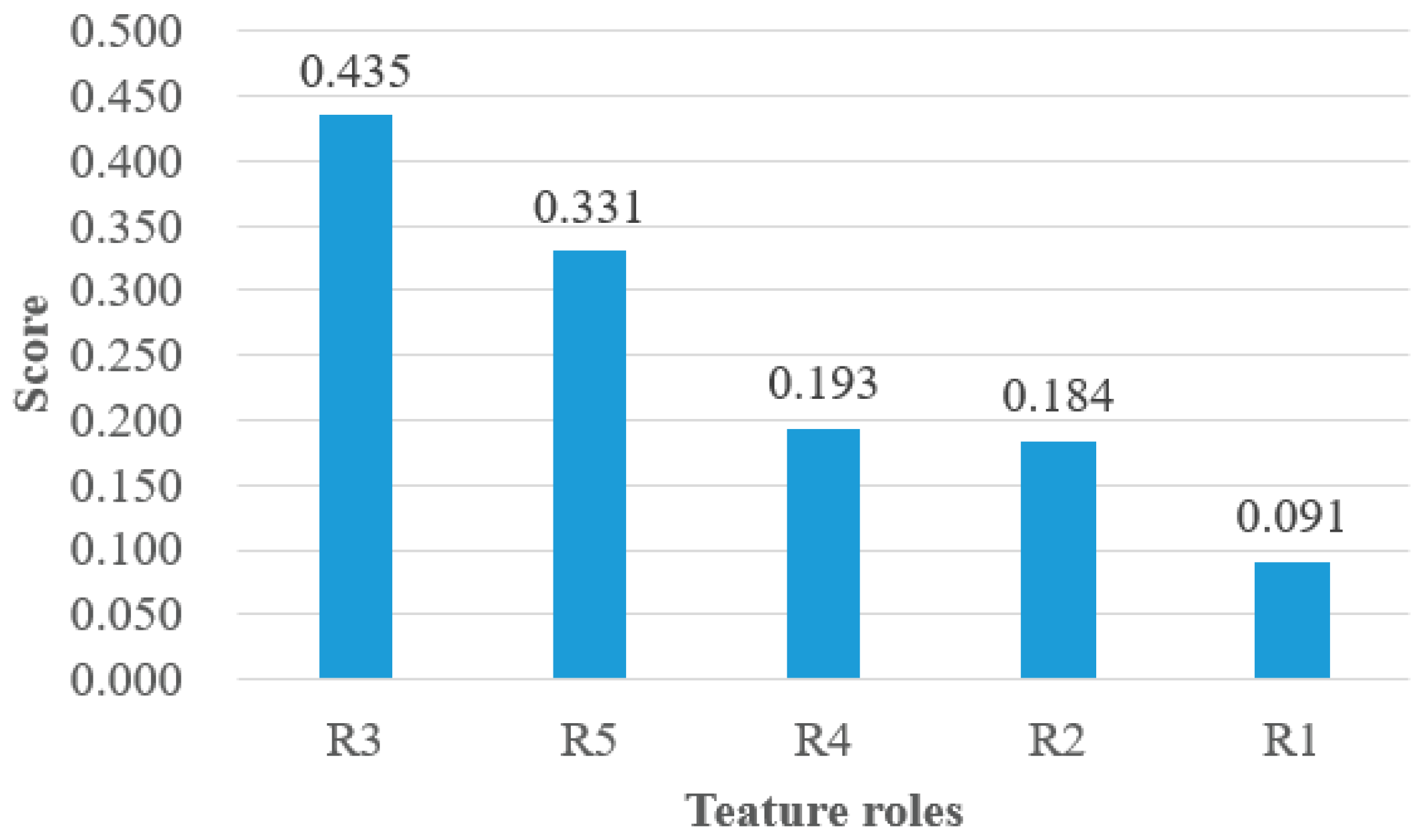

4.3. Feature Scores and Ranking

5. Discussion

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hofer, S.I.; Nistor, N.; Scheibenzuber, C. Online teaching and learning in higher education: Lessons learned in crisis situations. Comput. Hum. Behav. 2021, 121, 106789.

- Pokhrel, S.; Chhetri, R. A Literature Review on Impact of COVID-19 Pandemic on Teaching and Learning. High. Educ. Future 2021, 8, 133–141.

- Chou, H.-L.; Chou, C. A multigroup analysis of factors underlying teachers’ technostress and their continuance intention toward online teaching. Comput. Educ. 2021, 175, 104335.

- Salmon, G. E-Moderating: The Key to Teaching and Learning Online, 3rd ed.; Routledge: New York, NY, USA, 2003.

- Badia, A.; Garcia, C.; Meneses, J. Approaches to teaching online: Exploring factors influencing teachers in a fully online university: Factors influencing approaches to teaching online. Br. J. Educ. Technol. 2017, 48, 1193–1207.

- Goodhue, D.L.; Thompson, R.L. Task-Technology Fit and Individual Performance. MIS Q. 1995, 19, 213.

- Baran, E.; Correia, A.-P.; Thompson, A. Transforming online teaching practice: Critical analysis of the literature on the roles and competencies of online teachers. Distance Educ. 2011, 32, 421–439.

- Martin, F.; Sun, T.; Westine, C.D. A systematic review of research on online teaching and learning from 2009 to 2018. Comput. Educ. 2020, 159, 104009.

- Alvarez, I.; Guasch, T.; Espasa, A. University teacher roles and competencies in online learning environments: A theoretical analysis of teaching and learning practices. Eur. J. Teach. Educ. 2009, 32, 321–336.

- Hung, J.L.; Zhang, K. Revealing online learning behaviors and activity patterns and making predictions with data mining techniques in online teaching. MERLOT J. Online Learn. Teach. 2008, 4, 13.

- Zhang, K.; Aslan, A.B. AI technologies for education: Recent research & future directions. Comput. Educ. Artif. Intell. 2021, 2, 100025.

- Arpaci, I. A hybrid modeling approach for predicting the educational use of mobile cloud computing services in higher education. Comput. Hum. Behav. 2019, 90, 181–187.

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

- Scherer, R.; Siddiq, F.; Teo, T. Becoming more specific: Measuring and modeling teachers’ perceived usefulness of ICT in the context of teaching and learning. Comput. Educ. 2015, 88, 202–214.

- Teo, T. Modelling technology acceptance in education: A study of pre-service teachers. Comput. Educ. 2009, 52, 302–312.

- Venkatesh, V.; Bala, H. Technology Acceptance Model 3 and a Research Agenda on Interventions. Decis. Sci. 2008, 39, 273–315.

- Mo, C.-Y.; Hsieh, T.-H.; Lin, C.-L.; Jin, Y.Q.; Su, Y.-S. Exploring the Critical Factors, the Online Learning Continuance Usage during COVID-19 Pandemic. Sustainability 2021, 13, 5471.

- Wu, B.; Chen, X. Continuance intention to use MOOCs: Integrating the technology acceptance model (TAM) and task technology fit (TTF) model. Comput. Hum. Behav. 2017, 67, 221–232.

- Yu, T.-K.; Yu, T.-Y. Modelling the factors that affect individuals’ utilisation of online learning systems: An empirical study combining the task technology fit model with the theory of planned behaviour: Modelling factors affecting e-learning systems. Br. J. Educ. Technol. 2010, 41, 1003–1017.

- Garrison, D.R.; Anderson, T.; Archer, W. Critical Inquiry in a Text-Based Environment: Computer Conferencing in Higher Education. Internet High. Educ. 1999, 2, 87–105.

- Anderson, T.; Rourke, L.; Garrison, D.; Archer, W. Assessing teaching presence in a computer conferencing context. J. Asynchronous Learn. 2001, 5, 1–17.

- Klein, J.D.; Spector, J.M.; Grabowski, B.L.; de la Teja, I. Instructor Competencies: Standards for Face-to-Face, Online, and Blended Settings; IAP: Charlotte, NC, USA, 2004.

- Bawane, J.; Spector, J.M. Prioritization of online instructor roles: Implications for competency-based teacher education programs. Distance Educ. 2009, 30, 383–397.

- Akour, I.; Alshurideh, M.; Al Kurdi, B.; Al Ali, A.; Salloum, S. Using Machine Learning Algorithms to Predict People’s Intention to Use Mobile Learning Platforms during the COVID-19 Pandemic: Machine Learning Approach. JMIR Med. Educ. 2021, 7, e24032.

- Prenkaj, B.; Velardi, P.; Stilo, G.; Distante, D.; Faralli, S. A Survey of Machine Learning Approaches for Student Dropout Prediction in Online Courses. ACM Comput. Surv. 2021, 53, 1–34.

- Lykourentzou, I.; Giannoukos, I.; Nikolopoulos, V.; Mpardis, G.; Loumos, V. Dropout prediction in e-learning courses through the combination of machine learning techniques. Comput. Educ. 2009, 53, 950–965.

- Mendez, G.; Buskirk, T.D.; Lohr, S.; Haag, S. Factors Associated with Persistence in Science and Engineering Majors: An Exploratory Study Using Classification Trees and Random Forests. J. Eng. Educ. 2008, 97, 57–70.

- Hu, Y.-H.; Lo, C.-L.; Shih, S.-P. Developing early warning systems to predict students’ online learning performance. Comput. Hum. Behav. 2014, 36, 469–478.

- Qiu, L.; Liu, Y.; Liu, Y. An Integrated Framework with Feature Selection for Dropout Prediction in Massive Open Online Courses. IEEE Access 2018, 6, 71474–71484.

- Gray, C.C.; Perkins, D. Utilizing early engagement and machine learning to predict student outcomes. Comput. Educ. 2019, 131, 22–32.

- Panagiotakopoulos, T.; Kotsiantis, S.; Kostopoulos, G.; Iatrellis, O.; Kameas, A. Early Dropout Prediction in MOOCs through Supervised Learning and Hyperparameter Optimization. Electronics 2021, 10, 1701.

- Gaudioso, E.; Montero, M.; Hernandez-del-Olmo, F. Supporting teachers in adaptive educational systems through predictive models: A proof of concept. Expert Syst. Appl. 2012, 39, 621–625.

- He, J.; Bailey, J.; Rubinstein, B.; Zhang, R. Identifying at-risk students in massive open online courses. In Proceedings of the AAAI Conference on Artificial Intelligence 2015, Austin, TX, USA, 25–30 January 2015.

- Wright, R.E. Logistic regression. In Reading and Understanding Multivariate Statistics; American Psychological Association: Washington, DC, USA, 1995; pp. 217–244.

- Grüning, M.; Kropf, S. A Ridge Classification Method for High-dimensional Observations. In From Data and Information Analysis to Knowledge Engineering; Springer: Berlin, Germany, 2006; pp. 684–691.

- Gunn, S.R. Support vector machines for classification and regression. ISIS Tech. Rep. 1998, 14, 5–16.

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Routledge: New York, NY, USA, 2017.

- Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. Ann. Stat. 2001, 29, 1189–1232.

- Hastie, T.; Rosset, S.; Zhu, J.; Zou, H. Multi-class AdaBoost. Stat. Its Interface 2009, 2, 349–360.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence 2001, Washington, DC, USA, 4 August 2001.

- Hinton, G.E. Connectionist learning procedures. Artif. Intell. 1989, 40, 185–234.

- Louppe, G. Understanding random forests: From theory to practice. arXiv 2014, arXiv:1407.7502.

- Joshi, M.V. On evaluating performance of classifiers for rare classes. In Proceedings of the 2002 IEEE International Conference on Data Mining, 2002 Proceedings, Maebashi City, Japan, 9–12 December 2002.

- Midi, H.; Sarkar, S.K.; Rana, S. Collinearity diagnostics of binary logistic regression model. J. Interdiscip. Math. 2010, 13, 253–267.

- Pierson, M.E.; Cozart, A. Case Studies of Future Teachers. J. Comput. Teach. Educ. 2004, 21, 59–63.

- Li, J.; Qin, C.; Zhu, Y. Online teaching in universities during the COVID-19 epidemic: A study of the situation, effectiveness and countermeasures. Procedia Comput. Sci. 2021, 187, 566–573.

- Baran, E.; Correia, A.-P.; Thompson, A.D. Tracing Successful Online Teaching in Higher Education: Voices of Exemplary Online Teachers. Teach. Coll. Rec. 2013, 115, 1–41.

- Heymann, R.; Risinamhodzi, D.T. A continuous feedback system during COVID-19 online teaching. In Proceedings of the 2021 IEEE Global Engineering Education Conference (EDUCON), Vienna, Austria, 21–23 April 2021.

- Lynch, J. Teaching Presence; Pearson Education: London, UK, 2016; Available online: https://www.pearson.com/content/dam/one-dot-com/one-dot-com/ped-blogs/wp-content/pdfs/INSTR6230_TeachingPresence_WP_f.pdf (accessed on 17 October 2022).

- Bower, B.L. Distance education: Facing the faculty challenge. Online J. Distance Learn. Adm. 2001, 4, 1–6.

- Chen, T.; Peng, L.; Jing, B.; Wu, C.; Yang, J.; Cong, G. The Impact of the COVID-19 Pandemic on User Experience with Online Education Platforms in China. Sustainability 2020, 12, 7329.

- Wang, K.; Zhang, L.; Ye, L. A nationwide survey of online teaching strategies in dental education in China. J. Dent. Educ. 2021, 85, 128–134.

- Gurley, L.E. Educators’ Preparation to Teach, Perceived Teaching Presence, and Perceived Teaching Presence Behaviors in Blended and Online Learning Environments. Online Learn. 2018, 22, 197–220.

- Surry, D.W.; Farquhar, J.D. Adoption Analysis and User-Oriented Instructional Development. In Proceedings of the 1995 Annual National Convention of the Association for Educational Communications and Technology (AECT), Anaheim, CA, USA, 8–12 February 1995.

| Teacher Roles | Tasks and Activities |
|---|---|
| R1: Instructional design and presenting content | Design instructional strategies; Develop appropriate learning resources; Offer specific ideas/expert and scholarly knowledge; Demonstrate effective presentation |
| R2: Managing social interaction | Promotion of relationships of trust and mutual commitment among students; Enhancement of cordial and warm relations between teacher and students; Resolution of group conflicts among students; Facilitation of personal or professional knowledge sharing among students |
| R3: Learning assessment | Correction of students’ misunderstanding of content; Providing students with information about assessment (grades, correct answers, and/or evaluation criteria); Resolution of questions from students about the content; Monitoring and evaluation of students’ individual and group activities |
| R4: Learning support | Guidance and regulation of students’ individual study processes; Control and monitoring of students’ learning pace and learning periods; Guidance, monitoring, and evaluation of students’ participation in learning activities |
| R5: Guiding the use of technology | Guidance of students in the use of the virtual learning environment; Regulation of an appropriate use of technology by students; Design of certain technological tools for learning; Decision to integrate new technological tools into the existing virtual environment |

| Feature Name | Description | Teacher Role |
|---|---|---|
| Avg_AttendRate | Monitor the average rate of students attending online classes over a certain time period | R4 |
| Num_Material | The number of course materials (mainly PPTs) a lecturer uploads over a certain time period | R1 |
| Avg_ReadRate | Monitor the average rate of students reading the provided materials over a certain time period | R4 |
| Num_Test | The number of formal test papers a lecturer uploads for student assessment over a certain time period | R3 |
| Avg_CompleteRate | Monitor the average rate of students completing uploaded papers over a certain time period | R4 |
| Num_Bulletin | The number of bulletins a lecturer issues to inform students of learning arrangements over a certain time period | R4 |
| Avg_ViewRate | Monitor the average rate of students viewing the issued bulletins over a certain time period | R4 |
| Num_Exercise | The number of exercises presented by a teacher to test and correct his/her student’s learning during a live broadcast in class over a certain time period | R3 |
| Num_Writing | The number of pieces of writing submitted by a lecturer or his/her students to an e-learning wall for sharing ideas during a live broadcast in class over a certain time period | R2 |
| Num_Bulletchat | The number of pieces of bullet chats between a lecturer and his/her students during a live broadcast in class over a certain time period | R2 |
| Num_RedEnvelope | The number of times a lecturer rewards his/her students performing well with money during a live broadcast in class over a certain time period | R4 |
| Num_PuzzledPPT | Monitor the number of incomprehensible PPT slides students reported over a certain time period | R3 |
| Num_Call | The number of roll calls a lecturer made randomly to interact with a particular student during a live broadcast in class over a certain time period | R2 |
| Num_Type | The number of types of activities a lecturer performed on the platform over a certain time period | R5 |
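
The feature-to-role mapping in the table above can be held in a small lookup structure, which makes it easy to see how many features stand for each teacher role. The sketch below only transcribes the table; the names `FEATURE_ROLES` and `features_by_role` are hypothetical and do not come from the source.

```python
from collections import defaultdict

# Feature name -> teacher role, transcribed from the feature table above.
FEATURE_ROLES = {
    "Avg_AttendRate": "R4",
    "Num_Material": "R1",
    "Avg_ReadRate": "R4",
    "Num_Test": "R3",
    "Avg_CompleteRate": "R4",
    "Num_Bulletin": "R4",
    "Avg_ViewRate": "R4",
    "Num_Exercise": "R3",
    "Num_Writing": "R2",
    "Num_Bulletchat": "R2",
    "Num_RedEnvelope": "R4",
    "Num_PuzzledPPT": "R3",
    "Num_Call": "R2",
    "Num_Type": "R5",
}


def features_by_role(feature_roles):
    """Group feature names under the teacher role they are mapped to."""
    grouped = defaultdict(list)
    for feature, role in feature_roles.items():
        grouped[role].append(feature)
    # Sort by role label so the output reads R1..R5.
    return dict(sorted(grouped.items()))


grouped = features_by_role(FEATURE_ROLES)
for role, features in grouped.items():
    print(role, len(features), ", ".join(features))
```

Grouping this way shows that the roles are unevenly represented: R4 (learning support) is covered by six of the fourteen features, while R1 and R5 are each covered by one.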

| Classifier | Track1 Accuracy | Track1 Precision | Track1 Recall | Track1 F1-Score | Track1 AUC | Track2 Accuracy | Track2 Precision | Track2 Recall | Track2 F1-Score | Track2 AUC |
|---|---|---|---|---|---|---|---|---|---|---|
| LR | 0.75 | 0.75 | 0.75 | 0.74 | 0.74 | 0.76 | 0.76 | 0.76 | 0.75 | 0.75 |
| Ridge | 0.71 | 0.70 | 0.71 | 0.70 | 0.72 | 0.75 | 0.74 | 0.75 | 0.74 | 0.74 |
| SVC | 0.75 | 0.75 | 0.75 | 0.74 | 0.75 | 0.77 | 0.77 | 0.77 | 0.76 | 0.76 |
| CART | 0.75 | 0.77 | 0.75 | 0.73 | 0.72 | 0.75 | 0.76 | 0.75 | 0.74 | 0.71 |
| GBDT | 0.73 | 0.73 | 0.73 | 0.73 | 0.75 | 0.72 | 0.71 | 0.71 | 0.71 | 0.76 |
| AdaBoost | 0.75 | 0.75 | 0.75 | 0.75 | 0.77 | 0.75 | 0.75 | 0.75 | 0.75 | 0.77 |
| RF | 0.72 | 0.73 | 0.72 | 0.72 | 0.75 | 0.71 | 0.72 | 0.71 | 0.71 | 0.78 |
| GNB | 0.74 | 0.76 | 0.74 | 0.71 | 0.73 | 0.76 | 0.78 | 0.76 | 0.74 | 0.74 |
| MLP | 0.77 | 0.77 | 0.77 | 0.76 | 0.77 | 0.77 | 0.77 | 0.77 | 0.76 | 0.79 |
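
The accuracy, precision, recall, and F1 columns above can be computed from true and predicted labels as sketched below. The source as shown here does not say how per-class values were averaged. Support-weighted averaging is assumed, partly because recall matches accuracy in nearly every entry of the table, which is what weighted recall produces. The function name `weighted_scores` and the toy labels are hypothetical.

```python
from collections import Counter


def weighted_scores(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1."""
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    support = Counter(y_true)  # number of true samples per class
    accuracy = sum(t == p for t, p in pairs) / n

    precision = recall = f1 = 0.0
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        p_l = tp / (tp + fp) if tp + fp else 0.0
        r_l = tp / (tp + fn) if tp + fn else 0.0
        f_l = 2 * p_l * r_l / (p_l + r_l) if p_l + r_l else 0.0
        weight = support[label] / n  # class share of the true labels
        precision += weight * p_l
        recall += weight * r_l
        f1 += weight * f_l

    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Toy labels for illustration only; not data from the study.
y_true = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 1, 0, 1, 1, 0, 0]
result = weighted_scores(y_true, y_pred)
print({name: round(value, 3) for name, value in result.items()})
```

With weighted averaging, each class's recall is multiplied by its share of the true labels, so the weighted sum is the total number of correct predictions divided by the sample count, which is accuracy by definition. Precision and F1 carry no such identity and can differ from accuracy, as they do for CART and GNB in the table.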

| Feature | VIF | Feature | VIF |
|---|---|---|---|
| Avg_AttendRate | 4.04 | Num_Exercise | 1.54 |
| Num_Material | 2.09 | Num_Writing | 1.06 |
| Avg_ReadRate | 2.08 | Num_Bulletchat | 1.35 |
| Num_Test | 7.32 | Num_RedEnvelope | 1.04 |
| Avg_CompleteRate | 7.05 | Num_PuzzledPPT | 1.37 |
| Num_Bulletin | 2.49 | Num_Call | 1.07 |
| Avg_ViewRate | 3.14 | Num_Type | 4.76 |
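
The variance inflation factor of feature j is defined as VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing feature j on all other features, so each VIF in the table above implies an R_j^2 of 1 - 1/VIF_j. The sketch below applies that conversion to the reported values. The cutoff of 5 is a common rule of thumb and is an assumption here, since the threshold the authors applied is not shown in this section; the names `implied_r2` and `flagged` are hypothetical.

```python
# VIF values transcribed from the table above.
VIF = {
    "Avg_AttendRate": 4.04,
    "Num_Material": 2.09,
    "Avg_ReadRate": 2.08,
    "Num_Test": 7.32,
    "Avg_CompleteRate": 7.05,
    "Num_Bulletin": 2.49,
    "Avg_ViewRate": 3.14,
    "Num_Exercise": 1.54,
    "Num_Writing": 1.06,
    "Num_Bulletchat": 1.35,
    "Num_RedEnvelope": 1.04,
    "Num_PuzzledPPT": 1.37,
    "Num_Call": 1.07,
    "Num_Type": 4.76,
}

ASSUMED_CUTOFF = 5.0  # rule-of-thumb threshold, not taken from the source


def implied_r2(vif):
    """Invert VIF = 1 / (1 - R^2) to recover R^2."""
    return 1.0 - 1.0 / vif


r2 = {name: implied_r2(value) for name, value in VIF.items()}
flagged = sorted(name for name, value in VIF.items() if value > ASSUMED_CUTOFF)

# List features from most to least collinear.
for name, value in sorted(VIF.items(), key=lambda item: -item[1]):
    print(f"{name:17s} VIF={value:5.2f}  implied R^2={r2[name]:.3f}")
print("Above assumed cutoff:", flagged)
```

Under this assumed cutoff only Num_Test and Avg_CompleteRate stand out, and no feature exceeds the looser rule-of-thumb value of 10.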

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, Y.; Guo, F. Exploring Useful Teacher Roles for Sustainable Online Teaching in Higher Education Based on Machine Learning. Sustainability 2022, 14, 14006. https://doi.org/10.3390/su142114006