1. Introduction

Breast cancer treatment can be highly effective, especially when the disease is caught early. Most breast cancer therapies combine surgical excision, radiation therapy, and medication; the medication is intended to target microscopic cancers that have spread from the breast tumor through the bloodstream. Such treatment can halt cancer growth and spread and thereby save lives. In 2020, 2.3 million women were diagnosed with breast cancer and 685,000 people died of the disease worldwide, and by the end of that year breast cancer had become the most common cancer in the world, according to the World Health Organization (WHO) [1]. Over the preceding five years, 7.8 million cases of breast cancer were diagnosed. Breast cancer, which develops in the cells of the breast, is one of the most prevalent forms of cancer in women and, together with lung cancer, one of the most common cancers overall. Breast cancer types can be distinguished under a microscope. The two most frequent kinds are invasive ductal carcinoma (IDC) and ductal carcinoma in situ (DCIS); DCIS develops more slowly and has a smaller impact on patients’ daily lives. IDC is diagnosed in as many as 80% of breast cancer cases. It is more dangerous, and consequently more lethal, because it spreads into the surrounding breast tissue, whereas DCIS accounts for between 20% and 53% of cases. Breast cancer is responsible for more disability-adjusted life years lost by women than any other type of cancer [2].

Breast cancer can strike women at any age after puberty in any part of the world, although the risk increases with age. Between 1930 and 1970, breast cancer mortality remained largely stable; survival began to improve in the 1980s in countries that adopted early detection programs combined with a variety of treatment options to eradicate invasive disease. Breast cancer is not contagious, and unlike cancers with infection-related causes, such as human papillomavirus (HPV) infection and cervical cancer, it has no known link to viral or bacterial infections. Nearly half of all breast cancer cases occur in women with no identifiable risk factor other than sex (female) and age (over 40 years). The risk is higher in women who have a family history of the disease, drink excessive amounts of alcohol, or use hormone therapy after menopause. Breast cancer most commonly presents as a painless lump or thickening of the breast, and women should seek medical attention as soon as they notice a breast lump, even if it is not painful [3]. Breast lumps can arise for several reasons, and about 90% of them are benign; non-cancerous breast abnormalities include infections and benign tumors such as fibroadenomas and cysts. Nevertheless, a thorough medical examination is required [4].

Breast imaging and, in certain instances, the removal of a tissue sample can be used to determine whether a tumor is cancerous or benign. Tests such as breast imaging and tissue sampling (biopsy) should be performed on women with persistent abnormalities (typically lasting more than one month) [5]. Over the past several decades, machine learning algorithms for the diagnosis and classification of breast cancer have advanced considerably. These methods comprise three processes: preprocessing, feature extraction, and classification. Preprocessing mammography films to make the peripheral areas and intensity distributions of the images more visible can improve interpretation and analysis. Many different strategies have been described, and quite a few of them are effective [6]. Machine learning is becoming so widespread that it will likely soon be commercialized as a service. However, it remains a difficult field that almost always calls for specialized knowledge and skills: building a reliable machine learning method requires a wide range of expertise [7], including preprocessing, feature engineering, and classification approaches.

Breast cancer is treatable, and a positive prognosis is possible if it is caught and treated in its early stages. The availability of adequate screening technologies is therefore essential to the early detection of the signs and symptoms of breast cancer. Mammography, ultrasound imaging, and thermography are three of the most common imaging procedures used in screening and diagnosis, although other imaging methods may also be used. Mammography is one of the most effective ways to detect breast cancer at an early stage, but it is also one of the costliest. Ultrasound (diagnostic sonography) is gaining popularity as an alternative to mammography for women with dense breast tissue, for whom mammography is much less effective. Thermography can find small cancerous tumors without exposing the patient to X-ray radiation and may be better at finding them than ultrasonography.

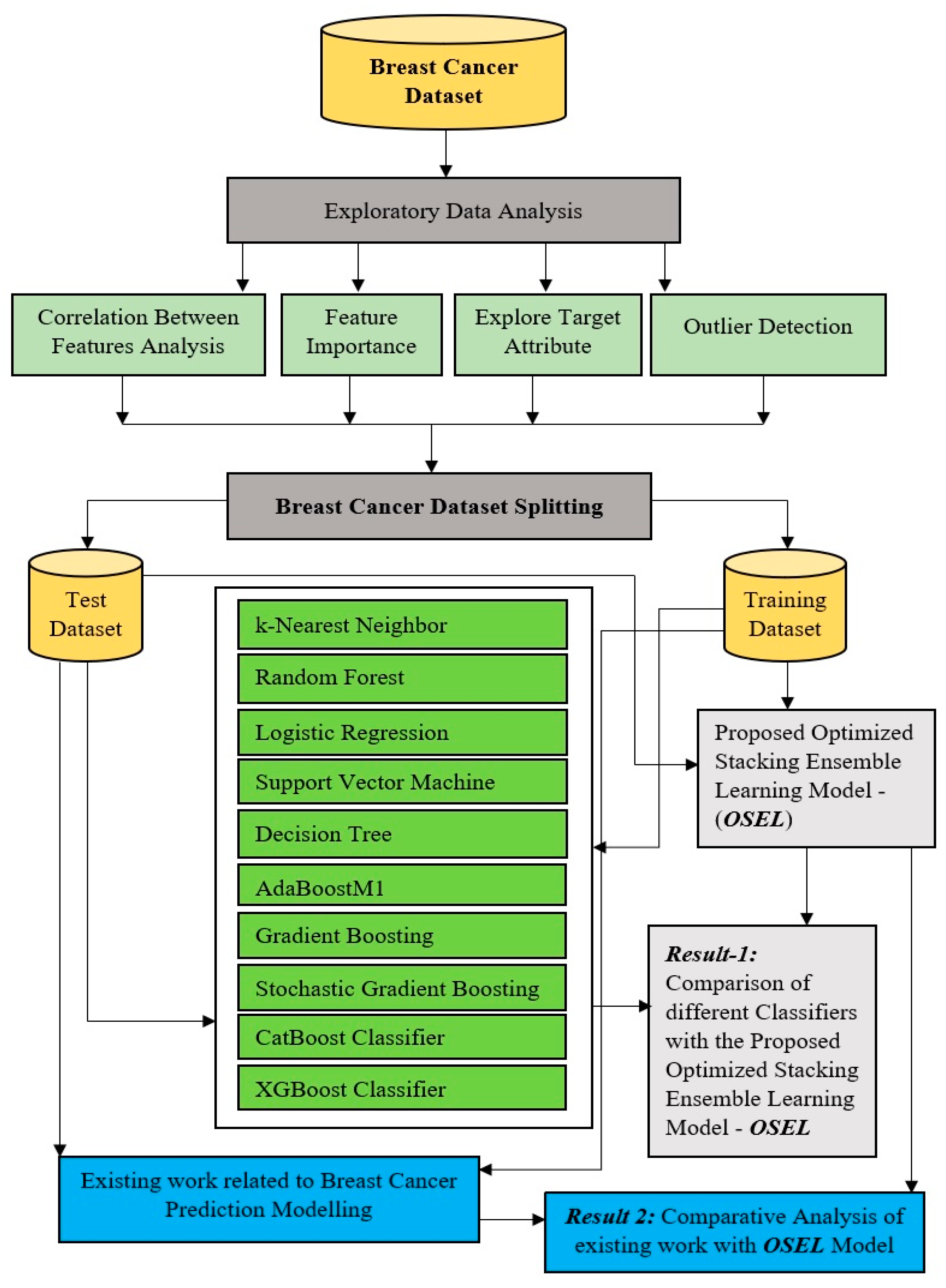

Data mining can benefit a wide range of industries, including medicine, agriculture, and smart cities, and its results can be put to good use by the general public. Previous research has used several classification methods, such as k-nearest neighbor, random forest, logistic regression, support vector machine, and decision tree. Ensemble learning is the use of several learning algorithms together to solve a single problem. In contrast with the traditional single prediction models used to predict the class label in a classification task, this study proposes a stacking ensemble approach that also determines the base classifiers, as a replacement for those traditional models.

1.1. Stacking Ensemble Learning Architecture

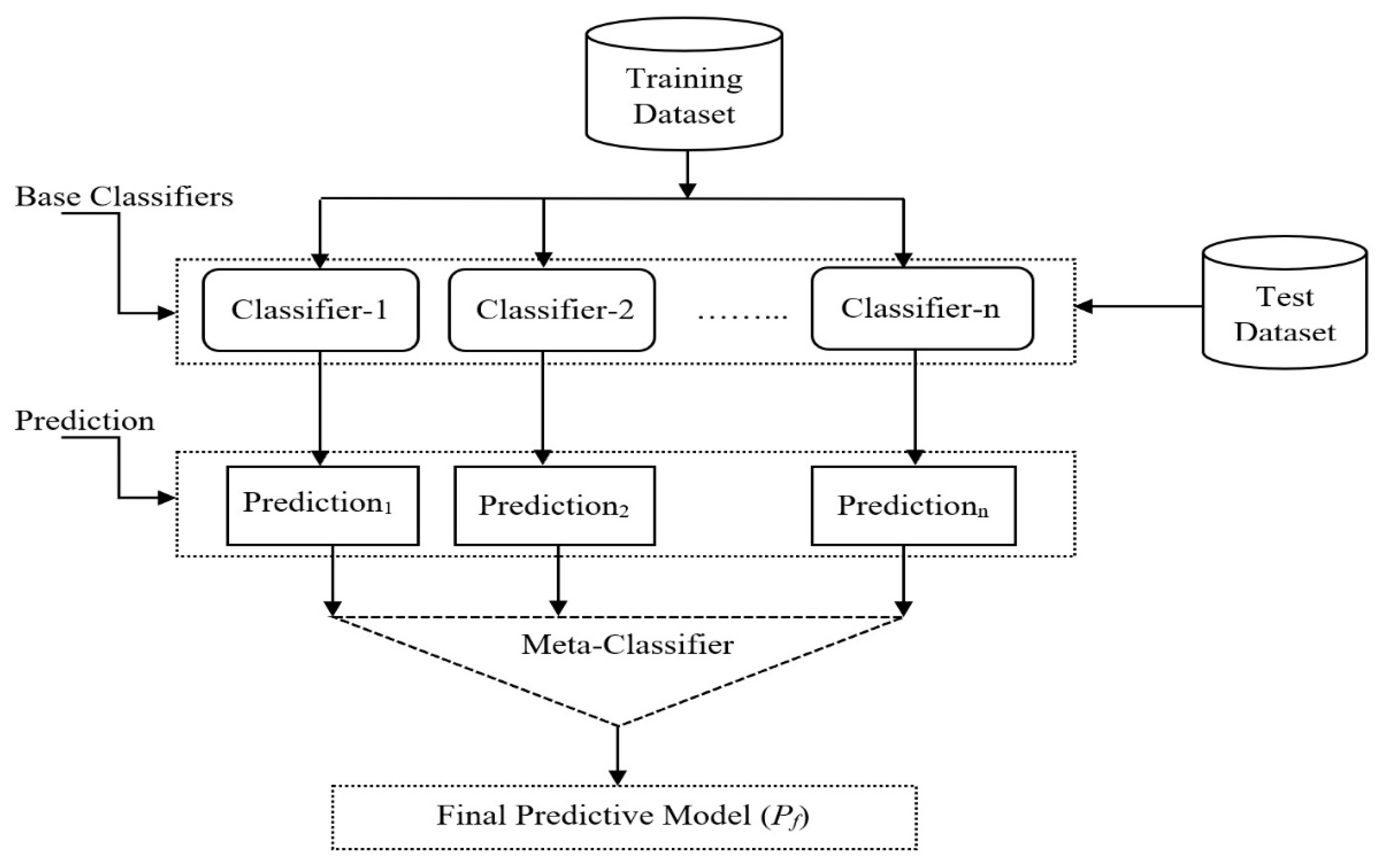

The architecture of the stacking ensemble model is depicted in Figure 1. Stacking ensemble learning consists of base classifiers and a meta-classifier, and data are used for classification at two levels: the base classifiers are trained on the training set, and the meta-classifier is trained on the meta-data formed by the base classifiers’ predictions and makes the final prediction. The Netflix Prize team known as “The Ensemble,” whose submission tied the winning entry in accuracy, employed this strategy. To guarantee a wide diversity of components, heterogeneous ensemble methods employ several distinct base learning algorithms, such as support vector machines, artificial neural networks, and decision trees. Stacking is a well-known heterogeneous ensemble method used in a variety of applications. Like boosting, it combines multiple learners; unlike boosting, it learns how to combine heterogeneous models through a meta-classifier.
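To make the two-level data flow concrete, here is a minimal, dependency-free Python sketch of stacking for 0/1 labels. The choice of base learners (1-nearest-neighbour and nearest-centroid), the logistic-regression meta-classifier, and the interleaved 3-fold scheme for building out-of-fold meta-features are all illustrative assumptions, not the configuration used in this study.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class OneNN:
    """Base learner: predicts the label of the closest training sample."""
    def fit(self, X, y):
        self.X, self.y = list(X), list(y)
        return self

    def predict(self, X):
        return [self.y[min(range(len(self.X)), key=lambda i: euclidean(x, self.X[i]))]
                for x in X]

class NearestCentroid:
    """Base learner: predicts the class whose mean feature vector is closest."""
    def fit(self, X, y):
        self.centroids = {}
        for label in set(y):
            rows = [x for x, t in zip(X, y) if t == label]
            self.centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, X):
        return [min(self.centroids, key=lambda c: euclidean(x, self.centroids[c]))
                for x in X]

class LogisticMeta:
    """Meta-classifier: logistic regression (SGD) on the base learners' 0/1 outputs."""
    def fit(self, Z, y, lr=0.5, epochs=300):
        self.w = [0.0] * (len(Z[0]) + 1)          # last weight is the bias
        for _ in range(epochs):
            for z, t in zip(Z, y):
                err = t - self._prob(z)
                for j, v in enumerate(list(z) + [1.0]):
                    self.w[j] += lr * err * v
        return self

    def _prob(self, z):
        s = sum(w * v for w, v in zip(self.w, list(z) + [1.0]))
        s = max(-50.0, min(50.0, s))              # avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-s))

    def predict(self, Z):
        return [1 if self._prob(z) >= 0.5 else 0 for z in Z]

def stack_fit_predict(base_classes, meta, X, y, X_test, k=3):
    """Build out-of-fold meta-features, train the meta-classifier on them,
    then predict X_test with base learners refitted on all training data."""
    n = len(X)
    Z = [[0] * len(base_classes) for _ in range(n)]
    for r in range(k):
        fold = list(range(r, n, k))               # interleaved folds
        held = set(fold)
        X_tr = [X[i] for i in range(n) if i not in held]
        y_tr = [y[i] for i in range(n) if i not in held]
        for j, cls in enumerate(base_classes):
            preds = cls().fit(X_tr, y_tr).predict([X[i] for i in fold])
            for i, p in zip(fold, preds):
                Z[i][j] = p                       # made without seeing sample i
    meta.fit(Z, y)
    fitted = [cls().fit(X, y) for cls in base_classes]
    Z_test = [list(row) for row in zip(*(m.predict(X_test) for m in fitted))]
    return meta.predict(Z_test)
```

The out-of-fold step matters: if the meta-classifier were trained on predictions the base learners made for their own training samples, it would learn to trust overfitted outputs.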

1.2. Motivation for This Study

Many previously published studies relied on data-centric methods rather than on a structured process for carrying out discoveries, which underlines the importance of the present investigation. Most of the time, authors focus on single-level classification problems, and the machine learning models they apply are tailored to the datasets they use. Several early studies omitted the preprocessing phases altogether and employed either a single feature selection strategy or several feature selection approaches. Most of these efforts were driven by predefined objectives. Ordinarily, a machine learning expert determines which approach is optimal for the problem domain at hand, and non-experts must put in a substantial amount of effort to optimize models and achieve the desired performance. The proposed methodology instead decides automatically which preprocessing and classification methods and parameters to use. By combining a dozen different methods, this research moves closer to its goal of automating the construction of machine learning models.

1.3. Problem Statement

One in every eight women will be diagnosed with breast cancer during her lifetime, making it the most common health risk for women in their forties. Although breast cancer is a leading cause of death among women worldwide, identifying and diagnosing it early can be very difficult. Classification-based methods are being used increasingly in medical diagnostics, and cancer studies are the primary focus of efforts to apply modern techniques from bioinformatics, statistics, and machine learning to achieve more precise and faster diagnosis. As predictive and personalized medicine becomes more important, there is a fast-growing need for machine learning-driven models in cancer research that make predictions and determine a patient’s prognosis.

The rest of the paper is organized as follows:

Section 2 reviews past work on ensemble learning.

Section 3 describes the preprocessing performed on the identified datasets.

Section 4 explains the proposed approach.

Section 5 presents the implementation of the proposed solution.

Section 6 includes a comparison of the proposed model to the existing models.

Section 7 concludes the analysis and discusses directions for future work.

2. Related Work

Data have displaced conventional centers of power. Data mining has driven massive growth in the use of data analysis across all fields, with applications that include identifying patterns and trends in areas such as health care and social media. Kharya, S. et al. [8] modified the naïve Bayes classifier (NBC) for breast cancer diagnosis by introducing weights, yielding a new prediction model, the weighted naïve Bayes classifier (WNBC). They implemented the framework on a benchmark dataset and compared its performance with that of the non-weighted NBC and recently available models such as the radial basis function (RBF) network, the weighted associative classifier (WAC), and feature-weighted association classification (FWAC). Chaurasia, V. et al. [9] applied three well-known data mining methods (naïve Bayes, RBF networks, and J48) to the Wisconsin breast cancer dataset (WBCD). Naïve Bayes performed best, with a classification accuracy of 97.36%, followed by the RBF network (96.77%) and J48 (93.41%). Verma, D. et al. [10] used data from the University of California, Irvine (UCI) machine learning repository to classify two diseases, breast cancer and diabetes, using methods such as naïve Bayes and sequential minimal optimization (SMO). Ojha, U. et al. [11] applied data mining techniques to assess the chance of breast cancer recurrence and emphasized the importance of parameter selection. They compared clustering and classification methods and found that classification techniques outperformed clustering.
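Naïve Bayes recurs throughout these studies. As an illustration only, the sketch below implements a Gaussian naïve Bayes classifier in plain Python with optional per-feature weights that scale each feature's log-likelihood. This is one common way to weight NB and is an assumption here; it is not claimed to be the WNBC formulation of Kharya, S. et al. [8]. With all weights equal to 1 it reduces to the standard NBC.

```python
import math
from collections import defaultdict

class WeightedGaussianNB:
    """Gaussian naive Bayes; optional per-feature weights scale each feature's
    log-likelihood (equivalently, raise its likelihood to the power w_j)."""

    def __init__(self, weights=None, var_floor=1e-6):
        self.weights = weights        # hypothetical weights; None means all 1.0 (plain NBC)
        self.var_floor = var_floor    # keeps variances away from zero

    def fit(self, X, y):
        groups = defaultdict(list)
        for x, label in zip(X, y):
            groups[label].append(x)
        self.stats = {}
        for label, rows in groups.items():
            cols = list(zip(*rows))
            means = [sum(c) / len(rows) for c in cols]
            variances = [max(sum((v - m) ** 2 for v in c) / len(rows), self.var_floor)
                         for c, m in zip(cols, means)]
            self.stats[label] = (math.log(len(rows) / len(X)), means, variances)
        if self.weights is None:
            self.weights = [1.0] * len(X[0])
        return self

    def log_posterior(self, x, label):
        """Unnormalized log P(label | x) = log prior + sum_j w_j * log N(x_j; mean, var)."""
        log_prior, means, variances = self.stats[label]
        total = log_prior
        for w, v, m, s2 in zip(self.weights, x, means, variances):
            total += w * (-0.5 * math.log(2 * math.pi * s2) - (v - m) ** 2 / (2 * s2))
        return total

    def predict(self, X):
        return [max(self.stats, key=lambda c: self.log_posterior(x, c)) for x in X]
```

Setting a feature's weight to 0 removes it from the decision entirely, which is the intuition behind weighting: features believed to be more informative contribute more to the posterior.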

Kumar, V. et al. [12] compared two machine learning algorithms to develop a breast cancer classifier that distinguishes between benign and malignant tumors, using the Wisconsin breast cancer diagnosis dataset. The authors also addressed classifier overfitting and underfitting, data interpretation, and the handling of missing values. Sahu, B. et al. [13] used neural networks to classify breast cancer data, examining and assessing various artificial neural network methods for breast cancer detection. Abdar, M. et al. [14] selected the top three classifiers based on their F3 scores; because the F3 score weights recall more heavily than precision, it emphasizes false negatives in breast cancer classification. Ensemble classification was then performed with these three classifiers and a voting mechanism. Both hard (majority-based) voting and soft voting were tested; for soft voting, the class probabilities were averaged or multiplied, and the maximum and minimum rules were also used. With hard voting, the ensemble reached an accuracy of 99.42% on the WBCD.
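Hard voting and the soft-voting combination rules described above differ only in what they aggregate: hard voting counts each classifier's predicted label, while soft voting combines class probabilities. A minimal sketch, assuming every base classifier outputs a probability for each class; the class names and probabilities are made up for illustration.

```python
import math
from collections import Counter

def hard_vote(labels):
    """Majority vote over predicted labels (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

# Soft-voting rules applied to one class's probabilities across classifiers.
RULES = {
    "mean": lambda ps: sum(ps) / len(ps),
    "product": math.prod,
    "max": max,
    "min": min,
}

def soft_vote(prob_dicts, rule="mean"):
    """prob_dicts: one {class: probability} dict per base classifier."""
    combine = RULES[rule]
    scores = {c: combine([p[c] for p in prob_dicts]) for c in prob_dicts[0]}
    return max(scores, key=scores.get)

if __name__ == "__main__":
    probs = [  # made-up outputs of three base classifiers for one patient
        {"benign": 0.90, "malignant": 0.10},
        {"benign": 0.40, "malignant": 0.60},
        {"benign": 0.45, "malignant": 0.55},
    ]
    labels = [max(p, key=p.get) for p in probs]   # each classifier's own decision
    print(hard_vote(labels))                      # malignant (2 of 3 votes)
    for rule in RULES:
        print(rule, soft_vote(probs, rule))       # benign under all four rules
```

The toy case shows why the choice matters: two weakly confident "malignant" votes win a hard vote, but one very confident "benign" classifier dominates every soft-voting rule.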

Abdar, M. et al. [15] proposed recursive ensemble classifiers and evaluated the classification accuracy, precision, recall, and computation time of single and nested ensemble classifiers, also comparing the proposed models’ accuracy with that of other well-known models. The proposed two-layer ensemble models outperformed single classifiers: SV-BayesNet-3 and Naïve Bayes-3 were both 98% accurate, but SV-Naive Bayes-3-MetaClassifier was built much more quickly. Jeyasingh, S. et al. [16] proposed a modified bat technique (MBT) as a feature selection process for removing redundant attributes from a dataset. They modified the bat algorithm to select data points from the dataset by simple random sampling, ranked the features using the global-best criterion to identify the most prominent ones, and built a random forest classifier on these features. The MBT feature selection technique makes breast cancer detection easier. The jointly sparse discriminant analysis (JSDA) technique proposed by Kong, H. et al. [17] not only improves diagnostic accuracy compared with classic feature extraction and discriminant analysis algorithms but also learns jointly sparse discriminant vectors that reveal the critical elements of breast cancer pathologic diagnosis. On sparse breast cancer datasets, JSDA outperforms several well-known subspace learning algorithms, even when sample counts are low, and an analysis of its results shows that the main breast cancer risk factors it identifies are realistic.

Toner, E. et al. [18] found that, for determining the textural characteristics of mammograms, gray-level co-occurrence matrix (GLCM) features calculated along the 0° direction yielded the most accurate results. The best features were then selected and used to train an artificial neural network (ANN) for classification; ANNs are used in a wide range of applications besides medical diagnosis and pattern recognition. The investigation used the mini-MIAS database and achieved 99.3% sensitivity, 100% specificity, and 99.4% accuracy. Early cancer detection is essential for reducing the death rate from breast cancer. Tariq, N. et al. [19] also used mammograms from the mini-MIAS database, which contains 322 mammograms, of which 270 are non-malignant and 52 are malignant. After ten texture features were calculated from the GLCM along 0°, a ranked-features approach reduced their number to six. This technique achieved 99.4% overall accuracy, with 100% accuracy on the validation and test data. A novel sliding-window feature extraction technique based on local binary pattern (LBP) features was presented by Alqudah, A. et al. [20]: it produces 25 sliding windows for each image, and the features extracted from each window are saved and used to build a support vector machine (SVM) classifier.
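The GLCM features used in [18] and [19] count how often pairs of gray levels occur next to each other. A minimal sketch for the 0° direction (each pixel paired with its right-hand neighbour at distance 1), assuming the image has already been quantized to a small number of gray levels. The three statistics shown (contrast, angular second moment, homogeneity) are standard Haralick-style measures and are not necessarily the exact ten features used in [19].

```python
def glcm_0deg(image, levels, symmetric=True):
    """Normalized GLCM for the 0-degree offset (each pixel vs. its right-hand neighbour).
    `image` is a list of rows of integer gray levels in range(levels)."""
    counts = [[0] * levels for _ in range(levels)]
    for row in image:
        for a, b in zip(row, row[1:]):
            counts[a][b] += 1
            if symmetric:              # also count the pair read right-to-left (180 degrees)
                counts[b][a] += 1
    total = sum(map(sum, counts))
    return [[c / total for c in r] for r in counts]

def texture_features(P):
    """Three common Haralick-style statistics of a normalized GLCM P."""
    feats = {"contrast": 0.0, "asm": 0.0, "homogeneity": 0.0}
    for i, row in enumerate(P):
        for j, p in enumerate(row):
            feats["contrast"] += (i - j) ** 2 * p           # local intensity variation
            feats["asm"] += p * p                           # angular second moment (uniformity)
            feats["homogeneity"] += p / (1 + (i - j) ** 2)  # closeness to the diagonal
    return feats

if __name__ == "__main__":
    img = [[0, 0, 1],
           [1, 1, 0]]                  # toy 2x3 image already quantized to 2 gray levels
    print(texture_features(glcm_0deg(img, levels=2)))
    # {'contrast': 0.5, 'asm': 0.25, 'homogeneity': 0.75}
```

Real mammograms would first be quantized (for example, from 256 to 8 or 16 gray levels) so that the matrix stays small and its entries are not too sparse.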

In the approach of Alqudah, A. et al. [20], the SVM classifier assigns each image to the benign or malignant category according to the most prominent class among its windows. The approach can also be used to locate cancerous tissue across the whole histopathological image. It achieves overall accuracy, sensitivity, and specificity of 91.12%, 85.22%, and 94.01%, respectively. Rasti, R. et al. [21] used the ME-CNN model, which consists of three CNN experts and one convolutional gating network, to achieve 96.39% accuracy, 97.73% sensitivity, and 94.87% specificity. The experimental results show that it outperforms two convolutional ensemble methods and three existing single-classifier approaches in classification performance, and the ME-CNN model may be useful to radiologists analyzing breast DCE-MRI images. Wahab, N. et al. [22] recommended a strategy that greatly decreased training time compared with the methodology that had the best F-measure, 0.78. The method opens possibilities for using the automatic feature extraction capabilities of CNNs on imbalanced medical images to diagnose such diseases precisely, and it can be applied to other types of medical images besides breast cancer images.

Bhardwaj, A. et al. [23] proposed a genetically optimized neural network (GONN) for classification problems and used it to determine whether breast tumors are benign or malignant. The significance of the results was demonstrated by comparing GONN with the classical model and the classical backpropagation model on data from the UCI machine learning repository, using ROC curves, the area under the ROC curve (AUC), and confusion matrices. Dhahbi, S. et al. [24] showed in trials that curvelet moments are beneficial and effective for analyzing mammograms and superior to other approaches. On the mini-MIAS database, curvelet moments achieved a 91.27% accuracy rate in detecting abnormalities using 10 features, with 8 features used in the case of malignancy detection. Further tests on the DDSM database showed that their method is more accurate than all previous curvelet-based methods while reducing the total number of features.

The combined SVM and extra-trees model suggested by Alfian, G. et al. [25] attained a maximum accuracy of 80.23%, far better than the other ML models. The experimental findings revealed that, compared with models that did not use feature selection, extra-trees-based feature selection improved the average ML prediction accuracy by as much as 7.29%. To improve the accuracy of breast cancer classification, Safdar, S. et al. [26] proposed a model using machine learning techniques such as SVM, logistic regression (LR), and k-nearest neighbor (KNN). They achieved a best accuracy of 97.7%, with a false positive rate of 0.01, a false negative rate of 0.03, and an area under the ROC curve (AUC) of 0.99. Mohamed, E. A. et al. [27] proposed a two-class deep learning model, trained from scratch, that differentiates between normal and diseased breast tissue in thermal images. The model also extracts additional features from the dataset that help train the network and improve classification effectiveness, and the approach reached accuracy levels of up to 99.33%.

Table 1 lists, for each study, the publisher, the year of publication, and the algorithms used.

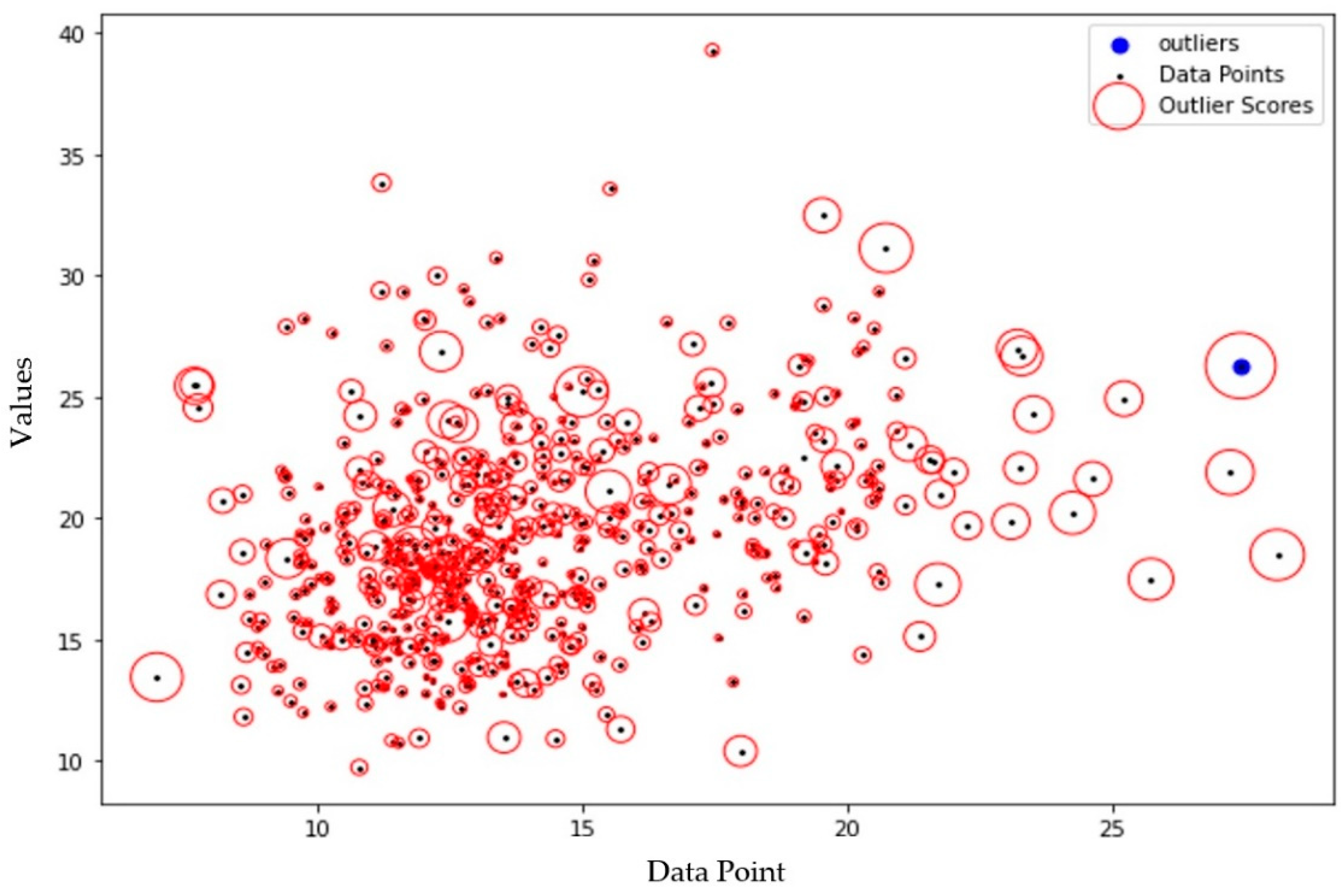

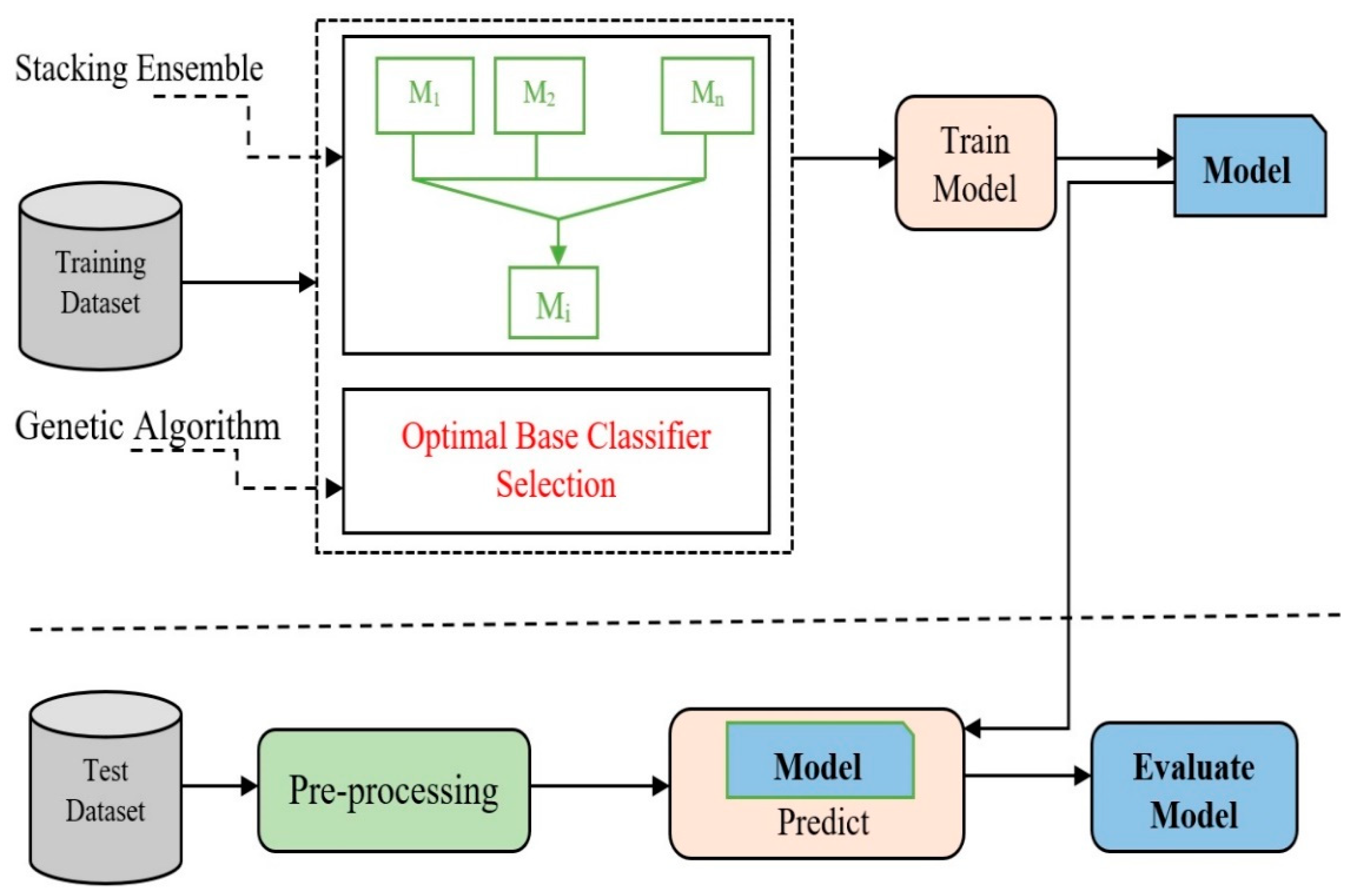

4. Proposed Optimized Stacking Ensemble Learning (OSEL) Model

Building predictive models is an iterative process that begins with a hypothesis and continues until a useful outcome is achieved, and it may require statistical analysis, data mining, or data visualization technologies. As shown in Figure 7, any machine learning approach, including deep learning methods, can serve as a base classifier for the OSEL model. Base classifiers are chosen largely according to how much they differ from one another.

For this model, we consider the following state-of-the-art classifiers as candidate base classifiers: k-nearest neighbor, random forest, logistic regression, support vector machine, decision tree, AdaBoostM1, gradient boosting, stochastic gradient boosting, and CatBoost.

Figure 7 shows how the proposed model was built from the stacking ensemble model described above and a genetic algorithm: after stacking several base classifiers, the OSEL model uses the genetic algorithm to find the best combination of them.

Figure 8 presents an architectural framework for stacking ensemble learning. A new feature matrix, generated from the base classifiers’ outputs on the training set, is used to train the meta-classifier layer, which then determines how the base classifiers’ outputs are used in the final prediction. One of the most important steps is picking the best possible combination of base classifiers.

The genetic algorithm is a heuristic optimization technique commonly used to search large input spaces. Unlike brute-force search, it does not need to enumerate every candidate to find a highly efficient solution, which avoids the performance cost of evaluating a very large number of candidates. A genetic algorithm simulates the process of natural selection, and because it is a probabilistic optimization method, it automatically steers the search toward promising regions of the search space. Once the optimization is complete, no selection rules need to be set up again by hand.
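A minimal sketch of how a genetic algorithm can search for a subset of base classifiers, assuming each candidate subset is encoded as a bit mask (1 = classifier included) and scored by a caller-supplied fitness function. Population size, tournament selection, one-point crossover, mutation rate, and elitism are illustrative choices, not the OSEL settings, which the text does not specify. In the toy fitness function, `val_preds` holds made-up validation predictions and a subset is scored by the accuracy of majority voting over its members.

```python
import random
from collections import Counter

def genetic_subset_search(n_items, fitness, pop_size=20, generations=30,
                          crossover_rate=0.9, mutation_rate=0.05, seed=0):
    """Return (best_mask, best_fitness) found by a simple generational GA."""
    rng = random.Random(seed)

    def repair(mask):                      # never allow an empty ensemble
        if not any(mask):
            mask[rng.randrange(n_items)] = 1
        return mask

    def tournament(pop, scores, k=3):      # fittest of k randomly drawn masks
        picks = rng.sample(range(len(pop)), k)
        return pop[max(picks, key=lambda i: scores[i])]

    population = [repair([rng.randint(0, 1) for _ in range(n_items)])
                  for _ in range(pop_size)]
    best, best_score = None, float("-inf")
    for _ in range(generations):
        scores = [fitness(m) for m in population]
        for m, s in zip(population, scores):
            if s > best_score:
                best, best_score = m[:], s
        children = [best[:]]               # elitism: keep the best mask found so far
        while len(children) < pop_size:
            a, b = tournament(population, scores), tournament(population, scores)
            if rng.random() < crossover_rate and n_items > 1:
                cut = rng.randrange(1, n_items)        # one-point crossover
                child = a[:cut] + b[cut:]
            else:
                child = a[:]
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(repair(child))
        population = children
    return best, best_score

# Toy fitness: made-up validation labels and predictions of four base classifiers.
y_val = [0, 1, 1, 0, 1, 0, 1, 1]
val_preds = [
    [0, 1, 1, 0, 1, 0, 1, 0],
    [0, 1, 0, 0, 1, 0, 1, 1],
    [1, 1, 1, 0, 0, 0, 1, 1],
    [1, 0, 0, 1, 0, 1, 0, 0],
]

def majority_vote_accuracy(mask):
    members = [p for p, keep in zip(val_preds, mask) if keep]
    votes = [Counter(col).most_common(1)[0][0] for col in zip(*members)]
    return sum(v == t for v, t in zip(votes, y_val)) / len(y_val)
```

In this toy data the first three classifiers make their mistakes on different samples, so together they vote correctly everywhere, while the fourth is always wrong; the search should therefore favour the mask `[1, 1, 1, 0]`. This illustrates why diversity, not only individual accuracy, drives base-classifier selection.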

Algorithm for Selecting Optimized Base Classifier for Stacking

Our proposed method for selecting optimized base classifiers for stacking uses the different base classifiers and meta-classifiers discussed in this section. Here, $f$ is the feature vector generated from the dataset, $b$ is the set of base classifiers, which are applied to different feature sets, and $d$ is the set of outputs of the trained base classifiers. The input feature vector with $n$ features is $f = [f_1, f_2, \ldots, f_n]$. Let $b = [b_1, b_2, \ldots, b_k]$ represent the $k$ base classifiers to be trained, and let $d = [d_1, d_2, \ldots, d_m]$ represent the outputs of the $m$ trained base classifiers.

The output of the $i$th trained base classifier is:

$$d_i = b_i(f)$$

The meta-classifier $mc$ is chosen from among the base classifiers in the vector $b$, so the final output $p = [p_1, p_2, \ldots, p_n]$ is represented as follows:

$$p = mc(d)$$

The actual values of the dataset samples are represented by $y = [y_1, y_2, \ldots, y_n]$, and the trained base classifiers with the highest prediction accuracy are used, since they are more effective. Using the OSEL model, it is possible to produce $k$ additional meta-classifiers in addition to the meta-classifier. The best base classifier was chosen by applying the following criterion:

where $y_i$ represents the actual value and $p_i$ represents the predicted value.
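The selection criterion itself is not reproduced in this text. Assuming, as the surrounding description suggests, that it is classification accuracy, $\mathrm{Acc} = \frac{1}{n}\sum_{i=1}^{n} \mathbb{1}[y_i = p_i]$, choosing the best trained base classifier reduces to an arg-max over accuracies. A minimal sketch; the classifier names and predictions are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted value p_i equals the actual value y_i."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def select_best(y_true, predictions):
    """Return (name, accuracy) of the trained base classifier with the highest accuracy.
    `predictions` maps a classifier name to its predicted labels."""
    scores = {name: accuracy(y_true, p) for name, p in predictions.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]

if __name__ == "__main__":
    y = [1, 0, 1, 1, 0]
    preds = {"knn": [1, 0, 0, 1, 0], "rf": [1, 0, 1, 1, 0], "dt": [0, 0, 1, 0, 0]}
    print(select_best(y, preds))   # ('rf', 1.0)
```

If the actual criterion differs (for example, an error measure to be minimized), only the scoring function and the direction of the arg-max would change.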

5. Implementation of the Proposed OSEL Model

An ensemble learning approach integrates numerous independent learning algorithms, and its performance is often superior or comparable to that of a single base classifier. As a result, it has grown in popularity and is proving to be an efficient strategy in machine learning. However, ensemble learning approaches face fundamental problems, the first of which is how to combine base classifiers that are both “excellent” (accurate) and “different” (diverse). Accuracy, recall, precision, and F1 score are four prominent measures for classification problems. Accuracy measures the overall proportion of correct predictions; recall measures the proportion of actual positive samples that are correctly predicted; precision measures the proportion of positive predictions that are correct; and the F1 score is the harmonic mean of precision and recall. A variety of classification approaches were evaluated on the Wisconsin breast cancer dataset, as presented in Table 2. Based on a close analysis of these findings, the proposed ensemble model was built on top of the selected learning models. To demonstrate its efficacy, we compared the OSEL model’s performance with that of other state-of-the-art classification algorithms: k-nearest neighbor, random forest, logistic regression, support vector machine, decision tree, AdaBoostM1, gradient boosting, stochastic gradient boosting, XGBoost classifier, and CatBoost.
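The four measures can be computed directly from confusion-matrix counts. A minimal sketch for binary labels, treating 1 (malignant) as the positive class, which is an assumption. The general F-beta score is included because beta = 3 gives the recall-weighted F3 score used by Abdar, M. et al. [14], and beta = 1 gives F1.

```python
def confusion_counts(y_true, y_pred, positive=1):
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    return tp, fp, fn, tn

def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall; beta > 1 favours recall."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)

def classification_report(y_true, y_pred, positive=1):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f_beta(precision, recall, 1.0),
        "f3": f_beta(precision, recall, 3.0),
    }
```

For example, a model that flags only 2 of 4 malignant cases and no benign ones has precision 1.0 and recall 0.5, giving F1 of about 0.667 but F3 of only about 0.526, which is why F3 is preferred when missed cancers are the costlier error.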

Table 2 summarizes the results of a variety of machine learning algorithms evaluated with k-fold cross-validation. All dataset attributes were used in developing these algorithms. In addition to accuracy, we evaluated recall, precision, and F-measure, and the proposed OSEL model outperforms the other well-known classifiers on recall, precision, and F-measure.
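k-fold cross-validation, used for the results in Table 2, can be sketched as follows. This dependency-free version assumes a model class with `fit`/`predict` methods; the shuffling seed, the default k = 5, and the toy majority-class baseline are illustrative choices, since this section does not state the value of k used in the experiments.

```python
import random
from collections import Counter

def k_fold_indices(n, k, seed=0):
    """Shuffle 0..n-1 and split into k folds whose sizes differ by at most one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_val_accuracy(make_model, X, y, k=5, seed=0):
    """Train on k-1 folds, test on the held-out fold, repeat k times; return
    the mean accuracy and the per-fold accuracies."""
    if not 2 <= k <= len(X):
        raise ValueError("k must be between 2 and the number of samples")
    scores = []
    for fold in k_fold_indices(len(X), k, seed):
        held = set(fold)
        train = [i for i in range(len(X)) if i not in held]
        model = make_model().fit([X[i] for i in train], [y[i] for i in train])
        preds = model.predict([X[i] for i in fold])
        scores.append(sum(p == y[i] for p, i in zip(preds, fold)) / len(fold))
    return sum(scores) / k, scores

class MajorityClass:
    """Toy baseline: always predicts the most frequent training label."""
    def fit(self, X, y):
        self.label = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, X):
        return [self.label] * len(X)
```

Every sample is used for testing exactly once, so the mean over folds is a less optimistic estimate than accuracy on the training data; a stratified split (preserving the benign/malignant ratio in each fold) would be a natural refinement.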

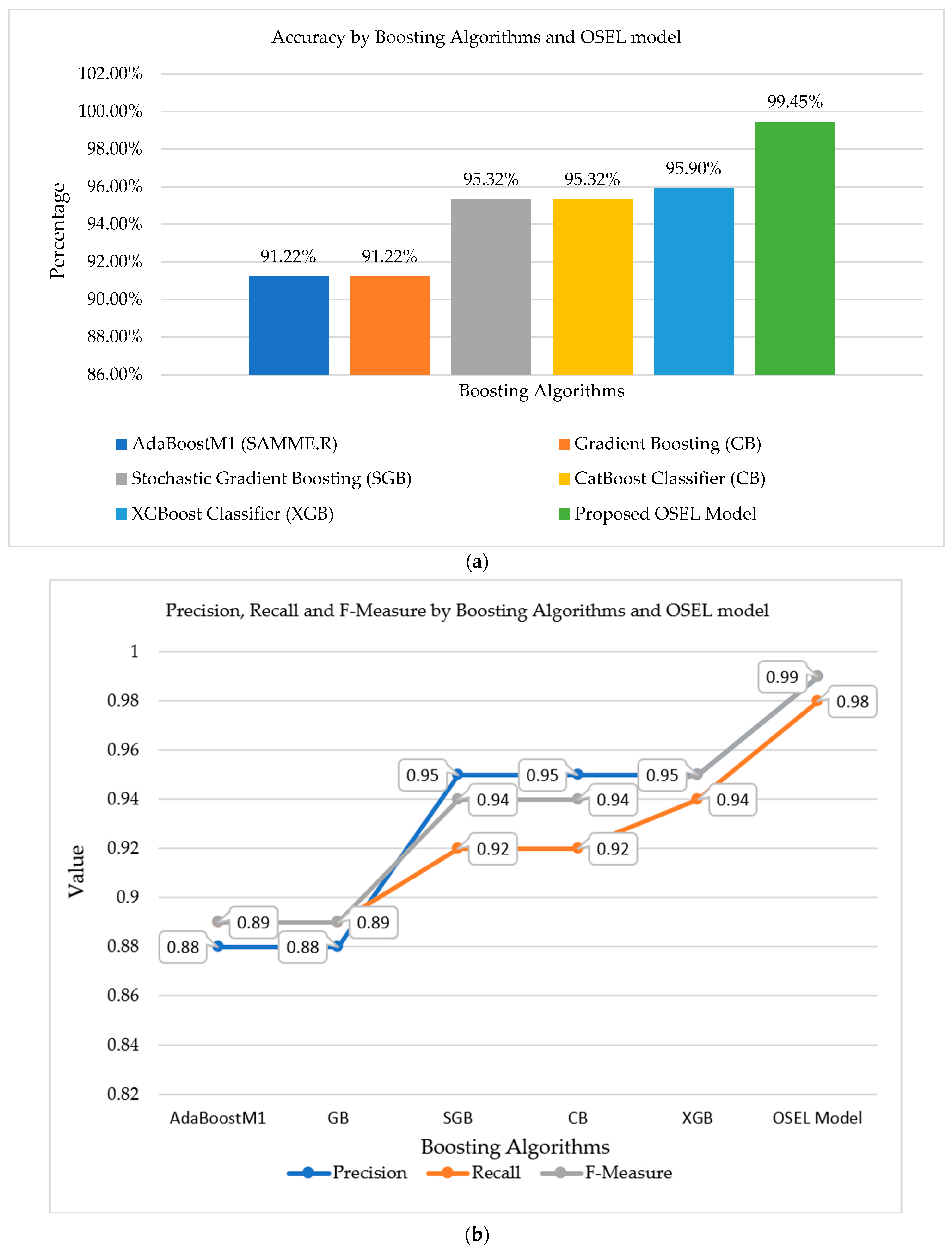

The results show that the proposed model outperforms the existing models on these performance metrics. Figure 9 gives a diagrammatic representation of all the classifiers applied to the dataset: the proposed OSEL model achieves the highest accuracy, 99.45%, and the decision tree algorithm the lowest, 91.22%. Table 3 presents the results of five boosting algorithms together with the proposed OSEL model. Among the boosting algorithms, the highest accuracies were achieved by CatBoost, XGBoost, and stochastic gradient boosting, at 95.32%, 95.90%, and 95.32%, respectively, whereas the proposed model achieved an accuracy of up to 99.45%.

Figure 10 gives a graphical representation of all the classifiers applied to the dataset. The proposed classification model (OSEL) had the highest accuracy, 99.45%, while AdaBoostM1 (SAMME.R) and gradient boosting (GB) had the lowest, 91.22%, and the other boosting algorithms reached accuracies of up to 95.9%. These results clearly show that the proposed model outperforms the existing models in terms of performance parameters.

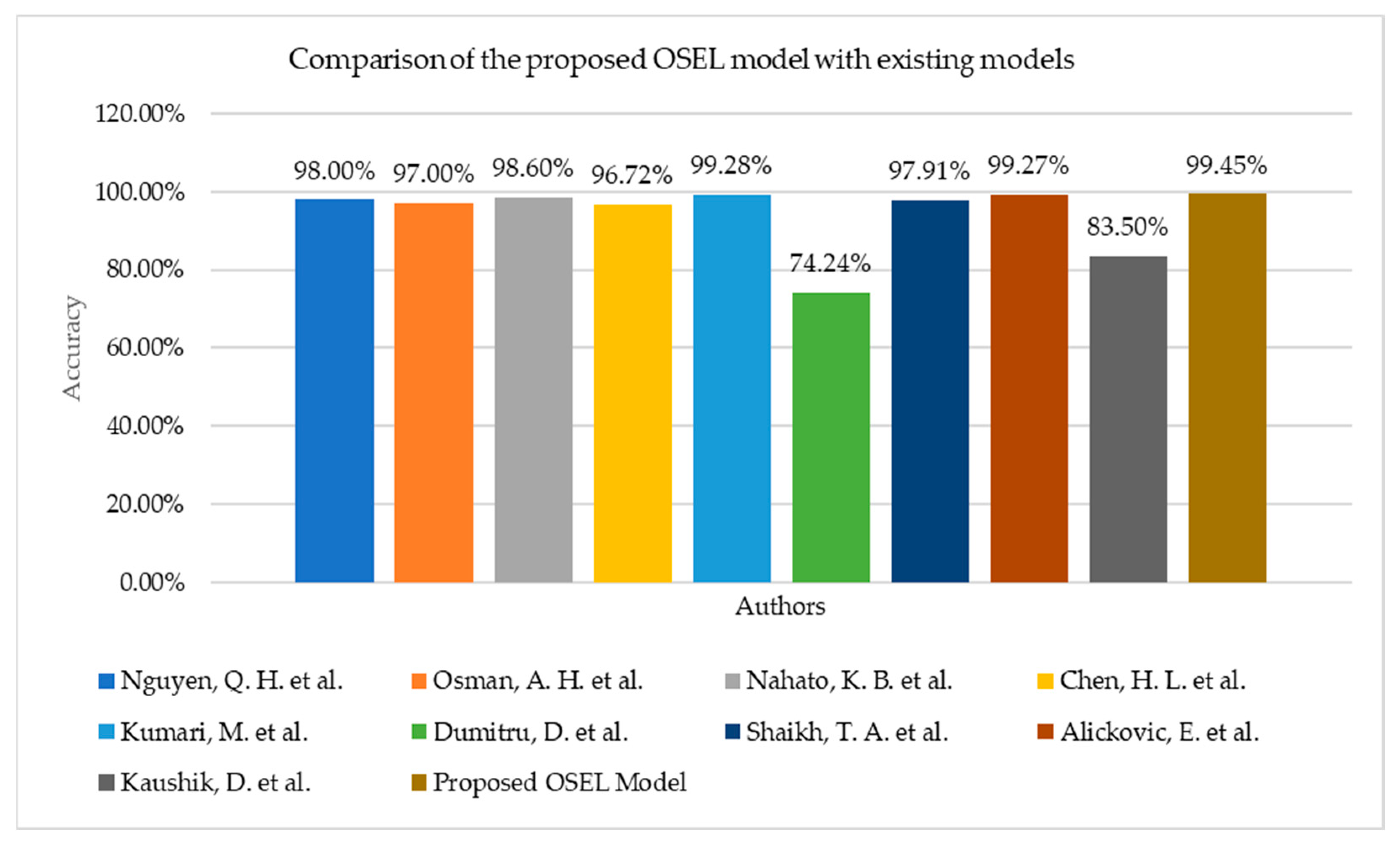

6. Comparison of OSEL Model with Existing Models

Table 4 displays the results of numerous models applied to the same Wisconsin breast cancer dataset by various authors and shows that the proposed OSEL model is more effective at predicting breast cancer in patients. Although a great deal of research has been conducted on the topic, only a small subset of recent contributions was selected for comparison and discussion in this article. The models differ in accuracy, and each has its own benefits and drawbacks. In this investigation, we therefore compare a wide range of classification models using accuracy as the metric. Table 4 summarizes each model’s classifier accuracy, model type, and construction technique.

Nguyen, Q. H. et al. [31] used feature scaling and principal component analysis (PCA) to show that an ensemble voting technique is effective as a breast cancer prediction model. A random forest was used to construct the reference model, and after feature scaling and PCA were applied to the data, several models were trained and evaluated. Cross-validation results showed that their model is reliable. The ensemble voting classifier, tuned SVM, logistic regression, and AdaBoost models were the only ones that reached at least 98% accuracy.
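Feature scaling and PCA, as applied by Nguyen, Q. H. et al. [31], can be illustrated in plain Python. This minimal sketch z-score standardizes each feature and then finds the first principal component by power iteration on the covariance matrix. It is an illustration of the two operations, not the authors' code; a real pipeline would use a linear-algebra library and usually keep more than one component.

```python
import math
import random

def standardize(X):
    """Z-score each column (subtract the mean, divide by the population std. dev.)."""
    cols = list(zip(*X))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0   # constant column -> 1.0
            for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]

def covariance(Z):
    """Sample covariance matrix of already-centred data Z (n rows, d columns)."""
    n, d = len(Z), len(Z[0])
    return [[sum(r[i] * r[j] for r in Z) / (n - 1) for j in range(d)] for i in range(d)]

def first_principal_component(Z, iters=200, seed=0):
    """Power iteration on the covariance matrix; returns a unit vector whose
    largest-magnitude entry is made positive (the sign of a component is arbitrary)."""
    C = covariance(Z)
    d = len(C)
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    for _ in range(iters):
        w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:               # all-zero covariance: nothing to extract
            break
        v = [x / norm for x in w]
    k = max(range(d), key=lambda i: abs(v[i]))
    return v if v[k] >= 0 else [-x for x in v]

def project(Z, v):
    """Score of each standardized sample on component v."""
    return [sum(a * b for a, b in zip(row, v)) for row in Z]
```

Scaling comes first because PCA is driven by variance: without it, a feature measured in large units (such as tumor area) would dominate the components regardless of its diagnostic value.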

Osman, A. H. et al. [32] developed a radial basis function neural network (RBFNN) that incorporates ensemble properties. The accuracy of the proposed method was compared with that of other breast cancer diagnosis methods, such as logistic regression, k-NN, SVM, decision tree, CNN, and naïve Bayes, before and after ensemble boosting, and the proposed method was found to be more accurate than the others. Based on a limited set of clinical parameters, they developed a classifier that can determine whether a disease is present. Nahato, K. B. et al. [33] proposed combining the rough set indiscernibility relation with a backpropagation neural network (RS-BPNN). The technique achieved an accuracy of 98.6% on the breast cancer dataset, and the system offers a powerful way to classify clinical datasets.

Chen, H. L. et al. [34] also employed the RS-BPNN approach, which relies on the indiscernibility relation of rough sets, and detected breast cancer with 98.6% accuracy; this strategy works well for categorizing breast cancer cases. In their proposed method (RS-SVM), rough set (RS) reduction is combined with an SVM to improve diagnostic accuracy further. RS-SVM was evaluated on the Wisconsin breast cancer dataset in terms of accuracy, sensitivity, specificity, confusion matrix, and receiver operating characteristic (ROC) curves, and the test results indicate a classification accuracy of up to 96.72%.

Kumari, M. et al. [35] developed a prediction algorithm for early breast cancer detection that analyzes a small subset of clinical dataset attributes. The approach was evaluated by comparing actual and predicted classifications, and it achieved the target classification accuracy of 99.28%. Dumitru, D. et al. [36] examined the naïve Bayesian classification approach as an accurate aid to the computer-aided diagnosis of breast cancer recurrence, using the widely used Wisconsin prognostic breast cancer dataset. The results showed that, in terms of computational effort and speed, the naïve Bayes classifier is the most effective machine learning method, with a best classification accuracy of 74.24%.

Figure 11 shows the accuracy achieved by the different models that various authors implemented on the same Wisconsin breast cancer dataset. Shaikh, T. A. et al. [37] used Weka’s WrapperSubsetEval dimensionality reduction algorithm to reduce the size of the Wisconsin breast cancer dataset and found that the naïve Bayes, J48, k-NN, and SVM models raised their accuracy on the dataset from 97.91% to 99.97% in the final tests.
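Wrapper feature selection scores a candidate feature subset by the performance of a learning algorithm trained on it. The sketch below is not Weka's WrapperSubsetEval implementation; it is a minimal greedy forward-selection wrapper, assuming a caller-supplied `score(subset)` function (in practice, for example, the cross-validated accuracy of a classifier restricted to those features). `USEFULNESS` and `toy_score` are hypothetical stand-ins for such a function.

```python
def forward_selection(n_features, score, max_features=None):
    """Greedy wrapper search: repeatedly add the feature that most improves
    score(subset); stop when no single addition helps or max_features is reached."""
    selected, best = [], float("-inf")
    limit = n_features if max_features is None else max_features
    while len(selected) < limit:
        candidates = [f for f in range(n_features) if f not in selected]
        gains = {f: score(sorted(selected + [f])) for f in candidates}
        f_best = max(gains, key=gains.get)
        if gains[f_best] <= best:          # no candidate improves the score
            break
        selected.append(f_best)
        best = gains[f_best]
    return sorted(selected), best

# Hypothetical score: per-feature usefulness minus a small cost per feature used.
USEFULNESS = [0.6, 0.0, 0.3, 0.02]

def toy_score(subset):
    return sum(USEFULNESS[f] for f in subset) - 0.05 * len(subset)
```

With this toy score the search keeps features 0 and 2 and stops, because adding either remaining feature lowers the score; this mirrors how a wrapper shrinks the dataset while preserving, or improving, accuracy.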

Alickovic, E. et al. [38] used a normalized multi-layer perceptron neural network to design a model for accurately classifying breast cancer. The model achieved an accuracy of up to 99.27%, which compares favorably with previous studies that used artificial neural networks. To predict the outcome of a biopsy from attributes extracted from the dataset, Kaushik, D. et al. [39] proposed a data mining technique based on an ensemble of classifiers, which achieved an accuracy of 83.5%. Saxena, A. et al. [40] compared AlexNet and MobileNet, which achieved F1-scores of 0.914 and 0.974, respectively, and concluded that MobileNet performs better at classifying ultrasound images of breast tissue as benign or malignant. According to Pathak, P. et al. [41], computer-aided diagnosis (CAD) systems use two types of approaches: the traditional technique and the artificial intelligence (AI) approach. The traditional method covers the fundamental image-processing phases, including preprocessing, segmentation, feature extraction, and classification. Under the AI approach, Mangal, A. et al. [42] used convolutional and other deep learning networks for diagnosis. Machine learning classifiers have also been used directly to predict breast cancer: Jain, V. et al. [43] applied three classifiers (logistic regression (LR), k-nearest neighbors (k-NN), and decision tree (DT)) to a common dataset and measured the prediction accuracy of each. According to the results obtained by Lahoura, V. et al. [44] on the Wisconsin diagnostic breast cancer (WBCD) dataset, the cloud-based extreme learning machine (ELM) methodology outperforms competing methods, and ELM performed well in both standalone and cloud settings. Two machine learning models, SVM and random forest (RF), have also been used to evaluate the efficacy of different feature sets; histogram of oriented gradients (HOG) features performed significantly better on both models, and the SVM trained on the HOG feature set outperformed the random forest model with an F1 score of 0.92.
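Several of the studies above report accuracy, sensitivity, specificity, and F1 score, all of which derive from the four cells of a binary confusion matrix. The helper below is a minimal sketch of those standard definitions, assuming string labels with `"malignant"` as the positive class; the function name and the toy predictions are illustrative.

```python
from typing import Dict, Hashable, Sequence


def binary_metrics(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
                   positive: Hashable = "malignant") -> Dict[str, float]:
    """Accuracy, sensitivity, specificity, precision, and F1 from a binary confusion matrix."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0  # report 0.0 instead of dividing by zero

    precision = ratio(tp, tp + fp)
    sensitivity = ratio(tp, tp + fn)  # also called recall
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": sensitivity,
        "specificity": ratio(tn, tn + fp),
        "precision": precision,
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }


# Hypothetical labels: 2 TP, 1 FN, 1 TN, 1 FP.
truth = ["malignant", "malignant", "malignant", "benign", "benign"]
predicted = ["malignant", "malignant", "benign", "benign", "malignant"]
print(binary_metrics(truth, predicted))
```

For this toy case, accuracy is 3/5, sensitivity and precision are both 2/3 (so F1 is also 2/3), and specificity is 1/2.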

The proposed optimized model predicts breast cancer in patients with an accuracy of 99.45%.

As Figure 11 shows, the model proposed in this study achieves the highest accuracy among the compared models. Models that relied on a single classification method performed worse than those that combined several classifiers. Kumari, M. et al. [35] and Alickovic, E. et al. [38], who used hybrid classification algorithms to maximize accuracy, obtained 99.28% and 99.27% accuracy, respectively.
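One simple way to combine several classifiers is hard majority voting, in which each case receives the label predicted by most base classifiers. The sketch below illustrates that scheme only, assuming precomputed predictions; it is not necessarily the combination used by the cited hybrid models, and the classifier outputs are hypothetical. In the toy example, each of three classifiers errs on a different case, so the vote corrects every individual error.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def majority_vote(predictions: Sequence[Sequence[Hashable]]) -> List[Hashable]:
    """Hard voting: `predictions[k][i]` is classifier k's label for case i.
    Ties go to the label seen first, i.e. the one from the earliest classifier
    (Counter.most_common keeps first-encountered order for equal counts)."""
    n_cases = len(predictions[0])
    return [
        Counter(clf[i] for clf in predictions).most_common(1)[0][0]
        for i in range(n_cases)
    ]


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical predictions from three base classifiers, each wrong on a different case.
truth = ["malignant", "benign", "malignant", "benign"]
clf_a = ["malignant", "benign", "benign", "benign"]
clf_b = ["benign", "benign", "malignant", "benign"]
clf_c = ["malignant", "malignant", "malignant", "benign"]
for name, preds in [("a", clf_a), ("b", clf_b), ("c", clf_c)]:
    print(name, accuracy(truth, preds))  # 0.75 each
print("vote", accuracy(truth, majority_vote([clf_a, clf_b, clf_c])))  # 1.0
```

Voting only helps when the base classifiers make different mistakes; three classifiers that err on the same cases gain nothing from being combined.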

7. Conclusions

Early detection is crucial because breast cancer is one of the leading causes of mortality in women, and advanced machine learning classifiers can improve the early detection of breast cancer tumors. A model’s predictive performance depends on many model variables. An ensemble learning approach integrates numerous independent learning algorithms, and its performance is often superior or comparable to that of a single base classifier. As a result, ensemble learning has grown in popularity and has proven to be an efficient strategy in machine learning. A key open problem in ensemble learning is how to combine base classifiers that are both “excellent” (accurate) and “different” (diverse). To address this problem, we propose a novel model called the OSEL model, which incorporates optimization models to determine the most effective combination of base classifiers. OSEL uses a genetic algorithm for optimization, with classifier accuracy as the fitness function. Stacking creates a “white box” that allows many different combinations, and the genetic algorithm iteratively makes small adjustments to the meta-classifier combination stored in this “white box” to achieve the best possible performance. Compared with 10 state-of-the-art classification methods proposed by other authors, OSEL achieves the best or equivalent results in terms of accuracy, recall, and F1 score, which suggests that OSEL may be a good way to solve classification problems. Although OSEL performs well on the classification task in this work, there is still room for improvement.

So far, OSEL does not optimize the hyper-parameters of each base classifier used in the ensemble. In addition, the model was designed specifically to determine whether a patient’s tumors are benign or malignant; the microscopic classification of abnormalities is a promising area for further study. In future work, more complex characteristics could be handled using a multilayered neural network design.
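The search described for OSEL can be sketched as a genetic algorithm over bitmasks, where bit i indicates whether base classifier i joins the ensemble and fitness is validation accuracy. To keep the example self-contained, this sketch swaps the trained stacking meta-classifier for a simple majority vote over precomputed validation predictions; the operators (tournament selection, one-point crossover, bit-flip mutation, elitism), the parameter values, and all names are assumptions, not the OSEL implementation.

```python
import random
from collections import Counter
from typing import Hashable, List, Sequence, Tuple

Mask = Tuple[bool, ...]


def vote_accuracy(mask: Mask, preds: Sequence[Sequence[Hashable]],
                  y_true: Sequence[Hashable]) -> float:
    """Fitness: validation accuracy of a majority vote over the selected classifiers."""
    chosen = [p for p, keep in zip(preds, mask) if keep]
    if not chosen:
        return 0.0
    correct = 0
    for i, label in enumerate(y_true):
        winner = Counter(p[i] for p in chosen).most_common(1)[0][0]
        correct += winner == label
    return correct / len(y_true)


def ga_select(preds: Sequence[Sequence[Hashable]], y_true: Sequence[Hashable],
              pop_size: int = 12, generations: int = 25,
              mutation_rate: float = 0.1, seed: int = 0) -> Tuple[Mask, float]:
    """Search for the subset of base classifiers (bitmask) with the highest fitness."""
    rng = random.Random(seed)
    n = len(preds)

    def fitness(mask: Mask) -> float:
        return vote_accuracy(mask, preds, y_true)

    # Seed with every single-classifier mask, then fill up with random masks.
    population: List[Mask] = [tuple(j == i for j in range(n)) for i in range(n)]
    while len(population) < pop_size:
        population.append(tuple(rng.random() < 0.5 for _ in range(n)))

    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        next_pop = ranked[:2]  # elitism: the two fittest masks survive unchanged
        while len(next_pop) < len(population):
            # Tournament selection: the fitter of three random masks becomes a parent.
            p1 = max(rng.sample(ranked, 3), key=fitness)
            p2 = max(rng.sample(ranked, 3), key=fitness)
            cut = rng.randrange(1, n) if n > 1 else 0  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation: XOR each bit with a low-probability coin toss.
            child = tuple(bit != (rng.random() < mutation_rate) for bit in child)
            next_pop.append(child)
        population = next_pop

    best = max(population, key=fitness)
    return best, fitness(best)


# Hypothetical validation labels and predictions from four base classifiers.
truth = ["malignant", "benign", "malignant", "benign"]
pool = [
    ["malignant", "benign", "benign", "benign"],
    ["benign", "benign", "malignant", "benign"],
    ["malignant", "malignant", "malignant", "benign"],
    ["benign", "benign", "benign", "benign"],
]
mask, score = ga_select(pool, truth)
print(mask, score)
```

Seeding the initial population with every single-classifier mask, combined with elitism, guarantees that the returned combination is never less accurate on the validation data than the best individual base classifier.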

Further research can be carried out to determine their proportionality, and appropriate optimization strategies can be designed to improve future performance. Recent studies in related engineering fields, such as automatic detection of Alzheimer’s disease progression [45], a centralized convolutional neural network (CNN)-based dual deep Q-learning (DDQN) approach [46], a two-tier framework based on GoogLeNet and YOLOv3 [47], feature extraction-based machine learning models [48], fuzzy convolutional neural networks [49], hybrid SFNet models [50], and intuitionistic-based segmentation models [51], could provide insight for related research on ensemble learning approaches. Other recent methods [52,53] also leave room for further exploration.