Abstract

In this study, we classified land cover using SegNet, a deep-learning model, and assessed its classification accuracy in comparison with two machine-learning models: the support vector machine (SVM) and random forest (RF). The input dataset was aerial orthoimagery with a spatial resolution of 1 m, and the reference dataset was the Level-3 land-use and land-cover (LULC) map, also with a spatial resolution of 1 m. The study areas were the Namhan and Bukhan River Basins, where significant urbanization occurred between 2010 and 2012. The hyperparameters of each model were selected by comparing validation accuracy across parameter settings, and the tuned models were then used to classify four land-cover types (urban, crops, forests, and water). SegNet had the highest overall accuracy (OA; 91.54%), followed by the RF (52.96%) and SVM (50.27%). Both machine-learning models were less accurate than SegNet for all land-cover types except forests, and SegNet improved the OA by approximately 40 percentage points. Next, we applied SegNet to aerial orthoimagery of Namyangju city obtained in 2010 and 2012 to detect land-cover changes; the resulting OA values were 86.42% and 78.09%, respectively. The reference dataset showed that the urban area increased significantly between 2010 and 2012, whereas the areas of forests and cropland decreased. The SegNet-derived changes were similar to those in the reference dataset, suggesting that urbanization is in progress. Together, these results indicate that aerial orthoimagery and the SegNet model can be used to efficiently detect land-cover changes, such as urbanization, and can be applied to LULC monitoring to promote sustainable land management.

1. Introduction

Land use and land cover (LULC) is categorized according to biophysical characteristics, such as vegetation, water, and artificial structures; these characteristics are critical to the energy balance and hydrological cycle because they affect albedo and evapotranspiration [1,2]. LULC varies with natural and anthropogenic factors, and it has changed rapidly in the postindustrial era as human activities have intensified [2]. Changes in land cover affect environmental systems at local, regional, and global scales [3], and monitoring LULC change is essential for sustainable development [4]. Thus, precise land-cover maps are needed to monitor these changes; such maps improve the understanding of biospheric, atmospheric, and hydrological systems and support their sustainable management [5,6]. LULC research plays an important role in meteorology and in the sustainable management and exploitation of natural resources. LULC changes must be analyzed to manage and develop sustainable ecosystems and to understand the social, economic, and environmental consequences of those changes [7,8].

Earth-observation satellites that provide repeated coverage have been used to increase the precision of land-cover maps [9,10,11]. The first satellite-based land-cover map was created in 1972 using Landsat-1; subsequent land-cover maps were based on observations from the Advanced Very High Resolution Radiometer (AVHRR), Satellite Pour l’Observation de la Terre (SPOT), the Moderate Resolution Imaging Spectroradiometer (MODIS), and other sensors [12]. During the 1990s and 2000s, many countries created and updated land-cover maps using satellite images and high-resolution aerial orthoimagery. Various remote-sensing and digital-photogrammetry techniques have been used to increase the precision of these maps [13,14,15]. Before 2000, unsupervised classification techniques, such as K-means and iterative self-organizing data analysis technique (ISODATA) clustering, were used for land-cover classification [16]. Following advances in computer science, supervised classification algorithms based on machine learning, such as artificial neural networks, support vector machines (SVMs), random forests (RFs), and decision trees, have been widely adopted because they generally outperform unsupervised techniques. SVMs and RFs in particular are widely used for land-cover classification [16,17,18,19,20], and deep-learning-based image detection and classification methods have been investigated since the introduction of convolutional neural networks. Semantic segmentation, which has recently received increased attention, outperforms machine-learning methods, although it is relatively slow and memory intensive [21]. The fully convolutional network is a typical semantic-segmentation model; it includes an upsampling path that restores the feature maps to the input resolution [22]. However, because a fully convolutional network cannot avoid losing spatial information during this restoration, various methods have been developed to address this limitation [23]. Badrinarayanan et al. developed SegNet in 2017, which outperformed previous models in terms of precision, learning speed, and learning efficiency [24]. According to Garcia-Garcia et al., SegNet is widely used for image recognition and classification because it is more accurate and efficient than most semantic-segmentation models [25].

This paper is organized as follows: Section 2 outlines the models, study areas, and data used for land-cover classification and change detection; Section 3 presents the hyperparameter selection used to improve the classification precision of each model, together with the resulting land-cover classifications and detected changes; and Section 4 and Section 5 contain the discussion and conclusions, respectively.

2. Methodology

SegNet, RF, and SVM were trained using aerial orthoimagery and a Level-3 LULC map of the Namhan and Bukhan River Basins. Aerial orthoimagery obtained in 2010 and 2012 was used for the land-cover classification and change detection. The training and validation datasets for each model included the river basins, but excluded the area around Namyangju city, which was used as a test dataset. Parameter optimization was used to select the hyperparameters for each model, and the land cover was classified as urban, crops, forests, or water. The best-performing of the three models was used to classify the land cover and detect changes around Namyangju city.

2.1. Land-Cover Classification

Deep-learning-based semantic-segmentation methods have developed substantially since the introduction of the fully convolutional network [22]; however, such networks lose spatial information during upsampling. Subsequent semantic-segmentation models were developed to overcome this limitation [23]. SegNet addresses it with an encoder-decoder architecture. SegNet learns more efficiently than other models because its memory use and computation are economical (the decoder reuses the max-pooling indices stored by the encoder rather than full encoder feature maps) and because it requires relatively few trainable parameters [25,26].

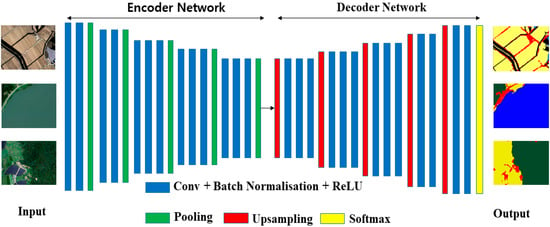

SegNet comprises an encoder that compresses images and a decoder that restores them, as shown in Figure 1. The encoder is based on the VGG16 architecture and consists of convolutional and pooling layers: the convolutional layers, each followed by a rectified-linear-unit (ReLU) activation, extract features, and the max-pooling layers compress (downsample) the feature maps. The compressed representation is then decoded and restored to the original image size. Unlike other semantic-segmentation models, the SegNet decoder upsamples its feature maps using the max-pooling indices recorded by the corresponding encoder pooling layers. Therefore, images can be restored with little loss of spatial detail. After restoration, a softmax classifier assigns each pixel to one of multiple classes [24,27].

Figure 1.

Schematic architecture of SegNet for land-cover classification and change detection. The model comprises two steps: an encoding process that compresses the images, followed by a decoding process that restores the images.
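The role of the pooling indices can be illustrated with a small NumPy sketch. It records, for each 2 × 2 max-pooling window, where the maximum came from, and then "unpools" by writing each value back to that position, which is the mechanism the SegNet decoder uses before its convolutions densify the sparse map; a pixel-wise softmax then turns class scores into per-pixel class probabilities. This is a toy illustration on a single-channel map, not the study's implementation, and the function names are our own.

```python
import numpy as np


def max_pool_with_indices(x, k=2):
    """Non-overlapping k x k max pooling of a 2-D feature map.

    Returns the pooled map and, for every pooled cell, the flat index (into x)
    of the element that held the maximum; these are the pooling indices.
    """
    h, w = x.shape
    if h % k or w % k:
        raise ValueError("sketch assumes the map size is divisible by k")
    pooled = np.empty((h // k, w // k), dtype=x.dtype)
    indices = np.empty((h // k, w // k), dtype=np.int64)
    for i in range(h // k):
        for j in range(w // k):
            window = x[i * k:(i + 1) * k, j * k:(j + 1) * k]
            r, c = np.unravel_index(np.argmax(window), window.shape)
            pooled[i, j] = window[r, c]
            indices[i, j] = (i * k + r) * w + (j * k + c)  # flat index in x
    return pooled, indices


def max_unpool(pooled, indices, out_shape):
    """Write each pooled value back to its recorded position; all other
    positions stay zero, giving the sparse map the decoder then convolves."""
    out = np.zeros(out_shape, dtype=pooled.dtype)
    np.put(out, indices.ravel(), pooled.ravel())
    return out


def pixelwise_softmax(logits):
    """Softmax over the class axis of a (n_classes, H, W) score array."""
    z = logits - logits.max(axis=0, keepdims=True)  # for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=0, keepdims=True)


# Tiny 4 x 4 feature map
x = np.array([[1, 5, 2, 0],
              [3, 4, 8, 1],
              [0, 2, 6, 7],
              [9, 1, 3, 2]])
pooled, idx = max_pool_with_indices(x)
restored = max_unpool(pooled, idx, x.shape)

scores = np.random.default_rng(0).normal(size=(4, 2, 2))  # 4 classes, 2 x 2 pixels
label_map = pixelwise_softmax(scores).argmax(axis=0)      # one class per pixel
```

Here `pooled` is `[[5, 8], [9, 7]]`, and `restored` holds those four values at exactly the positions they occupied in `x`, with zeros elsewhere; boundary positions are therefore recovered rather than interpolated.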

Breiman proposed the RF, an ensemble model composed of decision-tree classifiers [28]. It performs classification or regression via bagging, that is, bootstrap aggregation [18]. In bootstrapping, samples are drawn with replacement from the training dataset to create a new training dataset for each tree, and each tree is trained on its own bootstrap sample. The outputs of all trees are weighted equally and aggregated (by majority vote for classification) to generate the final result [29]. Bagging increases the classification accuracy of an RF by reducing the variance of the error [28]. In addition, because each split considers only a random subset of the input features, unlike other decision-forest methods, the trees can be grown fully without pruning. Finally, the out-of-bag (OOB) error, computed on the training samples left out of each bootstrap sample, can be used to estimate accuracy without a separate test dataset; a lower OOB error indicates better performance [30].
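The two RF ingredients described above, bootstrap aggregation over trees that each see a random feature subset and the OOB error estimate, can be sketched from scratch in NumPy. To stay short, the sketch substitutes one-split decision stumps for fully grown trees and handles only two classes; the function names and the synthetic data are illustrative assumptions, not the implementation used in this study. `n_trees` and `mtry` play the roles of the ntree and mtry hyperparameters tuned in Section 3.1.

```python
import numpy as np


def fit_stump(X, y):
    """Find the (feature, threshold, polarity) split with the lowest training
    error for binary labels y in {0, 1}; a one-split stand-in for a full tree."""
    best_err, best = np.inf, None
    for f in range(X.shape[1]):
        for t in np.unique(X[:, f]):
            for polarity in (1, -1):
                pred = (polarity * (X[:, f] - t) > 0).astype(int)
                err = np.mean(pred != y)
                if err < best_err:
                    best_err, best = err, (f, t, polarity)
    return best


def predict_stump(stump, X):
    f, t, polarity = stump
    return (polarity * (X[:, f] - t) > 0).astype(int)


def random_forest_oob(X, y, n_trees=50, mtry=1, seed=0):
    """Bagged stumps on random feature subsets, with an out-of-bag error estimate."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    oob_votes = np.zeros((n, 2))  # per-sample OOB vote counts for classes 0 and 1
    forest = []
    for _ in range(n_trees):
        boot = rng.integers(0, n, size=n)                 # bootstrap: n draws with replacement
        oob = np.setdiff1d(np.arange(n), boot)            # samples this tree never saw
        feats = rng.choice(p, size=mtry, replace=False)   # mtry candidate features
        f_local, t, polarity = fit_stump(X[boot][:, feats], y[boot])
        stump = (feats[f_local], t, polarity)             # map back to the global feature index
        forest.append(stump)
        if oob.size:
            oob_votes[oob, predict_stump(stump, X[oob])] += 1
    voted = oob_votes.sum(axis=1) > 0                     # samples that were OOB at least once
    oob_error = np.mean(oob_votes[voted].argmax(axis=1) != y[voted])
    return forest, oob_error


def predict_forest(forest, X):
    """Equal-weight majority vote over all stumps (ties go to class 0)."""
    votes = np.stack([predict_stump(s, X) for s in forest])
    return (votes.mean(axis=0) > 0.5).astype(int)


rng = np.random.default_rng(1)
x0 = rng.normal(size=200)
X = np.column_stack([x0, x0 + 0.1 * rng.normal(size=200)])  # two informative features
y = (x0 > 0).astype(int)
forest, oob_error = random_forest_oob(X, y, n_trees=50, mtry=1)
```

Each sample is left out of roughly a third of the bootstrap samples, so the OOB votes act as a built-in validation set; this is why the OOB error can guide the choice of ntree without a separate test dataset.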

SVMs were introduced by Vapnik et al. [31] and perform well in both classification and regression [19]. An SVM finds the hyperplane (a multidimensional plane that separates the data of two classes) that maximizes the margin, that is, the distance between the hyperplane and the nearest samples of each class. SVMs are geometrically interpretable and perform well with high-dimensional data [32]. However, the SVM calculation time increases with the data volume. SVMs perform well on linearly separable datasets; for nonlinear datasets, they can employ the kernel trick, which implicitly maps the data into a higher-dimensional space in which a linear separation can be found.
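The kernel trick can be checked numerically. The sketch below uses the degree-2 polynomial kernel because its feature map `phi` can be written out explicitly, so the kernel value can be compared with an ordinary dot product in the mapped space; it also shows two circular classes that no straight line separates in 2-D becoming linearly separable after the mapping. The RBF kernel used in Section 3.1 corresponds to an infinite-dimensional feature map, so only its kernel matrix is computed here, in the common exp(-gamma * ||x - z||^2) parameterization (an assumption about how the study's gamma is defined).

```python
import numpy as np


def phi(x):
    """Explicit degree-2 feature map for 2-D input: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])


def poly2_kernel(x, z):
    """Degree-2 polynomial kernel k(x, z) = (x . z)^2, computed without phi."""
    return float(np.dot(x, z)) ** 2


def rbf_kernel(X, Z, gamma):
    """RBF kernel matrix K[i, j] = exp(-gamma * ||X[i] - Z[j]||^2)."""
    sq_dist = (X ** 2).sum(axis=1)[:, None] + (Z ** 2).sum(axis=1)[None, :] - 2 * X @ Z.T
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))  # clip tiny negative round-off


x, z = np.array([1.0, 2.0]), np.array([3.0, -1.0])
k_implicit = poly2_kernel(x, z)   # (1*3 + 2*(-1))^2 = 1
k_explicit = phi(x) @ phi(z)      # 9 - 12 + 4 = 1, up to rounding

# Inside vs. outside a circle is not linearly separable in 2-D, but in
# phi-space phi[0] + phi[2] = x1^2 + x2^2, so a linear threshold of 1 separates them.
inner = np.array([[0.5, 0.0], [0.0, -0.6], [0.3, 0.3]])
outer = np.array([[2.0, 0.0], [0.0, -2.5], [1.8, 1.8]])
w = np.array([1.0, 0.0, 1.0])
inner_scores = [w @ phi(p) for p in inner]
outer_scores = [w @ phi(p) for p in outer]
```

An SVM never needs `phi` itself: it only evaluates kernel values such as `rbf_kernel(X, X, gamma)`, which is what keeps the implicit high-dimensional mapping affordable.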

2.2. Study Area and Datasets

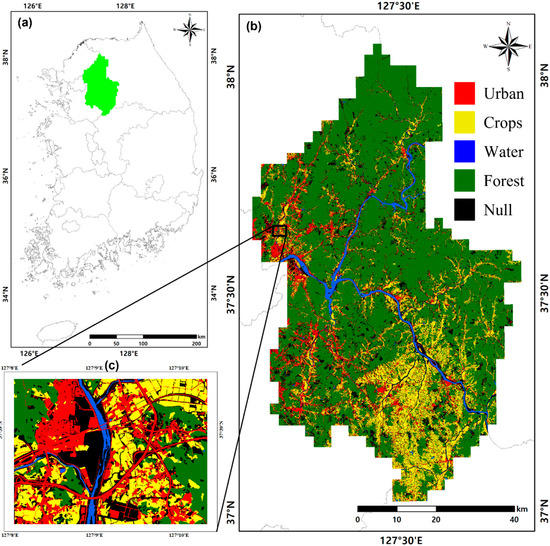

The Namhan and Bukhan River Basins cover an area of 4657 km² and are predominantly forested. Crops and forests coexist near the Namhan River, and urban areas and forests are present near its middle and lower reaches (127°4′30″–127°51′0″ E, 37°1′30″–38°4′30″ N; Figure 2b). Namyangju city lies in the west of the study region, where many crop and forest areas were transformed by the rapid urbanization and deforestation that occurred between 2010 and 2012. The land-cover-change detection techniques were tested around Namyangju city (Figure 2c).

Figure 2.

(a) Location of the study area (green shading) in the Republic of Korea. (b) Four land-cover types and a barren land-cover type, derived from Level-3 LULC data for the Namhan and Bukhan River Basins in 2012. (c) Test-dataset area for land-cover-change detection analysis in Namyangju city, Republic of Korea, in 2012.

Red, green, and blue (RGB) aerial orthoimagery provided by the Ministry of Land, Infrastructure, and Transport and a Level-3 LULC map provided by the Ministry of Environment were used as the input and reference datasets, respectively. Since 2010, the Ministry of Land, Infrastructure, and Transport has provided annual nationwide aerial orthoimagery (in two divisions) with a 1 m spatial resolution. The Level-3 LULC map, which comprises 41 land-cover types at a 1 m spatial resolution (1:5000 scale), was introduced in 2010 and is constructed or updated annually.

Here, Level-3 LULC maps created in 2010 and 2012 and aerial orthoimagery from 2010 and 2012 were used as the reference and input data, respectively. SegNet and the RF and SVM machine-learning algorithms were selected for the land-cover classification and change-detection analysis. The input and reference datasets were separated into training, validation, and test datasets. In total, 6000 images (each 480 × 360 pixels) of the Namhan and Bukhan River Basins, excluding the area around Namyangju city, were selected as the training and validation datasets and randomly split at an 8:2 ratio. The test dataset comprised 2450 images (each 480 × 360 pixels) created from the aerial orthoimagery and Level-3 LULC map of Namyangju city.
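The tiling and 8:2 split described above might look like the following NumPy sketch. It assumes that "480 × 360" means 480 columns by 360 rows, drops partial tiles at scene edges, and uses a seeded random permutation for the split; these choices and the helper names are illustrative, not taken from the study.

```python
import numpy as np

TILE_H, TILE_W = 360, 480  # assumption: "480 x 360" means 480 columns by 360 rows


def tile_image(img, tile_h=TILE_H, tile_w=TILE_W):
    """Cut an (H, W[, bands]) array into non-overlapping tiles; partial edge tiles are dropped."""
    h, w = img.shape[:2]
    return [img[r:r + tile_h, c:c + tile_w]
            for r in range(0, h - tile_h + 1, tile_h)
            for c in range(0, w - tile_w + 1, tile_w)]


def split_train_val(n_items, train_frac=0.8, seed=0):
    """Randomly partition item indices into training and validation sets (8:2 by default)."""
    order = np.random.default_rng(seed).permutation(n_items)
    n_train = int(round(train_frac * n_items))
    return order[:n_train], order[n_train:]


tiles = tile_image(np.zeros((1000, 1000, 3), dtype=np.uint8))  # 2 x 2 full tiles
train_idx, val_idx = split_train_val(6000)                       # 4800 / 1200
```

In practice, the same indices would be applied to the orthoimage tiles and the corresponding label tiles so that each image stays paired with its reference map.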

The 41 classes of the Level-3 LULC map were reclassified into the seven land-cover types of the Level-1 land-cover map. Three of these seven types were labeled as null and excluded from the classification because of their small areas and small land-cover changes; hence, land cover was classified as urban, crops, forests, or water. The accuracy obtained with each hyperparameter setting was assessed using the training and validation datasets. The most accurate model was used to create the land-cover-classification map and to analyze the land-cover changes detected in the Namyangju city test dataset. Aerial orthoimagery acquired in two different years was used to create land-cover maps, detect changes, and evaluate type-specific land-cover changes by comparison with the Level-3 LULC map.
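A lookup-table reclassification is one simple way to implement the 41-to-7-to-4 mapping. The sketch assumes, hypothetically, that each Level-3 code is a three-digit integer whose hundreds digit gives the Level-1 class, and that grassland, wetland, and barren land are the three classes set to null; both assumptions should be checked against the Ministry of Environment code table before use. The `NULL` value of 255 is likewise an illustrative choice.

```python
import numpy as np

NULL = 255  # hypothetical "ignore" label, excluded from training and scoring

# Hypothetical scheme: the hundreds digit of a Level-3 code is its Level-1 class
# (1 urban, 2 crops, 3 forest, 4 grassland, 5 wetland, 6 barren, 7 water).
# Assumed choice of the three null classes: grassland, wetland, barren.
LEVEL1_TO_TRAIN = {1: 0, 2: 1, 3: 2, 4: NULL, 5: NULL, 6: NULL, 7: 3}
CLASS_NAMES = ["urban", "crops", "forest", "water"]


def reclassify(level3_codes):
    """Map Level-3 codes (0-999) to the four training classes or NULL."""
    level1 = np.asarray(level3_codes) // 100
    lut = np.full(10, NULL, dtype=np.uint8)   # digits not listed above also become NULL
    for digit, cls in LEVEL1_TO_TRAIN.items():
        lut[digit] = cls
    return lut[level1]


labels = reclassify([[111, 210], [330, 620], [710, 420]])
```

Indexing a small lookup table with the whole label array reclassifies every pixel in one vectorized step, which matters for 1 m maps covering thousands of square kilometers.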

The RF and SVM datasets were constructed using stratified random sampling, as described by Zhang et al. [33]. For large machine-learning datasets, stratified random sampling reduces the amount of data while keeping the class proportions similar to those of the original dataset; the smaller dataset shortens the training time while achieving similar accuracy. Stratified random sampling was applied to 2500 images (data points) from the SegNet training and validation datasets: 250,000 pixels were randomly extracted while maintaining the areal ratio of each land-cover type, and these pixels were split at an 8:2 ratio into training and validation datasets.
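Stratified random sampling with proportional allocation can be sketched as follows: the number of pixels drawn from each land-cover type is proportional to that type's share of the labeled area, and the sample is then shuffled and split 8:2. The toy label map, the rounding rule that gives any remainder to the largest class, and the helper names are assumptions for illustration; in the study, the target was 250,000 pixels drawn from 2500 images.

```python
import numpy as np


def stratified_pixel_sample(labels, n_total, seed=0, ignore=255):
    """Draw n_total pixel indices so that each class keeps its areal share."""
    rng = np.random.default_rng(seed)
    flat = np.asarray(labels).ravel()
    valid = np.flatnonzero(flat != ignore)
    classes, counts = np.unique(flat[valid], return_counts=True)
    alloc = np.floor(n_total * counts / counts.sum()).astype(int)  # proportional allocation
    alloc[np.argmax(counts)] += n_total - alloc.sum()             # rounding remainder to largest class
    picked = [rng.choice(valid[flat[valid] == cls], size=k, replace=False)
              for cls, k in zip(classes, alloc)]
    return np.concatenate(picked)


def split_80_20(indices, seed=0):
    """Shuffle the sampled pixels and split them 8:2 into training and validation."""
    shuffled = np.random.default_rng(seed).permutation(indices)
    cut = int(round(0.8 * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


# Toy map: 60% class 0, 30% class 1, 10% class 2
labels = np.repeat(np.array([0, 1, 2], dtype=np.uint8), [6000, 3000, 1000]).reshape(100, 100)
sample = stratified_pixel_sample(labels, n_total=1000)
train_px, val_px = split_80_20(sample)
```

Sampling without replacement within each class keeps the class ratios of the sample equal to those of the map (600, 300, and 100 pixels here), which is the property that lets the reduced dataset reach an accuracy similar to that of the full one.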

3. Results

3.1. Hyperparameters

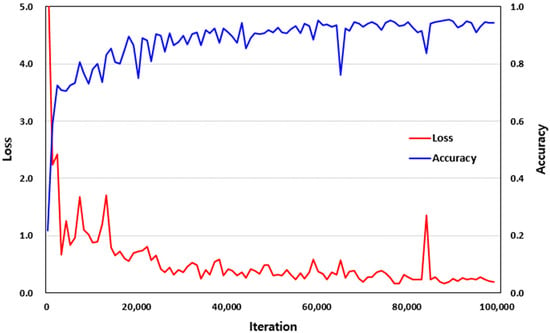

Hyperparameter selection is critical for optimizing machine-learning and deep-learning models [34]. The amount of training data, number of iterations, and batch size were selected as the hyperparameters for SegNet optimization. Training-set sizes from 500 to 4000 data points were examined in increments of 500, followed by 6000 data points. The accuracy increased as the amount of training data increased from 500 to 2500 data points, decreased between 3000 and 3500 data points, and increased again above 4000 data points. The accuracy was lowest with the largest training set (6000 data points). The maximum accuracy of 91.54% was obtained with 2500 data points; thus, the amount of training data did not affect the accuracy monotonically, and the optimal SegNet training dataset comprised 2500 data points. The model accuracy and loss were calculated for different numbers of iterations, and both converged once the number of iterations reached a threshold. As the number of iterations increased, the loss decreased and the accuracy approached 100%. The accuracy dropped sharply at 8000 iterations, then increased steadily with further iterations and converged at approximately 80,000 iterations. The loss also fluctuated with the iteration number and converged at approximately 95,000 iterations. Therefore, 100,000 iterations were considered optimal (Figure 3).

Figure 3.

SegNet training curve according to land-cover-classification iterations. The red line indicates loss; the blue line indicates accuracy.

The SegNet batch size is the number of images input per training step. Batch sizes from one to five were tested, the upper limit being set by the computer specifications. The accuracy increased with the batch size and was highest with a batch size of five, which we therefore used to train SegNet. The areal ratio of each land-cover type in the training dataset also affected the SegNet learning accuracy: model performance was degraded because the model overfit land-cover types that covered large areas of the training data and underfit those that covered small areas. Various class-balancing techniques that weight the loss function have been developed to resolve such imbalances; we employed the median-frequency-balancing technique proposed by Eigen and Fergus [35]. Low weights (≥2) were assigned to the crop and forest land-cover types, which comprised the majority of the study area. The area occupied by water was minimal.
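Median frequency balancing, as defined by Eigen and Fergus, weights each class c by median(freq) / freq(c), where freq(c) is the number of pixels of class c divided by the total number of pixels in the images that contain c. A minimal NumPy sketch follows; excluding null-labeled pixels from the totals is our assumption, and the resulting weights would multiply the per-pixel cross-entropy loss during training.

```python
import numpy as np


def median_frequency_weights(label_maps, n_classes, ignore=255):
    """Median frequency balancing (Eigen and Fergus):
    weight_c = median(freq) / freq_c, where freq_c is the number of pixels of
    class c divided by the total pixels of the images in which c appears."""
    class_pixels = np.zeros(n_classes)
    image_pixels = np.zeros(n_classes)
    for lab in label_maps:
        lab = np.asarray(lab)
        valid = lab[lab != ignore]                      # null-labelled pixels are not counted
        counts = np.bincount(valid, minlength=n_classes)[:n_classes]
        class_pixels += counts
        image_pixels[counts > 0] += valid.size          # only images where the class appears
    freq = class_pixels / np.maximum(image_pixels, 1)
    weights = np.zeros(n_classes)                       # classes never seen get weight 0
    seen = freq > 0
    weights[seen] = np.median(freq[seen]) / freq[seen]
    return weights


w = median_frequency_weights(
    [np.array([[0, 0], [0, 1]]), np.array([[0, 2], [2, 2]])], n_classes=3)
# freq = [0.5, 0.25, 0.75], median = 0.5  ->  w = [1.0, 2.0, 0.667]
```

Classes that dominate the images in which they appear receive weights below 1, and rarer classes receive weights above 1, so each class contributes more evenly to the loss.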

The RF parameter ntree is the number of trees, and mtry is the number of variables randomly selected as split candidates at each node. The OOB error and accuracy were calculated for hyperparameter selection. For land-cover classification, mtry was set to 2, and ntree was varied from 500 to 3000 in increments of 500. As ntree increased from 500 to 2000, the OOB error decreased and the accuracy increased; the accuracy decreased when ntree exceeded 2000. Therefore, ntree and mtry were set to 2000 and 2, respectively.

The radial-basis-function (RBF) kernel was used because it gave the best SVM performance among the kernels tested. The kernel was optimized using the cost and gamma parameters as hyperparameters. The cost parameter controls the trade-off between training error and model complexity, and the gamma parameter controls the width of the RBF kernel and hence the nonlinear mapping into a high-dimensional feature space. The hyperparameters were selected by grid search, in which the accuracy was evaluated for gamma values from 0.5 to 2.0 and cost values from 4 to 16. The lowest error was obtained with a gamma of 2.0 and a cost of 16.
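The grid search amounts to evaluating every (gamma, cost) pair and keeping the best one. The sketch below assumes grid points of 0.5, 1.0, 1.5, and 2.0 for gamma and 4, 8, and 16 for cost (the study reports only the ranges) and uses a hypothetical scoring function in place of actually training an RBF SVM and measuring validation accuracy.

```python
from itertools import product


def grid_search(score_fn, gammas, costs):
    """Exhaustive grid search: evaluate score_fn(gamma, cost) for every pair
    and return the best pair, its score, and the full results table."""
    results = {(g, c): score_fn(g, c) for g, c in product(gammas, costs)}
    best = max(results, key=results.get)
    return best, results[best], results


GAMMAS = [0.5, 1.0, 1.5, 2.0]   # assumed grid within the reported 0.5-2.0 range
COSTS = [4, 8, 16]              # assumed grid within the reported 4-16 range


def toy_validation_accuracy(gamma, cost):
    """Hypothetical stand-in for 'train an RBF SVM, return validation accuracy'."""
    return 0.5 + 0.1 * gamma + 0.01 * cost


best, best_score, table = grid_search(toy_validation_accuracy, GAMMAS, COSTS)
```

With scikit-learn, the same search is commonly written as `GridSearchCV(SVC(kernel="rbf"), {"C": COSTS, "gamma": GAMMAS})`, where `C` is the cost parameter; the study does not state which software it used. Because the reported optimum (gamma = 2.0, cost = 16) lies at the corner of the searched ranges, extending the grid beyond those values would show whether accuracy continues to improve.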

3.2. Land-Cover Classification

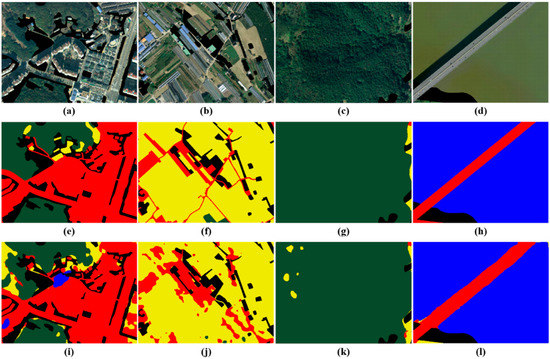

SegNet, SVM, and RF were trained using the tuned hyperparameters of each model. In total, 20 aerial orthoimages of Namyangju city were sampled to create a land-cover map and to assess the prediction accuracy on the test dataset (Figure 4). SegNet achieved the highest OA (91.54%), whereas the OAs of the RF and SVM were 52.96% and 50.27%, respectively (Table 1).

Figure 4.

(a–d) Aerial orthoimagery showing regions dominated by urban, crops, forests, and water. (e–h) Label data derived from a Level-3 LULC map for the regions shown in (a–d), respectively. (i–l) SegNet land-cover-classification results for the regions shown in (a–d), respectively.

Table 1.

Overall accuracies and individual accuracies (%) for each land-cover type for the three land-cover-classification models.

The urban prediction accuracies of SegNet, RF, and SVM were 93.96%, 57.64%, and 56.22%, respectively; SegNet's urban accuracy was substantially higher than those of the machine-learning models. However, SegNet misclassified some urban areas as water. Because only RGB bands were available, the RF and SVM classified high-rise roofs with crop-like colors as crops and classified high-rise shadows as forests, which reduced their accuracies. For crops, SegNet achieved an accuracy of 87.59%, followed by the SVM (55.39%) and RF (54.13%). SegNet classified crops with a relatively low error rate, whereas the SVM and RF were less accurate: they erroneously classified forested land as urban rather than crops, and they classified crops with water-like colors as water. All three models achieved a forest-detection accuracy of >80%. SegNet classified some forests as crops, but most forests were accurately classified regardless of RGB variations. In contrast, the RF and SVM misclassified areas with water-like RGB colors as water. The differences among the SegNet, SVM, and RF accuracies were most pronounced for water: SegNet had an accuracy of 96.32%, whereas the RF and SVM had accuracies of 18.21% and 6.75%, respectively. Therefore, RF and SVM classifiers trained on RGB pixel values alone could not classify water reliably in this study.
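OA and per-class accuracy can both be read off a confusion matrix. The sketch assumes that the per-class accuracies in Table 1 are producer's accuracies (correctly classified reference pixels divided by all reference pixels of that class); if user's accuracies were intended, the diagonal would be divided by the column sums instead. Pixels labeled null are skipped, and the class order is an assumption.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes, ignore=255):
    """Rows = reference class, columns = predicted class; null-labelled pixels skipped."""
    y_true, y_pred = np.asarray(y_true).ravel(), np.asarray(y_pred).ravel()
    keep = y_true != ignore
    idx = y_true[keep].astype(int) * n_classes + y_pred[keep].astype(int)
    return np.bincount(idx, minlength=n_classes ** 2).reshape(n_classes, n_classes)


def accuracies(cm):
    """Overall accuracy and per-class (producer's) accuracy from a confusion matrix."""
    oa = np.trace(cm) / cm.sum()
    per_class = np.diag(cm) / np.maximum(cm.sum(axis=1), 1)
    return oa, per_class


ref = np.array([0, 0, 1, 1, 2, 2, 3, 3])    # urban, crops, forest, water (assumed order)
pred = np.array([0, 0, 1, 0, 2, 2, 3, 1])
cm = confusion_matrix(ref, pred, n_classes=4)
oa, per_class = accuracies(cm)   # oa = 0.75; per_class = [1.0, 0.5, 1.0, 0.5]
```

Because OA is weighted by area, a model can reach a moderate OA while failing almost completely on a small class such as water, which is why the per-class values are reported alongside it.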

3.3. Land-Cover-Change Detection

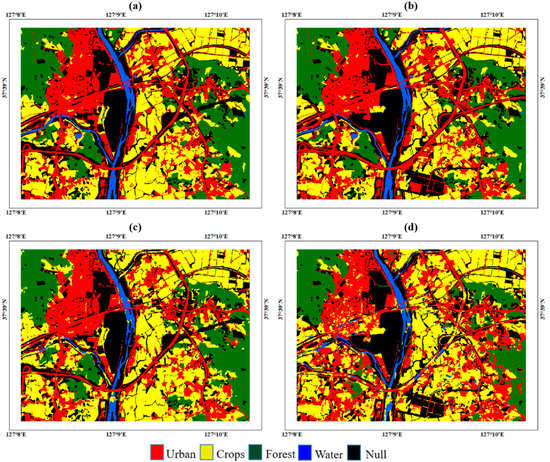

To detect land-cover changes, we used a test dataset containing 49 aerial orthoimages (480 × 360 pixels) of Namyangju city. We created land-cover maps of Namyangju city for 2010 and 2012 by applying these orthoimages to the trained SegNet (Figure 5); the resulting OAs for 2010 and 2012 were 86.42% and 78.09%, respectively (Table 2). In the Level-3 LULC reference dataset, the urban area increased, whereas the other land-cover types decreased. Crops showed the greatest reduction in area, followed by forests. The barren-land area also increased significantly as crops and forests were rapidly converted to barren land and then urbanized. The reference dataset thus indicates rapid urbanization from 2010 to 2012. However, the increase in urban area was greater in our land-cover maps than in the reference dataset because forests were frequently misclassified as urban areas. Changes in crops were well represented in our land-cover maps, whereas forests decreased much more in our maps than in the reference dataset, again because forests were misclassified as urban or crops. The water area increased in our land-cover maps, in contrast with the reference dataset; this discrepancy was caused by the misclassification of roads as water. Nevertheless, the model detected the urbanization trend, with results similar to those of the reference dataset.

Figure 5.

Level-3 LULC maps and SegNet land-cover-classification maps of Namyangju city, Republic of Korea. Level-3 LULC maps for (a) 2010 and (b) 2012. SegNet land-cover-classification maps for (c) 2010 and (d) 2012.

Table 2.

Areas (m²) of each land-cover type in the Level-3 LULC map and the SegNet land-cover-classification map of Namyangju city, Republic of Korea, and their changes between 2010 and 2012.
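With 1 m pixels, each pixel covers 1 m², so the per-class areas in Table 2 are pixel counts multiplied by the pixel area, and each change is the 2012 area minus the 2010 area. A minimal NumPy sketch with a toy pair of maps follows; the class order (urban, crops, forest, water) and the null value of 255 are assumptions.

```python
import numpy as np

PIXEL_AREA_M2 = 1.0  # 1 m x 1 m pixels


def class_areas(label_map, n_classes, pixel_area_m2=PIXEL_AREA_M2, ignore=255):
    """Area (m^2) of each class = pixel count x pixel area."""
    lab = np.asarray(label_map)
    counts = np.bincount(lab[lab != ignore].ravel(), minlength=n_classes)[:n_classes]
    return counts * pixel_area_m2


def area_change(map_2010, map_2012, n_classes=4):
    """Per-class areas in both years and the 2012 - 2010 difference."""
    a10 = class_areas(map_2010, n_classes)
    a12 = class_areas(map_2012, n_classes)
    return a10, a12, a12 - a10


a10, a12, delta = area_change([[0, 1], [1, 2]], [[0, 0], [1, 2]])
# a10 = [1, 2, 1, 0], a12 = [2, 1, 1, 0], delta = [1, -1, 0, 0]
```

Net area changes of this kind can hide offsetting conversions (for example, some forest misclassified as urban while other urban pixels appear as crops); the from-to breakdown in Table 3 is needed to see those.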

Table 3 shows the transition (from-to) matrices between the 2010 and 2012 Level-3 LULC maps and between the 2010 and 2012 SegNet land-cover-classification maps. In the Level-3 LULC maps, the greatest change in area was from barren land to urban areas, followed by crops to urban areas and barren land to crops. No land-cover type changed to forests, which suggests that forests only decreased as urban areas increased, consistent with progressive urbanization. Similar trends were observed in the SegNet land-cover-classification maps, where the greatest change was from crops to urban, followed by urban to crops, forests to urban, forests to crops, and barren land to urban areas. The changes from urban to crops, forests to urban, and forests to crops were similar between the SegNet and reference datasets, although some forests were misclassified as urban or crops. In the SegNet results, many nonurban areas in Namyangju city changed to urban areas owing to urbanization, whereas other areas changed to crops or barren land because urban areas were occasionally classified as crops or barren land. In some areas without surface water, roads were misclassified as water, possibly because roads and water have similar RGB values.

Table 3.

Changes in area for each land-cover type in the Level-3 LULC map and SegNet land-cover-classification map in 2010 and 2012.
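The from-to changes in Table 3 correspond to a pixel-by-pixel cross-tabulation of the two classification maps. A minimal NumPy sketch follows, assuming co-registered maps of equal size that use the same integer class codes in both years; the diagonal holds unchanged pixels, and each off-diagonal cell is a change such as crops to urban (in m² for 1 m pixels).

```python
import numpy as np


def transition_matrix(map_from, map_to, n_classes, ignore=255):
    """T[i, j] = number of pixels of class i in the first map and class j in the second.
    Diagonal = unchanged pixels; off-diagonal = from-to changes (m^2 for 1 m pixels)."""
    a = np.asarray(map_from).ravel()
    b = np.asarray(map_to).ravel()
    keep = (a != ignore) & (b != ignore)                 # skip pixels null in either year
    idx = a[keep].astype(int) * n_classes + b[keep].astype(int)
    return np.bincount(idx, minlength=n_classes ** 2).reshape(n_classes, n_classes)


T = transition_matrix([[0, 1], [1, 2]], [[0, 0], [1, 2]], n_classes=4)
changed = T.sum() - np.trace(T)   # pixels whose class differs between the two maps
```

Row sums of `T` give the first-year class areas and column sums the second-year areas, so the matrix is consistent with the net changes in Table 2 while also showing where each change came from.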

4. Discussion

In this study, SegNet and RGB aerial orthoimagery were used to classify land cover into four types in Namyangju city, with an OA of 91.54%. The land-cover-change detection accuracies were 86.42% and 78.09% for 2010 and 2012, respectively.

Marcal et al. applied fuzzy clustering, SVM, K-nearest-neighbor (KNN), and logistic classification algorithms to National Aeronautics and Space Administration (NASA) Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) images and found that the KNN algorithm showed the highest land-cover-classification accuracy (72.2%), followed by the fuzzy classifier (70.5%) and SVM (60.9%) [36]. Cots-Folch et al. reported an accuracy of 71–74% for a neural-network method applied to 5 m-resolution aerial orthoimagery of the Denominació d’Origen Qualificada Priorat in Catalonia, Spain, taken in 1986, 1998, and 2003 [37]. Cleve et al. reported an accuracy of 65% for the ISODATA unsupervised classification of land-cover types (urban, forests, and grassland) in aerial orthoimages with a spatial resolution of 0.15 m; the accuracy increased to approximately 80% when supervised image segmentation was applied. The best results in these land-cover-mapping studies exceeded 70% accuracy [38]; however, the accuracy of SegNet in the present study was higher than those of all of these previous studies, probably because, rather than relying on individual RGB pixel values, SegNet also uses the values of the surrounding pixels and preserves spatial information during the encoding and decoding processes. Because pixel-based classifiers are limited when only RGB values are available [39], the SegNet model outperforms conventional classification models when RGB aerial orthoimagery is used.

Various studies have classified land cover using SegNet and RGB aerial orthoimagery, including imagery from unmanned aerial vehicles (UAVs). Pashaei et al. used UAV images with a spatial resolution of 0.03 m to classify four land-cover types [40], and Ning et al. used aerial orthoimagery with a spatial resolution of 0.5 m to classify 10 land-cover types [41]; they achieved classification accuracies of 88% and 90%, respectively, both lower than the accuracy obtained in the present study. Guo et al. reported an OA of 83.97% for six land-cover types classified using a digital surface model and aerial orthoimagery with a spatial resolution of 0.05 m [42]; our higher accuracy may be because our aerial orthoimages had a coarser spatial resolution and we classified fewer land-cover types.

Several studies have used RGB data from high-resolution satellite images, rather than aerial orthoimages, for land-cover classification. For example, Zhang et al. reported an OA of 78.83% for seven land-cover types classified from satellite images with a spatial resolution of 0.5 m [43], and Li et al. reported OA values of 80.30% and 80.03% for six land-cover types classified using two satellite images with spatial resolutions of 2 and 4 m, respectively [44]. Notably, these accuracies based on satellite RGB data were lower than those obtained with aerial orthoimagery, likely owing to the effects of spatial resolution and clouds on the satellite images.

Some studies have classified land cover using various indices that are calculated using near-infrared (NIR) data from multispectral images. Unlike aerial orthoimages, which typically use only the RGB spectrum, multispectral images present data from various wavelengths. Laban et al. used 10 bands of data from Sentinel satellite images with a spatial resolution of 10 m, and they reported an OA of 71% for eight land-cover types [45]. Zhang et al. used the RGB and NIR bands of ZY-3 images with a spatial resolution of 5.8 m, and they reported an OA of 79.4% for six land-cover types [46]. Furthermore, Atik and Ipbuker used the RGB and NIR bands of Sentinel images with a spatial resolution of 10 m, and QuickBird images with a spatial resolution of 0.61 m, to calculate three indices, and they used seven types of information to classify three land-cover types. They reported that the OA was 92.48%, which was higher than the OA reported in the present study [47]. Specifically, the land-cover-classification accuracy improved when NIR and various indices were used along with the RGB images. Zhang et al. compared four combinations of WorldView-2 and WorldView-3 satellite data (RGB with four bands, C infrared with eight bands) to perform land-cover classification, and they found that the eight-band combination showed the highest accuracy, whereas the RGB combination showed the lowest accuracy [34]. Therefore, the application of various bands and indices along with RGB can improve the classification accuracy, even within the same model.
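As one concrete example of an NIR-based index, the normalized difference vegetation index is NDVI = (NIR - Red) / (NIR + Red). The sketch computes it and appends it to the RGB bands as an extra input channel; it assumes co-registered reflectance arrays with red as the first RGB band, and the NIR band it needs is exactly what RGB-only aerial orthoimagery cannot supply. The helper names are illustrative.

```python
import numpy as np


def ndvi(nir, red, eps=1e-12):
    """Normalized difference vegetation index, (NIR - Red) / (NIR + Red)."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    return (nir - red) / np.maximum(nir + red, eps)  # eps guards against 0/0


def stack_with_index(rgb, nir):
    """Append NDVI to an (H, W, 3) RGB array as a fourth input band (red assumed first)."""
    red = rgb[..., 0]
    return np.dstack([rgb.astype(float), ndvi(nir, red)])


v = ndvi([0.5, 0.1, 0.0], [0.1, 0.3, 0.0])                    # approx. [0.667, -0.5, 0.0]
bands = stack_with_index(np.ones((2, 2, 3)), np.full((2, 2), 3.0))  # shape (2, 2, 4)
```

Vegetation reflects strongly in the NIR and absorbs red light, so NDVI separates forests and crops from roads, roofs, and water far more cleanly than RGB values alone, which bears directly on the road-water and forest-urban confusions observed here.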

The LULC changes revealed in this study show that urbanization in the Namhan and Bukhan River Basins has increased urban infrastructure and decreased forests. Efficient urban development and forest preservation may help preserve the ecosystem and restore biodiversity.

5. Conclusions

In this study, the OA of land-cover classification into four types using SegNet and aerial orthoimagery was 91.54%, which is higher than those reported in previous studies. The change-detection OAs for the 2010 and 2012 SegNet land-cover-classification maps, based on 49 aerial orthoimages of Namyangju city, were 86.42% and 78.09%, respectively, demonstrating high performance. The Level-3 LULC maps used as the reference dataset showed less pronounced changes between 2010 and 2012 than the SegNet maps, whose changes were more dynamic because crops and forests were misclassified as urban. Owing to the limitations of this study, some land-cover types showed low change-detection accuracies. To address this problem, future studies could use multispectral images, from which additional bands and indices, such as vegetation indices, can be derived to improve the accuracy of land-cover classification and change detection.

Based on these results, South Korea's land-cover map could be updated every 2 years, and high-resolution land-cover maps could be produced using not only aerial orthoimagery but also satellite data. This study could inform guidelines for urban management systems and the sustainable development of forests and crops in South Korea.

Author Contributions

Conceptualization, S.-H.L.; Formal analysis, S.S. and S.-H.L.; Funding acquisition, J.K.; Investigation, S.S. and S.-H.L.; Software, J.B. and D.L.; Supervision, J.K.; Visualization, M.R. and S.-R.P.; Writing—original draft, S.S.; Writing—review & editing, D.S. and J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Korea Institute of Marine Science and Technology Promotion (KIMST), funded by the Ministry of Oceans and Fisheries, Korea (202204230001), and it was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT, MOE) and (No. 2019M3E7A1113103).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kaul, H.A.; Sopan, I. LULC classification and change detection using high resolution temporal satellite data. J. Environ. 2012, 1, 146–152.

- Zhu, Z.; Woodcock, C.E. Continuous change detection and classification of land cover using all available Landsat data. Remote Sens. Environ. 2014, 144, 152–171.

- Alemu, B.; Garedew, E.; Eshetu, Z.; Kassa, H. Land use and land cover changes and associated driving forces in north western lowlands of Ethiopia. Int. Res. J. Agric. Sci. Soil Sci. 2015, 5, 28–44.

- Olorunfemi, I.E.; Fasinmirin, J.T.; Olufayo, A.A.; Komolafe, A.A. GIS and remote sensing-based analysis of the impacts of land use/land cover change (LULCC) on the environmental sustainability of Ekiti State, southwestern Nigeria. Environ. Dev. Sustain. 2020, 22, 661–692.

- Chen, J.; Gong, P.; He, C.; Pu, R.; Shi, P. Land-use/land-cover change detection using improved change-vector analysis. Photogramm. Eng. Remote Sens. 2003, 69, 369–379.

- Yirsaw, E.; Wu, W.; Shi, X.; Temesgen, H.; Bekele, B. Land use/land cover change modeling and the prediction of subsequent changes in ecosystem service values in a coastal area of China, the Su-Xi-Chang Region. Sustainability 2017, 9, 1204.

- Hussain, S.; Lu, L.; Mubeen, M.; Nasim, W.; Karuppannan, S.; Fahad, S.; Tariq, A.; Mousa, B.G.; Mumtaz, F.; Aslam, M. Spatiotemporal variation in land use land cover in the response to local climate change using multispectral remote sensing data. Land 2022, 11, 595.

- Hussain, S.; Mubeen, M.; Karuppannan, S. Land use and land cover (LULC) change analysis using TM, ETM+ and OLI Landsat images in district of Okara, Punjab, Pakistan. Phys. Chem. Earth Parts A/B/C 2022, 126, 103117.

- DeFries, R.S.; Townshend, J.R.G. NDVI-derived land cover classifications at a global scale. Int. J. Remote Sens. 1994, 15, 3567–3586.

- Hansen, M.C.; DeFries, R.S.; Townshend, J.R.; Sohlberg, R. Global land cover classification at 1 km spatial resolution using a classification tree approach. Int. J. Remote Sens. 2020, 21, 1331–1364. [Google Scholar] [CrossRef]

- Loveland, T.R.; Reed, B.C.; Brown, J.F.; Ohlen, D.O.; Zhu, Z.; Yang, L.W.M.J.; Merchant, J.W. Development of a global land cover characteristics database and IGBP DISCover from 1 km AVHRR data. Int. J. Remote Sens. 2020, 21, 1303–1330. [Google Scholar] [CrossRef]

- Belward, A.S.; Skøien, J.O. Who launched what, when and why; trends in global land-cover observation capacity from civilian earth observation satellites. ISPRS J. Photogramm. Remote Sens. 2015, 103, 115–128. [Google Scholar] [CrossRef]

- Gómez, C.; White, J.C.; Wulder, M.A. Optical remotely sensed time series data for land cover classification: A review. ISPRS J. Photogramm. Remote Sens. 2016, 116, 55–72. [Google Scholar] [CrossRef]

- Vogelmann, J.E.; Howard, S.M.; Yang, L.; Larson, C.R.; Wylie, B.K.; Van Driel, N. Completion of the 1990s National Land Cover Data Set for the conterminous United States from Landsat Thematic Mapper data and ancillary data sources. Photogramm. Eng. Remote Sens. 2001, 67, 650–655, 657–659, 661–662. [Google Scholar]

- Wulder, M.A.; White, J.C.; Goward, S.N.; Masek, J.G.; Irons, J.R.; Herold, M.; Cohen, W.B.; Loveland, T.R.; Woodcock, C.E. Landsat continuity: Issues and opportunities for land cover monitoring. Remote Sens. Environ. 2008, 112, 955–969. [Google Scholar] [CrossRef]

- Talukdar, S.; Singha, P.; Mahato, S.; Praveen, B.; Rahman, A. Dynamics of ecosystem services (ESs) in response to LULC (LU/LC) changes in the lower Gangetic plain of India. Ecol. Indic. 2020, 112, 106121. [Google Scholar] [CrossRef]

- Rogan, J.; Miller, J.; Stow, D.; Franklin, J.; Levien, L.; Fischer, C. Land-cover change monitoring with classification trees using Landsat TM and ancillary data. Photogramm. Eng. Remote Sens. 2003, 69, 793–804. [Google Scholar] [CrossRef]

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300. [Google Scholar] [CrossRef]

- Otukei, J.R.; Blaschke, T. Land cover change assessment using decision trees, support vector machines and maximum likelihood classification algorithms. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, S27–S31. [Google Scholar] [CrossRef]

- Thanh Noi, P.; Kappas, M. Comparison of random forest, k-nearest neighbor, and support vector machine classifiers for land cover classification using Sentinel-2 imagery. Sensors 2017, 18, 18. [Google Scholar] [CrossRef]

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Guo, X.; Nie, R.; Cao, J.; Zhou, D.; Qian, W. Fully convolutional network-based multifocus image fusion. Neural Comput. 2018, 30, 1775–1800. [Google Scholar] [CrossRef] [PubMed]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef]

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. arXiv 2017, arXiv:1704.06857. [Google Scholar]

- Lee, S.H.; Han, K.J.; Lee, K.; Lee, K.J.; Oh, K.Y.; Lee, M.J. Classification of Landscape Affected by Deforestation Using High-Resolution Remote Sensing Data and Deep-Learning Techniques. Remote Sens. 2020, 12, 3372. [Google Scholar] [CrossRef]

- Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-detect: Vehicle detection and classification through semantic segmentation of aerial images. Remote Sens. 2017, 9, 368. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef]

- Chan, J.C.W.; Paelinckx, D. Evaluation of Random Forest and Adaboost tree-based ensemble classification and spectral band selection for ecotope classification using airborne hyperspectral imagery. Remote Sens. Environ. 2008, 112, 2999–3011. [Google Scholar] [CrossRef]

- Vapnik, V.; Guyon, I.; Hastie, T. Support vector machines. Mach. Learn. 1995, 20, 273–297. [Google Scholar]

- Huang, C.; Davis, L.S.; Townshend, J.R.G. An assessment of support vector machines for land cover classification. Int. J. Remote Sens. 2002, 23, 725–749. [Google Scholar] [CrossRef]

- Zhang, P.; Ke, Y.; Zhang, Z.; Wang, M.; Li, P.; Zhang, S. Urban land use and land cover classification using novel deep learning models based on high spatial resolution satellite imagery. Sensors 2018, 18, 3717. [Google Scholar] [CrossRef]

- Bardenet, R.; Brendel, M.; Kégl, B.; Sebag, M. Collaborative hyperparameter tuning. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 199–207. [Google Scholar]

- Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2650–2658. [Google Scholar]

- Marçal, A.R.S.; Borges, J.S.; Gomes, J.A.; Pinto Da Costa, J.F. Land cover update by supervised classification of segmented ASTER images. Int. J. Remote Sens. 2005, 26, 1347–1362. [Google Scholar] [CrossRef]

- Cots-Folch, R.; Aitkenhead, M.J.; Martínez-Casasnovas, J.A. Mapping land cover from detailed aerial photography data using textural and neural network analysis. Int. J. Remote Sens. 2007, 28, 1625–1642. [Google Scholar] [CrossRef]

- Cleve, C.; Kelly, M.; Kearns, F.R.; Moritz, M. Classification of the wildland–urban interface: A comparison of pixel-and object-based classifications using high-resolution aerial photography. Comput. Environ. Urban Syst. 2008, 32, 317–326. [Google Scholar] [CrossRef]

- Li, J.; Bortolot, Z.J. Quantifying the impacts of land cover change on catchment-scale urban flooding by classifying aerial images. J. Clean. Prod. 2022, 344, 130992. [Google Scholar] [CrossRef]

- Pashaei, M.; Kamangir, H.; Starek, M.J.; Tissot, P. Review and evaluation of deep learning architectures for efficient land cover mapping with UAS hyper-spatial imagery: A case study over a wetland. Remote Sens. 2020, 12, 959. [Google Scholar] [CrossRef]

- Ning, H.; Li, Z.; Wang, C.; Yang, L. Choosing an appropriate training set size when using existing data to train neural networks for land cover segmentation. Ann. GIS 2020, 26, 329–342. [Google Scholar] [CrossRef]

- Guo, Y.; Wang, F.; Xiang, Y.; You, H. DGFNet: Dual Gate Fusion Network for Land Cover Classification in Very High-Resolution Images. Remote Sens. 2021, 13, 3755. [Google Scholar] [CrossRef]

- Zhang, X.; Liu, L.; Chen, X.; Gao, Y.; Xie, S.; Mi, J. GLC_FCS30: Global land-cover product with fine classification system at 30 m using time-series Landsat imagery. Earth Syst. Sci. Data 2021, 13, 2753–2776. [Google Scholar] [CrossRef]

- Li, Y.; Zhou, Y.; Zhang, Y.; Zhong, L.; Wang, J.; Chen, J. DKDFN: Domain Knowledge-Guided deep collaborative fusion network for multimodal unitemporal remote sensing land cover classification. ISPRS J. Photogramm. Remote Sens. 2022, 186, 170–189. [Google Scholar] [CrossRef]

- Laban, N.; Abdellatif, B.; Ebeid, H.M.; Shedeed, H.A.; Tolba, M.F. Sparse Pixel Training of Convolutional Neural Networks for Land Cover Classification. IEEE Access 2021, 9, 52067–52078. [Google Scholar] [CrossRef]

- Zhang, C.; Harrison, P.A.; Pan, X.; Li, H.; Sargent, I.; Atkinson, P.M. Scale Sequence Joint Deep Learning (SS-JDL) for land use and land cover classification. Remote Sens. Environ. 2020, 237, 111593. [Google Scholar] [CrossRef]

- Atik, S.O.; Ipbuker, C. Integrating convolutional neural network and multiresolution segmentation for land cover and land use mapping using satellite imagery. Appl. Sci. 2021, 11, 5551. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).