Image Recognition-Based Architecture to Enhance Inclusive Mobility of Visually Impaired People in Smart and Urban Environments

Abstract

1. Introduction

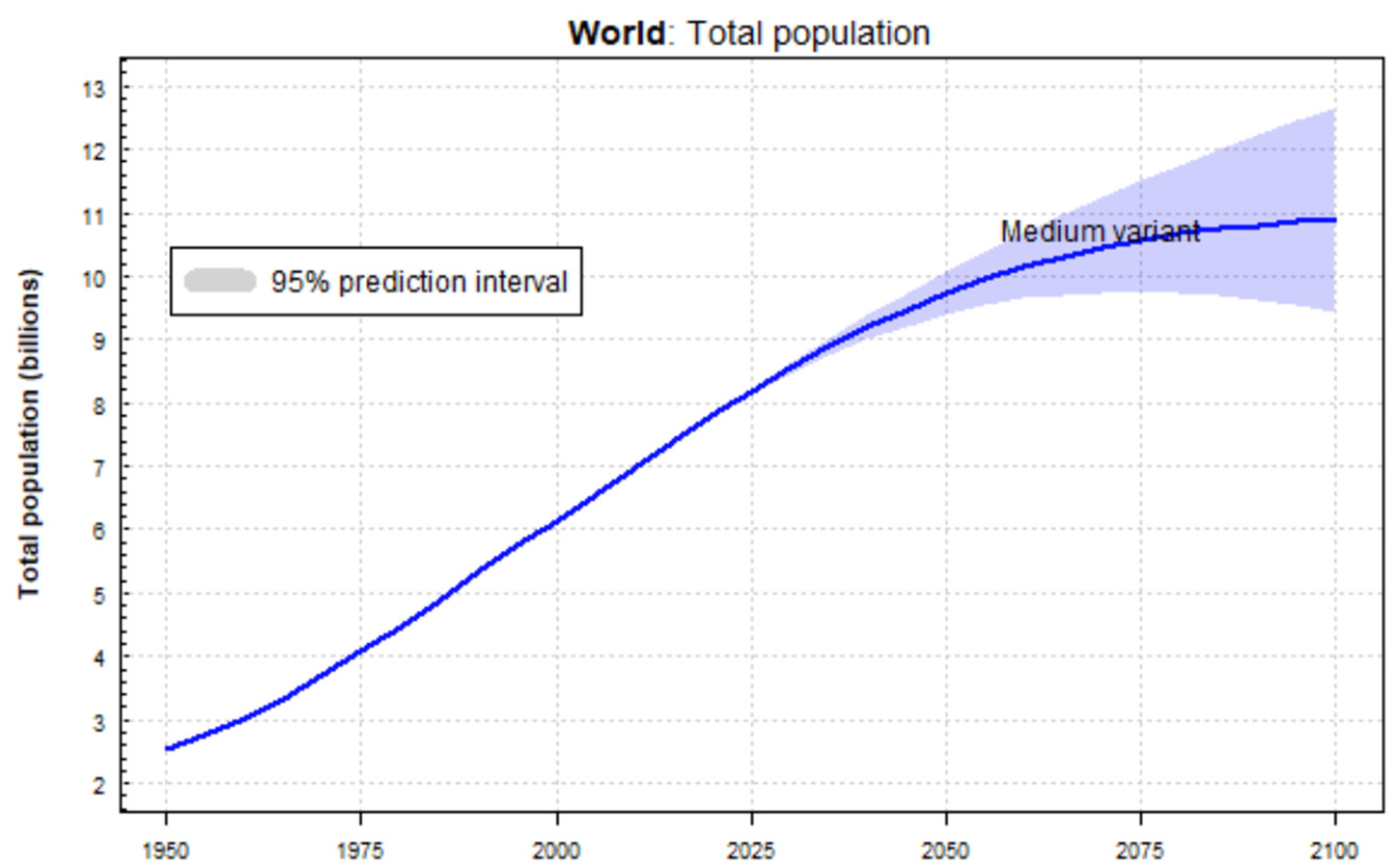

2. Urban Mobility Challenges, Trends, and Inclusiveness

3. Related Work on VIP

3.1. Navigation of Visually Impaired People

3.2. Outdoor Positioning Using Landmarks

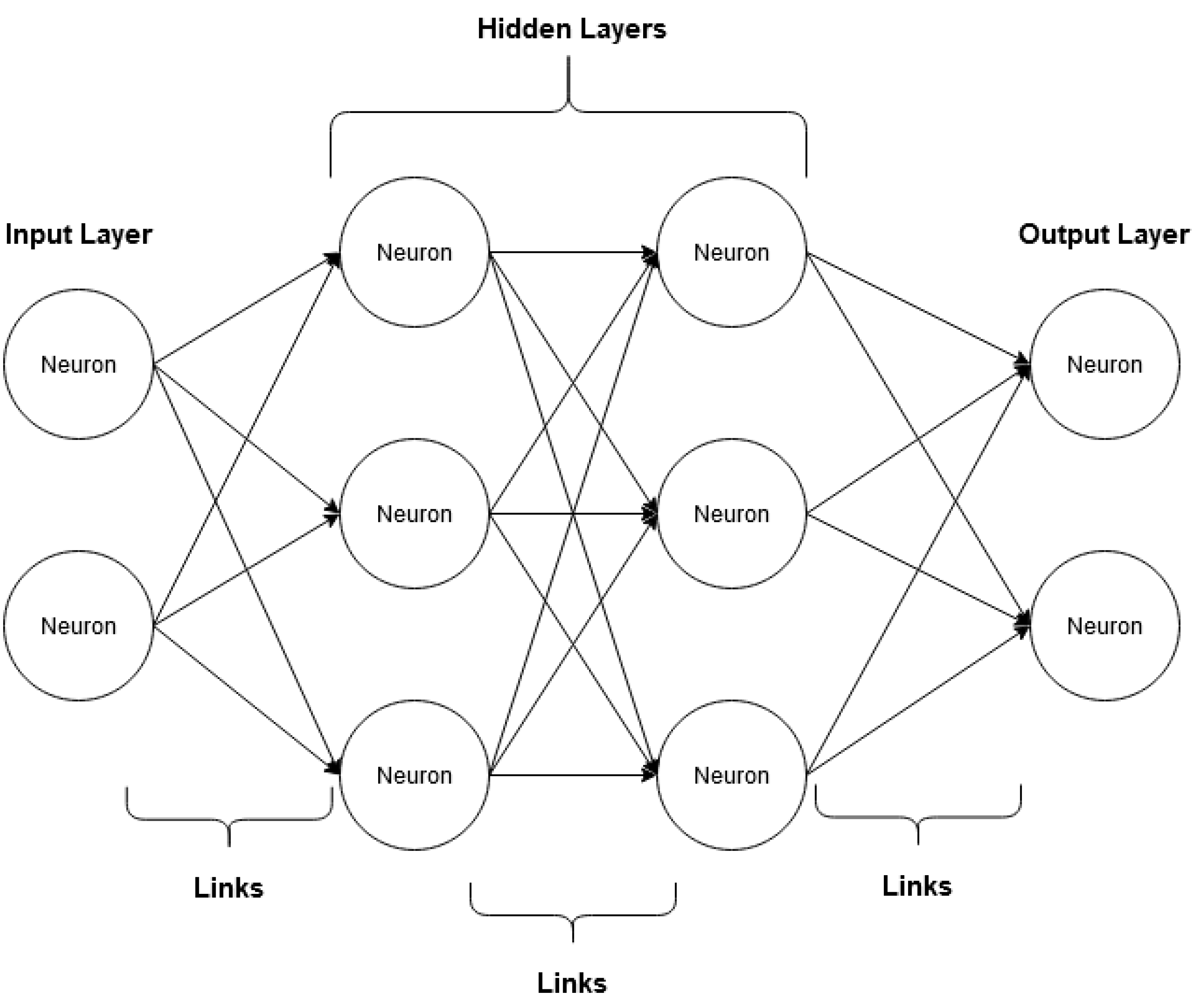

3.3. The Role of Machine Learning in Image Recognition

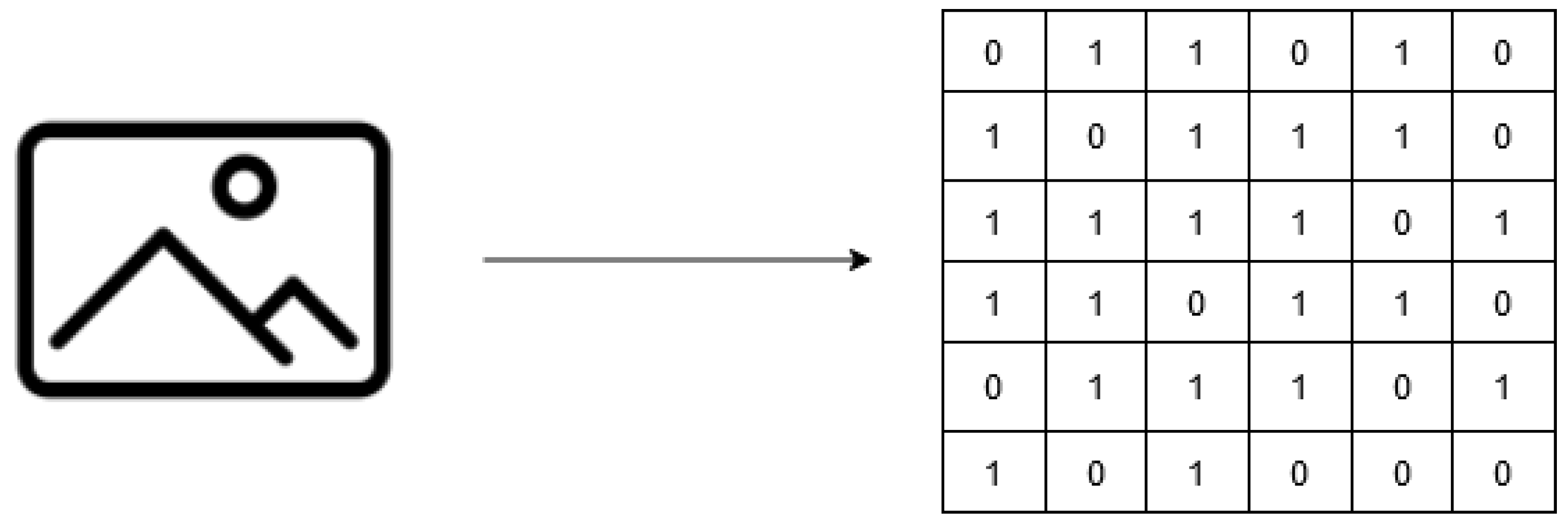

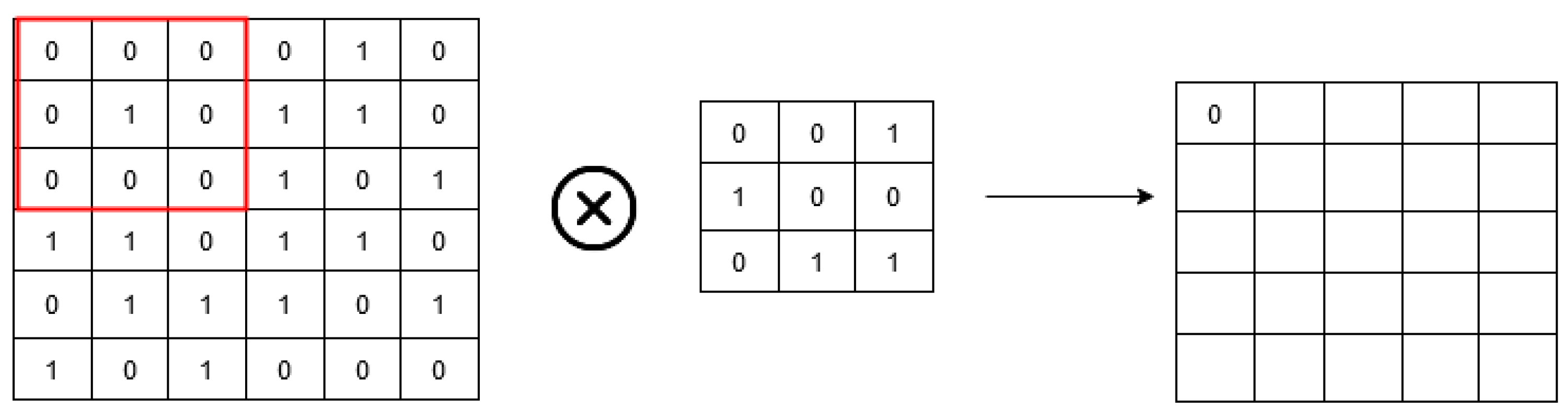

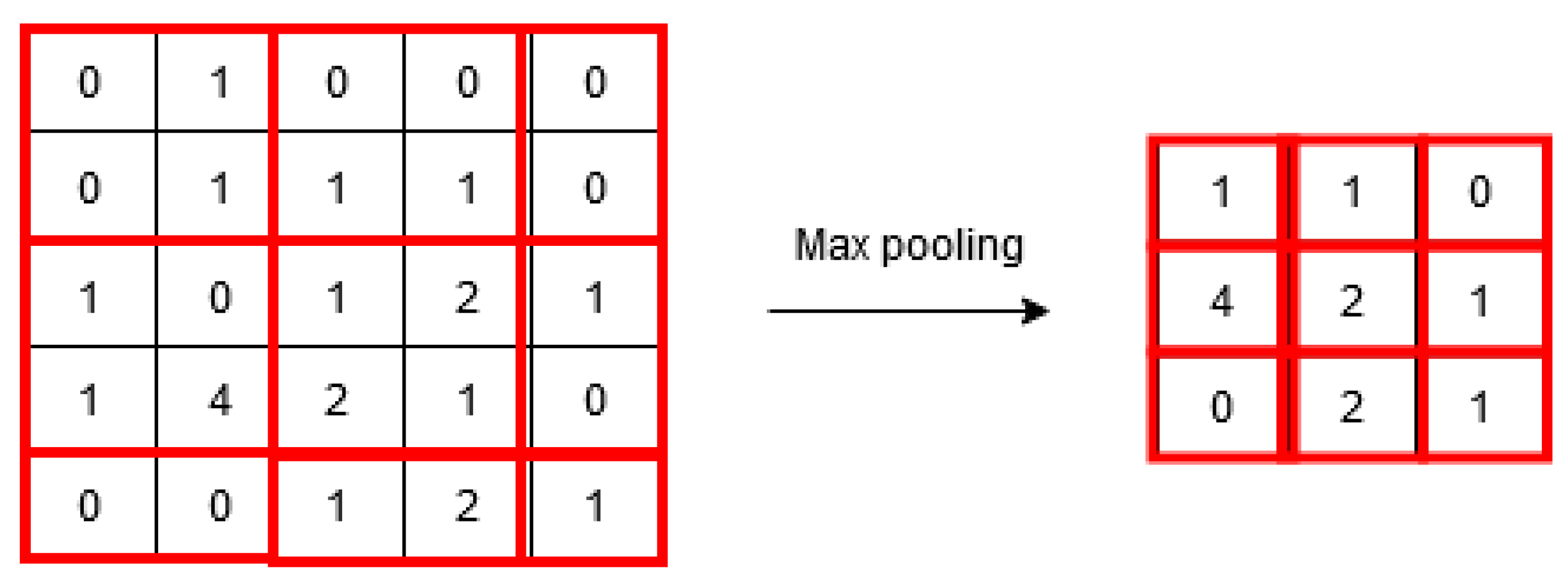

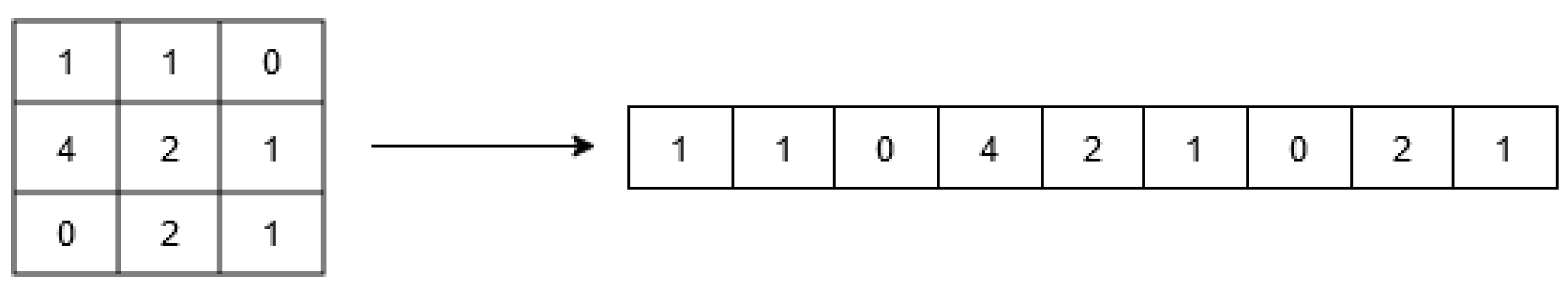

3.4. Convolutional Neural Networks for Image Recognition

- Data input;

- Convolutional layer;

- Pooling layer;

- Flattening.

- Filter;

- Feature map.
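As a rough illustration of how the elements listed above relate to each other, the following framework-free Python sketch passes a toy input through a convolution with a single filter, pools the resulting feature map, and flattens it. It is not the implementation used in this work: the function names (`conv2d`, `max_pool2d`, `flatten`), the 5x5 input, and the 2x2 filter are all assumptions chosen for readability.

```python
# Minimal sketch of: data input -> convolutional layer (filter -> feature map)
# -> pooling layer -> flattening. All names and values here are illustrative.

def conv2d(image, kernel):
    """Slide the filter over the input (stride 1, no padding).

    As in most deep learning libraries, this is technically a
    cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def flatten(feature_map):
    """Turn the 2D map into a 1D vector for a later dense layer."""
    return [value for row in feature_map for value in row]


# Data input: a toy 5x5 grayscale image with a vertical edge.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Filter: a hand-written 2x2 vertical-edge detector (a trained CNN learns these weights).
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)  # 4x4 feature map, non-zero only at the edge
pooled = max_pool2d(feature_map)     # 2x2 map after pooling
vector = flatten(pooled)             # 4-element vector

print(feature_map)
print(pooled)
print(vector)
```

In a real network the filter weights are learned during training and many filters are applied in parallel, each producing its own feature map; the sketch uses a single fixed filter only to make the data flow visible.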

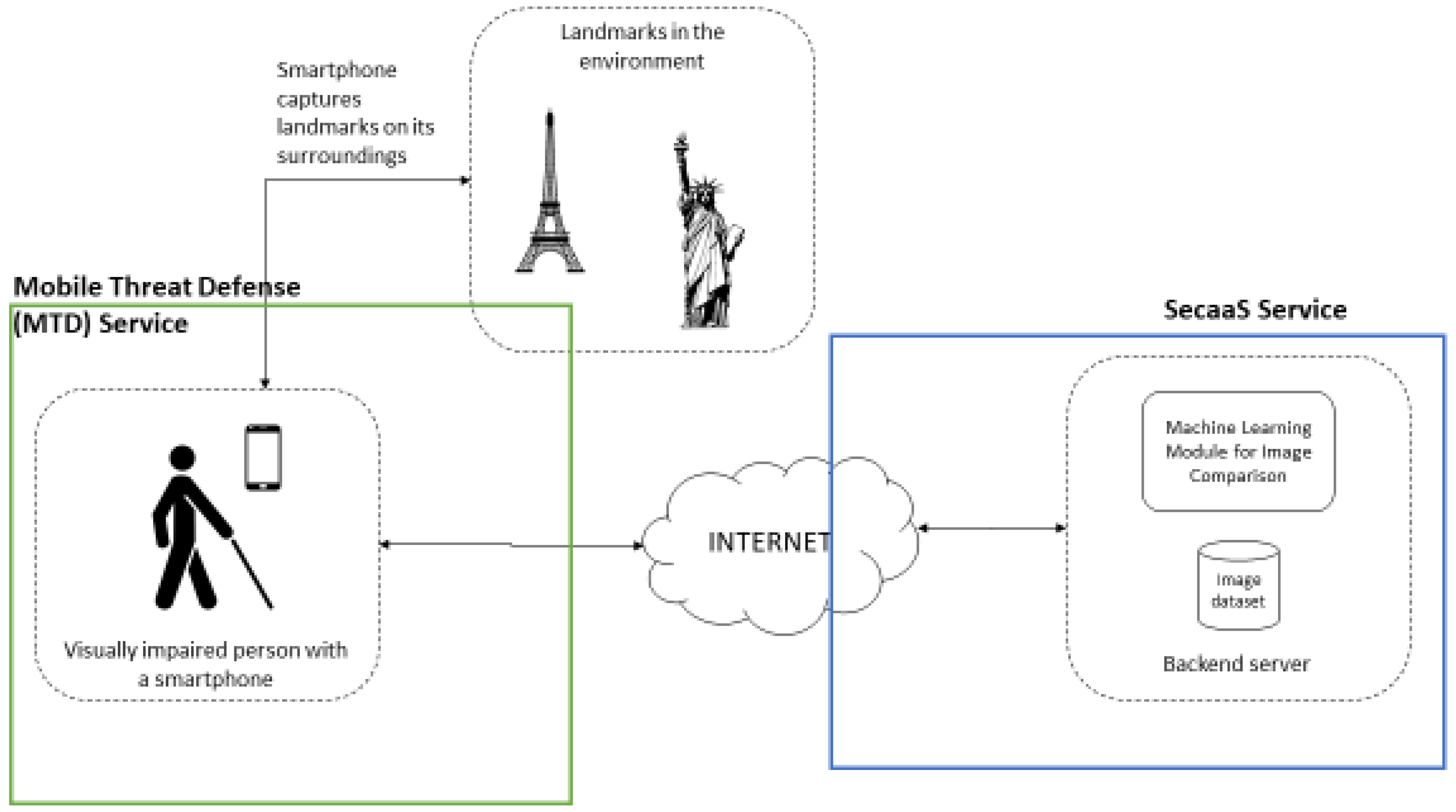

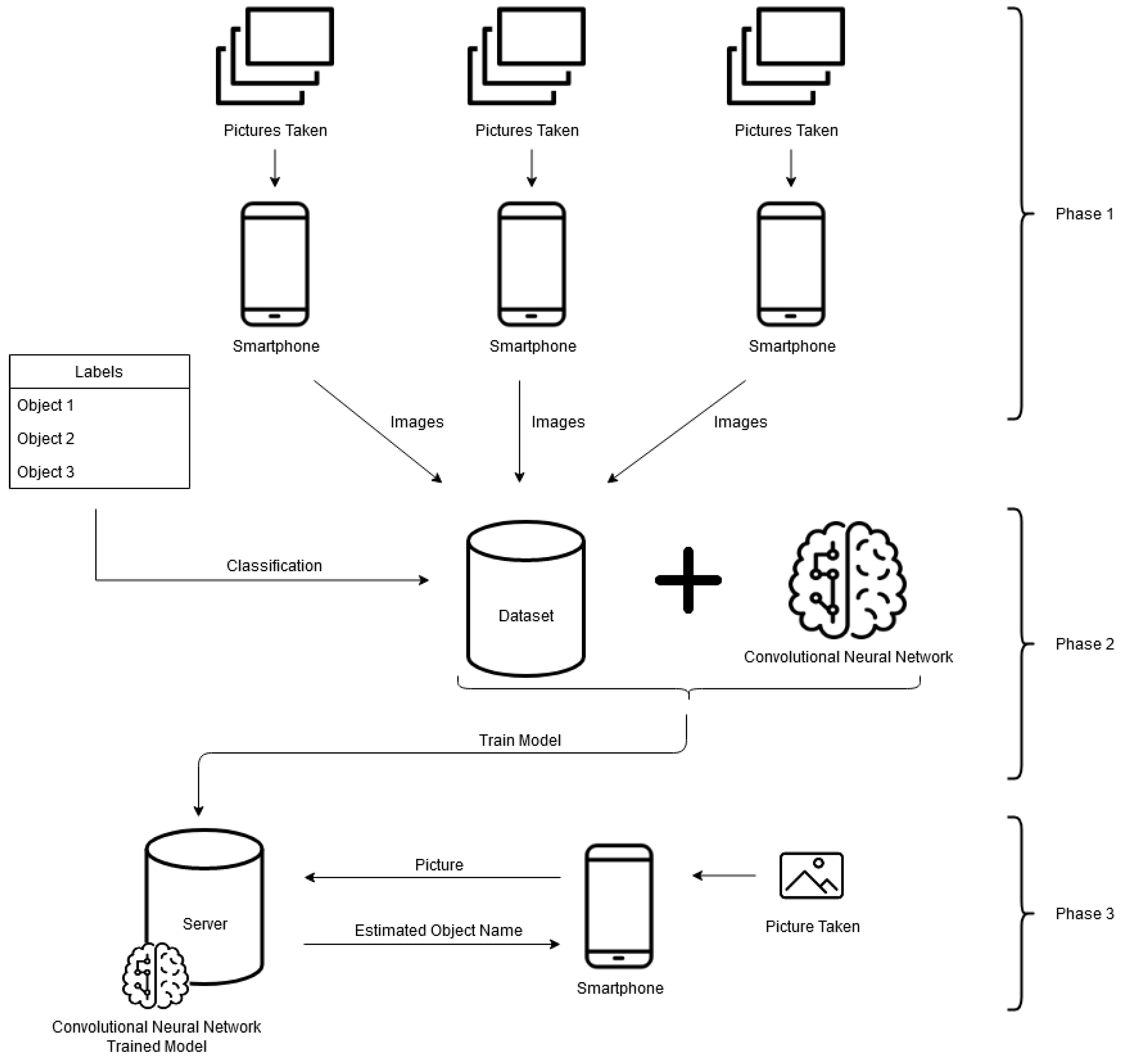

4. Proposed OPIL Framework

4.1. Overview and Architecture

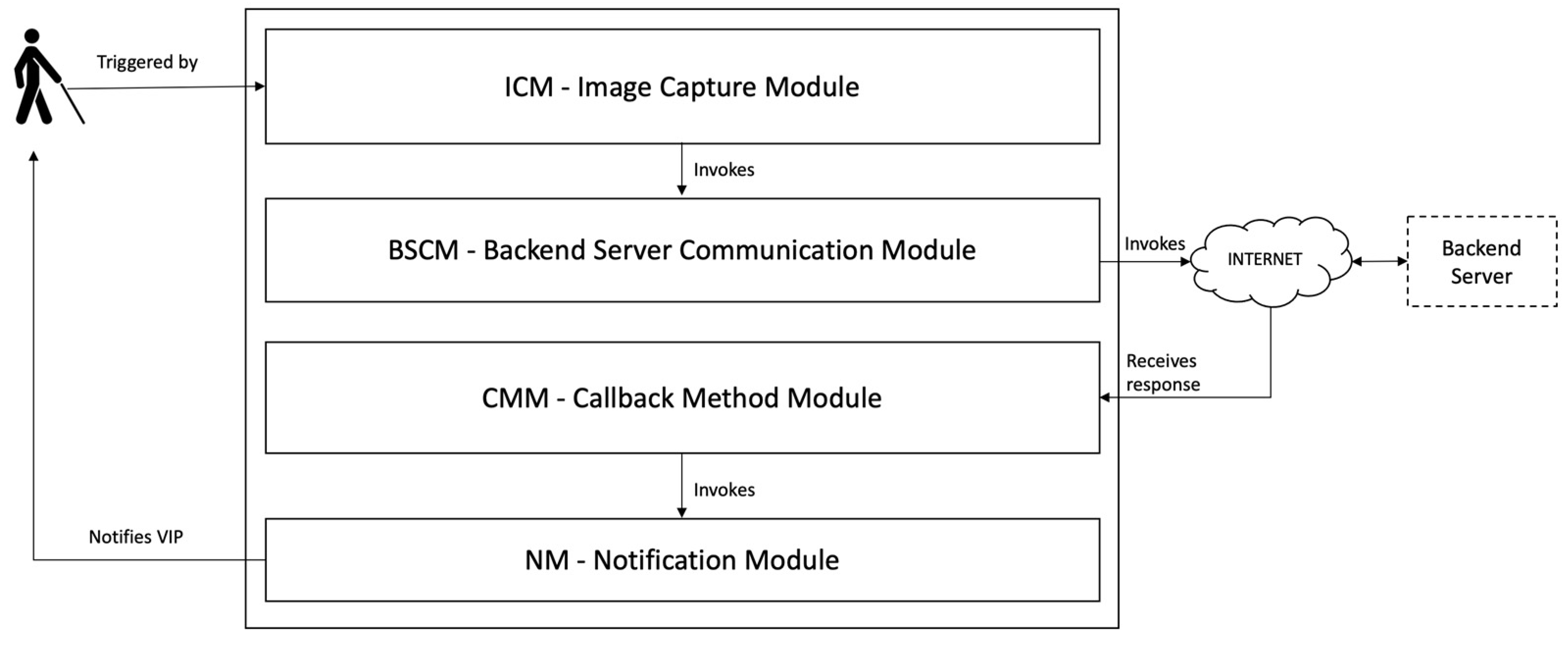

4.2. Components

4.2.1. Mobile Application

4.2.2. Backend Server and Proposed Algorithm

4.3. Framework Security Aspects

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Giduthuri, V.K. Sustainable Urban Mobility: Challenges, Initiatives and Planning. Curr. Urban Stud. 2015, 3, 261–265.

- Bezbradica, M.; Ruskin, H. Understanding Urban Mobility and Pedestrian Movement. In Smart Urban Development; IntechOpen: London, UK, 2019.

- Esztergár-Kiss, D.; Mátrai, T.; Aba, A. MaaS framework realization as a pilot demonstration in Budapest. In Proceedings of the 2021 7th International Conference on Models and Technologies for Intelligent Transportation Systems (MT-ITS), Heraklion, Greece, 16–17 June 2021; pp. 1–5.

- Riazi, A.; Riazi, F.; Yoosfi, R.; Bahmeei, F. Outdoor difficulties experienced by a group of visually impaired Iranian people. J. Curr. Ophthalmol. 2016, 28, 85–90.

- Lakde, C.K.; Prasad, D.P.S. Review Paper on Navigation System for Visually Impaired People. Int. J. Adv. Res. Comput. Commun. Eng. 2015, 4, 166–168.

- WHO. Blindness and Visual Impairment. Available online: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 29 July 2022).

- ANACOM. ACAPO—Associação dos Cegos e Amblíopes de Portugal. Available online: https://www.anacom.pt/render.jsp?categoryId=36666 (accessed on 29 July 2022).

- ACAPO. Deficiência Visual. Available online: http://www.acapo.pt/deficiencia-visual/perguntas-e-respostas/deficiencia-visual#quantas-pessoas-com-deficiencia-visual-existem-em-portugal-202 (accessed on 29 July 2022).

- Brito, D.; Viana, T.; Sousa, D.; Lourenço, A.; Paiva, S. A mobile solution to help visually impaired people in public transports and in pedestrian walks. Int. J. Sustain. Dev. Plan. 2018, 13, 281–293.

- Paiva, S.; Gupta, N. Technologies and Systems to Improve Mobility of Visually Impaired People: A State of the Art. In EAI/Springer Innovations in Communication and Computing; Springer: Berlin/Heidelberg, Germany, 2020; pp. 105–123.

- Heiniz, P.; Krempels, K.H.; Terwelp, C.; Wuller, S. Landmark-based navigation in complex buildings. In Proceedings of the 2012 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sydney, NSW, Australia, 13–15 November 2012.

- Giffinger, R.; Fertner, C.; Kramar, H.; Pichler-Milanovic, N.Y.; Meijers, E. Smart Cities Ranking of European Medium-Sized Cities; Centre of Regional Science, Universidad Tecnológica de Viena: Vienna, Austria, 2007.

- Arce-Ruiz, R.; Baucells, N.; Moreno Alonso, C. Smart Mobility in Smart Cities. In Proceedings of the XII Congreso de Ingeniería del Transporte (CIT 2016), Valencia, Spain, 7–9 June 2016.

- Van Audenhove, F.; Dauby, L.; Korniichuk, O.; Poubaix, J. Future of Urban Mobility 2.0. Arthur D. Little; Future Lab. International Association for Public Transport (UITP): Brussels, Belgium, 2014.

- Neirotti, P. Current trends in Smart City initiatives: Some stylised facts. Cities 2012, 38, 25–36.

- Manville, C.; Cochrane, G.; Cave, J.; Millard, J.; Pederson, J.; Thaarup, R.; Liebe, A.; Wissner, W.M.; Massink, W.R.; Kotterink, B. Mapping Smart Cities in the EU; Department of Economic and Scientific Policy: Luxembourg, 2014.

- Carneiro, D.; Amaral, A.; Carvalho, M.; Barreto, L. An Anthropocentric and Enhanced Predictive Approach to Smart City Management. Smart Cities 2021, 4, 1366–1390.

- Groth, S. Multimodal divide: Reproduction of transport poverty in smart mobility trends. Transp. Res. Part A Policy Pract. 2019, 125, 56–71.

- Paiva, S.; Ahad, M.A.; Tripathi, G.; Feroz, N.; Casalino, G. Enabling Technologies for Urban Smart Mobility: Recent Trends, Opportunities and Challenges. Sensors 2021, 21, 2143.

- Barreto, L.; Amaral, A.; Baltazar, S. Mobility in the Era of Digitalization: Thinking Mobility as a Service (MaaS). In Intelligent Systems: Theory, Research and Innovation in Applications; Springer: Cham, Switzerland, 2021; pp. 275–293.

- Turoń, K.; Czech, P. The Concept of Rules and Recommendations for Riding Shared and Private E-Scooters in the Road Network in the Light of Global Problems. In Modern Traffic Engineering in the System Approach to the Development of Traffic Networks; Macioszek, E., Sierpiński, G., Eds.; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2020; Volume 1083.

- Talebkhah, M.; Sali, A.; Marjani, M.; Gordan, M.; Hashim, S.J.; Rokhani, F.Z. IoT and Big Data Applications in Smart Cities: Recent Advances, Challenges, and Critical Issues. IEEE Access 2021, 9, 55465–55484.

- Oliveira, T.A.; Oliver, M.; Ramalhinho, H. Challenges for Connecting Citizens and Smart Cities: ICT, E-Governance and Blockchain. Sustainability 2020, 12, 2926.

- Muller, M.; Park, S.; Lee, R.; Fusco, B.; Correia, G.H.d.A. Review of Whole System Simulation Methodologies for Assessing Mobility as a Service (MaaS) as an Enabler for Sustainable Urban Mobility. Sustainability 2021, 13, 5591.

- Gonçalves, L.; Silva, J.P.; Baltazar, S.; Barreto, L.; Amaral, A. Challenges and implications of Mobility as a Service (MaaS). In Implications of Mobility as a Service (MaaS) in Urban and Rural Environments: Emerging Research and Opportunities; IGI Global: Hershey, PA, USA, 2020; pp. 1–20.

- Amaral, A.; Barreto, L.; Baltazar, S.; Pereira, T. Mobility as a Service (MaaS): Past and Present Challenges and Future Opportunities. In Conference on Sustainable Urban Mobility; Springer: Cham, Switzerland, 2020; pp. 220–229.

- Barreto, L.; Amaral, A.; Baltazar, S. Urban mobility digitalization: Towards mobility as a service (MaaS). In Proceedings of the 2018 International Conference on Intelligent Systems (IS), Funchal, Portugal, 25–27 September 2018; pp. 850–855.

- Al-Rahamneh, A.; Javier Astrain, J.; Villadangos, J.; Klaina, H.; Picallo Guembe, I.; Lopez-Iturri, P.; Falcone, F. Enabling Customizable Services for Multimodal Smart Mobility With City-Platforms. IEEE Access 2021, 9, 41628–41646.

- Turoń, K. Open Innovation Business Model as an Opportunity to Enhance the Development of Sustainable Shared Mobility Industry. J. Open Innov. Technol. Mark. Complex. 2022, 8, 37.

- Abbasi, S.; Ko, J.; Min, J. Measuring destination-based segregation through mobility patterns: Application of transport card data. J. Transp. Geogr. 2021, 92, 103025.

- Khan, S.; Nazir, S.; Khan, H.U. Analysis of Navigation Assistants for Blind and Visually Impaired People: A Systematic Review. IEEE Access 2021, 9, 26712–26734.

- Chang, W.-J.; Chen, L.-B.; Chen, M.C.; Su, J.P.; Sie, C.Y.; Yang, C.H. Design and Implementation of an Intelligent Assistive System for Visually Impaired People for Aerial Obstacle Avoidance and Fall Detection. IEEE Sens. J. 2020, 20, 10199–10210.

- El-taher, F.E.; Taha, A.; Courtney, J.; Mckeever, S. A Systematic Review of Urban Navigation Systems for Visually Impaired People. Sensors 2021, 21, 3103.

- Dakopoulos, D.; Bourbakis, N.G. Wearable obstacle avoidance electronic travel aids for blind: A survey. IEEE Trans. Syst. Man Cybern. Part C 2010, 40, 25–35.

- Renier, L.; De Volder, A.G. Vision substitution and depth perception: Early blind subjects experience visual perspective through their ears. Disabil. Rehabil. Assist. Technol. 2010, 5, 175–183.

- Tapu, R.; Mocanu, B.; Tapu, E. A survey on wearable devices used to assist the visual impaired user navigation in outdoor environments. In Proceedings of the 2014 11th International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 14–15 November 2014; pp. 1–4.

- Jacobson, D.; Kitchin, R.; Golledge, R.; Blades, M. Learning a complex urban route without sight: Comparing naturalistic versus laboratory measures. In Proceedings of the Mind III Annual Conference of the Cognitive Science Society, Madison, WI, USA, 1–4 August 1998; pp. 1–20.

- Kaminski, L.; Kowalik, R.; Lubniewski, Z.; Stepnowski, A. ‘VOICE MAPS’—Portable, dedicated GIS for supporting the street navigation and self-dependent movement of the blind. In Proceedings of the 2010 2nd International Conference on Information Technology (ICIT 2010), Gdansk, Poland, 28–30 June 2010; pp. 153–156.

- Ueda, T.A.; De Araújo, L.V. Virtual Walking Stick: Mobile Application to Assist Visually Impaired People to Walking Safely; Lecture Notes in Computer Science (Including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2014; Volume 8515 LNCS, pp. 803–813.

- Minhas, R.A.; Javed, A. X-EYE: A Bio-smart Secure Navigation Framework for Visually Impaired People. In Proceedings of the 2018 International Conference on Signal Processing and Information Security (ICSPIS), Dubai, United Arab Emirates, 7–8 November 2018; pp. 2018–2021.

- Kaiser, E.B.; Lawo, M. Wearable navigation system for the visually impaired and blind people. In Proceedings of the 2012 IEEE/ACIS 11th International Conference on Computer and Information Science, Shanghai, China, 30 May–1 June 2012; pp. 230–233.

- Zeb, A.; Ullah, S.; Rabbi, I. Indoor vision-based auditory assistance for blind people in semi controlled environments. In Proceedings of the 2014 4th International Conference on Image Processing Theory, Tools and Applications (IPTA), Paris, France, 14–17 October 2014; pp. 1–6.

- Fukasawa, A.J.; Magatani, K. A navigation system for the visually impaired an intelligent white cane. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 4760–4763.

- Bhardwaj, P.; Singh, J. Design and Development of Secure Navigation System for Visually Impaired People. Int. J. Comput. Sci. Inf. Technol. 2013, 5, 159–164.

- Treuillet, S.; Royer, E. Outdoor/indoor vision-based localization for blind pedestrian navigation assistance. Int. J. Image Graph. 2010, 10, 481–496.

- Serrão, M.; Rodrigues, J.M.F.; Rodrigues, J.I.; Du Buf, J.M.H. Indoor localization and navigation for blind persons using visual landmarks and a GIS. Procedia Comput. Sci. 2012, 14, 65–73.

- Shadi, S.; Hadi, S.; Nazari, M.A.; Hardt, W. Outdoor navigation for visually impaired based on deep learning. CEUR Workshop Proc. 2019, 2514, 397–406.

- Chen, H.E.; Lin, Y.Y.; Chen, C.H.; Wang, I.F. BlindNavi: A Navigation App for the Visually Impaired Smartphone User. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 19–24.

- Idrees, A.; Iqbal, Z.; Ishfaq, M. An Efficient Indoor Navigation Technique to Find Optimal Route for Blinds Using QR Codes. In Proceedings of the 2015 IEEE 10th Conference on Industrial Electronics and Applications (ICIEA), Auckland, New Zealand, 15–17 June 2015; pp. 690–695.

- Zhang, X.; Li, B.; Joseph, S.L.; Muñoz, J.P.; Yi, C. A SLAM based Semantic Indoor Navigation System for Visually Impaired Users. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 1458–1463.

- Yelamarthi, K.; Haas, D.; Nielsen, D.; Mothersell, S. RFID and GPS integrated navigation system for the visually impaired. In Proceedings of the 2010 53rd IEEE International Midwest Symposium on Circuits and Systems, Seattle, WA, USA, 1–4 August 2010; pp. 1149–1152.

- Tanpure, R.A. Advanced Voice Based Blind Stick with Voice Announcement of Obstacle Distance. Int. J. Innov. Res. Sci. Technol. 2018, 4, 85–87.

- Salahuddin, M.A.; Al-Fuqaha, A.; Gavirangaswamy, V.B.; Ljucovic, M.; Anan, M. An efficient artificial landmark-based system for indoor and outdoor identification and localization. In Proceedings of the 2011 7th International Wireless Communications and Mobile Computing Conference, Istanbul, Turkey, 4–8 July 2011; pp. 583–588.

- Basiri, A.; Amirian, P.; Winstanley, A. The use of quick response (QR) codes in landmark-based pedestrian navigation. Int. J. Navig. Obs. 2014, 2014, 897103.

- Nilwong, S.; Hossain, D.; Shin-Ichiro, K.; Capi, G. Outdoor Landmark Detection for Real-World Localization using Faster R-CNN. In Proceedings of the 6th International Conference on Control, Mechatronics and Automation, Tokyo, Japan, 12–14 October 2018; pp. 165–169.

- Maeyama, S.; Ohya, A.; Sciences, I.; Tsukuba, T. Outdoor Navigation of a Mobile Robot Using Natural Landmarks. In Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robot and Systems. Innovative Robotics for Real-World Applications. IROS ’97, Grenoble, France, 11 September 1997; Volume 3, pp. V17–V18.

- Raubal, M.; Winter, S. Enriching Wayfinding Instructions with Local Landmarks; Lecture Notes in Computer Science (Including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2002; Volume 2478, pp. 243–259.

- Yeh, T.; Tollmar, K.; Darrell, T. Searching the web with mobile images for location recognition. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 2, p. II.

- Li, F.; Kosecka, J. Probabilistic location recognition using reduced feature set. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation ICRA 2006, Orlando, FL, USA, 15–19 May 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 3405–3410.

- Schindler, G.; Brown, M.; Szeliski, R. City-scale location recognition. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 1–7.

- Gallagher, A.; Joshi, D.; Yu, J.; Luo, J. Geo-location inference from image content and user tags. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 55–62.

- Schroth, G.; Huitl, R.; Chen, D.; Abu-Alqumsan, M.; Al-Nuaimi, A.; Steinbach, E. Mobile visual location recognition. IEEE Signal Process. Mag. 2011, 28, 77–89.

- Cao, S.; Snavely, N. Graph-based discriminative learning for location recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 700–707.

- Wan, J.; Wang, D.; Hoi, S.C.H.; Wu, P.; Zhu, J.; Zhang, Y.; Li, J. Deep learning for content-based image retrieval: A comprehensive study. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 157–166.

- Liu, P.; Yang, P.; Wang, C.; Huang, K.; Tan, T. A semi-supervised method for surveillance-based visual location recognition. IEEE Trans. Cybern. 2016, 47, 3719–3732.

- Zhou, W.; Li, H.; Tian, Q. Recent advance in content-based image retrieval: A literature survey. arXiv 2017, arXiv:1706.06064.

- Tzelepi, M.; Tefas, A. Deep convolutional learning for content based image retrieval. Neurocomputing 2018, 275, 2467–2478.

- Saritha, R.R.; Paul, V.; Kumar, P.G. Content based image retrieval using deep learning process. Clust. Comput. 2019, 22, 4187–4200.

- Bhagwat, R.; Abdolahnejad, M.; Moocarme, M. Applied Deep Learning with Keras: Solve Complex Real-Life Problems with the Simplicity of Keras; Packt Publishing Ltd.: Birmingham, UK, 2019.

- Lima, R.; da Cruz, A.M.R.; Ribeiro, J. Artificial Intelligence Applied to Software Testing: A literature review. In Proceedings of the 2020 15th Iberian Conference on Information Systems and Technologies (CISTI), Seville, Spain, 24–27 June 2020.

- Lynch, K. The image of the environment. Image City 1960, 11, 1–13.

- Pfleeger, C.; Shari, L. Security in Computing, 4th ed.; Prentice Hall PTR: Hoboken, NJ, USA, 2007.

- Khan, J.; Abbas, H.; Al-Muhtadi, J. Survey on Mobile User’s Data Privacy Threats and Defense Mechanisms. Procedia Comput. Sci. 2015, 56, 376–383.

- Becher, M.; Freiling, F.C.; Hoffmann, J.; Holz, T.; Uellenbeck, S.; Wolf, C. Mobile Security Catching Up? Revealing the Nuts and Bolts of the Security of Mobile Devices. In Proceedings of the 2011 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 22–25 May 2011; pp. 96–111.

- Stallings, W. Cryptography and Network Security: Principles and Practice, 7th ed.; Pearson: London, UK, 2020.

- Varadharajan, V.; Tupakula, U. Security as a Service Model for Cloud Environment. IEEE Trans. Netw. Serv. Manag. 2014, 11, 60–75.

- Paiva, S.; Ahad, M.A.; Zafar, S.; Tripathi, G.; Khalique, A.; Hussain, I. Privacy and security challenges in smart and sustainable mobility. SN Appl. Sci. 2020, 2, 1175.

| Subcategory Name | Description | Services |
|---|---|---|
| Electronic Travel Aids (ETAs) | Collected and sensed data about surrounding areas are sent to the user or a remote server via sensors, laser, or sonar. | Surrounding obstacles are identified. Texture and gaps on the surface can be provided. The distance between the user and an obstacle is determined. Great locations are identified. Self-orientation throughout an area is improved with obstacle avoidance information. |
| Electronic Orientation Aids (EOAs) | Guidelines and instructions about a path are given to the user through a device. | The best path for a particular user is determined. Clear direction and path signs are given by calculating the user’s position and tracking the path. |
| Position Locator Devices (PLDs) | The user’s location is identified, for example, using the global positioning system (GPS). | Guidance from one point to another point is given. |

| Reference | Challenge | Approach |
|---|---|---|
| [38] | Outdoor navigation | GIS, GPS receiver, magnetic compass, and gyrocompass |
| [39] | Outdoor navigation | Smartphone camera, accelerometer |
| [47] | Outdoor navigation | Deep learning, camera vision-based system |
| [48] | Outdoor navigation | Voice feedback, multi-sensory clues, microlocation technology |
| [44] | Obstacle detection and navigation | Infrared sensor in a cap for head protection |
| [40] | Obstacle detection | Wearable camera connected to the smartphone |
| [41] | Obstacle detection | Ultrasonic sensors, vibrator, and buzzer |
| [42] | Indoor navigation | Webcam, Dijkstra algorithm, computer vision |
| [50] | Indoor navigation | SLAM, inertial sensors, feedback devices |
| [43] | Indoor navigation | Walking stick, color sensor, colored floor, RFID tags |
| [49] | Indoor navigation | QR codes |
| [46] | Indoor navigation | GIS, visual markers |
| [45] | Indoor and outdoor navigation | Camera, computer vision |
| [51] | Indoor and outdoor navigation | RFID, GPS, ultrasonic and infrared sensors |
| [52] | Indoor and outdoor navigation | Ultrasonic sensor |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Paiva, S.; Amaral, A.; Gonçalves, J.; Lima, R.; Barreto, L. Image Recognition-Based Architecture to Enhance Inclusive Mobility of Visually Impaired People in Smart and Urban Environments. Sustainability 2022, 14, 11567. https://doi.org/10.3390/su141811567
