Abstract

With the continued exploration and development of intelligent manufacturing, the concept of digital twins has been proposed and applied. Given the complexity and growing intelligence of virtual workshop systems, real workshops can be linked with virtual workshops through AR under a digital twin structure, which allows users to interact with virtual information and perceive it superimposed on the real world with strong immersion. However, the three-dimensionality of virtual workshops and interaction with complex workshop information can be challenging for users. Because input bandwidth is limited and the mode of interaction is nontraditional, a more natural interaction technique for virtual workshops is required. To solve these problems, this paper presents a technical framework for 3D eye movement interaction applied to a virtual workshop. An eye movement interaction technique, oriented to both implicit and explicit interaction, is developed by establishing behavior recognition and interaction intention understanding. An eye movement experiment verifies that the behavior recognition model achieves an accuracy above 90% and better recognition performance than the compared models. For interaction intention understanding, the best-performing feature vector group is selected to establish a model, and its feasibility and effectiveness are verified. Finally, the feasibility of the framework is verified through the development of an application example.

1. Introduction

Intelligent manufacturing is the key to innovation and development; it is the main route for the transformation and upgrading of China’s manufacturing industry and the cardinal direction for accelerating the construction of manufacturing. The introduction of Industry 5.0 will revolutionize manufacturing systems and bring continuous integration of a new generation of information technology and manufacturing [1]. Smart factories and intelligent workshops are established to meet individual needs. Virtual workshop systems create 3D digital models of the real workshop that are driven by the production data of the real workshop to achieve synchronization. As AR technology, which acts as the terminal of virtual workshop systems, matures, the distance between virtual and real workshops is shortened through visualization and monitoring of the actual production workshop, and users can interact with virtual information through AR. However, in the 3D scene of a virtual workshop, users are constrained by traditional human–computer interaction methods such as the mouse, keyboard and other auxiliary hardware devices, which are unnatural and ill-suited to the scene, as well as by the limitations and privacy concerns of gesture and voice interaction, which are currently well developed. In addition, the concept of “user-centered design” shows that human–computer interaction technology is developing towards the goal of being people-oriented, natural and efficient. Therefore, it is necessary to create attractive and intuitive methods for users to manage and interact with a large amount of information, and to pursue more natural and efficient input interaction techniques.

The eyes are an important organ through which human beings obtain information, and their movement is closely related to a person’s emotional state, attention target and task intention. With eye trackers and other devices with eye tracking functions, a variety of eye movement indicators can be collected so that people’s most subjective views on what they see can be analyzed. With the growing application of eye tracking research in human–computer interaction, eye movement interaction has become an important research direction of intelligent human–computer interaction, because eye movement can be used not only as a channel for receiving information but also as an input channel for human–computer interaction, i.e., for bidirectional transmission. Hyrskykari et al. divided eye movement interaction into explicit interaction and implicit interaction [2]. Explicit interaction, also known as imperative interaction, requires users to deliberately perform specific eye movements as input to complete particular interaction tasks. Implicit interaction means that devices actively collect and analyze users’ unconscious or subconscious behaviors and infer their interaction intentions from context so as to provide proactive services. Therefore, this paper studies the 3D eye movement interaction technique from two aspects: explicit eye movement interaction (interaction with eye movement behavior as input) and implicit eye movement interaction (interaction based on intention understanding). The key to the research is the recognition of the user’s eye movement behavior and the understanding of interaction intention.

In recent years, the powerful representation learning ability of deep learning has injected strong vitality into behavior recognition research. A deep learning model adjusts its own parameters on training datasets to obtain an optimal model. In this paper, CNN and Bi-LSTM are used for eye movement behavior recognition: CNN is used to extract the characteristics of eye movement velocity, and Bi-LSTM has good processing ability for time series. Intention understanding, by contrast, mostly belongs to the field of pattern recognition, where intention recognition models are established with machine learning algorithms, such as HMM and SVM, and with other approaches, such as fuzzy theory and neural networks. HMM is chosen as the basic model for understanding the user’s interaction intention in this paper, since it can describe time-based input signals and respond to changes in user status and scene status.

The rest of this paper is organized as follows: related research is introduced in Section 2; the overall framework of the proposed 3D eye movement interaction technique is described in Section 3; the verification experiments for the algorithm models in the framework and the application case are presented in Section 4; the research is summarized and the contributions and future research directions are discussed in Section 5.

2. Related Research

2.1. Related Research on Recognition of Eye Movement Behavior

Eye movements can be divided into conjugate eye movements and non-conjugate eye movements according to whether the two eyeballs rotate in the same direction. Conjugate eye movements consist of fixation, saccade, smooth pursuit and vestibulo-ocular reflexes. Among these, a saccade is a rapid eye movement between two gazing areas, and fixation is the process of gazing at a particular position [3]. Non-conjugate eye movements, also called symmetric eye movements, can be divided into convergent and divergent movements [4]. Eye movement recognition is one of the key technologies in an eye movement interaction system, and its task is to determine what kind of eye movement the user is performing at a given time. Eye movement recognition can be used to judge users’ consciousness at an objective level, so as to evaluate their state, such as fatigue or concentration, as well as the rationality and comprehensibility of what they observe. Jozsef Katona, for example, used eye tracking technology to identify eye movements and analyze the cognitive load involved in performing a complex cognitive task such as programming, in order to assess the readability and understandability of code. To further improve the efficiency of the algorithm and reduce cognitive load, he also collected eye movement data while subjects read a flowchart and a Nassi–Shneiderman diagram [5,6,7].

In current research on eye movement recognition algorithms, Andersson R et al. evaluated ten eye movement classification algorithms on data from an SMI HiSpeed 1250 system: CDT, EM, IDT, IKF, IMST, IHMM, IVT, NH, BIT and LNS [8]. A variety of algorithms have thus been proposed for eye movement behavior detection, and they have been classified and evaluated. Although these algorithms can successfully detect some eye movement behaviors, they are still not reliable enough and are hard to use.

With the development of machine learning and artificial intelligence, deep learning models have already been applied to human behavior recognition, including the application of eye movement recognition. For example, Wang et al. presented an algorithm based on a Wavelet Transform-Support Vector Machine, which was used to classify EOG and strain signals according to different features, and improved the accuracy of eye movement recognition in the five directions of straight, up, down, left and right. The average recognition accuracy of this algorithm is up to 96.3% [9]. Wentao Dong et al. proposed an intelligent data fusion algorithm based on ANFIS for eye movement classification and eye movement recognition, and the recognition rate of the algorithm reached 90% through experiments [10]. Bing Cheng et al. proposed an eye movement recognition algorithm based on CNN that can recognize all eight gaze orientation categories of eye movement images [11]. In addition, for eight types of model classifiers, the highest accuracy of the single convolution layer used in CNN was 99.6875%. After adding another convolution layer, the highest accuracy can rise to 99.7062%. Eye movement behavior recognition based on deep learning emphasizes data learning and knowledge reasoning. The neural network structure is used to extract features from input data layer by layer. The whole process does not need expert knowledge or manual feature extraction, and the structure and parameters of the network model can be changed to meet different recognition needs. Therefore, compared with other methods, this method has higher recognition accuracy and better robustness, generalization and universality. This paper will apply these strategies to eye movement behavior recognition by studying CNN and Bi-LSTM models.

2.2. Related Research on Intention Understanding

User intention can be understood as the mental activity by which users want the interactive system to reach a certain state within a short time during interaction. It depends on the user’s current mental state, cognitive situation and environment. With the rapid development of human–computer interaction technology, more natural and efficient interaction is continually being explored to reduce users’ memory load and interaction load during the interactive process. It is necessary to constantly balance cognitive ability between human and computer, and the most critical part is to strengthen the computer’s cognitive ability by obtaining, understanding and analyzing the user’s interaction intention from the computer’s point of view. The user’s intention is difficult to measure directly, but the relationship between the user’s mental state and behavior means that implicit emotions or intentions can be inferred from the user’s behavior [12]. Some scholars divide intention understanding into three categories: speech information-based, text content-based and user behavior-based [13].

Among these, intention understanding based on user behavior generalizes most readily across research settings. Eye tracking is one of the methods used to infer behavior-oriented intention. After measurement, user intention prediction can be roughly divided into rule-based methods and statistical learning (or machine learning)-based methods. The former focuses on rule design, while the latter focuses on models and calculations, such as the Bayesian decision model, hidden Markov model and neural network model [14,15].

Tatler et al. showed for the first time that, under the same visual stimulus, gaze patterns differ greatly according to the instructions given; that is, gaze patterns can be used to reveal the tasks of observers [16]. Young Min Jang et al. proposed a method of human implicit intention recognition based on eye movement patterns and pupil size changes, which classified human implicit intention during visual stimulation into informational and navigational intention [17]. This method used marked features of the eyes as the input for classifying people’s intrinsic intention, and its recognition performance was verified by experiments. Songpo Li et al. introduced a nonverbal communication framework based on eye gaze for an assistive robot that can support the daily living abilities of the elderly and the disabled [18]. In this framework, eye movement is actively tracked and analyzed to infer the user’s intention in daily life, and the inferred intention is then used to command the assistive computer to give appropriate assistance. The test results show that people’s subtle gaze at visual objects can be effectively used for interpersonal communication, and that intentional communication based on fixation is easy to learn and use. Koochaki et al. proposed a framework that uses the spatial and temporal patterns of eye movement and deep learning technology to predict the user’s intention. In this framework, the transition sequence between ROIs is found by using HMMs to identify the temporal patterns of gaze, and transfer learning is used to recognize objects of interest among the pictures on display. The user’s intention at both the early and final stages of the task can then be predicted. The experimental results show that the average classification accuracy of this framework is 97.24% [19]. Lei Shi et al. presented a fixation-based visual intention algorithm named GazeEMD. This algorithm determines the object that a person is looking at by calculating the similarity between the hypothetical fixation points on the object and the actual fixation points obtained by mobile eye-tracking glasses, and it has been shown to perform well [20]. Antoniou et al. proposed a wheelchair control and navigation system that captures EEG signals during human eye movement by using a brain–computer interface. These signals are classified by an RF classification algorithm for intention prediction. The system performs well, with a classification accuracy of about 85.39% [21].

To sum up, researchers at home and abroad have done a great deal of work on intention understanding based on the visual characteristics of the eye. This work uses not only data that can be directly collected by an eye tracker, such as gaze direction and pupil size, but also visual characteristic variables such as eye movement pattern, pupil diameter, gaze duration and fixation times. At present, the methods of intention understanding mostly belong to the field of pattern recognition; related research and applications build intention recognition models with machine learning, fuzzy theory and neural networks. Previous work has provided ideas for intention understanding in eye movement interaction. The hidden Markov model, which is compact, is chosen as the basic model for intention understanding in this paper. This model can describe time-based input signals and cope with changes in users and scenes. However, there are still challenges in constructing an intention understanding model for 3D implicit eye movement interaction in virtual workshops, such as the selection of model feature vectors, the integration of multiple models and recognition performance.

3. Technical Framework of 3D Eye Movement Interaction

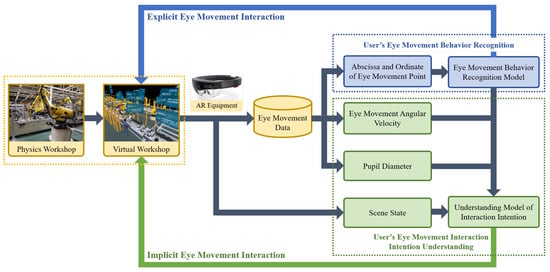

The 3D eye movement interaction technique in this research means that users actively interact with the system through eye movement. Because eye movement is closely related to human intentions [22], the technique also covers system feedback in response to implicit eye movement signals. By designing an eye movement behavior recognition algorithm and an interaction intention understanding algorithm, the user’s eye movement behavior and interaction intention can be recognized from real-time eye movement data. These are then applied to explicit eye movement interaction and implicit eye movement interaction, respectively. Figure 1 illustrates the overall framework of the 3D eye movement interaction technique.

Figure 1. The overall framework of 3D eye movement interaction technology established in this study.

3.1. Eye Movement Behavior Recognition

Accurate recognition of eye movement behavior is the premise of, and the key to, studying explicit interaction in the 3D eye movement interaction technique. Targeting fixation and saccade, a behavior recognition model based on a CNN and Bi-LSTM hybrid neural network is established to recognize eye movement behavior from real-time eye movement data.

The framework of the CNN and Bi-LSTM hybrid neural network proposed in this paper is based on U’n’Eye, as proposed by Marie E. Bellet et al. [23]. This convolutional neural network is general-purpose and learns its own features, so it can extract features from eye movement data. CNN can extract local feature information from serialized eye movement data well and has the advantage of a short training time, but it cannot connect context information well enough. Moreover, because max pooling is used in CNN’s pooling layers, only the maximum feature is retained and the other features are discarded, which leads to the loss of many features and affects recognition and classification results.

Therefore, given the time-series characteristics of eye movement data, Bi-LSTM is added in this paper to extract temporal features of the eye movement data series at a deeper level. Although Bi-LSTM is inferior to CNN in extracting local features, it can better preserve the historical information of the data and effectively extract the global features of the series. The resulting CNN and Bi-LSTM hybrid neural network model is used as the eye movement behavior recognition algorithm to recognize the user’s fixations and saccades.

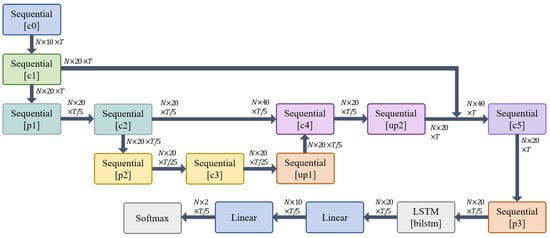

The input dimension of the model is $N \times T \times 2$, where N is the batch size, T is the number of time points and 2 is the dimension of the eye movement velocity, calculated as the first-order difference of the eye movement point coordinates. CNN can effectively extract the spatial characteristics of the data, and Bi-LSTM can effectively capture context information and extract the temporal properties of the data. Therefore, the feature vector output by CNN is used as the input of Bi-LSTM, and a classifier performs feature classification after the fully connected layer. The CNN and Bi-LSTM hybrid neural network thus integrates the advantages of both components. Its structure is shown in Figure 2.

Figure 2. CNN and Bi-LSTM hybrid neural network model structure.
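The velocity input described above can be illustrated with a minimal NumPy sketch. The function name `gaze_to_velocity`, the toy coordinates and the scaling by the sampling rate are assumptions made for illustration; the paper states only that velocity is the first-order difference of the eye movement point coordinates (the 50 Hz value is the sampling rate reported in Section 4.1).

```python
import numpy as np

def gaze_to_velocity(xy, sampling_rate_hz):
    """Convert gaze coordinates of shape (N, T+1, 2) into velocities of shape (N, T, 2).

    The first-order difference along the time axis is scaled by the sampling
    rate so that the result is expressed per second (an illustrative choice).
    """
    xy = np.asarray(xy, dtype=float)
    return np.diff(xy, axis=1) * sampling_rate_hz

# Two hypothetical recordings with 5 gaze samples each (N=2, T+1=5).
xy = np.array([
    [[0, 0], [1, 0], [2, 0], [2, 1], [2, 3]],
    [[5, 5], [5, 5], [5, 5], [6, 5], [6, 5]],
], dtype=float)

vel = gaze_to_velocity(xy, sampling_rate_hz=50.0)
print(vel.shape)  # (2, 4, 2): batch size N, time points T, 2 velocity components
```

Differencing shortens the time axis by one sample, so T + 1 coordinates yield T velocity vectors.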

In this hybrid model, CNN consists of six convolution layers and three pooling layers with a kernel size of 5. To accelerate convergence and reduce over-fitting, each convolution layer is followed by a rectified linear unit (ReLU) layer and a batch normalization (Batch Norm) layer. Bi-LSTM is used to capture contextual features: it is built from two LSTM layers that process the sequence in opposite directions and share the same input. Bi-LSTM is connected to the last pooling layer of CNN, so the eye movement sequence features learned by CNN are encoded in time order into feature vectors, which are finally passed to a Softmax classification layer.

The output dimension of the model is $N \times T \times 2$, where 2 is the number of categories, namely, fixation and saccade. The Softmax classifier outputs the predicted probability of each eye movement category at each time point, where k represents the number of categories and each class i has a corresponding output in the final layer. Therefore, when the eye movement velocity x and weights w are determined, the output of the model represents the sample-by-sample conditional probability of each category. The final prediction is the category that maximizes this conditional probability:

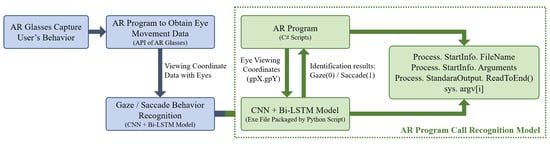
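The per-sample decision rule can be sketched as follows. This is an illustration under stated assumptions, not the authors’ implementation: the array `scores` stands in for the final-layer outputs of a trained network (its values are made up), and the label coding 0 = fixation, 1 = saccade follows the convention given in Section 4.1.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the class axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

# Hypothetical final-layer scores with shape (N=1, T=3, k=2).
scores = np.array([[[2.0, 0.0],
                    [0.5, 1.0],
                    [-1.0, 3.0]]])

probs = softmax(scores)           # conditional probability of each class per time point
labels = probs.argmax(axis=-1)    # class with the highest probability: 0 = fixation, 1 = saccade
print(labels)
```

Each time point receives its own probability vector, so a recording is labeled sample by sample rather than as a whole.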

After completing the fixation/saccade behavior recognition model based on the CNN and Bi-LSTM hybrid neural network, the application and realization process framework of the eye movement behavior recognition model is built with AR equipment and the Unity 3D development platform, as shown in Figure 3; the process is briefly described as follows:

1. The eye movement behavior of the user is acquired by the eye tracking module of the AR glasses in real time;

2. The AR program is developed in Unity 3D. The connection with the AR program is created through the API of the AR glasses, so that the collected eye movement data can be acquired in real time in the AR program;

3. The eye movement behavior is recognized by the CNN and Bi-LSTM hybrid neural network based on the eye movement data.

Figure 3. Application and realization process of eye movement recognition model.

3.2. Intention Understanding of Eye Movement Interaction

According to the “theory of mind”, human beings can express, predict and explain the explicit or implicit intentions expressed by others in a natural way. Therefore, in order to develop an effective nonverbal human–computer interaction system, it is important to understand user intention.

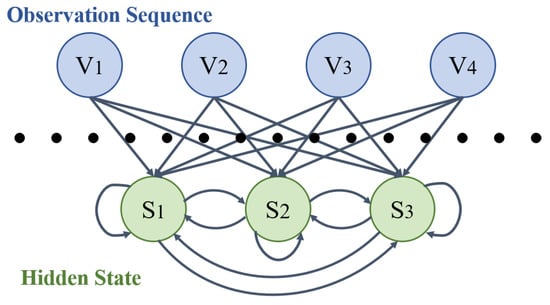

HMM is a statistical probability model of time series based on Bayesian theory, developed on the basis of the Markov model. It describes a doubly stochastic process: the transitions between the hidden states of a system and the observations those states emit [24]. Figure 4 shows the graph structure of HMM. HMM, which has the advantages of rigorous structure and reliable results, can represent invisible and unmeasured states, so it is often used as an intention recognition model for time-based continuous series data. It is usually described by the parameter $\lambda$, which is determined by the initial probability distribution $\pi$, the state transition probability distribution A and the observation probability distribution B, and its expression is $\lambda = (A, B, \pi)$. The hidden Markov chain is determined by the state transition probability matrix and the initial probability vector, which generate the unobservable state sequence; the observed sequence is then determined by the observation probability matrix and the state sequence.

Figure 4. HMM graph structure.
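To make the roles of the initial distribution, transition matrix A and observation matrix B concrete, the following sketch evaluates the probability of an observation sequence with the standard forward algorithm for a discrete HMM. The two-state, two-symbol parameter values are invented for illustration and are not taken from the paper.

```python
import numpy as np

def forward_likelihood(pi, A, B, obs):
    """Probability of an observation sequence under a discrete HMM.

    pi:  (S,)   initial state probabilities
    A:   (S, S) state transition probabilities, A[i, j] = P(next=j | current=i)
    B:   (S, K) observation probabilities, B[i, k] = P(symbol=k | state=i)
    obs: sequence of observed symbol indices
    """
    alpha = pi * B[:, obs[0]]              # initialization
    for o in obs[1:]:
        alpha = (alpha @ A) * B[:, o]      # propagate through A, then weight by emission
    return alpha.sum()                     # marginalize over the final hidden state

# Hypothetical parameters (2 hidden states, 2 observable symbols).
pi = np.array([0.6, 0.4])
A = np.array([[0.7, 0.3],
              [0.4, 0.6]])
B = np.array([[0.9, 0.1],
              [0.2, 0.8]])

p = forward_likelihood(pi, A, B, [0, 1])
print(round(p, 3))  # 0.209
```

The hidden states never appear in the result: they are summed out, which is what allows an HMM to score observable data against unobservable intention states.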

The data obtained in this paper come from the interactive process between users and a virtual workshop system, so the observation sequence is a continuous time-based sequence. Therefore, a continuous HMM is selected to establish the model, which avoids the data distortion caused by vector quantization of the observation sequence. GMM models the feature distribution described by each state of HMM and can smoothly approximate any curve with multiple Gaussian distributions [25], so a Gaussian mixture model is chosen to describe the observation probability distribution, and the GMM-HMM model is constructed. Compared with HMM, it doesn’t need a lot of training data and has better modeling ability and recognition accuracy [26].
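As a rough illustration of how a Gaussian mixture describes a continuous observation probability, the sketch below evaluates a one-dimensional mixture density. The function name `gmm_pdf` and all parameter values (weights, means and variances, loosely styled as pupil diameters in millimetres) are hypothetical; two components are used because the paper later sets the Gaussian mixture number to 2.

```python
import numpy as np

def gmm_pdf(x, weights, means, variances):
    """Density of a 1-D Gaussian mixture evaluated at x (scalar or array)."""
    x = np.asarray(x, dtype=float)[..., None]                 # add a component axis
    comp = np.exp(-0.5 * (x - means) ** 2 / variances) / np.sqrt(2 * np.pi * variances)
    return (weights * comp).sum(axis=-1)                      # weighted sum of components

# Hypothetical two-component mixture for one hidden state.
weights = np.array([0.5, 0.5])
means = np.array([3.0, 5.0])
variances = np.array([0.25, 0.25])

grid = np.linspace(-5.0, 15.0, 20001)
density = gmm_pdf(grid, weights, means, variances)
area = (density * (grid[1] - grid[0])).sum()   # Riemann-sum check that the density integrates to about 1
print(round(area, 6))
```

Because the observation is scored directly by a continuous density, no vector quantization step is needed before the HMM sees the data.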

In this paper, a set of characteristic variables based on the currently available state information is constructed as the input of the model to identify the eye movement interaction intention of users in a virtual workshop. User intention is divided into interactive intention and non-interactive intention. Interactive intention, that is, purposeful intention, means that the user is looking for an area of interest with a specific goal, or hopes to perform some kind of interactive behavior in that area. Non-interactive intention, also known as non-purposeful intention, means that the user takes in objects or areas of interest as visual input without a specific purpose. Different feature variables have different effects on the recognition of these intentions. To distinguish user intentions accurately, a suitable combination of feature variables must be constructed. For the 3D eye movement interaction technique applied to the virtual workshop in this paper, the feature variables available to the user interaction intention model are scene state, eye movement characteristics, eye movement state and psychological state.

Scene state variables refer specifically to whether there are interactive virtual objects in the scene, so the scene state characteristic variable forms a sequence over time t whose value at time t is 1 (true) when there are interactive virtual objects in the scene and 0 (false) otherwise. The user’s gaze direction can be detected through eye tracking technology and is defined as the horizontal and vertical coordinates of the eye movement point tracked at every moment, so that the change of the gaze direction per unit time, that is, the eye movement angular velocity, can be obtained. The eye movement characteristic variable can therefore be expressed as the sequence of angular velocities over time t. The user’s eye movement state at every moment in the interaction process can be acquired with the eye movement behavior recognition model. Because it may be influenced by different tasks, interaction behaviors and scene content, the eye movement state is also a characteristic variable of interaction intention; it is expressed as a sequence over time t whose value at time t is 0 for fixation and 1 for saccade. In this paper, the user’s mental state variable is defined by cognitive load level, which is evaluated by the average pupil diameter of the left and right eyes. Interactive and non-interactive intentions correspond to high and low cognitive load, that is, to large and small average pupil diameter, respectively. Thus, the sequence of mental state variables over time t is constructed from the average pupil diameter data: its value at time t represents the user’s current cognitive load level and is given by the average pupil diameter.
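The four characteristic variables described above can be assembled into one observation matrix per recording, as in the following sketch. The function name `build_features`, the column order, the zero padding of the first velocity sample and the toy values are all assumptions for illustration; the paper does not specify how the sequences are stored, and the velocity is angular only if the gaze coordinates are given in angular units.

```python
import numpy as np

def build_features(gaze, eye_state, pupil_left, pupil_right, scene_state, rate_hz=50.0):
    """Stack the four feature sequences into an array of shape (T, 4).

    Columns: gaze velocity magnitude, eye movement state (0 fixation, 1 saccade),
    mean pupil diameter of both eyes, scene state (1 if interactive objects exist).
    """
    gaze = np.asarray(gaze, dtype=float)
    # Change of gaze coordinates per unit time.
    speed = np.linalg.norm(np.diff(gaze, axis=0), axis=1) * rate_hz
    speed = np.concatenate([[0.0], speed])      # pad the first sample so the length stays T
    pupil = (np.asarray(pupil_left, dtype=float) + np.asarray(pupil_right, dtype=float)) / 2.0
    return np.column_stack([speed, eye_state, pupil, scene_state])

# Hypothetical three-sample recording.
features = build_features(
    gaze=[[0, 0], [3, 4], [3, 4]],
    eye_state=[0, 1, 0],
    pupil_left=[3.0, 4.0, 5.0],
    pupil_right=[5.0, 4.0, 3.0],
    scene_state=[1, 1, 0],
)
print(features.shape)  # (3, 4)
```

Selecting a subset of columns from such a matrix gives the input for any one of the feature combinations compared in the paper.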

The determined characteristic variables can form six combinations as follows:

- eye movement characteristics + eye movement state;

- eye movement characteristics + psychological state;

- eye movement characteristics + eye movement state + psychological state;

- eye movement characteristics + eye movement state + scene state;

- eye movement characteristics + psychological state + scene state;

- eye movement characteristics + eye movement state + psychological state + scene state.
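One way to reproduce this list programmatically is sketched below. The selection rule encoded here is inferred from the six listed combinations and is not stated by the authors: eye movement characteristics are always included, and scene state never appears as the only additional variable.

```python
from itertools import combinations

BASE = "eye movement characteristics"
OPTIONAL = ["eye movement state", "psychological state", "scene state"]

def feature_combinations():
    """Enumerate the feature combinations in the same order as the list above."""
    combos = []
    for r in range(1, len(OPTIONAL) + 1):
        for extra in combinations(OPTIONAL, r):
            if extra == ("scene state",):
                continue  # inferred rule: scene state alone is not paired with the base variable
            combos.append((BASE,) + extra)
    return combos

combos = feature_combinations()
for c in combos:
    print(" + ".join(c))
```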

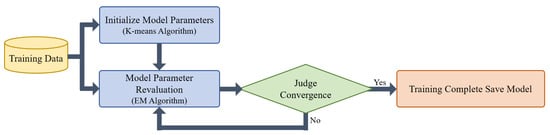

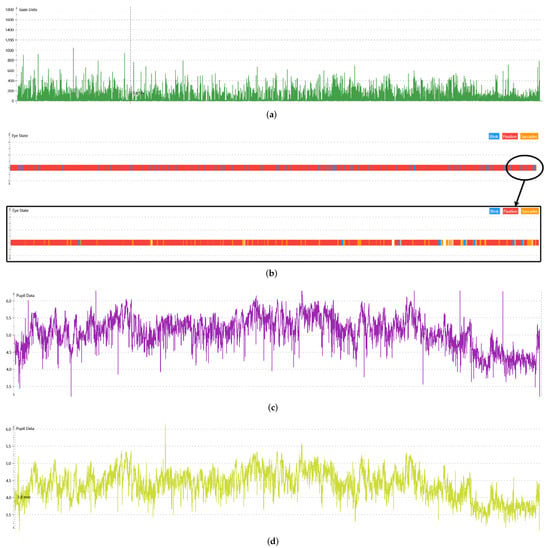

These six combinations of characteristic variables and the re-estimation formulas for the model parameters are used to train the corresponding models with and without interaction intention. The transition sequence of the interaction state when users are in the virtual workshop scene is hidden, so there are two types of user interaction state sets S, namely, the hidden state sets $S = \{S_1, S_2\}$, where $S_1$ = {interactive intention state} and $S_2$ = {non-interactive intention state}, and the number of hidden states and the number of Gaussian mixture components of the two intention models are both set to 2. The model training process is shown in Figure 5. After training, the converged intention model for each combination of characteristic variables, calculated by the EM algorithm, is obtained. During model testing and practical application, the output probability of each model is computed for the input sequence, and the intention of the model with the highest probability is taken as the current user interaction intention; Y_GMM-HMM and N_GMM-HMM denote the interactive and non-interactive intention models, respectively. Recognition by the GMM-HMM model is shown in Figure 6.

Figure 5. Training process of GMM-HMM model.

Figure 6. Recognition process of GMM-HMM model.
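The recognition step, in which a sequence is scored by both intention models and the more probable model wins, can be sketched as follows. This is a simplified illustration under several assumptions: the observation is one-dimensional (mean pupil diameter only), the parameters are set by hand rather than trained with the EM algorithm, and `make_model`, `log_likelihood` and `classify` are hypothetical helper names. Both models use 2 hidden states and 2 mixture components, as in the paper.

```python
import numpy as np

def log_gmm(x, w, mu, var):
    """Log emission density of each observation under each state's 1-D GMM.
    w, mu, var have shape (S, M); the result has shape (T, S)."""
    x = np.asarray(x, dtype=float)[:, None, None]
    log_comp = np.log(w) - 0.5 * (np.log(2 * np.pi * var) + (x - mu) ** 2 / var)
    m = log_comp.max(axis=-1, keepdims=True)
    return (m + np.log(np.exp(log_comp - m).sum(axis=-1, keepdims=True)))[..., 0]

def log_likelihood(x, model):
    """Log P(x | model) computed with the forward algorithm in log space."""
    log_b = log_gmm(x, model["w"], model["mu"], model["var"])
    log_A = np.log(model["A"])
    log_alpha = np.log(model["pi"]) + log_b[0]
    for t in range(1, log_b.shape[0]):
        s = log_alpha[:, None] + log_A              # rows: previous state, columns: next state
        m = s.max(axis=0)
        log_alpha = m + np.log(np.exp(s - m).sum(axis=0)) + log_b[t]
    m = log_alpha.max()
    return float(m + np.log(np.exp(log_alpha - m).sum()))

def make_model(means):
    """Hand-set model with 2 hidden states and 2 mixture components (illustrative values)."""
    return {
        "pi": np.array([0.5, 0.5]),
        "A": np.array([[0.9, 0.1], [0.1, 0.9]]),
        "w": np.full((2, 2), 0.5),
        "mu": np.array(means, dtype=float),
        "var": np.full((2, 2), 0.04),
    }

# Hypothetical models: larger pupil diameters for interactive intention.
y_model = make_model([[4.5, 4.8], [5.0, 5.3]])   # stands in for Y_GMM-HMM
n_model = make_model([[3.0, 3.2], [3.4, 3.6]])   # stands in for N_GMM-HMM

def classify(x):
    """Return the intention whose model assigns the higher likelihood."""
    return "interactive" if log_likelihood(x, y_model) > log_likelihood(x, n_model) else "non-interactive"

print(classify([4.9, 5.0, 5.1, 4.8]))
print(classify([3.1, 3.2, 3.3, 3.0]))
```

Working in log space avoids numerical underflow on long sequences, which matters when recordings contain many samples.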

4. Materials and Methods

4.1. Participants and Data Set

The eye movement experiment dataset is designed to verify the usability and effectiveness of the GMM-HMM model. Tobii Glasses 2, sampling at 50 Hz, provided real-time eye movement data of the participants while they operated the AR application of the virtual workshop. Fourteen participants (5 males and 9 females; 22 ± 2 years old) took part in the experiment, and the average recording time was about 20 minutes. All participants reported normal or corrected-to-normal vision above 5.0. None had prior experience with or knowledge of AR eye movement interaction, which avoided the influence of prior experience on data acquisition. This study was approved by the local ethical committee of Shanghai University, Shanghai, China.

A total of 14 datasets were collected through the experiment. The datasets were randomly divided into training sets and test sets in a ratio of 9:1. The original data include saccades, fixations, blinks and other eye movements. After preprocessing the original data and removing invalid data, labels 0 and 1 were assigned to fixation and saccade, respectively. The number of labels in the dataset is listed in Table 1. The processed datasets {A, B, C, D, E, F, G, H, I, J, K, L, M, N} contained eye movement track segments of 8 s, with data capacities of {174, 194, 45, 159, 146, 169, 73, 148, 173, 93, 128, 189, 110}.

Table 1. Distribution of dataset label samples.
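A random 9:1 split of the kind described above might look like the following sketch. It assumes that the split is made over the 8 s segments within one dataset, which the paper does not state explicitly; the function name `split_indices` and the seed are illustrative, and 174 is the first capacity value listed above.

```python
import numpy as np

def split_indices(n_segments, train_fraction=0.9, seed=0):
    """Randomly partition segment indices into training and test sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_segments)
    n_train = int(round(train_fraction * n_segments))
    return order[:n_train], order[n_train:]

train_idx, test_idx = split_indices(174)
print(len(train_idx), len(test_idx))  # 157 17
```

Fixing the seed keeps the partition reproducible, so that different models are compared on the same test segments.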

4.2. Verification Experiment of Eye-Movement Behavior Recognition Model

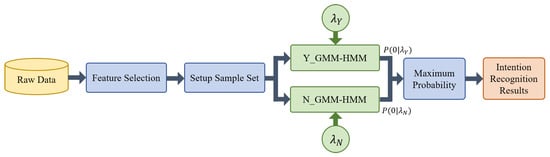

In this paper, commonly used indexes for evaluating model performance are adopted, including accuracy, Kappa coefficient and F1 score. The eye movement data described above are used to train and verify the eye movement behavior recognition model. The accuracy of each model on the test set of each dataset is shown in Figure 7. The comparison of the models by Kappa coefficient, F1 score and running time is shown in Table 2.

Figure 7. The comparison bar chart of recognition accuracy based on model verification experiments of different models.

Table 2. Comparison of the models by Kappa coefficient, F1 score and running time.

The recognition accuracies for fixation and saccade of these models are all above 90%, and the proposed model achieves the highest accuracy in 11 datasets. Compared with I-HMM and EM, the accuracy of the proposed model is significantly improved. Although the proposed model has slightly lower accuracy than CNN in three datasets, its recognition accuracy there is still over 97%. In the remaining datasets, the accuracy of the proposed model is also higher than that of CNN, with an average increase of 0.66.

For Kappa coefficient, F1 score and running time, the Kappa coefficients of I-HMM and EM in individual datasets are very low or even zero. Their confusion matrices show that they recognize fixation well but have difficulty identifying the characteristics of saccade data and judging the correlation between data and behavior labels. The neural network models, however, show high recognition of both behaviors. In all datasets, the proposed model achieved the highest Kappa coefficient and F1 score, which indicates a high correlation between data and behavior labels. Compared with CNN, the Kappa coefficient and F1 score of the proposed model increased, indicating that the addition of Bi-LSTM helps improve classification performance. In terms of running time, the neural network models greatly reduce consumption compared with I-HMM and EM. The proposed model has a more complex structure than CNN, so its time consumption increases, but it remains at about 0.1 s.
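The three indexes can be computed from a binary confusion matrix, as in the sketch below. It also shows why a detector that labels every sample as fixation can reach a seemingly good accuracy while its Kappa coefficient is zero. Treating saccade (label 1) as the positive class for the F1 score is an assumption, since the paper does not state the averaging scheme; the toy label vectors are invented.

```python
import numpy as np

def confusion(y_true, y_pred):
    """Return (tp, tn, fp, fn) with saccade (label 1) as the positive class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    return tp, tn, fp, fn

def accuracy(y_true, y_pred):
    tp, tn, fp, fn = confusion(y_true, y_pred)
    return (tp + tn) / (tp + tn + fp + fn)

def cohen_kappa(y_true, y_pred):
    """Agreement between labels and predictions, corrected for chance agreement."""
    tp, tn, fp, fn = confusion(y_true, y_pred)
    n = tp + tn + fp + fn
    p_o = (tp + tn) / n
    p_e = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / n ** 2
    return 0.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)

def f1_score(y_true, y_pred):
    tp, tn, fp, fn = confusion(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 0.0 if denom == 0 else 2 * tp / denom

# Hypothetical labels: 8 fixation samples and 2 saccade samples.
y_true = [0] * 8 + [1] * 2
all_fixation = [0] * 10          # a detector that never reports a saccade

print(accuracy(y_true, all_fixation))     # 0.8
print(cohen_kappa(y_true, all_fixation))  # 0.0
print(f1_score(y_true, all_fixation))     # 0.0
```

Because fixation samples dominate such data, accuracy alone can hide a complete failure on saccades, which is the reason for reporting the Kappa coefficient and F1 score alongside it.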

The above experimental results show that the CNN and Bi-LSTM hybrid neural network model created in this paper has better recognition performance than other traditional models. Compared with CNN alone, adding Bi-LSTM to extract the temporal characteristics of eye movement data helps identify eye movement behavior more accurately. This verifies the ability of the CNN and Bi-LSTM hybrid neural network model in feature extraction and classification of eye movement data.

4.3. Verification Experiment of Eye-Movement Interaction Intention Understanding Model

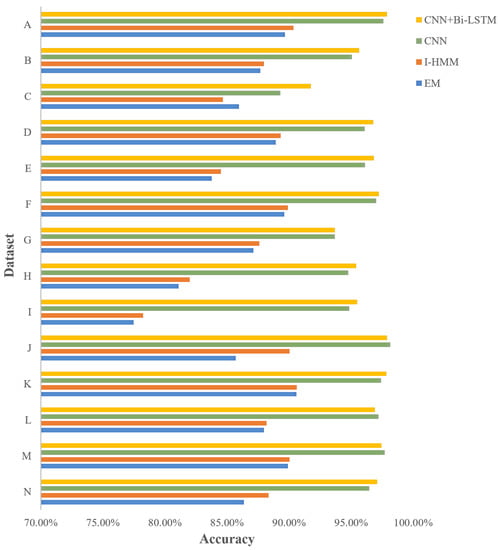

In actual applications, the user’s interaction intention may change with time and task type while interacting with the virtual workshop system. Therefore, the interaction process of the eye movement experiment described above is used to train and test the intention understanding model. The first five combinations are chosen as the training set, and the sixth combination is chosen as the test set. After the model parameters are obtained by training on each combination of feature variables, the test set is used to test and verify each model. Taking the model composed of eye movement characteristics, eye movement state and mental state as an example, Figure 8a shows the eye movement characteristics, namely the angular velocity of eye movement; Figure 8b shows the eye movement state, that is, the user’s eye movement behavior at every moment, in which red indicates fixation, yellow indicates saccade, and blue indicates blink; Figure 8c shows the left pupil diameter; and Figure 8d shows the right pupil diameter. The average pupil diameter represents the user’s mental state.

Figure 8.

“User’s eye movement characteristics + User’s eye movement state + User’s mental state” feature variables. (a) Eye movement angular velocity. (b) User’s eye movement state: red, fixation; yellow, saccade; blue, blink. (c) Diameter of left pupil. (d) Diameter of right pupil.
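As an illustration of the angular velocity feature in Figure 8a, the following sketch computes the angle between consecutive 3D gaze direction vectors and divides it by the sampling interval. The exact formula used in the experiment is not restated here, so the function name, the input layout and the choice of degrees per second are assumptions.

```python
import math


def angular_velocity(gaze_dirs, timestamps):
    """Angular velocity (degrees per second) between consecutive gaze samples.

    gaze_dirs:  list of 3D gaze direction vectors (x, y, z), not necessarily unit length
    timestamps: list of sample times in seconds, strictly increasing
    """
    velocities = []
    for i in range(1, len(gaze_dirs)):
        a, b = gaze_dirs[i - 1], gaze_dirs[i]
        cross = (
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0],
        )
        cross_norm = math.sqrt(sum(c * c for c in cross))
        dot = sum(x * y for x, y in zip(a, b))
        # atan2 of |a x b| and a . b stays numerically stable for very small angles
        angle_deg = math.degrees(math.atan2(cross_norm, dot))
        velocities.append(angle_deg / (timestamps[i] - timestamps[i - 1]))
    return velocities


# Assumed sample: the gaze turns 90 degrees in 0.5 s, then stays still for 0.5 s.
dirs = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
times = [0.0, 0.5, 1.0]
print(angular_velocity(dirs, times))
```

A high angular velocity is typical of saccades and a low one of fixations, which is why this signal is a natural input feature for the models.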

The accuracy of the eye movement interaction intention understanding model is compared across different combinations of feature variables. The experimental results for the models built from the six feature variable combinations are shown in Table 3.

Table 3.

Experimental results.

Table 3 shows that all the selected data carry some feature information, which gives every model some recognition ability. The training results also show that combining feature variables can be complementary but can also cause interference: when a combination adds uninformative noise features, accuracy decreases. The model combining four feature variables has the best prediction performance, with an accuracy of 88.33%. Therefore, the feature variable combination “eye movement characteristics + eye movement state + mental state + scene state” is selected for the final user interaction intention understanding model. To sum up, the eye movement interaction intention understanding model based on GMM-HMM can describe input signals that change with time, and the choice of feature variable combination for the input signals has a great influence on the recognition performance of the model. This model can cope with changes in the system scene and user state during interaction and can be used to understand eye movement interaction intention, that is, to identify whether the user intends to interact with the system.
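To make the role of the GMM-HMM concrete, the sketch below decodes a sequence of hidden intention states from a time-varying observation using Viterbi decoding with Gaussian-mixture emissions. It is a simplified illustration, not the trained model of this paper: the observation is reduced to a single scalar feature, and every parameter (mixture weights, means, variances, transition and start probabilities) is a hand-picked, hypothetical value rather than a learned one.

```python
import math


def gmm_log_likelihood(x, components):
    """Log density of x under a 1-D Gaussian mixture [(weight, mean, variance), ...]."""
    density = sum(
        w * math.exp(-((x - m) ** 2) / (2.0 * v)) / math.sqrt(2.0 * math.pi * v)
        for w, m, v in components
    )
    return math.log(max(density, 1e-300))  # floor avoids log(0) for far-away samples


def viterbi(observations, states, log_start, log_trans, emissions):
    """Most likely hidden state sequence of an HMM with GMM emissions."""
    best = {s: log_start[s] + gmm_log_likelihood(observations[0], emissions[s])
            for s in states}
    backpointers = []
    for x in observations[1:]:
        prev, best, pointers = best, {}, {}
        for s in states:
            source = max(states, key=lambda r: prev[r] + log_trans[r][s])
            pointers[s] = source
            best[s] = (prev[source] + log_trans[source][s]
                       + gmm_log_likelihood(x, emissions[s]))
        backpointers.append(pointers)
    path = [max(states, key=lambda s: best[s])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path


# Hypothetical parameters for two hidden intention states.
states = ["non_interactive", "interactive"]
log_start = {s: math.log(0.5) for s in states}
log_trans = {
    "non_interactive": {"non_interactive": math.log(0.9), "interactive": math.log(0.1)},
    "interactive": {"non_interactive": math.log(0.1), "interactive": math.log(0.9)},
}
emissions = {
    "non_interactive": [(0.5, 3.0, 0.1), (0.5, 3.3, 0.1)],
    "interactive": [(0.6, 4.0, 0.1), (0.4, 4.5, 0.2)],
}

# Assumed scalar feature over five time steps (for example, a pupil diameter in mm).
observations = [3.0, 3.1, 4.2, 4.4, 4.1]
print(viterbi(observations, states, log_start, log_trans, emissions))
```

With these assumed parameters the decoded sequence switches from the non-interactive to the interactive state when the feature value rises. The transition probabilities keep the decoded state from flipping on a single noisy sample, which is the time-series property the paragraph above refers to.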

4.4. Application

The experiments above verify the effectiveness of the models constructed in this paper, so they can be applied according to the framework. Two main interaction modes are created in this paper. One is an implicit interaction mode based on user intention understanding, which is applied to the adaptive display function of the UI panels in the virtual workshop. The system detects the user’s interaction intention; when the intention is judged to be interactive, it checks the line-of-sight direction to determine which UI panel the gaze falls on, and that panel is highlighted and rotated towards the user. The other is an explicit interaction mode based on fixation, designed mainly as a click interaction with interactive interfaces such as buttons. When the eye movement behavior recognition model recognizes a fixation, that is, when the user provides gaze input, the system checks whether an interactive interface lies in the detected line-of-sight direction; if so, the control gives feedback to the user.
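The decision logic of the two interaction modes can be summarized in a few lines. The sketch below is a hypothetical per-frame dispatcher, not the application code: the class `GazeTarget`, the function `dispatch` and the action names are assumptions introduced only to show how the outputs of the two models could be combined.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GazeTarget:
    """Object currently hit by the line of sight (hypothetical structure)."""
    name: str
    kind: str  # "panel" or "button"


def dispatch(intention: str, eye_state: str,
             target: Optional[GazeTarget]) -> List[Tuple[str, str]]:
    """Return the UI actions for one frame.

    intention: output of the intention understanding model,
               "interactive" or "non_interactive"
    eye_state: output of the behavior recognition model,
               "fixation", "saccade" or "blink"
    target:    what the gaze ray hits, or None
    """
    actions = []
    if target is None:
        return actions
    # Implicit mode: an interactive intention makes the gazed panel adapt.
    if intention == "interactive" and target.kind == "panel":
        actions.append(("highlight_and_face_user", target.name))
    # Explicit mode: a recognized fixation on a button acts as a click.
    if eye_state == "fixation" and target.kind == "button":
        actions.append(("click", target.name))
    return actions


print(dispatch("interactive", "saccade", GazeTarget("status_panel", "panel")))
print(dispatch("non_interactive", "fixation", GazeTarget("start_button", "button")))
```

Keeping the two modes as independent checks reflects the description above: the implicit mode depends only on the inferred intention, while the explicit mode depends only on the recognized fixation.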

In the practical application development, the ray detection (raycasting) technology in Unity 3D is used. When a ray collides with a collider, it returns relevant information about the collider, such as the name of the object and the collision position. The appropriate information is selected and passed in the code as feature variables to the two algorithm models, realizing the user input and system feedback functions of eye movement interaction. The practical application effect is shown in Figure 9.
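In Unity the ray test is provided by the engine (`Physics.Raycast`, which fills a `RaycastHit` with the collider and hit point). To show what such a query computes, the following engine-independent sketch intersects a gaze ray with axis-aligned boxes using the slab method and returns the name and hit position of the nearest one. The `Box` class, the `raycast` function and the scene coordinates are assumptions for illustration and are not part of the application described here.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Box:
    """Axis-aligned box standing in for a collider (hypothetical structure)."""
    name: str
    lo: Tuple[float, float, float]
    hi: Tuple[float, float, float]


def ray_box_distance(origin, direction, box) -> Optional[float]:
    """Distance along the ray to the box (slab method), or None if it misses."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of faces: it must already lie between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near


def raycast(origin, direction, boxes: Sequence[Box]):
    """Return (name, hit_point) of the nearest box hit by the ray, or None."""
    best = None
    for box in boxes:
        t = ray_box_distance(origin, direction, box)
        if t is not None and (best is None or t < best[0]):
            best = (t, box)
    if best is None:
        return None
    t, box = best
    return box.name, tuple(o + t * d for o, d in zip(origin, direction))


# Assumed scene: a UI panel 2 m in front of the user and a button further away.
scene = [
    Box("button", (-0.1, -0.1, 5.0), (0.1, 0.1, 5.1)),
    Box("panel", (-0.5, -0.5, 2.0), (0.5, 0.5, 2.1)),
]
print(raycast((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene))
```

The returned name and hit position correspond to the collider information mentioned above, which can then be passed to the two models as feature variables.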

Figure 9.

The practical application effect of a virtual workshop based on algorithm models established in this research.

5. Results and Discussion

For eye movement behavior recognition in 3D eye movement interaction, a hybrid neural network model based on CNN and Bi-LSTM is constructed to recognize the user’s eye movement behavior at every moment. The performance of this model is better than that of the traditional models, and the model with Bi-LSTM integrated performs better than the model based on CNN alone. The CNN model has the advantages of short training time and good extraction of local data features. The Bi-LSTM model takes both historical and future information into account. Given the temporal and spatial characteristics of eye movement data, the CNN is used to extract spatial features and the Bi-LSTM to extract temporal features. In addition, data augmentation, Dropout and a Batch Norm layer are used to suppress over-fitting and accelerate model convergence. The eye movement behavior recognition model based on the CNN and Bi-LSTM hybrid neural network can mine deep eye movement behavior characteristics from the raw eye movement data. It recognizes user eye movement behavior effectively with a short running time, which meets the need for rapid and accurate recognition in virtual-workshop-oriented eye movement interaction.

For implicit eye movement interaction, the multi-dimensional state vectors that constitute the user’s eye movement interaction channel in the virtual workshop system are analyzed. The user’s eye movement interaction intention is divided into an interactive intention mode and a non-interactive intention mode. Because interaction intention cannot be measured directly and depends on time, the GMM-HMM model, which can predict hidden states from observable values and takes time series into account, is used to construct a GMM-HMM-based understanding model of eye movement interaction intention.

The eye movement experiment is designed to collect the data needed for model verification in order to evaluate the performance of the models and then select the best one. The results of the two experiments show that the CNN and Bi-LSTM hybrid neural network model is superior to the others in recognition accuracy, and verify the advantage of combining the four feature variables (scene state, gaze direction, eye movement state and psychological state) into a feature variable group to represent the user’s interaction intention. Finally, following the proposed framework of the 3D eye movement interaction technique, the feasibility of the framework is verified by applying the verified algorithm models.

However, research on eye movement interaction is currently sparse and incomplete, especially in the field of industrial manufacturing, and some limitations deserve further study. First, the algorithm model needs to be robust. Collected eye movement data often contain missing or abnormal values, so when sensor data are used as input and eye movement features are extracted, the model should be able to preprocess the input so that individual missing or abnormal values do not affect its judgment of the user’s state. Second, the eye movement interaction intention understanding model only broadly divides user intention into two patterns. User interaction intentions in the virtual workshop scene need to be divided more finely to realize more intelligent and targeted eye movement interaction. In addition, since the training data come from the interactive process of operating the AR application in a virtual workshop, a single dataset has a large number of samples but lacks obvious and targeted features, which may lead to insufficient model training. Therefore, it is important to further optimize the structure of the model so that the intention understanding model can extract data features more efficiently. Finally, for the framework of the 3D eye movement interaction technique in this paper, only a few common interaction tasks in the virtual workshop are selected to develop eye movement interaction function modules; these can be extended for industrial manufacturing and virtual workshop applications in the future. Furthermore, by measuring the electrical signals generated by the brain and heart, namely EEG and ECG, time-continuous data can be collected and combined with 3D eye movement interaction technology to achieve more reliable intention recognition. Taking the user’s actual psychological state and cognitive load into account would allow more effective interaction [27,28,29,30]. As this technology gradually matures, its application will no longer be limited to the virtual workshop or industrial manufacturing; it will have huge application potential in construction engineering management, health care, education and many other fields [31,32].

Author Contributions

Data curation, M.D.; Formal analysis, Z.G. and M.D.; Methodology, J.L.; Project administration, L.L.; Visualization, R.Y.; Writing—original draft, J.L.; Writing—review & editing, Z.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China under Grant No. 2021YFB3300503 and the National Defense Fundamental Research Foundation of China under Grant No. JCKY2020413C002.

Conflicts of Interest

The work described has not been submitted elsewhere for publication, in whole or in part, and all the authors listed have approved the manuscript.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AR | Augmented reality |
| CNN | Convolutional Neural Network |
| Bi-LSTM | Bi-directional Long Short-term Memory Network |
| HMM | Hidden Markov Model |
| SVM | Support Vector Machine |
| CDT | Fixation dispersion algorithm based on covariance |
| EM | Engbert and Mergenthaler |
| IDT | Identification by dispersion threshold |
| IKT | Identification by Kalman filter |
| IMST | Identification by minimal spanning tree |
| IHMM | Identification by hidden Markov model |
| IVT | Identification by velocity threshold |
| NH | Nyström and Holmqvist |
| BIT | Binocular-individual threshold |
| LNS | Larsson, Nyström and Stridh |
| GMM | Gaussian Mixture Model |
| EOG | Electro-Oculogram |
| ANFIS | Adaptive Neural Fuzzy Inference System |
| EEG | Electroencephalogram |
| ECG | Electrocardiograph |
| RF | Random Forest |

References

- Nahavandi, S. Industry 5.0—A Human-Centric Solution. Sustainability 2019, 11, 4371.

- Hyrskykari, A.; Majaranta, P.; Räihä, K.J. From gaze control to attentive interfaces. Proc. HCII 2005, 2, 17754474.

- Ponce, H.R.; Mayer, R.E. An eye movement analysis of highlighting and graphic organizer study aids for learning from expository text. Comput. Hum. Behav. 2014, 41, 21–32.

- Wang, S.; Wang, Q.; Chen, H. Research and Application of Eye Movement Interaction based on Eye Movement Recognition. MATEC Web Conf. 2018, 246, 03038.

- Katona, J. Analyse the Readability of LINQ Code using an Eye-Tracking-based Evaluation. Acta Polytech. Hung. 2021, 18, 193–215.

- Katona, J. Clean and dirty code comprehension by eye-tracking based evaluation using GP3 eye tracker. Acta Polytech. Hung. 2021, 18, 79–99.

- Katona, J. Measuring Cognition Load Using Eye-Tracking Parameters Based on Algorithm Description Tools. Sensors 2022, 22, 912.

- Andersson, R.; Larsson, L.; Holmqvist, K.; Stridh, M.; Nyström, M. One algorithm to rule them all? An evaluation and discussion of ten eye movement event-detection algorithms. Behav. Res. Methods 2017, 49, 616–637.

- Wang, X.; Xiao, Y.; Deng, F.; Chen, Y.; Zhang, H. Eye-Movement-Controlled Wheelchair Based on Flexible Hydrogel Biosensor and WT-SVM. Biosensors 2021, 11, 198.

- Dong, W.; Yang, L.; Gravina, R.; Fortino, G. ANFIS fusion algorithm for eye movement recognition via soft multi-functional electronic skin. Inf. Fusion 2021, 71, 99–108.

- Cheng, B.; Zhang, C.; Ding, X.; Wu, X. Convolutional neural network implementation for eye movement recognition based on video. In Proceedings of the 2018 33rd Youth Academic Annual Conference of Chinese Association of Automation (YAC), Nanjing, China, 18–20 May 2018.

- Umemoto, K.; Yamamoto, T.; Nakamura, S.; Tanaka, K. Search intent estimation from user’s eye movements for supporting information seeking. In Proceedings of the International Working Conference on Advanced Visual Interfaces, Naples, Italy, 21–25 May 2012; pp. 349–356.

- Chen, Y.N.; Hakkani-Tur, D.; Tur, G.; Gao, J.F.; Deng, L. End-to-End Memory Networks with Knowledge Carryover for Multi-Turn Spoken Language Understanding. In Proceedings of the Annual Conference of International Speech Communication Association, San Francisco, CA, USA, 8–12 September 2016; pp. 3245–3249.

- Yang, M.H.; Tao, J.H.; Li, H.; Chao, L. Nature Multimodal Human-Computer-Interaction Dialog System. Comput. Sci. 2014, 41, 12–18.

- Zhu, A.Z.; Yuan, L.; Chaney, K.; Daniilidis, K. Unsupervised Event-Based Learning of Optical Flow, Depth, and Egomotion. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Schutz, A.C.; Braun, D.I.; Gegenfurtner, K.R. Eye movements and perception: A selective review. J. Vis. 2011, 11, 89–91.

- Jang, Y.M.; Mallipeddi, R.; Lee, S.; Kwak, H.W.; Lee, M. Human intention recognition based on eyeball movement pattern and pupil size variation. Neurocomputing 2014, 128, 421–432.

- Li, S.; Zhang, X. Implicit Intention Communication in Human–Robot Interaction Through Visual Behavior Studies. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 437–448.

- Koochaki, F.; Najafizadeh, L. A Data-Driven Framework for Intention Prediction via Eye Movement with Applications to Assistive Systems. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 974–984.

- Shi, L.; Copot, C.; Vanlanduit, S. Gazeemd: Detecting visual intention in gaze-based human-robot interaction. Robotics 2021, 10, 68.

- Antoniou, E.; Bozios, P.; Christou, V.; Tzimourta, K.D.; Kalafatakis, K.; Tsipouras, M.G.; Giannakeas, N.; Tzallas, A.T. EEG-Based Eye Movement Recognition Using Brain–Computer Interface and Random Forests. Sensors 2021, 21, 2339.

- Park, H.; Lee, S.; Lee, M.; Chang, M.S.; Kwak, H.W. Using eye movement data to infer human behavioral intentions. Comput. Hum. Behav. 2016, 63, 796–804.

- Bellet, M.E.; Bellet, J.; Nienborg, H.; Hafed, Z.M.; Berens, P. Human-level saccade detection performance using deep neural networks. J. Neurophysiol. 2019, 121, 646–661.

- Liu, S.; Zheng, K.; Zhao, L.; Fan, P. A driving intention prediction method based on hidden Markov model for autonomous driving. Comput. Commun. 2020, 157, 143–149.

- Zhang, J.; Yang, J.; Su, P. Survey of Monosyllable Recognition in Speech Recognition. Comput. Sci. 2020, 47, 4.

- An, X.N.; Wang, Z.W.; Zhang, C.L. Face feature labeling and recognition based on hidden Markov model. J. Guangxi Univ. Sci. Technol. 2020, 31, 118–125.

- Newman-Toker, D.E.; Curthoys, I.S.; Halmagyi, G.M. Diagnosing stroke in acute vertigo: The HINTS family of eye movement tests and the future of the “Eye ECG”. In Seminars in Neurology; Thieme Medical Publishers: New York, NY, USA, 2015; Volume 35, pp. 506–521.

- Katona, J.; Ujbanyi, T.; Sziladi, G.; Kovari, A. Examine the effect of different web-based media on human brain waves. In Proceedings of the 2017 8th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Debrecen, Hungary, 11–14 September 2017; pp. 000407–000412.

- Katona, J. Examination and comparison of the EEG based Attention Test with CPT and TOVA. In Proceedings of the 2014 IEEE 15th International Symposium on Computational Intelligence and Informatics (CINTI), Budapest, Hungary, 19–21 November 2014; pp. 117–120.

- Mathur, P.; Mittal, T.; Manocha, D. Dynamic graph modeling of simultaneous eeg and eye-tracking data for reading task identification. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Virtual, 6–12 June 2021; pp. 1250–1254.

- Souchet, A.D.; Philippe, S.; Lourdeaux, D.; Leroy, L. Measuring visual fatigue and cognitive load via eye tracking while learning with virtual reality head-mounted displays: A review. Int. J. Hum.-Comput. Interact. 2022, 38, 801–824.

- Cheng, B.; Luo, X.; Mei, X.; Chen, H.; Huang, J. A Systematic Review of Eye-Tracking Studies of Construction Safety. Front. Neurosci. 2022, 16, 891725.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).