Abstract

Combining insights from the collaborative governance, performance management, and health technology assessment (HTA) literature, this study develops an integrated framework to systematically measure and monitor the performance of HTA network programmes. The framework is validated through action research carried out in the Italian HTA network programme for medical devices. We found that, when building collaborative performance management systems, elements such as participation in the design and the use of a context-specific performance assessment framework facilitate acceptance by managers and policy makers, especially in highly professionalized and sector-specific organizations, because such systems reflect their distinctive language and culture. The hybrid framework may help health authorities and policymakers to understand the HTA network, monitor its performance, and ensure network sustainability over time.

1. Introduction

Nowadays, public sector organizations are becoming more and more interdependent, moving toward various forms of horizontal governance [1] where cross-boundary collaborative actions are paramount to the solution of complex societal needs or “wicked problems” [2] such as the COVID-19 emergency. Multi-organizational arrangements are formed around problems or resources and are commonly established to facilitate complex interactions, multilevel decision-making, service provision and delivery, transmission of knowledge and ideas, as well as other collaborative behaviors [3]. There is a growing need for public and private inter-organizational structures to address the increased complexity of such interactions and identify a set of values for assessing policy outcomes. The collaborative governance literature [4,5] provides good ground for reflecting on the dynamics and actions of inter-organizational collaborations, but little evidence is yet available on how to assess such collaborations. Numerous facilitating factors or antecedents have been identified [6,7,8] and productivity dimensions of collaborative performance have been proposed [5,9]. However, measuring the performance results of cross-boundary collaborations remains difficult and complex, since little agreement exists on what constitutes effective performance and how to successfully manage the collaboration [3,10,11,12].

Some authors have highlighted the importance of culture, information sharing, and collaborative language as factors influencing the understanding and, hence, the collaboration among organizations [13,14,15]. Other authors have also underlined the importance of engaging the employees of the organization in the cross-boundary collaboration [16]. These findings seem to suggest that sector-specific mechanisms (such as performance measurement systems) may facilitate the collaboration, especially in those sectors where professional culture is highly specific and requires performance management systems capable of capturing what professionals consider salient in their (inter-)organizational setting.

One of the industries that may benefit the most from an assessment using the collaborative governance framework is healthcare. Indeed, there are many networks centered on both diseases (like the cancer network) and functions (like Health Technology Assessment, HTA). Focusing on the functional network of HTA (which relies upon specific methods and literature), we formulated the following research questions: (i) How can HTA networks be assessed? (ii) Which indicators can help monitor the performance of HTA networks and their sustainability over time?

This paper provides an integrated hybrid framework to measure the performance of an inter-organizational programme operating in a highly professional sector, health care, through the case of the Italian health technology assessment (HTA) network programme. The paper develops the framework using three streams of literature: one sector-specific stream, the HTA literature, and two general streams, the performance management literature and the collaborative governance regimes literature. Moreover, the framework is validated by managers and policy makers of different organizations participating in the Italian HTA network programme on medical devices (MDs) through a constructive approach. The proposed framework may help health authorities and policy makers to understand the HTA network, monitor its performance, and ensure network sustainability over time. We define sustainability of collaborative governance regimes as the extent to which a collaborative network achieves and maintains (i.e., sustains) the desired level of quality and effectiveness of performance in the target conditions over time after the initial implementation efforts [9]. In fact, the concept of sustainability has recently evolved towards a suggested “adaptation phase” that bridges from the initial roll-out of the cross-boundary collaboration to a longer-term sustainability phase [17]. Finally, the paper closes with a discussion section drawing insights for both academics and practitioners.

2. Literature Review

2.1. Collaborative Governance Framework to Assess Performance

Emerson [4] defined collaborative governance as “the processes and structures of public policy decision making and management that engage people constructively across the boundaries of public agencies, levels of government, and/or the public, private, and civic spheres in order to carry out a public purpose that could not otherwise be accomplished.” This means that a performance assessment framework should be not only multidimensional but also multi-layer, and should consider multi-actor involvement [5,8,9,10,18].

Among the wide range of studies dealing with different approaches and strategies to pursue effective collaborative governance, the network management outcomes of Klijn et al. [8], the performance dimensions and determinants in collaborative arrangements proposed by Cepiku [5] and the performance matrix suggested by Emerson and Nabatchi to measure the productivity of collaborative governance regimes [9] provide useful insights on how to measure the performance of collaboration management.

In particular, Klijn et al. (2010) [8] identified six elements to be considered to measure the process outcomes: (i) the level of actors’ involvement, (ii) the conflict resolution, (iii) the extent to which the process has encountered stagnations or deadlocks, (iv) the productive use of differences in perspectives, (v) the contact frequency, and (vi) the stakeholder satisfaction with the results achieved.

Cepiku [5] identified three dimensions to be considered in performance management for collaborative actions: (i) determinants (external resource, collaboration management, and internal resources), (ii) intermediate results (quality of collaboration), and (iii) final results (performance at community or organizational level) of the collaborative arrangements.

Emerson and Nabatchi [9] proposed a performance matrix made up of nine critical dimensions for assessing the productivity of collaborative governance regimes. Specifically, the multidimensional matrix was developed by combining three performance levels (actions, outcomes, and adaptation) with three units of analysis (participant organizations, the collaborative governance regime, and target goals).
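The nine cells of the matrix follow from crossing the three performance levels with the three units of analysis. A minimal Python sketch of that enumeration is given here for illustration; the labels follow the text above, while the variable and function names are our own and are not taken from [9].

```python
from itertools import product

# Labels follow the text; identifiers are illustrative only.
PERFORMANCE_LEVELS = ("actions", "outcomes", "adaptation")
UNITS_OF_ANALYSIS = (
    "participant organizations",
    "collaborative governance regime",
    "target goals",
)


def performance_matrix():
    """Return every (level, unit) cell of the 3 x 3 matrix."""
    return list(product(PERFORMANCE_LEVELS, UNITS_OF_ANALYSIS))


cells = performance_matrix()
for level, unit in cells:
    print(f"{level:<10} x {unit}")
```

Each cell is a place where one or more indicators could be attached; the sketch only enumerates the cells and does not prescribe their content.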

However, more studies seeking to offer working models and tools from which to empirically analyze network performance would be valuable [11].

From this stream of literature, we identify Hypothesis 1.

Hypothesis 1 (H1).

A collaborative governance framework assessing performance should consider not only the outcomes but also the collaboration management and the quality of collaboration.

2.2. Performance Management in Collaborative Setting

The term “collaborative performance management” was defined by Choi and Moynihan “as sharing of resources and information among different actors for the purposes of achieving a formal performance goal” [16]. Hence, it is a process that helps to formalize mechanisms for sharing goals even among cross-border actors and, as a consequence, is a way to enhance accountability for both performance management and collaboration [19,20,21].

Accountability is a crucial element in making agencies effective [22,23] as well as in making networks work better [20,24]. Meshing collaboration with traditional management tools and systems seems a win–win strategy for both collaborative governance [25] and traditional strategic planning processes [26,27,28]. Indeed, traditional approaches to performance assessment do not capture well the nature of these complex governance systems, where interactions happen across organizational boundaries. Additional complexity is brought about by the potential conflicts between the performance measurement considerations of the individual organizations and those of the collaborative organization. Indeed, the collaborative organization often represents a virtual organization that is additional to the organizations/units participating in the collaborative enterprise. Such arrangements call for the development of performance measurement following an integrated and holistic performance systems-based framework [29], one that does not reduce performance measurement to the traditional control of results achieved by a single organization, business structure, unit, or process, but rather treats it as a comprehensive tool to improve the organization’s performance. Nuti et al. [30] provide an example of displaying healthcare performance results by shifting the focus from a single organization’s perspective to the performance of the patients’ care pathway. Despite this evidence, very few studies have examined collaborative performance management in depth [16,26,31].

A recent study on collaborative performance management found that seniority, participation in goal setting, and goal salience are factors encouraging interagency collaboration and that goal factors are the most influential variables [16]. In healthcare organizations in particular, salient goal setting has been found to be one of the most relevant success factors in managing performance by objectives [32].

From this stream of literature, we identify Hypothesis 2.

Hypothesis 2 (H2).

Performance management in collaborative settings should recognize that goal salience matters, and the development of performance measurement should follow an integrated and holistic framework.

2.3. Health Technology Assessment Literature on Network Programme

From the early 1990s, almost every Member State of the European Union developed national and regional public HTA agencies or programmes [33,34] with the main aim of disseminating and implementing HTA recommendations in order to influence decision-makers and clinicians [34] in the allocation of scarce resources. There are now numerous HTA organizations with different scopes and methodologies (around 50 according to the International Network of Agencies for Health Technology Assessment, see for instance http://www.inahta.org/ accessed on the 30th of March 2021). Networks between and within countries have been set up to share and compare experiences and results, such as the EUnetHTA (European network for Health Technology Assessment) [35,36,37]. HTA agencies are becoming more and more interdependent, often working in networks and developing cross-boundary collaborations at an international level. More recently, there have been international actions to harmonize definitions, systems, and methods for assessing technologies in healthcare, in order to guarantee greater transparency and stability through the greater role of central and regional governments [38]. The international harmonization action represents a historical achievement concerning the collaborations among leading HTA networks, societies, and global organizations, such as HTAi, INAHTA, EUnetHTA, ISPOR, and the WHO [39,40]. Some authors have compared the organizational arrangements and governance of the various HTA bodies and the approaches to and use of economic evaluations in decision-making [41,42,43,44], or have explored the issue of citizen and patient participation [45,46,47]. Other studies have explored the effectiveness of HTA bodies, focusing on the quality and/or usefulness of the evaluations produced (i.e., reports) and the dissemination process [48].
In the light of growing demands for public accountability, a framework for the evaluation of individual HTA agency performance has also been proposed [49], and the importance and challenges of developing standards of good practice to assess HTA bodies have been discussed by Drummond et al. (2012) [50,51]. However, multi-organizational HTA networks have received little attention with respect to their overall effectiveness as multi-organization arrangements [52].

To the best of our knowledge, no studies have been published on performance measurement frameworks that help network agencies to monitor the collaboration and its effectiveness, in terms of both the functioning of the multi-organizational agency and its impact on the population or the health system in general.

Indeed, a more comprehensive evaluation of HTA collaborative arrangements across public and private organizations is fundamental in order to understand multi-organizational network functioning, its governance and other determinants of performance.

From this stream of literature, we identify Hypothesis 3.

Hypothesis 3 (H3).

HTA agencies or programmes are becoming more and more interdependent, often working in networks; thus, accountability should consider the performance of such collaborations.

3. Method to Validate the Integrated Framework

We applied a two-step methodology to design the HTA network performance assessment framework. While in the first design step, we opted for a positive approach drawing insights from the literature, in the second step we revised it in the light of a constructive approach [53] to validate the framework proposed. This approach works through the direct involvement of researchers in several phases of the research process, such as testing solutions [54,55]. The approach is widely used in technical sciences, mathematics, operations analysis and clinical medicine, and in management research [54,56], as well as in building performance evaluation systems in healthcare [30,57]. The constructive approach entailed a series of interactions and consisted of three stages (i.e., "brainstorming", "narrowing down" and "ranking") that are listed in Table 1. The sector-specific case used for validating the framework was the Italian HTA network programme on MDs. The interactions between the researchers and multi-professional actors (i.e., experts in HTA) are summarized in Table 1. Experts selected were national and regional public managers, as well as multidisciplinary professionals involved in the HTA network on MDs within the national project named PronHTA.

Table 1.

Constructive approach: stage, type, and experts involved.

In each stage of the constructive approach, the framework, as well as the list of performance indicators were iteratively adapted, and/or extended, on the basis of the strategic document of the Italian Steering Committee (act of the State-Region Conference n.157/2017) which presents the main features of the national HTA programme for MDs (details are reported in “the Italian governance HTA” paragraph).

In the first stage (i.e., brainstorming), we gathered input from several sources for the identification of meaningful indicators: peer-reviewed articles as well as books and grey literature on collaborative governance and performance evaluation systems in healthcare.

In the second stage (i.e., narrowing down), the proposed indicators were rated on the basis of six features gathered from other experiences in the development of performance measurement systems [58,59,60,61]. Specifically, the proposed indicators were rated in terms of their relevance, validity, reliability, interpretability, feasibility, and actionability. At this stage, an on-line questionnaire was sent to the participating experts from the nine regional health systems involved in the PronHTA project and representatives from the national level in order to collect their opinions and prepare a structured discussion.

The third stage (i.e., grouping and ranking) was aimed at grouping the output from the previous stage according to the categories that were coherent with the framework proposed and identifying the final list of performance indicators. More specifically, the grouping and ranking stage consisted of three rounds of iterative discussions. First, we conducted a workshop that was open to other experts from the regional health administrations participating in the PronHTA project in order to discuss all the indicators rated in the second stage and their classification. The second round included an on-line circulation to all the experts of the list of performance indicators. The final round was carried out through face-to-face meetings to reach a consensus on the performance assessment framework and validation of the full list of indicators.

Once we had obtained an initial list of performance indicators and their assessment, the results were discussed face to face with representatives from the regional health administrations and the agency for regional healthcare (Agenas) during a half-day workshop. Following the principle of parsimony, the indicators reporting a low level of feasibility, relevance and validity were not included in the final list of performance indicators.
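The screening step can be illustrated with a short Python sketch. The study does not report the rating scale or any numeric threshold, so the 1 to 5 scale, the cut-off of 3, the use of the mean across experts, the rule that an indicator is dropped when any of the three features falls below the cut-off, and the sample data are all assumptions made here for illustration only.

```python
from statistics import mean

# Features used for the parsimony screen, as named in the text.
SCREENING_FEATURES = ("feasibility", "relevance", "validity")


def screen_indicators(ratings, cutoff=3.0):
    """Keep indicators whose mean expert rating reaches the cutoff
    on every screening feature.

    ratings: {indicator_id: {feature: [score from each expert]}}
    The cutoff and the 1-5 scale are hypothetical, not from the study.
    """
    kept = []
    for indicator_id, by_feature in ratings.items():
        means = {f: mean(by_feature[f]) for f in SCREENING_FEATURES}
        if all(m >= cutoff for m in means.values()):
            kept.append(indicator_id)
    return kept


# Invented example ratings (not study data).
example = {
    "X1": {"feasibility": [4, 5, 4], "relevance": [5, 4, 4], "validity": [4, 4, 3]},
    "X2": {"feasibility": [2, 1, 2], "relevance": [4, 4, 5], "validity": [4, 3, 4]},
}
print(screen_indicators(example))
```

In this invented example the second indicator is dropped because its mean feasibility rating falls below the assumed cut-off, even though it scores well on relevance and validity.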

The process lasted seven months from October 2017 to April 2018.

4. Framework of Analysis

Considering the importance of salience and the need to encourage the participation of the actors, we decided to use the language and references already familiar to the policy makers and stakeholders involved.

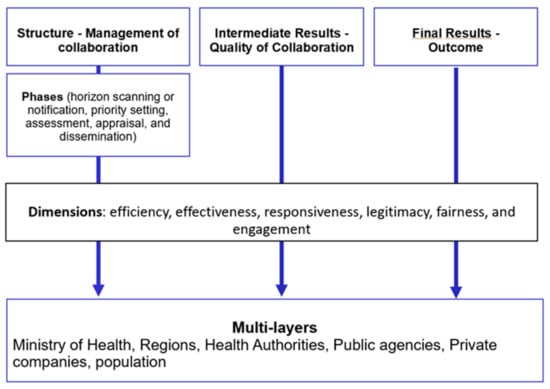

First, the categories applied by collaborative governance scholars to assess performance in the healthcare sector can be mainly traced back to the three blocks developed by Donabedian [62]: (i) structure (called determinants/conditions); (ii) intermediate results (including quality or effectiveness of collaboration in line with Cepiku [5]), and (iii) outcome. Donabedian’s broad blocks were re-adapted using the categories and terminology proposed by Cepiku [5].

Second, in line with what collaborative governance scholars suggest, we considered multi-layer structures such as the community or population (system level), network, and organizations [5,9,10,18]. The sector-specific domain allows us to use the term population to refer to the community level when indicating that macro level of analysis. The population-based approach is the paradigm by which decisions need to be made to maximize value for all people in the population, not just the patients seeking/receiving care, ensuring the prevention of inequity related to sociodemographic conditions [63,64]. In this sense, adopting a collective perspective closer to the paradigm of population medicine promoted by Gray [64], it is worth highlighting the need to assess the collaborative network performance over time by determining how well resources are used for all the people in need in the population.

Third, in order to analyze the collaborative governance approach in relation to the HTA network assessment, we also referred to both the performance management and HTA literature. Over the last three decades, scholars in both the performance management and HTA fields have reported that the performance (of an organization, a programme, or a service) should be multidimensional [65,66,67]. Unlike the HTA technique, which mainly converges towards well-identified assessment dimensions, performance management reviews have highlighted that there is often divergence in the number and type of dimensions to be considered in measuring the performance of healthcare organizations [68]. Therefore, combining the literature on performance management, collaborative governance, and HTA, we selected a total of six dimensions to be included in the proposed framework [5,18,50,51,54,60]. Moreover, it is also worth highlighting the need to measure performance across borders, both outside and inside the organizations [16,29,30]. Hence, the framework of analysis for measuring the Italian HTA network for MDs was built considering:

- Donabedian’s three blocks, re-read in the light of the review by Cepiku [5] on collaborative governance settings: (i) structure (management of collaboration); (ii) intermediate results (quality of collaboration); (iii) final results (outcome);

- The multi-layer structure, considering the stakeholders that play a role in the network programme, as suggested by collaborative governance scholars [4,9,10];

- The six dimensions that are the most recurrent in the three streams of literature: efficiency, effectiveness, responsiveness, legitimacy, fairness, and engagement [5,17,49,50,53,59].

Finally, we added the five phases of the HTA network process: horizon scanning (notification), priority setting, assessment, appraisal, and dissemination [69].

The elements considered to build the performance assessment framework for the HTA network programme are shown in Figure 1.

Figure 1.

The elements considered to build up the performance assessment framework for health technology assessment (HTA) network programmes.

Figure 1 shows that the three broad blocks of structure, intermediate results, and final results apply to different actors who may play different roles. In particular, some of the actors (such as regional administrations) may play both the role of final beneficiaries (when measuring the final results) and the role of members of the HTA network programme (when considering the intermediate results or the structure). The phases are present only in the structure block. The dimensions may refer to a specific phase of the structure, as well as to the final results or the intermediate results.
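The way these elements combine can be sketched as a small data structure. The Python example below is an illustrative sketch, not the authors' implementation: the class name, field names, and example codes are hypothetical, and the only rule it enforces is the one stated above, namely that a phase can be attached only to indicators in the structure block.

```python
from dataclasses import dataclass
from typing import Optional

# Blocks and phases as named in the text.
BLOCKS = ("structure", "intermediate results", "final results")
PHASES = ("notification", "priority setting", "assessment",
          "appraisal", "dissemination")


@dataclass(frozen=True)
class Indicator:
    """Hypothetical record for one performance indicator."""
    code: str
    block: str
    dimension: str
    phase: Optional[str] = None  # only meaningful for the structure block

    def __post_init__(self):
        if self.block not in BLOCKS:
            raise ValueError(f"unknown block: {self.block}")
        if self.phase is not None:
            if self.block != "structure":
                raise ValueError("phases apply only to the structure block")
            if self.phase not in PHASES:
                raise ValueError(f"unknown phase: {self.phase}")


# A structure indicator may carry a phase; codes here are placeholders.
ok = Indicator("C-example", "structure", "responsiveness", "priority setting")
print(ok)
```

Keeping the dimension as free text is a deliberate simplification, since the dimensions assigned to each block are those shown in the figures rather than a rule that can be derived from the text alone.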

5. The Integrated Performance Assessment Framework of HTA Network

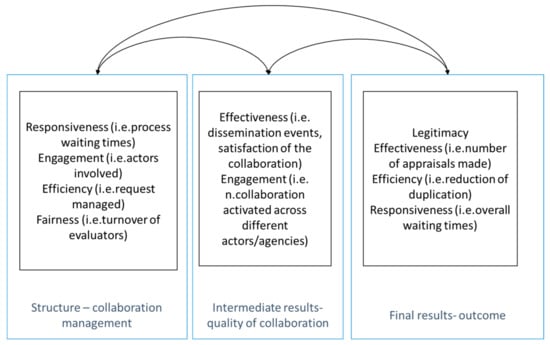

From the analysis of the three streams of literature (HTA, collaborative governance and performance management), we identified the dimensions reported in Figure 2 for each block.

Figure 2.

The integrated performance assessment framework for HTA network programme.

The three blocks are discussed in the following paragraphs.

5.1. Structure—Management of Collaboration

The proposed framework evaluates the structural and internal procedures of the network (efficiency, responsiveness, fairness, and learning), as well as the representativeness of the network participants. Indicators of the network structure should be defined according to the main phases characterizing the sector-specific case of interest. However, general indicators can also be used by following the main phases of the illustrative network case presented in this paper, i.e., the HTA network programme: (i) notification, (ii) priority setting, (iii) assessment, (iv) appraisal, and (v) dissemination. For the notification phase, in which various stakeholders (public healthcare institutions, patients, manufacturers, etc.) interested in introducing a medical technology into the health care system submit a request, it is possible to measure how many stakeholders have ever sent a request and whether the requests were completed correctly. For the priority setting and technology selection phase, an important domain is responsiveness, together with the reasons why requests were rejected or accepted. For the assessment phase, the technical evaluation report can be produced by independent agencies or by the central or local level (depending on the programme design). In this specific case, an important aspect that also relates to collaboration management is the potential use of benchmarking outcomes to refine processes and shift towards a culture of learning and continuous quality improvement [70,71]. For the appraisal phase, the recommendations are generally set by the central level and can be publicly disclosed on the central level websites. Finally, the dissemination phase, which includes monitoring and education, can be analyzed with another specific set of ad-hoc indicators.
Learning is often indirect and implicit in multi-organizational relationships [53]; in this case, however, learning is not a by-product of collaboration but is strategic and directly related to the reason for developing the HTA network programmes [37,72]. Indeed, both the central and local levels are responsible for disseminating the activities to align knowledge and experiences across network actors, as well as for monitoring the application of the recommendations and the results of this process in the real world. Information sharing is often reported as a main driver of the initial decision by an organization to participate in a (voluntary/partnership) network, and as a means of developing deeper collaborations and aligning the member-value proposition (mission-specific goals) [13].

5.2. Intermediate Results—Quality of Collaboration

The effectiveness or quality of collaboration can be analyzed in both positive and negative terms, which arise from the actions, relationships, and inter-dependencies characterizing collaborative governance regimes [5,8,9,73]. When assessing the quality or effectiveness of collaborations in a positive way, we refer to success indicators. In this sense, the performance assessment framework monitors the engagement or participation of stakeholders in the decision-making process (looking at both geographical and professional differences), which is Drummond’s tenth key principle. In addition, the framework measures the quality of collaborative governance regime actions, such as the quality of the technology assessment request in the HTA network programme, as well as participant satisfaction with being engaged in the cross-boundary collaboration. Previous literature showed that participant satisfaction can be the result of three factors (i.e., stakeholder involvement, network complexity and adaptive management) whose combinations lead to different configurations of network participant satisfaction [74]. Furthermore, trust among network participants is another indicator of collaborative governance success, as it is able to predict network performance in different network settings [75]. These indicators can work well as proxies of the effectiveness of the dissemination, training, and monitoring stages across collaborative governance regimes. On the other hand, when analyzing the quality or effectiveness of collaboration in a negative way, we refer to indicators of network failure. Among these, the proposed framework is able to detect static situations that can occur in the collaborative decision-making process by monitoring the frequency and timing of deadlocks.

5.3. Final Results—Outcome

Measuring outcomes is problematic because decision-making processes in governance networks are lengthy and the goals of actors can sometimes change over time [8]. For instance, one of the general outcomes deriving from inter-organizational collaborations is the reduction (or even the elimination) of duplicates [4,75], which is a more specific indicator linked to overall efficiency [10]. Reduction of duplicates can be informative in the first phases of the collaboration process; once the collaboration gets up to speed after its initial roll-out, duplicates should no longer be present and this indicator may no longer be relevant.

Another general outcome deriving from cross-boundary collaborations is the impact that the collaborative decision-making process of an organization, a programme, or a service can have at different reference levels (i.e., community and/or organizational levels), which is an indicator associated with the effectiveness of network governance [10,50,76]. Considering the sector-specific case, the effectiveness of the HTA network can be described as the impact of the programme on central, regional, and local governments and measured in terms of guidelines provided on the introduction/use of new technologies and/or resource allocation decisions informed, for example, by reducing the number of duplications in technology assessment. Furthermore, collaborative governance regimes should promptly provide evidence for decisions, so it is also important to monitor the responsiveness of the collaborative decision-making process, which represents one of Drummond’s key principles (No. 13) [50]. In the illustrative case of the HTA network programme, the centralized process (through a network in this case) may lead to an increased response time. At the same time, the formal implementation of HTA can have positive spillovers beyond sound and prompt reimbursement decisions, such as strengthening the dialogue between relevant stakeholders, focusing the public debate on patient-level outcomes [77], and increasing the perception of citizens and of other actors (e.g., regional health systems and health authorities) that the decisions regarding MDs are under control. More generally, if the network collaboration is effective, it should lead to greater legitimacy of and/or trust in public institutions as perceived by citizens on the basis of experience and satisfaction surveys [10,75,77].

6. Case Description and Analysis

6.1. Organizational Context and Initial Conditions

Italy’s health-care system is a regionally based national health service which provides universal coverage largely free of charge at the point of delivery, with HTA activities that are still fragmented. Overall, there is no single national HTA governmental agency. To some extent, HTAs have been conducted by the National Drug Agency (AIFA) for the management of pharmaceutical technologies and by the Agency for Regional Health Services (Agenas) for the general synchronization of national activities. More specifically, Agenas was assigned the task of coordinating and supporting the regions in the process of HTA, even though the regions themselves are responsible for the implementation. In a 2008 survey, Agenas found that five regional health systems (out of twenty-one) were strongly committed to developing HTA programmes and that an increasing number of hospitals had set up HTA units in order to support hospital-planning processes [78]. Four years later, sixteen regions had formally established a structured workgroup inside their organizations [79].

The governance of MDs has recently gained attention with the creation of a collaborative network among the regional health-care systems for the definition and use of instruments (Law 190/2014) and the appointment of the steering committee (Ministerial Decree 12 March 2015) within the remit of the Ministry of Health [80]. The current governance for MDs is an attempt to achieve a higher level of harmonization nationwide in terms of the methodology used for assessing and appraising biomedical technologies, as well as an important step towards the formal recognition of the need for ad-hoc economic evaluation methods [44]. Unique intrinsic characteristics, such as the strong dependence of performance on user skills and training, distinguish MDs from pharmaceutical technologies and make the use of traditional assessment methods challenging.

The strategic document outlining the aim, scope, and organization of the regional collaborative network and HTA process is included in the State-Region Conference agreement (n.157, 21 September 2017), which represents the “network activation” moment and “frames” the interaction of network participants [3].

The actors involved in the national HTA network programme for MDs are the Steering Committee, the 21 regional/provincial health systems, Agenas, the National Institute for Health, and other relevant stakeholders involved in the HTA network (patients, MD manufacturers and producers). All the actors can propose the introduction of a (new) MD through an open access online notification form. Every six months the network sets priorities for MD assessment.

Following the available strategic document, the Steering Committee has a key governance role in the HTA network including the coordination of the assessment activities carried out at a regional level and/or by public and private entities and methodological support. The Steering Committee is also in charge of the dissemination of HTA recommendations and monitoring of the national HTA programme impact.

6.2. Driving the Performance of the Collaborative Italian HTA Network on MDs

After the three stages of the constructive approach (Table 1), a total of 25 performance indicators were identified as potential measurements building the framework for assessing the impact of the national HTA programme for MDs. For each block of the proposed framework, it is possible to identify ad-hoc indicators that can be applied to monitor the performance achieved by the national HTA network programme. Table 2 describes a set of core indicators across the three framework blocks including the type of dimension, the indicator identification code, a description of the indicator, the phase of the HTA programme (only for the structure indicators), and the network actors involved.

Table 2. List of performance indicators to assess the HTA network programme for Medical Devices.

In detail, six indicators refer to the outcome or achievement of the programme (A1–A6), six describe the quality of collaboration (B1–B6), and 13 (C codes) are network structure indicators, which are based on the individual phases outlined in the strategic document of the Italian HTA programme for MDs (i.e., notification, priority setting, assessment, appraisal, and dissemination). Among those describing the quality of collaboration, three indicators (B1–B3) and a fourth indicator reporting the number of events organized to disseminate the results (B6) were selected as good proxies of the effectiveness of the dissemination, training and monitoring stages, with reference to the Drummond principles (Drummond Nos. 12 and 14) concerning standards of good practice for HTA organizations.

Network structure indicators refer to whether or not the appropriate processes and methods of task accomplishment are being followed. Specifically, they include: one indicator on the notification and selection of MDs for appraisal (CN1); three indicators on the priority setting phase (CP1–CP3); four indicators on the assessment phase (CA1–CA4); three indicators on the appraisal phase (CAp1–CAp3); and two indicators on dissemination (CD1–CD2). Among the network structure indicators, the proposed framework includes measures of the efficiency of the cross-boundary collaboration concerning the biomedical technologies that are assessed and appraised at the regional level and not through the national HTA programme for reasons of urgency (CA4). Moreover, it also encompasses technologies notified at the national level but not included in the priority list, which are then assessed by regional agencies and appraised at a national level in order to provide national recommendations (CA2).
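As an illustration only, the coding scheme described above can be written down as a small catalogue and tallied against the 25 indicators reported. The Python sketch below is not part of the study: the class and function names are hypothetical, the individual codes are expanded from the ranges given in the text, and the phase labels are assumed to follow the five phases named in the strategic document.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Indicator:
    """One entry of the indicator catalogue (hypothetical structure)."""
    code: str                    # identification code, e.g. "CA4"
    block: str                   # "outcome", "collaboration" or "structure"
    phase: Optional[str] = None  # HTA phase, set only for structure indicators


def build_catalogue() -> List[Indicator]:
    """Expand the code ranges quoted in the text into individual indicators."""
    catalogue = [Indicator(f"A{i}", "outcome") for i in range(1, 7)]
    catalogue += [Indicator(f"B{i}", "collaboration") for i in range(1, 7)]
    # Structure indicators grouped by phase (phase labels are an assumption).
    structure = {
        "notification": ["CN1"],
        "priority setting": ["CP1", "CP2", "CP3"],
        "assessment": ["CA1", "CA2", "CA3", "CA4"],
        "appraisal": ["CAp1", "CAp2", "CAp3"],
        "dissemination": ["CD1", "CD2"],
    }
    for phase, codes in structure.items():
        catalogue += [Indicator(code, "structure", phase) for code in codes]
    return catalogue


catalogue = build_catalogue()
per_block = Counter(ind.block for ind in catalogue)
per_phase = Counter(ind.phase for ind in catalogue if ind.phase is not None)

print(dict(per_block))
print(dict(per_phase))
print(len(catalogue))  # 25, matching the total reported in the text
```

A flat list with an optional phase field is used here because only the structure indicators are tied to a phase of the HTA programme; the actors involved and the indicator descriptions from Table 2 could be added as further fields.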

For each indicator there is a specific document with the rationale, algorithm, source of data and additional notes. These documents are available upon request from the authors.

There may, however, be some issues relating to the operationalization of performance measures. On the one hand, there is a danger of applying measures that are too simplistic; on the other hand, some relatively straightforward measures are needed if effectiveness is to be appraised in a practical way so as to provide input to programme management decisions.

Moreover, the interpretation of some of the indicators may change over the lifetime of the HTA network programme. For instance, the number of requests rejected because an HTA report already exists can be considered a positive sign of the reduction of duplicates in the first few years. However, when the HTA network is at a mature stage, this would be interpreted as a negative sign because it suggests that the dissemination and learning efforts across the collaborative network have not been effective. In addition, the research process showed that the main actors in the HTA network programme involved in designing the framework provided very positive feedback, which can be considered a good proxy of its future implementation [81].

Finally, it is worth highlighting that the proposed performance assessment framework has already successfully passed all the policy validation stages required by the Steering Committee and other policy bodies to be formally included in the guidelines and recommendations issued at the central government level. In particular, in mid-2019, the logical structure of the framework was incorporated into the Guidelines of the Italian Ministry of Health for the Governance of MDs and HTA [82,83].

7. Discussion

The contribution of our study is twofold: (i) theoretical, as it provides an integrated framework combining different literature streams, above all performance management and collaborative governance; (ii) empirical, as the performance assessment framework was built and validated on a sector-specific case through continuous interaction with the actors involved in the national HTA network programme.

First, collaborative governance is a relatively new form of governance that engages different stakeholders in “consensus-oriented decision-making” [84]. Inter-organizational collaborations and collaborative strategies with stakeholders are cross-sectoral approaches used to achieve advanced collective learning and problem solving in complex societal issues [2,84]. Nowadays, as sector-specific knowledge and culture are increasing in complexity, the value of inter-organizational collaborations and collective integration is becoming crucial to adequately deal with “wicked problems” like the management of a pandemic [85]. Therefore, each sector requires sector-specific mechanisms and refers to a sector-specific stream of literature, as network structure and contextual factors can work as determinants of network success [86,87].

As highlighted by previous literature, the network structural and contextual factors are even more relevant in highly professionalized and sector-specific organizations, such as the HTA network programme proposed in this study as an illustrative case of collaborative governance. Thus, we combined three streams of literature: the two general streams on collaborative governance and on performance management of collaborative relationships, and the sector-specific stream in the field of HTA.

Second, the paper provided a hybrid framework to assess the HTA network programme performance using the Italian case.

The HTA process is complex and there is no single answer to the question of how to assess the effectiveness of HTA programmes. Programmes can vary considerably in their structure, their values and the level of importance that is placed on the different attributes [88]. Furthermore, the effectiveness of a programme can be measured from different perspectives. The discussion in this paper has tended to reflect the perspective of the organizations and stakeholders involved in the network programme, responding mainly to the need for internal management and accountability to governance.

Nonetheless, the assessment may depend on the level for which HTA programmes are formulated (e.g., national and regional), on the institutions’ assessments, and on the maturity of the individual institutions’ HTA programmes. As reported in a recent review of leading European HTA bodies by Fuchs et al. [89], institutions with less experience addressed more themes from a structural perspective, such as resource capacity, whereas institutions with medium experience addressed themes from a procedural perspective, such as “Coordination of assessment”.

The proposed integrated framework was targeted at regional, local, and central health systems, health authorities, and the other multi-professional actors involved in the national HTA network programme. Using the key characteristics that were most recurrent in the three streams of literature, and through a hybridization solution integrating multiple levels and stakeholder perspectives, we developed a sophisticated performance measurement system. The advantages of the chosen methodology to develop and validate the performance assessment framework included the combination of theoretically based and empirically grounded research, the possibility to integrate qualitative and quantitative evidence, as well as the rigor and the inherent goal-directed problem-solving nature of the constructive research approach. However, we are aware that our study design presents a series of limitations that are common to qualitative research techniques. First, the adoption of a convenience sample of selected experts involved in all three stages of framework development and validation was driven by feasibility, given time and resources. Furthermore, the final list of performance indicators was achieved by reaching an expert consensus view, similar to what happens with the Delphi technique, which may lead to a compromise position rather than true consensus, even though explicit criteria for ranking and selecting performance indicators were followed.

The proposed framework consists of a multi-layer and multi-level structure with multiple dimensions obtained using indicators of outcomes and proxies of outcomes, responsiveness, and process. Crucial dimensions, such as responsiveness and engagement/participation, appear multiple times in the framework to provide a general vision of the overall network performance effectiveness (outcome or final results) and, simultaneously, an operational vision allowing ad-hoc actions and problem solving within the collaborative network (structure). A possible limitation concerns the incongruence issues and/or potential collisions that might occur over time from the use of the specific performance indicators proposed in this study to assess the HTA network programme for MDs. In particular, the repetition of crucial framework dimensions, as well as potential subtle overlaps between different performance indicators, may challenge the stakeholders that will be responsible for framework implementation, performance appraisal, and network sustainability. To address these potential pitfalls, the identification of confounding factors and incongruence problems might be anticipated at the stage of planning and priority setting. At this stage, mitigation solutions and possible uptakes should also be planned and anticipated. Furthermore, given the inherent complexity of the proposed hybrid framework engaging multiple stakeholders, other pitfalls might appear during the implementation stage of the collaborative governance framework towards HTA network specific mechanisms. For example, the substantial coordination efforts made by the steering body may reduce the agility of the hybrid system itself. Indeed, in lead-organization network structures, the meta-governor or lead organization is pivotal for the success of the network, and its power role should be extensively assessed [52].

The twofold contribution of our study, both theoretical and empirical, informs research scholars, policy makers, and other HTA stakeholders involved in the network in multiple ways.

The framework provides a new perspective for HTA scholars who are used to assessing programmes instead of networks. In particular, the performance framework does not focus only on assessing the quality of the information and analysis that support decision-making, which is the immediate target of the programme, but moves beyond the tangible products of an HTA programme by considering the quality of collaboration and the process variables by which assessment is produced, appraisal is made, and stakeholders are involved and informed. The framework also supports multi-professional actors in the network in dealing with the difficult task of identifying, through a systematic monitoring tool, the strengths and weaknesses of the network programme. Moreover, the hybrid framework may help policy makers and health authorities to understand the HTA network mechanism, monitor the effectiveness of the collaborative governance performance, and ensure the network sustainability over time. Network sustainability has been defined as “the ability to continue the demonstrated effects over time” once the cross-boundary collaboration gets up to speed after its initial roll-out in practice [9]. However, although the sophisticated measurement system presented was designed alongside the participants of the HTA network for MDs, it needs to be validated throughout its implementation over the HTA network lifetime. In fact, only the systematic use of the framework can provide suggestions regarding the real utility and appropriateness of the performance indicators. Indeed, both performance management support capacity and quality of performance information predict whether managers actually use the data [90]. Fountain [26] notes: "public managers are challenged when asked to maintain vertical accountability in their agency activities while supporting horizontal or networked initiatives for which lines of accountability are less direct and clear."

The proposed integrated framework, as well as the study design, can be exploited in the healthcare sector beyond the sector-specific case presented here. In particular, hybrid systems of cross-boundary collaboration appear to have great potential to be applied in complex cross-sectoral policies, such as those integrating social and healthcare services, but also in simpler collaborative settings where healthcare professionals are encouraged to work in networks (e.g., primary care networks) or multi-disciplinary physicians are informally working in networks to provide care for patients along all phases of complex care pathways [91].

Finally, it is worth pointing out that the proposed performance framework to assess the HTA network programme for MDs was politically validated by the Steering Committee and other Italian policy bodies in 2019. In particular, the value of the proposed framework has been recognized by central government and its logical structure incorporated into the Guidelines of the Italian Ministry of Health for the governance of MDs and HTA [92,93].

8. Conclusions

It is possible to identify two main practice implications from this study.

First, the integrated hybrid framework presented may help national and local health authorities, as well as policy makers, to understand the HTA network specific mechanisms, monitor the network performance, and ensure its sustainability over time. Network sustainability has been defined as “the ability to continue the demonstrated effects over time” after its initial roll-out in practice and in a longer-term adaptation phase [9]. However, only through application over the HTA network lifetime will it be possible to understand whether (and how) this sophisticated performance measurement system may significantly contribute to the network management and increase its overall performance effectiveness.

Second, an important issue concerning the meta-governor role should be stressed when dealing with the assessment of the collaborative network. The meta-governor must regulate and self-regulate the network, and such a role is often covered by the state or a central agency. In the Italian HTA network programme for MDs, the Steering Committee can be considered as the meta-governor. The power and capacity of this collegial meta-governor will determine the effective implementation of the assessment framework and the effectiveness of the network itself. The role of the meta-governor is crucial and also difficult because it has to regulate the network without undermining autonomy [92,93].

Our study informs future research in several ways. Despite the increasing interest in HTA networks, at both national and supranational level, little attention has been directed to measuring their effectiveness in terms of multi-organization arrangements. Using literature above all from performance management and collaborative governance, the paper provides a framework, rather than a comprehensive guide, to assess the performance of HTA networks by considering the Italian HTA network programme for MDs as the reference case. There are significant challenges in assessing the effectiveness of HTA programmes, as other parties or organizations collaborating in the achievement of overall goals determine many aspects of their effectiveness. This framework places emphasis on the collaborative nature of the arrangement and on the more traditional multidimensional and multi-layer characteristics to better understand the strengths and weaknesses of the HTA network. Indicators cover the outcome dimension (for the final beneficiaries like the regional health systems), the intermediate results dimension (the effectiveness and participation) and the process dimension. Further studies could focus on the use of the proposed framework not only for further empirical validation purposes but also for a better understanding of the meta-governor’s capacity to make the HTA network effective in its aims. Concerning validation, it should be stressed that the proposed framework received a formal political validation in mid-2019, as the logical structure of the framework was incorporated into the Guidelines of the Italian Ministry of Health for the governance of MDs and HTA [82,83].

Author Contributions

Conceptualization, M.V. and F.F.; methodology, M.V.; data collection, M.V. and F.F.; writing—original draft preparation, M.V. and F.F., writing—review and editing, M.V., F.F., and S.M.; supervision, M.V. All authors have read and agreed to the published version of the manuscript.

Funding

The study benefited from the funding received by the Tuscany Region to participate in the PronHTA project financed by AGENAS.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We are very grateful to Grazia Campanile from the regional health administration of Tuscany who supported our different approach in assessing the HTA network programme. We are also indebted to Maria Cristina Nardulli, Andrea Belardinelli (Tuscany) and Anna Cavazzana (Veneto). We thank all the regional health authorities for their suggestions and all the team members involved in the working group “9a Sviluppo di indicatori di monitoraggio del programma HTA” of the PronHTA project: Marina Cerbo (Agenas), Michele Tringali (Lombardia), Luciana Ballini (Emilia Romagna), Stefano Gherardi and Giandomenico Nollo (Trento), Giancarlo Conti and Marco Oradei (Marche), Alessandro Montedori (Umbria), Elisabetta Graps and Giovanni Mastrandrea (Puglia) and Gaddo Flego (Liguria).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Klijn, E. Governance and Governance Networks in Europe: An Assessment of 10 years of research on the theme. Public Manag. Rev. 2008, 10, 505–525. [Google Scholar] [CrossRef]

- Ferlie, E.; Fitzgerald, L.; Mcgivern, G.; Dopson, S.; Bennett, C. Public policy networks and “wicked problems”: A nascent solution? Public Adm. 2011, 89, 307–324. [Google Scholar] [CrossRef]

- Agranoff, R.; Mcguire, M. Big Questions in Public Network Management Research. J. Public Adm. Res. Theory J-Part 2001, 11, 195–326. [Google Scholar] [CrossRef]

- Emerson, K.; Nabatchi, T.; Balogh, S. An integrative framework for collaborative governance. J. Public Adm. Res. Theory 2012, 22, 1–29. [Google Scholar] [CrossRef]

- Cepiku, D. Collaborative Governance. In The Routledge Handbook of Global Public Policy and Administration; Routledege Taylor & Francis Group: Oxon, UK; New York, NY, USA, 2017. [Google Scholar]

- Kim, Y.; Johnston, E.W.; Kang, H.S. A Computational Approach to Managing Performance Dynamics in Networked Governance Systems. Public Perform. Manag. Rev. 2011, 34, 580–597. [Google Scholar] [CrossRef]

- Sørensen, E.V.A.; Torfing, J. Making governance networks effective and democratic through metagovernance. Public Adm. 2009, 87, 234–258. [Google Scholar] [CrossRef]

- Klijn, E.H.; Steijn, B.; Edelenbos, J. The Impact of Network Management on Outcomes in Governance Networks. Public Adm. 2010, 88, 1063–1082. [Google Scholar] [CrossRef]

- Emerson, K.; Nabatchi, T. Evaluating the productivity of collaborative governance regimes: A performance matrix. Public Perform. Manag. Rev. 2015, 38, 717–747. [Google Scholar] [CrossRef]

- Provan, K.G.; Milward, H.B. Do Networks Really Work?: A Framework for Evaluating Public-Sector Organizational Networks. Public Adm. Rev. 2001, 61, 414–423. [Google Scholar] [CrossRef]

- Cristofoli, D.; Meneguzzo, M.; Riccucci, N. Collaborative administration: The management of successful networks. Public Manag. Rev. 2017, 19, 275–283. [Google Scholar] [CrossRef]

- Kenis, P.; Provan, K.G. Towards an exogenous theory of public network performance. Public Adm. 2009, 87, 440–456. [Google Scholar] [CrossRef]

- Koliba, C.; Wiltshire, S.; Scheinert, S.; Turner, D.; Campbell, E.; Koliba, C.; Wiltshire, S.; Scheinert, S.; Turner, D. The critical role of information sharing to the value proposition of a food systems network. Public Manag. Rev. 2017, 19. [Google Scholar] [CrossRef]

- Vangen, S. Culturally diverse collaborations: A focus on communication and shared understanding. Public Manag. Rev. 2017, 19, 305–325. [Google Scholar] [CrossRef]

- Mandell, M.; Keast, R.; Chamberlain, D. Collaborative networks and the need for a new management language. Public Manag. Rev. 2017, 19. [Google Scholar] [CrossRef]

- Choi, I.; Moynihan, D. How to foster collaborative performance management? Key factors in the US federal agencies. Public Manag. Rev. 2019, 21, 1538–1559. [Google Scholar] [CrossRef]

- Chambers, D.A.; Glasgow, R.E.; Stange, K.C. The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implement. Sci. 2013, 8, 1–11. [Google Scholar] [CrossRef]

- Ansell, C.; Gash, A. Collaborative governance in theory and practice. J. Public Adm. Res. Theory 2008, 18, 543–571. [Google Scholar] [CrossRef]

- Koliba, C.J.; Mills, R.M.; Zia, A. Accountability in Governance Networks: An Assessment of Public, Private, and Nonprofit Emergency Management Practices Following Hurricane Katrina. Public Adm. Rev. 2011, 71, 210–220. [Google Scholar] [CrossRef]

- Milward, H.B.; Provan, K.G. A Manager ’s Guide to Choosing and Using Collaborative Networks Networks and Partnerships Series; IBM Center for the Business of Government: Washington, DC, USA, 2006; pp. 6–28.

- Romzek, B.; LeRoux, K.; Johnston, J.; Kempf, R.J.; Piatak, J.S. Informal accountability in multisector service delivery collaborations. J. Public Adm. Res. Theory 2014, 24, 813–842. [Google Scholar] [CrossRef]

- Bovens, M. Analysing and assessing accountability: A conceptual framework1. Eur. Law J. 2007, 13, 447–468. [Google Scholar] [CrossRef]

- Bovens, M.; Schillemans, T.; Hart, P.T. Does public accountability work? An assessment tool. Public Adm. 2008, 86, 225–242. [Google Scholar] [CrossRef]

- Provan, K.G.; Lemaire, R.H. Core Concepts and Key Ideas for Understanding Public Sector Organizational Networks: Using Research to Inform Scholarship and Practice. Public Adm. Rev. 2012, 72, 638–648. [Google Scholar] [CrossRef]

- Vangen, S.; Hayes, J.P.; Cornforth, C. Governing Cross-Sector, Inter-Organizational Collaborations. Public Manag. Rev. 2015, 17, 1237–1260. [Google Scholar] [CrossRef]

- Fountain, J.E. Implementing Cross-Agency Collaboration: A Guide for Federal Managers. IBM Cent. Bus. Gov. Collab. Across Boundaries Ser. 2013, 1–54. [Google Scholar]

- Lee, D.; McGuire, M.; Jong Ho, K. Collaboration, strategic plans, and government performance: The case of efforts to reduce homelessness. Public Manag. Rev. 2018, 20, 360–376. [Google Scholar] [CrossRef]

- McGuire, M. Collaborative public management: Assessing what we know and how we know it. Public Adm. Rev. 2006, 66, 33–43. [Google Scholar] [CrossRef]

- Bititci, U.; Garengo, P.; Dorfler, V.; Nudurupati, S. Performance Measurement: Challenges for Tomorrow. Int. J. Mangement Rev. 2012, 14, 305–327. [Google Scholar] [CrossRef]

- Nuti, S.; Noto, G.; Vola, F.; Vainieri, M. Let’s play the patients music. Manag. Decis. 2018, MD-09-2017-0907. [Google Scholar] [CrossRef]

- Noto, G.; Coletta, L.; Vainieri, M. Measuring the performance of collaborative governance in food safety management: An Italian case study. Public Money Manag. 2020, 1–10. [Google Scholar] [CrossRef]

- Vainieri, M.; Lungu, D.A.; Nuti, S. Insights on the effectiveness of reward schemes from 10-year longitudinal case studies in 2 Italian regions. Int. J. Health Plann. Manage. 2018, 1–11. [Google Scholar] [CrossRef]

- Sorenson, C.; Drummond, M.; Kanavos, P. Ensuring Value for Money in Health Care: The Role of Health Technology Assessment in the European Union; World Health Organization: Copenhagen, Denmark, 2008; pp. 1–180. [Google Scholar]

- Banta, D. The development of health technology assessment. Health Policy 2003, 63, 121–132. [Google Scholar] [CrossRef]

- Ricciardi, W.; Cicchetti, A.; Marchetti, M. Health Technology Assessment’s Italian Network: Origins, aims and advancement. Ital. J. Public Health 2005, 2, 29–32. [Google Scholar]

- Kristensen, F.B.; Mäkelä, M.; Neikter, S.A.; Rehnqvist, N.; Håheim, L.L.; Mørland, B.; Milne, R.; Nielsen, C.P.; Busse, R.; Lee-Robin, S.H.; et al. European network for health technology assessment, eunethta: Planning, development, and implementation of a sustainable european network for health technology assessment. Int. J. Technol. Assess. Health Care 2009, 25, 107–116. [Google Scholar] [CrossRef]

- Kristensen, F.B.; Lampe, K.; Wild, C.; Cerbo, M.; Goettsch, W.; Becla, L. The HTA Core Model®—10 Years of Developing an International Framework to Share Multidimensional Value Assessment. Value Health 2017, 20, 244–250. [Google Scholar] [CrossRef]

- Tarricone, R.; Torbica, A.; Ferré, F.; Drummond, M. Generating appropriate clinical data for value assessment of medical devices: What role does regulation play? Expert Rev. Pharm. Outcomes Res. 2014, 14, 707–718. [Google Scholar] [CrossRef] [PubMed]

- Erdös, J.; Ettinger, S.; Mayer-Ferbas, J.; de Villiers, C.; Wild, C. European Collaboration in Health Technology Assessment (HTA): Goals, methods and outcomes with specific focus on medical devices. Wiener Med. Wochenschr. 2019, 169, 284–292. [Google Scholar] [CrossRef] [PubMed]

- O’Rourke, B.; Oortwijn, W.; Schuller, T. The new definition of health technology assessment: A milestone in international collaboration. Int. J. Technol. Assess. Health Care 2020, 36, 187–190. [Google Scholar] [CrossRef]

- Martelli, F.; La Torre, G.; Di Ghionno, E.; Staniscia, T.; Neroni, M.; Cicchetti, A.; Von Bremen, K.; Ricciardi, W.; Carlo, F.; Maccarini, M.; et al. Health technology assessment agencies: An international overview of organizational aspects. Int. J. Technol. Assess. Health Care 2007, 23, 414–424. [Google Scholar] [CrossRef]

- Ciani, O.; Wilcher, B.; Blankart, C.R.; Hatz, M.; Rupel, V.P.; Erker, R.S.; Varabyova, Y.; Taylor, R.S. Health Technology Assessment of Medical Devices: A Survey of Non-European Union Agencies. Int. J. Technol. Assess. Health Care 2015, 31, 154–165. [Google Scholar] [CrossRef]

- Moharra, M.; Kubesch, N.; Estrada, M.; Parada, T.; Cortés, M.; Espallargues, M.; on behalf of Work Package 8, Eu. project. Survey report on HTA organisations. Barc. Catalan Agency Health Technol. Assess. Res. 2008. [Google Scholar]

- Torbica, A.; Tarricone, R.; Drummond, M. Does the approach to economic evaluation in health care depend on culture, values, and institutional context? Eur. J. Health Econ. 2017. [Google Scholar] [CrossRef] [PubMed]

- Facey, K.M.; Ploug Hansen, H.; Single, A.N. Patient Involvement in Health Technology Assessment; Springer: Singapore, 2017. [Google Scholar]

- Gagnon, M.-P.; Desmartis, M.; Lepage-Savary, D.; Gagnon, J.; St-Pierre, M.; Rhainds, M.; Lemieux, R.; Gauvin, F.-P.; Pollender, H.; Légaré, F. Introducing patients’ and the public’s perspectives to health technology assessment: A systematic review of international experiences. Int. J. Technol. Assess. Health Care 2011, 27, 31–42. [Google Scholar] [CrossRef]

- Abelson, J.; Wagner, F.; DeJean, D.; Boesveld, S.; Gauvin, F.P.; Bean, S.; Axler, R.; Petersen, S.; Baidoobonso, S.; Pron, G.; et al. PUBLIC and PATIENT INVOLVEMENT in HEALTH TECHNOLOGY ASSESSMENT: A FRAMEWORK for ACTION. Int. J. Technol. Assess. Health Care 2016, 32, 256–264. [Google Scholar] [CrossRef]

- Hailey, D. Elements of Effectiveness for Health Technology Assessment; Alberta Heritage Foundation for Medical Research (AHFMR): Edmont, AB, Canada, 2003. [Google Scholar]

- Lafortune, L.; Farand, L.; Mondou, I.; Sicotte, C.; Battista, R. Assessing the performance of health technology assessment organizations: A framework. Int. J. Technol. Assess. Health Care 2008, 24, 76–86. [Google Scholar] [CrossRef]

- Drummond, M.; Neumann, P.; Jönsson, B.; Luce, B.; Schwartz, J.S.; Siebert, U.; Sullivan, S.D. Can we reliably benchmark health technology assessment organizations? Int. J. Technol. Assess. Health Care 2012, 28, 159–165. [Google Scholar] [CrossRef]

- Kristensen, F.B.; Husereau, D.; Huić, M.; Drummond, M.; Berger, M.L.; Bond, K.; Augustovski, F.; Booth, A.; Bridges, J.F.P.; Grimshaw, J.; et al. Identifying the Need for Good Practices in Health Technology Assessment: Summary of the ISPOR HTA Council Working Group Report on Good Practices in HTA. Value Health 2019, 22, 13–20. [Google Scholar] [CrossRef]

- Provan, K.G.; Kenis, P. Modes of network governance: Structure, management, and effectiveness. J. Public Adm. Res. Theory 2008, 18, 229–252. [Google Scholar] [CrossRef]

- Provan, K.G.; Sydow, J. Evaluating inter-organizational relationships. Oxford Handb. Inter. Organ. Relat. 2008, 691–718. [Google Scholar]

- Kasanen, E.; Lukka, K.; Siitonen, A. The constructive approach in management accounting research. J. Manag. Account. Res. 1993, 5, 243–264. [Google Scholar]

- Labro, E.; Tuomela, T.S. On bringing more action into management accounting research: Process considerations based on two constructive case studies. Eur. Account. Rev. 2003, 12, 409–442. [Google Scholar] [CrossRef]

- Mitchell, F.; Nørreklit, H.; Raffnsøe-Møller, M. A pragmatic constructivist approach to accounting practice and research. Qual. Res. Account. Manag. 2008, 5, 184–206. [Google Scholar] [CrossRef]

- Nuti, S.; Seghieri, C.; Vainieri, M. Assessing the effectiveness of a performance evaluation system in the public health care sector: Some novel evidence from the Tuscany region experience. J. Manag. Gov. 2012. [Google Scholar] [CrossRef]

- Pencheon, D. The Good indicators guide: Understanding how to use and choose indicators. Association of Public Health Observatories. Assoc. Public Heal. Obs. Inst. Innov. Improv. 2007. [Google Scholar]

- Veillard, J.; Champagne, F.; Klazinga, N.; Kazandjian, V.; Arah, O.A.; Guisset, A. A performance assessment framework for hospitals: The WHO regional office for Europe PATH project. Int. J. Qual. Health Care 2005, 17, 487–496. [Google Scholar] [CrossRef] [PubMed]

- Vainieri, M.; Fabrizi, A.C.; Demicheli, V. Valutazione e misurazione dei servizi di prevenzione. Limiti e potenzialità derivanti da uno studio interregionale. Polit. Sanit. 2013. [Google Scholar]

- Gagliardi, A.R.; Fung Keep Fung, M.; Langer, B.; Stern, H.; Brown, A. Development of ovarian cancer surgery quality indicators using a modified Delphi approach. Gynecol. Oncol. 2005. [Google Scholar] [CrossRef]

- Donabedian, A. Explorations in Quality Assessment and Monitoring; Health Administration Press: Ann Arbor, MI, USA, 1980. [Google Scholar]

- Gray, M.; El Turabi, A. Optimising the value of interventions for populations. BMJ 2012, 345.

- Gray, M.; Pitini, E.; Kelley, T.; Bacon, N. Managing population healthcare. J. R. Soc. Med. 2017, 110, 434–439.

- Kelley, E.; Hurst, J. Health Care Quality Indicators Project. 2006.

- Arah, O.A.; Westert, G.P.; Hurst, J.; Klazinga, N.S. A conceptual framework for the OECD Health Care Quality Indicators Project. Int. J. Qual. Health Care 2006, 18, 5–13.

- Nielsen, C.P.; Funch, T.M.; Kristensen, F.B. Health technology assessment research trends and future priorities in Europe. J. Health Serv. Res. Policy 2010, 16 (Suppl. S2), 6–15.

- Arah, O.A.; Klazinga, N.S.; Delnoij, D.M.J.; ten Asbroek, A.H.A.; Custers, T. Conceptual frameworks for health systems performance: A quest for effectiveness, quality, and improvement. Int. J. Qual. Health Care 2003, 15, 377–398.

- Jommi, C.; Cavazza, M. Il processo decisionale negli Istituti di Health Technology Assessment. In Rapporto OASI; EGEA, Ed.; EGEA: Milano, Italy, 2009; pp. 157–188.

- Marsh, K.; Ijzerman, M.; Thokala, P.; Baltussen, R.; Boysen, M.; Kaló, Z.; Lönngren, T.; Mussen, F.; Peacock, S.; Watkins, J.; et al. Multiple Criteria Decision Analysis for Health Care Decision Making—Emerging Good Practices: Report 2 of the ISPOR MCDA Emerging Good Practices Task Force. Value Health 2016, 19, 125–137.

- Oortwijn, W.; Determann, D.; Schiffers, K.; Tan, S.S.; van der Tuin, J. Towards Integrated Health Technology Assessment for Improving Decision Making in Selected Countries. Value Health 2017, 20, 1121–1130.

- Wang, T.; Lipska, I.; McAuslane, N.; Liberti, L.; Hövels, A.; Leufkens, H. Benchmarking health technology assessment agencies - Methodological challenges and recommendations. Int. J. Technol. Assess. Health Care 2020, 36, 332–348.

- Crosby, B.C.; Bryson, J.M. Integrative leadership and the creation and maintenance of cross-sector collaborations. Leadersh. Q. 2010, 21, 211–230.

- Verweij, S.; Klijn, E.H.; Edelenbos, J.; Van Buuren, A. What makes governance networks work? A fuzzy set qualitative comparative analysis of 14 Dutch spatial planning projects. Public Adm. 2013, 91, 1035–1055.

- Markovic, J. Contingencies and organizing principles in public networks. Public Manag. Rev. 2017, 19, 1–20.

- Haynes, B. Can it work? Does it work? Is it worth it? Br. Med. J. 1999, 319, 652–653.

- Garrido, M.V.; Kristensen, F.B.; Nielsen, C.P.; Busse, R. Health Technology Assessment and Health Policy-Making in Europe: Current Status, Challenges and Potential; European Observatory Studies Series N°14; European Observatory on Health Systems and Policies: Brussels, Belgium, 2008.

- Favaretti, C.; Cicchetti, A.; Guarrera, G.; Marchetti, M.; Ricciardi, W. Health technology assessment in Italy. Int. J. Technol. Assess. Health Care 2009, 25 (Suppl. S1), 127–133.

- Garattini, L.; van de Vooren, K.; Curto, A. Regional HTA in Italy: Promising or confusing? Health Policy 2012, 108, 203–206.

- Tarricone, R.; Armeni, P.; Amatucci, F.; Borsoi, L.; Callea, G.; Costa, F.; Federici, C.; Torbica, A.; Marletta, M. Programma nazionale di HTA per dispositivi medici: Prove tecniche di implementazione. In Rapporto OASI; EGEA, Ed.; EGEA: Milano, Italy, 2017; pp. 535–554.

- Ferreira, A.; Otley, D. The design and use of performance management systems: An extended framework for analysis. Manag. Account. Res. 2009, 20, 263–282.

- Ministero della Salute. Cabina di Regia del Programma Nazionale HTA, Documento finale del Gruppo di lavoro, Metodi, Formazione e Comunicazione. Available online: http://www.salute.gov.it/imgs/C_17_pubblicazioni_2855_allegato.pdf (accessed on 15 March 2021).

- Agenzia Nazionale per i Servizi Sanitari Regionali (AGENAS). L’utilizzo di strumenti per il governo dei dispositivi medici e per Health Technology Assessment (HTA)—(PRONHTA). 2019. Available online: https://www.trentinosalute.net/Aree-tematiche/Innovazione-e-ricerca/Health-Technology-Assessment-HTA/Report/Relazione-del-progetto-di-Ricerca-Autofinanziata-L-utilizzo-di-strumenti-per-il-governo-dei-dispositivi-medici-per-l-Health-Technology-Assessment-HTA-PRONHTA (accessed on 15 March 2021).

- Gash, A. Collaborative Governance; Edward Elgar Publishing: Cheltenham, UK, 2016.

- van den Oord, S.; Vanlaer, N.; Marynissen, H.; Brugghemans, B.; Van Roey, J.; Albers, S.; Cambré, B.; Kenis, P. Network of Networks: Preliminary Lessons from the Antwerp Port Authority on Crisis Management and Network Governance to Deal with the COVID-19 Pandemic. Public Adm. Rev. 2020, 80, 880–894.

- Provan, K.; Milward, H.B. A Preliminary Theory of Interorganizational Network Effectiveness: A Comparative Study of Four Community Mental Health Systems. Adm. Sci. Q. 1995, 40, 1–33.

- Provan, K.G.; Sebastian, J.G. Networks within Networks: Service Link Overlap, Organizational Cliques, and Network Effectiveness. Acad. Manag. J. 1998, 41, 453–463.

- Alberta Heritage Foundation for Medical Research. Elements of Effectiveness for Health Technology Assessment Programs; Alberta Heritage Foundation for Medical Research: Edmonton, AB, Canada, 2003.

- Fuchs, S.; Olberg, B.; Panteli, D.; Perleth, M.; Busse, R. HTA of medical devices: Challenges and ideas for the future from a European perspective. Health Policy 2017, 121, 215–229.

- Tantardini, M.; Kroll, A. The Role of Organizational Social Capital in Performance Management. Public Perform. Manag. Rev. 2016, 39, 83–99.

- Nuti, S.; Ferré, F.; Seghieri, C.; Foresi, E.; Stukel, T.A. Managing the performance of general practitioners and specialists referral networks: A system for evaluating the heart failure pathway. Health Policy 2020, 124, 44–51.

- Sørensen, E.; Torfing, J. The democratic anchorage of governance networks. Scand. Polit. Stud. 2005, 28, 195–218.

- Sørensen, E.; Torfing, J. Political leadership in the age of interactive governance: Reflections on political aspects of governance. In Critical Reflections on Interactive Governance; Edelenbos, J., van Meerkerk, I., Eds.; Edward Elgar Publishing: Cheltenham, UK, 2016.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).