A Deep Learning Approach to Assist Sustainability of Demersal Trawling Operations

Abstract

1. Introduction

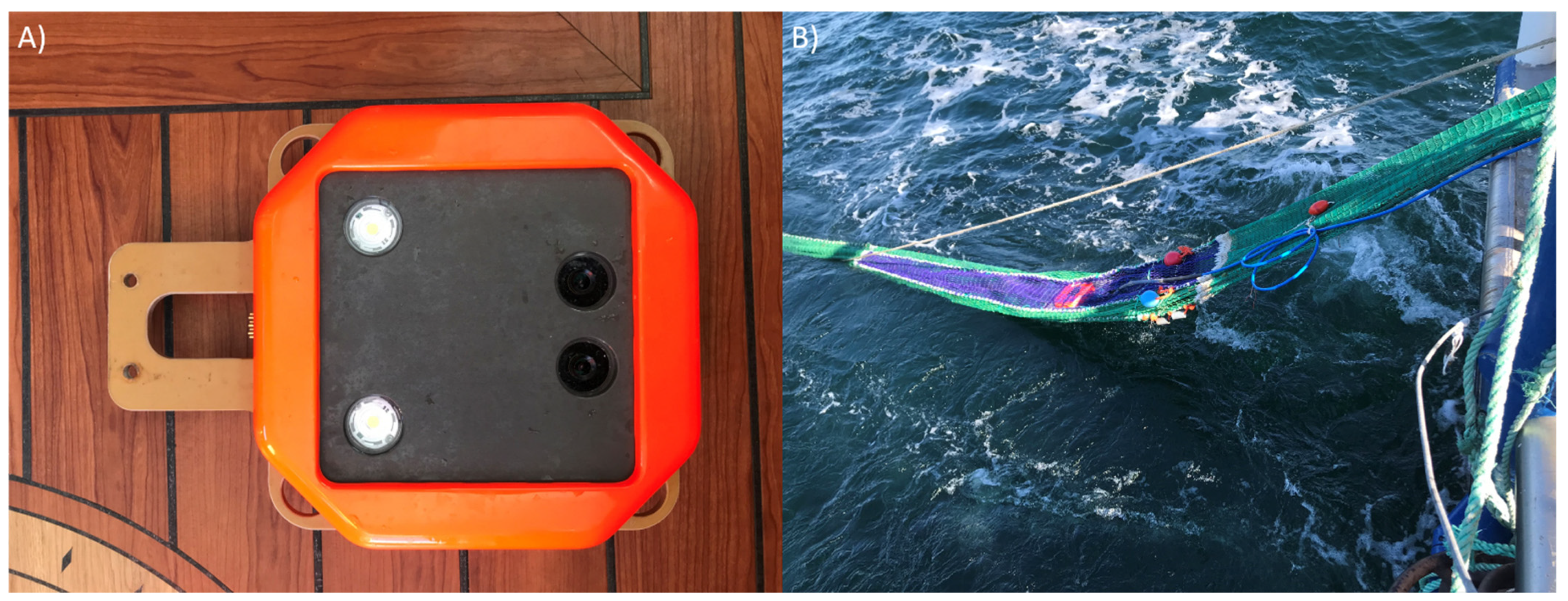

2. Methods and Materials

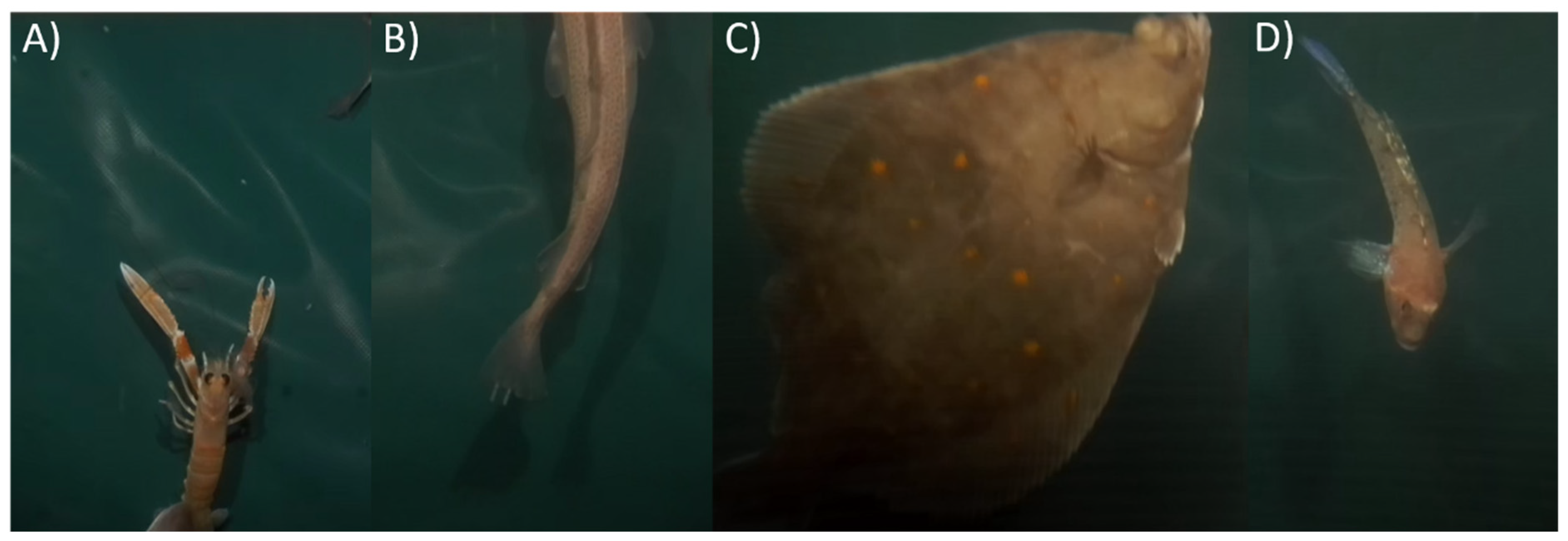

2.1. Data Preparation

2.2. Mask-RCNN Training

2.3. Data Augmentation

2.4. Tracking and Counting

2.5. Algorithm Evaluation

2.6. Automated and Manual Catch Comparison

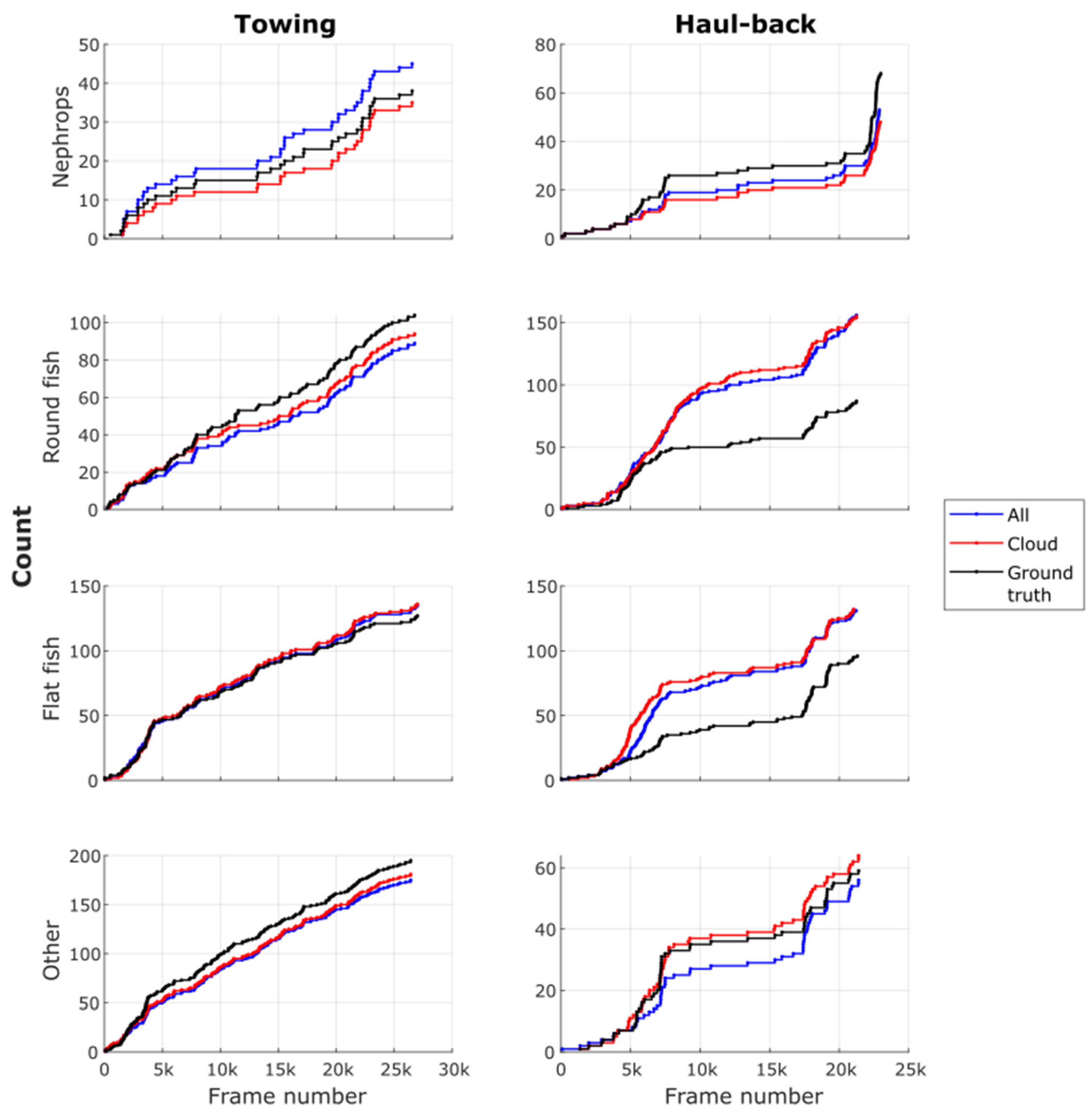

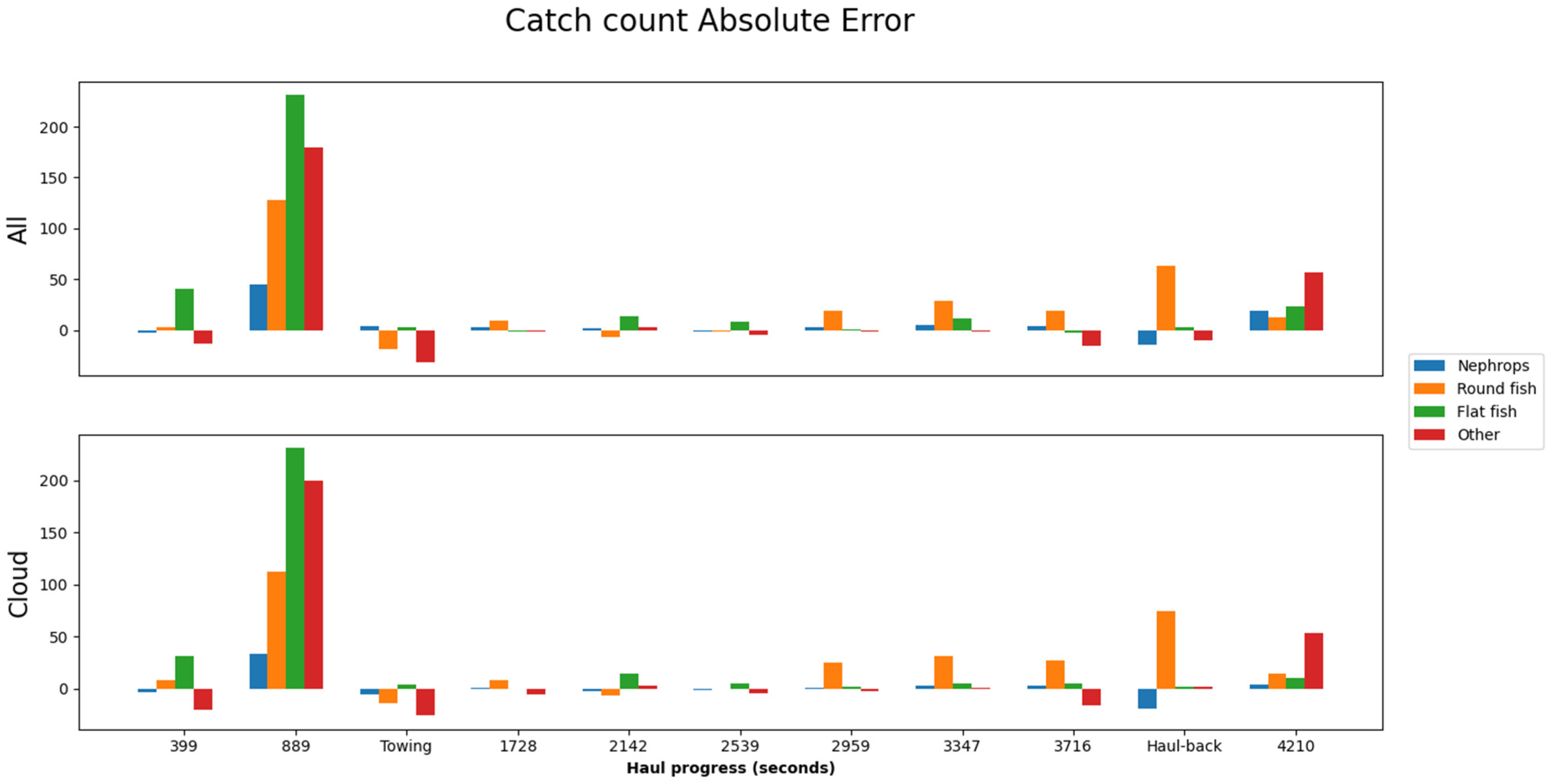

3. Results

3.1. Training

3.2. Evaluation

3.3. Comparison of Automated and Manual Catch Descriptions

4. Discussion

4.1. Towards Precision Fishing

4.2. Algorithm Performance

4.3. Algorithm Real-World Application

4.4. Prospective Applications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Type of Augmentation | Precision (Towing) | Precision (Haul-Back) | Recall (Towing) | Recall (Haul-Back) | F-Score (Towing) | F-Score (Haul-Back) |
|---|---|---|---|---|---|---|
| Baseline (none) | 0.694 | 0.650 | 0.829 | 0.743 | 0.756 | 0.693 |
| | 0.504 | 0.409 | 0.546 | 0.785 | 0.524 | 0.538 |
| | 0.534 | 0.437 | 0.886 | 0.909 | 0.667 | 0.590 |
| | 0.693 | 0.381 | 0.884 | 0.750 | 0.777 | 0.506 |
| Average | 0.606 | 0.469 | 0.786 | 0.797 | 0.681 | 0.582 |
| Copy-Paste and Geometric transformations | 0.661 | 0.800 | 0.902 | 0.800 | 0.763 | 0.800 |
| | 0.642 | 0.480 | 0.731 | 0.849 | 0.684 | 0.613 |
| | 0.795 | 0.588 | 0.879 | 0.879 | 0.835 | 0.704 |
| | 0.821 | 0.423 | 0.865 | 0.683 | 0.842 | 0.522 |
| Average | 0.730 | 0.573 | 0.844 | 0.803 | 0.781 | 0.660 |
| Blur | 0.745 | 0.867 | 0.854 | 0.743 | 0.796 | 0.800 |
| | 0.677 | 0.461 | 0.602 | 0.791 | 0.637 | 0.582 |
| | 0.663 | 0.515 | 0.864 | 0.869 | 0.750 | 0.647 |
| | 0.814 | 0.458 | 0.783 | 0.633 | 0.798 | 0.531 |
| Average | 0.725 | 0.575 | 0.776 | 0.759 | 0.745 | 0.640 |
| Color | 0.761 | 0.813 | 0.854 | 0.743 | 0.805 | 0.776 |
| | 0.735 | 0.500 | 0.565 | 0.802 | 0.639 | 0.616 |
| | 0.728 | 0.558 | 0.932 | 0.919 | 0.817 | 0.695 |
| | 0.785 | 0.386 | 0.865 | 0.733 | 0.823 | 0.506 |
| Average | 0.752 | 0.564 | 0.804 | 0.799 | 0.771 | 0.648 |
| Cloud | 0.773 | 0.844 | 0.829 | 0.771 | 0.800 | 0.806 |
| | 0.652 | 0.482 | 0.676 | 0.860 | 0.664 | 0.618 |
| | 0.788 | 0.506 | 0.902 | 0.838 | 0.841 | 0.631 |
| | 0.845 | 0.551 | 0.845 | 0.717 | 0.845 | 0.623 |
| Average | 0.765 | 0.596 | 0.813 | 0.797 | 0.788 | 0.670 |
| All augmentations | 0.696 | 0.763 | 0.951 | 0.829 | 0.804 | 0.795 |
| | 0.658 | 0.482 | 0.694 | 0.837 | 0.676 | 0.612 |
| | 0.805 | 0.481 | 0.909 | 0.889 | 0.854 | 0.624 |
| | 0.842 | 0.597 | 0.826 | 0.667 | 0.834 | 0.630 |
| Average | 0.751 | 0.581 | 0.845 | 0.806 | 0.792 | 0.665 |
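The table does not state how the F-Score column is derived. The tabulated values appear consistent with the harmonic mean of precision and recall (the F1 score), and the Average rows with a plain arithmetic mean of the four rows above them. The sketch below checks that assumption against the Baseline towing rows; the function and variable names are illustrative and do not come from the article.

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (F1); assumed, not stated in the table."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, tabulated F-Score) for the four Baseline towing rows.
baseline_towing = [
    (0.694, 0.829, 0.756),
    (0.504, 0.546, 0.524),
    (0.534, 0.886, 0.667),
    (0.693, 0.884, 0.777),
]

# Recompute F from the rounded precision and recall values.
recomputed = [f_score(p, r) for p, r, _ in baseline_towing]

# Largest gap between the recomputed and the tabulated F-Score.
max_gap = max(abs(f - row[2]) for f, row in zip(recomputed, baseline_towing))

# Arithmetic mean of the tabulated F-Scores, to compare with the Average row.
mean_tabulated_f = sum(row[2] for row in baseline_towing) / len(baseline_towing)

print(f"largest gap: {max_gap:.4f}")
print(f"mean F-Score: {mean_tabulated_f:.3f}")
```

Because precision and recall are themselves rounded to three decimals in the table, a recomputed F-Score can differ from the tabulated one in the last digit.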

Appendix B. Augmentations Effect


| Types of Augmentation | Learning Rate | Number of Epochs | Steps per Epoch | Batch Size | Source Images for CP |
|---|---|---|---|---|---|
| Baseline (none) | 0.0005 | 60 | 1000 | 2 | 3 |
| CP and Geometric transformations | 0.0005 | 76 | 2 | | |
| Blur | 0.0005 | 80 | 1 | | |
| Color | 0.0003 | 100 | 2 | | |
| Cloud | 0.0004 | 84 | 2 | | |
| All augmentations | 0.0005 | 76 | 2 | | |

| Types of Augmentation / Class | Nephrops | Round Fish | Flat Fish | Other |
|---|---|---|---|---|
| Manual catch count (onboard) | 323 | 464 | 556 | 9 |
| Manual catch count (videos) | 235 | 530 | 755 | 897 |
| Baseline (none) | 302 | 869 | 1439 | 1383 |
| CP and Geometric transformations | 282 | 819 | 1078 | 1114 |
| Blur | 272 | 889 | 1179 | 1027 |
| Color | 262 | 691 | 1174 | 1256 |
| Cloud | 249 | 808 | 1064 | 1082 |
| All augmentations | 302 | 785 | 1084 | 1058 |
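One way to read the counts above is to express each automated count as a relative difference from the manual video count for the same class. The sketch below does this arithmetic on the tabulated numbers. The relative-difference metric and all names in the code are assumptions made for illustration, not necessarily the comparison used in the article.

```python
# Manual counts from the videos, per class (from the table above).
manual_video = {"Nephrops": 235, "Round Fish": 530, "Flat Fish": 755, "Other": 897}

# Automated counts per model (rows of the table above).
automated = {
    "Baseline (none)": {"Nephrops": 302, "Round Fish": 869, "Flat Fish": 1439, "Other": 1383},
    "CP and Geometric transformations": {"Nephrops": 282, "Round Fish": 819, "Flat Fish": 1078, "Other": 1114},
    "Blur": {"Nephrops": 272, "Round Fish": 889, "Flat Fish": 1179, "Other": 1027},
    "Color": {"Nephrops": 262, "Round Fish": 691, "Flat Fish": 1174, "Other": 1256},
    "Cloud": {"Nephrops": 249, "Round Fish": 808, "Flat Fish": 1064, "Other": 1082},
    "All augmentations": {"Nephrops": 302, "Round Fish": 785, "Flat Fish": 1084, "Other": 1058},
}


def relative_difference(auto_count: int, manual_count: int) -> float:
    """Signed difference as a fraction of the manual count (illustrative metric)."""
    return (auto_count - manual_count) / manual_count


# For each class, the model whose count is closest to the manual video count.
closest = {
    cls: min(automated, key=lambda model: abs(automated[model][cls] - manual_video[cls]))
    for cls in manual_video
}

for cls, model in closest.items():
    diff = relative_difference(automated[model][cls], manual_video[cls])
    print(f"{cls}: {model} ({diff:+.1%})")
```

A positive value means the automated count exceeds the manual video count, which holds for every model and class in the table.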

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sokolova, M.; Mompó Alepuz, A.; Thompson, F.; Mariani, P.; Galeazzi, R.; Krag, L.A. A Deep Learning Approach to Assist Sustainability of Demersal Trawling Operations. Sustainability 2021, 13, 12362. https://doi.org/10.3390/su132212362
