Tacit Collusion on Steroids: The Potential Risks for Competition Resulting from the Use of Algorithm Technology by Companies

Abstract

1. Introduction

2. Algorithms: Notion, Types, and Fields of Application

2.1. Definition of an Algorithm

2.2. Typology of Algorithms According to the Tasks They Perform

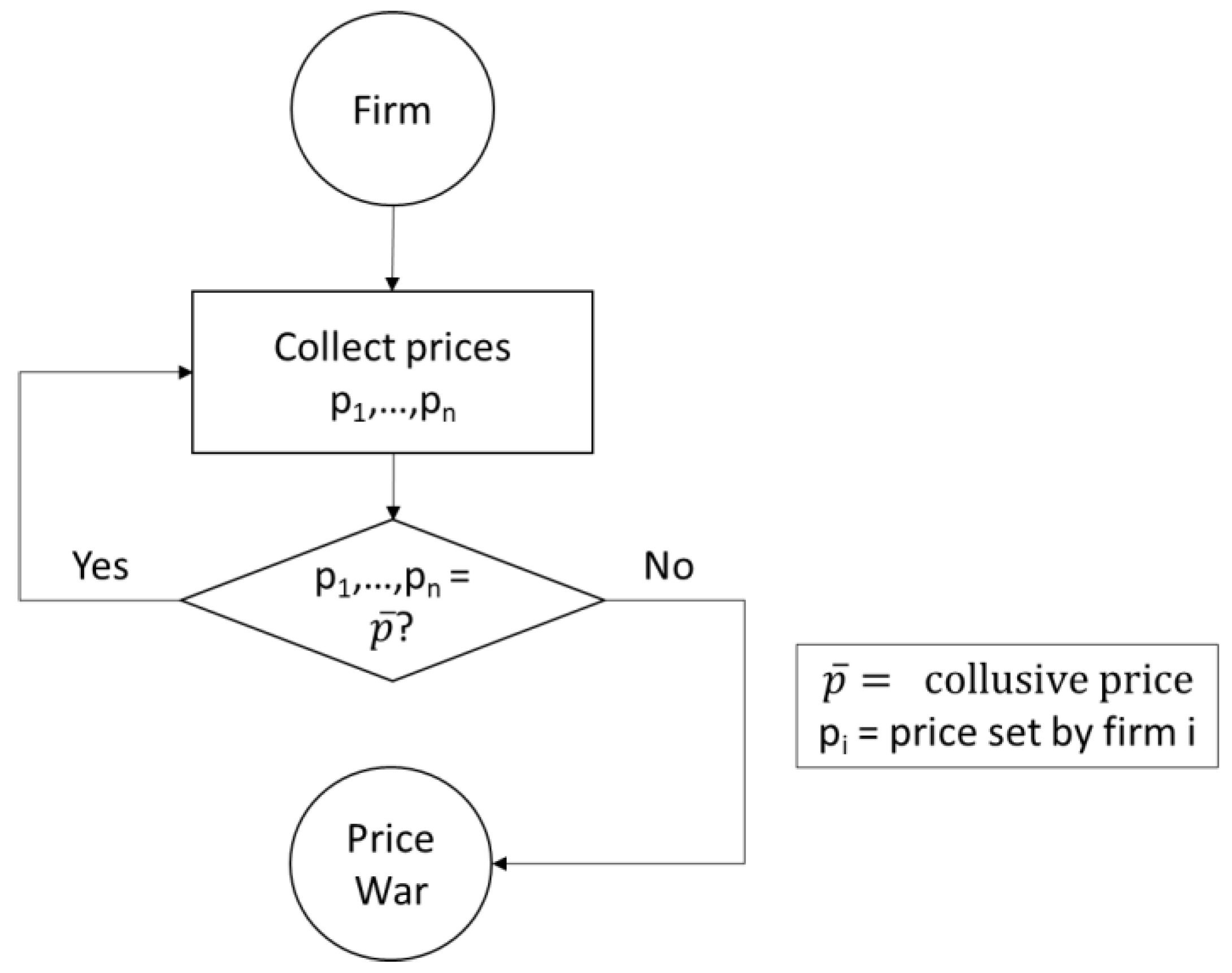

2.2.1. Monitoring and Data Collection Algorithms

2.2.2. Parallel Algorithms

2.2.3. Signaling Algorithms

3. Collusion Risks Potentially Associated with the Use of Pricing Algorithms

3.1. Collusion—Concepts and Definitions

- Explicit collusion, which refers to anti-competitive conduct made possible by explicit agreements, whether written or oral. For competitors, agreeing on the optimal level of price or output is generally the most direct way to obtain a collusive outcome.

- Tacit collusion, which refers to forms of anti-competitive coordination that can be achieved without an explicit agreement but that competitors can sustain by recognizing their mutual interdependence. In this setting, the anti-competitive outcome results from each participant independently determining its own profit-maximizing strategy.

3.2. Factors Likely to Increase the Stability of Collusion in Algorithm-Driven Markets

- Number of firms

- Market transparency

- Frequency of interactions
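How these three factors interact can be illustrated with the textbook repeated-Bertrand model of tacit collusion. The sketch below is an illustration under stated assumptions, not the authors' method: it assumes n symmetric firms sharing the monopoly profit, a grim-trigger punishment (permanent reversion to zero-profit competitive pricing once a deviation is detected), and a deviator that captures the whole monopoly profit until it is caught. Collusion is then stable when δ^L ≥ 1 − 1/n, where δ is the discount factor between two interactions and L is the number of periods a deviation goes unnoticed. More firms raise the threshold, while greater transparency (a shorter detection lag L) and more frequent interactions (δ closer to 1) make collusion easier to sustain. The function names and the 20% discount rate are hypothetical.

```python
import math


def critical_discount_factor(n_firms: int, detection_lag: int = 1) -> float:
    """Smallest per-period discount factor at which grim-trigger collusion is
    sustainable in a symmetric Bertrand oligopoly (illustrative textbook model).

    Colluding forever pays each firm (pi_m / n) / (1 - delta).
    A deviator that slightly undercuts captures the whole monopoly profit pi_m
    for `detection_lag` periods before rivals detect it and revert to
    zero-profit competitive pricing forever: pi_m * (1 - delta**L) / (1 - delta).
    Comparing the two, collusion is stable when delta**L >= 1 - 1/n.
    """
    if n_firms < 1 or detection_lag < 1:
        raise ValueError("n_firms and detection_lag must both be at least 1")
    return (1 - 1 / n_firms) ** (1 / detection_lag)


def per_period_discount(annual_rate: float, interactions_per_year: float) -> float:
    """Discount factor between consecutive interactions (continuous discounting).

    More frequent interactions push the factor toward 1.
    """
    return math.exp(-annual_rate / interactions_per_year)


if __name__ == "__main__":
    # Hypothetical parameters: 10 firms, 20% annual discount rate.
    threshold = critical_discount_factor(n_firms=10)
    for label, freq in [("yearly", 1), ("daily", 365)]:
        delta = per_period_discount(annual_rate=0.2, interactions_per_year=freq)
        print(f"{label}: delta={delta:.4f}, threshold={threshold:.4f}, "
              f"collusion stable: {delta >= threshold}")
```

Under these assumptions, ten firms need δ ≥ 0.9. Repricing once a year gives δ ≈ 0.82, so collusion unravels, whereas daily algorithmic repricing gives δ ≈ 0.9995, comfortably above the threshold.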

3.3. Does the Notion of “Agreement for Antitrust Purposes” Need Revisiting?

4. Discussion: Liability for Anti-Competitive Behavior Caused by Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Whish, R.; Bailey, D. Not only collusion but also, for instance, price discrimination, i.e., selling or purchasing “different units of good or service at prices not directly corresponding to differences in the cost of supplying them”. In Competition Law, 9th ed.; Oxford University Press: Oxford, UK, 2018; p. 292.

- Ezrachi, A.; Stucke, M.E. Algorithmic Collusion: Problems and Counter-Measures; OECD: Paris, France, 2017.

- Haucap, J. Die heimlichen Kartelle im Netz. WiWo 2018, 1, 74.

- Mehra, S.K. Antitrust and the robot-seller: Competition in the time of algorithms. Minn. L. Rev. 2016, 100, 1323–1375.

- Käseberg, T.; von Kalben, J. Herausforderungen der Künstlichen Intelligenz für die Wettbewerbspolitik. WuW 2018, 68, 2–8.

- Oxera. When Algorithms Set Prices: Winners and Losers. 2017. Available online: https://www.oxera.com/wp-content/uploads/2018/07/When-algorithms-set-prices.pdf.pdf (accessed on 23 August 2020).

- Pereira, V. Algorithm-Driven Collusion: Pouring Old Wine into New Bottles or New Wine into Fresh Wineskins. Eur. Compet. Law Rev. 2018, 39, 212–227.

- Roman, V.D. Digital Market and pricing algorithms: A dynamic approach towards horizontal competition. Eur. Compet. Law Rev. 2018, 1, 37–45.

- OECD. Algorithms and Collusion: Competition Policy in the Digital Age; OECD: Paris, France, 2017; p. 30. Available online: http://www.oecd.org/competition/algorithms-collusion-competition-policy-in-the-digital-age.htm (accessed on 6 August 2020).

- Knuth, D. The Art of Computer Programming: Volume 1. Fundamental Algorithms, 3rd ed.; Addison-Wesley Professional: Boston, MA, USA, 1997; p. 1.

- Lindsay, A.; McCarthy, E. Do we need to prevent pricing algorithms cooking up markets? Eur. Compet. Law Rev. 2017, 12, 533.

- Garey, M.; Johnson, D. Computers and Intractability: A Guide to the Theory of NP-Completeness; W.H. Freeman: New York, NY, USA, 1979; p. 4.

- Cormen, T.; Leiserson, C.; Rivest, R.; Stein, C. Introduction to Algorithms, 3rd ed.; The MIT Press: Cambridge, MA, USA, 2009; p. 5.

- Schriek, M.; Safeti, H.; Siddiqui, S.; Pflugler, M.; Wiesche, C.; Krcmar, H. A matching algorithm for dynamic ridesharing. In Proceedings of the International Scientific Conference on Mobility and Transport: Transforming Urban Mobility, Munich, Germany, 6–7 June 2016; Elsevier: Amsterdam, The Netherlands, 2016; pp. 272–285. Available online: http://excell-mobility-il17.in.tum.de/wp-content/uploads/2017/01/A-Matching-Algorithm-for-Dynamic-Ridesharing.pdf (accessed on 13 August 2020).

- Chen, L.; Mislove, A.; Wilson, C. An Empirical Analysis of Algorithmic Pricing on Amazon Marketplace. In Proceedings of the 25th International Conference on World Wide Web, Montreal, QC, Canada, 11–15 April 2016; International World Wide Web Conferences Steering Committee: Geneva, Switzerland, 2016; pp. 1339–1349. Available online: https://mislove.org/publications/Amazon-WWW.pdf (accessed on 6 August 2020).

- Weiss, R.; Mehrotra, A. Online dynamic pricing: Efficiency, equity and the future of e-commerce. Va. J. L. Tech. 2001, 11, 1–10.

- OECD. Personalized Pricing in the Digital Era; OECD: Paris, France, 2018; p. 9. Available online: https://one.oecd.org/document/DAF/COMP(2018)13/en/pdf (accessed on 23 August 2020).

- ADLC. Opinion No. 18-A-03 of 06.03.18 on Data Processing in the Online Advertising Sector, for a discussion of the number and sophistication of algorithms dedicated to gathering personal data for advertising purposes. Available online: https://www.autoritedelaconcurrence.fr/sites/default/files/integral_texts/2019-10/avis18a03_en_.pdf (accessed on 6 August 2020).

- Scraping is a method for crawling websites and automatically extracting structured data from them. For instance, Scrapy is an open-source Python package for scraping data from websites. Available online: https://scrapy.org/ (accessed on 7 August 2020).

- European Commission. Commission Staff Working Document: Final Report on the E-Commerce Sector Inquiry. Available online: http://www.ecommercesectorinquiry.com/files/sector_inquiry_final_report_en.pdf (accessed on 6 August 2020).

- Harrington, J.E., Jr.; Zhao, W. Signaling and Tacit Collusion in an Infinitely Repeated Prisoners’ Dilemma. Math. Soc. Sci. 2012, 64, 277–289.

- Garyali, K. Is the Competition Regime Ready to Take on the AI Decision Maker? CMS London. Available online: https://cms.law/en/gbr/publication/is-the-competition-regime-ready-to-take-on-the-ai-decision-maker (accessed on 21 August 2020).

- Harrington, J.E.; Harker, P.T. Developing Competition Law for Collusion by Autonomous Artificial Agents. J. Compet. Law Econ. 2018, 14, 331.

- OECD. Roundtable on Facilitating Practices in Oligopolies. 2007. Available online: www.oecd.org/daf/competition/41472165.pdf (accessed on 8 August 2020).

- ECJ. Case T-41/96 Bayer, ECLI:EU:T:2000:242, Paragraph 69. Available online: https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=CELEX%3A61996TJ0041 (accessed on 13 August 2020).

- ECJ. Case 40/73 Suiker Unie v. Commission, ECLI:EU:C:1975:174, Paragraphs 26 and 174. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A61973CJ0040 (accessed on 15 August 2020).

- ECJ. Case 48/69 Imperial Chemical Industries, ECLI:EU:C:1972:70, Paragraph 118. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A61969CJ0048 (accessed on 14 August 2020).

- ECJ. Case C-8/08 T-Mobile, ECLI:EU:C:2009:343, Paragraph 23. Available online: https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=ecli:ECLI:EU:C:2009:343 (accessed on 16 August 2020).

- Ezrachi, A.; Stucke, M.E. Artificial Intelligence & Collusion. Univ. Ill. Law Rev. 2017, 1775, 1787, 1804.

- Monopolkommission. Hauptgutachten, Wettbewerb 2016; Nomos Verlagsgesellschaft: Baden-Baden, Germany, 2017; p. 1242.

- European Commission. Decision of 07.07.16 (Container Shipping), Case AT.39850, Paragraph 80. Available online: https://ec.europa.eu/competition/antitrust/cases/dec_docs/39850/39850_3377_3.pdf (accessed on 12 August 2020).

- Ivaldi, M.; Jullien, B.; Rey, P.; Seabright, P.; Tirole, J. The Economics of Tacit Collusion, Final Report for DG Competition, March 2003. Available online: https://ec.europa.eu/competition/mergers/studies_reports/the_economics_of_tacit_collusion_en.pdf (accessed on 13 August 2020).

- Hellwig, M.; Hüschelrath, K. Cartel Cases and the Cartel Enforcement Process in the European Union 2001–2015: A Quantitative Assessment. ZEW Discussion Paper No. 16-063. Mannheim, Germany, September 2016. Available online: http://ftp.zew.de/pub/zew-docs/dp/dp16063.pdf (accessed on 18 August 2020).

- Interstate Circuit, Inc. v. United States, 306 U.S. 208 (1939). Available online: https://supreme.justia.com/cases/federal/us/306/208/ (accessed on 13 August 2020).

- Monsanto Co. v. Spray-Rite Serv. Corp., 465 U.S. 752, 768 (1984). Available online: https://www.lexisnexis.com/community/casebrief/p/casebrief-monsanto-co-v-spray-rite-serv-corp (accessed on 13 August 2020).

- Mitchell, T. Machine Learning; McGraw-Hill Higher Education: New York, NY, USA, 1997; p. 3.

- FTC v. Sperry & Hutchinson Co., 405 U.S. 233 (1972). Available online: https://supreme.justia.com/cases/federal/us/405/233/ (accessed on 13 August 2020).

- OECD. Roundtable on Competition Enforcement in Oligopolistic Markets. 2015. Available online: https://one.oecd.org/document/DAF/COMP(2015)2/en/pdf (accessed on 13 August 2020).

- Ethyl Corp. v. Federal Trade Commission: United States Court of Appeals for the Second Circuit 729 F.2d 128 (1984). Available online: https://www.quimbee.com/cases/ethyl-corp-v-federal-trade-commission (accessed on 13 August 2020).

- Ezrachi, A.; Stucke, M.E. Two Artificial Neural Networks Meet in an Online Hub and Change the Future (of Competition, Market Dynamics and Society). CPI, April 2017. Posted by the Social Science Research Network. Available online: https://www.competitionpolicyinternational.com/two-artificial-neural-networks-meet-in-an-online-hub-and-change-the-future-of-competition-market-dynamics-and-society/ (accessed on 17 August 2020).

- ECJ. Züchner v. Bayerische Vereinsbank, Judgment of 14.07.1981, Case 172/80. Available online: https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=CELEX%3A61980CJ0172 (accessed on 21 August 2020).

- European Commission. Horizontal Guidelines, Paragraph 63. Available online: https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=CELEX%3A52011XC0114%2804%29 (accessed on 22 August 2020).

- ‘Eturas’ UAB and Others v. Lietuvos Respublikos Konkurencijos Taryba, Case C-74/14, Judgment of the Court of 21 January 2016. Available online: http://curia.europa.eu/juris/liste.jsf?&num=C-74/14 (accessed on 8 August 2020).

- Dohrn, D.; Huck, L. Algorithmus als “Kartellgehilfe”? Kartellrechtliche Compliance im Zeitalter der Digitalisierung. DB 2018, 173, 173–178.

- Wolf, M. Algorithmengestützte Preissetzung im Online-Einzelhandel als abgestimmte Verhaltensweise. NZ Kartel. 2019, 1, 2–6.

- Janka, S.; Uhsler, F. Antitrust 4.0. Eur. Compet. Law Rev. 2018, 121, 112.

- Salaschek, U.; Serafimova, M. Preissetzungsalgorithmen im Lichte von Art. 101 AEUV. WuW 2018, 1, 8–15.

- ECJ. AC-Treuhand v. Commission, Judgment of 22.10.15, Case C-194/14 P. Available online: http://curia.europa.eu/juris/document/document.jsf?docid=170304&doclang=EN (accessed on 24 August 2020).

- ECJ. VM Remont v. Konkurences padome, Judgment of 21.07.16, Case C-542/14, Paragraph 27 et seq. Available online: http://curia.europa.eu/juris/liste.jsf?language=en&num=C-542/14 (accessed on 26 August 2020).

- Paroche, E.; Ritz, C.; Levy, V. Algorithms in the Spotlight of Antitrust Authorities, Hogan Lovells. Antitrust Competition and Economic Regulation Quarterly Newsletter, Autumn 2019. Available online: https://www.hoganlovells.com/~/media/hogan-lovells/global/knowledge/publications/files/acer-newsletters-autumn-2019.pdf?la=en (accessed on 16 August 2020).

- Vestager, M. Proceedings of the Bundeskartellamt 18th Conference on Competition, Berlin, Germany, 16 March 2017; Available online: https://wayback.archive-it.org/12090/20191129221651/https://ec.europa.eu/commission/commissioners/2014-2019/vestager/announcements/bundeskartellamt-18th-conference-competition-berlin-16-march-2017_en (accessed on 19 September 2020).

Dynamic pricing algorithms can process larger quantities of data and react faster than standard pricing strategies to any change in market conditions. Companies very often use such algorithms to set prices based on other available offers. A study of the algorithmic pricing of third-party sellers on Amazon Marketplace found that repricing strategies based on competitors’ prices were used for the online sale of best-selling products [15]. Businesses may also use pricing algorithms to manage stock availability or production assets, allowing a more efficient allocation of resources. Because of these and many other functions, pricing algorithms have been widely credited with improving market efficiency [16]. For instance, the air transport and hotel sectors have long used pricing algorithms to adjust the prices of online plane tickets and hotel room bookings quickly to changes in supply and demand [17]. Nevertheless, there may be countervailing effects. In the offline world, many firms monitor their competitors’ prices, whether through employees who observe those prices, by purchasing price-tracking data from specialized suppliers, or by interviewing customers who report the competitors’ best offers. However, these monitoring methods can be expensive, are not always efficient, and, above all, do not allow prices to be adjusted in “real time”. This explains why a brick-and-mortar firm may find it difficult to interpret the behavior of its competitors or customers; for example, it might fail to recognize a competitor’s invitation to collude. Online prices, by contrast, are easily accessible and highly transparent.
In addition, an automatic price-monitoring algorithm allows its users to track changes in competitors’ prices at lower cost and to adjust their own prices accordingly, almost in “real time”. Because they can collect a greater amount of information on competitors’ prices, accelerate collusive behavior, and sanction deviations from collusive market outcomes more quickly, dynamic pricing algorithms may allow companies to sustain supracompetitive prices more efficiently than humans can.
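The faster sanctioning of deviations described above can be made concrete with a toy simulation. The sketch below assumes a hypothetical follower whose repricing rule matches any rival price cut down to a cost floor but never exceeds its own reference price, and which observes the rival's price only after a monitoring lag. The names `match_rule`, `simulate_follower`, and `undercut_ticks`, as well as the prices used, are illustrative assumptions and do not reconstruct any real seller's software. Comparing a one-tick lag (automated monitoring) with a four-tick lag (periodic manual checks) shows how much the window in which a price cut wins customers shrinks.

```python
from typing import List


def match_rule(reference: float, rival_price: float, floor: float) -> float:
    """Hypothetical repricing rule: follow any rival price cut down to a cost
    floor, but never charge more than the firm's own reference price."""
    return max(floor, min(reference, rival_price))


def simulate_follower(rival_prices: List[float], lag: int,
                      reference: float = 10.0, floor: float = 5.0) -> List[float]:
    """Price path of a follower that sees the rival's price `lag` ticks late.

    Before its first observation arrives, the follower charges its reference price.
    """
    if lag < 0:
        raise ValueError("lag must be non-negative")
    path = []
    for t in range(len(rival_prices)):
        if t < lag:
            path.append(reference)
        else:
            path.append(match_rule(reference, rival_prices[t - lag], floor))
    return path


def undercut_ticks(deviator: List[float], follower: List[float]) -> int:
    """Count the ticks in which the deviating firm is strictly cheaper than the follower."""
    return sum(1 for a, b in zip(deviator, follower) if a < b)


if __name__ == "__main__":
    # Hypothetical deviation: the rival cuts its price from 10 to 8 for four ticks.
    rival = [10, 10, 8, 8, 8, 8, 10, 10]
    for lag in (1, 4):  # 1 = automated monitoring, 4 = periodic manual checks
        follower = simulate_follower(rival, lag)
        print(f"lag={lag}: follower={follower}, "
              f"undercut ticks={undercut_ticks(rival, follower)}")
```

For this price path, the undercutting firm is cheaper than its rival for one tick when the follower's lag is one tick, but for four ticks when the lag is four. Shrinking that window reduces the gain from deviating, which is the mechanism through which near real-time monitoring can help sustain supracompetitive prices.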

In the United States, Section 5 of the Federal Trade Commission (FTC) Act empowers the FTC to prohibit “unfair methods of competition”. Relying on a Supreme Court ruling that Section 5 extends beyond the Sherman Act and other US antitrust laws [37], the FTC has applied this provision to combat various anti-competitive behaviors that are difficult to prosecute under the cartel or monopolization provisions. One example of such conduct is the unilateral communication of information to competitors with anti-competitive effects, the so-called “invitations to collude” [38]. Because Section 5 is based on principles rather than rules [39], it gives the FTC some flexibility in choosing which practices to tackle. For conduct (such as the use of an algorithm) to fall under this section, the agency must demonstrate that (1) it causes or is likely to cause substantial harm to consumers, (2) it cannot reasonably be avoided by consumers, and (3) it is not outweighed by countervailing benefits to consumers or competition. It has been suggested that, if the FTC could demonstrate that defendants, when developing their algorithms, were either motivated to achieve an anti-competitive outcome or aware of the anti-competitive consequences of their actions, it would be entitled to apply Section 5 to tackle algorithmic collusion [40].

| Type of Scenario | Parallel Algorithms | Signaling Algorithms | Hub and Spoke | Self-Learning Algorithms |
|---|---|---|---|---|
| Tacit collusion risk | Yes | Yes | Yes | Yes |
| Liability for concerted practices prohibited under Article 101 TFEU | No | Yes | Yes | Potential |
| Liability of the firm using algorithms | No | Yes | Yes | Potential |
| Liability of an employee of the firm using algorithms | No | No | Yes | Potential |
| Liability of an independent third-party algorithm developer | No | No | Yes | Potential |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hutchinson, C.S.; Ruchkina, G.F.; Pavlikov, S.G. Tacit Collusion on Steroids: The Potential Risks for Competition Resulting from the Use of Algorithm Technology by Companies. Sustainability 2021, 13, 951. https://doi.org/10.3390/su13020951