1. Introduction

Visually impaired people experience reductions in visual ability that differ in kind and degree. Common dioptric aids are not sufficient for this group, which negatively affects their everyday life. The visually impaired can be divided into four basic categories: weak-sighted persons, persons with a binocular vision disorder, almost blind persons and blind persons. Weak-sighted people use powered glasses, which help them orientate better while moving in public areas. Persons with a binocular vision disorder suffer from a failure in the physiological interaction between the right and the left eye, which causes problems with spatial perception. Almost blind persons see only blurry images (dioptric glasses do not help) or perceive only differences in light in their surroundings, and thus need another person’s help while moving outdoors (in open spaces). Blind persons suffer from a complete loss of visual perception; to gain a better notion of their surroundings outdoors they use a white cane, or a white cane and a guide dog. To move and orientate, this group of people compensates for the impairment with other senses: touch, hearing and smell [1]. For the sake of clarity, these four groups are referred to as blind people and people with a severe visual impairment in the remainder of the paper.

Blind people and people with a severe visual impairment need clear and unambiguous identification marks on the surface to ensure independent and safe movement and orientation in an outdoor environment [2]. They also need tactile and auditory information on their surroundings and services. The most frequent safety elements in public areas designed for blind people include a guideline, a guide zone at a pedestrian crossing, a signal zone indicating a change of route, a warning zone alerting to a possible risk, and an information system about the surroundings and services provided with an auditory element [3]. The last-mentioned element is the subject of the research study presented in this paper.

In 2020, out of a worldwide population of 7.79 billion, 49.1 million people were blind, 221.4 million suffered from a moderate visual impairment, and 33.6 million suffered from a severe visual impairment. The estimated number of blind people increased from 34.4 million in 1990 to 49.1 million in 2020, an increase of 42.8%, which can be considered alarming [4].

Visual impairment has significant personal as well as economic impacts. The personal impact differs with age. In small children, a severe visual impairment delays development in all areas, mainly the motor, emotional, cognitive, communication and social functions of the child, and this delay may have lifelong consequences. Visual impairment also significantly affects the child’s educational results at school. The quality of life of adults is seriously affected, too: adults with a severe visual impairment have difficulty finding employment and participating actively in social life [5]. This exclusion from work and society often leads to a higher degree of anxiety and depression. In the elderly population, significant sight degradation or blindness may contribute to additional social isolation and to many health problems and risks (e.g., frequent falls and fractures, often life-threatening) [6]. From the economic perspective, visual impairment and blindness represent huge social costs. For example, the annual global costs of productivity losses associated with vision impairment from uncorrected myopia and presbyopia alone are estimated at US$244 billion and US$25.4 billion, respectively [7].

To reduce the effect of these personal and economic impacts on society, it is important to support the independence and mobility of the visually impaired to the greatest extent possible. This key aspect is also mentioned in the latest WHO World report on vision (2019) [8]. Among the activities it introduces, the WHO emphasises the need to develop technical tools that contribute to a better quality of life for these people, including tools based on mobile phones.

Mobile devices are now tools of everyday personal use, which makes it possible to use them to support many activities usually performed by their owners. These include checking e-mail accounts and managing personal data needed for work with various systems (such as localisation systems, which help users plan their movement to the places they will visit or orientate themselves in the places where they currently are). These applications are predominantly aimed at persons without any visual impairment, and in some cases they are rather unintuitive [9,10].

To integrate blind people and people with a severe visual impairment into everyday life, it is necessary to create applications that are useful, easy to use, user-friendly and highly functional, and that improve some aspects of the everyday life of this group. For this purpose, it is possible to use existing technologies that are of great value for this group, as suggested by studies that developed applications based on augmented reality [11,12].

Based on the results of multiple studies, we can state that the use of augmented reality may lead to better mobility and orientation of people with a certain type of visual impairment [13,14]. It is thus desirable to implement this technology to improve the way people with such a limitation can move, and to find out which applications use augmented reality and which could be used for its implementation.

One of the main objectives of this research, intended to meet the localisation and navigation needs of blind people and people with a severe visual impairment, was to develop a mobile application with augmented reality that makes it possible to manage the routes usually used by this population in terms of their effectiveness and safety.

2. Materials and Methods

This section is divided into two parts: the first part describes the main theories the presented research is based on and which are fundamental to the understanding of this paper; in the second part, the methodology applied for the development of the “Interactive Model” is described.

2.1. Basic Conceptualisation

2.1.1. Mobility of People with Visual Impairments

When addressing the mobility of people with visual impairments, it is necessary to take into account the layout of the spaces in which these people move, how easily they can adapt to these spaces and places, and how they can cope with obstacles without colliding with them, up to the point when they achieve better mobility and are able to distinguish between “a closer space and a remote space” [15] (where the comparison is made by detecting objects within short reach by touch).

Some studies on mobility and orientation [16,17,18,19] claim that mobility is not only a matter of a person moving from one place to another; the person also needs to know where they currently are, where they want to go and what resources they can use to reach the final destination. When a visually impaired person acquires supporting information, they can move more effectively because more inputs are available [20]; these include some known properties of the environment [21], which can help to identify these places to a greater or lesser extent. Knowing the characteristics and spatial identification abilities of the visually impaired makes it possible to predict their behaviour in outdoor spaces [15] using various techniques that help to understand more precisely how they orientate themselves in open places.

2.1.2. Supporting Systems for Mobility of People with Disabilities

Supporting systems for the mobility of people with a visual impairment can be divided according to the group of visually impaired users they are designed for. For persons with low vision, image enhancement applications have been developed; they improve the image mostly through its stabilisation or enlargement. The image may also be enhanced by improving its contrast [22], transforming its colours [23] or highlighting its edges [24]. Devices that provide feedback in a non-visual form (mostly auditory) are intended for the blind. These devices can be classified by the distance of the objects they aim to identify. Local applications are used to identify objects at a short distance from the user (within reach, so that the user can touch and explore them haptically). Such applications are usable for all objects that do not carry a Braille label. They are based on auditory labels and thus provide the user with an audio-haptic survey of the object. An example is the CamIO system [25], a computer vision-based system that provides the user with real-time audio feedback related to the object the user is touching. Global applications are used for orientation in relation to objects that are farther from the visually impaired user. They provide directional information on nearby points of interest (POI) in order to facilitate the search for a route to the set destination and to improve the user’s orientation in the current environment. Many of these systems identify the location using GPS coordinates; examples include Loomis’s Personal Guidance System [26] and the Nearby Explorer system by the American Printing House for the Blind. The main disadvantage of systems based on this type of localisation is their limited accuracy, approx. 10 metres in an urban space [27]. Therefore, multiple additional systems have been developed that apply other localisation approaches and reduce the inaccuracy using tools such as computer vision (SLAM systems) [28], Wi-Fi triangulation [29] or Bluetooth beacons [30,31]. These systems include the Talking Signs tool, which is based on Remote Audible Infrared Signage [32]; it uses wireless communication to send encoded spoken versions of the contents of the signage, making it accessible to blind people.

2.1.3. Spatial Orientation Using Mobile Phones

Smartphones have become supporting tools for the functioning of the entire society thanks to their numerous functionalities, which, among other things, provide a technological platform for supporting movement and generating knowledge about the surrounding environment. In addition, applications have been developed that provide a higher level of comprehension and usability, as well as applications that are rigid with regard to their portability, which allows their everyday use; thanks to that, they become more intuitive and comfortable for performing everyday activities [33,34].

Indoor environments allow people with visual impairments to orientate better because disturbing factors in their surroundings (such as noise) are usually not too strong, and sound is an essential element for the orientation of the visually impaired. The cognitive abilities of these people should be known in advance, because auditory support has a big impact on their movement and recognition of objects and also helps them make better use of their intellect in indoor navigation.

2.1.4. Using the Augmented Reality in a Mobile Device

Augmented reality offers a view of a physical, real-world environment whose elements are combined with virtual elements, and in this way provides the user with a mixed reality in real time. This mostly happens through mobile devices (tablets or smartphones), which usually also offer the option to augment the contents in a display system [35]. Augmented reality combines images in real time using the geographical location of the device and the respective metadata, which are saved on the server responsible for reporting the required coordinates. Many augmented reality applications have been developed with Layar, an open-source platform that enables developers to create their own augmented reality applications; it supports interactive experiences and enables a layer to be configured according to the distributions that customers or developers want to implement for the sake of higher system performance. Layar works as a browser for mobile devices, mostly for Android, and utilises handheld displays, that is, a mechanism of a smartphone that enables an augmented reality application to generate a 3D image within a digital image [36]. It uses internal tools such as GPS (Global Positioning System) and a compass to determine the position of the device and identify the place it points at. Furthermore, the camera and the motion sensor are used to recognise the environment and reproduce the image being captured. Layar then collects the information received from these elements, superimposes the images and delivers the processed and recognised data in a new image with real and virtual elements [37].
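The idea of combining a GPS position with a compass heading to decide which place the device points at can be illustrated with a minimal sketch. It is not Layar code: the function names and the 30-degree half field of view are assumptions chosen for illustration only.

```python
import math

def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from north (0..360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0

def is_in_view(device_heading_deg, bearing_to_poi_deg, half_fov_deg=30.0):
    """True if the point of interest lies within the assumed horizontal
    field of view around the compass heading of the device."""
    # Signed angular difference folded into the range [-180, 180).
    diff = (bearing_to_poi_deg - device_heading_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= half_fov_deg
```

A point due east of the user has a bearing of 90 degrees, so it would be reported as in view when the compass heading is, for example, 80 degrees, but not when the device faces north.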

Based on the literature review, we consider the main gap in the studies concerned with the development of supporting tools for the orientation and navigation of the visually impaired to be that the majority of them do not publish the results of user studies. This is also confirmed by the source [38], which reviewed research and innovation in the field of mobile assistive technology for the visually impaired. This aspect, together with the very small user sample sizes in cases when results were published, complicates a credible comparison of the tools from the users’ point of view, or even makes it impossible.

2.2. Methodology for the Development of the Interactive Model

The following subsection describes five phases of the research realisation process, from the development of the research concept up to the implementation of the proposed interactive system and its assessment by the target group of users.

2.2.1. Phase 1: The Identification of Key Routes for the Motion of Pedestrians

As part of this phase the city of Tunja, the capital of Boyacá district in Colombia, was determined as a suitable place for the development and testing of the proposed tool.

The city of Tunja presents several mobility and accessibility problems that affect the mobility of impaired people. They are described in more detail in the source [39]. These problems are the reason for identifying routes that are convenient for visually impaired people in their daily outdoor mobility activities. The main routes were identified by the members of the research team, with advice from orientation and mobility instructors who work for a local association of the visually impaired, using on-site observation and analysis techniques. These techniques were applied to recognise the sectors likely to have heavier traffic jams and pedestrian bottlenecks, and to identify the streets that are intended for pedestrians only or that combine lanes for pedestrians and cars or other means of transport. For the pilot study, the centre of the city was identified as the most appropriate and important part from the perspective of pedestrians’ mobility.

One of the main characteristics influencing pedestrian and automobile transport in the centre of Tunja is that the streets are rather narrow and uncomfortable for pedestrians. Because the centre forms a relatively small part of the city, locating the key research elements was rather easy. It was simple to identify three main sectors of the city centre (north, central and south) and, in each of them, key points for the visually impaired who would move and orientate with the support of the developed mobile application. Sections and crossings that could improve the orientation of visually impaired people on these routes within the study being prepared were then identified. After the identification of each place, sector type and route, the research continued with the following phase.

2.2.2. Phase 2: The Assessment of Potential Access Routes to the City Centre for People with Visual Impairments

After these routes with a high frequency of pedestrian movement had been recognised, surveys were conducted among the group of people who are the subject of the research. The goal was to find out which properties of roads are considered the most important, taking into account the following: accessibility, road signs, degree of obstacles, width of pavements, number of necessary turns and crossings at road intersections, elements of traffic control, standard presence of the police, and frequency of movement of vehicles and pedestrians.
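As an illustration of how survey ratings on these properties could be condensed into a single value per route, the following sketch computes a weighted mean. The paper does not specify a scoring formula, so the criterion keys, the numeric rating scale and the default equal weights are all assumptions made for this example.

```python
# Criterion keys are hypothetical labels for the properties listed above.
CRITERIA = (
    "accessibility",
    "road_signs",
    "obstacles",
    "pavement_width",
    "turns_and_crossings",
    "traffic_control",
    "police_presence",
    "traffic_frequency",
)

def route_score(ratings, weights=None):
    """Weighted mean of per-criterion ratings (higher is assumed to be better).

    ratings: dict mapping every criterion to a number, e.g. on a 1-5 scale.
    weights: optional dict of non-negative weights; equal weights by default.
    """
    if weights is None:
        weights = {criterion: 1.0 for criterion in CRITERIA}
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    total_weight = sum(weights[c] for c in CRITERIA)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(weights[c] * ratings[c] for c in CRITERIA) / total_weight
```

Raising the weight of a criterion such as `obstacles` would let the opinions of the surveyed users about what matters most influence the ranking of routes.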

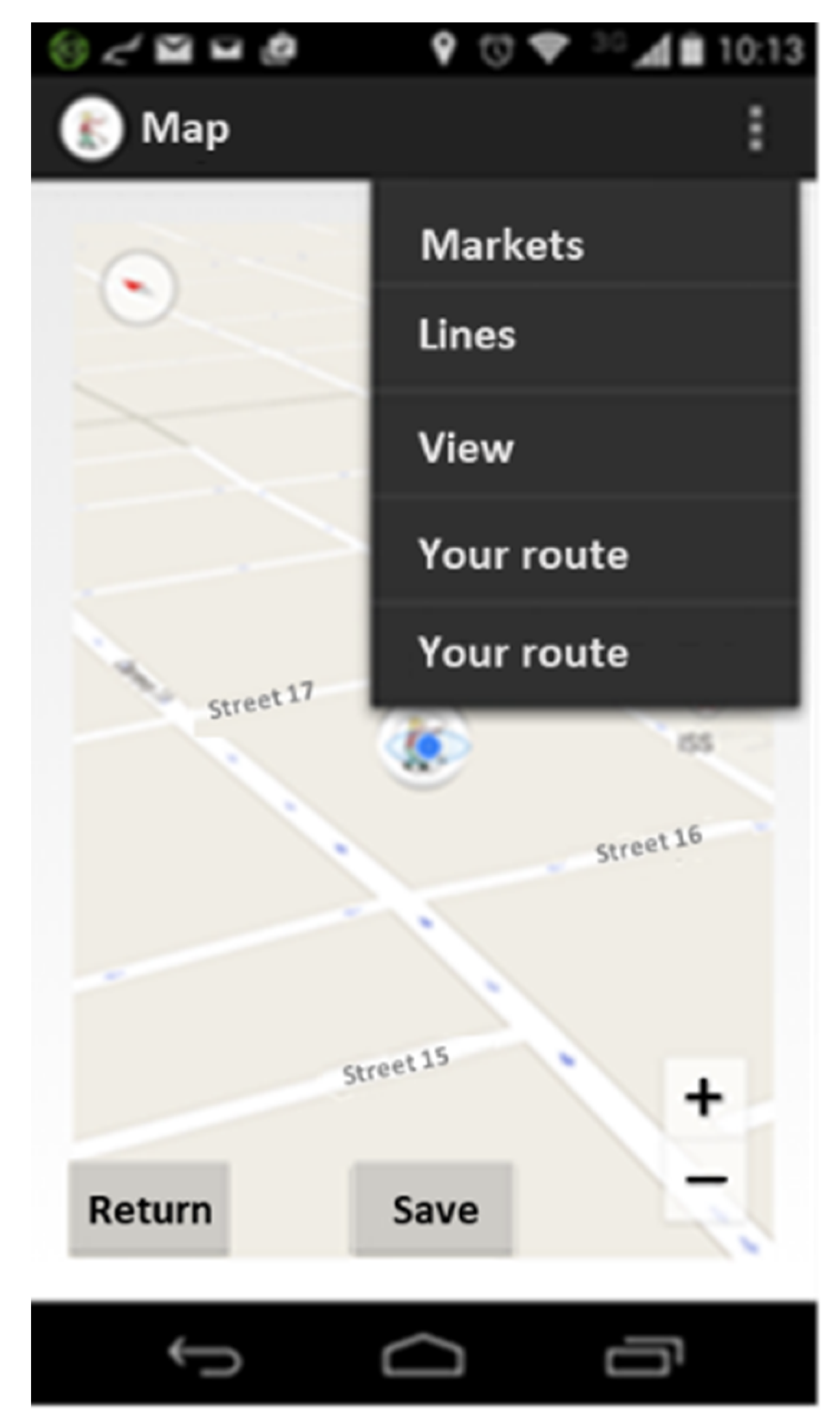

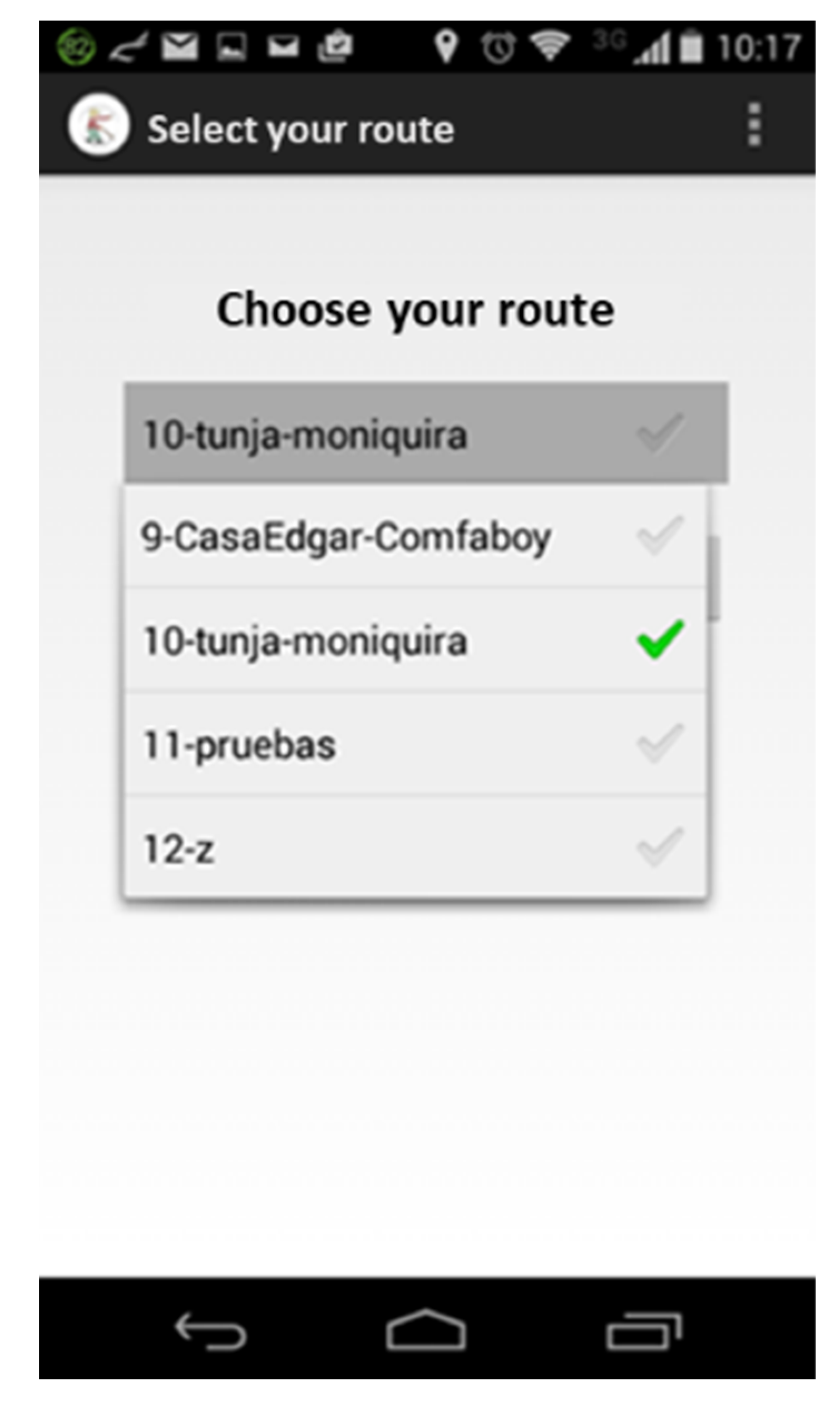

2.2.3. Phase 3: The Mobile Application Development

To manage the information on the identified routes in the centre of Tunja, an application for the Android operating system was developed; it manages reference points and pedestrian routes to support the mobility of visually impaired or blind people. These groups can use the system to manage, administer and utilise information on their position through the GPS receiver in their own mobile device. For this purpose, the 4+1 architectural view model and MVC (Model View Controller) were applied; they offer a layered development as well as objects with Java J2EE technology. Moreover, the suitability of using web services and JSON integration as a service for data transfer in the cloud, and of using MySQL as a database tool, was verified. Afterwards, the design of the application and the implementation of the mobile application screens were worked out, and an application for Android version 4.2 was generated, which is compatible with the majority of smartphone versions.
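A minimal sketch of how a reference point might be exchanged as JSON between the mobile client and a web service is given below. The actual application was built with Java; Python is used here only for brevity, and the class name, field names and coordinates are hypothetical rather than taken from the described system.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class ReferencePoint:
    """Hypothetical payload for one reference point on a route."""
    name: str
    latitude: float
    longitude: float
    description: str = ""

def to_json(point):
    """Serialise a reference point for transfer to the web service."""
    return json.dumps(asdict(point), ensure_ascii=False)

def from_json(payload):
    """Rebuild a reference point from a JSON string received from the service."""
    return ReferencePoint(**json.loads(payload))

# Illustrative values only, not surveyed coordinates.
example = ReferencePoint("Plaza de Bolívar", 5.53, -73.36, "Main square")
restored = from_json(to_json(example))
```

Keeping the payload flat (name, coordinates, free-text description) makes it easy to map each JSON object to one row of a relational table on the server side.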

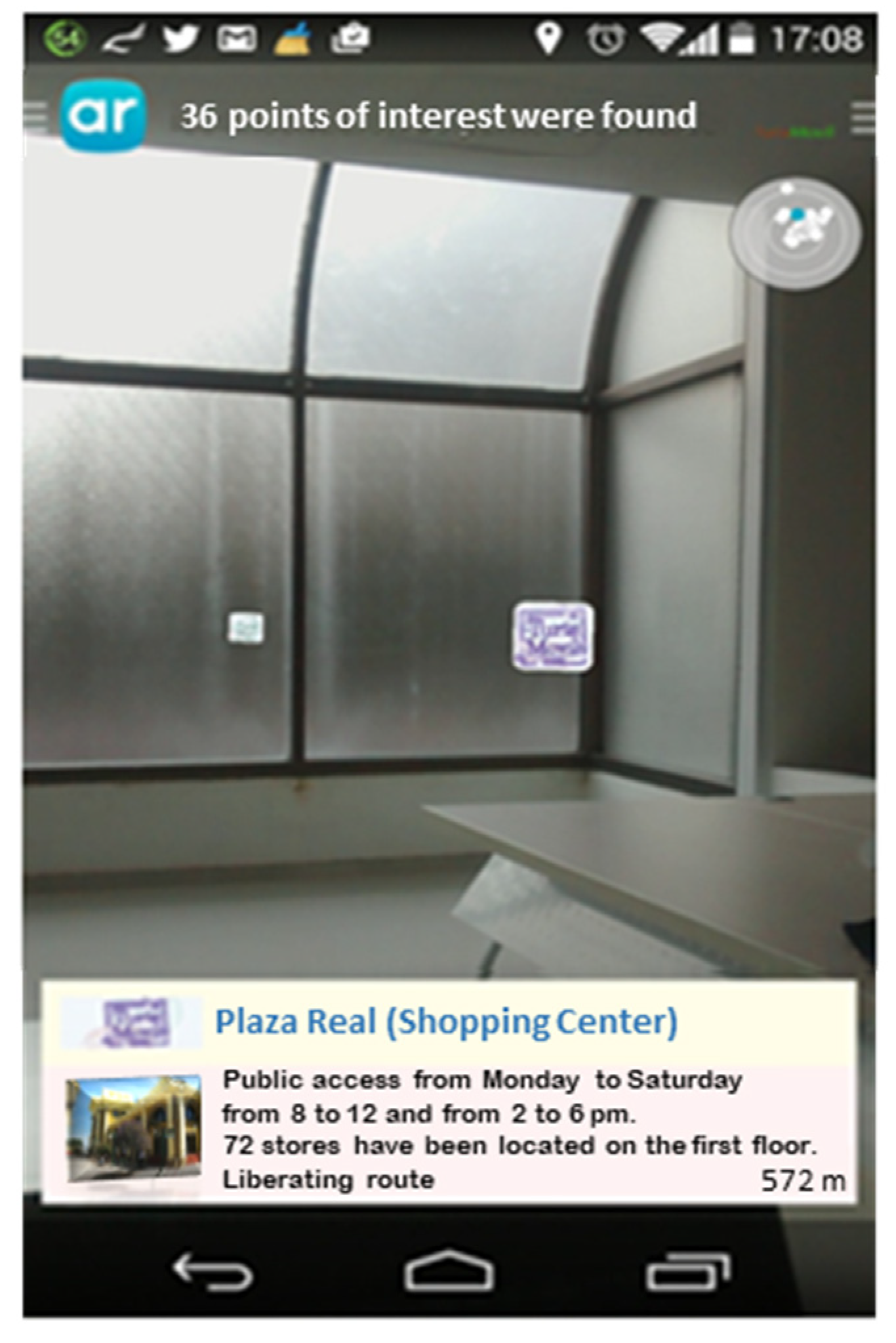

2.2.4. Phase 4: The Implementation of the Augmented Reality

Once the mobile application was developed, the integration of Layar as a control tool for augmented reality started. The aim was to provide the complete information needed for safe and oriented mobility of the visually impaired on the route identified as the most convenient one. Augmented reality makes it possible to obtain an indication of the precise position of a person simply by locating a nearby reference point with the smartphone. It works as follows: based on the information from the camera, the mobile application indicates the signs of the given reference point, its proximity to the user and the other key points in that place.

2.2.5. Phase 5: The Testing of the Developed Application Functioning

Finally, the functioning of the developed application had to be verified. The verification sample consisted of 9 people with a severe visual impairment (7 blind persons and 2 almost blind persons according to the categorisation presented at the beginning of this paper) who live in the city of Tunja and are members of the local association of the visually impaired. This association is made up of visually impaired people who carry out all kinds of daily work activities. Additional factors were also considered when selecting the testing participants, such as technological skills, usage of devices, time availability to perform the application verification, and acceptance of this kind of product.

An ethical statement was signed by all the participants prior to the functionality acceptance and usability testing.

Small sample sizes are a frequent problem in the evaluation of supporting tools developed for the mobility of the visually impaired. In the source Basosi (2020), usability testing of navigation for guiding the visually impaired inside the campus was conducted with five blindfolded respondents. In [40], the AR4VI tool, designed for the navigation of low vision or blind people using an augmented reality menu through a relief map and a fiducial marker board, was tested by a focus group of five blind adults. The usability of a system that embeds rich textual descriptions of objects and locations in wiki OpenStreetMap, presented in the source [41], was evaluated by 10 visually impaired participants. In the source [42], the authors found that the majority of research works of this kind do not publish the results of user studies, so any comparison of the tools from the users’ point of view is very complicated.

3. Results

This part introduces main research results related to the realisation of each of the phases mentioned above, and the related discussion.

3.1. The Identification of Key Routes and Their Assessment

In this section the development of the activities carried out in phases 1 and 2 of the proposed method is presented.

In the first phase of the presented research, the characteristics of access routes were identified; these routes would be used as strategic places for selecting the information made available to users through georeferences of the routes forming the Tunja city centre, with the support of digital maps and the Google Maps API platform.

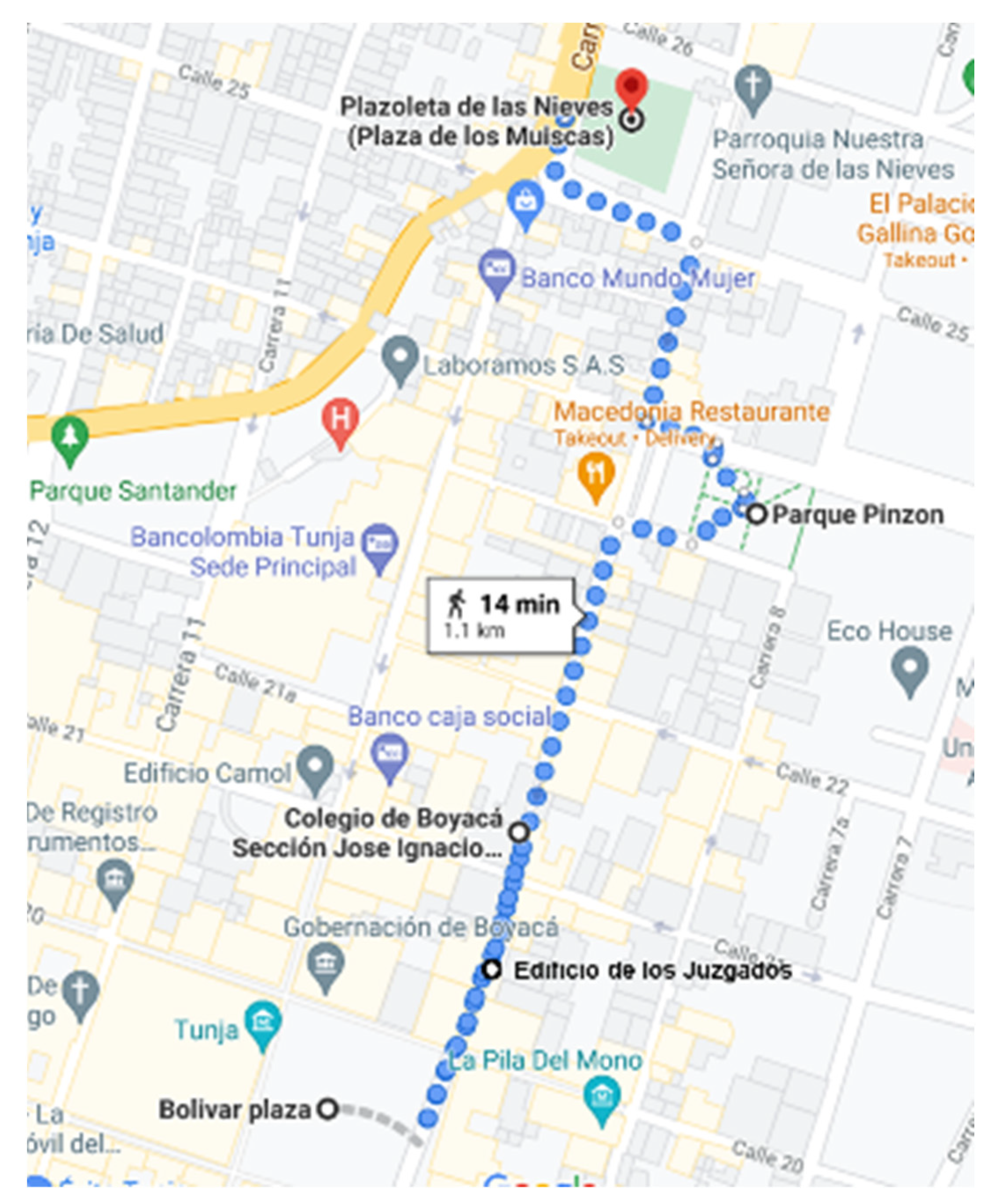

As an example of the assessment process, the evaluation of the route from “Plazoleta los Muiscas” to “Plaza de Bolívar” is described. This route starts at “Plaza De Las Nieves” and ends at “Plaza de Bolívar”, running along “Carrera 9”. The first reference point on this route is a famous tourist attraction, “Parque Pinzón”, which includes the “San Agustin” library; the second reference point in the north-south direction is the educational institution “Colegio de Boyacá”, and the third reference point is the “Edificio de los Juzgados” (see Figure 1). Even though this route is also used for motorised transport in the south-north direction, the pedestrian part of the route is rather narrow and quite uneven; thus, pedestrians without any visual impairment use it only to a small extent.

Table 1 presents individual items used for the route evaluation. The characteristics of the route (that were considered in the evaluation) were selected on the basis of consultation with orientation and mobility instructors for the visually impaired who work for a local association of the visually impaired in the city of Tunja.

3.2. The Mobile Application Development (Phase 3)

The development of the mobile application for the visually impaired followed. The first step was the analysis of Google Maps as a source of geographical information, chosen because changes in the placement of reference points in the city can be updated easily and instantly. The second step was to obtain coordinates (latitude and longitude) according to the principles described in the source [43]. This is done by activating the GPS receiver. GPS is activated automatically in the mobile application, and a warning message on the screen informs the user that the navigation system has been activated. The third step was the definition of the system characteristics specific to blind people and people with a severe visual impairment, which include:

The application has to be ergonomic, i.e., the menu options are appropriately placed and are of an adequate size for the control using a finger.

Screens should be simple and they should not contain much information.

The system must be fully compatible with any screen-reading system, including that of the mobile device operating system.

The system must be eyes-free and operable and controllable by voice.

The system must easily manage the information required by the user.

The navigation system must be able to instantly mark geographical points which the visually impaired people move through.

The augmented reality must support the information accessibility of places which should stand for reference points.

Each reference point must contain a warning, marking its proximity as well as related information depending on the distance from the place the user is located in.
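The last requirement, a warning that depends on the distance between the user and a reference point, can be sketched as follows. The haversine formula is a standard way to compute the distance between two GPS coordinates; the threshold values and message wording are assumptions for illustration and are not taken from the application.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def proximity_message(name, distance_m, near_m=15.0, approach_m=50.0):
    """Return the text to be spoken for a reference point, or None if it is
    too far away. Both thresholds are hypothetical defaults."""
    if distance_m <= near_m:
        return f"You are at {name}."
    if distance_m <= approach_m:
        return f"{name} is about {round(distance_m)} metres away."
    return None
```

Because GPS accuracy in urban space is limited to roughly 10 metres, as noted in Section 2.1.2, an "arrival" threshold much smaller than that would rarely trigger reliably, which is why the sketch uses 15 metres.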

Based on the characteristics mentioned above a list of requirements for the mobile application was generated. The list includes functional requirements (Register a user, Register the current position, Activate GPS, Enter the route, Register a reference point, Select a route, Classify the route by the risk level, Access to the map with the current position, Recognise the reference point by the augmented reality) and non-functional requirements (Styles of List menu, Setup of parameters, Indication sounds, Background colour, Time needed for the position update, Remember the registered user, Navigation among screens, Swivel and display of a map).

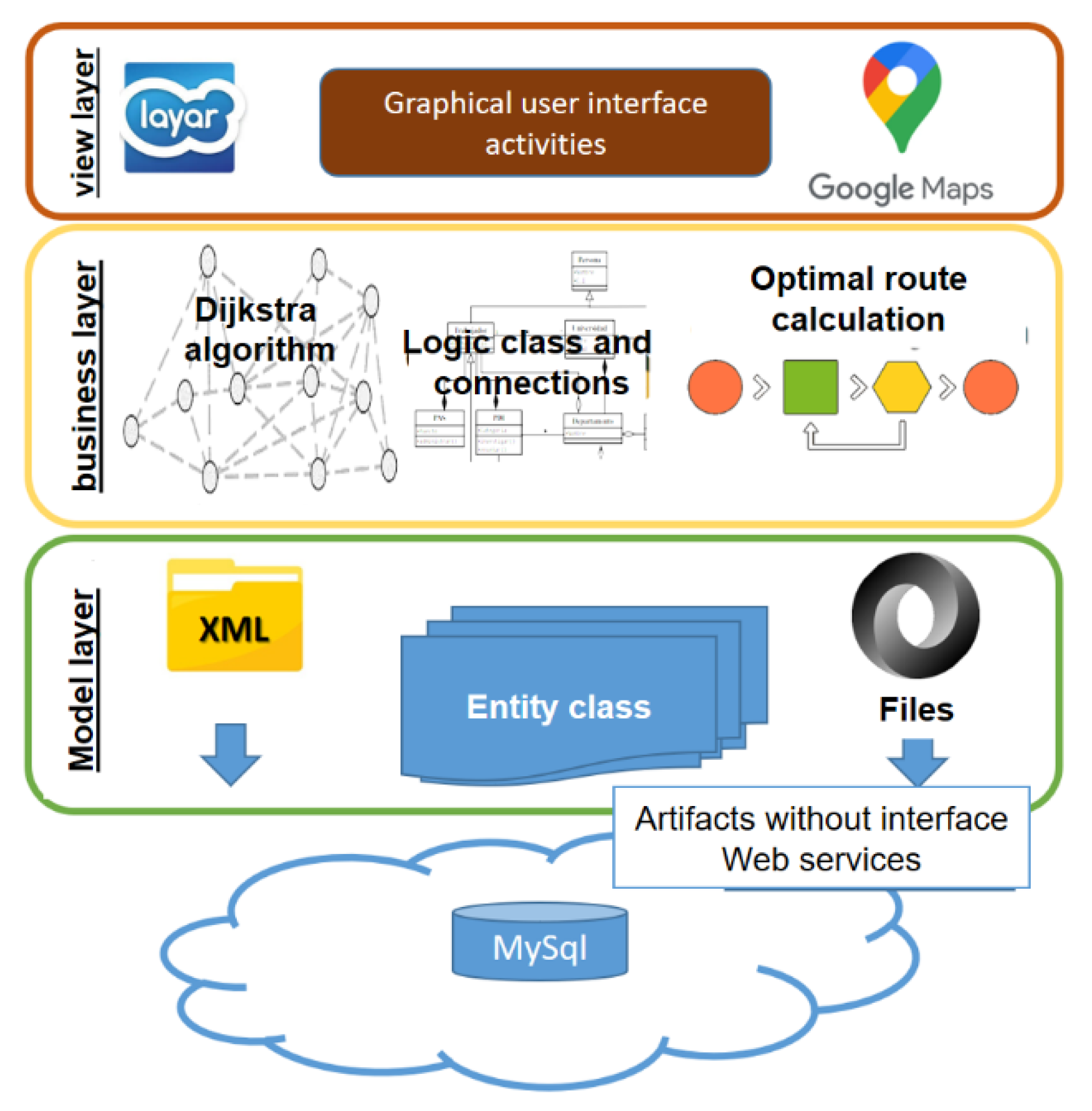

Then, the architecture of the application was defined (Figure 2). It is governed by the principles of design patterns, safety, data protection, performance, data flow and transfer, data distribution and code maintenance, and by the use of the MVC (Model View Controller) model together with the following layers:

Display layer: It comprises the graphical user interface (GUI) of the system and the interfaces of Android activities, where the access screens of the application are created and unified via the Google Maps API as a manager of routes and reference points. Layar, as a visualisation layer for the management of augmented reality, marks the points that make up the chosen route.

Business logic layer: This layer implements the Dijkstra algorithm, the DAO connection classes and data management in order to control the logic that ensures information control and data management for the end user. Within this layer, the algorithm calculates an optimal route for a visually impaired user on the basis of predefined parameters.

Data model layer: It contains the entities that manage the database tables as well as the data sorters used during information processing. This layer also includes control elements, XML files that serve to check the data sent to the database, and JSON classes that are responsible for the output of application data to the MySQL database and for managing and reading inputs so that information can be managed and processed in the Business logic layer.
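The route calculation in the Business logic layer relies on the Dijkstra algorithm. The sketch below shows the general technique on a small graph of reference points; how the application actually builds its graph and derives edge costs from the predefined parameters is not described, so the graph format, node names and cost values here are assumptions.

```python
import heapq

def optimal_route(graph, start, goal):
    """Dijkstra's shortest-path search.

    graph: dict mapping a node to a list of (neighbour, cost) pairs, where
           cost is a non-negative number (for example, segment length
           inflated by an assumed risk penalty).
    Returns (path, total_cost), or (None, inf) if the goal is unreachable.
    """
    best = {start: 0.0}          # lowest known cost to reach each node
    previous = {}                # predecessor of each node on its best path
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break
        if cost > best.get(node, float("inf")):
            continue             # stale queue entry, a cheaper path exists
        for neighbour, edge_cost in graph.get(node, []):
            new_cost = cost + edge_cost
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                previous[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return path, best[goal]

# Hypothetical graph: nodes are reference points, costs are illustrative.
example_graph = {
    "A": [("B", 100.0), ("C", 50.0)],
    "B": [("D", 100.0)],
    "C": [("D", 300.0)],
    "D": [],
}
```

With these illustrative costs, the route through `B` is preferred over the one through `C`, even though the first segment towards `C` is cheaper, because the algorithm minimises the total cost of the whole route.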

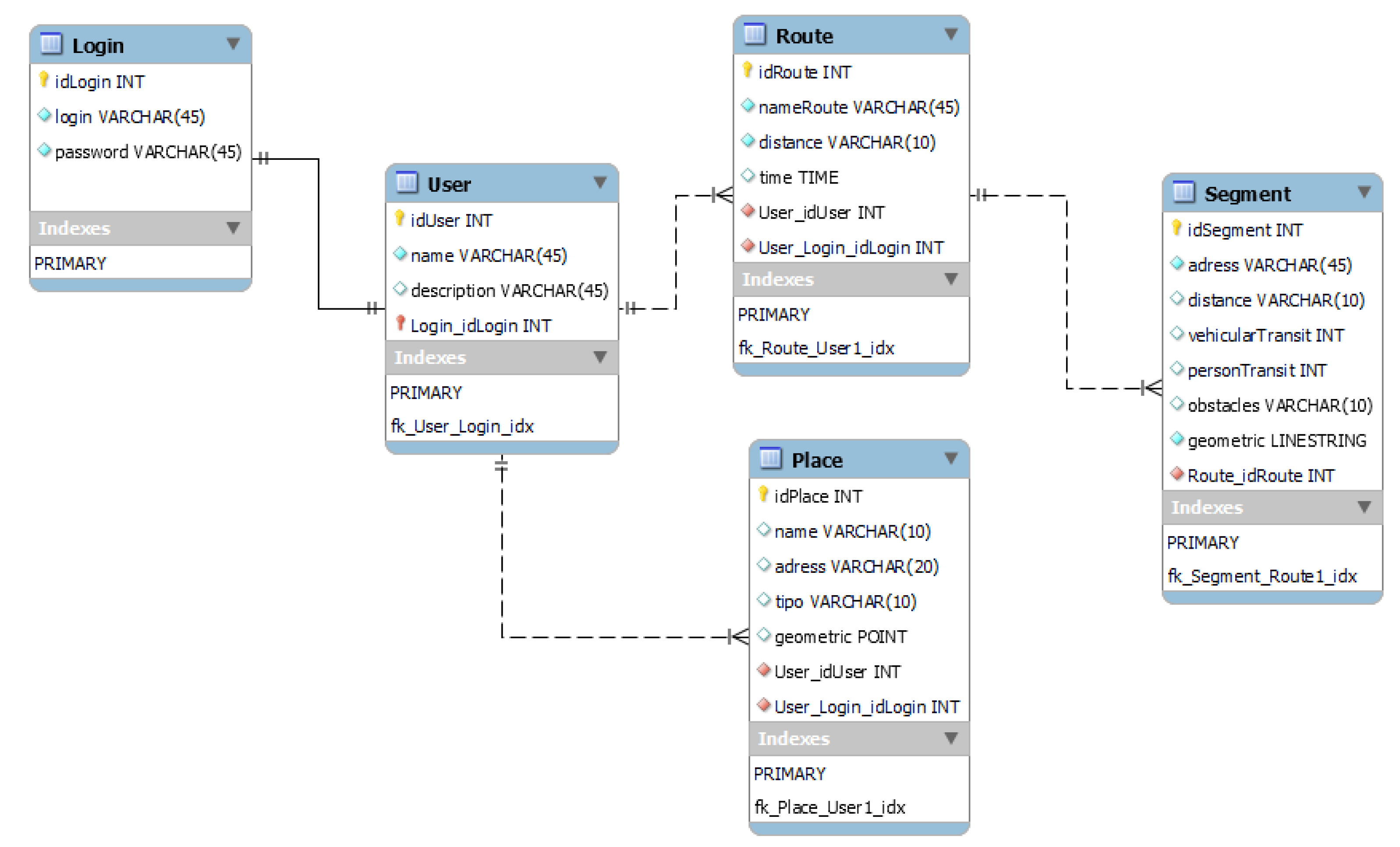

The first component visualised in the proposed architecture is the database, hosted on a web server with a MySQL version 5.0 storage engine. The relational model of the developed application is presented in Figure 3.

This model supports locating, inserting and calculating routes, user administration, and locating people according to where they are, depending on the indicated route and the places that can serve as reference points.

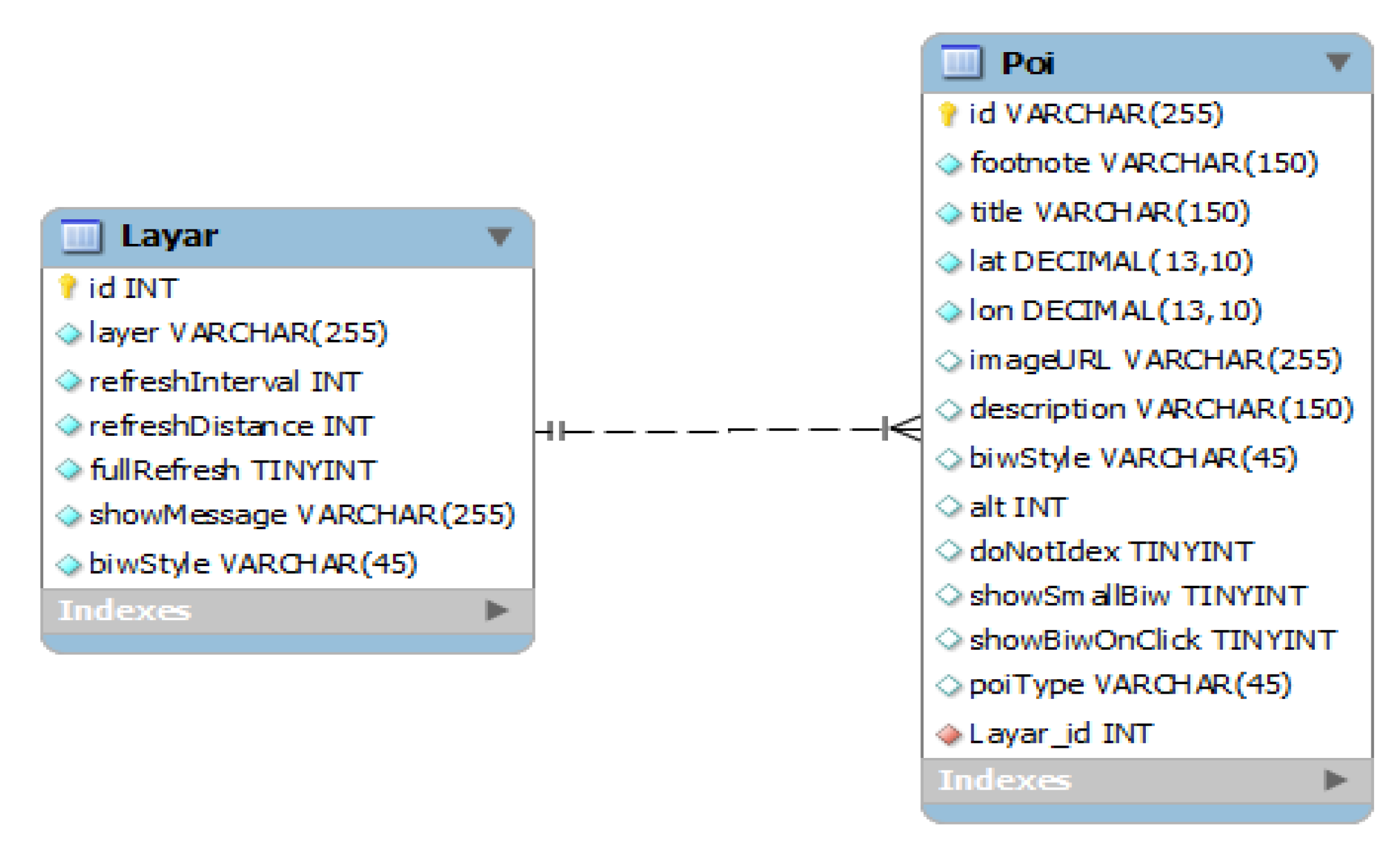

The representation of the Layar layer (the augmented reality support) shown in Figure 4 presents a relational model of two tables that are in charge of managing the stored information, responding to queries and displaying the location of the places that have been saved as reference points.

After the data modelling, data treatment continues with a lower, persistence layer, which is in charge of mapping all the entities so that they are persistent and understandable by the programming language (Java).

3.3. The Implementation of the Augmented Reality

Augmented reality was implemented in the developed mobile application as a supporting tool for the localisation and mobility of blind people on the identified routes. This component makes the graphical interface understandable for a visually impaired person, so that they can easily interact with the device. Layar was implemented as an API; it provides full functionality for content management, such as entering places that serve as reference points for blind users, and a voice indication with a brief description of the place the person is approaching.

As part of the implementation of the augmented reality into the mobile application the following activities were performed:

While routes are being marked, some places that were managed earlier are marked as well. The chosen route is configured on the basis of reference points and the distance calculated by the application.

Figure 7 shows how to obtain information on reference places, which helps users to better recognise their vicinity and their current position. In this way, using an auditory indicator, they are able to determine how far they are from the places they are approaching.

3.4. The Testing of the Developed Application Functioning

The last phase of the presented research was functional testing. The sample consisted of 9 visually impaired persons who provided qualitative replies and suggestions for the tested application. The evaluation consisted of 28 questions divided into three main sections and used multiple choice questions and assessment on a Likert scale. The main objective was to determine any difficulties that participants experienced in their navigation on the predefined route, and to let them express their ideas on the following features of the application:

Application acceptance: To verify this parameter a survey on the need to own an application of this type was conducted; its questions allowed knowledge of the usefulness of the application for everyday usage for the visually impaired, its strengths and weaknesses, the extent to which this application fulfils expectations of users regarding the improvement of their mobility. The replies showed that the application characteristics being evaluated fulfilled the users’ expectations with respect to improving the quality of their life, movement process control, application functioning and the interface design, up to 78% in total, since some replies suggested a need for improving the application’s design and functioning. Some users that had no previous experience with similar navigation tools perceived the work with the application a little bit complicated in the beginning of its use, but after gaining the experience they evaluated it positively. Not all of the participating users were interested in the possibility to share their notes on the route with other users.

Interfaces management (work with interfaces): The interfaces management is a crucial point in the development of an application for people with a visual impairment because some of the requirements include: the application must be simple and intuitive; it must contain a small scope of items for reading and it must contain supporting tools for reading and writing. Thus, during the application development the voice sign-in was considered in order to use features, such as personalisation of routes the user wishes to register, visualisation and auditory description of routes selected as own ones for which some text boxes, e.g., coordinates, are automatically recorded so the user (the sightless person) can manage information they prefer and consider necessary for their everyday movement across the city.

Navigation in the application (navigability of the application): Users must first complete the registration process, which allows them to configure and customise the parameters for their mobility. Each user is then assigned login credentials; for validation, their use requires TalkBack (the Google screen reader integrated in Android devices, which allows eyes-free control and navigation) to be installed and active on the phone. TalkBack reads out the screens and the functions used to operate the phone. The user is responsible for selecting the features they consider necessary for managing and controlling the application (Enter the current position, Show the map, Enter the reference point, such as the name of the route or route segments). The initial tests in which users were engaged demonstrated a high degree of acceptance, because the process was simple and well guided by the voice module.

In terms of functioning and navigability, one of the features most appreciated by users during testing was the calculation of the most acceptable, adequate and least dangerous route between reference points. The users stated that this function reduced the time they spent on the route and gave them a higher degree of safety, because they felt less exposed to everyday risks while moving around the city centre.

Users also positively evaluated the augmented reality descriptions of the places along their route, which provide information about the place, the user’s proximity to it and the reference points next to it. This information helped them to feel better oriented while moving along the identified route and to have the better awareness of their surroundings that is needed for safer mobility. The use of augmented reality, together with the possibility of adding detailed descriptions of the objects and locations on the route, can be highlighted as an aspect that differentiates this application from other navigation tools for the visually impaired.

Users generally appreciated the prototype; they found the tool attractive and useful. They also provided some ideas for future work. For example, they suggested extending its functionality with real-time object recognition.

4. Discussion

Based on the data presented in the introductory part of this paper, it is clear that the growth and ageing of the world population are leading to an increasing number of visually impaired people globally. This type of disability has become a growing barrier to performing a wide range of common everyday activities that require access to visual information. It also has a significant impact on the mobility and movement of visually impaired people, especially in public areas. When moving, it is often necessary to actively interact with different devices that contain important visual information, and the overwhelming majority of them are not tailored to the needs of the visually impaired. Even though the number of technological solutions for mobility support is increasing, the majority of them are only partially usable, or not usable at all, for persons with a visual impairment [44].

The worldwide trend towards worsening eyesight in the population is also confirmed by the WHO World Report on Vision [8], which provides evidence on the magnitude of eye conditions and vision impairment globally. The key proposal of the report is that all countries should provide integrated services for people with a visual impairment, ensuring that throughout their entire life these people receive care and support based on their individual needs and thereby improving their quality of life to the highest extent possible.

The tool introduced in this paper could contribute significantly to fulfilling this goal. The major benefits of its use, based on its pilot application in the city of Tunja, can be summarised as follows:

Thanks to the conducted survey, it was possible to identify routes in the city of Tunja not only in terms of their location and type, but also in terms of their importance for the target group of the research. This made it possible to sort the routes according to the needs of the visually impaired, to distinguish possible routes for everyday commuting, and thus to improve the everyday mobility of these persons.

The identification of the mobility needs of the visually impaired makes it possible to identify routes and segments of the city for which technological tools tailored to these needs can be suggested. In our research, this information contributed to the development of an application that is tailored to the target group in several respects. Among other aspects, the application takes into account the degree of obstacles, the number of pedestrians, the vehicle traffic flow (depending on the peak and off-peak hours of car traffic), the risk rate of the route (the number of turnings and zebra crossings over a road on the given route), the standard presence of the police, the existence of reference points, the existence of markings for the sightless, etc. In this way it can propose options that allow the visually impaired to move safely and calmly in the city streets. During the pilot testing of the suggested tool, users highly appreciated the sense of security they felt while using the routes suggested by the application.

5. Conclusions

Based on the data presented in the introductory part of this paper, it is clear that the growth and ageing of the world population are leading to an increasing number of visually impaired people globally. This type of disability has become a growing barrier to performing a wide range of common everyday activities that require access to visual information. It also has a significant impact on the mobility and movement of visually impaired people, especially in public areas. When moving, it is often necessary to actively interact with different devices that contain important visual information, and the overwhelming majority of them are not tailored to the needs of the visually impaired. The tool introduced in this paper was designed on the basis of the identified mobility needs of the visually impaired. It takes into account several aspects that are specific to this group of people.

The developed application contains the information needed to identify the optimal route suggested to the visually impaired for moving from a defined starting point to a desired target point. During the identification and configuration of this route, the mobile application uses algorithms based on first-order logic to compare the qualitative values, obtained on a 4-level rating scale (high, acceptable, medium, low), of predefined parameters (pavement width, frequency of pedestrians’ movement, frequency of vehicles’ movement, degree of obstacles, number of turnings, number of zebra crossings, traffic signalling and markings for the visually impaired, standard presence of the police and existence of reference points), in order to discard shorter routes that involve a high degree of risk for the mobility of the visually impaired. The identification and evaluation of these parameters were carried out by orientation and mobility experts and are described in more detail in Section 3.1. An example of the assessment of the suitability of a potential route for the visually impaired is provided in Table 1.
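The filtering idea described above can be illustrated with a minimal Python sketch. It is not the implementation used in the application: the identifiers, the ordering of the scale (low as the least suitable level, high as the most suitable), the rule that a single parameter rated below a threshold disqualifies a route, and the example data are all assumptions made for illustration. The actual assessment rules are those described in Section 3.1 and Table 1.

```python
from dataclasses import dataclass
from enum import IntEnum


class Rating(IntEnum):
    """Four-level qualitative scale; the ordering (LOW worst, HIGH best) is assumed."""
    LOW = 1
    MEDIUM = 2
    ACCEPTABLE = 3
    HIGH = 4


# Parameter names follow the list in the text; the identifiers are hypothetical.
PARAMETERS = (
    "pavement_width",
    "pedestrian_frequency",
    "vehicle_frequency",
    "degree_of_obstacles",
    "number_of_turnings",
    "number_of_zebra_crossings",
    "signalling_and_markings",
    "police_presence",
    "reference_points",
)


@dataclass(frozen=True)
class Route:
    name: str
    length_m: float
    ratings: dict  # parameter name -> Rating (suitability with respect to that parameter)


def is_acceptable(route, threshold=Rating.MEDIUM):
    """Assumed rule: every parameter must be rated at least `threshold`."""
    return all(route.ratings[p] >= threshold for p in PARAMETERS)


def suggest_route(candidates, threshold=Rating.MEDIUM):
    """Discard risky candidates, then return the shortest remaining one (or None)."""
    safe = [r for r in candidates if is_acceptable(r, threshold)]
    return min(safe, key=lambda r: r.length_m, default=None)


def uniform(level):
    """Helper: rate every parameter at the same level."""
    return {p: level for p in PARAMETERS}


# Made-up example: the shorter route is discarded because of its obstacles.
short_risky = Route("short", 350.0, {**uniform(Rating.HIGH), "degree_of_obstacles": Rating.LOW})
longer_safe = Route("longer", 520.0, uniform(Rating.ACCEPTABLE))
print(suggest_route([short_risky, longer_safe]).name)
```

In this sketch route length is only a tie-breaker among routes that pass the suitability check, which mirrors the stated intention of discarding shorter but riskier routes.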

The essential benefit of using the tool derives from all these aspects. It lies in helping the visually impaired to avoid risky, possibly dangerous and hardly accessible places. During the pilot testing of the suggested tool, users highly appreciated the sense of security they felt while using the routes suggested by the application.

The main added value of the designed application is that it is highly personalised. The visually impaired have specific points of interest as well as routes they usually use to reach them. The presented mobile application makes it possible to add information about well-known and regularly used routes to the system, and to tag these routes with orientation points (any long-term static objects, such as a fountain, kiosk, etc.) for the sake of better orientation. The general database of reference objects is updated with this information once it has been verified and accepted by the system admin. This is also possible thanks to the platform management and the personalisation of each of the created profiles, which serve as a source for building a knowledge database and an information database. The integration of augmented reality into the developed application makes it possible to improve the information on the places that visually impaired users indicate as the most frequented ones on a given route.
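The verification step mentioned above, in which user-submitted orientation points reach the general database only after acceptance by the system admin, can be sketched as a simple two-stage data structure. The class names, fields and coordinates below are hypothetical and serve only as an illustration; they do not describe the data model of the developed application.

```python
from dataclasses import dataclass, field


@dataclass
class ReferencePoint:
    """A long-term static object proposed by a user as an orientation point."""
    name: str            # e.g. "fountain" or "kiosk"
    latitude: float
    longitude: float
    description: str = ""
    verified: bool = False


@dataclass
class ReferenceDatabase:
    """Pending submissions are kept apart from the accepted, shared entries."""
    pending: list = field(default_factory=list)
    accepted: list = field(default_factory=list)

    def submit(self, point):
        """A user proposes a point; it is not yet part of the general database."""
        self.pending.append(point)

    def review(self, point, accept):
        """The admin verifies a pending point and either accepts or rejects it."""
        self.pending.remove(point)
        if accept:
            point.verified = True
            self.accepted.append(point)


# Made-up example data (the coordinates are illustrative only).
db = ReferenceDatabase()
fountain = ReferencePoint("fountain", 5.5353, -73.3678, "fountain in the main square")
db.submit(fountain)
db.review(fountain, accept=True)
print(len(db.pending), len(db.accepted))
```

Keeping pending and accepted entries in separate collections means that unverified points are never offered to other users, which is the property the admin verification is meant to guarantee.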

Another important point that sets this application apart from other navigation tools supporting the mobility of visually impaired people is the possibility of community participation, which aims to assess routes and related reference points in terms of their suitability for this group of people. The goal is to build high-quality information databases whose interconnection makes it possible to determine optimal routes for the mobility of this group of people.

Because the application is based on the Google APIs, it can be customised to any city or geographical region where the population requires it. Its users need not be blind or visually impaired: travellers or tourists who do not know the surrounding environment could also use the tool, with its voice support, to get to know it. All of this opens the door to the development of new applications at the local or national level that use augmented reality as a resource for fostering orientation and information exchange.

The presented study also had limitations that we intend to overcome in future work. Our further research will focus on verifying the application in other environments and on testing it with a larger sample of users with different degrees of visual impairment. Based on the results of the conducted research, individual elements will be fine-tuned in terms of their design and functionality. In the future we would like to extend the application with real-time object recognition, which would widen its use to recognising obstacles on the road. This function would be highly beneficial to the movement of the visually impaired.

Author Contributions

Conceptualisation, E.H.M.-S., M.M. and M.C.-C.; methodology, E.H.M.-S., M.M. and M.C.-C.; software, E.H.M.-S.; validation, E.H.M.-S., M.M. and M.C.-C.; formal analysis, E.H.M.-S. and M.M.; investigation, E.H.M.-S., M.M. and M.C.-C.; resources, M.M. and M.C.-C.; data curation, E.H.M.-S. and M.M.; writing—original draft preparation, M.M. and M.C.-C.; writing—review and editing: E.H.M.-S., M.M. and M.C.-C.; visualisation, E.H.M.-S.; supervision, M.M. and M.C.-C.; project administration, M.C.-C.; funding acquisition, M.M. and M.C.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Pedagogical and Technological University of Colombia (project number SGI 2657) and the APC was funded by the University of Zilina.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Acknowledgments

This work was supported by the Software Research Group GIS from the School of Computer Science, Engineering Department, Pedagogical and Technological University of Colombia and by the Department of Road and Urban Transport from the Faculty of Operation and Economics of Transport and Communications, University of Zilina.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Jefferson, L.; Harvey, R. Accommodating Color Blind Computer Users. In Proceedings of the 8th International ACM SIGACCESS Conference on Computers and Accessibility, Portland, OR, USA, 23–25 October 2006; pp. 40–47.

2. Mikusova, M. Sustainable structure for the quality management scheme to support mobility of people with disabilities. Procedia Soc. Behav. Sci. 2014, 160, 400–409.

3. Gallagher, T.; Wise, E.; Li, B.; Dempster, A.G.; Rizos, C.; Ramsey-Stewart, E. Indoor positioning system based on sensor fusion for the Blind and Visually Impaired. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sydney, Australia, 13–15 November 2012; pp. 1–9.

4. Bourne, R.R.A.; Adelson, J.; Flaxman, S.; Briant, P.; Bottone, M.; Vos, T.; Naidoo, K.; Braithwaite, T.; Cicinelli, M.; Jonas, J.; et al. Global Prevalence of Blindness and Distance and Near Vision Impairment in 2020: Progress towards the Vision 2020 targets and what the future holds. Investig. Ophthalmol. Vis. Sci. 2020, 61, 2317.

5. Borowska-Stefańska, M.; Wiśniewski, S.; Kowalski, M. Daily mobility of the elderly: An example from Łódź, Poland. Acta Geogr. Slovenica 2020, 60, 57–70.

6. Bungau, S.; Tit, D.M.; Popa, V.-C.; Sabau, A.; Cioca, G. Practices and attitudes regarding the employment of people with disabilities in Romania. Qual.-Access Success 2019, 20, 154–159.

7. World Health Organization. Blindness and Vision Impairment. Available online: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 21 March 2021).

8. World Health Organization. World Report on Vision (October 2019). Available online: https://www.who.int/publications/i/item/9789241516570 (accessed on 25 March 2021).

9. Wachnicka, J.; Kulesza, K. Does the use of cell phones and headphones at the signalised pedestrian crossings increase the risk of accident? Balt. J. Road Bridge Eng. 2020, 15, 96–108.

10. Mikusova, M.; Wachnicka, J.; Zukowska, J. Research on the Use of Mobile Devices and Headphones on Pedestrian Crossings – Pilot Case Study from Slovakia. Safety 2021, 7, 17.

11. Costa, M.C.; Manso, A.; Patrício, J. Design of a mobile augmented reality platform with game-based learning purposes. Information 2020, 11, 127.

12. Fernández-Enríquez, R.; Delgado-Martín, L. Augmented reality as a didactic resource for teaching mathematics. Appl. Sci. 2020, 10, 2560.

13. Baragash, R.S.; Al-Samarraie, H.; Moody, L.; Zaqout, F. Augmented Reality and Functional Skills Acquisition Among Individuals with Special Needs: A Meta-Analysis of Group Design Studies. J. Spec. Educ. Technol. 2020. Available online: https://journals.sagepub.com/doi/full/10.1177/0162643420910413 (accessed on 1 June 2021).

14. Liu, Y. Use of mobile phones in the classroom by college students and their perceptions in relation to gender: A case study in China. Int. J. Inf. Educ. Technol. 2020, 10, 320–326.

15. Cabrero, R.E.Z. Un Proceso de Aprendizaje a Través de su Mirada. Master’s Thesis, Universidad Politécnica de Madrid, Madrid, Spain, 2018.

16. Carreiras, M.; Codina, B. Cognición espacial, orientación y movilidad: Consideraciones sobre la ceguera. Integr. Rev. Sobre Ceguera Y Defic. Vis. 1993, 11, 5–15.

17. Jamroz, K.; Budzynski, M.; Romanowska, A.; Zukowska, J.; Oskarbski, J.; Kustra, W. Experiences and Challenges in Fatality Reduction on Polish Roads. Sustainability 2019, 11, 959.

18. Budzynski, M.; Jamroz, K.; Mackun, T. Pedestrian Safety in Road Traffic in Poland. IOP Conf. Ser. Mater. Sci. Eng. 2017, 4, 042064.

19. Borowska-Stefańska, M.; Kowalski, M.; Wiśniewski, S. Changes in travel time and the Load of road network, depending on the diversification of working hours: Case study the Lodz Voivodeship, Poland. Geografie 2020, 2, 211–241.

20. Ochaíta, E.; Huertas, J.A.; Espinosa, A. Representación espacial en los niños ciegos: Una investigación sobre las principales variables que la determinan y los procedimientos de objetivación más adecuados. Infanc. Y Aprendiz. 1991, 14, 53–70.

21. Budzynski, M.; Jamroz, K.; Antoniuk, M. Effect of the Road Environment on Road Safety in Poland. IOP Conf. Ser. Mater. Sci. Eng. 2017, 4, 042065.

22. Peli, E.; Woods, R.L. Image enhancement for impaired vision: The challenge of evaluation. Int. J. Artif. Intell. Tools 2009, 18, 415–438.

23. Krajcovic, M.; Gabajova, G.; Matys, M.; Grznar, P.; Dulina, L. 3D Interactive Learning Environment as a Tool for Knowledge Transfer and Retention. Sustainability 2021, 13, 7916.

24. Satgunam, P.; Woods, R.L.; Luo, G.; Bronstad, M.; Reynolds, Z.; Ramachandra, C.; Mel, B.W.; Peli, E. Effects of contour enhancement on low-vision preference and visual search. Optom. Vis. Sci. 2012, 89, E1364–E1373.

25. Polorecka, M.; Kubas, J.; Danihelka, P.; Petrlova, K.; Repkova Stofkova, K.; Buganova, K. Use of Software on Modeling Hazardous Substance Release as a Support Tool for Crisis Management. Sustainability 2021, 13, 438.

26. Loomis, J.; Golledge, R.; Klatzky, R. Personal guidance system for the visually impaired. In Proceedings of the First Annual Conference on Assistive Technologies, Marina del Rey, CA, USA, 31 October–1 November 1994; pp. 5–91.

27. Brabyn, J.; Alden, A.; Haegerstrom-Portnoy, G.; Schneck, M. GPS performance for blind navigation in urban pedestrian settings. In Proceedings of the 7th International Conference on Low Vision, Goteborg, Sweden, 27 July–2 August 2002.

28. Navigation for the Visually Impaired Using a Google Tango RGB-D Tablet – Dan Andersen. Available online: http://www.dan.andersen.name/navigation-for-thevisually-impaired-using-a-google-tango-rgb-d-tablet/ (accessed on 21 January 2021).

29. Gabajova, G.; Furmannova, B.; Medvecka, I.; Grznar, P.; Krajcovic, M.; Furmann, R. Virtual Training Application by Use of Augmented and Virtual Reality under University Technology Enhanced Learning in Slovakia. Sustainability 2019, 11, 6677.

30. Ahmetovic, D.; Gleason, C.; Ruan, C.; Kitani, K.; Takagi, H.; Asakawa, C. NavCog: A navigational cognitive assistant for the blind. In Proceedings of the 18th International Conference on Human Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 90–99.

31. Ruffa, A.J.; Stevens, A.; Woodward, N.; Zonfrelli, T. Assessing iBeacons as an Assistive Tool for Blind People in Denmark. In Proceedings of the An Interactive Qualifying Project, Denmark Project Center, Worcester Polytechnic Institute, Worcester, MA, USA, 2 May 2015.

32. Hatakeyama, T.; Hagiwara, F.; Koike, H.; Ito, K.; Ohkubo, H.C.; Bond, W.; Kasuga, M. Remote Infrared Audible Signage System. Int. J. Hum.-Comput. Interact. 2004, 17, 61–70.

33. Akbar, S.A.; Nurrohman, T.; Hatta, M.I.F.; Kusnadi, I.A. SBVI: A low-cost wearable device to determine location of the visually-impaired safely. IOP Conf. Ser. Mater. Sci. Eng. 2019, 674, 012039.

34. Borowska-Stefanska, M.; Wisniewski, S. Vehicle routing problem as urban public transport optimization tool. Comput. Assist. Methods Eng. Sci. 2016, 23, 213–229.

35. Wang, Z.; Bai, X.; Zhang, S.; He, W.; Zhang, X.; Yan, Y.; Han, D. Information-level real-time AR instruction: A novel dynamic assembly guidance information representation assisting human cognition. Int. J. Adv. Manuf. Technol. 2020, 107, 1463–1481.

36. Kipper, G.; Rampolla, J. Augmented Reality: An Emerging Technologies Guide to AR, 1st ed.; Syngress: San Francisco, CA, USA, 2012; p. 208. ISBN 9781597497336.

37. Cuervo, M.C.; Salamanca, J.G.Q.; Aldana, A.C.A. Ambiente interactivo para visualizar sitios turísticos, mediante la realidad aumentada implementando LAYAR. Cienc. E Ing. Neogranadina 2011, 21, 91–105.

38. Hakobyan, L.; Lumsden, J.; O’Sullivan, D.; Bartlett, H. Mobile Assistive Technologies for the Visually Impaired. Surv. Ophthalmol. 2013, 58, 513–528.

39. D’Otero, J.C.P.; Marquez, L.; Pena, N.A.M. Commute patterns and accessibility problems for physically impaired people in Tunja. Rev. Lasallista De Investig. 2017, 14, 20–29.

40. Basori, A.H. HapAR: Handy Intelligent Multimodal Haptic and Audio-Based Mobile AR Navigation for the Visually Impaired. In Technological Trends in Improved Mobility of the Visually Impaired; Paiva, S., Ed.; EAI/Springer Innovations in Communication and Computing; Springer: Cham, Switzerland, 2020.

41. Coughlan, J.; Miele, J. AR4VI: AR as an Accessibility Tool for People with Visual Impairments. In Proceedings of the International Symposium on Mixed Augmented Reality, Nantes, France, 9–13 October 2017; pp. 288–292.

42. Gleason, C.; Fiannaca, A.J.; Kneisel, M.; Cutrell, E.; Morris, M.R. FootNotes: Geo-referenced Audio Annotations for Nonvisual Exploration. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–24.

43. Cuervo, M.C.; Álvarez, L.M.; Cortes-Roa, A. Development of Tourism-Focused Mobile Applications in the Boyacá Department. Revista Virtual Universidad Católica Del Norte 2010, 29, 166–178.

44. Geruschat, D.; Dagnelie, D. Assistive Technology for Blindness and Low Vision, 1st ed.; CRC Press: Boca Raton, FL, USA, 2013; p. 441. ISBN 9781138073135.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).