Street Design for Hedonistic Sustainability through AI and Human Co-Operative Evaluation

Abstract

1. Introduction

1.1. Research Background

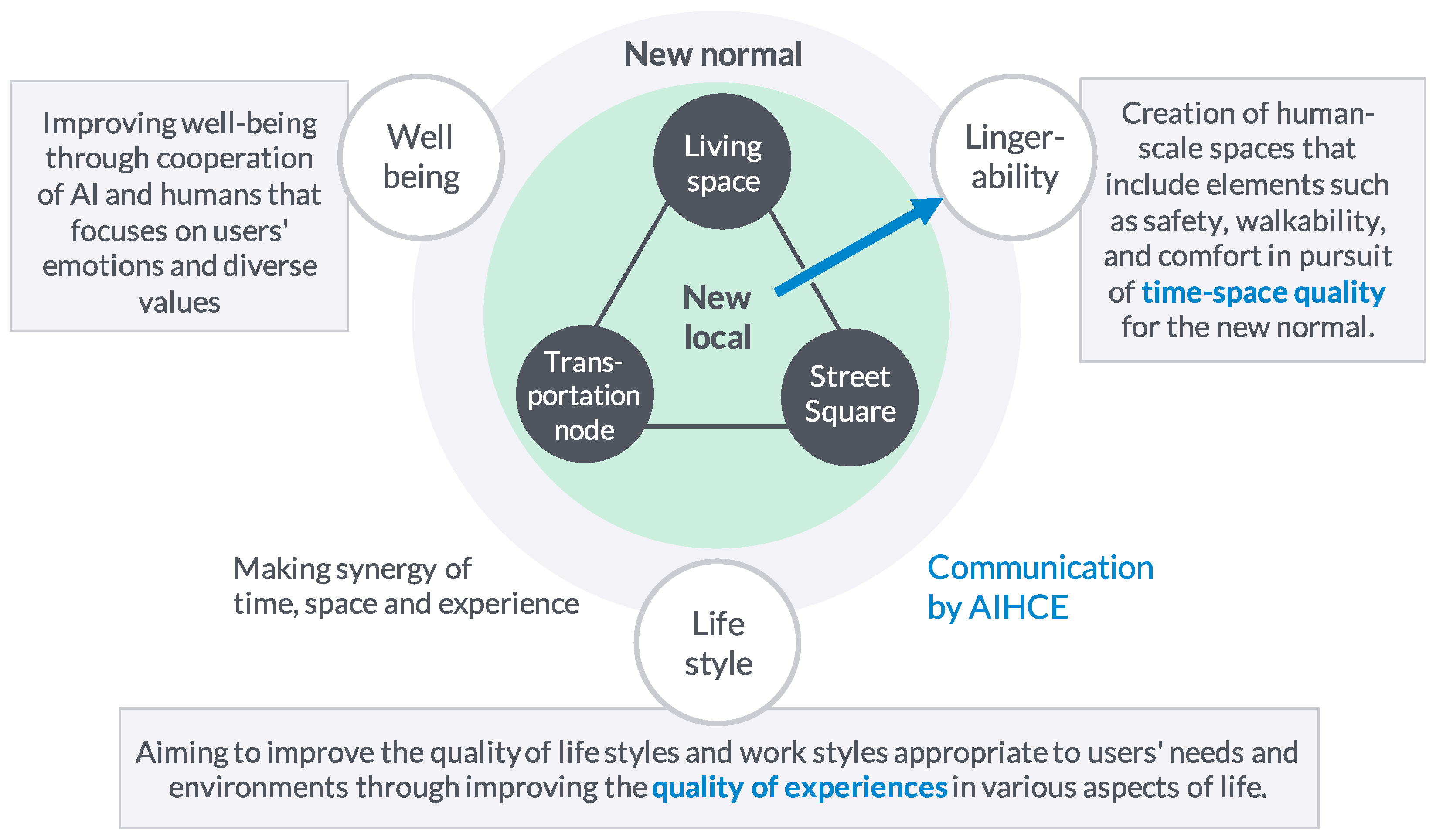

1.2. Conceptual Framework

1.3. Objectives

2. Literature Review

2.1. Co-Operative Design of Street Space

2.2. Evaluation Based on AI Image Processing

3. Methods

3.1. Data Collection of Street Space Images

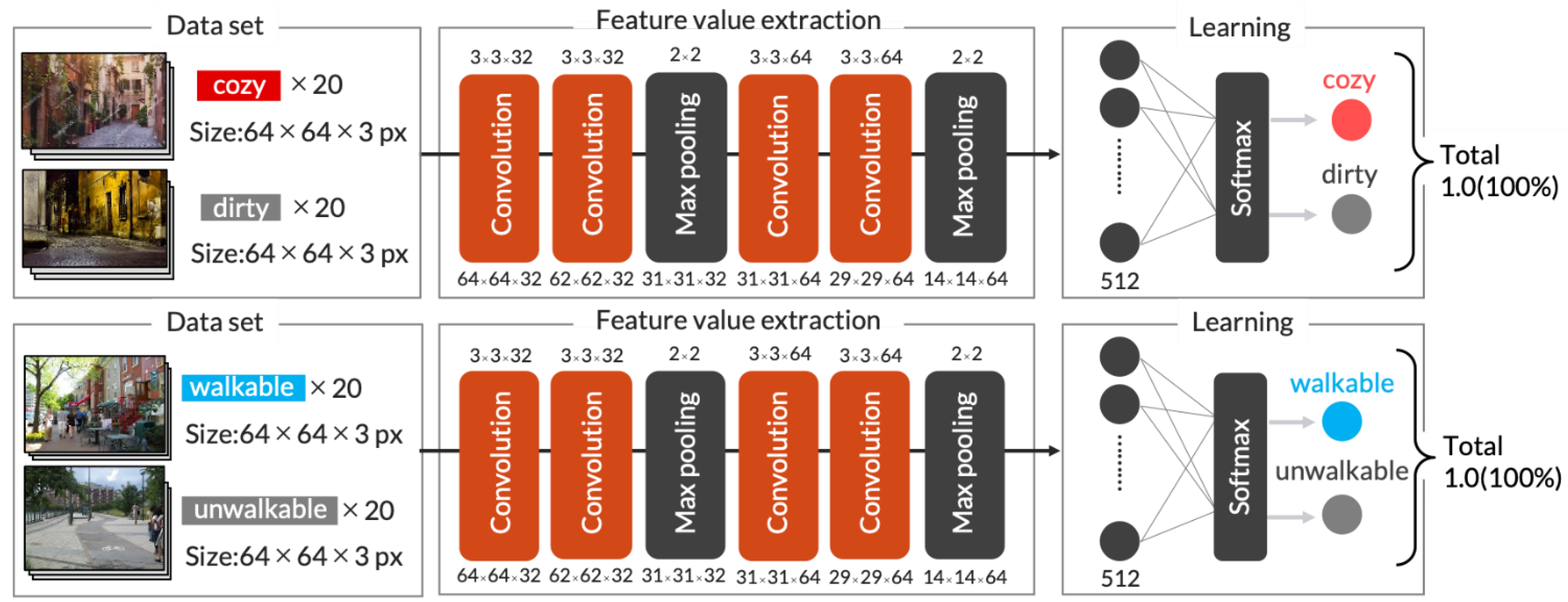

3.2. Development of CNN Models

3.3. Experiment Using Street Image Evaluation Model (SIEM)

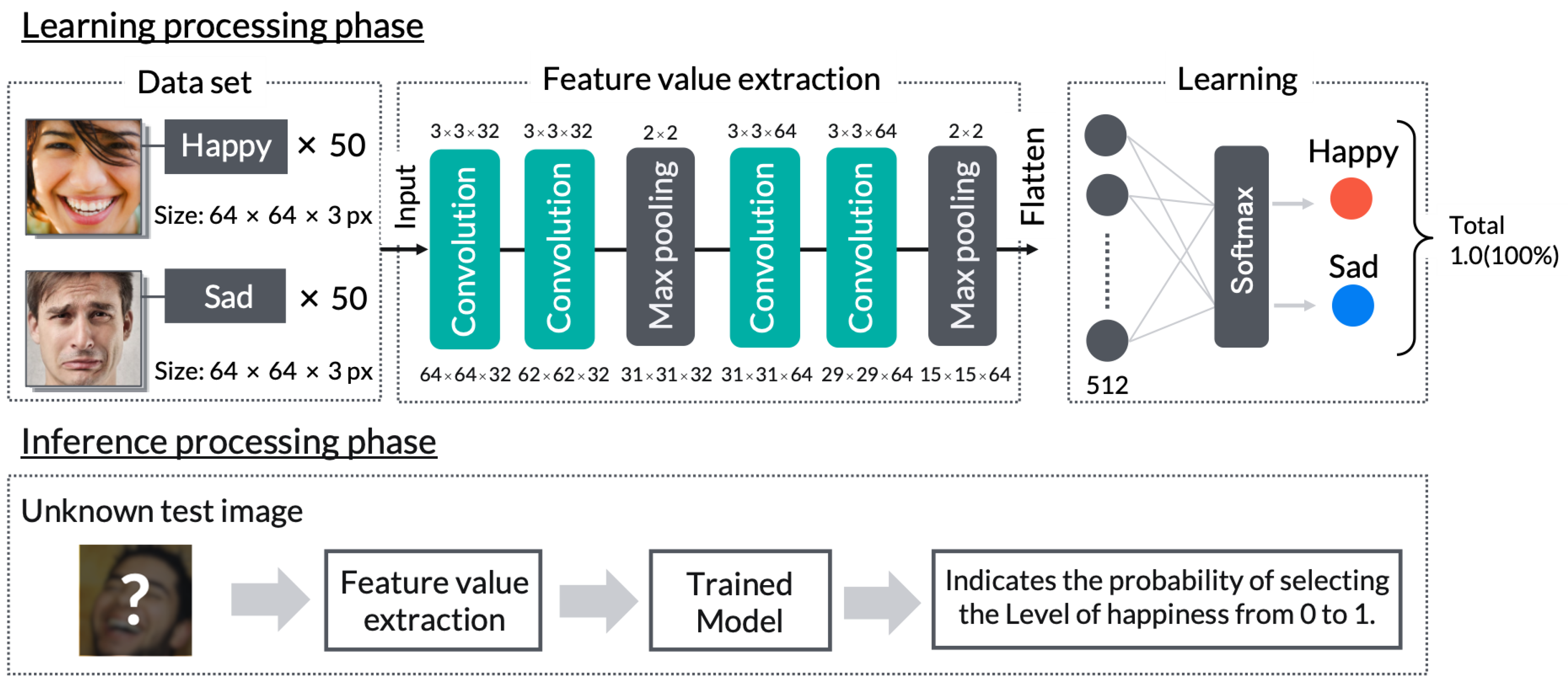

3.4. Experiment Using Facial Expression Recognition Model (FERM)

3.5. Supplemental Questionnaire Survey to Elaborate the FERM

4. Results

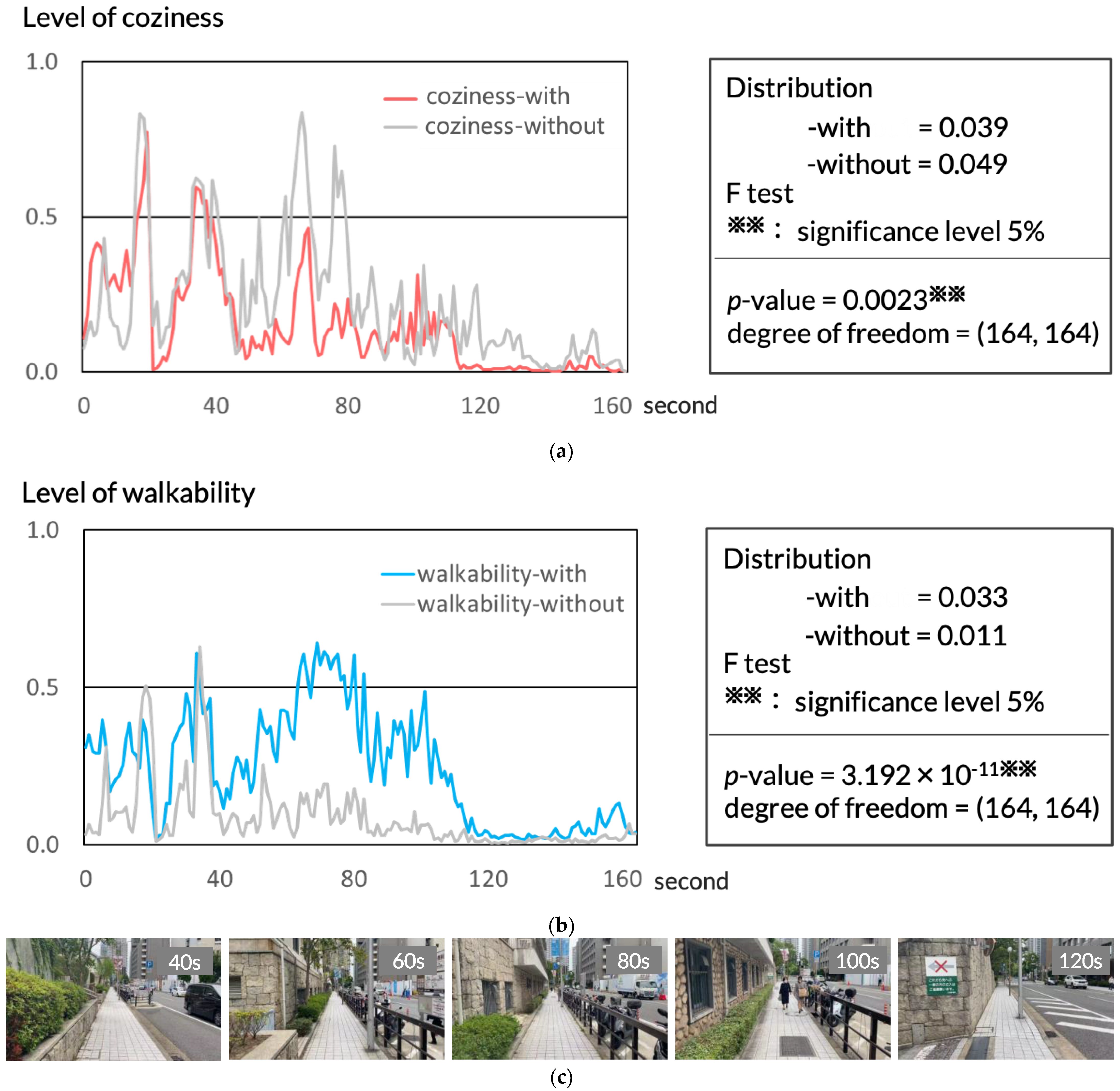

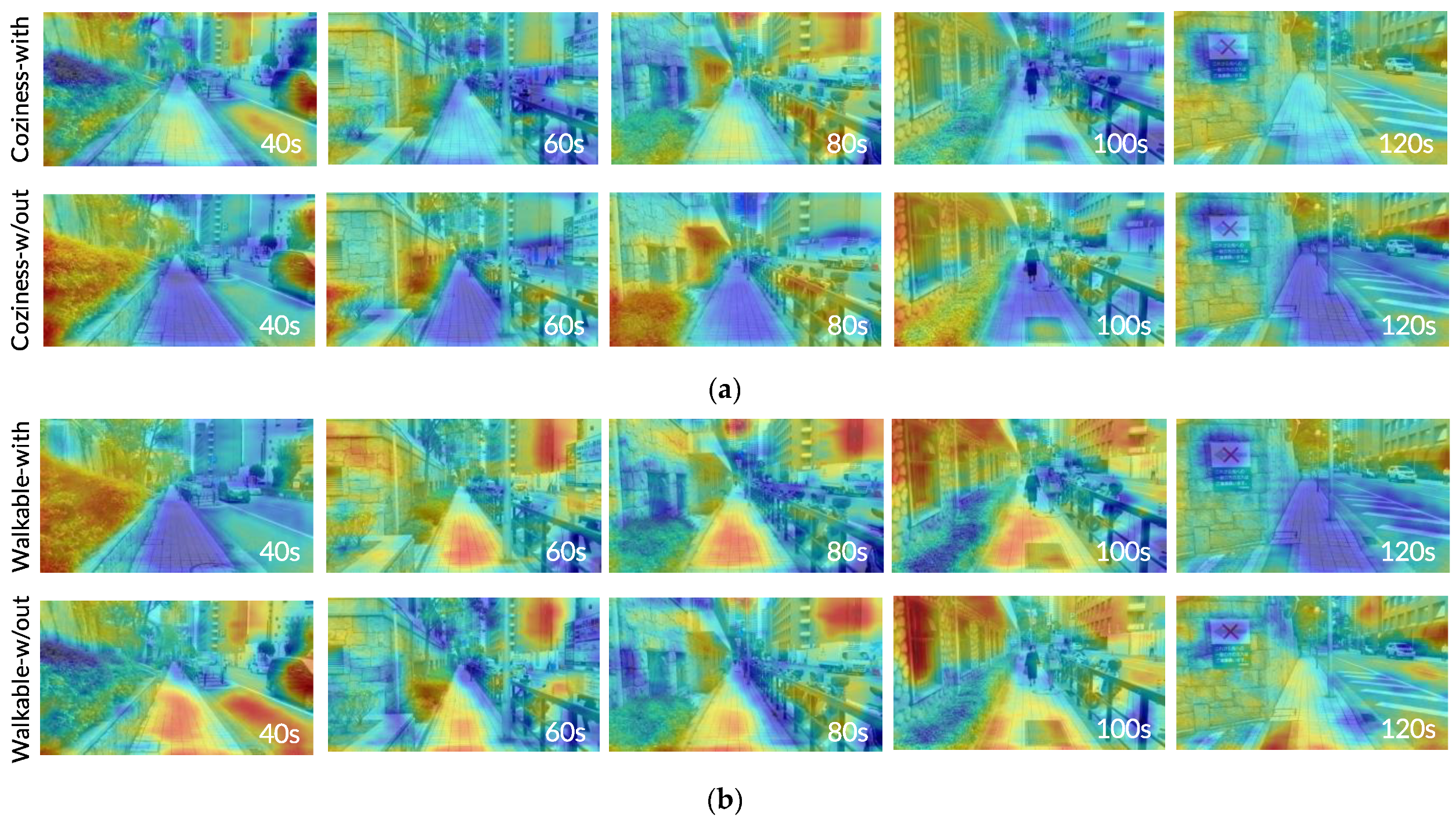

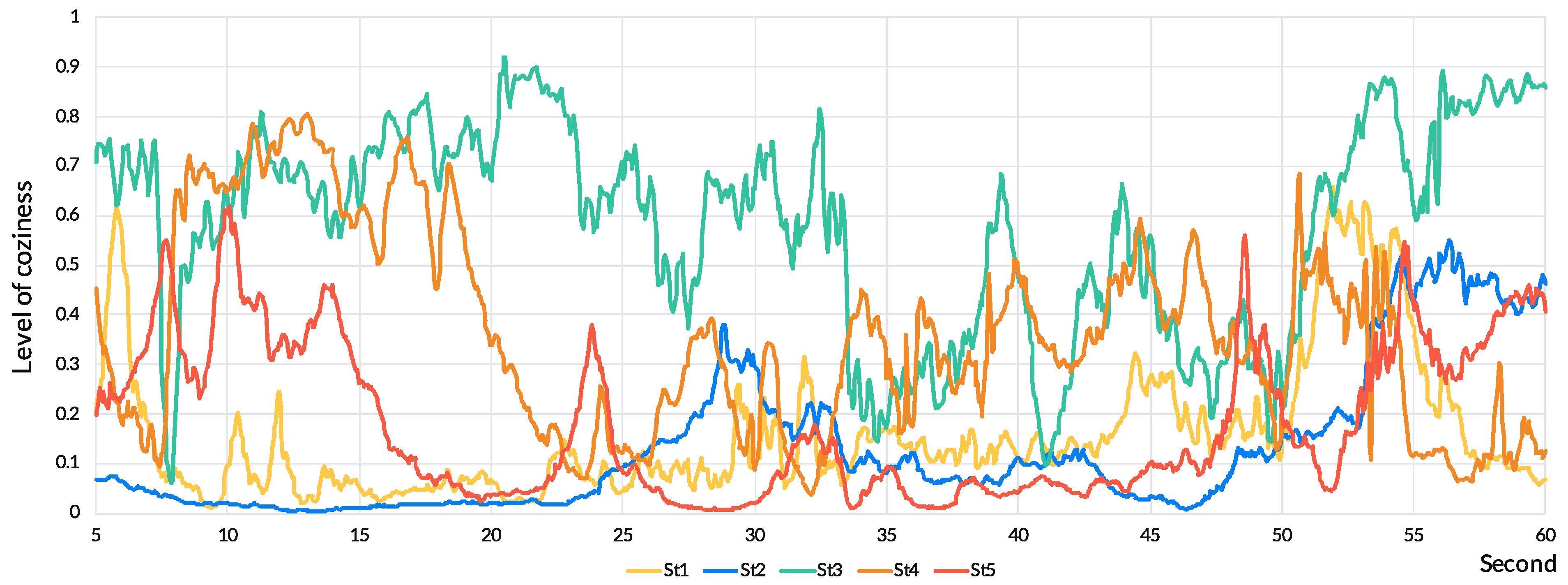

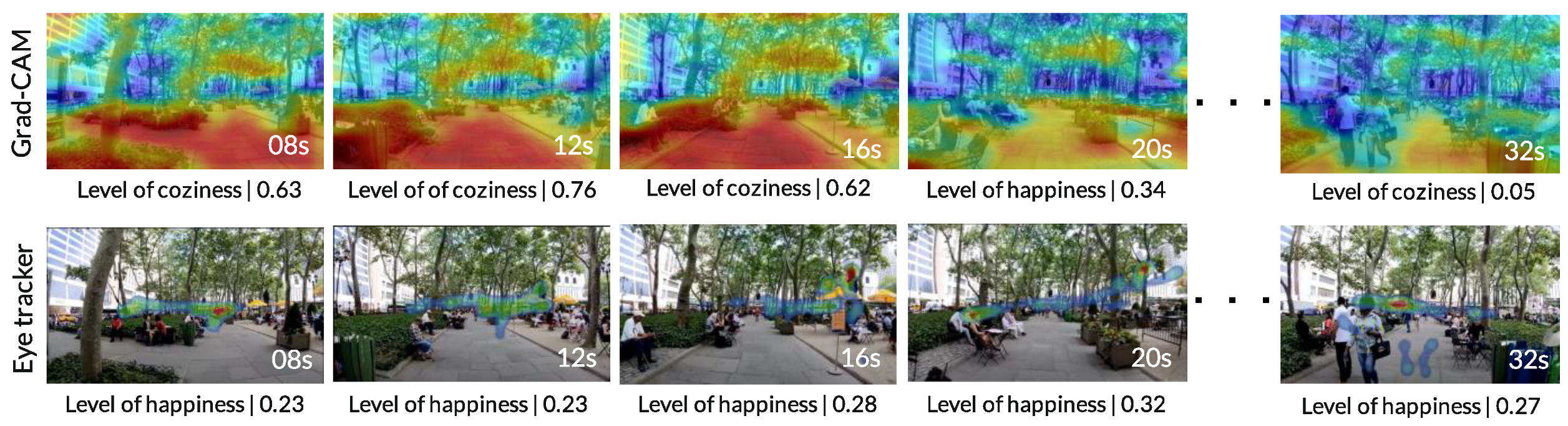

4.1. Model Behavior Based on User Feedback

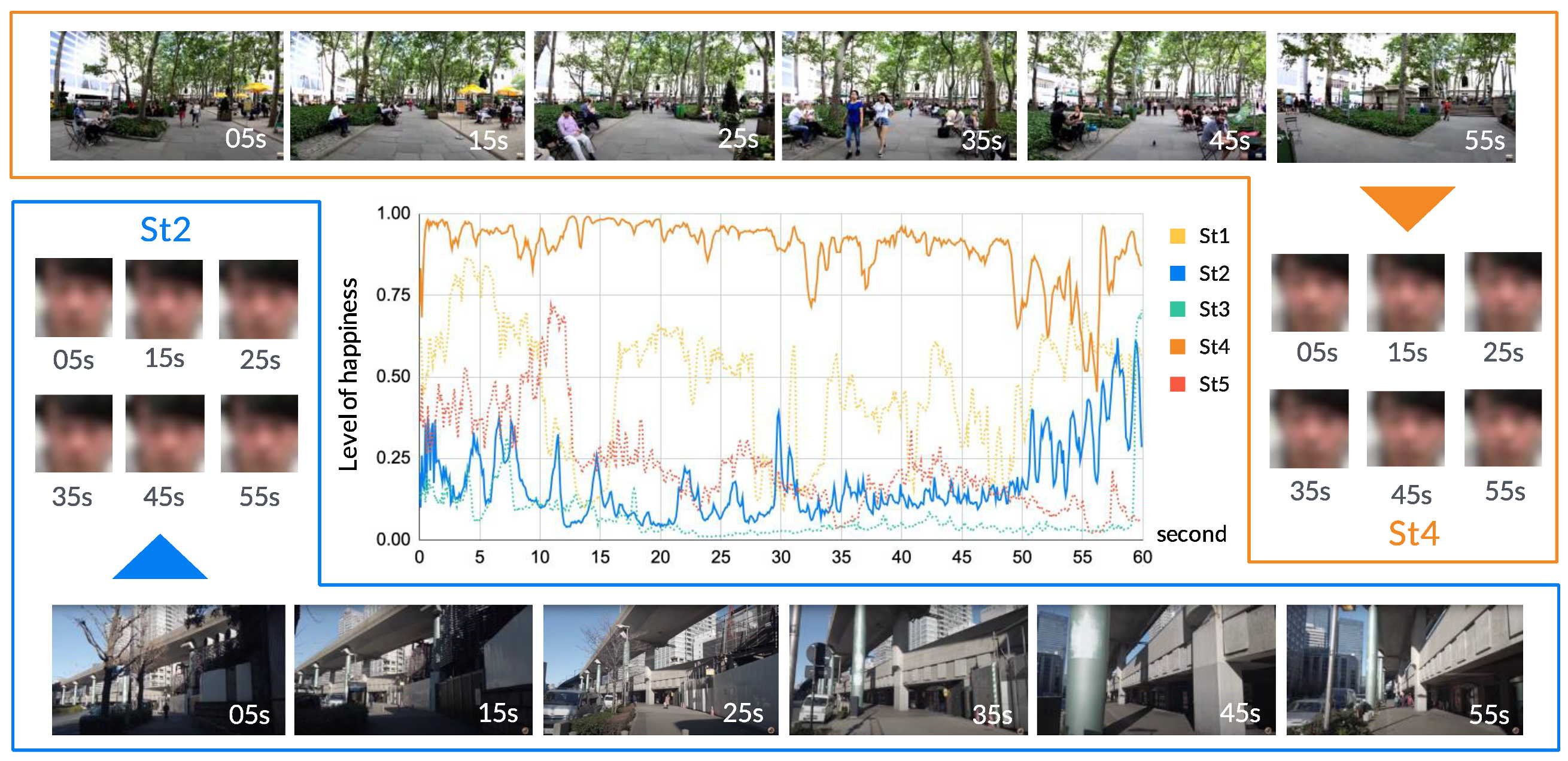

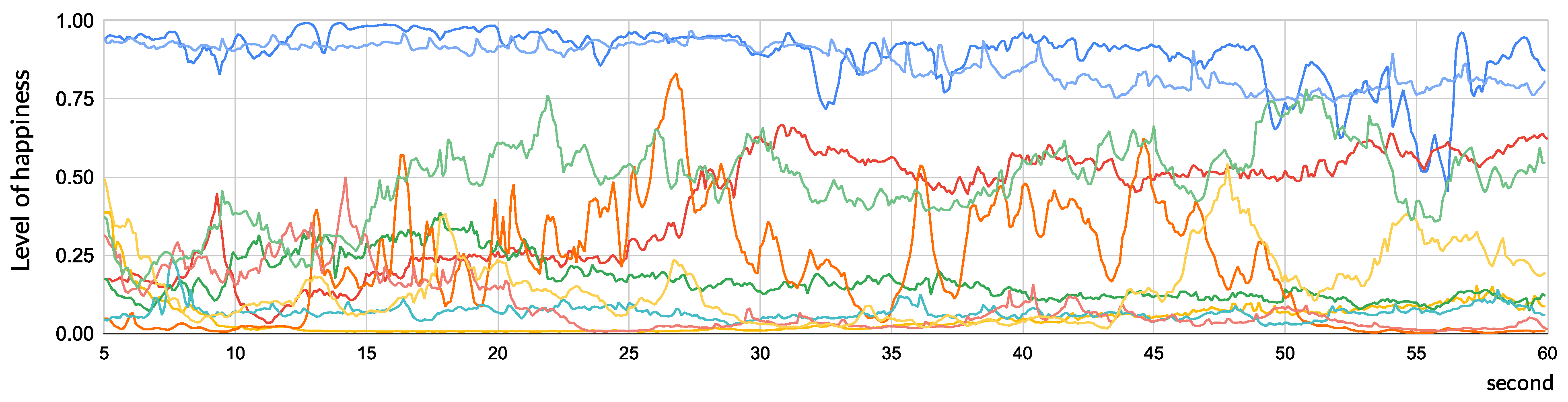

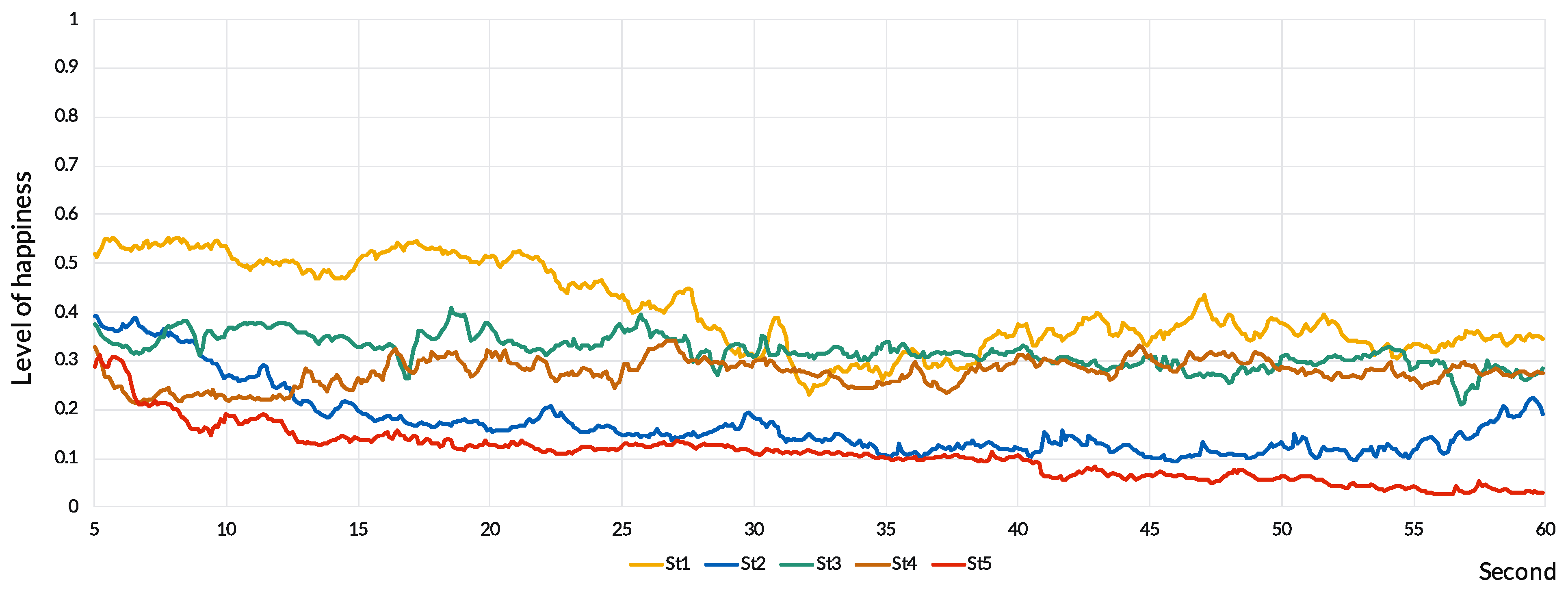

4.2. Evaluation of Street Space Performance Based on Estimated Facial Expressions and Judged Emotions

4.3. Toward the Integrated Evaluation of SIEM and FERM

4.4. Validation under Limited Data Conditions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Level of Happiness | 5–10 s | 10–15 s | 15–20 s | 20–25 s | 25–30 s | 30–35 s | 35–40 s | 40–45 s | 45–50 s | 50–55 s | 55–60 s |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.75–1.00 | 22.2% | 22.2% | 22.2% | 22.4% | 23.6% | 20.9% | 22.2% | 22.2% | 20.0% | 16.9% | 18.9% |
| 0.50–0.75 | 0.0% | 0.2% | 7.1% | 9.6% | 13.6% | 16.0% | 6.4% | 20.0% | 19.8% | 24.7% | 19.8% |
| 0.25–0.50 | 15.6% | 22.4% | 22.2% | 15.6% | 17.3% | 9.6% | 22.7% | 11.6% | 18.0% | 5.8% | 12.4% |
| 0.00–0.25 | 73.3% | 66.2% | 59.6% | 63.6% | 56.7% | 64.7% | 59.8% | 57.3% | 53.3% | 63.8% | 60.0% |

| Evaluation Method | Indicator | St1 | St2 | St3 | St4 | St5 |
|---|---|---|---|---|---|---|
| Models | Level of coziness by SIEM | 0.16 | 0.13 | 0.58 | 0.36 | 0.18 |
| | Level of happiness by FERM | 0.41 | 0.17 | 0.29 | 0.34 | 0.11 |
| Questionnaire (degree of agreement with stated emotions) | “Lingerable” | 2.8 | 3.3 | 5.0 | 4.8 | 4.8 |
| | “Pleasant” | 2.6 | 1.8 | 3.5 | 3.9 | 4.1 |
| | “Unpleasant” | 2.9 | 2.1 | 1.1 | 0.9 | 0.8 |
| | “Pleasant and unaroused” | 0.8 | 2.3 | 3.5 | 3.3 | 4.2 |
| | “Pleasant and aroused” | 4.3 | 1.3 | 3.5 | 4.6 | 4.0 |
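The table above places the model outputs (SIEM coziness, FERM happiness) next to the questionnaire ratings for the same five streets. One simple way to check how closely the two kinds of evaluation agree is a Pearson correlation across streets. The sketch below is illustrative only: the choice of Pearson correlation and the names `pearson` and `compare` are assumptions made here, not the authors' method, and with only five streets any coefficient is at most a rough indication.

```python
from math import sqrt

# Values transcribed from the table above, ordered St1..St5.
siem_coziness = [0.16, 0.13, 0.58, 0.36, 0.18]
ferm_happiness = [0.41, 0.17, 0.29, 0.34, 0.11]
questionnaire = {
    "Lingerable": [2.8, 3.3, 5.0, 4.8, 4.8],
    "Pleasant": [2.6, 1.8, 3.5, 3.9, 4.1],
    "Unpleasant": [2.9, 2.1, 1.1, 0.9, 0.8],
    "Pleasant and unaroused": [0.8, 2.3, 3.5, 3.3, 4.2],
    "Pleasant and aroused": [4.3, 1.3, 3.5, 4.6, 4.0],
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def compare(model_scores):
    """Correlate one model's street scores with every questionnaire item."""
    return {
        label: pearson(model_scores, ratings)
        for label, ratings in questionnaire.items()
    }


if __name__ == "__main__":
    for name, scores in (("SIEM", siem_coziness), ("FERM", ferm_happiness)):
        for label, r in compare(scores).items():
            print(f"{name} vs {label}: r = {r:+.2f}")
```

Running the script prints one coefficient per model and questionnaire item. A rank-based measure such as Spearman's could be substituted if the questionnaire scale is treated as ordinal.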

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sou, K.; Shiokawa, H.; Yoh, K.; Doi, K. Street Design for Hedonistic Sustainability through AI and Human Co-Operative Evaluation. Sustainability 2021, 13, 9066. https://doi.org/10.3390/su13169066
