Abstract

The COVID-19 outbreak has abruptly changed the landscape of education worldwide. Many governments moved education entirely online on the premise that although schools are shut, classes can continue; however, whether teachers are prepared for this massive shift in educational practice remains an open question. This study addresses this issue through the lens of teachers’ technology, learners, pedagogy, academic discipline content, and context knowledge (TLPACK). Two groups of 250 teachers (n = 500) teaching at various school levels participated in a two-phase survey conducted in 2017 and 2020 (i.e., before and during the COVID-19 pandemic). Participants answered 38 TLPACK items of established reliability and validity, addressing three research questions, and the data were subjected to frequentist and Bayesian statistical analyses. The analyses indicated that teachers’ TLPACK differed significantly before and during the COVID-19 pandemic. This study also revealed significant correlations among the TLPACK constructs, the strongest being that between learner knowledge (i.e., knowledge about the learners) and pedagogy knowledge. The study closes by reflecting on the implications of these findings, especially as the COVID-19 pandemic continues to reshape both education and society at large.

1. Introduction

COVID-19, the disease caused by a novel coronavirus provisionally designated 2019-nCoV, first appeared in Wuhan, Hubei Province, China, in December 2019 [1]. On 30 January 2020, the World Health Organization [2] declared the COVID-19 outbreak a Public Health Emergency of International Concern. During the initial outbreak, some countries, such as China, locked down several cities and closed schools. At the time of writing, around 400 million students in China were unable to attend classes in person because of these policies [3], all university lectures were being conducted online, and many universities had moved all their operations online [4]. High-quality information and communication technology (ICT) has been vital infrastructure for governments that wish to sustain regular educational activities during the pandemic [5]. Although these technologies create new opportunities for learning, they also present challenges for teachers [6], who are expected to acquire new content- and pedagogy-related knowledge and develop new technical skills to offer effective and meaningful instruction [7,8]. The circumstances of the pandemic are very different from the relatively controlled conditions under which online education has previously been tested and studied [9]. Scholarly assessments of online education during the pandemic should therefore treat it as occurring “during a special period and in a special environment” [10]. Before COVID-19, many schools and countries were not ready to move their educational activities completely online [11], and it seems overly optimistic to assume that online education alone can ensure the continuity of education, given that some students lack Internet access and that training teachers remotely to use Internet-based technologies is difficult [3].

In today’s heavily digitized world, teachers’ technological pedagogical content knowledge (TPACK) is a critical component of their ability to leverage educational technology and teach effectively [12], because students’ communication and learning styles are strongly shaped by the increasingly digital environment in which they have grown up [13]. However, previous studies of teachers’ TPACK predate the pandemic and have not considered knowledge about learners and context [14,15,16]. Hsu and Chen [15] proposed adding these two factors and built the technology, learners, pedagogy, academic discipline content, and context knowledge (TLPACK) model to describe in detail the types of knowledge that teachers at various levels should possess. TLPACK draws on TPACK [8]; on information and communication technology-related technological pedagogical content knowledge (ICT-TPCK) [14]; and on TPACK-XL [16], which covers education technology, pedagogy and didactics, academic subject-matter discipline, educational psychology, and educational sociology knowledge. Scholars have advised teachers to tailor their teaching strategies to students’ characteristics [17] and suggested that these characteristics will affect online education during the COVID-19 pandemic [18]. This study is therefore significant because it not only assesses teachers’ TLPACK during the pandemic but also compares the TLPACK of two groups of teachers, one surveyed before and one during the pandemic. It asks the following three research questions (RQs):

RQ1: Are there significant differences in the TLPACK of teachers required to implement online education before and during the COVID-19 pandemic?

RQ2: Do teachers of various levels have different TLPACK regarding online education before and during the COVID-19 pandemic?

RQ3: What are the relationships between the constructs of teachers’ TLPACK during the COVID-19 pandemic?

2. Literature Review

2.1. Educational Technology Used during the COVID-19 Pandemic

The advent of the ICT era has drastically changed educational praxis on campuses. Discussions of the efficacy of integrating educational technology into instruction have become widespread [19], because new technologies such as Web 2.0 and mobile devices (e.g., the smartphones and tablets discussed by Martin et al. [20]) have changed the landscape of classroom teaching [21]. Implementing online education poses various challenges for teachers because it often requires them to learn or apply new technological knowledge and skills. Bao [22] suggests that teachers should be acquainted with their students’ learning and cognitive levels so that they can better match instructional activities to students’ online learning behavior. Angeli and Valanides [14] suggested that teachers’ practical ability to use technology is vital to integrating it into their teaching and creating new learning opportunities, while Aydin et al. [23] pointed out that technology is only a tool for improving teaching practice. As Bai et al. [24] asserted, when ICTs are integrated into instructional activities, teachers should guide students so as to improve their learning outcomes effectively.

As educational technology becomes more prevalent [25], its application in education is inevitable [26]. However, not all stakeholders in education were prepared for large-scale school closures such as those caused by the COVID-19 pandemic, which transformed traditional face-to-face teaching into online teaching within a very short period [27]. Such abrupt disruptions to people’s lifestyles have propelled a society-wide digitization process, and this transformation differs from earlier ones, which allowed time for carefully designed online learning environments. Accordingly, software products from Microsoft, Google, and Zoom, previously used mainly for business communication and conferences, have been adopted for online teaching in response to the pandemic. These companies offered their communication software free of charge to help schools around the world deliver online education [11,28]. Microsoft made a premium version of its Teams software available for six months, and teachers have praised its usefulness, ease of use, and secure cloud storage [5]. Google Meet has been used successfully to train medical students in the UK [29], and Zoom has become a popular tool for online education despite concerns about user privacy [30]. Whereas planning and preparation for a fully online course would typically be completed six to nine months before the course began [9], the circumstances of the COVID-19 pandemic forced both teachers and students into online education with little or no preparation. Institutions also had to coordinate teachers and support staff. For example, under one instructional plan of action (IPA) for implementing online education, all teachers and related personnel were required to follow a single line of communication: “All communication was to be through the faculty lead, who was to relay the information to the Department Chair, with regard to the academic, instructional or personal needs for their respective courses and students (training, instructional equipment needed by faculty or students, counseling services, writing center, program requirements, etc.)” [27].

Synchronous online video-conferencing systems (e.g., Zoom, Microsoft Teams, Hangouts, and FaceTime) have been used as substitutes for face-to-face instruction, with face validity for interaction [31]. Videoconferencing will never fully replace physical classrooms, as the two settings differ in their communicational dynamics and multimodal characteristics [32]. Nonetheless, in a climate of social distancing, these systems can help participants experience a sense of meaning and presence through their affordance of real-time audio and video interaction [31,33]. Some caveats have been noted, however: situational constraints, such as limited broadband and hardware, restrict the teaching of practical skills and make long lectures the more realistic format [34]. Given the limited time available for switching from classroom instruction to virtual environments, synchronous video-conferencing systems may not be a perfect solution, but they can be considered a viable alternative. In this context, it seems inevitable that the COVID-19 pandemic will create opportunities to develop and cultivate new pedagogical knowledge and strategies [35].

2.2. TLPACK

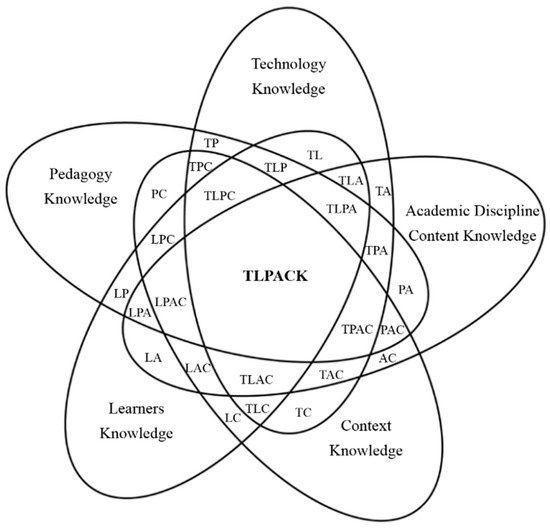

It is important to understand teachers’ readiness to face the challenges of moving education online in terms of their knowledge in various domains. As mentioned above, scholars often use the TPACK framework to discuss teachers’ knowledge regarding the design, implementation, and evaluation of technology-based education. Many studies have applied the TPACK model to technology-based education [7,36,37,38,39] and related subjects [40,41,42]. Despite its prevalence and success, the TPACK model has limitations. For example, Angeli and Valanides [14] pointed out that the TPACK framework does not consider factors other than technology, content, and pedagogical knowledge, which may lead to errors in designing integrated instruction. Hsu and Chen [15] therefore suggested that scholars include more variables in the TPACK framework to better capture the ever-changing landscape of technology-based education. They developed their own framework, TLPACK, from the TPACK [8], ICT-TPCK [14], and TPACK-XL [16] frameworks. Each of these models builds on the categories of teacher knowledge proposed by Shulman [43], who postulated seven kinds of knowledge that teachers should possess, and extends them to the knowledge teachers need to evaluate and integrate emerging technologies effectively. The five knowledge components of TLPACK are listed below and modeled in Figure 1.

Figure 1.

The TLPACK model (Hsu and Chen, 2019).

- Technology knowledge: teachers’ technological literacy and acuity, including their ability to learn new technological skills, operate technology and integrate technology into teaching activities.

- Learner knowledge (knowledge about learners): teachers’ ability to distinguish different learners’ characteristics (including their personal, learning, and cognitive traits) and adjust their teaching methods accordingly.

- Pedagogy knowledge: teachers’ ability to plan, adapt, and apply classroom management skills and teaching methods to optimize their teaching practice.

- Academic discipline content knowledge: teachers’ mastery and understanding of the domain knowledge of the subjects they teach.

- Context knowledge: teachers’ ability to create an appropriate environment for students, including their ability to adjust the teaching environment in compliance with administrative regulations.

Compared with the original TPACK model, the TLPACK model has two additional elements: teachers’ knowledge of learners and of context. Regarding knowledge about learners, an in-depth understanding of learners’ characteristics and behaviors can help teachers make sound teaching decisions, which can have a positive impact on students’ learning outcomes [44]. Teachers cannot rely on students’ self-reports alone: as Kirschner [45] notes, a student’s professed learning style may differ greatly from the methods through which he or she actually learns effectively. Teachers therefore need to be able to identify each student’s learning characteristics [46], especially in online learning, and a good online learning platform must be designed around learners’ characteristics [46,47,48]. Regarding contextual knowledge, learning cannot be separated from the context in which it occurs [49], and a teacher’s pedagogy and use of technology will inevitably be affected by the context in which he or she teaches [23]; teachers thus need to choose strategies that fit the context to obtain the best teaching results [50]. According to Angeli and Valanides [14], these two elements complement what TPACK lacks, which is the primary reason this study adopted the TLPACK model. However, some scholars, such as Bai et al. [24], have suggested that technology-based learning is not suitable for all subjects and that many factors need to be considered when integrating technology into teaching. Similarly, Delgado et al. [51] reported that schools’ investments in educational technology have not yielded the expected returns: actual classroom use of technology remains low despite extensive investment. That is, there is no one-size-fits-all standard for the applicability of educational technology across subject matters [52]. Nevertheless, governments’ responses to the COVID-19 pandemic have left teachers and students with little choice but to forge ahead with online education.

This study aimed to examine teachers’ TLPACK in the unique context of the COVID-19 pandemic by comparing teachers’ responses to the same TLPACK questionnaire before and during the pandemic. In doing so, it seeks to clarify the specific problems and dilemmas that teachers currently face in implementing online education and to propose targeted solutions and suggestions. Based on the RQs and the literature review, the following research hypotheses (RHs) were proposed:

RH1: There is a significant difference between teachers’ TLPACK before and during the COVID-19 pandemic.

RH2: Teachers of various levels will have significantly different TLPACK results before and during the COVID-19 pandemic.

RH3: There is a significant relationship between the various constructs of teachers’ TLPACK during the COVID-19 pandemic.

3. Method

3.1. Procedure and Participants

This study adopted quota sampling, based on demographic data on the populations of teachers at various levels surveyed and published by Taiwan’s Ministry of Education (MOE), to compare two groups of teachers in Taiwan at two points in time: one before and one during the COVID-19 pandemic. A full-scale survey of all teachers would be ideal, since larger samples reduce the probability of a Type II error and thus yield more accurate inferences about the actual situation. However, with very large samples, even trivially small differences can become statistically significant. The recommended sample size for regional studies is 500–1000 people [53]. To balance sample size and representativeness, we followed the sampling steps below.
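Why larger samples lower the Type II error rate can be illustrated with a normal-approximation power calculation for a two-sided, two-sample comparison with equal group sizes. This is a minimal sketch: the effect size d = 0.3 and the group sizes are hypothetical and not taken from the study’s data, and the z approximation slightly overstates power relative to an exact t-based calculation when samples are small.

```python
from statistics import NormalDist


def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample test of a standardized
    mean difference d with equal group sizes (normal approximation)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Expected test statistic under H1: d * sqrt(n1 * n2 / (n1 + n2))
    noncentrality = d * (n_per_group / 2) ** 0.5
    # Probability of landing in either tail of the rejection region
    upper = 1 - std_normal.cdf(z_crit - noncentrality)
    lower = std_normal.cdf(-z_crit - noncentrality)
    return upper + lower


# Hypothetical effect size; alpha is held fixed at 0.05 throughout
for n in (50, 100, 250, 500):
    power = approx_power(0.3, n)
    print(f"n per group = {n:3d}: power = {power:.3f}, "
          f"Type II error = {1 - power:.3f}")
```

The significance level α is an input chosen by the analyst and is held fixed in this sketch; only the Type II error rate (1 − power) changes with n.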

In 2017, we initiated a survey of schools in Taiwan to understand teachers’ TLPACK in general and identified candidates who might be interested in participating. Once potential participants agreed to join, they were sent a link to the electronic questionnaire. In 2020, after the pandemic began, we asked the schools that had participated in the 2017 survey whether they were willing to take part again. We sent out 592 and 527 questionnaires in 2017 and 2020, respectively. After all questionnaires were collected, the valid ones were identified and then subjected to stratified random sampling based on the structure of the teacher population at each school level in Taiwan, so that the two groups’ demographic backgrounds would be as similar as possible. The resulting sample is roughly proportional to the number of full-time teachers at each school level in Taiwan (Table 1).

Table 1.

Detailed information about this study’s quota sampling method.

This study compared the TLPACK self-evaluations of two groups of teachers surveyed in different years. In both surveys, participants taught at a variety of levels, from elementary school to higher education, and self-reported their TLPACK on five-point Likert scales (see Appendix A Table A1). A pilot study was performed before the main survey to ensure the instrument’s reliability and validity. All participants completed an informed consent procedure on the questionnaire’s first page, which explained the study’s purpose in detail; the questionnaire began only after consent was given. Participants who wished to withdraw could simply leave the questionnaire web page, in which case no information was recorded. Only questionnaires that included the participant’s informed consent were collected and included in the statistical analyses. A total of 330 and 324 valid questionnaires were collected in 2017 and 2020, respectively (Table 2). Because these numbers exceeded the target, stratified random sampling was used to extract responses matching the proportion of teachers at each school level, yielding 250 questionnaires per year (n = 500).
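The proportional stratified random sampling step can be sketched as follows. This is an illustrative sketch only: the stratum names, population counts, and numbers of valid responses per stratum are hypothetical placeholders rather than the MOE figures behind Table 1, and the largest-remainder rounding rule is an assumption about how fractional quotas might be resolved.

```python
import random


def proportional_quotas(population: dict[str, int], total: int) -> dict[str, int]:
    """Split `total` draws across strata in proportion to population size,
    resolving fractional quotas with the largest-remainder method."""
    pop_total = sum(population.values())
    exact = {s: total * size / pop_total for s, size in population.items()}
    quotas = {s: int(x) for s, x in exact.items()}
    shortfall = total - sum(quotas.values())
    # Hand the leftover draws to the strata with the largest fractional parts
    by_remainder = sorted(exact, key=lambda s: exact[s] - quotas[s], reverse=True)
    for s in by_remainder[:shortfall]:
        quotas[s] += 1
    return quotas


def stratified_sample(responses: dict[str, list], quotas: dict[str, int],
                      seed: int = 2020) -> dict[str, list]:
    """Draw quotas[s] responses without replacement from each stratum s."""
    rng = random.Random(seed)
    return {s: rng.sample(responses[s], quotas[s]) for s in quotas}


# Hypothetical teacher population counts per school level (placeholders)
population = {"elementary": 90_000, "junior_high": 50_000,
              "senior_high": 35_000, "university": 45_000}
# Hypothetical valid questionnaires per level (330 in total)
valid = {level: [f"{level}-{i}" for i in range(count)]
         for level, count in {"elementary": 130, "junior_high": 80,
                              "senior_high": 60, "university": 60}.items()}

quotas = proportional_quotas(population, total=250)
sample = stratified_sample(valid, quotas)
print(quotas, sum(len(v) for v in sample.values()))
```

Each stratum must contain at least as many valid responses as its quota; otherwise `random.Random.sample` raises a `ValueError`, which signals that the quota cannot be met from the collected data.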

Table 2.

Questionnaire distribution and recovery.

3.2. Measurement and Data Analysis

The survey items were developed based on Hsu and Chen’s [16] method, whose psychometric properties have been evaluated and validated. The final draft of the questionnaire was piloted on a small sample to ensure comprehensibility. Rigorous translation precautions (e.g., back-translation) were taken, and two language professors were invited to verify the quality of the translated questionnaires. The questionnaire’s reliability and validity were examined via Cronbach’s alpha, exploratory factor analysis (EFA), confirmatory factor analysis (CFA), composite reliability (CR), and average variance extracted (AVE). EFA was conducted before CFA to examine the items’ loading pattern [54]. Maximum likelihood estimation and Promax rotation were adopted for EFA because they are the most prevalent in empirical studies [55]. The EFA was set to extract five factors based on the TLPACK framework, and factors with eigenvalues greater than 1 were retained, per Kaiser’s criterion [56]. Items with loadings below 0.40 were then excluded, per Deng’s suggestion [57], as were items whose primary loading exceeded their cross-loadings by less than 0.20. Thus, 38 items were retained for the surveys (see Table A1). The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy for the final questionnaire was 0.96, confirming the appropriateness of EFA. Each construct’s Cronbach’s alpha exceeded the threshold of 0.80 (TK = 0.90, LK = 0.93, PK = 0.88, AK = 0.94, and CK = 0.89, where TK, LK, PK, AK, and CK denote technology, learner, pedagogy, academic content, and context knowledge, respectively). The EFA results showed that all items had factor loadings above 0.48, higher than the cut-off value of 0.35 suggested by Hair et al. [58]. The CFA model fit indices were also satisfactory [59,60,61,62,63]. All constructs had high CR (TK = 0.89, LK = 0.93, PK = 0.88, AK = 0.94, CK = 0.88). Although the AVE of TK (0.46) was slightly below 0.50, Lam [64] suggests that a construct is acceptable if its AVE is below 0.50 but its CR exceeds 0.60. The other constructs’ AVE values also supported the questionnaire’s validity (see Table A2 and Table A3). Taken together, these indicators show that the questionnaire is a reliable and valid research instrument.
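The reliability and convergent-validity indices named above can be computed as follows. This is a minimal sketch, assuming a respondents-by-items score matrix for Cronbach’s alpha and standardized factor loadings for CR and AVE; the example responses and loadings are hypothetical and are not the study’s estimates. The last helper encodes the acceptance rule the text attributes to Lam [64].

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha; scores[r][i] is respondent r's answer to item i."""
    k = len(scores[0])
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def composite_reliability(loadings: list[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return s ** 2 / (s ** 2 + error)


def average_variance_extracted(loadings: list[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def convergent_validity_acceptable(cr: float, ave: float) -> bool:
    """AVE >= 0.50, or AVE < 0.50 with CR > 0.60 (rule cited from Lam [64])."""
    return ave >= 0.50 or cr > 0.60


# Hypothetical five-point Likert responses (rows = respondents, columns = items)
likert = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 3], [3, 4, 4]]
# Hypothetical standardized loadings for one construct
loadings = [0.62, 0.71, 0.66, 0.70, 0.68]

cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"alpha = {cronbach_alpha(likert):.2f}, CR = {cr:.2f}, AVE = {ave:.2f}, "
      f"acceptable = {convergent_validity_acceptable(cr, ave)}")
```

The hypothetical loadings were chosen to mirror the TK situation described above: AVE falls just under 0.50 while CR stays well above 0.60, so the rule still accepts the construct.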

Each RQ was addressed with a different statistical technique: an independent-samples t-test with Cohen’s d effect size for RQ1, analysis of variance (ANOVA) for RQ2, and Pearson correlation analysis for RQ3, all at a significance level of 0.05. Frequentist techniques rely on null hypothesis significance tests, which limits the possible interpretations; Bayes factor analysis was therefore also employed to compare the null and alternative hypotheses directly [65,66] through the ratio of their likelihoods [67,68,69]. Bayesian statistical analysis has attracted attention across academic disciplines, and using Bayesian and frequentist statistics together is encouraged because Bayesian parameter estimation can supply information that frequentist statistics lack [70,71,72,73]. The traditional frequentist approach, based on a p-value that does not consider the prior plausibility of H0, has been criticized as too simple to explain complicated situations and as liable to produce incorrect conclusions from noisy data [74]. Hence, a growing number of psychology journals expect Bayesian approaches to data analysis [75], and Bayes factors have been proposed for hypothesis testing [73,76]. Following prior studies [74,77], the Bayes factor for the two-sample t-test, defined as the ratio of the marginal likelihoods under H1 and H0, was computed with JASP (JASP Team 2018, jasp-stats.org, accessed on 4 May 2020). The principal advantage of Bayesian statistics is that it is more intuitive than traditional statistics because it does not rely on a p-value to accept or reject the null hypothesis [71,78]. For these reasons, the present study also employed Bayes factors to estimate support for the research hypotheses directly. 
Statistical analyses were performed using AMOS 21.0, SPSS 22 (IBM, Chicago, IL, USA), and R 3.6.3 (distributed under the Free Software Foundation’s GNU General Public License).
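The default Bayes factor for an independent-samples t-test can be sketched from the t statistic and the two group sizes alone. This is a minimal sketch of the Jeffreys-Zellner-Siow (JZS) Bayes factor of Rouder et al. (2009), with a Cauchy prior on the standardized effect size; the prior scale r = √2/2 ≈ 0.707 matches JASP’s default, but the numerical-integration settings are assumptions, so results may differ slightly from JASP’s output. The t value in the usage line is hypothetical.

```python
import math


def jzs_bf10(t: float, n1: int, n2: int, r: float = math.sqrt(2) / 2,
             lo: float = -20.0, hi: float = 30.0, steps: int = 20_000) -> float:
    """JZS Bayes factor BF10 for a pooled-variance independent-samples t statistic,
    with a Cauchy(0, r) prior on the standardized effect size.

    The integral over the variance parameter g is taken on a log scale
    (g = e^u, so dg = g du) with the trapezoid rule over u in [lo, hi].
    """
    nu = n1 + n2 - 2                  # degrees of freedom
    n_eff = n1 * n2 / (n1 + n2)       # effective sample size
    # Log marginal likelihood under H0 (constants shared with H1 cancel)
    log_m0 = -(nu + 1) / 2 * math.log1p(t * t / nu)

    def log_integrand(u: float) -> float:
        g = math.exp(u)
        # Inverse-gamma(1/2, r^2/2) prior on g, which induces the Cauchy(0, r) prior
        log_prior = (math.log(r) - 0.5 * math.log(2 * math.pi)
                     - 1.5 * u - r * r / (2 * g))
        log_lik = (-0.5 * math.log1p(n_eff * g)
                   - (nu + 1) / 2 * math.log1p(t * t / ((1 + n_eff * g) * nu)))
        return log_prior + log_lik + u  # "+ u" is the Jacobian of g = e^u

    h = (hi - lo) / steps
    logs = [log_integrand(lo + i * h) for i in range(steps + 1)]
    peak = max(logs)
    # Trapezoid rule on exp(log - peak) to avoid overflow and underflow
    total = sum(math.exp(v - peak) for v in logs)
    total -= 0.5 * (math.exp(logs[0] - peak) + math.exp(logs[-1] - peak))
    log_m1 = peak + math.log(h * total)
    return math.exp(log_m1 - log_m0)


# Hypothetical t statistic for two groups of 250 teachers
print(f"BF10 = {jzs_bf10(2.5, 250, 250):.2f}")
```

Values of BF10 above 1 favor H1 and values below 1 favor H0; the reciprocal 1/BF10 gives BF01.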

4. Results

RQ1 asks whether teachers’ TLPACK regarding online education differed significantly before and during the COVID-19 pandemic. Independent-samples t-tests confirmed that significant differences exist; detailed results are presented in Table 3.
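For reference, the independent-samples t statistic and Cohen’s d can be computed as below. This sketch assumes the pooled-variance (Student) form of the t-test and a pooled standard deviation for d; the score lists are hypothetical and are not the study’s data.

```python
import math
from statistics import mean, variance


def pooled_sd(x: list[float], y: list[float]) -> float:
    """Pooled standard deviation of two independent samples."""
    n1, n2 = len(x), len(y)
    return math.sqrt(((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2))


def student_t(x: list[float], y: list[float]) -> float:
    """Pooled-variance independent-samples t statistic (df = n1 + n2 - 2)."""
    se = pooled_sd(x, y) * math.sqrt(1 / len(x) + 1 / len(y))
    return (mean(x) - mean(y)) / se


def cohens_d(x: list[float], y: list[float]) -> float:
    """Standardized mean difference using the pooled standard deviation."""
    return (mean(x) - mean(y)) / pooled_sd(x, y)


# Hypothetical construct scores (means of Likert items) for two small groups
scores_2017 = [4.0, 5.0, 4.0, 5.0, 3.0]
scores_2020 = [3.0, 4.0, 3.0, 4.0, 2.0]
print(f"t = {student_t(scores_2017, scores_2020):.3f}, "
      f"d = {cohens_d(scores_2017, scores_2020):.3f}")
```

The two quantities are linked by t = d·√(n1n2/(n1 + n2)), so with 250 teachers per group even d = 0.22 corresponds to roughly t ≈ 2.46.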

Table 3.

Detailed information regarding the t-tests of teachers’ TLPACK before and during the COVID-19 pandemic.

Based on the frequentist analysis, RH1 is supported: teachers surveyed in 2017 rated their TLPACK higher than those surveyed in 2020. According to the benchmarks proposed by Cohen and Sawilowsky [80,81], learner knowledge and pedagogy knowledge had the largest effect sizes (Cohen’s d = 1.10 and 0.89, respectively), academic content knowledge and context knowledge had medium effect sizes (d = 0.76 and 0.53, respectively), and technology knowledge had a small effect size (d = 0.22). The Bayes factor analysis indicated decisive evidence that teachers’ context knowledge differed before and during the COVID-19 pandemic (BF = 1,679,488) and substantial evidence that their academic content knowledge differed between the two periods (BF = 7.78). For the remaining constructs, the Bayes factors provided only anecdotal evidence [79].
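The verbal labels used above can be made explicit as threshold rules. In this sketch, the effect-size cut-offs follow Cohen’s benchmarks (0.2, 0.5, 0.8) as extended by Sawilowsky (0.01, 1.2, 2.0), and the Bayes factor categories are assumed to follow Jeffreys’ scale as commonly adapted in the JASP literature (1 to 3 anecdotal, 3 to 10 substantial, 10 to 30 strong, 30 to 100 very strong, above 100 decisive). Whether reference [79] uses exactly these boundaries is an assumption, and the label "negligible" below 0.01 is a placeholder introduced here.

```python
def effect_size_label(d: float) -> str:
    """Label |d| using Cohen's benchmarks extended by Sawilowsky."""
    cutoffs = [(2.0, "huge"), (1.2, "very large"), (0.8, "large"),
               (0.5, "medium"), (0.2, "small"), (0.01, "very small")]
    for cut, label in cutoffs:
        if abs(d) >= cut:
            return label
    return "negligible"  # placeholder label, not part of either benchmark


def bf_evidence_label(bf10: float) -> str:
    """Label a Bayes factor on an (assumed) Jeffreys-style evidence scale."""
    if bf10 < 1:
        # Evidence for H0: label the reciprocal and state its direction
        return bf_evidence_label(1 / bf10) + " (favoring H0)"
    for cut, label in [(100, "decisive"), (30, "very strong"),
                       (10, "strong"), (3, "substantial")]:
        if bf10 > cut:
            return label
    return "anecdotal"


# Effect sizes and Bayes factors as reported for RQ1
for name, d in [("learner", 1.10), ("pedagogy", 0.89), ("academic content", 0.76),
                ("context", 0.53), ("technology", 0.22)]:
    print(f"{name} knowledge: d = {d} -> {effect_size_label(d)}")
for name, bf in [("context", 1_679_488), ("academic content", 7.78)]:
    print(f"{name} knowledge: BF10 = {bf} -> {bf_evidence_label(bf)}")
```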

To test RH2, two one-way ANOVAs were performed, one for each survey year. Table 4 presents the ANOVA results for teachers’ TLPACK before the COVID-19 pandemic.
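The F statistic behind these one-way ANOVAs compares variance between school levels with variance within them. The sketch below computes it from raw scores; the group labels and scores are hypothetical, and a p-value would additionally require the F distribution’s survival function (for example, SciPy’s `scipy.stats.f.sf(F, df_between, df_within)`), which is not used here.

```python
from statistics import mean


def one_way_anova(groups: dict[str, list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    scores = [x for g in groups.values() for x in g]
    grand_mean = mean(scores)
    group_means = {k: mean(g) for k, g in groups.items()}
    # Between-group sum of squares: group size times squared deviation of group mean
    ss_between = sum(len(g) * (group_means[k] - grand_mean) ** 2
                     for k, g in groups.items())
    # Within-group sum of squares: deviations of scores from their own group mean
    ss_within = sum((x - group_means[k]) ** 2
                    for k, g in groups.items() for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical construct scores by school level
groups = {
    "elementary": [3.2, 3.5, 3.1, 3.4],
    "junior_high": [3.6, 3.8, 3.5, 3.9],
    "senior_high": [3.3, 3.4, 3.2, 3.6],
    "university": [3.9, 4.1, 3.8, 4.2],
}
f_stat, df_b, df_w = one_way_anova(groups)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

With only two groups, this F equals the square of the pooled-variance t statistic, which is a convenient sanity check.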

Table 4.

ANOVA for teachers’ TLPACK before COVID-19.

The one-way ANOVA results showed that only teachers’ academic content knowledge differed significantly across teaching levels before the COVID-19 pandemic (F = 3.52, p < 0.05). Post hoc analysis showed that university teachers’ academic content knowledge was higher than that of their counterparts at elementary and junior high schools; however, the Bayes factor analysis provided no such evidence. Table 5 presents teachers’ TLPACK regarding online education during the COVID-19 pandemic.

Table 5.

ANOVA of teachers’ TLPACK during the COVID-19 pandemic.

The one-way ANOVA results show that, during the COVID-19 pandemic, teachers’ technology knowledge and academic content knowledge differed significantly across teaching levels (F = 6.02, p < 0.01 and F = 7.80, p < 0.001, respectively). Post hoc analysis showed that university teachers’ academic content knowledge was higher than that of their counterparts at elementary, junior high, and high schools, and that junior high and university teachers reported better technology knowledge for delivering online education during the pandemic. These results are supported by Bayes factors showing decisive evidence for the differences in academic content knowledge (BF = 180.26) and strong evidence for the differences in technology knowledge (BF = 14.74). Hence, based on the ANOVA results, RH2 is partially supported.

RH3 concerns the relationships among the constructs of teachers’ TLPACK during the COVID-19 pandemic. The results of the Pearson correlation analysis used to test it are shown in Table 6.

Table 6.

Pearson correlation analysis of the constructs of teachers’ TLPACK.

The Pearson correlation analysis revealed significant correlations among the constructs of teachers’ TLPACK. During the COVID-19 pandemic, teachers’ pedagogical knowledge was most strongly related to their knowledge about learners (r = 0.67, p < 0.001), followed by the relationship between technology knowledge and academic content knowledge (r = 0.64, p < 0.001). The relationship between academic content knowledge and context knowledge was the weakest (r = 0.37, p < 0.001). However, these results are not consistent with the Bayes factors, which show decisive evidence for the relationships between learner knowledge and academic content knowledge (BF = 906,448,646,071), technology knowledge and pedagogy knowledge (BF = 234,370,348,108), and academic content knowledge and context knowledge (BF = 5,766,504).
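For reference, a Pearson coefficient r computed from n respondents can be tested against zero via t = r√((n − 2)/(1 − r²)) with n − 2 degrees of freedom, and five constructs yield C(5, 2) = 10 distinct pairs. The sketch below shows both; it assumes the correlations were computed on the 250 teachers surveyed in 2020, and the vectors used to check `pearson_r` are hypothetical.

```python
import math
from itertools import combinations


def pearson_r(x: list[float], y: list[float]) -> float:
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# TLPACK constructs: technology, learner, pedagogy, academic content, context
constructs = ["TK", "LK", "PK", "AK", "CK"]
pairs = list(combinations(constructs, 2))
print(len(pairs), "construct pairs")

# Assumes n = 250 (the 2020 group); r values are those reported above
for label, r in [("LK-PK", 0.67), ("TK-AK", 0.64), ("AK-CK", 0.37)]:
    print(f"{label}: r = {r}, t({250 - 2}) = {r_to_t(r, 250):.2f}")
```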

5. Discussion

Regarding RQ1, the t-test results revealed that the teachers surveyed in 2017, who used traditional teaching, were more confident about their TLPACK than those surveyed in 2020, who taught online. Among the TLPACK components, the largest difference between the two groups was in their perceived knowledge of learners. In 2017, when face-to-face interaction between teachers and students was still the norm, teachers could more easily observe each student’s learning style in person and adjust their instruction accordingly. In the online environment, such observation becomes more difficult, which may reduce teachers’ knowledge of their students. Future teacher training should therefore strengthen pre-service teachers’ ability to transfer their teaching knowledge across teaching situations so that they can respond to change and emergencies.

Other elements of their TLPACK were also significantly higher in 2017 than in 2020. These findings are not consistent with previous research. For example, Miguel-Revilla et al. [40] found that teachers’ pedagogy knowledge showed less significant progress and that academic content knowledge was perceived as more challenging. In this study, however, the difference in participants’ pedagogy knowledge was also significant, and their academic content knowledge scores were higher, indicating that they felt more confident about their academic content knowledge. On the other hand, this study’s findings suggest that participants were not confident about integrating technology knowledge with pedagogical and content knowledge, as the effect size for technology knowledge was quite small. Thus, the studied teachers may need not so much more technology knowledge as better knowledge of how to apply what they already know.

RQ2 explored whether teachers of various levels would have significantly different TLPACK. Among the 2017 participants, the ANOVA found that teachers of various levels had similar TLPACK, except that university teachers perceived themselves as having better academic content knowledge. During the COVID-19 pandemic, teachers of various levels reported significantly different levels of technology and academic content knowledge. Regarding technology knowledge, university and junior high teachers deemed themselves more proficient in technology-based education than did their peers at other levels. There are two possible explanations: first, university teachers have more access to ICT through their research projects, and university students have more latitude to use social media to contact their teachers [82]; second, more training programs are available to in-service junior high teachers in Taiwan [83]. The difference in academic discipline content knowledge is more pronounced: university teachers believe that their academic discipline content knowledge is significantly higher than that of teachers at other levels. This may be because universities in Taiwan generally used computer technology to support teaching even before the pandemic, so teaching professional knowledge online affects university teachers less than elementary, junior high, and high school teachers. Although technology-enhanced teaching and learning have been promoted in these schools, most instructional activities are still conducted face to face. Now that online education (or other forms of technology-enhanced teaching and learning) is being implemented because of the pandemic, teachers at these levels need to make the effort required to adapt.

Regarding the relationships between the constructs of teachers’ TLPACK (RQ3), Pearson correlation analysis indicated that all the constructs are significantly correlated with each other, and five of the 10 correlation coefficients were considered strong. The strongest correlation was between teachers’ knowledge about learners and their pedagogical knowledge. Since these data come from a survey of teachers’ responses to remote online teaching after the outbreak of COVID-19, we argue that, in the online education context, a teacher’s knowledge of learners is closely related to his or her teaching methods. If teachers can perceive students’ different characteristics through virtual environments and adjust their teaching methods accordingly, their confidence in their learner knowledge can increase greatly. For example, Bao [22] suggests that Chinese students are less thorough in online learning, which may limit the efficacy of online learning modules, and recommends that teachers pay extra attention to the volume and speed of their online lectures. The main reason for the strong relationship between teachers’ learner knowledge and pedagogical knowledge may be that Koehler and Mishra [21] treat learner knowledge as part of pedagogical knowledge [84], which possibly led to the low Bayes factor presented in this study. In addition, teachers’ technological knowledge and academic content knowledge were strongly related in this study. This echoes Aminah et al. [12], who suggest “inviting students to participate in learning to use technology” (p. 259). Similarly, Saubern et al. [42] suggest that technologically proficient teachers are more confident in using technology and that this has a positive effect on their students. However, the Bayes factor for this correlation provides only anecdotal evidence for the research hypothesis, so future studies should examine this relationship further before it can be confirmed.
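To make the link between a correlation coefficient and its Bayes factor concrete, the sketch below computes Pearson’s r and a default Bayes factor BF10 for H1: ρ ≠ 0 against H0: ρ = 0. It assumes a uniform prior on ρ over (−1, 1) and uses the integral approximation usually attributed to Jeffreys [79]; this is a swapped-in illustration, and the software, prior, and exact computation used in this study may differ. The function names are hypothetical.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


def log_bf10_jeffreys(r: float, n: int, steps: int = 20_000) -> float:
    """Log BF10 for rho != 0 vs rho = 0 under an assumed uniform prior on rho.

    Assumed approximation:
        BF10 = 0.5 * integral_{-1}^{1} (1 - rho^2)^((n - 1) / 2)
                                       * (1 - rho * r)^(-(2n - 3) / 2) d rho
    evaluated with the midpoint rule in log space to avoid overflow.
    Requires -1 < r < 1 and n > 3.
    """
    h = 2.0 / steps
    log_terms = []
    for i in range(steps):
        rho = -1.0 + (i + 0.5) * h  # midpoints never touch rho = +/-1
        log_terms.append(
            0.5 * (n - 1) * math.log(1.0 - rho * rho)
            - 0.5 * (2 * n - 3) * math.log(1.0 - rho * r)
        )
    peak = max(log_terms)
    # log-sum-exp: log(sum exp(t)) = peak + log(sum exp(t - peak))
    log_integral = peak + math.log(sum(math.exp(t - peak) for t in log_terms)) + math.log(h)
    return math.log(0.5) + log_integral


if __name__ == "__main__":
    x = [1, 2, 3, 4, 5]
    y = [2, 1, 4, 3, 5]
    print(round(pearson_r(x, y), 2))  # 0.8
    # Hypothetical correlations with n = 250 per group.
    for r in (0.0, 0.1, 0.3):
        print(f"r = {r:.1f}: BF10 = {math.exp(log_bf10_jeffreys(r, 250)):.3g}")
```

Because BF10 grows very quickly with n for a fixed non-zero r, working in log space keeps large-sample cases from overflowing.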

Teachers’ academic content knowledge and their contextual knowledge had the weakest relationship, and the high Bayes factor shows decisive evidence for this hypothesized relationship. This is consistent with previous studies that highlight the school context of online teaching: de Guzman [28] suggested that universities should provide teachers with training in synchronous online instruction, and Cheng [10] asserted that schools “cannot do without effective communication and coordination between administrators and teachers and students” (p. 506). Such “training initiatives should cover both technological and pedagogical aspects” of education [3] because, as Tømte et al. [85] suggested, even teachers who are able to design technology-enhanced lessons sometimes struggle to execute these plans.
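The verbal labels used in this discussion (“anecdotal”, “decisive”) follow a conventional classification of Bayes factors attributed to Jeffreys [79] and summarized by Jarosz and Wiley [66]. A minimal sketch of that mapping follows; the cut-offs are the commonly cited ones (later sources often rename “substantial” as “moderate”), and the handling of boundary values such as exactly 3 is an assumption made here.

```python
def evidence_label(bf10: float) -> str:
    """Map BF10 to a Jeffreys-style verbal category (cut-offs assumed).

    BF10 < 1 is inverted to BF01 = 1 / BF10 and reported as evidence for H0.
    """
    if bf10 <= 0:
        raise ValueError("A Bayes factor must be positive.")
    if bf10 == 1:
        return "no evidence"
    favoured = "H1" if bf10 > 1 else "H0"
    strength = bf10 if bf10 > 1 else 1.0 / bf10
    # Lower bound of each category, checked from strongest to weakest.
    categories = [
        (100.0, "decisive"),
        (30.0, "very strong"),
        (10.0, "strong"),
        (3.0, "substantial"),
    ]
    for lower, label in categories:
        if strength >= lower:
            return f"{label} evidence for {favoured}"
    return f"anecdotal evidence for {favoured}"  # 1 < strength < 3


if __name__ == "__main__":
    for bf in (2.0, 150.0, 0.2):
        print(bf, evidence_label(bf))
```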

6. Conclusions, Limitations and Suggestions for Future Research

Existing and emerging technologies have become, and will remain, essential to future education-related initiatives; these changes are expected to outlast the emergency measures adopted during the COVID-19 pandemic and may become permanent fixtures of the educational landscape [86]. Thus, teachers’ readiness to integrate emerging technology is, and will increasingly be, an essential dimension of educational practice. This study, which assessed teachers’ readiness via Hsu and Chen’s [15] TLPACK framework, was the first of its kind, especially within the context of the COVID-19 pandemic. Its results indicate that, of the two groups of teachers (those who taught using traditional methods in 2017, before the pandemic, and those who taught online in 2020, during the pandemic), the latter group’s overall perceptions of their TLPACK were lower than the former’s. It was also found that teachers at various levels felt that they had more academic content knowledge before the pandemic. Additionally, university and junior high school teachers believed that they were more technologically competent than senior high school teachers. Finally, all the constructs of teachers’ TLPACK during the COVID-19 pandemic were significantly correlated with each other.

This study has three main limitations. First, its participants come from a single cultural context, and one TLPACK construct, contextual knowledge, might be culturally determined, especially within the unique context of the COVID-19 pandemic. Therefore, cross-cultural studies are needed to verify the generalizability of these findings and to help implement full-scale online education effectively worldwide. Second, the study is limited by its use of particular analytical techniques, which sometimes produce results that do not complement each other. Thus, future studies should apply Bayesian methods to triangulate frequentist results such as those reported here [87]. Finally, sampling bias may exist. Although both groups were selected from the same population using the same sampling technique, the participants remained anonymous, so it is impossible to know whether the same people took part in the 2017 and 2020 surveys. Therefore, the differences between the groups and the contexts in which they were asked to complete the questionnaire could not be examined in this study, and future research in which the same participants are recruited for repeated measurements is required. Additionally, online surveys (we used a Google online questionnaire) may be biased against older participants, who may not feel comfortable with the technology [88]. Furthermore, although we attempted to set the backgrounds of both groups’ participants to reflect the population of teachers in Taiwan, we could not guarantee that the participants did indeed reflect this population, which might hinder the generalizability of the findings.

This study also has several practical implications. First, its findings suggest that teachers who are unfamiliar with the technology needed to implement online education could be supported by technologically capable teaching assistants [22], as there may not be sufficient time for these teachers to receive training [89]. Second, school administrators must provide appropriate training for all teachers, both in-service and pre-service. Moreover, schools should address the challenges that some students face in online education (e.g., lack of access to materials, the Internet, and a suitable learning environment) [22], bearing in mind that “the psychological impact[s] of quarantine [are] wide-ranging, substantial, and can be long-lasting” [90].

In short, this study indicated that teachers surveyed before the COVID-19 pandemic rated their TLPACK higher than teachers surveyed during the pandemic, when that knowledge had to be put into practice. This is concerning, especially given the growing use of, and need for, online education. Increased and targeted training will help teachers be more resilient in the face of the specific challenges of online education. Especially today, in an era of rapid technological change and proliferation, teachers should be sufficiently technologically literate to keep their students interested in learning and to use technology to enhance learning outcomes [26]. However, the responsibility lies with teachers, not with the technology itself; as Aydin et al. [23] pointed out, technology is only a tool, not the solution.

Author Contributions

Conceptualization, Y.-J.C. and R.L.-W.H.; methodology, R.L.-W.H.; software, Y.-J.C.; validation, R.L.-W.H.; formal analysis, Y.-J.C.; investigation, Y.-J.C.; resources, R.L.-W.H.; data curation, Y.-J.C.; writing—original draft preparation, R.L.-W.H.; writing—review and editing, Y.-J.C.; visualization, Y.-J.C.; supervision, Y.-J.C. and R.L.-W.H.; project administration, Y.-J.C.; funding acquisition, R.L.-W.H. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by the Ministry of Science and Technology, Taiwan.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

The TLPACK model’s constructs, indicators, factor loadings and Cronbach’s alpha.

| Item | Indicator | Factor loading | Cronbach’s α |
|---|---|---|---|
| Technology knowledge | | | |
| 1-1 | I can understand information on innovative technology that is integrated in education. | 0.66 | 0.90 |
| 1-2 | I think I am capable of integrating technology in instruction. | 0.73 | |
| 1-3 | I have no problem using new technology. | 0.77 | |
| 1-4 | I like to integrate technology into instruction. | 0.61 | |
| 1-5 | I am able to integrate technology with lesson plans. | 0.70 | |
| 1-6 | When technology is integrated in instruction, I am able to make students feel that it is convenient. | 0.64 | |
| 1-7 | When technology is integrated into instruction, I can make students feel safe to use technology. | 0.56 | |
| 1-8 | I can make students feel that technology can be used for more than just in learning. | 0.71 | |
| 1-9 | I can make students like the way technology is integrated into learning and teaching. | 0.67 | |
| 1-10 | I can cultivate students’ ability to integrate technology in various aspects of life. | 0.65 | |
| Learner knowledge | | | |
| 2-1 | I can understand each student’s various learning styles and preferences and provide him/her with adaptive instruction. | 0.92 | 0.93 |
| 2-2 | I can understand students’ individual differences and try to offer proper guidance. | 0.96 | |
| 2-3 | I can come up with various ways to evaluate students with different learning styles. | 0.86 | |
| 2-4 | I can understand students’ cognitive development and thinking styles and design appropriate instructional activities accordingly. | 0.65 | |
| 2-5 | I can understand each student’s level of knowledge and learning strategies and provide him/her with different guidance and instruction. | 0.83 | |
| 2-6 | I can provide students with appropriate amounts and levels of tasks and guidance, based on their individual working memory. | 0.71 | |
| Pedagogy knowledge | | | |
| 3-1 | I can use proper volume and speed to effectively deliver my instruction. | 0.66 | 0.88 |
| 3-2 | I know how to ask students proper questions. | 0.70 | |
| 3-3 | I am able to provide pertinent instructions based on their learning strategies. | 0.82 | |
| 3-4 | I know how to use instruction time judiciously. | 0.61 | |
| 3-5 | I am able to adopt appropriate teaching methods based on various situations, needs, and timing. | 0.48 | |
| Academic discipline content knowledge | | | |
| 4-1 | I clearly understand the content of the subject that I am going to teach. | 0.78 | 0.94 |
| 4-2 | I clearly understand the important concepts and theories underlying the content that I am going to teach. | 0.78 | |
| 4-3 | I know the underpinning theory about the contents that I am going to teach. | 0.81 | |
| 4-4 | I know how to apply my knowledge related to the subject that I am going to teach and whether exceptions exist. | 0.78 | |
| 4-5 | I know how to present the subject knowledge in a comprehensible way. | 0.76 | |
| 4-6 | I can handle pertinent skills regarding the subject that I am going to teach. | 0.81 | |
| 4-7 | I can conceptualize the subject-related knowledge and transform it into suitable content for instruction according to the course goal. | 0.85 | |
| 4-8 | I can handle and completely comprehend the course material. | 0.87 | |
| 4-9 | I have a great ability to plan and design curricula and implement them. | 0.69 | |
| 4-10 | I am familiar with my students’ schema and what they are supposed to learn in this class. | 0.64 | |
| 4-11 | Other than subject knowledge regarding my courses, I can integrate subject knowledge from other courses. | 0.55 | |
| 4-12 | I clearly understand what causes students’ questions and misunderstandings. | 0.56 | |
| Context knowledge | | | |
| 5-1 | I think the overall atmosphere of the school is good. | 0.53 | 0.89 |
| 5-2 | I can have good interactions with co-workers and share resources with them. | 0.64 | |
| 5-3 | I think the school has a good system for administrative work. | 0.97 | |
| 5-4 | I think the school can provide me with sufficient administrative support. | 0.91 | |
| 5-5 | I can agree with the school’s expectations and values. | 0.72 | |
| Total | | | 0.96 |
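Table A1 reports Cronbach’s α for each construct. A minimal sketch of the standard formula, α = k/(k − 1) · (1 − Σσ²ᵢ/σ²ₜ), where k is the number of items, σ²ᵢ is the variance of item i, and σ²ₜ is the variance of respondents’ total scores; the response matrices below are hypothetical, not the survey data.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (one row per respondent).

    Population variances are used throughout; the item-to-total variance ratio
    is the same as with sample variances, so alpha is unaffected.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Alpha needs at least two items.")
    # Variance of each item (column) across respondents.
    item_vars = [pvariance([row[j] for row in responses]) for j in range(k)]
    # Variance of each respondent's summed score.
    total_var = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical 5-point Likert responses: 4 respondents x 3 items.
    toy = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2]]
    print(f"alpha = {cronbach_alpha(toy):.2f}")
```

Items that always move together push α toward 1, while items that share no variance drive it toward 0.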

Table A2.

Confirmatory factor analysis (CFA) of TLPACK measurements.

| χ²/d.f. | GFI | IFI | CFI | PGFI | PNFI | AGFI | RMSEA |
|---|---|---|---|---|---|---|---|
| 3.00 | 0.83 | 0.91 | 0.91 | 0.72 | 0.79 | 0.80 | 0.06 |

Note. GFI = goodness-of-fit index, IFI = incremental fit index, CFI = comparative fit index, PGFI = parsimonious goodness-of-fit index, PNFI = parsimonious normed fit index, AGFI = adjusted goodness-of-fit index, RMSEA = root mean square error of approximation.
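Of the indices in Table A2, RMSEA can be reproduced from the model χ², its degrees of freedom, and the sample size N. The sketch below uses the common point-estimate formula RMSEA = √(max(χ² − df, 0) / (df · (N − 1))); some software uses N instead of N − 1, and the χ², df, and N values in the example are hypothetical because Table A2 reports only the ratio χ²/d.f.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of the root mean square error of approximation."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n must exceed 1.")
    # Misfit beyond what df alone would produce, scaled per degree of freedom and case.
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


if __name__ == "__main__":
    # Hypothetical model with chi2/df = 3.00, the ratio reported in Table A2.
    df = 500
    chi2 = 3.0 * df
    n = 301
    print(round(rmsea(chi2, df, n), 3))
```

When χ²/df is fixed at c, the formula reduces to √((c − 1)/(N − 1)), so for a given ratio the RMSEA shrinks as the sample grows.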

Table A3.

CR, AVE, and the square root of the questionnaire’s AVE and inter-construct correlations.

| Construct | CR | AVE | AK | TK | LK | PK | CK |
|---|---|---|---|---|---|---|---|
| AK | 0.94 | 0.56 | 0.75 | | | | |
| TK | 0.89 | 0.46 | 0.63 | 0.68 | | | |
| LK | 0.93 | 0.69 | 0.62 | 0.58 | 0.83 | | |
| PK | 0.88 | 0.60 | 0.70 | 0.55 | 0.78 | 0.78 | |
| CK | 0.88 | 0.60 | 0.47 | 0.52 | 0.61 | 0.59 | 0.77 |

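Note. AK = academic discipline content knowledge, TK = technology knowledge, LK = learner knowledge, PK = pedagogy knowledge, CK = context knowledge, CR = composite reliability, AVE = average variance extracted. In the AK to CK columns, diagonal values are the square roots of the AVE and off-diagonal values are inter-construct correlations.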
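CR and AVE are conventionally computed from standardized factor loadings λ: AVE = Σλ²/k and CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The sketch below assumes these standard formulas (the exact procedure used in the study is not stated here) and applies them to the learner knowledge loadings in Table A1; rounded to two decimals, it gives AVE = 0.69 and CR = 0.93, as in Table A3. It also applies the commonly used Fornell–Larcker check, comparing √AVE with LK’s correlations in Table A3. Function names are hypothetical.

```python
import math
from typing import Sequence


def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability; each indicator's error variance is assumed to be 1 - loading^2."""
    squared_sum = sum(loadings) ** 2
    error_var = sum(1 - lam * lam for lam in loadings)
    return squared_sum / (squared_sum + error_var)


def passes_fornell_larcker(ave_value: float, correlations: Sequence[float]) -> bool:
    """True if sqrt(AVE) exceeds the construct's correlation with every other construct."""
    return math.sqrt(ave_value) > max(abs(c) for c in correlations)


if __name__ == "__main__":
    # Learner knowledge (LK) loadings, items 2-1 to 2-6 in Table A1.
    lk_loadings = [0.92, 0.96, 0.86, 0.65, 0.83, 0.71]
    lk_ave = ave(lk_loadings)
    print(f"AVE = {lk_ave:.2f}, CR = {composite_reliability(lk_loadings):.2f}")  # AVE = 0.69, CR = 0.93
    # LK's correlations with AK, TK, PK, and CK, taken from Table A3.
    print(passes_fornell_larcker(lk_ave, [0.62, 0.58, 0.78, 0.61]))  # True
```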

References

- Wang, D.; Hu, B.; Hu, C.; Zhu, F.; Liu, X.; Zhang, J.; Wang, B.; Xiang, H.; Cheng, Z.; Xiong, Y.; et al. Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus-infected pneumonia in Wuhan, China. JAMA 2020, 323, 1061–1069.

- World Health Organization. Current Novel Coronavirus (2019-nCoV) Outbreak. 2020. Available online: https://www.who.int/health-topics/coronavirus (accessed on 30 January 2020).

- Vlachopoulos, D. COVID-19: Threat or opportunity for online education? High. Learn. Res. Commun. 2020, 10, 16–19.

- Wang, C.; Cheng, Z.; Yue, X.G.; McAleer, M. Risk management of COVID-19 by universities in China. J. Risk Financ. Manag. 2020, 13, 36.

- Almarzooq, Z.; Lopes, M.; Kochar, A. Virtual learning during the COVID-19 pandemic: A disruptive technology in graduate medical education. J. Am. Coll. Cardiol. 2020, 75, 2635–2638.

- Valtonen, T.; Leppänen, U.; Hyypiä, M.; Sointu, E.; Smits, A.; Tondeur, J. Fresh perspectives on TPACK: Pre-service teachers’ own appraisal of their challenging and confident TPACK areas. Educ. Inf. Technol. 2020, 25, 2823–2842.

- Brinkley-Etzkorn, K.E. Learning to teach online: Measuring the influence of faculty development training on teaching effectiveness through a TPACK lens. Internet High. Educ. 2018, 38, 28–35.

- Mishra, P.; Koehler, M. Technological pedagogical content knowledge: A framework for teacher knowledge. Teach. Coll. Rec. 2006, 108, 1017–1054.

- Hodges, C.; Moore, S.; Lockee, B.; Trust, T.; Bond, A. The difference between emergency remote teaching and online learning. EDUCAUSE Rev. 2020, 1, 1–9. Available online: https://er.educause.edu/articles/202 (accessed on 19 April 2020).

- Cheng, X. Challenges of “school’s out, but class’s on” to school education: A practical exploration of Chinese schools during the COVID-19 pandemic. Sci. Insights Educ. Front. 2020, 5, 501–516.

- Basilaia, G.; Kvavadze, D. Transition to online education in schools during a SARS-CoV-2 coronavirus (COVID-19) pandemic in Georgia. Pedagog. Res. 2020, 5, 1–9.

- Aminah, N.; Waluya, S.B.; Rochmad, R.; Sukestiyarno, S.; Wardono, W.; Adiastuty, N. Analysis of technology pedagogic content knowledge ability for junior high school teachers: Viewed TPACK framework. In International Conference on Agriculture, Social Sciences, Education, Technology and Health (ICASSETH 2019); Atlantis Press: Amsterdam, The Netherlands, 2020; pp. 257–260.

- Ettinger, K.; Cohen, A. Patterns of multitasking behaviours of adolescents in digital environments. Educ. Inf. Technol. 2020, 25, 623–645.

- Angeli, C.; Valanides, N. Epistemological and methodological issues for the conceptualization, development, and assessment of ICT–TPCK: Advances in technological pedagogical content knowledge (TPCK). Comput. Educ. 2009, 52, 154–168.

- Hsu, L.; Chen, Y.J. Examining teachers’ technological pedagogical and content knowledge in the era of cloud pedagogy. South. Afr. J. Educ. 2019, 39, S1–S13.

- Saad, M.; Barbar, A.M.; Abourjeili, S.A. Introduction of TPACK-XL: A transformative view of ICT-TPCK for building pre-service teacher knowledge base. Turk. J. Teach. Educ. 2012, 1, 41–60.

- Harris, J.B.; Phillips, M. If there’s TPACK, is there technological pedagogical reasoning and action? In Proceedings of the Society for Information Technology and Teacher Education International Conference, Washington, DC, USA, 26–30 March 2018.

- Marshall, A.L.; Wolanskyj-Spinner, A. COVID-19: Challenges and opportunities for educators and Generation Z learners. Mayo Clin. Proc. 2020, 95, 1135–1137.

- Seufert, S.; Guggemos, J.; Sailer, M. Technology-related knowledge, skills, and attitudes of pre- and in-service teachers: The current situation and emerging trends. Comput. Hum. Behav. 2021, 115, 106552.

- Martin, S.; Diaz, G.; Sancristobal, E.; Gil, R.; Castro, M.; Peire, J. New technology trends in education: Seven years of forecasts and convergence. Comput. Educ. 2011, 57, 1893–1906.

- Koehler, M.J.; Mishra, P. What is technological pedagogical content knowledge? Contemp. Issues Technol. Teach. Educ. 2009, 9, 60–70.

- Bao, W. COVID-19 and online teaching in higher education: A case study of Peking University. Hum. Behav. Emerg. Technol. 2020, 2, 113–115.

- Aydin, G.Ç.; Evren, E.; Atakan, İ.; Sen, M.; Yilmaz, B.; Pirgon, E.; Ebren, E. Delphi technique as a graduate course activity: Elementary science teachers’ TPACK competencies. In SHS Web of Conferences; EDP Sciences: Les Ulis, France, 2016.

- Bai, Y.; Mo, D.; Zhang, L.; Boswell, M.; Rozelle, S. The impact of integrating ICT with teaching: Evidence from a randomized controlled trial in rural schools in China. Comput. Educ. 2016, 96, 1–14.

- Schrader, P.G.; Rapp, E.E. Does multimedia theory apply to all students? The impact of multimedia presentations on science learning. J. Learn. Teach. Digit. Age 2016, 1, 32–46.

- Xu, A.; Chen, G. A study on the effects of teachers’ information literacy on information technology integrated instruction and teaching effectiveness. Eurasia J. Math. Sci. Technol. Educ. 2016, 12, 335–346.

- Quezada, R.L.; Talbot, C.; Quezada-Parker, K.B. From bricks and mortar to remote teaching: A teacher education programme’s response to COVID-19. J. Educ. Teach. 2020, 46, 1–12.

- De Guzman, M.J.J. Business administration students’ skills and capability on synchronous and asynchronous alternative delivery of learning. Asian J. Multidiscip. Stud. 2020, 3, 28–34.

- Longhurst, G.J.; Stone, D.M.; Dulohery, K.; Scully, D.; Campbell, T.; Smith, C.F. Strength, weakness, opportunity, threat (SWOT) analysis of the adaptations to anatomical education in the United Kingdom and Republic of Ireland in response to the COVID-19 pandemic. Anat. Sci. Educ. 2020, 13, 301–311.

- Bonifati, A.; Guerrini, G.; Lutz, C.; Martens, W.; Mazilu, L.; Paton, N.; Salles, M.A.V.; Scholl, M.H.; Zhou, Y. Holding a conference online and live due to Covid-19: Experiences and lessons learned from EDBT/ICDT 2020. ACM SIGMOD Rec. 2021, 49, 28–32.

- Henriksen, D.; Creely, E.; Henderson, M. Folk pedagogies for teacher transitions: Approaches to synchronous online learning in the wake of COVID-19. J. Technol. Teach. Educ. 2020, 28, 201–209.

- Oe, E.; Schafer, E. Establishing presence in an online course using Zoom video conferencing. In Proceedings of the Undergraduate Research and Scholarship Conference, Boise State University, Boise, ID, USA, 15 April 2019; p. 123.

- Lowenthal, P.; Borup, J.; West, R.; Archambault, L. Thinking beyond Zoom: Using asynchronous video to maintain connection and engagement during the COVID-19 pandemic. J. Technol. Teach. Educ. 2020, 28, 383–391.

- Lederman, D. Will Shift to Remote Teaching be Boon or Bane for Online Learning? Inside Higher Ed. Available online: https://www.insidehighered.com/digital-learning/article/2020/03/18/most-teaching-going-remote-will-help-or-hurt-online-learning (accessed on 6 May 2020).

- Langford, M.; Damşa, C. Online Teaching in the Time of COVID-19. Centre for Experiential Legal Learning (CELL), University of Oslo. Available online: https://khrono.no/files/2020/04/16/report-university-teachers-16-april-2020.pdf (accessed on 24 April 2020).

- Benson, S.N.K.; Ward, C.L. Teaching with technology: Using TPACK to understand teaching expertise in online higher education. J. Educ. Comput. Res. 2013, 48, 153–172.

- Mishra, P.; Koehler, M.J.; Henriksen, D. The seven trans-disciplinary habits of mind: Extending the TPACK framework towards twenty-first-century learning. Educ. Technol. 2011, 51, 22–28.

- Niess, M.L. Investigating TPACK: Knowledge growth in teaching with technology. J. Educ. Comput. Res. 2011, 44, 299–317.

- Ward, C.L.; Benson, S.K. Developing new schemas for online teaching and learning: TPACK. MERLOT J. Online Learn. Teach. 2010, 6, 482–490.

- Miguel-Revilla, D.; Martínez-Ferreira, J.; Sánchez-Agustí, M. Assessing the digital competence of educators in social studies: An analysis in initial teacher training using the TPACK-21 model. Australas. J. Educ. Technol. 2020, 36, 1–12.

- Chai, C.S.; Jong, M.; Yan, Z. Surveying Chinese teachers’ technological pedagogical STEM knowledge: A pilot validation of STEM-TPACK survey. Int. J. Mob. Learn. Organ. 2020, 14, 203–214.

- Saubern, R.; Urbach, D.; Koehler, M.; Phillips, M. Describing increasing proficiency in teachers’ knowledge of the effective use of digital technology. Comput. Educ. 2020, 147, 103784.

- Shulman, L. Knowledge and teaching: Foundations of the new reform. Harv. Educ. Rev. 1987, 57, 1–23.

- Andrews, T.; Tynan, B. Learner characteristics and patterns of online learning: How online learners successfully manage their learning. Eur. J. Open Distance E-learn. 2015, 18, 85–101.

- Kirschner, P.A. Stop propagating the learning styles myth. Comput. Educ. 2017, 106, 166–171.

- Benedetti, C. Online instructors as thinking advisors: A model for online learner adaptation. J. Coll. Teach. Learn. 2015, 12, 171.

- Hsu, P.S. Learner characteristic based learning effort curve mode: The core mechanism on developing personalized adaptive e-learning platform. Turk. Online J. Educ. Technol. 2012, 11, 210–220.

- Kim, J.K.; Yan, L.N. The relationship among learner characteristics on academic satisfaction and academic achievement in SNS learning environment. Adv. Sci. Technol. Lett. 2014, 47, 227–231.

- Bell, R.L.; Maeng, J.L.; Binns, I.C. Learning in context: Technology integration in a teacher preparation program informed by situated learning theory. J. Res. Sci. Teach. 2013, 50, 348–379.

- Klenner, M. A technological approach to creating and maintaining media-specific educational materials for multiple teaching contexts. Procedia Soc. Behav. Sci. 2015, 176, 312–318.

- Delgado, A.J.; Wardlow, L.; McKnight, K.; O’Malley, K. Educational technology: A review of the integration, resources, and effectiveness of technology in K-12 classrooms. J. Inf. Technol. Educ. 2015, 14, 397–416.

- Yuan, D.; Li, P. An analysis of the lack of modern educational technology in the teaching of secondary vocational English subjects and its teaching strategies—Take Pingli vocational education center as an example. J. Contemp. Educ. Res. 2021, 5, 1–3.

- Sudman, S. Applied Sampling; Academic Press: New York, NY, USA, 1976.

- Amornpipat, I. An exploratory factor analysis of quality of work life of pilots. Psychology 2019, 7, 278–283.

- Goretzko, D.; Pham, T.T.H.; Bühner, M. Exploratory factor analysis: Current use, methodological developments and recommendations for good practice. Curr. Psychol. 2019, 1–12.

- Kaiser, H.F. The application of electronic computers to factor analysis. Educ. Psychol. Meas. 1960, 20, 141–151.

- Deng, L. The city worker mental health scale: A validation study. In Psychological and Health-Related Assessment Tools Developed in China; Lam, L.T., Ed.; Bentham Science Publishers: Sharjah, United Arab Emirates, 2010; pp. 34–44.

- Hair, J.F.; Tatham, R.L.; Anderson, R.E.; Black, W. Multivariate Data Analysis, 5th ed.; Prentice-Hall: London, UK, 1998.

- Azim, A.M.M.; Ahmad, A.; Omar, Z.; Silong, A.D. Work-family psychological contracts, job autonomy and organizational commitment. Am. J. Appl. Sci. 2012, 9, 740–747.

- Bagozzi, R.P.; Yi, Y. On the evaluation of structural equation models. J. Acad. Mark. Sci. 1988, 16, 74–94.

- Bentler, P.M. Comparative fit indexes in structural models. Psychol. Bull. 1990, 107, 238.

- Bentler, P.M.; Bonett, D.G. Significance tests and goodness of fit in the analysis of covariance structures. Psychol. Bull. 1980, 88, 588.

- Doll, W.J.; Xia, W.; Torkzadeh, G. A confirmatory factor analysis of the end-user computing satisfaction instrument. MIS Q. 1994, 18, 453–461.

- Lam, L.W. Impact of competitiveness on salespeople’s commitment and performance. J. Bus. Res. 2012, 65, 1328–1334.

- Allen, R.J.; Hill, L.J.; Eddy, L.H.; Waterman, A.H. Exploring the effects of demonstration and enactment in facilitating recall of instructions in working memory. Mem. Cogn. 2020, 48, 400–410.

- Jarosz, A.F.; Wiley, J. What are the odds? A practical guide to computing and reporting Bayes factors. J. Probl. Solving 2014, 7, 2.

- Makowski, D.; Ben-Shachar, M.S.; Lüdecke, D. Describing effects and their uncertainty, existence and significance within the Bayesian framework. J. Open Source Softw. 2019, 4, 1541.

- Wagenmakers, E.-J.; Love, J.; Marsman, M.; Jamil, T.; Ly, A.; Verhagen, J.; Selker, R.; Gronau, Q.F.; Dropmann, D.; Boutin, B.; et al. Bayesian inference for psychology. Part II: Example applications with JASP. Psychon. Bull. Rev. 2017, 25, 58–76.

- Wong, A.Y.; Warren, S.; Kawchuk, G.N. A new statistical trend in clinical research–Bayesian statistics. Phys. Ther. Rev. 2010, 15, 372–381.

- Etz, A.; Vandekerckhove, J. Introduction to Bayesian inference for psychology. Psychon. Bull. Rev. 2018, 25, 5–34.

- Kruschke, J.K. Bayesian estimation supersedes the t test. J. Exp. Psychol. Gen. 2013, 142, 573–604.

- Wang, M.; Liu, G. A simple two-sample Bayesian t-test for hypothesis testing. Am. Stat. 2016, 70, 195–201.

- Wagenmakers, E.-J.; Marsman, M.; Jamil, T.; Ly, A.; Verhagen, J.; Love, J.; Selker, R.; Gronau, Q.F.; Šmíra, M.; Epskamp, S.; et al. Bayesian inference for psychology. Part I: Theoretical advantages and practical ramifications. Psychon. Bull. Rev. 2017, 25, 35–57.

- Wagenmakers, E.J. A practical solution to the pervasive problems of p values. Psychon. Bull. Rev. 2007, 14, 779–804.

- Lindsay, D.S. Replication in psychological science. Psychol. Sci. 2015, 26, 1827–1832.

- Berger, J.O. Bayes factors. In Encyclopedia of Statistical Sciences, 2nd ed.; Kotz, S., Balakrishnan, N., Read, C., Vidakovic, B., Johnson, N.L., Eds.; Wiley: Hoboken, NJ, USA, 2006; Volume 1, pp. 378–386.

- Gronau, Q.F.; Ly, A.; Wagenmakers, E.J. Informed Bayesian t-tests. Am. Stat. 2020, 74, 137–143.

- Held, L.; Ott, M. On p-values and Bayes factors. Annu. Rev. Stat. Appl. 2018, 5, 393–419.

- Jeffreys, H. Theory of Probability, 3rd ed.; Oxford University Press: Oxford, UK, 1961.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Routledge: Abingdon, UK, 1988.

- Sawilowsky, S.S. New effect size rules of thumb. J. Mod. Appl. Stat. Methods 2009, 8, 26.

- Hsu, L. Mind the gap: Exploring hospitality teachers’ and student interns’ perception of using virtual communities for maintaining connectedness in internship. J. Educ. Train. 2017, 4, 88–100.

- Tuan, H.L.; Lu, Y.L. Science teacher education in Taiwan: Past, present, and future. Asia Pac. Sci. Educ. 2019, 5, 1–22.

- Njiku, J.; Mutarutinya, V.; Maniraho, J.F. Developing technological pedagogical content knowledge survey items: A review of literature. J. Digit. Learn. Teach. Educ. 2020, 36, 1–16.

- Tømte, C.; Enochsson, A.B.; Buskqvist, U.; Kårstein, A. Educating online student teachers to master professional digital competence: The TPACK-framework goes online. Comput. Educ. 2015, 84, 26–35.

- Goh, P.S.; Sandars, J. A vision of the use of technology in medical education after the COVID-19 pandemic. Med. Ed. Publ. 2020, 9, 19–26.

- Beard, E.; Dienes, Z.; Muirhead, C.; West, R. Using Bayes factors for testing hypotheses about intervention effectiveness in addictions research. Addiction 2016, 111, 2230–2247.

- Saleh, A.; Bista, K. Examining factors impacting online survey response rates in educational research: Perceptions of graduate students. J. Multidiscip. Eval. 2017, 13, 63–74.

- Peimani, N.; Kamalipour, H. Online education and the COVID-19 outbreak: A case study of online teaching during lockdown. Educ. Sci. 2021, 11, 72.

- Brooks, S.K.; Webster, R.K.; Smith, L.E.; Woodland, L.; Wessely, S.; Greenberg, N.; Rubin, G.J. The psychological impact of quarantine and how to reduce it: A rapid review of the evidence. Lancet 2020, 395, 912–919.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).