Abstract

The professional development of experienced teachers has received considerably less attention than that of novice teachers. This study focuses on four experienced secondary mathematics teachers in Shanghai, China: two participated in a year-long professional development program (treatment teachers), and the other two received conventional knowledge-based professional development (comparison teachers). The program introduced productive classroom talk skills that can facilitate teachers’ formative assessment of student learning during class. To encourage teachers to reflect on their classroom discourse when reviewing recordings of their teaching, we used visual learning analytics with the treatment teachers and theorized the use of this technology with activity theory. After completing the program, the treatment teachers were better able to use productive talk moves to elicit student responses and to provide timely formative feedback. Specifically, the students’ share of the words spoken in lessons and the length of their responses increased noticeably. Qualitative findings suggest that visual learning analytics mediated the treatment teachers’ reflections and helped improve their classroom discourse. Based on these findings and activity theory, we provide recommendations for the future use of visual learning analytics to improve teachers’ classroom talk and for designing professional development activities for experienced teachers.

1. Introduction

Experienced teachers are an important resource for every school. They know the curriculum and are skilled in classroom management. However, Doan and Peters [1] observed that some teachers may stop seeking opportunities to improve their teaching as they gain experience. To counter this declining interest in innovative teaching, the researchers argued that teachers should learn new skills through professional development programs. Even so, the professional development of experienced teachers has received relatively less attention than that of novice teachers [2].

This study focuses on experienced mathematics teachers who participated in a professional development project on classroom talk in Shanghai, China. Giving teachers new insights into classroom talk is important. With effective classroom talk, teachers can elicit student responses that provide information for assessing students’ current level of learning and modifying instruction accordingly [3,4]. This kind of assessment is formative because student responses are used as evidence “to make decisions about the next steps in instruction that are likely to be better, or better founded, than the decisions they would have taken in the absence of the evidence that was elicited” [5] (p. 9). By hearing students’ voices, teachers can better support student learning during class.

Although documenting classroom dialog helps teachers reflect on their classroom talk, it is difficult to do without recording technology that captures the richness and complexity of classroom activities. Thus, the use of videos to support teacher professional development has become increasingly popular (e.g., [6,7,8,9]). For example, Watters et al. [9] used classroom videos of an experienced mathematics teacher as a resource for pre-service teacher development. Based on video recordings of real lessons, the participants were able to discuss classroom practices meaningfully and engage in productive conversations.

Nevertheless, the complexity and richness of data in classroom videos can be overwhelming [10]. Teachers watching classroom videos may thus experience cognitive overload or fatigue, which hinders them from noticing relevant events for reflection [11]. A possible solution to this problem is to incorporate into video-viewing activities visual learning analytics, an approach that emphasizes human reasoning and judgement in using analytics and visualization to understand educational problems [12,13]. However, few studies have explored and theorized the application of visual learning analytics in teacher professional development settings [14]. Moreover, the effect of in-service teacher training on student learning remains largely unexplored [15,16]. Further research is required to (1) test and theorize the use of visual learning analytics in teacher professional development and (2) evaluate the effect of such an analytics-supported program on student learning. This study therefore addresses the following research questions (RQ1 to RQ3):

- How does the use of visual learning analytics in professional development influence experienced mathematics teachers’ classroom talk?

- What are the teachers’ perceptions of the program?

- How does the program influence students’ mathematics achievement?

2. Conceptual Framework

The framework for our professional development program was developed in three stages to ensure that the program is practical and draws on relevant techniques and technologies [17]. First, we leverage research on formative assessment and effective classroom talk to establish the content of the program. Second, we use recent visual learning analytics technology to support the teachers’ reflections on classroom discourse when reviewing their lesson videos. Third, we theorize the use of visual learning analytics with activity theory.

2.1. Formative Assessment and Classroom Talk

Formative assessment is vital to student learning, and its benefits are associated with teacher feedback [3,5,18,19,20,21]. Haug and Ødegaard [21] thus emphasize teachers’ sensitivity to student responses that reveal students’ thoughts and ideas. However, teacher-dominated classrooms are common in China, rooted as they are in a Confucian culture in which students often expect teachers to “spoon-feed” knowledge to them and seldom speak up [22]. Without student voices, formative assessment inside the classroom is hardly possible.

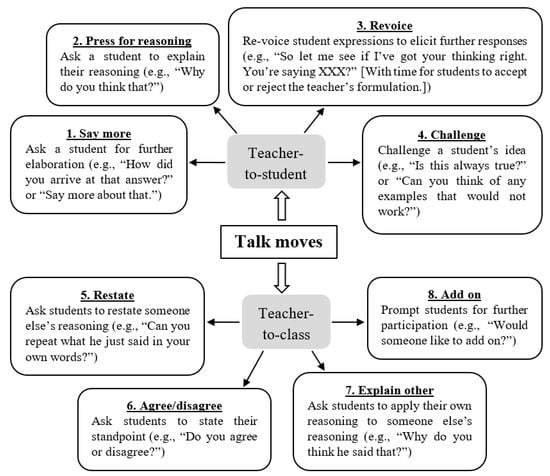

Michaels et al. [23] and Resnick et al. [24] established a framework of productive talk for classroom teaching based on their 15 years of experience working with frontline teachers. They proposed a system of academically productive talk moves to elicit student responses. These talk moves can be classified into teacher-to-student and teacher-to-class productive talk moves, as presented in Figure 1. Teacher-to-student talk moves involve dialog between the teacher and an individual student. For example, with “press for reasoning,” Garcia-Mila et al. [25] encouraged their teacher participants to scaffold students in further explaining their answers. In teacher-to-class talk moves, the teacher involves other students in the dialog, prompting them to explain a classmate’s ideas and reasoning. The teacher can thus check whether students’ responses are correct and address any mistakes [26].

Figure 1.

Teachers’ productive talk moves and examples based on Michaels et al. [23] and Resnick et al. [24].
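To make the two-way classification concrete, the Python sketch below maps talk-move codes to their category and tallies the codes assigned to a lesson. It is an illustrative assumption, not the coding tool used in this study: it lists only the six moves named in this article (the remaining two of the eight moves in Figure 1 are deliberately left out rather than guessed), and the label strings and function name are hypothetical.

```python
from collections import Counter

# Partial taxonomy: only the six talk moves named in this article are listed.
# The other two of the eight moves in Figure 1 are intentionally omitted.
TALK_MOVE_CATEGORY = {
    "say more": "teacher-to-student",
    "press for reasoning": "teacher-to-student",
    "add on": "teacher-to-class",
    "restate": "teacher-to-class",
    "agree/disagree": "teacher-to-class",
    "explain other": "teacher-to-class",
}


def tally_by_category(coded_moves):
    """Count coded talk moves per category (unknown codes raise KeyError)."""
    return Counter(TALK_MOVE_CATEGORY[move] for move in coded_moves)


# Hypothetical codes assigned to one lesson excerpt.
tally = tally_by_category(["say more", "add on", "say more", "press for reasoning", "restate"])
# tally["teacher-to-student"] == 3 and tally["teacher-to-class"] == 2
```

Raising an error on unknown codes, rather than silently skipping them, makes typos in hand-coded labels visible early.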

2.2. Video Viewing and Visual Learning Analytics in Professional Development

At this point, we have established the professional development content (i.e., productive classroom talk). How, then, can we support teachers’ learning and reflections on their classroom practice? Video viewing is one possible way [11,15,27], and studies show that video viewing in professional development can improve teacher cognition and classroom practice [9,11]. Using video recordings, teachers can watch their classroom teaching repeatedly, as often as their needs require. According to Gröschner et al. [27], video-based reflection can further increase teachers’ self-efficacy and facilitate instructional changes. However, the use of classroom videos in professional development does not guarantee success. Gaudin and Chaliès [11] caution that because the abundant data in classroom videos can be overwhelming, teachers often get sidetracked and fail to identify information relevant for reflection.

As Martín-García et al. [28] suggested, visual techniques can facilitate reflective learning. Thanks to technological advances, visual analytics, “the science of analytical reasoning facilitated by interactive visual interfaces” [29] (p. 10), can help provide insights into complex data. According to Vieira et al. [13], the use of visual analytics has become increasingly common in education. Although these researchers coined the term visual learning analytics in their review of learning and visual analytics research, they lamented that “little work has been done to bring visual learning analytics tools into classroom settings” (p. 119). Further research is therefore needed.

For this reason, we developed a visual learning analytics tool, the classroom discourse analyzer, to facilitate teachers’ reflections on classroom talk (see Appendix A for the interface; [12,30]). The analyzer can automatically visualize low-inference discourse information (e.g., distributions of words, turns, and turn-taking patterns) from transcriptions of classroom video recordings, whereas human coding is needed for the analyzer to represent high-inference discourse information, such as the types of talk moves shown in Figure 1. The analyzer visualizes classroom talk using (1) separate timelines for the teacher and each student and (2) bubbles whose sizes represent the number of words in each talk turn. Graphs present information on classroom talk (e.g., speaker statistics, turn-taking patterns, and the distribution of each productive talk move), and teachers can click on the graphs to navigate to and view the corresponding video segments and/or transcripts. Visual representations, transcripts, and video footage are synchronized, providing multiple resources to support teacher reflection and discussion. The design of the classroom discourse analyzer follows the principle of multiple views in information visualization [31] and the visual information seeking mantra, “Overview first, zoom and filter, then details-on-demand” [32] (p. 366), both of which are consistent with how people process information, moving from the general to the specific.
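As a rough illustration of the low-inference part of this process, the Python sketch below derives words and turns per speaker, a role-level turn-taking pattern, and one bubble size per turn from a transcript. The transcript format, the speaker label "Teacher", and the function name are hypothetical, and the sketch does not reproduce how the classroom discourse analyzer itself is implemented. Words are counted by whitespace splitting, which suits an English translation; Chinese transcripts would first need word segmentation.

```python
from collections import Counter


def discourse_stats(turns, teacher_label="Teacher"):
    """Low-inference statistics from a transcript.

    turns: list of (speaker, utterance) pairs in lesson order (hypothetical format).
    Words are counted by whitespace splitting (an assumption; Chinese text
    would need a word segmenter first).
    """
    words, turn_counts, bubbles = Counter(), Counter(), []
    for number, (speaker, text) in enumerate(turns, start=1):
        n_words = len(text.split())
        words[speaker] += n_words
        turn_counts[speaker] += 1
        # One bubble per turn on the speaker's timeline; its size is the word count.
        bubbles.append((number, speaker, n_words))
    # Turn-taking pattern: transitions between roles (teacher or student).
    roles = ["teacher" if s == teacher_label else "student" for s, _ in turns]
    transitions = Counter(zip(roles, roles[1:]))
    return words, turn_counts, transitions, bubbles


lesson = [
    ("Teacher", "Can you say more about that"),
    ("S1", "Both lines pass through the origin"),
    ("Teacher", "Who can add on"),
    ("S2", "The slopes are different"),
]
words, turn_counts, transitions, bubbles = discourse_stats(lesson)
```

Collapsing individual students into a single "student" role keeps the transition table small. A per-student version would simply skip that mapping step.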

2.3. Theorizing the Use of Visual Learning Analytics with Activity Theory

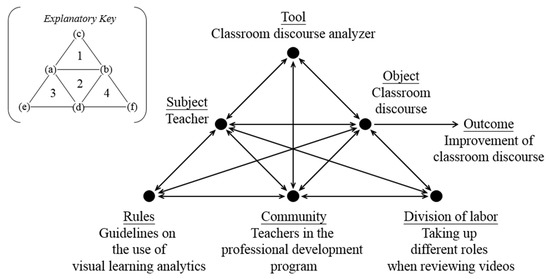

The use of visual learning analytics is theorized with activity theory. This theory focuses on the link between a human agent and social structure [33] and can be drawn on to theorize the use of digital technology [14]. From the perspective of activity theory, human cognition can be restructured through language and artefacts, rather than through the stimulus-response process described by behaviorists [34]. Activity theorists view every individual as an active agent situated in an activity system of six elements: (a) subject, (b) object, (c) tool, (d) community, (e) rules, and (f) division of labor. In Figure 2, a pictorial representation of activity theory shows how these elements interact, forming four sub-triangles (Triangles 1 to 4), each of which represents a sub-system of mediation [14,33]:

Figure 2.

The theoretical framework of the analytics-supported video-based professional development program (right) with the explanatory key (left).

- Triangle 1 (Vygotsky’s mediated-action model): a human agent pursuing an object is mediated by tools;

- Triangle 2: the human agent entering a community to pursue the object is facilitated by coordinated action and communication;

- Triangle 3: a set of rules mediates between the human agent and community; and

- Triangle 4: division of labor (or roles) mediates between the community and object.

Recently, Blayone [14] theorized the use of digital technology with activity theory. Building on his theoretical work, we show in Figure 2 the framework of the analytics-supported professional development program. In the program for mathematics teachers (a: subject), the object (b) was the teacher’s classroom discourse. In Triangle 1, the classroom discourse analyzer (c: tool) served as a mediator for teachers to improve their classroom discourse (outcome). In Triangle 2, teachers formed a learning community (d) in which they discussed in groups and reflected on the visual learning analytics of their classroom videos. To facilitate peer interactions, we provided teachers with guidelines (e: rules) on the effective use of visual learning analytics as evidence for discussion and reflection. When reviewing the videos, the teachers were encouraged to discuss and seek improvement at two levels: (1) the overall learning analytics of classroom discourse, such as the use of productive talk moves and the students’ word contributions, and (2) the quality of each of the teacher’s talk turns. To make the discussion more efficient, teachers worked collaboratively (f: division of labor), each monitoring different aspects when reviewing their classroom videos. Grounding the program in activity theory increased the coherence of the overall activity system, and the use of visual learning analytics thus became a structured approach to teacher professional development.

3. Method

3.1. Research Context and Participants

This study was conducted within a year-long professional development project for mathematics teachers in Shanghai. We recruited teachers from 16 local secondary schools. More than 50 teachers were randomly assigned to the treatment group (which attended video-based workshops) or the comparison group (which attended conventional knowledge-based workshops). Their students were also involved in the study. Informed consent was obtained from all participants. The study was approved by the Human Research Ethics Committee of The University of Hong Kong.

From each group, we randomly selected two teachers who had taught for 10 years or more to participate in this in-depth study. Table 1 shows that the teachers had similar backgrounds. At the start of the program, their students were in Junior Secondary 2; by the end of the program, the students had progressed to Junior Secondary 3. The pre-test results suggest that the students’ initial mathematics ability levels were equivalent across the two groups, providing common ground for comparison after the intervention.

Table 1.

Information on the teacher participants.

3.2. Procedure and Professional Development Program

All teacher participants attended the first knowledge-based workshop (about two hours) with an introduction to academically productive talk in the classroom [23,24]. The treatment group then attended five 2-h video-based workshops held every 1 to 2 months over the year. In the first two workshops, we introduced the eight types of productive talk moves (see Figure 1) of Michaels et al. [23] and Resnick et al. [24]. In the subsequent three workshops, we strengthened the teachers’ classroom talk knowledge and skills and focused on the integrated use of different productive talk moves. Before each workshop, the treatment teachers handed in videos of their classroom teaching, which were loaded into the classroom discourse analyzer [12,30] for reflection in the workshop.

The professional development activities in each video-based workshop were similar. Within the theoretical framework of the analytics-supported professional development program (Figure 2), teachers in the treatment group first evaluated their own classroom practices with a focus on classroom discourse. They then reviewed their classroom videos in groups. Drawing on the visual learning analytics of their classroom videos, they discussed and reflected collaboratively on their classroom practice, navigating the various learning analytics data of a lesson, including visualizations of the teacher’s and students’ words and turns, as well as the teacher’s use of the eight types of productive talk moves (see Figure 1). Supported by visual learning analytics, teachers could also identify meaningful segments and review the corresponding transcripts and videos for in-depth discussion. These analytics were used to identify areas for improvement. Toward the end of each workshop, we engaged teachers in a whole-class discussion in which we shared a few good examples and highlighted common problems to be addressed in future practice.

For the comparison group, teachers handed in their videos to the research team on the same schedule as the treatment group. Instead of participating in the video-based workshops, however, they attended two 2-h conventional knowledge-based workshops (at the end of semesters 1 and 2, respectively) in addition to the introductory workshop. In these workshops, held every 6 months, teachers learned the knowledge and skills of classroom talk through lectures, and group discussions and teacher reflections were major activities.

3.3. Data Collection and Analysis

Data were collected from four major sources: (1) classroom videos, (2) teacher reflections, (3) teacher interviews, and (4) student tests. First, we video-recorded the lessons of the four teacher participants. To examine changes in classroom discourse, we compared their classroom videos from the start, the middle, and toward the end of the program. In total, 12 classroom videos of about 40 min each (i.e., the duration of a lesson) were analyzed. No constraints were imposed on lesson objectives or topics. However, we asked the teacher participants to videotape lessons in which they taught new mathematics knowledge (rather than, for example, exam review) so that the lessons and dialogic practices would be comparable in our analysis. The research team transcribed the videos and coded the classroom discourse into the eight types of academically productive talk moves [23,24] presented in Figure 1. Table 2 shows a discourse excerpt from Tiffany’s lesson as an illustration. In total, about 88,000 words and 2400 talk turns contributed by the teachers and their students were transcribed and analyzed. Transcripts were double coded by two team members. To ensure coding quality, all transcripts and identified productive talk moves were reviewed and evaluated by a mathematics teacher with 13 years of teaching experience and an expert on productive classroom talk in the research team. Any coding disagreements were resolved through discussion until consensus was reached.

Table 2.

Discourse excerpt of Tiffany’s lesson (translated into English for reporting).
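The study resolves coding disagreements through discussion and does not report an agreement coefficient. If one wanted to quantify how often the two coders agreed before discussion, Cohen’s kappa is a common choice. The Python sketch below computes it for two lists of per-turn talk-move codes. It is an added illustration, not part of the reported analysis, and the "none" label for turns without a talk move is a hypothetical convention.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders labelling the same sequence of items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty code lists of equal length")
    n = len(coder_a)
    # Observed agreement: share of items that both coders labelled identically.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement, estimated from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / n ** 2
    if expected == 1:
        return 1.0  # both coders used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical codes for six talk turns ("none" = no talk move in that turn).
coder_1 = ["say more", "add on", "none", "none", "restate", "none"]
coder_2 = ["say more", "add on", "none", "restate", "restate", "none"]
kappa = cohens_kappa(coder_1, coder_2)  # (5/6 - 10/36) / (1 - 10/36) = 20/26
```

Here the coders agree on five of six turns, but kappa (about 0.77) is lower than the raw agreement (about 0.83) because it discounts the agreement expected by chance.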

For each classroom video, we analyzed three indicators of instructional change. First, we counted the productive talk moves the teachers used. This number indicated their mastery of the talk moves and their awareness of using them to elicit student responses. The quality of each talk move was assessed by the researchers and the mathematics teacher, and low-quality talk moves (e.g., those irrelevant to the discussion) were excluded. Second, we computed the percentage of words in each lesson contributed by students. Tracking the change in this percentage showed whether the teacher participants could elicit more student responses after the program. Third, we computed the average number of words per student turn. The length of students’ responses indicated whether they could elaborate on their ideas in detail, providing more information for teachers to formatively assess their learning. The influence of our program could thus be examined by comparing these statistics across the videos of different teachers recorded at different times.
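The three indicators can be computed per lesson along the lines of the Python sketch below. It assumes a hypothetical input format (one (role, word count) pair per talk turn, plus the list of talk-move codes retained after the quality review) and is meant only to make the definitions precise, not to reproduce the study’s own scripts.

```python
def lesson_indicators(turns, retained_moves):
    """Compute the three per-lesson indicators of instructional change.

    turns: hypothetical list of (role, word_count) pairs, one per talk turn,
           where role is "teacher" or "student".
    retained_moves: talk-move codes kept after the quality review
           (low-quality moves are assumed to be removed already).
    """
    student_words = sum(n for role, n in turns if role == "student")
    total_words = sum(n for _, n in turns)
    student_turns = sum(1 for role, _ in turns if role == "student")
    return {
        # Indicator 1: number of productive talk moves used.
        "talk_moves": len(retained_moves),
        # Indicator 2: students' share of all words spoken, in percent.
        "student_word_pct": 100 * student_words / total_words if total_words else 0.0,
        # Indicator 3: average number of words per student turn.
        "student_words_per_turn": student_words / student_turns if student_turns else 0.0,
    }


lesson = [("teacher", 30), ("student", 12), ("teacher", 18), ("student", 20)]
indicators = lesson_indicators(lesson, ["say more", "add on", "press for reasoning"])
# 32 student words out of 80 -> 40.0%; 32 words over 2 student turns -> 16.0
```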

Teacher reflection data were collected at the end of each workshop using a written reflection form, and teacher interviews were conducted at the end of the program. The reflections and interviews were intended to capture the teachers’ perceptions of the content (i.e., classroom talk) and of the use of visual learning analytics in the program. Guiding questions on the reflection form included “How did I and my students perform in my lesson?” and “In what ways can I improve my classroom instruction and talk?” Examples of interview questions were “What kind of talk moves could help achieve your teaching objectives? (Probe for reasons and examples)” and “Did you change your classroom instruction based on the visual learning analytics of your classroom videos? (Probe for reasons and examples).” The reflection and interview data were transcribed in Chinese and then thematically analyzed and organized into categories [35]. Selected data were translated into English for reporting purposes. To establish coding reliability, the interview data were double coded by the two authors, and any disagreements were resolved through discussion until consensus was reached. To enhance validity, we used member checking: participants read and confirmed the accuracy of their interview transcripts, which helps avoid misunderstandings and misinterpretations of the interview data [36]. In addition, interview findings were reported as direct quotations [37].

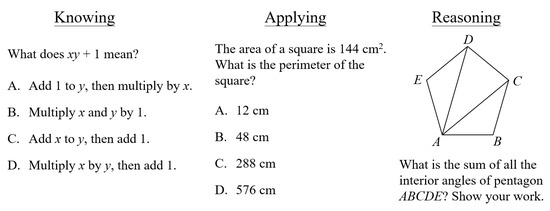

Tests were used to evaluate the students’ mathematics achievement. Before the program started, a pre-test established the initial equivalence of students in the treatment and comparison groups. A post-test compared their mathematics achievement at the end of the program. The pre- and post-tests lasted 40 min each and were similar in scope and difficulty. To ensure validity and reliability, the test papers were designed based on the Trends in International Mathematics and Science Study (TIMSS) and were graded by experienced mathematics teachers. Each test had a maximum score of 47 and covered three cognitive domains: knowing, applying, and reasoning. As Mullis and Martin [38] explained, these three domains cover the appropriate range of cognitive skills across the content domains of mathematics, such as algebra and geometry. Figure 3 shows a sample question, as adopted from TIMSS [39], for each of the three domains. The data analysis followed the guidelines of López et al. [40]. According to the Kolmogorov–Smirnov test, the pre- and post-test scores of both groups deviated significantly from normality. Therefore, a non-parametric test, the Mann–Whitney U test, was used to analyze the student test data [41]. Specifically, the overall scores and the scores in each cognitive domain were compared between the treatment and comparison groups.

Figure 3.

Sample questions of knowing, applying, and reasoning in student tests.
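The Python sketch below shows how z and p values of the kind reported for the Mann–Whitney comparisons arise. It computes the U statistic using average ranks for ties and applies the tie-corrected normal approximation, without continuity correction. In practice a library routine such as `scipy.stats.mannwhitneyu` would normally be used; this standalone version is only a sketch, since the statistical package and options used in the study are not stated. Following a common reporting convention (an assumption here), z is computed from the smaller of the two U statistics, so it is never positive.

```python
import math
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # 0-based positions -> 1-based rank
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney(x, y):
    """Mann-Whitney U with a tie-corrected normal approximation.

    Returns (U, z, two-sided p). U is the smaller of U1 and U2, so z <= 0.
    No continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one score")
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    # Tie correction: sum of (t^3 - t) over each group of t tied scores.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    variance = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if variance <= 0:
        raise ValueError("all scores are identical; z is undefined")
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return u, z, p
```

As a check on the z-to-p conversion, z = −0.66 gives a two-sided p of about 0.51, matching the overall post-test comparison reported in Section 4.3.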

4. Results

4.1. RQ1: How Does the Use of Visual Learning Analytics in Professional Development Influence Experienced Mathematics Teachers’ Classroom Talk?

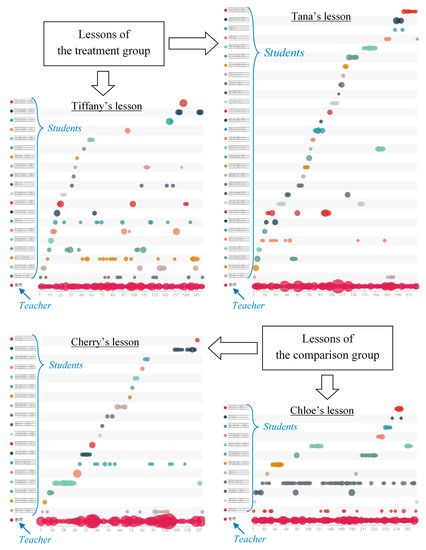

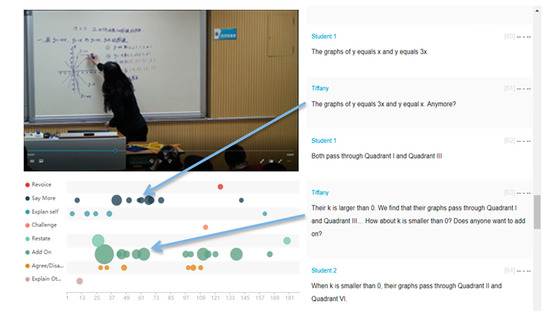

After the program, we observed differences between the classroom practices of the treatment group (i.e., Tiffany and Tana) and the comparison group (i.e., Cherry and Chloe). Figure 4 shows the visual learning analytics of the four teacher participants’ classroom videos toward the end of the program. The horizontal axis represents a lesson’s turn number, and on the vertical axis, each timeline represents a speaker. The students of the two treatment teachers provided more responses (i.e., more bubbles in the student timelines) than those of the comparison teachers (i.e., fewer bubbles). More quantitative evidence is presented below.

Figure 4.

Screenshots of the classroom visual learning analytics of the teacher participants (taken from the classroom discourse analyzer tool).

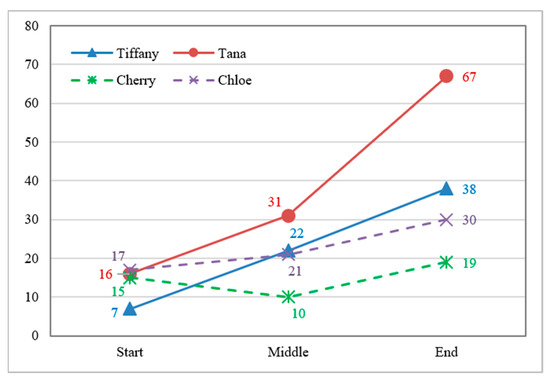

Figure 5 shows the number of talk moves of Michaels et al. [23] and Resnick et al. [24] used by the four teachers over time. The treatment group showed an increasing trend. Specifically, Tana’s number increased from 17 to 31 and then 67, while Tiffany’s increased gradually from seven to 22 and then 38. Toward the end of the program, Tana’s use of productive talk moves (N = 67) was more than double Cherry’s (N = 19) and Chloe’s (N = 30). Although Tiffany (N = 38) used these talk moves only slightly more than the comparison group, her improvement is worth noting because she used just seven productive talk moves at the start of the program.

Figure 5.

Teachers’ use of productive talk moves in lessons.

Table 3 shows the distributions of the teachers’ productive talk moves in lessons over time. In both groups, teachers often used “say more” and “add on” to elicit responses from students. Figure 6 shows a discourse excerpt containing these two talk moves from the classroom discourse analyzer. The teacher was able to confirm the student’s correct answer (i.e., “The graphs of y = x and y = 3x”) and move the discussion forward. Apart from “add on,” the other teacher-to-class talk moves (i.e., “restate,” “agree/disagree,” and “explain other”) appeared rarely.

Table 3.

Distributions of teachers’ productive talk moves in lessons over time.

Figure 6.

Discourse excerpt of “say more” and “add on” from the classroom discourse analyzer tool (translated into English for reporting).

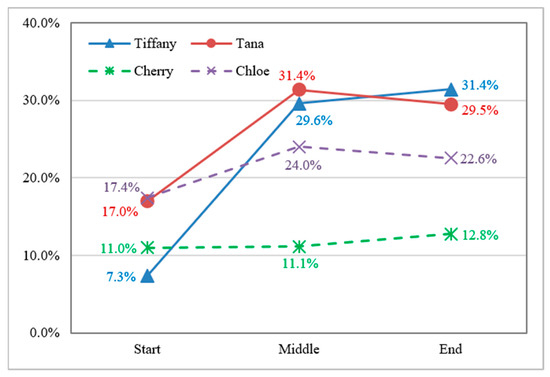

As Figure 7 shows, the percentages of students’ word contributions in the lessons were low in both groups at the start of the program, ranging from 7.3% to 17.4%. From the middle of the program onward, the percentages in the treatment group increased by about 15 percentage points to nearly 30%. This level of word contribution was sustained toward the end of the program, in contrast to 22.6% (Chloe) and 12.8% (Cherry) in the comparison group.

Figure 7.

Percentage of students’ word contributions over time.

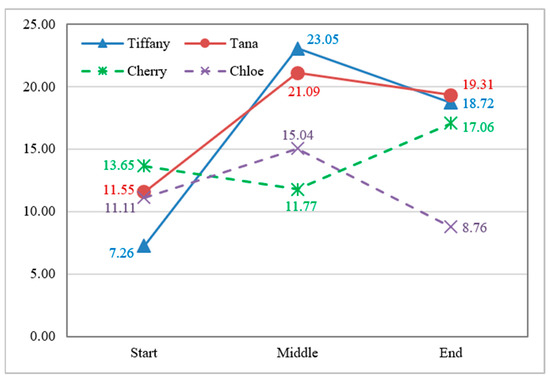

As Figure 8 shows, the average number of words per student turn was below 15 in both groups at the start of the program. From the middle of the program onward, it increased to around 20 in the treatment group but did not improve substantially in the comparison group. When students provided longer responses, more information was available for teachers to formatively assess student learning during class.

Figure 8.

Average number of students’ words per turn over time.

4.2. RQ2: What Are the Teachers’ Perceptions of the Program?

The teachers’ perceptions are reported in terms of three main aspects: (1) the use of visual learning analytics, (2) the changes in their teaching styles, and (3) the use of formative assessment.

Generally, the teacher participants perceived the analytics-supported professional development program positively. Based on their reflections and interview data, two principal benefits of using visual learning analytics were identified. First, the analytics increased the teachers’ understanding of their classroom instruction, such as their word choice and the types of productive talk moves they used. For example, according to Tana, “There are exact words and question types in the classroom videos (as shown in the classroom discourse analyzer). These can increase our understanding of our concepts and classroom instruction” (reflection in Workshop 3) and “enhance our knowledge and skills of questioning” (reflection in Workshop 5). Second, the analytics supported teacher reflections and helped improve classroom instruction, especially formative feedback. Tiffany highlighted a few aspects: “In addition to the number of students’ word contributions, I should pay attention to the quality of my instruction, my immediate follow-up responses, my guidance, and my probes into deeper thinking” (reflection in Workshop 2) and “the wording of talk moves, such as add on and agree/disagree” (reflection in Workshop 4).

As for the changes in teaching styles, we found that using visual learning analytics raised teachers’ awareness of the need to engage students in class discussions. Taking the “press for reasoning” talk move as an example, Tana said that she used it to probe students’ explanations:

“Now, I would give students more chances to talk and express themselves, asking simple yes/no questions to draw their attention or probing for further elaboration. For example, I would ask them: Why do you think so? How did you come up with this solution?”

In addition, Tiffany shared that she had become aware of the wait time she gave students to think about and answer her questions:

“In the past, I provided students with limited time to think about my questions. When a student did not respond instantly, I would invite another student to answer. After participating in the program, I would give my students more time, maybe around one to two minutes.”

Finally, the teacher participants confirmed that they would use the productive talk moves to elicit student responses and thus create opportunities for formative assessment. When her students could not answer a question correctly, Tana shared that she would “tell them which concepts they did not fully understand and which part of course materials they should review.” Tiffany, for her part, would show student work in class to engage students in formative assessment, using various talk moves to probe student thinking:

“I would show their common mistakes using an overhead projector. Some students made mistakes repeatedly, meaning that they had some difficulties understanding the concepts. When their work was shown in class, all of the students helped analyze the reasons for the mistakes: Why is it wrong here? How can we avoid the problem? … Letting students correct other students’ mistakes seems quite effective.”

4.3. RQ3: How Does the Program Influence Students’ Mathematics Achievement?

To evaluate the program, we tested the students’ mathematics achievement. Eleven students in the treatment group and six in the comparison group did not complete one or both of the tests and were excluded from the analysis of mathematics achievement. Analyses of the pre-test scores indicate that students in the treatment group (N = 61) did not differ statistically from those in the comparison group (N = 56), z = −1.18, p = 0.24.

The results of a Mann–Whitney U test indicate that after one year of mathematics instruction, the overall post-test scores of the treatment group (Mdn = 40.00) and the comparison group (Mdn = 39.00) remained at the same level, z = −0.66, p = 0.51. Table 4 shows that the two groups of students did not perform differently in any of the test’s three cognitive domains: knowing (z = −0.14, p = 0.89), applying (z = −0.55, p = 0.58), and reasoning (z = −0.49, p = 0.96).

Table 4.

Post-test results of the treatment group (N = 61) and comparison group (N = 56) by cognitive domain.

Based on the teacher interviews, we identified two major themes (i.e., student learning behavior and teacher beliefs) that could explain the non-significant difference between the treatment and comparison groups. First, Tana reported that despite her use of productive talk moves, the passive learning behavior of some underperforming students had not changed:

“They simply don’t care about the [open-ended] questions because they perceive that the questions are not posed for them. … Then, they will not think about the questions, lack the sense of involvement, and disengage. Therefore, they learn nothing.”

Tiffany made similar observations and reflections. She therefore planned to ask more simple questions and to specifically invite the underperforming students in her classes to answer them:

“I find that there is a need to design various levels of questions. Some questions should be simpler. Also, the teacher should invite the underperforming students to answer the questions because they can never learn if we only ask those outstanding students.”

Second, the teacher participants continued to believe in the effectiveness of traditional teaching, emphasizing learning outcomes over the discussion process. As a result, their lessons might occasionally lack interactive engagement. The following remarks illustrate this:

“Students still require our guidance. The efficiency of our lesson should be ensured. Otherwise, if we allow our students to speak freely, they may talk casually, and the lesson then goes to waste.” (Tiffany)

“For the conversation between students, what they said may not be to the point. Of course, we can spend time on such a conversation, but class time is limited.” (Tana)

5. Discussion

In this study, teacher participants learned how to use the productive talk moves of Michaels et al. [23] and Resnick et al. [24] to engage students in class discussion. The study further applied visual learning analytics to classroom videos in the treatment group, while teachers in the comparison group received conventional knowledge-based professional development. Echoing Gröschner et al. [27], we found that video-based activities can facilitate instructional change. The results of the lesson observations indicate that, after the professional development program, teachers in the treatment group made greater use of productive talk moves (see Figure 5), increased the percentage of words contributed by students (see Figure 7), and elicited longer responses from them (see Figure 8). The teachers could thus draw on more information to formatively assess student learning and inform subsequent teaching [3,4]. The results are discussed below in terms of (1) the use of visual learning analytics technology, (2) the sustainable development of analytics-supported, video-based professional development programs, and (3) ways to improve professional development content for experienced teachers.
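The two lesson-level measures mentioned above, the percentage of words contributed by students and the length of their responses, can be illustrated with a small Python sketch. It assumes a transcript already split into talk turns with speaker labels and with words separated by spaces (Chinese transcripts would first need word segmentation); the `Turn` class, the speaker labels and the function names are hypothetical and do not reproduce the study's coding procedure.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # hypothetical labels: "T" for the teacher, "S1", "S2", ... for students
    text: str     # transcribed utterance, assumed to be word-segmented with spaces


def word_count(turn: Turn) -> int:
    """Number of whitespace-separated words in a turn."""
    return len(turn.text.split())


def student_talk_metrics(turns: list[Turn], teacher_id: str = "T") -> tuple[float, float]:
    """Return (percentage of all words spoken by students, mean words per student turn)."""
    student_turns = [t for t in turns if t.speaker != teacher_id]
    total_words = sum(word_count(t) for t in turns)
    student_words = sum(word_count(t) for t in student_turns)
    share = 100 * student_words / total_words if total_words else 0.0
    mean_length = student_words / len(student_turns) if student_turns else 0.0
    return share, mean_length
```

Computing both values for each observed lesson before and after the program would yield a comparison of the kind summarized in Figures 7 and 8, although those figures were produced from the study's own coded transcripts rather than from this sketch.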

5.1. The Use of Visual Learning Analytics Technology

A distinctive feature of the professional development program was the use of visual learning analytics. The design of the program was grounded in activity theory and established a holistic activity system [14] to support improvements in teacher discourse and to expand the use of technology in the classroom [13]. The major themes we identified from the reflection and interview data are situated in Vygotsky's mediated-action model (Triangle 1 of Figure 2), in which a human agent's pursuit of an object is mediated by tools [14,33]. In our program, the participants' pursuit of instructional improvement was mediated by the visual learning analytics technology. The findings suggest that visualizing classroom video data can deepen teachers' reflection on their classroom instruction and lead to instructional change.

5.2. Sustainable Development of Analytics-Supported, Video-Based Professional Development Programs

Although our program appeared to lead to instructional improvement, its most labor-intensive procedure was the manual transcription of the videos. Processing the classroom video data took time, which constrained the program schedule. In addition, the research team carefully identified the speaker in each talk turn to ensure the accuracy of the speaker statistics. To enable teachers to reflect on their teaching in a timely manner, we will adopt a two-stage approach to processing lesson videos in future professional development programs. In the first stage, we will transcribe and code the video segments in which teachers facilitate students' construction of knowledge. In our experience, these segments usually occur at the start of a lesson and during problem-solving. We will then upload the lesson videos together with partial visual learning analytics for teacher reflection. Although video data processing is not yet complete at this point, teachers can still watch their whole lesson videos with the partial analytics. In the second stage, we will transcribe and code the rest of each lesson and then re-upload the lesson videos with full visual learning analytics for teacher reflection.
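The two-stage schedule can be expressed as a small Python sketch. The `Segment` record, the activity labels, the function names and the coverage measure are hypothetical; the only element taken from the text is that lesson openings and problem-solving segments are processed first, with the remainder processed afterwards.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start_s: int              # segment start time in seconds
    end_s: int                # segment end time in seconds
    activity: str             # hypothetical label, e.g. "opening", "problem_solving", "practice"
    transcribed: bool = False


# Segments transcribed and coded in stage 1 (lesson openings and problem-solving).
PRIORITY = {"opening", "problem_solving"}


def stage_one(segments: list[Segment]) -> list[Segment]:
    """Mark priority segments as transcribed; these feed the partial analytics."""
    done = [s for s in segments if s.activity in PRIORITY]
    for seg in done:
        seg.transcribed = True
    return done


def stage_two(segments: list[Segment]) -> list[Segment]:
    """Mark all remaining segments as transcribed; the analytics are then complete."""
    rest = [s for s in segments if not s.transcribed]
    for seg in rest:
        seg.transcribed = True
    return rest


def coverage(segments: list[Segment]) -> float:
    """Share of lesson time already transcribed, i.e. covered by the analytics."""
    total = sum(s.end_s - s.start_s for s in segments)
    done = sum(s.end_s - s.start_s for s in segments if s.transcribed)
    return done / total if total else 0.0
```

Tracking coverage in this way would let teacher educators tell teachers how much of an uploaded lesson the partial analytics already describe.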

In addition, establishing a long-term platform is important for sustainable development in education [42]. Future visual learning analytics-supported and similar video-based professional development programs should incorporate automatic speech and speaker recognition technology (e.g., [43]), which can make such programs more sustainable to run. At the time of our intervention, the major obstacle to automatic speech recognition in a Chinese context was its high error rate. Because Chinese speakers may swallow or drag syllables and speak with a wide range of accents and dialects, computerized recognition commonly produces four types of text errors: redundant words, missing words, incorrect word selection, and mixed errors [44]. With advances in speech recognition technology, however, some researchers (e.g., [44]) now use Pinyin as a feature of neural machine translation to correct Chinese speech recognition errors. Leveraging this kind of technology could substantially reduce the burden of transcribing classroom videos, allowing us to focus on the design and implementation of professional development activities.
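Recognition error rates of this kind are usually quantified by aligning the recognizer's output with a reference transcript using edit distance. A minimal Python sketch follows, assuming character-level scoring (a common choice for Chinese, where words are not separated by spaces). Mapping insertions, deletions and substitutions onto the redundant-word, missing-word and incorrect-word-selection errors described in [44] is an illustrative simplification, mixed errors are not modelled separately, and the function names are hypothetical.

```python
def align_errors(reference: str, hypothesis: str) -> dict[str, int]:
    """Count substitutions, deletions and insertions in a minimum-edit,
    character-level alignment of an ASR hypothesis against a reference."""
    r, h = reference, hypothesis
    n, m = len(r), len(h)
    # dp[i][j] = minimum number of edits turning r[:i] into h[:j]
    dp = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dp[i][0] = i
    for j in range(m + 1):
        dp[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if r[i - 1] == h[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j - 1] + cost,  # match or substitution
                           dp[i - 1][j] + 1,         # deletion: character missing from output
                           dp[i][j - 1] + 1)         # insertion: redundant character in output
    # Trace back along an optimal path and classify each edit.
    counts = {"substitutions": 0, "deletions": 0, "insertions": 0}
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and dp[i][j] == dp[i - 1][j - 1] + (r[i - 1] != h[j - 1]):
            if r[i - 1] != h[j - 1]:
                counts["substitutions"] += 1
            i, j = i - 1, j - 1
        elif i > 0 and dp[i][j] == dp[i - 1][j] + 1:
            counts["deletions"] += 1
            i -= 1
        else:
            counts["insertions"] += 1
            j -= 1
    return counts


def character_error_rate(reference: str, hypothesis: str) -> float:
    """Total edits divided by the number of reference characters."""
    counts = align_errors(reference, hypothesis)
    return sum(counts.values()) / len(reference) if reference else 0.0
```

For example, if the reference 三角形的内角和 is recognized as 三角形内角和是, the alignment finds one missing character and one redundant character, giving a character error rate of 2/7.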

5.3. Ways to Improve Professional Development Content for Experienced Teachers

Despite positive influences on the teachers' classroom instruction, the students in the treatment group did not perform significantly better than those in the comparison group in mathematics achievement (see Table 4). This non-significant result is not surprising, given that a recent review by Lindvall and Ryve [45] suggested that teacher professional development programs generally have a limited impact on student learning outcomes. Nevertheless, this study points to areas for improvement. The results of our lesson observations suggest that teachers seldom asked their students to comment on their classmates' ideas. Specifically, they tended to confirm students' answers and clarify their misunderstandings directly, making limited use of the teacher-to-class productive talk moves (see Table 3) that encourage students to think with others (e.g., “Why do you think he said that?”). The teacher interviews suggest that this inclination to provide correct answers was rooted in a desire to ensure the effectiveness of teaching. However, this kind of teacher-led discussion may forgo the benefits that students gain from the discussion process [46]. In other words, the limited use of teacher-to-class productive talk moves was not sufficient to challenge the students cognitively [47]. According to Topping and Ehly [48], simplification, clarification and exemplification in the peer-assisted learning process can trigger further cognitive challenges and promote deeper learning. In contrast to the experienced teachers in this study, some novice teachers appear more willing to let students make mistakes and place greater emphasis on the discussion process [46]. Future professional development programs should raise experienced teachers' awareness of the value and use of teacher-to-class productive talk moves and support their specific learning needs [49,50].

6. Conclusions and Limitation

In this study, we examined the influence of an analytics-supported, video-based professional development program on two experienced secondary school mathematics teachers relative to two comparison teachers. The program elicited several changes in the treatment teachers' classroom discourse. Specifically, these teachers used more productive talk moves, and their students contributed a larger share of the words spoken in class and gave longer responses. The study contributes to our knowledge of using visual learning analytics, grounded theoretically in activity theory, in teacher professional development. Such professional development programs could be improved by using automatic speech and speaker recognition technology to lighten teacher educators' burden of video data processing.

As for the study's limitation, although we collected data from four sources throughout the year, the study focused on only four experienced teachers and their students. Subsequent studies could examine teachers' instructional change on a larger scale, which would provide more generalizable insights into teacher learning in an analytics-supported, video-based professional development environment.

Author Contributions

Formal analysis, investigation, writing—original draft preparation, writing—review and editing, and visualization, C.K.L.; conceptualization, methodology, software, validation, resources, data curation, supervision, project administration, funding acquisition, G.C. Both authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research Grants Council (RGC), University Grants Committee of Hong Kong, grant numbers 27606915 and 17608318.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Human Research Ethics Committee for Non-Clinical Faculties of The University of Hong Kong (protocol code: EA1502067; date of approval: 5 March 2015).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data samples and detailed coding procedures can be accessed by contacting the author.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1 shows a screenshot of the interface of the classroom discourse analyzer tool, with a visualization of teacher and student talk in a lesson. In the visualization area (lower left), the x-axis indicates the turn number of talk, and each line (color) on the y-axis represents a speaker in the lesson. The teacher's bubbles (red) are shown at the bottom. Bubble size indicates the number of words in a turn. At the right end of each line, the integer indicates the total number of words spoken by the corresponding speaker in the lesson, and the percentage is that speaker's share of all words spoken in the whole-class talk of the lesson.

Figure A1.

Screenshot of the interface of the classroom discourse analyzer tool.
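The quantities shown in this visualization can be derived from a speaker-labelled transcript with a few lines of Python. This is a hypothetical sketch, not the classroom discourse analyzer's code: it assumes turns are given as (speaker, text) pairs with space-separated words, the function names are illustrative, and percentages are rounded to one decimal place here only for display.

```python
def bubble_points(turns: list[tuple[str, str]]) -> list[tuple[int, str, int]]:
    """One bubble per talk turn: (turn number on the x-axis, speaker row, size in words)."""
    return [(k, speaker, len(text.split())) for k, (speaker, text) in enumerate(turns, start=1)]


def speaker_totals(turns: list[tuple[str, str]]) -> dict[str, tuple[int, float]]:
    """Label at the right of each speaker row: total words and share (%) of all words."""
    totals: dict[str, int] = {}
    for speaker, text in turns:
        totals[speaker] = totals.get(speaker, 0) + len(text.split())
    grand_total = sum(totals.values())
    return {
        s: (w, round(100 * w / grand_total, 1) if grand_total else 0.0)
        for s, w in totals.items()
    }
```

Because every bubble and every row label is computed from the same word counts, the per-speaker percentages always sum to roughly 100% of the whole-class talk in the lesson.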

References

1. Doan, K.; Peters, M. Scratching the seven-year itch. Principal 2009, 89, 18–22.

2. Hargreaves, A.; Fullan, M. The power of professional capital: With an investment in collaboration, teachers become nation builders. J. Staff Dev. 2013, 34, 36–39.

3. Black, P.; Harrison, C.; Lee, C.; Marshall, B.; Wiliam, D. Assessment for Learning: Putting It into Practice; Open University Press: Maidenhead, UK, 2003.

4. Jiang, Y. Exploring teacher questioning as a formative assessment strategy. RELC J. 2014, 45, 287–304.

5. Black, P.; Wiliam, D. Developing the theory of formative assessment. Educ. Assess. Eval. Account. 2009, 21, 5–31.

6. Borko, H.; Koellner, K.; Jacobs, J.; Seago, N. Using video representations of teaching in practice-based professional development programs. ZDM 2011, 43, 175–187.

7. Kang, H.; van Es, E.A. Articulating design principles for productive use of video in preservice education. J. Teach. Educ. 2019, 70, 237–250.

8. Sherin, M.G.; Dyer, E.B. Mathematics teachers’ self-captured video and opportunities for learning. J. Math. Teach. Educ. 2017, 20, 477–495.

9. Watters, J.J.; Diezmann, C.M.; Dao, L. Using classroom videos to stimulate professional conversations among pre-service teachers: Windows into a mathematics classroom. Asia-Pac. J. Teach. Educ. 2018, 46, 239–255.

10. Erickson, F. Ways of seeing video. In Video Research in the Learning Sciences; Goldman, R., Pea, R., Barron, B., Derry, S.J., Eds.; Routledge: New York, NY, USA, 2007; pp. 145–155.

11. Gaudin, C.; Chaliès, S. Video viewing in teacher education and professional development: A literature review. Educ. Res. Rev. 2015, 16, 41–67.

12. Chen, G.; Clarke, S.N.; Resnick, L.B. Classroom discourse analyzer (CDA): A discourse analytic tool for teachers. Technol. Instr. Cogn. Learn. 2015, 10, 85–105.

13. Vieira, C.; Parsons, P.; Byrd, V. Visual learning analytics of educational data: A systematic literature review and research agenda. Comput. Educ. 2018, 122, 119–135.

14. Blayone, T.J.B. Theorising effective uses of digital technology with activity theory. Technol. Pedagog. Educ. 2019, 28, 447–462.

15. Major, L.; Watson, S. Using video to support in-service teacher professional development: The state of the field, limitations and possibilities. Technol. Pedagog. Educ. 2018, 27, 49–68.

16. Roca-Campos, E.; Renta-Davids, A.I.; Marhuenda-Fluixá, F.; Flecha, R. Educational impact evaluation of professional development of in-service teachers: The case of the dialogic pedagogical gatherings at Valencia “on giants’ shoulders”. Sustainability 2021, 13, 4275.

17. Ramírez-Montoya, M.S.; Andrade-Vargas, L.; Rivera-Rogel, D.; Portuguez-Castro, M. Trends for the future of education programs for professional development. Sustainability 2021, 13, 7244.

18. Choi, Y.; Cho, Y.I. Learning analytics using social network analysis and Bayesian network analysis in sustainable computer-based formative assessment system. Sustainability 2020, 12, 7950.

19. Jeong, J.S.; González-Gómez, D.; Prieto, F.Y. Sustainable and flipped STEM education: Formative assessment online interface for observing pre-service teachers’ performance and motivation. Educ. Sci. 2020, 10, 283.

20. Villa-Ochoa, J.A.; Sánchez-Cardona, J.; Rendón-Mesa, P.A. Formative assessment of pre-service teachers’ knowledge on mathematical modeling. Mathematics 2021, 9, 851.

21. Haug, B.S.; Ødegaard, M. Formative assessment and teachers’ sensitivity to student responses. Int. J. Sci. Educ. 2015, 37, 629–654.

22. Nguyen, P.M.; Terlouw, C.; Pilot, A. Culturally appropriate pedagogy: The case of group learning in a Confucian heritage culture context. Intercult. Educ. 2006, 17, 1–19.

23. Michaels, S.; O’Connor, C.; Resnick, L.B. Deliberative discourse idealized and realized: Accountable talk in the classroom and in civic life. Stud. Philos. Educ. 2008, 27, 283–297.

24. Resnick, L.B.; Michaels, S.; O’Connor, M.C. How (well-structured) talk builds the mind. In Innovations in Educational Psychology: Perspectives on Learning, Teaching, and Human Development; Preiss, D.D., Sternberg, R.J., Eds.; Springer: New York, NY, USA, 2010; pp. 163–194.

25. Garcia-Mila, M.; Miralda-Banda, A.; Luna, J.; Remesal, A.; Castells, N.; Gilabert, S. Change in classroom dialogicity to promote cultural literacy across educational levels. Sustainability 2021, 13, 6410.

26. Shute, V.J. Focus on formative feedback. Rev. Educ. Res. 2008, 78, 153–189.

27. Gröschner, A.; Schindler, A.K.; Holzberger, D.; Alles, M.; Seidel, T. How systematic video reflection in teacher professional development regarding classroom discourse contributes to teacher and student self-efficacy. Int. J. Educ. Res. 2018, 90, 223–233.

28. Martín-García, R.; López-Martín, C.; Arguedas-Sanz, R. Collaborative learning communities for sustainable employment through visual tools. Sustainability 2020, 12, 2569.

29. Thomas, J.J.; Cook, K.A. A visual analytics agenda. IEEE Comput. Graph. Appl. 2006, 26, 10–13.

30. Chen, G. A visual learning analytics (VLA) approach to video-based teacher professional development: Impact on teachers’ beliefs, self-efficacy, and classroom talk practice. Comput. Educ. 2020, 144, 103670.

31. Baldonado, M.Q.; Woodruff, A.; Kuchinsky, A. Guidelines for using multiple views in information visualization. In AVI ’00: Proceedings of the Working Conference on Advanced Visual Interfaces, Palermo, Italy, May 2000; Association for Computing Machinery: New York, NY, USA, 2000; pp. 110–119.

32. Shneiderman, B. The eyes have it: A task by data type taxonomy for information visualizations. In The Craft of Information Visualization: Readings and Reflections; Bederson, B.B., Shneiderman, B., Eds.; Morgan Kaufmann: San Francisco, CA, USA, 2003; pp. 364–371.

33. Engeström, Y. Learning by Expanding: An Activity-Theoretical Approach to Developmental Research, 2nd ed.; Cambridge University Press: New York, NY, USA, 2015.

34. Wertsch, J.V.; Tulviste, P. L.S. Vygotsky and contemporary developmental psychology. Dev. Psychol. 1992, 28, 548–557.

35. Corbin, J.M.; Strauss, A.L. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory; SAGE: Los Angeles, CA, USA, 2008.

36. Maxwell, J.A. Qualitative Research Design: An Interactive Approach, 2nd ed.; SAGE: Thousand Oaks, CA, USA, 2005.

37. Johnson, R.B. Examining the validity structure of qualitative research. Education 1997, 118, 282–292.

38. Mullis, I.V.S.; Martin, M.O. TIMSS 2019 Assessment Frameworks; Boston College: Chestnut Hill, MA, USA, 2017.

39. International Association for the Evaluation of Educational Achievement. TIMSS 2011 Grade 8 Released Mathematics Items. 2013. Available online: https://nces.ed.gov/timss/pdf/TIMSS2011_G8_Math.pdf (accessed on 1 July 2021).

40. López, X.; Valenzuela, J.; Nussbaum, M.; Tsai, C.C. Some recommendations for the reporting of quantitative studies. Comput. Educ. 2015, 91, 106–110.

41. Field, A. Discovering Statistics Using SPSS, 3rd ed.; SAGE: London, UK, 2009.

42. Jeong, J.S.; González-Gómez, D. Adapting to PSTs’ pedagogical changes in sustainable mathematics education through flipped e-learning: Ranking its criteria with MCDA/F-DEMATEL. Mathematics 2020, 8, 858.

43. Kelly, S.; Olney, A.M.; Donnelly, P.; Nystrand, M.; D’Mello, S.K. Automatically measuring question authenticity in real-world classrooms. Educ. Res. 2018, 47, 451–464.

44. Duan, D.; Liang, S.; Han, Z.; Yang, W. Pinyin as a feature of neural machine translation for Chinese speech recognition error correction. In Chinese Computational Linguistics. CCL 2019. Lecture Notes in Computer Science; Sun, M., Huang, X., Ji, H., Liu, Z., Liu, Y., Eds.; Springer: Cham, Switzerland, 2019; Volume 11856, pp. 651–663.

45. Lindvall, J.; Ryve, A. Coherence and the positioning of teachers in professional development programs. A systematic review. Educ. Res. Rev. 2019, 27, 140–154.

46. Li, H.C.; Tsai, T.L. Investigating teacher pedagogical changes when implementing problem-based learning in a year 5 mathematics classroom in Taiwan. Asia-Pac. Educ. Res. 2018, 27, 355–364.

47. Resnick, L.B.; Asterhan, C.S.; Clarke, S.N.; Schantz, F. Next generation research in dialogic learning. In The Wiley Handbook of Teaching and Learning; Hall, G.E., Quinn, L.F., Gollnick, D.M., Eds.; John Wiley & Sons: Newark, NJ, USA, 2018; pp. 323–338.

48. Topping, K.J.; Ehly, S.W. Peer-Assisted Learning; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1998.

49. Chen, G.; Chan, C.K.; Chan, K.K.; Clarke, S.N.; Resnick, L.B. Efficacy of video-based teacher professional development for increasing classroom discourse and student learning. J. Learn. Sci. 2020, 29, 642–680.

50. Chen, G. Visual learning analytics to support classroom discourse analysis for teacher professional learning and development. In International Handbook of Research on Dialogic Education; Mercer, N., Wegerif, R., Major, L., Eds.; Routledge: Abingdon, UK, 2019; pp. 167–181.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).