Detecting Apples in the Wild: Potential for Harvest Quantity Estimation

Abstract

1. Introduction

2. Methods and Methodology

- information about the selected fruits, such as color and quality grade, obtained from the counting.

- Convolutional Neural Networks (CNN): this method is more accurate than the earlier approach based on Gaussian Mixture Models (GMMs). Its multi-class classification approach achieves an accuracy of 80% to 94% without any pre- or post-processing steps, and the deep learning network copes with different fruit colors and lighting conditions. Tests conducted to check the method's suitability for yield estimation showed an accuracy of approximately 96% with respect to the actual number of apples [14].

- Deep Simulated Learning (DSL): automatic estimation of the number of fruits based on robotic agriculture offers a viable solution in this area. The network is trained entirely on synthetic data and tested on real data. A modified version of the Inception-ResNet architecture is used to capture features at multiple scales. The algorithm counts effectively even when fruits are in shadow, occluded by leaves or branches, or partly overlapping. Experimental results show an average test accuracy of 91% on real images and 93% on synthetic images, and the method remains effective under variations in lighting [23].

- Mixed method: this method combines deep segmentation, frame-to-frame fruit tracking, and 3D localization to accurately count the visible fruits in an image sequence. Segmentation is performed on the image stream from a monocular camera, both in natural light and under controlled night-time lighting. The first step is to train a fully convolutional network (FCN) that segments the video frames into fruit and non-fruit pixels. Fruits are then tracked from frame to frame using the Hungarian algorithm, where the objective cost is determined from a Kanade–Lucas–Tomasi (KLT) tracker corrected by a Kalman filter [42].
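The frame-to-frame association step of the mixed method can be illustrated with a minimal sketch. It assumes that predicted fruit positions for the current frame are already available (in the described method they come from KLT tracking corrected by a Kalman filter; here they are simply passed in) and uses SciPy's `linear_sum_assignment`, which solves the same linear assignment problem as the Hungarian algorithm. The function name `associate` and the gating threshold `max_dist` are hypothetical illustrations, not part of the cited work.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def associate(predicted, detections, max_dist=30.0):
    """Match predicted fruit positions to new detections by minimum total distance.

    predicted, detections: sequences of (x, y) pixel coordinates.
    Returns (matches, unmatched_tracks, unmatched_detections), where matches
    is a list of (track_index, detection_index) pairs.
    """
    pred = np.asarray(predicted, dtype=float).reshape(-1, 2)
    det = np.asarray(detections, dtype=float).reshape(-1, 2)
    if len(pred) == 0 or len(det) == 0:
        return [], list(range(len(pred))), list(range(len(det)))

    # Cost matrix: Euclidean distance between every track and every detection.
    cost = np.linalg.norm(pred[:, None, :] - det[None, :, :], axis=2)
    rows, cols = linear_sum_assignment(cost)

    # Hypothetical gating: reject pairs too far apart to be the same fruit.
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= max_dist]
    matched_tracks = {r for r, _ in matches}
    matched_dets = {c for _, c in matches}
    unmatched_tracks = [i for i in range(len(pred)) if i not in matched_tracks]
    unmatched_dets = [j for j in range(len(det)) if j not in matched_dets]
    return matches, unmatched_tracks, unmatched_dets
```

In a counting pipeline, each unmatched detection would open a new track, so the number of tracks approximates the number of fruits seen; the method described above also uses 3D localization, which this sketch omits.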

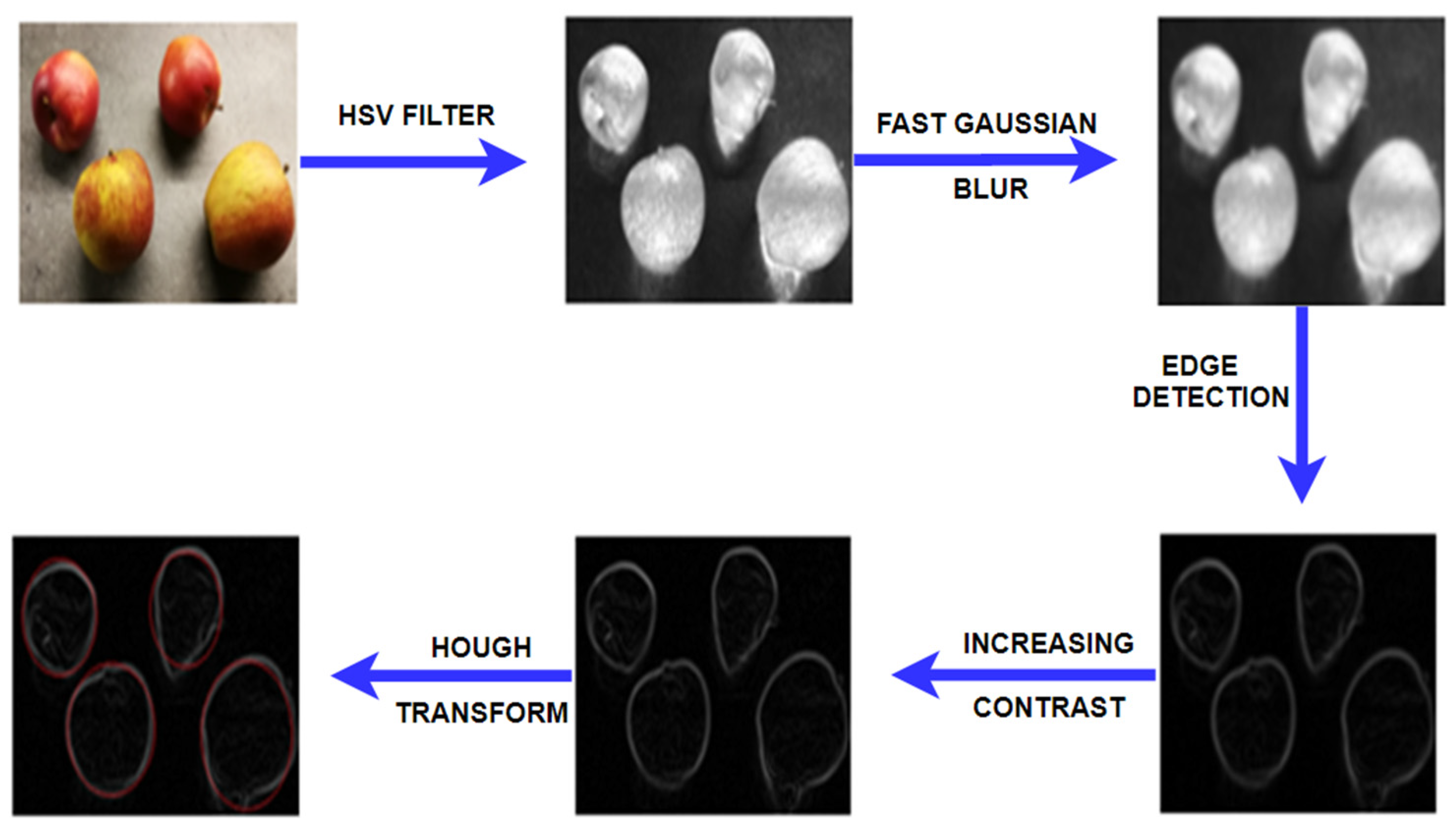

2.1. The Use of Image Filtration and Hough Transform—Solution A

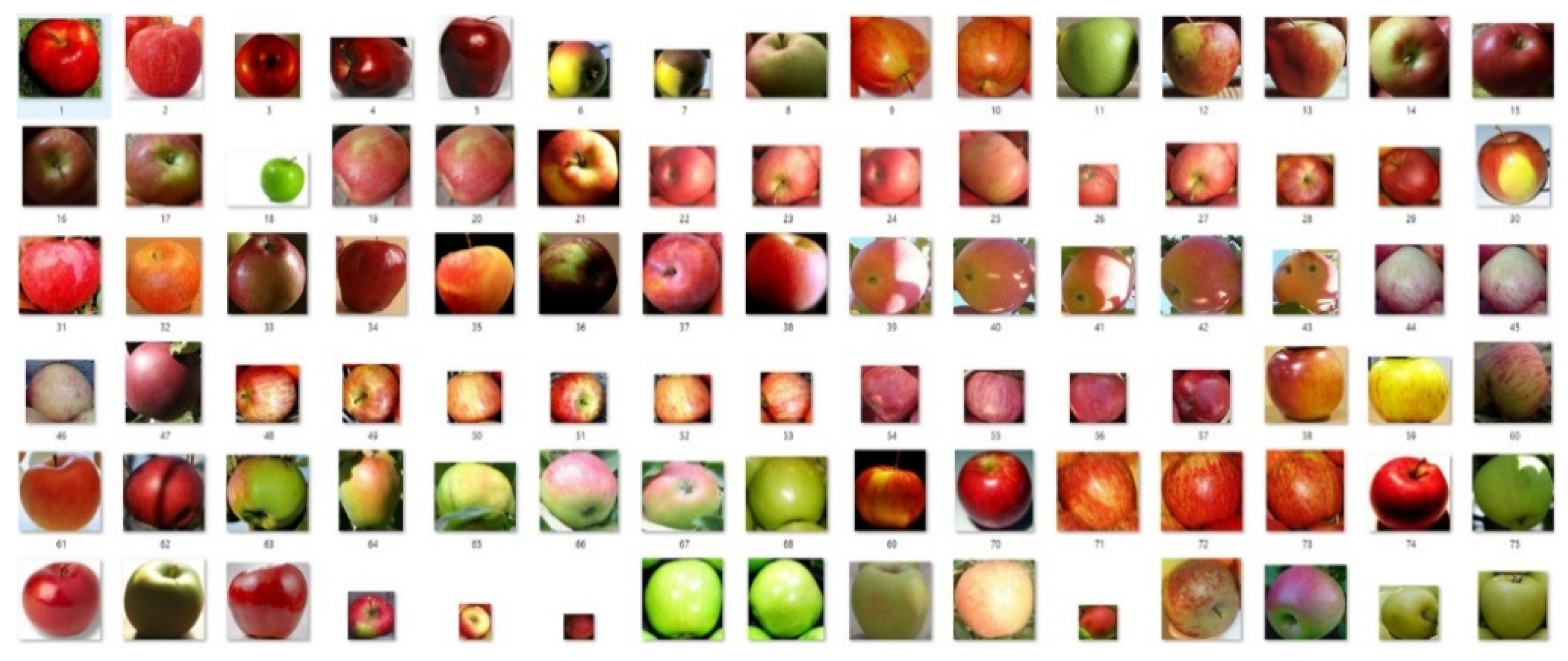

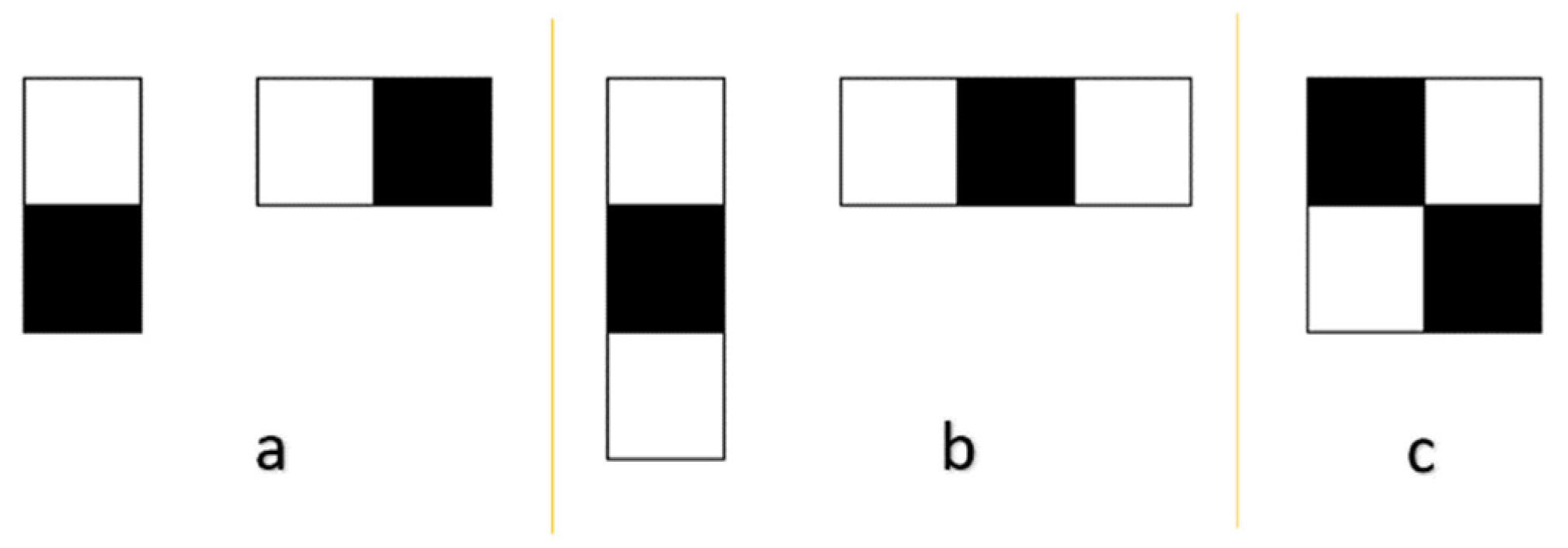

2.2. Viola–Jones Object Detection—Solution B

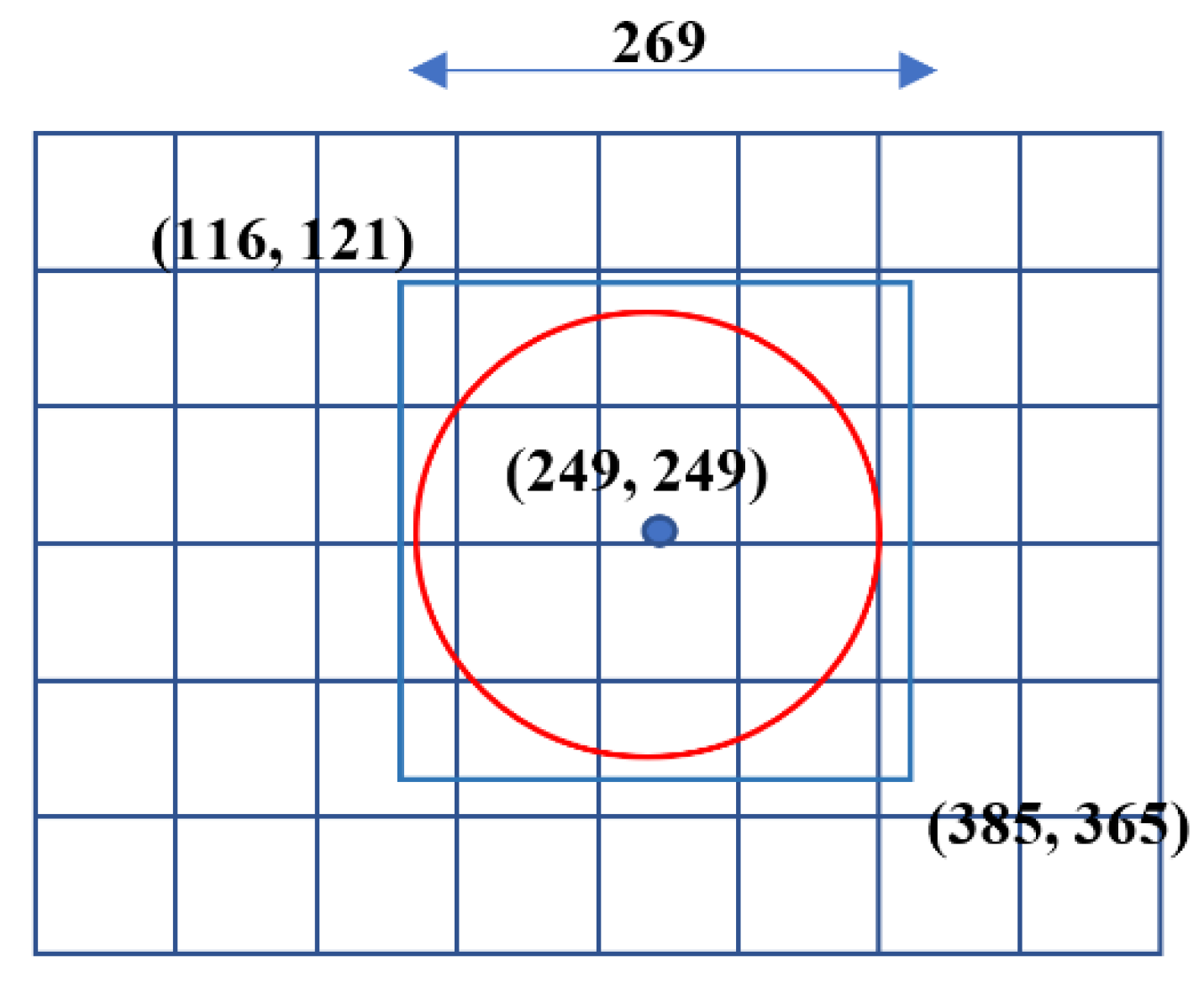

2.3. YOLO: Real-Time Object Detection—Solution C

- x (center of the blue box) = (116 + 385)/2 = 250.5, normalized and related to the grid cell that contains it; with 100 px cells this is the cell spanning 200–300 px, so x = (250.5 − 200)/100 = 0.505

- y (center of the blue box) = (121 + 365)/2 = 243, normalized and related to the same grid cell (200–300 px), so y = (243 − 200)/100 = 0.43

- w (width of the blue box) = (385 − 116)/500 ≈ 0.54 (normalized relative to the width of the whole image)

- h (height of the blue box) = (365 − 121)/500 ≈ 0.49 (normalized relative to the height of the whole image)

- c (confidence) = Pr(Object) × IoU between the predicted box and the ground truth, where IoU = area of overlap/area of union; for a cell that contains an object, c is simply the IoU.
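The box encoding and the IoU can be checked with a short sketch in the spirit of YOLO v1. It assumes the blue box has corners (116, 121) and (385, 365) in a 500 × 500 px image, as the numbers in the list imply, and a grid of 100 px cells (5 × 5), inferred from the divisor 100. The function names are illustrative and not part of any YOLO library. The box center is the midpoint of its corners, and the cell containing that center is the one responsible for predicting the box.

```python
def encode_yolo_box(x_min, y_min, x_max, y_max, img_w, img_h, cell):
    """Encode a corner-format box as (row, col, x, y, w, h) in the YOLO v1 style."""
    cx = (x_min + x_max) / 2.0  # box center in pixels
    cy = (y_min + y_max) / 2.0
    col = int(cx // cell)  # grid cell that contains the center
    row = int(cy // cell)
    x = (cx - col * cell) / cell  # center offset inside that cell, in [0, 1)
    y = (cy - row * cell) / cell
    w = (x_max - x_min) / img_w  # size relative to the whole image
    h = (y_max - y_min) / img_h
    return row, col, x, y, w, h


def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


row, col, x, y, w, h = encode_yolo_box(116, 121, 385, 365, 500, 500, 100)
```

Expressing x and y relative to a cell keeps every regression target in [0, 1), whichever cell the object falls in.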

3. Results

- adjustment of clarity within an arbitrary range of −18 to +18 levels,

- noise removal using the non-local means algorithm,

- gamma correction from 1.1 to 1.6,

- an increase in the proportion of detectable items from 27% to 80% (Figure 8).
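Of the preprocessing steps listed above, gamma correction is the simplest to pin down. The sketch below builds an 8-bit lookup table, assuming the common convention out = 255 · (in/255)^(1/γ), under which γ between 1.1 and 1.6 brightens mid-tones; some editors apply the exponent γ directly instead, and the article does not state which tool or convention was used. Non-local means denoising and clarity adjustment are left to an image library (OpenCV, for instance, provides `cv2.fastNlMeansDenoisingColored`).

```python
def gamma_lut(gamma):
    """256-entry lookup table for out = 255 * (in / 255) ** (1 / gamma)."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return [round(255 * (v / 255) ** (1.0 / gamma)) for v in range(256)]


def apply_lut(pixels, lut):
    """Map a flat sequence of 8-bit intensities through the lookup table."""
    return [lut[p] for p in pixels]


# Example: the upper end of the range used in the experiments.
brightened = apply_lut([0, 64, 128, 255], gamma_lut(1.6))
```

A lookup table is computed once per γ value, so sweeping γ from 1.1 to 1.6 over many images costs only one table per step.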

- Denoising filters: non-local means filters are suggested, but local filters can also be tested,

- Edge detection algorithms, for example, operators based on the first derivative (Prewitt, Sobel, Canny, Scharr, or Roberts) or on the second derivative (the Marr–Hildreth algorithm [10]),

- Use of a thermal imaging camera. The literature on the subject includes a successful attempt to use a thermal infrared camera; however, such cameras are extremely expensive, which limits the scale of deployment, and their achievable resolution is still unsatisfactory.
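As an example of the first-derivative operators listed above, here is a minimal Sobel gradient-magnitude sketch in NumPy. The 3 × 3 correlation is hand-rolled for clarity; in practice one would call a library routine such as OpenCV's `cv2.Sobel` or `cv2.Canny`. Thresholding the magnitude gives an edge map that could feed a circle detector.

```python
import numpy as np

# Sobel kernels: horizontal derivative and its transpose (vertical derivative).
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(img, kernel):
    """Correlate a 2-D image with a 3x3 kernel, keeping only fully covered pixels."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return out


def sobel_magnitude(img):
    """Gradient magnitude sqrt(gx^2 + gy^2) of a grayscale image."""
    img = np.asarray(img, dtype=float)
    gx = filter3x3(img, SOBEL_X)
    gy = filter3x3(img, SOBEL_Y)
    return np.hypot(gx, gy)
```

The output is two pixels smaller in each dimension because border pixels lack a full 3 × 3 neighborhood; library implementations pad the border instead.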

4. Discussion

5. Conclusions

- efficiency,

- possibility of implementation on mobile devices,

- effectiveness,

- the ability to increase effectiveness by continually supplementing the YOLO training set with examples of specific apple cultivars, although this takes time.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Wang, X.A.; Tang, J.; Whitty, M. Side-view apple flower mapping using edge-based fully convolutional networks for variable-rate chemical thinning. Comput. Electron. Agric. 2020, 178, 105673.

- Vasconez, J.P.; Delpiano, J.; Vougioukas, S.; Cheein, F.A. Comparison of convolutional neural networks in fruit detection and counting: A comprehensive evaluation. Comput. Electron. Agric. 2020, 173, 105348.

- Samiei, S.; Rasti, P.; Richard, P.; Galopin, G.; Rousseau, D. Toward joint acquisition-annotation of images with egocentric devices for a lower-cost machine learning application to apple detection. Sensors 2020, 20, 4173.

- Gao, F.; Fu, L.; Zhang, X.; Majeed, Y.; Li, R.; Karkee, M.; Zhang, Q. Multi-class fruit-on-plant detection for an apple in SNAP system using Faster R-CNN. Comput. Electron. Agric. 2020, 176, 105634.

- Fu, L.; Majeed, Y.; Zhang, X.; Karkee, M.; Zhang, Q. Faster R-CNN-based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosyst. Eng. 2020, 197, 245–256.

- Przyborski, M.; Szczechowski, B.; Szubiak, W.; Szulwic, J.; Widerski, T. Photogrammetric Development of the Threshold Water at the Dam on the Vistula River in Wloclawek from Unmanned Aerial Vehicles (UAV). In Proceedings of the 15th International Multidisciplinary Scientific GeoConference SGEM 2015, Albena, Bulgaria, 18–24 June 2015; Volume I, pp. 493–500.

- Zhang, X.; He, L.; Karkee, M.; Whiting, M.D.; Zhang, Q. Field evaluation of targeted shake-and-catch harvesting technologies for fresh market apple. Trans. ASABE 2020, 63.

- Jidong, L.; De-An, Z.; Wei, J.; Shihong, D. Recognition of apple fruit in natural environment. Optik 2016, 127, 1354–1632.

- Maldonado, W., Jr.; Barbosa, J.C. Automatic green fruit counting in orange trees using digital images. Comput. Electron. Agric. 2016, 127, 572–581.

- Liu, J.; Yuan, Y.; Zhou, Y.; Zhu, X.; Syed, T.N. Experiments and analysis of close-shot identification of on-branch citrus fruit with realsense. Sensors 2018, 18, 1510.

- Kowalczyk, C.; Nowak, M.; Źróbek, S. The concept of studying the impact of legal changes on the agricultural real estate market. Land Use Policy 2019, 86, 229–237.

- Renigier-Bilozor, M.; Wisniewski, R.; Bilozor, A. Rating attributes toolkit for the residential property market. Int. J. Strateg. Prop. Manag. 2017, 21, 307–317.

- Häni, N.; Roy, P.; Isler, V. Apple counting using convolutional neural networks. Int. Conf. Intell. Robot. Syst. 2018.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems, Proceedings of the Neural Information Processing Systems Conference, Montreal, QC, Canada, 7–12 December 2015; Neural Information Processing Systems Foundation, Inc.: Ljubljana, Slovenia, 2015; pp. 91–99.

- Laliberte, A.S.; Ripple, W.J. Automated wildlife counts from remotely sensed imagery. Wildl. Soc. Bull. 2003, 31, 362–371.

- Del Río, J.; Aguzzi, J.; Costa, C.; Menesatti, P.; Sbragaglia, V.; Nogueras, M.; Sarda, F.; Manuèl, A. A new colorimetrically-calibrated automated video-imaging protocol for day-night fish counting at the OBSEA coastal cabled observatory. Sensors 2013, 13, 14740–14753.

- Ryan, D.; Denman, S.; Fookes, C.; Sridharan, S. Crowd counting using multiple local features. In Proceedings of the Digital Image Computing: Techniques and Applications, Melbourne, Australia, 1–3 December 2009; pp. 81–88.

- Ma, Y.; Wu, X.; Yu, G.; Xu, Y.; Wang, Y. Pedestrian detection and tracking from low-resolution unmanned aerial vehicle thermal imagery. Sensors 2016, 16, 446.

- Ramos, P.J.; Prieto, F.A.; Montoya, E.C.; Oliveros, C.E. Automatic fruit count on coffee branches using computer vision. Comput. Electron. Agric. 2017, 137, 9–22.

- Liu, X.; Chen, S.W.; Aditya, S.; Sivakumar, N.; Dcunha, S.; Qu, C.; Taylor, C.J.; Das, J.; Kumar, V. Robust fruit counting: Combining deep learning, tracking, and structure from motion. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1045–1052.

- Patel, H.N.; Jain, R.K.; Joshi, M.V. Fruit detection using improved multiple features based algorithm. Int. J. Comput. Appl. 2011, 13, 1–5.

- Rahnemoonfar, M.; Sheppard, C. Deep count: Fruit counting based on deep simulated learning. Sensors 2017, 17, 905.

- Bagheri, N.; Mohamadi-Monavar, H.; Azizi, A.; Ghasemi, A. Detection of Fire Blight disease in pear trees by hyperspectral data. Eur. J. Remote Sens. 2018, 51.

- Martinelli, F.; Scalenghe, R.; Davino, S.; Panno, S.; Scuderi, G.; Ruisi, P.; Villa, P.; Stroppiana, D.; Boschetti, M.; Goulart, L.R.; et al. Advanced methods of plant disease detection. A review. Agron. Sustain. Dev. 2015, 35, 1–25.

- Yang, Y.; Zhang, Y.; Deng, Y.; Yi, X. Endozoochory by granivorous rodents in seed dispersal of green fruits. Can. J. Zool. 2018.

- Bhargava, A.; Bansal, A. Fruits and vegetables quality evaluation using computer vision: A Review. J. King Saud Univ. Comput. Inf. Sci. 2018.

- Al Ohali, Y. Computer vision based date fruit grading system: Design and implementation. J. King Saud Univ. Comput. Inf. Sci. 2011, 23, 29–36.

- Blasco, J.; Aleixos, N.; Molto, E. Computer vision detection of peel defects in citrus by means of a region oriented segmentation algorithm. J. Food Eng. 2007, 81, 535–543.

- Lak, M.B.; Minaei, S.; Amiriparian, J.; Beheshti, B. Apple fruits recognition under natural luminance using machine vision. Adv. J. Food Sci. Technol. 2010, 2, 325–327.

- Lingua, A.; Marenchino, D.; Nex, F. Performance analysis of the SIFT operator for automatic feature extraction and matching in photogrammetric applications. Sensors 2009, 9, 3745–3766.

- Luna, E.; San Miguel, J.C.; Ortego, D.; Martínez, J.M. Abandoned object detection in video-surveillance: Survey and comparison. Sensors 2018, 18, 4290.

- Zhou, R.; Zhong, D.; Han, J. Fingerprint identification using SIFT-based minutia descriptors and improved all descriptor-pair matching. Sensors 2013, 13, 3142–3156.

- Jiang, N.; Song, W.; Wang, H.; Guo, G.; Liu, Y. Differentiation between organic and non-organic apples using diffraction grating and image processing: A cost-effective approach. Sensors 2018, 18, 1667.

- Wang, Z.; Koirala, A.; Walsh, K.; Anderson, N.; Verma, B. In field fruit sizing using a smart phone application. Sensors 2018, 18, 3331.

- Akin, C.; Kirci, M.; Gunes, E.O.; Cakir, Y. Detection of the pomegranate fruits on tree using image processing. In Proceedings of the 2012 First International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Shanghai, China, 2–4 August 2012; IEEE: New York, NY, USA; pp. 1–4.

- Sahu, D.; Potdar, R.M. Defect identification and maturity detection of mango fruits using image analysis. Am. J. Artif. Intell. 2017, 1, 5–14.

- Blazek, M.; Janowski, A.; Kazmierczak, M.; Przyborski, M.; Szulwic, J. Web-cam as a means of information about emotional attempt of students in the process of distant learning. In Proceedings of the 7th International Conference of Education, Research and Innovation, Seville, Spain, 17–19 November 2014; pp. 3787–3796.

- Nagrodzka-Godycka, K.; Szulwic, J.; Ziolkowski, P. State-of-the-art framework for high-speed camera and photogrammetric use in geometry evaluation of prestressed concrete failure process. In Proceedings of the 16th International Multidisciplinary Scientific Geoconference (SGEM 2016), Albena, Bulgaria, 30 June–6 July 2016.

- Ziolkowski, P.; Niedostatkiewicz, M. Machine learning techniques in concrete mix design. Materials 2019, 12, 1256.

- Bellocchio, E.; Ciarfuglia, T.A.; Costante, G.; Valigi, P. Weakly supervised fruit counting for yield estimation using spatial consistency. IEEE Robot. Autom. Lett. 2019, 4, 2348–2355.

- Xu, Y.; Yu, G.; Wang, Y.; Wu, X.; Ma, Y. A hybrid vehicle detection method based on Viola-Jones and HOG+SVM from UAV images. Sensors 2016, 16, 1325.

- Dorj, U.O.; Lee, M.; Yun, S.S. An yield estimation in citrus orchards via fruit detection and counting using image processing. Comput. Electron. Agric. 2017, 140, 103–112.

- Al-Saddik, H.; Laybros, A.; Billiot, B.; Cointault, F. Using image texture and spectral reflectance analysis to detect yellowness and esca in grapevines at leaf-level. Remote Sens. 2018, 10, 618.

- Yamamoto, K.; Guo, W.; Yoshioka, Y.; Ninomiya, S. On plant detection of intact tomato fruits using image analysis and machine learning methods. Sensors 2014, 14, 12191–12206.

- Argialas, D.P.; Mavrantza, O.D. Comparison of Edge Detection and Hough Transform Techniques for the Extraction of Geologic features. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2004, Volume 34 (Part XXX). Available online: https://www.cartesia.org/geodoc/isprs2004/comm3/papers/376.pdf (accessed on 17 December 2020).

- Janowski, A. The circle object detection with the use of M split estimation. E3S Web Conf. 2018, 26, 00014.

- Janowski, A.; Bobkowska, K.; Szulwic, J. 3D modelling of cylindrical-shaped objects from lidar data: An assessment based on theoretical modelling and experimental data. Metrol. Meas. Syst. 2018, 25, 47–56.

- Aashish, K.; Vijayalakshmi, A. Comparison of Viola-Jones and Kanade-Lucas-Tomasi face detection algorithms. Orient. J. Comput. Sci. Technol. 2017.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Jarolmasjed, S.; Khot, L.R.; Sankaran, S. Hyperspectral imaging and spectrometry-derived spectral features for bitter pit detection in storage apples. Sensors 2018, 18, 1561.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640. Available online: https://pjreddie.com/media/files/papers/yolo.pdf (accessed on 17 December 2020).

- Wei, X.; Jia, K.; Lan, J.; Li, Y.; Zeng, Y.; Wang, C. Automatic method of fruit object extraction under complex agricultural background for vision system of fruit picking robot. Optik 2014, 125, 5684–5689.

- Linker, R.; Cohen, O.; Naor, A. Determination of the number of green apples in RGB images recorded in orchards. Comput. Electron. Agric. 2012, 81, 45–57.

- Kuznetsova, A.; Maleva, T.; Soloviev, V. Using YOLOv3 algorithm with pre- and post-processing for apple detection in fruit-harvesting robot. Agronomy 2020, 10, 1016.

- Payne, A.; Walsh, K.; Subedi, P.; Jarvis, D. Estimating mango crop yield using image analysis using fruit at ‘stone hardening’ stage and night time imaging. Comput. Electron. Agric. 2014, 100, 160–167.

- Zhao, Y.; Gong, L.; Huang, Y.; Liu, C. Robust tomato recognition for robotic harvesting using feature images fusion. Sensors 2016, 16, 173.

- Qiang, L.; Jianrong, C.; Bin, L.; Lie, D.; Yajing, Z. Identification of fruit and branch in natural scenes for citrus harvesting robot using machine vision and support vector machine. Int. J. Agric. Biol. Eng. 2014, 7, 115–121.

- Kelman, E.E.; Linker, R. Vision-based localisation of mature apples in tree images using convexity. Biosyst. Eng. 2014, 118, 174–185.

- Kurtulmus, F.; Lee, W.S.; Vardar, A. Immature peach detection in colour images acquired in natural illumination conditions using statistical classifiers and neural network. Precis. Agric. 2014, 15, 57–79.

- Häni, N.; Roy, P.; Isler, V. A comparative study of fruit detection and counting methods for yield mapping in apple orchards. J. Field Robot. 2020, 37, 263–282.

- Database of Images Used in This Article: Multicolour. Available online: https://mostwiedzy.pl/en/open-research-data/images-of-apples-for-the-use-of-the-viola-jones-method-data-set-no-1-multicolor,614102311611642-0 (accessed on 17 December 2020).

- Database of Images Used in This Article: Greyscale. Available online: https://mostwiedzy.pl/en/open-research-data/images-of-apples-for-the-use-of-the-viola-jones-method-data-set-no-2-grey-scale,10310105381038599-0 (accessed on 17 December 2020).

| | Method A | Method B | Method C |
|---|---|---|---|
| | Image filtration and Hough transform | Viola–Jones object detection | YOLO: Real-Time Object Detection |
| Color | The color assumption is important: initial image filtration is based on the ranges of individual color components. | Does not matter | Does not matter |
| Shape | Only shapes similar to circles are detected. | A well-prepared descriptor works properly on different shapes, but examples of them must be supplied in the training set. | With a well-trained network, or one retrained on new images, there is no need to supply cropped image fragments of the fruit to be detected; the algorithm handles this itself. |
| Size | Relaxing the radius range considerably lengthens the object search. | Does not matter | Does not matter |
| Processing time | Very long | Medium (not in real time) | Real time |
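The Method A column ties the Hough approach to color-range filtering and a search over circle radii. The following is a minimal from-scratch sketch of that combination, assuming a red-apple color range (the thresholds in the hypothetical `color_mask` are illustrative; the article does not state its ranges) and a pure NumPy accumulator rather than whatever software the authors used. It also makes the "Size" row concrete: the accumulator has one layer per candidate radius, so widening the radius range multiplies both memory and voting time.

```python
import numpy as np


def color_mask(rgb, r_min=150, g_max=100, b_max=100):
    """Hypothetical color-range filter: keep pixels that are strongly red."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (r >= r_min) & (g <= g_max) & (b <= b_max)


def mask_boundary(mask):
    """Mask pixels that have at least one 4-neighbour outside the mask."""
    p = np.pad(mask, 1, constant_values=False)
    interior = p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return mask & ~interior


def hough_circles(edges, radii, n_angles=90):
    """Circular Hough transform: accumulator of shape (len(radii), H, W)."""
    h, w = edges.shape
    ys, xs = np.nonzero(edges)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    acc = np.zeros((len(radii), h, w), dtype=np.int32)
    for k, r in enumerate(radii):
        # Every edge pixel votes for all candidate centers at distance r from it.
        cx = np.rint(xs[:, None] - r * np.cos(thetas)[None, :]).astype(int)
        cy = np.rint(ys[:, None] - r * np.sin(thetas)[None, :]).astype(int)
        inside = (cx >= 0) & (cx < w) & (cy >= 0) & (cy < h)
        np.add.at(acc[k], (cy[inside], cx[inside]), 1)
    return acc


def strongest_circle(acc, radii):
    """Return (x, y, r) of the accumulator peak."""
    k, y, x = np.unravel_index(np.argmax(acc), acc.shape)
    return int(x), int(y), radii[k]
```

Detecting several apples would mean keeping all sufficiently strong local maxima rather than only the global peak; OpenCV's `cv2.HoughCircles` is a production alternative.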

| Tree No. | Real Number of Apples | Hough Transform: Detected Apples | Hough Transform: Effectiveness [%] | Viola–Jones: Detected Apples | Viola–Jones: Effectiveness [%] | YOLO: Detected Apples | YOLO: Effectiveness [%] |
|---|---|---|---|---|---|---|---|
| 1 | 220 | 90 | 41 | 121 | 55 | 176 | 80 |
| 2 | 82 | 25 | 30 | 40 | 49 | 73 | 89 |
| 3 | 52 | 15 | 29 | 29 | 55 | 43 | 83 |
| 4 | 30 | 14 | 46 | 19 | 63 | 26 | 84 |
| Average | | | 37 | | 55 | | 84 |
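The "Effectiveness" columns appear to be the detected apples expressed as a percentage of the real number of apples, and the "Average" row the mean of the four per-tree percentages. The sketch below recomputes them from the tabulated counts under that assumption, together with a pooled ratio (total detected over total real) for comparison. The dictionary layout and function names are illustrative.

```python
# Counts copied from the table: tree -> (real number of apples, detected per method).
TREES = {
    1: (220, {"Hough": 90, "Viola-Jones": 121, "YOLO": 176}),
    2: (82, {"Hough": 25, "Viola-Jones": 40, "YOLO": 73}),
    3: (52, {"Hough": 15, "Viola-Jones": 29, "YOLO": 43}),
    4: (30, {"Hough": 14, "Viola-Jones": 19, "YOLO": 26}),
}


def effectiveness(real, detected):
    """Detected apples as a percentage of the real number of apples."""
    return 100.0 * detected / real


def summary(trees, method):
    """Per-tree effectiveness, their plain mean, and the pooled ratio."""
    per_tree = [effectiveness(real, det[method]) for real, det in trees.values()]
    mean_of_ratios = sum(per_tree) / len(per_tree)
    total_detected = sum(det[method] for _, det in trees.values())
    total_real = sum(real for real, _ in trees.values())
    return per_tree, mean_of_ratios, 100.0 * total_detected / total_real


for method in ("Hough", "Viola-Jones", "YOLO"):
    per_tree, mean_r, pooled = summary(TREES, method)
    print(method, [round(p) for p in per_tree], round(mean_r, 1), round(pooled, 1))
```

Recomputed this way, most entries agree with the table to within rounding, but a few do not (for tree 4, YOLO detects 26 of 30 apples, about 87%, whereas the table lists 84%), so the published percentages may have been derived slightly differently. The pooled ratio weights large trees more heavily; for YOLO it is 318/384, about 83%.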

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Janowski, A.; Kaźmierczak, R.; Kowalczyk, C.; Szulwic, J. Detecting Apples in the Wild: Potential for Harvest Quantity Estimation. Sustainability 2021, 13, 8054. https://doi.org/10.3390/su13148054
