Rational Herding in Reward-Based Crowdfunding: An MTurk Experiment

Abstract

1. Introduction

2. Background

3. Research method

3.1. The Theoretical Model

3.2. Dealing with Strategic Uncertainty

3.3. The Impact of New Information

3.4. Timing Summary

| Platform | Backers | Platform | Backers |
|---|---|---|---|
| A, B | Choices | Release of partial information | Choices |
| t = 1 | t | t = 2 | |
| Theoretical updating of | Backers’ updating | Theoretical updating of | |

3.5. Experimental Design and Procedures

3.6. Hypothesis

4. Results

4.1. Descriptive Overview

4.2. Analysis of the Aggregate Results

4.3. Rationalizing Backers’ Behavior

5. Discussion and Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


Situation 1 (Travel Books): testing the effect of information about early backers

| Treatment I (without information) | | Treatment II (with information) | |
|---|---|---|---|
| Book A | Book B | Book A | Book B |
| | | $525 raised, 35 backers | $60 raised, 4 backers |

Situation 2 (Cookery Books): testing the effect of peer and expert opinion

| Treatment I (without information) | | Treatment II (with information) | |
|---|---|---|---|
| Book C | Book D | Book C | Book D |
| | | $425 raised, 30 backers | $425 raised, 30 backers |
| | | 2 negative peer reviews | 2 positive peer reviews |
| | | 1 positive expert review | 1 negative expert review |

Panel A. Change in subject choice between Treatments 1 and 2 (with added information). H0: A/B = B/A.

| Country | Statistic | Men: A/B | Men: B/A | Women: A/B | Women: B/A | Men + Women: A/B | Men + Women: B/A |
|---|---|---|---|---|---|---|---|
| USA | Number | 6 | 89 | 7 | 94 | 13 | 183 |
| | % | 6.32 | 93.68 | 6.93 | 93.07 | 6.63 | 93.37 |
| | Proportion test | p < 0.0001 | | p < 0.0001 | | p < 0.0001 | |
| India | Number | 21 | 72 | 15 | 25 | 36 | 97 |
| | % | 22.58 | 77.42 | 37.50 | 62.50 | 27.07 | 72.93 |
| | Proportion test | p < 0.0001 | | p = 0.125 | | p < 0.0001 | |
| USA + India | Number | 27 | 161 | 22 | 119 | 49 | 280 |
| | % | 14.36 | 85.64 | 15.60 | 84.40 | 14.89 | 85.11 |
| | Proportion test | p < 0.0001 | | p < 0.0001 | | p < 0.0001 | |

Note: A/B denotes subjects who chose Book A in Treatment 1 and Book B in Treatment 2; B/A denotes the reverse switch.

Panel B. Subject choice in Treatment 2 (with added information). H0: A = B.

| Country | Statistic | Men: A | Men: B | Women: A | Women: B | Men + Women: A | Men + Women: B |
|---|---|---|---|---|---|---|---|
| USA | Number | 168 | 82 | 157 | 93 | 325 | 175 |
| | % | 67.20 | 32.80 | 62.80 | 37.20 | 65.00 | 35.00 |
| | Proportion test | p < 0.0001 | | p < 0.0001 | | p < 0.0001 | |
| India | Number | 151 | 99 | 55 | 42 | 206 | 141 |
| | % | 60.40 | 39.60 | 56.70 | 43.30 | 59.37 | 40.63 |
| | Proportion test | p = 0.001 | | p = 0.191 | | p < 0.0001 | |
| USA + India | Number | 319 | 181 | 212 | 135 | 531 | 316 |
| | % | 63.80 | 36.20 | 61.10 | 38.90 | 62.69 | 37.31 |
| | Proportion test | p < 0.0001 | | p < 0.0001 | | p < 0.0001 | |

Panel A. Change in subject choice between Treatments 1 and 2 (with added information). H0: C/D = D/C.

| Country | Statistic | Men: C/D | Men: D/C | Women: C/D | Women: D/C | Men + Women: C/D | Men + Women: D/C |
|---|---|---|---|---|---|---|---|
| USA | Number | 52 | 3 | 83 | 4 | 135 | 7 |
| | % | 94.55 | 5.45 | 95.40 | 4.60 | 95.07 | 4.93 |
| | Proportion test | p < 0.0001 | | p = 0.017 | | p < 0.0001 | |
| India | Number | 49 | 26 | 28 | 9 | 77 | 35 |
| | % | 65.33 | 34.67 | 75.68 | 24.32 | 68.75 | 31.25 |
| | Proportion test | p = 0.011 | | p = 0.005 | | p < 0.0001 | |
| USA + India | Number | 101 | 29 | 111 | 13 | 212 | 42 |
| | % | 77.69 | 22.31 | 89.52 | 10.48 | 83.46 | 16.54 |
| | Proportion test | p < 0.0001 | | p < 0.0001 | | p < 0.0001 | |

Note: C/D denotes subjects who chose Book C in Treatment 1 and Book D in Treatment 2; D/C denotes the reverse switch.

Panel B. Subject choice in Treatment 2 (with added information). H0: C = D.

| Country | Statistic | Men: C | Men: D | Women: C | Women: D | Men + Women: C | Men + Women: D |
|---|---|---|---|---|---|---|---|
| USA | Number | 86 | 164 | 83 | 167 | 169 | 331 |
| | % | 34.40 | 65.60 | 33.20 | 66.80 | 33.80 | 66.20 |
| | Proportion test | p < 0.0001 | | p < 0.0001 | | p < 0.0001 | |
| India | Number | 121 | 129 | 43 | 54 | 164 | 183 |
| | % | 48.40 | 51.60 | 44.33 | 55.67 | 47.26 | 52.74 |
| | Proportion test | p = 0.613 | | p = 0.267 | | p = 0.315 | |
| USA + India | Number | 207 | 293 | 126 | 221 | 333 | 514 |
| | % | 41.40 | 58.60 | 36.31 | 63.69 | 39.32 | 60.68 |
| | Proportion test | p < 0.0001 | | p < 0.0001 | | p < 0.0001 | |
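The proportion tests reported in the panels above compare two counts against an even split. The text available here does not say which test variant was used, so the following is only a minimal sketch that assumes a two-sided one-sample z-test of H0: share = 0.5 under a normal approximation. The function name `proportion_test` is hypothetical, and p-values from this sketch may differ slightly from the published ones if a different variant was applied.

```python
import math


def proportion_test(count_a: int, count_b: int) -> tuple[float, float]:
    """Two-sided z-test of H0: both outcomes are equally likely (share = 0.5).

    Assumption: normal approximation; the source does not name its test variant.
    """
    n = count_a + count_b
    # Under H0 the standard error of the observed share is sqrt(0.5 * 0.5 / n).
    z = (count_a / n - 0.5) / math.sqrt(0.25 / n)
    # Two-sided p-value from the standard normal: erfc(|z| / sqrt(2)) = 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Counts copied from the tables above.
z_switch, p_switch = proportion_test(49, 280)   # Situation 1, Panel A, USA + India: A/B vs. B/A
z_india, p_india = proportion_test(121, 129)    # Situation 2, Panel B, India, men: C vs. D

print(f"Switchers (A/B vs. B/A): z = {z_switch:.2f}, p = {p_switch:.3g}")
print(f"India men (C vs. D):     z = {z_india:.2f}, p = {p_india:.3f}")
```

Under this assumption, the switching asymmetry in Situation 1 is rejected at any conventional level, while the near-even split among Indian men in Situation 2 is not.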

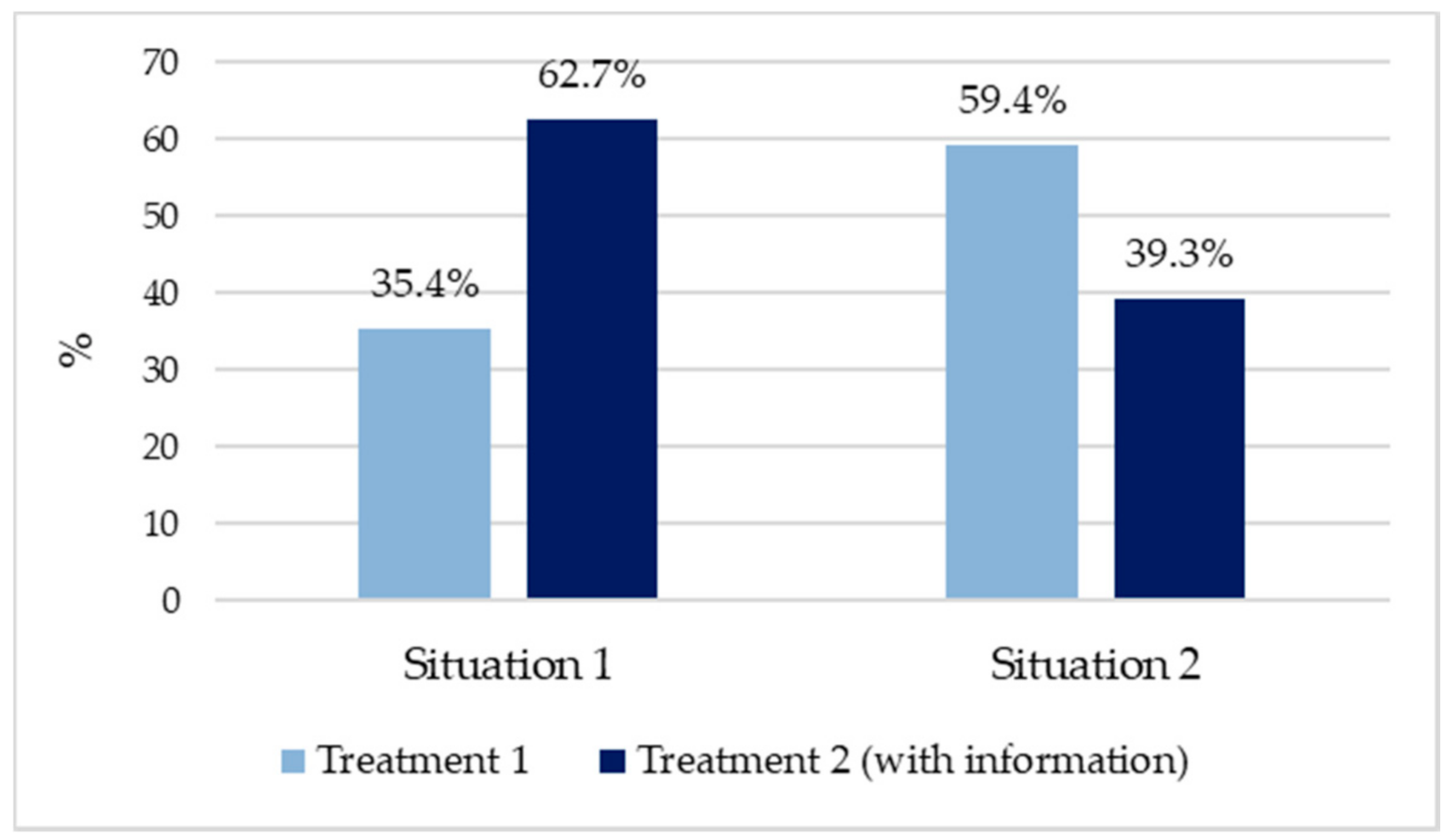

Situation 1: choice in Treatment 1 (rows) by choice in Treatment 2, with added information (columns)

| Treatment 1 book | Treatment 2: A | Treatment 2: B | Total |
|---|---|---|---|
| A | 251 (29.6%) | 49 (5.8%) | 300 (35.4%) |
| B | 280 (33.06%) | 267 (31.52%) | 547 (64.58%) |
| Total | 531 (62.7%) | 316 (37.3%) | 847 (100%) |

Situation 2: choice in Treatment 1 (rows) by choice in Treatment 2, with added information (columns)

| Treatment 1 book | Treatment 2: C | Treatment 2: D | Total |
|---|---|---|---|
| C | 291 (34.35%) | 212 (25.03%) | 503 (59.38%) |
| D | 42 (4.96%) | 302 (35.66%) | 344 (40.62%) |
| Total | 333 (39.32%) | 514 (60.68%) | 847 (100%) |
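The cross-tabulations above link the switching counts to the Treatment 2 totals: the off-diagonal cells are the subjects who changed their choice, and the column sums are the Treatment 2 shares. The sketch below recomputes these quantities from the cell counts as a consistency check. The dictionary layout and the helper name `summarize` are illustrative choices, not something taken from the paper.

```python
# Cell counts copied from the cross-tabulations above.
# Keys are (Treatment 1 choice, Treatment 2 choice).
crosstabs = {
    "Situation 1": {("A", "A"): 251, ("A", "B"): 49, ("B", "A"): 280, ("B", "B"): 267},
    "Situation 2": {("C", "C"): 291, ("C", "D"): 212, ("D", "C"): 42, ("D", "D"): 302},
}


def summarize(cells):
    """Return (total subjects, number who switched, Treatment 2 share per book)."""
    total = sum(cells.values())
    # Off-diagonal cells: the Treatment 2 choice differs from the Treatment 1 choice.
    switched = sum(n for (first, second), n in cells.items() if first != second)
    books = sorted({first for first, _ in cells})
    # Column sums divided by the total give each book's share in Treatment 2.
    share_t2 = {
        book: sum(n for (_, second), n in cells.items() if second == book) / total
        for book in books
    }
    return total, switched, share_t2


summary = {name: summarize(cells) for name, cells in crosstabs.items()}
for name, (total, switched, share_t2) in summary.items():
    shares = ", ".join(f"{book}: {share:.2%}" for book, share in share_t2.items())
    print(f"{name}: n = {total}, switched = {switched}, Treatment 2 shares -> {shares}")
```

The recomputed switch counts (49 + 280 and 212 + 42) equal the Men + Women totals in the Panel A rows for USA + India, and the Treatment 2 shares equal the corresponding Panel B percentages.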

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Comeig, I.; Mesa-Vázquez, E.; Sendra-Pons, P.; Urbano, A. Rational Herding in Reward-Based Crowdfunding: An MTurk Experiment. Sustainability 2020, 12, 9827. https://doi.org/10.3390/su12239827
