1. Introduction

The emergence in the 21st century of the pedagogical concept of competencies represented a new challenge and impetus for educational evaluation. In this sense, there is a need to educate in competences since, among other aspects, doing so favors interdisciplinarity with other subjects, as is the case of language teaching. However, for this approach to be effective, a high level of procedural content and the application of knowledge to specific situations are necessary, for which there should be a greater presence of formative evaluation in the educational process [1]. In Spain, these competencies have become integral elements of the curriculum both in the Organic Law on Education (OLE) and in the Organic Law on the Improvement and Quality of Education (OLIQUE). These legislative changes show the need to develop evaluation systems that gather information not only on the three types of content (concepts, procedures, and attitudes) but also on the adequate mobilization of these contents, through authentic situations that require students to solve real problems in which they can apply their knowledge creatively [2]. It also requires the use of a wide range of assessment test formats that help to verify complex thinking or problem-solving skills during and at the end of the process [3]. These assessment tests are already being designed in other European countries and have proven to be effective in detecting what historical and geographic thinking skills students have, and how far they have progressed in acquiring competencies in subjects such as social sciences in secondary education.

Furthermore, the implementation, development, and evaluation of competencies must be carried out transversally in all areas. In the opinion of [4], geography and history are disciplines that make possible the development and learning of these basic educational competences, not only the social and civic ones, redefined in the current OLIQUE as “Social and civic competences”. It is necessary to try to overcome an interpretation of competencies that connects only to competitiveness. Being competent means that students interact and are able to argue and make proposals for improvement [5,6]. These operations require knowledge about how society is and how it works, how human relations have been generated and modified over time, what consequences the actions carried out by individuals and groups have had and still have [7]. Understanding the meanings of human actions in different contexts is part of the essence of the social sciences. It is not necessarily the person who accumulates the greatest amount of scholarly information on a subject, whether geographical, historical, or otherwise, who is the most competent, but the person who knows how to use it correctly in the right context. Such skills are part of the methods with which geographers and historians work. Incorporating the geographic and historical method into education seems to be a good strategy for training more competent people [8].

At present, numerous studies show that a teaching method based on the memorization of content is not the most appropriate for achieving significant learning in students; the teaching of history is therefore understood as a broad and complex challenge that must revise disciplinary knowledge and travel along the path of historical thought [9,10].

Even recent opinion articles have pointed out the urgency of alleviating the pressing lack of knowledge of history among young people [11]. There is, therefore, a real demand that specialists in social science education, among others, have to meet in order to alleviate the levels of ignorance of some subjects, which are fundamental for understanding the society in which we live. In this context, teachers who teach the area of social sciences in secondary education must be aware that social knowledge is essential for the formation of competent persons capable of functioning in today’s world. However, in order to be effective and to meet the social demands that the curricula make explicit, certain routines must be overcome and teaching practice reformulated. It is not a matter of dispensing with disciplinary knowledge but rather of using it in a manner that is more linked to current challenges and to the experiences of students. In this sense, it is necessary that we understand the close link between the social, geographic, and historical formation of students and the development of democratic, critical, and responsible citizenship. This does not mean that social sciences should necessarily be at the service of civic and citizen education, but rather that they should contribute to it from the values that are derived from the teaching of geography and history and that foster the training of individuals to recognize and orient themselves in today’s world. To achieve this, it is necessary that geographic and historical knowledge be connected to the acquisition of complex cognitive abilities and social, civic, and educational skills [12,13].

The inclusion of basic competencies in the education system implies having to revise the current concept of evaluation, in which the acquisition of conceptual knowledge is still mainly valued in the field of social sciences, and in which the use of the exam has almost uncontested supremacy as a measurement instrument [14,15]. Research on social science teaching shows that assessment procedures and criteria are still linked to culturalist purposes, to a supposed “objectivity”, the almost exclusive use of the textbook as a teaching material, and the predominance of contents that are excessively conceptual and out of context with social reality [16,17]. The evaluation process of the disciplinary content is carried out as if it were static, imperishable, and immutable knowledge. The presentation of the evaluation of facts and data out of context is a general trend, and the most worrying thing is that the teaching staff gives more importance to quantity than to quality [18]. Directing education towards the achievement of basic educational skills requires a change in the conception of teaching and classroom practice that also involves a reflection on the nature of student assessment and the methods and instruments used for it. The idea behind this approach is that learning cannot be conceived exclusively or mainly as the acquisition of disciplinary knowledge (as it has traditionally been done in most curricular areas, particularly in the social sciences) but that teachers must take into account the ability to apply this knowledge in new situations that may arise in everyday life as adults [19].

These circumstances are partly a consequence of the fact that evaluation is still confused with examination, perhaps due to the influence of international assessments (PISA, PIRLS, ICCS, etc.) which, supposedly, seek excellence and measure the levels of quality of educational institutions and systems, classifying students into three main categories: excellent, ordinary, and failed. In this sense, the introduction of basic competences can be an opportunity to democratize assessment models in teaching. The final objective would be to carry out what some authors have called authentic assessment [20,21]. With this concept, we want to link student assessment with the real needs of future citizens and professional performance. The aim is to find out what students know or are able to do, using new strategies and assessment procedures, including complex and contextualized tasks that require assessment over time and not only at the end of the process [22,23]. To this end, it is important to pay attention to all elements of the curriculum, such as methodology and resources, encouraging the joint participation of all educational agents in the evaluation carried out in the school, and exploring new evaluation instruments that go beyond the final exam [24]. In this sense, combining quantitative and qualitative methods in student assessment is indispensable for the correct development of complex cognitive skills and competences in the area of Social Sciences. For this reason, there is an urgent need to design assessment tests that measure students’ cognitive skills in social sciences, with the aim of evaluating not only students’ knowledge in disciplines such as geography and history but also the skills and abilities related to them. The results of these tests will allow the development of a scale of progression in the acquisition of competences that currently does not exist at a national level, but which is being developed and applied in other Northern European countries with better student academic performance, such as the United Kingdom and Finland [25].

The aim of this research is to find out the students’ perception of the level of development of competences according to what they have learned in the area of Social Sciences, Geography, and History by applying a scale called EPECOCISO. Two main objectives were proposed to achieve this end:

RQ1: To analyze the validity of the construct by means of exploratory factorial analysis, the Kaiser–Meyer–Olkin test (KMO test), and the Bartlett sphericity test.

RQ2: To determine the relationship between competences, acquired knowledge, evaluation instruments, and the transfer of learning in Social Sciences, Geography, and History.

2. Materials and Methods

2.1. Design

The research design was based on a descriptive study that follows the sequence of a quantitative methodology, whereby a questionnaire (see Appendix A) called Evaluation of Perception of Social Science Skills (EPECOCISO) was developed. Once the scale was applied, an exploratory factorial analysis of the data was carried out, from which it was possible to extract seven factors.

When extracting the components, the maximum likelihood method was used on the covariance matrix [26], resulting in the seven factors present in Table 1. In addition, the standardized values of each variable were calculated and, subsequently, a factorial analysis was carried out on the standardized variables so as to operate on a common scale. Furthermore, the Varimax rotation was applied to the factorial solution, thus minimizing the number of variables with high saturations in each factor and, at the same time, simplifying the interpretation of the results. The number of factors was not fixed in advance (as a maximum); rather, those factors whose eigenvalue is greater than 1 were retained [27].
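
The retention rule described above (eigenvalue greater than 1) can be sketched in a few lines. This is only an illustrative sketch: the function name `kaiser_retain` and the eigenvalues in the example are hypothetical and are not taken from this study.

```python
def kaiser_retain(eigenvalues):
    """Return (factor number, eigenvalue, % of variance) for eigenvalues > 1.

    Assumes the eigenvalues come from standardized variables, so that
    their sum equals the total variance to be explained.
    """
    total = sum(eigenvalues)
    retained = []
    for number, value in enumerate(eigenvalues, start=1):
        if value > 1.0:  # retention rule: keep only eigenvalues above 1
            retained.append((number, value, 100.0 * value / total))
    return retained


# Hypothetical eigenvalues for six standardized variables (illustration only).
example_eigenvalues = [2.7, 1.5, 0.8, 0.5, 0.3, 0.2]
for number, value, percent in kaiser_retain(example_eigenvalues):
    print(f"factor {number}: eigenvalue = {value:.2f}, variance = {percent:.1f}%")
```

In this hypothetical example only the first two factors would be retained, since the remaining eigenvalues fall below 1.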

2.2. Sample

The research was developed in the Region of Murcia (Spain), where the sample size was calculated according to [28] from a total population of 14,714 students of the fourth year of secondary education. For a significance level of 0.05 and an error of no more than 0.3, the calculation requires a survey of no fewer than 996 subjects, a figure widely exceeded in this research. Thus, according to the analysis of the sample size carried out with the help of the Research Support Service of the University of Murcia, the sample of the population studied is representative, with 1573 students being surveyed, which is many more than necessary for the conclusions to be extrapolated with adequate scientific rigor. An intentional type of sampling was used, with a standard error of 0.7% for the total universe (according to the results of the STATS™ analysis).
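
As an illustration of how a minimum sample size of this kind can be obtained, the following minimal sketch applies the usual finite-population formula for estimating a proportion. It is not necessarily the procedure of [28]: the function name, the rounded value z = 1.96, the proportion p = 0.5 and, in particular, a margin of error of 0.03 (the text above states 0.3) are assumptions made here for the example.

```python
import math


def required_sample_size(population, margin, z=1.96, p=0.5):
    """Minimum sample size for a proportion in a finite population.

    z      : normal quantile for the confidence level (1.96 for 95%, assumed)
    p      : expected proportion (0.5 is the most conservative choice)
    margin : maximum admissible error, as a proportion (assumed value)
    """
    n_infinite = (z ** 2) * p * (1 - p) / margin ** 2      # infinite population
    n_finite = n_infinite / (1 + (n_infinite - 1) / population)  # correction
    return math.ceil(n_finite)


# Population of fourth-year students reported in the text; margin is assumed.
print(required_sample_size(14714, 0.03))
```

Under these assumptions the formula yields 996 for a population of 14,714, which coincides with the minimum reported above, although that coincidence does not confirm that this was the calculation actually used.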

It should be noted that before concluding the final selection of the sample, and from the desire to detect possible hidden errors in the questionnaire used (in terms of understanding for students, internal organization aspects, etc.), it was decided to make a prior application for a group of students (n = 35) belonging also to a compulsory secondary school in the Region of Murcia. This phase was carried out under the same conditions as those reproduced later, during the mass application of the instrument. It was precisely the incorporation of this initial application phase that allowed the drafting of some items of the scale to be refined.

Another aspect to be assessed is that, in terms of gender, this is a practically equivalent group (51% ♂; 49% ♀), whose ages are between 15 and 18 years. Moreover, the total number of subjects selected from the sample (

n = 1422) was obtained after eliminating all those data collection instruments that contained errors (or missing data), reaching, finally, a very high confidence level (over 95%, according to the analysis with STATS) and with a maximum error of 0.7% [

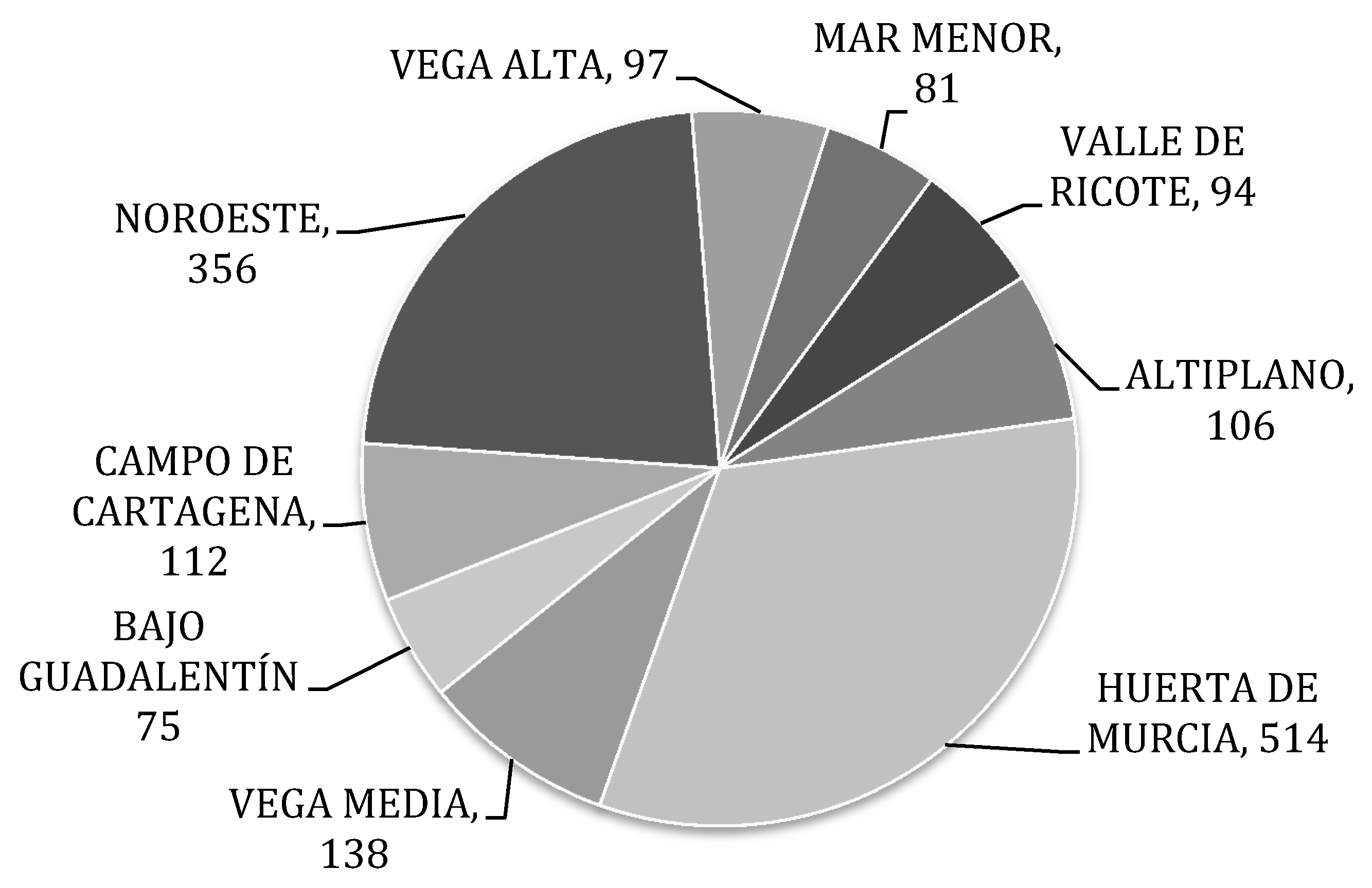

29]. Overall, it should be noted that the final sample is made up of a total of 18 secondary schools. More specifically, as can be seen in

Figure 1, these are schools belonging to the municipalities of the Northwest, Huerta de Murcia, Vega Alta, Vega Media, Bajo Guadalentín, Mar Menor, Valle del Ricote, Altiplano, and Campo de Cartagena, a variety which undoubtedly enriches the validity of the data obtained.

2.3. Instrument

It is an instrument that shows the different significant relationships between the variables that influence the perception of the level of development of competences according to what has been learned in Social Sciences, Geography, and History, the degree of difficulty that students have in assimilating them, the instruments used to evaluate the degree of achievement of competences and the transfer of what has been learned in social sciences to real situations. Regarding the characteristics it presents, it is important to highlight that it is balanced (because the number of students is similar), reliable (because of the stability and consistency of what has been measured), and valid (because it measures what it is intended to measure). To do so, students had to answer on a Likert-type scale (of six options) choosing the one they most identified with (1 = totally disagree; 2 = disagree; 3 = neither agree nor disagree; 4 = quite agree; 5 = totally agree, and NS = don’t know).

With regard to the design followed to develop the instrument, it is important to highlight that it was developed in four major stages: the construction and definition of the items on the scale, the analysis process by expert judges for the validation of the information collection instrument, the application of the scale and finally the data analysis process. It should be noted that the items of the EPECOCISO scale were developed in two ways: firstly, an exhaustive documentary analysis was carried out in the area of Didactics of the Social Sciences and, secondly, a consultation process was carried out by experts of recognized experience from secondary or higher education centers. At all times, the aim was to evaluate the relevance of the questions designed, the degree of success in the dimensions, as well as the semantic suitability and understanding in the writing of each item. As a whole, the group of judges was formed by nine professional experts in areas related to the content or nature of the research itself (evaluation, social perception, and research methodology), in which to preserve an item, the criterion of agreement equal to or greater than 75% of the judges was used. As a result of this process, of the 52 initial items, 44 were retained (after discarding 8), precisely because they were those which best managed to describe and limit the students’ perception of competences. Likewise, once the grammatical suggestions and proposals of the experts had been taken into account, these indicators were screened by virtue of a more refined process. Finally, once the first (previous) application of the questionnaire was made to a group of selected students (n = 35), it was necessary to discard four indicators, leaving 40 in the end.
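
The retention criterion applied to the judges' ratings (agreement of at least 75% of the nine experts) can be illustrated with a minimal sketch. The function name, the item identifiers, and the votes below are hypothetical; the actual ratings are not reported in this paper.

```python
def retained_items(votes_by_item, threshold=0.75):
    """Keep the items whose proportion of favorable judges meets the threshold.

    votes_by_item maps an item identifier to a list of booleans,
    one per judge (True = the judge considers the item adequate).
    """
    keep = []
    for item, votes in votes_by_item.items():
        agreement = sum(votes) / len(votes)  # proportion of favorable judges
        if agreement >= threshold:
            keep.append(item)
    return keep


# Hypothetical votes from nine judges (illustration only).
example_votes = {
    "item_01": [True] * 7 + [False] * 2,  # 7 of 9 judges agree: retained
    "item_02": [True] * 6 + [False] * 3,  # 6 of 9 judges agree: discarded
}
print(retained_items(example_votes))
```

With nine judges, the 75% criterion means that an item needs at least seven favorable ratings to be preserved.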

The scale for the Perception of evaluation in Social Sciences, Geography and History, and its relation to the development of competences (EPECOCISO) was definitively established with the following structure: (1) objective of the questionnaire and instructions, (2) sociodemographic data, (3) student perception of the competences according to what has been learned in the area of Social Sciences, Geography, and History, made up of eight items, (4) student perception of the degree of difficulty that the competences have in assimilating them, made up of another eight questions, (5) students’ perception of the instruments used to assess the degree of achievement of the competences, which also consists of eight questions, (6) students’ perception of the transfer of what they have learned in Social Sciences to a real situation, consisting of 16 questions divided into two blocks, one to give an opinion on general questions and the other to answer questions applied to a practical case related to a possible job offer; giving rise to the 40 final items of the questionnaire.

The Kuder–Richardson method was used to calculate the level of reliability of the questionnaire, applying Cronbach’s α coefficient for each of the seven factors analyzed. As a result of this process, and by virtue of the recommendations contained in the specialized literature [30], those items whose α coefficients did not reach the reference value for satisfactory reliability were discarded, resulting in an original tool in printed format whose data would be captured by means of a digital reader.
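
For readers unfamiliar with the coefficient, a minimal sketch of how Cronbach’s α is computed from item responses is given next. It uses only the Python standard library; the function name and the example responses are hypothetical, and it is assumed that respondents with missing or "NS" answers have already been removed.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # one tuple of scores per item
    item_variances = sum(variance(scores) for scores in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variances / total_variance)


# Hypothetical answers of five students to four Likert items (1 to 5).
example_responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 5, 5, 4],
    [3, 3, 2, 3],
    [1, 2, 1, 2],
]
print(round(cronbach_alpha(example_responses), 3))
```

The coefficient approaches 1 as the items vary together, which is why values closer to 1 are read as greater internal consistency.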

As can be imagined for this type of study, before starting the data collection, the appropriate permission was requested from the responsible academic authorities. Once it was granted, it was applied to the different groups of fourth year students.

On the other hand, the criteria of applicability and efficiency were also considered, following the recommendations of [31], so that the instrument designed would be easy to apply and would consume little time for the participants, taking into account the large size of the sample. In this sense, the scale was completed with the research team going directly to each of the classrooms of the participating centers, where the questionnaires were distributed among the students for their response in real time (with a duration of between 15 and 20 min). Given that the coefficient α reached a value of 0.914, the instrument can be considered highly reliable.

3. Results

The extraction of the answers collected in the questionnaires was organised by setting up a database which, once codified, became a template for the SPSS version 24 program, with which the information was analyzed. Firstly, an exploratory factorial analysis was carried out in which, taking into account the large study sample (as well as the diversity of variables), a reduction of the data could be applied by grouping them into variables (as shown in Table 1).

On this basis, it is possible to analyze the way in which the different variables correlate with each other, allowing the study of the (statistical) significance of these relationships and ensuring independence between the different groups established. As we know, factorial analysis requires that there be a correlation between different items; in this case, the suitability of the data was checked by means of the Kaiser–Meyer–Olkin measure of sampling adequacy (KMO test) and Bartlett’s sphericity test, the result of both being fully satisfactory. According to [32], if the KMO value were below 0.6, it would be considered inappropriate (and not at all relevant) to carry out a factorial analysis; since in this research the KMO value is 0.926, it should be regarded as fully acceptable, and carrying out an analysis of this type is entirely advisable. Secondly, Bartlett’s sphericity test allows us to test the hypothesis that the correlations between the items are not null [33], so that a significant result will be satisfactory (p < 0.05). Since the resulting value in this research was p = 0.000, it can be affirmed that in this study it is completely pertinent to carry out this type of analysis (the null hypothesis of the sphericity of the data can be rejected).
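
The two checks described above can be sketched as follows. This is a minimal illustration with NumPy, not the SPSS procedure used in the study: the function names and the simulated data are hypothetical, and only the Bartlett statistic and its degrees of freedom are computed (the p-value would be obtained by comparing the statistic with a chi-square distribution).

```python
import numpy as np


def bartlett_sphericity(data):
    """Bartlett's statistic and degrees of freedom for an (n x p) data matrix."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, log_det = np.linalg.slogdet(corr)       # log of the determinant of R
    chi_square = -(n - 1 - (2 * p + 5) / 6.0) * log_det
    dof = p * (p - 1) // 2
    return chi_square, dof


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inverse = np.linalg.inv(corr)
    scale = np.sqrt(np.outer(np.diag(inverse), np.diag(inverse)))
    partial = -inverse / scale                 # partial correlations
    np.fill_diagonal(corr, 0.0)                # keep off-diagonal terms only
    np.fill_diagonal(partial, 0.0)
    r2 = np.sum(corr ** 2)
    q2 = np.sum(partial ** 2)
    return r2 / (r2 + q2)


# Simulated data: four items sharing one common factor (illustration only).
rng = np.random.default_rng(0)
common = rng.normal(size=(500, 1))
simulated = common + 0.5 * rng.normal(size=(500, 4))
print(bartlett_sphericity(simulated), kmo(simulated))
```

A KMO value close to 1 indicates that partial correlations are small relative to the observed correlations, which is the situation in which a factorial analysis is considered appropriate.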

Finally, in order to facilitate the interpretation of the factorial solution, a rotation was applied through the Varimax method which, as we know, involves a type of orthogonal rotation (which considers the factors independent of each other), while minimizing the number of variables that offer high saturations in each factor. In this way, the interpretation of the factors is greatly facilitated, since the linked items make up a smaller number of factors (thus simplifying the denomination of each factor). For this research, the variance explained is 83.113% of the total variance, as we can see in Table 2, where all the factors whose eigenvalues are greater than 1 are included.

In this sense, the first factor (1), which was called “Perception of the application of the acquisition of key competences in life in society”, is made up of seven items which indicate the relationship between the key competences and their application in the student’s real life (it explains 58.1% of the total variance). The second factor (2), called “Perception of competences in terms of learning”, is made up of another seven items which express the relationship between the competences acquired and the knowledge learned (explaining 24.9% of the total variance). The third factor (3) is made up of five items that explain 4.9% of the total variance, which can be interpreted as the degree of difficulty involved in the assimilation of the competences. The fourth factor (4) is made up of two items which explain 3.3% of the total variance and is interpreted as the perception of the methodology used by the teaching staff in order to acquire the competences. The fifth factor (5) is made up of three items that explain 3.2% of the total variance and defines the perception of the importance of mathematical competence in the social sciences. A sixth factor (6), made up of two items, would explain 2.9% of the total variance and represents the students’ perception of the transfer of what they have learned in a real situation. The seventh and last factor is interpreted as the perception of the assessment instruments used to evaluate the degree of achievement of competences and is made up of two items which explain 2.3% of the total variance. Once the analysis of the matrix of the residuals of the correlations reproduced for each of the seven factors studied is carried out, it should be noted that only 5% of the residuals have reached absolute values greater than 0.05, so it can be said that the adjustment of the model is good, as it can explain 83.1% of the total variance.

Along the same lines, the internal consistency coefficient (Cronbach’s alpha) was calculated, the purpose of which is to estimate the reliability and internal consistency between items; this index has shown a value of 0.914 for the scale, thus showing high quality (let us remember that this index reaches a maximum value of 1, so that the closer it is to this value, the greater the reliability of the instrument). In this sense, and according to more or less tacit considerations among authors [14,15,16,17,18,19,20,21,22,23,24], it is considered that alpha values higher than 0.7 or 0.8 (depending on the source) are sufficient to guarantee the reliability of the scale. From this point of view, the EPECOCISO scale has a factorial structure that is fully adequate when it comes to evaluating how students in the fourth year of Compulsory Secondary Education perceive the competences linked to the area of Social Sciences. In order to check the possible relationships between the different factors, the Pearson correlation was computed.

In Table 3, we have highlighted all those correlations that, being statistically significant, express positive or negative relationships between the factors. In this sense, positive correlations were detected between factor 1 (F1) and factors F2, F4, F5, F6, and F7 (which means that the more F1 increases, the more factors F2, F4, F5, F6, and F7 also increase). Along with these, positive correlations were also found between different factors, of which the following are worth highlighting: the relationship found between factor 2 (F2) with factors F1, F4, F5, F6, and F7; the relationship between factor F4 with F1, F2, F5, F6, and F7; between F5 with F1, F2, F4, F6, and F7; F6 with F1, F2, F4, F5 and F7; and finally, F7 with F1, F2, F4, F5, and F6.
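
To make the reading of these correlations concrete, the following minimal sketch computes Pearson’s coefficient between two sets of factor scores. The function name and the scores are hypothetical and serve only as an illustration; they are not the values of Table 3.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical scores of six students on two factors (illustration only).
f1_scores = [3.2, 4.1, 2.5, 4.8, 3.9, 2.0]
f2_scores = [3.0, 4.4, 2.9, 4.5, 3.5, 2.2]
print(round(pearson_r(f1_scores, f2_scores), 3))
```

A coefficient close to +1 corresponds to the situation described above, in which higher scores on one factor accompany higher scores on the other.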

4. Discussion

As we observed in the previous section, and in response to the first research question, the instrument presents a high degree of reliability and validity. As for the second research question, taking into account the variety and richness of the data presented for analysis, it was considered appropriate to apply—as descriptive statistics—the cross tables. This is a test that allows the analysis of the direction in which the responses given by the key informants to the different data contained for each of the factors specified above are polarized.

In this line, the existence of a positive relationship between factors F3 and F4 is highlighted, insofar as an adequate methodology for the teaching of competences reduces the difficulty in the assimilation of such competences, making it easier for the students to perceive this process than when working from a traditional methodology. On the other hand, the factor F3 was correlated with F7, showing that the assimilation of competences is easy for them as long as the assessment instruments proposed in the questionnaire are used (summaries, maps, etc.). Similarly, we have correlated factor F4 with factor F7, showing that students who are satisfied with the assessment instruments are also satisfied with the methodology used by the teaching staff, which points to a close relationship between the examination and the methodology. After applying a correlation between factor F2 and factor F6, the existence of concordant values between the two factors is highlighted, insofar as, for the students, what they have learned in the subject allows them to acquire a series of competences, while at the same time working by competences facilitates the transfer of learning to situations of their daily lives. Something similar happens when correlating F2 with F4: there is a degree of positive agreement in both factors, which indicates that an adequate methodology (such as that suggested through the designed questionnaire) helps students to acquire the competences by virtue of the contents worked on in the classroom. To conclude, it was also considered pertinent to correlate factors F4 and F6, which yielded a positive relationship in terms of the degree of agreement: the participants who are in favor of the methodology suggested in the questionnaire (use of images, commentary on texts, documentaries) also consider that the transfer of what has been learned (by that means) to a real, significant situation would be greater; that is to say, an adequate methodology would help students to better transfer the knowledge and competences acquired to everyday situations.

In addition to the analysis that we can extract from these cross-referenced tables (referenced ut supra), it is also convenient to carry out other types of considerations that confer a greater scope to this study (given the power of its instrument). In this sense, and once we have followed the development of the research process carried out, it becomes clear that the first of the factors configured to explain the proposed scale (F1) incorporates a whole series of indicators that express the correspondence between the key competences and their transfer to the student’s real life: the greater their perception of these skills, the greater their assessment of their preparation (as students) to undertake their own actions related to these skills (knowing how to calculate costs, design itineraries for third parties, create presentations, identify heritage elements in leisure trips, etc.). Without a doubt, the items which make up this factor explain the highest accumulated percentage of the total variance and show to what extent the perception of competences is linked to their most applied facet. Therefore, it is a factor that would confirm the importance, already detected in the specialized literature [34], of the contribution that the area of Social Sciences makes to the development of key competences in secondary education. The second of the factors extracted (F2) groups together a series of indicators that refer to the perception that the students have of the competences according to what they have learned during the course. A whole series of items are included here that group together the perception that the students have formed of the key competences and the contribution that what has been learned in the area of Social Sciences has made to their development, highlighting the marked transversal nature of this area. In this sense, it expresses the value that students attach to what they have learned in Social Sciences, Geography, and History when it comes to expressing their emotions (in a way that can be understood by others), forging a social commitment with the environment that surrounds them, developing technological skills, taking the initiative to participate socially, better understanding artistic productions, or even developing the much-needed critical sense implicit in the construction of learning itself.

According to this study, and in line with other authors, the development of this type of competence represents a decisive advance in reducing the level of uncertainty of students when taking standardized tests, generally exams [35,36], and therefore work on competences of this nature is recommended. Closely related to the previous and subsequent factors, we highlight factor F3, because the student will show more or less difficulty in acquiring the competences depending on their perception of them, the possibility of transfer, the methodology used, and the way in which the competences are evaluated.

Another factor (F4), referring to the methodology used by the teaching team, shows that improvement in written expression, in the comprehension of the texts read, and in the interpretation of graphics results from the use of adequate methodologies that, in the end, facilitate the development of the calculation, interpretation, or synthesis of information; prominent among such methodologies are the writing of summaries and the use of graphics for analysis. Another of the factors analyzed (F5) refers to the importance that students give to mathematical competence within the social sciences; thus, the existence of a positive association between the three items that analyze the relevance of that competence was verified. In this way, mathematical knowledge is regarded as vital support for the resolution of problems that arise in everyday life, and is granted outstanding value when it comes to adequately understanding certain concepts related to the (nearby) economy. The sixth factor (F6) values the knowledge learned and its transfer to a real situation (applicable to other knowledge or close to the student’s daily life). It is a factor directly related to the second one (F2), insofar as the competences enable students to put into practice those historical, geographical, cultural, or artistic concepts in real-life situations, relevant to the conservation of the environment and natural resources, as well as to forge their own criteria about them, together with the importance of their contribution as citizens towards their conservation. Finally, the seventh factor (F7) focuses exclusively on assessment instruments (including objective tests), evaluating the perception that students have of the practice and the results obtained in the assessments of the subject in terms of the type of test (multiple-choice or essay type). These instruments are shown to be valuable measures of the skills and knowledge acquired in the subject in relation to history and its evolution, as well as the expression of a critical analysis of the facts and their impact on societies throughout history.

5. Conclusions

Following the Recommendation of the European Parliament and of the Council of 18 December 2006 on key competences for lifelong learning (2006/962/EC), as well as the work carried out by the OECD in 2019, competences are defined as “a combination of knowledge, skills, and attitudes appropriate to the context; [...] they are those which all individuals need for their personal fulfillment and development, as well as for active citizenship, social inclusion and employment” [37].

In addition to the OECD, several authors and studies identify competences as a key factor in education [38,39]. For this reason, and given the importance of competences, this study delimited a set of categories (factors) in order to relate them to what is learned in the field of social sciences. As has been shown, each factor relates, directly or indirectly, to students’ perception of the acquisition of these competences based on knowledge, facts, or situations that occur in the classroom. For example, the first factor (F1) shows how students perceive the acquisition of skills for application in society; that is, whether these skills are useful for decision-making, for travel, or even for preparing a curriculum vitae with which to search actively for employment. Likewise, the correlation analysis between factors F3 and F7 showed a positive relationship that supports the development of competence-based assessment; however, to our great regret, we suspect that, in practice, the very subject at whom all possible improvements are directed has been forgotten: the student. For this reason, giving students a voice by collecting their perceptions becomes a task inherent to the authentic assessment described in the specialized literature [40], and this work, like others already developed along this line, contributes to that empowerment.

Finally, on the basis of the data obtained in this research, we can state that this instrument is appropriate and relevant for use within the field of social sciences, and we invite members of the scientific community who wish to investigate and expand this line of research to use and apply it. For our part, based on the information provided by this phase of our study (which is self-contained in meaning, content, and structure), and now that the instrument validated here has been shown to be well suited to the objectives of the research, our next aim will be to design proposals for action that help teachers understand the teaching and learning process by incorporating competences as a roadmap (always from the perspective proposed in this study).

For all the above reasons, it can be concluded that this questionnaire is a valid tool for measuring students’ acquisition and transfer of competences in particular, and of learning in general. It can also be used to evaluate methodological and assessment processes and to adapt them more closely to the individual reality of each student. One limitation, however, is that the questionnaire focuses on the field of social sciences and its methodologies, so this must be taken into account when it is used to assess the teaching-learning process.