Distributed Deep Features Extraction Model for Air Quality Forecasting

Abstract

1. Introduction

- The first problem is very slow training, since the large volume of data coming from the different air quality monitoring stations is used to train a centralized deep learning architecture. In the worst cases, these models must be retrained because they degrade as the data distribution changes over time. The time and resources consumed by the training step are therefore a major challenge in air quality forecasting;

- The second shortcoming of these approaches is that they do not account for the noise usually present in air quality and meteorological data. Because they cannot extract suitable features and information from pollution gas and meteorological data, this noise degrades, to a certain extent, the accuracy and performance of their predictions.

- Study of current state-of-the-art machine learning and deep learning approaches for air quality forecasting;

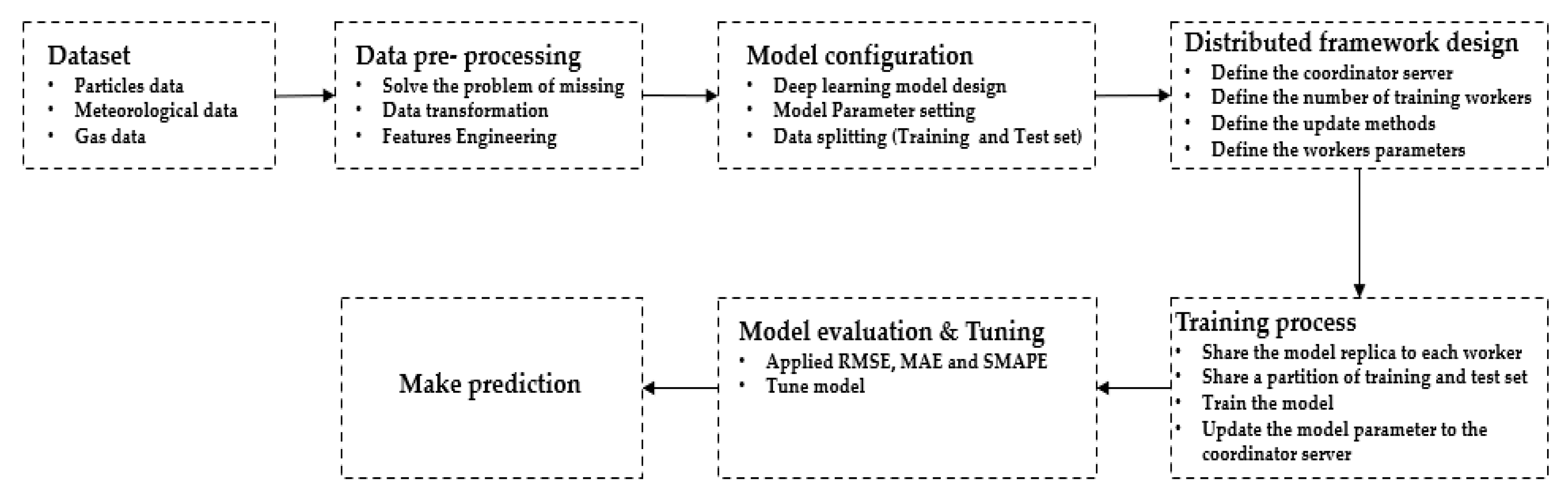

- Design and implementation of a distributed deep learning approach based on two-stage feature extraction for air quality prediction. In the first stage, a stacked autoencoder extracts useful features and information from pollution gas data and meteorological data. In the second stage, considering the properties of multivariate time series air quality particles data, we use a one-dimensional convolutional layer (1D-CNN) to extract the local pattern features and the deep spatial correlation features from air quality particles data. CNN models are widely used in object recognition and image processing, but in their one-dimensional form they can also be applied to time series forecasting tasks. Finally, a Bi-LSTM layer interprets the extracted features across time steps to make the final prediction;

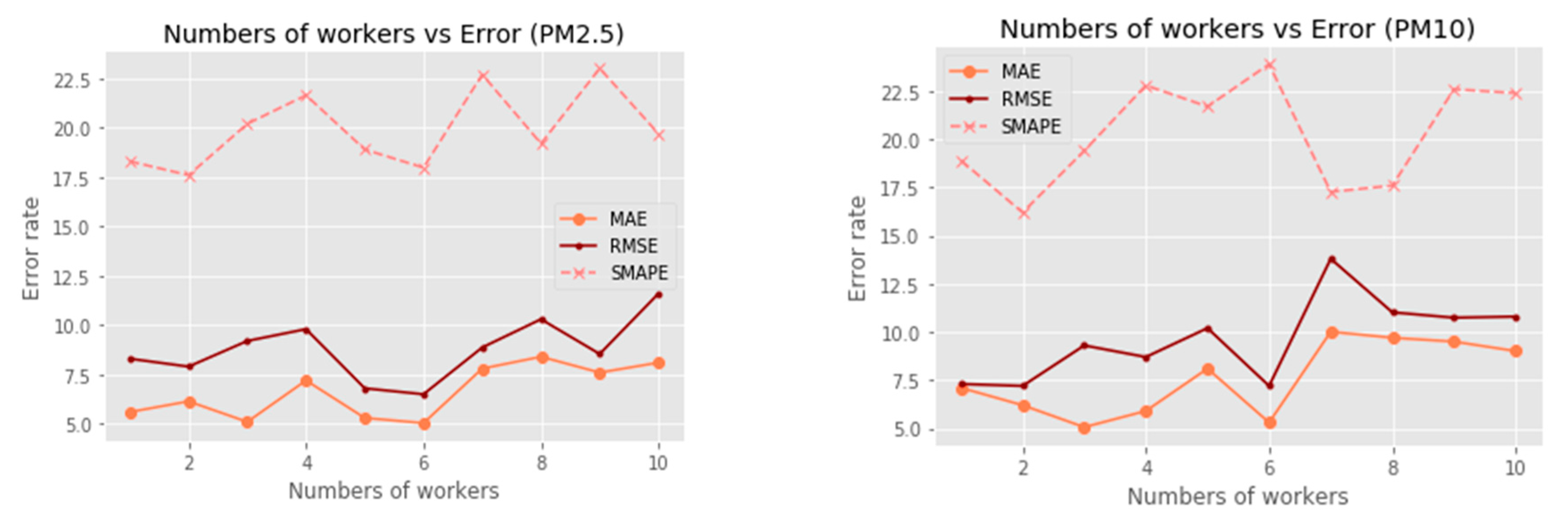

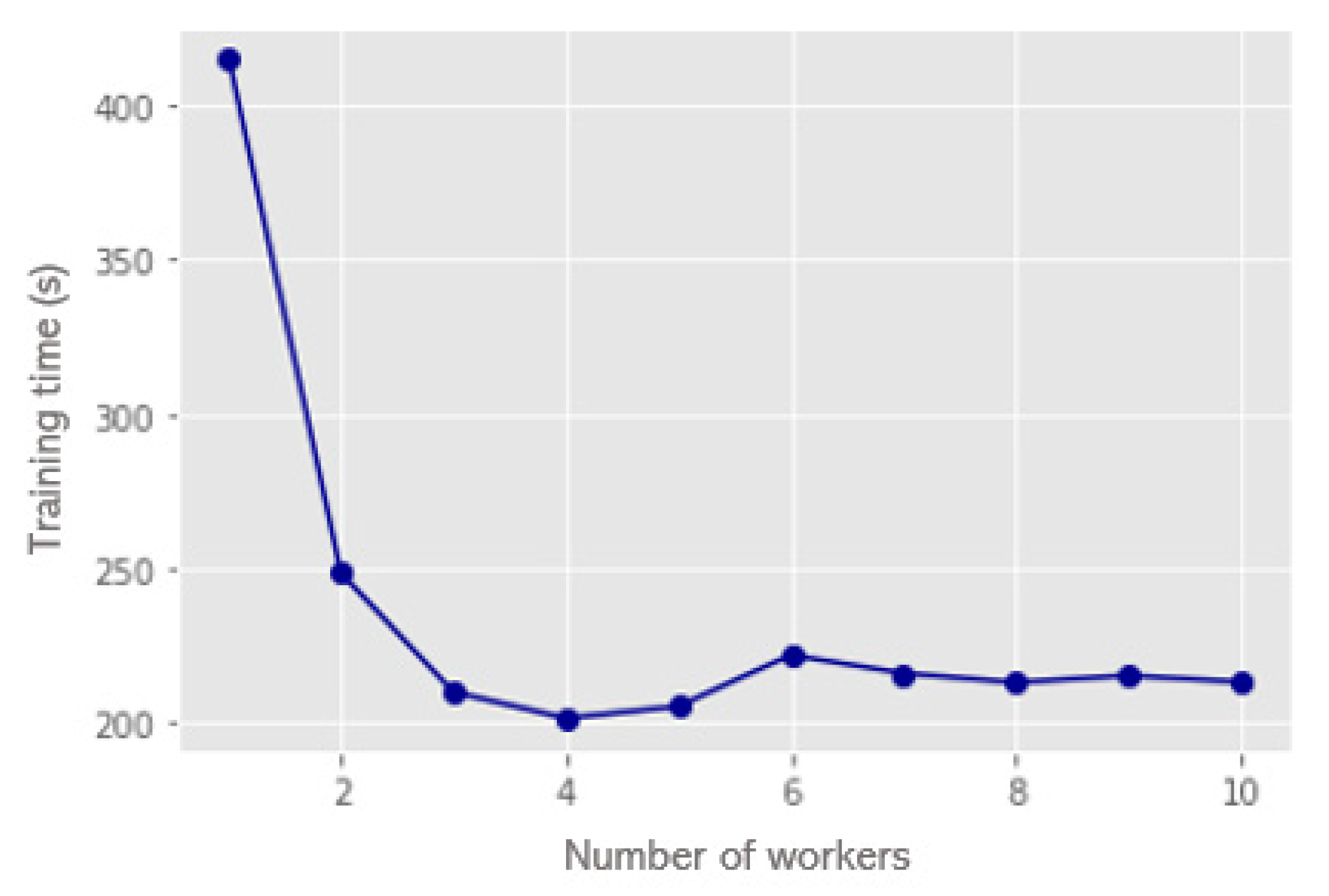

- Evaluation of the proposed approach in two phases. In the first phase, we train our deep learning framework within a centralized architecture with a single training server and compare it against ten state-of-the-art models. In the second phase, we use a distributed deep learning strategy, data parallelism, to train the proposed framework on several training workers in order to optimize its accuracy and its training time.
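To make the second feature-extraction stage more concrete, the following is a minimal pure-Python sketch of how a single 1D convolutional filter slides along the time axis of a multivariate series. It is an illustration only, not the layer used in the proposed framework: the function name `conv1d_valid`, the sample readings, and the filter weights are all hypothetical, and a real model would learn many such filters.

```python
from typing import List

Series = List[List[float]]  # shape: [time_steps][channels]


def conv1d_valid(series: Series, kernel: Series, bias: float = 0.0) -> List[float]:
    """Slide one filter along the time axis (no padding, stride 1).

    `kernel` has shape [kernel_size][channels]; at each position the filter
    sees `kernel_size` consecutive time steps of every channel at once.
    """
    k = len(kernel)
    channels = len(kernel[0])
    if any(len(row) != channels for row in series):
        raise ValueError("series and kernel must have the same channel count")
    out = []
    for t in range(len(series) - k + 1):
        acc = bias
        for dt in range(k):
            for c in range(channels):
                acc += series[t + dt][c] * kernel[dt][c]
        out.append(max(0.0, acc))  # ReLU activation
    return out


# Hypothetical hourly readings: two particle channels over five time steps.
readings = [[10.0, 20.0], [12.0, 22.0], [15.0, 30.0], [14.0, 28.0], [11.0, 21.0]]
# Hypothetical filter of width 2 that responds to a rise between consecutive hours.
rise_filter = [[-1.0, -1.0], [1.0, 1.0]]
features = conv1d_valid(readings, rise_filter)
```

Each output value summarizes a local window of the series, which is the sense in which a 1D convolution extracts local pattern features before a recurrent layer interprets them over time.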

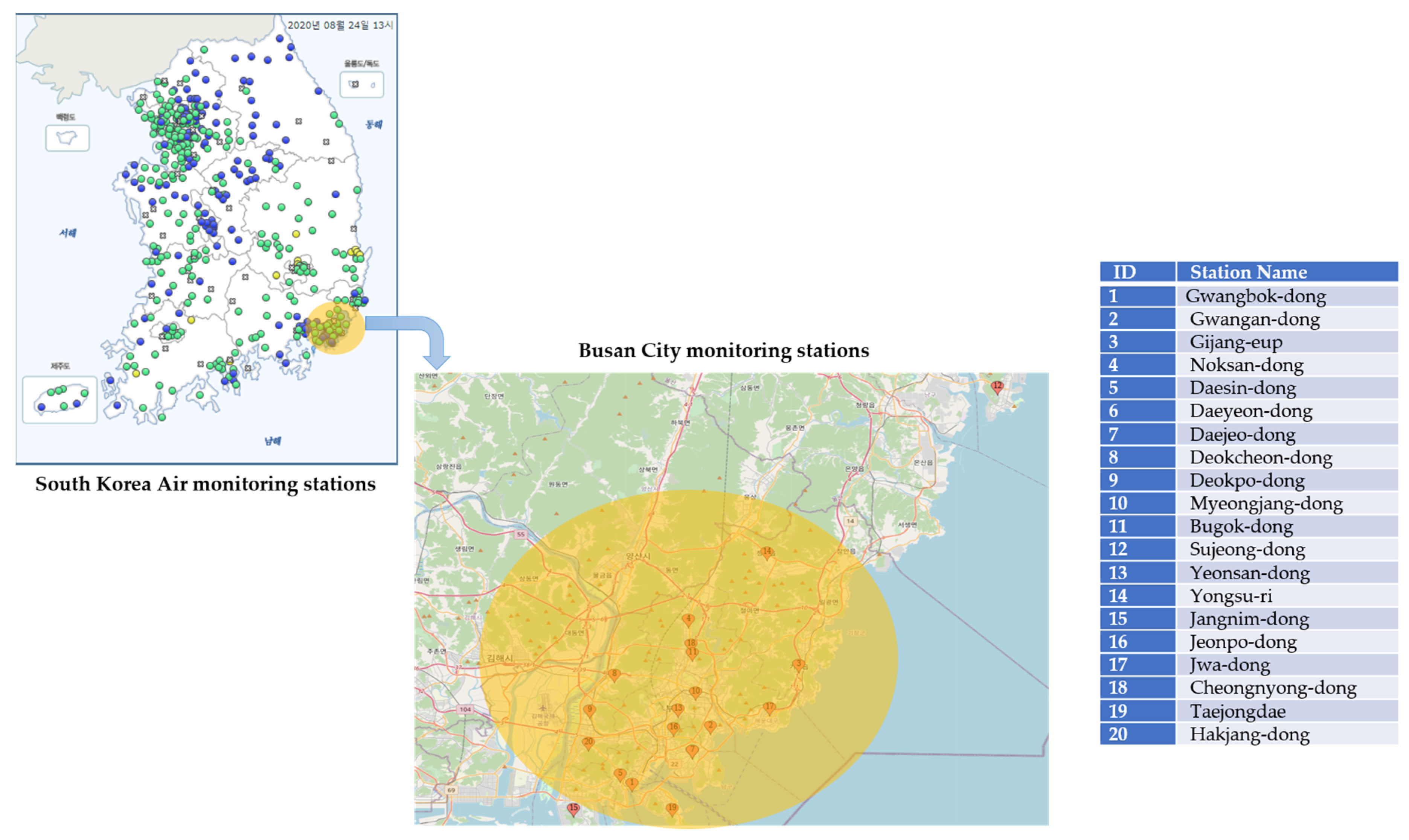

2. Data Collection and Features Correlation

2.1. Data Collection

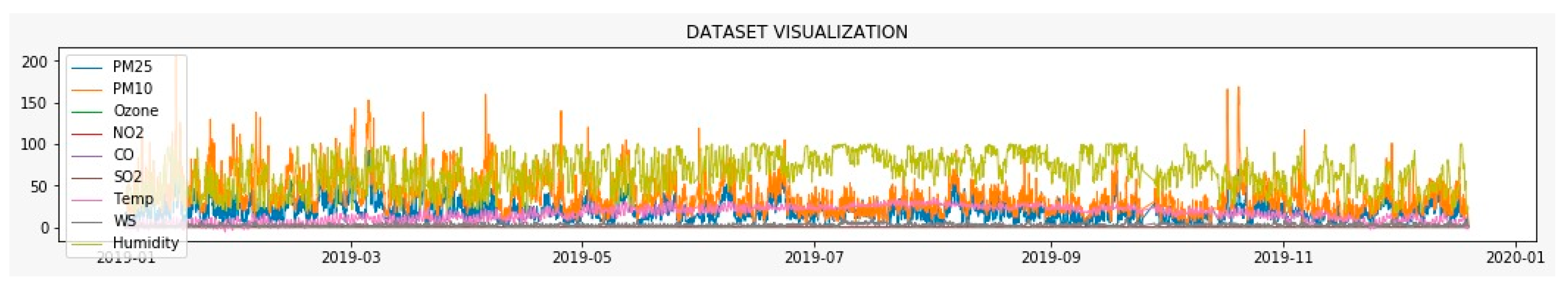

2.2. Statistical Analysis

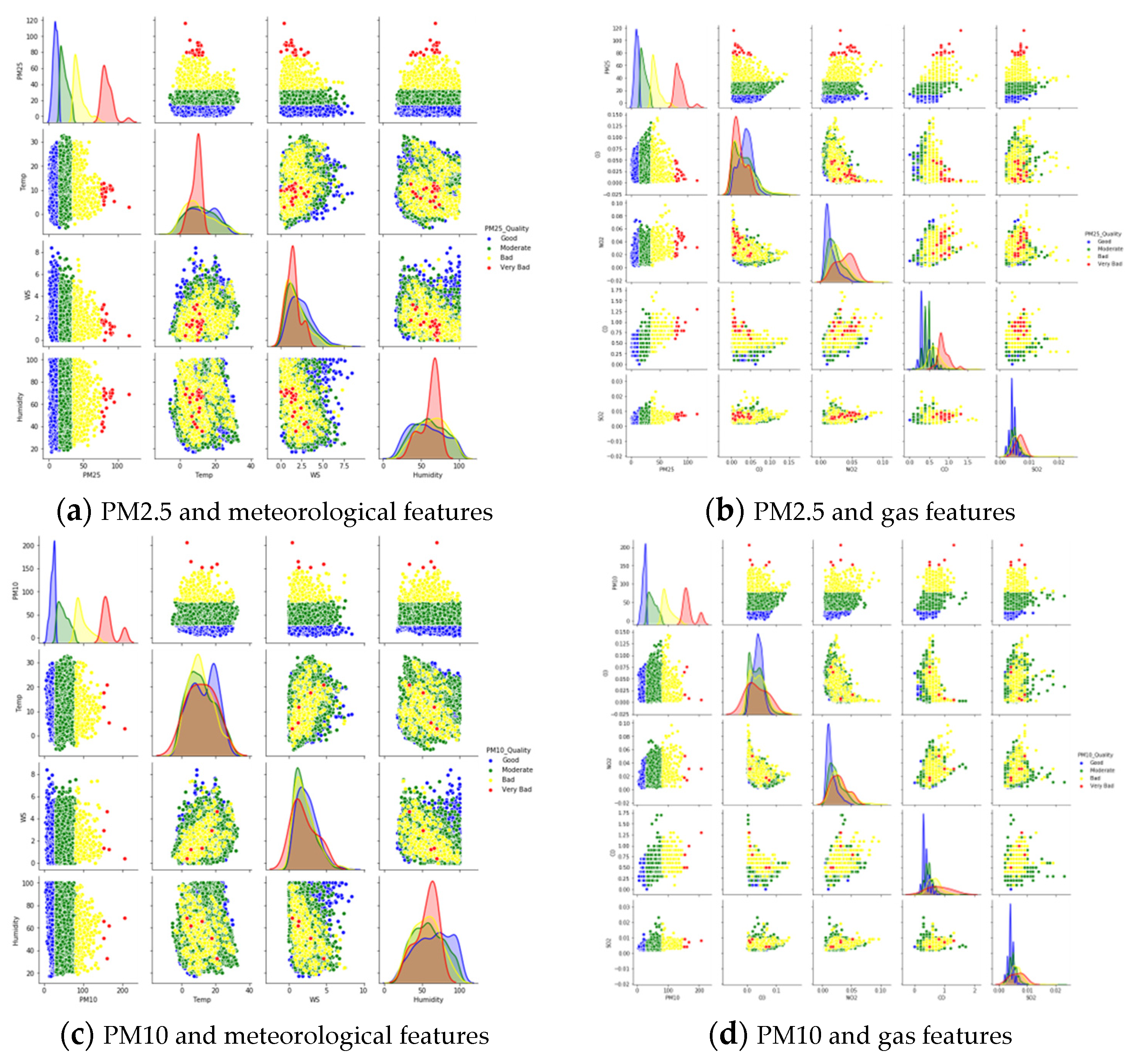

2.3. Features Correlation

3. Proposed Deep Learning Architecture

3.1. 1D-CNN for Deep Features Extraction on Particles Data

3.2. Using Stacked Autoencoders for Gas Features and Meteorological Patterns Encoding

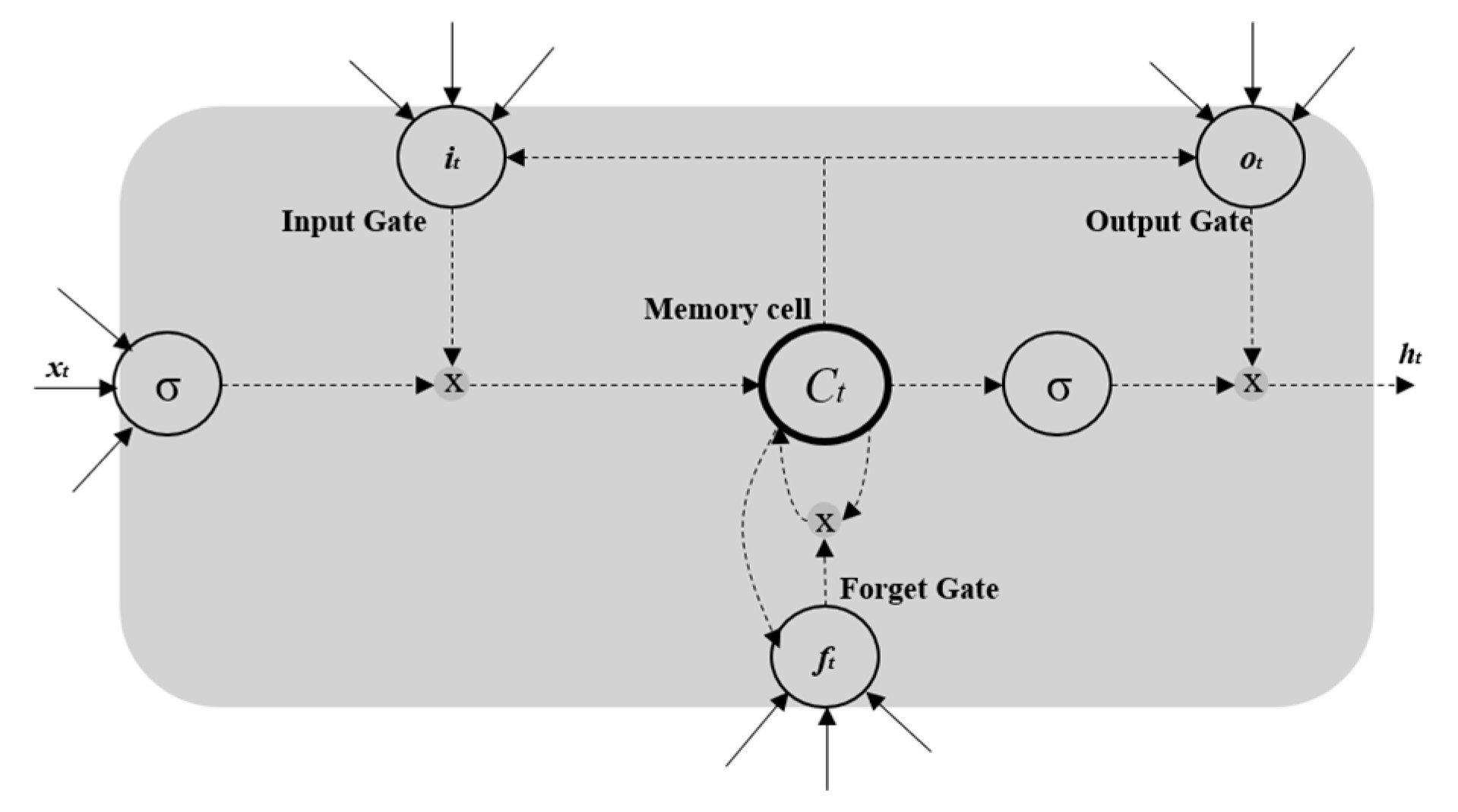

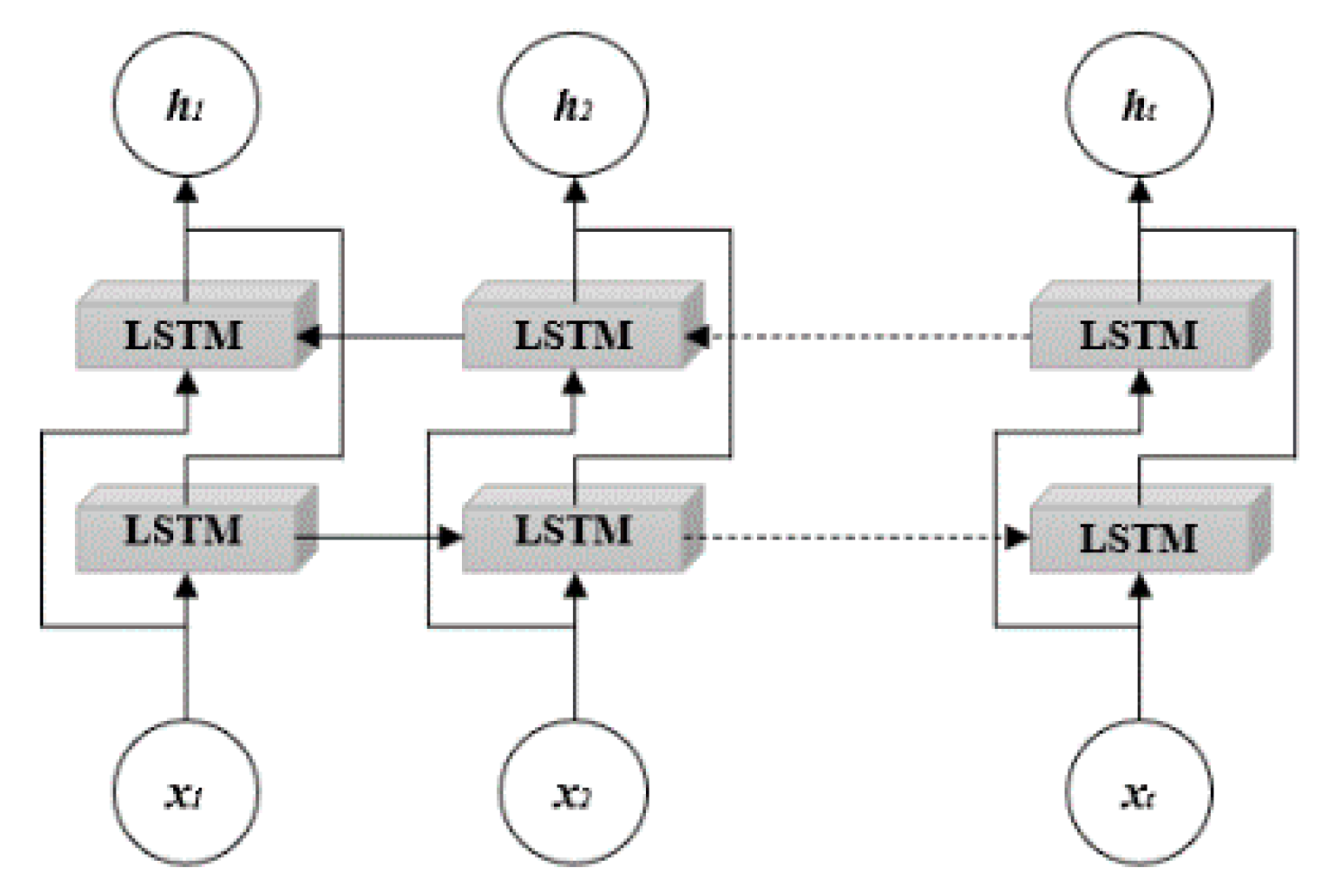

3.3. Implementation of Stacked BiLSTM to Capture Temporal Dependencies Considering Forward and Backward LSTM Directions Simultaneously

3.4. Using a Distributed Deep Learning Architecture to Train the Proposed Approach

| Algorithm 1 Distributed training process |
|---|
| 1: Initialize the coordinator node parameters |
| 2: Define the number of training nodes N |
| 3: Partition the dataset into N shards |
| 4: for each monitoring station do |
| 5: datashard ← dataset/N |
| 6: end for |
| 7: for each node i ∈ {1, 2, 3, …, N} do |
| 8: LocalTrain(Model[parameters], datashard) |
| 9: ∇f_i ← Backpropagation() // send gradient to coordinator node |
| 10: end for |
| 11: UpdateMode ← Asynchronous() // model replicas are aggregated asynchronously via peer-to-peer communication with the coordinator node |
| 12: Aggregate from all nodes: ∇f ← (1/N) Σ_{i=1}^{N} ∇f_i |
| 13: for each node i ∈ {1, 2, 3, …, N} do |
| 14: CoordinatorNode.Push(ParametersUpdate) |
| 15: end for |
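The data-parallel loop of Algorithm 1 can be sketched in a few lines of pure Python. This is a simplified illustration under stated assumptions, not the training code of the proposed framework: a one-variable linear model stands in for the deep network, the workers run sequentially in one process, and the gradients are averaged synchronously, whereas Algorithm 1 specifies an asynchronous update mode. The names `make_shards`, `local_gradient`, and `train`, as well as the synthetic dataset, are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)
Params = Tuple[float, float]  # (weight w, bias b)


def make_shards(dataset: List[Sample], n_nodes: int) -> List[List[Sample]]:
    """Steps 3-6: split the dataset into n_nodes shards (round-robin)."""
    if not 0 < n_nodes <= len(dataset):
        raise ValueError("need between 1 and len(dataset) nodes")
    return [dataset[i::n_nodes] for i in range(n_nodes)]


def local_gradient(params: Params, shard: List[Sample]) -> Params:
    """Steps 8-9: gradient of the mean squared error of y = w*x + b on one shard."""
    w, b = params
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2.0 * err * x
        gb += 2.0 * err
    return gw / len(shard), gb / len(shard)


def train(dataset: List[Sample], n_nodes: int,
          lr: float = 0.1, rounds: int = 1000) -> Params:
    params = (0.0, 0.0)  # step 1: coordinator parameters
    shards = make_shards(dataset, n_nodes)
    for _ in range(rounds):
        # Steps 7-10: every worker computes a gradient on its own shard.
        grads = [local_gradient(params, shard) for shard in shards]
        # Step 12: the coordinator averages the N gradients.
        gw = sum(g[0] for g in grads) / n_nodes
        gb = sum(g[1] for g in grads) / n_nodes
        # Steps 13-15: the updated parameters are pushed back to all workers.
        params = (params[0] - lr * gw, params[1] - lr * gb)
    return params


# Synthetic, noise-free data generated from y = 3x + 1.
data = [(x / 10.0, 3.0 * (x / 10.0) + 1.0) for x in range(20)]
w, b = train(data, n_nodes=4)
```

With equally sized shards, the averaged gradient equals the gradient over the full dataset, so the sketch recovers the same parameters as centralized training while each worker only touches its own shard.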

4. Experiment Evaluation

4.1. Experiments Setup

- ARIMA [2]: autoregressive integrated moving average (ARIMA) is a time series prediction model which combines moving average and autoregression components;

- Random Forest regression [8]: an ensemble machine learning algorithm in which every tree is built from a sample drawn with replacement (bootstrapping) from the training set;

- GBDT [8]: Gradient Boosting Decision Tree (GBDT) is a powerful and widely used machine learning method for time series data;

- Lasso: Lasso is a popular regression analysis algorithm that performs both variable selection and regularization;

- SVR: support vector regression is a machine learning method used for time series forecasting. Common implementations offer five kernel options: linear, polynomial, RBF, sigmoid, and precomputed. In this experiment, we used the linear kernel to predict the air quality and compare with the proposed approach;

- ANN multi-layer perceptron regression: a multilayer perceptron (MLP) is a class of feedforward ANN that learns a function $f: \mathbb{R}^m \rightarrow \mathbb{R}^o$ by training on a dataset, where $m$ is the number of dimensions for input and $o$ is the number of dimensions for output;

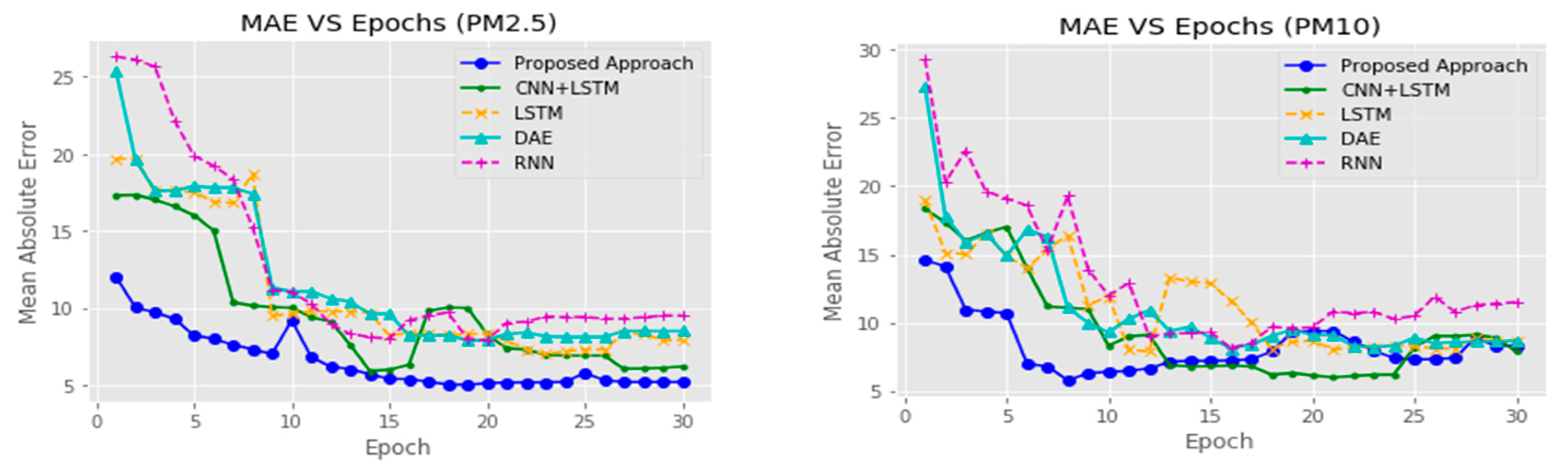

- RNN: the recurrent neural network is a specific kind of neural network that allows previous outputs to be used as inputs while maintaining hidden states;

- LSTM [11]: long short-term memory network (LSTM) is a special kind of recurrent neural network, widely used for time series forecasting;

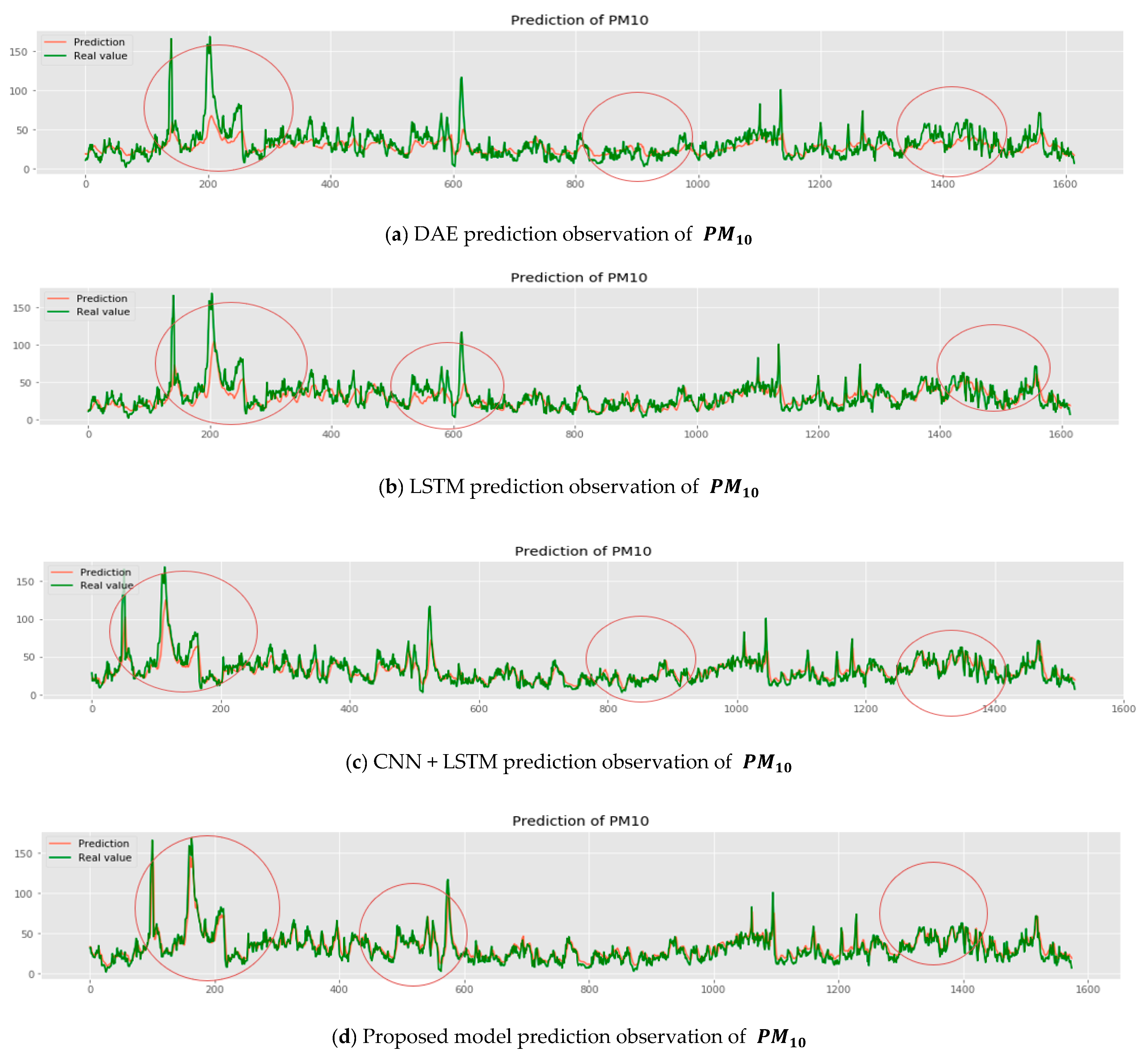

- Stacked deep autoencoder (DAE) [15]: an autoencoder is a kind of unsupervised learning structure that has three layers: input layer, hidden layer, and output layer;

- CNN+LSTM [12]: a hybrid model based on a convolutional neural network and LSTM.

- MAE (mean absolute error): the mean absolute error measures the average magnitude of the errors in a set of predictions, without considering their direction. It is defined as $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$, where $n$ is the number of samples, $y_i$ is the observed value, and $\hat{y}_i$ is the predicted value;

- RMSE (root mean square error): the root mean square error aggregates the magnitudes of the prediction errors at various times into a single measure of predictive power. It is a measure of accuracy that is widely used to compare the prediction error of multiple models on a specific dataset: $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$;

- SMAPE (symmetric mean absolute percentage error): the SMAPE is an accuracy metric based on percentage errors. A common form is $\mathrm{SMAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\frac{\left|y_i - \hat{y}_i\right|}{\left(\left|y_i\right| + \left|\hat{y}_i\right|\right)/2}$.
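The three metrics above can be computed with a few lines of pure Python. This is a minimal sketch rather than the evaluation code used in the experiments. SMAPE has several variants in the literature; the one below, which divides by the mean of the absolute observed and predicted values and skips terms where both are zero, is an assumption. The sample values are hypothetical.

```python
import math
from typing import Sequence


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error: average magnitude of the errors."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error: penalizes large errors more than MAE does."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def smape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Symmetric mean absolute percentage error, in percent (assumed variant)."""
    total = 0.0
    for a, p in zip(actual, predicted):
        denom = (abs(a) + abs(p)) / 2.0
        if denom > 0.0:  # a term with a == p == 0 contributes no error
            total += abs(a - p) / denom
    return 100.0 * total / len(actual)


# Hypothetical observed and predicted concentrations.
observed = [10.0, 20.0, 30.0]
forecast = [12.0, 18.0, 33.0]
mae_value = mae(observed, forecast)
rmse_value = rmse(observed, forecast)
smape_value = smape(observed, forecast)
```

Because RMSE squares each error before averaging, it is never smaller than MAE on the same data, which is why the two are usually reported together.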

4.2. Experiment Setup

4.2.1. Phase 1

4.2.2. Phase 2

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Air Korea. Available online: https://www.airkorea.or.kr/web (accessed on 26 July 2020).

2. Nimesh, R.; Arora, S.; Mahajan, K.K.; Gill, A.N. Predicting air quality using ARIMA, ARFIMA and HW smoothing. Model. Assist. Stat. Appl. 2014, 9, 137–149.

3. Dong, M.; Yang, D.; Kuang, Y.; He, D.; Erdal, S.; Kenski, D. PM2.5 concentration prediction using hidden semi-Markov model-based times series data mining. Expert Syst. Appl. 2009, 36, 9046–9055.

4. Ma, J.; Yu, Z.; Qu, Y.; Xu, J.; Cao, Y. Application of the XGBoost machine learning method in PM2.5 prediction: A case study of Shanghai. Aerosol Air Qual. Res. 2020, 20, 128–138.

5. Sayegh, A.S.; Munir, S.; Habeebullah, T.M. Comparing the performance of statistical models for predicting PM10 concentrations. Aerosol Air Qual. Res. 2014, 14, 653–665.

6. Wang, W.; Men, C.; Lu, W. Online prediction model based on support vector machine. Neurocomputing 2008, 71, 550–558.

7. Martínez-España, R.; Bueno-Crespo, A.; Timón, I.; Soto, J.; Muñoz, A.; Cecilia, J.M. Air-pollution prediction in smart cities through machine learning methods: A case of study in Murcia, Spain. J. Univers. Comput. Sci. 2018, 24, 261–276.

8. Ameer, S.; Shah, M.A.; Khan, A.; Song, H.; Maple, C.; Islam, S.U.; Asghar, M.N. Comparative Analysis of Machine Learning Techniques for Predicting Air Quality in Smart Cities. IEEE Access 2019, 7, 128325–128338.

9. Guo, G.; Zhang, N. A survey on deep learning based face recognition. Comput. Vis. Image Underst. 2019, 102805.

10. Inés, A.; Domínguez, C.; Heras, J.; Mata, E.; Pascual, V. DeepClas4Bio: Connecting bioimaging tools with deep learning frameworks for image classification. Comput. Biol. Med. 2019, 108, 49–56.

11. Zhao, J.; Deng, F.; Cai, Y.; Chen, J. Long short-term memory - Fully connected (LSTM-FC) neural network for PM2.5 concentration prediction. Chemosphere 2019, 220, 486–492.

12. Qi, Y.; Li, Q.; Karimian, H.; Liu, D. A hybrid model for spatiotemporal forecasting of PM2.5 based on graph convolutional neural network and long short-term memory. Sci. Total Environ. 2019, 664, 1–10.

13. Wen, C.; Liu, S.; Yao, X.; Peng, L.; Li, X.; Hu, Y.; Chi, T. A novel spatiotemporal convolutional long short-term neural network for air pollution prediction. Sci. Total Environ. 2019, 654, 1091–1099.

14. Huang, C.J.; Kuo, P.H. A deep CNN-LSTM model for particulate matter (PM2.5) forecasting in smart cities. Sensors 2018, 18, 2220.

15. Bai, Y.; Li, Y.; Zeng, B.; Li, C.; Zhang, J. Hourly PM2.5 concentration forecast using stacked autoencoder model with emphasis on seasonality. J. Clean. Prod. 2019, 224, 739–750.

16. Wang, H.; Zhuang, B.; Chen, Y.; Li, N.; Wei, D. Deep Inferential Spatial-Temporal Network for Forecasting Air Pollution Concentrations. arXiv 2018, arXiv:1809.03964v1.

17. Air Korea Data. Available online: https://www.airkorea.or.kr/web/pastSearch?pMENU_NO=123 (accessed on 30 July 2020).

18. Korea Meteorological Administration. Available online: https://web.kma.go.kr/eng/index.jsp (accessed on 30 July 2020).

| Models | MAE | RMSE | SMAPE | MAE | RMSE | SMAPE |
|---|---|---|---|---|---|---|
| GBDT | 16.17 | 22.96 | 33.59 | 23.05 | 25.55 | 34.17 |
| MLP | 15.63 | 19.03 | 32.11 | 22.38 | 19.91 | 36.09 |
| SVR | 14.28 | 18.72 | 33.17 | 15.71 | 19.32 | 34.18 |
| RFR | 12.73 | 16.31 | 29.41 | 13.64 | 14.63 | 30.41 |
| LASSO | 10.35 | 13.99 | 28.89 | 12.31 | 14.21 | 29.37 |
| ARIMA | 9.03 | 13.49 | 27.81 | 9.34 | 12.89 | 27.78 |
| RNN | 7.90 | 10.02 | 24.17 | 8.47 | 12.33 | 25.23 |
| DAE | 7.89 | 10.31 | 24.29 | 8.10 | 11.17 | 24.94 |
| LSTM | 7.19 | 8.93 | 23.15 | 6.99 | 9.33 | 24.61 |
| CNN + LSTM | 5.90 | 8.33 | 21.03 | 6.21 | 8.27 | 21.93 |
| Proposed Approach | 5.07 | 6.93 | 18.27 | 5.83 | 7.22 | 17.27 |

| Models | Average Training Time (s) |
|---|---|
| RNN | 482 |
| DAE | 463 |
| LSTM | 345 |
| CNN + LSTM | 341 |
| Proposed model | 310 |
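To read the training-time table in relative terms, the short sketch below computes how much shorter the proposed model's average training time is than each baseline's. The times are copied from the table; the helper name `relative_reduction` and the choice of a percentage reduction as the summary figure are illustrative assumptions.

```python
# Average training times in seconds, copied from the table above.
training_time_s = {
    "RNN": 482,
    "DAE": 463,
    "LSTM": 345,
    "CNN + LSTM": 341,
    "Proposed model": 310,
}


def relative_reduction(baseline_s: float, candidate_s: float) -> float:
    """Percentage by which candidate_s is shorter than baseline_s."""
    return 100.0 * (baseline_s - candidate_s) / baseline_s


proposed_s = training_time_s["Proposed model"]
reductions = {
    name: relative_reduction(seconds, proposed_s)
    for name, seconds in training_time_s.items()
    if name != "Proposed model"
}

for name, pct in reductions.items():
    print(f"{name}: {pct:.1f}% shorter training time with the proposed model")
```

On these figures the reduction ranges from roughly 9% against CNN + LSTM to roughly 36% against RNN.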

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Mengara Mengara, A.G.; Kim, Y.; Yoo, Y.; Ahn, J. Distributed Deep Features Extraction Model for Air Quality Forecasting. Sustainability 2020, 12, 8014. https://doi.org/10.3390/su12198014
