FIViz: Forensics Investigation through Visualization for Malware in Internet of Things

Abstract

1. Introduction

2. Literature Review

- Analyze the date and day on which each activity occurred.

- Integrate log files as needed.

- Identify the parameters needed for the analysis that assist in collecting evidence of a malicious activity.

- Search the integrated log files for frequent user activity, including IP addresses, file accesses, and bytes transferred.

- To confirm maliciousness, analyze the behavior of the activity and the associated IP addresses.

- Reconstruct a hypothesis of the complete event timeline by analyzing the log files and evidence.

- Generate a report.
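The log-analysis steps above can be sketched as follows, assuming each log file has already been parsed into normalized records. The `LogRecord` type, its fields, and the `min_events` threshold for "frequent" activity are hypothetical; real logs need format-specific parsers before this step.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class LogRecord:
    # Hypothetical normalized record; real log formats differ per source.
    timestamp: datetime
    source: str      # which log file the record came from
    ip: str
    file: str
    bytes_sent: int


def integrate(*logs: list[LogRecord]) -> list[LogRecord]:
    """Merge several parsed logs into one chronologically ordered timeline."""
    merged = [rec for log in logs for rec in log]
    return sorted(merged, key=lambda rec: rec.timestamp)


def frequent_ips(timeline: list[LogRecord], min_events: int = 3) -> dict[str, int]:
    """Return IPs with at least `min_events` records (the threshold is an assumption)."""
    counts = Counter(rec.ip for rec in timeline)
    return {ip: n for ip, n in counts.items() if n >= min_events}


def activity_summary(timeline: list[LogRecord], ip: str) -> dict:
    """Summarize one IP's activity: files accessed, bytes transferred, active days."""
    events = [rec for rec in timeline if rec.ip == ip]
    return {
        "files": sorted({rec.file for rec in events}),
        "bytes": sum(rec.bytes_sent for rec in events),
        # Date and weekday of each activity, as in the first step of the list.
        "days": sorted({(rec.timestamp.date(), rec.timestamp.strftime("%A")) for rec in events}),
    }
```

Sorting the merged records by timestamp is what turns separate logs into a single timeline from which the event hypothesis can be reconstructed.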

- Chronological artifact collection.

- Timeline artifact analysis.

- Exploratory phase

- Investigative phase

- Correlation phase

- Level 1: Displays individual activities in sections (e.g., the registry keys accessed by a process) to give an overview of what a process is doing.

- Level 2: Extends Level 1 and shows the names of the related API calls executed in each section.

- Level 3: Extends Level 2 and adds the most significant argument of each API call.

- Level 4: Considers even more arguments for each API call.
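The four levels of detail can be illustrated with a small sketch that renders the same monitored events at increasing granularity. The `ApiEvent` type is hypothetical, arguments are assumed to be pre-ordered by significance, and Level 4 is taken to mean all recorded arguments, since the description only says "even more".

```python
from dataclasses import dataclass, field


@dataclass
class ApiEvent:
    section: str   # activity section, e.g. "registry" or "file"
    api: str       # API call name
    args: list[str] = field(default_factory=list)  # assumed ordered by significance


def render(events: list[ApiEvent], level: int) -> dict[str, list[str]]:
    """Group events by section, adding detail as the level increases (1-4)."""
    if level not in (1, 2, 3, 4):
        raise ValueError("level must be 1, 2, 3 or 4")
    view: dict[str, list[str]] = {}
    for ev in events:
        entries = view.setdefault(ev.section, [])
        if level == 1:
            continue  # Level 1: only which sections a process touched
        if level == 2:
            entries.append(ev.api)  # Level 2: API call names
        elif level == 3:
            entries.append(f"{ev.api}({', '.join(ev.args[:1])})")  # most significant argument
        else:
            entries.append(f"{ev.api}({', '.join(ev.args)})")  # all recorded arguments
    return view
```

Each level reuses the grouping of the previous one and only changes how much of each event is shown, which mirrors the "extends level N" wording of the list.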

Critical Analysis of Existing Work

3. Proposed Framework and Working

3.1. System Assumptions and Design Goal

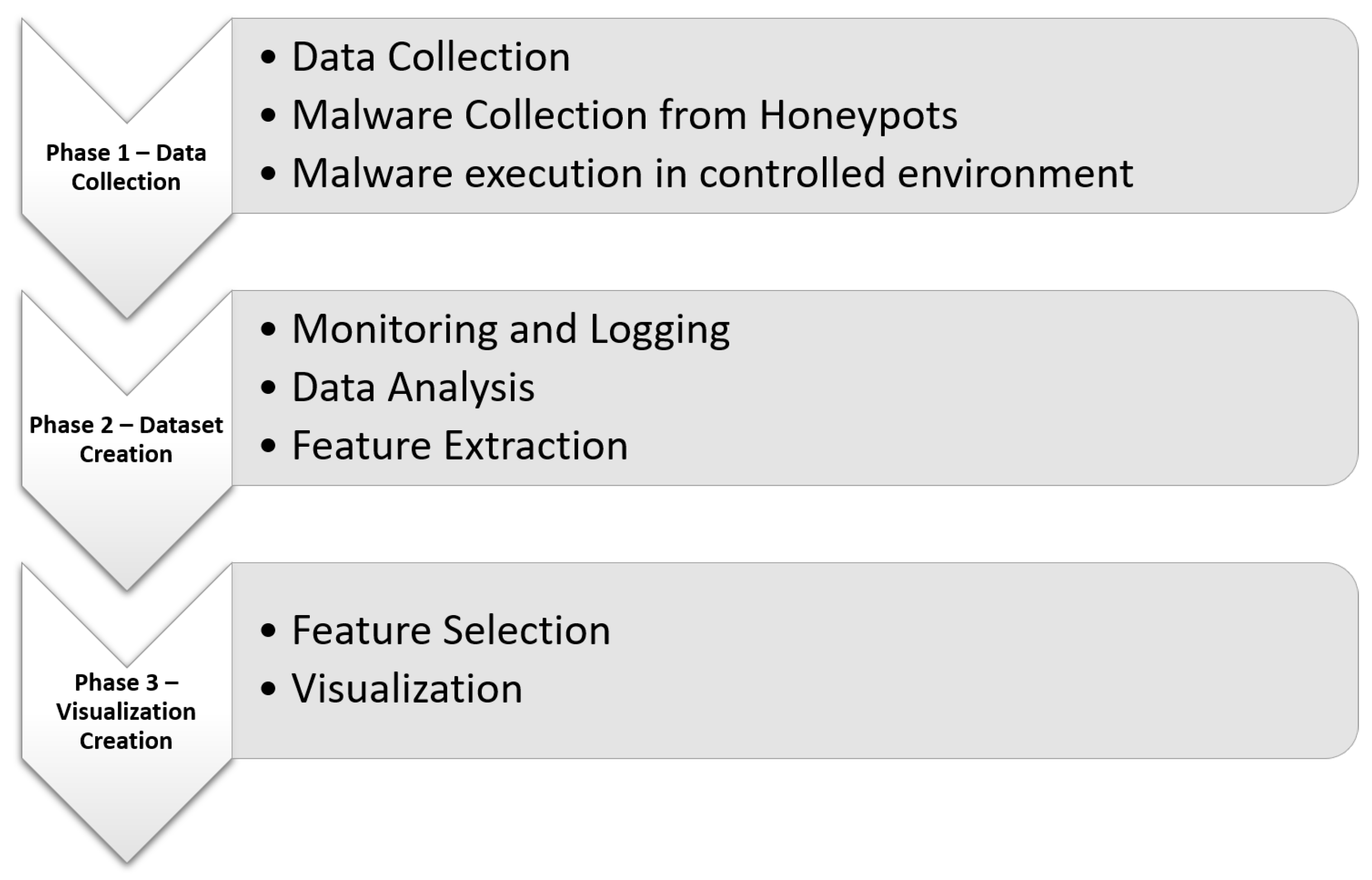

3.2. Phase 1: Data Collection

3.2.1. Malware Samples Collection

3.2.2. Controlled Virtual Environment Creation

3.2.3. Monitoring and Logging Activity

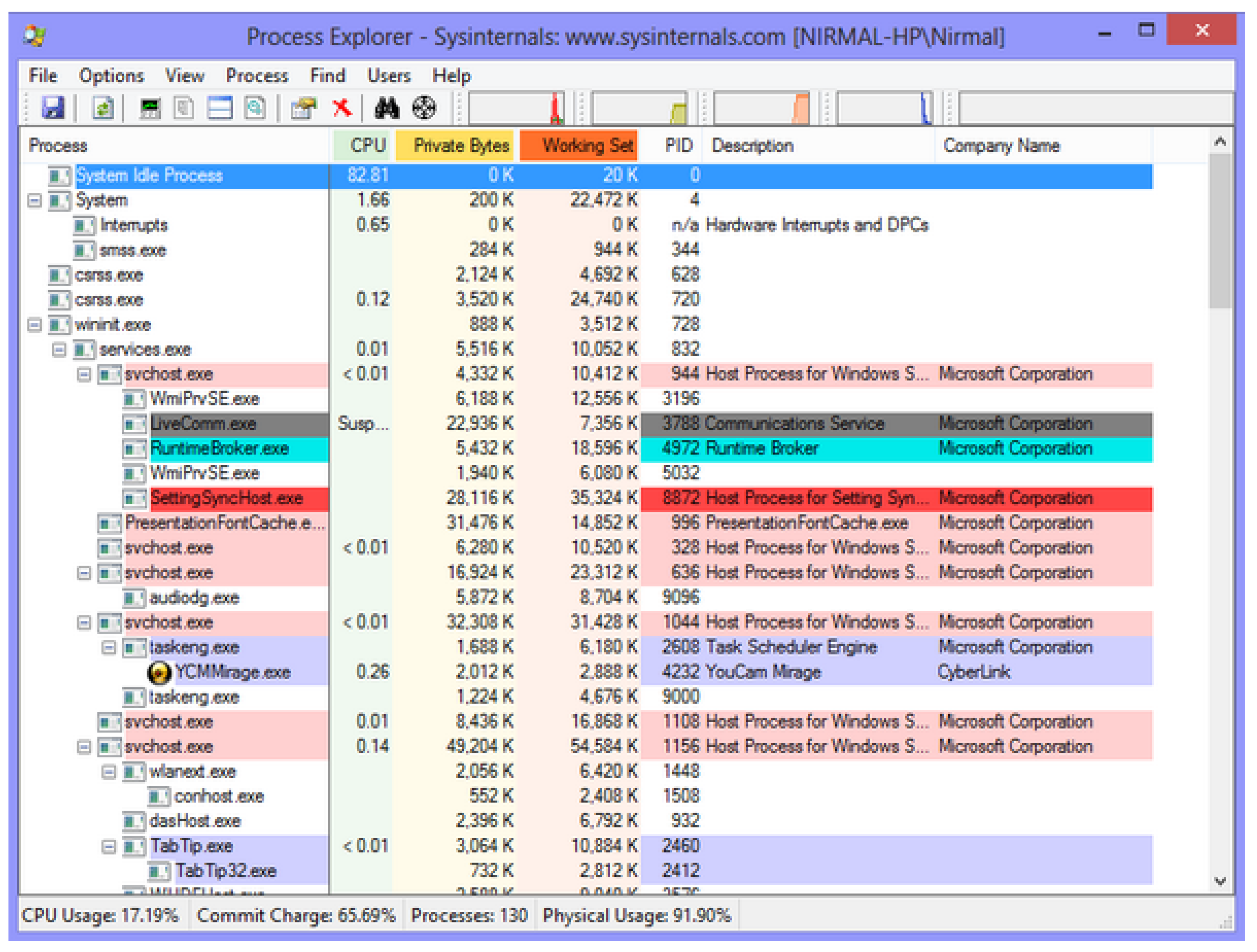

Process Explorer

Process Monitor

Portable Executable Explorer (PE Explorer)

TCPView

Wireshark

3.3. Cuckoo Sandbox

- Pandas was used to manipulate the data.

- NumPy was used to create the data arrays.

- Scikit-learn was used to split the data into training and test sets.

- The datetime library was used to separate the year, month, and day.

- Matplotlib was used to plot the results.

- Seaborn was used to locate missing values so that they could be removed.
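The preprocessing steps listed above can be sketched with the Python standard library alone, so the example runs without pandas or scikit-learn installed; comments name the counterpart calls in those libraries. The record layout (a dict with an ISO-format `timestamp` field), the 80/20 split ratio, and the function name `preprocess` are assumptions for illustration.

```python
import random
from datetime import datetime


def preprocess(rows: list[dict], test_ratio: float = 0.2, seed: int = 42):
    """Drop incomplete rows, split timestamps into year/month/day, then split train/test."""
    # Drop rows with any missing value (pandas: df.dropna()).
    complete = [r for r in rows if all(v not in (None, "") for v in r.values())]

    prepared = []
    for r in complete:
        ts = datetime.fromisoformat(r["timestamp"])
        # Separate year, month and day (pandas: df["timestamp"].dt.year, .dt.month, .dt.day).
        prepared.append({**r, "year": ts.year, "month": ts.month, "day": ts.day})

    # Shuffle and split (scikit-learn: train_test_split(..., test_size=0.2, random_state=42)).
    rng = random.Random(seed)
    rng.shuffle(prepared)
    n_test = round(len(prepared) * test_ratio)
    return prepared[n_test:], prepared[:n_test]
```

The fixed seed keeps the split reproducible between runs, which matters when the same data set is later visualized and compared.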

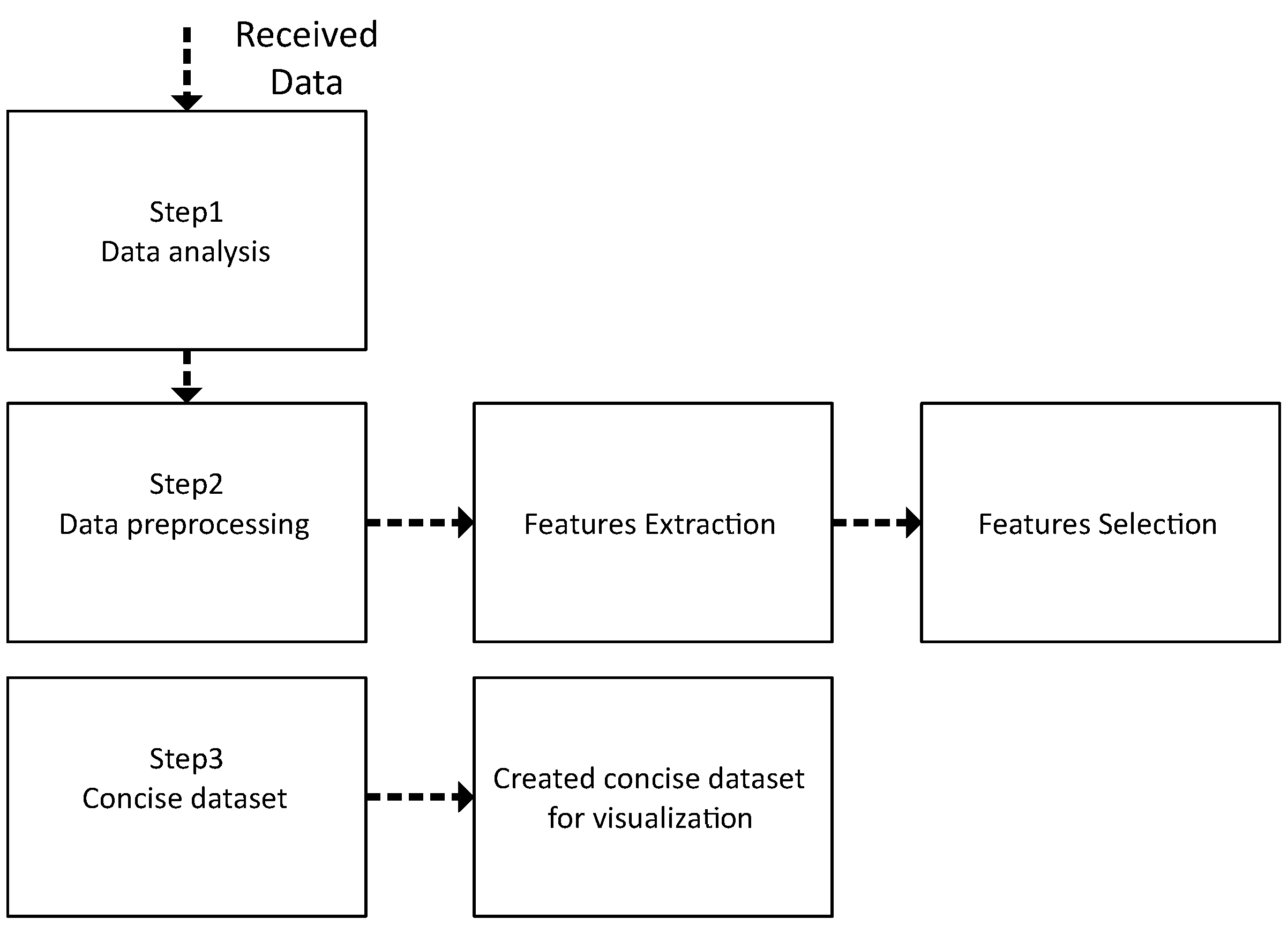

3.4. Phase 2: Feature Extraction and Selection

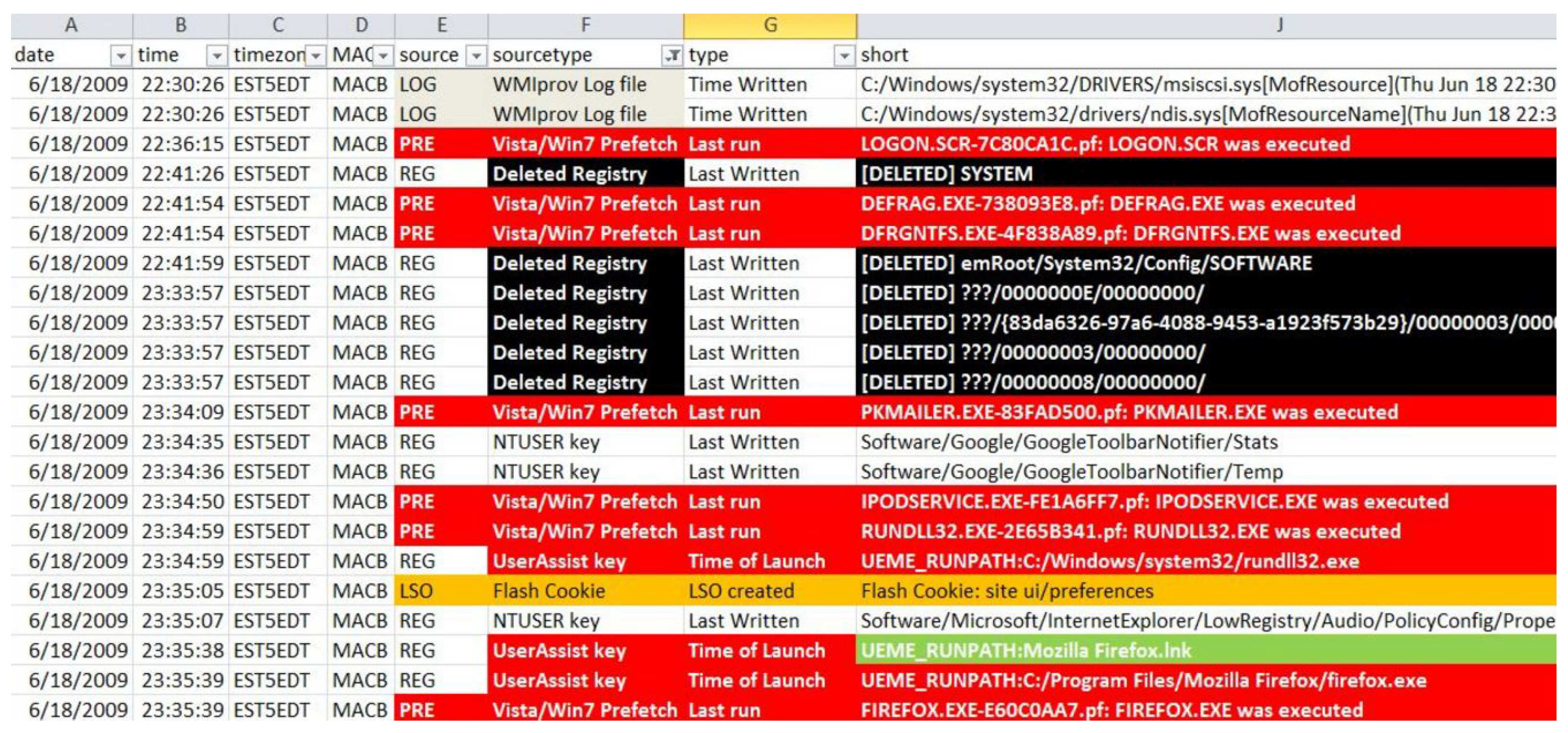

3.4.1. Data Analysis

Data Analysis Goals

3.4.2. Types of Malware Analysis

Static Analysis

Dynamic Analysis

3.4.3. Feature Extraction and Selection

DLL (Dynamic-Link Library)

Timestamp

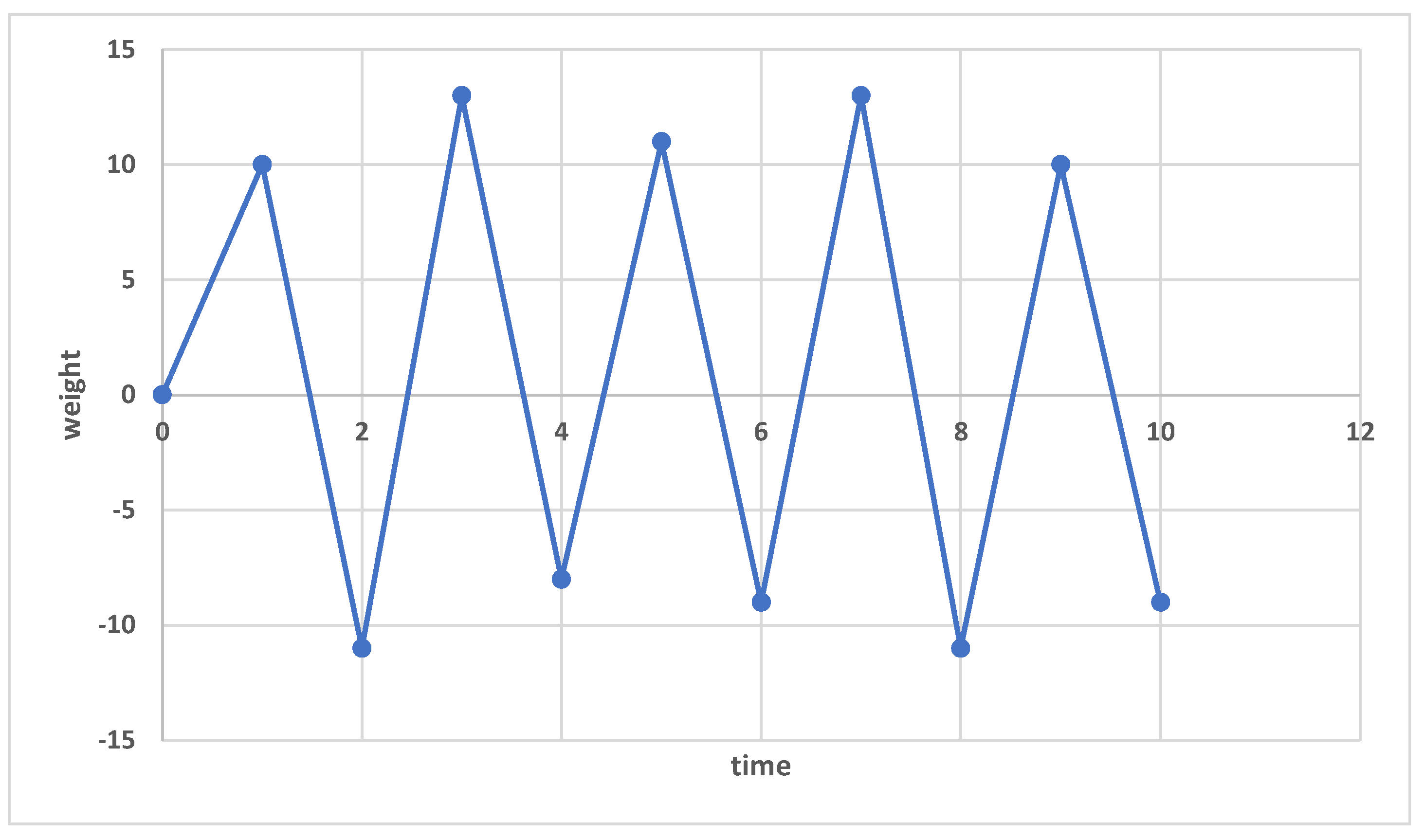

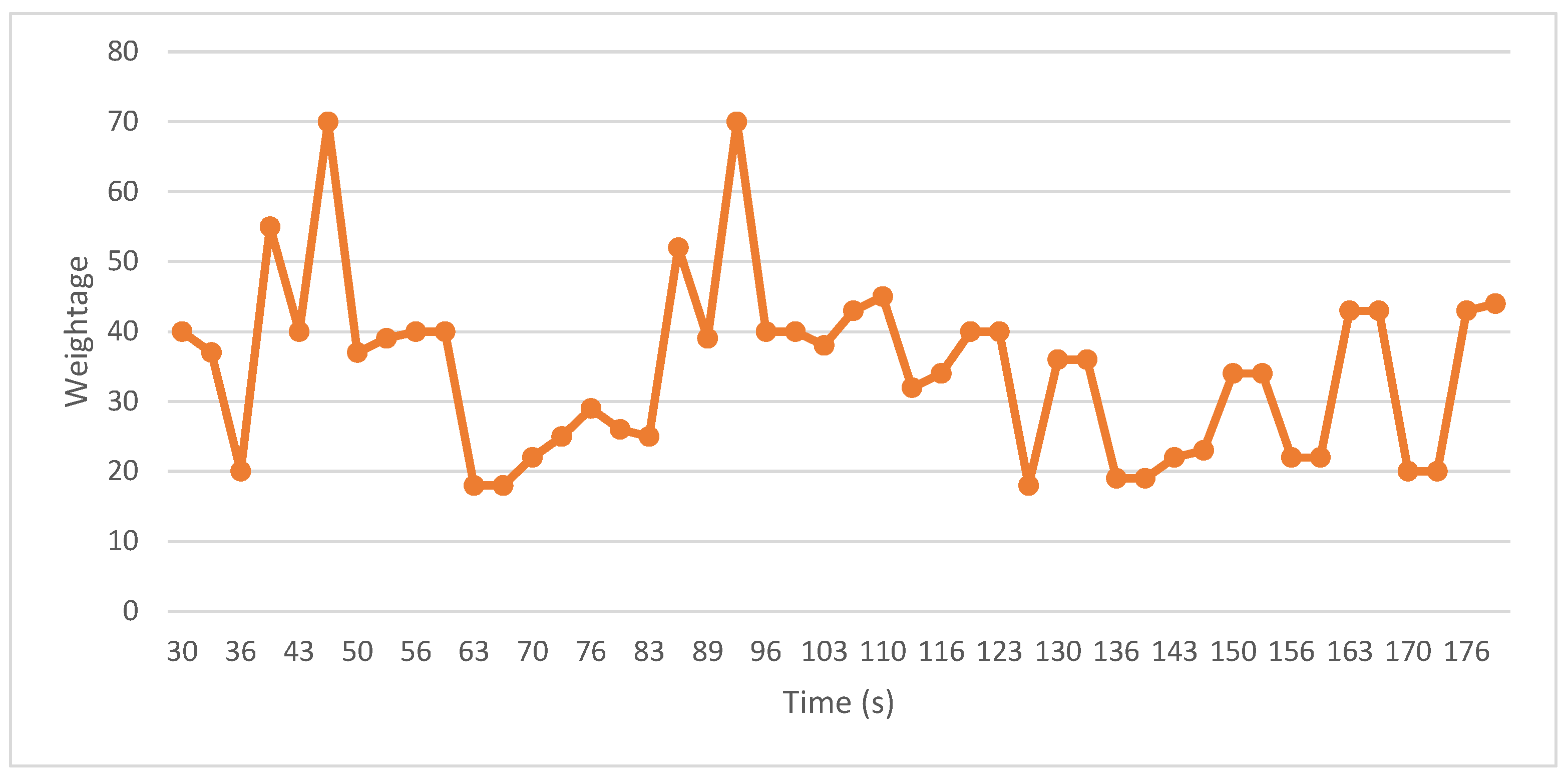

Weightage

3.5. Phase 2: Data Set Creation

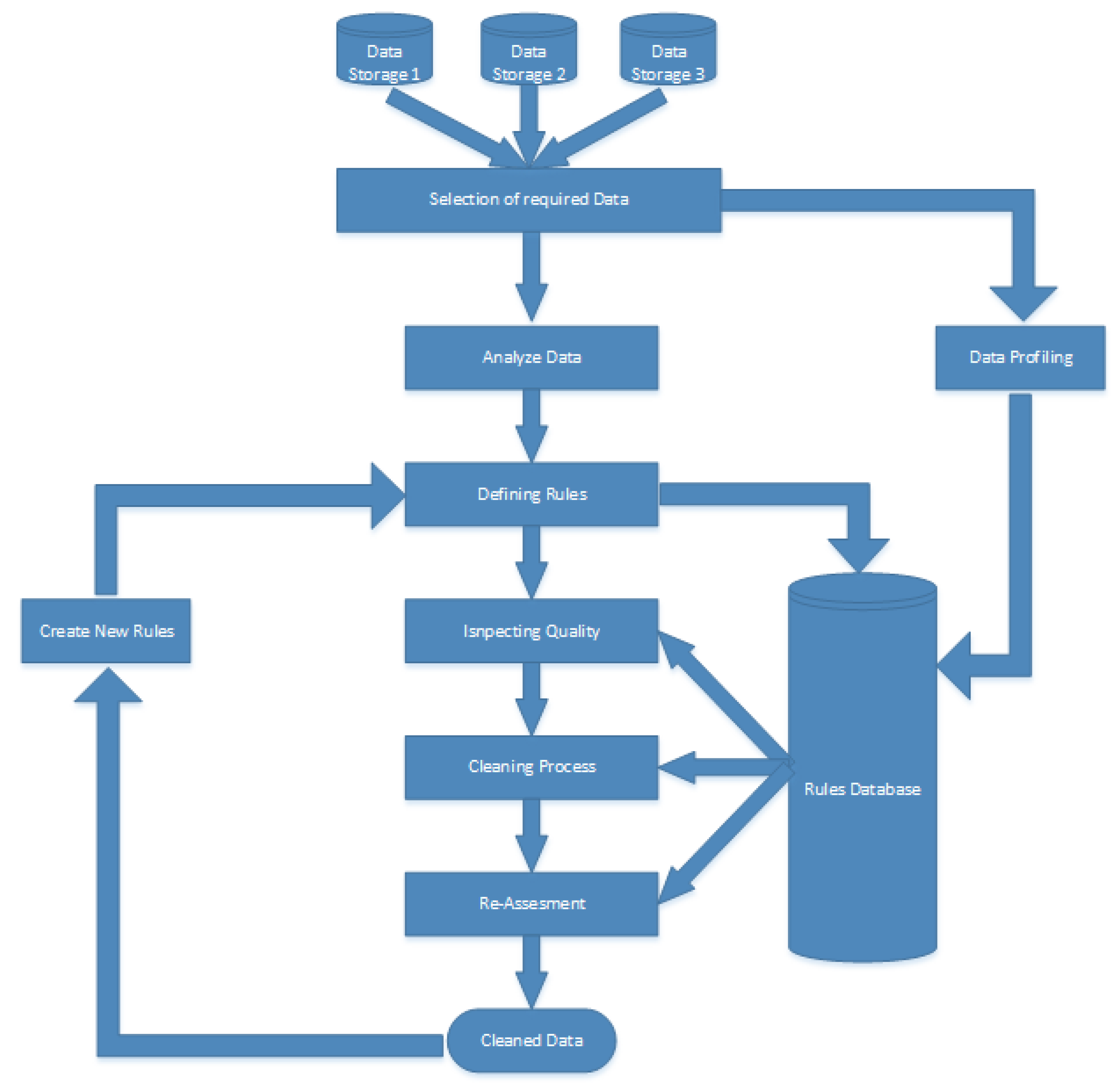

3.5.1. Data Analysis

3.5.2. Feature Extraction

3.5.3. Feature Selection

3.5.4. Concise Data Set

3.5.5. Data Preprocessing

- If a DLL is present only in malicious files, assign it a weight of 50 or above.

- If a DLL is present only in benign files, assign it a weight below 50.

- If a DLL is present in both malicious and benign files, check its count: if the count is greater than 10, assign a weight of 50 or above according to the formula; if the count is less than 10, assign a weight below 50.
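The three weighting rules can be sketched as a single function. The formula the rules refer to is not given here, so the numeric scaling below (`step = min(count, 49)`) is purely hypothetical; only the boundary of 50 and the count cut-off of 10 come from the rules. `count` is assumed to be the number of samples in which the DLL appears, and a count of exactly 10, which the rules leave unspecified, is treated as below the cut-off.

```python
THRESHOLD = 50      # weights >= 50 lean malicious, < 50 lean benign (from the rules)
COUNT_CUTOFF = 10   # count cut-off for DLLs seen in both classes (from the rules)


def assign_weight(in_malicious: bool, in_benign: bool, count: int) -> int:
    """Weight a DLL following the three rules; the numeric formula is hypothetical."""
    if count < 0:
        raise ValueError("count must be non-negative")
    step = min(count, 49)  # hypothetical scaling, capped so weights stay within 0..99
    if in_malicious and not in_benign:
        return THRESHOLD + step                  # rule 1: weight >= 50
    if in_benign and not in_malicious:
        return THRESHOLD - 1 - step              # rule 2: weight < 50
    if in_malicious and in_benign:
        if count > COUNT_CUTOFF:
            return THRESHOLD + step              # rule 3, high count: weight >= 50
        # Rule 3, low count: weight < 50 (count == 10 is unspecified; treated as low here).
        return THRESHOLD - 1 - (COUNT_CUTOFF - count)
    raise ValueError("a DLL must appear in at least one class")
```

For example, under this placeholder formula a DLL seen in both classes with a count of 12 gets 62, while one with a count of 5 gets 44; whatever the real formula is, it only has to keep each case on the correct side of 50.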

3.5.6. Data Transformation

3.6. Phase 3: Visualization Creation

4. Experimental Analysis

4.1. Experimental Setup

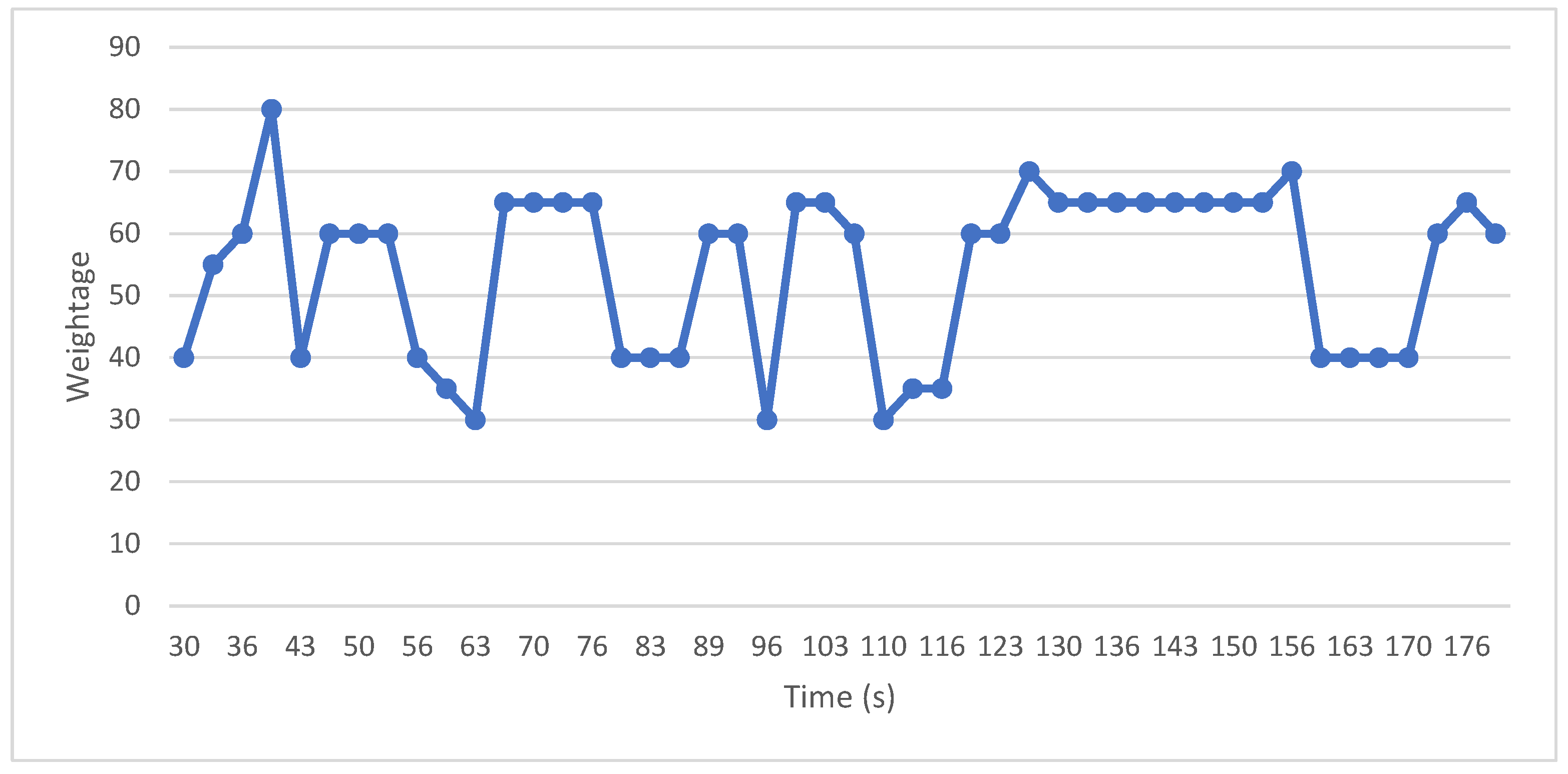

4.2. Normal vs Abnormal Activity Visualization

5. Results and Discussion

5.1. Visual Benign vs Malicious Process Analysis Evaluation

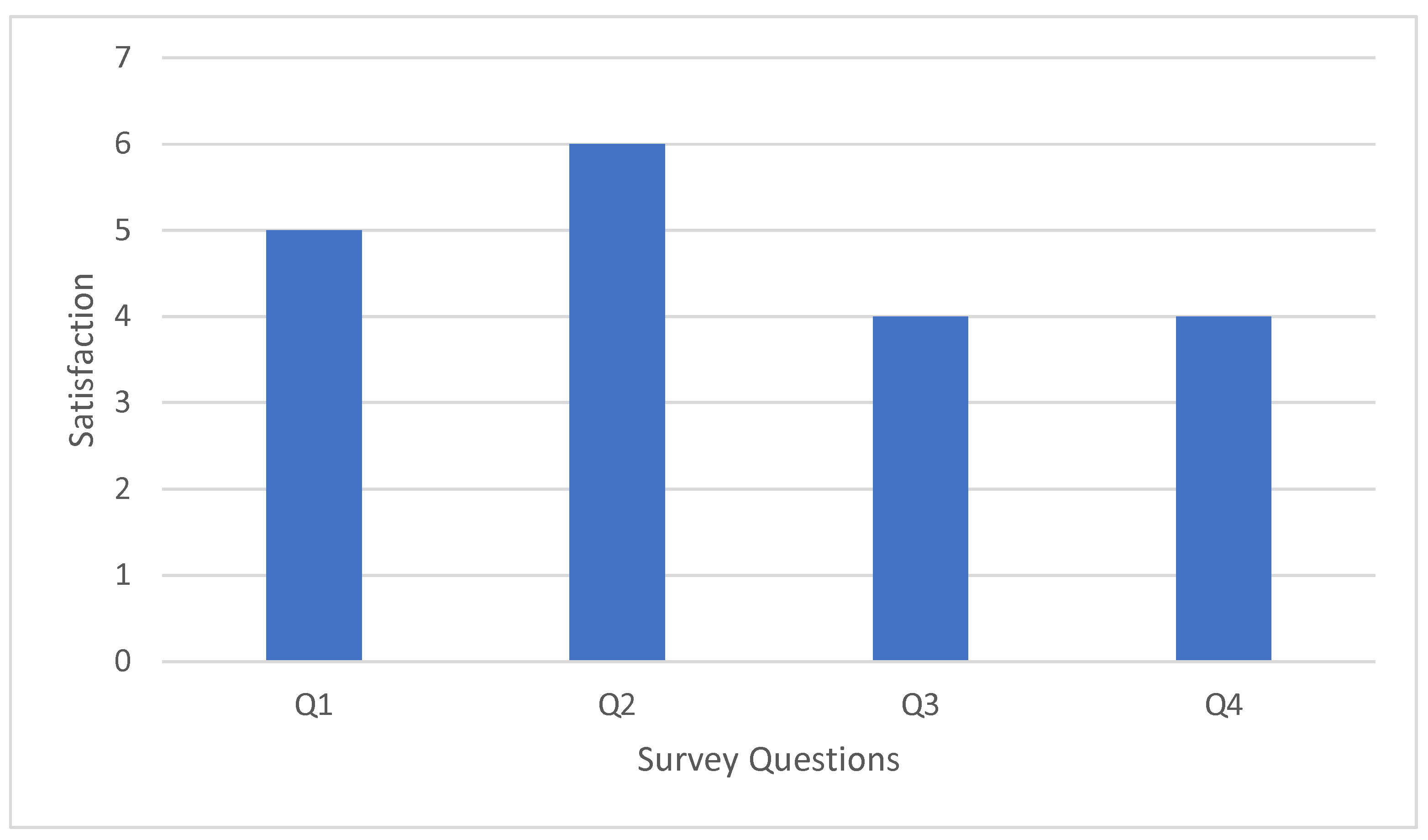

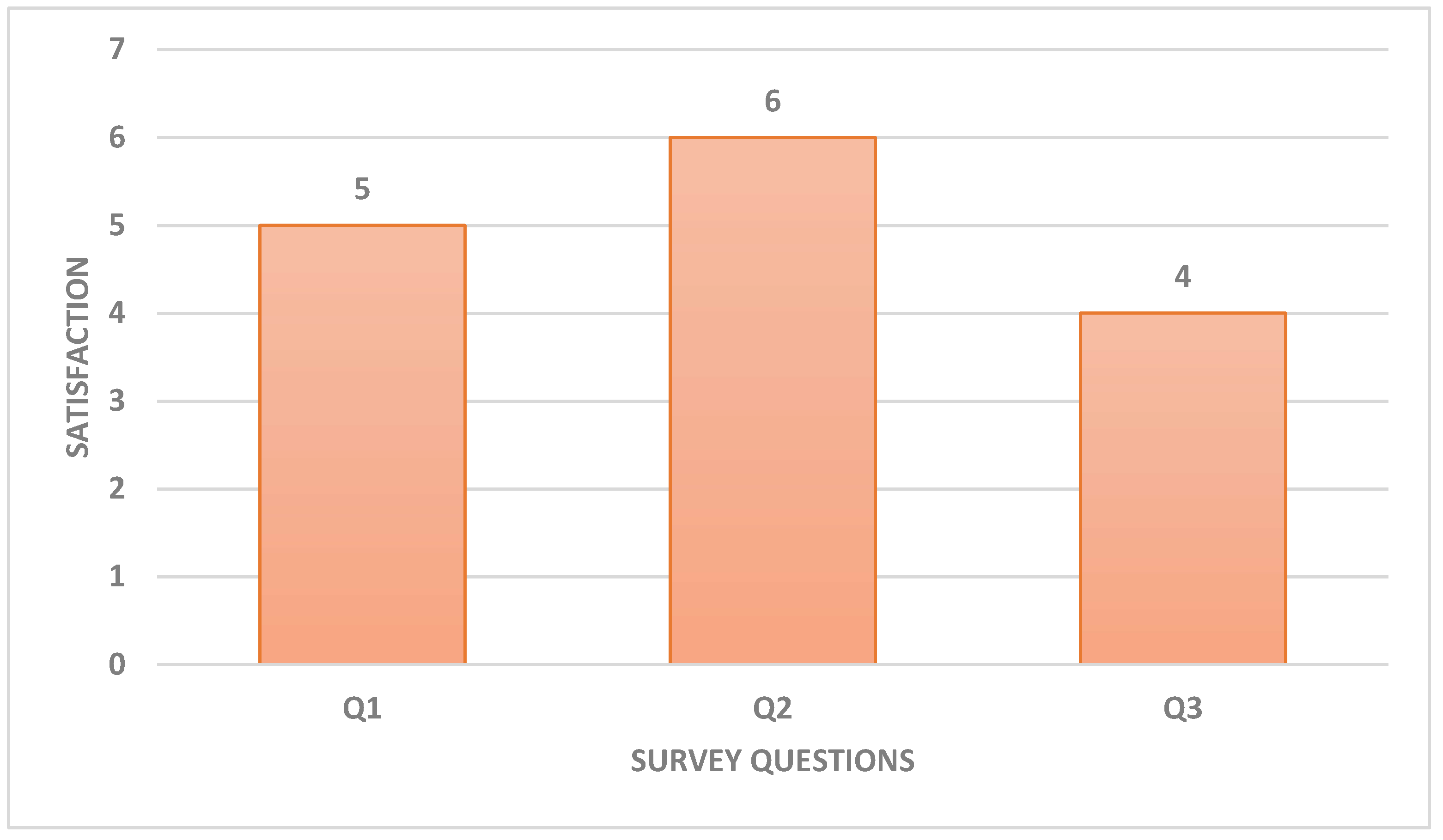

5.2. Response Collection and Analysis

5.3. Visualization vs. Textual Methods: Analysis Evaluation by Laymen with Respect to Time

- Can you identify any malicious DLLs?

- What types of operations were performed on the DLLs, and what were their results?

- On the basis of their different activities, can we distinguish between benign and malicious files?

- According to the assigned weights, which DLL received the maximum weight, and what was its operation?

- According to the assigned weights, which DLL received the minimum weight, and what was its operation?

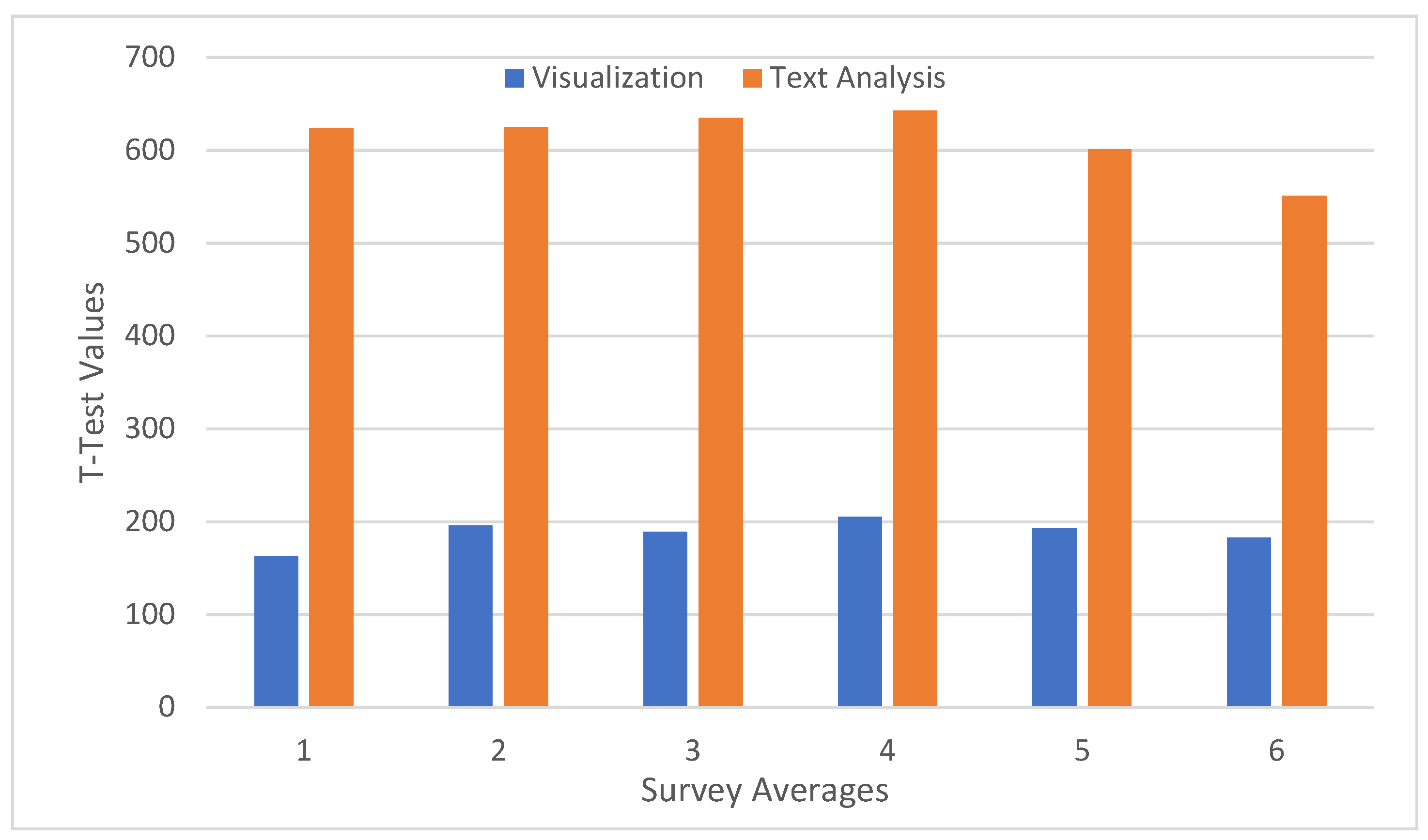

5.4. T-Test

5.5. Hypothesis

6. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Shafiq, M.; Yu, X.; Bashir, A.K.; Chaudhry, H.N.; Wang, D. A machine learning approach for feature selection traffic classification using security analysis. J. Supercomput. 2018, 74, 4867–4892.

2. Zhao, W.; Wang, C.; Nakahira, Y. Medical Application on Internet of Things. In Proceedings of the IET International Conference on Communication Technology and Application (ICCTA 2011), Beijing, China, 14–16 October 2011; pp. 660–665.

3. Philip, V.; Suman, V.K.; Menon, V.G.; Dhanya, K. A review on latest internet of things based healthcare applications. Int. J. Comput. Sci. Inf. Secur. 2017, 15, 248.

4. Deshkar, S.; Thanseeh, R.; Menon, V.G. A review on IoT based m-Health systems for diabetes. Int. J. Comput. Sci. Telecommun. 2017, 8, 13–18.

5. Bhuyan, S.S.; Kabir, U.Y.; Escareno, J.M.; Ector, K.; Palakodeti, S.; Wyant, D.; Kumar, S.; Levy, M.; Kedia, S.; Dasgupta, D.; et al. Transforming Healthcare Cybersecurity from Reactive to Proactive: Current Status and Future Recommendations. J. Med. Syst. 2020, 44, 1–9.

6. Qiu, H.; Qiu, M.; Memmi, G.; Liu, M. Secure Health Data Sharing for Medical Cyber-Physical Systems for the Healthcare 4.0. IEEE J. Biomed. Health Inform. 2020.

7. Freet, D.; Agrawal, R. A virtual machine platform and methodology for network data analysis with IDS and security visualization. In Proceedings of the SoutheastCon 2017, Charlotte, NC, USA, 30 March–2 April 2017; pp. 1–8.

8. Chen, L.; Takabi, H.; Le-Khac, N.A. Security, Privacy, and Digital Forensics in the Cloud; John Wiley & Sons: Hoboken, NJ, USA, 2019.

9. Petroni, N.L., Jr.; Walters, A.; Fraser, T.; Arbaugh, W.A. FATKit: A framework for the extraction and analysis of digital forensic data from volatile system memory. Digit. Investig. 2006, 3, 197–210.

10. Hargreaves, C.; Patterson, J. An automated timeline reconstruction approach for digital forensic investigations. Digit. Investig. 2012, 9, S69–S79.

11. Studiawan, H.; Sohel, F.; Payne, C. A survey on forensic investigation of operating system logs. Digit. Investig. 2019, 29, 1–20.

12. Butler, J.; Murdock, J. Physical Memory Forensics for Files and Cache. 2011. Available online: https://paper.bobylive.com/Meeting_Papers/BlackHat/USA-2011/BH_US_11_ButlerMurdock_Physical_Memory_Forensics-WP.pdf (accessed on 3 September 2020).

13. Pfeffer, A.; Ruttenberg, B.; Kellogg, L.; Howard, M.; Call, C.; O’Connor, A.; Takata, G.; Reilly, S.N.; Patten, T.; Taylor, J.; et al. Artificial intelligence based malware analysis. arXiv 2017, arXiv:1704.08716.

14. Somarriba, O.; Zurutuza, U.; Uribeetxeberria, R.; Delosières, L.; Nadjm-Tehrani, S. Detection and visualization of android malware behavior. J. Electr. Comput. Eng. 2016, 2016, 8034967.

15. Koven, J.; Bertini, E.; Dubois, L.; Memon, N. InVEST: Intelligent visual email search and triage. Digit. Investig. 2016, 18, S138–S148.

16. Teelink, S.; Erbacher, R.F. Improving the computer forensic analysis process through visualization. Commun. ACM 2006, 49, 71–75.

17. Grégio, A.R.; Santos, R.D. Visualization techniques for malware behavior analysis. In Sensors, and Command, Control, Communications, and Intelligence (C3I) Technologies for Homeland Security and Homeland Defense X; International Society for Optics and Photonics: Bellingham, WA, USA, 2011; Volume 8019, p. 801905.

18. Lowman, S.; Ferguson, I. Web History Visualisation for Forensic Investigations. Master’s Thesis, Department of Computer and Information Sciences, University of Strathclyde, Glasgow, Scotland, 2010.

19. Nordbø, A. Data Visualization for Discovery of Digital Evidence in Email. Master’s Thesis, Faculty of Computer Science and Media Technology, Gjøvik University, Gjøvik, Norway, 2014.

20. Gupchup, N.; Mishra, N. A Systematic Survey on Mobile Forensic Tools Used for Forensic Analysis of Android-Based Social Networking Applications. In Data, Engineering and Applications; Springer: Berlin, Germany, 2019; pp. 205–215.

21. Ali, A.; Ahmed, M.; Imran, M.; Khattak, H.A. Security and Privacy Issues in Fog Computing. In Fog Computing: Theory and Practice; John Wiley & Sons: Hoboken, NJ, USA, 2020; Volume 1, pp. 105–138.

22. Manzoor, A.; Shah, M.A.; Khattak, H.A.; Din, I.U.; Khan, M.K. Multi-tier authentication schemes for fog computing: Architecture, security perspective, and challenges. Int. J. Commun. Syst. 2019, e4033.

23. Shafqat, N. Forensic investigation of user’s web activity on Google Chrome using various forensic tools. IJCSNS Int. J. Comput. Sci. Netw. Secur. 2016, 16, 123–132.

24. Akbal, E.; Günes, F.; Akbal, A. Digital Forensic Analyses of Web Browser Records. JSW 2016, 11, 631–637.

25. Fletcher, D. Forensic timeline analysis using Wireshark. SANS 2015, 14, 2015.

26. Debinski, M.; Breitinger, F.; Mohan, P. Timeline2GUI: A Log2timeline CSV parser and training scenarios. Digit. Investig. 2019, 28, 34–43.

27. Khatik, P.; Choudhary, P. An implementation of time line events visualization tool using forensic Digger algorithm. JCSE Int. J. Comput. Sci. Eng. 2014, 2, 216–220.

28. Kälber, S.; Dewald, A.; Idler, S. Forensic zero-knowledge event reconstruction on filesystem metadata. In Proceedings of the GI Sicherheit 2014, Vienna, Austria, 19–21 March 2014.

29. Inglot, B.; Liu, L.; Antonopoulos, N. A framework for enhanced timeline analysis in digital forensics. In Proceedings of the 2012 IEEE International Conference on Green Computing and Communications, Besancon, France, 20–23 November 2012; pp. 253–256.

30. Esposito, S.J. Analysis of Forensic Super Timelines; Air Force Institute of Technology, Wright-Patterson Air Force Base: Dayton, OH, USA, 2012.

31. Osborne, G.; Turnbull, B.; Slay, J. Development of InfoVis software for digital forensics. In Proceedings of the 2012 IEEE 36th Annual Computer Software and Applications Conference Workshops, Izmir, Turkey, 16–20 July 2012; pp. 213–217.

32. Edwards, D. Computer Forensic Timeline Analysis with Tapestry; SANS Institute: Rockville, MD, USA, 2011.

33. Schrenk, G.; Poisel, R. A discussion of visualization techniques for the analysis of digital evidence. In Proceedings of the 2011 Sixth International Conference on Availability, Reliability and Security, Vienna, Austria, 22–26 August 2011; pp. 758–763.

34. Guðjónsson, K. Mastering the Super Timeline with Log2timeline; SANS Institute: Rockville, MD, USA, 2010.

35. Olsson, J.; Boldt, M. Computer forensic timeline visualization tool. Digit. Investig. 2009, 6, S78–S87.

36. Buchholz, F.P.; Falk, C. Design and Implementation of Zeitline: A Forensic Timeline Editor. In Proceedings of the Digital Forensic Research Workshop (DFRWS), New Orleans, LA, USA, 17–19 August 2005; pp. 1–8.

37. Xie, X.; Wang, W. Lightweight examination of dll environments in virtual machines to detect malware. In Proceedings of the 4th ACM International Workshop on Security in Cloud Computing, Xi’an, China, 30 May–3 June 2016; pp. 10–16.

38. Ahmadi, M.; Ulyanov, D.; Semenov, S.; Trofimov, M.; Giacinto, G. Novel feature extraction, selection and fusion for effective malware family classification. In Proceedings of the Sixth ACM Conference on Data and Application Security and Privacy, New Orleans, LA, USA, 9–11 March 2016; pp. 183–194.

39. Veeramani, R.; Rai, N. Windows api based malware detection and framework analysis. In Proceedings of the International Conference on Networks and Cyber Security, Alexandria, VA, USA, 14–16 December 2012; Volume 3, pp. 1–6.

40. Mosli, R.; Li, R.; Yuan, B.; Pan, Y. Automated malware detection using artifacts in forensic memory images. In Proceedings of the 2016 IEEE Symposium on Technologies for Homeland Security (HST), Waltham, MA, USA, 10–11 May 2016; pp. 1–6.

41. Case, A.; Richard III, G.G. Detecting objective-C malware through memory forensics. Digit. Investig. 2016, 18, S3–S10.

42. Ahmadi, M.; Sami, A.; Rahimi, H.; Yadegari, B. Malware detection by behavioural sequential patterns. Comput. Fraud Secur. 2013, 2013, 11–19.

43. Trinius, P.; Holz, T.; Göbel, J.; Freiling, F.C. Visual analysis of malware behavior using treemaps and thread graphs. In Proceedings of the 2009 6th International Workshop on Visualization for Cyber Security, Atlantic City, NJ, USA, 11 October 2009; pp. 33–38.

44. Margosis, A.; Russinovich, M.E. Windows Sysinternals Administrator’s Reference; Pearson Education: London, UK, 2011.

45. Orebaugh, A.; Ramirez, G.; Beale, J. Wireshark & Ethereal Network Protocol Analyzer Toolkit; Elsevier: Amsterdam, The Netherlands, 2006.

46. Guarnieri, C.; Tanasi, A.; Bremer, J.; Schloesser, M. The Cuckoo Sandbox. 2012. Available online: https://www.cuckoosandbox.org (accessed on 3 September 2020).

47. Symantec, I. 2019 Internet Security Threat Report; Symantec Corporation: Mountain View, CA, USA, 2019.

48. Norouzi, M.; Souri, A.; Samad Zamini, M. A data mining classification approach for behavioral malware detection. J. Comput. Netw. Commun. 2016, 2016, 8069672.

49. Guilfanov, I. The IDA Pro disassembler and debugger. DataRescue; Version 6; Prosoft Engineering, Inc.: Livermore, CA, USA, 2011.

50. Rieck, K.; Holz, T.; Willems, C.; Düssel, P.; Laskov, P. Learning and classification of malware behavior. In Detection of Intrusions and Malware, and Vulnerability Assessment, Proceedings of the DIMVA 2008, Paris, France, 10–11 July 2008; Springer: Berlin, Germany, 2008; pp. 108–125.

51. Jang, M.S.; Kim, H.C.; Yun, Y.T. Apparatus and Method for Detecting Dll Inserted by Malicious Code. U.S. Patent US20090133126A1, 21 May 2009.

52. Khan, T.; Alam, M.; Akhunzada, A.; Hur, A.; Asif, M.; Khan, K. Towards augmented proactive cyber threat intelligence. J. Parallel Distrib. Comput. 2018, 124, 47–59.

53. Watson, J. Virtualbox: Bits and bytes masquerading as machines. Linux J. 2008, 2008, 1.

| Ref. | Data Set | Methodology | Data Features | Approach Used Features | Limitations |
|---|---|---|---|---|---|
| [33] | Web Logged Data set | Web Forensics analysis | Cookies, History, Downloads, Restore Points, Hiber File, Page File | Potential Locations To Search For A Specific Activity On Web | No Visualization, No Auto-correlation, Used Existing Forensics Tools |
| [15] | Existing Enron E-mail data set | Intelligent Emails Visualization | Source, Destination, Location, Datetime, Content, Organization | Data Set Cleansing, Filtering Related Artifacts, Visualization Based On Relationships Between Entities, Keywords, And Correspondents | Email Specific, i.e., No Relationships Between Information From Different Sources Of System, False Positives |
| [25] | Super Timeline data set of a System | Forensics timeline Analysis | Time, Source, User, MACB, Filenames | Analysis Profile, Filtering, Colorization, Marking And Annotation Of Events | No Correlation, Lack Of Advanced Visualization |
| [27] | Web Server data set | Forensics Digger Algorithm | IP Address, Datetime, Mac Address, URLs | Web URLs Connections, Visualization | No Advanced Visualization, Algorithm is Specific to Web Server Logfile Investigation |
| [29] | System Logging data set | Timeline analysis through Visualization | – | Filtering, Searching, Grouping, Reporting | Lack Of Autocorrelation & Advanced Visualization |
| [30] | Super Timeline data set | Forensics analysis Microsoft Access Database | Date time, Mac, Source, Source type, Type, User, Host, Short, Desc, Version, Filename, Inode, Notes, Format, Extra | Queries for Some Specific Type Of Activity Locations | No Visualization, No Correlation among these Locations |
| [32] | Super Timeline data set | Timeline analysis with database | Date time, Mac, Source, Source type, Type, User, Host, Short, Desc, Version, Filename, Inode, Notes, Format, Extra | Grouping Of Data According to Log2timeline Output Attributes and Visualization Features Like Filtering, Highlighting and Word Search, etc. | No Correlation, Lack Of Advanced Visualization |
| [20] | Super Timeline data set | Automatic Timeline creation | Date time, Mac, Source, Source type, Type, User, Host, Short, Desc, Version, Filename, Inode, Notes, Format, Extra | Automatic Construction of a Super Timeline | Auto-Analysis, Correlation and Visualization |
| [35] | System Timestamps data set | Timeline visualization tool | Time, Source type, Event type, Information | Visualize Timeline On The Basis Of Time to find Coherent Evidence | No Correlation among Sources |
| [37] | Dynamic execution states of DLLs | Monitoring and comparison of dynamic execution states | DLL path, loading order of DLLs and RVAs (relative virtual addresses) | Lightweight non-intrusive virtual environment examination | No visualization, computational overhead |
| [38] | Malware data set from Google | Content and structure based feature extraction and fusion | n-gram, opcode, APIs, Metadata, Entropy, etc. | Obfuscated malware classification, hybrid approach (features from Hex based and disassembled files) | No visualization and high computation |
| [39] | Static API calls data set | API calls extraction and selection | API calls, Relevant API calls | Static data set creation and training classifier | No visualization, fewer features |
| [40] | Dynamic Registry, DLLs, and APIs called | Features extraction and selection | Registry keys, DLLs and called APIs | Dynamic data set creation and training model to check accuracy | No visualization, computational overhead |
| [14] | Dynamic function calls | Monitoring and Visualization | API calls | Rules applied, Visualization | Fewer features, No interactive visualization |
| [42] | API calls sequence data set | Features extraction and training classifier | API calls sequence | Hooking, preprocessing and training classifier | No visualization and computational overhead |

| Techniques | Datetime | Labeling | Weights | DLLs | File Activity | Registry | Threat Intelligence (TI) Rule Generation | Visualization | Sample Set | |
|---|---|---|---|---|---|---|---|---|---|---|
| FIViz | 🗸 | 🗸 | 🗸 | 🗸 | 0 | 0 | 🗸 | 🗸 | 🗸 | 400 |
| Behavioral signature based | 0 | 0 | 0 | 🗸 | 🗸 | 0 | 🗸 | 🗸 | 0 | 100 |
| Analysis through Wireshark features | 🗸 | 🗸 | 0 | 0 | 🗸 | 🗸 | 0 | 0 | 🗸 | 0 |
| Training classifier | 0 | 🗸 | 🗸 | 🗸 | 0 | 🗸 | 🗸 | 🗸 | 0 | 400 |
| Training classifier | 0 | 0 | 0 | 🗸 | 0 | 0 | 🗸 | 🗸 | 0 | data set 1 = 838; data set 2 = 1082 |

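| Description | Value |
|---|---|
| Null hypothesis (H₀) | μ(visualization) ≥ μ(textual) |
| Alternative hypothesis (H₁) | μ(visualization) < μ(textual) |
| Critical value | - |
| Significance level (α) | 0.05 |
| df | 14 |
| Visualization | 125.90 |
| Textual | 646.80 |
| t-value | −29.681 |
| p-value | 0.000 |
| Result | Significant; reject the null hypothesis |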
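A minimal sketch of the left-tailed test summarized in the table, assuming a paired design in which each of 15 participants used both methods (which would give df = 14; the design is not stated in this section). The per-participant times are not reproduced here, so the inputs below are hypothetical, and `paired_t` and `reject_h0` are illustrative names. The critical value 1.761 is the standard one-tailed t-table value for α = 0.05 and df = 14.

```python
import math
from statistics import mean, stdev

# One-tailed critical t for alpha = 0.05 and df = 14 (standard t-table value).
T_CRIT_ONE_TAILED_DF14 = 1.761


def paired_t(visual: list[float], textual: list[float]) -> tuple[float, int]:
    """Paired t-statistic for (visual - textual) times, with its degrees of freedom."""
    if len(visual) != len(textual) or len(visual) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [v - t for v, t in zip(visual, textual)]
    n = len(diffs)
    t_stat = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t_stat, n - 1


def reject_h0(t_stat: float, df: int) -> bool:
    """Left-tailed test of H1: visualization time < textual time (df = 14 only)."""
    if df != 14:
        raise ValueError("critical value tabulated here only for df = 14")
    return t_stat < -T_CRIT_ONE_TAILED_DF14
```

A strongly negative t, such as the −29.681 reported in the table, falls far below −1.761, which is why the null hypothesis is rejected in favor of visualization requiring less time.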

| Visual Techniques | Platform | API Calls | File Activity | DLL | Key Activity | Malware Analysis |
|---|---|---|---|---|---|---|
| Dendrogram or Tree [14] | Platform Independent | 🗸 | 🗸 | 0 | 0 | 🗸 |
| Link graph Time series [17] | Windows x64 | 0 | 0 | 0 | 🗸 | 🗸 |
| Tree map Thread graph [43] | Windows x64 | 0 | 0 | 0 | 🗸 | 🗸 |
| Timeline graph FIViz | Windows x64 | 🗸 | 🗸 | 🗸 | 0 | 🗸 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ahmad, I.; Shah, M.A.; Khattak, H.A.; Ameer, Z.; Khan, M.; Han, K. FIViz: Forensics Investigation through Visualization for Malware in Internet of Things. Sustainability 2020, 12, 7262. https://doi.org/10.3390/su12187262
