Optimized Energy Cost and Carbon Emission-Aware Virtual Machine Allocation in Sustainable Data Centers

Abstract

1. Introduction

- Optimal DVFS-based VM scheduling is performed to distribute the load among the servers to minimize the operating temperature.

- Formulation of an objective function for data center selection that considers varying carbon tax, electricity cost and carbon intensity.

- Investigation of the effect of renewable energy source-based data center selection on total cost, carbon cost and CO2 emission.

- The efficient utilization of VMs is carried out by appropriate VM sizing and mapping of containers to available VM types.

- The K-medoids algorithm is used to identify container types.

- Examination of the effect of workload-based tuning of the cooling load on total power consumption.

2. Related Works

2.1. DVFS and Energy-Aware VM Scheduling

2.2. Regional Diversity of Electricity Price and Carbon Footprint-Aware VM Scheduling in Multi-Cloud Green Data Centers

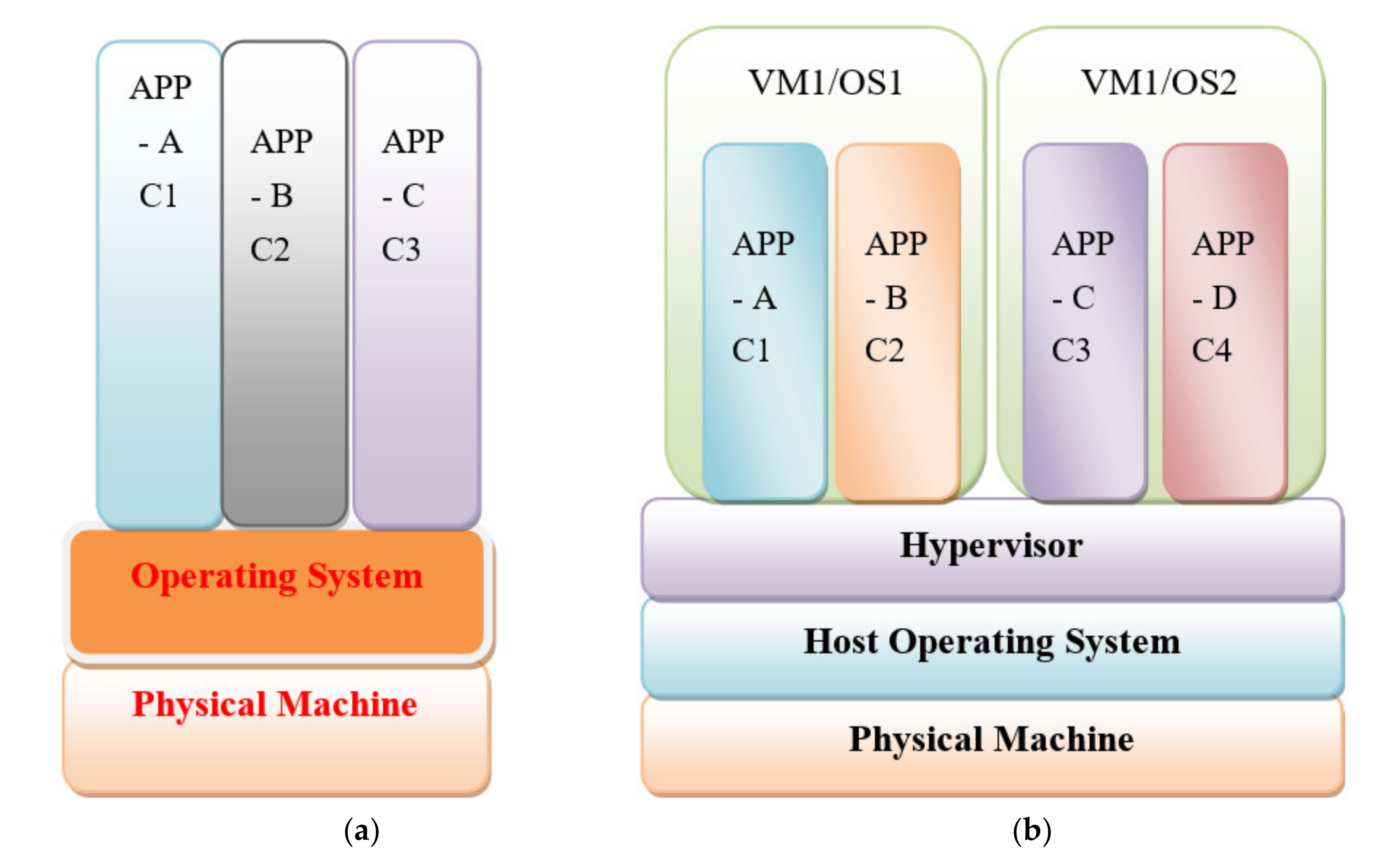

2.3. Containers

3. The Architecture of the Proposed System

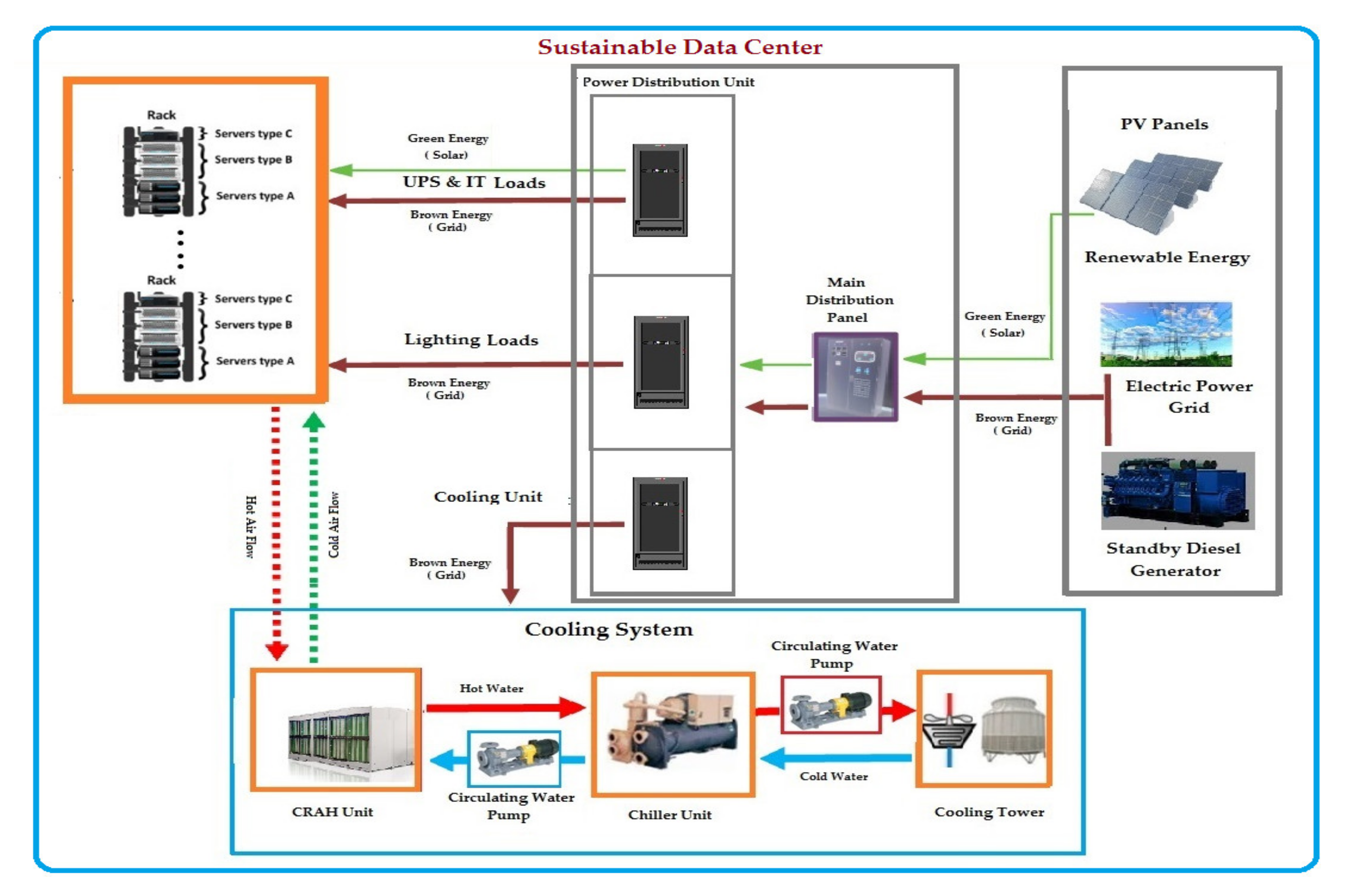

3.1. Sustainable Data Center Model

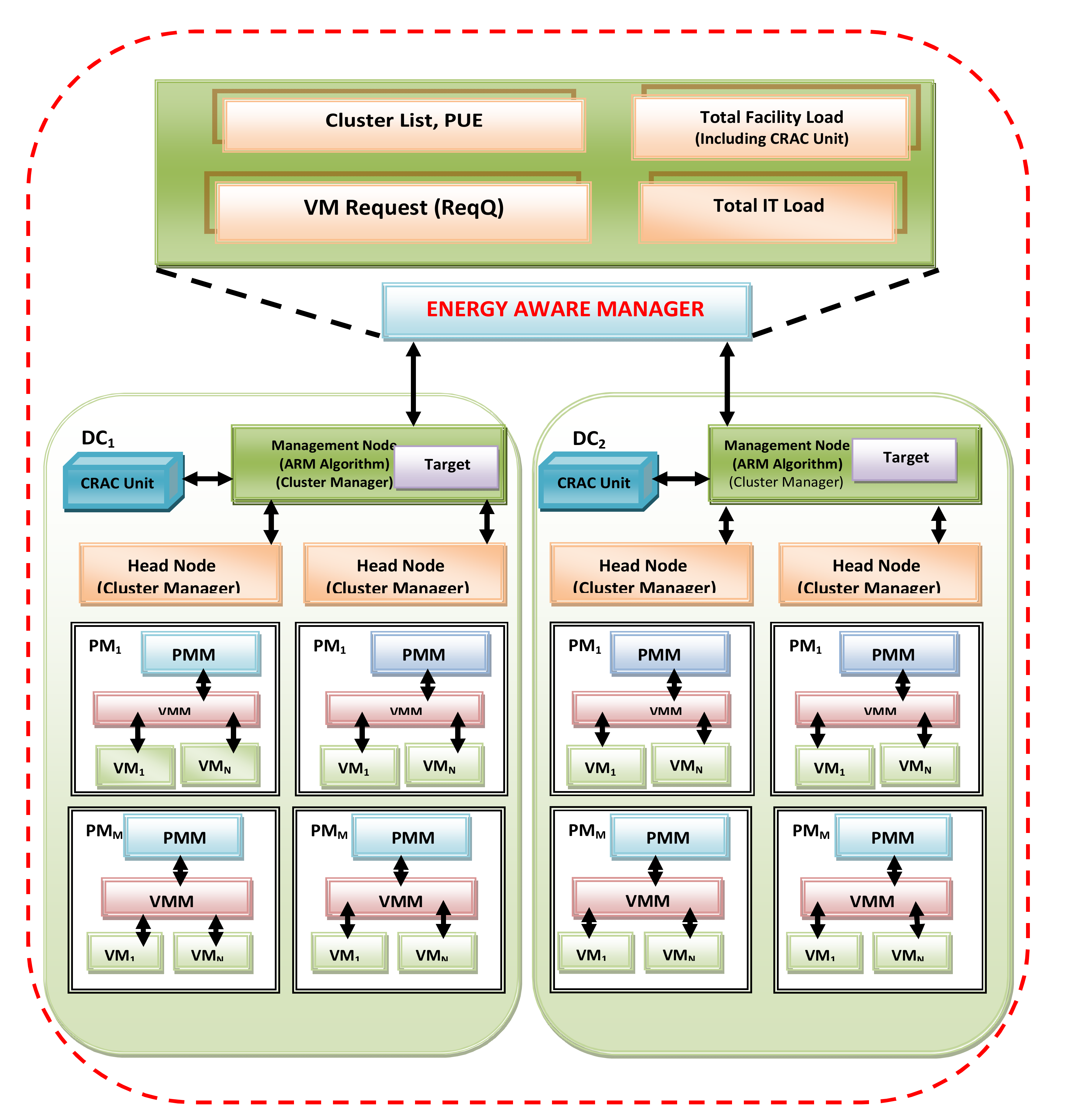

3.2. Proposed Structure of Management System Model

- Energy-Aware Manager (EAM): The data centers of a cloud provider are located at geo-distributed sites. In addition to physical servers, each data center has energy-related parameters: PUE, carbon footprint rate for its different energy sources, varying electricity prices and proportional power. The EAM is the centralized node responsible for coordinating the distribution of incoming requests. It directs each request to a data center so as to minimize operating cost, carbon footprint rate and energy consumption. Each data center registers its cloud information service with the EAM and updates it frequently. The EAM maintains information about the list of clusters, carbon footprint rate (CFR), data center PUE, total cooling load, server load, carbon tax, carbon cost, and the carbon intensity of the data centers.

- Management Node (MN): Each data center holds several clusters with heterogeneous servers. The cluster manager of each cluster reports the cluster’s current utilization, power consumption, and number of servers switched on or off to the MN. The MN receives user requests from the EAM and, based on cluster utilization, distributes the load to the clusters through their cluster managers. The main scheduling algorithm, the ARM algorithm (Algorithm 1), is responsible for allocating VMs to PMs and for de-allocating resources after VM termination. It is implemented in the management node.

- Cluster Manager (CM): Each cluster contains heterogeneous servers with different CPU and memory configurations. The power model of the systems in the cluster is considered homogeneous. Each node in the cluster reports its power consumption, resource utilization, number of running VMs, resource availability, and current temperature to the CM. The cluster manager is the head node of the cluster and maintains cluster details: total utilization, server power consumption, resource availability, power model, type of energy consumed (grid or green) and temperature of the cluster nodes.

- Physical Machine Manager (PMM): The PMM is a daemon responsible for maintaining the host CPU utilization percentage, resource allocation for VMs, power consumption, current server temperature, status of VM requests, number of VM requests received, and so on. The PMM shares its resources with the virtual machines and increases its utilization through the virtual machine manager (VMM). It is responsible for reporting the aforementioned details to the cluster manager.

- Virtual Machine Manager (VMM): The VMM uses virtualization technology to share the physical machine resources among the virtual machines with process isolation. It decides on the number of VMs to be hosted and the provisioning of resources to VMs, and it monitors each hosted VM’s utilization of physical machine resources. It maintains information about CPU utilization, memory utilization, power consumption, arrival time, execution time and remaining execution time of all active VMs, number of tasks under execution in each VM, current state of the VMs, and other resource and process information.
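The reporting path from cluster managers to the management node can be pictured with a small data-structure sketch. The class names, fields, and the "least-utilized cluster first" dispatch rule below are illustrative assumptions; the text only states that the MN distributes load based on cluster utilization.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterReport:
    """Status a cluster manager pushes to the management node (illustrative fields)."""
    name: str
    utilization: float   # fraction of cluster capacity in use, 0.0 to 1.0
    power_watts: float   # current cluster power draw
    servers_on: int      # number of servers switched on


@dataclass
class ManagementNode:
    """Per-data-center node that keeps the latest report from each cluster."""
    reports: dict = field(default_factory=dict)

    def update(self, report: ClusterReport) -> None:
        # A newer report from the same cluster replaces the older one.
        self.reports[report.name] = report

    def least_utilized_cluster(self) -> str:
        # Hypothetical dispatch rule: route the next request to the least-loaded cluster.
        return min(self.reports.values(), key=lambda r: r.utilization).name
```

The same pattern would repeat one level up (data centers reporting to the EAM) and one level down (nodes reporting to the CM).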

| Algorithm 1: ARM Algorithm Approach |

| Input: DCList, VMinstancelist |

| Output: TargetVMQ |

| 1 For each interval do |

| 2 ReqQ← Obtain VM request based on VMinstancelist; |

| 3 DCQ← Obtain data centers from DCList; |

| 4 TargetVMQ← Activate placement algorithm; |

| 5 If interval >min-exe-time then |

| 6 Compl-list← Collect executed VMs from TargetVMQ; |

| 7 For each VM in Compl-list do |

| 8 Recover the resources related to the VM; |

| 9 Return TargetVMQ. |
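As a rough illustration, one interval of Algorithm 1 could be sketched as follows. The function name `arm_step`, the `running` dictionary, and the pluggable `place` callback are assumptions made for this sketch; the placement policy itself (line 4 of the algorithm) is left abstract.

```python
def arm_step(interval, dc_list, vm_requests, running, place, min_exe_time):
    """Run one interval of the ARM loop and return the VMs whose resources were recovered.

    vm_requests: list of (vm_id, duration_in_intervals) arriving in this interval.
    running:     dict vm_id -> (finish_interval, host), updated in place.
    place:       placement policy, called as place(vm_id, dc_list) -> host or None.
    """
    # Lines 2-4: collect the requests and data centers, then invoke the placement policy.
    for vm_id, duration in vm_requests:
        host = place(vm_id, dc_list)
        if host is not None:
            running[vm_id] = (interval + duration, host)

    # Lines 5-8: once the minimum execution time has passed, recover finished VMs.
    released = []
    if interval > min_exe_time:
        finished = [vm for vm, (finish, _) in running.items() if finish <= interval]
        for vm_id in finished:
            _, host = running.pop(vm_id)
            released.append((vm_id, host))
    return released
```

Here `running` plays the role of TargetVMQ; a caller would invoke `arm_step` once per scheduling interval.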

4. Problem Formulation

4.1. Power Model of Server

4.2. Overhead Power Model

4.3. Green Energy

4.4. Carbon Cost (CC) and Electricity Cost (EC)

4.5. Objective Function

Constraints Associated with the Objective Function

4.6. Performance Metrics

5. VM Placement Policies

5.1. ARM Algorithm

5.2. Renewable and Total Cost-Aware First-Fit Optimal Frequency VM Placement (RC-RFFF)

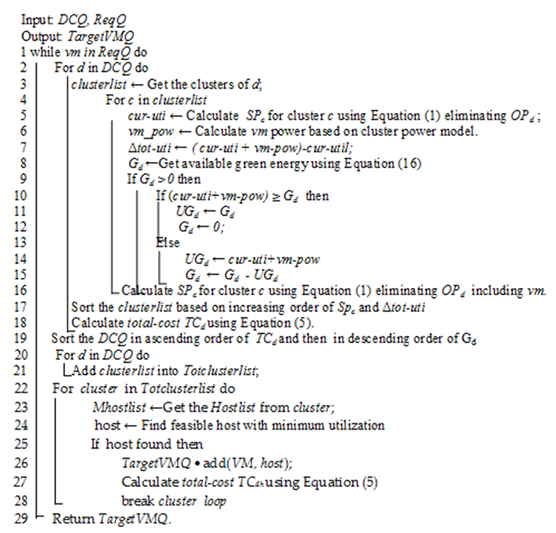

| Algorithm 2: ARM RC-FFF Virtual Machine Placement Algorithm |


- Step 1: Lines 2–18 identify the data center in which to schedule the VM based on renewable energy availability.

- Step 2: Line 17 sorts the clusters within the data centers in increasing order of their energy consumption.

- Step 3: Line 19 sorts the data centers, first in increasing order of total cost (the electricity cost and carbon tax of renewable energy are set to 0) and then in non-increasing order of green energy availability.

- Step 4: Lines 22–28 perform on-demand dynamic optimal frequency-based node selection within the cluster to decide the placement of the VM.
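The ordering logic in these steps can be sketched in a few lines. This is a simplified illustration, not the paper's Algorithm 2: the dictionary keys (`grid_cost_per_kwh`, `green_kwh`, `energy`, `free_cpu`) are hypothetical, and the DVFS optimal-frequency selection of Step 4 is replaced here by a plain first-fit capacity check.

```python
def effective_cost(dc):
    """Total cost per kWh; treated as 0 while the data center has renewable energy."""
    return 0.0 if dc["green_kwh"] > 0 else dc["grid_cost_per_kwh"]


def order_data_centers(dcs):
    # Increasing total cost first, then non-increasing green energy availability.
    return sorted(dcs, key=lambda d: (effective_cost(d), -d["green_kwh"]))


def first_fit(dcs, vm_cpu):
    """Return (data center, cluster, node) of the first node that can host the VM."""
    for dc in order_data_centers(dcs):
        # Clusters are visited in increasing order of energy consumption.
        for cluster in sorted(dc["clusters"], key=lambda c: c["energy"]):
            for node in cluster["nodes"]:
                if node["free_cpu"] >= vm_cpu:
                    node["free_cpu"] -= vm_cpu  # reserve capacity on the chosen node
                    return dc["name"], cluster["name"], node["name"]
    return None  # no node in any data center can host the VM
```

Because Python's `sorted` is stable and the key is a tuple, data centers with equal cost are ordered by green energy, which mirrors the two-level sort described in Step 3.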

5.3. Cost-Aware First-Fit Optimal Frequency VM Placement (C-FFF)

5.4. Renewable and Energy Cost-Aware First-Fit Optimal Frequency VM Placement (REC-RFFF)

5.5. Energy Cost with First-Fit Optimal Frequency VM Placement (EC-FFF)

5.6. Renewable and Carbon Footprint-Aware First-Fit Optimal Frequency VM Placement (RCF-RFFF)

5.7. Carbon Footprint Rate-Aware First-Fit Optimal Frequency VM Placement (CF-FFF)

5.8. Renewable and Carbon Cost-Aware First-Fit Optimal Frequency VM Placement (RCC-RFFF)

5.9. Carbon Cost-Aware First-Fit Optimal Frequency VM Placement (CC-FFF)

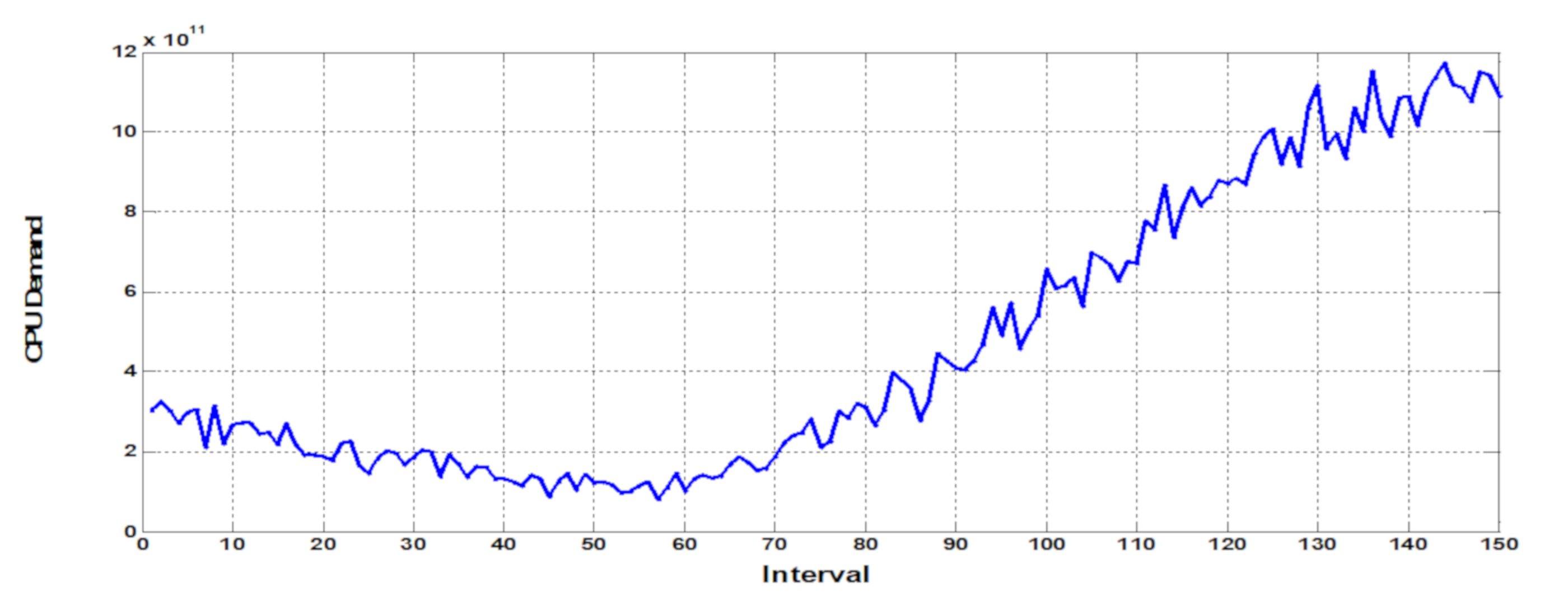

6. Google Cluster Workload Overview

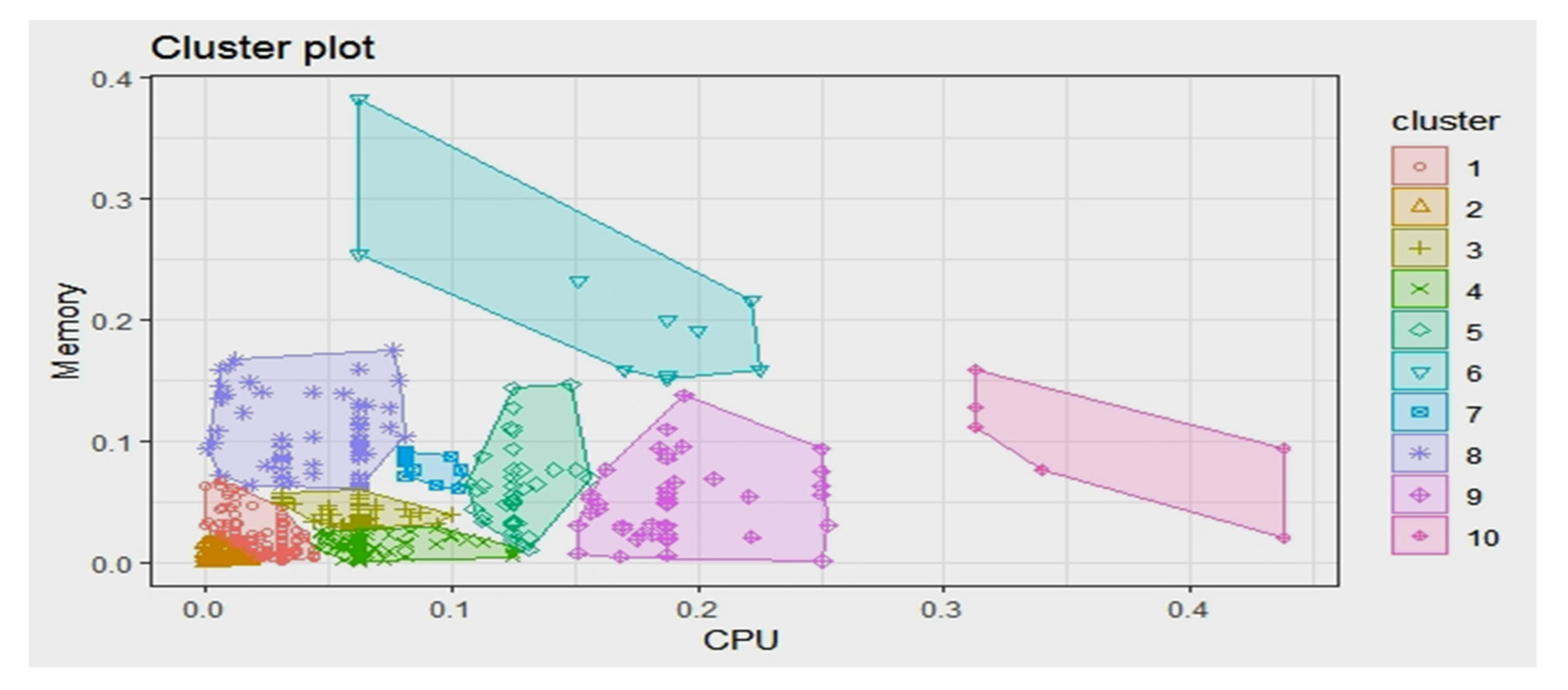

6.1. K-Medoids Clustering

- Step 1: K data points from the dataset are selected as the initial medoids.

- Step 2: Calculate the Euclidean distance and associate every data point with the closest medoid.

- Step 3: A selected medoid is swapped with a new (non-medoid) object when the swap improves the objective.

- Step 4: Steps 2 and 3 are repeated until there is no change in the medoids.

- The current cluster member may be shifted out to another cluster.

- Other cluster members may be assigned to the current cluster with a new medoid.

- The current medoid may be replaced by a new medoid.

- The redistribution does not change the objects in the cluster, resulting in a smaller squared-error criterion.
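The four steps above can be sketched as a small, dependency-free routine. This is a minimal PAM-style illustration under stated assumptions: the function names are hypothetical, the objective is taken to be the sum of Euclidean distances from each point to its closest medoid, and the initial medoids are drawn at random.

```python
import math
import random


def total_cost(points, medoids):
    """Sum of Euclidean distances from each point to its closest medoid."""
    return sum(min(math.dist(p, m) for m in medoids) for p in points)


def k_medoids(points, k, seed=0):
    rng = random.Random(seed)
    medoids = rng.sample(points, k)              # Step 1: pick k initial medoids
    best = total_cost(points, medoids)
    improved = True
    while improved:                              # Step 4: repeat until no swap helps
        improved = False
        for i in range(k):
            for candidate in points:             # Step 3: try swapping medoid i
                if candidate in medoids:
                    continue
                trial = medoids[:i] + [candidate] + medoids[i + 1:]
                cost = total_cost(points, trial)
                if cost < best:                  # keep the swap only if it lowers the cost
                    medoids, best, improved = trial, cost, True
    # Step 2: assign every point to its closest medoid
    labels = [min(range(k), key=lambda j: math.dist(p, medoids[j])) for p in points]
    return medoids, labels
```

For task clustering, each point would be a tuple of resource features such as (CPU request, memory request); unlike K-means, the resulting cluster centers are always actual observations from the dataset.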

6.2. Characteristics of Task Clusters

6.3. Resource Request-Based Optimal VM Sizing for Container Services (CaaS)

6.4. Determine Optimum Number of Tasks for VM Types

| Algorithm 3: Identify optimum number of tasks from each cluster for a VM type |

| Input: Task-List, VM-instancelist |

| Output: NT (task-type, VMtype) |

| For each tasktype in Task-List |

| For each VMtype in VM-instancelist |

| Nt = Find the minimum number of tasks of tasktype that causes maximum utilization of VMtype resources, i.e., Min (Ntmax-CPU, Ntmax-Mem) |

| NT (tasktype, VMtype).add(Nt) |

| End |

| End |
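A minimal sketch of Algorithm 3 follows, assuming that Ntmax-CPU and Ntmax-Mem are the largest whole numbers of tasks that fit within a VM type's CPU and memory, respectively. The function name and the dictionary layout are hypothetical, and the figures in the usage example are illustrative rather than taken from the paper's tables.

```python
import math


def tasks_per_vm(task_types, vm_types):
    """Map (task type, VM type) to the number of tasks that fills the VM.

    task_types: dict name -> (cpu_request, mem_request)
    vm_types:   dict name -> (cpu_capacity, mem_capacity), in the same units
    """
    nt = {}
    for task_name, (task_cpu, task_mem) in task_types.items():
        for vm_name, (vm_cpu, vm_mem) in vm_types.items():
            max_by_cpu = math.floor(vm_cpu / task_cpu)   # Ntmax-CPU
            max_by_mem = math.floor(vm_mem / task_mem)   # Ntmax-Mem
            # The scarcer resource bounds how many tasks the VM can hold.
            nt[(task_name, vm_name)] = min(max_by_cpu, max_by_mem)
    return nt


# Illustrative values only: (vCPU, memory in GB).
example = tasks_per_vm(
    {"small": (0.5, 1.5), "large": (3.0, 2.0)},
    {"vm-a": (2.0, 4.0), "vm-b": (4.0, 8.0)},
)
```

In this sketch a result of 0 means the VM type cannot host even one task of that type.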

7. Performance Evaluation

7.1. Experimental Environment for Investigation of Resource Allocation Policies

7.1.1. Data Center Power Requirement

7.1.2. Data Center Physical Machine Configuration

7.1.3. Solar Energy

7.2. Experimental Results

7.2.1. Energy and Cost Efficiency of the Proposed Algorithms

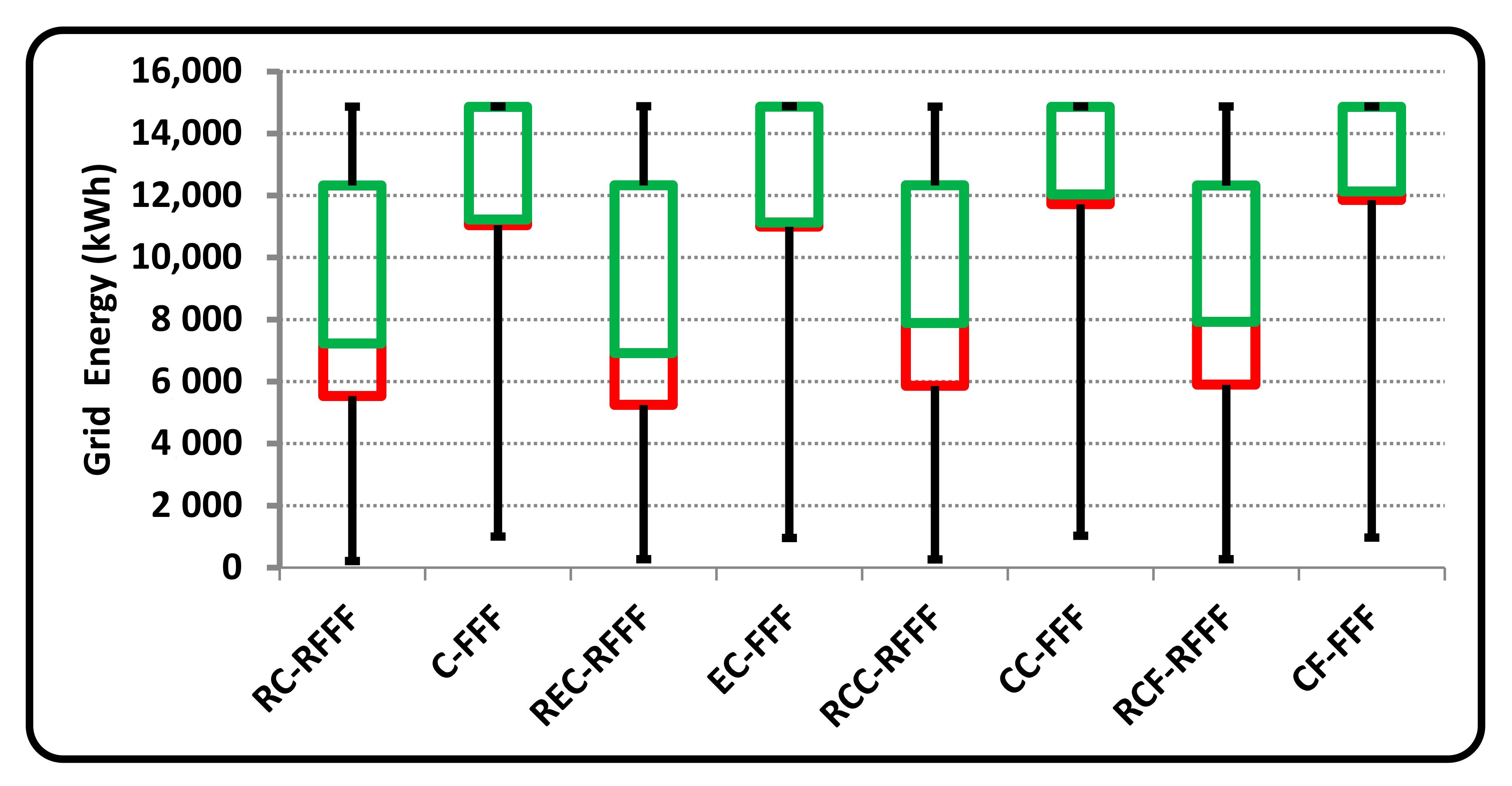

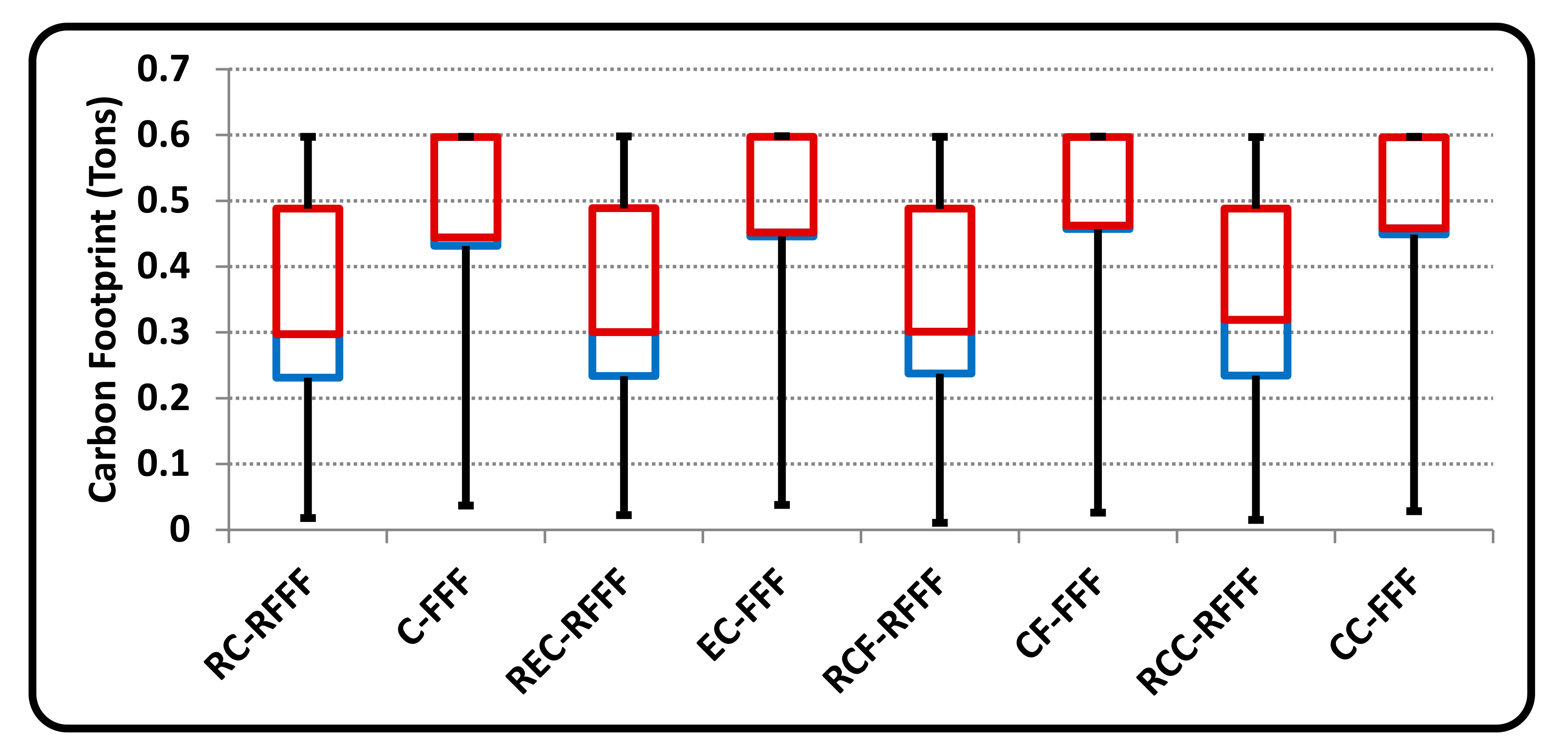

7.2.2. Discussion on Grid Energy Consumption and Carbon Footprint Emission

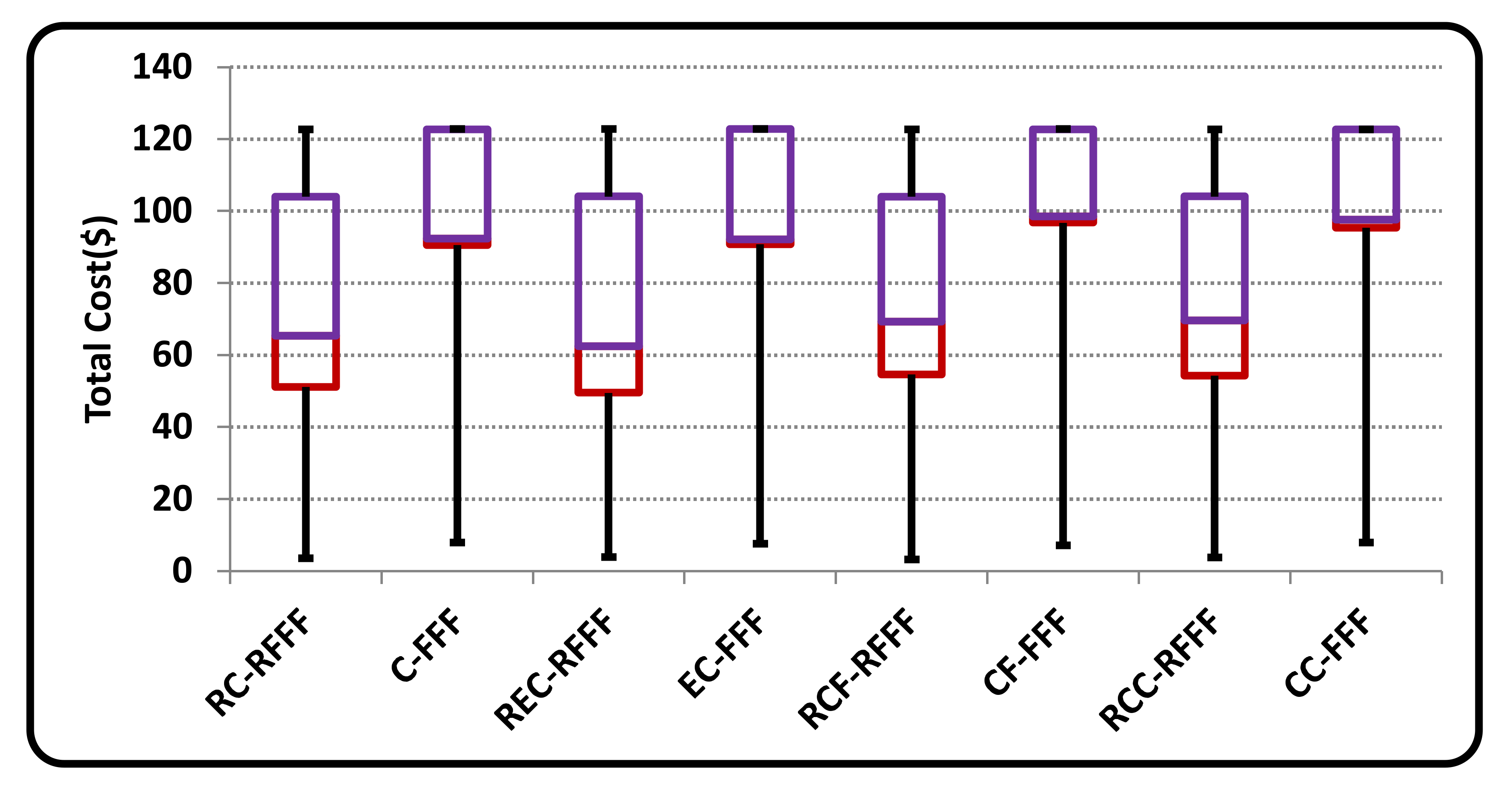

7.2.3. Discussion on Total Cost

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Ghatikar, G. Demand Response Opportunities and Enabling Technologies for Data Centers: Findings from Field Studies. Available online: https://escholarship.org/uc/item/7bh6n6kt (accessed on 10 January 2020). [CrossRef]

- Hamilton, J. Cooperative expendable micro-slice servers (CEMS): Low cost, low power servers for internet-scale services. In Proceedings of the Conference on Innovative Data Systems Research (CIDR’09), Asilomar, CA, USA, 4–7 January 2009. [Google Scholar]

- Grid, G. The Green Grid Power Efficiency Metrics: PUE & DCiE. 2007. Available online: https://www.missioncriticalmagazine.com/ext/resources/MC/Home/Files/PDFs/TGG_Data_Center_Power_Efficiency_Metrics_PUE_and_DCiE.pdf (accessed on 10 January 2020).

- Belady, C.; Andy, R.; John, P.; Tahir, C. Green Grid Data Center Power Efficiency Metrics: PUE and DCIE; Technical Report; Green Grid: Beaverton, OR, USA, 2008; Available online: https://www.academia.edu/23433359/Green_Grid_Data_Center_Power_Efficiency_Metrics_Pue_and_Dcie (accessed on 10 January 2020).

- Huang, W.; Allen-Ware, M.; Carter, J.B.; Elnozahy, E.; Hamann, H.; Keller, T.; Lefurgy, C.; Li, J.; Rajamani, K.; Rubio, J. TAPO: Thermal-aware power optimization techniques for servers and data centers. In Proceedings of the 2011 International Green Computing Conference and Workshops, Orlando, FL, USA, 25–28 July 2011; pp. 1–8. [Google Scholar] [CrossRef]

- Breen, T.J.; Walsh, E.J.; Punch, J.; Shah, A.J.; Bash, C.E. From chip to cooling tower data center modeling: Part I Influence of server inlet temperature and temperature rise across cabinet. In Proceedings of the 12th IEEE Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems, Las Vegas, NV, USA, 2–5 June 2010; pp. 1–10. [Google Scholar] [CrossRef]

- Mukherjee, R.; Memik, S.O.; Memik, G. Temperature-aware resource allocation and binding in high-level synthesis. In Proceedings of the 42nd Annual Design Automation Conference, Anaheim, CA, USA, 13–17 June 2005; pp. 196–201. [Google Scholar] [CrossRef]

- Akbar, S.; Malik, S.U.R.; Khan, S.U.; Choo, R.; Anjum, A.; Ahmad, N. A game-based thermal-aware resource allocation strategy for data centers. IEEE Trans. Cloud Comput. 2019. [Google Scholar] [CrossRef]

- Villebonnet, V.; Da Costa, G. Thermal-aware cloud middleware to reduce cooling needs. In Proceedings of the 2014 IEEE 23rd International WETICE Conference, Parma, Italy, 23–25 June 2014; pp. 115–120. [Google Scholar] [CrossRef][Green Version]

- Song, M.; Zhu, H.; Fang, Q.; Wang, J. Thermal-aware load balancing in a server rack. In Proceedings of the 2016 IEEE Conference on Control Applications (CCA), Buenos Aires, Argentina, 19–22 September 2016; pp. 462–467. [Google Scholar] [CrossRef]

- Latest Microsoft Datacenter Design Gets Close to Unity PUE. Available online: https://www.datacenterknowledge.com/archives/2016/09/27/latest-microsoft-data-center-design-gets-close-to-unity-pue (accessed on 10 January 2020).

- Shehabi, A.; Smith, S.J.; Horner, N.; Azevedo, I.; Brown, R.; Koomey, J.; Masanet, E.; Sartor, D.; Herrlin, M.; Lintner, W. United States Data Center Energy Usage Report; Lawrence Berkeley National Laboratory: Berkeley, CA, USA, 2016. Available online: https://www.osti.gov/servlets/purl/1372902/ (accessed on 10 March 2020).

- APAC Datacenter Survey Reveals High PUE Figures Across the Region. Available online: https://www.datacenterdynamics.com/news/apac-data-center-survey-reveals-high-pue-figures-across-the-region/ (accessed on 10 January 2020).

- Varasteh, A.; Tashtarian, F.; Goudarzi, M. On reliability-aware server consolidation in cloud datacenters. In Proceedings of the 2017 16th International Symposium on Parallel and Distributed Computing (ISPDC), Innsbruck, Austria, 3–6 July 2017; pp. 95–101. [Google Scholar] [CrossRef]

- Wang, X.; Du, Z.; Chen, Y.; Yang, M. A Green-Aware Virtual Machine Migration Strategy for Sustainable Datacenter Powered by Renewable Energy. Simul. Model. Pract. Theory 2015, 58, 3–14. [Google Scholar] [CrossRef]

- Apple Now Globally Powered by 100 Percent Renewable Energy. Available online: https://www.apple.com/newsroom/2018/04/apple-now-globally-powered-by-100-percent-renewable-energy/ (accessed on 10 January 2020).

- Google Environmental Report 2018. Available online: https://sustainability.google/reports/environmental-report-2019 (accessed on 10 January 2020).

- Microsoft Says Its Datacenters Will Use 60% Renewable Energy by 2020. Available online: https://venturebeat.com/microsoft-says-it-now-uses-60-renewable-energy-to-power-its-data-centers/ (accessed on 2 February 2020).

- Renugadevi, T.; Geetha, K.; Prabaharan, N.; Siano, P. Carbon-Efficient Virtual Machine Placement Based on Dynamic Voltage Frequency Scaling in Geo-Distributed Cloud Data Centers. Appl. Sci. 2020, 10, 2701. [Google Scholar] [CrossRef]

- Pahl, C.; Brogi, A.; Jamshidi, J.S. Cloud Container Technologies: A State-of-the-Art Review. IEEE Trans. Cloud Comput. 2019, 7, 677–692. [Google Scholar] [CrossRef]

- Shuja, J.; Gani, A.; Shamshirband, S.; Ahmad, R.W.; Bilal, K. Sustainable Cloud Data Centers: A Survey of Enabling Techniques and Technologies. Renew. Sustain. Energy Rev. 2016, 62, 195–214. [Google Scholar] [CrossRef]

- Borgetto, D.; Casanova, H.; Da Costa, G.; Pierson, J.M. Energy-Aware Service Allocation. Future Gener. Comput. Syst. 2012, 28, 769–779. [Google Scholar] [CrossRef]

- Terzopoulos, G.; Karatza, H. Performance Evaluation and Energy Consumption of a Real-Time Heterogeneous Grid System Using DVS and DPM. Simul. Model. Pract. Theory 2013, 36, 33–43. [Google Scholar] [CrossRef]

- Tanelli, M.; Ardagna, D.; Lovera, M.; Zhang, L. Model Identification for Energy-Aware Management of Web Service Systems. In Service-Oriented Computing—ICSOC 2008: Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; Volume 5364, pp. 599–606. [Google Scholar] [CrossRef]

- Wu, C.-M.; Chang, R.-S.; Chan, H.-Y. A Green Energy-Efficient Scheduling Algorithm Using the DVFS Technique for Cloud Datacenters. Future Gener. Comput. Syst. 2014, 37, 141–147. [Google Scholar] [CrossRef]

- Wang, L.; von Laszewski, G.; Dayal, J.; Wang, F. Towards energy aware scheduling for precedence constrained parallel tasks in a cluster with DVFS. In Proceedings of the 2010 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing, IEEE Computer Society, Melbourne, VIC, Australia, 17–20 May 2010; pp. 368–377. [Google Scholar] [CrossRef]

- Guérout, T.; Monteil, T.; da Costa, G.; Calheiros, R.N.; Buyya, R.; Alexandru, M. Energy-Aware Simulation with DVFS. Simul. Model. Pract. Theory 2013, 39, 76–91. [Google Scholar] [CrossRef]

- Rossi, F.D.; Xavier, M.G.; de Rose, C.A.; Calheiros, R.N.; Buyya, R. E-Eco: Performance-Aware Energy-Efficient Cloud Data Center Orchestration. J. Netw. Comput. Appl. 2017, 78, 83–96. [Google Scholar] [CrossRef]

- Wang, S.; Qian, Z.; Yuan, J.; You, I. A DVFS Based Energy-Efficient Tasks Scheduling in a Data Center. IEEE Access 2017, 5, 13090–13102. [Google Scholar] [CrossRef]

- Cotes-Ruiz, I.T.; Prado, R.P.; García-Galán, S.; Muñoz-Expósito, J.E.; Ruiz-Reyes, N. Dynamic Voltage Frequency Scaling Simulator for Real Workflows Energy-Aware Management in Green Cloud Computing. PLoS ONE 2017, 12, e0169803. [Google Scholar] [CrossRef] [PubMed]

- Deng, N.; Stewart, C.; Gmach, D.; Arlitt, M. Policy and mechanism for carbon-aware cloud applications. In Proceedings of the 2012 IEEE Network Operations and Management Symposium, Maui, HI, USA, 16–20 April 2012; pp. 590–594. [Google Scholar] [CrossRef]

- Le, K.; Bianchini, R.; Martonosi, M.; Nguyen, T.D. Cost-and energy-aware load distribution across data centers. In Proceedings of the SOSP Workshop on Power Aware Computing and Systems (Hot Power 2009), Big Sky, MT, USA, 10 October 2009; pp. 1–5. [Google Scholar]

- Chen, C.; He, B.; Tang, X. Green-aware workload scheduling in geographically distributed data centers. In Proceedings of the 4th IEEE International Conference on Cloud Computing Technology and Science Proceedings, Taipei, Taiwan, 3–6 December 2012; pp. 82–89. [Google Scholar] [CrossRef]

- Giacobbe, M.; Celesti, A.; Fazio, M.; Villari, M.; Puliafito, A. An approach to reduce energy costs through virtual machine migrations in cloud federation. In Proceedings of the 2015 IEEE Symposium on Computers and Communication (ISCC), Larnaca, Cyprus, 6–9 July 2015; pp. 782–787. [Google Scholar] [CrossRef]

- Giacobbe, M.; Celesti, A.; Fazio, M.; Villari, M.; Puliafito, A. Evaluating a cloud federation ecosystem to reduce carbon footprint by moving computational resources. In Proceedings of the 2015 IEEE Symposium on Computers and Communication (ISCC), Larnaca, Cyprus, 6–9 July 2015; pp. 99–104. [Google Scholar] [CrossRef]

- Lin, M.; Liu, Z.; Wierman, A.; Andrew, L.L. Online algorithms for geographical load balancing. In Proceedings of the 2012 International Green Computing Conference (IGCC), San Jose, CA, USA, 4–8 June 2012; pp. 1–10. [Google Scholar] [CrossRef]

- Rao, L.; Liu, X.; Xie, L.; Liu, W. Minimizing electricity cost: Optimization of distributed internet data centers in a multi-electricity-market environment. In Proceedings of the 2010 Proceedings IEEE INFOCOM, San Diego, CA, USA, 14–19 March 2010; pp. 1–9. [Google Scholar] [CrossRef]

- Khosravi, A.; Andrew, L.L.; Buyya, R. Dynamic VM Placement Method for Minimizing Energy and Carbon Cost in Geographically Distributed Cloud Data Centers. IEEE Trans. Sustain. Comput. 2017, 2, 183–196. [Google Scholar] [CrossRef]

- Goiri, Í.; Katsak, W.; Le, K.; Nguyen, T.D.; Bianchini, R. Parasol and greenswitch: Managing data centers powered by renewable energy. In ACM SIGARCH Computer Architecture News; ACM SIGPLAN Notices: Houston, TX, USA, 2013; pp. 51–64. [Google Scholar] [CrossRef]

- Deng, N.; Stewart, C.; Gmach, D.; Arlitt, M.; Kelley, J. Adaptive green hosting. In Proceedings of the 9th International Conference on Autonomic Computing, ACM, San Jose, CA, USA, 16–20 September 2012; pp. 135–144. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, Y.; Wang, X. GreenWare: Greening cloud-scale data centers to maximize the use of renewable energy. In Proceedings of the ACM/IFIP/USENIX International Conference on Distributed Systems Platforms and Open Distributed Processing, Lisbon, Portugal, 12–16 December 2011; pp. 143–164. [Google Scholar] [CrossRef]

- Bird, S.; Achuthan, A.; Maatallah, O.A.; Hu, W.; Janoyan, K.; Kwasinski, A.; Matthews, J.; Mayhew, D.; Owen, J.; Marzocca, P. Distributed (Green) Data Centers: A New Concept for Energy, Computing, and Telecommunications. Energy Sustain. Dev. 2014, 19, 83–91. [Google Scholar] [CrossRef]

- Liu, Z.; Lin, M.; Wierman, A.; Low, S.H.; Andrew, L.L. Greening geographical load balancing. In Proceedings of the ACM SIGMETRICS Joint International Conference on Measurement and Modeling of Computer Systems, ACM, San Jose, CA, USA, 7–11 June 2011; pp. 233–244. [Google Scholar] [CrossRef]

- Liu, Z.; Chen, Y.; Bash, C.; Wierman, A.; Gmach, D.; Wang, Z.; Marwah, M.; Hyser, C. Renewable and cooling aware workload management for sustainable data centers. In Proceedings of the 12th ACM SIGMETRICS/PERFORMANCE Joint International Conference on Measurement and Modeling of Computer Systems, London, UK, 11–15 June 2012; pp. 175–186. [Google Scholar] [CrossRef]

- Toosi, A.N.; Qu, C.; de Assunção, M.D.; Buyya, R. Renewable-Aware Geographical Load Balancing of Web Applications for Sustainable Data Centers. J. Netw. Comput. Appl. 2017, 83, 155–168. [Google Scholar] [CrossRef]

- Chen, T.; Zhang, Y.; Wang, X.; Giannakis, G.B. Robust geographical load balancing for sustainable data centers. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP, Shanghai, China, 20–25 March 2016; pp. 3526–3530. [Google Scholar] [CrossRef]

- Adnan, M.A.; Sugihara, R.; Gupta, R.K. Energy efficient geographical load balancing via dynamic deferral of workload. In Proceedings of the 2012 IEEE Fifth International Conference on Cloud Computing, Honolulu, HI, USA, 24–29 June 2012; pp. 188–195. [Google Scholar] [CrossRef]

- Neglia, G.; Sereno, M.; Bianchi, G. Geographical Load Balancing across Green Datacenters: A Mean Field Analysis. ACM SIGMETRICS Perform. Eval. Rev. 2016, 44, 64–69. [Google Scholar] [CrossRef]

- Dua, R.; Raja, A.R.; Kakadia, D. Virtualization vs. containerization to support PaaS. In Proceedings of the 2014 IEEE International Conference on Cloud Engineering, (IC2E), Boston, MA, USA, 11–14 March 2014; pp. 610–614. [Google Scholar] [CrossRef]

- Felter, W.; Ferreira, A.; Rajamony, R.; Rubio, J. An updated performance comparison of virtual machines and Linux containers. In Proceedings of the 2015 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), Philadelphia, PA, USA, 29–31 March 2015; pp. 171–172. [Google Scholar] [CrossRef]

- Kozhirbayev, Z.; Sinnott, R.O. A Performance Comparison of Container-Based Technologies for the Cloud. Future Gener. Comput. Syst. 2017, 68, 175–182. [Google Scholar] [CrossRef]

- Tao, Y.; Wang, X.; Xu, X.; Chen, Y. Dynamic resource allocation algorithm for container-based service computing. In Proceedings of the IEEE 13th International Symposium on Autonomous Decentralized System (ISADS), Bangkok, Thailand, 22–24 March 2017; pp. 61–67. [Google Scholar] [CrossRef]

- Raj, V.K.M.; Shriram, R. Power aware provisioning in cloud computing environment. In Proceedings of the 2011 International Conference on Computer, Communication and Electrical Technology (ICCCET), Tamilnadu, India, 18–19 March 2011; pp. 6–11. [Google Scholar] [CrossRef]

- Tchana, A.; Palma, N.D.; Safieddine, I.; Hagimont, D.; Diot, B.; Vuillerme, N. Software consolidation as an efficient energy and cost saving solution for a SaaS/PaaS cloud model. In Proceedings of the 21st International Conference on Parallel and Distributed Computing, Vienna, Austria, 24–28 August 2015; pp. 305–316. [Google Scholar] [CrossRef]

- Zhang, X.; Wu, T.; Chen, M.; Wei, T.; Zhou, J.; Hu, S.; Buyya, R. Energy-Aware Virtual Machine Allocation for Cloud with Resource Reservation. J. Syst. Softw. 2019, 147, 147–161. [Google Scholar] [CrossRef]

- Moore, J.D.; Chase, J.S.; Ranganathan, P.; Sharma, R.K. Making Scheduling “Cool”: Temperature-Aware Workload Placement in Data Centers. In Proceedings of the USENIX Annual Technical Conference, Marriott Anaheim, CA, USA, 10–15 April 2005; pp. 61–75. [Google Scholar]

- Wang, L.; Khan, S.U.; Dayal, J. Thermal Aware Workload Placement with Task-Temperature Profiles in a Data Center. J. Supercomput. 2012, 61, 780–803. [Google Scholar] [CrossRef]

- Three Versions of the Cloud Dataset. Available online: https://github.com/google/cluster-data (accessed on 9 December 2019).

- Google Workload Version 2. Available online: https://github.com/google/cluster-data/blob/master/ClusterData2011_2.md (accessed on 10 January 2020).

- Sawyer, R. Calculating Total Power Requirements for Data Centers. White Paper, American Power Conversion. 2004. Available online: http://accessdc.net/Download/Access_PDFs/pdf1/Calculating%20Total%20Power%20Requirements%20for%20Data%20Centers.pdf (accessed on 9 December 2019).

- Standard Performance Evaluation Corporation. SPEC Power 2008; Standard Performance Evaluation Corporation: Gainesville, VA, USA, 2008; Available online: http://www.spec.org/power_ssj2008 (accessed on 9 December 2019).

- Appendix F. Electricity Emission Factors. Available online: http://cloud.agroclimate.org/tools/deprecated/carbonFootprint/references/Electricity_emission_factor.pdf (accessed on 2 February 2020).

- EIA-Electricity Data. Available online: https://www.eia.gov/electricity/monthly/ (accessed on 9 December 2019).

- The Hourly Solar Irradiance and Temperature Data. Available online: http://www.soda-pro.com/web-services/radiation/nasa-sse (accessed on 2 February 2020).

- Solarbayer: Energy Efficient Heating Systems by Renewable Heat Production. Available online: https://www.solarbayer.com/ (accessed on 2 February 2020).

- Nguyen, D.T.; Le, L.B. Optimal Bidding Strategy for Microgrids Considering Renewable Energy and Building Thermal Dynamics. IEEE Trans. Smart Grid 2014, 5, 1608–1620. [Google Scholar] [CrossRef]

- Lublin, U.; Feitelson, D.G. The Workload on Parallel Supercomputers: Modeling the Characteristics of Rigid Jobs. J. Parallel Distrib. Comput. 2003, 63, 1105–1122. [Google Scholar] [CrossRef]

| Ref. No. | Approach | Environment | Metrics Considered | |||||

|---|---|---|---|---|---|---|---|---|

| DVFS | Green Energy | Workload Shifting | Multi-Cloud | Energy | Cost of Electricity | SLA | Carbon Footprint | |

| [25] | Yes | Yes | ||||||

| [26] | Yes | Yes | Yes | |||||

| [27] | Yes | Yes | Yes | |||||

| [28] | Yes | Yes | Yes | |||||

| [44] | Yes | Yes | Yes | Yes | ||||

| [46] | Yes | Yes | Yes | Yes | ||||

| [45] | Yes | Yes | Yes | Yes | ||||

| [47] | Yes | Yes | Yes | Yes | Yes | |||

| [48] | Yes | Yes | Yes | Yes | Yes | |||

| [38] | Yes | Yes | Yes | Yes | Yes | |||

| [39] | Yes | Yes | Yes | Yes | ||||

| Proposed Approach | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Cluster Type | vCPU | Memory (MB) |

|---|---|---|

| 1 | 0.5 | 186.496 |

| 2 | 2.5 | 1889.28 |

| 3 | 6 | 4890.88 |

| 4 | 6.25 | 2234.88 |

| 5 | 12.5 | 9781.76 |

| 6 | 22.19 | 27,686.4 |

| 7 | 8.5 | 9781.76 |

| 8 | 6.25 | 10,968.32 |

| 9 | 18.75 | 7304.96 |

| 10 | 30 | 9781.76 |

| Task Type | VM Type-1 | VM Type-2 | VM Type-3 | VM Type-4 | VM Type-5 |

|---|---|---|---|---|---|

| 1 | 12 | 24 | 48 | 36 | 60 |

| 2 | 2 | 5 | 7 | - | 12 |

| 3 | 1 | 2 | 3 | - | 5 |

| 4 | - | 2 | 4 | 3 | 5 |

| 5 | - | - | - | 1 | 2 |

| 6 | - | - | - | - | 1 |

| 7 | - | 1 | - | 2 | 3 |

| 8 | - | - | - | 1 | 3 |

| 9 | - | - | 1 | - | 2 |

| 10 | - | - | - | - | 1 |

| Machines | Core Speed (GHz) | No. of Cores | Power Model | Memory (GB) |

|---|---|---|---|---|

| M1 | 1.7 | 2 | 1 | 16 |

| M2 | 1.7 | 4 | 1 | 32 |

| M3 | 1.7 | 8 | 2 | 32 |

| M4 | 2.4 | 8 | 2 | 64 |

| M5 | 2.4 | 8 | 2 | 128 |

| Power Model | Idle | 10% Utilization | 20% Utilization | 30% Utilization | 40% Utilization | 50% Utilization | 60% Utilization | 70% Utilization | 80% Utilization | 90% Utilization | 100% Utilization |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 60 | 63 | 66.8 | 71.3 | 76.8 | 83.2 | 90.7 | 100 | 111.5 | 125.4 | 140.7 |

| 2 | 41.6 | 46.7 | 52.3 | 57.9 | 65.4 | 73 | 80.7 | 89.5 | 99.6 | 105 | 113 |
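A table of this kind gives power only at 10% utilization steps. One common way to obtain power at intermediate utilizations is linear interpolation between neighboring entries; that choice, along with the names `POWER_MODEL_1` and `power_at`, is an assumption of the following sketch and is not stated in the source.

```python
# Power model 1 from the table above: idle, then 10% to 100% utilization.
POWER_MODEL_1 = [60, 63, 66.8, 71.3, 76.8, 83.2, 90.7, 100, 111.5, 125.4, 140.7]


def power_at(utilization, table):
    """Linearly interpolate power for a utilization in [0.0, 1.0]."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    position = utilization * 10          # index into the 11-entry table
    lower = int(position)
    if lower == 10:                      # exactly 100% utilization
        return table[10]
    fraction = position - lower
    return table[lower] + (table[lower + 1] - table[lower]) * fraction
```

Values are returned in the same units as the table entries.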

| VM Type | vCPU | Memory (GB) |

|---|---|---|

| Type-1 | 1 | 7.2 |

| Type-2 | 2 | 14.4 |

| Type-3 | 4 | 15.360 |

| Type-4 | 3 | 17.510 |

| Type-5 | 5 | 35.020 |

| Data Center | Carbon Footprint Rate (tons/MWh) | Carbon Tax (dollars/ton) | Energy Price (cents/kWh) |

|---|---|---|---|

| DC1 | 0.124 | 24 | 6.1 |

| DC2 | 0.350 | 22 | 6.54 |

| DC3 | 0.466 | 11 | 10 |

| DC4 | 0.678 | 48 | 5.77 |
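To see how these parameters interact, the sketch below computes electricity cost and carbon cost for a given amount of grid energy. It assumes the simple forms electricity cost = energy × price and carbon cost = energy × carbon footprint rate × carbon tax; the function name and the dictionary are illustrative, while the numeric values are copied from the table above.

```python
# (carbon footprint rate in tons/MWh, carbon tax in $/ton, energy price in cents/kWh)
DATA_CENTERS = {
    "DC1": (0.124, 24, 6.1),
    "DC2": (0.350, 22, 6.54),
    "DC3": (0.466, 11, 10),
    "DC4": (0.678, 48, 5.77),
}


def grid_cost_usd(energy_kwh, cfr_tons_per_mwh, tax_usd_per_ton, price_cents_per_kwh):
    """Return (electricity cost, carbon cost) in dollars for grid energy in kWh."""
    electricity = energy_kwh * price_cents_per_kwh / 100          # cents to dollars
    carbon = energy_kwh / 1000 * cfr_tons_per_mwh * tax_usd_per_ton  # kWh to MWh
    return electricity, carbon


# Combined cost of drawing 1 MWh (1000 kWh) from the grid at each data center.
totals = {name: sum(grid_cost_usd(1000, *params)) for name, params in DATA_CENTERS.items()}
```

Under these assumed formulas, DC4 has the lowest energy price in the table but a higher combined cost per MWh than DC1 and DC2, because its carbon footprint rate and carbon tax are the highest.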

| Metric | RC-RFFF (renewable) | REC-RFFF (renewable) | RCF-RFFF (renewable) | RCC-RFFF (renewable) | C-FFF (brown) | EC-FFF (brown) | CF-FFF (brown) | CC-FFF (brown) |

|---|---|---|---|---|---|---|---|---|

| Total Energy (kWh) | 2,154,847 | 2,115,749 | 2,219,782 | 2,228,525 | 2,137,648 | 2,104,882 | 2,236,434 | 2,232,912 |

| Grid Energy (kWh) | 1,260,817 | 1,227,280 | 1,308,291 | 1,311,969 | 1,751,854 | 1,730,336 | 1,830,431 | 1,821,311 |

| Carbon Footprint (tons) | 51.3580 | 51.72792 | 51.6695 | 51.9723 | 68.7930 | 69.9528 | 70.7007 | 70.4851 |

| Total Cost ($) | 10,958.48 | 10,787.1 | 11,287.64 | 11,384.81 | 14,368.43 | 14,261.55 | 14,950.9 | 14,960.33 |

| Total Server Energy (kWh) | 1,777,480 | 1,739,362 | 1,815,334 | 1,814,790 | 1,751,854 | 1,730,336 | 1,830,431 | 1,821,311 |

| Solar Energy (kWh) | 516,663 | 512,082.5 | 507,043.1 | 502,820.7 | - | - | - | - |

| Total No. of Instructions | 3.63075 × 10^14 | 3.60687 × 10^14 | 3.6682 × 10^14 | 3.66849 × 10^14 | 3.61387 × 10^14 | 3.60258 × 10^14 | 3.70066 × 10^14 | 3.67871 × 10^14 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Renugadevi, T.; Geetha, K.; Muthukumar, K.; Geem, Z.W. Optimized Energy Cost and Carbon Emission-Aware Virtual Machine Allocation in Sustainable Data Centers. Sustainability 2020, 12, 6383. https://doi.org/10.3390/su12166383
