Quality Control Systems in Higher Education Supported by the Use of Mobile Messaging Services

Abstract

1. Introduction

- Principle of purpose: Educational actions pursue the attainment of previously defined objectives, both at the operational level and at the strategic level;

- Principle of accountability: All elements or agents of the system must be audited to evaluate the degree of attainment achieved in the objectives preliminarily set;

- Principle of subsidiarity: Although decisions should initially be made at the level at which they will be applied, decision-making can be transferred to a higher level with strategic competencies; and

- Principle of self-organization and development: It is understood that the system is not static and, consequently, that agents have the obligation to manage themselves efficiently to face future changes.

1.1. Quality Control in Teaching

1.2. The Problem of Response Rates for Determining Quality Control in Teaching

1.3. Objectives

- RQ1: Are the response rates achieved in the delivery of quality control surveys for teaching efficiency using mobile messaging services greater than those obtained with traditional online delivery systems?

- RQ2: Are there significant differences between the response rates achieved in the delivery of quality control surveys for teaching efficiency using mobile messaging services and those obtained with traditional online delivery systems?

2. Materials and Methods

2.1. Sample and Participants

2.2. Procedure

2.2.1. Delivery through Mobile Messaging Services (Group A)

“UNIVERSIDAD REY JUAN CARLOS-Calidad Docente: Haz clic en el enlace para cumplimentar la encuesta: http://bit.ly/2ywPZBr Tu colaboración es esencial. GRACIAS”

“REY JUAN CARLOS UNIVERSITY–Teaching Quality: Click on the link to complete the survey: http://bit.ly/2ywPZBr Your collaboration is essential. THANK YOU”

2.2.2. Delivery through the Student Services Portal (Group B)

3. Results

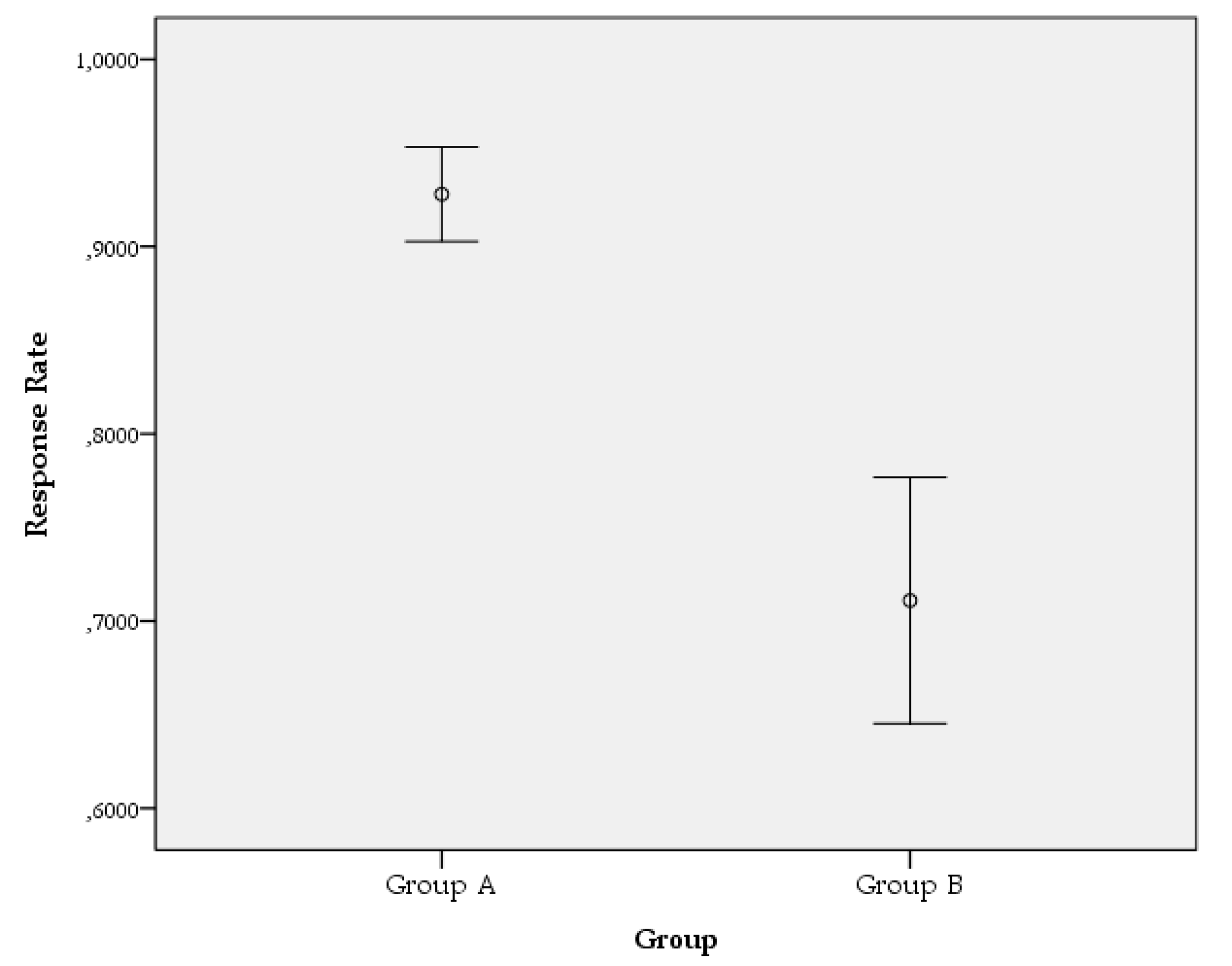

3.1. Results in Response to Research Question RQ1

3.2. Results in Response to Research Question RQ2

4. Discussion

Limitations and Further Research

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Mateo, J. La evaluación del profesorado y la gestión de la calidad de la educación. Hacia un modelo comprensivo de evaluación sistemática de la docencia. Rev. Investig. Educ. 2000, 18, 7–34.

2. Resino Blázquez, J.J.; Chamizo González, J.; Cano Montero, E.I.; Gutiérrez Broncano, S. Calidad de vida universitaria: Identificación de los principales indicadores de satisfacción estudiantil. Rev. Educ. 2013, 362, 458–484.

3. Sierra Sánchez, J. Factors influencing a student’s decision to pursue a communications degree in Spain. Intang. Cap. 2012, 8, 43–60.

4. De La Orden, A. Evaluación del rendimiento educativo y la calidad de la enseñanza. En Instituto de Pedagogía “San José de Calasanz”. In La Calidad de la Educación; Consejo Superior de Investigaciones Científicas (CSIC): Madrid, Spain, 1981; pp. 111–131.

5. De La Orden, A. Evaluación y calidad: Análisis de un modelo. Estud. Sobre Educ. 2009, 16, 17–36.

6. Alvarado Lagunas, E.; Ramírez, D.M.; Téllez, E.A. Percepción de la calidad educativa: Caso aplicado a estudiantes de la Universidad Autónoma de Nuevo León y del Instituto Tecnológico de Estudios Superiores de Monterrey. Rev. Educ. Super. 2016, 45, 55–74.

7. Gazïel, H.; Warnet, M.; Cantón Mayo, I. La Calidad en Los Centros Docentes Del Siglo XXI: Propuestas y Experiencias Prácticas; Muralla: Madrid, Spain, 2000; ISBN 8471336995.

8. Muñoz Cantero, J.M.; Ríos De Deus, M.P.; Abalde Paz, E. Evaluación docente vs Evaluación de la calidad. RELIEVE Rev. Electrón. Investig. Eval. Educ. 2002, 8, 103–134.

9. Debón Lamarque, S.; Romo Castillejo, A. El liderazgo del director como factor de cambio de la calidad de la enseñanza. In Factores Que Favorecen la Calidad Educativa; Ruiz Carrascosa, J., Pérez Ferra, M., Eds.; Universidad de Jaén: Jaén, Spain, 1995; pp. 134–156. ISBN 8488942354.

10. Fernández Millán, J.M.; Fernández Navas, M. Elaboración de una escala de evaluación de desempeño para educadores sociales en centros de protección de menores. Intang. Cap. 2013, 9, 571–589.

11. Torres González, J.A. La formación del profesorado como factor favorecedor de la calidad educativa. In Factores Que Favorecen la Calidad Educativa; Ruiz Carrascosa, J., Pérez Ferra, M., Eds.; Universidad de Jaén: Jaén, Spain, 1995; pp. 69–133. ISBN 8488942354.

12. Bienayme, A. Eficiencia y calidad en la educación superior. In Calidad, Eficiencia y Equidad en la Educación Superior; Universidad Autónoma de Guadalajara: Guadalajara, Mexico, 1986; p. 312.

13. González López, I. Determinación de los elementos que condicionan la calidad de la universidad: Aplicación práctica de un análisis factorial. RELIEVE Rev. Electrón. Investig. Eval. Educ. 2003, 9, 83–96.

14. Hernández, H.; Martínez, D.; Rodríguez, J. Gestión de la calidad aplicada en el mejoramiento del sector universitario. Rev. Espac. 2017, 38, 29.

15. Lago de Vergara, D.; Gamboa Suárez, A.A.; Montes Miranda, A.J. Calidad de la educación superior: Un análisis de sus principales determinantes. Saber Cienc. Lib. 2014, 9, 157–170.

16. Ruiz Carrascosa, J. La evaluación de la enseñanza por los alumnos en el plan nacional de evaluación de la calidad de las universidades. Construcción de un instrumento de valoración. Rev. Investig. Educ. 2000, 18, 433–445.

17. Gil Edo, M.T.; Roca Puig, V.; Camisón Zornoza, C. Hacia modelos de calidad de servicio orientados al cliente en las universidades públicas: El caso de la Universitat Jaume I. Investig. Eur. Dir. Econ. Empresa 1999, 5, 69–92.

18. Veciana Vergés, J.M.; Capelleras Segura, J.L. Calidad de servicio en la enseñanza universitaria desarrollo y validación de una escala media. Rev. Eur. Dir. Econ. Empres. 2004, 13, 55–72.

19. Álvarez Rojo, V.; García Jiménez, E.; Gil Flores, J. La calidad de la enseñanza universitaria desde la perspectiva de los profesores mejor valorados por los alumnos. Rev. Educ. 1999, 319, 273–290.

20. Linse, A.R. Interpreting and using student ratings data: Guidance for faculty serving as administrators and on evaluation committees. Stud. Educ. Eval. 2017, 54, 94–106.

21. Nygaard, C.; Belluigi, D.Z. A proposed methodology for contextualised evaluation in higher education. Assess. Eval. High. Educ. 2011, 36, 657–671.

22. Sproule, R. Student Evaluation of Teaching: A Methodological Critique. Educ. Policy Anal. Arch. 2000, 8, 50.

23. Huybers, T. Student evaluation of teaching: The use of best–worst scaling. Assess. Eval. High. Educ. 2014, 39, 496–513.

24. Matosas-López, L.; Leguey-Galán, S.; Doncel-Pedrera, L.M. Converting Likert scales into Behavioral Anchored Rating Scales (BARS) for the evaluation of teaching effectiveness for formative purposes. J. Univ. Teach. Learn. Pract. 2019, 16, 1–24.

25. Remmers, H.H. The relationship between students’ marks and student attitude toward instructors. Sch. Soc. 1928, 28, 759–760.

26. Leguey-Galán, S.; Leguey-Galán, S.; Matosas-López, L. ¿De qué depende la satisfacción del alumnado con la actividad docente? Espacios 2018, 39, 13–29.

27. Lizasoain-Hernández, L.; Etxeberria-Murgiondo, J.; Lukas-Mujika, J.F. Propuesta de un nuevo cuestionario de evaluación de los profesores de la Universidad del País Vasco. Estudio psicométrico, dimensional y diferencial. RELIEVE Rev. Electrón. Investig. Evaluac. Educ. 2017, 23, 1–21.

28. Molero López-Barajas, D.M.; Ruiz Carrascosa, J. La evaluación de la docencia universitaria. Dimensiones y variables más relevantes. Rev. Investig. Educ. 2005, 23, 57–84.

29. Layne, B.H.; Decristoforo, J.R.; Mcginty, D. Electronic versus traditional student ratings of instruction. Res. High. Educ. 1999, 40, 221–232.

30. Vanacore, A.; Pellegrino, M.S. How Reliable are Students’ Evaluations of Teaching (SETs)? A Study to Test Student’s Reproducibility and Repeatability. Soc. Indic. Res. 2019.

31. Zhao, J.; Gallant, D.J. Student evaluation of instruction in higher education: Exploring issues of validity and reliability. Assess. Eval. High. Educ. 2012, 37, 227–235.

32. Marsh, H.W. Student evaluations of university teaching: Dimensionality, reliability, validity, potential biases, utility. J. Educ. Psychol. 1984, 76, 707–754.

33. Marsh, H.W. Students’ Evaluations of University Teaching: Dimensionality, Reliability, Validity, Potential Biases and Usefulness. In The Scholarship of Teaching and Learning in Higher Education: An Evidence-Based Perspective; Perry, R.P., Ed.; Springer Netherlands: Dordrecht, The Netherlands, 2007; pp. 319–383.

34. Sharon, A.T.; Bartlett, C.J. Effect of instructional conditions in producing leniency on two types of rating scales. Pers. Psychol. 1969, 22, 251–263.

35. Bernardin, H.J. Behavioural expectation scales versus summated scales. J. Appl. Psychol. 1977, 62, 422–427.

36. Mitchell, K.M.W.; Martin, J. Gender Bias in Student Evaluations. PS 2018, 51, 648–652.

37. Wilson, J.H.; Beyer, D.; Monteiro, H. Professor Age Affects Student Ratings: Halo Effect for Younger Teachers. Coll. Teach. 2014, 62, 20–24.

38. Gannaway, D.; Green, T.; Mertova, P. So how big is big? Investigating the impact of class size on ratings in student evaluation. Assess. Eval. High. Educ. 2017, 43, 1–10.

39. Griffin, B.W. Grading leniency, grade discrepancy, and student ratings of instruction. Contemp. Educ. Psychol. 2004, 29, 410–425.

40. Matosas-López, L.; García-Sánchez, B. Beneficios de la distribución de cuestionarios web de valoración docente a través de mensajería SMS en el ámbito universitario: Tasas de participación, inversión de tiempo al completar el cuestionario y plazos de recogida de datos. Rev. Complut. Educ. 2019, 30, 831–845.

41. Ha, T.S.; Marsh, J.; Jones, J. A Web-based System for Teaching Evaluation. In Proceedings of the New Challenges and Innovations in Teaching and Training into the 21st Century (NCITT), Hong Kong, China, May 1998; Volume 11, pp. 1–11.

42. Woodward, D.K. Comparison of course evaluations by traditional and computerized on-line methods. Am. J. Pharm. Educ. 1998, 62, 90S.

43. Thorpe, S.W. Online student evaluation of instruction: An Investigation of non-response bias. In Proceedings of the 42nd Annual Forum for the Association for Institutional Research, Toronto, ON, Canada, 2–5 June 2002; pp. 1–14.

44. Watt, S.; Simpson, C.; McKillop, C.; Nunn, V. Electronic Course Surveys: Does automating feedback and reporting give better results? Assess. Eval. High. Educ. 2002, 27, 325–337.

45. Dommeyer, C.J.; Baum, P.; Hanna, R.W.; Chapman, K.S. Gathering faculty teaching evaluations by in-class and online surveys: Their effects on response rates and evaluations. Assess. Eval. High. Educ. 2004, 29, 611–623.

46. Anderson, H.; Cain, J.; Bird, E. Online student course evaluations review of literature and a pilot study. Am. J. Pharm. Educ. 2005, 2, 1–10.

47. Ballantyne, C. Moving student evaluation of teaching online: Reporting pilot outcomes and issues with a focus on how to increase student response rate. In Proceedings of the 2005 Australasian Evaluations Forum: University Learning and Teaching: Evaluating and Enhancing the Experience, Sydney, Australia, 28–29 November 2005.

48. Avery, R.J.; Bryant, W.K.; Mathios, A.; Kang, H.; Bell, D. Electronic Course Evaluations: Does an Online Delivery System Influence Student Evaluations? J. Econ. Educ. 2006, 37, 21–37.

49. Nowell, C.; Gale, L.R.; Handley, B. Assessing faculty performance using student evaluations of teaching in an uncontrolled setting. Assess. Eval. High. Educ. 2010, 35, 463–475.

50. Morrison, R. A comparison of online versus traditional student end-of-course critiques in resident courses. Assess. Eval. High. Educ. 2011, 36, 627–641.

51. Stowell, J.R.; Addison, W.E.; Smith, J.L. Comparison of online and classroom-based student evaluations of instruction. Assess. Eval. High. Educ. 2012, 37, 465–473.

52. Gerbase, M.W.; Germond, M.; Cerutti, B.; Vu, N.V.; Baroffio, A. How Many Responses Do We Need? Using Generalizability Analysis to Estimate Minimum Necessary Response Rates for Online Student Evaluations. Teach. Learn. Med. 2015, 27, 395–403.

53. Stanny, C.J.; Arruda, J.E. A comparison of student evaluations of teaching with online and paper-based administration. Scholarsh. Teach. Learn. Psychol. 2017, 3, 198–207.

54. Dommeyer, C.J.; Baum, P.; Hanna, R.W. College Students’ Attitudes Toward Methods of Collecting Teaching Evaluations: In-Class Versus On-Line. J. Educ. Bus. 2002, 78, 11–15.

55. Sorenson, D.L.; Reiner, C. Charting the Uncharted Seas of Online Student Ratings of Instruction. New Dir. Teach. Learn. 2003, 2003, 1–24.

56. Nair, C.S.; Adams, P. Survey platform: A factor influencing online survey delivery and response rate. Qual. High. Educ. 2009, 15, 291–296.

57. Ballantyne, C. Online Evaluations of Teaching: An Examination of Current Practice and Considerations for the Future. New Dir. Teach. Learn. 2003, 103–112.

58. Goodman, J.; Anson, R.; Belcheir, M. The effect of incentives and other instructor-driven strategies to increase online student evaluation response rates. Assess. Eval. High. Educ. 2015, 40, 958–970.

59. Matosas-López, L.; Leguey-Galán, S.; Leguey-Galán, S. Evaluación de la calidad y la eficiencia docente en el contexto de la educación superior: Alternativas de mejora. In La Educación Superior en el Siglo XXI: Una Mirada Multidisciplinaria; Gómez-Galán, J., Martín-Padilla, A.H., Cobos, D., López-Meneses, E., Eds.; UMET: Wheaton, IL, USA, 2019; pp. 240–257. ISBN 978-1-943697-21-2.

60. Standish, T.; Joines, J.A.; Young, K.R.; Gallagher, V.J. Improving SET Response Rates: Synchronous Online Administration as a Tool to Improve Evaluation Quality. Res. High. Educ. 2018, 59, 812–823.

61. Boswell, S.S. Ratemyprofessors is hogwash (but I care): Effects of Ratemyprofessors and university-administered teaching evaluations on professors. Comput. Hum. Behav. 2016, 56, 155–162.

62. Salcines-Talledo, I.; González-Fernández, N. Diseño y Validación del Cuestionario “Smartphone y Universidad. Visión del Profesorado” (SUOL). Rev. Complut. Educ. 2016, 27, 603–632.

63. Al-Emran, M.; Elsherif, H.M.; Shaalan, K. Investigating attitudes towards the use of mobile learning in higher education. Comput. Hum. Behav. 2016, 56, 93–102.

64. Champagne, M.V. Student use of mobile devices in course evaluation: A longitudinal study. Educ. Res. Eval. 2013, 19, 636–646.

65. Young, K.; Joines, J.; Standish, T.; Gallagher, V. Student evaluations of teaching: The impact of faculty procedures on response rates. Assess. Eval. High. Educ. 2018, 44, 37–49.

66. United Nations (UN). Post-2015 Development Agenda; United Nations: New York, NY, USA, 2015; Volume A 69/L.85.

67. Albert Gómez, M.J. La Investigación Educativa: Claves Teóricas; McGraw-Hill: Madrid, Spain, 2006; ISBN 9788448159429.

68. Matosas-López, L.; Romero-Ania, A.; Cuevas-Molano, E. ¿Leen los universitarios las encuestas de evaluación del profesorado cuando se aplican incentivos por participación? Una aproximación empírica. Rev. Iberoam. Sobre Calid. Efic. Cambio Educ. 2019, 17, 99–124.

69. Hernández Pina, F. Diseños de investigación experimental. In Métodos de Investigación en Psicopedagogía; Buendía, L., Colas Bravo, P., Hernández Pina, F., Eds.; McGraw-Hill: Madrid, Spain, 1997; pp. 91–117. ISBN 84-481-1254-7.

70. Buendía Eisman, L.; Berrocal de Luna, E. La ética de la investigación educativa. Agora Digit. 2001, 1, 1–14.

71. Nulty, D.D. The adequacy of response rates to online and paper surveys: What can be done? Assess. Eval. High. Educ. 2008, 33, 301–314.

72. Mohd Razali, N.; Bee Wah, Y. Power comparisons of Shapiro-Wilk, Kolmogorov-Smirnov, Lilliefors and Anderson-Darling tests. J. Stat. Model. Anal. 2011, 2, 21–33.

73. Matosas-López, L. Diferencias en las puntuaciones de las encuestas de valoración del profesorado en función del tipo de cuestionario: Comparativa cuestionarios Likert vs cuestionarios BARS. Rev. Infancia Educ. Aprendiz. 2019, 5, 371–378.

74. Nair, C.; Wayland, C.; Soediro, S. Evaluating the student experience: A leap into the future. In Proceedings of the Australasian Evaluations Forum: University Learning and Teaching: Evaluating and Enhancing the Experience, Sydney, Australia, 28–29 November 2005.

75. Chapman, D.D.; Joines, J.A. Strategies for Increasing Response Rates for Online End-of-Course Evaluations. Int. J. Teach. 2017, 29, 47–60.

76. Dillman, D.A. Mail and Internet Surveys: The Tailored Design Method, 2nd ed.; John Wiley and Sons: New York, NY, USA, 2000.

77. Moss, J.; Hendry, G. Use of electronic surveys in course evaluation. Br. J. Educ. Technol. 2002, 33, 583–592.

| Authors | Approach to Quality | Aspects of Quality Considered |
|---|---|---|
| Gil Edo, Roca Puig and Camisón Zornoza [17] | Service-based approach | Faculty technical dimension, faculty functional dimension, academic structure, service personnel, facilities, staff, complementary services |
| Veciana Vergés and Capelleras i Segura [18] | Service-based approach | Teachers' competence, curriculum, equipment and facilities, organization |
| Resino Blázquez et al. [2] | Student perspective-based | Facilities, academic aspects, social aspects |
| Alvarado Lagunas et al. [6] | Student perspective-based | Infrastructure, teaching staff, teaching materials, student's development |
| González López [13] | Student perspective-based | Competencies, skills for the labor market, critical thinking, institutional evaluation, services, representative bodies, student involvement, professional specialization, students' performance, associative movements, academic information, supplementary training, career opportunities |
| Álvarez Rojo, García Jiménez and Gil Flores [19] | Teacher perspective-based | Teaching skills, vocation of teaching, structural and social conditions, management of the university environment |

| Authors | Paper Response Rate | Online Response Rate | Difference (Paper − Online) |
|---|---|---|---|
| Ha, Marsh and Jones [41] | 0.60 | 0.23 | +0.37 |
| Woodward [42] | 0.45 | 0.33 | +0.12 |
| Layne et al. [29] | 0.60 | 0.47 | +0.13 |
| Thorpe [43] | 0.50 | 0.46 | +0.04 |
| Watt, Simpson, McKillop and Nunn [44] | 0.33 | 0.32 | +0.01 |
| Dommeyer, Baum, Hanna and Chapman [45] | 0.75 | 0.43 | +0.32 |
| Anderson, Cain and Bird [46] | From 0.80 to 0.81 | From 0.75 to 0.89 | From +0.05 to −0.08 |
| Ballantyne [47] | 0.55 | 0.47 | +0.08 |
| Avery, Bryant, Mathios, Kang and Bell [48] | 0.72 | 0.48 | +0.24 |
| Nowell, Gale and Handley [49] | 0.72 | 0.28 | +0.44 |
| Morrison [50] | 0.97 | 0.21 | +0.76 |
| Stowell, Addison and Smith [51] | 0.81 | 0.61 | +0.20 |
| Gerbase, Germond, Cerutti, Vu and Baroffio [52] | 0.74 | 0.30 | +0.44 |
| Stanny and Arruda [53] | From 0.71 to 0.72 | From 0.32 to 0.34 | From +0.39 to +0.38 |

| Group | Age: Mean | Age: Standard Deviation | Gender: Male | Gender: Female |
|---|---|---|---|---|
| Group A | 20.01 | 1.14 | 46.61% | 53.39% |
| Group B | 20.86 | 1.73 | 49.24% | 50.76% |
| Total sample | 20.52 | 1.68 | 47.99% | 52.01% |

| Course-Program | Group A Response Rate * | Group B Response Rate * | Difference (A − B) |
|---|---|---|---|
| Program 1 | 0.97 | 0.66 | +0.31 |
| Program 2 | 0.96 | 0.84 | +0.12 |
| Program 3 | 0.94 | 0.81 | +0.13 |
| Program 4 | 0.93 | 0.71 | +0.22 |
| Program 5 | 0.91 | 0.64 | +0.27 |
| Program 6 | 0.88 | 0.59 | +0.29 |
| Program 7 | 0.91 | 0.74 | +0.17 |
| Program 8 | 0.87 | 0.71 | +0.16 |
| Program 9 | 0.97 | 0.82 | +0.15 |
| Program 10 | 0.94 | 0.59 | +0.35 |
| Total | 0.92 | 0.71 | +0.21 |

| | Levene’s Test for Equality of Variances: F | Levene’s Test: Sig. | t-Test for Equality of Means: t | d.f. | Sig. (Two-Tailed) | Difference in Means | Standard Error of the Difference | 95% Confidence Interval: Lower | 95% Confidence Interval: Upper |
|---|---|---|---|---|---|---|---|---|---|
| Variances assumed equal | 7.055 | 0.016 | 6.969 | 18 | 0.000 | 0.2170000 | 0.0311359 | 0.1515859 | 0.2824141 |
| Variances not assumed equal | | | 6.969 | 11.584 | 0.000 | 0.2170000 | 0.0311359 | 0.1488898 | 0.2851102 |
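The t-test figures can be cross-checked against the per-program response rates reported for Group A and Group B. The sketch below uses only the Python standard library; the function and variable names are illustrative and not taken from the study. It recomputes the difference in means, the standard error, the t statistic, and both sets of degrees of freedom (pooled and Welch-Satterthwaite). It does not reproduce Levene's test, the p-values, or the confidence intervals, which would need a statistics package.

```python
from math import sqrt
from statistics import mean, variance

# Per-program response rates (Programs 1-10) copied from the response-rate table.
group_a = [0.97, 0.96, 0.94, 0.93, 0.91, 0.88, 0.91, 0.87, 0.97, 0.94]
group_b = [0.66, 0.84, 0.81, 0.71, 0.64, 0.59, 0.74, 0.71, 0.82, 0.59]


def two_sample_t(x, y):
    """Independent-samples t statistics under pooled and Welch assumptions."""
    nx, ny = len(x), len(y)
    vx, vy = variance(x), variance(y)  # sample variances (n - 1 denominator)
    diff = mean(x) - mean(y)

    # Equal variances assumed: pooled variance, d.f. = nx + ny - 2.
    df_pooled = nx + ny - 2
    pooled_var = ((nx - 1) * vx + (ny - 1) * vy) / df_pooled
    se_pooled = sqrt(pooled_var * (1 / nx + 1 / ny))

    # Equal variances not assumed: Welch standard error and
    # Welch-Satterthwaite approximation for the degrees of freedom.
    se_welch = sqrt(vx / nx + vy / ny)
    df_welch = (vx / nx + vy / ny) ** 2 / (
        (vx / nx) ** 2 / (nx - 1) + (vy / ny) ** 2 / (ny - 1)
    )

    return {
        "diff": diff,
        "se_pooled": se_pooled,
        "t_pooled": diff / se_pooled,
        "df_pooled": df_pooled,
        "se_welch": se_welch,
        "t_welch": diff / se_welch,
        "df_welch": df_welch,
    }


result = two_sample_t(group_a, group_b)
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

Because both groups contain ten programs, the pooled and Welch standard errors coincide, which is why the two rows of the table share the same t value and differ only in their degrees of freedom and interval bounds.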

| Course-Program | Total Students in the Course | Liberal Conditions (10% Sampling Error; 80% Confidence Interval) | Stringent Conditions (3% Sampling Error; 95% Confidence Interval) | Response Rate in Current Study * |
|---|---|---|---|---|
| Program 1 | 27 | Between 0.48 and 0.58 | Between 0.96 and 0.97 | 0.97 |
| Program 2 | 29 | Between 0.48 and 0.58 | Between 0.96 and 0.97 | 0.96 |
| Program 3 | 31 | Between 0.35 and 0.40 | Between 0.93 and 0.95 | 0.94 |
| Program 4 | 32 | Between 0.35 and 0.40 | Between 0.93 and 0.95 | 0.93 |
| Program 5 | 55 | Between 0.25 and 0.31 | Between 0.90 and 0.92 | 0.91 |
| Program 6 | 42 | Between 0.35 and 0.40 | Between 0.93 and 0.95 | 0.88 |
| Program 7 | 61 | Between 0.25 and 0.31 | Between 0.90 and 0.92 | 0.91 |
| Program 8 | 58 | Between 0.25 and 0.31 | Between 0.90 and 0.92 | 0.87 |
| Program 9 | 29 | Between 0.48 and 0.58 | Between 0.96 and 0.97 | 0.97 |
| Program 10 | 44 | Between 0.35 and 0.40 | Between 0.93 and 0.95 | 0.94 |
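One way to read the table above is to compare each observed response rate with the bounds of the required range. The Python sketch below does this under an assumption that is not stated in the source: a program is treated as meeting a condition when its observed rate is at least the lower bound of the corresponding range. The data are copied from the table; the names are illustrative.

```python
# Rows copied from the table above:
# (program, liberal range, stringent range, observed response rate)
rows = [
    ("Program 1", (0.48, 0.58), (0.96, 0.97), 0.97),
    ("Program 2", (0.48, 0.58), (0.96, 0.97), 0.96),
    ("Program 3", (0.35, 0.40), (0.93, 0.95), 0.94),
    ("Program 4", (0.35, 0.40), (0.93, 0.95), 0.93),
    ("Program 5", (0.25, 0.31), (0.90, 0.92), 0.91),
    ("Program 6", (0.35, 0.40), (0.93, 0.95), 0.88),
    ("Program 7", (0.25, 0.31), (0.90, 0.92), 0.91),
    ("Program 8", (0.25, 0.31), (0.90, 0.92), 0.87),
    ("Program 9", (0.48, 0.58), (0.96, 0.97), 0.97),
    ("Program 10", (0.35, 0.40), (0.93, 0.95), 0.94),
]

# Assumption (not from the source): "meets" means the observed rate is
# greater than or equal to the lower bound of the required range.
below_liberal = [name for name, lib, _, obs in rows if obs < lib[0]]
below_stringent = [name for name, _, strict, obs in rows if obs < strict[0]]

print("Below liberal range:", below_liberal)
print("Below stringent range:", below_stringent)
```

Under that reading, every program clears the liberal range, and Programs 6 and 8 fall short of the stringent one.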

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Matosas-López, L.; Bernal-Bravo, C.; Romero-Ania, A.; Palomero-Ilardia, I. Quality Control Systems in Higher Education Supported by the Use of Mobile Messaging Services. Sustainability 2019, 11, 6063. https://doi.org/10.3390/su11216063
